1. Introduction

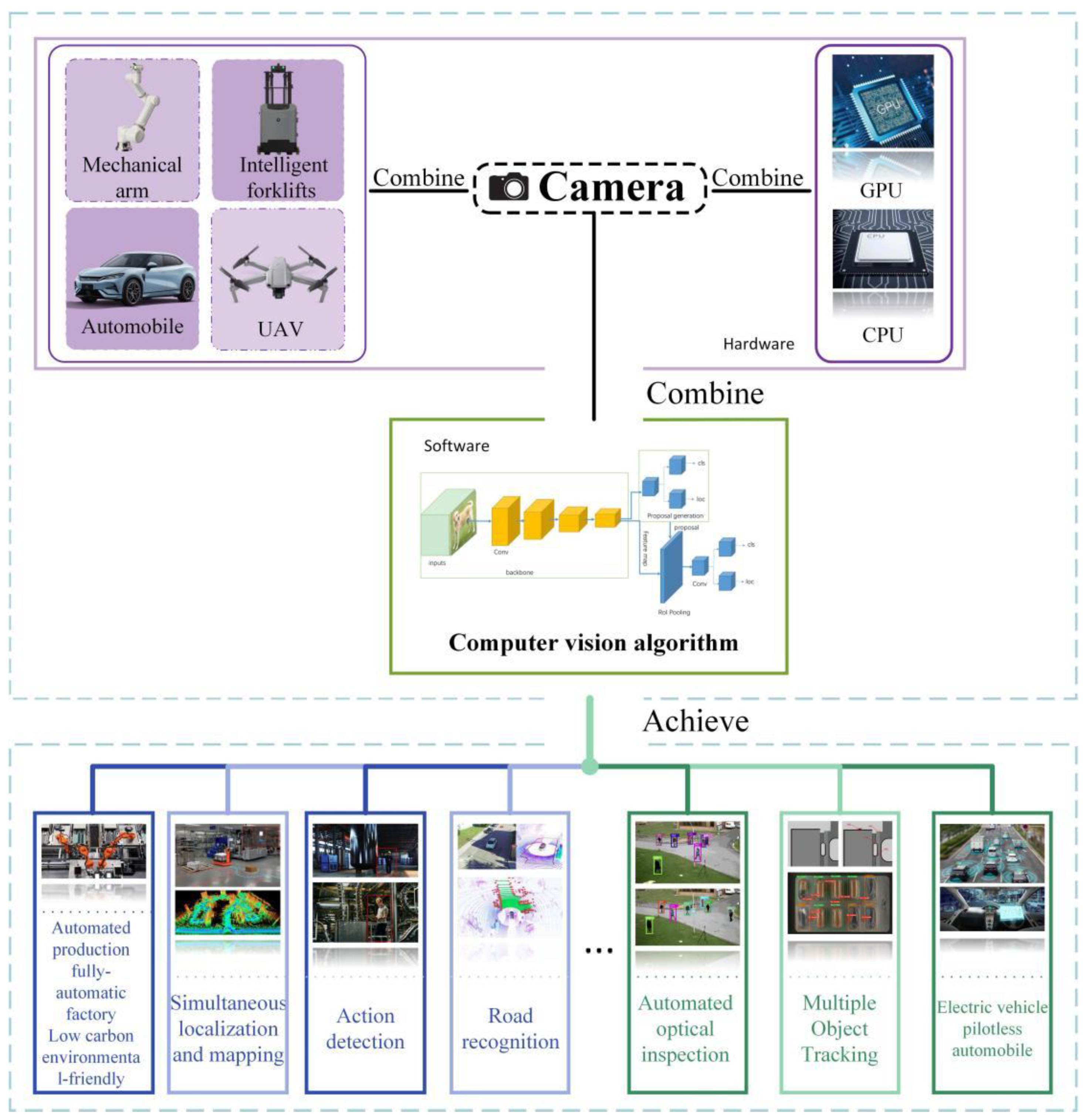

In the contemporary digital age, replacing traditional, expensive sensors with computer vision systems as the primary data source in many application scenarios not only reduces costs but also raises the achievable performance ceiling. Furthermore, combining AI algorithms with cognitive thinking patterns can enhance the intelligence of such systems.

Visual object detection, a cornerstone task of computer vision, entails the identification of specific visual objects (such as humans, animals, roads, manufactured products, or vehicles) within digital images or video streams. By analyzing the motion patterns, characteristics, and behavioral states of the target, an automated intelligent system can decide which actions to take or which inferences to draw. Similarly, visual SLAM (Simultaneous Localization and Mapping) navigation uses image features for indoor mapping and navigation. Progress in object detection also advances computer vision models and methods that can be applied to other domains, such as long- and short-term visual tracking.

In recent years, the integration of vision detection technology in robotic vehicles has positioned them at the forefront of innovation in autonomous systems. These vehicles have been extensively applied across a broad spectrum of technical applications, including localization and navigation, path planning, multitask collaboration, target detection, three-dimensional pose estimation, obstacle detection and avoidance strategies, robotic grasping, automated robotic welding, and security surveillance. This widespread application underscores the pivotal role of robotic vehicles in advancing the capabilities and functionalities of autonomous systems. These applications can be realized through various methods, including vision detection, localization, and tracking. The convergence of computer vision and green energy technologies in multi-driverless systems presents a unique set of challenges and opportunities, encompassing aspects from perception and navigation to energy management and environmental impact. This survey aims to elucidate the potential of computer vision algorithms to revolutionize the interaction of multi-driverless robotic vehicles with their surroundings and contribute to a more sustainable future by examining the latest developments and applications in the field.

Meanwhile, the evolution of deep convolutional neural networks, coupled with growing GPU computing capability, has driven the rapid progress of computer vision technology in recent years. Presently, the majority of state-of-the-art object detection systems employ deep learning networks as the foundational framework for feature extraction and for the classification of images or video streams. This paper therefore offers a concentrated review of deep learning algorithms, highlighting their pivotal role in the advancement of computer vision technology.

Computer vision technology has found extensive applications in a myriad of real-world domains, as illustrated in Figure 1, including multi-driverless vehicles, robotic vision, video surveillance, SLAM (Simultaneous Localization and Mapping) navigation, human behavior detection, and automated low-carbon, environmentally friendly production in unmanned factories. These applications have permeated various sectors of modern life, encompassing security, automation, the military, transportation, and medicine, demonstrating the pervasive influence and utility of computer vision technology in contemporary society.

The field of object detection has experienced significant advancements, attributable in part to the establishment of numerous benchmarks such as Caltech [1], KITTI [2], ImageNet [3], PASCAL VOC [4], MS COCO [5], and Open Images V5 [6]. Furthermore, the organizers of the ECCV VisDrone 2018 competition introduced an innovative drone platform-based dataset [7], comprising a comprehensive collection of images and videos.

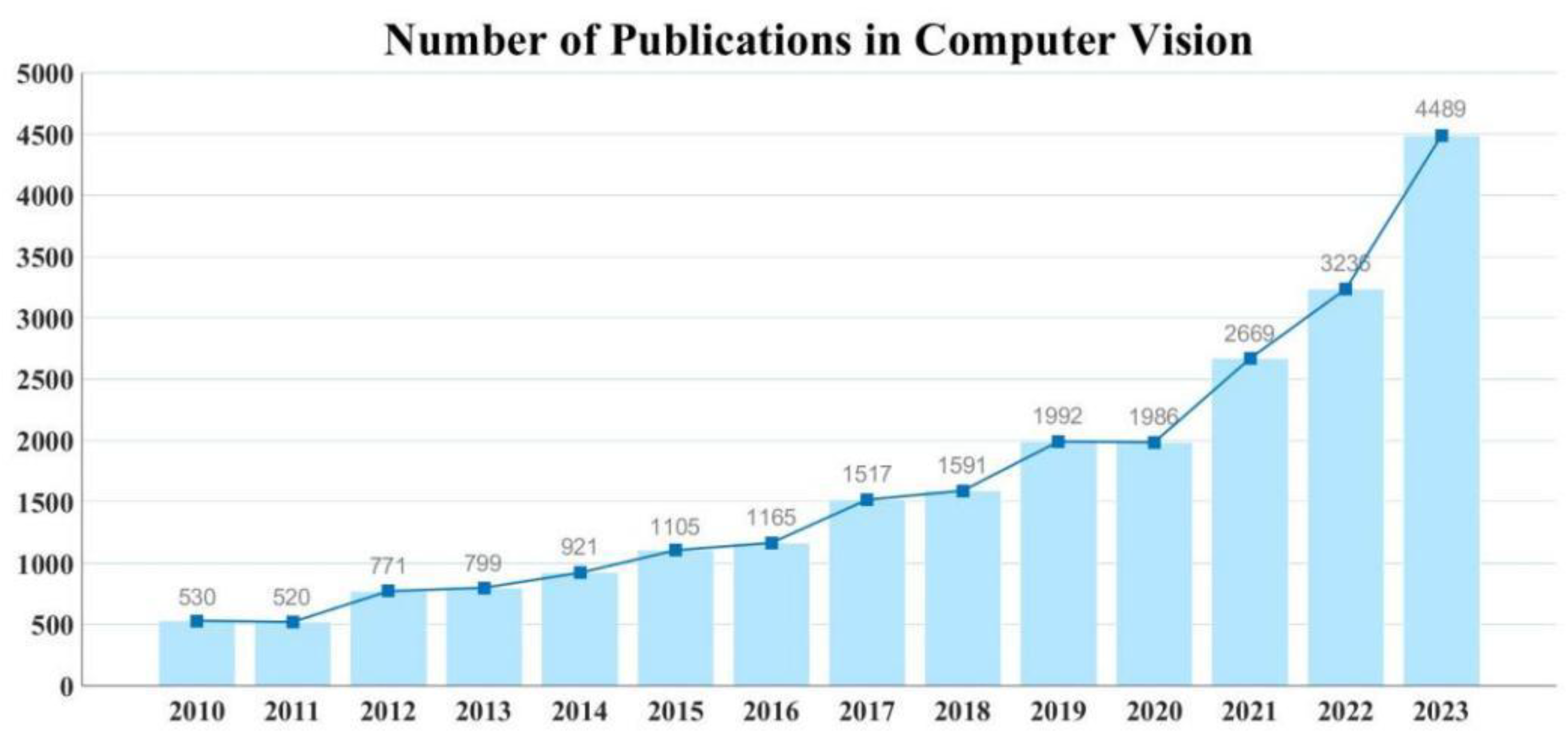

Figure 2 illustrates the ascending trend in the number of papers tagged with "computer vision" over the past decade, highlighting the field's growing prominence and impact within the scientific and academic communities.

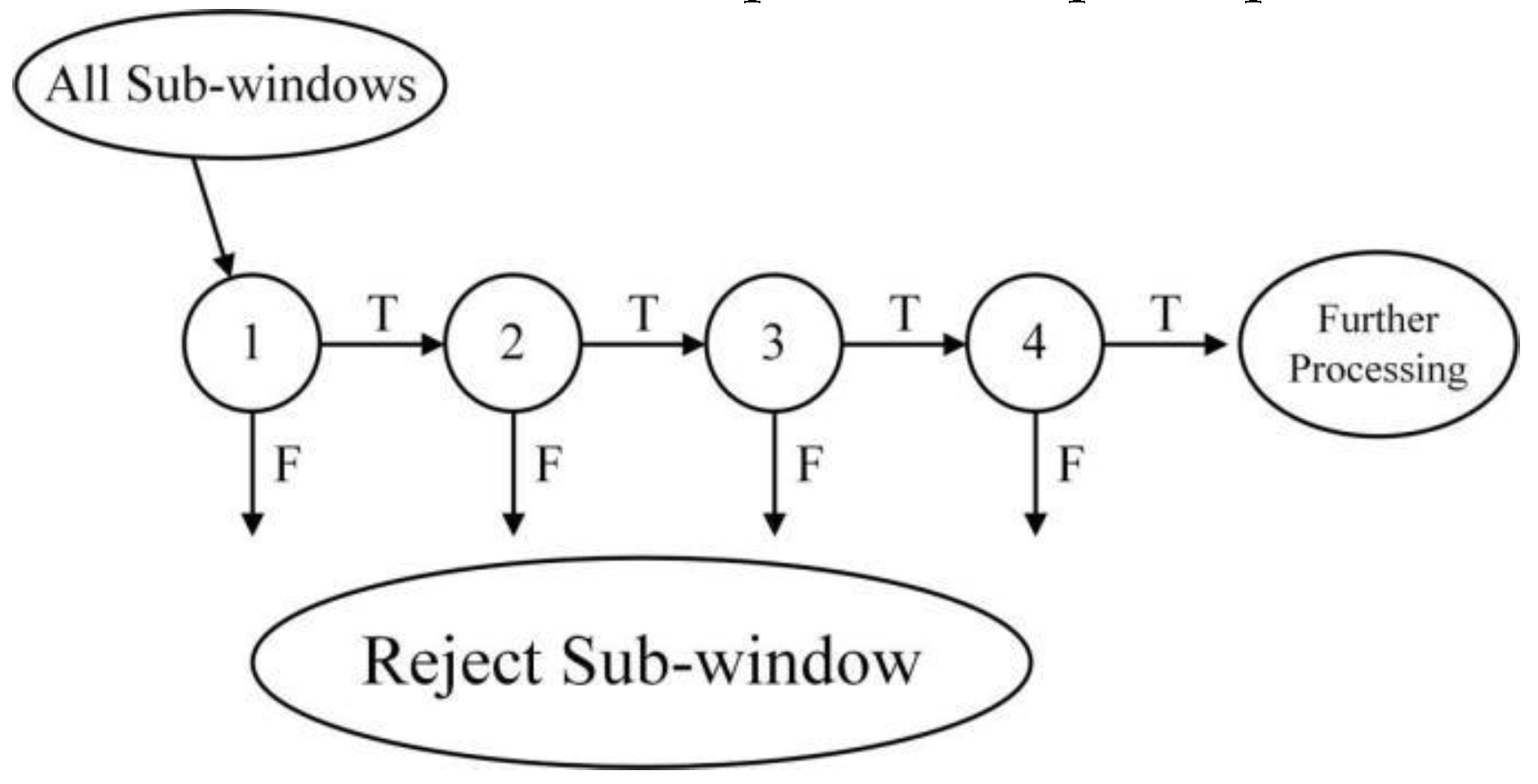

In 2001, P. Viola and M. Jones introduced the Viola-Jones (VJ) detector [8], which achieved effective face detection by combining feature selection, the integral image, and a multi-stage detection cascade to improve both accuracy and speed. Subsequently, in 2005, N. Dalal and B. Triggs proposed the HOG (Histogram of Oriented Gradients) detector [9], which built on ideas from the scale-invariant feature transform and enabled effective recognition of objects of different sizes, particularly for human detection. This method has become foundational for many computer vision technologies, providing a robust framework for object detection and recognition.
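
The integral image mentioned above is what makes rectangle sums, and therefore Haar-like features, cheap to evaluate. The following is a minimal sketch of the idea rather than the VJ detector itself; the function names and the particular two-rectangle feature are illustrative assumptions.

```python
def integral_image(img):
    """Return an (H+1) x (W+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above this row plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, bottom, right):
    """Sum of pixels in rows [top, bottom) and columns [left, right) using four lookups."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_haar(ii, top, left, height, width):
    """Illustrative two-rectangle Haar-like feature: left half minus right half (even width)."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, top + height, left + half)
    right_sum = rect_sum(ii, top, left + half, top + height, left + width)
    return left_sum - right_sum


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]
table = integral_image(image)
print(rect_sum(table, 1, 1, 3, 3))       # 6 + 7 + 10 + 11 = 34
print(two_rect_haar(table, 0, 0, 3, 4))  # 33 - 45 = -12
```

Once the table is built, every rectangle sum costs four lookups regardless of the rectangle size, which is why a cascade can evaluate many such features per window quickly.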

The DPM (Deformable Part-based Model), developed by P. Felzenszwalb [10] based on HOG, represents a significant milestone in traditional vision detection. It introduced concepts such as mixture models, hard negative mining, and bounding box regression, which continue to exert a profound influence on contemporary computer vision algorithms. DPM primarily employs multiple related filters to replace the process of manually specified screening, functioning as a weakly supervised self-learning method. This detection algorithm achieved championships in the VOC-07, -08, and -09 detection challenges, demonstrating its efficacy and robustness in object detection tasks.

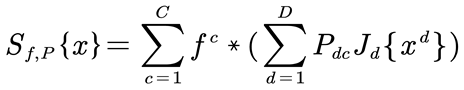

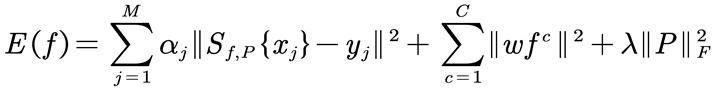

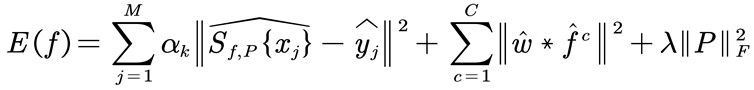

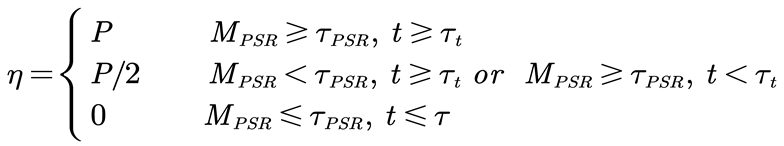

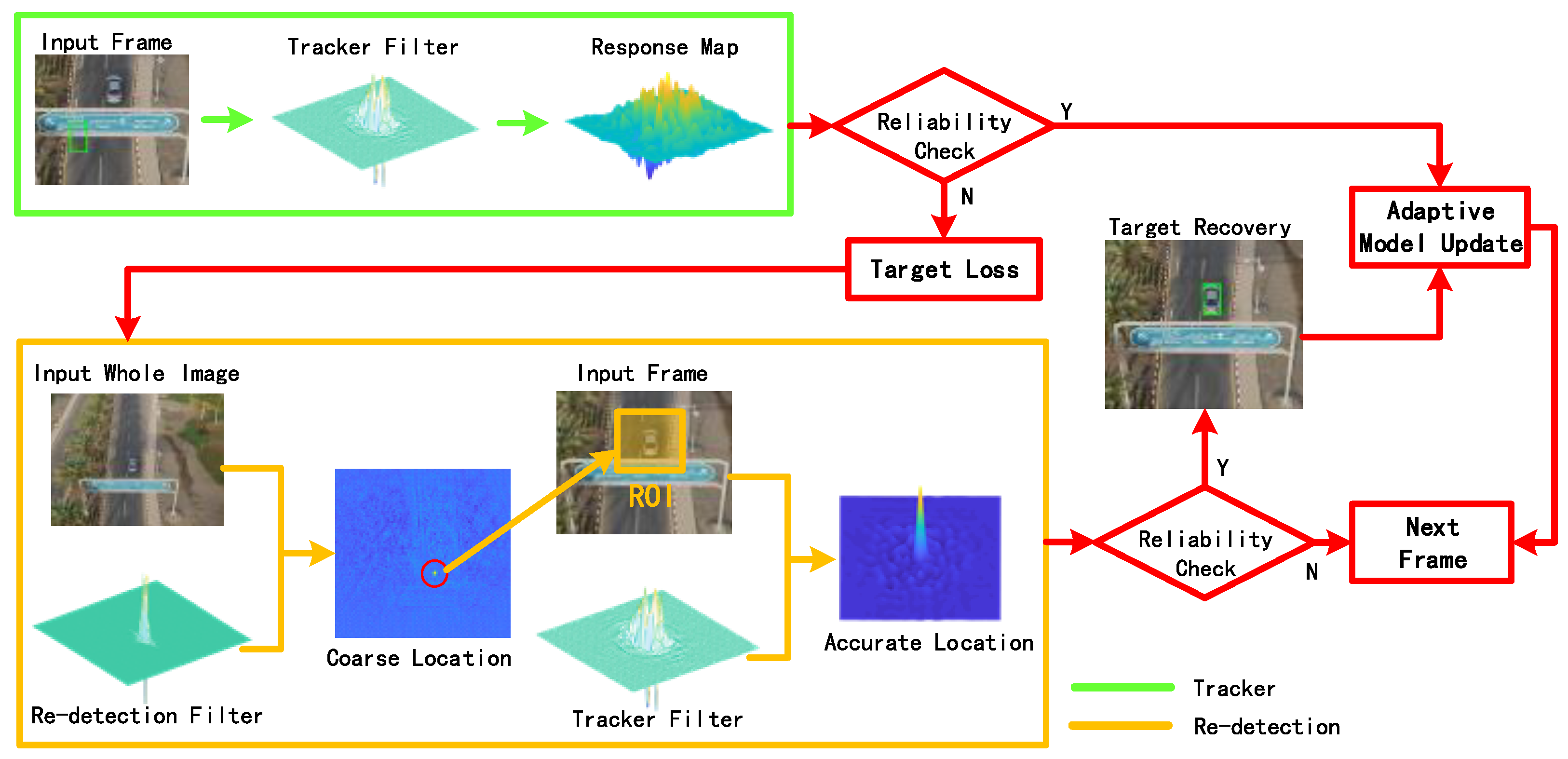

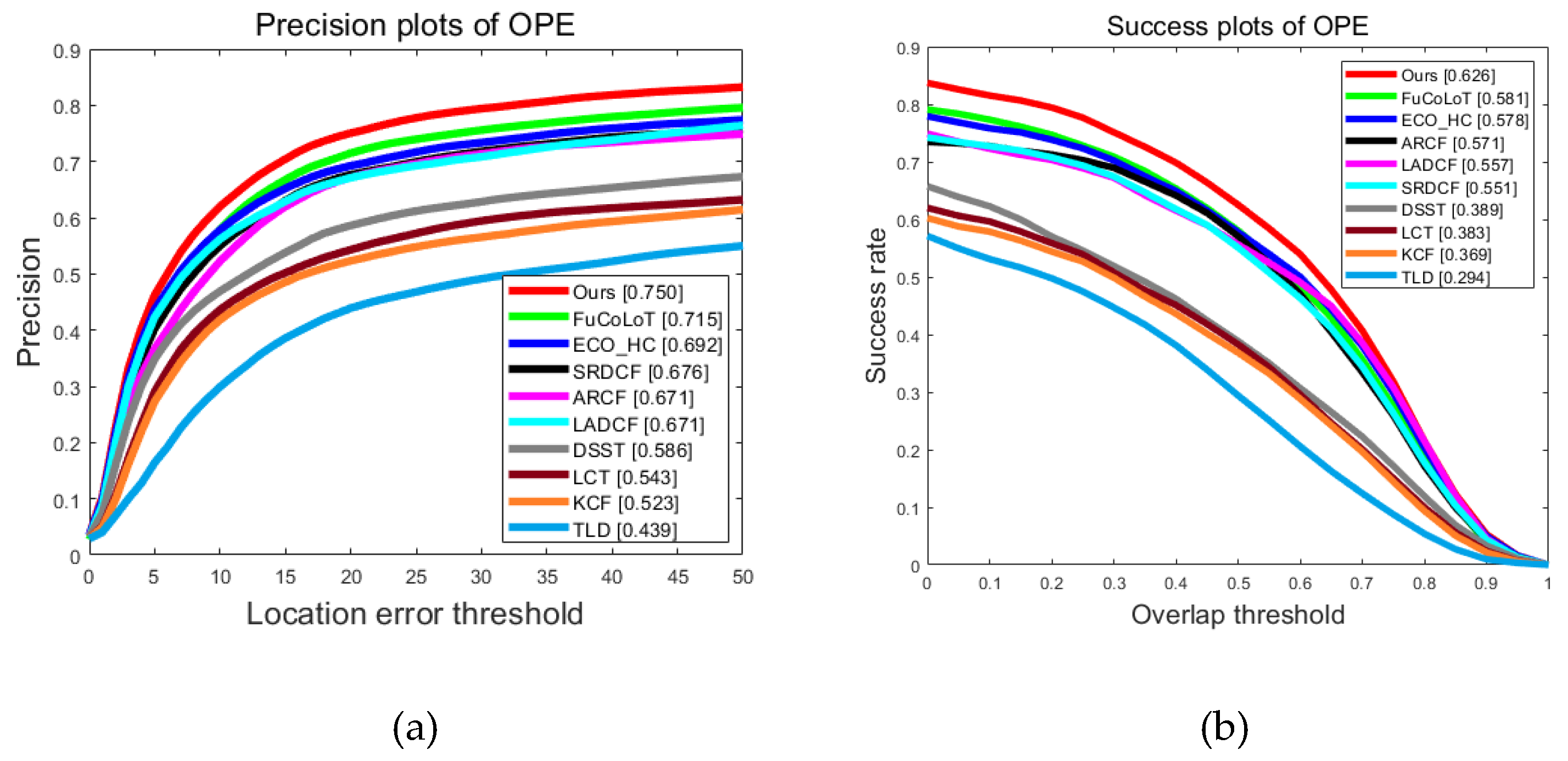

Building on the foundation of computer vision detection, Bolme et al. constructed a novel visual object tracking framework in 2010 using correlation filtering and proposed the MOSSE (Minimum Output Sum of Squared Error) algorithm [11]. MOSSE solves an optimization problem using the target's grayscale features and a Gaussian desired output function to train a discriminative correlation filter. In 2014, Henriques et al. introduced the KCF (Kernelized Correlation Filters) tracking algorithm [12], providing a complete theoretical derivation based on the cyclic (circulant) structure of shifted samples and offering a method to integrate multi-channel features into the correlation filter framework. This method incorporated multidimensional HOG features into the algorithm, further enhancing its performance. In 2019, Xu et al. proposed the LADCF (Learning Adaptive Discriminative Correlation Filters) algorithm [13], addressing the common issues of boundary effects and temporal filter degradation in correlation filter-based algorithms. This tracking algorithm combined adaptive spatial feature selection and temporal consistency constraints, enabling effective representation in low-dimensional manifolds and improving tracking accuracy. In the same year, Huang et al. introduced the ARCF (Aberrance Repressed Correlation Filters) tracking algorithm [14], a long-term target tracking algorithm based on the BACF (Background-Aware Correlation Filters) algorithm. ARCF incorporated aberrance-repressed correlation filters in the detection module, restraining the variation rate of the response maps generated during the detection phase to suppress aberrances, thereby achieving more stable and accurate target tracking.
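
To make the correlation filter idea concrete, the sketch below trains a MOSSE-style filter on a single one-dimensional signal and uses it to locate a cyclically shifted copy. It is a simplified illustration under several assumptions: one training sample, a 1D signal instead of a 2D grayscale patch, a naive DFT in place of an FFT, and no preprocessing or online update. The closed form used is H* = (G ⊙ F*) / (F ⊙ F* + λ), where F and G are the transforms of the input and of the Gaussian desired output, and λ is a small regularizer.

```python
import cmath
import math


def dft(signal, inverse=False):
    """Naive O(N^2) discrete Fourier transform, adequate for a short demo signal."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += signal[t] * cmath.exp(sign * 2j * math.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def gaussian_target(n, center, sigma=2.0):
    """Desired correlation output: a Gaussian peak at the target position."""
    return [math.exp(-((t - center) ** 2) / (2 * sigma ** 2)) for t in range(n)]


def train_filter(patch, desired, lam=1e-3):
    """Single-sample closed form: H* = (G . conj(F)) / (F . conj(F) + lam), element-wise."""
    F, G = dft(patch), dft(desired)
    return [g * f.conjugate() / (f * f.conjugate() + lam) for f, g in zip(F, G)]


def respond(patch, h_conj):
    """Correlation response of a patch, computed in the frequency domain."""
    spectrum = [f * h for f, h in zip(dft(patch), h_conj)]
    return [v.real for v in dft(spectrum, inverse=True)]


def peak(response):
    """Index of the strongest response, taken as the estimated target position."""
    return max(range(len(response)), key=lambda t: response[t])


n = 32
patch = [0.0] * n
patch[10], patch[11] = 1.0, 0.5              # toy "target" located at index 10
h_conj = train_filter(patch, gaussian_target(n, 10))
shifted = patch[-5:] + patch[:-5]            # target moved 5 samples to the right
print(peak(respond(patch, h_conj)), peak(respond(shifted, h_conj)))  # 10 15
```

The response peak follows the target because a cyclic shift of the input only multiplies its spectrum by a phase term, which shifts the filter output by the same amount.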

The introduction of the R-CNN (Regions with CNN features) model by R. Girshick in 2014 [15] marked a significant milestone in the application of convolutional neural networks to object detection and tracking. Subsequently, in 2015, S. Ren et al. proposed Faster R-CNN [16], which introduced the RPN (Region Proposal Network), further advancing the field. In the same year, J. Redmon et al. presented YOLO, a one-stage detector [17], significantly enhancing computational speed. J. Redmon subsequently made a series of improvements to the YOLO model, leading to the development of its v2 and v3 editions [18]. Later contributors, including Alexey Bochkovskiy and others, continued to update the algorithm, which has since reached YOLOv8 and has been applied in various scenarios [19].

With the maturation of deep learning models, in 2017, Chen B. X. et al. proposed a pedestrian tracking system for unmanned vehicles based on binocular stereo cameras [20], addressing the issue of RGB-D cameras being unsuitable for outdoor use. A binocular stereo camera calculates depth information from the disparity between its two cameras. In 2021, Liu et al. [21] designed a deep learning-based closed-loop tracking framework for robotic systems, DeepSORT, capable of effectively implementing automatic detection and tracking of new vehicles with sensing capability in complex environments. In the same year, Teed Z. et al. proposed DROID-SLAM [22], which had a significant impact on visual SLAM. This method liberated unmanned vehicles from the constraints of expensive LiDAR by utilizing visual cameras, enabling not only object detection but also localization and navigation. The DROID-SLAM model, based on deep learning, can effectively extract features in the front end and compute optical flow fields to iteratively update the pose and depth of the current keyframe, while performing BA (Bundle Adjustment) optimization in the back end, greatly enhancing the system's robustness, generalization ability, and performance. A plethora of deep learning-based computer vision technologies applied to multi-vehicle unmanned driving and automated intelligent unmanned factories have effectively improved the efficiency and intelligence of various systems [23].
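
For the binocular stereo camera mentioned above, depth follows from disparity through the standard relation Z = f·B/d for a rectified pair, where f is the focal length in pixels, B the baseline, and d the disparity in pixels. The snippet below is a minimal sketch of that relation; the parameter names and the numeric values are illustrative assumptions and are not taken from [20].

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; None when the disparity is not positive."""
    if disparity_px <= 0:
        return None  # no valid match, or a point at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline.
for d in (70.0, 35.0, 7.0):
    print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.12))
```

Because depth is inversely proportional to disparity, a fixed one-pixel matching error costs far more accuracy at long range than at short range, which is one reason baseline choice matters for outdoor use.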

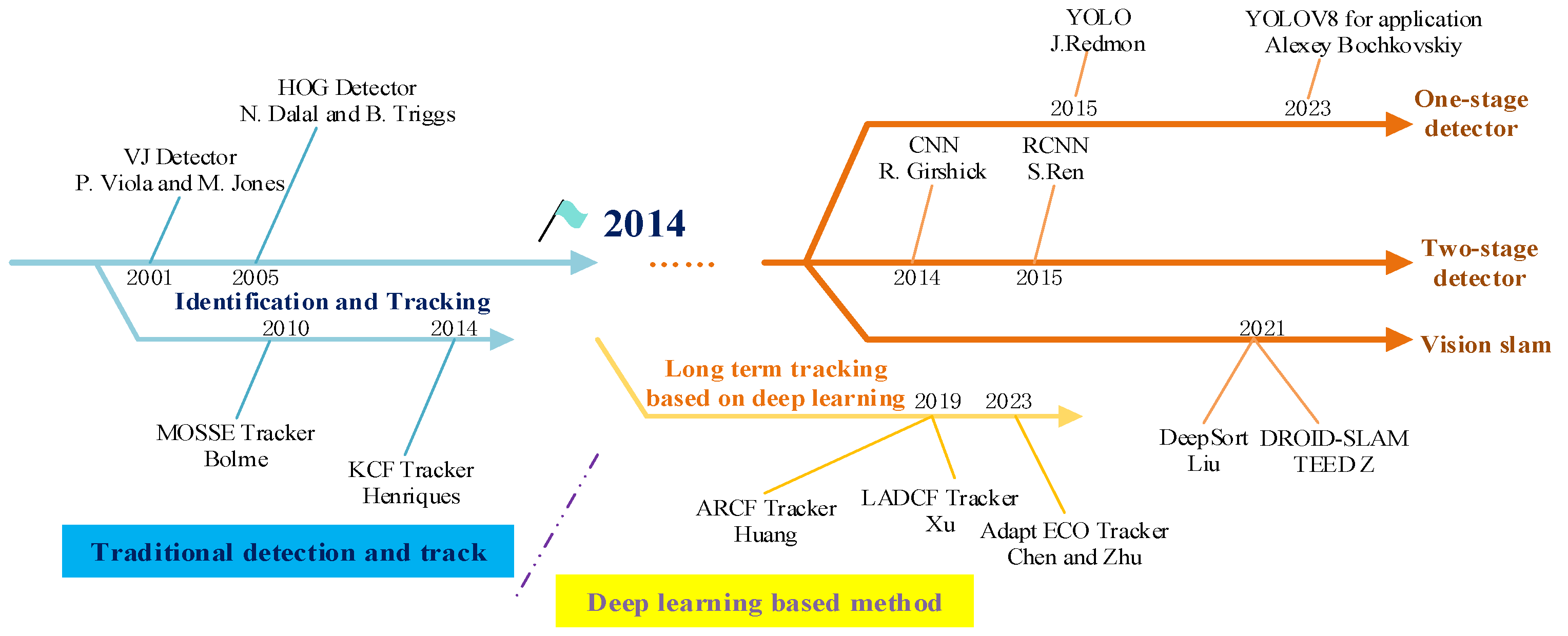

This article also presents a comprehensive survey of research on computer vision and a roadmap of milestone vision detectors, as illustrated in Figure 3. The authors have summarized and organized the key nodes proposed by the computer vision detection network, categorizing them into traditional methods and deep learning methods based on their chronological development. Post-2014, the classification was further refined into one-stage detectors, two-stage detectors, and visual SLAM, providing an intuitive reflection of the technological evolution. These highlights distinguish the current research landscape from the numerous general object detection reviews that have been published in recent years [24,25,26,27,28,29,30,31].

Section one serves as an introduction, encompassing the background, application, research status, and classification of the field. Section two delineates the computer vision system and elucidates the basic principles of traditional correlation filtering algorithms. Section three explores vision algorithms based on deep learning developed in recent years and compares them with traditional algorithms. This section also summarizes widely used detection datasets and the datasets we have created, along with statistics for each. Section four delves into visual SLAM (Simultaneous Localization and Mapping) algorithms that support computer vision, encompassing long-term visual tracking and the collaboration between multi-driverless robotic vehicles; current challenges and future research directions are also addressed. Section five concludes the article.

3. Review of Deep Learning-Based Computer Vision

3.1. Computer Vision Datasets and Metrics

The construction of larger datasets with reduced bias is a fundamental aspect of advancing computer vision algorithms. Over the past decade, a multitude of well-known datasets and benchmarks have been released, such as those from the PASCAL VOC Challenges [34,35] (e.g., VOC2007, VOC2012) and visual recognition challenges [36,37] (e.g., UAV20L, UAV123). In addition to general object detection, the past two decades have witnessed a proliferation of detection applications in specific domains, including pedestrian detection, face detection, text detection, traffic sign/light detection, and remote sensing target detection. Table 1 provides a compilation of some of the popular datasets for these specific detection tasks.

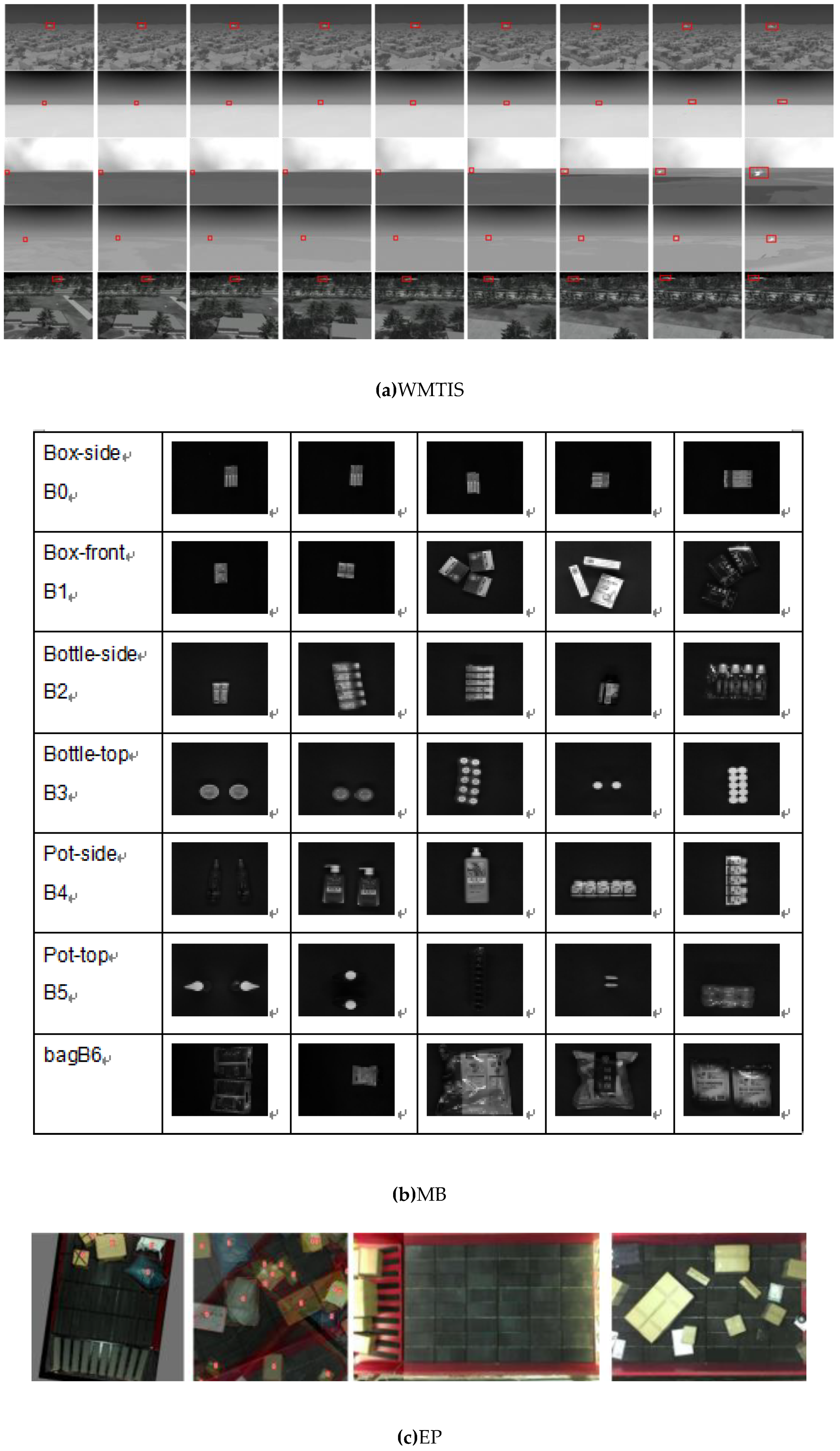

Concurrently, we have established our own datasets to meet the demands of in-depth research and practical applications. These datasets encompass various domains such as weak military targets in infrared scenes (WMTIS), the appearance of medical industry medicine boxes (MB), express packages (EP), and personnel and product detection and tracking in fully automated unmanned factories (FPP). Table 2 outlines the parameters and descriptions of these diverse datasets, while Figure 7 provides illustrative examples from each dataset.

Figure 7. The datasets we have built ourselves in recent years.


3.2. Review of Deep Learning Computer Vision Based on Convolutional Neural Networks

Deep learning has completely changed the field of computer vision: machines can now interpret and understand visual data in ways that were previously impractical. Deep neural networks, especially convolutional neural networks (CNNs), which are built to automatically and adaptively learn spatial hierarchies of features from vast volumes of image data, are at the core of this transformation. These networks are made up of several layers of neurons, each of which is trained to detect increasingly complex features, from edges and textures in the initial layers to higher-level objects and patterns in the deeper layers. Training these networks involves using large datasets of labeled images and the backpropagation algorithm to adjust the weights of the neurons so that the network can accurately classify or recognize objects. The same principles apply to tasks such as object detection, semantic segmentation, and image generation, which use deep neural networks to extract meaningful information from pixels and convert it into actionable insights or realistic images. As a result, applications ranging from surveillance and augmented reality to medical imaging and autonomous cars have advanced significantly.
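
As a small illustration of the claim that early layers respond to edges, the sketch below applies a single 3×3 filter to a toy image using the same sliding-window operation a convolutional layer performs per channel. The Sobel kernel is hand-picked here as an assumption for the demo; in a trained CNN the kernel weights are learned by backpropagation rather than fixed.

```python
def conv2d_valid(image, kernel):
    """Valid-mode 2D cross-correlation, the sliding-window operation of a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Hand-picked horizontal-gradient (Sobel) kernel standing in for a learned filter.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy image: dark on the left, bright on the right, so it contains one vertical edge.
image = [[0, 0, 9, 9, 9] for _ in range(4)]
print(conv2d_valid(image, SOBEL_X))  # [[36, 36, 0], [36, 36, 0]]
```

The response is large where the window straddles the edge and zero over the uniform region, which is the kind of low-level feature map that deeper layers combine into higher-level patterns.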

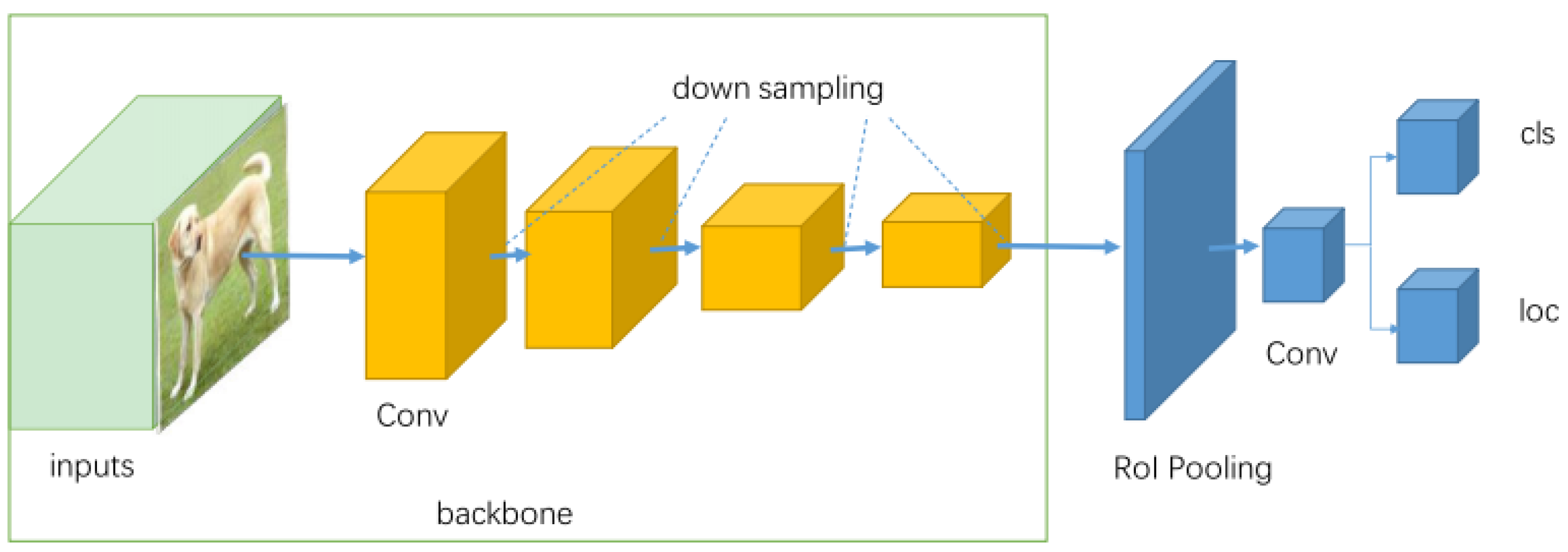

As mentioned in the preceding section, there are now two main types of core visual detectors. The first is the one-stage detector network, which is appropriate for relatively basic application scenarios requiring high real-time performance because of its rapid inference speed. YOLO and SSD [45] are among the most prevalent examples. Figure 8 shows the fundamental structure of this type of detector.

In the structure, the yellow part represents a series of convolutional layers in the backbone network with the same resolution, while the blue part is the RoI pooling layer, which generates feature maps for objects of the same size.

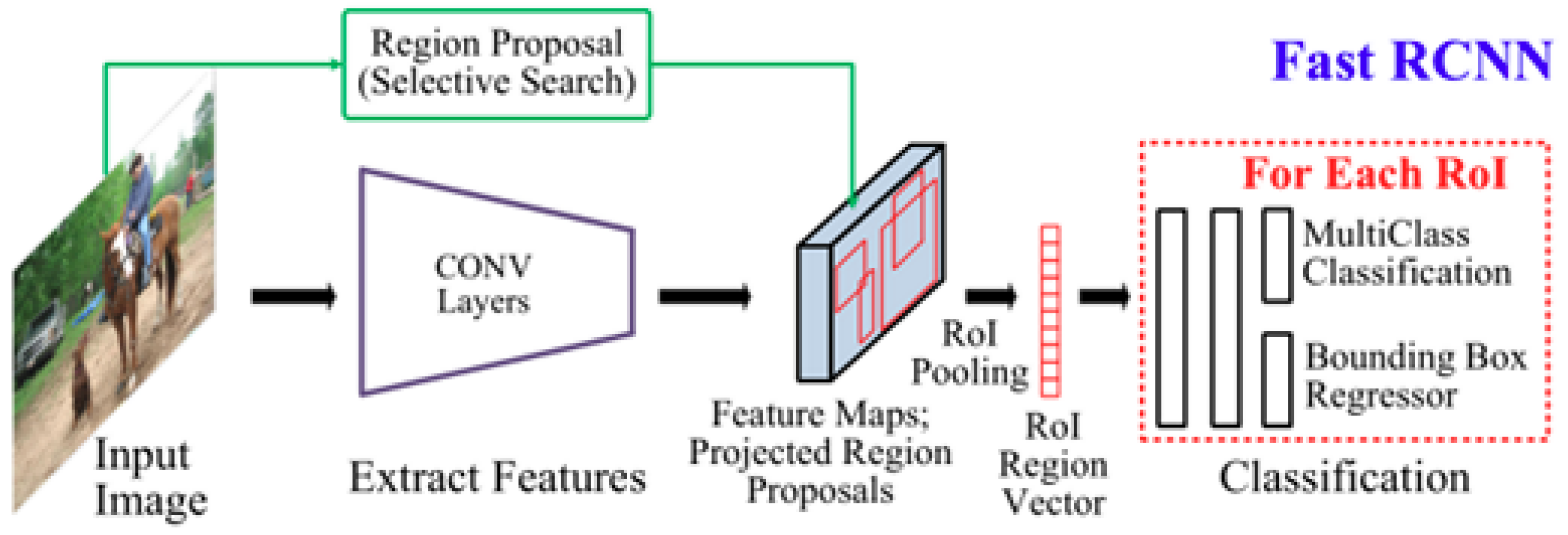

The second type is the two-stage detector network, exemplified by the R-CNN family (R-CNN, Fast R-CNN, and Faster R-CNN). This type complements the one-stage detectors with its higher localization precision and recognition accuracy. However, it has longer inference times and demands greater computational power, making it suitable for complex applications requiring high accuracy. The two stages of two-stage detectors are separated by the RoI (Region of Interest) pooling layer. The first stage, which in Faster R-CNN is the Region Proposal Network (RPN), proposes candidate object bounding boxes. In the second stage, the RoI pooling operation extracts features from each candidate box for subsequent classification and bounding box regression. Taking Fast R-CNN as an example, its basic structure is illustrated in Figure 9.

The R-CNN network is composed of four modules. The first module generates region proposals. The second module extracts fixed-length feature vectors from these proposed regions. The third module comprises a set of category-specific linear support vector machines. The final module performs bounding box regression. Fast R-CNN, as an advancement over its predecessor, begins by extracting features from the entire input image. It then obtains a fixed-size feature representation for each proposal, regardless of the proposal's scale, through the RoI (Region of Interest) pooling layer. These features serve as the input to the subsequent fully connected layers responsible for classification and bounding box regression. Performing classification and localization in a single pass significantly increases inference speed, thereby improving the overall efficiency of the model.
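
The RoI pooling step described above can be sketched as max pooling over a grid of bins laid across each proposal, so that proposals of any size yield an output of the same shape. The following is a simplified single-channel illustration; the bin-edge rounding is one reasonable choice made for this sketch and is not claimed to match any particular framework's implementation.

```python
def roi_max_pool(feature_map, roi, out_h, out_w):
    """Max-pool the region roi = (top, left, bottom, right), end-exclusive, into out_h x out_w."""
    top, left, bottom, right = roi
    roi_h, roi_w = bottom - top, right - left
    pooled = []
    for i in range(out_h):
        # Bin edges via integer floor/ceil; every bin covers at least one cell.
        y0 = top + (i * roi_h) // out_h
        y1 = max(top + ((i + 1) * roi_h + out_h - 1) // out_h, y0 + 1)
        row = []
        for j in range(out_w):
            x0 = left + (j * roi_w) // out_w
            x1 = max(left + ((j + 1) * roi_w + out_w - 1) // out_w, x0 + 1)
            row.append(max(feature_map[y][x] for y in range(y0, y1) for x in range(x0, x1)))
        pooled.append(row)
    return pooled


feature_map = [[1, 2, 3, 4, 5, 6],
               [7, 8, 9, 10, 11, 12],
               [13, 14, 15, 16, 17, 18],
               [19, 20, 21, 22, 23, 24]]
print(roi_max_pool(feature_map, (0, 0, 4, 6), 2, 2))  # [[9, 12], [21, 24]]
print(roi_max_pool(feature_map, (1, 1, 4, 4), 2, 2))  # [[15, 16], [21, 22]]
```

Both proposals, a 4×6 region and a 3×3 region, are reduced to the same 2×2 shape, which is what allows the fully connected layers that follow to accept them.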

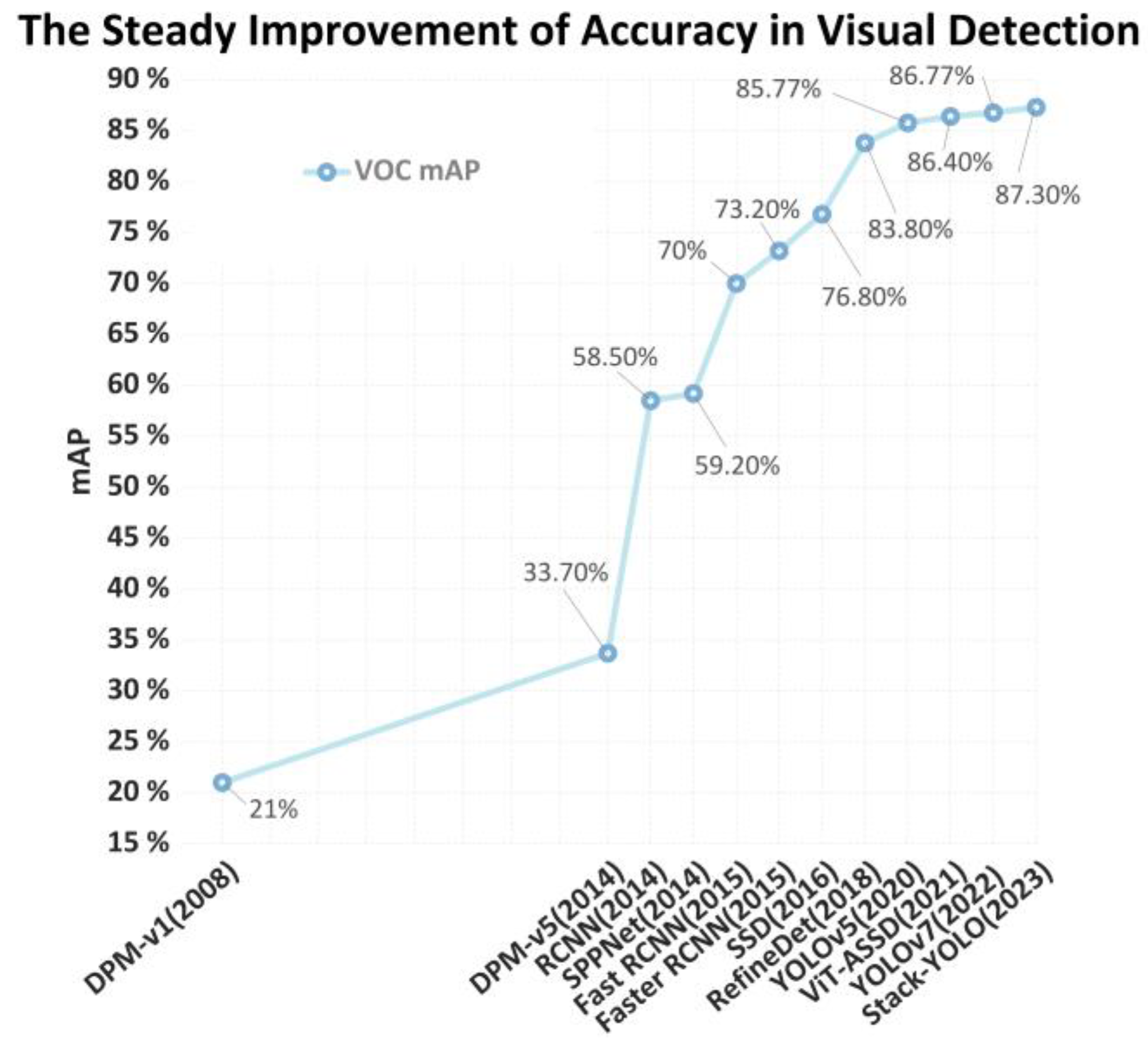

In recent years, a plethora of enhanced model algorithms have been applied to diverse public datasets. Experimental evaluation shows that, although they were not tested on entirely identical sub-datasets, their performance generally exhibits an upward trend over time. Table 3 presents the results of various algorithms on the MS COCO dataset, with Average Precision (AP) serving as the primary evaluation metric. Figure 10 illustrates the performance of several mainstream algorithms on the VOC dataset [29], showing a steady improvement in their performance.
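
Since AP is the headline metric here, a brief sketch of how it is typically computed may help. The code below computes intersection over union (IoU) for two boxes and the area under the precision-recall curve for a ranked list of detections. It is a simplified, single-class, single-threshold illustration: the MS COCO protocol additionally averages over IoU thresholds from 0.50 to 0.95 and over categories, and the function names are assumptions made for this sketch.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, num_ground_truth):
    """Area under the precision-recall curve using all-point interpolation.

    detections: list of (score, is_true_positive) pairs, already matched to
    ground truth at a chosen IoU threshold.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions, recalls = [], []
    tp = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        tp += 1 if is_tp else 0
        precisions.append(tp / rank)
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the best precision achieved at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))                                  # 1/7
print(average_precision([(0.9, True), (0.8, False), (0.7, True)], 2))   # 5/6
```

In the second example the false positive ranked second lowers precision at full recall to 2/3, giving AP = 0.5 × 1 + 0.5 × 2/3.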

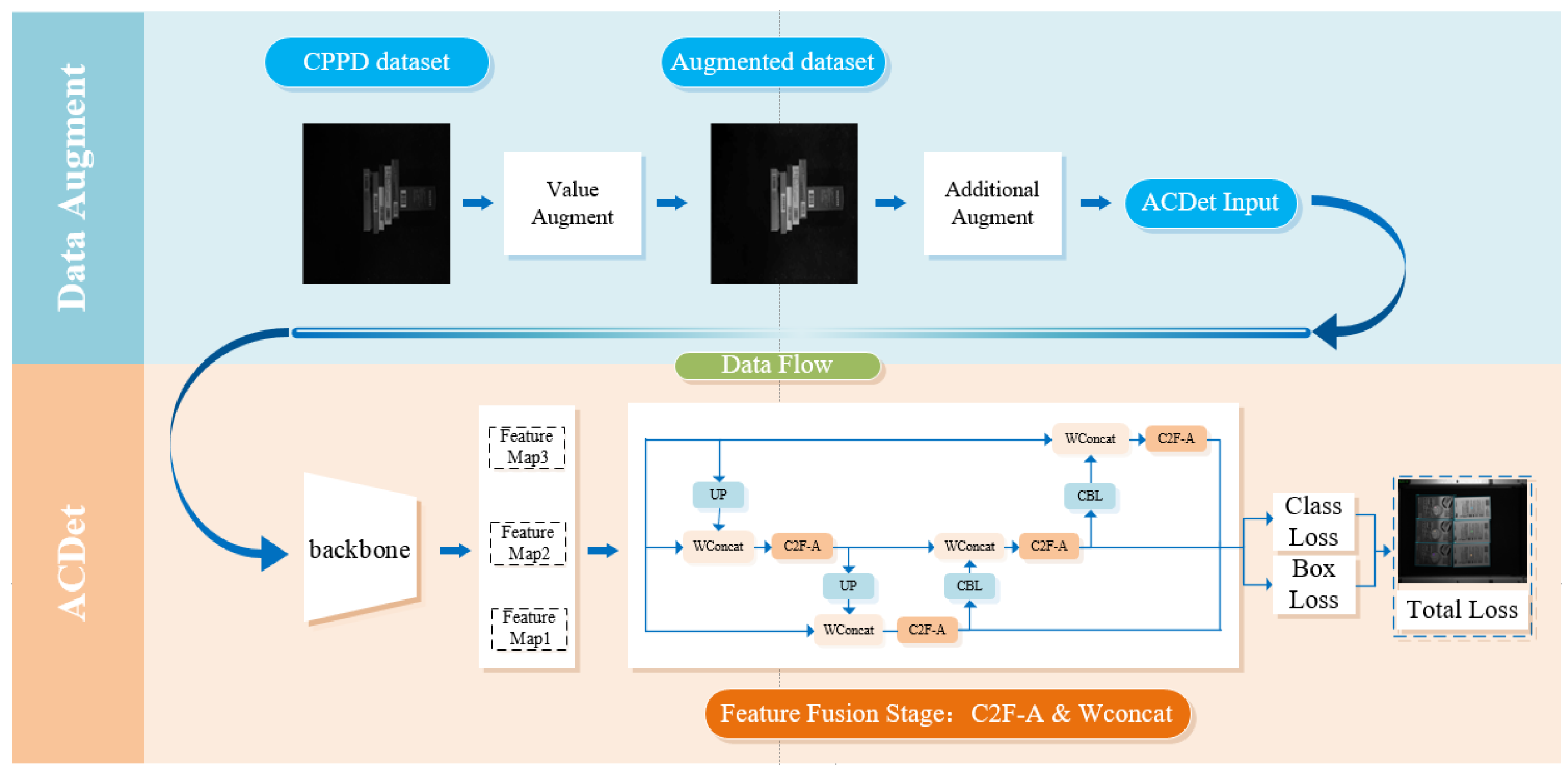

3.3. ACDet: A Vector Detection Model for Drug Packaging Based on Convolutional Neural Network

ACDet is an algorithmic solution proposed by the authors for computer vision detection in the medical industry, integrating the algorithms mentioned previously within the YOLOv8 framework. The medical sector, with its rapid automation advancements, exhibits a substantial demand for visual detection technologies. The medical industry encounters numerous challenges in drug detection, attributable to the complexity of pharmaceuticals, the diversity of packaging materials, and the variety of formats, as exemplified by the EP dataset introduced in Section 3.1. These challenges include uneven lighting and the need for high response speeds. To address these problems, we devised a universal lightweight vector detection model. By optimizing the multi-computation module C2F-A, the model amplifies attention across multiple dimensions of the gradient flow outputs, improving the sensing ability of the network and thereby enabling efficient and rapid classification of various drugs. The architecture of the model is illustrated in Figure 11.

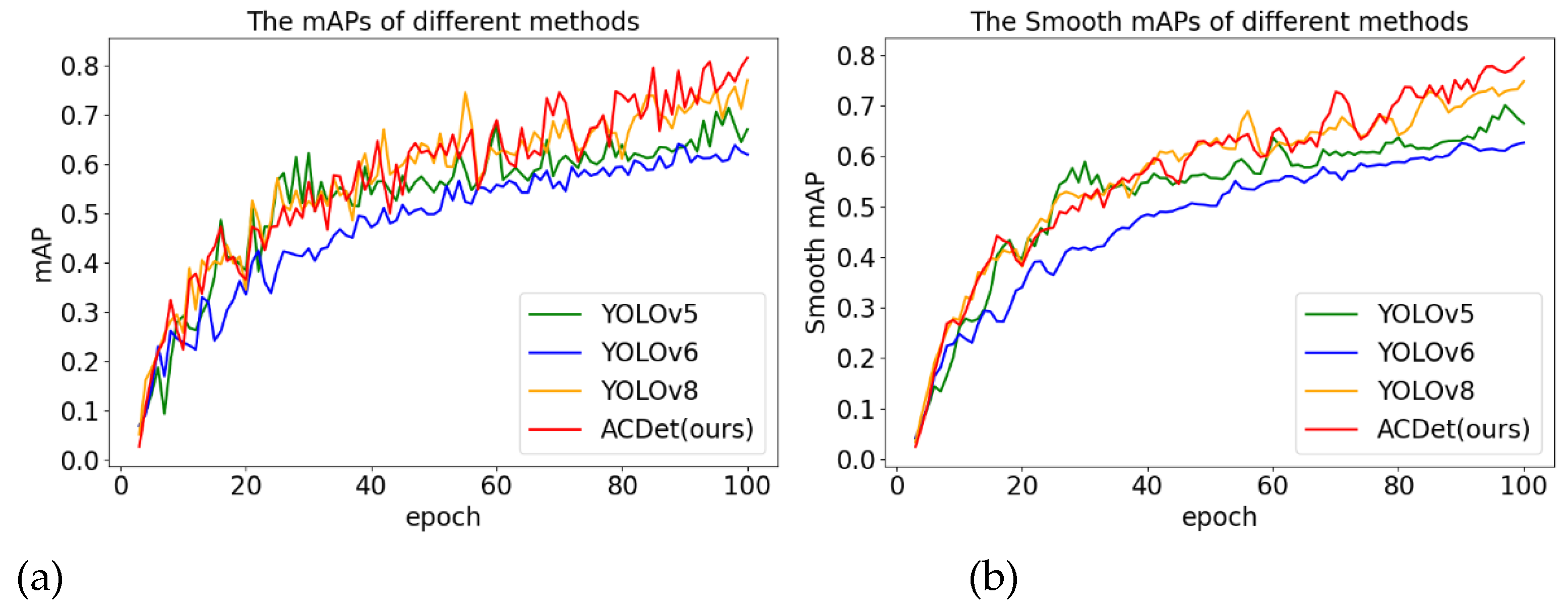

Upon testing, the model achieved an mAP of over 81% on the EP dataset. Under identical testing conditions, its performance surpasses that of YOLOv5 through YOLOv8 by 6.3% to 19.4%, as demonstrated in Figure 12. This vision system has been extensively deployed, generating substantial market value, as depicted in Figure 12. In practical applications, the system's accuracy can exceed 99.9%.

3.4. Exploration and Future Trends

The development of deep learning algorithms is intrinsically linked to the computational capabilities of hardware. In recent years, the rapid advancement of high-performance graphics processing units (GPUs) has accelerated this process, propelling the revolution in artificial intelligence. Notably, the recently introduced Blackwell architecture GPU, featuring 208 billion transistors and built on a custom, dual-reticle TSMC 4NP process, is a significant milestone. The interconnect between its two dies reaches up to 10 TB/s, which not only raises computational power to 20 petaflops (FP4 precision) but also reduces energy consumption to one twenty-fifth of its previous level. We believe that this technological breakthrough will have a profound impact on several aspects of computer vision, shaping its future direction and trends.

1. Enhanced Performance and Real-time Processing

High-performance hardware, such as the Blackwell architecture, equipped with parallel processing capabilities and high memory bandwidth, has dramatically accelerated the performance of computer vision algorithms. Its ability to handle complex matrix operations and parallel computations efficiently has enabled real-time processing of high-resolution images and videos. This is crucial for applications like autonomous vehicles, where split-second decisions based on visual data can be lifesaving. Furthermore, the enhanced performance of these latest cards has facilitated the development of more sophisticated and accurate computer vision models, pushing the boundaries of what is achievable in object detection, image recognition, and 3D reconstruction.

2. Energy Efficiency and Sustainability

The energy efficiency of high-performance hardware represents another critical factor influencing the future trajectory of computer vision. As AI models grow in complexity, the energy consumption associated with their training and inference processes has emerged as a pressing concern. Blackwell cards, designed with energy-efficient architectures, effectively reduce power consumption without compromising performance. This advancement not only facilitates the sustainable deployment of computer vision models but also extends their applicability to mobile and embedded systems, where power availability is constrained.

3. Democratization of AI Research

High-performance hardware has contributed to the democratization of AI research by making high-performance computing more accessible to a broader range of researchers and developers. The affordability and availability of these cards have lowered the entry barrier for individuals and small organizations to experiment with and develop computer vision models. This democratization fosters innovation and diversity in the field, as more people from different backgrounds can contribute to the advancement of computer vision technology.

4. Future Prospects

The future development of computer vision is intricately linked to advancements in GPU technology, with high-performance hardware playing a pivotal role. As these cards continue to evolve, we can anticipate further enhancements in processing speed, energy efficiency, and accessibility. This progress will facilitate the development of more sophisticated computer vision applications, such as augmented reality experiences, advanced surveillance systems, and more intelligent robotics. Moreover, the integration of AI-specific hardware features in Blackwell cards, such as tensor cores for deep learning, will further augment the capabilities of computer vision models, enabling more complex and efficient computations.

At the same time, we posit that unbounded growth in data volume leads to exponential growth in the number of computing nodes, and that addressing this issue solely through hardware advancements is not sustainable. Current deep learning recognition algorithms depend excessively on training datasets. Consequently, exploring environmentally friendly, low-carbon models equipped with forgetting and screening mechanisms is one of the critical development directions in the field.

4. Review of Visual Simultaneous Localization and Mapping (SLAM) Algorithms

Visual SLAM (Simultaneous Localization and Mapping) plays a pivotal role in driverless robotic vehicles. By leveraging computer vision techniques, these autonomous vehicles can dynamically construct a map of their surroundings while simultaneously determining their position within that map. This capability is crucial for navigating complex environments, avoiding obstacles, and ensuring efficient route planning. In driverless vehicles, visual SLAM contributes to sustainable operation by optimizing energy consumption through intelligent path planning and by reducing the reliance on energy-intensive sensors. Furthermore, the integration of visual SLAM in these vehicles supports the development of advanced driver-assistance systems (ADAS) and autonomous driving technologies, thereby promoting safety and enhancing the overall efficiency of transportation systems.

4.1. The Basic Principles of SLAM

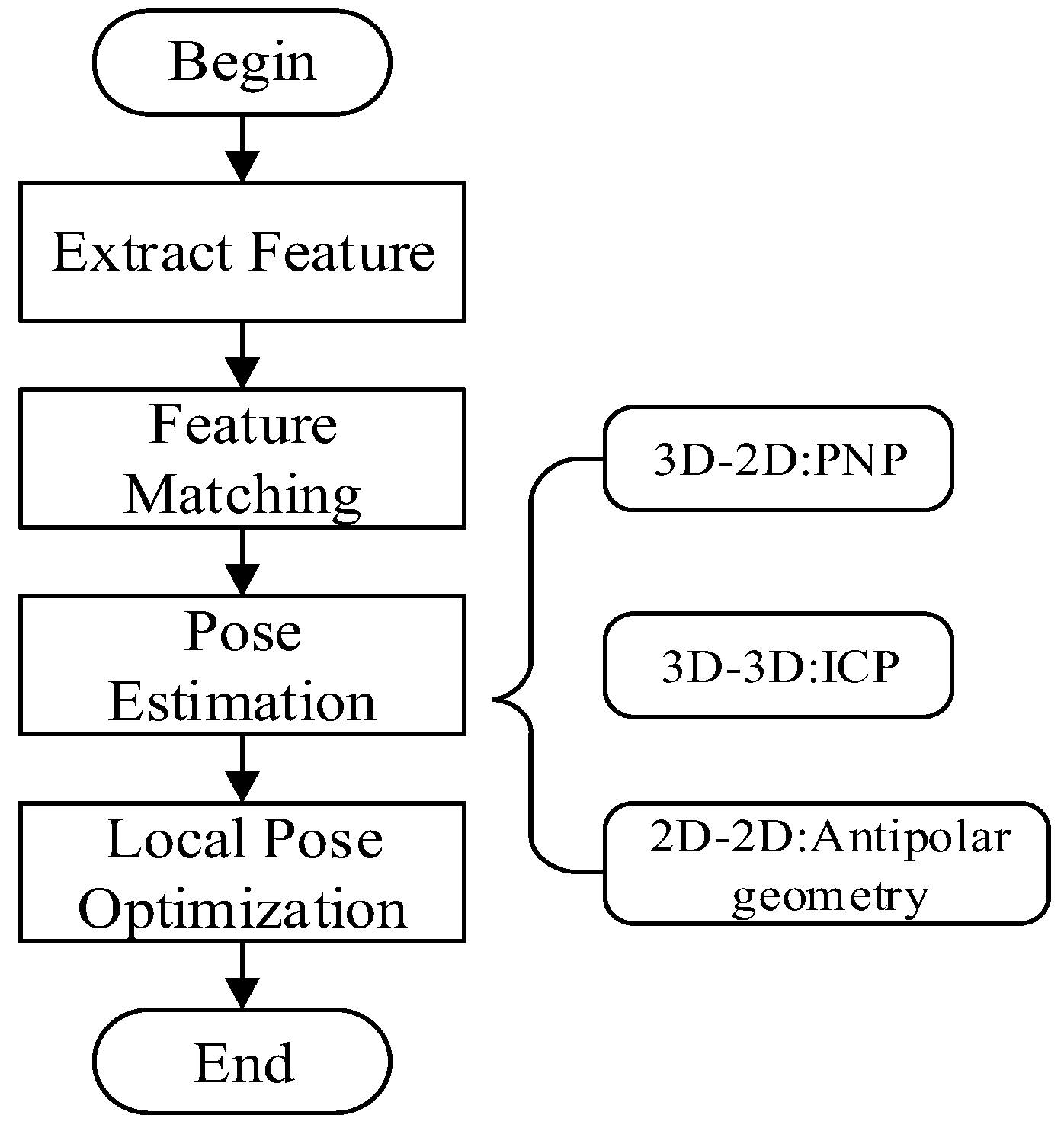

Visual SLAM digitizes real-world scenes by projecting 3D spatial points onto 2D pixel coordinates in the camera coordinate system, primarily utilizing the pinhole camera model. After the 3D space is projected onto the normalized image plane, distortion correction becomes necessary. Once processed, this data is fed into the visual front end for VO (Visual Odometry) processing. The primary function of VO is to estimate the camera's motion roughly based on a series of adjacent image information and then provide this coarse information to the back end. Traditional visual odometry methods are mainly categorized into feature-based and direct methods, with feature-based visual odometry being the most widely utilized and developed. This process involves extracting feature points from each image, finding descriptors for feature matching, and then estimating different camera poses to obtain the corresponding visual odometry, as illustrated in Figure 14.
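
The pinhole projection and the distortion step mentioned above can be sketched as follows. This is a minimal illustration assuming a point already expressed in camera coordinates, intrinsics `fx`, `fy`, `cx`, `cy`, and a two-coefficient radial distortion model (`k1`, `k2`); the numeric values are hypothetical. Note that the code applies the distortion model in the forward direction, whereas distortion correction inverts it.

```python
def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point in camera coordinates to pixel coordinates (u, v).

    Uses the pinhole model with optional radial distortion coefficients k1, k2.
    """
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    x, y = X / Z, Y / Z                      # normalized image plane
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2     # radial distortion factor
    return fx * x * scale + cx, fy * y * scale + cy


# Hypothetical intrinsics for a 640 x 480 camera.
print(project_point((0.5, -0.25, 2.0), fx=500.0, fy=500.0, cx=320.0, cy=240.0))  # (445.0, 177.5)
```

With both distortion coefficients at zero the function reduces to the ideal pinhole model, which makes it easy to see how much a given `k1` moves a pixel away from its undistorted position.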

The image information captured by different cameras varies, and so do the methods used for pose estimation. For a monocular camera, which provides only 2D pixel coordinates, motion is estimated from 2D-2D correspondences using epipolar geometry. For stereo or depth cameras, which can recover 3D point coordinates, the Iterative Closest Point (ICP) method is applied to 3D-3D correspondences. If both 3D point coordinates and their 2D projections in the camera image are available, the two pieces of information can be combined using the Perspective-n-Point (PnP) method.
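
As an illustration of the alignment step at the heart of ICP, the sketch below recovers the rigid transform between two point sets whose correspondences are already known. It is deliberately simplified: it works in 2D, where the least-squares rotation has a closed form via `atan2`, whereas the 3D case is usually solved with an SVD, and a full ICP loop would alternate this step with nearest-neighbor matching. The function name is an assumption for this sketch.

```python
import math


def align_2d(source, target):
    """Least-squares rotation angle and translation mapping source points onto target points.

    Points are (x, y) tuples and source[i] is assumed to correspond to target[i].
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(p[0] for p in target) / n
    ty = sum(p[1] for p in target) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy      # centered source point
        bx, by = qx - tx, qy - ty      # centered target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return theta, (tx - (c * sx - s * sy), ty - (s * sx + c * sy))


# Synthetic check: rotate a triangle by 30 degrees and translate it by (2, -1).
angle = math.pi / 6
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(math.cos(angle) * x - math.sin(angle) * y + 2.0,
        math.sin(angle) * x + math.cos(angle) * y - 1.0) for x, y in src]
theta, translation = align_2d(src, dst)
print(math.degrees(theta), translation)
```

Centering both point sets first decouples rotation from translation, which is why the rotation can be solved on its own and the translation recovered afterwards from the centroids.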

After the front-end obtains the camera's motion and observation equation, as shown in Equation (10):

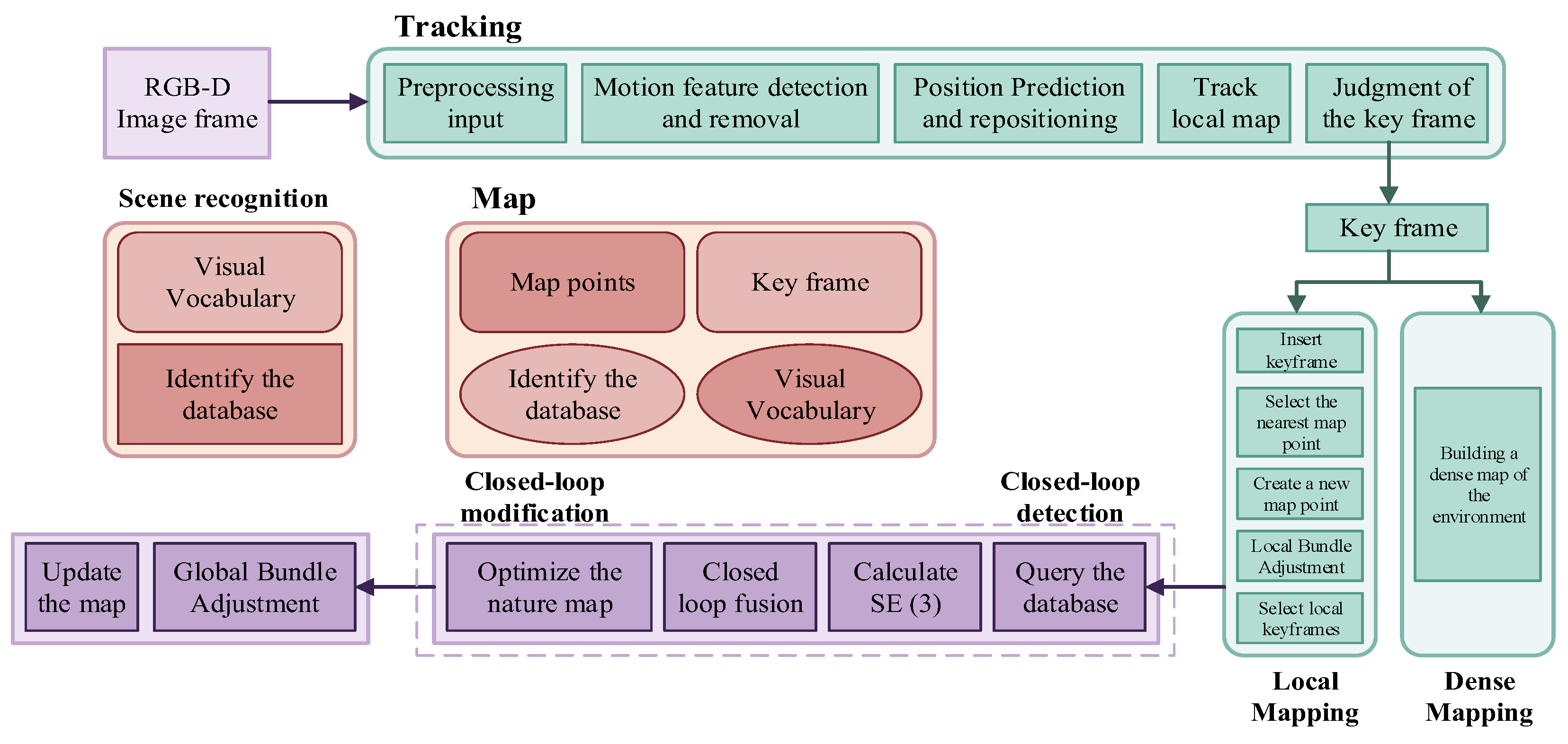

back-end optimization is conducted to eliminate the accumulated errors and uncertainties caused by noise. This is primarily achieved through graph optimization, where the objective function can be minimized using methods such as Gauss-Newton or Levenberg-Marquardt to obtain better camera poses. Finally, loop closure detection determines whether the trajectory forms a loop by comparing the similarity between previous frames and the current frame. Integrating visual SLAM and tracking algorithms with sensing multi-driverless vehicles can result in a system with autonomous intelligence, as illustrated in Figure 15.
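
To show what a Gauss-Newton iteration looks like in practice, the sketch below solves a toy nonlinear least-squares problem: estimating a 2D position from range measurements to known landmarks. This is not the objective function of the paper's back end; the problem, the landmark layout, and the function name are assumptions chosen so that the normal equations stay 2×2 and can be solved by hand.

```python
import math


def gauss_newton_localize(landmarks, ranges, initial, iterations=20, tol=1e-20):
    """Estimate a 2D position from ranges to known landmarks with Gauss-Newton."""
    x, y = initial
    for _ in range(iterations):
        h11 = h12 = h22 = g1 = g2 = 0.0
        for (lx, ly), measured in zip(landmarks, ranges):
            dx, dy = x - lx, y - ly
            predicted = math.hypot(dx, dy)
            if predicted == 0.0:
                continue  # Jacobian is undefined exactly at a landmark; skip this term
            r = predicted - measured                  # residual
            jx, jy = dx / predicted, dy / predicted   # Jacobian of the residual
            h11 += jx * jx
            h12 += jx * jy
            h22 += jy * jy
            g1 += jx * r
            g2 += jy * r
        det = h11 * h22 - h12 * h12
        if abs(det) < 1e-12:
            break  # normal equations are singular; stop
        # Solve (J^T J) delta = -J^T r with Cramer's rule.
        step_x = (-g1 * h22 + g2 * h12) / det
        step_y = (-g2 * h11 + g1 * h12) / det
        x, y = x + step_x, y + step_y
        if step_x * step_x + step_y * step_y < tol:
            break
    return x, y


landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_position = (3.0, 4.0)
ranges = [math.hypot(true_position[0] - lx, true_position[1] - ly) for lx, ly in landmarks]
print(gauss_newton_localize(landmarks, ranges, initial=(5.0, 5.0)))
```

Each iteration linearizes the residuals around the current estimate and solves the resulting normal equations; Levenberg-Marquardt differs mainly in adding a damping term to the diagonal of `J^T J` before solving.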

An RGB-D camera perceives the surrounding environment, while encoders and gyroscopes sense the internal operating state of the mobile robot. In the front end, optical flow values are calculated and predicted from the RGB and depth images obtained by the RGB-D camera. A dynamic target mask is generated by fusing the difference in depth values, thereby eliminating feature points on dynamic targets. Subsequently, based on the remaining static feature points, collinear and coplanar relationships are identified to extend static lines and planes. In the back-end optimization phase, the pose is optimized by minimizing the residuals of static point features and static line features, thereby improving localization accuracy and enhancing navigation efficiency.

4.2. The Basic Principles of SLAM

In recent years, the combination of deep learning and computer vision has achieved remarkable results in many tasks. Vision-based SLAM systems, grounded in computer vision, provide a broad development space for the application of neural networks in this field. Similar to object detection algorithms based on deep learning, the first step for SLAM systems based on deep learning is also the establishment of datasets. Table 4 shows some of the common public datasets in recent years.

In recent years, a significant amount of research has been dedicated to optimizing the odometry of visual SLAM using deep learning methods, which are mainly categorized into supervised and unsupervised learning-based visual odometry approaches. Wang et al. [52] introduced the first end-to-end monocular visual odometry method based on deep recurrent convolutional neural networks, which learns directly from image sequences to achieve more accurate and stable visual odometry estimation. Xiao et al. [53] utilized a convolutional neural network to construct an SSD object detector combined with prior knowledge, enabling semantic-level detection of dynamic objects in a new detection thread. By employing a selective tracking algorithm to handle the feature points of dynamic objects, the pose estimation error caused by incorrect matching is significantly reduced. Duan et al. [54] proposed a keyframe retrieval method based on deep feature matching, which treats the local navigation map as an image and the keyframes as keypoints of the map image. Convolutional neural networks are used to extract descriptors of keyframes for loop closure detection. DROID-SLAM, proposed in 2021, is a novel SLAM system based on deep learning. Its front end performs feature extraction and computes the optical flow field, selects the three keyframes with the highest degree of co-visibility based on that field, and then iteratively updates the pose and depth of the current keyframe based on the co-visibility relationship. The back end optimizes all keyframes using Bundle Adjustment (BA). Each time an update iteration is performed, a frame graph is reconstructed for all keyframes. Compared to previous approaches, the robustness, generalization ability, and success rate have been greatly improved, achieving end-to-end visual SLAM using deep learning methods.
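
Descriptor-based loop closure detection, as used in the keyframe retrieval approach above, reduces at its simplest to comparing the current keyframe's descriptor with those of earlier keyframes. The sketch below uses cosine similarity for that comparison. It is a generic illustration, not the method of [54]: the descriptors are hand-written toy vectors standing in for CNN outputs, and the threshold and `min_gap` parameters are assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def find_loop_candidate(descriptors, current_index, threshold=0.9, min_gap=3):
    """Return the index of the most similar earlier keyframe above the threshold, or None.

    Keyframes closer than min_gap to the current one are skipped, because
    temporally adjacent frames are trivially similar.
    """
    best_index, best_score = None, threshold
    current = descriptors[current_index]
    for i in range(0, current_index - min_gap + 1):
        score = cosine_similarity(descriptors[i], current)
        if score >= best_score:
            best_index, best_score = i, score
    return best_index


# Toy descriptors: keyframe 5 looks almost identical to keyframe 0.
descriptors = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 1], [1, 1, 0], [0.98, 0.05, 0.0]]
print(find_loop_candidate(descriptors, 5))  # 0
print(find_loop_candidate(descriptors, 4))  # None
```

A detected candidate would normally be verified geometrically before a loop constraint is added to the graph, since appearance similarity alone can produce false matches in repetitive environments.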

4.3. Discuss the Current Challenges and Future Research Directions of Visual SLAM

Visual Simultaneous Localization and Mapping (SLAM) faces several challenges, including robustness to varying lighting conditions, dynamic environments, feature extraction and matching, computational efficiency, and scalability. Future research trends include the integration of deep learning techniques for improved feature extraction and object recognition, the development of more efficient algorithms for real-time processing, the fusion of multiple sensors for enhanced accuracy and robustness, and the exploration of semantic SLAM for a deeper understanding of the environment. However, current deep SLAM methods still have the following shortcomings:

1. Data volume and labeling: Deep learning necessitates large-scale data and accurate labeling, yet acquiring large-scale SLAM datasets poses a significant challenge.

2. Low real-time performance: Visual SLAM often operates under real-time constraints, and even input from low-frame-rate, low-resolution cameras can generate a substantial amount of data, requiring efficient processing and inference algorithms.

3. Generalization ability: A critical consideration is whether the model can accurately locate and construct maps in new environments or unseen scenes.

Future advancements in deep SLAM methods are expected to increasingly emulate human perception and cognitive patterns, making strides in high-level map construction, human-like perception and localization, active SLAM methods, integration with task requirements, and storage and retrieval of memory. These developments will aid robots in achieving diverse tasks and self-navigation capabilities. The end-to-end training mode and information processing approach, which align with the human cognitive process, hold significant potential.

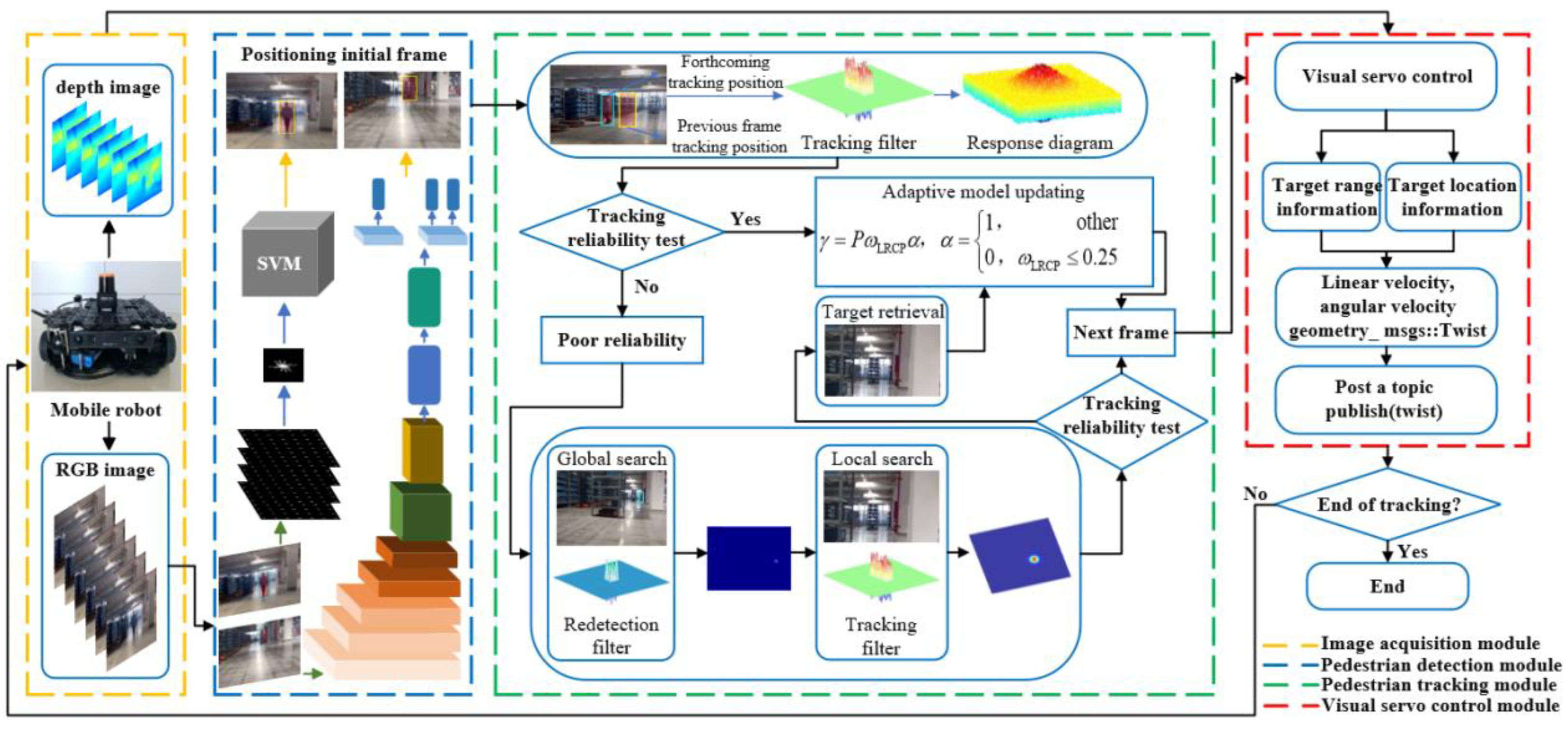

4.4. Visual Framework for Unmanned Factory Applications with Multi-Driverless Robotic Vehicles and UAVs

Based on the methods summarized in this article, we have discussed and proposed a complete vision system for complex intelligent factory environments. This system can be deployed on multiple green energy robots, drones, or vehicles, and can perform a series of tasks such as path planning, automatic navigation, intelligent obstacle avoidance, cargo grabbing, human following, emergency rescue, and more. It includes a computer vision detection module based on deep learning, comprising a composite detection and recognition module (including SVM classifiers and EPDet), a vision tracking module, a vision fusion laser SLAM positioning module, and a vehicle chassis drive control module, as shown in Figure 16. This can provide a reference for the application of computer vision technology in the industrial field.

5. Conclusions

With the rapid advancement of computer hardware, the potential for significant development in the field of computer vision is evident, as demonstrated in this paper. Computer vision serves as a foundational element in the realm of intelligent systems and AI technologies. It can effectively replace sensors as the primary source of data input, acting as a pair of intelligent eyes that can more adeptly process data sources to drive a range of unmanned actions, such as autonomous driving in sensing driverless vehicles, obstacle avoidance, and detection tracking. The potential ceiling for this field is exceptionally high.

Furthermore, this article provides a systematic and comprehensive overview of the concepts and application directions of computer vision algorithms. It encompasses a range from traditional detection algorithms to deep learning detection algorithms based on convolutional neural networks, and further extends to visual SLAM, deep visual SLAM, and long-term tracking algorithms, offering a generalized description of their principles. It also delineates the principles, characteristics, and test conclusions of typical detection and tracking models. Additionally, the article outlines a system framework proposed based on existing computer vision algorithms, which has achieved notable results.

The future development direction of this field is also explored in depth in this paper. The current mainstream deep learning algorithms exhibit a high dependency on datasets and hardware. Concurrently, there is an increasing demand for high-precision real-time performance in current systems. Developing models that are "healthy" and "green", incorporating a forgetting mechanism to promote low-carbon environmental protection, represents one of the significant challenges for the future.