Submitted: 17 May 2024

Posted: 19 May 2024


Abstract

Keywords:

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Data Analysis

3.2. Model Analysis

3.3. Evaluation Metrics

4. Experimental Analysis

4.1. Initial Hypothesis Testing

- Back-Translated Set 1: We use Chinese (Mandarin) and Dutch as intermediate languages. Because Chinese belongs to a different language family from English, its inclusion is expected to introduce more diverse structural variation into the paraphrased questions.

- Back-Translated Set 2: We use Vietnamese and Indonesian as intermediate languages; each belongs to a different language family, both from English and from the other. We hypothesize that back-translating through several languages of such contrasting origins may substantially alter question meanings, resulting in poor paraphrases.

- Back-Translated Set 3: French is used as the sole intermediate language, in line with prior work [26]. We expect that a single pivot language closely related to the questions' original language (English) will produce only minimal changes, and will therefore add little diversity to the dataset.

- Manually Paraphrased Set: Questions are manually paraphrased to establish a human baseline for assessing the quality of other methods.
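The three back-translated sets differ only in their pivot languages. The sketch below shows one way they could be generated, assuming that each set chains its pivots in the listed order (for Set 1: English → Chinese → Dutch → English) and that `translate(text, src, tgt)` is a hypothetical wrapper around whatever machine-translation system is used; this section names neither the MT system nor whether the pivots are chained or applied separately. The `is_unchanged` helper is likewise an assumption about how an "Unchanged" paraphrase might be detected (identical to the original after lowercasing and whitespace normalization).

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical MT interface (assumption): translate(text, source_lang, target_lang) -> text.
Translator = Callable[[str, str, str], str]

# Pivot chains per set, as ISO 639-1 codes; chaining in this order is an assumption.
PIVOT_CHAINS: Dict[str, List[str]] = {
    "Back-Translated Set 1": ["zh", "nl"],  # Chinese (Mandarin), Dutch
    "Back-Translated Set 2": ["vi", "id"],  # Vietnamese, Indonesian
    "Back-Translated Set 3": ["fr"],        # French only
}


def back_translate(question: str, pivots: Sequence[str],
                   translate: Translator, source: str = "en") -> str:
    """Translate through each pivot language in turn, then back to the source language."""
    text, lang = question, source
    for pivot in pivots:
        text = translate(text, lang, pivot)
        lang = pivot
    return translate(text, lang, source)


def is_unchanged(original: str, paraphrase: str) -> bool:
    """Flag paraphrases that equal the original up to case and whitespace."""
    def norm(s: str) -> str:
        return " ".join(s.lower().split())
    return norm(original) == norm(paraphrase)


def build_sets(questions: Sequence[str], translate: Translator) -> Dict[str, List[str]]:
    """Produce one paraphrase per question for every pivot configuration."""
    return {name: [back_translate(q, chain, translate) for q in questions]
            for name, chain in PIVOT_CHAINS.items()}
```

Injecting `translate` as a parameter keeps the pipeline independent of any particular MT service and lets a stub translator stand in for testing.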

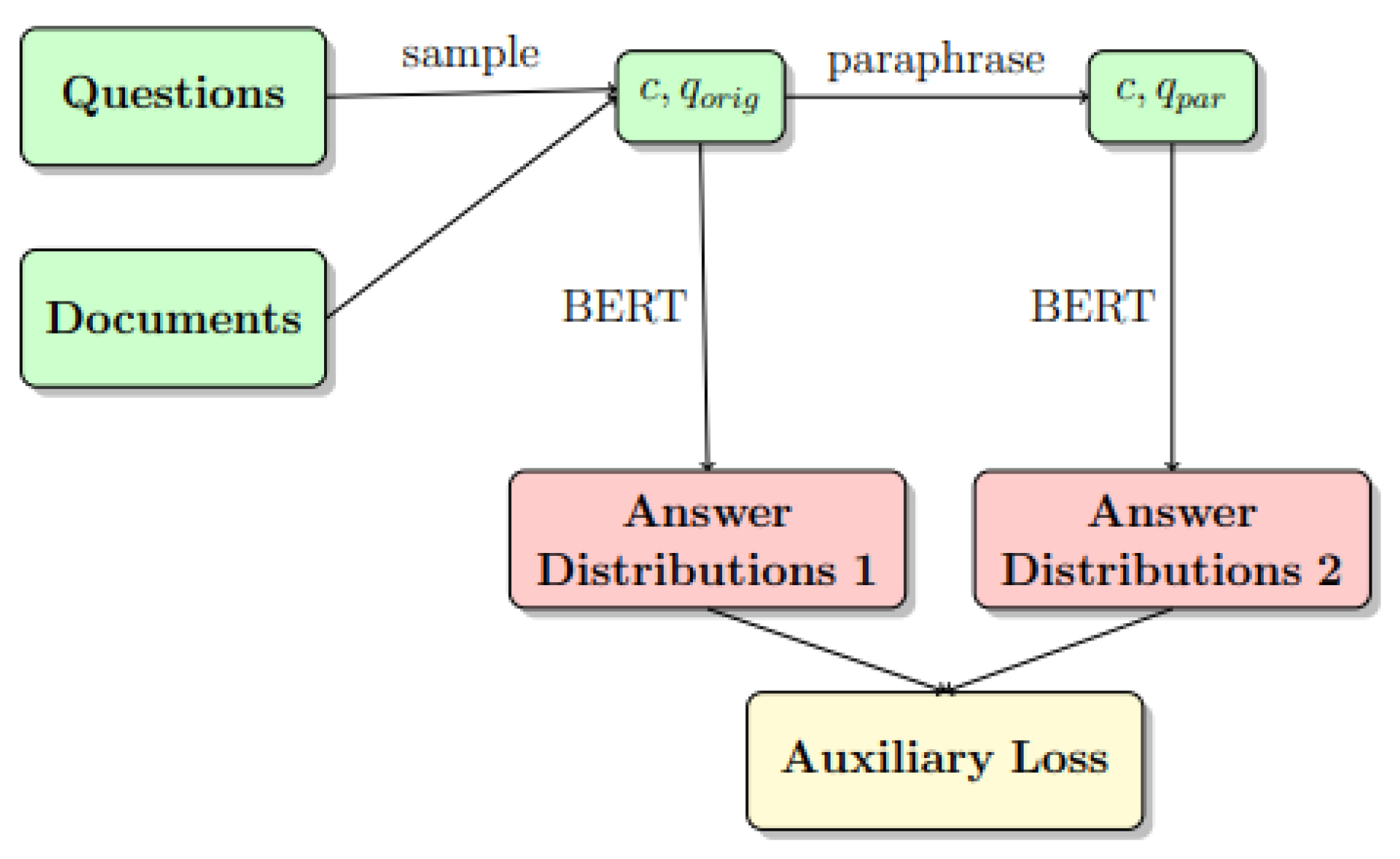

4.2. Augmented Model Experiments

5. Conclusion and Future Work

References

- V. K. Kanaparthi, “Navigating Uncertainty: Enhancing Markowitz Asset Allocation Strategies through Out-of-Sample Analysis,” Dec. 2023. [CrossRef]

- V. K. Kanaparthi, “Examining the Plausible Applications of Artificial Intelligence & Machine Learning in Accounts Payable Improvement,” FinTech, vol. 2, no. 3, pp. 461–474, Jul. 2023. [CrossRef]

- V. Kanaparthi, “Transformational application of Artificial Intelligence and Machine learning in Financial Technologies and Financial services: A bibliometric review,” Jan. 2024. [CrossRef]

- V. Kanaparthi, “AI-based Personalization and Trust in Digital Finance,” Jan. 2024, Accessed: Feb. 04, 2024. [Online]. Available: https://arxiv.org/abs/2401.15700v1.

- V. Kanaparthi, “Exploring the Impact of Blockchain, AI, and ML on Financial Accounting Efficiency and Transformation,” Jan. 2024, Accessed: Feb. 04, 2024. [Online]. Available: https://arxiv.org/abs/2401.15715v1.

- P. Kaur, G. S. Kashyap, A. Kumar, M. T. Nafis, S. Kumar, and V. Shokeen, “From Text to Transformation: A Comprehensive Review of Large Language Models’ Versatility,” Feb. 2024, Accessed: Mar. 21, 2024. [Online]. Available: https://arxiv.org/abs/2402.16142v1.

- G. S. Kashyap, A. Siddiqui, R. Siddiqui, K. Malik, S. Wazir, and A. E. I. Brownlee, “Prediction of Suicidal Risk Using Machine Learning Models,” Dec. 25, 2021. Accessed: Feb. 04, 2024. [Online]. Available: https://papers.ssrn.com/abstract=4709789.

- N. Marwah, V. K. Singh, G. S. Kashyap, and S. Wazir, “An analysis of the robustness of UAV agriculture field coverage using multi-agent reinforcement learning,” International Journal of Information Technology (Singapore), vol. 15, no. 4, pp. 2317–2327, May 2023. [CrossRef]

- S. Wazir, G. S. Kashyap, and P. Saxena, “MLOps: A Review,” Aug. 2023, Accessed: Sep. 16, 2023. [Online]. Available: https://arxiv.org/abs/2308.10908v1.

- S. Naz and G. S. Kashyap, “Enhancing the predictive capability of a mathematical model for pseudomonas aeruginosa through artificial neural networks,” International Journal of Information Technology, pp. 1–10, Feb. 2024. [CrossRef]

- G. S. Kashyap, A. E. I. Brownlee, O. C. Phukan, K. Malik, and S. Wazir, “Roulette-Wheel Selection-Based PSO Algorithm for Solving the Vehicle Routing Problem with Time Windows,” Jun. 2023, Accessed: Jul. 04, 2023. [Online]. Available: https://arxiv.org/abs/2306.02308v1.

- G. S. Kashyap et al., “Revolutionizing Agriculture: A Comprehensive Review of Artificial Intelligence Techniques in Farming,” Feb. 2024. [CrossRef]

- C. Buck et al., “Ask the right questions: Active question reformulation with reinforcement learning,” in 6th International Conference on Learning Representations, ICLR 2018 - Conference Track Proceedings, May 2018. Accessed: May 14, 2024. [Online]. Available: https://arxiv.org/abs/1705.07830v3.

- M. Vila, M. A. Martí, and H. Rodríguez, “Is This a Paraphrase? What Kind? Paraphrase Boundaries and Typology,” Open Journal of Modern Linguistics, vol. 04, no. 01, pp. 205–218, Feb. 2014. [CrossRef]

- M. I. and W. H., “Exploring the Recent Trends of Paraphrase Detection,” International Journal of Computer Applications, vol. 182, no. 46, pp. 1–5, 2019. [CrossRef]

- Z. Yang, Z. Dai, Y. Yang, J. Carbonell, R. Salakhutdinov, and Q. V. Le, “XLNet: Generalized autoregressive pretraining for language understanding,” in Advances in Neural Information Processing Systems, Jun. 2019, vol. 32. Accessed: May 14, 2024. [Online]. Available: https://arxiv.org/abs/1906.08237v2.

- W. B. Dolan and C. Brockett, “Automatically Constructing a Corpus of Sentential Paraphrases,” Proceedings of the Third International Workshop on Paraphrasing (IWP2005), pp. 9–16, 2005, Accessed: May 14, 2024. [Online]. Available: http://www.cogsci.princeton.edu/~wn/.

- Z. Imtiaz, M. Umer, M. Ahmad, S. Ullah, G. S. Choi, and A. Mehmood, “Duplicate Questions Pair Detection Using Siamese MaLSTM,” IEEE Access, vol. 8, pp. 21932–21942, 2020. [CrossRef]

- M. T. Ribeiro, S. Singh, and C. Guestrin, “Semantically equivalent adversarial rules for debugging NLP models,” in ACL 2018 - 56th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference (Long Papers), 2018, vol. 1, pp. 856–865. [CrossRef]

- L. Dong, J. Mallinson, S. Reddy, and M. Lapata, “Learning to paraphrase for question answering,” in EMNLP 2017 - Conference on Empirical Methods in Natural Language Processing, Proceedings, Aug. 2017, pp. 875–886. [CrossRef]

- M. Vila, M. A. Martí, and H. Rodríguez, “Paraphrase concept and typology. A linguistically based and computationally oriented approach,” pp. 93–100, 2013, Accessed: May 15, 2024. [Online]. Available: http://www.redalyc.org/articulo.oa?id=515751746010.

- A. Barrón-Cedeño, M. Vila, M. Antònia Martí, and P. Rosso, “Plagiarism Meets Paraphrasing: Insights for the Next Generation in Automatic Plagiarism Detection,” Computational Linguistics, vol. 39, no. 4, pp. 917–947, Dec. 2013. [CrossRef]

- L. Duong, T. Cohn, S. Bird, and P. Cook, “Low resource dependency parsing: Cross-lingual parameter sharing in a neural network parser,” in ACL-IJCNLP 2015 - 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing of the Asian Federation of Natural Language Processing, Proceedings of the Conference, 2015, vol. 2, pp. 845–850. [CrossRef]

- V. Sanh, T. Wolf, and S. Ruder, “A hierarchical multi-task approach for learning embeddings from semantic tasks,” in 33rd AAAI Conference on Artificial Intelligence, AAAI 2019, 31st Innovative Applications of Artificial Intelligence Conference, IAAI 2019 and the 9th AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Nov. 2019, pp. 6949–6956. [CrossRef]

- R. A. Caruana, “Multitask Learning: A Knowledge-Based Source of Inductive Bias,” in Proceedings of the 10th International Conference on Machine Learning, ICML 1993, 1993, pp. 41–48. [CrossRef]

- A. W. Yu et al., “QANet: Combining local convolution with global self-attention for reading comprehension,” in 6th International Conference on Learning Representations, ICLR 2018 - Conference Track Proceedings, Apr. 2018. Accessed: May 15, 2024. [Online]. Available: https://arxiv.org/abs/1804.09541v1.

- R. Caruana, “Multitask connectionist learning,” in Proc. 1993 Connectionist Models Summer School, Mar. 1993, pp. 372–379.

- A. Wang, A. Singh, J. Michael, F. Hill, O. Levy, and S. R. Bowman, “GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding,” in EMNLP 2018 - 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, Proceedings of the 1st Workshop, Apr. 2018, pp. 353–355. [CrossRef]

- A. Wang et al., “SuperGLUE: A stickier benchmark for general-purpose language understanding systems,” in Advances in Neural Information Processing Systems, 2019, vol. 32.

- V. Kanaparthi, “Evaluating Financial Risk in the Transition from EONIA to ESTER: A TimeGAN Approach with Enhanced VaR Estimations,” Jan. 2024. [CrossRef]

- V. Kanaparthi, “Credit Risk Prediction using Ensemble Machine Learning Algorithms,” in 6th International Conference on Inventive Computation Technologies, ICICT 2023 - Proceedings, 2023, pp. 41–47. [CrossRef]

- V. Kanaparthi, “Examining Natural Language Processing Techniques in the Education and Healthcare Fields,” International Journal of Engineering and Advanced Technology, vol. 12, no. 2, pp. 8–18, Dec. 2022. [CrossRef]

- V. Kanaparthi, “Robustness Evaluation of LSTM-based Deep Learning Models for Bitcoin Price Prediction in the Presence of Random Disturbances,” Jan. 2024. [CrossRef]

- B. McCann, N. S. Keskar, C. Xiong, and R. Socher, “The Natural Language Decathlon: Multitask Learning as Question Answering,” Jun. 2018, Accessed: May 15, 2024. [Online]. Available: https://arxiv.org/abs/1806.08730v1.

- Y. Xu, X. Liu, Y. Shen, J. Liu, and J. Gao, “Multi-task learning with sample re-weighting for machine reading comprehension,” in NAACL HLT 2019 - 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies - Proceedings of the Conference, Sep. 2019, vol. 1, pp. 2644–2655. [CrossRef]

- X. Liu, P. He, W. Chen, and J. Gao, “Multi-task deep neural networks for natural language understanding,” in ACL 2019 - 57th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference, Jan. 2020, pp. 4487–4496. https://doi.org/10.18653/v1/p19-1441. [CrossRef]

- Q. Xie, Z. Dai, E. Hovy, M. T. Luong, and Q. V Le, “Unsupervised data augmentation for consistency training,” in Advances in Neural Information Processing Systems, 2020, vol. 2020-December, pp. 6256–6268. Accessed: May 15, 2024. [Online]. Available: https://github.com/google-research/uda.

- P. Rajpurkar, J. Zhang, K. Lopyrev, and P. Liang, “SQuAD: 100,000+ questions for machine comprehension of text,” in EMNLP 2016 - Conference on Empirical Methods in Natural Language Processing, Proceedings, Jun. 2016, pp. 2383–2392. [CrossRef]

- M. Joshi, E. Choi, D. S. Weld, and L. Zettlemoyer, “TriviaQA: A large scale distantly supervised challenge dataset for reading comprehension,” in ACL 2017 - 55th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference (Long Papers), May 2017, vol. 1, pp. 1601–1611. [CrossRef]

- T. Kwiatkowski et al., “Natural Questions: A Benchmark for Question Answering Research,” Transactions of the Association for Computational Linguistics, vol. 7, pp. 453–466, Aug. 2019. [CrossRef]

- P. Rajpurkar, R. Jia, and P. Liang, “Know what you don’t know: Unanswerable questions for SQuAD,” in ACL 2018 - 56th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference (Long Papers), Jun. 2018, vol. 2, pp. 784–789. [CrossRef]

- H. Habib, G. S. Kashyap, N. Tabassum, and T. Nafis, “Stock Price Prediction Using Artificial Intelligence Based on LSTM–Deep Learning Model,” in Artificial Intelligence & Blockchain in Cyber Physical Systems: Technologies & Applications, CRC Press, 2023, pp. 93–99. [CrossRef]

- G. S. Kashyap, K. Malik, S. Wazir, and R. Khan, “Using Machine Learning to Quantify the Multimedia Risk Due to Fuzzing,” Multimedia Tools and Applications, vol. 81, no. 25, pp. 36685–36698, Oct. 2022. [CrossRef]

- S. Wazir, G. S. Kashyap, K. Malik, and A. E. I. Brownlee, “Predicting the Infection Level of COVID-19 Virus Using Normal Distribution-Based Approximation Model and PSO,” Springer, Cham, 2023, pp. 75–91. [CrossRef]

- G. S. Kashyap, D. Mahajan, O. C. Phukan, A. Kumar, A. E. I. Brownlee, and J. Gao, “From Simulations to Reality: Enhancing Multi-Robot Exploration for Urban Search and Rescue,” Nov. 2023, Accessed: Dec. 03, 2023. [Online]. Available: https://arxiv.org/abs/2311.16958v1.

- M. Kanojia, P. Kamani, G. S. Kashyap, S. Naz, S. Wazir, and A. Chauhan, “Alternative Agriculture Land-Use Transformation Pathways by Partial-Equilibrium Agricultural Sector Model: A Mathematical Approach,” Aug. 2023, Accessed: Sep. 16, 2023. [Online]. Available: https://arxiv.org/abs/2308.11632v1.

- G. S. Kashyap et al., “Detection of a facemask in real-time using deep learning methods: Prevention of Covid 19,” Jan. 2024, Accessed: Feb. 04, 2024. [Online]. Available: https://arxiv.org/abs/2401.15675v1.

- R. Jia and P. Liang, “Adversarial examples for evaluating reading comprehension systems,” in EMNLP 2017 - Conference on Empirical Methods in Natural Language Processing, Proceedings, Jul. 2017, pp. 2021–2031. [CrossRef]

- J. Devlin, M. W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” in NAACL HLT 2019 - 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies - Proceedings of the Conference, Oct. 2019, vol. 1, pp. 4171–4186. Accessed: May 03, 2023. [Online]. Available: https://arxiv.org/abs/1810.04805v2.

| Paraphrasing Method | Valid | Unchanged | Total | Valid/Total | Unchanged/Total |
|---|---|---|---|---|---|
| Back-Translated Set 1 | 33 | 16 | 54 | 61.11% | 29.63% |
| Back-Translated Set 2 | 16 | 15 | 54 | 29.63% | 27.78% |
| Back-Translated Set 3 | 10 | 41 | 54 | 18.52% | 75.93% |
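The Valid/Total and Unchanged/Total columns are proportions of the 54 questions in each set. As an arithmetic check, the short script below recomputes both percentages from the Valid and Unchanged counts; `TOTAL`, `COUNTS`, and `ratios` are illustrative names, not part of the paper.

```python
from typing import Dict, Tuple

TOTAL = 54  # questions per paraphrased set, from the Total column

# (valid, unchanged) counts copied from the table
COUNTS: Dict[str, Tuple[int, int]] = {
    "Back-Translated Set 1": (33, 16),
    "Back-Translated Set 2": (16, 15),
    "Back-Translated Set 3": (10, 41),
}


def ratios(valid: int, unchanged: int, total: int = TOTAL) -> Tuple[float, float]:
    """Return (Valid/Total, Unchanged/Total) as percentages rounded to two decimals."""
    return round(100 * valid / total, 2), round(100 * unchanged / total, 2)


for name, (valid, unchanged) in COUNTS.items():
    v, u = ratios(valid, unchanged)
    print(f"{name}: {v:.2f}% valid, {u:.2f}% unchanged")
```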

| Development Data | EM | F1 | Mean KL | Mean Wasserstein | Confidence |
|---|---|---|---|---|---|
| Original Set | 90.74 | 95.97 | - | - | 81.51% |
| Back-Translated Set 1 | 83.33 | 90.34 | 6.05 | 2.46 × 10⁻⁴ | 99.88% |
| Manually Paraphrased Set | 83.33 | 88.02 | 8.31 | 2.56 × 10⁻⁴ | 82.94% |
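The EM (exact match) and F1 columns are the usual SQuAD answer-span metrics. The definitions are not spelled out in this section, so the sketch below assumes the convention of the official SQuAD v1.1 evaluation script: answers are lowercased and stripped of punctuation, the articles "a", "an", and "the", and extra whitespace before comparison, and each prediction is scored against its best-matching reference answer. The function names are illustrative.

```python
import collections
import re
import string
from typing import Callable, Dict, List

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    return float(normalize_answer(prediction) == normalize_answer(reference))


def token_f1(prediction: str, reference: str) -> float:
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    # Multiset intersection: each shared token counts min(pred_count, ref_count) times.
    common = collections.Counter(pred_tokens) & collections.Counter(ref_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(predictions: Dict[str, str],
             references: Dict[str, List[str]]) -> Dict[str, float]:
    """Average the best score over each question's reference answers, as percentages."""
    def best(metric: Callable[[str, str], float], pred: str, refs: List[str]) -> float:
        return max(metric(pred, ref) for ref in refs)

    em = f1 = 0.0
    for qid, refs in references.items():
        pred = predictions.get(qid, "")  # a missing prediction is scored as an empty string
        em += best(exact_match, pred, refs)
        f1 += best(token_f1, pred, refs)
    n = len(references)
    return {"exact_match": 100.0 * em / n, "f1": 100.0 * f1 / n}
```

For example, predicting "in Paris, France" for the reference "Paris" earns no exact-match credit but a token F1 of 0.5, which is why F1 is consistently higher than EM in the tables.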

| Development Data | EM (Full Training Set) | F1 (Full Training Set) | EM (10% of Training Set) | F1 (10% of Training Set) |
|---|---|---|---|---|
| Original Set | 83.00 | 89.61 | 75.80 | 83.54 |
| Adversarial Set | 51.10 | 56.27 | 39.80 | 45.14 |

| Training Data | Training Steps | Loss | α | EM | F1 | Confidence |
|---|---|---|---|---|---|---|
| 8560 (10%) | 1605 | KL | 0.2 | 70.18 | 80.16 | 26.12% |
| 8560 (10%) | 1605 | KL | 0.5 | 72.21 | 81.53 | 40.59% |
| 8560 (10%) | 1605 | KL | 0.8 | 72.93 | 82.09 | 61.32% |
| 8560 (10%) | 1605 | Symmetric KL | 0.2 | 70.06 | 79.95 | 29.53% |
| 8560 (10%) | 1605 | Symmetric KL | 0.5 | 71.80 | 81.15 | 43.88% |
| 8560 (10%) | 1605 | Symmetric KL | 0.8 | 72.03 | 81.43 | 58.97% |
| 8560 (10%) | 1605 | JS | 0.2 | 71.64 | 81.45 | 37.84% |
| 8560 (10%) | 1605 | JS | 0.5 | 72.53 | 82.08 | 54.51% |
| 8560 (10%) | 1605 | JS | 0.8 | 72.71 | 81.99 | 69.18% |
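The Loss and α columns compare three divergence terms (KL, symmetric KL, and Jensen-Shannon) at several weights. The exact training objective is not given in this section, so the sketch below assumes one common form: cross-entropy on the original question plus α times a divergence between the model's predicted answer distributions for the original and the paraphrased question. The additive use of α, the KL(p_orig ‖ p_para) direction, and all function names are assumptions; in a BERT-style span extractor the same term would typically be computed separately for the start- and end-position distributions.

```python
import math
from typing import Callable, Dict, List, Sequence

EPS = 1e-12  # guards against log(0) when a probability underflows


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q); terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log((pi + EPS) / (qi + EPS)) for pi, qi in zip(p, q) if pi > 0)


def symmetric_kl(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) + KL(q || p); some papers average the two terms instead."""
    return kl(p, q) + kl(q, p)


def js(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence: mean KL to the midpoint distribution (at most ln 2)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


DIVERGENCES: Dict[str, Callable[[Sequence[float], Sequence[float]], float]] = {
    "KL": kl,
    "Symmetric KL": symmetric_kl,
    "JS": js,
}


def consistency_loss(orig_logits: Sequence[float], para_logits: Sequence[float],
                     gold_index: int, divergence: str = "KL", alpha: float = 0.5) -> float:
    """Hypothetical objective: CE on the original question + alpha * D(p_orig, p_para)."""
    p_orig = softmax(orig_logits)
    p_para = softmax(para_logits)
    ce = -math.log(p_orig[gold_index] + EPS)
    return ce + alpha * DIVERGENCES[divergence](p_orig, p_para)
```

Under this form, a larger α penalizes disagreement between the two predictions more heavily, pushing the model toward matching outputs on paraphrased inputs. JS is bounded and symmetric, whereas plain KL is neither, which is one reason the three variants can behave differently at the same α.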

| Model | Training Steps | EM | F1 | Confidence | KL | JS |
|---|---|---|---|---|---|---|
| Original BERT Large | 7299 | 83.86 | 90.50 | 82.13% | - | - |
| Original BERT Large | 10949 | 83.11 | 90.45 | 86.17% | - | - |
| Original BERT Large | 14599 | 82.24 | 89.88 | 90.6% | - | - |
| BERT Large + DA | 7299 | 83.88 | 90.42 | 79.18% | 0.24 | 0.17 |
| BERT Large + DA | 10949 | 83.96 | 90.79 | 84.89% | 0.40 | 0.23 |
| BERT Large + DA | 14599 | 82.91 | 90.28 | 89.04% | 0.55 | 0.29 |

| Model | Training Steps | EM | F1 | Confidence | KL | JS |
|---|---|---|---|---|---|---|
| Original BERT Large | 730 | 72.96 | 82.51 | 79.42% | - | - |
| Original BERT Large | 1095 | 73.86 | 83.26 | 84.95% | - | - |
| Original BERT Large | 1460 | 73.91 | 83.16 | 87.56% | - | - |
| BERT Large + DA | 730 | 72.19 | 81.87 | 75.52% | 0.50 | 0.25 |
| BERT Large + DA | 1095 | 78.81 | 86.83 | 95.53% | 0.91 | 0.33 |
| BERT Large + DA | 1460 | 73.46 | 82.76 | 86.09% | 0.84 | 0.28 |

| Training Data | Training Steps | Loss | α | EM | F1 | Confidence |
|---|---|---|---|---|---|---|
| 8560 (10%) | 1605 | KL | 0.2 | 70.00 | 80.03 | 28.54% |
| 8560 (10%) | 1605 | KL | 0.5 | 71.03 | 80.89 | 44.15% |
| 8560 (10%) | 1605 | KL | 0.8 | 71.81 | 81.12 | 65.50% |
| 8560 (10%) | 1605 | Symmetric KL | 0.2 | 69.83 | 79.88 | 28.05% |
| 8560 (10%) | 1605 | Symmetric KL | 0.5 | 70.63 | 80.42 | 42.56% |
| 8560 (10%) | 1605 | Symmetric KL | 0.8 | 71.84 | 81.27 | 66.68% |
| 8560 (10%) | 1605 | JS | 0.2 | 70.62 | 80.36 | 33.79% |
| 8560 (10%) | 1605 | JS | 0.5 | 71.51 | 81.18 | 55.61% |
| 8560 (10%) | 1605 | JS | 0.8 | 72.69 | 81.81 | 64.45% |

¹ https://rajpurkar.github.io/SQuAD-explorer/

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).