1. Introduction

Medical school begins the challenging journey of equipping students for the demanding profession of being a physician. It is a career where the stakes are high, involving people’s lives and well-being. As such, it requires discipline, perseverance, and motivation. The latter is crucial for medical students because of the rigorous and demanding nature of medical school curricula. Put simply, motivation is the general desire or willingness of someone to do something [1]. It energizes, directs, and sustains behavior. Self-Determination Theory (SDT) posits that motivation is the fundamental reason underlying individuals’ thoughts, actions, and behaviors [2,3,4]. Motivation is of particular concern in education and deserves attention from a learning design and curriculum evaluation perspective because when learners are motivated they try harder to understand, they process information at a deeper level, and they are more apt to apply what they have learned to novel situations [5]. In the context of well-being and burnout, students embarking on their medical school journey in the United States initially exhibit lower levels of burnout, diminished rates of depression, and an enhanced quality of life compared to their peers graduating from college into different professions. However, this trend inverts by the second year of medical school. At this point, burnout (characterized by emotional exhaustion, heightened stress, a reduced sense of personal achievement, and thoughts of suicide) intensifies, reaching its zenith during the residency period [6,7,8]. The expectations placed upon medical students are continually increasing, which amplifies the imperative to support them in developing self-help strategies [9,10,11,12]. Being able to authentically measure and track motivation should assist medical educators and curriculum designers with this.

How can we measure motivation? To answer that question, we have to appreciate that motivation is a construct. Constructs are conceptual ideas or abstractions used to facilitate an understanding of something intangible [13]. All scientific fields are founded upon frameworks consisting of constructs and the interplay between them. The natural sciences employ constructs like gravity, phylogenetic dominance, and tectonic pressure. Similarly, the behavioral sciences utilize constructs including conscientiousness, sportsmanship, and resilience. Motivation, like these, is a real albeit abstract human experience. Researchers often use questionnaires with multiple items to measure behavioral constructs. Item responses are added up to a score, and it is hypothesized that this score represents a person’s position on the construct. Behavioral constructs differ in the ease with which they can be measured. Some are straightforward enough to be evaluated with just a single question or a few inquiries. In contrast, others are multifaceted and complex, comprising various dimensions unified by a central theme that collectively defines the construct. Such constructs may necessitate an extensive series of questions to be fully operationalized [14].

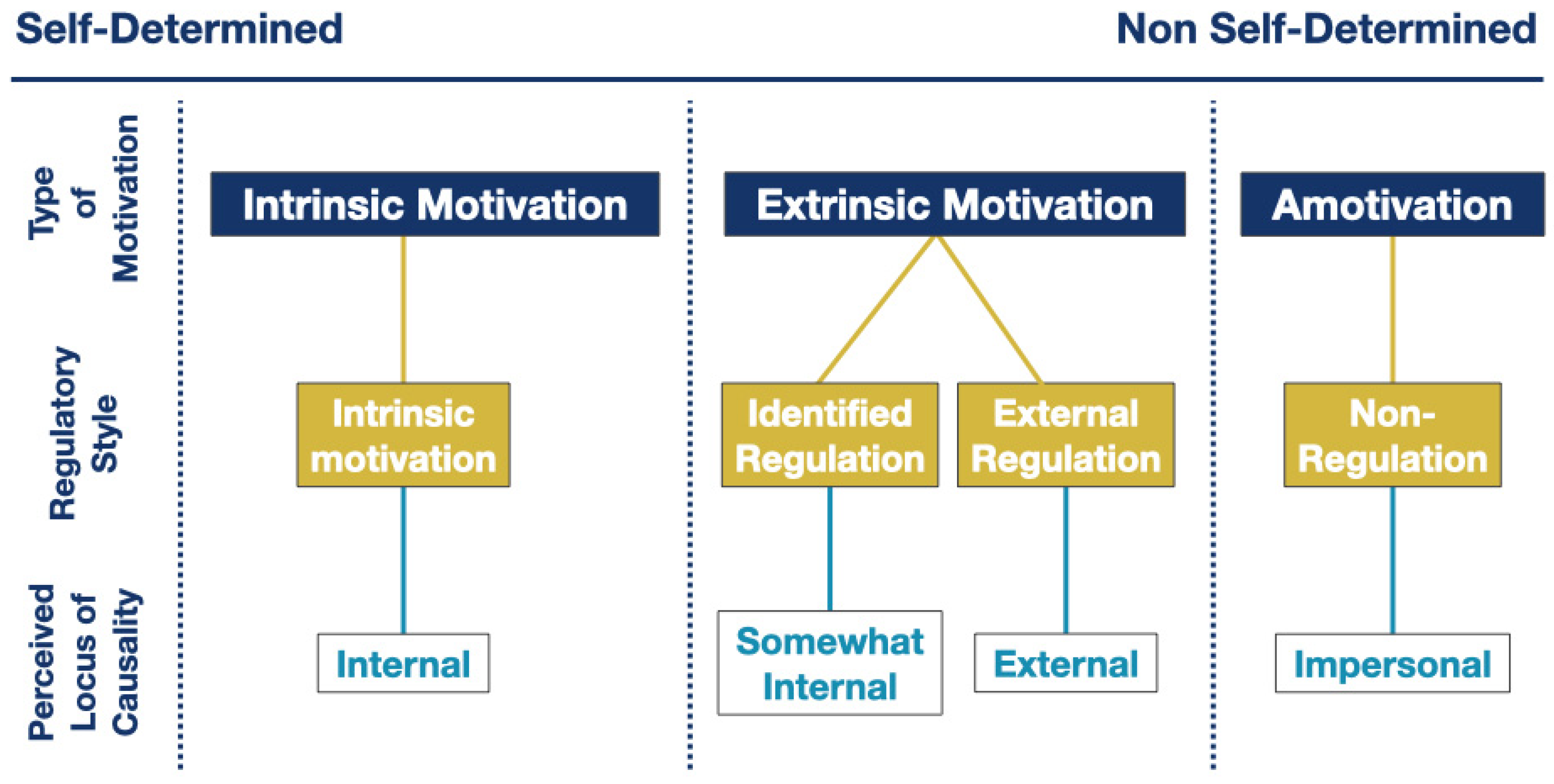

Motivation is a complex construct that contains four dimensions: intrinsic motivation, identified regulation, external regulation, and amotivation [2]. SDT perceives these motivational dimensions as existing on a continuum, ranging from self-directed (autonomous) to those driven by external forces [2,3,4] (Figure 1). In the context of SDT, “regulation” refers to the processes that govern why and how a person initiates and maintains a particular behavior over time, especially in the face of challenges and obstacles.

Intrinsically motivated students learn because they derive pleasure and satisfaction, or get a sense of accomplishment, from learning. They enjoy engaging in the behavior for its own sake (e.g., “I enjoy running, it makes me feel good”).

Identified regulation happens when an individual acknowledges the value and importance of a behavior, even if it does not provide enjoyment or immediate gratification (e.g., “I run because I know running is good for me”).

External regulation arises when behavior is motivated by the pursuit of rewards or the avoidance of adverse outcomes, with the learner feeling compelled to act in a certain manner due to external pressures (e.g., “I run because I will be given a race medal”).

Amotivation represents a total lack of motivation, characterized by a learner’s reluctance to engage or exert effort, often accompanied by an absence of purpose or anticipation of reward. This state is akin to learned helplessness, where individuals are beset by a sense of ineffectuality and perceive no opportunity to alter the trajectory of events (e.g., “I’ve stopped running. I can’t see the point anymore – it just tires me out”). These four dimensions can and do vary from person to person over time. Educators aim for medical students to be guided by intrinsic motivation and identified regulation instead of relying on external rewards and punishments or succumbing to a lack of motivation.

The Situational Motivation Scale (SIMS) is an instrument informed by self-determination theory (SDT) to measure motivation [15]. It was developed by Frederic Guay and colleagues in 2000, who published a series of studies that support its use within a French-Canadian college context (in Quebec, students may enroll in a two-year college program between high school and university) [15], and it has since been used by many researchers to study academic motivation, including within the biomedical sciences [16]. It consists of 16 questions (Appendix A), four for each of the four dimensions that make up the construct of motivation. It was designed to be used situationally, that is, to capture a measure of student motivation in situ at one point in time. In the United States and Canada, medical school operates as a graduate-level program. Pre-clerkship curricula often blend classroom instruction in integrated basic sciences with early practical exposure to real patients and the practice of medicine. Therefore, pre-clerkship medical students have to sustain a desire or willingness (i.e., be motivated) to participate authentically during learning events as well as to study further and pursue mastery after learning events conclude [17]. These two sets of circumstances represent two different but related contexts: the former ‘situational’ and the latter ‘short-term’. However, interrupting educational events to have learners complete the 16 items that make up SIMS in situ would be disruptive and impractical for educators or curriculum designers to sustain on a routine basis; Guay et al. interrupted the French-Canadian students while they were studying alone in a college library. A ‘post-situational’ assessment (i.e., proximal to the conclusion of a learning event) is more realistic for medical students. We define the post-situational context to mean within an hour of a learning event ending and the short-term context to mean later that day or within a week of a learning event ending. Since measuring motivation in these contexts represents a novel use of the SIMS instrument, fresh validity evidence to support it must be obtained. Messick (1989) defined validity as “an integrated evaluative judgment of the degree to which empirical evidence and theoretical rationales support the adequacy and appropriateness of inferences and actions based on test scores and other modes of assessment” [18]. In other words, validity refers to data presented to support or refute the interpretation assigned to SIMS scores [19]. Without evidence of validity, the SIMS scores obtained from medical student learners have little or no intrinsic meaning.

Thus, the objective of this study was to answer the question of whether SIMS is supported by validity evidence to measure the true state of affairs with regard to learner motivation in medical student pre-clerkship post-situational and short-term contexts.

2. Materials and Methods

2.1. Conceptual Approach to Construct Validity Evidence

Current perspectives advocate that all forms of validity be unified within a singular concept of ‘construct validity’, which refers to the extent to which a score accurately reflects the intended underlying construct [20,21]. Messick recognized five pillars of evidence that underpin construct validity: content, response processes, internal structure, relationships to other variables, and the implications of assessment outcomes (i.e., consequences) [18] (Table 1). It is important to note that “these are not different types of validity but rather they are categories of evidence that can be collected to support the construct validity of inferences made from instrument scores” [20]. We followed this approach as a scheme to collect and evaluate psychometric data pertaining to SIMS in post-situational and short-term medical student contexts.

Content validity focuses on the construct’s dimensions and how well they are represented [19].

Response process validity is the extent to which the actions and thought processes of respondents demonstrate that they understand the instrument questions, and how to go about answering them, the same way as the researchers do [19]. It seeks to identify and eliminate sources of error related to the instrument and how it is administered.

Evidence of internal structure seeks to determine whether a group of questions designed to assess the same dimension exhibit higher correlations with one another compared to groups of questions aimed at measuring different dimensions [19]. In other words, do the 4 questions that are trying to measure intrinsic motivation ‘hang together’ better with each other than they do with the other 3 question sets that aren’t trying to measure intrinsic motivation?

Relationship to other variables evidence asks whether external correlations (i.e., correlations to entirely different instruments) are confirmatory or counter-confirmatory [19].

Consequences evidence asks what impact being appraised by the instrument has on respondents [19]. Could someone who agrees to answer the 16 questions be harmed or substantially benefit in any way? It seeks to identify whether the appeal of any benefits or the fear of any harms could introduce sources of bias into the data collected.

2.2. Study Design and Sample

This single institution validation study invited n = 186 first- and second-year medical students from the Frank H. Netter MD School of Medicine at Quinnipiac University to participate. Participation in the study was voluntary and de-identified.

2.3. Procedures

All participants were asked to what extent they agreed on a 7-point scale (1 = not at all; 7 = completely agree) with the items on the following four survey instruments: Situational Motivation Scale (SIMS), Academic Motivation Scale (AMS), Multidimensional Crowdworker Motivation Scale (MCMS), and Maslach Burnout Inventory Student Survey Exhaustion component (MBI-SSEE) [15,22,23,24]. The post-situational and short-term versions of these surveys were administered on two separate occasions to all 156 study participants (Appendix B and Appendix C). The 83 first-year medical students completed the four surveys with regard to a two-hour small group learning event (each small group comprised 8 students + 1 faculty facilitator) on the central nervous system’s sensory systems (i.e., somatosensory, visual, auditory, olfactory, and gustatory) from a post-situational and short-term perspective, whereas the 73 second-year medical students completed them with regard to a two-hour small group learning event (each small group comprised 8 students + 1 faculty facilitator) on pathology of kidney disorders from a post-situational and short-term perspective. All versions of the SIMS, MCMS, AMS, and MBI-SSEE were administered as paper forms. Survey packets were marked with anonymous numerical identification. Participants received verbal instructions before the survey administration commenced. Data were entered independently by authors B.W. and D.M., then compared to ensure data entry was accurate.
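As an illustration of how 16 responses on the 7-point scale could be reduced to four dimension scores, the following is a minimal sketch rather than the authors' scoring procedure. The item key (dimensions interleaved so that item 1 is IM, item 2 is IR, item 3 is ER, item 4 is AM, and so on) is an assumption that should be checked against Appendix A, and the use of a mean rather than a sum is likewise an assumption; the function name `score_sims` is hypothetical.

```python
from statistics import mean

# ASSUMPTION: interleaved item key (1 = IM, 2 = IR, 3 = ER, 4 = AM, repeating).
# Verify against the instrument in Appendix A before use.
ITEM_KEY = {
    "IM": (1, 5, 9, 13),
    "IR": (2, 6, 10, 14),
    "ER": (3, 7, 11, 15),
    "AM": (4, 8, 12, 16),
}


def score_sims(responses):
    """Return the mean rating per dimension for one participant.

    `responses` maps item number (1-16) to a rating from 1 to 7.
    """
    if set(responses) != set(range(1, 17)):
        raise ValueError("expected responses to items 1-16")
    if any(not 1 <= rating <= 7 for rating in responses.values()):
        raise ValueError("ratings must lie between 1 and 7")
    return {
        dim: mean(responses[i] for i in items)
        for dim, items in ITEM_KEY.items()
    }


# Illustrative ratings only, not study data.
ratings = [7, 6, 2, 1, 6, 6, 3, 1, 7, 5, 2, 2, 6, 7, 1, 1]
example = dict(enumerate(ratings, start=1))
scores = score_sims(example)
print(scores)
```

Because every dimension has four items, a sum score would simply be four times the mean, so the choice does not affect correlations between dimensions.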

2.4. Scale Adaptation

To adapt SIMS to both post-situational and short-term contexts, minor grammatical changes were made to both the initial prompt and to the individual items (Table 2). This was done to convert the language from present to past tense English. For example, the prompt, “Why are you engaged in this activity?” became “Why were you engaged in this activity?”; and item 1 “Because I think that the activity is interesting” became “Because I thought that the activity was interesting.”

2.5. Construct Validity – Content Analysis

Four subject matter experts experienced with psychometric data analysis and familiar with self-determination theory (1 PhD statistician and professor of medical sciences, 1 PhD educator and professor of learning sciences, 1 PhD educator and associate dean of medical education, and 1 EdD educator and assistant professor of education) reviewed the original content validity evidence of Guay et al. (2000) [15] and our grammatical edits to determine if the edits represented substantial content changes.

2.6. Construct Validity – Response Process Analysis

Six focus groups were held, with n = 7 medical students per session, to review what they understood the 16 SIMS questions to mean and to evaluate the extent to which our proposed survey administration process could be confusing to respondents. This was done in order to maximize response integrity and avoid data collected being skewed or in error because questions were misunderstood or instructions on how to fill out the SIMS survey were unclear or misleading. Standardized survey administration and data collection procedures were finalized at the conclusion of this aspect of the study.

2.7. Construct Validity – Internal Structure Analysis

Internal structure of the SIMS instrument for both post-situational and short-term contexts was assessed via four statistical analyses. The data were analyzed using IBM SPSS Statistics, Version 27 and Mplus v8.2 [25].

Initially, to estimate reliability, Cronbach’s alpha was computed for each of the four dimensions of the motivation construct. Cronbach’s alpha assesses internal consistency by indicating the extent to which a set of items is interrelated. A ‘good’ Cronbach’s alpha is generally a high one, implying that the items consistently measure the same underlying concept or construct. Methodologists frequently suggest a threshold of 0.7 or above as an acceptable value [26,27,28,29].
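For readers who want to see the calculation, Cronbach’s alpha for a set of k items is α = k/(k − 1) × (1 − Σ item variances / variance of the summed score). The sketch below implements that standard formula with the Python standard library; it is not the SPSS routine used in the study, and the ratings are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one subscale.

    `items` is a list of k lists, one per questionnaire item, each holding
    that item's ratings for the same n respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Summed score for each respondent across the k items.
    totals = [sum(ratings) for ratings in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_variance = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical ratings: 4 items answered by 5 respondents (not study data).
subscale = [
    [7, 6, 5, 3, 2],
    [6, 6, 4, 3, 1],
    [7, 5, 5, 2, 2],
    [6, 7, 4, 3, 1],
]
alpha = cronbach_alpha(subscale)
print(round(alpha, 3))
```

With these invented ratings the four items rise and fall together across respondents, so alpha comes out well above the 0.7 threshold.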

Secondly, we assessed internal consistency through the calculation of Pearson’s r, analyzing the patterns of correlations. Given that the four dimensions of motivation are situated sequentially along a continuum, the interrelationships among the four item sets of the SIMS should display a simplex pattern. This pattern dictates that items closer on the motivational continuum will exhibit stronger positive correlations than those further apart.
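One way to make the simplex expectation concrete is to check, row by row, that correlations never increase as the distance along the continuum grows. The sketch below is an assumed operationalization, not the procedure used in the study; the function name `is_simplex` and both matrices are hypothetical.

```python
def is_simplex(corr):
    """True if, in every row, correlations do not increase with distance.

    `corr` is a symmetric correlation matrix whose rows and columns are
    ordered along the continuum (here IM, IR, ER, AM).
    """
    n = len(corr)
    for i in range(n):
        for j in range(n):
            for k in range(n):
                if i == j or i == k:
                    continue
                # Dimension j is closer to i than k is, so it should not
                # correlate less strongly with i than k does.
                if abs(i - j) < abs(i - k) and corr[i][j] < corr[i][k]:
                    return False
    return True


# Illustrative matrices only (order: IM, IR, ER, AM), not study data.
ordered = [
    [1.00, 0.60, -0.10, -0.40],
    [0.60, 1.00, 0.20, -0.30],
    [-0.10, 0.20, 1.00, 0.30],
    [-0.40, -0.30, 0.30, 1.00],
]
violated = [
    [1.00, 0.60, -0.10, 0.50],
    [0.60, 1.00, 0.20, -0.30],
    [-0.10, 0.20, 1.00, 0.30],
    [0.50, -0.30, 0.30, 1.00],
]
print(is_simplex(ordered), is_simplex(violated))
```

In the second matrix IM correlates more strongly with AM (three steps away) than with ER (two steps away), which breaks the ordering.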

Thirdly, to affirm the configurational structure of the 16-item SIMS scale, confirmatory factor analysis (CFA) was conducted utilizing Mplus software. Factor is an alternative term for dimension. Following the statistical analyses reported by Guay et al. (2000) [15], each subset of four questions was loaded onto each of the four factors and then Geomin rotation allowed the factors to correlate [30]. The adequacy of the model’s fit was evaluated using several indices: the Bayesian Information Criterion (BIC), the ratio of the chi-square to degrees of freedom (χ²/df), the Comparative Fit Index (CFI), the Root Mean Square Error of Approximation (RMSEA), and the Standardized Root Mean Square Residual (SRMR) [31]. A χ²/df value of < 2.0 [32], a CFI value of > 0.95 [33], an RMSEA value of < 0.06 [33], and an SRMR value of ≤ 0.08 [33] were deemed to be indicative of an excellent model fit. The BIC is a criterion for model selection among a set of models [32].

Fourthly, the Wald test assessed the significance of parameter estimates, and modification index (MI) values assessed how a model could be improved by allowing additional paths to be estimated. Modification indices help us answer ‘what if?’ questions about how to improve construct models. Values bigger than 3.84 (p < 0.05) or 10.83 (p < 0.001) indicate how the model could be improved [34].
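A modification index is conventionally read as a chi-square statistic with one degree of freedom, so the cutoffs above can be related to tail probabilities directly. The sketch below uses the identity P(χ²₁ > x) = erfc(√(x/2)); the function name `chi2_sf_1df` is an illustrative choice and the code is not part of the Mplus output.

```python
import math


def chi2_sf_1df(x):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    if x < 0:
        raise ValueError("chi-square statistics are non-negative")
    return math.erfc(math.sqrt(x / 2.0))


for cutoff in (3.84, 6.63, 10.83):
    print(cutoff, round(chi2_sf_1df(cutoff), 4))
```

The three values printed correspond to tail probabilities of roughly 0.05, 0.01, and 0.001 respectively.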

2.8. Construct Validity – Relationship to Other Variables Analysis

Relationship to other variables was assessed by calculating the Pearson’s r for each combination of factors within the SIMS and the other three instruments (AMS, MCMS, MBI-SSEE). The patterns of correlational relationships between the theoretical constructs measured in each instrument were then examined for predicted simplex-like patterns. Data analysis was performed using IBM SPSS Statistics, Version 27.

2.9. Construct Validity – Consequences Analysis

The subject matter experts and focus group participants were asked if they considered a respondent completing or declining to complete the SIMS instrument to have any negative repercussions or to possibly harm learners.

2.10. Ethical Approval

The study received approval from the Quinnipiac University Institutional Review Board (protocol # 06617). Informed consent was acquired from all participants in the study.

3. Results

3.1. Participants

Of the 186 medical students invited, 156 agreed (84% response rate): 83 first-year students (57% female, 43% male, mean age = 25) and 73 second-year students (49% female, 51% male, mean age = 26). The data from five participants were excluded from the analysis because their surveys were either incomplete, improperly completed (e.g., circling multiple response numbers for a single item), or displayed straightlining (e.g., consistently circling the same response number for all items across all instruments).
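The three exclusion criteria can be expressed as a simple screening rule. The sketch below is a hypothetical illustration rather than the authors' procedure (the surveys were paper forms entered by hand); the data encoding and the function name `screen_packet` are assumptions.

```python
def screen_packet(packet, n_items):
    """Return an exclusion reason for one survey packet, or None to keep it.

    `packet` maps item number to the list of response numbers circled for
    that item (assumed encoding: [] = left blank, [3, 4] = two circled).
    Items are numbered 1..n_items across all instruments in the packet.
    """
    marks = [packet.get(i, []) for i in range(1, n_items + 1)]
    if any(len(m) == 0 for m in marks):
        return "incomplete"
    if any(len(m) > 1 for m in marks):
        return "multiple responses"
    # Same response number circled for every item in the packet.
    if len({m[0] for m in marks}) == 1:
        return "straightlining"
    return None


# Toy packets with four items each (illustrative only).
kept = {1: [7], 2: [6], 3: [2], 4: [1]}
blank = {1: [7], 2: [], 3: [2], 4: [1]}
double = {1: [7], 2: [5, 6], 3: [2], 4: [1]}
flat = {i: [4] for i in range(1, 5)}
for packet in (kept, blank, double, flat):
    print(screen_packet(packet, 4))
```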

3.2. Construct Validity – Content Evidence

The four subject matter experts concurred that the subtle linguistic modifications from present to past tense did not negatively impact the substance of the SIMS instrument. They affirmed that the items remained appropriately aligned with the conceptual definitions of intrinsic motivation, identified regulation, external regulation, and amotivation.

3.3. Construct Validity – Response Process Evidence

During the focus groups, survey wording and instructions were examined for potential confusion or misunderstandings. Focus group participants were unanimously comfortable with the format of the proposed procedures for survey administration and data collection. They agreed that survey volume was not onerous and data collection was not prone to systematic errors. It was confirmed they understood the 16 SIMS questions to mean the same thing as the study authors B.W. and D.M.

3.4. Construct Validity – Internal Structure Evidence

The internal structure of the SIMS was assessed by examining simplex patterns of internal Pearson’s r correlations and reliability as reflected by Cronbach’s alpha. SIMS Cronbach’s alpha values were 0.98 (IM), 0.98 (IR), 0.95 (ER), and 0.93 (AM) for the post-situational context; and 0.98 (IM), 0.82 (IR), 0.99 (ER), and 0.91 (AM) for the short-term context (Table 3). The internal consistency of SIMS was acceptable given that the lowest Cronbach’s alpha value was 0.82, which exceeds the ≥ 0.7 minimum criterion [26,27,28,29]. These measures of reliability indicated that questions purporting to measure the same dimension of the motivation construct scored similarly to one another.

The internal Pearson’s r correlations of SIMS followed an ordered simplex pattern in both short-term and post-situational contexts. Theoretically proximal dimensions (e.g., IM to IR) showed positive correlations, while distal dimensions (e.g., IM to AM) showed negative correlations (Table 4 and Table 5).

Concerning the confirmatory factor analysis (CFA) models for the post-situational and short-term context data, all items demonstrated significant loadings on their intended factors in both models (all p-values < 0.01) (Appendix D). Additionally, the strength of these loadings was consistent across the post-situational and short-term models (Table 6 and Table 7).

Correlations between factors were mostly in the low to moderate range, supporting the discriminant validity of the factors (Table 6 and Table 7). However, the magnitude of the correlations between factors appears to differ between the post-situational and short-term contexts. The post-situational context model showed a strong correlation between IM and IR (r = 0.69, p < 0.01), while the correlation was smaller for the short-term context model (r = 0.25, p < 0.01). This is not surprising. A medical student engaging in self-directed study in the short-term (e.g., one week after a learning event) might reasonably be expected to exemplify the overlap of intrinsic motivation, driven by genuine interest and satisfaction in learning medicine, and identified regulation, as they recognize and value the importance of this knowledge for their future role as a competent and effective physician. The AM factor significantly correlated with IM (r = -0.30, p < 0.01) and ER (r = 0.28, p < 0.01) in the post-situational context model, but not in the short-term context model (IM: r = -0.12, p = 0.16; ER: r = -0.12, p = 0.21). Further, there was a moderately strong positive correlation between ER and IR in the short-term context model (r = 0.44, p < 0.01), but a small negative correlation in the post-situational context model (r = -0.07, p = 0.47).

The model goodness-of-fit indices (Table 8 and Table 9) indicated the short-term model achieved good fit (BIC = 8025.6, χ²/df = 1.49, CFI = 0.954, RMSEA = 0.056, SRMR = 0.082), thus supporting the configurational structure of the SIMS scale for that context. In contrast, the goodness-of-fit indices for the post-situational model suggested room for enhancement in its configurational structure (BIC = 8235.9, χ²/df = 2.5, CFI = 0.894, RMSEA = 0.099, SRMR = 0.079). In keeping with this, there were two MI suggestions to improve model fit for the post-situational context. The first MI (24.7) indicated that having SIMS Q1 (an IM question) reassigned to the IR factor would improve fit. The second MI (10.5) indicated that Q2 (an IR question) might cross-load onto the IM factor. This helps explain the large correlation found between IM and IR in the CFA post-situational context model. The lower BIC value for the short-term model relative to the post-situational model also indicates that SIMS has a better fit for short-term contexts (Table 8 and Table 9).
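To make the comparison against the cutoffs in Section 2.7 explicit, the sketch below applies each stated threshold to the indices reported in this section. It is an illustrative check only, not part of the Mplus analysis; the dictionary and function names are hypothetical, and BIC is omitted because it has no absolute cutoff.

```python
# Cutoffs as stated in Section 2.7.
CUTOFFS = {
    "chi2_df": lambda v: v < 2.0,
    "CFI": lambda v: v > 0.95,
    "RMSEA": lambda v: v < 0.06,
    "SRMR": lambda v: v <= 0.08,
}

# Indices as reported in Section 3.4.
REPORTED = {
    "short-term": {"chi2_df": 1.49, "CFI": 0.954, "RMSEA": 0.056, "SRMR": 0.082},
    "post-situational": {"chi2_df": 2.5, "CFI": 0.894, "RMSEA": 0.099, "SRMR": 0.079},
}


def check_fit(indices):
    """Return, for each index, whether it meets its stated cutoff."""
    return {name: CUTOFFS[name](value) for name, value in indices.items()}


results = {context: check_fit(indices) for context, indices in REPORTED.items()}
for context, outcome in results.items():
    print(context, outcome)
```

Read strictly, the short-term model meets three of the four cutoffs, with its SRMR of 0.082 sitting marginally above 0.08, while the post-situational model meets only the SRMR cutoff.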

3.5. Construct Validity – Relationship to Other Variables Evidence

MCMS and AMS are grounded in the same theoretical framework as SIMS (self-determination theory) and also seek to measure IM, IR, ER, and AM, which facilitated direct comparisons between these instruments and SIMS. MBI-SSEE seeks to measure emotional exhaustion, which is similar to, albeit distinct from, amotivation.

The relationship of SIMS variables to those of AMS, MCMS, and MBI-SSEE was assessed by examining simplex patterns of Pearson’s r correlations and reliability as reflected by Cronbach’s alpha.

In the post-situational context, Cronbach’s alpha values were 0.93 (IM), 0.98 (IR), 0.89 (ER), and 0.98 (AM) for MCMS; 0.96 (IM), 0.96 (IR), 0.95 (ER), and 0.31 (AM) for AMS; and 0.98 (AM) for MBI-SSEE (Table 10). In the short-term context, Cronbach’s alpha values were 0.98 (IM), 0.96 (IR), 0.88 (ER), and 0.99 (AM) for MCMS; 0.91 (IM), 0.97 (IR), 0.96 (ER), and 0.84 (AM) for AMS; and 0.93 (AM) for MBI-SSEE (Table 11). The internal consistency of MCMS, AMS, and MBI-SSEE was therefore acceptable, given that all but one of the Cronbach’s alpha values met the ≥ 0.7 minimum criterion [26,27,28,29]. The notable exception was the AM dimension of the AMS scale in the post-situational context (Cronbach’s alpha = 0.31).

The Pearson’s r correlations between SIMS and the MCMS and AMS scales demonstrated simplex trends in both post-situational and short-term contexts. Dimensions of the motivation construct that were theoretically close (e.g., IM and IR) had positive correlations, whereas those that were farther apart (e.g., IM and AM) exhibited negative correlations (Table 10 and Table 11). Comparison of SIMS to the MBI-SSEE likewise revealed a pattern of correlation, with IM and IR showing negative correlation with emotional exhaustion, and ER and AM demonstrating a positive correlation (Table 10 and Table 11).

3.6. Construct Validity – Consequences Evidence

No direct, negative repercussions of the SIMS instrument being used for its intended purpose in a medical school context were identified by the authors (B.W. and D.M.), the focus group participants, or the subject matter experts. Student participants understood through informed consent that their participation was entirely anonymous and optional, and that participation or lack thereof would have no impact on their progression through and completion of medical school.

4. Discussion

Greater emphasis is being placed on the interplay between student motivation and educational methods across a range of health professions, including medicine [35,36,37,38,39,40]. For example, motivational pedagogy refers to teaching methods and educational practices that aim to enhance students’ intrinsic motivation to learn. This method is based on the conviction that motivation is an essential component of the learning process, and educators can profoundly influence student engagement and success by nurturing an educational atmosphere that encourages motivational growth [3,41]. As an educational strategy, it necessitates a reliable means to measure and track student motivation to ensure that teaching methods are effectively tailored to enhance engagement and learning, allowing for iterative refinements that address individual motivational needs, promote optimal educational outcomes, and reduce the risk of medical student burnout. SIMS is an instrument suited to the measurement of motivation within the context for which it was designed [15]. However, when survey instruments such as SIMS are moved to different contexts, the underlying assumptions, population characteristics, validity evidence, and relevance of the construct they were originally designed to measure may no longer hold true. Accordingly, this study sought validity evidence for the use of the SIMS in accurately measuring learner motivation among medical students in pre-clerkship short-term and post-situational contexts.

In this investigation, we evaluated the validity evidence for applying SIMS to gauge medical student motivation across two distinct contexts. The first context, referred to as ‘post-situational’, adapted the survey’s intended real-time assessment to a retrospectively proximal one, enabling the gathering of information on a student’s motivation after the occurrence of a learning event, rather than during. This approach provides a more feasible alternative for collecting motivational data; it minimizes disruption to the learning experience and allows for more reflective and accurate self-assessment by the respondents of their motivational states, free from the immediate pressures or engagements of the learning activity. The second context, designated as ‘short-term’, assessed a student’s motivation to study over a one-week period. Medical students must engage in self-directed studying after learning events in order to consolidate their understanding, challenge their mastery of the material, and bridge the gap between theoretical knowledge and practical application, a crucial step in developing the competence and confidence required for clinical practice [42,43].

Expecting perfect validity evidence for a survey instrument is impractical due to several factors: validity is multi-dimensional, and it is influenced by the variability in respondents’ behaviors and the characteristics of the sample used for validation. Additionally, no survey can entirely escape measurement error, and the statistical methods used to assess validity are themselves imperfect. Thus, while high-quality validity evidence is the goal, perfection in validity is an ideal rather than an attainable standard [18,44,45,46]. With this in mind, we have gathered a considerable body of construct validity evidence supporting the use of SIMS to measure motivation in pre-clerkship medical students in post-situational and short-term contexts. The content, response process, and consequences evidence were acceptable for both use case scenarios. For relationship to other variables evidence, this was also substantially true. The Pearson’s r correlation values and simplex patterns were acceptable for both contexts, but the post-situational Cronbach’s alpha for the measurement of amotivation (AM) with the AMS survey tool was 0.31, indicating low internal reliability of the measurement of this dimension of motivation with this particular instrument. This reflects more on AMS in the two medical student contexts than on SIMS. Lastly, for internal structure evidence, the Cronbach’s alpha and Pearson’s r correlational data and simplex patterns were acceptable for both use case scenarios, indicating good internal consistency and reliability. All CFA hypothesized factor loadings were found to be significant (p < 0.01) for both contexts. For the short-term context, the CFA model fit indices were excellent. However, for the post-situational context, the CFA model fit indices fell short of excellent. The χ²/df ratio (2.52) was higher than the ideal threshold of < 2.0, but was indicative of the model fitting reasonably well; the CFI value (0.894) was below the preferred threshold, suggesting the model may not fit as well as it could; the RMSEA value (0.099) was also somewhat higher than desirable for a good fit; whereas the SRMR (0.079) was just within the cutoff of what is generally considered good, suggesting an adequate model fit. In summary, the post-situational CFA data represented a model that had an acceptable fit in some respects (i.e., factor loadings and SRMR) but indicated it might be worth examining the model to see if it could be improved (i.e., χ²/df, CFI, RMSEA). This was consistent with the MI data for the post-situational context, which proposed that one IM question be reassigned to the IR set of questions and that one IR question might cross-load onto the IM dimension.

Hence, we can deduce that the SIMS instrument is effective in differentiating among the four dimensions of motivation delineated by SDT when administered to pre-clerkship medical students in a short-term context. The instrument’s application in a post-situational context should be approached more cautiously due to its limited capacity to discriminate between identified regulation (IR) and intrinsic motivation (IM). That said, a less than ideal CFA model fit in distinguishing between IR and IM within the framework of SDT is not particularly concerning, given their conceptual adjacency and that both represent self-determined forms of motivation [4].

4.1. Limitations and Threats to Validity

This study was conducted at a single U.S. medical school, which may limit its generalizability to other domestic and international medical institutions. Nonetheless, the underlying concepts are widely relevant, and tools based on self-determination theory, such as SIMS, have been utilized in diverse research contexts worldwide. Potential validity threats like the Hawthorne effect were mitigated by the fact that students participated in a familiar environment and were accustomed to completing surveys as a part of their routine activities. Furthermore, to reduce response bias that could skew the psychometric survey outcomes, participants were not informed of the anticipated results, and the surveys were conducted anonymously.

Author Contributions

Conceptualization, D.M. and B.W.; data curation, D.M. and B.W.; formal analysis, D.M. and B.W.; funding acquisition, D.M. and B.W.; investigation, D.M. and B.W.; methodology, D.M. and B.W.; project administration, D.M.; resources, D.M. and B.W.; software, D.M. and B.W.; supervision, D.M.; visualization, D.M. and B.W.; writing – original draft, D.M. and B.W.; writing – review and editing, D.M. and B.W. All authors have read and agreed to the published version of this manuscript.