Submitted: 21 May 2024

Posted: 28 May 2024

Abstract

Keywords:

1. Introduction

2. Methods

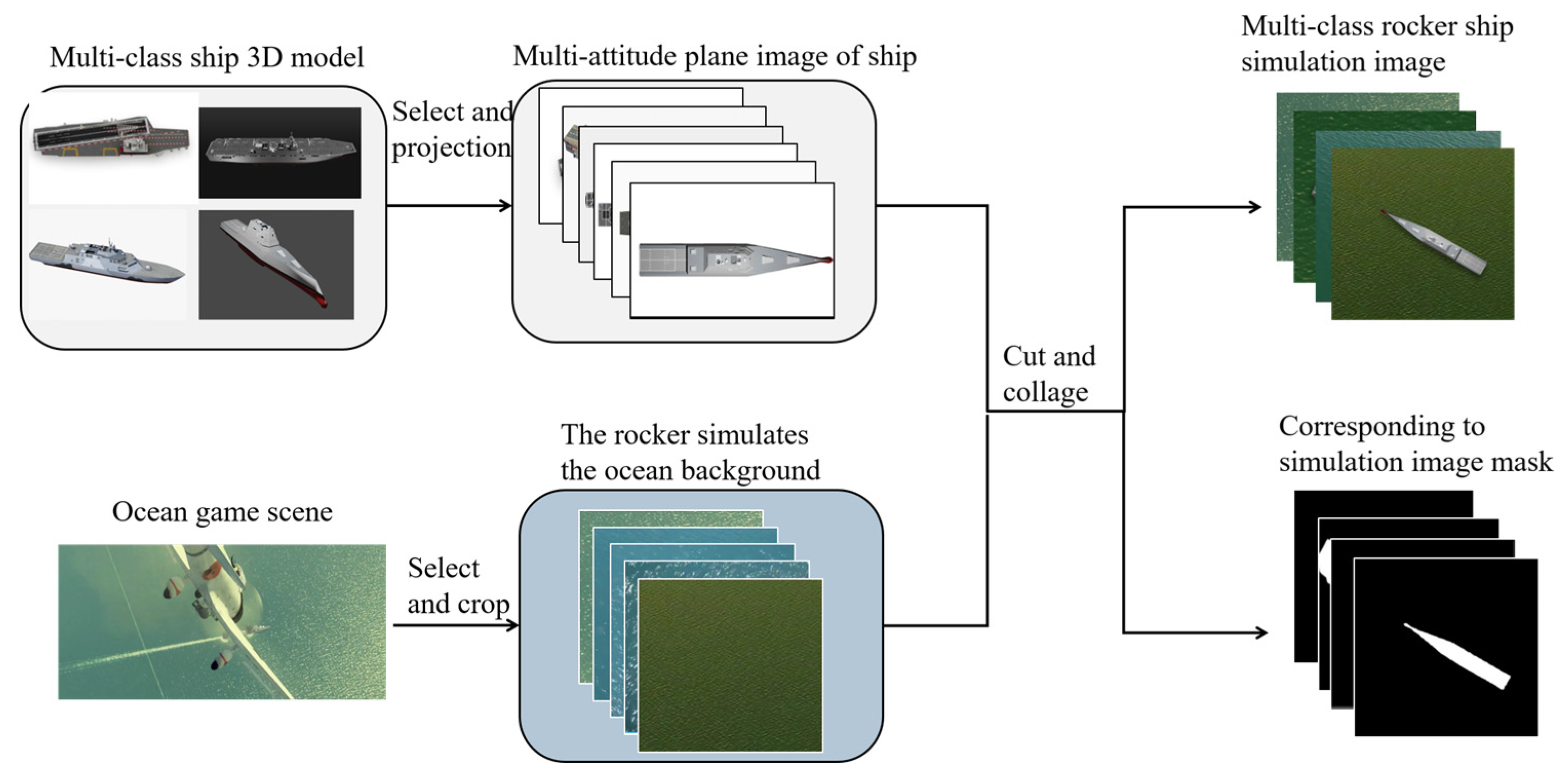

2.1. Simulated Remote Sensing Ship Image Construction

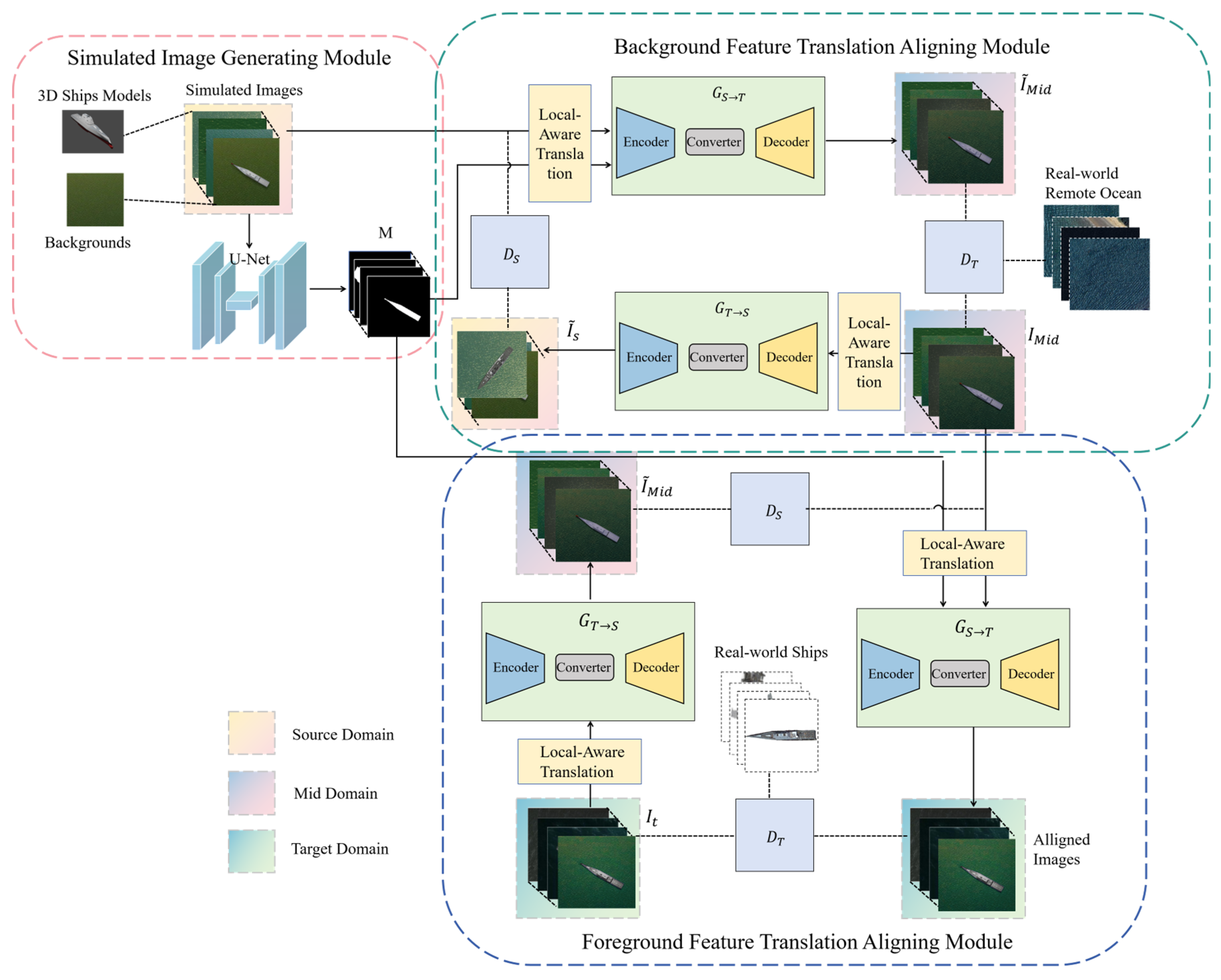

2.2. Data Augmentation Model Based on Transfer Learning

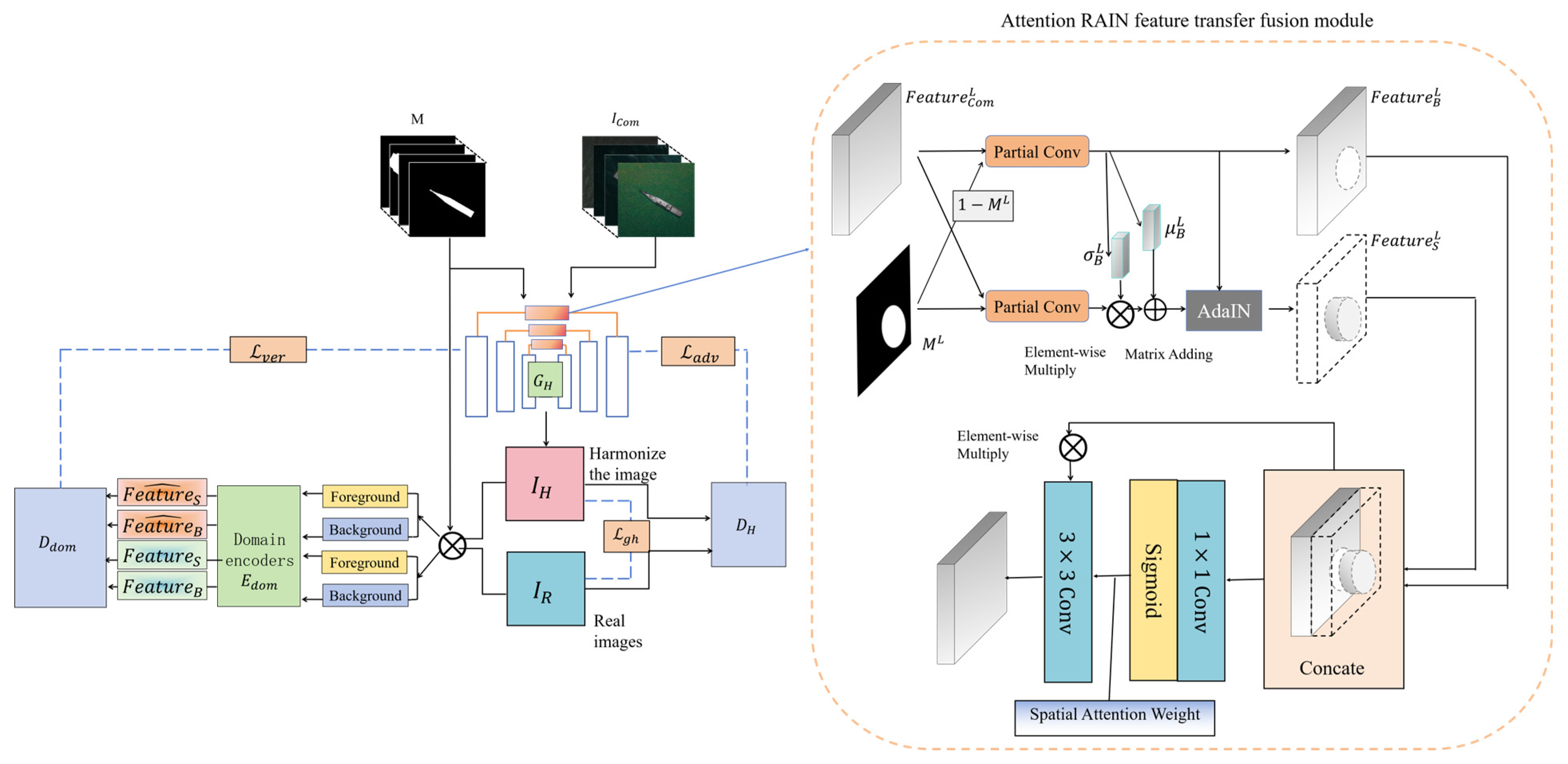

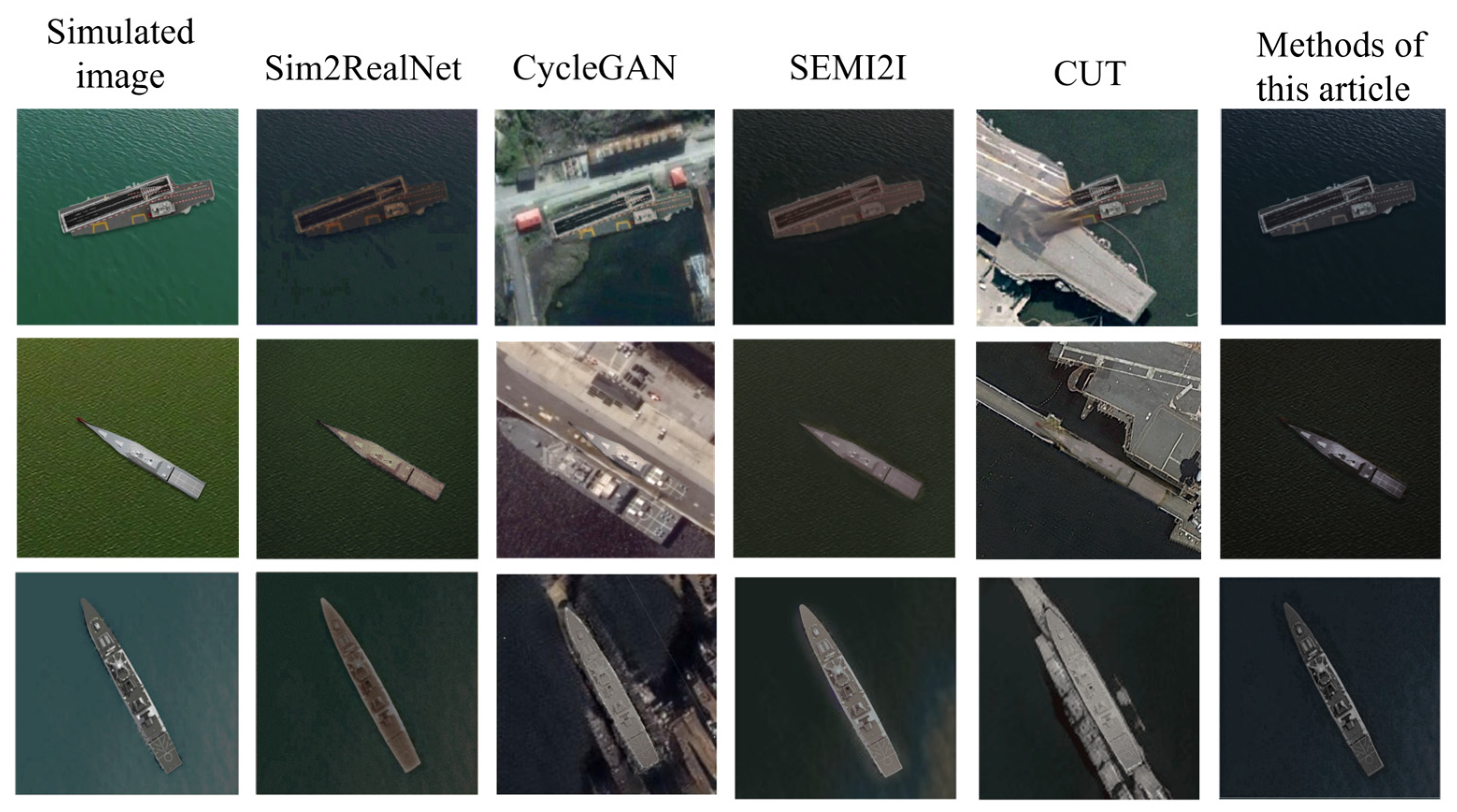

2.3. Remote Sensing Ship Image Harmonization Algorithm

3. Results

3.1. Dataset

3.2. Experimental Environment

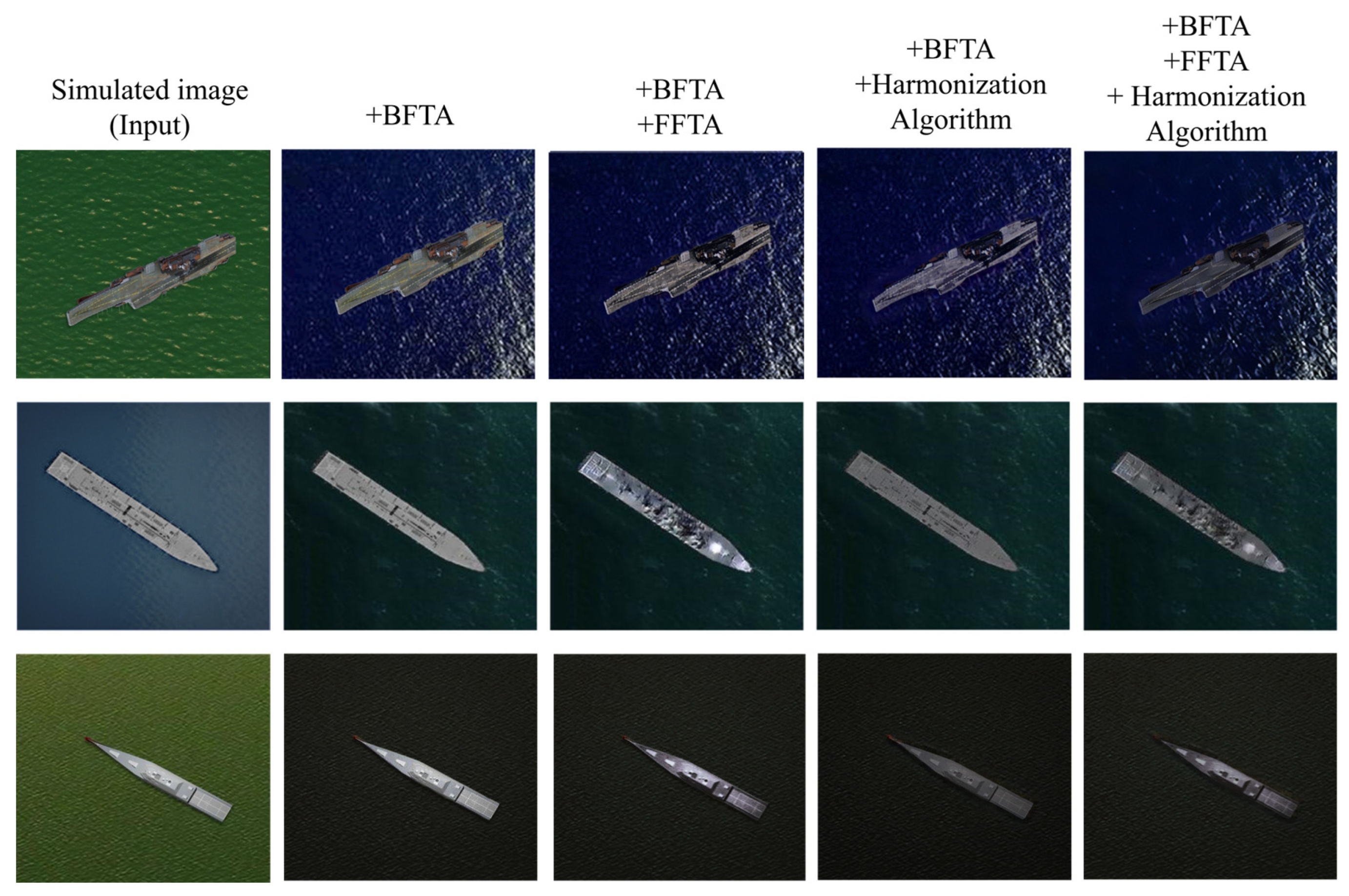

3.3. Ablation Experiment

3.4. Hybrid Dataset Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Ship category | Detailed name | Inclusion of Generated Samples | Training Set Size | Test Set Size |
| --- | --- | --- | --- | --- |
| Aircraft_carrier | Charles_de_Gaulle_aircraft_carrier | Y | 34 | 34 |
| Aircraft_carrier | Kuznetsov-class_aircraft_carrier | Y | 34 | 34 |
| Aircraft_carrier | Nimitz-class_aircraft_carrier | F | 388 | 165 |
| Aircraft_carrier | Midway-class aircraft_carrier | F | 146 | 62 |
| Landing_ship | Whitby_island-class_dock_landing_ship | F | 195 | 83 |
| Destroyer | Arleigh_Burke-class_destroyer | F | 407 | 174 |
| Destroyer | Atago-class_destroyer | Y | 35 | 35 |
| Destroyer | Murasame-class_destroyer | F | 407 | 174 |
| Destroyer | Type_45_destroyer | Y | 112 | 48 |
| Destroyer | Zumwalt-class-destroyer | Y | 25 | 25 |
| Combat_ship | Independence-class_combat_ship | F | 148 | 62 |
| Combat_ship | Freedom-class_combat_ship | Y | 123 | 53 |

| Classification AR (%) | Baseline | SIG | +BFTA | +BFTA +FFTA | +BFTA +FFTA +HA | BFTA Gain | FFTA Gain | HA Gain |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ResNet | 68.60 | 76.98 | 79.03 | 82.81 | 86.00 | +2.05 | +3.78 | +3.13 |
| ResNext | 74.64 | 79.66 | 76.31 | 82.03 | 85.51 | -3.35 | +5.72 | +3.48 |
| Pyramid | 76.57 | 78.47 | 81.63 | 85.01 | 87.45 | +3.16 | +3.38 | +2.44 |
| EffiN-v2 | 83.68 | 86.16 | 87.23 | 88.90 | 91.69 | +1.07 | +1.67 | +2.79 |
| Swin-T | 87.32 | 88.14 | 87.37 | 91.48 | 94.85 | -0.77 | +4.11 | +3.37 |
| ResNet | 79.44 | 84.73 | 87.00 | 89.13 | 92.05 | +2.27 | +2.13 | +2.92 |
| ResNext | 83.95 | 84.91 | 85.55 | 89.02 | 91.26 | +0.64 | +3.47 | +2.24 |
| Pyramid | 84.48 | 85.33 | 87.22 | 89.30 | 92.38 | +1.89 | +2.08 | +3.08 |
| EffiN-v2 | 89.10 | 90.56 | 90.70 | 92.31 | 94.79 | +0.14 | +1.61 | +2.48 |
| Swin-T | 91.53 | 92.99 | 92.24 | 94.48 | 97.12 | -0.75 | +2.24 | +2.64 |
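The gain columns in the table above appear to be differences between consecutive configurations (for example, BFTA Gain as the +BFTA accuracy minus the SIG accuracy). The following is a minimal sketch under that assumption. The helper name `stage_gains` is hypothetical and not taken from the paper, and the input values are copied from the first ResNext row.

```python
def stage_gains(accuracies, ndigits=2):
    """Return the difference between each accuracy and the one before it.

    Assumption: each "Gain" column is the accuracy of one configuration
    minus the accuracy of the configuration immediately to its left.
    """
    return [
        round(curr - prev, ndigits)
        for prev, curr in zip(accuracies, accuracies[1:])
    ]


# First ResNext row: SIG, +BFTA, +BFTA +FFTA, +BFTA +FFTA +HA (AR in %).
resnext = [79.66, 76.31, 82.03, 85.51]

# Differences in the order BFTA Gain, FFTA Gain, HA Gain.
print(stage_gains(resnext))
```

Under this reading, a negative BFTA Gain simply means that the +BFTA configuration scored below SIG for that classifier.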

| AR (%) | RN-110 | ResNext | DenseNet | PyramidNet | WRN | ShuffleNet-v2 | EfficientNet-v2 | Swin-T |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | 86.20 | 86.08 | 83.44 | 87.27 | 92.63 | 90.41 | 93.10 | 95.02 |
| | 92.07 | 92.20 | 87.89 | 93.02 | 95.82 | 84.93 | 95.88 | 97.43 |

| Ship Categories | AR (%) |
| --- | --- |
| Charles_de_Gaulle_aircraft_carrier | 97.14 |
| Kuznetsov-class_aircraft_carrier | 100.00 |
| Atago-class_destroyer | 93.39 |
| Type_45_destroyer | 74.98 |
| Zumwalt-class-destroyer | 96.09 |
| Freedom-class_combat_ship | 97.00 |
| Nimitz-class_aircraft_carrier | 99.86 |
| Midway-class aircraft_carrier | 100.00 |
| Whitby_island-class_dock_landing_ship | 98.77 |
| Arleigh_Burke-class_destroyer | 97.26 |
| Murasame-class_destroyer | 97.78 |
| Independence-class_combat_ship | 98.89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).