1. Introduction

Assortment planning for future products plays a crucial role in the success of an e-commerce platform. It involves strategically selecting and organizing a range of products to meet customer demand and maximize sales. This process requires analyzing market trends, customer preferences, and competitor strategies to identify potential gaps and opportunities. By carefully planning the assortment, retailers can ensure they offer a diverse and relevant range of products that caters to different customer segments. This helps drive customer satisfaction, increase sales, and stay ahead in a competitive market.

Walmart collaborates with a trend forecasting company that provides insights and analytics for the fashion and creative industries. The company does not release public reports, as its insights are provided through a paid subscription service. However, it often shares snippets of its forecasts via blog posts or on social media. For example, it might report on upcoming color trends for a particular season, predict consumer behaviors, or identify emerging fashion trends in different regions. The trend forecasting company also provides reports on retail and marketing strategies, textile and material innovations, product development, and lifestyle and interiors trends. These reports are typically used by retailers and marketers to plan and develop their products and strategies.

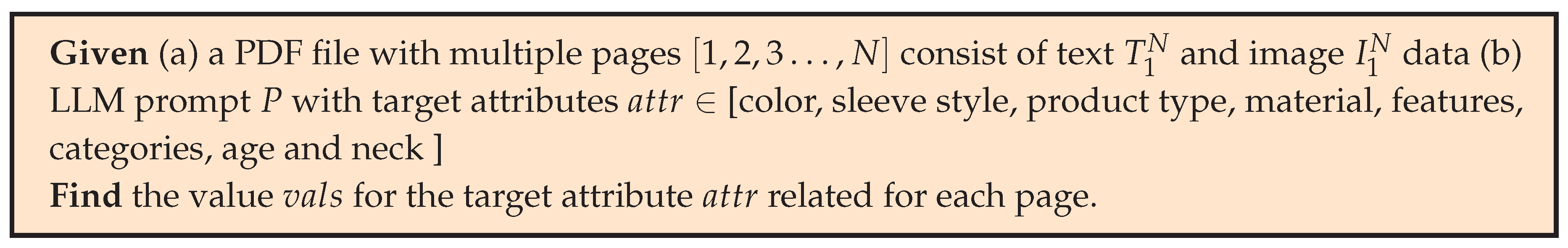

Informal Problem 1. Given a set of target attributes (e.g., color, age group, material), and unstructured information in the form of text and images: how can we extract values for the attributes? What if some of these attributes have multiple values, like colors or age group?

Correctly predicted attributes improve catalog mapping, which in turn helps generate search tags and raises the content quality of products. Customers can filter for products based on their exact needs and compare product variants promptly, resulting in a seamless shopping experience while searching or browsing for a product on an e-commerce platform. The Product Attribute Extraction (PAE) engine can help the retail industry onboard new items or extract attributes from an existing catalog.

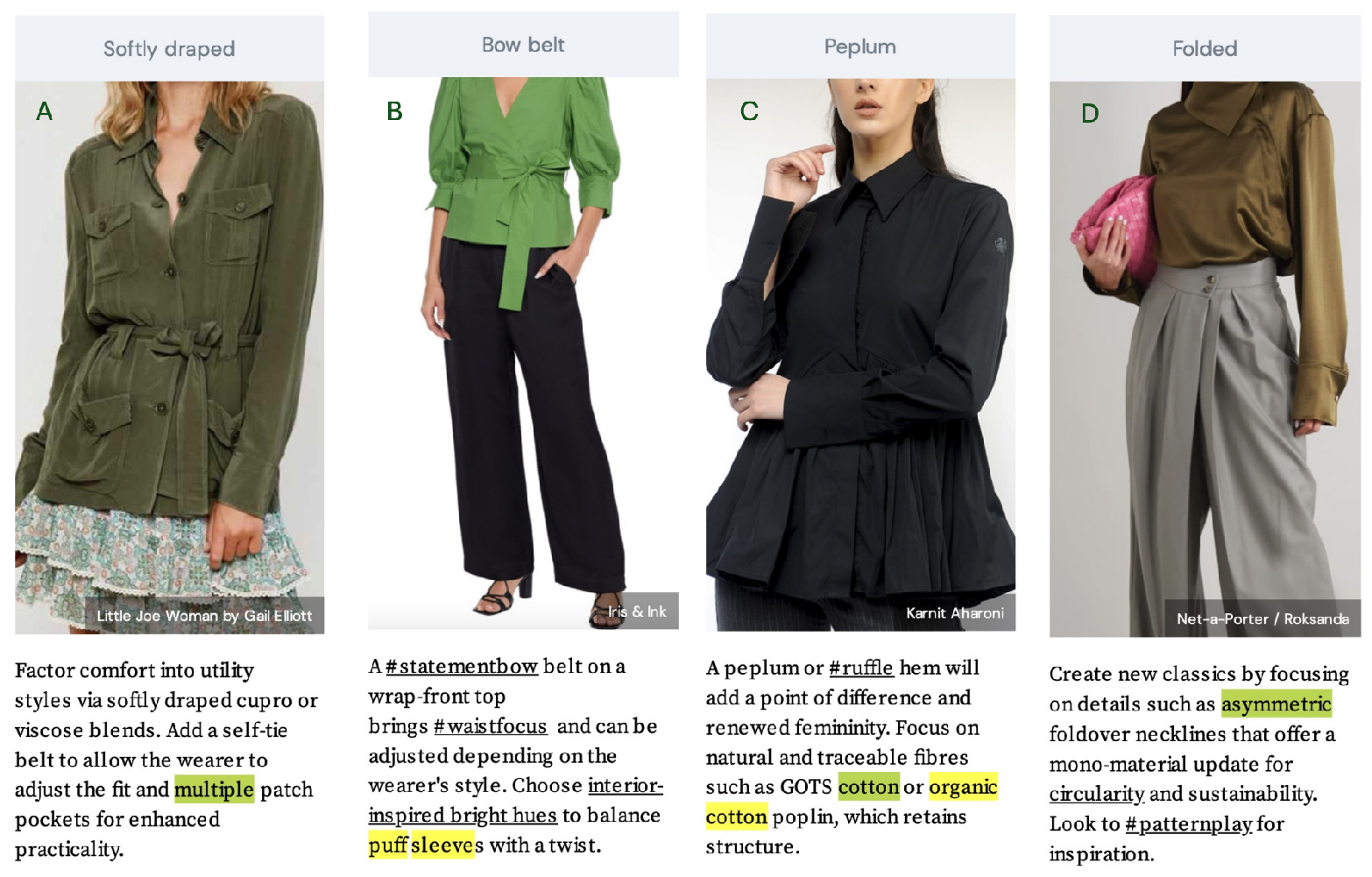

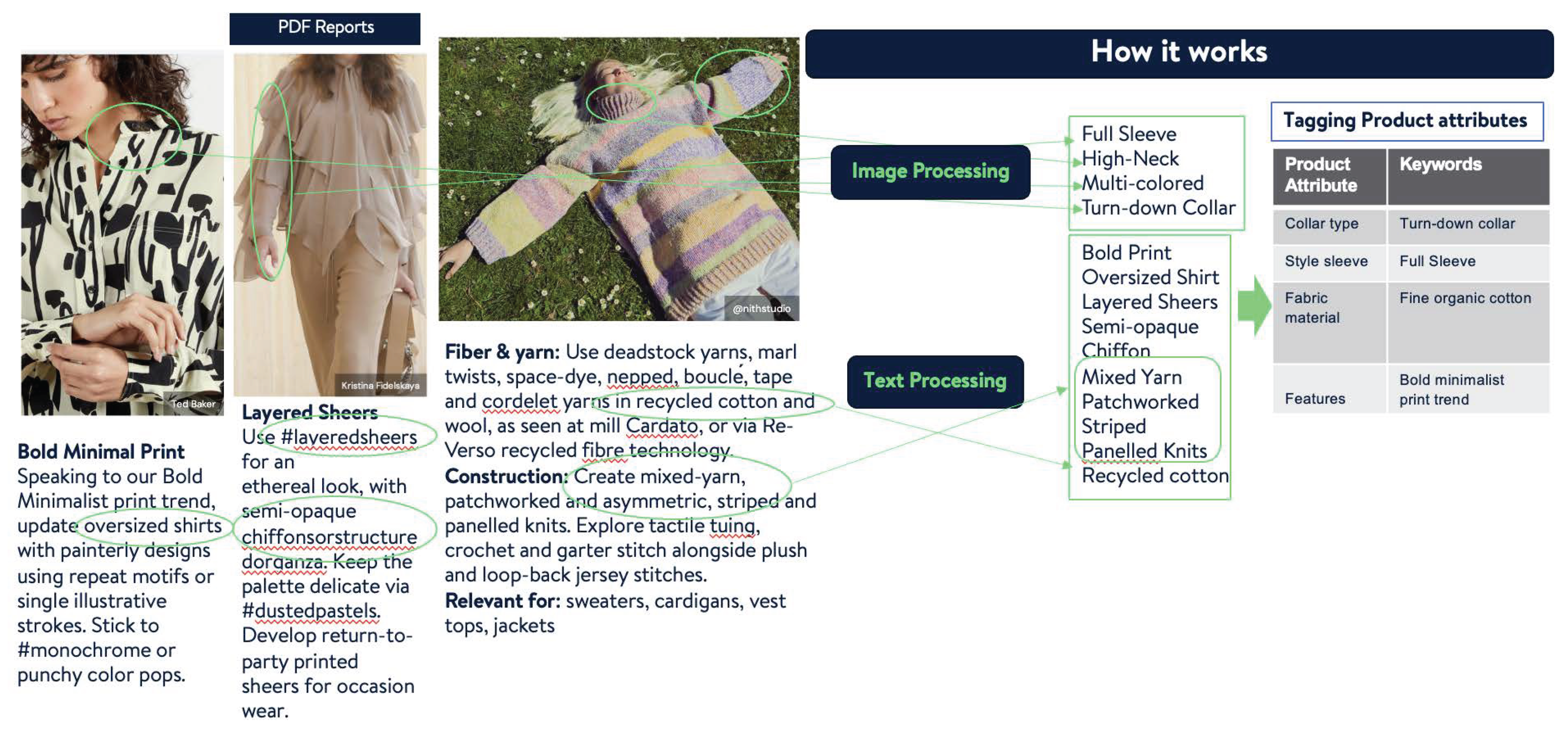

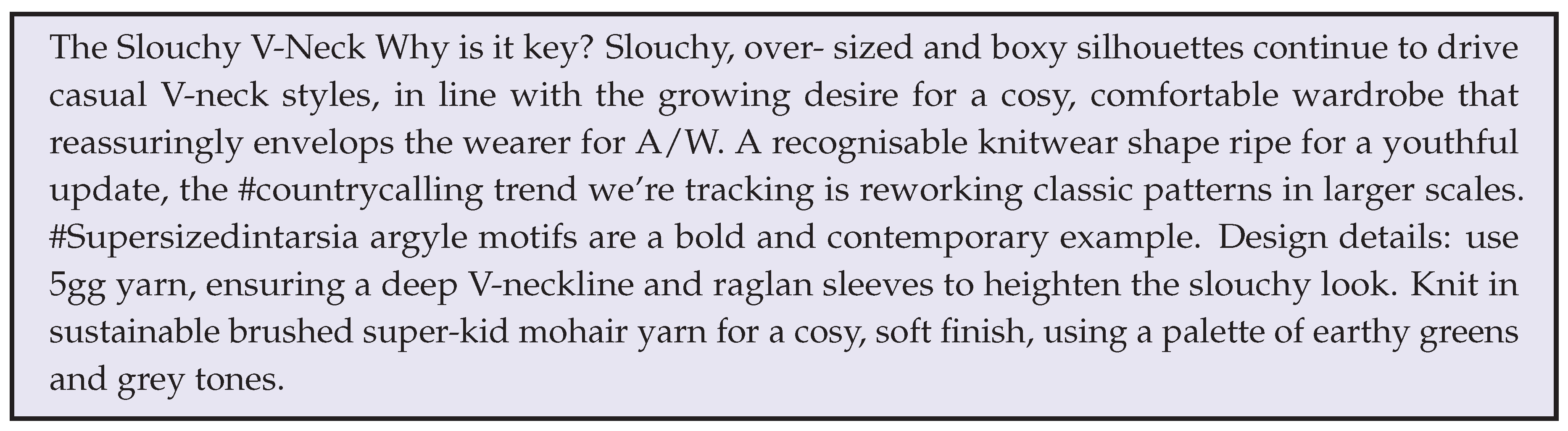

Motivating Example: Retailers can use upcoming market trends to plan their product catalog assortment for upcoming seasons such as spring, summer, and fall. For a concrete example, refer to Figure 1, which shows a classic shirt (with unstructured text and image data). The report mentions a peplum or ruffle hem used on Global Organic Textile Standard (GOTS) cotton or organic cotton poplin. This translates to a catalog of classic shirts made of organic/GOTS cotton, with a peplum/ruffle hem as a feature. Based on these attribute insights, assortment planners would work closely with suppliers and designers to curate a collection of clothing items that complete the look, including leggings, sports bras, sweatshirts, and sneakers. They would consider factors such as quality, affordability, and inclusivity to ensure that the assortment caters to a wide range of customers. The images show popular prints, innovative fabrics, and style variations within the classic shirt category. This would enable retailers to offer a diverse selection of classic shirt options that align with the latest trends. Additionally, once retailers have an assortment ready for selling, they could collaborate with fashion influencers who align with the upcoming trend to create exclusive collections or promote the existing assortment. This would help generate excitement among customers and drive high engagement. By incorporating the recommended color palettes, visual elements, and messaging, the retailer could create an immersive shopping experience.

Previous Works: In this section, we provide a brief overview of existing Multi-Modal Attribute Extraction (MMAE) techniques used to extract product attributes from images and text. The MMAE approach in [1] returns values for attributes that occur in images as well as text and does not treat the problem as a labeling problem. The authors define the problem as follows: given a product $i$ and a query attribute $a$, extract a corresponding value $v$ from the evidence provided in terms of its textual description ($D_i$) and a collection of images ($I_i$). For training, we are given a set of product items $I$ and, for each item $i \in I$, its textual description $D_i$, its images $I_i$, and a set $A_i$ comprised of attribute-value pairs $\langle a, v \rangle$. The model is composed of three separate modules: (1) an encoding module that uses modern neural architectures to jointly embed the query, text, and images into a common latent space, (2) a fusion module that combines these embedded vectors into a single dense vector using an attribute-specific attention mechanism, and (3) a similarity-based value decoder that produces the final value prediction.

Another approach for MMAE, described in [2], considers cross-modality comparisons. The authors apply several refinements to leverage pre-trained deep architectures and build single-modality models (a text-only model and an image-only model) for product attribute prediction. They also propose a new modality merging method that, for every product, lets the model assign different weights to each modality and introduces a principled regularization scheme to mitigate modality collapse.

The work in [3] addresses multimodal joint attribute prediction and value extraction for e-commerce products. The authors enhance the semantic representation of the textual product descriptions with a global gated cross-modality attention module that is expected to benefit attribute prediction tasks with visually grounded semantics. Moreover, for different values, the model selectively utilizes visual information with a regional-gated cross-modality attention module to improve the accuracy of value extraction. Note: as these methods come from industry, their source code is not publicly available to reproduce the results.

Challenges: Despite the potential, leveraging PDF reports consisting of text and images for attribute value extraction remains a difficult problem. We highlight a few challenges faced while designing and executing the extraction framework:

C1: Text Extraction from PDF: PDF reports can combine multiple images, overlapping text elements, annotations, metadata, and unstructured text with no specific layout. Extracting text from such reports is challenging and can lead to misspelled text and loss of topic-specific context. Another issue is missing and noisy attributes: the text might not contain all the attributes we are looking for. Therefore, visual attribute extraction plays an important role.

C2: Image Extraction from PDF: Images in PDF reports can be embedded in various formats, such as JPEG and PNG, and compressed to reduce size. Extracting images while maintaining their resolution and quality requires specialized handling to accurately preserve the original appearance. Also, images can carry multi-labeled attributes that can confuse the model; this can be mitigated by merging certain attribute values to help with model inference.

C3: Extracting Product Attributes: Product tags extracted from text and images need to be carefully mined to match product attributes. The attributes differ based on the product category and can be multi-labeled. For example, women’s tops have sleeve-related attributes, whereas women’s trousers have a fit-type attribute and the sleeve attribute is irrelevant.

C4: Mapping Product Attributes to Product Catalog: An e-commerce catalog has specific products with attributes mapped to them. Onboarding new attributes based on PDF reports requires creating new attributes or refactoring existing ones.

Informal Problem 2. Can we develop unsupervised models that require limited human annotation? Additionally, can we develop models that can extract explainable visual attributes, unlike black-box methods that are difficult to debug?

Mitigating Challenges: Current multi-modal attribute extraction solutions [1, 2] are inadequate in the e-commerce field when it comes to handling challenges C2 and C4. Conversely, solutions that successfully extract attribute values are primarily text-oriented [4, 5, 6, 7] and cannot be easily applied to extracting attributes from images. In this work, we address the central question: how can we perform multi-modal product attribute extraction from upcoming-trend PDF reports? A detailed description of how we handle each challenge is given in Section 3. Our proposed method, PAE, extracts upcoming trends from PDF reports generated by the trend forecasting company. This capability provides insight into upcoming market trends and customer preferences. Using trend forecasting reports, the catalog can be refined with new classes of products that have trending attributes, propelling value across the apparel space by accurately indicating attribute trends in the market and increasing customer satisfaction. The contributions of our paper are as follows:

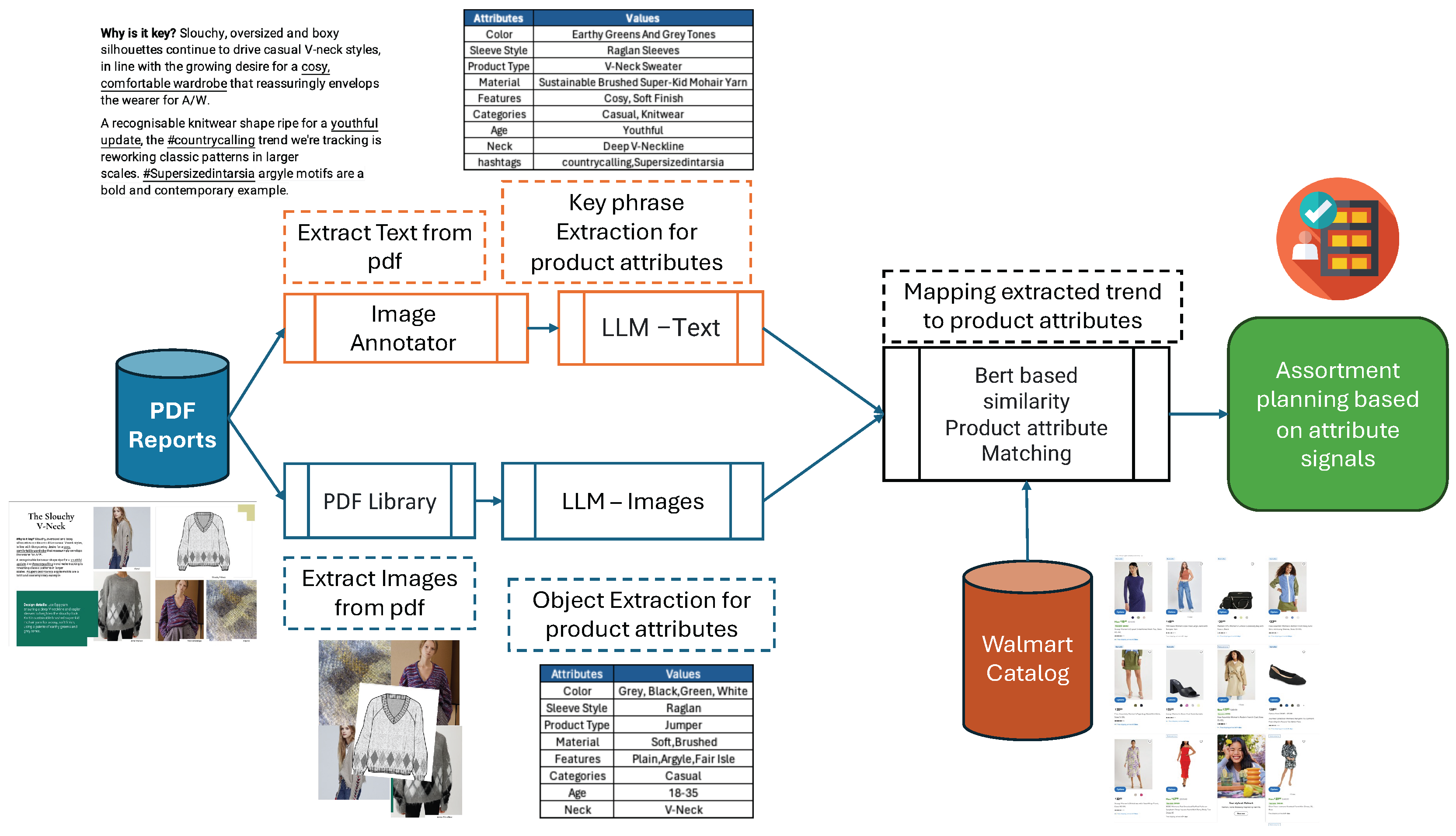

Novel Problem Formulation: We propose an end-to-end model that jointly extracts trending product attributes and hashtags from PDF files consisting of text and image data and maps them back to the product catalog to obtain the final product attribute values. An example of the end-to-end execution of product attribute extraction and mapping is shown in Figure 2. Due to Walmart privacy requirements, the models and datasets are not open to the public. We have elaborated on the details of each model, and readers can use an LLM of their own choice.

Flexible Framework: We develop a general framework, PAE, for extracting text and images from PDF files and then generating product attributes. All components can be easily modified to enhance capability or to use the framework partially for other applications. The extraction engine can also be used to extract attributes for other product categories, such as electronics and home decor.

Experiments: We performed extensive experiments on real-life datasets to demonstrate PAE’s efficacy. It successfully discovers attribute values from text and image data with a high F1-score of 96.8%, outperforming state-of-the-art models. This demonstrates its ability to produce stable and promising results.

The remainder of the paper is organized as follows: the problem formulation is given in Section 2. In Section 3, we describe our proposed method, PAE, in detail with examples. Finally, we show the experimental results in Section 4, and Section 5 concludes the paper.

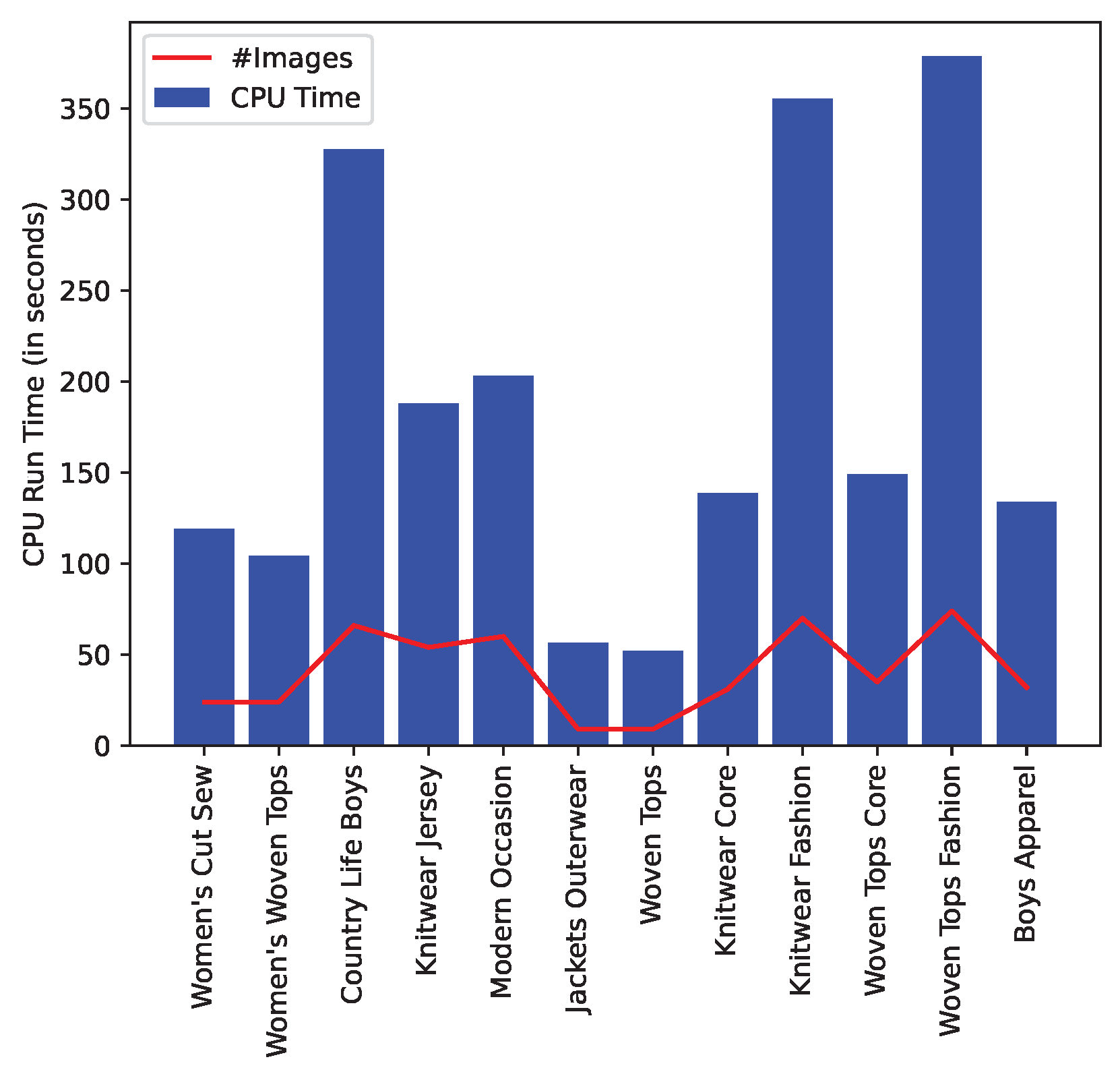

3. Product Attribute Extraction

In this work, we tackle attribute value extraction as a pair task, i.e., extracting the attribute values from image and text together. The input of the task is a (textual information $T$, set of images) pair per PDF page, and the output is the product attribute values. Our framework is presented in Figure 3. Through extensive experiments (see Section 4), we show that our proposed method is not only intuitive but also achieves state-of-the-art performance compared to methods previously proposed in the literature. Due to Walmart privacy requirements, the models and datasets are not open to the public. We have elaborated on the details of each model, and readers can use an LLM of their choice.

3.1. Text Extraction from PDF

Text extraction from PDF is an important process that involves the conversion of data contained in PDF files into an editable and searchable format. This procedure is crucial for activities like data analysis, content repurposing, and detecting trends from public reports. However, it can pose certain challenges. The layout complexity of a PDF document can make the extraction process difficult. For instance, the presence of multiple columns, images, tables, and footnotes can complicate the extraction of pure text. Another challenge is the use of non-standard or custom fonts in PDFs, which can lead to inaccurate extraction results. Moreover, the presence of "noise" such as headers, footers, HTML tags, and page numbers can also interfere with the extraction process.

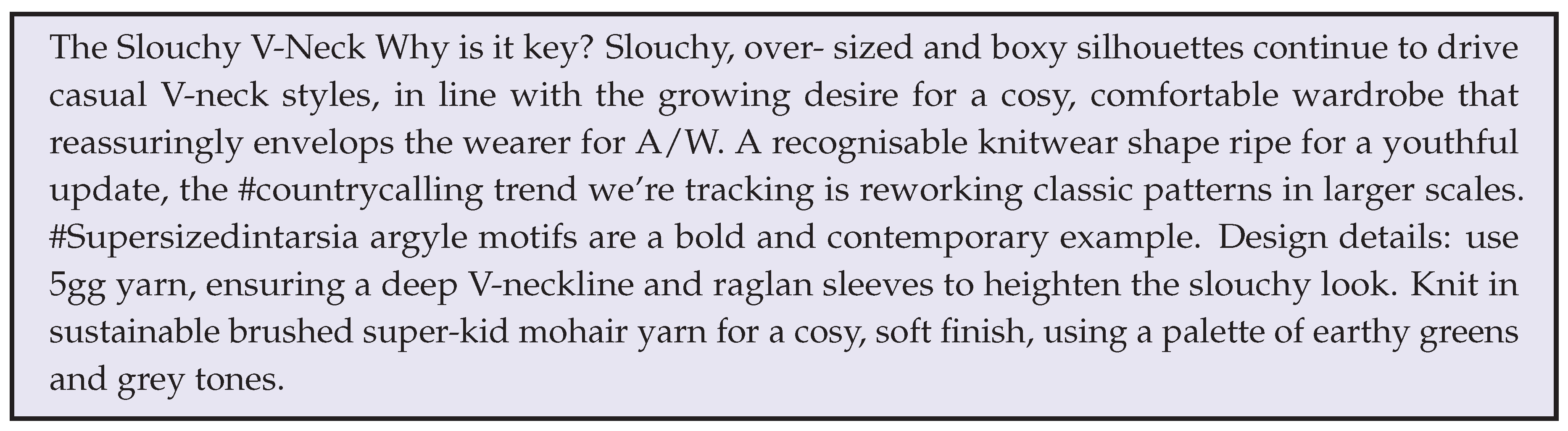

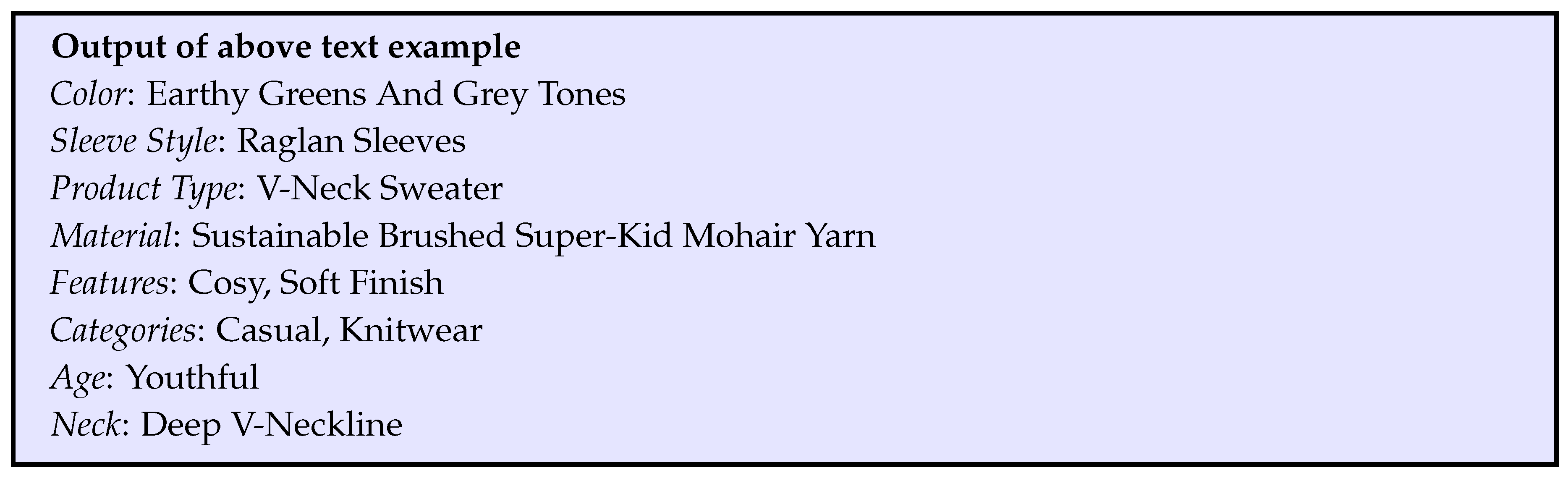

There are numerous tools available for text extraction from PDF files. A web search for PDF text extraction yields a plethora of tools, such as pdfMiner [8] and pdfquery [9]. Instead, Figure 4 shows the process we used to extract text from PDF files. First, we split the PDF files into PIL (Python Imaging Library) images using the "convert_from_path" function from pdf2image [10]. Internally, the function uses the pdfinfo command-line tool to extract metadata from the PDF file, such as the number of pages. It then uses the pdftocairo command-line tool to convert each page of the PDF into an image. Second, we convert the images to grayscale and apply a morphological gradient operator to each page to enhance and isolate text regions. Finally, we use Image Annotator [11], which provides Optical Character Recognition (OCR) capabilities, for text extraction. Once the text is extracted, we use a spell corrector such as language-tool to fix any text misinterpreted by OCR. The text extracted from the PDF report for the product type "Slouchy V-Neck" is given below:

Figure 4. Text Extraction via Image Annotator


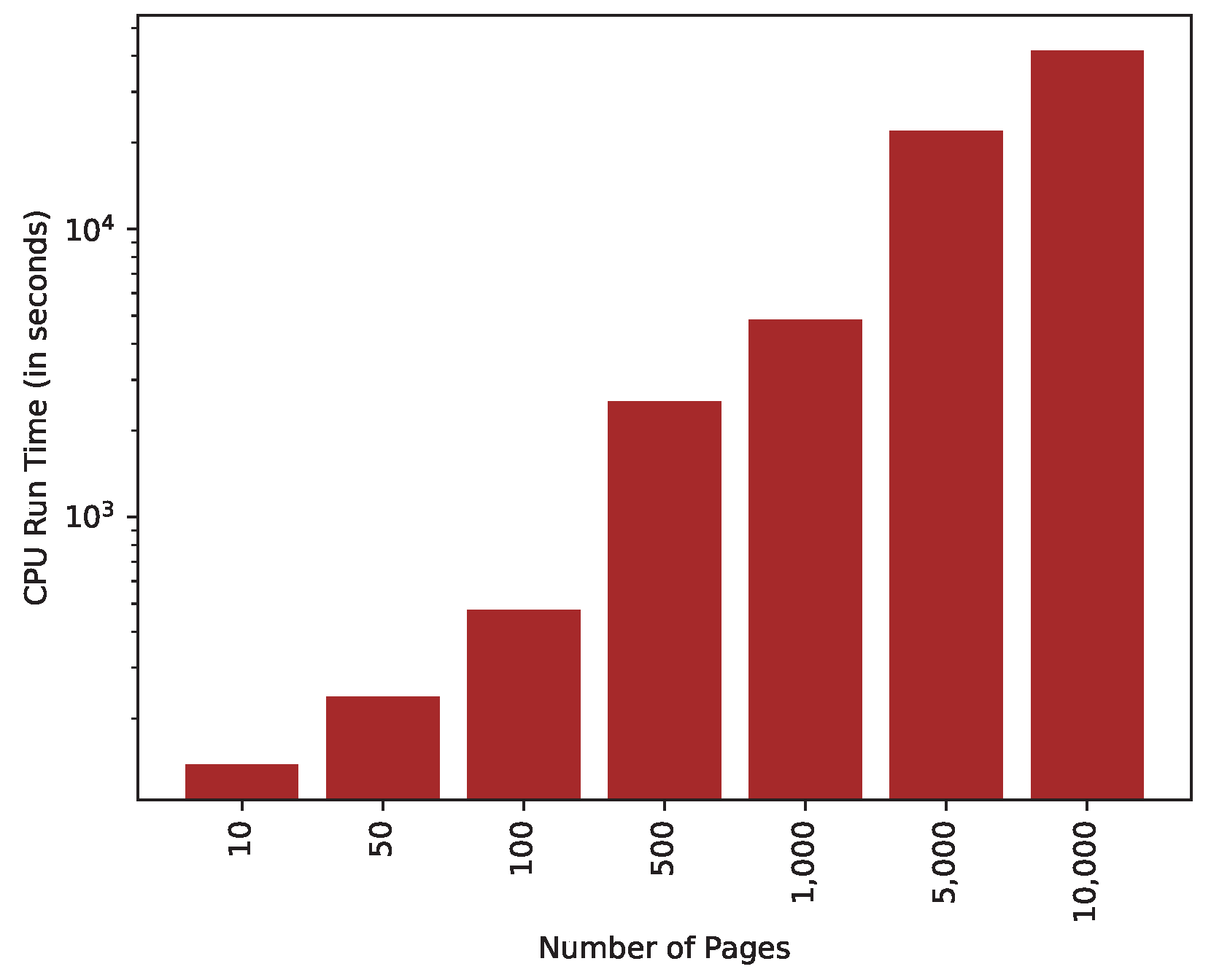

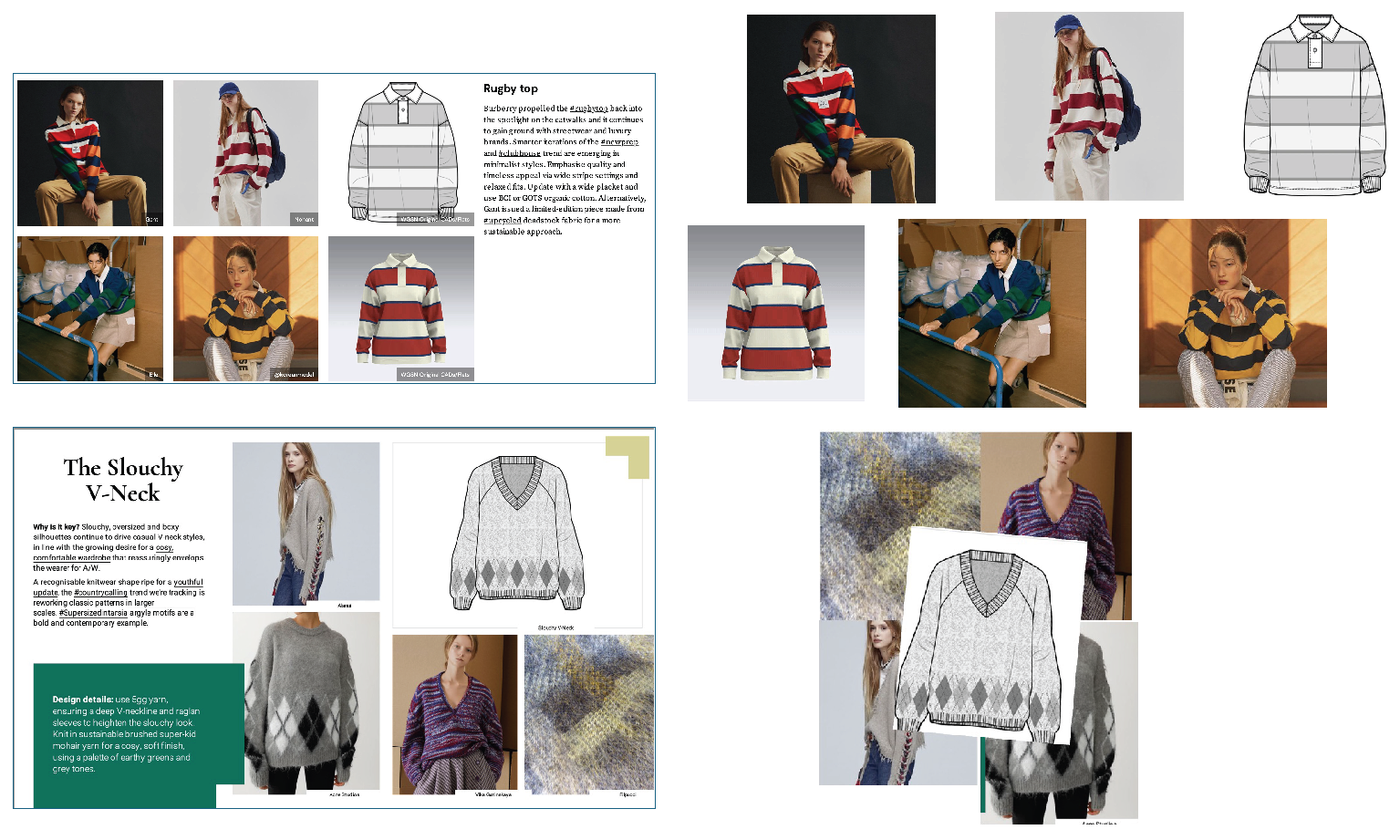
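The grayscale and morphological-gradient step described above can be illustrated with a minimal, dependency-free sketch. It assumes a 3x3 square structuring element and represents a page as nested lists of intensities; neither detail comes from the paper, and the function names are hypothetical. In practice the same operation is usually performed with an image library, for example OpenCV's `cv2.morphologyEx` with `cv2.MORPH_GRADIENT`.

```python
from typing import Callable, Iterable, List

Gray = List[List[int]]  # grayscale image as rows of 0-255 intensities


def _window_reduce(img: Gray, reducer: Callable[[Iterable[int]], int]) -> Gray:
    """Apply `reducer` (min or max) over each pixel's 3x3 neighbourhood."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clip the window at the image border instead of padding.
            neighbours = [
                img[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = reducer(neighbours)
    return out


def morphological_gradient(img: Gray) -> Gray:
    """Dilation minus erosion: non-zero only where intensity changes."""
    dilated = _window_reduce(img, max)  # assumed 3x3 square kernel
    eroded = _window_reduce(img, min)
    return [[d - e for d, e in zip(d_row, e_row)]
            for d_row, e_row in zip(dilated, eroded)]


# A 5x5 page fragment: white background (255) with one dark "stroke" pixel.
page = [[255] * 5 for _ in range(5)]
page[2][2] = 0
gradient = morphological_gradient(page)
```

Flat background regions map to zero while the neighbourhood of the dark pixel lights up, which is why the operator is useful for isolating text strokes before OCR.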

3.2. Image Extraction from PDF

PDF files can contain images in various formats such as JPEG, PNG, or TIFF, and extracting images from different formats may require multiple techniques. A second challenge is the different types of images in PDF files, including scanned documents, vector graphics, and embedded images. Third, extracting images from large PDF files efficiently and in a timely manner can be difficult, especially with limited system resources. To tackle these challenges, we use a pure-Python PDF library [12] as a standalone library for directly extracting image objects from PDF files. With this library, we identify the pages that contain images and extract the images as raw byte strings. Then, using Pillow, the extracted images are processed and saved in JPEG format. Figure 5 shows images extracted from the files.
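Because the extracted images arrive as raw byte strings in several formats, one simple way to decide how to handle each is to inspect its leading file signature before passing it to an image library. The sketch below is an illustration only, not the paper's implementation: `sniff_image_format` is a hypothetical helper, and it assumes the PDF library returns complete encoded image files rather than bare pixel data.

```python
from typing import Optional

# Leading byte signatures for the formats named in the text.
_SIGNATURES = (
    (b"\xff\xd8\xff", "jpeg"),
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"II*\x00", "tiff"),  # little-endian TIFF
    (b"MM\x00*", "tiff"),  # big-endian TIFF
)


def sniff_image_format(raw: bytes) -> Optional[str]:
    """Return 'jpeg', 'png' or 'tiff' based on the signature, else None."""
    for magic, name in _SIGNATURES:
        if raw.startswith(magic):
            return name
    return None


# Byte strings with an unknown signature can be skipped or logged
# instead of being handed to the image library.
kind = sniff_image_format(b"\xff\xd8\xff\xe0" + b"\x00" * 16)
```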

3.3. Attribute Extraction from Text

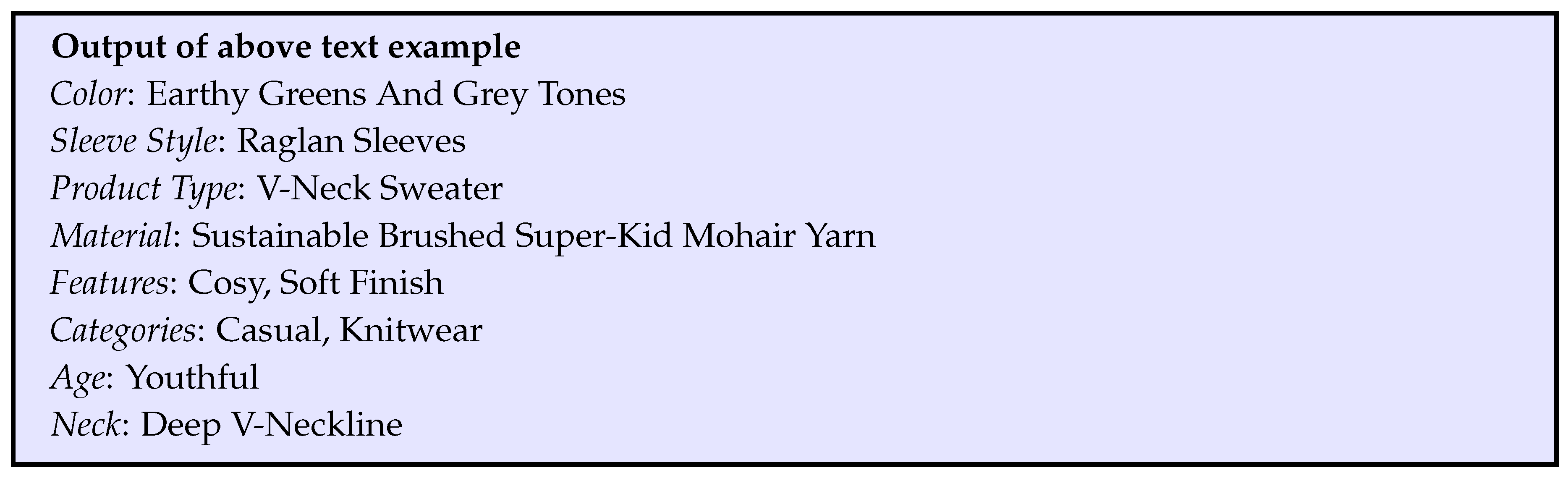

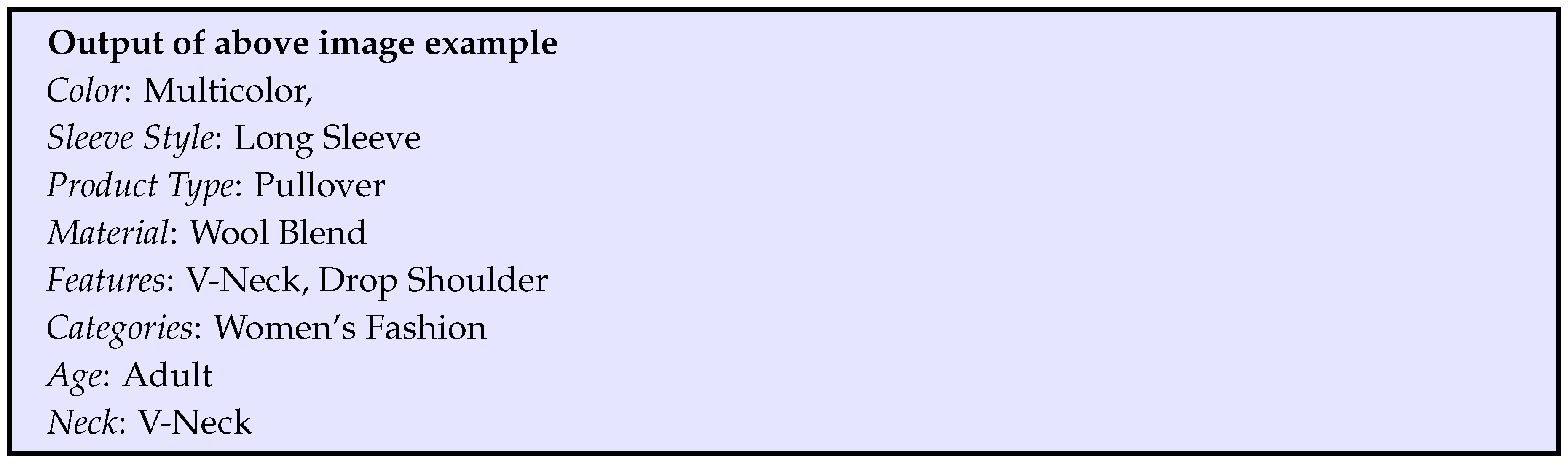

PDF reports cover specific products or product categories, providing details on their design, features, materials, colors, and styles. These reports provide information about product innovations, market demand, and consumer preferences in upcoming months or years. Here, we extract eight product attributes, namely Color, Sleeve Style, Product Type, Material, Features, Categories, Age, and Neck. We also extract hashtags to discover and explore content related to a specific topic or theme in the catalog.

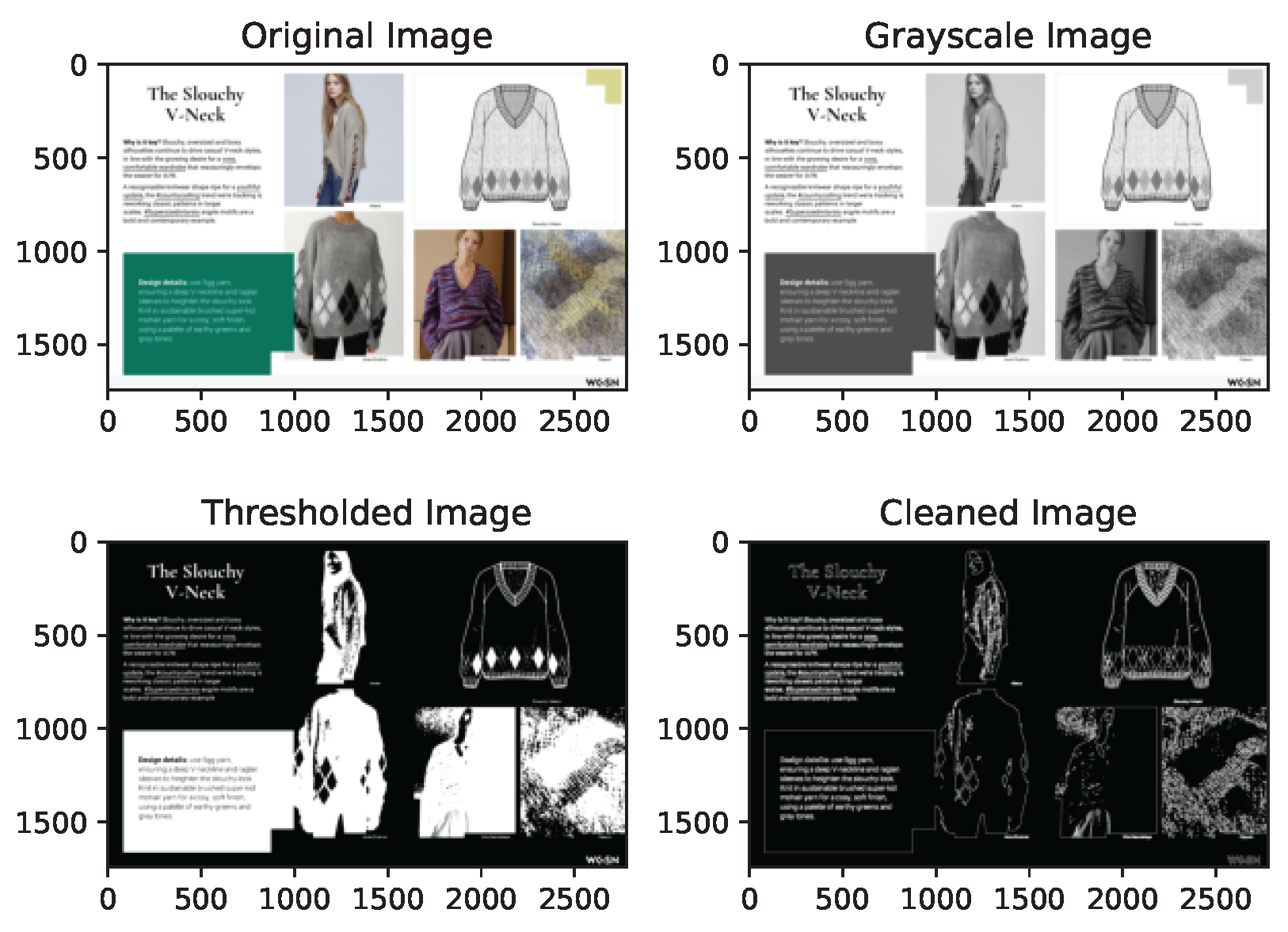

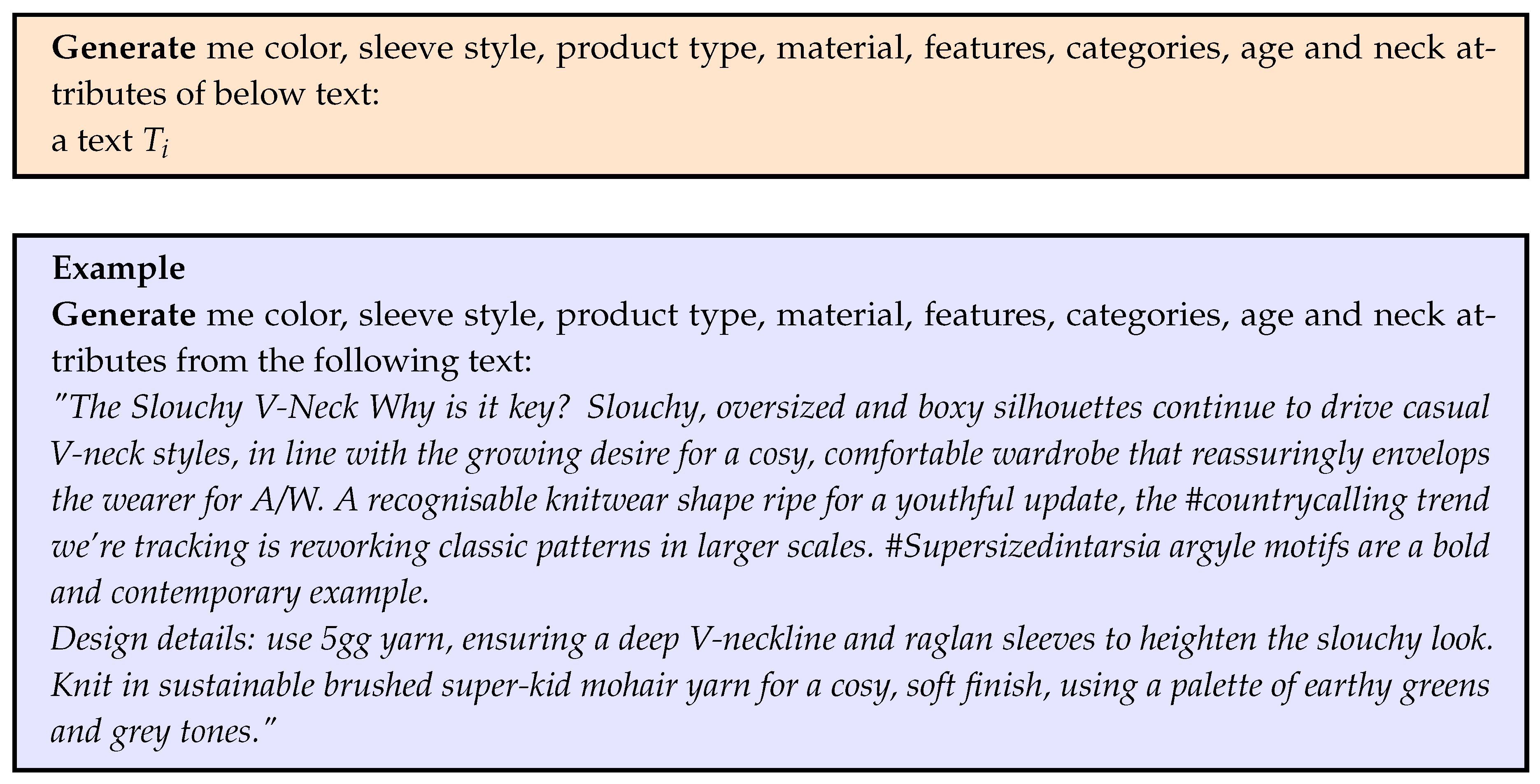

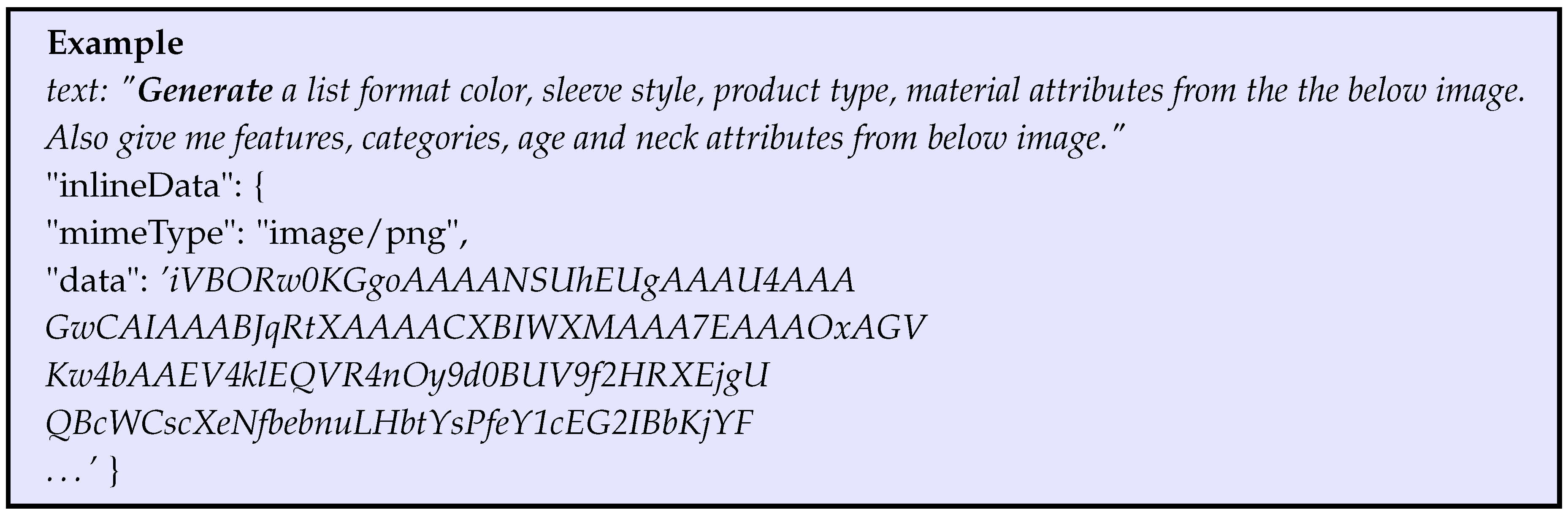

We use an LLM to extract the attributes. Below is a sample prompt:

The output is then converted into a dictionary as follows:

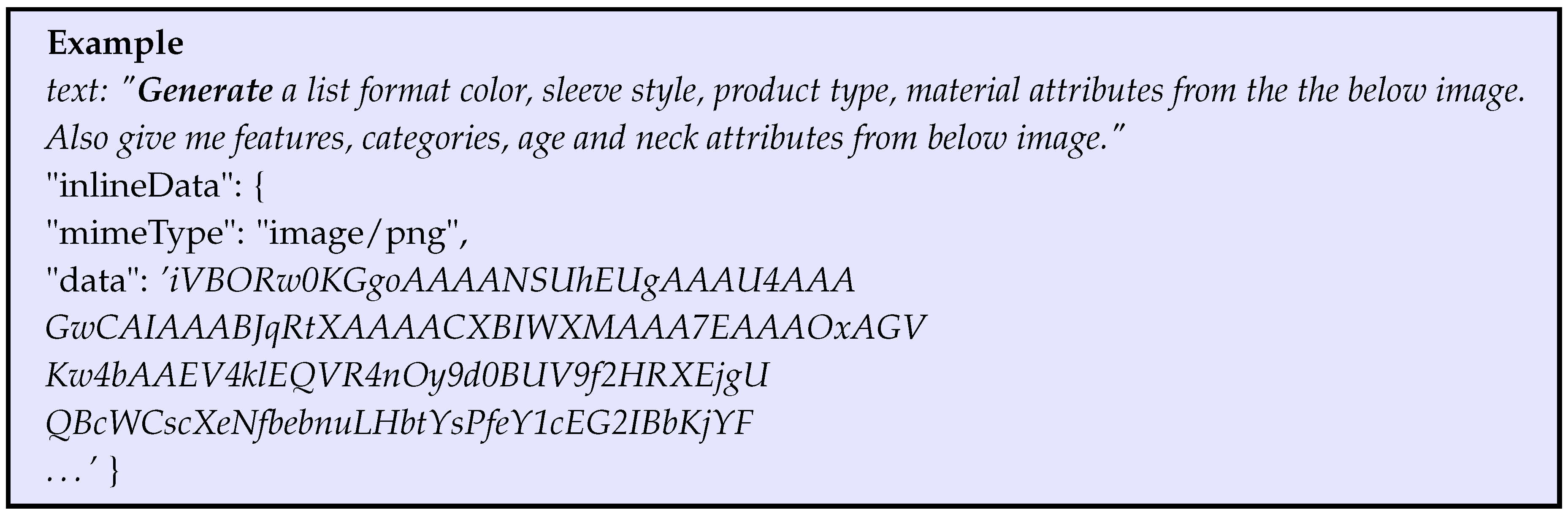

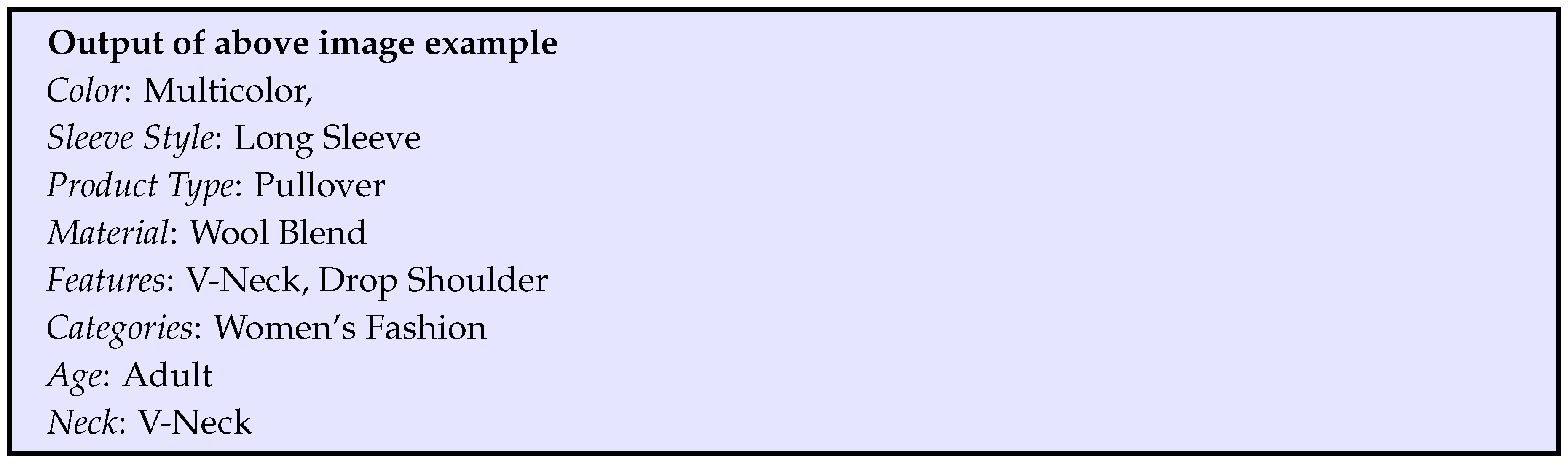
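A minimal sketch of this prompt-and-parse step is given below. The prompt wording, the JSON reply format, and the function names are assumptions made for illustration; the paper's actual prompt is not reproduced here, and no real LLM is called (a hard-coded string stands in for the model reply).

```python
import json
from typing import Dict, List

# The eight attributes listed in the text.
ATTRIBUTES = ["Color", "Sleeve Style", "Product Type", "Material",
              "Features", "Categories", "Age", "Neck"]


def build_prompt(page_text: str) -> str:
    """Hypothetical prompt asking for a JSON object keyed by attribute."""
    return (
        "Extract the following product attributes from the text and answer "
        "with a JSON object whose keys are exactly "
        f"{json.dumps(ATTRIBUTES)} and whose values are lists of strings "
        "(use an empty list when an attribute is not mentioned).\n\n"
        f"Text:\n{page_text}"
    )


def parse_response(raw: str) -> Dict[str, List[str]]:
    """Turn the model's JSON reply into a dict with one list per attribute."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    parsed: Dict[str, List[str]] = {}
    for name in ATTRIBUTES:
        value = data.get(name, [])
        if isinstance(value, str):  # tolerate a bare string value
            value = [value]
        elif not isinstance(value, list):
            value = []
        parsed[name] = [str(v).strip() for v in value if str(v).strip()]
    return parsed


# Stand-in for a model reply; the values are illustrative only.
reply = '{"Color": ["white"], "Neck": "V-Neck", "Material": ["organic cotton"]}'
attributes = parse_response(reply)
```

Keeping every attribute as a list, even when empty, accommodates multi-valued attributes such as color and makes the later per-page merge straightforward.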

3.4. Attribute Extraction from Images

The extraction of detailed image attributes from fashion images has a wide range of uses in the field of e-commerce. The recognition of visual image attributes is vital for understanding fashion, improving catalogs, enhancing visual searches, and providing recommendations. In fashion images, the dimensionality can be higher due to the complexity and diversity of fashion items. For instance, a single piece of clothing can have multiple attributes for color, fabric type, style, design details, size, brand, and others. Hence, image attribute extraction has become more complex than text. However, these attributes can be extracted using various computer vision techniques, such as image segmentation, object detection, pattern recognition and deep learning algorithms. In this work, we explore the vision based LLM model. Each extracted image as shown in

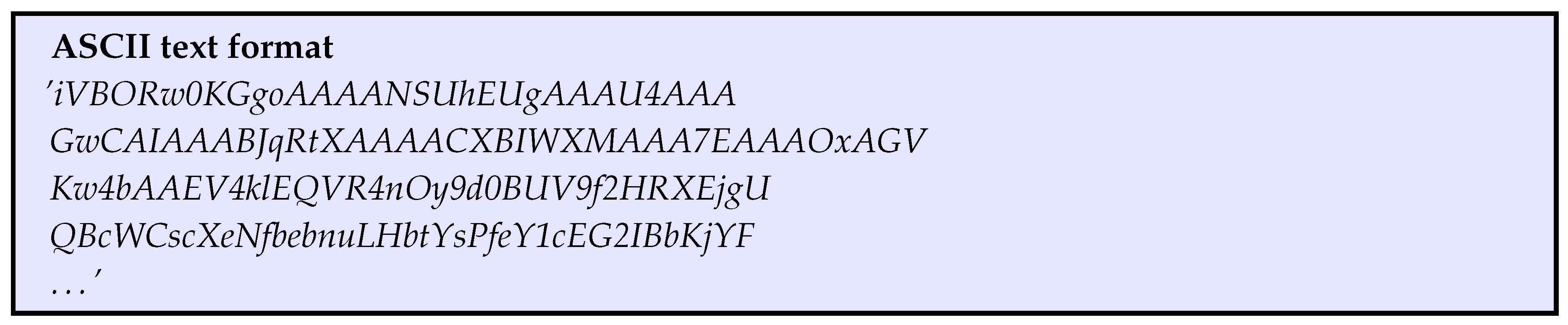

Figure 6 is converted to base64 encoding. Base64 encoding is a method of converting binary data, such as an image, into ASCII text format. This is required as current LLM models takes text format as input. The ASCII text format example as follow:
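The encoding step can be sketched with Python's standard `base64` module. The `to_data_url` helper is a hypothetical convenience; whether a given vision model accepts a data URL or a bare base64 string depends on the provider, so treat that part as an assumption.

```python
import base64


def encode_image(raw: bytes) -> str:
    """Encode raw image bytes as an ASCII base64 string."""
    return base64.b64encode(raw).decode("ascii")


def to_data_url(raw: bytes, mime: str = "image/jpeg") -> str:
    """Hypothetical helper: wrap the base64 string in a data URL."""
    return f"data:{mime};base64,{encode_image(raw)}"


# The 8-byte PNG signature stands in for a full image file here.
png_header = b"\x89PNG\r\n\x1a\n"
encoded = encode_image(png_header)  # "iVBORw0KGgo="
```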

Next, we use this encoded string along with an LLM prompt to generate the product attributes as follows:

The output is then converted into a dictionary as follows:

Another common issue that arises is the presence of noisy and missing labels. It is a challenging task to accurately label and annotate all the relevant information for every page in the PDF. Despite employing various automated and manual annotation processes, it is nearly impossible to obtain perfectly labeled structured data. To address this, we employ image pre-processing or data cleaning techniques to eliminate duplicate, noisy, and invalid images before proceeding with attribute extraction. Once we extract attributes from text and images on each page, we aggregate them per page for our further analysis.
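The per-page aggregation can be sketched as a merge of the text-derived and image-derived dictionaries that keeps unique values per attribute. This is an illustration under stated assumptions: the case-insensitive comparison and the name `merge_page_attributes` are not taken from the paper.

```python
from typing import Dict, Iterable, List, Set

AttrDict = Dict[str, List[str]]


def merge_page_attributes(sources: Iterable[AttrDict]) -> AttrDict:
    """Union attribute values from several sources, keeping first-seen order."""
    merged: AttrDict = {}
    seen: Dict[str, Set[str]] = {}
    for attrs in sources:
        for name, values in attrs.items():
            bucket = merged.setdefault(name, [])
            keys = seen.setdefault(name, set())
            for value in values:
                key = value.strip().casefold()  # assumed: ignore case and padding
                if key and key not in keys:
                    keys.add(key)
                    bucket.append(value.strip())
    return merged


# Illustrative values only.
text_attrs = {"Color": ["White", "blue"], "Neck": ["V-Neck"]}
image_attrs = {"Color": ["white"], "Material": ["Cotton"]}
page_attrs = merge_page_attributes([text_attrs, image_attrs])
```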

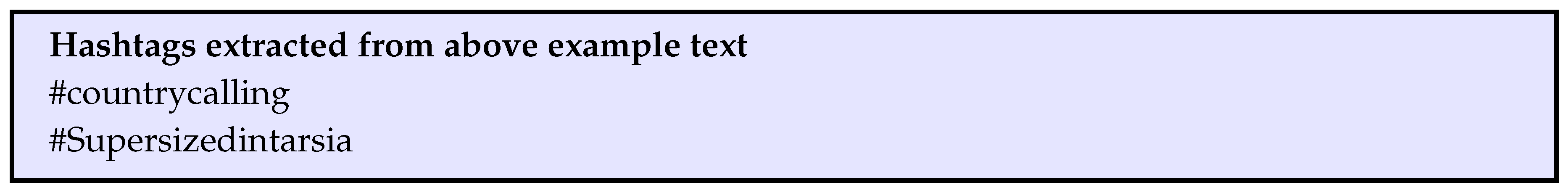

3.5. Hashtag Detection in Text

Hashtags are words or phrases preceded by the pound sign (#) and are commonly used on social media platforms to categorize and group similar content. Detecting hashtags in text is crucial for various applications such as topic modeling, sentiment analysis, and product recommendation. The process of hashtag detection involves analyzing the text and identifying words or phrases that are preceded by the pound sign, while considering factors such as word boundaries and punctuation marks. The extracted hashtags can then be used to gain insights into trending topics and user interests, or to enhance search and recommendation systems. In our work, we use a regular expression to detect the hashtags.
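A minimal sketch of regex-based hashtag detection follows. The exact pattern used in the paper is not given, so the pattern below is an assumption: it requires that the pound sign is not preceded by a word character (to skip strings like "item#42") and it stops at punctuation.

```python
import re
from typing import List

# '#' must not follow a word character or another '#'; the tag is a run of
# letters, digits or underscores.
HASHTAG_RE = re.compile(r"(?<![\w#])#(\w+)")


def find_hashtags(text: str) -> List[str]:
    """Return unique hashtags (without '#') in order of first appearance."""
    seen = set()
    tags = []
    for match in HASHTAG_RE.finditer(text):
        tag = match.group(1)
        key = tag.casefold()  # treat #Tag and #tag as the same hashtag
        if key not in seen:
            seen.add(key)
            tags.append(tag)
    return tags


# Hypothetical sample text.
sample = "Key looks: #ModernRomantic, #90sRevival and item#42. #modernromantic!"
hashtags = find_hashtags(sample)
```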

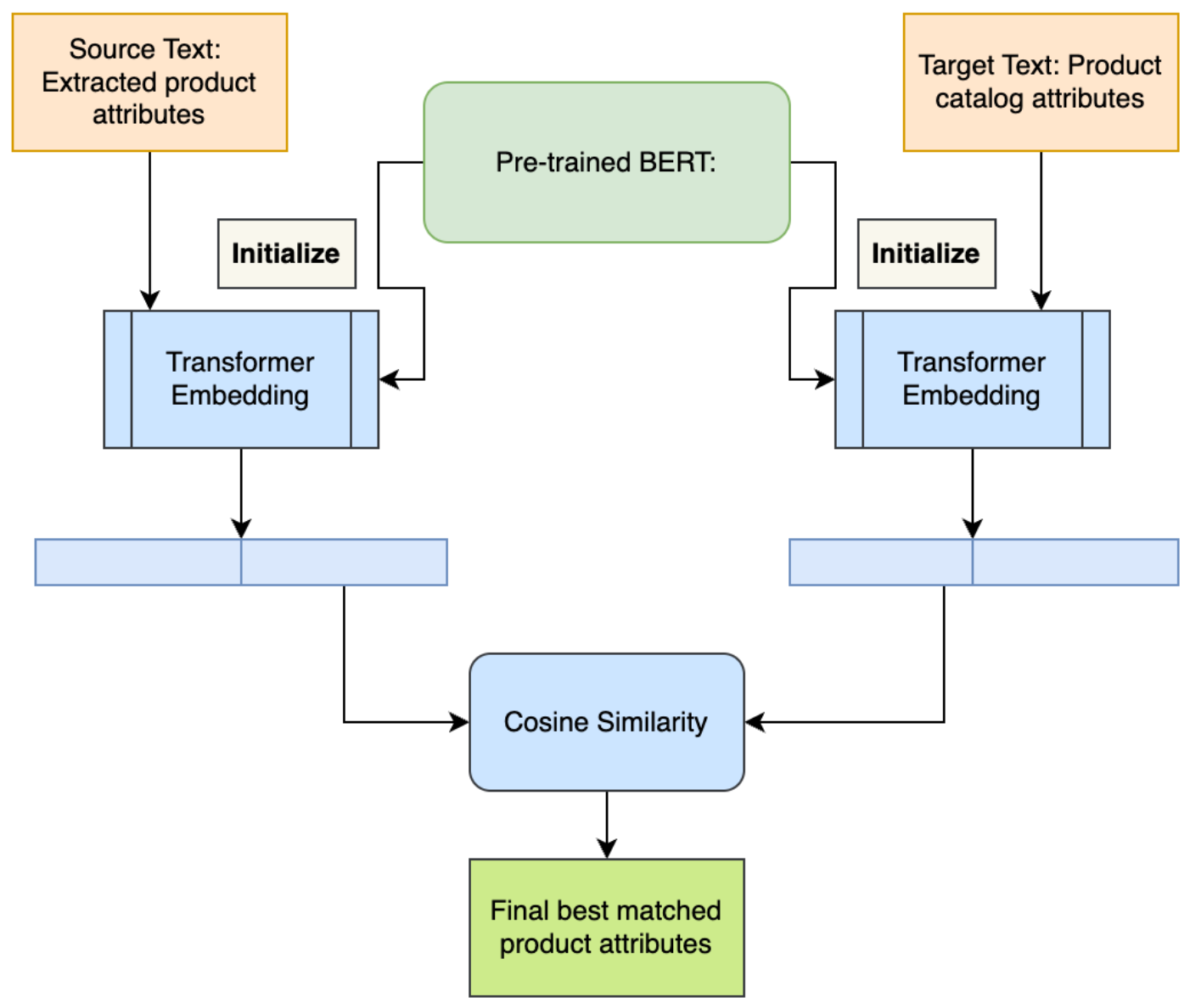

3.6. Product Attribute Matching

The purpose of product attribute matching is to ensure that extracted attributes meet specific criteria or requirements. Based on the identified attributes, retailers plan their future product assortments. This includes selecting or designing clothing items, accessories, and other fashion products that reflect the upcoming trends. One of the challenges of product attribute matching is that the same value of an attribute can be represented in multiple ways. For example, "vneck" and "V-Neck" are consolidated into "V-Neck" as the neck product attribute.

Figure 7 shows our framework for matching the predicted attributes to the existing catalog attributes. We use a pre-trained uncased BERT model. BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. We create representations, or embeddings, for the predicted attributes and the existing product attributes. Finally, we use cosine similarity to match similar product attributes from the catalog.
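The matching step can be sketched as a nearest-neighbour search under cosine similarity. To keep the example self-contained, the embedding below is a stand-in built from character trigram counts, not BERT; in the described framework the `embed` function would be replaced by vectors from the pre-trained model. The 0.8 threshold and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str, n: int = 3) -> Counter:
    """Stand-in embedding (NOT BERT): counts of character n-grams."""
    norm = re.sub(r"[^a-z0-9]", "", text.casefold())
    padded = f"#{norm}#"  # mark the start and end of the value
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def match_to_catalog(predicted: str, catalog: List[str],
                     threshold: float = 0.8) -> Optional[Tuple[str, float]]:
    """Return the most similar catalog value, or None below the threshold."""
    query = embed(predicted)
    scored = [(value, cosine(query, embed(value))) for value in catalog]
    best = max(scored, key=lambda pair: pair[1], default=None)
    if best is None or best[1] < threshold:
        return None
    return best


catalog_necks = ["Crew Neck", "V-Neck", "Round Neck"]
match = match_to_catalog("vneck", catalog_necks)
```

Returning `None` below the threshold gives a natural hook for the catalog-side decision described in challenge C4: an unmatched value may call for a new attribute value rather than a forced mapping.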

Summary: As shown in Figure 2, our proposed approach relies on four successive steps. First, the text and image extraction step extracts all the text (paragraphs) and relevant images from the given PDF files. Second, the attribute extraction step uses LLMs to extract relevant attributes from the images and text. Third, in the merging step, we consolidate attributes into each category and keep unique values for each attribute. Finally, the catalog matching step helps retailers find products that match existing inventory and plan for future assortment.