Note

This article is an extended version of a conference paper presented at an IEEE conference, titled "Improving Measurement Accuracy of Acoustic Intensity in Vibrating Machines Using a Doosan Robot" [1]. We hold the copyright to the published conference paper. The primary modifications include several significant enhancements: the integration of artificial intelligence for more accurate sound source detection, the implementation of offline programming for the robot to improve operational efficiency, the addition of a comprehensive experimental study to validate our findings, and a thorough enhancement of the data analysis with updated and more robust results. It is important to note that this study focuses on acoustic pressure rather than intensity.

1. Introduction

Over the years, several research and measurement techniques have been proposed to measure and localize noise sources. A summary of these methods can be found in Ref. [2].

Jung and Ih [3] introduced a comprehensive microphone array approach to accurately locate sound sources through acoustic intensimetry. Their study concentrated on creating three-dimensional (3D) intensimetry techniques for precise localization of compact sound sources. The analysis emphasized addressing spatial bias errors arising from the irregularity of directivity, leading to the investigation of potential compensation methods.

Botteldooren et al. [4] conducted industrial sound source localization using microphone arrays under difficult meteorological conditions. Their investigation highlighted the need for long-term monitoring of industrial noise. The study identified difficult meteorological conditions, characterized by highly variable sound attenuation, as significant challenges.

Cherif and Atalla [5] developed a hybrid method to measure the radiation efficiency of complex structures across a wide frequency range. This technique combines direct measurements in an anechoic room with an indirect approach utilizing Statistical Energy Analysis (SEA) in a reverberant room. Validation was performed on two types of constructions: metallic (flat and stiffened aluminum panels) and sandwich composite (honeycomb core). Experimental results were compared with analytical predictions based on Leppington’s asymptotic formulas. Their studies demonstrated that the technique accurately identifies radiation efficiency and distinguishes between intrinsic and radiation damping.

Simmons [6] conducted a comprehensive study focused on measuring sound pressure levels at low frequencies within the context of room acoustics. This research involved a meticulous comparison of various methods documented in a literature survey. The primary objective was to evaluate the performance of these methods, particularly in scenarios dominated by low-frequency sounds or when measured in octave or third-octave bands.

Other studies have examined further aspects of sound pressure measurement. Švec and Granqvist [7] addressed sound pressure level in voice and speech, contributing to the accuracy and reproducibility of sound pressure level measurements in that domain, while Yunus et al. [8] proposed an advanced method for using noise measurement as an effective analysis tool before or after noise control efforts in industries using mechanical machinery.

These studies provide valuable insights into different facets of sound pressure measurement. However, they often rely on arrays of multiple microphones, which come with significant limitations, such as intricate calibration procedures, higher costs, and longer data collection times, which can hamper real-time noise source identification.

To overcome the limitations of traditional methods, there has been a growing interest in using automation and robotics for acoustic pressure measurements. Several recent studies have explored using robotic systems in various settings.

For instance, Wang et al. [9] developed an automated system that can measure the sound power level of HVAC systems. Meanwhile, Yang et al. [10] developed a robotic platform for sound power and intensity measurements in open spaces. Nonetheless, these methods still require an operator to define the calibration needed for accurate mapping, which makes the measurement process only semi-automatic.

Blazek et al. [11] present a case study of sound mapping using a scanning microphone system in an urban environment. The authors used a scanning microphone system mounted on a vehicle to measure sound levels along a predefined route through the city.

Mansouri et al. [12] present an experimental investigation of sound power mapping of a vibrating machine using a scanning microphone and a robot. The researchers used the hemisphere method to measure the sound pressure at different points on the surface of a hemispherical array surrounding the machine. The scanning microphone and the robot were used to automate the measurement process and obtain a fully automatic scan of the machine. The results showed that the proposed method could accurately map the sound power of the vibrating machine, which could help industries identify and mitigate noise sources.

In the mentioned papers, the authors present varied experimental methods to measure sound power levels using robotic systems in different settings. These methodologies rely on pre-programmed instructions guiding the robots to execute repetitive tasks. Notably, none of these methods have yet integrated AI for autonomous measurements.

This paper aims to investigate and improve the acoustic pressure measurement in vibrating machines using a Doosan robot. The objective is to automate the measurement process, enabling a fully automated scan using a Doosan Robotic Vision System and a single microphone, allowing precise source localization.

This study used loudspeakers as the sound source, representing an exciting option for acoustic mapping. The remainder of this paper is organized as follows. Section 2 describes the background of the methods used to estimate the sound pressure. Section 3 describes the proposed experimental methods. Finally, the experimental and theoretical results are compared and discussed in Section 4.

2. Background

This section briefly overviews sound pressure and sound pressure level measurement techniques, focusing on the fundamental principles of sound energy and its distribution. It also explores the impact of artificial intelligence (AI) on acoustic measurement.

2.1. Overview of Approaches

Sound or acoustic pressure is the local deviation from the ambient atmospheric pressure caused by a sound wave. In air, sound pressure can be measured using a microphone. The SI unit for sound pressure $p$ is the pascal (Pa). Sound pressure level (SPL), or sound level, is a logarithmic measure of the effective sound pressure relative to a reference value. It is expressed in decibels (dB) above a standard reference level of 20 µPa for sound in air or other gases. This value is commonly taken as the threshold of human hearing at 1 kHz. The following equation gives the sound pressure level $L_p$ in decibels [dB] from the sound pressure $p$ in pascals [Pa]:

$$L_p = 20 \log_{10}\left(\frac{p_{\mathrm{rms}}}{p_0}\right)$$

where $p_0$ is the reference sound pressure and $p_{\mathrm{rms}}$ is the root mean square (RMS) sound pressure. Most sound level measurements are made relative to this reference, so a pressure of 1 Pa corresponds to an SPL of 94 dB.
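As a quick numerical check of the 1 Pa ≈ 94 dB statement, the following minimal sketch computes the SPL as 20·log10(p_rms/p_0) with p_0 = 20 µPa. The function names and the synthetic 1 kHz test tone are illustrative assumptions, not part of the measurement chain used in this study.

```python
import math

P_REF = 20e-6  # reference sound pressure in air, 20 µPa


def rms(samples):
    """Root mean square of a sequence of pressure samples in pascals."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def spl_db(p_rms, p_ref=P_REF):
    """Sound pressure level in dB re p_ref for an RMS pressure in pascals."""
    return 20.0 * math.log10(p_rms / p_ref)


# Synthetic 1 kHz sine with 1 Pa RMS (amplitude sqrt(2) Pa), one second at 48 kHz
fs = 48_000
tone = [math.sqrt(2) * math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]
print(round(spl_db(rms(tone)), 1))  # close to 94 dB
```

In practice the samples would come from the calibrated microphone signal rather than a synthetic tone.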

Sound intensity is defined as sound power per unit area. It depends on the distance from the sound source and on the acoustic environment in which the source is located [13].

Sound power is a characteristic of the sound source. It is independent of distance and is a practical way of comparing various sound sources. Sound power can be determined in different ways, from sound pressure or from sound intensity:

$$W = \int_S I \, dS$$

where $I$ is the sound intensity measured in W/m² and $S$ is the measurement surface enclosing the source.

The sound power level is determined by:

$$L_W = 10 \log_{10}\left(\frac{W}{W_0}\right)$$

where $W$ is the sound power [W] and $W_0$ is the reference sound power [W].
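To make the relation between intensity, power, and power level concrete, here is a small sketch. It assumes the simplest case of an intensity that is uniform and normal to a hemispherical surface, so that the power reduces to W = I·S, and it takes the conventional reference power of 1 pW; the function names and the example values are illustrative only.

```python
import math

W_REF = 1e-12  # conventional reference sound power, 1 pW


def hemisphere_area(radius_m):
    """Area of a hemispherical measurement surface in m^2."""
    return 2.0 * math.pi * radius_m ** 2


def sound_power(intensity_w_m2, area_m2):
    """W = I * S, valid only for intensity uniform and normal to the surface."""
    return intensity_w_m2 * area_m2


def sound_power_level_db(power_w, w_ref=W_REF):
    """Sound power level in dB re w_ref."""
    return 10.0 * math.log10(power_w / w_ref)


# Example: 1 µW/m^2 measured uniformly on a hemisphere of radius 1 m
power = sound_power(1e-6, hemisphere_area(1.0))
print(round(sound_power_level_db(power), 2))
```

For a real source the intensity varies over the surface, so the product would be replaced by a sum over surface patches.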

The fixed-array microphone method is less stringent regarding the test environment and measurement setup requirements. This method supports all noise sources (continuous, intermittent, fluctuating, isolated bursts) and does not limit source size, provided the measurement conditions are met. Measurements can be taken indoors or outdoors, with one or more sound reflection planes present. Additional procedures are suggested for applying corrections if the environment is less than ideal.

The standard outlines various arrangements of microphones around a sound source. The measurement radius $r$ must satisfy several conditions for accurate assessment. Firstly, it should be at least $2d_0$ or $3h_0$, whichever is greater, where $d_0$ represents the characteristic dimension of the tested noise source and $h_0$ is the distance between the acoustic center of the source and the ground. Secondly, $r$ should be greater than or equal to $\lambda/4$, where $\lambda$ denotes the wavelength of the sound at the lowest frequency of interest. Finally, the radius must be at least 1 meter. These conditions ensure precise measurements of sound characteristics [14].
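The radius conditions can be checked numerically. The sketch below assumes the hemispherical-surface criteria commonly cited from ISO 3745 (at least 2·d0 or 3·h0, at least λ/4 at the lowest frequency of interest, and at least 1 m). The function name and the default speed of sound of 343 m/s are illustrative choices, not values taken from this study.

```python
def min_measurement_radius(d0_m, h0_m, f_min_hz, c_m_s=343.0):
    """Smallest hemisphere radius (m) satisfying all assumed conditions.

    d0_m: characteristic dimension of the source
    h0_m: height of the acoustic centre above the ground
    f_min_hz: lowest frequency of interest
    c_m_s: speed of sound (assumed 343 m/s, air at about 20 degrees C)
    """
    wavelength = c_m_s / f_min_hz
    return max(2.0 * d0_m, 3.0 * h0_m, wavelength / 4.0, 1.0)


# Small loudspeaker: the 1 m floor dominates at 100 Hz,
# while the quarter-wavelength condition dominates at 50 Hz.
print(min_measurement_radius(0.3, 0.2, 100.0))
print(min_measurement_radius(0.3, 0.2, 50.0))
```

Lower frequencies quickly push the required radius beyond the reach of a typical cobot arm, which is worth checking before planning a scan.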

Integrating automated acoustic pressure measurement using robots like Doosan cobots involves a combination of hardware and software approaches. The hardware component includes a scanning camera and microphone, which capture acoustic environmental signals. These signals are then processed and analyzed using algorithms that extract meaningful information about the acoustic pressure of the sound source. Software algorithms for processing and analyzing captured acoustic signals can employ different techniques such as Fast Fourier Transform (FFT) and Short-Time Fourier Transform (STFT) for frequency domain analysis, Spectral Subtraction for noise reduction, and Gaussian Mixture Models (GMM) for clustering and classification in audio processing [15].

2.2. AI and Acoustic Measurements

As technology advances, AI has become an integral component in enhancing the efficiency and accuracy of sound-related analyses. This section focuses on how AI algorithms, particularly in conjunction with robotics and computer vision, contribute to automating and optimizing the measurement processes. AI plays a crucial role not only in detecting specific objects but also in calculating their position relative to the microphone. It enables the robot to position itself strategically, guided by the information obtained from the camera’s detection of objects. This information is essential for the following steps. The camera first detects the sound source object, from which its position relative to the microphone is calculated. After successful detection, the measurement process is first programmed offline using the RoboDK software. Once the process has been validated, the robot switches to the automatic scanning phase to take acoustic measurements using the microphone mounted on its arm. This integration addresses the complex problems associated with traditional methods and opens up new possibilities for real-time monitoring and a more complete understanding of the acoustic environment in industrial settings.

3. Descriptions of the Measurements

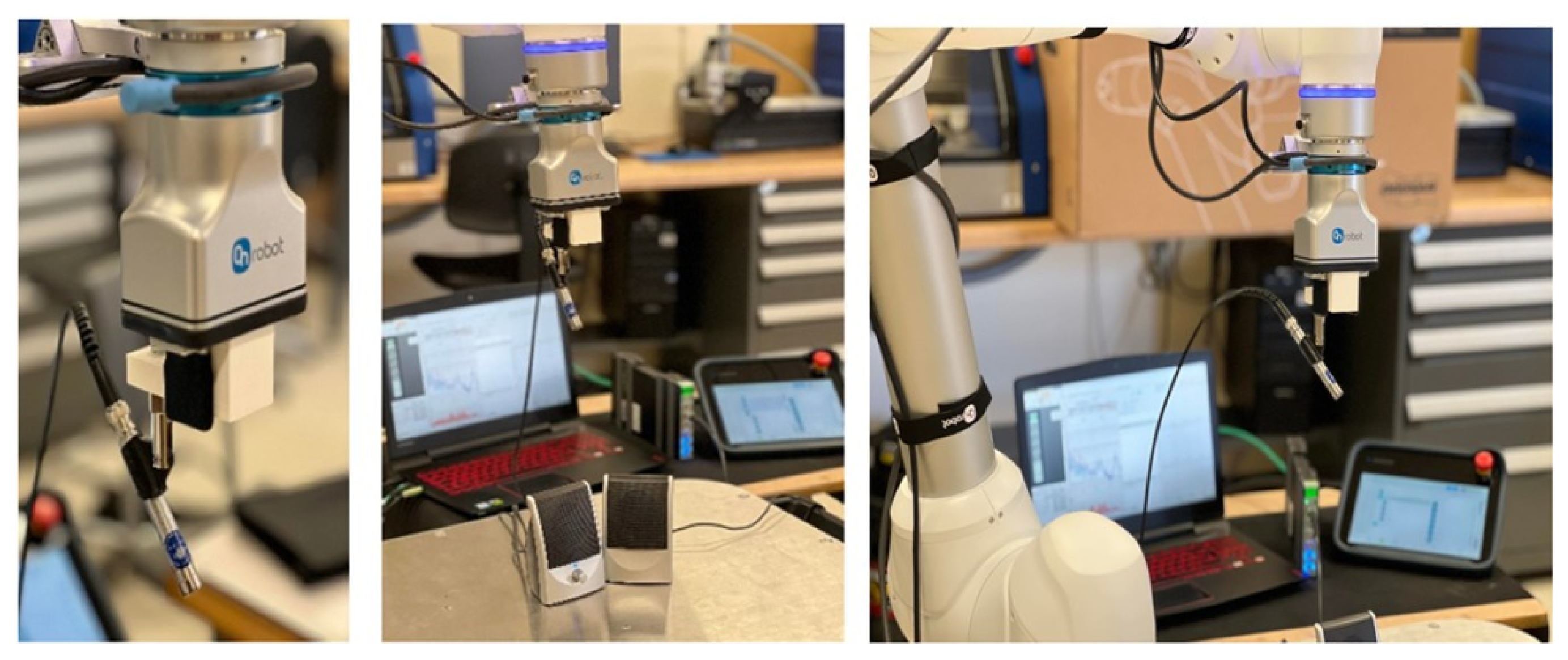

This section describes the sound pressure measurement process using a Doosan robot, a scanning camera, and a microphone. The analysis is performed in third-octave frequency bands from 10 Hz to 3.2 kHz. Comparisons between experimental and theoretical results are given in Section 4.
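For reference, the third-octave bands covering this range can be enumerated as follows. This is a minimal sketch assuming base-2 band definitions centred on 1 kHz; the exact centre frequencies differ slightly from the nominal values (for example 9.84 Hz for the nominal 10 Hz band), and the function name is hypothetical.

```python
import math


def third_octave_bands(f_low_nominal, f_high_nominal, f_ref=1000.0):
    """Return (lower, centre, upper) frequencies in Hz for base-2 third-octave bands.

    The nominal limits are snapped to the nearest band index n,
    where the exact centre frequency is f_ref * 2**(n / 3).
    """
    n_low = round(3 * math.log2(f_low_nominal / f_ref))
    n_high = round(3 * math.log2(f_high_nominal / f_ref))
    bands = []
    for n in range(n_low, n_high + 1):
        centre = f_ref * 2.0 ** (n / 3)
        half_band = 2.0 ** (1 / 6)  # band edges sit one sixth of an octave from the centre
        bands.append((centre / half_band, centre, centre * half_band))
    return bands


bands = third_octave_bands(10.0, 3200.0)
print(len(bands))
for lower, centre, upper in bands[:3]:
    print(f"{lower:7.2f} {centre:7.2f} {upper:7.2f}")
```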

The measurement process involves several steps: first, acquiring and processing images, then detecting and localizing the sound source within the OnRobot’s Eye tool system. Afterward, the robot arm, equipped with a microphone, is moved, followed by the initiation of the automatic scan.

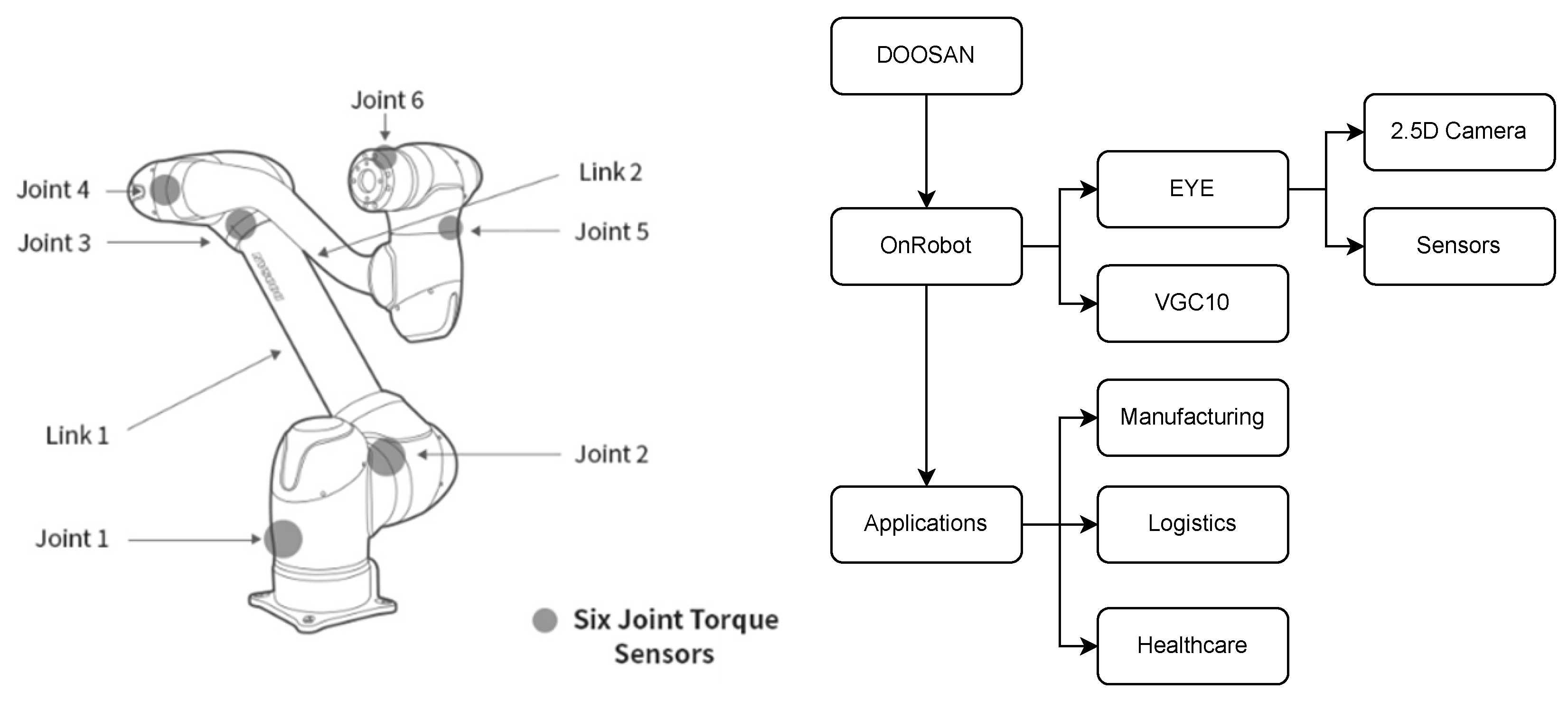

Doosan Robotics’ collaborative robots, also known as cobots, are highly regarded for their precision, versatility, and ease of use, making them ideal solutions for various industrial applications. In the context of this study, Figure 1 provides a visual representation of the built-in processor within the Doosan collaborative robot [16].

This illustration offers insights into the technological capabilities of the robot used, emphasizing the crucial role played by its integrated processor in ensuring optimal performance.

OnRobot’s Eye tool is an intelligent camera system specifically designed for robotic applications. It employs advanced computer vision algorithms to detect objects accurately in real time, making it an optimal tool for locating objects and calculating the distance between the source and the microphone.

The Eye tool includes a built-in processor that runs OnRobot’s proprietary software, allowing it to perform real-time object detection, classification, and tracking. Additionally, with the measuring microphone connected to the Doosan robot and the Eye tool, it is possible to measure the distance between the detected object and the robot. Then, based on the object’s location, orientation, and distance measurement, the robot’s parameters can be adjusted automatically to perform more precise scans. With these technological advancements, the Doosan robot offers a promising way to automate sound measurement.

3.1. Devices Calibration

• Microphone configuration and calibration: Precise data collection with the BK Connect software starts with configuring the microphone, a step that is pivotal for accuracy. Key parameters such as sampling frequency and microphone sensitivity must be validated and adjusted to optimize microphone performance. The Type 4189-A-021 microphone is employed for measurements, featuring a dynamic range of 16.5–134 dB and a frequency range of 20–20000 Hz. Its inherent noise level is 16.5 dBA and its sensitivity is 50 mV/Pa. The microphone operates within a temperature range of −20 to 80 ℃. Subsequently, careful planning is needed to define the measurement positions around the vibrating source, where acoustic pressure data will be captured [17].

In this context, microphone calibration is of paramount importance. Accurate calibration is key to getting precise acoustic data. During calibration, critical parameters like sampling frequency and microphone sensitivity are rigorously validated and adjusted as necessary.

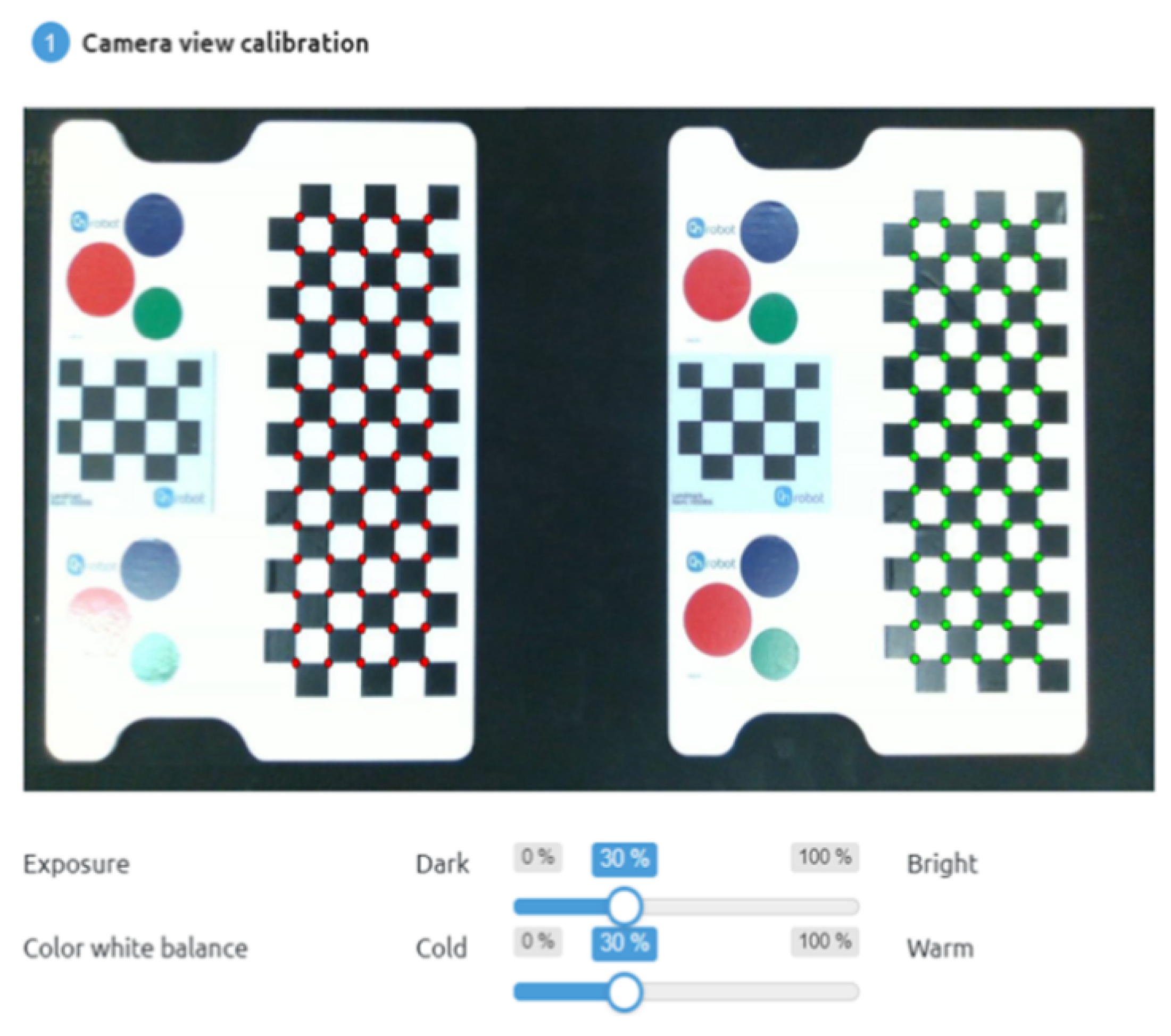

• Camera calibration:

Calibration is an essential step in the setup process of the OnRobot Eye vision system. Its purpose is to ensure that the camera is accurately aligned with the robot arm and that the image captured by the camera precisely reflects the real-world environment. The calibration process, depicted in Figure 2, requires placing a calibration object in front of the camera and capturing images from various angles using the OnRobot Eye software.

The software then employs these images to determine the position and orientation of the camera relative to the robot arm. Once the calibration is complete, the OnRobot Eye can detect and locate objects within the robot’s workspace accurately, enabling the robot to execute complex manipulation tasks with precision and efficiency.

3.2. Communication between Devices

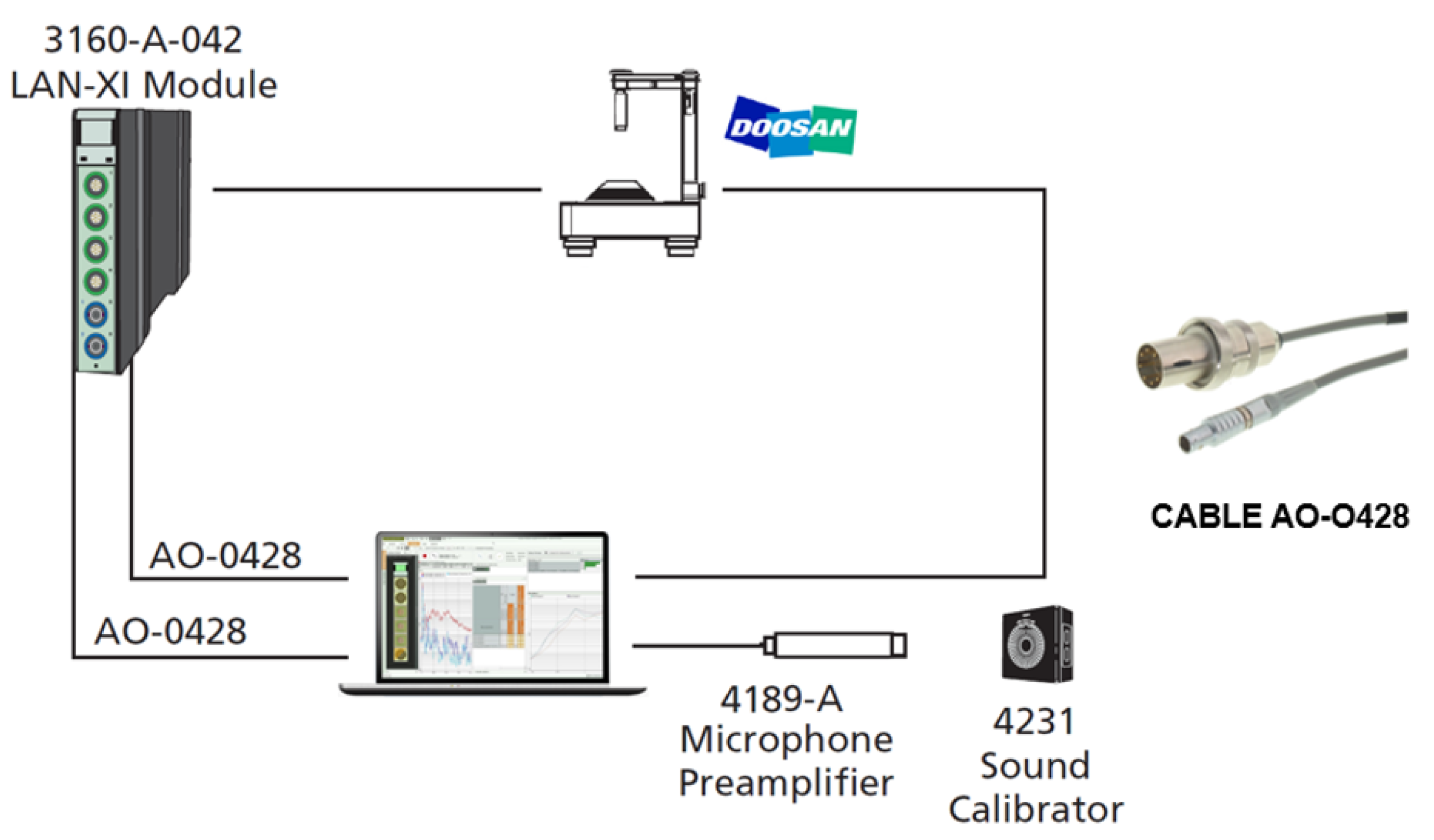

Effective communication between devices is a crucial aspect of this project, as it enables the coordination and synchronization of the various stages of data acquisition, processing, and analysis. The Transmission Control Protocol (TCP) plays a vital role in ensuring the reliability of end-to-end connections, significantly simplifying their establishment.

The compatibility of both systems with TCP/IP protocols facilitates communication between the computer and the robot. This standardized communication protocol ensures efficient data exchange. The connection is established through a direct Ethernet cable link, forming a point-to-point connection. For this direct connection to work, the IP addresses of both devices had to be configured within the same network. An overview of the entire system, illustrating the fundamental functional scheme of the different interconnected devices used, is shown in Figure 3.
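The kind of TCP exchange described here can be illustrated with Python's standard `socket` module. The sketch below is an assumption-laden illustration: the newline-terminated text protocol, the `MOVE_TO P1` command, and the `ACK` reply are all hypothetical, and a small loopback server stands in for the robot controller so that the example runs without any hardware.

```python
import socket
import threading


def send_command(host, port, command, timeout=5.0):
    """Send one newline-terminated text command and return the one-line reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall((command + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            return reader.readline().strip()


def _fake_controller(server_sock):
    """Stand-in for the robot side: acknowledge a single command, then exit."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        line = reader.readline().strip()
        conn.sendall(f"ACK {line}\n".encode("utf-8"))


# Loopback demo; port 0 lets the operating system pick a free port.
server = socket.create_server(("127.0.0.1", 0))
port = server.getsockname()[1]
worker = threading.Thread(target=_fake_controller, args=(server,), daemon=True)
worker.start()
reply = send_command("127.0.0.1", port, "MOVE_TO P1")
worker.join(timeout=2.0)
server.close()
print(reply)
```

With real hardware, the host would be the robot's static IP address on the shared network, and the command syntax would follow whatever the controller program expects.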

3.3. Object Detection and Positioning Strategy

• Related work:

Object recognition and detection have been the subject of intense research for several decades. Initially, these techniques were based on hand-crafted descriptors such as HOG and LBP combined with classifiers. More recently, advances in learning-based recognition algorithms have been widely adopted. Among them, convolutional neural networks (CNNs) have established themselves as the main reference for image recognition and visual classification [18].

CNN techniques are crucial in detecting objects with the Doosan robot camera and determining their location. The aim is to locate and recognize an object within an image.

CNNs are distinguished by their multi-layer architecture, where each layer learns specific characteristics. They have been hailed for their outstanding performance and efficiency in generic object detection and classification tasks. Recent research has focused on improving deep learning methods to create more robust models. In addition, current approaches exploit unsupervised convolutional coding to reapply filtering techniques at each step, further improving detection accuracy [19].

A detection approach called You Only Look Once (YOLO) was introduced by Redmon et al. [20]. It has contributed significantly to the advancement of real-time applications due to its fast execution. YOLO uses an end-to-end neural network that predicts bounding boxes and class probabilities all at once. This approach differs from that of previous object detection algorithms, which repurposed classifiers for detection. While algorithms like Faster R-CNN detect possible regions of interest using a region proposal network and then perform recognition on these regions separately, YOLO makes all its predictions in a single forward pass of one network.

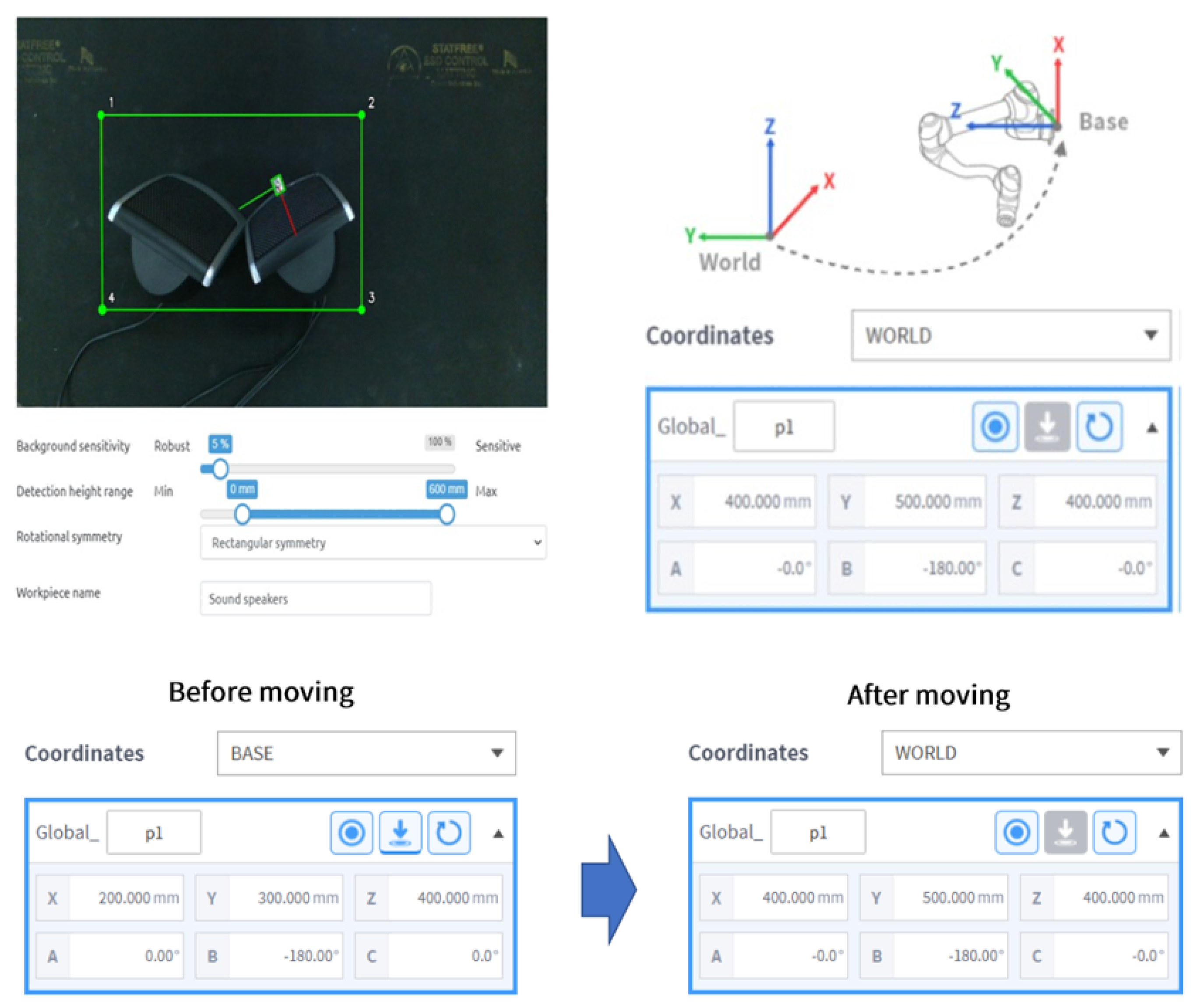

• Sound source detection and positioning strategy:

The robotic system’s functionality for object detection and positioning through the camera involves a series of well-defined steps. The high-resolution camera embedded in the system is designed to meticulously capture detailed images of the object or scene being examined. These images serve as the foundation for the subsequent stages of the process.

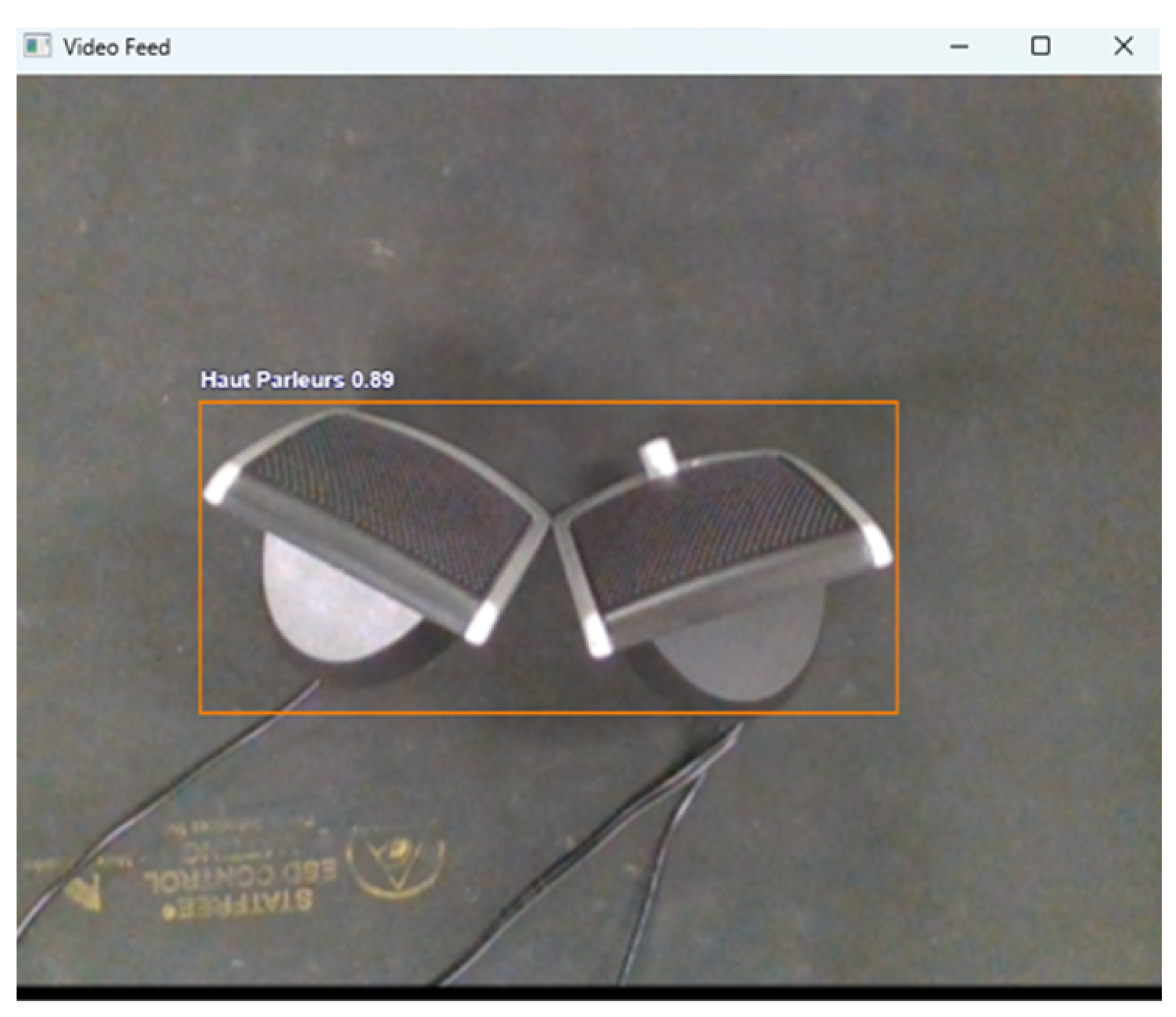

For our detection needs, we opted to utilize the YOLOv5 version of the You Only Look Once (YOLO) algorithm, which stands out as one of the most potent solutions presently accessible. To accomplish this, we employed a YOLOv5 model built upon an open-source algorithm, which we further trained and tailored by fine-tuning specific parameters to align with the requirements of speaker detection within the images.

The algorithm relies on convolutional neural networks (CNNs) to predict both the class and the location of a sound source in an image. To train the model, a database consisting of images representing different types of microphones was used to develop the learning model. The dataset was divided into an 80:20 ratio, with 80% allocated for training and the remaining 20% reserved for validation.
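An 80:20 split of this kind can be sketched with the standard library alone. The file names, the fixed random seed, and the function name below are illustrative assumptions; the source does not describe how the split was actually performed.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into (train, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local generator, global state untouched
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical image file names standing in for the annotated dataset
images = [f"img_{i:03d}.jpg" for i in range(100)]
train, val = split_dataset(images)
print(len(train), len(val))
```

Shuffling before splitting avoids putting all images from one recording session into the same subset.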

This process involves identifying objects in the images and marking them for the model training process. Subsequently, the model utilizes this annotated data to detect the specified objects in the images.

Figure 4 illustrates the detection of speakers as sound sources.

Following this phase, the system enters the decision-making stage. This pivotal step involves real-time adjustments and strategic positioning strategies based on the acquired visual information. The robotic system autonomously assesses the object’s characteristics, evaluates the optimal positioning for accurate measurements, considers the distance between the source and the microphone, and dynamically adjusts its position as necessary to ensure compliance with the measurement conditions of the hemisphere method.

Based on this information, the robot can be programmed to start the automatic scan. This integration of AI enhances the overall efficiency and accuracy of the source detection and positioning strategy, as exemplified in Figure 5. This adaptive process ensures that the robot is precisely positioned for sound pressure measurements, supporting mapping outcomes tailored to specific frequency bands.

3.4. Python for Automating Sound Measurement and Mapping

• Automatic scanning:

Once the machine learning algorithm classifies the objects and determines the distance between the source and the measuring microphone, the next step involves programming the robot to measure the sound pressure at different points within the space. This can be achieved using the Python programming language, which can be used to control the robot’s movement and the microphone’s positioning. The robot can be instructed to move and scan in different locations within the space to measure sound pressure at various points. At each location, the microphone takes sound pressure measurements, which are then used to generate an acoustic map using the hemisphere method.

Figure 6 visually depicts the solution for the automatic measurement using the cobot. The algorithm manages data collection and orchestrates the robot’s optimal path through the space, a critical aspect in ensuring thorough and efficient coverage of the investigation area.

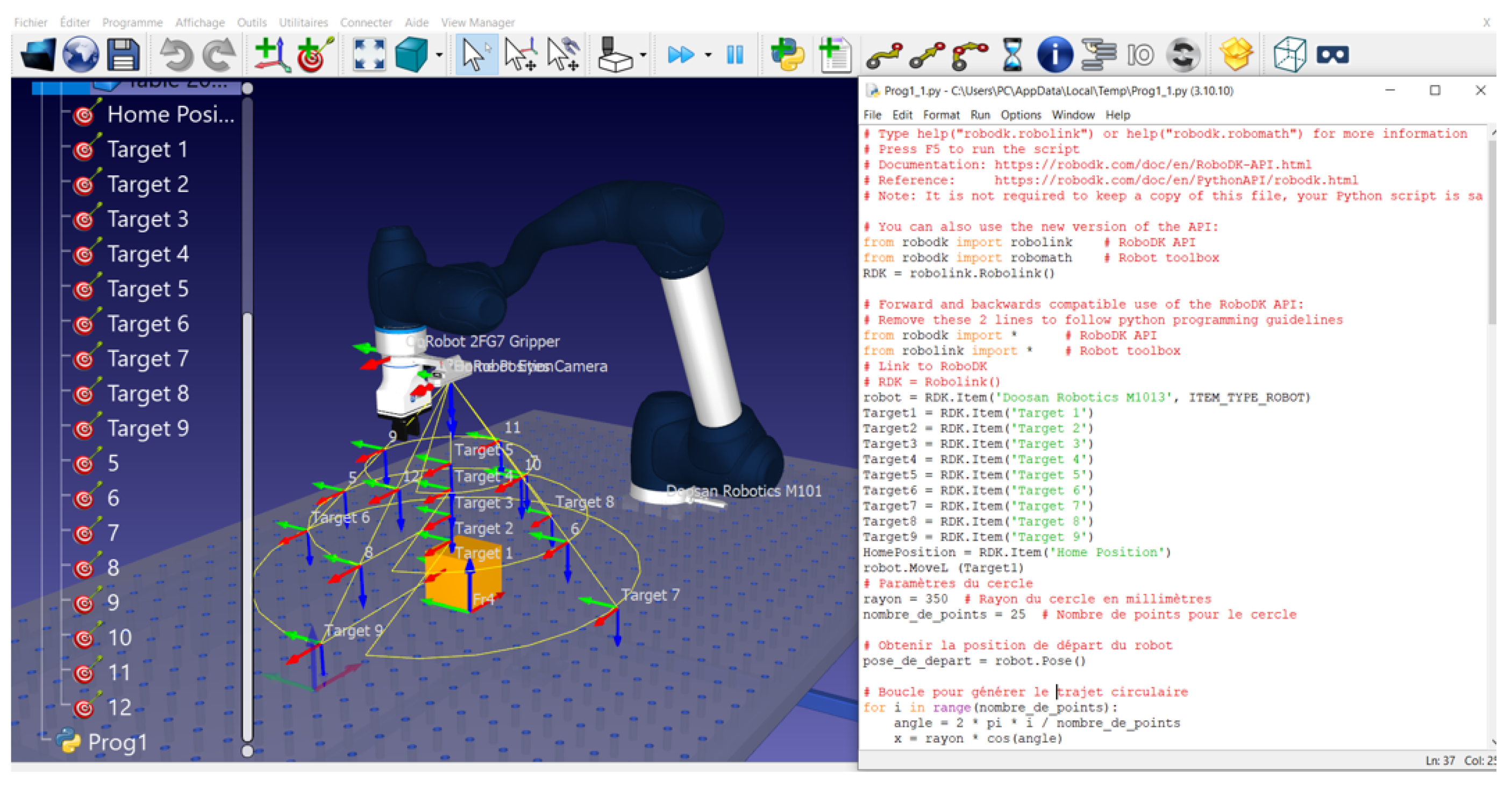

• Off-line programming:

The use of RoboDK proves pivotal in pursuing precise acoustic pressure measurement and mapping. RoboDK, a platform for robot simulation and offline trajectory programming, enhances the efficiency and precision of the robot’s movements. Its tools enable careful offline planning of the robot’s trajectory, ensuring coverage of all specified locations.

It facilitates the integration of trajectory planning into the algorithm, resulting in a more reliable operation. The combination of the algorithm’s data collection and analysis capabilities, implemented in Python, with RoboDK’s trajectory programming provides a comprehensive solution for sound mapping.

Moreover, Figure 7 visually illustrates the offline programming of the spiral path, showcasing the coordinated movement of the robot during the sound measurement process. This graphical representation adds an essential visual dimension to enhance the understanding of the approach.
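One way to generate waypoints for a spiral scan over a hemisphere is sketched below. The parametrisation (a polar angle growing linearly from the top while the azimuth completes a fixed number of turns), the default values, and the function name are assumptions made for illustration; the sketch does not use the RoboDK API and is not the trajectory programmed in this study.

```python
import math


def hemisphere_spiral(center, radius, n_points, turns=3.0, max_polar_deg=85.0):
    """Return n_points (x, y, z) waypoints spiralling down from the top of a hemisphere.

    center is the (x, y, z) position of the source; every waypoint lies at
    distance `radius` from it, at or above the height of the centre.
    """
    if n_points < 2:
        raise ValueError("need at least two points to form a path")
    cx, cy, cz = center
    path = []
    for k in range(n_points):
        t = k / (n_points - 1)                   # 0 at the pole, 1 at the lowest ring
        polar = math.radians(max_polar_deg) * t  # angle measured from the vertical
        azimuth = 2.0 * math.pi * turns * t      # rotation about the vertical axis
        path.append((
            cx + radius * math.sin(polar) * math.cos(azimuth),
            cy + radius * math.sin(polar) * math.sin(azimuth),
            cz + radius * math.cos(polar),
        ))
    return path


path = hemisphere_spiral(center=(0.0, 0.0, 0.0), radius=1.0, n_points=40)
print(path[0], path[-1])
```

Because the polar angle grows linearly, the waypoints are denser near the top of the hemisphere; an equal-area spacing would need a different parametrisation.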

• LAN-XI Open API measurement automation:

The LAN-XI Open API module is a powerful tool that allows easy interaction with modules like the TYPE 3160 and offers extensive control options. The LAN-XI web interface provides a user-friendly graphical interface, making it simple for users to configure settings, monitor measurements, and access advanced features [21]. The developed code relies on the LAN-XI Open API to automate data collection. Results are automatically saved for subsequent analysis.

The code operation is designed to coordinate a sequential process of measurements, intelligently synchronized with the robot’s movements as it transitions between different positions. Upon triggering the script, the LAN-XI module automatically initiates the first measurement. Upon completion of the initial measurement, the code attentively monitors the robot’s status, patiently waiting for it to reach the next pre-defined position. Once the robot is appropriately positioned, the script autonomously triggers the second measurement, continuing this iterative process until the completion of the predefined measurement sequence. The synchronization between robot movements and measurement initiation ensures a structured and consistent data collection process. It also optimizes the overall process efficiency by minimizing unnecessary waiting times between successive measurements.
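The move, wait, measure cycle described above can be outlined as a simple polling loop. In this sketch the three callables (`move_to`, `is_in_position`, `measure`) are hypothetical placeholders for the robot and acquisition interfaces; it does not call the LAN-XI Open API or the Doosan controller, and a small test double replaces the hardware.

```python
import time


def run_scan(positions, move_to, is_in_position, measure, poll_s=0.05, timeout_s=30.0):
    """Visit each position in order and take one measurement once the robot has arrived."""
    results = []
    for pos in positions:
        move_to(pos)
        deadline = time.monotonic() + timeout_s
        while not is_in_position(pos):  # poll the robot status until it arrives
            if time.monotonic() > deadline:
                raise TimeoutError(f"robot did not reach {pos!r} within {timeout_s} s")
            time.sleep(poll_s)
        results.append((pos, measure()))  # measure only when positioned
    return results


class FakeRobot:
    """Test double: reports 'in position' on the second status poll."""

    def __init__(self):
        self.target, self.polls = None, 0

    def move_to(self, pos):
        self.target, self.polls = pos, 0

    def is_in_position(self, pos):
        self.polls += 1
        return pos == self.target and self.polls >= 2


robot = FakeRobot()
readings = iter([71.2, 69.8, 68.5])  # made-up levels in dB, for the demo only
scan = run_scan(["P1", "P2", "P3"], robot.move_to, robot.is_in_position,
                lambda: next(readings), poll_s=0.0)
print(scan)
```

The timeout prevents the script from waiting indefinitely if the robot is stopped by a safety event during the scan.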

3.5. Acoustic Map Generating

The initial step of generating an acoustic map using our developed method involves calculating the Sound Pressure Level (SPL) at each measurement point. This calculation relies on the measured sound pressure and the distance between the measuring microphone and the noise source. Once the SPL is calculated at each point, the data is plotted on a coordinate system to create the acoustic map [22,23].

This study employed a BK Connect acquisition station to measure acoustic pressure, adding an extra layer of accuracy and reliability to the measurement process. The BK Connect station is designed to deliver accurate and precise acoustic pressure measurements. It can capture data at high sample rates, allowing for real-time SPL measurements.

4. Results and Discussion

The integration of AI algorithms extends to guide the robot’s decisions in real-time. This holistic approach guarantees a seamless and efficient workflow, where the robot detects objects and actively adapts its positioning strategies for enhanced accuracy. Through the synergy of AI and the robot, the measurement points become highly precise with fixed positions, offering the advantage of reproducibility for future measurements in contrast to traditional methods. The outcome is a cohesive system that excels in dynamic decision-making and real-time adjustments, culminating in optimal mapping.

For the development of our learning model, the model is trained with Google Colab on a GTX 1080 GPU and 64 GB RAM. Training time is considerably reduced thanks to the high performance of the GPU. The model performs relatively well, with a precision of around 0.833, a recall of around 0.9, and an F1 score of around 0.86 [24].
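The reported F1 score follows from the precision and recall, since F1 is their harmonic mean. A short check, with a hypothetical helper name:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


print(round(f1_score(0.833, 0.9), 3))  # consistent with the reported value of about 0.86
```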

After recording the sound, the next step is to process it for acoustic analysis. The process focuses on determining the shape of the recorded audio device and automatically capturing the acoustic response. This shape is determined by identifying its outer boundaries using instructions from an interface in MATLAB.

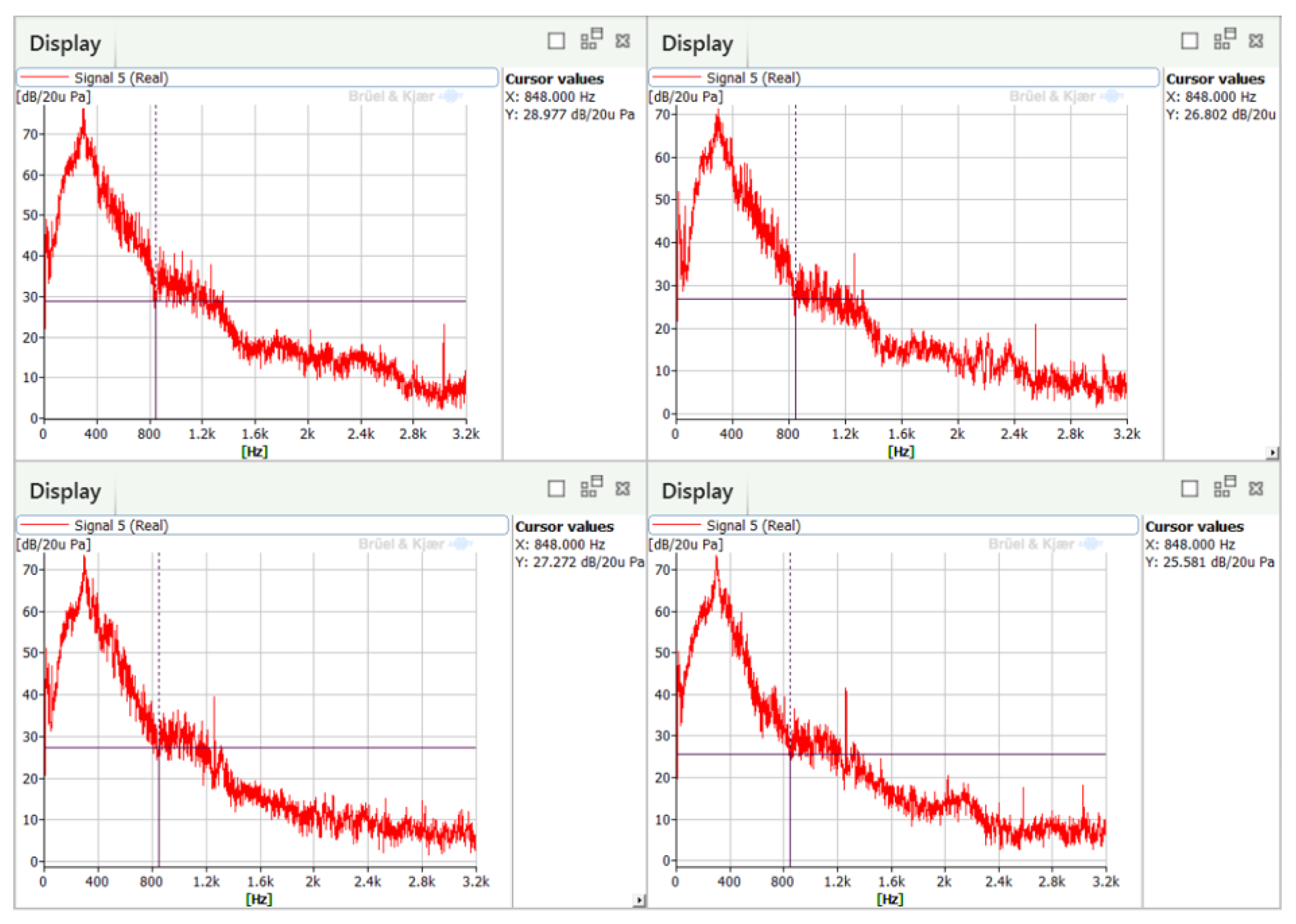

The acoustic data acquired constitute a complete set of sound pressure readings recorded at various points in the designated area. Each measurement point provides valuable information on the environment’s acoustic characteristics.

Figure 8 shows four curves that describe changes in sound at different places. The first curve is for the point closest to the sound source, and the others are for places farther away. These curves reveal how sound pressure varies over time and space, showing possible significant changes and patterns.

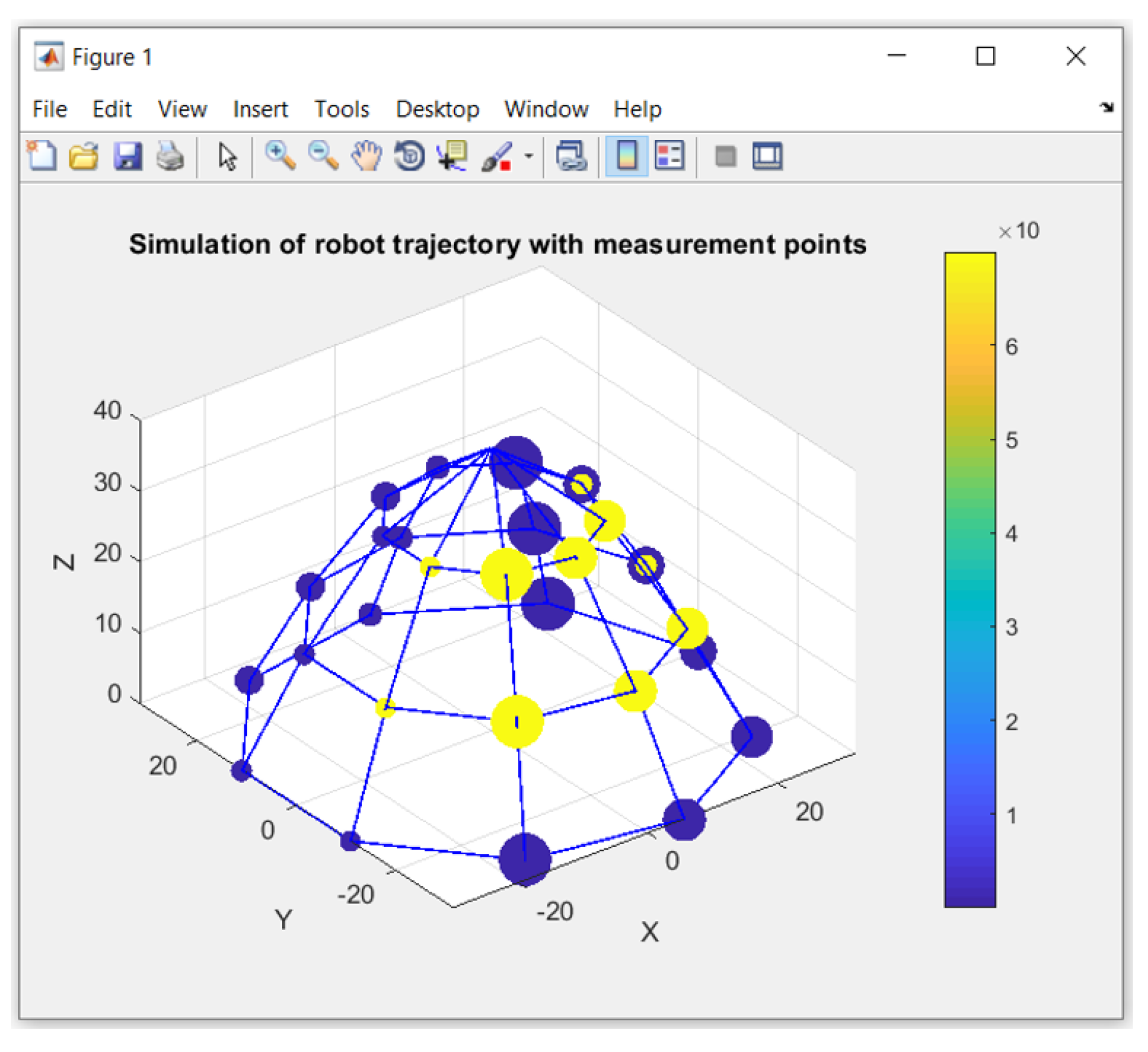

The process begins by importing the acoustic data into MATLAB for simulation, which can then be utilized to extract relevant features, as shown in Figure 9. The creation of the measurement contour often involves the use of signal processing tools and

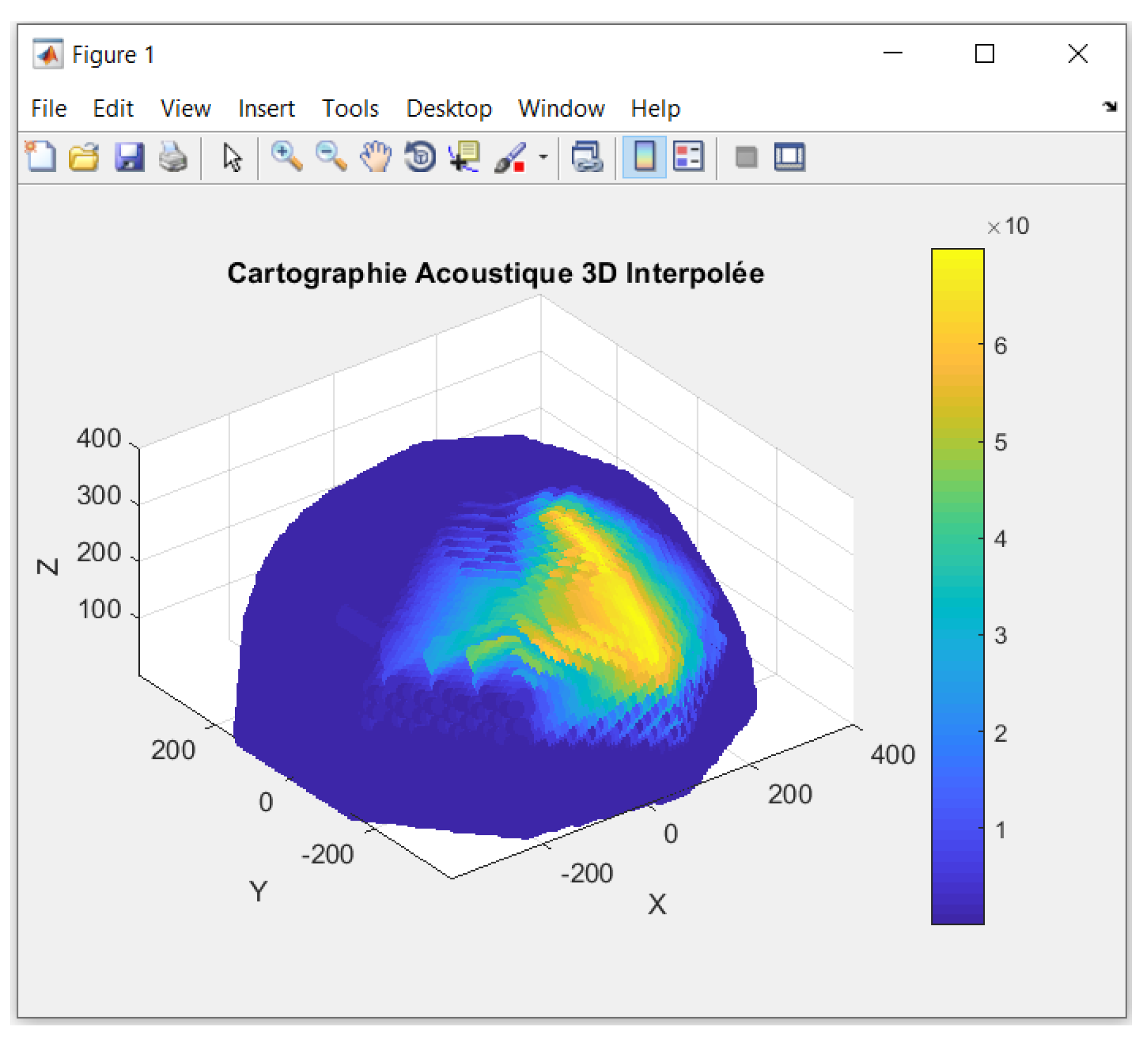

Once the measurement contours have been established, acoustic data are added to enrich the sound mapping. This stage involves integrating information gathered by the microphone-equipped robot in specific areas. Linear interpolation was used to estimate sound levels between discrete measurement points, creating a continuous surface representing acoustic variations.
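The interpolation step can be illustrated with bilinear interpolation on a regular grid, one simple form of linear interpolation. The source does not state which scheme or grid layout was used, so the grid, the level values, and the function names below are assumptions; the sketch also interpolates the levels in dB directly rather than the underlying pressures.

```python
from bisect import bisect_right


def _cell(axis, value):
    """Return (index, fraction) locating value between axis[index] and axis[index + 1]."""
    if not axis[0] <= value <= axis[-1]:
        raise ValueError("point outside the measured grid")
    i = min(bisect_right(axis, value) - 1, len(axis) - 2)
    return i, (value - axis[i]) / (axis[i + 1] - axis[i])


def interpolate_level(xs, ys, levels, x, y):
    """Bilinear interpolation; levels[j][i] is the value measured at (xs[i], ys[j])."""
    i, tx = _cell(xs, x)
    j, ty = _cell(ys, y)
    bottom = (1 - tx) * levels[j][i] + tx * levels[j][i + 1]
    top = (1 - tx) * levels[j + 1][i] + tx * levels[j + 1][i + 1]
    return (1 - ty) * bottom + ty * top


# Made-up SPL values (dB) on a 3 x 2 grid of measurement points (metres)
xs = [0.0, 0.5, 1.0]
ys = [0.0, 0.5]
levels = [[70.0, 68.0, 66.0],
          [69.0, 67.0, 65.0]]
print(interpolate_level(xs, ys, levels, 0.25, 0.25))
```

For scattered (non-grid) measurement points, a triangulation-based linear interpolator would be the closer analogue.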

Figure 10 shows the 3D sound map created using linear interpolation on measurement data. This visual provides essential information about the distribution of sound levels in the studied environment. The color variations illustrate the acoustic dynamics of the area. Warmer-colored areas indicate higher sound levels, while cooler areas, represented by lighter colors, signify lower sound levels. This visual analysis enables the identification of areas with more intense noise, helping to understand which regions experience stronger sound disturbances.

A comparison between the developed approach and an alternative method is presented in table form. The developed approach utilizes a single microphone mounted on a robotic arm with a scanning camera, whereas the alternative method employs a hemisphere approach with multiple microphones positioned around the noise source. Table 1 outlines the key distinctions between these methods, encompassing aspects such as microphone positioning, cost, ease of use, measurement accuracy, measurement environments, measurement time, data processing, and acoustic radiation visualization.

The measurement method with a single microphone mounted on the robot arm offers several advantages over the hemisphere measurement method with multiple microphones. From a financial point of view, the developed method has a relatively low cost thanks to its use of a single microphone. It should also be noted that this method can offer superior accuracy, particularly when the microphone is correctly positioned, which highlights the quality of the results obtained with this simplified approach.

In addition, the combination of microphone and camera facilitates integration with other technologies, making the developed method more suitable for complex industrial environments than the hemisphere method, which could encounter integration difficulties due to complex communication protocols. Overall, the developed method stands out for its simplicity, low cost, versatility, and ease of integration with other technologies, making it a promising alternative. Still, the hemisphere method is considered the reference method for acoustic measurements.

5. Conclusion

This paper investigated the measurement of sound pressure in industrial environments, addressing the challenges associated with traditional methods. An innovative and automated solution was explored in response to the complexity, cost, and potential risks to workers posed by classical approaches. The study leveraged the Doosan Robotic Vision System, featuring a scanning camera and microphone, to capture acoustic data. Guided by computer vision algorithms, this system not only facilitated accurate and efficient measurement but also generated a detailed map showcasing the spatial distribution of sound sources within the industrial setting.

The methodology underwent comprehensive evaluation, including comparisons with analytical predictions. Experimental results, complemented by a 3D acoustic mapping, revealed a strong correlation between the measured data and theoretical expectations. The developed method demonstrated a significant advancement in safety and precision compared to conventional techniques. The automated measurement, enabled by the Doosan robot, ensures worker safety and allows for continuous monitoring of the industrial acoustic environment.

Combining robotics and computer vision, this innovative approach provides a safer and more precise alternative for sound pressure measurement. It holds promise for industries aiming to enhance product sound quality and reduce noise levels. With its automated measurement capabilities, the Doosan Robotic Vision System integration stands out as a recommended method for swiftly acquiring accurate data and contributing to improving industrial acoustic environments.

Based on the Doosan robot and equipped with advanced sensors, the automated measurement system has a wide range of applications in various industrial contexts. It can be used on an industrial production line in the final phase after product manufacture, for example for daily-use machines or other equipment. The model enables precise noise control, helping to ensure the sound quality of products before they go to market. This study’s combination of robotics, computer vision, and AI streamlines the measurement process. It opens up new avenues for continuous monitoring and real-time analysis of acoustic environments.

Acknowledgments

The authors thank the Université du Québec à Rimouski (UQAR) for supporting this study by providing research facilities and resources.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SPL | Sound Pressure Level |
| SIL | Sound Intensity Level |
| SSR | Source Sound Reference |
| FFT | Fast Fourier Transform |
| STFT | Short-Time Fourier Transform |
| MFCC | Mel-Frequency Cepstral Coefficients |
| GMM | Gaussian Mixture Models |
| AI | Artificial Intelligence |
| API | Application Programming Interface |

References

- O. Triki, R. Cherif, and Y. Yaddaden. Improving Measurement Accuracy of Acoustic Intensity in Vibrating Machines Using a Doosan Robot. 2023, IEEE 4th International Informatics and Software Engineering Conference (IISEC). https://ieeexplore.ieee.org/document/10391014.

- DEWESOFT. Sound Pressure Measurement – Measurement With Microphone – 2014 https://training.dewesoft.com/online/course/sound-pressure-measurement#measurement-with-microphone.

- Jung, I.-J., & Ih, J.-G. (2021). Combined Microphone Array for Precise Localization of Sound Source Using Acoustic Intensimetry. Acoustical Advances, Mechanical Systems and Signal Processing, 160, 107820.

- Botteldooren, D., Van Renterghem, T., Van Der Eerden, F., Wessels, P., Basten, T., De Coensel, B. (2017). Industrial sound source localization using microphone arrays under difficult meteorological conditions. Griffith University, INTER-NOISE and NOISE-CON Congress and Conference Proceedings.

- Cherif, R., & Atalla, N. (2015). Measurement of the radiation efficiency of complex structures. Noise Control Engineering Journal, 63(4), 339-346.

- Simmons, C. (1999). Measurement of Sound Pressure Levels at Low Frequencies in Rooms. Swedish National Testing and Research Institute, Acta Acustica, 85, pp. 88-100.

- Švec, J. G., & Granqvist, S. (2018). Tutorial and Guidelines on Measurement of Sound Pressure Level in Voice and Speech. Journal of Speech, Language, and Hearing Research.

- Yunus, M., Alsoufi, M. S., & Hussain, I. H. (2015). Sound Source Localization and Mapping Using Acoustic Intensity Method for Noise Control in Automobiles and Machines. International Journal of Innovative Research in Science, Engineering and Technology.

- Wang, H., Li, H., Li, M., Li, Z., & Li, J. (2020). Robotic Sound Power Level Measurement System for HVAC Systems. IEEE Transactions on Instrumentation and Measurement, 70, 1-11.

- Yang, L., Chen, Y., Chen, G., & Zhang, H. (2021). A Robotic Platform for Sound Power and Sound Intensity Measurements in Open Spaces. Applied Sciences, 11(6), 2816.

- Blazek, M. et al. (2014). Sound Mapping in Urban Environment Using a Scanning Microphone System. Proceedings of the International Conference on Noise and Vibration Engineering (ISMA), 1601-1612.

- Mansouri et al. (2018). Experimental Study on Sound Power Mapping of a Vibrating Machine Using a Scanning Microphone and a Robot. Applied Sciences, 8(11), p. 2276.

- ISO 3745:2012(en): Acoustics — Determination of sound power levels and sound energy levels of noise sources using sound pressure — Precision methods for anechoic rooms and hemi-anechoic rooms. https://www.iso.org/obp/ui/#iso:std:iso:3745:ed-3:v1:en.

- DEWESOFT. (2014). Sound Pressure Measurement – Measurement With Microphone – DEWESOFT – Training. https://training.dewesoft.com/online/course/sound-pressure-measurement#measurement-with-microphone.

- HBK World. Sound Intensity - The Sound Intensity Equation - Measuring sound intensity. [Online]. Available at: https://www.bksv.com/en/knowledge/blog/sound/sound-intensity. Accessed 6 May 2024.

- Doosan Robotics. (2021). External Basic Training Robotics.

- Brüel & Kjær. (n.d.). Type 4189-A-021 1⁄2-inch Free-field Microphone. https://www.bksv.com/en/transducers/acoustic/microphones/microphone-set/4189-a-021.

- Li, J., Liang, X., Shen, S., Xu, T., Feng, J., & Yan, S. (2017). Scale-aware fast R-CNN for pedestrian detection. IEEE transactions on Multimedia, 20, 985–996.

- He, K., Zhang, X., Ren, S., & Sun, J. (2015). Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE transactions on pattern analysis and machine intelligence, 37.

- Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You only look once: Unified, real-time object detection. The IEEE conference on computer vision and pattern recognition.

- Brüel & Kjær. (n.d.). Loudness Overall Analysis. https://www.bksv.com/en/analysis-software/data-acquisition-software/bk-connect/applets/loudness-overall-analysis.

- ISO 3746:2010(en). Acoustics — Determination of sound power levels and sound energy levels of noise sources using sound pressure — Survey method using an enveloping measurement surface over a reflecting plane.

- ISO 3746:2010(en). Acoustics — Determination of sound power levels and sound energy levels of noise sources using sound pressure — Survey method using an enveloping measurement surface over a reflecting plane.

- Carneiro, T., Moura, R., Neto, T., Pedro, P., & Figueiredo, R. (2018). Performance Analysis of Google Colaboratory as a Tool for Accelerating Deep Learning Applications. IEEE Access, PP(99).


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).