Submitted: 30 May 2024

Posted: 30 May 2024


Abstract

Keywords:

1. Introduction

- (a) Introducing a new unsupervised robust segmentation algorithm for 3D point clouds with high variability and noise, particularly suited for heritage building data.

- (b) The algorithm segments 3D heritage data into distinct architectural elements like columns, capitals, vaults, etc., yielding results suitable for further classification tasks.

- (c) Proposing a novel topological structure for 3D point clouds. Unlike common voxelization, this structure uses a graph that requires less computational memory and groups geometrically congruent 3D points in its nodes, regardless of graph resolution. This makes it highly effective for computer applications dealing with 3D point clouds.

2. Related Works

2.1. Region Growing

2.2. Edge Detection

2.3. Model Fitting

3. Materials and Methods

3.1. Segmentation Method

3.1.1. Overview of the Segmentation Method

3.1.2. Edge Points Detection

3.1.3. Supervoxelization and Topological Organization

3.1.4. Edges Closure

3.1.5. Segments Determination

- Region growing of supervoxels from a seed supervoxel not belonging to .

- Inclusion of edge supervoxels in one of the regions identified in step 1.

- Initialize .

- Choose a supervoxel not yet assigned to another region.

- .

- Determine the edges of in , .

- , iff and .

- Choose as new a supervoxel of of which neighborhood in the network has not yet been analyzed.

- Edge supervoxels that have non-edge neighbours, all of which belong to a single region. In this case, the edge supervoxel is assigned to the region to which those neighbours belong.

- Edge supervoxels that have non-edge neighbours belonging to different regions. In this case, we apply equation (2) and assign the edge supervoxel to the region of the neighbouring supervoxel with the lowest distance value.

- Edge supervoxels whose neighbours are all edge supervoxels. Such an edge supervoxel is not assigned yet.
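The three cases above can be sketched as a single pass over the edge supervoxels. This is a minimal illustration, not the authors' implementation: the function name `assign_edge_supervoxels`, the dictionary-based graph, and the `distance` callable are all hypothetical. In particular, `distance` merely stands in for equation (2), whose form is not reproduced here, and every non-edge supervoxel is assumed to have received a region label during the preceding region-growing step.

```python
from typing import Callable, Dict, Hashable, Optional, Set

Node = Hashable


def assign_edge_supervoxels(
    neighbours: Dict[Node, Set[Node]],
    edge_nodes: Set[Node],
    region_of: Dict[Node, int],
    distance: Callable[[Node, Node], float],
) -> Dict[Node, Optional[int]]:
    """Apply the three cases once to every edge supervoxel.

    `distance` is a placeholder for equation (2); its definition is an
    assumption of this sketch. `region_of` must label every non-edge node.
    """
    assigned: Dict[Node, Optional[int]] = {}
    for sv in edge_nodes:
        # Keep only the neighbours that are not edge supervoxels.
        non_edge = [n for n in neighbours[sv] if n not in edge_nodes]
        regions = {region_of[n] for n in non_edge}
        if not non_edge:
            # Case 3: all neighbours are edge supervoxels, leave unassigned.
            assigned[sv] = None
        elif len(regions) == 1:
            # Case 1: all non-edge neighbours share one region.
            assigned[sv] = next(iter(regions))
        else:
            # Case 2: several regions compete; take the region of the
            # non-edge neighbour with the lowest distance value.
            closest = min(non_edge, key=lambda n: distance(sv, n))
            assigned[sv] = region_of[closest]
    return assigned


# Toy example: one scalar feature per supervoxel (purely illustrative).
feature = {"a": 0.0, "b": 0.1, "c": 1.0, "e1": 0.05, "e2": 0.9, "e3": 0.5}
graph = {"e1": {"a", "b"}, "e2": {"a", "c"}, "e3": {"e1", "e2"}}
labels = assign_edge_supervoxels(
    neighbours=graph,
    edge_nodes={"e1", "e2", "e3"},
    region_of={"a": 0, "b": 0, "c": 1},
    distance=lambda u, v: abs(feature[u] - feature[v]),
)
```

In the toy graph, `e1` falls under the first case, `e2` under the second, and `e3` under the third. Supervoxels left as `None` could be revisited in a later pass once some of their neighbours have been assigned, although the text above does not state how this is done.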

3.2. Experimental Setup

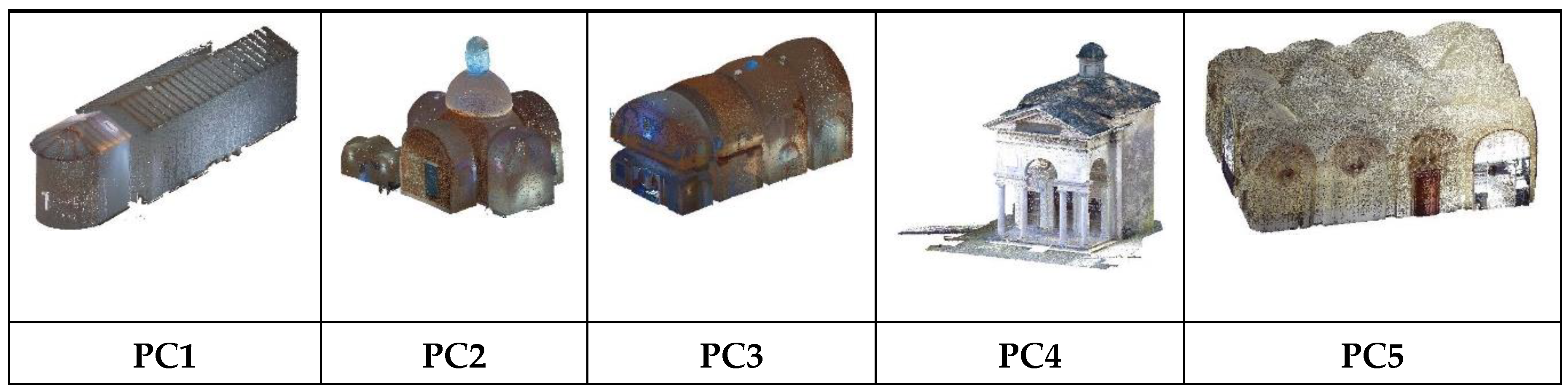

3.2.1. Point Cloud Dataset

3.2.2. Algorithm Parameter Values

3.2.3. Accuracy Evaluation Metrics

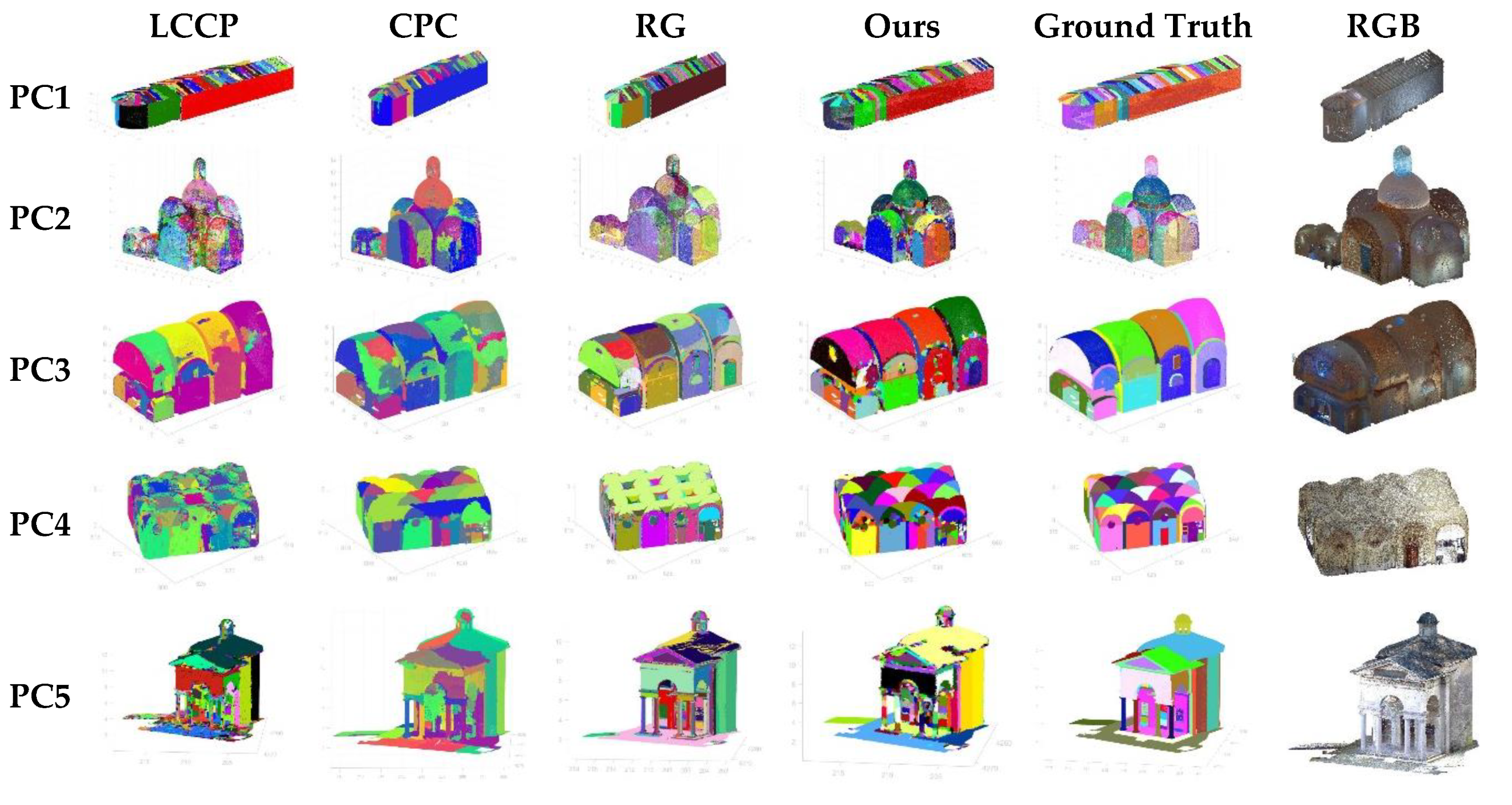

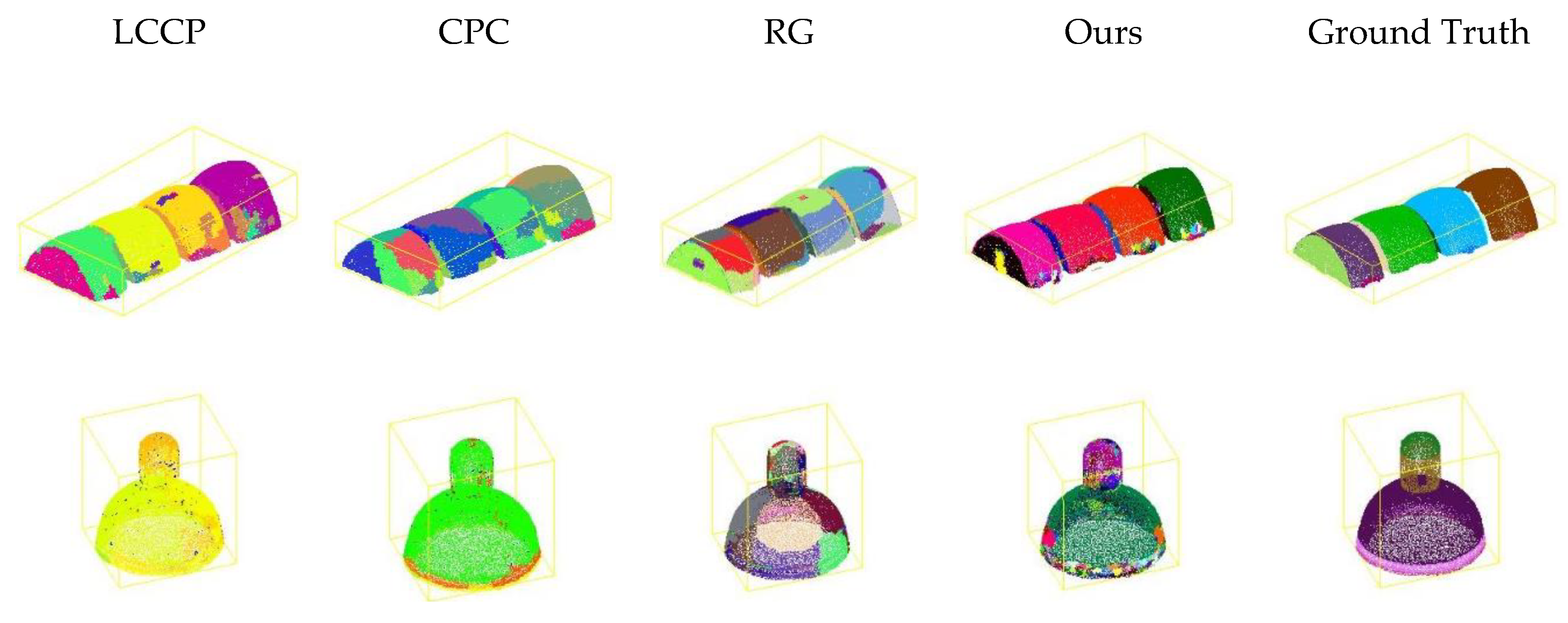

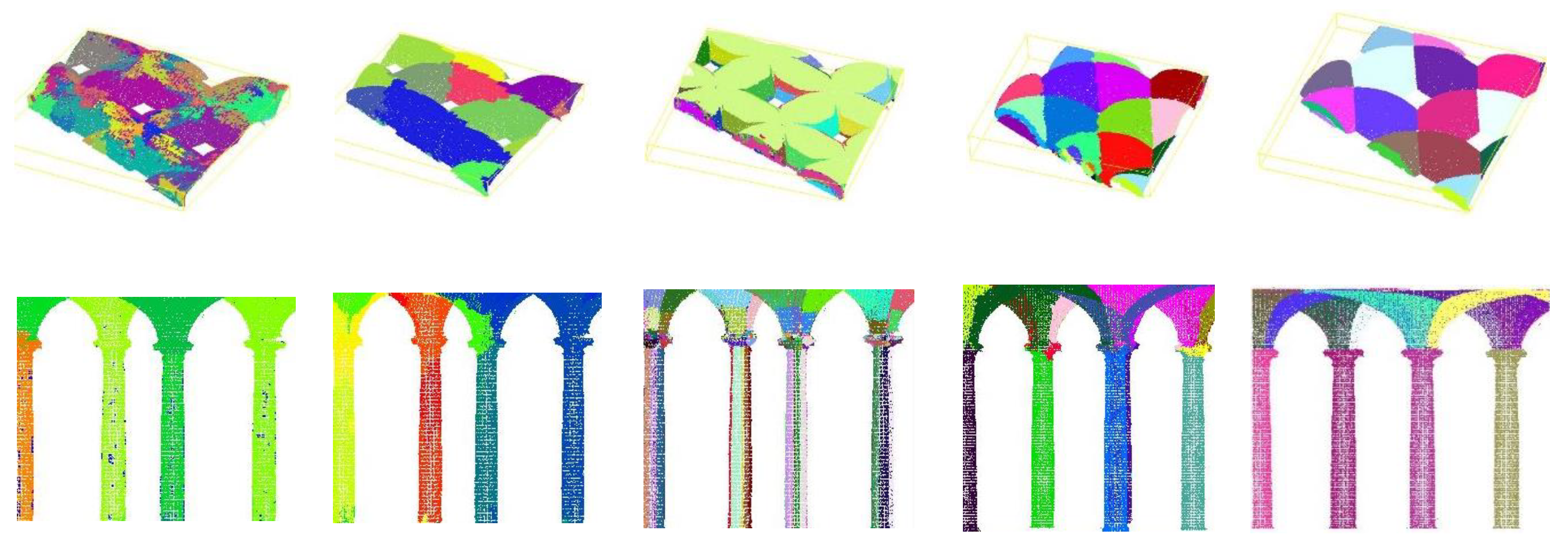

4. Results

4.1. Global Results

4.2. Curved and Planar Segments Results

- The F1 parameter of our algorithm is the best in 60% of the results. This means that in most cases the proposed method segments flat areas with a maximum number of TPs and without a significant number of FPs and FNs. The method we have called RG is the second best according to the F1 parameter.

- Taking into account the parameter, the algorithm with the best results in 60% of the cases is RG. Therefore, the method that best aligns the predicted segments spatially with the real ones, which is what measures, is RG. The second best method according to this parameter is the one presented in this paper.
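To make the role of TPs, FPs and FNs in these comparisons concrete, the sketch below computes precision, recall and F1 from per-segment counts using their standard definitions. The `Counts` class and function names are hypothetical. The `iou` function is included only as an assumption: the text does not name the spatial-overlap metric, and intersection over union is one common choice for measuring how well predicted and real segments coincide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Point counts for one predicted segment against its reference."""
    tp: int  # points correctly assigned to the segment
    fp: int  # points wrongly assigned to the segment
    fn: int  # points of the reference segment that were missed


def precision(c: Counts) -> float:
    denom = c.tp + c.fp
    return c.tp / denom if denom else 0.0


def recall(c: Counts) -> float:
    denom = c.tp + c.fn
    return c.tp / denom if denom else 0.0


def f1(c: Counts) -> float:
    # Harmonic mean of precision and recall, written in terms of counts.
    denom = 2 * c.tp + c.fp + c.fn
    return 2 * c.tp / denom if denom else 0.0


def iou(c: Counts) -> float:
    # Assumed overlap metric (not named in the text): intersection over union.
    denom = c.tp + c.fp + c.fn
    return c.tp / denom if denom else 0.0


example = Counts(tp=8, fp=2, fn=2)
scores = (precision(example), recall(example), f1(example), iou(example))
```

Note that true negatives do not enter any of these formulas. Under these definitions F1 and IoU are tied by IoU = F1 / (2 - F1), so for a single segment the two always rank methods in the same order; a ranking can differ between them only when scores are averaged over several segments.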

5. Discussion

5.1. Strength

5.2. Limitations and Research Directions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Point Cloud | No. of points | Length (m) | Width (m) | Height (m) |
|---|---|---|---|---|
| PC1 | 486,937 | 26.7 | 6.86 | 7.34 |
| PC2 | 218,647 | 18 | 22.83 | 16.83 |
| PC3 | 282,870 | 17.73 | 10.91 | 8.47 |
| PC4 | 908,122 | 18.82 | 16.36 | 5.73 |
| PC5 | 825,088 | 17.4 | 17.58 | 13.57 |

| Point Cloud | LCCP | | | | CPC | | | | RG | | | | Ours | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PC1 | 0.685 | 0.530 | 0.598 | 0.271 | 0.636 | 0.540 | 0.584 | 0.413 | 0.840 | 0.670 | 0.746 | 0.594 | 0.889 | 0.752 | 0.815 | 0.688 |
| PC2 | 0.651 | 0.387 | 0.486 | 0.413 | 0.574 | 0.466 | 0.514 | 0.346 | 0.840 | 0.617 | 0.711 | 0.552 | 0.920 | 0.618 | 0.740 | 0.587 |
| PC3 | 0.684 | 0.552 | 0.611 | 0.288 | 0.668 | 0.549 | 0.602 | 0.431 | 0.920 | 0.703 | 0.797 | 0.662 | 0.929 | 0.791 | 0.854 | 0.745 |
| PC4 | 0.521 | 0.494 | 0.507 | 0.123 | 0.536 | 0.403 | 0.460 | 0.299 | 0.669 | 0.452 | 0.540 | 0.370 | 0.856 | 0.601 | 0.706 | 0.545 |
| PC5 | 0.449 | 0.287 | 0.346 | 0.519 | 0.475 | 0.390 | 0.428 | 0.273 | 0.713 | 0.507 | 0.592 | 0.421 | 0.870 | 0.604 | 0.731 | 0.576 |

| Point Cloud | LCCP | | | | CPC | | | | RG | | | | Ours | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PC1 | 0.619 | 0.490 | 0.547 | 0.376 | 0.651 | 0.575 | 0.611 | 0.440 | 0.881 | 0.727 | 0.797 | 0.662 | 0.963 | 0.869 | 0.913 | 0.841 |
| PC2 | 0.758 | 0.374 | 0.501 | 0.334 | 0.683 | 0.431 | 0.529 | 0.359 | 0.894 | 0.811 | 0.850 | 0.739 | 0.937 | 0.798 | 0.862 | 0.724 |
| PC3 | 0.684 | 0.648 | 0.666 | 0.499 | 0.728 | 0.585 | 0.649 | 0.480 | 0.941 | 0.892 | 0.916 | 0.845 | 0.925 | 0.886 | 0.905 | 0.826 |
| PC4 | 0.550 | 0.568 | 0.559 | 0.388 | 0.612 | 0.412 | 0.492 | 0.327 | 0.870 | 0.752 | 0.807 | 0.676 | 0.918 | 0.510 | 0.656 | 0.488 |
| PC5 | 0.437 | 0.272 | 0.336 | 0.202 | 0.475 | 0.390 | 0.428 | 0.273 | 0.708 | 0.533 | 0.608 | 0.436 | 0.883 | 0.698 | 0.779 | 0.638 |

| Point Cloud | LCCP | | | | CPC | | | | RG | | | | Ours | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PC1 | 0.476 | 0.264 | 0.334 | 0.205 | 0.429 | 0.241 | 0.309 | 0.183 | 0.522 | 0.329 | 0.404 | 0.258 | 0.550 | 0.362 | 0.437 | 0.280 |
| PC2 | 0.549 | 0.406 | 0.467 | 0.305 | 0.504 | 0.384 | 0.436 | 0.279 | 0.699 | 0.343 | 0.460 | 0.299 | 0.854 | 0.492 | 0.633 | 0.463 |
| PC3 | 0.684 | 0.367 | 0.477 | 0.314 | 0.574 | 0.489 | 0.528 | 0.359 | 0.845 | 0.398 | 0.542 | 0.377 | 0.938 | 0.642 | 0.762 | 0.615 |
| PC4 | 0.451 | 0.361 | 0.402 | 0.252 | 0.445 | 0.389 | 0.415 | 0.262 | 0.238 | 0.110 | 0.150 | 0.081 | 0.799 | 0.739 | 0.768 | 0.623 |
| PC5 | 0.501 | 0.340 | 0.404 | 0.253 | 0.622 | 0.446 | 0.520 | 0.260 | 0.764 | 0.363 | 0.492 | 0.326 | 0.608 | 0.326 | 0.457 | 0.296 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).