Submitted: 30 May 2024

Posted: 31 May 2024

Abstract

Keywords:

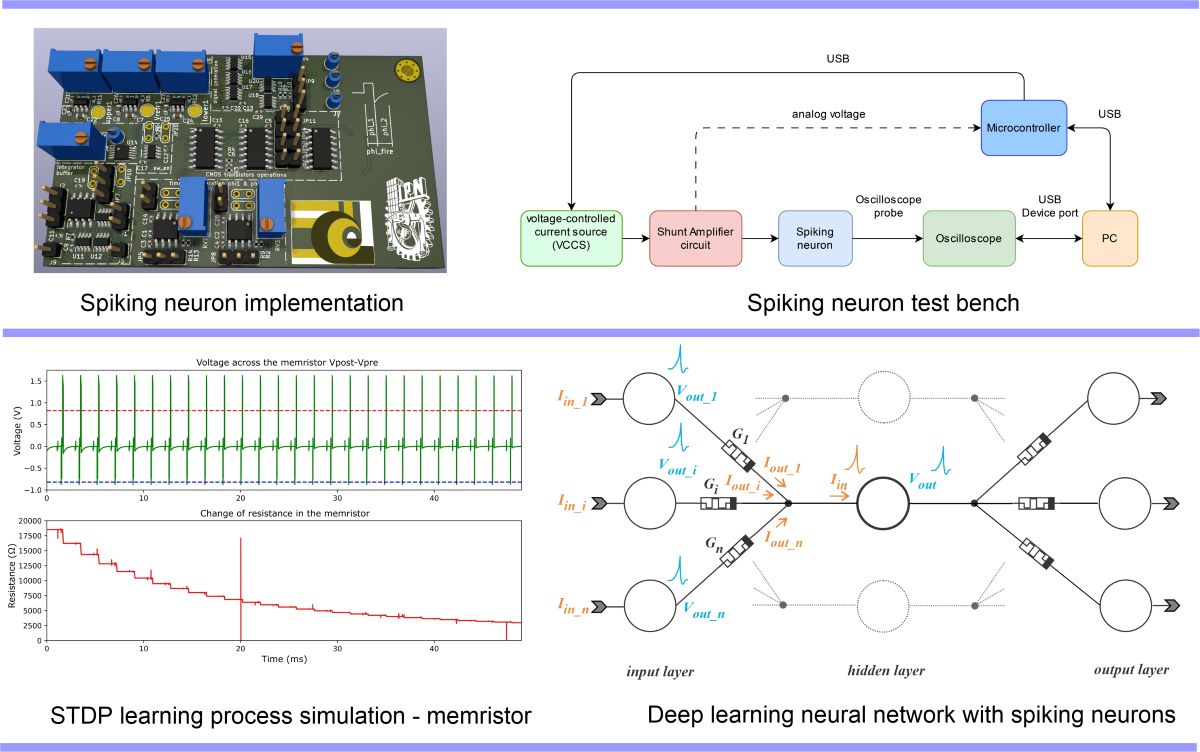

1. Introduction

2. Materials and Methods

2.1. Neuron Modeling

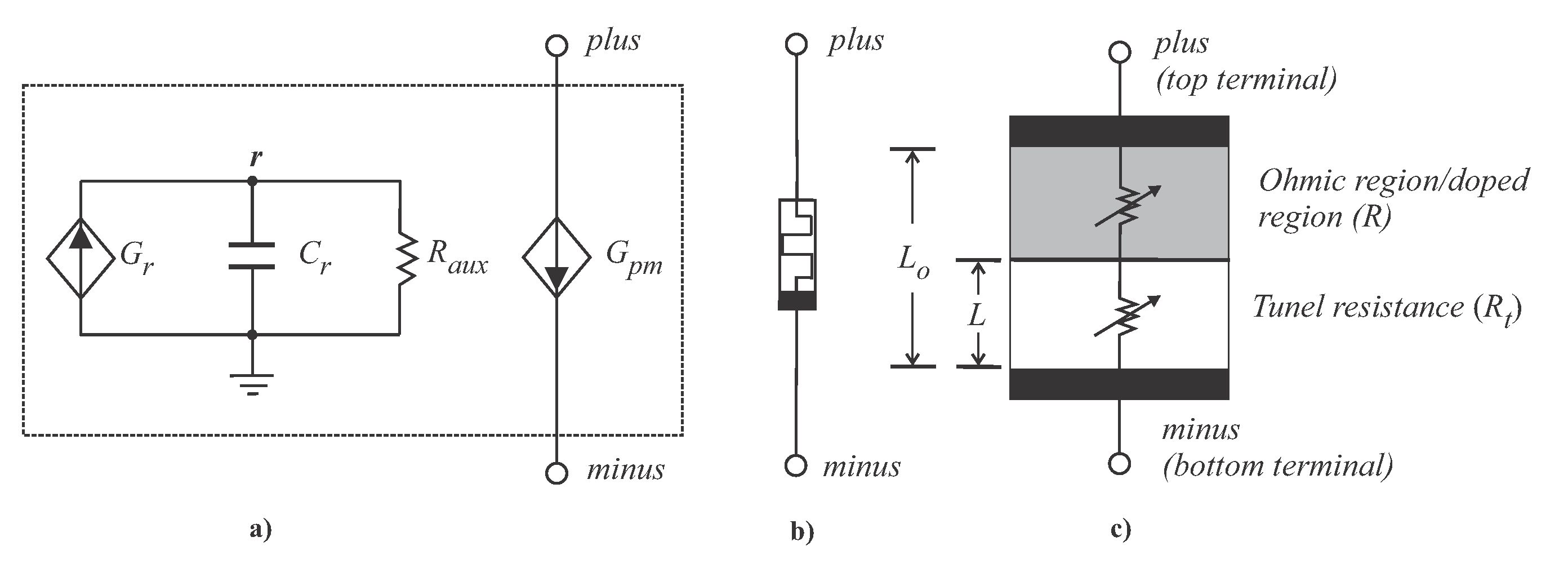

2.2. Memristor Modeling

2.2.1. Vourkas Memristor Model

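    * Vourkas memristor model with thresholds vs and vr; V(r) is the internal state
    .subckt memristor_vourkas plus minus PARAMS:
    + rmin=100 rmax=390 rinit=390 alpha=1e6 beta=10
    + gamma=0.1 vs=1.5 vr=-1.5 eps=0.0001 m=82 fo=310 lo=5
    * State integrator: Gr charges the 1 F capacitor Cr, so dV(r)/dt = I(Gr)
    Cr r 0 1 IC={rinit}
    Raux r 0 1e12
    Gr 0 r value={dr_dt(V(plus)-V(minus))*(st_f(V(plus)-V(minus))
    + *st_f(V(r)-rmin)+st_f(-(V(plus)-V(minus)))*st_f(rmax-V(r)))}
    * State rate: fast (alpha) beyond vs or vr, slow (beta) between them
    .func dr_dt(y)={-alpha*((y-vr)/(gamma+abs(y-vr)))*st_f(-y+vr)
    + -beta*y*st_f(y-vr)*st_f(-y+vs)-alpha*((y-vs)
    + /(gamma+abs(y-vs)))*st_f(y-vs)}
    * Smooth step function with transition width eps
    .func st_f(y)={1/(exp(-y/eps)+1)}
    * Memristive port: I = V/R with R = fo*exp(2L)/L
    Gpm plus minus value={(V(plus)-V(minus))
    + /((fo*exp(2*L(V(r))))/L(V(r)))}
    .func L(y)={lo-lo*m/y}
    .ends memristor_vourkas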
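To make the behavior of the netlist easier to inspect outside a circuit simulator, the following is a minimal Python sketch that evaluates the same expressions with the default parameters. The function names `barrier`, `memristance`, and `state_rate` are illustrative names introduced here, not part of the netlist, and the overflow clamp in `st_f` is an added numerical safeguard. It is a transcription of the formulas under those assumptions, not a replacement for the SPICE simulation.

```python
import math

# Default parameters copied from the .subckt line of the netlist.
P = {"rmin": 100.0, "rmax": 390.0, "alpha": 1e6, "beta": 10.0, "gamma": 0.1,
     "vs": 1.5, "vr": -1.5, "eps": 1e-4, "m": 82.0, "fo": 310.0, "lo": 5.0}


def st_f(y):
    """Smooth step 1/(exp(-y/eps)+1); the clamp only avoids float overflow."""
    z = -y / P["eps"]
    return 0.0 if z > 700.0 else 1.0 / (math.exp(z) + 1.0)


def barrier(r):
    """L(y) = lo - lo*m/y, evaluated at the state variable r = V(r)."""
    return P["lo"] - P["lo"] * P["m"] / r


def memristance(r):
    """Resistance between plus and minus implied by Gpm: fo*exp(2L)/L."""
    L = barrier(r)
    return P["fo"] * math.exp(2.0 * L) / L


def dr_dt(v):
    """Unwindowed state rate as a function of the applied voltage v."""
    a, b, g, vs, vr = (P[k] for k in ("alpha", "beta", "gamma", "vs", "vr"))
    below = -a * ((v - vr) / (g + abs(v - vr))) * st_f(-v + vr)
    between = -b * v * st_f(v - vr) * st_f(-v + vs)
    above = -a * ((v - vs) / (g + abs(v - vs))) * st_f(v - vs)
    return below + between + above


def state_rate(v, r):
    """Current of Gr into the 1 F capacitor Cr, i.e. dV(r)/dt with windowing."""
    window = st_f(v) * st_f(r - P["rmin"]) + st_f(-v) * st_f(P["rmax"] - r)
    return dr_dt(v) * window


if __name__ == "__main__":
    for r in (P["rmin"], P["rmax"]):
        print(f"r = {r:5.0f} -> R = {memristance(r):.1f} ohm")
    for v in (-2.0, 0.5, 2.0):
        print(f"v = {v:+.1f} V -> dr/dt = {dr_dt(v):.3e}")
```

With the default parameters, `memristance` evaluates to roughly 2.1 kΩ at `r = rmin` and roughly 211 kΩ at `r = rmax`. The window term in `state_rate` stops the state from moving further once it passes either bound.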

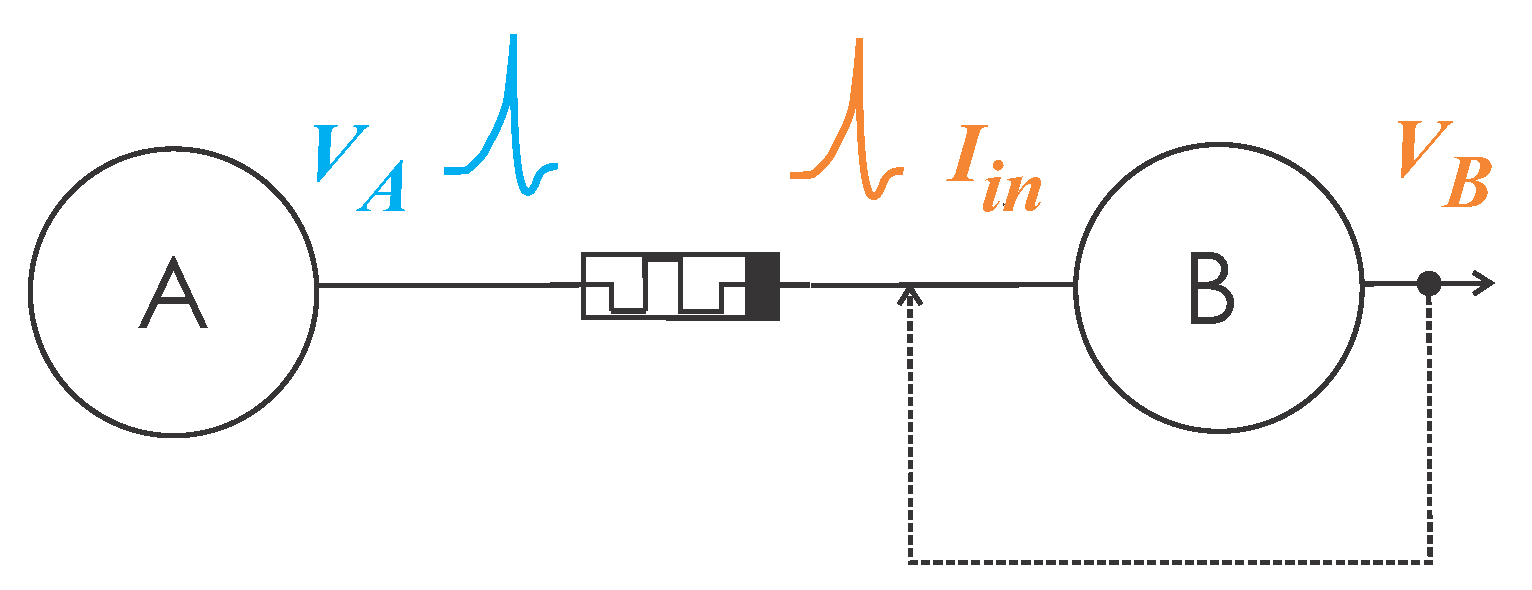

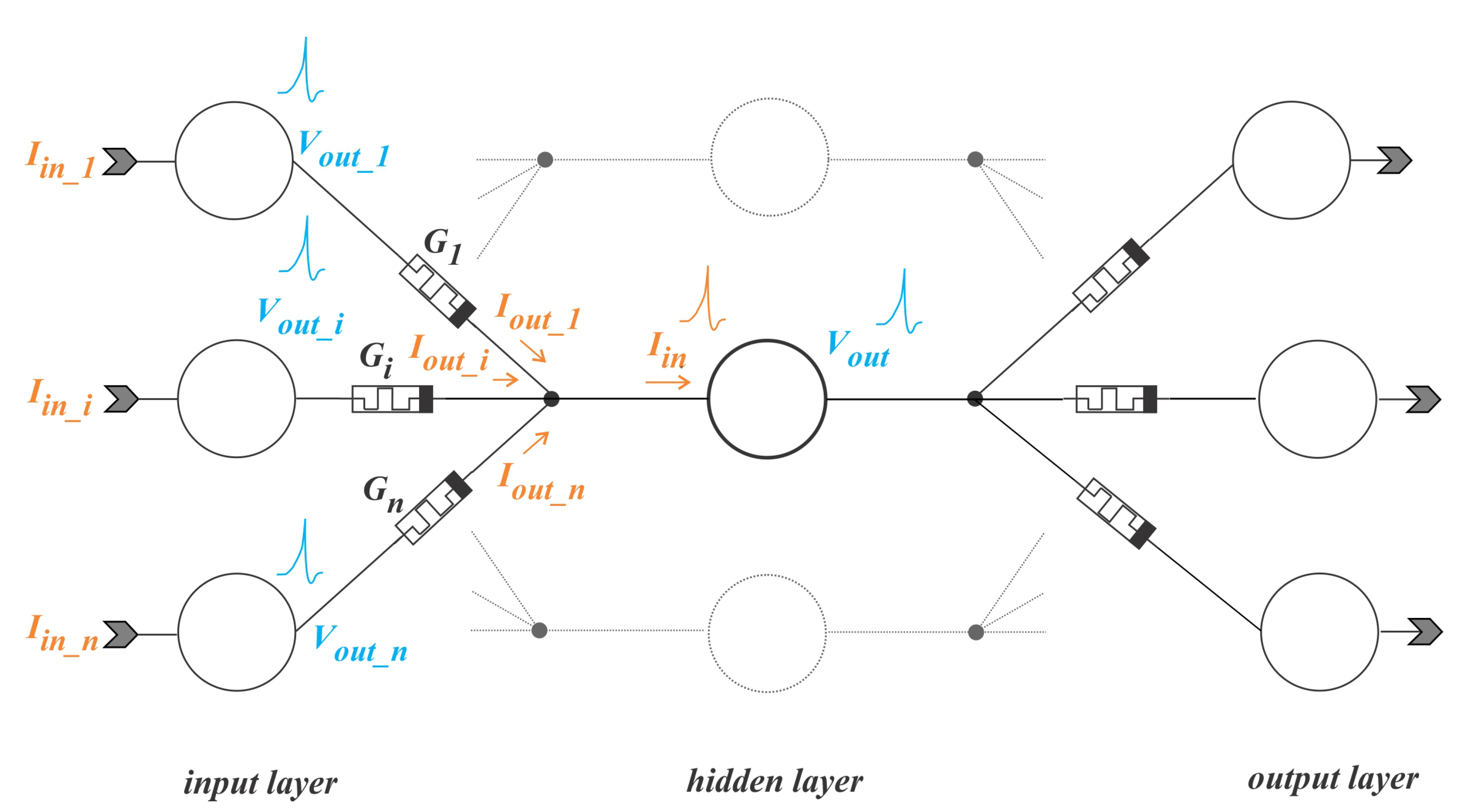

2.3. Synaptic Weight Adjustment in Neurons

2.3.1. Long-Term Potentiation and Long-Term Depression on Memristive Synapses

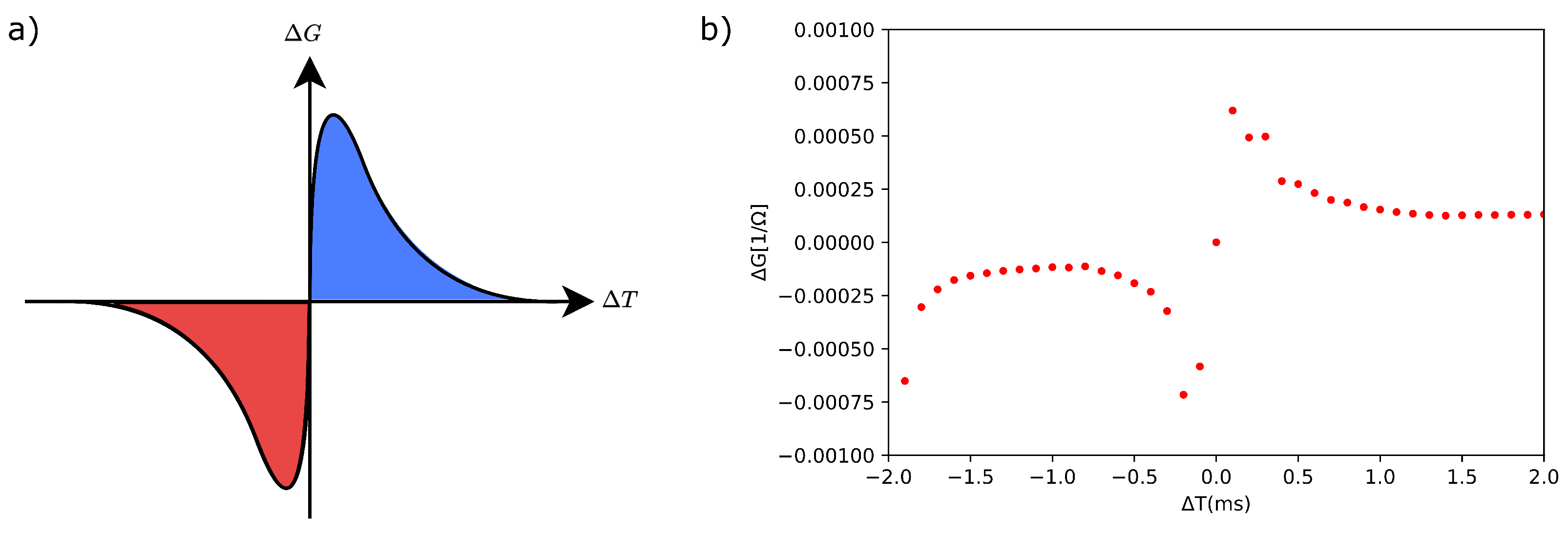

2.3.2. Spike-Timing-Dependent Plasticity

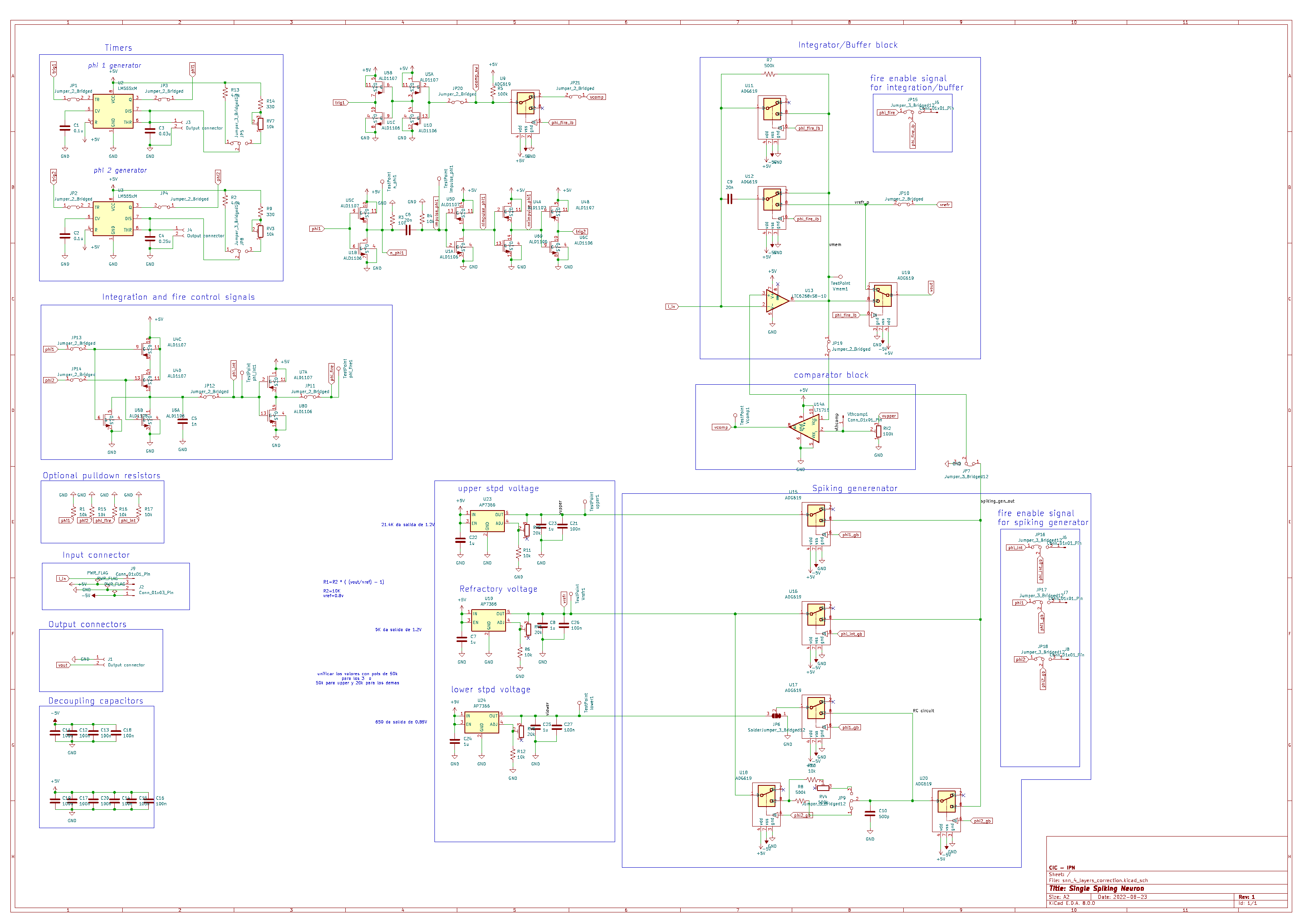

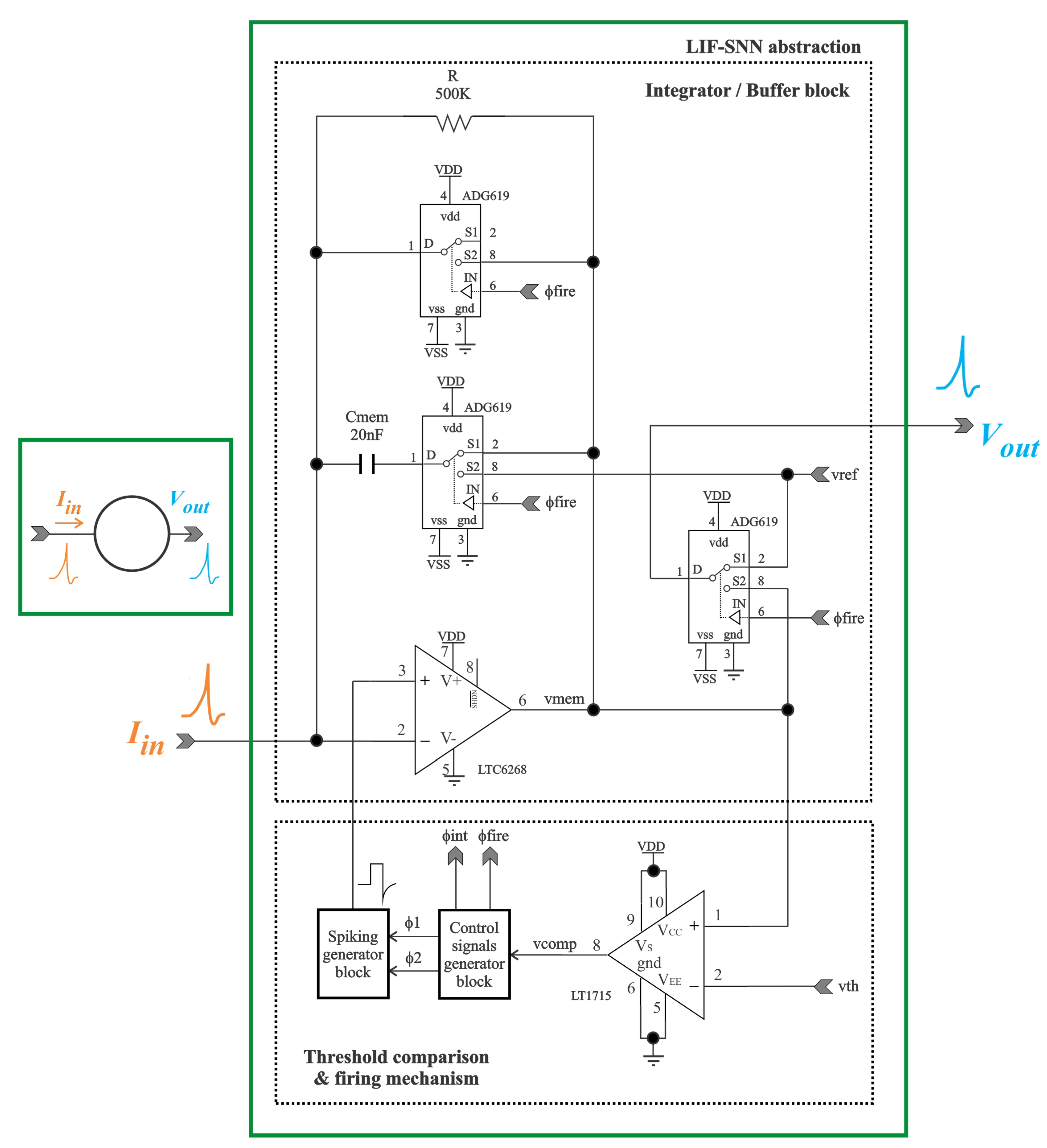

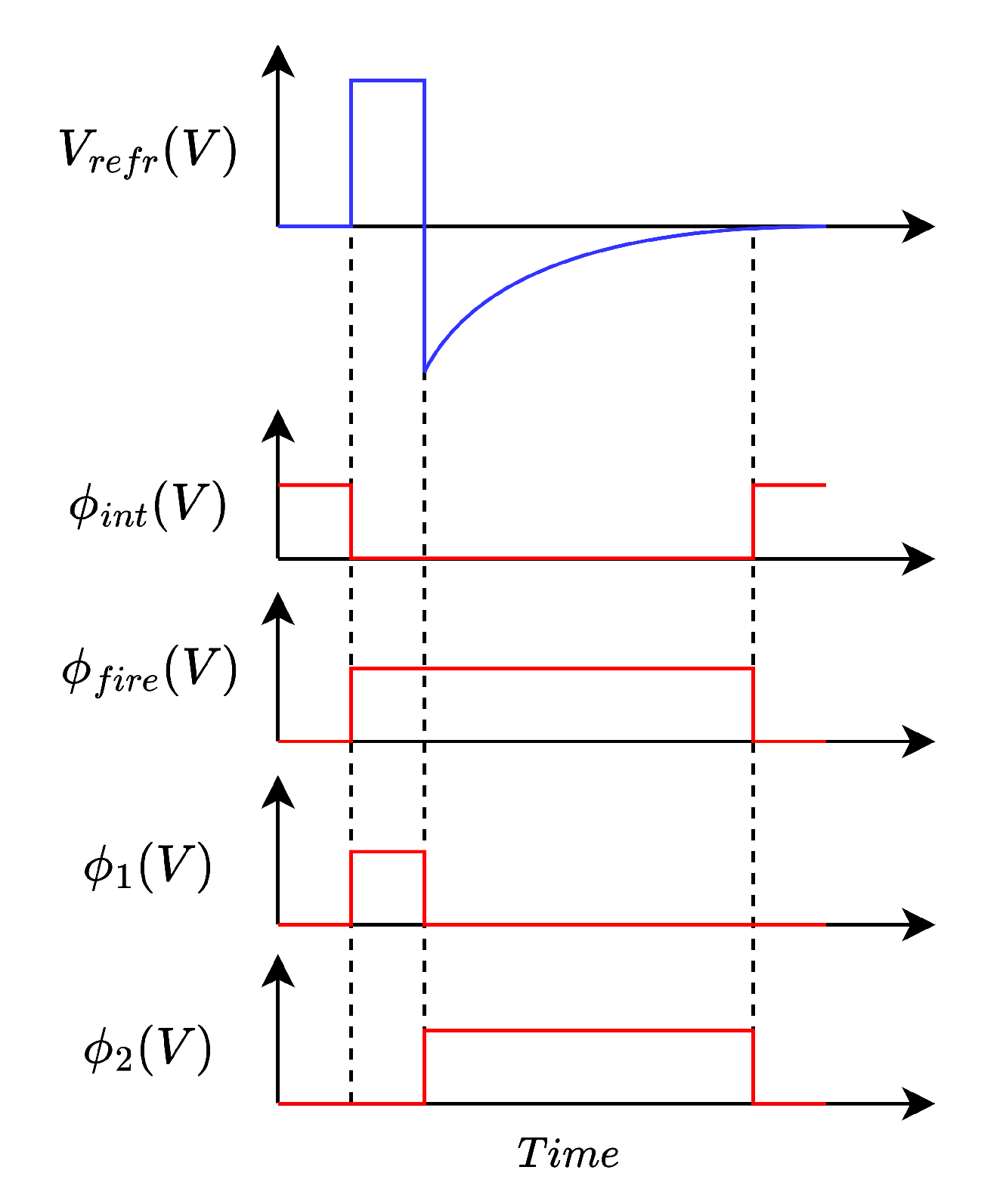

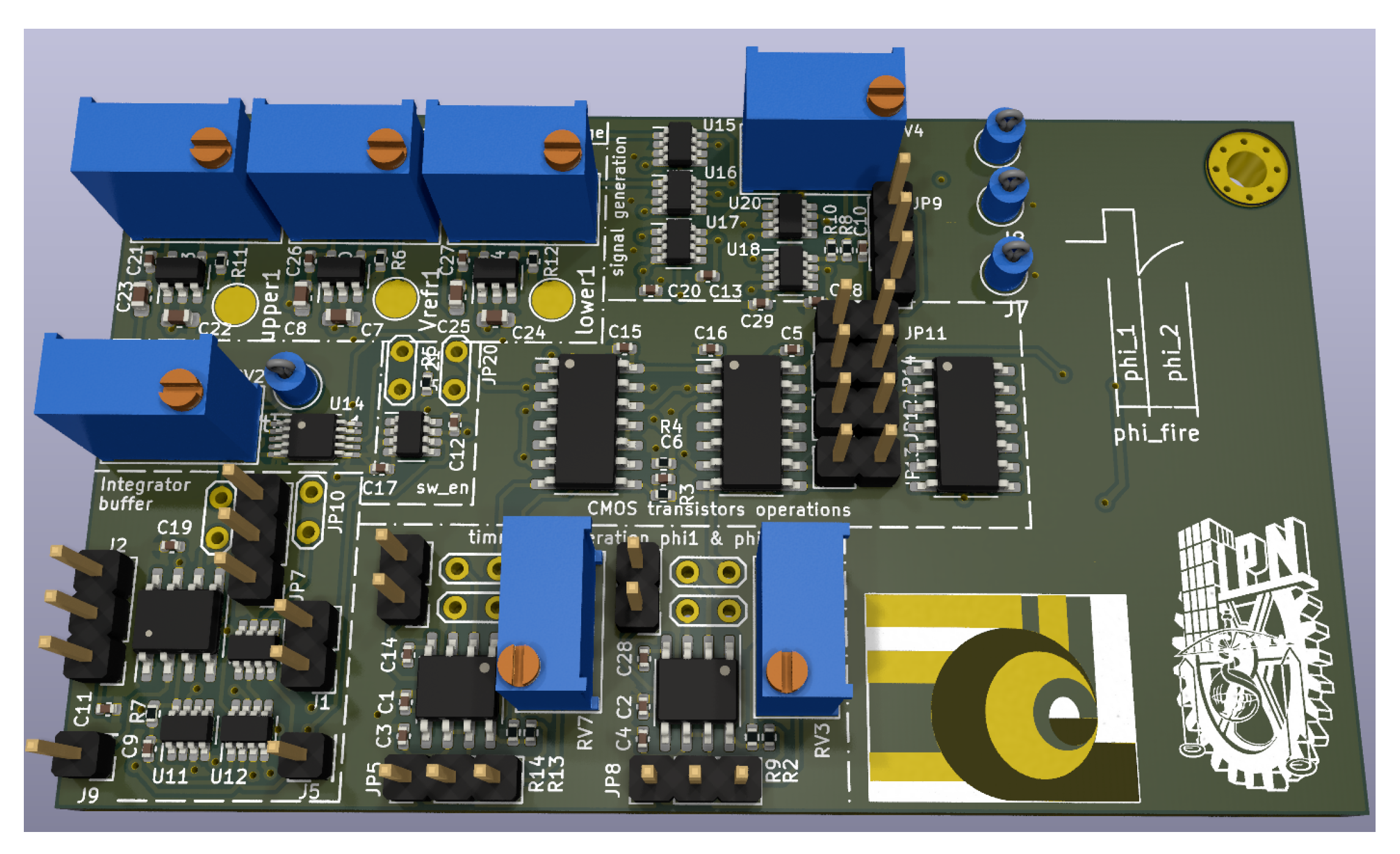

2.4. Analog Leaky Integrate-and-Fire Functional Blocks and Control Signals

2.4.1. Phase Control for Reconfiguration of the LIF Neuron

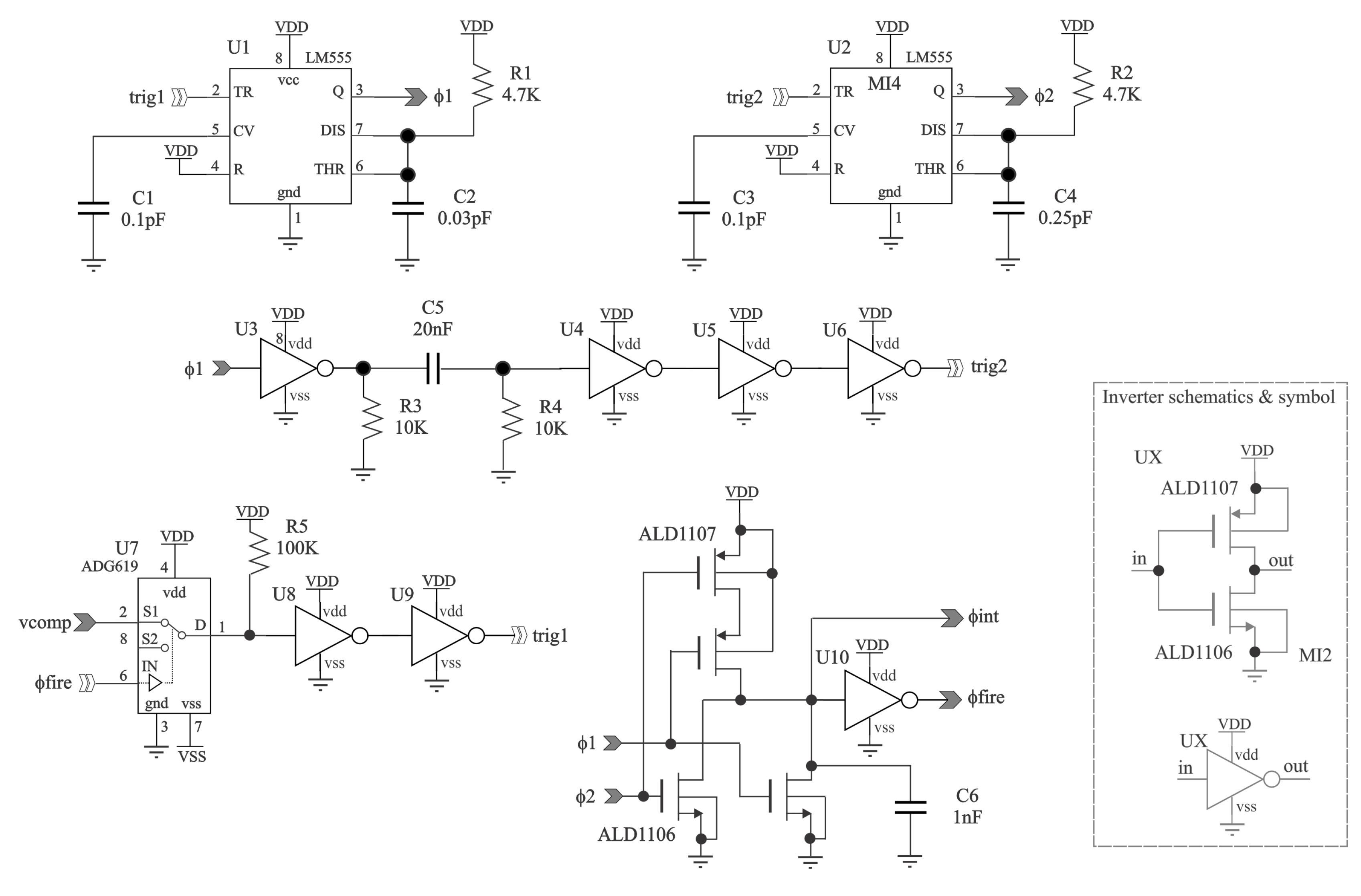

2.5. Electrical Characteristics of the Block Components of the LIF Neuron

2.5.1. Integration/Buffer and Comparator Module

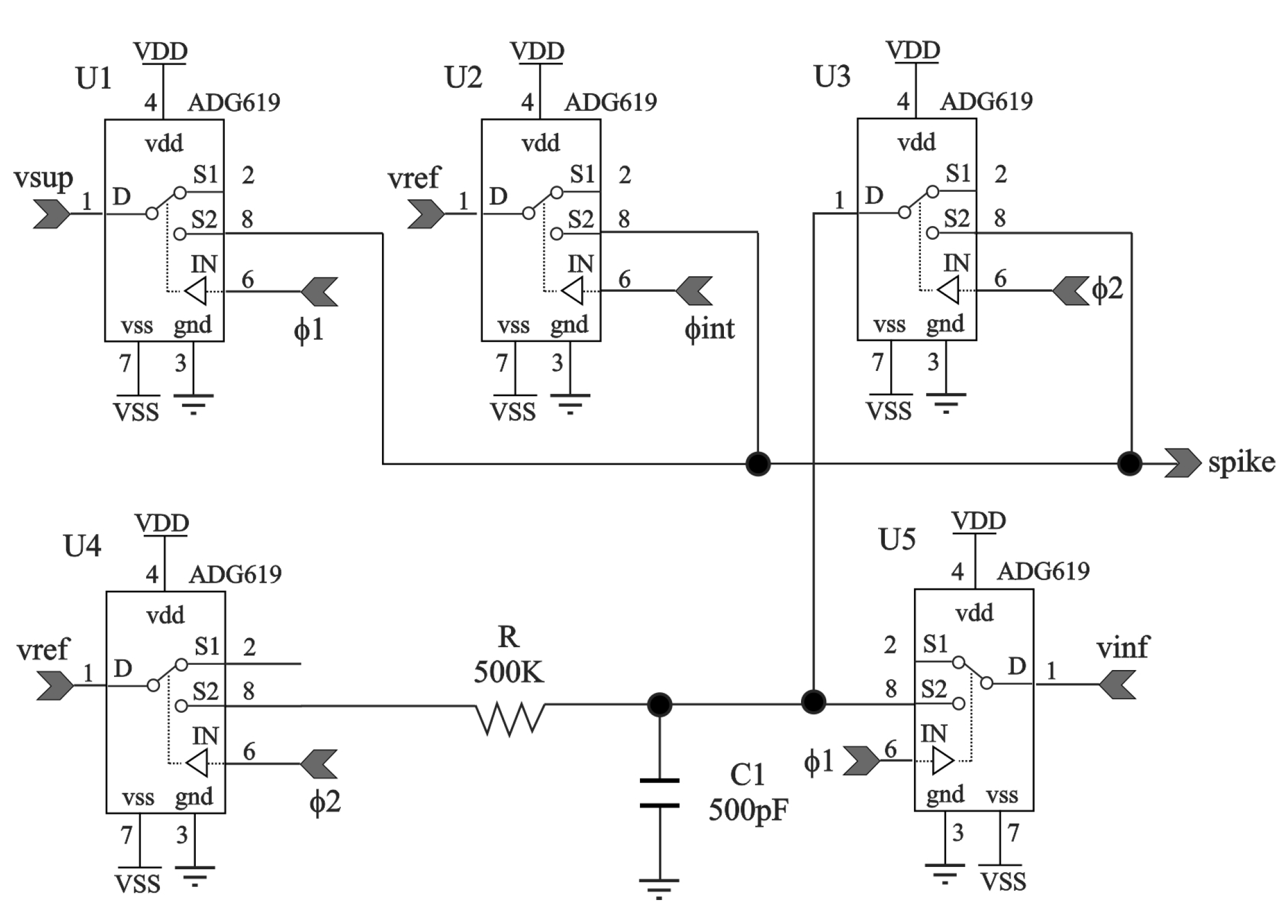

2.5.2. Selection of Transmission Gates Modules

2.5.3. Spiking Generator Block Design

- The commercial memristors by Knowm exhibit the greatest change in memristance at low frequency (1 Hz) and the smallest change at high frequency (1 kHz) [29].

- A total relaxation time of 1.25 ms falls within the absolute refractory period of mammalian neurons, during which a neuron cannot generate a new output spike [30]; this contributes to sparse, low-power computing.
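As a rough illustration of what the relaxation time implies, the short sketch below computes the upper bound on the output spike rate. It assumes, purely for illustration, that the relaxation time is the only limit on the interval between spikes. The helper name `max_spike_rate_hz` is hypothetical.

```python
def max_spike_rate_hz(relaxation_time_s):
    """Upper bound on firing rate if every spike is followed by a full relaxation."""
    if relaxation_time_s <= 0:
        raise ValueError("relaxation time must be positive")
    return 1.0 / relaxation_time_s


# Total relaxation time quoted in the text (1.25 ms).
RELAXATION_TIME_S = 1.25e-3

if __name__ == "__main__":
    print(f"max spike rate: {max_spike_rate_hz(RELAXATION_TIME_S):.0f} Hz")
```

Under that assumption the bound is about 800 Hz, which lies inside the 1 Hz to 1 kHz range mentioned for the Knowm devices.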

3. Results

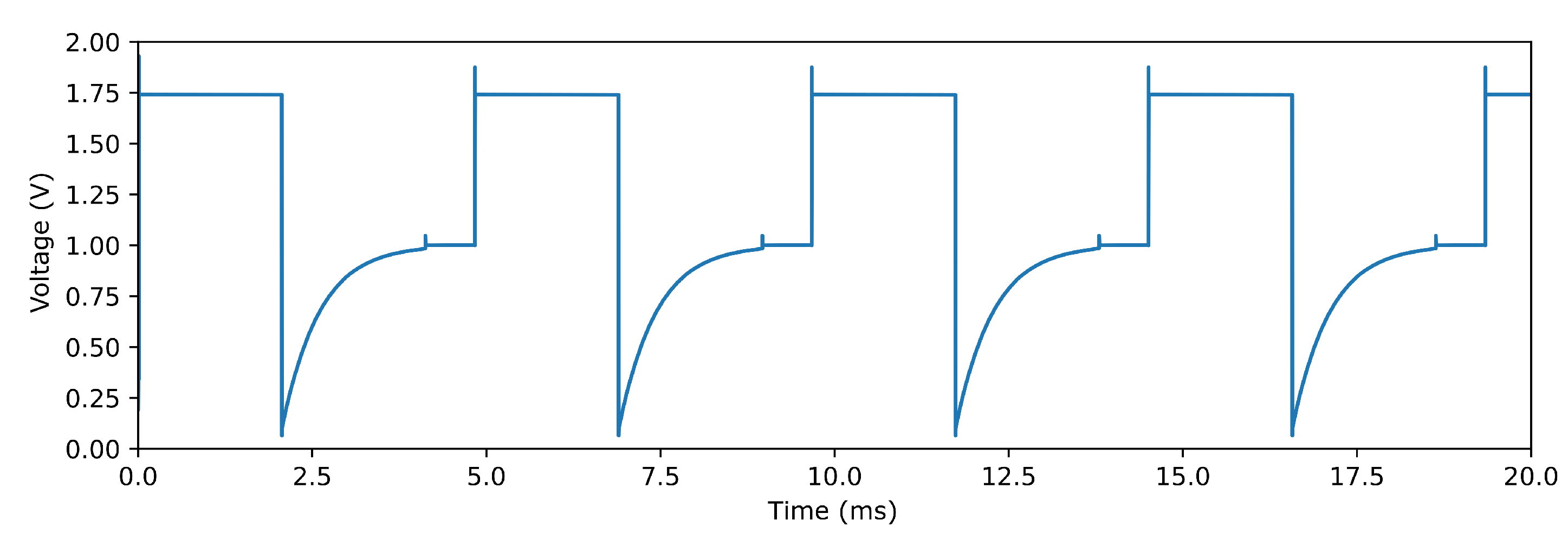

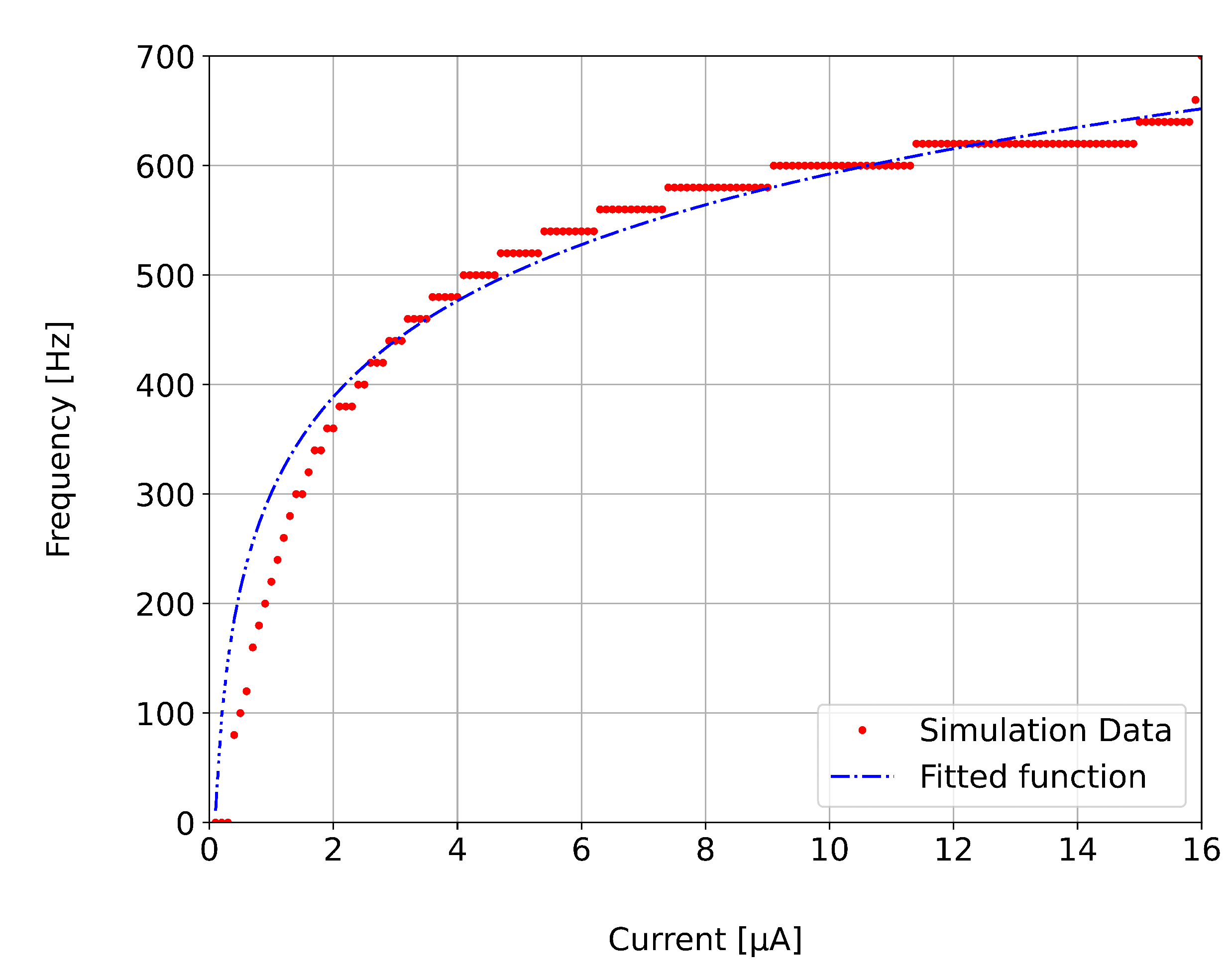

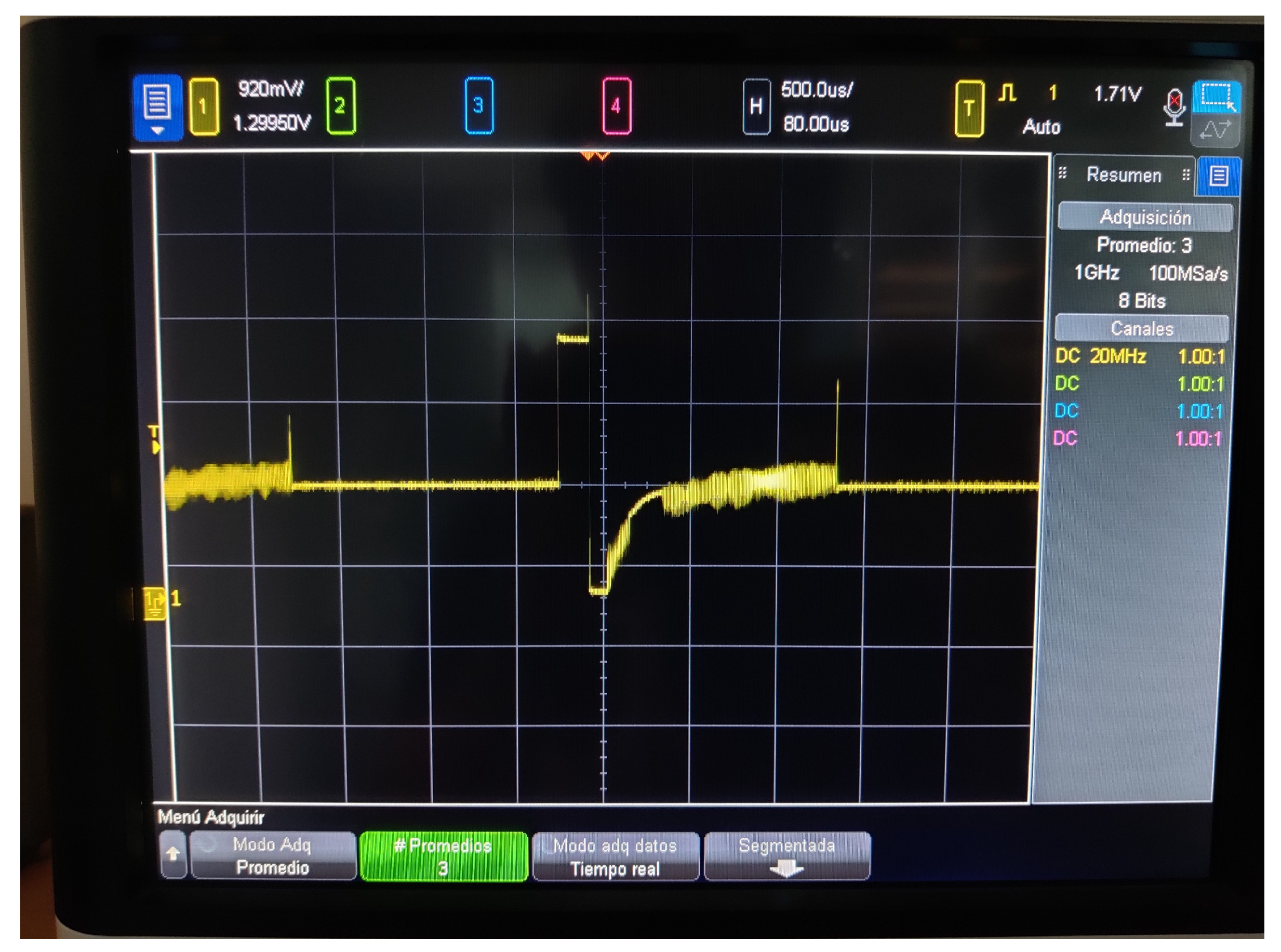

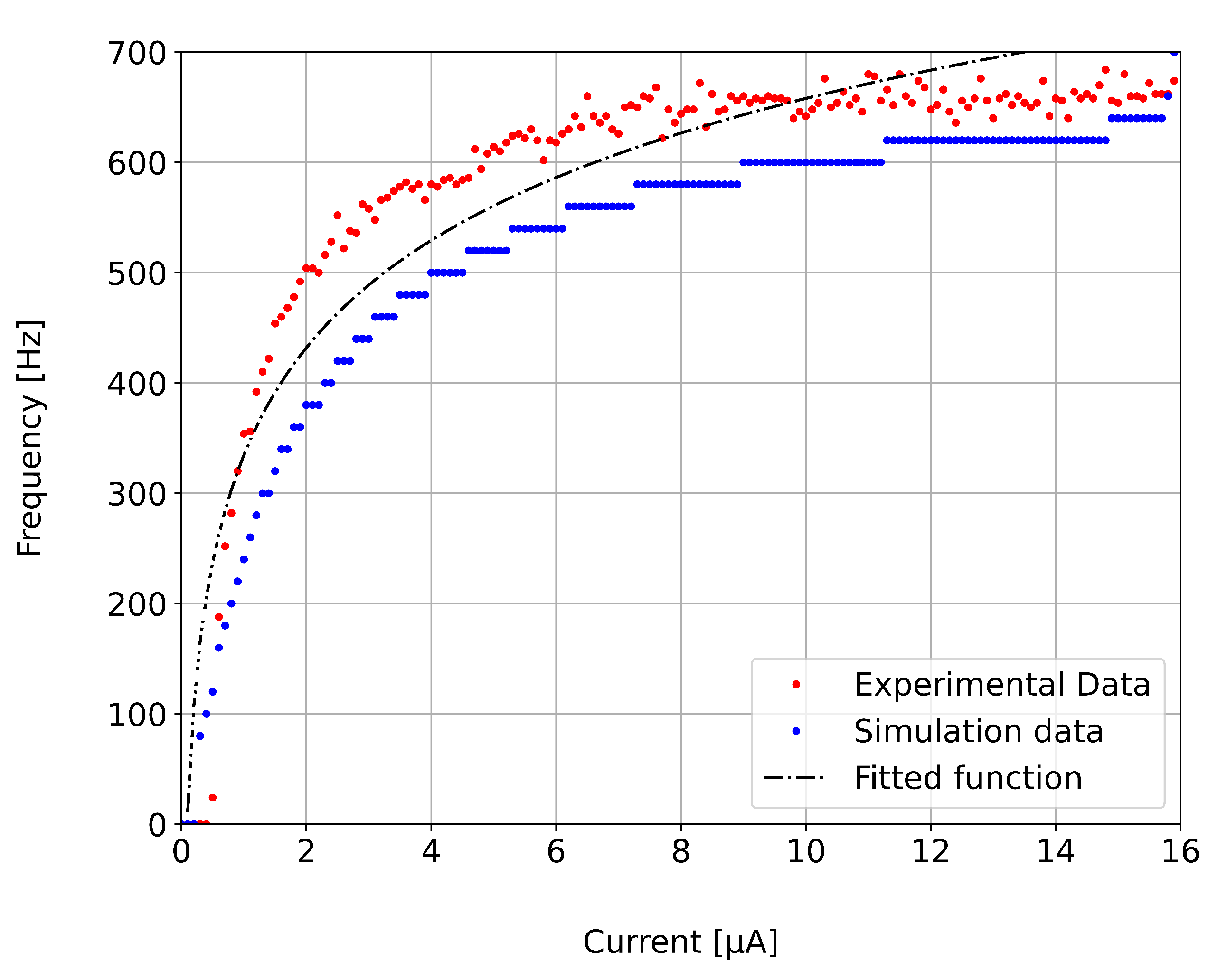

3.1. Electrical Simulation of the LIF Neuron

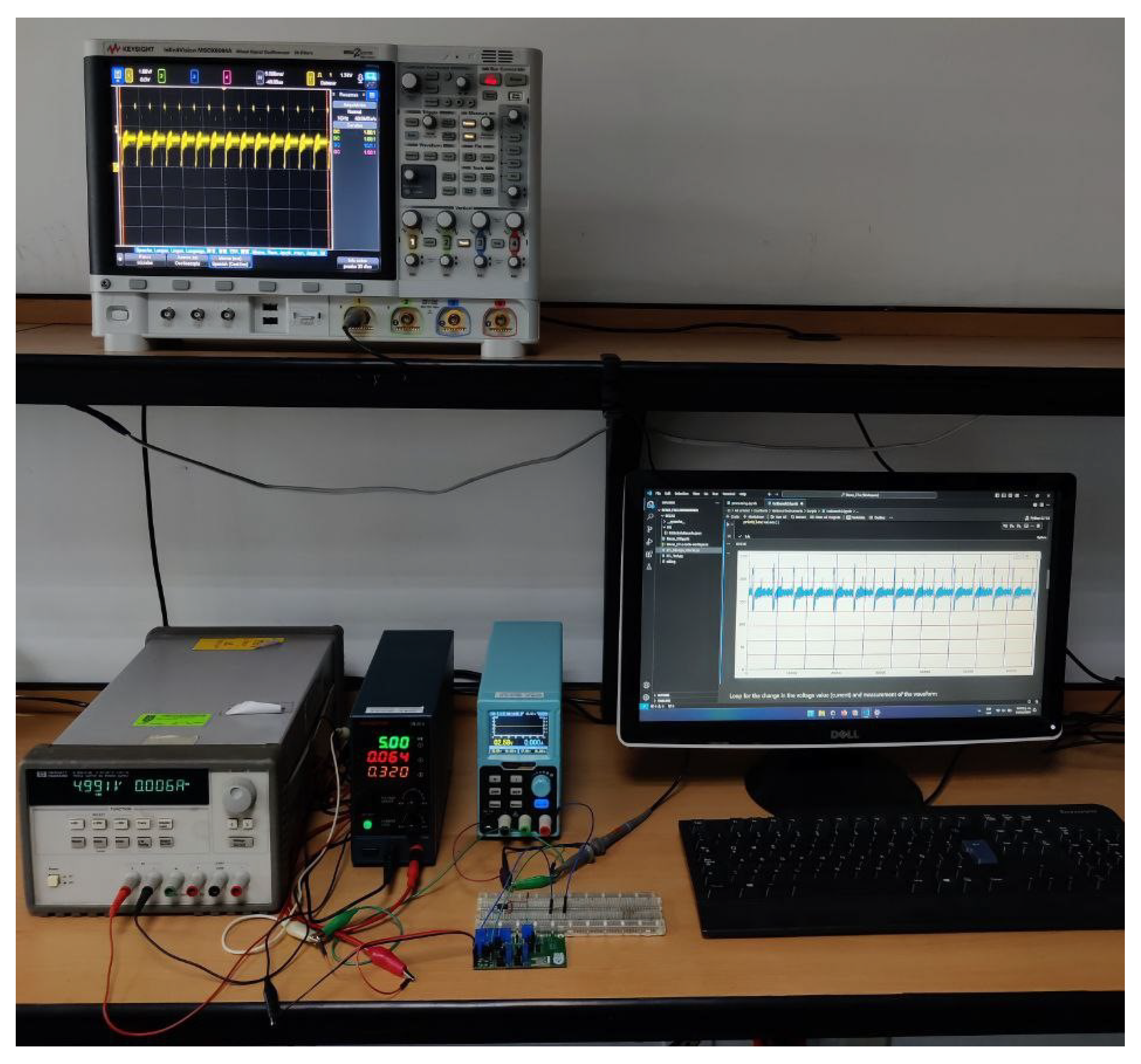

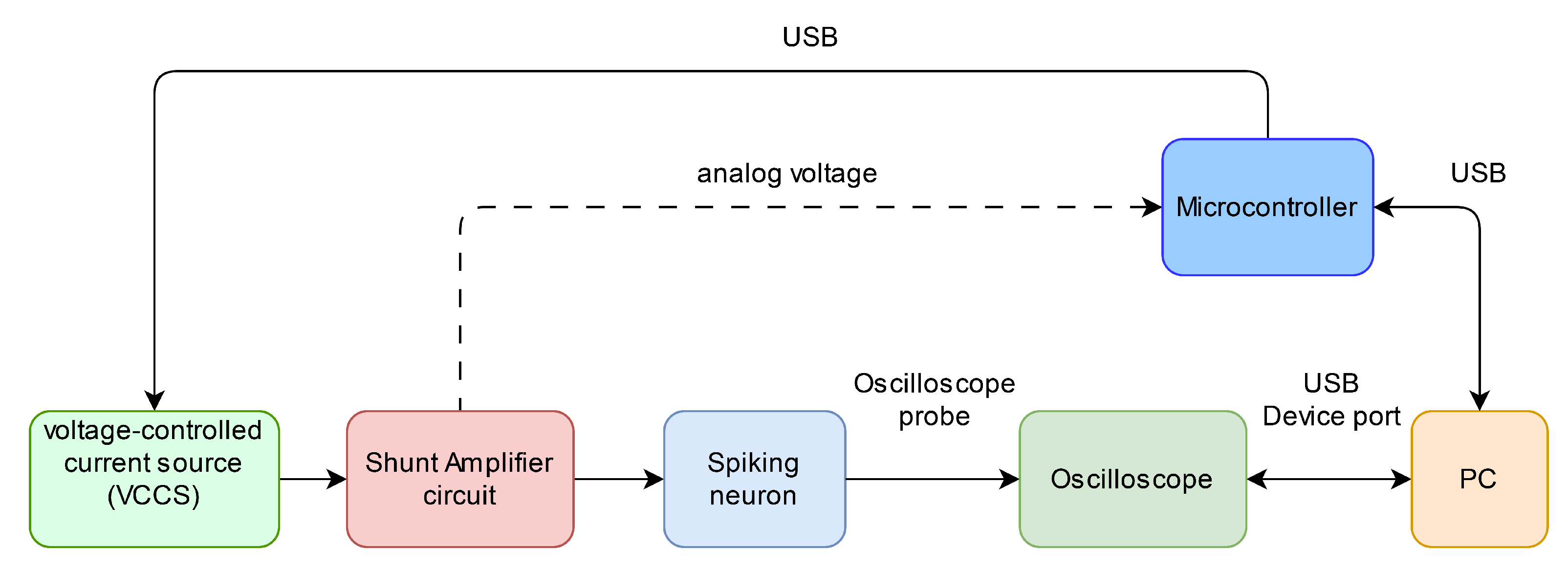

3.2. Hardware Implementation of the LIF Neuron

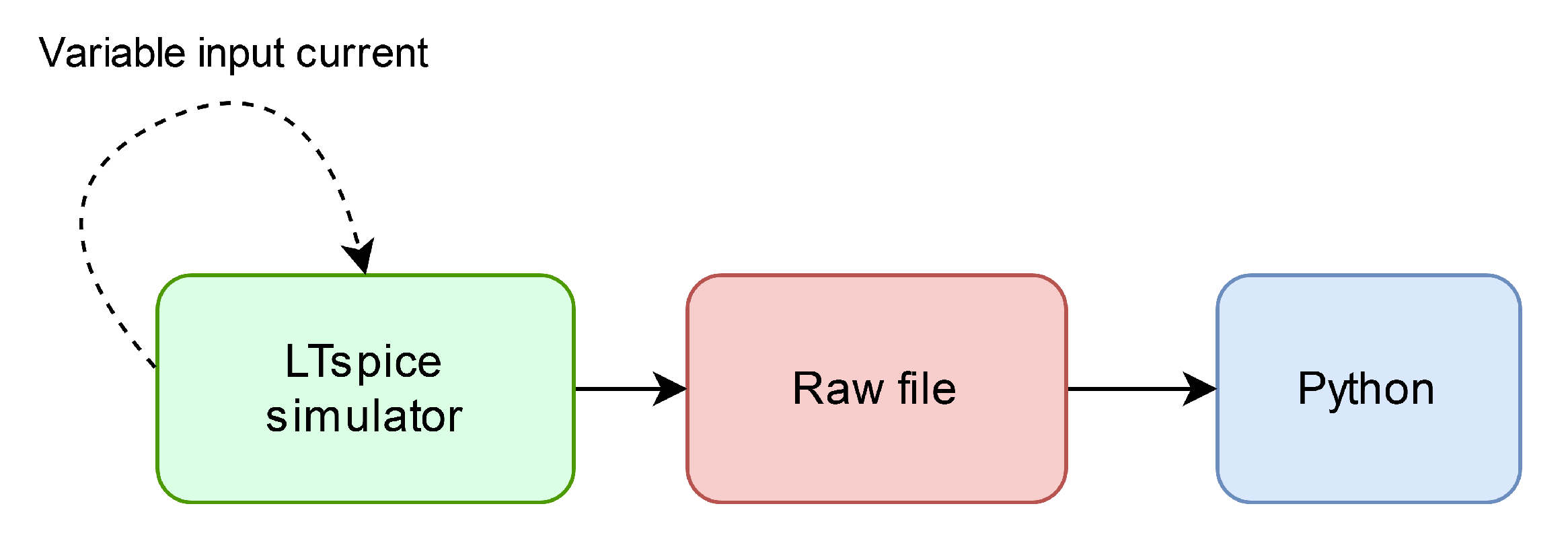

3.2.1. Methodology for the Characterization of the Physical LIF Neuron

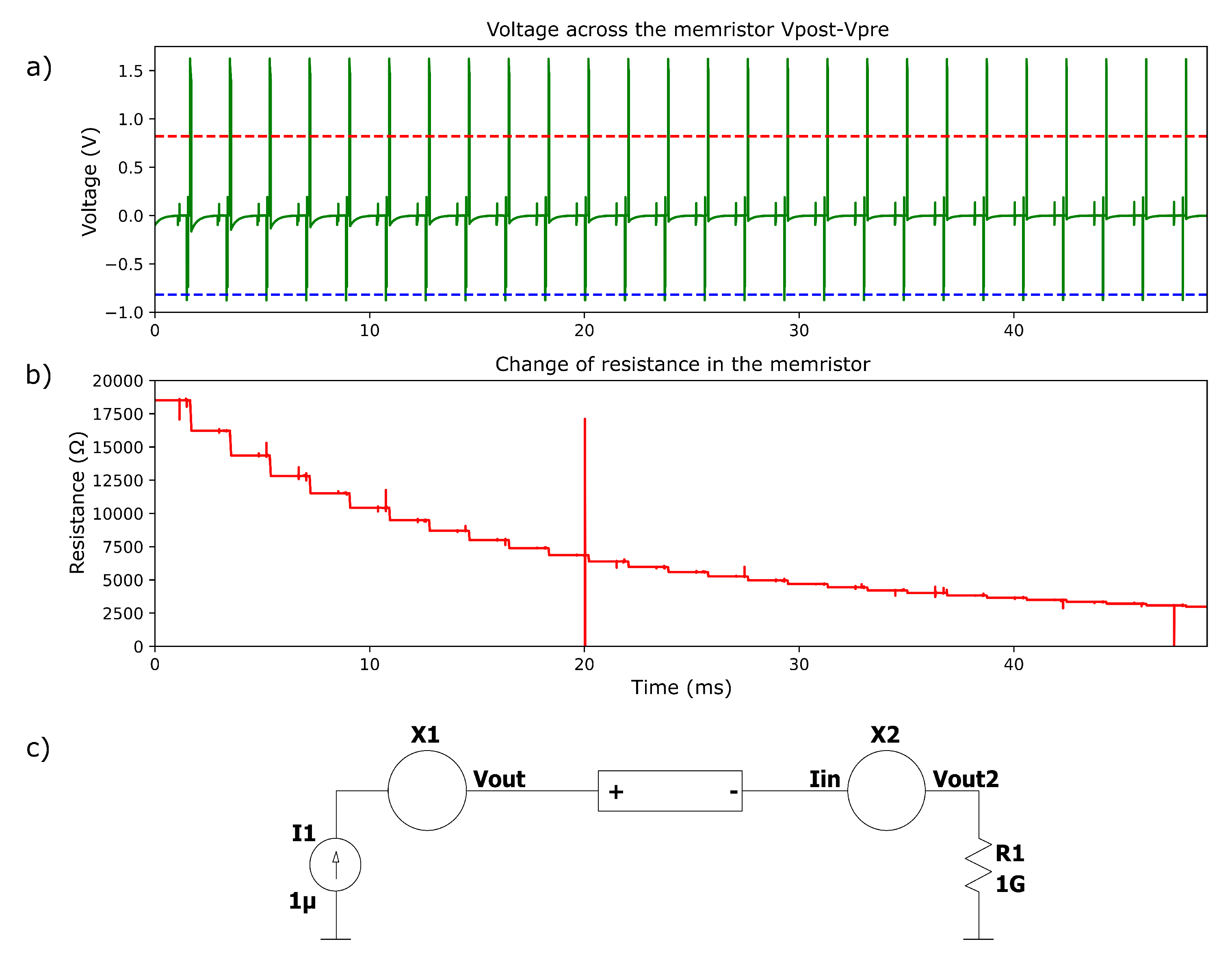

3.3. Simulation of the Synaptic Weight Adjustment of a Memristive Synapse

3.3.1. Long-Term Potentiation on a Memristive Synapse

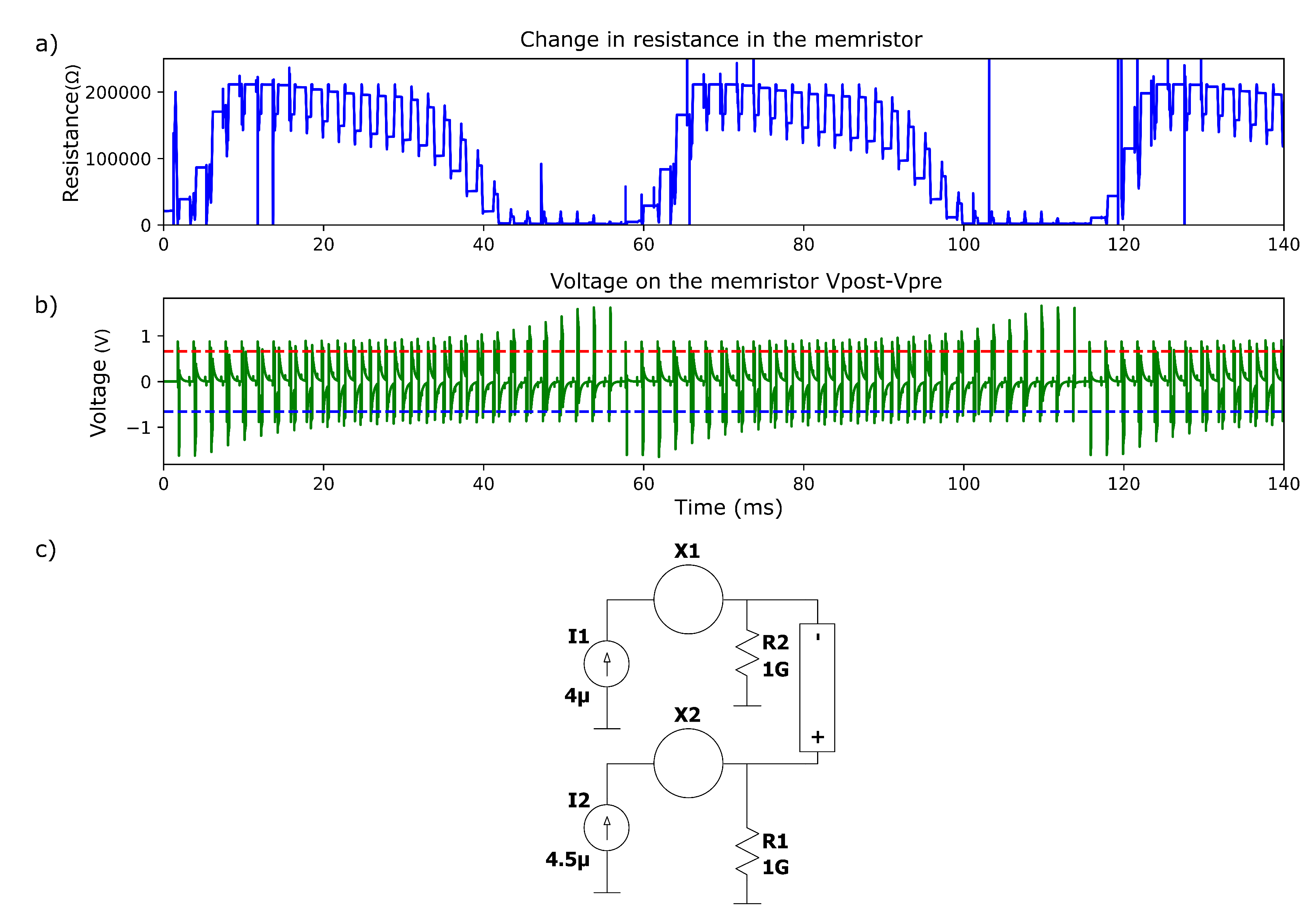

3.3.2. Spike-Timing-Dependent Plasticity (STDP) Process on a Memristive Synapse

4. Conclusions

4.1. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| Opamp | Operational amplifier |
| LIF | Leaky integrate-and-fire |
| STDP | Spike-timing-dependent plasticity |
| LTP | Long-term potentiation |
| LTD | Long-term depression |

References

1. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; Agarwal, S.; Herbert-Voss, A.; Krueger, G.; Henighan, T.; Child, R.; Ramesh, A.; Ziegler, D.M.; Wu, J.; Winter, C.; Hesse, C.; Chen, M.; Sigler, E.; Litwin, M.; Gray, S.; Chess, B.; Clark, J.; Berner, C.; McCandlish, S.; Radford, A.; Sutskever, I.; Amodei, D. Language Models are Few-Shot Learners. CoRR 2020, abs/2005.14165.

2. Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical Text-Conditional Image Generation with CLIP Latents, 2022, [arXiv:cs.CV/2204.06125].

3. Luccioni, A.S.; Viguier, S.; Ligozat, A.L. Estimating the Carbon Footprint of BLOOM, a 176B Parameter Language Model, 2022, [arXiv:cs.LG/2211.02001].

4. Analog VLSI Implementation of Neural Systems; Springer US, 1989.

5. Maass, W. Networks of spiking neurons: The third generation of neural network models. Neural Networks 1997, 10, 1659–1671.

6. Costandi, M. Neuroplasticity; The MIT Press Essential Knowledge series; MIT Press: London, England, 2016.

7. Chakraborty, I.; Jaiswal, A.; Saha, A.K.; Gupta, S.K.; Roy, K. Pathways to efficient neuromorphic computing with non-volatile memory technologies. Applied Physics Reviews 2020, 7, 021308.

8. Kornijcuk, V.; Lim, H.; Seok, J.Y.; Kim, G.; Kim, S.K.; Kim, I.; Choi, B.J.; Jeong, D.S. Leaky Integrate-and-Fire Neuron Circuit Based on Floating-Gate Integrator. Frontiers in Neuroscience 2016, 10.

9. Linares-Barranco, B.; Serrano-Gotarredona, T.; Camuñas-Mesa, L.; Perez-Carrasco, J.; Zamarreño-Ramos, C.; Masquelier, T. On Spike-Timing-Dependent-Plasticity, Memristive Devices, and Building a Self-Learning Visual Cortex. Frontiers in Neuroscience 2011, 5.

10. Chua, L. Memristor-The missing circuit element. IEEE Transactions on Circuit Theory 1971, 18, 507–519.

11. Nature Electronics 2023, 6, 463–463.

12. Hodgkin, A.L.; Huxley, A.F. A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of Physiology 1952, 117, 500–544.

13. Gerstner, W.; Kistler, W.M.; Naud, R.; Paninski, L. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition; Cambridge University Press, 2014.

14. Strukov, D.B.; Snider, G.S.; Stewart, D.R.; Williams, R.S. The missing memristor found. Nature 2008, 453, 80–83.

15. Joglekar, Y.N.; Wolf, S.J. The elusive memristor: properties of basic electrical circuits. European Journal of Physics 2009, 30, 661.

16. Vourkas, I.; Sirakoulis, G.C. Memristor-Based Nanoelectronic Computing Circuits and Architectures; Springer International Publishing, 2016.

17. Hebb, D.O. The organization of behavior; Psychology Press: Philadelphia, PA, 2002.

18. Dayan, P.; Abbott, L.F. Theoretical neuroscience; Computational neuroscience, MIT Press: London, England, 2001.

19. Caporale, N.; Dan, Y. Spike Timing–Dependent Plasticity: A Hebbian Learning Rule. Annual Review of Neuroscience 2008, 31, 25–46.

20. Rastogi, M.; Lu, S.; Islam, N.; Sengupta, A. On the Self-Repair Role of Astrocytes in STDP Enabled Unsupervised SNNs. Frontiers in Neuroscience 2021, 14.

21. Rozenberg, M.J.; Schneegans, O.; Stoliar, P. An ultra-compact leaky-integrate-and-fire model for building spiking neural networks. Scientific Reports 2019, 9.

22. Wu, X.; Saxena, V.; Zhu, K.; Balagopal, S. A CMOS Spiking Neuron for Brain-Inspired Neural Networks With Resistive Synapses and In Situ Learning. IEEE Transactions on Circuits and Systems II: Express Briefs 2015, 62, 1088–1092.

23. Analog Devices. 500 MHz Ultra-Low Bias Current FET Input Op Amp, 2017.

24. Analog Devices. High Speed, Low Cost, Op Amp, 2006. Rev. 0.

25. Banerjee, W.; Nikam, R.D.; Hwang, H. Prospect and challenges of analog switching for neuromorphic hardware. Applied Physics Letters 2022, 120.

26. Analog Devices. CMOS, ±5 V/+5 V, 4 Ω, Single SPDT Switches, 2011. Rev. C.

27. Analog Devices. CMOS, 2.5 Ω Low Voltage, Triple/Quad SPDT Switches, 2001. Rev. B.

28. Analog Devices. 2.5 Ω, 1.8 V to 5.5 V, ±2.5 V Triple/Quad SPDT Switches in Chip Scale Packages, 2015. Rev. C.

29. Knowm. Memristors to Machine Intelligence. Available: https://knowm.org/.

30. Maida, A. Chapter 2 - Cognitive Computing and Neural Networks: Reverse Engineering the Brain. In Cognitive Computing: Theory and Applications; Gudivada, V.N.; Raghavan, V.V.; Govindaraju, V.; Rao, C., Eds.; Elsevier, 2016; Vol. 35, Handbook of Statistics, pp. 39–78.

31. Butts, D.A.; Goldman, M.S. Tuning Curves, Neuronal Variability, and Sensory Coding. PLoS Biology 2006, 4, e92.

32. Keysight. InfiniiVision X-Series Oscilloscope LabVIEW Instrument Drivers. Available: https://www.keysight.com/us/en/lib/software-detail/driver/infiniivision-xseries-oscilloscope-labview-instrument-drivers-2862255.html.

33. Stoliar, P.; Yamada, H.; Toyosaki, Y.; Sawa, A. Spike-shape dependence of the spike-timing dependent synaptic plasticity in ferroelectric-tunnel-junction synapses. Scientific Reports 2019, 9.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).