1. Introduction

Integrated Information Theory (IIT) is a framework aimed at explaining the nature of consciousness in physical terms. It is grounded in both phenomenological observations (how experiences feel) and physicalist principles (how these experiences are realized in physical systems). The most recent version, IIT 4.0, commences with its zeroth axiom, “Experience exists,” which presupposes a differentiation among subjective states [1]. Further, it posits that experience inherently wields causal powers, enabling it to modify and distinguish its own state from others, both internally (exclusion and composition) and externally (since the intrinsic information of the subjective state must cause some changes in the system’s behavior) [2]. As a general principle, it proposes that consciousness corresponds to the capacity of a system to integrate information, and that its causative powers can be measured mathematically by comparing the maxima of integrated information across the levels of a multilevel system, such as a living organism whose control system consists of neural cells, neural areas, and its integral state [3,4,5].

Simultaneously, IIT contends that “time” does not constitute an attribute of consciousness, as an informative, exclusive and composite state of experience is assumed to possess intrinsic causative powers without reference to temporal realization [1]. This perspective is rooted in the conception of information as a distinct and self-contained entity capable of exerting causal influence within an objective and independent temporal and spatial framework. Such designs provide a method for capturing system dynamics, but their utility is primarily evident within closed systems governed by strict principles of change. Further, this approach allows the modeling of systems with very few elements compared with actually existing neural networks [1,3]. However, real-world physical entities function as open systems, constantly interacting with their environment. It is commonly argued that the maintenance of equilibrium in a living system requires such interactions [6], with consciousness emerging as a manifestation of this ongoing process [7,8]. This inconsistency between the mathematical apparatus of IIT and the study of real entities continues to raise doubts about the scientific status of the theory [9].

A potential controversy arises from the disparity between the discrete nature of information, which encapsulates the system’s state at a particular moment, and the dynamic temporal essence inherent to consciousness [10,11,12]. As a result, applying the present concept of information to measure consciousness leads to a significant increase in the amount of data needed, which complicates mathematical modeling and impedes efforts to describe existing conscious systems [13,14]. Therefore, there is an urgent need to reevaluate the concept of information and develop measurement methodologies capable of accommodating temporal dynamics as a fundamental principle.

This review delves into these philosophical inquiries and explores methodologies for harnessing mathematical tools to describe and measure conscious systems, as well as ontological and epistemological inferences [15,16]. Furthermore, it advocates for the integration of entropy as a measure of a system's order into the foundational principles of IIT. This is consistent with one of its key points, which assumes consciousness to be a stochastic system [17,18]. Through this integration, we aim to stimulate further exploration into understanding the conceptual framework and essence of consciousness.

2. Information and its Measuring

2.1. Information Theory Approach

In a broad sense, “information” denotes data that undergoes processing, organization, or structuring in a manner that imparts meaning, facilitating the conveyance of knowledge, facts, or instructions. It encompasses the content or message conveyed by data, be it through symbols, signals, or other forms of representation. However, the precise definition of “information” may vary depending on the context in which it is employed, reverberating across a spectrum of disciplines, from science and technology to communication and cognition. Within the realms of information theory and communication, information is frequently quantified in terms of entropy or as a gauge of uncertainty reduction [19,20].

In information theory, entropy serves as a metric for the degree of uncertainty or unpredictability linked with a random variable. It measures the quantity of information inherent in a message. The formula for entropy (H), typically expressed in bits (the standard unit of measurement in information theory), is commonly articulated as follows:

$$H(X) = -\sum_{x} P(x) \log_2 P(x)$$

Where:

H(X) is the entropy of the random variable X,

P(x) is the probability of outcome x,

x is iterated over all the possible values of X.

This formula calculates the average amount of information produced by a random variable X. The entropy is highest when all outcomes are equally likely, and it decreases as the distribution becomes more skewed or certain. Thus, entropy can also be interpreted as a measure of uncertainty reduction. For example, take a binary random variable X with two possible outcomes, x1 and x2, and associated probabilities P(x1) and P(x2) = 1 - P(x1). The entropy H(X) of this binary variable can be calculated as:

$$H(X) = -P(x_1) \log_2 P(x_1) - (1 - P(x_1)) \log_2 (1 - P(x_1))$$

In this case, when P(x1) = 0.5 (both outcomes are equally likely, like heads or tails on a coin toss), the entropy is maximum, indicating maximum uncertainty. As P(x1) deviates from 0.5 towards 0 or 1, the entropy decreases, indicating a reduction in uncertainty.
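The behavior of the binary case can be checked numerically. The following is a minimal sketch, not taken from the cited sources; the function names `shannon_entropy` and `binary_entropy` and the sample probabilities are illustrative assumptions.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # -p * log2(p) is written as p * log2(1 / p); outcomes with zero
    # probability are skipped, following the convention 0 * log 0 = 0.
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def binary_entropy(p1):
    """Entropy of a binary variable with P(x1) = p1 and P(x2) = 1 - p1."""
    return shannon_entropy([p1, 1.0 - p1])

# Sample values (chosen for illustration) moving from a fair coin to certainty.
for p1 in (0.5, 0.7, 0.9, 0.99, 1.0):
    print(f"P(x1) = {p1:<4}  H(X) = {binary_entropy(p1):.3f} bits")
```

Under these assumptions, the fair-coin case yields exactly 1 bit, and the value falls toward 0 as P(x1) approaches 1.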

These formulas elucidate how entropy serves as a quantification of both information and uncertainty within information theory. However, it is observed that the augmentation in a system's complexity typically corresponds to an escalation in the quantity of information essential for describing or representing that system [14]. This correlation arises from the fact that complex systems often harbor a multitude of diverse and interconnected components, engendering heightened uncertainty and variability in their behaviors. As a system becomes more intricate, it tends to manifest a broader array of potential states or configurations. Each additional component or interaction within the system contributes to the expansion of its state space, thereby amplifying the overall uncertainty regarding the system's behavior. Consequently, a greater amount of information becomes requisite to encapsulate the subtleties and intricacies of the system's dynamics. Thus, achieving precision in describing or predicting the system's state necessitates an augmented reservoir of information.
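To make the growth of the state space concrete, the following minimal sketch assumes, purely for illustration, that every component has the same fixed number of distinguishable states and that all joint configurations are possible and equally likely. The function names and the default of two states per component are assumptions of this sketch, not part of the cited work.

```python
import math

def state_space_size(n_components, states_per_component=2):
    """Number of joint configurations of n independent components."""
    return states_per_component ** n_components

def max_entropy_bits(n_components, states_per_component=2):
    """Upper bound on entropy: log2 of the number of joint configurations."""
    return n_components * math.log2(states_per_component)

# Each added binary component doubles the number of configurations
# and adds one bit to the maximum entropy.
for n in (1, 2, 4, 10, 100):
    print(f"{n:>3} components: {state_space_size(n)} states, "
          f"up to {max_entropy_bits(n):.0f} bits")
```

Under these simplifying assumptions, the number of configurations grows exponentially with the number of components, while the bound on the information needed to single out one configuration grows linearly.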

This situation underscores the notion that describing a simple system, comprising only a few components with limited interactions, necessitates a specific amount of information. However, with the addition of more components or the intensification of interactions between them, the system's behavior grows increasingly unpredictable, demanding a greater amount of information to accurately represent its state. Can we even speculate about the magnitude of information required to describe the real state of consciousness [13]?

Current studies of neural systems highlight critical constraints of this approach: despite efforts, only a fraction of a functional neural system can be adequately described, yet it already demands a substantial volume of information. Does this suggest that advancements in the naturalistic depiction of consciousness are constrained solely by the computational capabilities of existing machines, which can be subsequently overcome [21]? Alternatively, could it be that the very principle of considering the potential states of each agent, akin to the outcomes of a coin toss, engenders such complexity within the system that rendering it computable becomes untenable? To assess the viability of employing information to measure the consciousness of complex systems such as living beings, it is pertinent to examine more deeply how consciousness and information are conceptualized within Integrated Information Theory (IIT), where these concepts serve as cornerstones.

2.2. Information and Consciousness in IIT

Integrated Information Theory (IIT) of consciousness offers a unique perspective on the understanding of information, diverging from classical information theory. Conceived by Giulio Tononi [5], IIT posits that consciousness emerges from the integration of information within a system, rather than from the complexity of its individual components. Consequently, IIT regards information as fundamental to the essence of consciousness. The following are key facets of Integrated Information Theory [1,15]:

According to IIT, consciousness is linked to a maximally integrated conceptual structure generated by a system that is called a “maximal substrate”. This structure embodies the intricate network of interconnected information processing within the system.

Φ (Phi) serves as a mathematical metric introduced by IIT to quantify the degree of integrated information within a system. It denotes the extent to which a system's components are causally interconnected in a cohesive manner, by encompassing distinctions (specific cause-effect states) and relations (overlaps among distinctions) that give rise to conscious experience. Higher Φ values correspond to heightened levels of consciousness.

IIT proposes that the subjective aspects of consciousness, known as qualia, are delineated in a multidimensional space defined by the configuration of causal interactions within the system which is called “qualia space”. Diverse arrangements of integrated information correspond to distinct qualities of conscious experience.

IIT directly equates the maximal substrate with consciousness, irrespective of its physical implementation. In accordance with this theory, any system that generates a maximum of integrated information has prior causative power over its subsystems, whose maxima of integrated information are lower.

In summary, Integrated Information Theory (IIT) posits that consciousness emerges from the intricate interactions of information within a system. Unlike classical information theory, such as Shannon information that deals with uncertainty reduction from an observer's perspective, IIT regards information as the fundamental cause-effect structure underlying subjective experience, which is concerned with the system's own perspective:

The system’s information is considered intrinsic, meaning it is evaluated based on the system’s own state and its potential to influence and be influenced by its own dynamics. This intrinsic perspective is crucial because it ensures that the measures of information and integration are relative to the system itself and not to an external observer.

Information in IIT must be specific to the system's current state, capturing the precise cause-effect relationships that characterize this state. This specificity ensures that the information is not a generalized measure but one that is highly contextual to the state of the system at a given time.

Integrated information, in this context, refers to the extent to which information within a system is interconnected and irreducible—meaning it cannot be decomposed into independent components without losing its essential properties. Systems with elevated levels of integrated information exhibit complex causal structures, characterized by a diverse array of potential states and transitions between these states. A conscious system, therefore, is one that can integrate information in a way that the whole is more than the sum of its parts, reflecting a unified and coherent experience. But then, how should we understand information?

3. Temporality of Consciousness

3.1. Consciousness, Behaviour of the System, and the World

Considering information as something that introduces orderliness and causes a particular integrated state instead of another requires understanding entropy as an opposing process. Integrated Information Theory (IIT) posits that the Maximal substrate serves as an analogue to the current state of subjective experience within the system [1]. Since the Φ value of the Maximum cannot be simply derived from the information structures of its elements, the entropy of a conscious state also cannot be determined based on the entropy of the components of the neural system.

When assessing the presence or absence of conscious experience, we simplify the scenario to a binary state resembling a coin toss, that is, a variable with two possible outcomes. In this scenario, the entropy equals 1 bit if both outcomes are equally likely and no additional factors are considered. As we delve deeper into delineating conscious states, their quantity expands significantly. Nevertheless, they remain within a countable set of possible conscious states accessible to the specific system under scrutiny. Consequently, while the information content of the neural system's elements measured from a third-person point of view may be extensive [14], the information representing the subjective state should be much more succinct.

The significant reduction of information amount becomes apparent when we revisit the pivotal role of the evolutionary origins of consciousness [22,23,24]. Viewing consciousness as a crucial element in the natural progression of species elucidates the free-energy principle, which necessitates the reduction of action costs and conservation of resources [25,26]. The integration of all incoming data in one complex state exemplifies this principle perfectly, as it obviates the need for processing the calculations required to evaluate the profound complexity of incoming data. Instead, a unified subjective state of being enables an instantaneous reaction, thereby shortening the response time to stimuli and enhancing the survival chances of the animate being. This, in turn, contributes to its evolutionary success.

For instance, an earlier study on crayfish reacting to an approaching shadow illustrates how their behavior alternates between freezing and escaping responses [27]. From a third-person perspective, we could discern a multitude of variants that delineate the states of the crayfish neural system at each moment and in various environments. However, it appears that their subjective reality is confined to just two possible reactions, akin to the outcomes of a coin toss, while still retaining some variability between them. A recent study in Integrated Information Theory (IIT) elaborates on various types of control systems in frogs, focusing on their strategies for chasing prey and escaping danger [2]. This study demonstrates that evolutionarily meaningful actions can be implemented in diverse control system designs.

The same observation can be extended to all conscious beings, including humans. Despite the capacity to discriminate between subjective states of being to an almost infinite extent, they can all be categorized within fundamental modes of reacting to the environment. Any environment can either align with the organism's objectives or oppose them. Furthermore, it can increase or decrease the integration of the system [24]. According to Integrated Information Theory (IIT), the former scenario involves expanding the space of possibilities, while the latter reduces the possibilities of transitioning to certain states, thereby affecting the energy potential of the entire system.

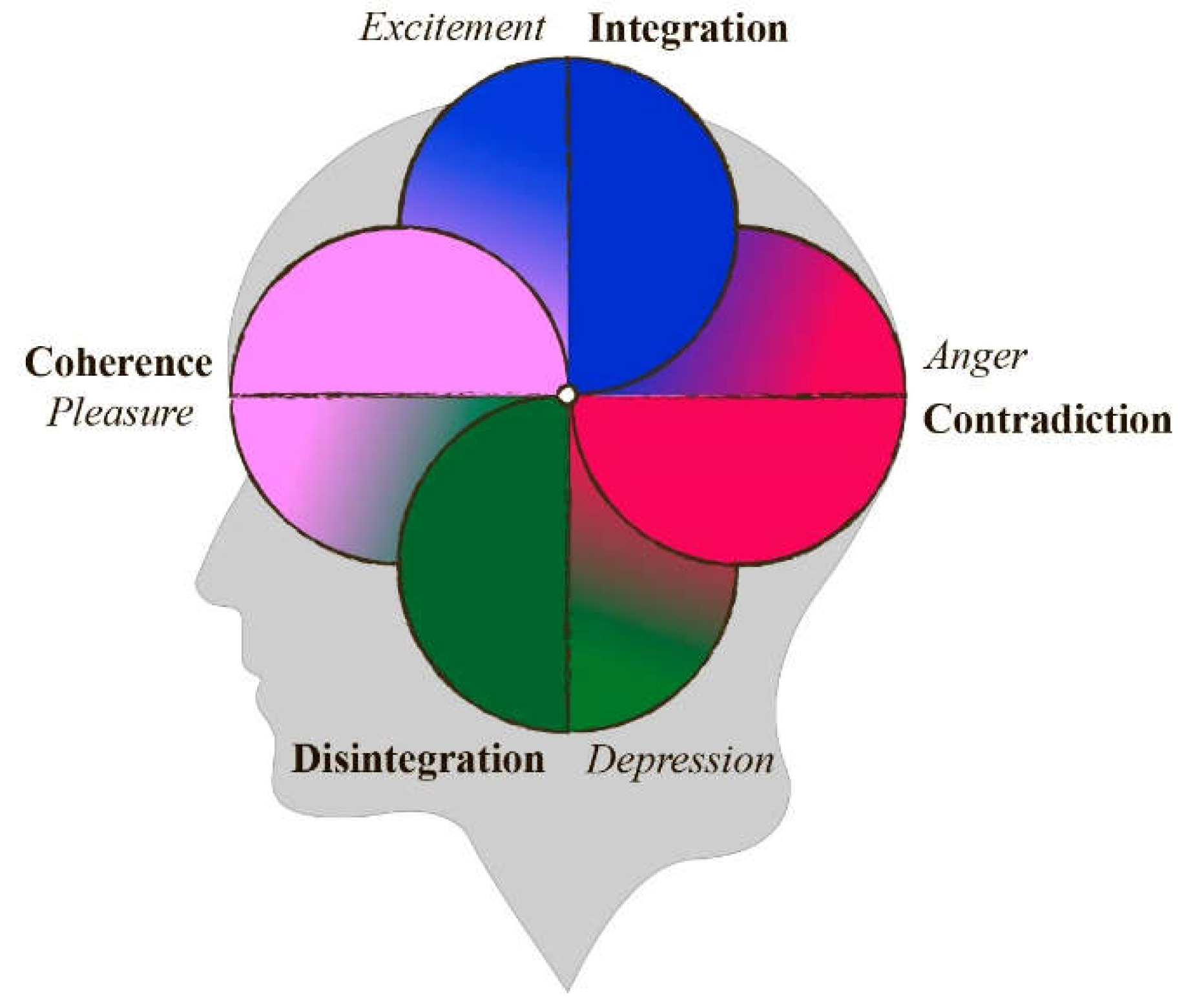

Figure 1 depicts a potential conceptualization of these states in a two-dimensional space.

From an evolutionary standpoint, the role of consciousness necessitates justification based on the more efficient utilization of resources to produce behavior suitable for the current environment [22,23,24]. This implies that, when measured from the third-person point of view, the integration of information, represented by the Maximal substrate, should result in a decrease in the overall amount of information compared to that of the subsystems. A failure to achieve this reduction would contradict the principle of energy conservation and is therefore unlikely to prevail in the real world. The challenge then lies in actualizing this principle in physical material.

3.2. Temporality of Consciousness and Neural System

In exploring avenues for generating integrated information, previous versions of IIT emphasized using recurrent chains at the level of basic elements [3]. For instance, even a chain of recurrent diodes was hypothesized to yield integrated information, potentially exhibiting subjective states. However, attempting to measure phenomena beyond mathematical models encounters the insurmountable complexity of the system [14]. This not only renders the suggested mathematical apparatus overly intricate but also conflicts with the imperative of natural selection for systems to be energy-efficient [28]. So, what solution has evolution devised?

It is crucial to recognize that survival is inherently temporal, unfolding over time within a dynamic and ever-changing environment. Revisiting the real example of the crayfish [27] or the thought experiment with the frogs [2], we observe that the organism's primary concern lies in detecting changes in the perceived data, which serve as signals of potential benefit or danger [29]. Consequently, the most critical information for the organism resides in the disparity between its previous state and the new one induced by external alterations. In essence, the differential information between successive states of the system becomes paramount, as it enables organisms to adapt and respond effectively to evolving environmental circumstances. This emphasis on temporal dynamics underscores the significance of change and adaptation in the context of survival within natural ecosystems.

To perceive a change in the environment, a system must have access to both the state preceding the situation and the one occurring afterward. However, if these two states hold the same ontological status, akin to an objective description of available data, a higher-level entity is required to access, compare, and make meaningful behavioral judgments based on them [30]. This necessitates a substantial complication of the system, as it requires measurement operations, standardized situation patterns, and a vast amount of stored information, akin to existing computer programs. Additionally, certain concepts of consciousness, such as Higher-order thought theories, propose that this functionality is implemented within the neural systems of living beings [31,32]. While this may hold true for theoretical decision-making and other complex mental operations, it is unlikely that basic reactions to the environment, such as the fight or flight response, adhere to this model due to their immediate and instinctual nature.

A simpler approach to comprehend states before and after interaction is for the system to possess its own dynamics, which serve as a default mode, while incoming stimuli alter it and evoke corresponding behaviors [33,34]. This approach has garnered substantial support in neuroimaging studies, with the functioning of the Default Mode Network and other related neural domains in humans being among the most well-known examples [35,36]. It was theoretically summarized by G. Northoff in the concept of the “spontaneous brain” and Spatio-temporal Theory of Consciousness [7,8,37]. Essentially, this theory posits that the primary function of any neural system is to align with the external world. This alignment is facilitated by the intrinsic dynamics inherent in all neural systems, which serve as a temporal framework for the dynamics induced by external stimulation. Unique states of subjective experience arise from specific patterns of interaction between intrinsic and stimulated activity. Furthermore, the complexity of the system correlates with its capacity to acquire new knowledge: while simple animals may exhibit predetermined reactions, more complex organisms require a process of education and maturation. This phenomenon is evident in humans, where life experiences significantly influence future behavior [38,39,40].

Therefore, the system’s interaction with the environment can be exemplified by the notable difference between the experiences of being a bat and a human. Additionally, the influence of life history on the formation of the human neural system and corresponding reactions introduces significant diversity in the behavior of our species. In the formation of an organism’s space of possibilities, the former biological basis serves as a scaffold for the latter particular content acquired through learning [41].

4. Aligning with the World

4.1. Predicting Regularities of the World

A significant challenge arises in understanding the informational dynamics of an integrated system with intrinsic activity during its interaction with the world. Attempts to compare its states before and after interaction can offer insights into the presence of consciousness [33,42,43]. These and other studies have demonstrated an inverse correlation between the predictability of the neural system and states of consciousness: lower entropy (higher predictability) corresponds to unconscious states, while higher entropy signifies awareness. This correlation suggests that consciousness plays a role in guiding behavior by aligning with the world's demands, leading to corresponding reactions from the system, which stem from changes in intrinsic activity in response to stimuli. Consequently, IIT can assert that it is the alteration of the Maximal substrate or subjective experience that influences the dynamics of the neural system [1].

The described framework represents the range of possibilities for the system's functioning, and measuring them allows for the evaluation of the system's entropy. However, considering this process as comparing two equivalent states through a higher-order mechanism [31,32] is not congruent with how neural systems and consciousness operate from the intrinsic perspective, as it is not energy-efficient and implies an endless regress of higher-order mechanisms. As previously discussed, the evolutionary purpose of consciousness lies in aligning with the regularities of the world while conserving resources and time [28,44]. That is why assessing the predictability of the world stands among the most vital survival tasks for any living being. Consequently, there must exist an energy-efficient principle that governs this interaction and enables the system to monitor changes in entropy of the world. Two critical points emerge from this perspective:

Firstly, the system must maintain a certain level of orderliness to execute its intrinsic activity effectively. This implies that living beings must avoid reaching a state of maximum entropy, which would result in death [6];

Secondly, alignment with the world necessitates the readiness to perceive unexpected events and respond accordingly. An older study drew attention to the fact that the perception of the world is not a passive reaction to stimuli but an active process of extracting affordances from the surrounding reality [45].

Thus, no real living being can reduce the entropy of its Maximal substrate below a certain threshold without losing connection with the world and facing imminent demise [46]. Since it is possible to predict and adapt only to some regularities of the environment, performing intrinsic dynamics with certain rhythms allows the system to align with the world [47,48].

The spontaneous activity of the neural system in an ideal resting state, devoid of any external stimulation, should demonstrate entropy levels close to the lower threshold of awareness. This intrinsic activity is observed in the operation of default mode networks, which adhere to standard patterns characteristic of a particular organism [36,49]. These resting states support various cognitive functions [35] and memory consolidation [50], which are crucial for maintaining a coherent and adaptable conscious experience. The higher predictability of these intrinsic dynamics contributes to the indeterminacy of the subjective state or Maximal substrate, thereby increasing the system's overall alertness. Such intrinsic activity serves as a baseline, ensuring that the neural system remains in a state of readiness, capable of quickly transitioning to more active states when necessary.

However, when the system encounters external or internal stimuli, it must select a specific possibility of interaction, leading to a decrease in the entropy of consciousness due to the initiation of interaction with the world. On the neural level, this process entails the activation of corresponding structures responsible for further perception and behavioral responses to the changing environment, thereby increasing the entropy of the neural system. As the interaction is prolonged, it becomes increasingly challenging to maintain patterns of intrinsic activity, resulting in a decline in awareness of the world and a reduction in working memory and self-control abilities [51,52].

4.2. Measuring Entropy of the Subjective Reality

According to the axioms of Integrated Information Theory (IIT), each conscious state is unique, comprising its own composition and containing specific information [1]. Thus, to measure the entropy of the Maximal substrate, which represents a state of experience, we must assess the complexity of this subjective state. In contemporary neuroscience, one of the most suitable concepts for representing the capabilities of an individual’s conscious behavior is the notion of working memory [53,54,55,56]. It elucidates the complexity of a discrete experience for a given organism. As subjective reality reflects the unified functioning of the neural system, it cannot descend below a singular overwhelming state. Consequently, during each moment of awareness, a simple organism’s attention is monopolized by a single object. This singular focus appears to suffice for generating energy-efficient fight or flight responses, as observed in the case of a crayfish reacting to an approaching shadow [27].

The quantification of working memory in humans remains a subject of ongoing debate, heavily contingent on the methods of measurement employed. Nonetheless, there exists a finite number of possible items that constrain the composition of the Integrated structure. The hypothesis of flexible working memory contends that despite the complexity of the system, interference between items imposes strict limitations on the number of items that can be processed simultaneously [57]. This is consistent with the hypothesis of brain hominization, which posits that an increase in brain volume resulted in more complex neural connectivity, thereby enhancing the ability to discriminate states of experience [58].

Drawing from evolutionary studies, it is assumed that human working memory is most likely limited to four units [59]. This hypothesis finds support in human crafting skills, which necessitate control over materials, tools, aims, and the process of implementation. Furthermore, the advancement of culture and social interactions demands no fewer cognitive units [24]. While larger quantities of processed units may be demonstrated in specific tasks, they appear less viable in everyday life. Interestingly, many studies use examples with four elements to illustrate principles of their conceptualization, as demonstrated in the “frog” thought experiment [2]. This may be a natural manifestation of the limits of human working memory. Thus, it is plausible to suggest that human consciousness can sustain the composition of at least four elements in a discrete state of consciousness.

The presence of multiple items increases the entropy level of the system, preventing it from becoming stuck in a single reactive state, as observed in the crayfish or frog examples. This heightened entropy confers an evolutionary advantage, enabling greater flexibility in responding to diverse situations. Consequently, the system can choose from several possible behaviors based on previous experiences, acquired knowledge, goals, and other factors, forming the foundation of what we commonly refer to as free will. However, the neural system's need to support the functioning of such a complex structure results in a significant energy expenditure [44]. The most resource-intensive aspect is the sustained processing of a particular task, while the Maximal substrate tends to revert to the highest possible threshold of the rest state entropy.

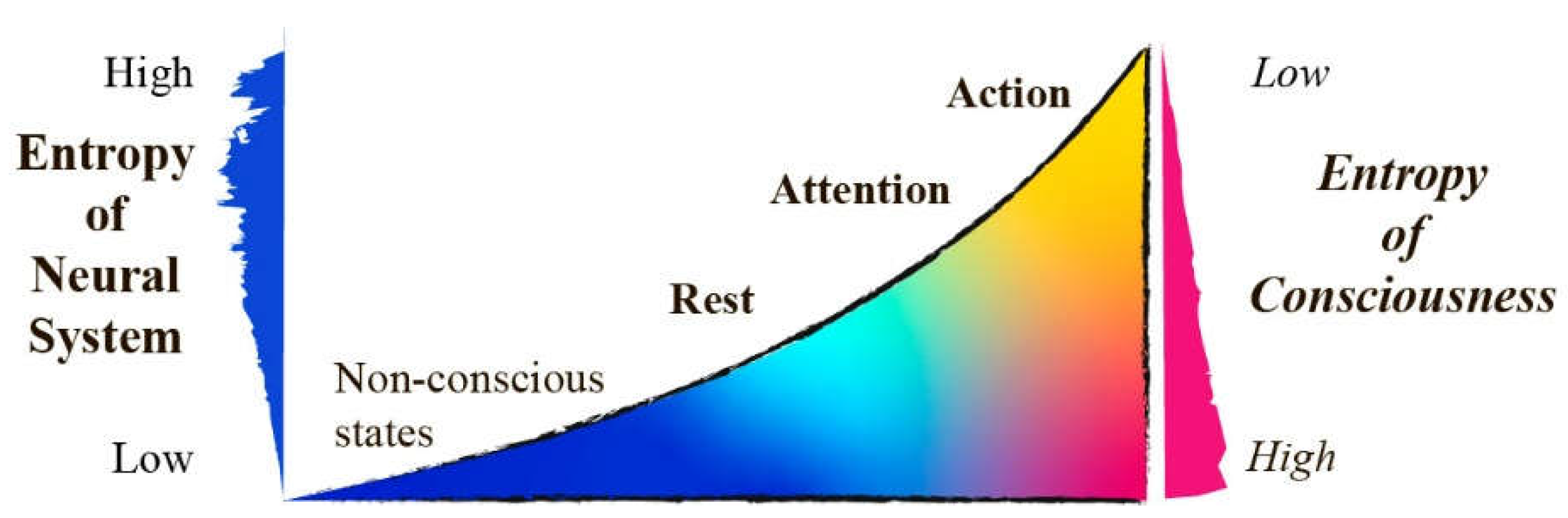

Consider the entropy formula given above, applied to a variable with four items, where higher entropy implies greater unpredictability or complexity in the system. The following examples illustrate how entropy can vary depending on the distribution of probabilities, reflecting different levels of predictability and control within the subjective reality.

The entropy of a variable with probabilities 0.25 for each of the 4 items is 2 bits; it may represent a rest state where attention is not captured by any specific object, indicating a higher level of uncertainty or randomness;

The entropy of a variable with probabilities 0.5, 0.2, 0.2, 0.1 is approximately 1.76 bits; it may signify an average interest in some object, suggesting a moderate level of predictability or control;

The entropy of a variable with probabilities 0.8, 0.1, 0.05, 0.05 is approximately 1.02 bits; it could indicate a thorough examination of an object, implying a higher degree of predictability or control within the subjective reality.

Figure 2 represents it in a graphical form.
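The three values quoted above can be reproduced with a few lines of code. This is a minimal sketch; the helper name `shannon_entropy` and the dictionary labels are illustrative choices, and only the probability distributions come from the text.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Zero-probability items are skipped (convention: 0 * log 0 = 0).
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

# Probability distributions over four working-memory items, as in the text.
examples = {
    "rest state": [0.25, 0.25, 0.25, 0.25],
    "average interest": [0.5, 0.2, 0.2, 0.1],
    "thorough examination": [0.8, 0.1, 0.05, 0.05],
}

entropies = {label: shannon_entropy(p) for label, p in examples.items()}
for label, h in entropies.items():
    print(f"{label}: {h:.2f} bits")
# Prints 2.00, 1.76 and 1.02 bits, respectively.
```

The uniform distribution gives the maximum of log2(4) = 2 bits, and the entropy drops as probability mass concentrates on a single item.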

These examples align with the notion that higher predictability and control within the subjective reality correspond to higher entropy in the neural system, which supports the functioning of consciousness. However, excessive demands on the neural system, such as prolonged focus of attention or overload of working memory, can lead to fatigue or the need for rest, reflecting the limitations of cognitive resources [52,60].

5. Reassessing Information

According to the provided analysis, evaluating the entropy of a real system involves considering the dynamics of both the external environment and the internal structure of the system itself. As demonstrated earlier, the integrated state of a neural system operates according to its own principles and is influenced by external processes, which can modify its ongoing dynamics. Thus, the intrinsic activity of the system acts as a temporal framework for incoming stimulation, determining what becomes part of the subjective experience [7,10,37].

As discussed earlier, consciousness cannot process information in the same way as a third-person observer would, by comparing distinct states of being. Such a process would require an impractically large amount of processed information [14] and would be energy-inefficient [28,44]. Instead, conscious decision-making occurs based on how stimuli alter the intrinsic activity of the neural system, allowing for more direct responses to environmental changes [24,48]. The intrinsic dynamics of a conscious system exhibit specific patterns that reflect the informational content of the world, integrating it into the Maximal substrate.

Therefore, to comprehend how information is processed within consciousness, one must acknowledge the temporal nature of entropy in the real world. Given the presence of aging, entropy tends to increase over time in the absence of external intervention, driven by stochastic changes that ultimately lead to the dissipation of all systems [6]. Thus, entropy is a scale that is constantly present in the environment. However, order (negentropy) is also present in the environment in the form of regular changes, as constant circumstances hold no informational value, while irregular changes are unpredictable and impossible to adapt to [47]. Thus, the very presence of life and evolution is an undeniable argument in favor of the IIT claim that entities with higher integrated information value (Φ) may have stronger causative powers over their elements [2].
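The contrast between constant, regular, and irregular changes can be illustrated with a minimal sketch. It assumes, purely for illustration, that environmental changes are coded as a binary sequence and that predictability is estimated by the first-order conditional entropy H(X_t | X_{t-1}). The function names and the three example sequences are hypothetical and are not part of the original analysis.

```python
import math
import random
from collections import Counter


def shannon_entropy(symbols):
    """Entropy (in bits) of the empirical distribution of the given symbols."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def conditional_entropy(symbols):
    """Estimate H(X_t | X_{t-1}) in bits from consecutive pairs.

    Uses the identity H(X_t | X_{t-1}) = H(X_{t-1}, X_t) - H(X_{t-1}).
    """
    pairs = list(zip(symbols[:-1], symbols[1:]))
    return shannon_entropy(pairs) - shannon_entropy(symbols[:-1])


# Illustrative (assumed) environments coded as binary sequences.
constant = [0] * 1000                                   # nothing ever changes
regular = [0, 1] * 500                                  # strictly periodic change
rng = random.Random(0)                                  # fixed seed for repeatability
irregular = [rng.randint(0, 1) for _ in range(1000)]    # unpredictable change

for name, seq in [("constant", constant), ("regular", regular), ("irregular", irregular)]:
    print(name, round(shannon_entropy(seq), 3), round(conditional_entropy(seq), 3))
```

Under these assumptions, the constant sequence carries no variety at all, the regular sequence carries variety that is fully predictable from its past, and the irregular sequence carries variety that remains unpredictable. Only the second case offers an order to which a system could adapt.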

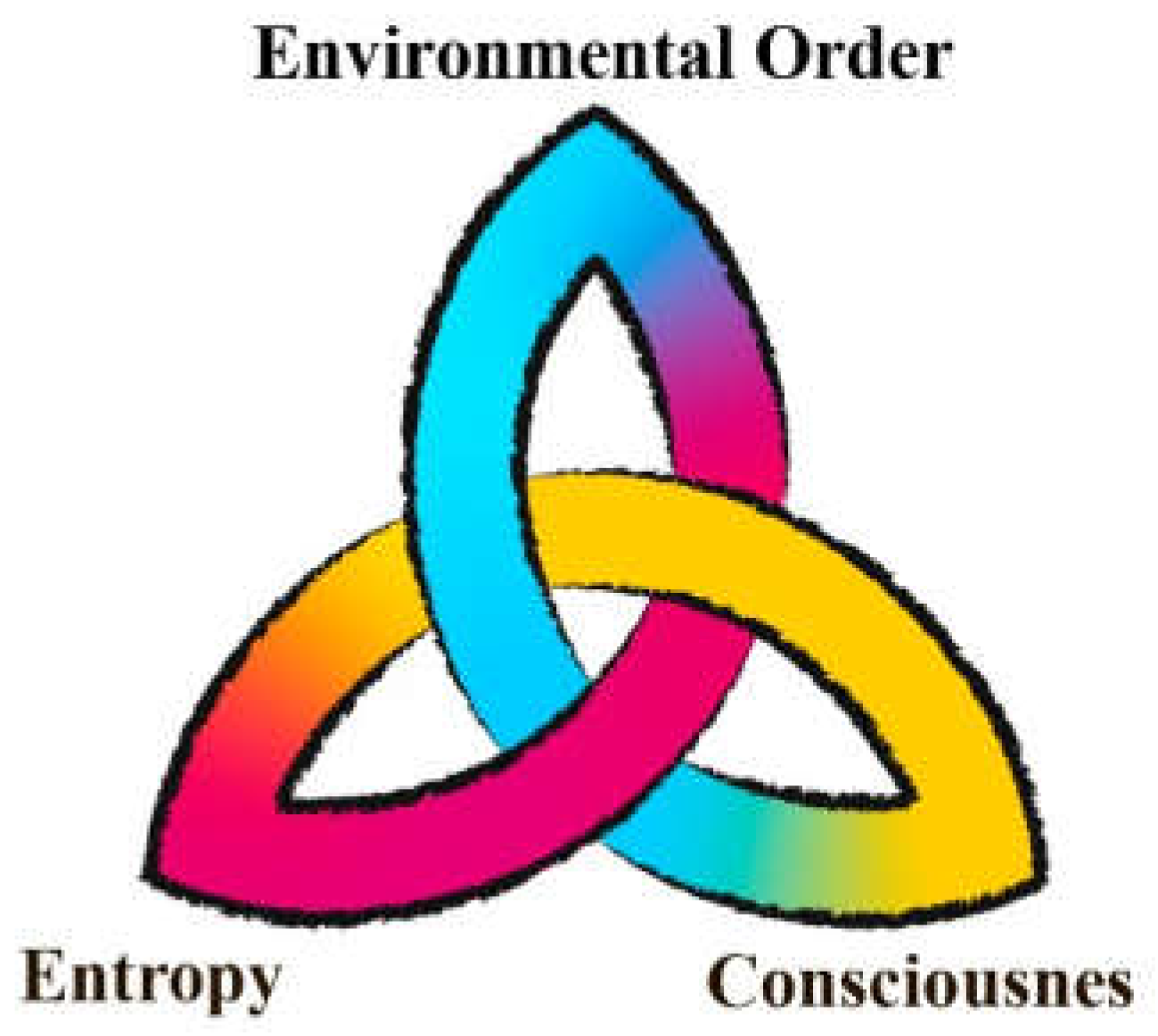

From this perspective, the integrated information of a system can be considered a dynamic entity that may be reinforced and supported by the environmental order, while also being subject to the entropy of its own lower-order elements and those of the surrounding environment. These tendencies mutually reinforce each other, and none of them can be excluded from the process of constituting the maximal substrate of consciousness. The environmental order establishes a framework for the origin and evolution of life as a self-sustaining system. Stochastic changes in the basic substrate within the environment and the organism’s body ensure its alertness and readiness to adjust organismal states to changes, thereby preventing fixation in any single state. The causative powers of the maximal substrate provide sustainability and enable purposeful actions that can induce changes in the environmental order.

Figure 3 presents these relations in graphic form.

The presented analysis underscores the paramount importance of the temporal dimension in the existence of consciousness as a subject of evolutionary development. The presence of intrinsic dynamics within living systems, coupled with the imperative for consciousness to synchronize with the regularities of the world, suggests that living organisms function more like dynamic entities in a "small world." Within this framework, time, space, and entropy are intrinsic components that maintain alertness and the potential for action. Integrated information and entropy represent two opposing tendencies that constitute the sustainable order of consciousness. Therefore, considering entropy as a temporal value requires considering integrated information in the same temporal context: it can be described as a vector scale of a particular system that enables it to maintain orderliness in the face of constant tendencies toward dissipation.

This framework posits that time becomes the most important foundation and axiom of any experience. Consequently, it may significantly simplify the mathematical apparatus required for calculating the integrated information value of the system, thereby contributing to its practical implementation. Introducing entropy as a vector scale into the mathematical formalism will transform it from a discrete system that requires an external observer to perform higher-order computations into a much simpler intrinsic system.

6. Philosophical Inference and Challenges

The presented framework elaborates on one of the most important claims of Integrated Information Theory (IIT): that only entities with causative powers truly exist [1]. Although this claim is not new in either Western or Eastern philosophies, it remains challenging to comprehend within a naturalistic worldview [2]. The difficulty lies in the following controversy. It is evident that consciousness can significantly influence one’s actions in ways that cannot easily be explained as the result of rational and energy-efficient behavior of evolutionary units. In other words, the will of consciousness can overcome the causations of its elements, and even simple self-control provides ample evidence for this. This supports the idea that integrated information, as the substrate of conscious states, possesses stronger causative powers.

However, when analyzing forms of existence, one must assume that all real entities, in one form or another, are merely forms of integrated information. This assumption leads to the conclusion that any matter eventually disappears, which contradicts our daily experience that the causative powers of matter cannot be overcome by an instant act of will. For example, levitation in the atmosphere without proper technical equipment is impossible.

A possible answer lies in the careful consideration of the “instant will” clause mentioned above. Since entropy is a temporal value, integrated information must also be temporal, meaning any causation can only occur within the time required to overcome entropy in the affected units. This aligns with observations that slower rhythms are more powerful than faster rhythms [7,10] and that longer time periods may have stronger causative powers [2]. The amount of energy needed to introduce order should depend on both the quantity of involved elementary units and their rhythms, which determine the energy potential of each unit. In this context, “energy” can be equated to causative power, or “changes.”

It is worth noting that this principle was already suggested by W. Heisenberg, who proposed that the quest for elementary particles of matter could be infinite, limited only by the powers we are able to utilize [61]. He posited that only forms of energy or causation truly exist. This allows for the measurement of the informational value of an action from an intrinsic perspective by evaluating the change in the system's entropy. Thus, changes in entropy demonstrate causative powers, and all causative powers can be identified through changes in entropy.
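One way to make this proposal concrete is sketched below. It assumes that the states of the system can be described by a probability distribution $p_i$ and that Shannon entropy is an acceptable stand-in for the entropy discussed here. Both are assumptions of this sketch, and the symbols $S_{\text{before}}$, $S_{\text{after}}$, and $\Delta H_a$ are introduced only for illustration:

```latex
H(S) = -\sum_{i} p_i \log_2 p_i ,
\qquad
\Delta H_a = H(S_{\text{after}}) - H(S_{\text{before}})

% Worked example under the stated assumptions:
% before the action, four equiprobable states:  H = -4 \cdot \tfrac{1}{4}\log_2\tfrac{1}{4} = 2 \text{ bits}
% after the action, two equiprobable states:    H = -2 \cdot \tfrac{1}{2}\log_2\tfrac{1}{2} = 1 \text{ bit}
% informational value of the action:            \Delta H_a = 1 - 2 = -1 \text{ bit}
```

On this reading, a negative $\Delta H_a$ would indicate that the action introduced order into the system, and a positive value would indicate dissipation.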

However, it is still necessary to understand what lies beyond the subject of changes and whether this leads to subjective idealism or panpsychism [62], which acknowledges the world as one ultimate conscious being or a multitude of conscious beings [15]. Although the counterintuitive nature of this assumption cannot be considered a true scientific argument, such an anthropocentric perspective does not seem viable [16]. The issue is not with the provided arguments but with the anthropocentric understanding of consciousness as something very similar to human experience. Human consciousness results from a long evolutionary development of much simpler structures [24]. These entities also originate from simpler ones, indicating that physical existence and conscious life are merely different stages of order, linked in a line of continuous progress [48]. The differentiating factor is integrated information structures, which are intrinsic, exclusive, and composite, but also inherently temporal since they are linked with entropy.

The proposed framework provides scientific explanations for the role of culture in the evolutionary progress of humans [38,39,40,41]. The temporal dimension of culture extends far beyond that of individual consciousness, which can be considered an individual subsystem [2]. Therefore, when the integrated information of culture surpasses that of individual consciousness, it acquires sufficient causative power to guide natural development and introduce social selection into the evolution of species [63]. This framework can be applied not only to humans but also to any social organisms or artificial structures [64].

7. Limitations and Future Scope

The first concern addresses the suggestion to evaluate the informational value of an action by measuring the change in the system’s entropy. It is quite possible that different processes cause changes with the same amount of differential entropy but result in very different outcomes and even directions of evolution. Given that there may be three opposing vectors of change (the physical world, environmental order, and conscious will), the probability of encountering two different tendencies of entropy change is quite high. Furthermore, the possibility of free will guiding one’s behavior in a chosen direction contributes to the opacity of the state of consciousness. Thus, conscious will can guide an organism either toward progress by following higher rhythms or toward degradation by facilitating the natural process of bodily dissipation. Additionally, the latter may be necessary to abandon an unfruitful route of progression and start anew. What deepens this uncertainty is that the effects of environmental order or matter can be seen as causes of all activities. In exaggerated forms, the former results in religious idealism and fatalism, while the latter results in naive naturalism and illusionism [65,66].
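The first concern can be illustrated with a minimal sketch. It assumes that the state of a system is summarized by a probability distribution over four hypothetical states and that Shannon entropy stands in for the entropy discussed here. The distributions and function names are illustrative assumptions only.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def total_variation(p, q):
    """Total variation distance between two distributions over the same states."""
    return 0.5 * sum(abs(x - y) for x, y in zip(p, q))


# Hypothetical starting point: four equally likely states.
start = [0.25, 0.25, 0.25, 0.25]

# Two hypothetical processes that concentrate the system on different states.
outcome_a = [0.7, 0.1, 0.1, 0.1]
outcome_b = [0.1, 0.1, 0.1, 0.7]

delta_a = entropy_bits(outcome_a) - entropy_bits(start)
delta_b = entropy_bits(outcome_b) - entropy_bits(start)
distance = total_variation(outcome_a, outcome_b)

print("entropy change A:", round(delta_a, 3))
print("entropy change B:", round(delta_b, 3))
print("distance between outcomes:", round(distance, 3))
```

In this sketch the two processes produce the same change in entropy, yet they leave the system in clearly different conditions. A scalar entropy difference alone therefore cannot identify which process occurred or in which direction the system has moved.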

The second difficulty arises in seeking the right temporal unit to measure changes in the entropy and information of the system. Ideally, a universal temporal value would enable objective measurement of change. However, IIT emphasizes that the experience of a living organism is intrinsic, exclusive, and structured [1,2]. Thus, changes in integrated information can be properly comprehended only from within, while a reasonable temporal unit seems to be the qualitative change in the state of being [7,8]. However, even within one system, changes may occur spontaneously, complicating efforts to integrate these changes into a scientific framework. How can we reconcile spontaneous changes within a system to create a consistent scientific approach?

The third weakness of the present study is the absence of a robust mathematical formalism to evaluate changes in integrated information in empirical studies. Without a precise mathematical framework, it is challenging to quantify and analyze how integrated information evolves in real-world scenarios. This limitation means that the current study relies heavily on theoretical speculation rather than concrete, data-driven insights. However, the development of such a formalism should be grounded in empirical research rather than in purely theoretical constructs or modelling. This research aimed to provide a conceptual direction and a preliminary framework. Future research should build upon this foundation, focusing on developing rigorous mathematical tools and conducting empirical studies to validate and refine the proposed concepts. By taking these further steps, the field can move towards a more precise and empirically supported understanding of integrated information in cultural and evolutionary contexts.

8. Conclusions

The use of mathematical tools has proven effective in numerous advancements in medicine and technology. However, directly applying concepts from computational theory to consciousness may present several challenges. Above all, it is essential to recognize that the primary function of consciousness is to enhance adaptability for survival. Consequently, it must offer a distinct advantage and be energy-efficient.

Animate beings integrate the processing of their neural systems into a unified state of experience, representing meaningful events and enabling appropriate responses. This cannot be achieved through information processing akin to that of modern computers, where event data is merely stored and accessed when necessary. Instead, the intrinsic dynamics of the neural system serve as a temporal framework for incoming stimuli, resulting in an exclusive state of subjective experience. The capabilities of the neural system and individual characteristics determine how events are processed.

For reassessing information, several principles can be outlined:

- The intrinsic dynamics of the neural system align with the regularities of the world;

- High predictability of neural dynamics reflects uncertainty in conscious states;

- High conscious control over behavior implies unpredictability of the neural system;

- Entropy of consciousness should correlate with the capacities of working memory;

- Phylogenesis and ontogenesis determine the significance of events for consciousness;

- Maximal substrate of culture exerts causative powers over individual consciousness.

These principles may be articulated differently, but I am confident in the overall argumentative trajectory, which underscores the necessity of rethinking the concept of information, particularly concerning its application to conscious systems. The intrinsic temporality of consciousness and the imperative to align with the real world require understanding entropy as a temporal and qualitative value. As the goal of consciousness is to reduce uncertainty, it cannot exist without constant readiness to respond to surprises. Therefore, entropy must be considered among the foundational aspects of consciousness.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

I am grateful to Dr. Caroline Pires Ting for her invaluable guidance in understanding the concepts of order and progress, and to Dr. Ella Dryaeva for her unwavering support.

Conflicts of Interest

The author declares no conflicts of interest.

Declaration of Generative (AI) and AI-assisted technologies in the Writing Process

During the preparation of this work, the author used ChatGPT in order to improve the text’s readability and grammar. After using this tool, the author reviewed and edited the content as needed and takes full responsibility for the content of the publication.

References

- Albantakis, L., et al., Integrated information theory (IIT) 4.0: Formulating the properties of phenomenal existence in physical terms. PLoS Computational Biology, 2023. 19(10): p. e1011465.

- Grasso, M., et al., Causal reductionism and causal structures. Nature Neuroscience, 2021. 24: p. 1348-1355.

- Tononi, G., et al., Integrated information theory: from consciousness to its physical substrate. Nature Reviews Neuroscience, 2016. 17(7): p. 450-461.

- Koch, C., et al., Neural correlates of consciousness: progress and problems. Nature Reviews Neuroscience, 2016. 17(6): p. 395.

- Tononi, G., Consciousness as integrated information: A provisional manifesto. Biological Bulletin, 2008. 215(3): p. 216-242.

- Prigogine, I. and I. Stengers, Order out of chaos: Man's new dialogue with nature. Radical thinkers. 2017, London: Verso. xxxi, 349 pages.

- Chis-Ciure, R., L. Melloni, and G. Northoff, A measure centrality index for systematic empirical comparison of consciousness theories. Neuroscience and Biobehavioral Reviews, 2024. 161: p. 105670.

- Northoff, G., The Spontaneous Brain: From the Mind–Body to the World–Brain Problem. 2018, Cambridge, MA: The MIT Press.

- Lenharo, M., Consciousness theory slammed as 'pseudoscience' - sparking uproar. Nature, 2023.

- Northoff, G., S. Wainio-Theberge, and K. Evers, Is temporo-spatial dynamics the "common currency" of brain and mind? In Quest of "Spatiotemporal Neuroscience". Physics of Life Reviews, 2020. 33: p. 34-54.

- Northoff, G., S. Wainio-Theberge, and K. Evers, Spatiotemporal neuroscience - what is it and why we need it. Physics of Life Reviews, 2020. 33: p. 78-87.

- Kolvoort, I.R., et al., Temporal integration as "common currency" of brain and self-scale-free activity in resting-state EEG correlates with temporal delay effects on self-relatedness. Human Brain Mapping, 2020. 41(15): p. 4355-4374.

- Deweerdt, S., Deep connections. Nature, 2019. 571(7766): p. S6-S8.

- Shapson-Coe, A., et al., A petavoxel fragment of human cerebral cortex reconstructed at nanoscale resolution. Science, 2024. 384(6696): p. eadk4858.

- Cea, I., N. Negro, and C.M. Signorelli, The Fundamental Tension in Integrated Information Theory 4.0's Realist Idealism. Entropy, 2023. 25(10).

- Sanchez-Canizares, J., Integrated Information is not Causation: Why Integrated Information Theory's Causal Structures do not Beat Causal Reductionism. Philosophia, 2023. 51(5): p. 2439-2455.

- Marshall, W., et al., System Integrated Information. Entropy, 2023. 25(2).

- Albantakis, L., R. Prentner, and I. Durham, Computing the Integrated Information of a Quantum Mechanism. Entropy, 2023. 25(3).

- Shannon, C.E., A mathematical theory of communication. The Bell System Technical Journal, 1948. 27(3).

- Cover, T.M. and J.A. Thomas, Elements of Information Theory, 2nd Edition. 2006, Hoboken: John Wiley & Sons, Inc.

- Voytek, B., The data science future of neuroscience theory. Nature Methods, 2022. 19(11): p. 1349-1350.

- Krakauer, J.W., et al., Neuroscience Needs Behavior: Correcting a Reductionist Bias. Neuron, 2017. 93(3): p. 480-490.

- Feinberg, T.E. and J. Mallatt, Subjectivity 'demystified': neurobiology, evolution, and the explanatory gap. Frontiers in Psychology, 2019. 10: p. 1686.

- Kanaev, I.A., Evolutionary origin and the development of consciousness. Neuroscience and Biobehavioral Reviews, 2022. 133: p. 104511.

- Friston, K.J., W. Wiese, and J.A. Hobson, Sentience and the Origins of Consciousness: From Cartesian Duality to Markovian Monism. Entropy, 2020. 22(5): p. 516.

- Friston, K.J., The free-energy principle: a unified brain theory? Nature Reviews Neuroscience, 2010. 11(2): p. 127-138.

- Liden, W.H., M.L. Phillips, and J. Herberholz, Neural control of behavioural choice in juvenile crayfish. Proceedings of the Royal Society B-Biological Sciences, 2010. 277(1699): p. 3493-3500.

- Buxton, R.B., The thermodynamics of thinking: connections between neural activity, energy metabolism and blood flow. Philosophical Transactions of the Royal Society B-Biological Sciences, 2021. 376(1815): p. 20190624.

- LeDoux, J.E., As soon as there was life, there was danger: the deep history of survival behaviours and the shallower history of consciousness. Philosophical Transactions of the Royal Society B-Biological Sciences, 2022. 377(1844): p. 20210292.

- Fleming, S.M., Awareness as inference in a higher-order state space. Neuroscience of Consciousness, 2020. 6(1).

- Rolls, E.T., Neural computations underlying phenomenal consciousness: A higher order syntactic thought theory. Frontiers in Psychology, 2020. 11: p. 655.

- Brown, R., H. Lau, and J.E. LeDoux, Understanding the higher-order approach to consciousness. Trends in Cognitive Sciences, 2019. 23(9): p. 754-768.

- Huang, Z., et al., Temporal circuit of macroscale dynamic brain activity supports human consciousness. Science Advances, 2020. 6(11): p. eaaz0087.

- Northoff, G. and Z.R. Huang, How do the brain's time and space mediate consciousness and its different dimensions? Temporo-spatial theory of consciousness (TTC). Neuroscience and Biobehavioral Reviews, 2017. 80: p. 630-645.

- Yeshurun, Y., M. Nguyen, and U. Hasson, The default mode network: where the idiosyncratic self meets the shared social world. Nature Reviews Neuroscience, 2021. 22(3): p. 181-192.

- Raichle, M.E., The Brain's Default Mode Network, in Annual Review of Neuroscience, Vol 38, S.E. Hyman, Editor. 2015. p. 433-447.

- Northoff, G. and F. Zilio, Temporo-spatial Theory of Consciousness (TTC)-Bridging the gap of neuronal activity and phenomenal states. Behavioural Brain Research, 2022. 424.

- Snell-Rood, E. and C. Snell-Rood, The developmental support hypothesis: Adaptive plasticity in neural development in response to cues of social support. Philosophical Transactions of the Royal Society B-Biological Sciences, 2020. 375(1803): p. 20190491.

- Richerson, P.J. and R. Boyd, The human life history is adapted to exploit the adaptive advantages of culture. Philosophical Transactions of the Royal Society B-Biological Sciences, 2020. 375(1803): p. 20190498.

- Gopnik, A., Life history, love and learning. Nature Human Behaviour, 2019. 3(10): p. 1041-1042.

- Wilson, S.P. and T.J. Prescott, Scaffolding layered control architectures through constraint closure: insights into brain evolution and development. Philosophical Transactions of the Royal Society B-Biological Sciences, 2022. 377(1844): p. 20200519.

- Alu, F., et al., Approximate Entropy of Brain Network in the Study of Hemispheric Differences. Entropy, 2020. 22(11): p. 1220.

- Burioka, N., et al., Approximate entropy in the electroencephalogram during wake and sleep. Clinical EEG and Neuroscience, 2005. 36(1): p. 21-24.

- Palmer, T., Human creativity and consciousness: Unintended consequences of the brain's extraordinary energy efficiency? Entropy, 2020. 22(3): p. 281.

- Gibson, J.J., The Ecological Approach to Visual Perception. 1979, Boston: Houghton Mifflin. xiv, 332 p.

- Northoff, G. and S. Tumati, "Average is good, extremes are bad" - Non-linear inverted U-shaped relationship between neural mechanisms and functionality of mental features. Neuroscience and Biobehavioral Reviews, 2019. 104: p. 11-25.

- Anokhin, P.K., Selected works. Philosophical aspects of the theory of functional systems. 1978, Moscow: Science.

- Kanaev, I.A., Entropy and Cross-Level Orderliness in Light of the Interconnection between the Neural System and Consciousness. Entropy, 2023. 25(3).

- Smallwood, J., et al., The default mode network in cognition: a topographical perspective. Nature Reviews Neuroscience, 2021. 22(8): p. 503-513.

- Kaefer, K., et al., Replay, the default mode network and the cascaded memory systems model. Nature Reviews Neuroscience, 2022. 23(10): p. 628-640.

- Sauseng, P. and H.R. Liesefeld, Cognitive Control: Brain Oscillations Coordinate Human Working Memory. Current Biology, 2020. 30(9): p. R405-R407.

- Groß, D. and C.W. Kohlmann, Predicting self-control capacity - Taking into account working memory capacity, motivation, and heart rate variability. Acta Psychologica, 2020. 209: p. 103131.

- Persuh, M., E. LaRock, and J. Berger, Working Memory and Consciousness: The Current State of Play. Frontiers in Human Neuroscience, 2018. 12: p. 11.

- Christophel, T.B., et al., The distributed nature of working memory. Trends in Cognitive Sciences, 2017. 21(2): p. 111-124.

- Coolidge, F.L. and T. Wynn, The evolution of working memory. Annee Psychologique, 2020. 120(2): p. 103-134.

- Bellafard, A., et al., Volatile working memory representations crystallize with practice. Nature, 2024.

- Bouchacourt, F. and T.J. Buschman, A flexible model of working memory. Neuron, 2019. 103(1): p. 147-160.e8.

- Changeux, J.-P., A. Goulas, and C.C. Hilgetag, A Connectomic Hypothesis for the Hominization of the Brain. Cerebral Cortex, 2021. 31(5): p. 2425-2449.

- Balter, M., Evolution of behavior. Did working memory spark creative culture? Science, 2010. 328(5975): p. 160-163.

- Hahn, L.A. and J. Rose, Working Memory as an Indicator for Comparative Cognition - Detecting Qualitative and Quantitative Differences. Frontiers in Psychology, 2020. 11.

- Heisenberg, W., Physics and philosophy; the revolution in modern science. 1st ed. World perspectives, v 19. 1958, New York: Harper. 206 p.

- Hameroff, S. and R. Penrose, Consciousness in the universe: A review of the 'Orch OR' theory. Physics of Life Reviews, 2014. 11(1): p. 39-78.

- Han, S., The sociocultural brain: A cultural neuroscience approach to human nature. 2017, Oxford: Oxford University Press. x, 275 pages, 16 unnumbered pages of color plates.

- Schneider, S., Artificial you: AI and the future of your mind. 2019, Princeton: Princeton University Press.

- Dennett, D.C., Facing up to the hard question of consciousness. Philosophical Transactions of the Royal Society B-Biological Sciences, 2018. 373(1755): p. 20170342.

- Frankish, K., Illusionism: as a theory of consciousness. 2017, Luton, Bedfordshire: Andrews UK. 1 online resource.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).