1. Introduction

Accurate human movement analysis [1, 2, 3, 4, 5, 6, 7, 34] has several applications, including upper-limb (UL) rehabilitation, assessment of neurological diseases, tracking the trajectory of improvement or deterioration of arm movements, and assessing athlete performance. UL movement can be measured using wearable sensing or non-obtrusive optical marker tracking techniques. Wearable sensing techniques are simple, non-obtrusive and self-contained [4]. Moreover, these techniques can be used to record long-term UL movements while participants perform activities of daily living (ADL) at home [8, 9, 10]. A wearable inertial sensor is a compact device containing a tri-axial accelerometer and/or a tri-axial gyroscope [23, 24, 25, 26].

The main problem with inertial sensors is the need to integrate their signal recordings over a long period of time [27, 28, 29, 31, 32]. As a result, their noise accumulates, leading to invalid posture or UL movement classification. This error can build up in as little as 60 s. The authors of [5] showed that inertial sensing data drifted after every minute. Kalman filtering techniques were used in [5] to reduce the drift and fuse gyroscope data with inertial sensing data. However, the Kalman filter reduced the drift only for a short period, mainly in the non-movement paradigms. Vicon technology (i.e. marker-based UL tracking) is relatively accurate but suffers from noise and light deflection errors [11, 12, 13].
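The drift mechanism described above can be illustrated with a minimal sketch. It assumes a stationary sensor whose gyroscope reports a small constant bias plus white noise; the sampling rate, bias and noise level are hypothetical values chosen for illustration and are not taken from the cited studies.

```python
import random

def integrate_gyro(rate_samples, dt):
    """Integrate angular-rate samples (deg/s) into an angle (deg) by cumulative summation."""
    angle = 0.0
    angles = []
    for rate in rate_samples:
        angle += rate * dt
        angles.append(angle)
    return angles

# Hypothetical stationary sensor: the true rate is 0 deg/s, but the gyroscope
# reports a small constant bias plus white noise (all values are assumptions).
random.seed(0)
FS = 100            # assumed sampling rate in Hz
BIAS = 0.05         # assumed constant bias in deg/s
NOISE_STD = 0.5     # assumed noise standard deviation in deg/s
DURATION_S = 60

measured = [BIAS + random.gauss(0.0, NOISE_STD) for _ in range(FS * DURATION_S)]
angles = integrate_gyro(measured, dt=1.0 / FS)

# The true angle is 0 deg throughout, so every printed value is pure error.
print(f"angle error after  1 s: {angles[FS - 1]:.2f} deg")
print(f"angle error after 60 s: {angles[-1]:.2f} deg")
```

Because the bias is summed at every step, the error grows roughly linearly with recording time, which is why long recordings are affected most.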

Machine learning (ML) algorithms have the potential for rapid classification while avoiding the inherent issues of inertial sensing, such as drift and accumulated noise. This data-driven paradigm, i.e. the combination of ML and sensing, can be used efficiently for UL classification purposes. Recently, human activity has been recognised using ML [6, 14, 15, 16, 17]. ML has been applied successfully to recognise gestures, to assess neurological disorders such as Parkinson's disease [18, 19, 27, 30] and multiple sclerosis [8], and to assess motor function and arm movement usage in stroke patients [9, 10].

Many previous studies demonstrate the application of inertial sensors and the use of ML in clinical populations. However, to the best of our knowledge, no specific guidance presently exists to advise clinicians on the classification and benchmarking of this ML approach, which is important because it sits at the intersection of data science, human upper-limb movements and neurological disorders. ML has broad applicability and potential for inertial sensing classification; however, understanding whether to develop a deep learning model or a simple Naïve Bayes [24, 25, 26, 27] model for a specific set of data is of foremost importance.

A timely application of the combined ML and sensing classification approach to monitoring the rehabilitation trajectory of stroke patients or people living with disabilities could assist clinicians. Quantification of the UL movement trajectory in rehabilitation medicine entails classifying motor units of measurement, which are matched with the clinical outcomes. For example, an ML algorithm (a hidden Markov model with logistic regression) has been applied to inertial sensing data for recognition of UL primitives, achieving an overall classification performance of 79% [8]. However, that research did not address challenges such as computational complexity and cost. Thus, the main objective of this research is to compare hand movement classification accuracy across several well-known machine learning algorithms.

The remainder of this paper is organised as follows. Section 2 describes the overall methodology and the data set, together with a brief description of the ML algorithms. Section 3 presents the results, and the paper closes with the conclusion followed by future work.

2. Methodology

2.1. Data Sets

To demonstrate the steps in identifying the optimal machine learning algorithm for inertial movement data, we utilised data collected in a previous study and available from the University of California, Irvine (UCI) machine learning repository (Vicon Physical Action Data Set [19, 33]). The data were collected from seven male and three female participants (aged 25 to 30). In 20 experiments, each participant performed ten normal and ten aggressive postures. Ethical regulations were followed during data collection, in accordance with the code of ethics of the British Psychological Society. The data for each physical activity (an action) were collected separately in each trial. Each action was performed for approximately 10 seconds per participant, resulting in a time series of 3000 data points with a sampling rate of 200 data points per second (200 Hz). In this data set, two markers were placed on the right and left arms of the participant. These markers are considered a good indicator of human upper-limb movement. Vicon data have been used extensively in rehabilitation, physical medicine and hospitals [19, 33].

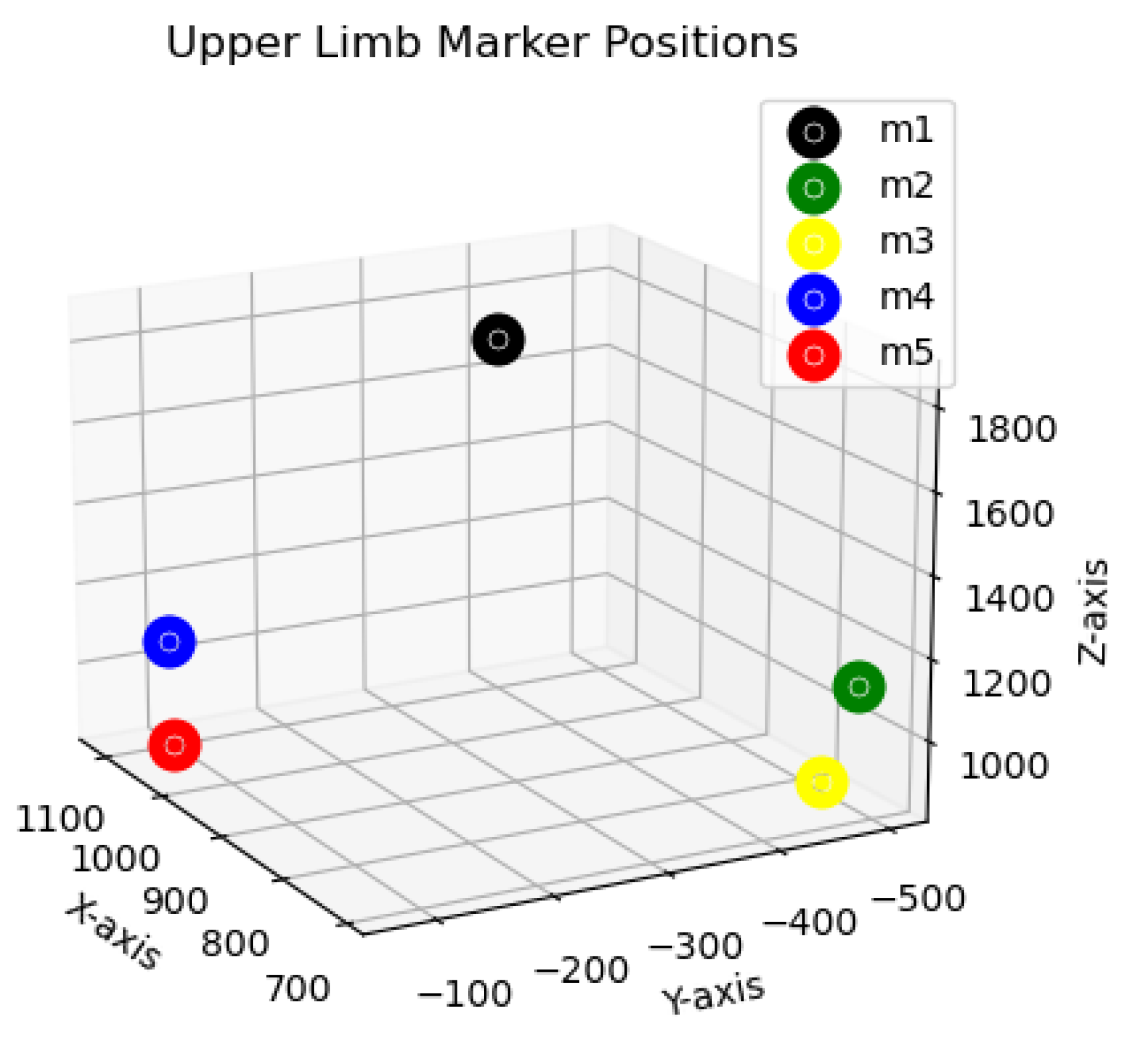

2.2. Description of the Data

The data are three-dimensional: every marker has x, y and z coordinates representing its position in space. Since we are interested in UL movements, the position data of the first five markers (m1, m2, m3, m4 and m5) were used as the feature set. Each body segment was defined by two attached markers, and hence four segments of data were obtained, running from the head to the right arm (R-Arm) and the left arm (L-Arm), as shown in Figure 1. The data are illustrated in Table 1 below:

Five movements were considered for this study: Waving, Handshaking, Clapping, Punching and Hammering. All of them are good candidates for representing UL movements and provide an appropriate feature space for the machine learning algorithms explained in the next section.
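As an illustration of how marker positions could be turned into a feature matrix, the sketch below splits a recording into sliding windows and computes simple statistics per coordinate. The paper does not specify its feature extraction, so the window length, the overlap, the chosen statistics and the helper name `window_features` are assumptions, and the recording is synthetic.

```python
import numpy as np

def window_features(positions, window, step):
    """Split a (n_samples, n_channels) array into sliding windows and return
    the per-window mean, standard deviation and range of every channel."""
    feats = []
    for start in range(0, positions.shape[0] - window + 1, step):
        seg = positions[start:start + window]
        feats.append(np.concatenate([
            seg.mean(axis=0),
            seg.std(axis=0),
            seg.max(axis=0) - seg.min(axis=0),
        ]))
    return np.asarray(feats)

# Synthetic stand-in for one recording: 3000 samples of 5 markers x 3 coordinates.
rng = np.random.default_rng(0)
recording = rng.normal(size=(3000, 15))

# 1 s windows at 200 Hz with 50 % overlap (both values are assumptions).
X = window_features(recording, window=200, step=100)
print(X.shape)  # (number of windows, 3 statistics x 15 channels)
```

Each row of `X` then describes one window of movement and can be paired with the label of the action being performed.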

2.3. Machine Learning (ML) Methods

This research sought to identify the ML algorithm that performs well on positional data such as Vicon data and/or inertial sensing data. The purpose is to identify functional data primitives and/or features with a very high classification rate and practical use, i.e., with low learning rates and computational time. ML methodologies have also been applied to computer vision-based data, as shown in previous studies [20, 21, 25].

During the training phase of an ML algorithm, a relationship between data characteristics (primarily the statistical features) and their labels (classes) is established. The result is a model that uses the pattern of data characteristics to identify a new data sample as one of the primitive blocks. Finally, this classification is verified against the ground-truth or human-annotated labels, yielding the classification performance.
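The verification step can be sketched in a few lines: predicted labels are compared with the annotated ones to obtain an accuracy and a table of confusions. The labels and predictions below are hypothetical and serve only to show the computation.

```python
from collections import Counter

def confusion_counts(true_labels, predicted_labels):
    """Count how often each (true, predicted) label pair occurs."""
    return Counter(zip(true_labels, predicted_labels))

def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label equals the ground-truth label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

# Hypothetical annotations and classifier outputs for six samples.
y_true = ["Waving", "Waving", "Clapping", "Punching", "Punching", "Hammering"]
y_pred = ["Waving", "Clapping", "Clapping", "Punching", "Hammering", "Hammering"]

print(f"accuracy = {accuracy(y_true, y_pred):.2f}")
for (t, p), n in sorted(confusion_counts(y_true, y_pred).items()):
    print(f"true={t:<10} predicted={p:<10} count={n}")
```

The off-diagonal pairs (true label differs from predicted label) show which movements a classifier confuses with each other, which a single accuracy figure hides.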

We considered both generative and discriminative algorithms. Generative algorithmic models tend to find the underlying probabilistic distribution of data for each class or label to identify data characteristics that enable pattern matching of new samples to a given class. In contrast, discriminative algorithms model the boundaries between classes and not the data themselves. They seek to identify the line or plane separating the classes so that, based on location relative to the plane, a new data sample is assigned to the appropriate class.
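The distinction can be made concrete with a small sketch on synthetic two-class data, assuming scikit-learn is available. Gaussian Naïve Bayes stands in for the generative family and an RBF-kernel SVM for the discriminative one; the data and the test sample are hypothetical.

```python
from sklearn.datasets import make_blobs
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Synthetic two-class, two-feature data standing in for movement features.
X, y = make_blobs(n_samples=200, centers=[[-3.0, -3.0], [3.0, 3.0]],
                  cluster_std=1.0, random_state=0)

# Generative: Gaussian Naive Bayes estimates a per-class mean and variance
# for every feature, i.e. a model of how each class produces its data.
nb = GaussianNB().fit(X, y)
print("per-class feature means:\n", nb.theta_)

# Discriminative: an RBF-kernel SVM keeps only the samples that define the
# decision boundary (the support vectors), not a model of each class.
svm = SVC(kernel="rbf", gamma="scale").fit(X, y)
print("support vectors kept:", svm.support_vectors_.shape[0], "of", X.shape[0])

new_sample = [[2.5, 3.5]]
print("NB prediction:", nb.predict(new_sample)[0])
print("SVM prediction:", svm.predict(new_sample)[0])
```

The fitted Naïve Bayes model stores distribution parameters for each class, whereas the SVM stores a subset of training points near the boundary.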

Three algorithms were selected for this study based on their high classification performance in human UL activity: Support Vector Machines (SVM) with a radial basis function (RBF) kernel, the Naïve Bayes classifier and a 1-D Convolutional Neural Network (CNN) [34]. All algorithms were implemented in Python 3.8 using the scikit-learn library. The accuracy of each algorithm in classifying the UL data is shown in the results section of this paper.
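A head-to-head comparison of this kind might be set up as in the following sketch, which cross-validates an RBF-kernel SVM and a Gaussian Naïve Bayes classifier on the same data. The data are synthetic, and the feature scaling, the fold count and all hyperparameters are assumptions rather than the settings used in this study. The 1-D CNN is left out because scikit-learn does not provide convolutional networks, so it would need a separate deep learning library.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic 5-class problem standing in for the five movement classes.
X, y = make_classification(n_samples=500, n_features=15, n_informative=10,
                           n_redundant=0, n_classes=5, n_clusters_per_class=1,
                           class_sep=2.0, random_state=0)

models = {
    "SVM (RBF)": make_pipeline(StandardScaler(), SVC(kernel="rbf", gamma="scale")),
    "Naive Bayes": GaussianNB(),
}

scores = {}
for name, model in models.items():
    # 5-fold stratified cross-validation (the fold count is an assumption).
    fold_acc = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = fold_acc.mean()
    print(f"{name}: mean accuracy {fold_acc.mean():.3f} (+/- {fold_acc.std():.3f})")
```

Evaluating every model on identical folds keeps the comparison fair, since differences in accuracy then reflect the algorithms rather than the data split.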

3. Results

This report is still in preparation, and the results presented here are preliminary. They are based on the Naïve Bayes, SVM and CNN classifiers.

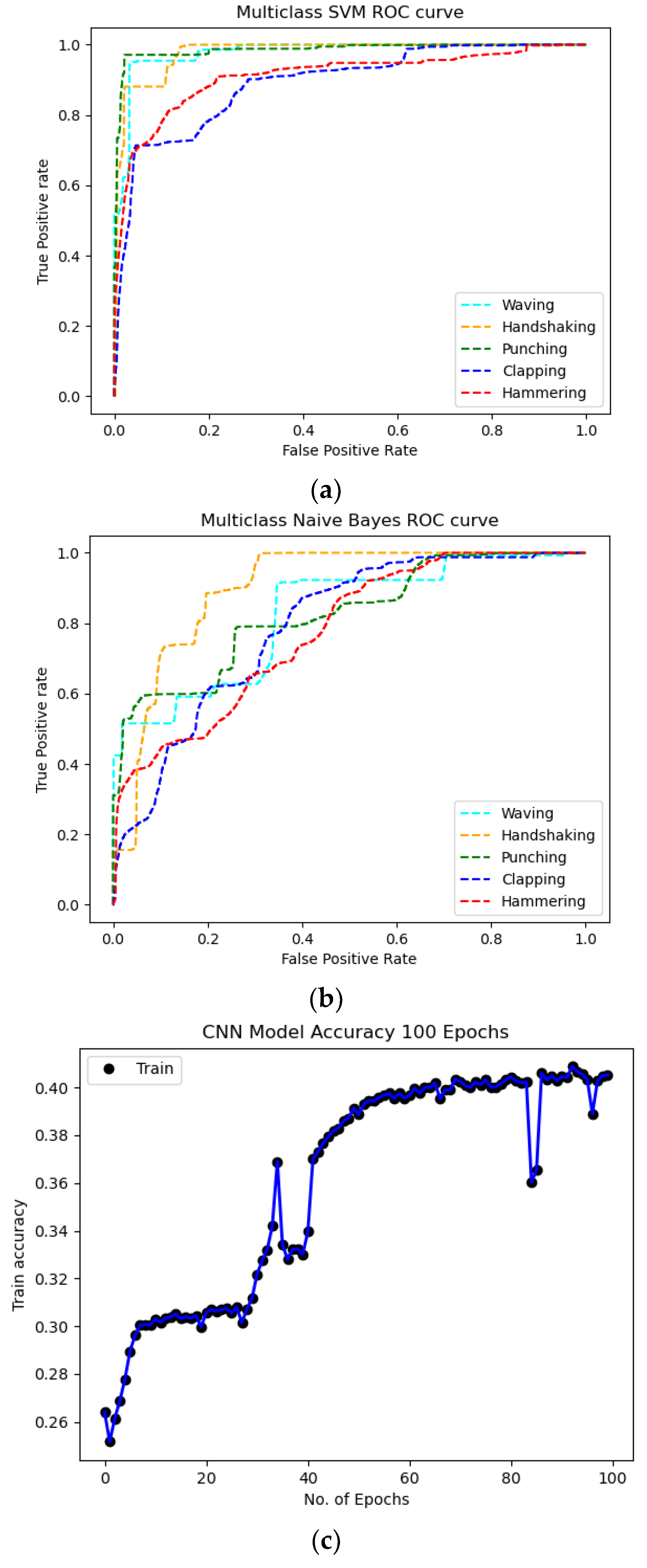

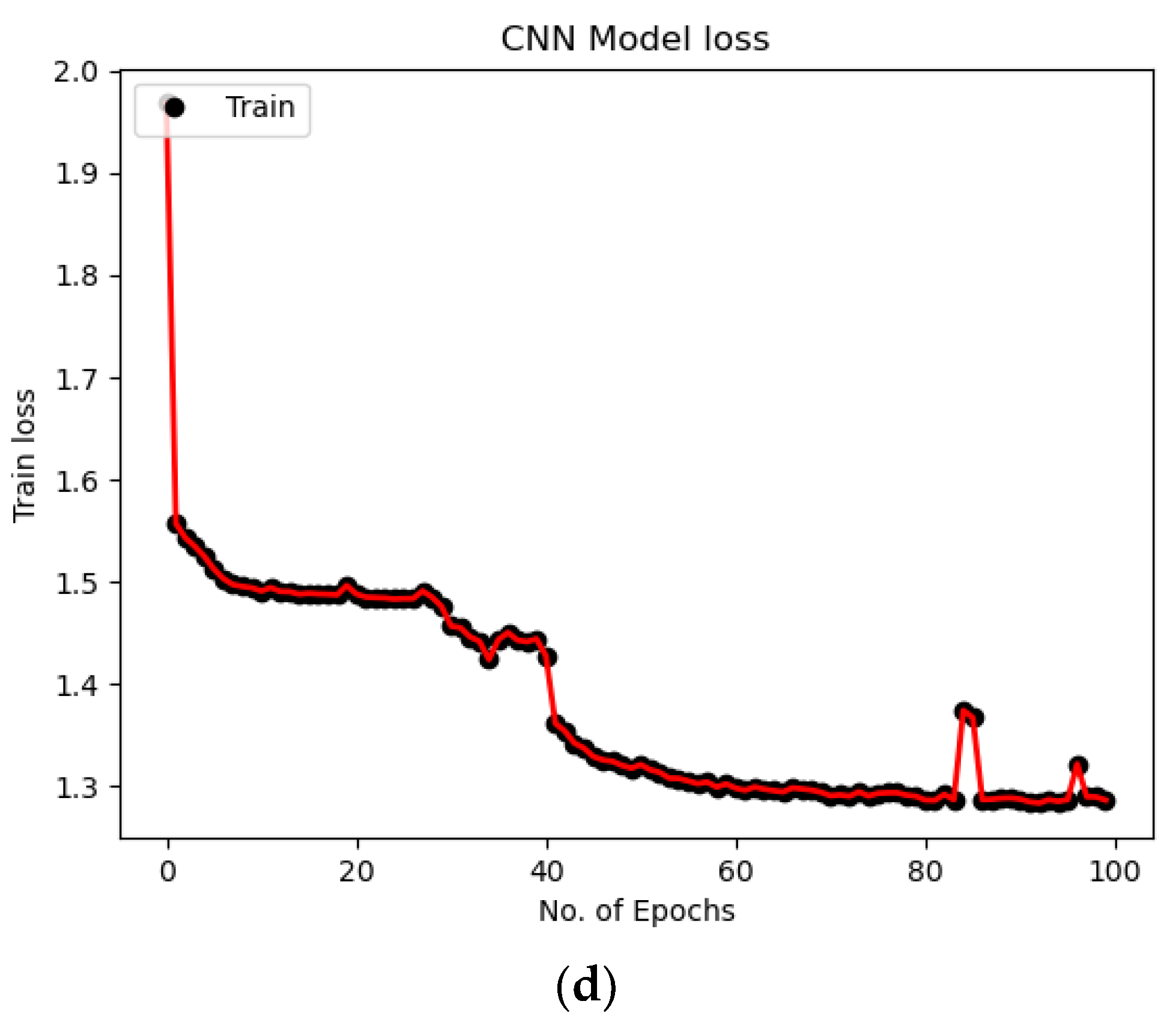

Figure 2. Preliminary results of machine learning based classification of sensor data. (a) Support Vector Machine (SVM) classification performance, (b) Naïve Bayes classifier’s performance, (c) 1-D Convolutional Neural Network (CNN) classifier training accuracy, and (d) CNN model’s loss.

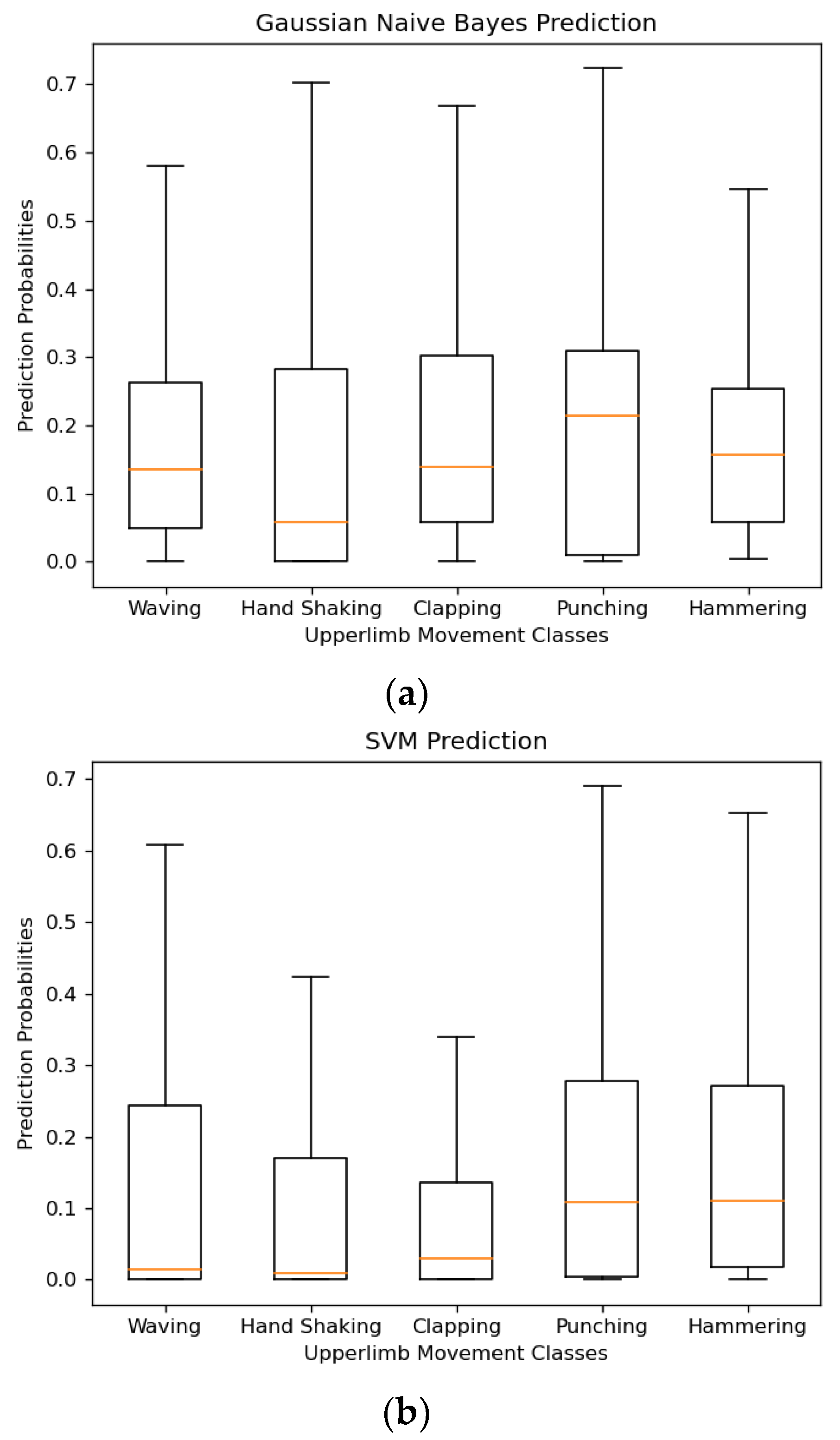

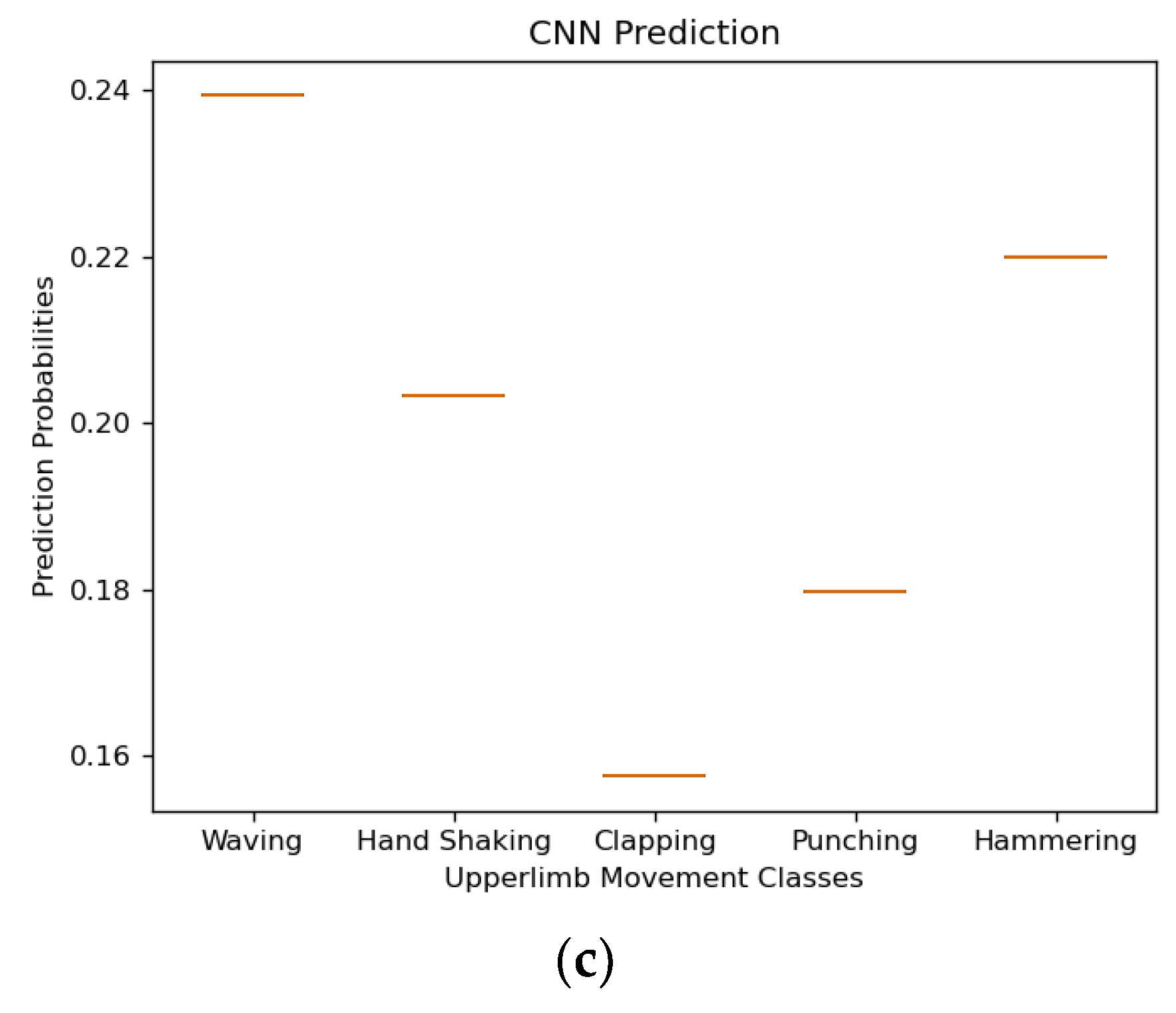

Figure 3. Boxplots of the prediction probabilities of the machine learning algorithms on the test data set. (a) Support Vector Machine (SVM) classification performance, (b) Naïve Bayes classifier’s performance, (c) 1-D Convolutional Neural Network (CNN) classifier.

4. Conclusion

In conclusion, we present a classification performance comparison of three representative machine learning algorithms. This comparison will be especially useful for clinicians in order to optimise patients’ motion-capture needs. In this paper, we have shown, in a quantitative way, practical and computational considerations for implementing a motion-capture sensing approach. In particular, our findings can help define a strategy for classifying functional primitives of body movements. So far, SVM has had the best classification accuracy, followed by the Naïve Bayes classifier and the CNN.

Future Work

This study has some limitations, which we plan to address in future work. It demonstrates the use of ML algorithms and their head-to-head comparison in classifying different UL functional primitives and tasks. We aim to build a universal system that switches to the most appropriate ML algorithm and retains the highest-accuracy algorithm for a particular task. Owing to the limited data set, we did not explore the identification of clinically valid UL postural movements, for example those of stroke patients or people living with disabilities or other impairments, as functional primitives.

Also, variability in the data was not properly characterised, which could be one reason why the CNN did not perform well. Collaboration between ML experts and clinicians is suggested. More refined data with more degrees of freedom or kinematic variables could help define a better feature matrix for ML algorithms.

Most importantly, our approach allowed us to demonstrate the practical considerations involved in motion classification using sensor data.

References

- Meinhold, R. J., & Singpurwalla, N. D. (1983). Understanding the Kalman filter. The American Statistician, 37(2), 123–127.

- Mihelj, M. Inverse kinematics of human arm based on multisensor data integration. J. Intell. Robot. Syst. 2006, 47, 139–153.

- Marken, R., Kennaway, R., & Gulrez, T. (2022). Behavioral illusions: The snark is a boojum. Theory & Psychology, 32(3), 491-514.

- Luinge, H.J.; Veltink, P.H.; Baten, C.T. Ambulatory measurement of arm orientation. J. Biomech. 2007, 40, 78–85.

- Gulrez, T., & Tognetti, A. (2014). A sensorized garment controlled virtual robotic wheelchair. Journal of Intelligent & Robotic Systems, 74(3), 847-868.

- Eskofier, B. M., Lee, S. I., Daneault, J. F., Golabchi, F. N., Ferreira-Carvalho, G., Vergara-Diaz, G., & Bonato, P. (2016, August). Recent machine learning advancements in sensor-based mobility analysis: Deep learning for Parkinson's disease assessment. In 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (pp. 655-658). IEEE.

- Gulrez, T., Tognetti, A., Yoon, W. J., Kavakli, M., & Cabibihan, J. J. (2016). A hands-free interface for controlling virtual electric-powered wheelchairs. International Journal of Advanced Robotic Systems, 13(2), 49.

- Liu, Z., Zhang, Y., Patrick Rau, P. L., Choe, P., & Gulrez, T. (2015). Leap-motion based online interactive system for hand rehabilitation. In Cross-Cultural Design Applications in Mobile Interaction, Education, Health, Transport and Cultural Heritage: 7th International Conference, CCD 2015, Held as Part of HCI International 2015, Los Angeles, CA, USA, August 2-7, 2015, Proceedings, Part II 7 (pp. 338-347). Springer International Publishing.

- Gulrez, T., Kavakli-Thorne, M., & Tognetti, A. (2013, June). Decoding 2d kinematics of human arm for body machine interfaces. In 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA) (pp. 719-722). IEEE.

- Gulrez, T., Tognetti, A., Fishbach, A., Acosta, S., Scharver, C., De Rossi, D., & Mussa-Ivaldi, F. A. (2011). Controlling wheelchairs by body motions: A learning framework for the adaptive remapping of space. arXiv preprint. arXiv:1107.5387.

- Gulrez, T., & Hassanien, A. E. (Eds.). (2011). Advances in robotics and virtual reality (Vol. 26). Springer Science & Business Media.

- Tognetti, A., Gulrez, T., Carbonaro, N., Dalle Mura, G., Zupone, G., & De Rossi, D. E. (2009). Integrating hands-free interface into 3d virtual reality environments. In ARO Non-Manual Control Devices Symposium.

- Mussa Ivaldi, F., Fishbach, A., Acosta, S., Gulrez, T., Tognetti, A., & De Rossi, D. E. (2006). Remapping the residual motor space of spinal-cord injured patients for the control of assistive devices. SOCIETY FOR NEUROSCIENCE.

- Gulrez, T., & Yoon, W. J. (2018). Cutaneous haptic feedback system and methods of use. US Patent, (9,946).

- Carpinella, I., Cattaneo, D., & Ferrarin, M. (2014). Quantitative assessment of upper limb motor function in Multiple Sclerosis using an instrumented Action Research Arm Test. Journal of neuroengineering and rehabilitation, 11(1), 1-16.

- Bochniewicz, E. M., Emmer, G., McLeod, A., Barth, J., Dromerick, A. W., & Lum, P. (2017). Measuring functional arm movement after stroke using a single wrist-worn sensor and machine learning. Journal of Stroke and Cerebrovascular Diseases, 26(12), 2880-2887.

- Leuenberger, K., Gonzenbach, R., Wachter, S., Luft, A., & Gassert, R. (2017). A method to qualitatively assess arm use in stroke survivors in the home environment. Medical & biological engineering & computing, 55(1), 141-150.

- Guerra, J., Uddin, J., Nilsen, D., McInerney, J., Fadoo, A., Omofuma, I. B., ... & Schambra, H. M. (2017, July). Capture, learning, and classification of upper extremity movement primitives in healthy controls and stroke patients. In 2017 International Conference on Rehabilitation Robotics (ICORR) (pp. 547-554). IEEE.

- Zhang, Z.Q.; Wu, J.K. A novel hierarchical information fusion method for three-dimensional upper limb motion estimation. IEEE Trans. Instrum. Meas. 2011, 60, 3709–3719.

- DATASET: Theo Theodoridis. (2019). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.

- Sandhu, S., Gulrez, T., & Mansell, W. (2020). Behavioral anatomy of a hunt. Attention, Perception, & Psychophysics, 82(6), 3112-3123.

- Gulrez, T., & Mansell, W. (2022). High Performance on Atari Games Using Perceptual Control Architecture Without Training. Journal of Intelligent & Robotic Systems, 106(2), 45.

- Smith, J., Gulrez, T., & Konak, M. (2021, January). Deriving optimal control from data using machine learning for force control systems. In AIAC 2021: 19th Australian International Aerospace Congress (pp. 173-178). Engineers Australia.

- Cabibihan, J. J., Alhaddad, A. Y., Gulrez, T., & Yoon, W. J. (2021). Influence of visual and haptic feedback on the detection of threshold forces in a surgical grasping task. IEEE Robotics and Automation Letters, 6(3), 5525-5532.

- Poh, C., Gulrez, T., & Konak, M. (2021, March). Minimal neural networks for real-time online nonlinear system identification. In 2021 IEEE Aerospace Conference (50100) (pp. 1-9). IEEE.

- Sandhu, S., Gulrez, T., & Mansell, W. (2020). Behavioral anatomy of a hunt: using dynamic real-world paradigm and computer vision to compare human user-generated strategies with prey movement varying in predictability. Attention, Perception, & Psychophysics, 82, 3112-3123.

- Pour, P. A., Gulrez, T., AlZoubi, O., Gargiulo, G., & Calvo, R. A. (2008, December). Brain-computer interface: Next generation thought controlled distributed video game development platform. In 2008 IEEE Symposium On Computational Intelligence and Games (pp. 251-257). IEEE.

- Mussa Ivaldi, F., Fishbach, A., Acosta, S., Gulrez, T., Tognetti, A., & De Rossi, D. E. (2006). In Society for Neuroscience Annual Congress luogo: Washington.

- Cavallo, F., Moschetti, A., Esposito, D., Maremmani, C., & Rovini, E. (2019). Upper limb motor pre-clinical assessment in Parkinson's disease using machine learning. Parkinsonism & related disorders, 63, 111-116.

- Rish, I. (2001, August). An empirical study of the naive Bayes classifier. In IJCAI 2001 workshop on empirical methods in artificial intelligence (Vol. 3, No. 22, pp. 41-46).

- Gulrez, T., Meziani, S. N., Rog, D., Jones, M., & Hodgson, A. (2016, July). Can Autonomous Sensor Systems Improve the Well-being of People Living at Home with Neurodegenerative Disorders?. In International Conference on Cross-Cultural Design (pp. 649-658). Springer, Cham.

- Jalal, A., Batool, M., & Kim, K. (2020). Stochastic recognition of physical activity and healthcare using tri-axial inertial wearable sensors. Applied Sciences, 10(20), 7122.

- Kavaliauskaitė, D., Gulrez, T., & Mansell, W. (2023). What is the relationship between spontaneous interpersonal synchronization and feeling of connectedness? A study of small groups of students using MIDI percussion instruments. Psychology of Music, 03057356231207049.

- Cabibihan, J. J., Alhaddad, A. Y., Gulrez, T., & Yoon, W. J. (2022). Dataset for influence of visual and haptic feedback on the detection of threshold forces in a surgical grasping task. Data in Brief, 42, 108045.

- Gulrez, T. (2021). Robots Used Today that we Did Not Expect 20 Years Ago (from the Editorial Board Members). Journal of Intelligent & Robotic Systems, 102(3).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).