1. Introduction

Integrating Generative Artificial Intelligence (AI) and Large Language Models (LLMs) presents a promising frontier in intelligent network optimization. Generative AI algorithms, including those based on deep learning techniques such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can simulate and generate data that closely resembles real-world network traffic patterns[

1]. When combined with LLMs like GPT (Generative Pre-trained Transformer), these models can analyze vast amounts of network data, identify patterns, and generate insights to optimize network configurations dynamically[

2]. By leveraging machine learning to predict traffic fluctuations and adapt network resources accordingly, organizations can enhance efficiency, reduce latency, and improve overall network performance. Generative AI and LLMs can assist in anomaly detection and security by learning normal network behaviors and identifying deviations that may indicate potential threats or performance issues.

Managing complex cloud network infrastructures poses several challenges due to their dynamic nature and scale. One significant challenge is ensuring security and compliance across distributed environments[

3]. With data spread across multiple servers and regions, maintaining robust security measures becomes crucial to prevent breaches and data leaks. Additionally, managing network performance and ensuring seamless connectivity amidst varying workloads and traffic patterns is another hurdle. Balancing cost efficiency with performance optimization requires constant monitoring and adjustment of resources to meet evolving demands while controlling expenses. Interoperability between different cloud platforms and legacy systems presents integration challenges[

4]. Coordinating across diverse technologies and ensuring compatibility often requires specialized expertise and careful planning. Additionally, maintaining visibility and control over the entire network infrastructure can be daunting, especially with hybrid or multi-cloud setups. Overall, managing complex cloud network infrastructures demands a holistic approach, combining technical expertise, strategic planning, and continuous adaptation to overcome these challenges effectively.

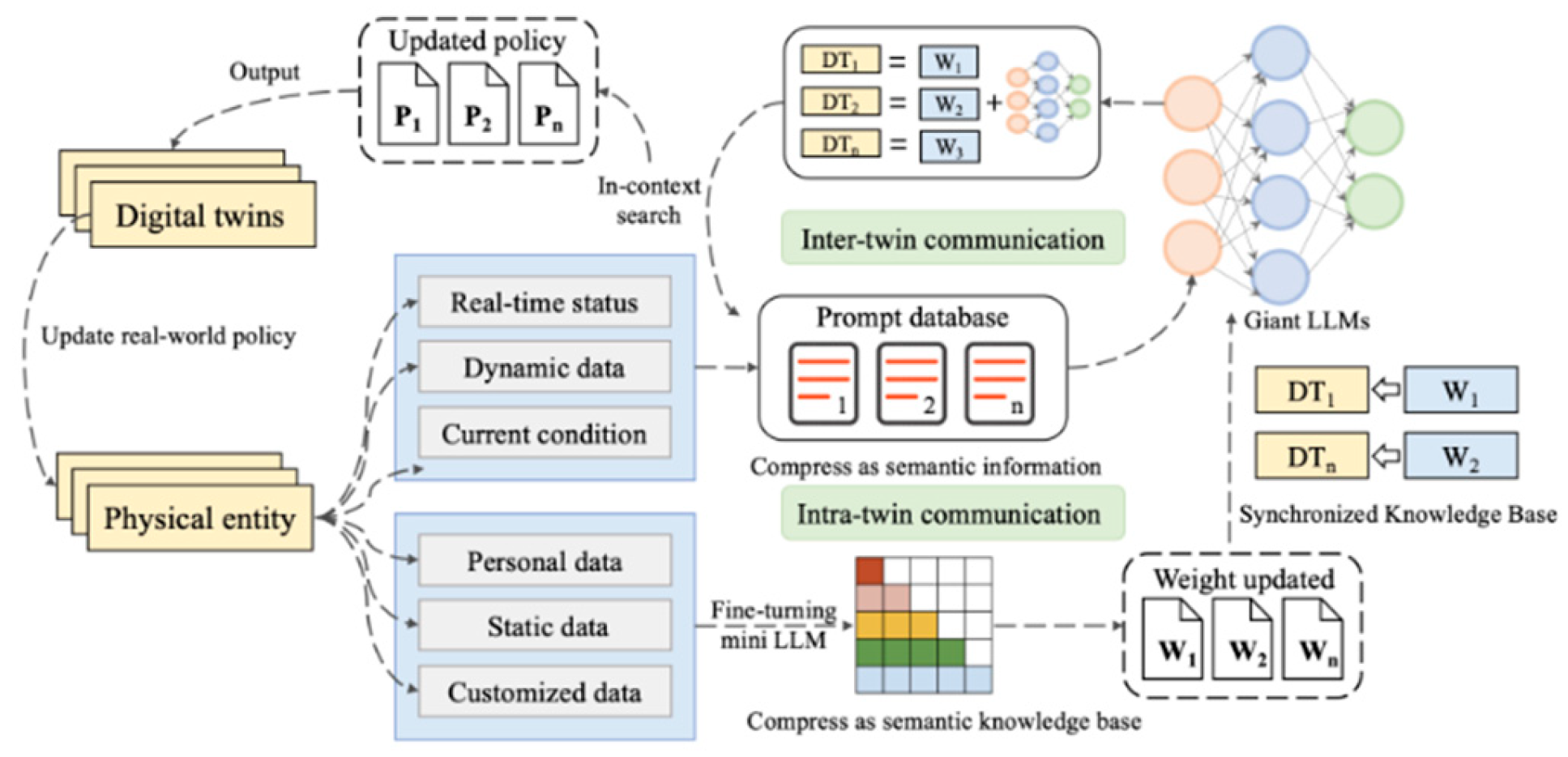

Figure 1 illustrates the Digital Twin Semantic Network within the LLM-Twin networking framework represents a sophisticated emulation of real-world network systems. By integrating Large Language Models (LLMs), it offers a dynamic and adaptable environment for simulating network behaviors and configurations[

5]. This semantic network captures intricate relationships between network components, protocols, and performance metrics, enabling detailed analysis and optimization. With its semantic understanding capabilities, the LLM-Twin framework can interpret and respond to complex queries, facilitating advanced troubleshooting and decision-making. Leveraging generative AI algorithms, it continuously refines its representations based on real-time data, ensuring accuracy and relevance.

The Digital Twin Semantic Network within the LLM-Twin networking framework serves as a powerful tool for modeling, simulating, and optimizing network infrastructures with unprecedented sophistication and intelligence[

6]. Traditional static network configurations have limitations in adapting to the dynamic demands of modern computing environments. They lack flexibility, as network settings are typically manually configured and require significant time and effort to modify. This rigidity inhibits scalability, making it challenging to accommodate fluctuating workloads or sudden changes in network traffic patterns efficiently. Moreover, static configurations are prone to misconfigurations and errors, leading to network downtime and security vulnerabilities[

7]. As a result, these configurations hinder the agility and responsiveness required to meet the evolving needs of businesses in today's fast-paced digital landscape. Overall, the limitations of traditional static network configurations underscore the necessity for more adaptive and automated approaches to network management

2. Generative AI for Network Topology Design and Optimization

Generative AI has emerged as a powerful tool for network topology design and optimization, revolutionizing how engineers approach complex networking challenges. Generative AI systems can autonomously generate and refine network topologies based on specified objectives, constraints, and historical data by leveraging machine learning algorithms. One of the key advantages of generative AI in network topology design is its ability to explore a vast solution space and discover novel architectures that may not be intuitive to human designers. Through iterative processes such as reinforcement learning or genetic algorithms, these systems can evolve and adapt network structures to achieve optimal performance metrics, such as throughput, latency, and scalability.

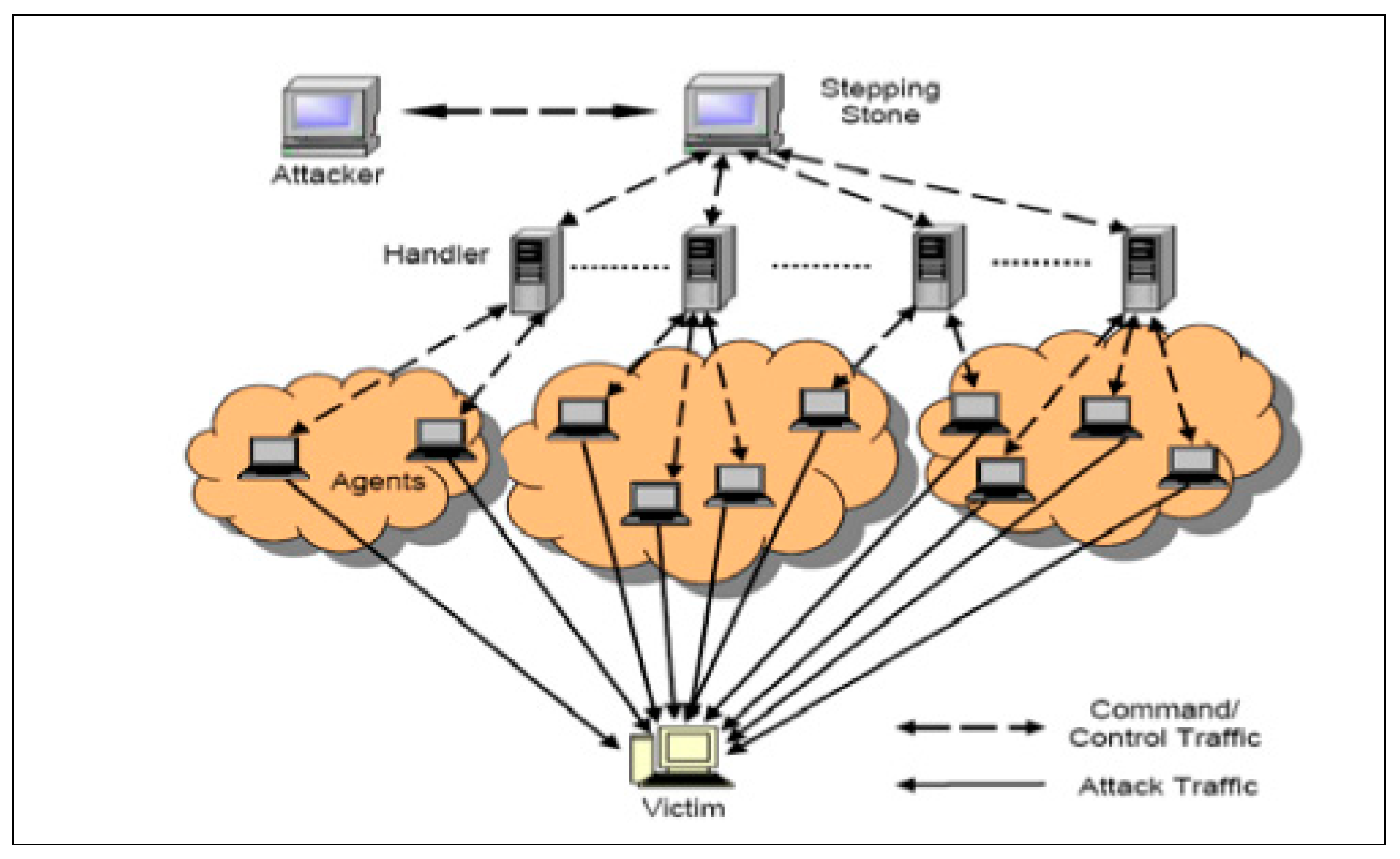

In

Figure 2, we envision a scenario where our network experiences an abrupt surge in traffic attributed to a Distributed Denial of Service (DDoS) attack. This malicious activity floods our network infrastructure with overwhelming incoming requests, disrupting normal network operations and impeding access to legitimate users. As the DDoS attack unfolds, our network monitoring systems detect anomalous patterns in traffic behavior, indicative of the attack's presence. Alerts are triggered, prompting our automated response mechanisms to spring into action. These mechanisms dynamically reconfigure network settings, such as routing policies and access controls, to mitigate the impact of the attack and restore network stability.

Anomaly detection algorithms, powered by machine learning and AI, analyze the incoming traffic streams to identify and isolate malicious traffic sources. By leveraging historical data and behavioral analytics, these algorithms swiftly distinguish between legitimate and malicious traffic, enabling targeted mitigation strategies. Throughout the simulation, our network defense mechanisms continuously adapt and evolve in response to the evolving threat landscape[

8]. Real-time monitoring, automated response actions, and advanced threat intelligence collectively fortify our network defenses, ensuring resilience against DDoS attacks and safeguarding the integrity

Generative AI enables rapid prototyping and experimentation, allowing engineers to quickly evaluate different design alternatives and identify the most promising configurations. This accelerated design cycle significantly reduces time-to-market and enhances the agility of network infrastructure deployment and optimization. Moreover, generative AI facilitates adaptive and self-optimizing networks capable of dynamically adjusting their topology in response to changing traffic patterns, failures, or environmental conditions. This inherent flexibility ensures robustness and resilience in complex network environments, enhancing overall reliability and service quality. Generative AI represents a paradigm shift in network topology design and optimization, empowering engineers to tackle increasingly complex challenges and unlock new opportunities for innovation in networking technology. As this field continues to evolve, the potential for generative AI to revolutionize network architecture and performance is boundless.

A. Cloud Network System Architecture Based on LLM

Designing a cloud network system architecture based on Large Language Models (LLMs) involves integrating AI-driven optimization techniques into various layers of the network infrastructure. Here's a conceptual overview of such an architecture:

1) Data Ingestion Layer: Data from various sources within the network, such as network devices, servers, applications, and logs, is ingested into the system[

9]. This layer may involve data preprocessing steps to clean, normalize, and transform raw data into a format suitable for analysis.

2) Data Storage and Management Layer: In this layer, ingested data is stored in a scalable and distributed data storage system, such as a data lake or a distributed database. Data management processes ensure data integrity, availability, and security. Metadata indexing and cataloging mechanisms facilitate efficient data retrieval and query processing.

3) Machine Learning Model Training and Evaluation Layer: This layer is responsible for training and evaluating machine learning models, including Generative AI and LLMs, using historical network data. Training pipelines leverage distributed computing frameworks to process large-scale datasets and train complex models. Model evaluation techniques, such as cross-validation or A/B testing, assess model performance and generalization capabilities.

4) Network Control and Management Layer: Network control and management components implement optimization directives issued by the decision-making layer. Software-defined networking (SDN) controllers, network orchestrators, and configuration management tools dynamically configure network devices, adjust routing policies, and allocate resources based on optimization recommendations[

10]. By architecting a cloud network system based on machine learning and LLMs, organizations can achieve dynamic, adaptive, and intelligent network optimization, enhancing efficiency, resilience, and scalability in their cloud infrastructures.

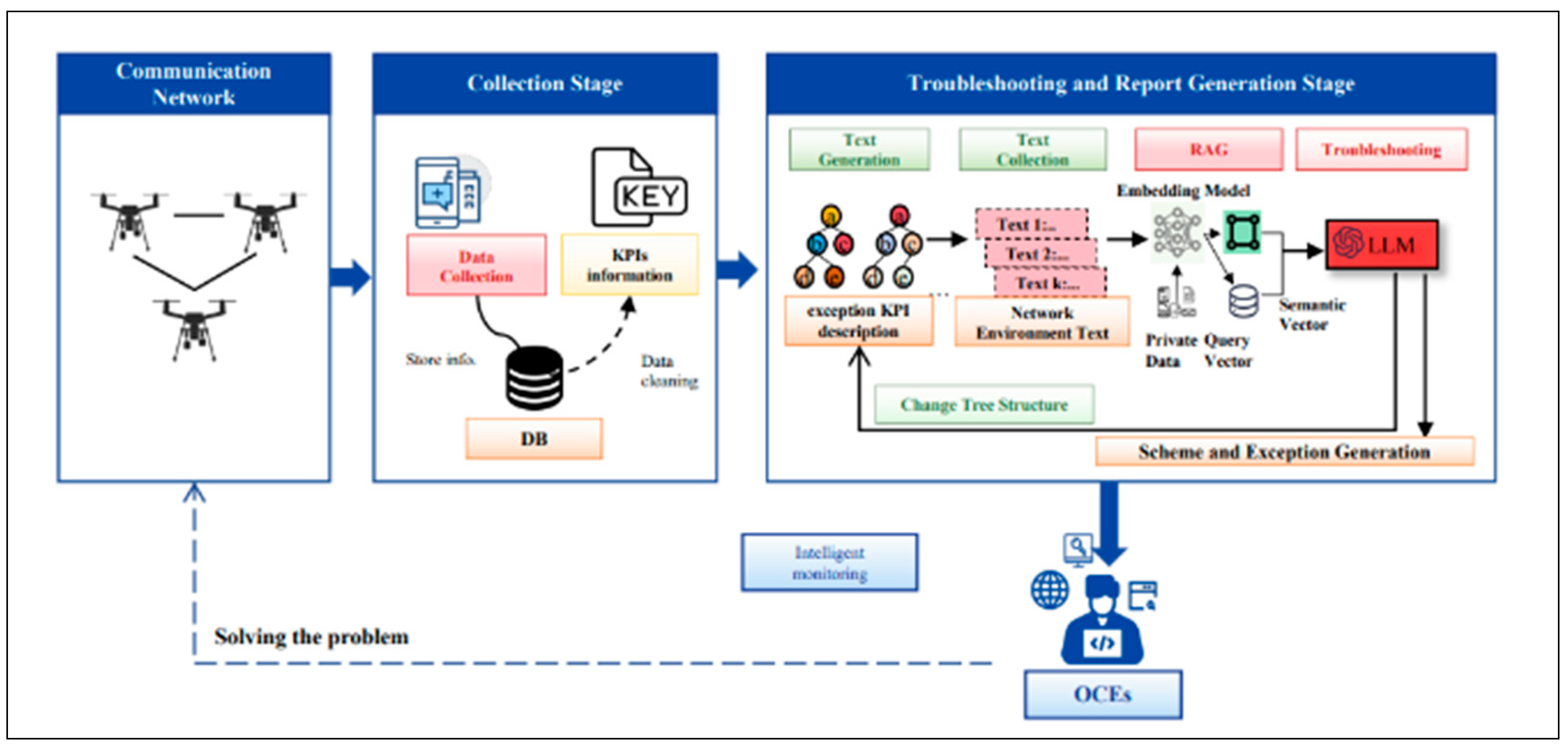

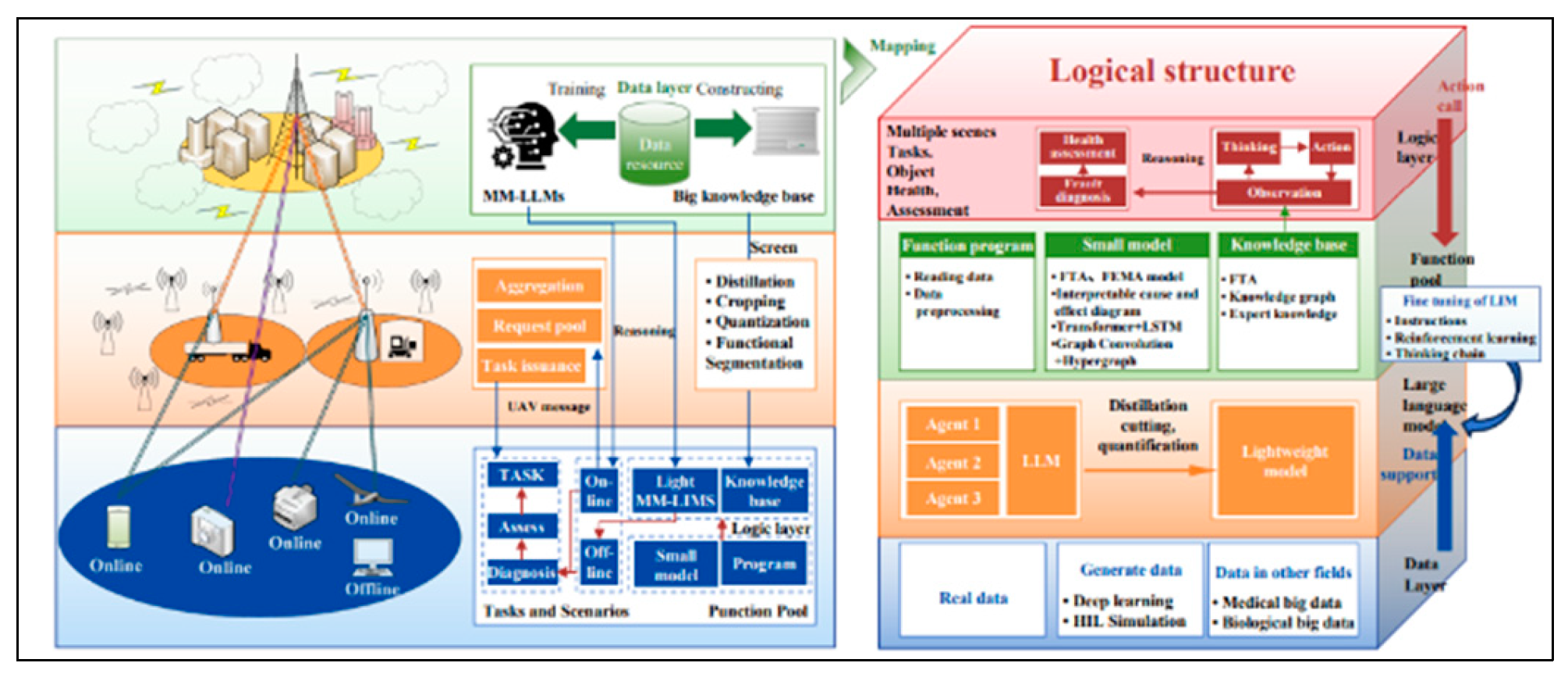

Figure 3 illustrates the schematic diagram illustrating a Cloud network system architecture integrating textual proposition learning and Large Language Models (LLMs). At the core of the architecture lies a sophisticated AI-driven optimization layer leveraging LLMs to analyze textual network data and derive actionable insights. Machine learning models within this layer dynamically optimize network configurations based on real-time data, enhancing efficiency and resilience[

11]. The decision-making and orchestration layer translates optimization directives into actionable commands for network controllers and orchestrators, ensuring alignment with organizational objectives. Continuous monitoring and feedback mechanisms provide real-time performance assessment and anomaly detection, enabling iterative improvement. Through seamless integration with external systems, including cloud platforms and security tools, the architecture facilitates interoperability and holistic network management.

3. Generative AI for Network Topology Design and Optimization

Intelligent network optimization for cloud environments represents a paradigm shift in how organizations manage and maximize the efficiency of their network infrastructures. By leveraging advanced technologies such as artificial intelligence (AI), machine learning (ML), and Large Language Models (LLMs), cloud networks can adapt dynamically to changing demands and conditions, thereby enhancing performance, scalability, and resilience.

Intelligent network optimization is the ability to analyze vast amounts of network data in real time. AI and ML algorithms can process this data to identify patterns, detect anomalies, and predict future network behavior. By understanding traffic patterns and resource utilization, these algorithms can optimize network configurations to improve efficiency and reduce latency.

LLMs play a crucial role in network optimization by providing a semantic understanding of textual data related to network operations. By comprehending network logs, reports, and configuration files, LLMs can extract actionable insights and recommendations for optimizing network performance.

Intelligent network optimization also involves proactive management of network resources. AI-driven algorithms can dynamically allocate resources based on workload demands, ensuring optimal utilization while minimizing costs.

Intelligent network optimization empowers organizations to achieve unprecedented levels of agility, scalability, and efficiency in their cloud infrastructures, driving innovation and competitive advantage in today's digital landscape.

Generative AI models can be trained on historical data, and resource utilization, to learn the underlying structures and dynamics of network behavior. Initially, these models are fed with large datasets containing historical records of network traffic, such as packet flows, bandwidth usage, and application interactions[

12]. Through techniques like unsupervised learning, Generative AI models like GANs (Generative Adversarial Networks) or VAEs (Variational Autoencoders) learn to capture the statistical properties and dependencies within the data. They then generate synthetic data that closely resembles the patterns observed in the training dataset. This process allows the model to capture complex relationships and dependencies present in the network traffic and resource utilization. These generative models can be deployed to simulate realistic traffic patterns, predict future network behavior, and optimize network configurations for improved performance, scalability, and security.

Generative AI models offer a powerful toolset for generating optimal network topologies tailored to different workloads and cloud environments. By leveraging historical data on network traffic patterns, resource utilization, and application requirements, these models can infer relationships and dependencies to design efficient network architectures. Through iterative experimentation and simulation, Generative AI models can generate diverse network topologies that balance factors like latency, bandwidth, and scalability. For instance, they can create architectures optimized for specific workloads, such as batch processing, real-time streaming, or data-intensive analytics. These models can adapt network configurations dynamically to accommodate changing demands and fluctuations in workload patterns.

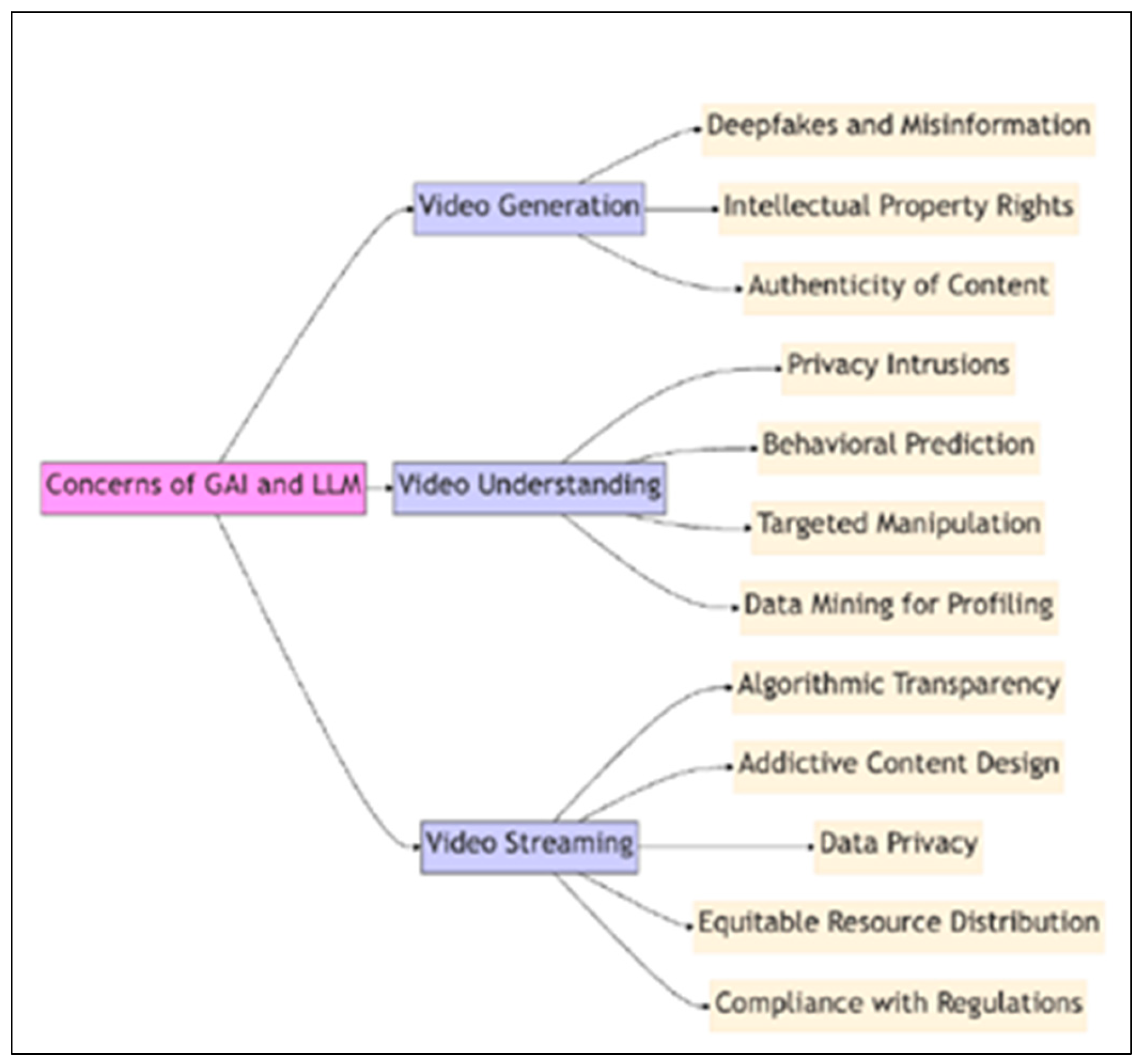

Figure 4 illustrates that GAI- and LLM-based solutions encounter various concerns that warrant careful consideration. Firstly, ensuring data privacy and security remains paramount due to the large volumes of sensitive information processed by these systems. There are challenges related to the interpretability of AI-generated decisions, necessitating transparency and accountability in their implementations. Moreover, the ethical implications of AI-driven decisions raise concerns surrounding their societal impact and responsible use. Lastly, ongoing research is required to address the robustness and reliability of GAI- and LLM-based solutions to ensure their efficacy in real-world applications.

Generative AI offers innovative solutions for network optimization, suggesting strategies across various domains such as component placement, load balancing, and routing. Firstly, it can propose the optimal placement of network components by analyzing traffic patterns and resource utilization, ensuring efficient data flow and reduced latency. Through generative algorithms, it can dynamically adjust component locations based on real-time demands, enhancing overall network performance. Generative AI excels in load balancing by intelligently distributing incoming traffic across available resources, preventing bottlenecks, and maximizing throughput. Generative AI offers a multifaceted approach to network optimization, leveraging advanced algorithms to enhance component placement, load balancing, and routing strategies, ultimately driving efficiency and scalability in modern network infrastructures

Generative AI presents a plethora of potential benefits across various domains, ranging from improved performance to enhanced efficiency and scalability. Firstly, Generative AI algorithms can optimize tasks that traditionally require extensive human intervention, leading to improved performance by automating complex processes with greater accuracy and speed. Generative AI fosters efficiency by streamlining workflows, reducing manual effort, and minimizing resource wastage. Generative AI enables scalability by facilitating the creation of scalable models and systems that can adapt to changing demands and accommodate growing datasets. Generative AI holds immense promise in driving improvements across various domains by enhancing performance, efficiency, and scalability, thereby unlocking new opportunities for innovation and growth

4. Generative AI for Network Topology Design and Optimization

Large Language Models (LLMs) offer a sophisticated architecture for network performance analysis and optimization, leveraging their advanced natural language processing capabilities and deep learning techniques to tackle complex challenges in this domain. Firstly, LLMs excel in data processing and analysis, capable of ingesting and understanding vast amounts of network-related data, including logs, telemetry, and configuration files. By parsing and contextualizing this information, LLMs can identify patterns, anomalies, and performance bottlenecks, providing valuable insights into network behavior. Additionally, LLMs can generate actionable recommendations for network optimization based on their understanding of network architectures, protocols, and best practices. By synthesizing this knowledge, LLMs can propose adjustments to routing configurations, Quality of Service (QoS) policies, or resource allocation strategies to improve network performance and efficiency.

LLMs can simulate and predict the impact of proposed changes on network performance, leveraging their ability to generate realistic scenarios and extrapolate outcomes based on historical data and network models. This predictive capability enables network operators to anticipate potential issues and proactively optimize their infrastructure to meet evolving demands. LLMs can facilitate communication and collaboration among network engineers and stakeholders by generating comprehensive reports, visualizations, and summaries of network performance metrics and optimization strategies[

13]. This enables informed decision-making and alignment of objectives across diverse teams. The architecture of LLMs presents a powerful framework for network performance analysis and optimization, offering capabilities such as data processing, recommendation generation, simulation, prediction, and communication. By harnessing the full potential of LLMs, organizations can enhance the reliability, scalability, and efficiency of their networks, driving innovation and competitiveness in the digital age.

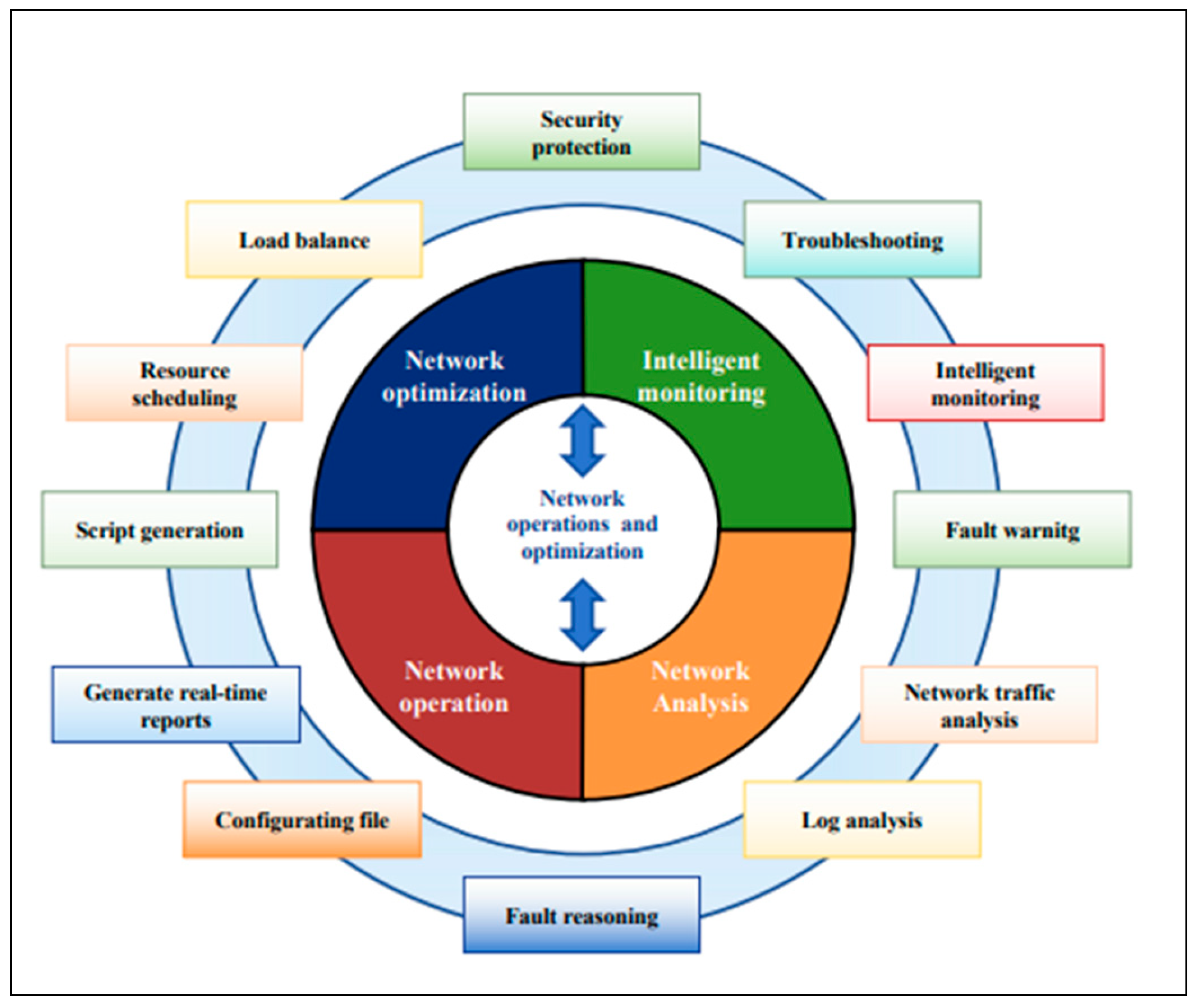

As shown in

Figure 5, networks utilizing Large Language Models (LLMs) have demonstrated a wide range of applications, effectively addressing longstanding challenges encountered in network operations and performance optimization. These applications include streamlined network monitoring, comprehensive network health assessment, insightful network analysis, and efficient resources

Figure 5 illustrates the application of Large Language Models (LLMs) in intelligent network operations and performance optimization represents a significant advancement in the field of network management. LLMs, such as GPT (Generative Pre-trained Transformer), possess natural language processing capabilities that enable them to comprehend and analyze textual data, including network logs, reports, and configuration files[

14]. By leveraging the semantic understanding encoded within these models, network operators can extract valuable insights, detect patterns, and derive actionable recommendations for optimizing network performance.

In

Figure 5, the key application of LLMs in intelligent network operations is anomaly detection. LLMs can learn the normal behavior of network traffic and performance metrics from historical data, allowing them to identify deviations indicative of potential anomalies or security threats. By analyzing textual descriptions of network events, LLMs can detect subtle anomalies that may go unnoticed by traditional monitoring systems, enabling proactive intervention to mitigate risks and maintain network integrity. LLMs play a crucial role in network configuration optimization by generating context-aware recommendations tailored to specific workloads, traffic patterns, and performance requirements. By analyzing textual propositions or descriptions of network configurations, LLMs can propose optimized settings for routers, switches, and other network devices, maximizing resource utilization, minimizing latency, and improving overall network efficiency.

The application of LLMs in intelligent network operations offers unprecedented capabilities for enhancing network resilience, efficiency, and performance through advanced anomaly detection, configuration optimization, and decision support mechanisms.

Anomaly detection with Large Language Models (LLMs) presents a potent approach to identifying bottlenecks and performance issues within networks. LLMs excel in comprehending diverse textual data sources, including logs, reports, and system alerts, enabling them to discern subtle deviations from normal behavior indicative of potential issues. LLMs can learn the typical patterns of network traffic and performance metrics, allowing them to recognize deviations that signify anomalies[

15]. By analyzing textual descriptions of events and performance data, they can pinpoint irregularities such as unexpected spikes in traffic, unusual patterns in resource utilization, or deviations from established performance benchmarks. Integrating the functionalities outlined above, as illustrated in

Figure 6, we have devised an innovative Cloud-Edge-Device intelligent network assessment system based on Large Language Models (LLMs). This pioneering network infrastructure amalgamates LLM technology with cloud computing, edge computing, and terminal devices. Leveraging LLMs' proficiency in textual comprehension and generation, this system facilitates precise evaluation and efficient management of network conditions. At the network edge, tasks are assigned, initiating LLM optimization processes such as pruning and distillation. Various terminal devices, including smartphones, cameras, and drones, gather real-time network data such as latency, packet loss, and bandwidth usage. This raw data undergoes initial processing at the edge, including cleaning and format conversion, laying the groundwork for subsequent analysis and evaluation.

The cloud-based LLM engages in data processing, extracting pertinent network optimization insights by parsing linguistic and structural cues within textual data. It builds an extensive knowledge repository, fostering the training of LLMs tailored to network conditions.

Figure 6, Trained on vast textual datasets, the cloud-based LLM comprehends and processes natural language, combining edge device data with historical records for comprehensive network status analysis.

By discerning inherent patterns and semantic cues in network data, the LLM accurately identifies issues, forecasts performance trends, and delivers evaluation outcomes. These insights are conveyed to users through a visual interface, offering real-time updates, historical trends, and risk assessments. When anomalies arise, the LLM issues warnings and provides decision support, empowering users to swiftly address issues and optimize network management efficiency.

Leveraging Large Language Models (LLMs), network configuration optimizations tailored to specific workloads and requirements can be achieved with unprecedented precision and efficiency. By considering factors like traffic patterns, latency sensitivity, and throughput demands, LLMs can propose configurations that maximize resource utilization and meet performance targets. LLMs can factor in constraints and preferences specified by network administrators, ensuring that suggested optimizations align with organizational policies and operational considerations. LLM-based approaches offer a sophisticated framework for guiding network configuration optimizations, leveraging natural language understanding to tailor recommendations to specific contexts and objectives, ultimately enhancing network performance and efficiency.

The utilization of Large Language Models (LLMs) promises a myriad of benefits across network management, including improved resource utilization, reduced latency, and proactive network management strategies. Firstly, LLMs can optimize resource utilization by analyzing vast amounts of data to identify inefficiencies and suggest refinements in network configurations. LLMs contribute to latency reduction by proactively identifying potential bottlenecks and performance issues within the network. LLMs facilitate proactive network management by leveraging predictive analytics to anticipate future demands and trends. By analyzing historical data and contextual information, LLMs can forecast potential challenges and recommend preemptive measures to ensure smooth network operation, ultimately enhancing reliability and user satisfaction.

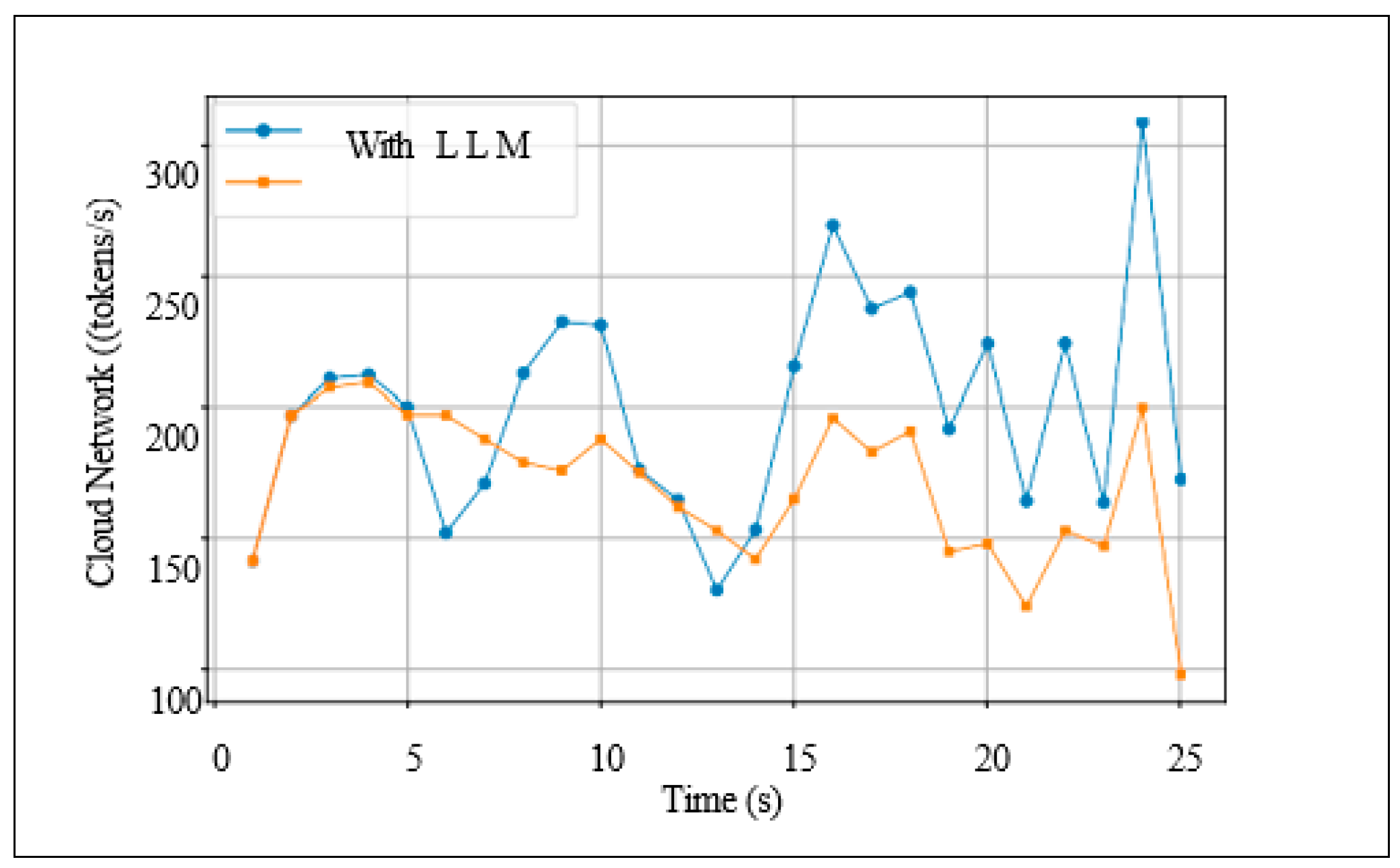

This discrepancy suggests potential differences in training methodologies or response mechanisms among the models. It implies that AI may have been trained on a diverse range of prompts or possesses a more flexible response mechanism, whereas the Dynamic Cloud network, represented by AI and LLM, may have been designed to generate responses of more consistent lengths.

5. Evaluation and Comparison of Traditional Methods

Evaluating the effectiveness of the proposed Generative AI and Large Language Model (LLM) approach requires a comprehensive methodology that assesses various aspects of performance, efficiency, and usability. Firstly, quantitative metrics such as resource utilization, latency reduction, and throughput improvement can gauge the tangible benefits brought by AI-driven optimizations. This involves comparing performance before and after implementation across different network configurations and workloads. By combining quantitative measurements, qualitative feedback, and experimental validation, a comprehensive evaluation methodology can provide a holistic understanding of the effectiveness of the proposed Generative AI and LLM approach in optimizing network configurations and performance.

Table 1 compares Generative AI and LLM approaches in the context of optimizing dynamic cloud networks. It highlights key aspects such as training data requirements, complexity of models, Flexibility in generating solutions, interpretability of results, resource demands, and adaptability to evolving environments. While Generative AI often relies on extensive datasets and complex architectures to generate diverse solutions, LLM approaches leverage pre-existing knowledge for quicker insights with relatively simpler models.

AI-driven optimization offers several advantages over traditional static configurations in terms of performance and efficiency. Firstly, AI-driven approaches, such as Generative AI and Large Language Models (LLMs), adapt dynamically to changing network conditions and workloads. They can analyze real-time data to identify bottlenecks and inefficiencies, leading to optimized configurations that enhance overall network performance. By optimizing configurations based on actual usage patterns and requirements, AI-driven approaches can allocate resources more effectively, minimizing waste and improving scalability. AI-driven optimization offers superior performance and efficiency compared to traditional static configurations, thanks to its adaptability, proactive nature, and ability to optimize resource allocation based on real-time data analysis.

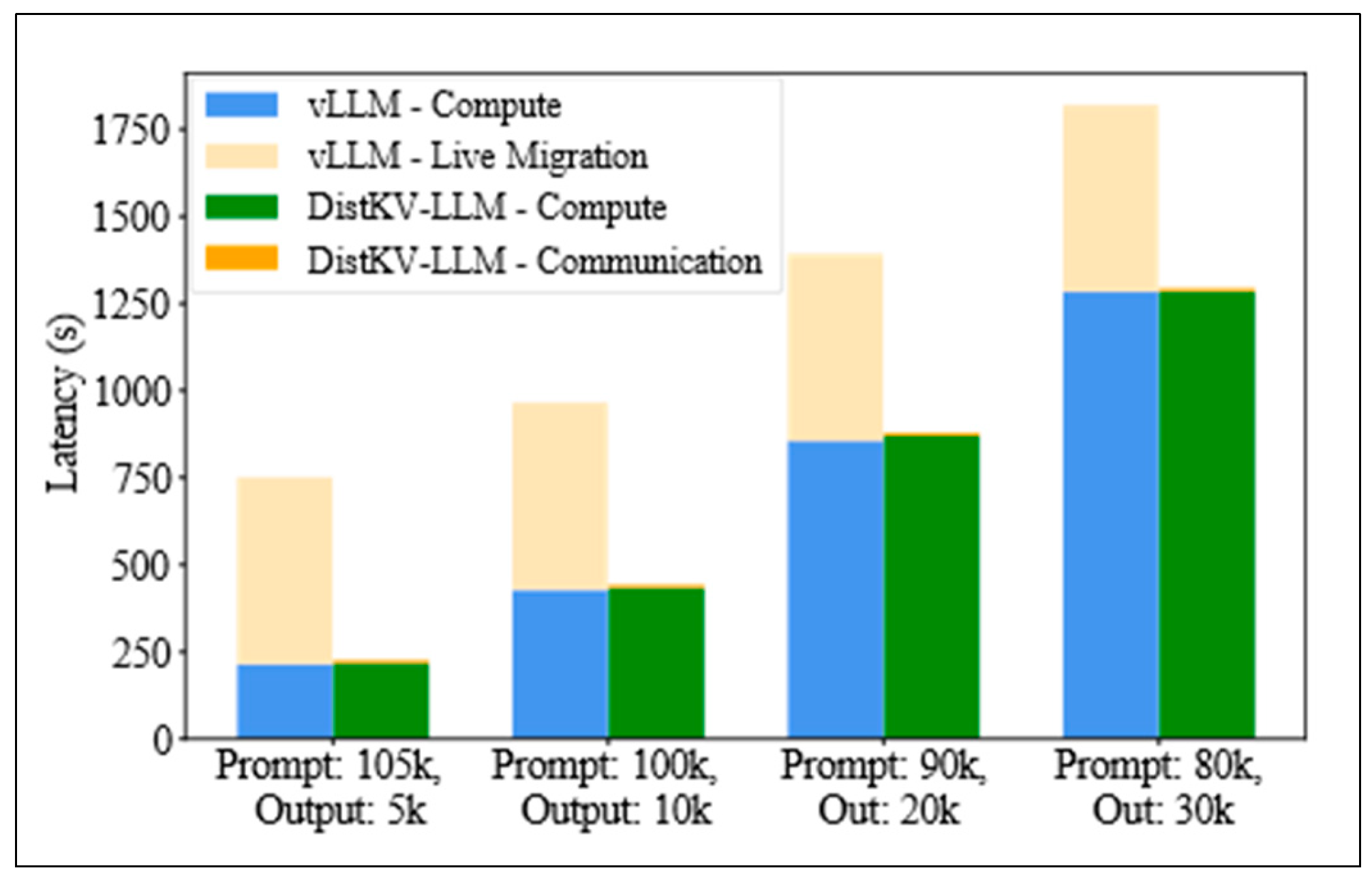

Figure 7, provides a comparative analysis of Large Language Models (LLM) and traditional cloud optimization strategies regarding migration efficiency. It illustrates the performance of LLM and cloud optimization techniques in terms of migration speed, resource utilization, and overall network stability. LLM exhibits notable advantages in minimizing migration time and optimizing resource allocation during migration tasks. Conversely, traditional cloud optimization methods often encounter bottlenecks and inefficiencies during migration processes. The figure underscores the transformative impact of LLM in streamlining migration operations, leading to enhanced network agility and performance.

Generative AI and Large Language Models (LLMs) hold immense promise in network optimization, but they also present certain limitations and challenges. The complexity and scale of network infrastructure pose challenges in training and deploying AI models effectively. LLMs require large datasets and computational resources for training, which may be prohibitive for some organizations. Generative AI and LLMs require access to large amounts of data, raising potential privacy risks if not handled properly. Maintaining the accuracy and relevance of AI-driven optimizations over time requires continuous monitoring and adaptation

6. Case Studies and Simulations

In our paper on dynamic cloud network optimization utilizing Generative AI and LLMs, we present two Compelling case studies and a simulation to illustrate the efficacy of our proposed approach. In the first case study, we simulate a scenario where our network encounters a sudden surge in traffic due to a DDoS attack

A. Dynamic Throughput Evolution: LLM vs Traditional Cloud Network

The graph illustrates the dynamic evolution of throughput, comparing the performance of Large Language Models (LLM) with traditional cloud networks over time. LLM showcases a remarkable trend of increasing throughput efficiency as time progresses, indicative of its adaptive optimization capabilities. In contrast, traditional cloud networks exhibit relatively static throughput patterns, suggesting limited responsiveness to changing network demands. This comparison underscores the transformative potential of LLM in revolutionizing network performance by continually enhancing throughput rates and adapting to fluctuating workloads. The graph serves as a visual representation of the tangible benefits LLM can offer in terms of improving data transfer rates and overall network responsiveness, highlighting its significance in the realm of modern network optimization.

Figure 8 illustrates the graph that depicts the throughput over time comparison between Large Language Models (LLM) and traditional cloud network architectures. As time progresses, LLM demonstrates a consistent increase in throughput efficiency, surpassing that of conventional cloud networks. This trend highlights LLM's capability to dynamically adapt and optimize network resources, resulting in improved data transfer rates over time. In contrast, traditional cloud networks exhibit relatively static throughput patterns, indicating limited adaptability to changing network demands. The graph underscores LLM's potential to revolutionize network performance by continually enhancing throughput rates and responsiveness to workload fluctuations. Leveraging LLMs for real-time anomaly detection, our system swiftly identifies abnormal traffic patterns and automatically reconfigures network settings to mitigate the impact, ensuring minimal downtime and maintaining network stability. The second case study focuses on workload-specific optimization, where we simulate various workload scenarios such as batch processing and real-time streaming. Additionally, our simulation showcases how these case studies and simulations serve as compelling evidence of the effectiveness of our proposed approach in achieving dynamic cloud network optimization, empowering organizations to adapt to evolving demands and conditions seamlessly.

7. Future Direction and Implication

Future research directions in the field of dynamic cloud network optimization could explore integration with cloud orchestration platforms to enhance automation and orchestration capabilities. By integrating AI-driven optimization techniques with platforms like Kubernetes or OpenStack, organizations can achieve seamless management of cloud resources, dynamic scaling, and efficient deployment of network configurations. The integration of AI in network management brings forth ethical considerations and potential security implications that warrant careful examination. One ethical concern revolves around privacy, as AI algorithms often require access to vast amounts of network data, raising questions about data ownership, consent, and the potential for unauthorized access. Moreover, the opaque nature of AI decision-making poses challenges for accountability and transparency, especially in critical network operations where human oversight is essential. Overall, integrating AI-driven optimization with cloud orchestration platforms and advancing real-time network traffic analysis could significantly enhance the efficiency and resilience of dynamic cloud networks

7. Conclusion

In conclusion, the integration of Generative Artificial Intelligence (AI) and Large Language Models (LLMs) presents a groundbreaking paradigm shift in the optimization of dynamic cloud networks. Departing from traditional static methodologies, these innovative approaches offer continuous adaptation and optimization, empowering organizations to achieve unprecedented levels of efficiency, resilience, and scalability in their cloud infrastructures. While significant progress has been made, future work should focus on enhancing the interpretability, privacy-preserving techniques, robustness, scalability, and efficiency of AI-driven optimizations. Additionally, exploring hybrid approaches that combine the strengths of Generative AI and LLMs with other optimization techniques holds promise for unlocking new possibilities in network optimization. Addressing these areas of research will further advance the effectiveness and applicability of AI-driven optimization techniques in dynamic cloud environments, paving the way for even greater innovation and performance in the future.

Author Contributions

The corresponding author developed the scope and objectives of the literature review, conducted a comprehensive literature search, analyzed and synthesized the findings, and wrote the manuscript. The co-authors provided guidance on structuring and outlining the review, ensuring that critical areas were covered. They also provided valuable feedback and editing on manuscript drafts. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- M. Xu et al., "Unleashing the power of edge-cloud generative AI in mobile networks: A survey of AI services," IEEE Communications Surveys & Tutorials, 2024. [CrossRef]

- P. Zhou et al., "A Survey on Generative AI and LLM for Video Generation, Understanding, and Streaming," arXiv preprint arXiv:2404.16038, 2024.

- H. Du et al., "The age of generative AI and AI-generated everything," arXiv preprint arXiv:2311.00947, 2023.

- G. Bai et al., "Beyond efficiency: A systematic survey of resource-efficient large language models," arXiv preprint arXiv:2401.00625, 2024.

- Y. Hong, J. Wu, and R. Morello, "LLM-Twin: Mini-Giant Model-driven Beyond 5G Digital Twin Networking Framework with Semantic Secure Communication and Computation," arXiv preprint arXiv:2312.10631, 2023.

- F. Alwahedi, A. Aldhaheri, M. A. Ferrag, A. Battah, and N. Tihanyi, "Machine learning techniques for IoT security: Current research and future vision with generative AI and large language models," Internet of Things and Cyber-Physical Systems, 2024. [CrossRef]

- J. Sauvola, S. Tarkoma, M. Klemettinen, J. Riekki, and D. Doermann, "Future of software development with generative AI," Automated Software Engineering, vol. 31, no. 1, p. 26, 2024. [CrossRef]

- M. Xu et al., "A survey of resource-efficient LLM and multimodal foundation models," arXiv preprint arXiv:2401.08092, 2024.

- B. Li, Y. Jiang, V. Gadepally, and D. Tiwari, "Toward Sustainable GenAI using Generation Directives for Carbon-Friendly Large Language Model Inference," arXiv preprint arXiv:2403.12900, 2024.

- Matharaarachchi et al., "Optimizing Generative AI Chatbots for Net-Zero Emissions Energy Internet-of-Things Infrastructure," Energies, vol. 17, no. 8, p. 1935, 2024. [CrossRef]

- S. Sagi, "Advancing AI: Enhancing Large Language Model Performance through GPU Optimization Techniques". [CrossRef]

- M. Ishaani, B. Omidvar-Tehrani, and A. Anubhai, "Evaluating human-AI partnership for LLM-based code migration," 2024.

- S. Long et al., "6G comprehensive intelligence: network operations and optimization based on Large Language Models," arXiv preprint arXiv:2404.18373, 2024.

- Y. Lu et al., "Computing in the Era of Large Generative Models: From Cloud-Native to AI-Native," arXiv preprint arXiv:2401.12230, 2024.

- Jeong, "A Study on the Implementation of Generative AI Services Using an Enterprise Data-Based LLM Application Architecture," arXiv preprint arXiv:2309.01105, 2023. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).