Submitted: 11 June 2024

Posted: 12 June 2024


Abstract

Keywords:

1. Introduction

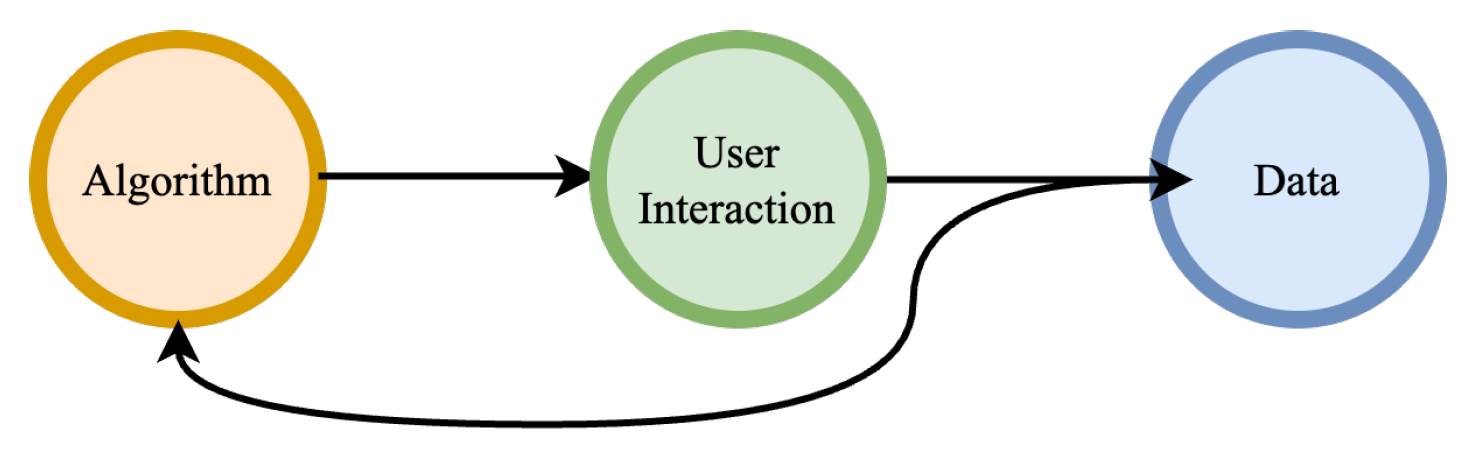

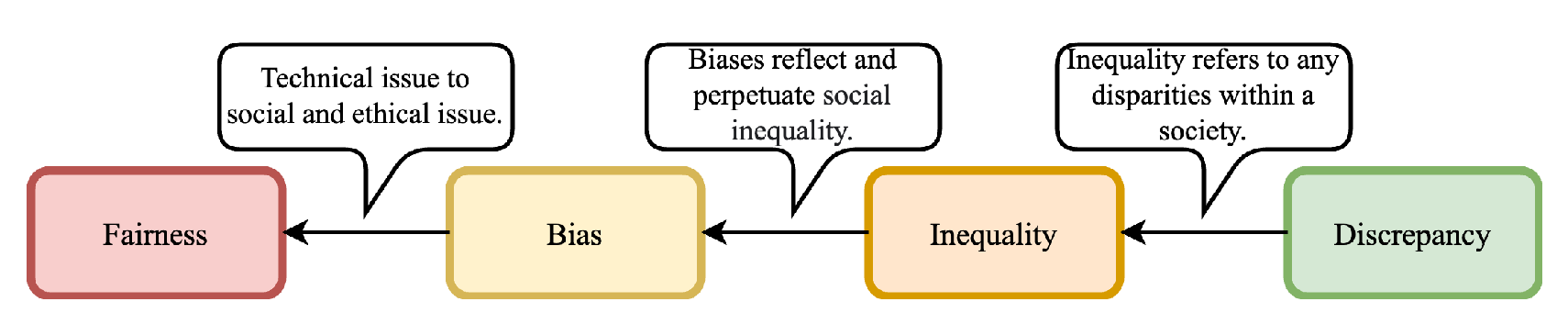

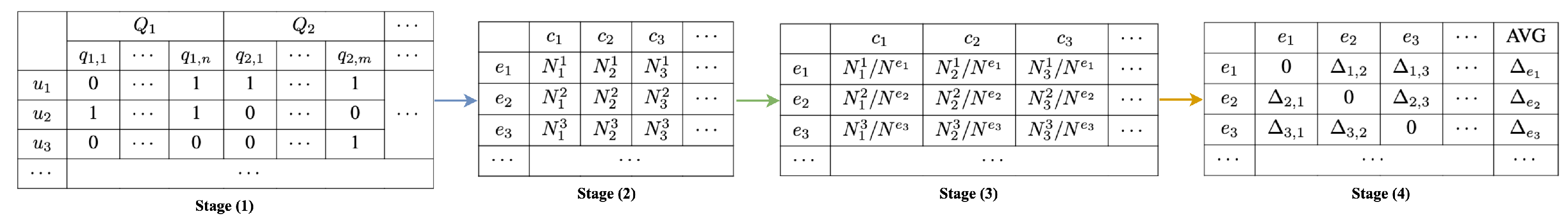

2. Quantifying the Cross-Sectoral Intersecting Discrepancies

Algorithm 1. Quantifying the Intersecting Discrepancies within Multiple Groups

3. Related Work

4. Experiments

4.1. The Anonymous project

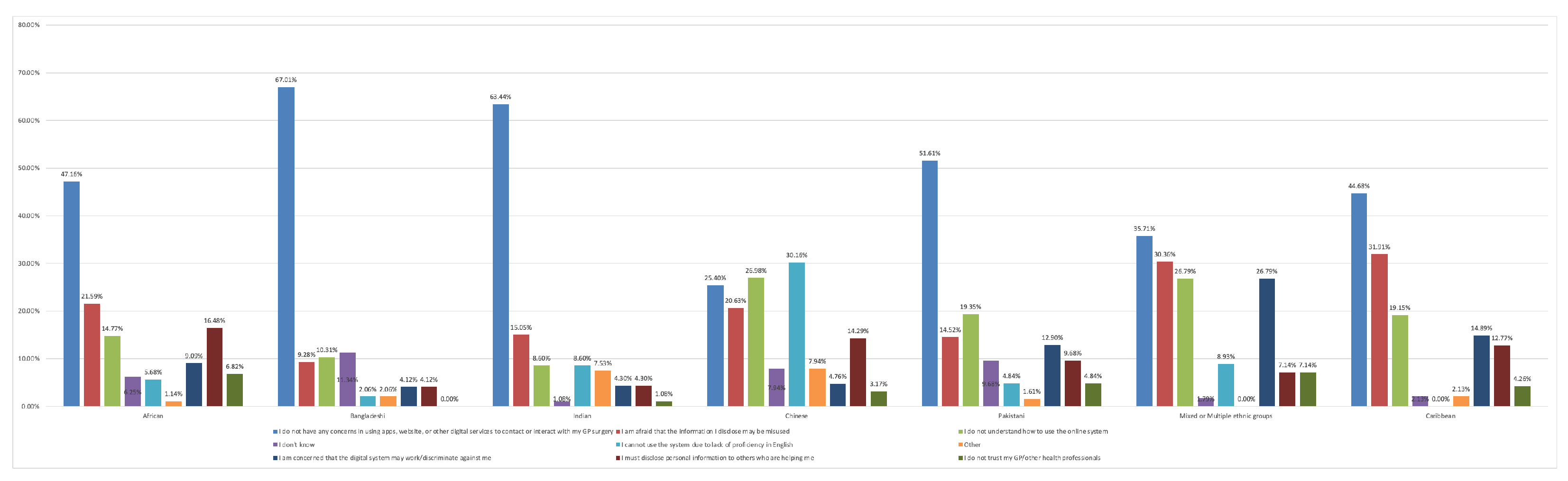

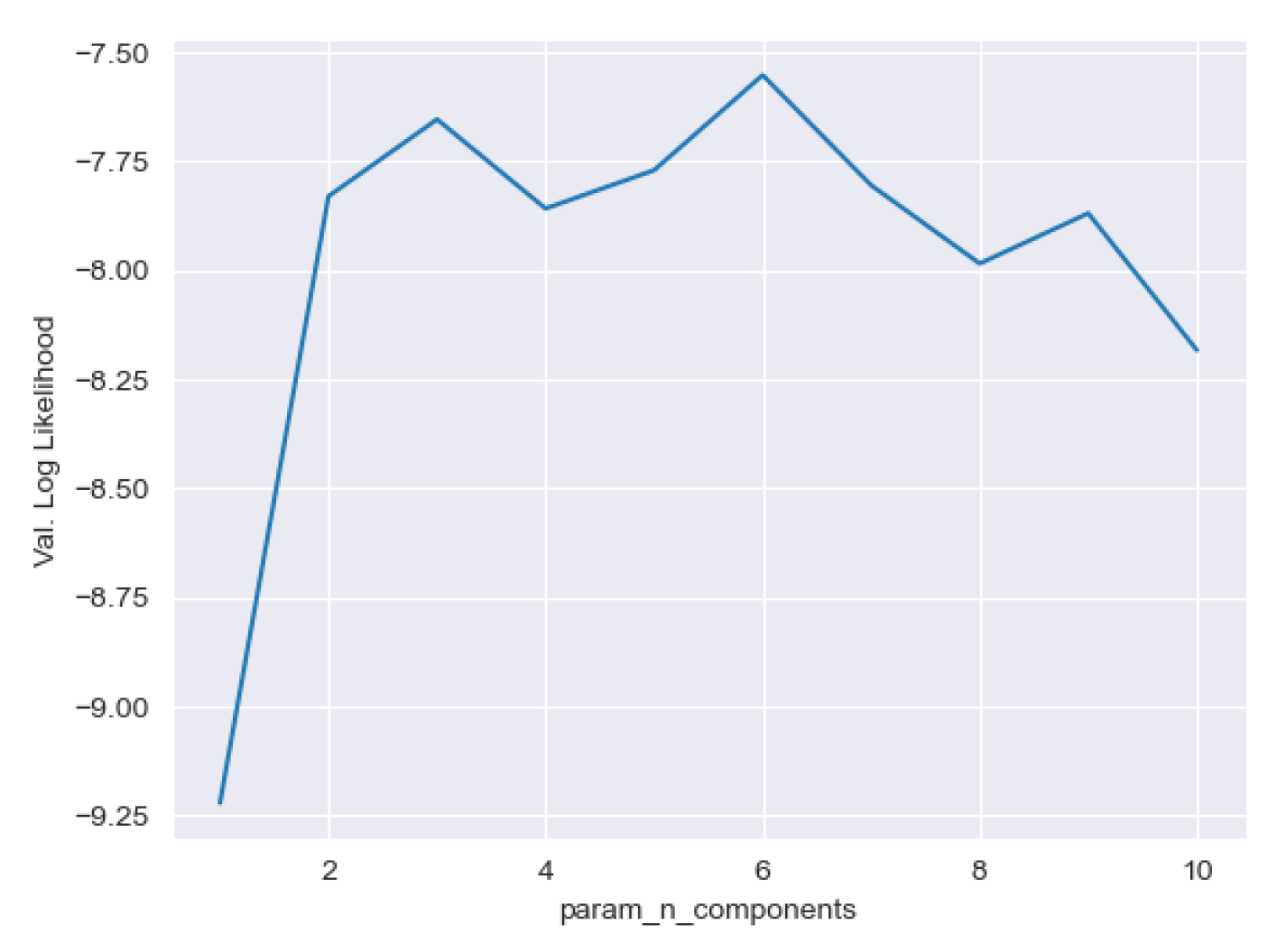

4.2. EVENS

4.3. Census 2021 (England and Wales)

5. Conclusions and Limitations

Appendix A The Anonymous project

| Anonymous project’s Target Ethnic Group | England | Scotland | Total |

|---|---|---|---|

| African | 176 | 37 | 213 |

| Bangladeshi | 97 | 41 | 138 |

| Indian | 93 | 40 | 133 |

| Chinese | 63 | 39 | 102 |

| Pakistani | 62 | 40 | 102 |

| Caribbean | 47 | 32 | 79 |

| Mixed or Multiple ethnic groups | 56 | 55 | 111 |

| Total | 594 | 284 | 878 |

Appendix B Census 2021 (England and Wales)

| | Pearson | Spearman |

|---|---|---|

| 0-20% | 0.9802 | 1 |

| 20-40% | 0.9769 | 1 |

| 40-60% | 0.9949 | 0.9 |

| 60-80% | 0.9829 | 1 |

| 80-100% | 0.9830 | 1 |
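
The coefficients above can be checked against the Census and Deprivation discrepancy matrices reported in this paper (the 0-20% to 80-100% bands). The sketch below assumes, based only on the numbers agreeing, that each coefficient compares one band's Census row with the same band's Deprivation row, diagonal zero included. The helper names `pearson`, `ranks`, and `spearman` are illustrative and are not taken from the authors' code.

```python
from math import sqrt

# Rows copied from the Census / Deprivation discrepancy table in this paper.
BANDS = ["0-20%", "20-40%", "40-60%", "60-80%", "80-100%"]
CENSUS = [
    [0.0000, 0.4865, 0.6783, 0.8603, 0.9347],
    [0.4865, 0.0000, 0.1371, 0.3934, 0.5565],
    [0.6783, 0.1371, 0.0000, 0.1173, 0.2744],
    [0.8603, 0.3934, 0.1173, 0.0000, 0.0445],
    [0.9347, 0.5565, 0.2744, 0.0445, 0.0000],
]
DEPRIVATION = [
    [0.0000, 0.2001, 0.2896, 0.3877, 0.5064],
    [0.2001, 0.0000, 0.0313, 0.1123, 0.2203],
    [0.2896, 0.0313, 0.0000, 0.0314, 0.0963],
    [0.3877, 0.1123, 0.0314, 0.0000, 0.0283],
    [0.5064, 0.2203, 0.0963, 0.0283, 0.0000],
]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; assumes no ties, which holds for every row used here."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(x, y):
    """Spearman correlation computed as the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


for band, census_row, deprivation_row in zip(BANDS, CENSUS, DEPRIVATION):
    r = pearson(census_row, deprivation_row)
    rho = spearman(census_row, deprivation_row)
    print(f"{band}: Pearson={r:.4f}, Spearman={rho:.4f}")
```

Under this reading, the 40-60% band is the only one in which the two rows rank their entries differently, which is consistent with it being the only band with a Spearman coefficient below 1.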


Appendix C Experiment Details

| Hardware | |

|---|---|

| CPU | 12th Gen Intel(R) Core(TM) i9-12950HX 2.30 GHz |

| GPU | NVIDIA GeForce RTX 3080 Ti Laptop GPU |

| Memory | 1TB |

| RAM | 64.0 GB |

| OS | Windows 11 Pro |


| 1 | Project name removed to maintain anonymity |

| 2 | Question 21: Which of the following concerns do you have about communicating with your general practice (GP) through apps, websites, or other online services? |

| 3 | The StepMix Python package (https://stepmix.readthedocs.io/en/latest/index.html) is used to implement LCA in this research. |

| 4 | More dataset details can be found in Appendix A. |

| 5 |

| | England: African | England: Bangladeshi | England: Caribbean | England: Chinese | England: Indian | England: Mixed Group | England: Pakistani | England: AVG | Scotland: African | Scotland: Bangladeshi | Scotland: Caribbean | Scotland: Chinese | Scotland: Indian | Scotland: Mixed Group | Scotland: Pakistani | Scotland: AVG |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| African | 0.0000 | 0.0899 | 0.0383 | 0.1810 | 0.0547 | 0.0590 | 0.0517 | 0.0678 | 0.0000 | 0.0666 | 0.0296 | 0.1956 | 0.0342 | 0.0118 | 0.0227 | 0.0515 |

| Bangladeshi | 0.0899 | 0.0000 | 0.1359 | 0.3734 | 0.0200 | 0.2738 | 0.0308 | 0.1320 | 0.0666 | 0.0000 | 0.0324 | 0.3043 | 0.0989 | 0.0563 | 0.0118 | 0.0815 |

| Caribbean | 0.0383 | 0.1359 | 0.0000 | 0.2456 | 0.0764 | 0.1131 | 0.0951 | 0.1006 | 0.0296 | 0.0324 | 0.0000 | 0.3430 | 0.0191 | 0.0546 | 0.0159 | 0.0706 |

| Chinese | 0.1810 | 0.3734 | 0.2456 | 0.0000 | 0.3700 | 0.1201 | 0.2459 | 0.2194 | 0.1956 | 0.3043 | 0.3430 | 0.0000 | 0.3717 | 0.1334 | 0.2438 | 0.2274 |

| Indian | 0.0547 | 0.0200 | 0.0764 | 0.3700 | 0.0000 | 0.2139 | 0.0311 | 0.1094 | 0.0342 | 0.0989 | 0.0191 | 0.3717 | 0.0000 | 0.0821 | 0.0575 | 0.0948 |

| Mixed Group | 0.0590 | 0.2738 | 0.1131 | 0.1201 | 0.2139 | 0.0000 | 0.1987 | 0.1398 | 0.0118 | 0.0563 | 0.0546 | 0.1334 | 0.0821 | 0.0000 | 0.0209 | 0.0513 |

| Pakistani | 0.0517 | 0.0308 | 0.0951 | 0.2459 | 0.0311 | 0.1987 | 0.0000 | 0.0933 | 0.0227 | 0.0118 | 0.0159 | 0.2438 | 0.0575 | 0.0209 | 0.0000 | 0.0532 |
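
The AVG column in the table above is not defined in the surviving text. A minimal sketch, assuming (from the reported numbers only) that AVG is the mean of a row over all groups with the zero self-comparison included; `row_average` is a hypothetical helper name, not the authors' code.

```python
# England row for the African group, copied from the table above
# (order: African, Bangladeshi, Caribbean, Chinese, Indian, Mixed Group, Pakistani).
ENGLAND_AFRICAN = [0.0000, 0.0899, 0.0383, 0.1810, 0.0547, 0.0590, 0.0517]


def row_average(row, include_self=True):
    """Mean discrepancy of one group against the others.

    include_self=True divides by the number of groups (the zero diagonal
    counts); include_self=False divides by the number of other groups.
    """
    divisor = len(row) if include_self else len(row) - 1
    return sum(row) / divisor


print(round(row_average(ENGLAND_AFRICAN), 4))                      # diagonal included
print(round(row_average(ENGLAND_AFRICAN, include_self=False), 4))  # diagonal excluded
```

Only the variant that includes the diagonal reproduces the reported 0.0678 for this row, so the AVG values slightly understate the mean discrepancy against the other groups.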

| | England: African | England: Bangladeshi | England: Caribbean | England: Chinese | England: Indian | England: Pakistani | England: AVG | Scotland: African | Scotland: Bangladeshi | Scotland: Caribbean | Scotland: Chinese | Scotland: Indian | Scotland: Pakistani | Scotland: AVG |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| African | 0.0000 | 0.0112 | 0.0014 | 0.0038 | 0.0062 | 0.0018 | 0.0040 | 0.0000 | 0.0246 | 0.5408 | 0.0300 | 0.0637 | 0.1800 | 0.1398 |

| Bangladeshi | 0.0112 | 0.0000 | 0.0102 | 0.0031 | 0.0227 | 0.0090 | 0.0094 | 0.0246 | 0.0000 | 0.5283 | 0.0522 | 0.1539 | 0.2697 | 0.1714 |

| Caribbean | 0.0014 | 0.0102 | 0.0000 | 0.0047 | 0.0030 | 0.0002 | 0.0032 | 0.5408 | 0.5283 | 0.0000 | 0.3946 | 0.4505 | 0.2506 | 0.3608 |

| Chinese | 0.0038 | 0.0031 | 0.0047 | 0.0000 | 0.0138 | 0.0048 | 0.0050 | 0.0300 | 0.0522 | 0.3946 | 0.0000 | 0.0662 | 0.1036 | 0.1078 |

| Indian | 0.0062 | 0.0227 | 0.0030 | 0.0138 | 0.0000 | 0.0040 | 0.0083 | 0.0637 | 0.1539 | 0.4505 | 0.0662 | 0.0000 | 0.0589 | 0.1322 |

| Pakistani | 0.0018 | 0.0090 | 0.0002 | 0.0048 | 0.0040 | 0.0000 | 0.0033 | 0.1800 | 0.2697 | 0.2506 | 0.1036 | 0.0589 | 0.0000 | 0.1438 |

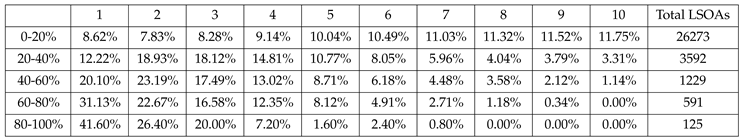

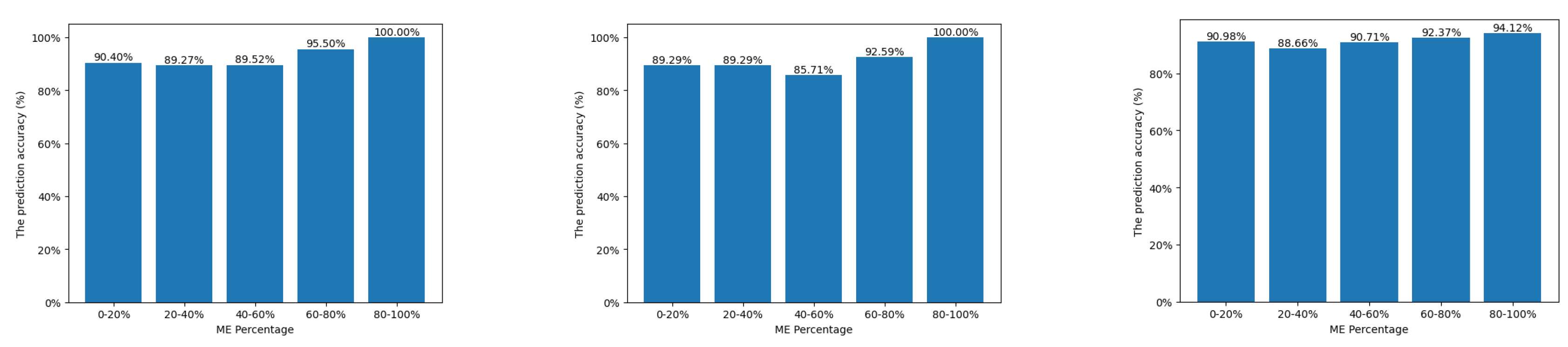

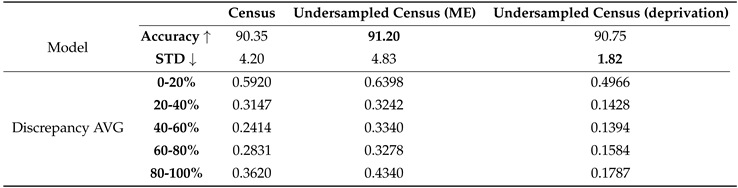

| | Census: 0-20% | Census: 20-40% | Census: 40-60% | Census: 60-80% | Census: 80-100% | Census: AVG | Deprivation: 0-20% | Deprivation: 20-40% | Deprivation: 40-60% | Deprivation: 60-80% | Deprivation: 80-100% | Deprivation: AVG |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0-20% | 0.0000 | 0.4865 | 0.6783 | 0.8603 | 0.9347 | 0.5920 | 0.0000 | 0.2001 | 0.2896 | 0.3877 | 0.5064 | 0.2768 |

| 20-40% | 0.4865 | 0.0000 | 0.1371 | 0.3934 | 0.5565 | 0.3147 | 0.2001 | 0.0000 | 0.0313 | 0.1123 | 0.2203 | 0.1128 |

| 40-60% | 0.6783 | 0.1371 | 0.0000 | 0.1173 | 0.2744 | 0.2414 | 0.2896 | 0.0313 | 0.0000 | 0.0314 | 0.0963 | 0.0897 |

| 60-80% | 0.8603 | 0.3934 | 0.1173 | 0.0000 | 0.0445 | 0.2831 | 0.3877 | 0.1123 | 0.0314 | 0.0000 | 0.0283 | 0.1119 |

| 80-100% | 0.9347 | 0.5565 | 0.2744 | 0.0445 | 0.0000 | 0.3620 | 0.5064 | 0.2203 | 0.0963 | 0.0283 | 0.0000 | 0.1703 |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).