1. Introduction

In the context of robotics, Learning from Demonstration (LfD) refers to “

the paradigm in which robots acquire new skills by learning to imitate an expert" [

1], i.e., a robot learns to perform a task by watching a human’s actions rather than being explicitly programmed. LfD allows robots to acquire new skills or refine existing ones while reducing the need for manual programming of robot behaviors, ultimately eliminating the requirement for a robotic expert [

1].

LfD offers a distinct approach to programming robots compared to traditional manual programming methods. Traditional manual programming involves writing code or scripts to explicitly define the sequence of actions and movements for the robot to perform a task. It requires expertise in robot programming languages, kinematics, and dynamics. Moreover, writing code for complex tasks can be time-consuming, and any changes in the environment or task requirements demand extra re-programming effort [

2]. On another paradigm, optimization-based programming involves formulating the robot’s task as an optimization problem, where the objective is to minimize or maximize a certain objective [

3]. While this method optimizes the task execution based on various environments and task requirements and does not need re-programming, it still requires high robotic expertise to carefully formulate the task as an optimization problem and mathematically model the task and the environment in order to efficiently solve for the best solution.

LfD surpasses these limitations by allowing non-experts to teach the robot without mathematical formulation or any robotic knowledge. Also, the LfD algorithm learns and generalizes the task flexibly according to the environment and task requirements. Teaching a new task or refining a task takes significantly less effort, does not require robotic expertise, and can make the robotic system up and running in a relatively quicker manner. These benefits have been found to be useful in the industry, where efficiency and agility gain importance when using robots for industrial tasks [

4,

5].

Several notable reviews and surveys focus on LfD from different points of view and consider various aspects. The work in [

1] gives a general review of LfD and provides an updated taxonomy of the recent advances in the field. In [

6] the authors survey LfD for robotic manipulation, discussing the main conceptual paradigms in the LfD process. In [

7] the focus is on the applications of LfD in smart manufacturing. They introduce and compare the leading technologies developed to enhance the learning algorithms and discuss them in various industrial application cases. In [

8] the focus of the survey is on robotic assembly. Specifically, they discuss various approaches to demonstrate assembly behaviors and how to extract features to enhance LfD performance in assembly. They also analyze and discuss various methods and metrics to evaluate LfD performance. Authors in [

9] survey LfD advances with respect to human-robot collaborative tasks in manufacturing settings. They provide detailed insights into the role of various human interactions in different LfD methods. The technical nature of the survey makes it suitable for active researchers in LfD to seek new directions on making the LfD algorithms more collaborative.

While the existing studies provide valuable insights into various aspects of LfD, they often lack a comprehensive roadmap or practical guidance for practitioners. They mostly focus on presenting the state of the art without providing a clear roadmap for practitioners to implement LfD in their robotic tasks. Moreover, the technical depth of most of the studies requires a strong background in LfD, making it inaccessible to non-academic practitioners or researchers who are new to the field. Therefore, there is a need for a new study that not only consolidates existing knowledge but also bridges the gap between research and practice.

From a practical point of view, the recent advancements in the manufacturing industry create an increasing need for adaptable manufacturing robotic systems to perform flexibly with the variant demands of the market. The production schemes are shifting from mass production, where a fixed line of manufacturing is used to create products on a mass scale, to mass customization, where production is in smaller batches of different products according to the market need [

10,

11]. To retain the efficiency and cost-effectiveness of mass production schemes, robotic systems have to quickly adapt to new tasks and manufacturing requirements [

12,

13]. Such transition and requirements have led to a significant application of LfD, as a suitable solution, in the manufacturing industry. It means that the existing robotic tasks in the mass production scheme need to be transformed via LfD to meet the new requirements of mass customization, which is why it is necessary to provide guidance for industry practitioners to start deploying state-of-the-art LfD solutions in existing robotic tasks. Notably, among all the robotic systems, industrial manipulators are the most popular and versatile robot types widely used in manufacturing and production lines. Therefore, while the application of robotic systems is not limited to manipulators, it is beneficial to have the focus of this paper narrowed down to industrial manipulators, due to their critical role in the automation of manufacturing and production lines.

Motivated by the aforementioned considerations and in contrast to existing reviews, our work aims to offer a practical and structured approach to implementing LfD in manufacturing tasks. Unlike other reviews, our review takes the form of a comprehensive questionnaire-style guide, providing practitioners with a clear roadmap to integrate LfD-based robot manipulation. Tailored for moderate expertise requirements, this tutorial-style taxonomy offers step-by-step instructions, enabling both researchers and professionals to develop application-based LfD solutions. This review provides the readers with the main steps to define the problem and devise an LfD solution, as well as giving main research directions for refining the performance of the LfD. The refinement criteria are also tailored based on the practical application of LfD.

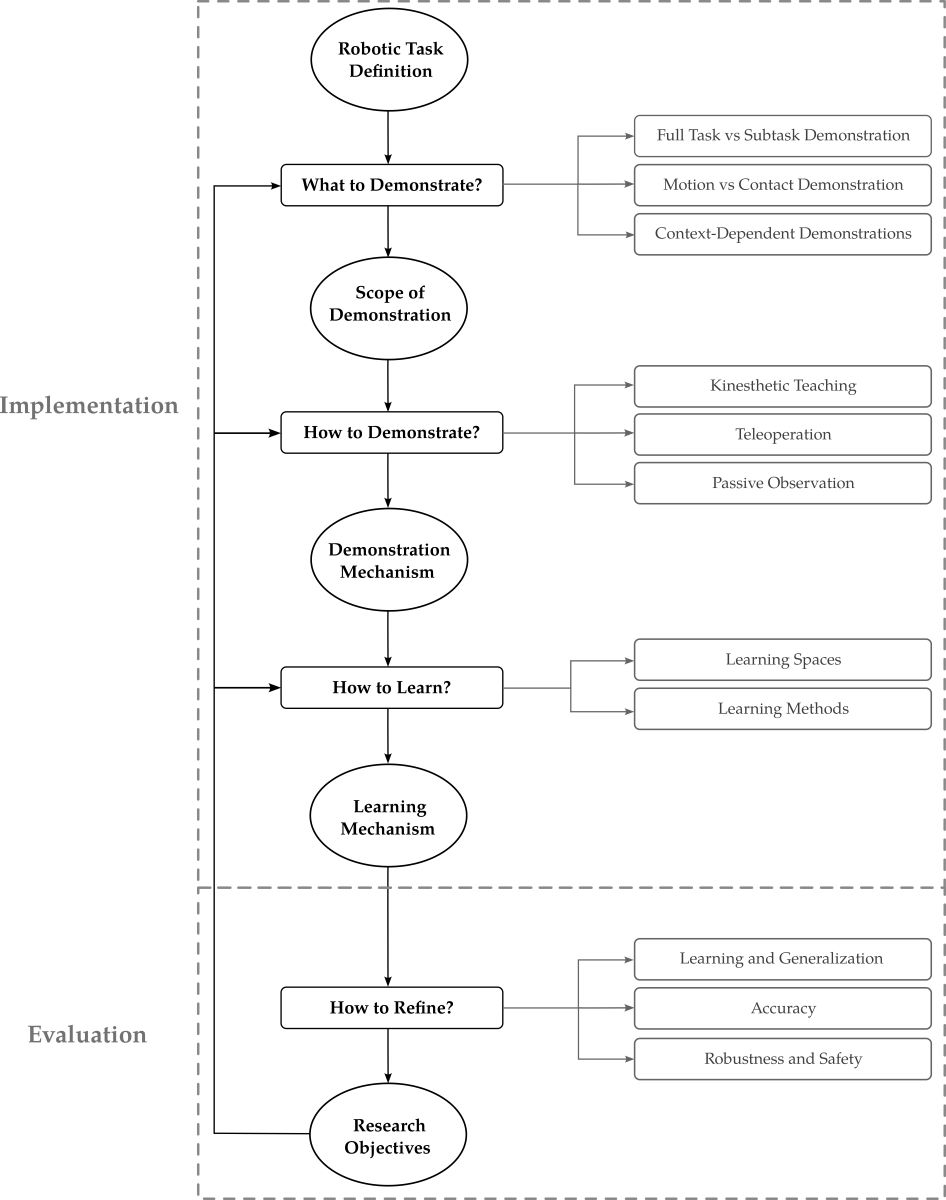

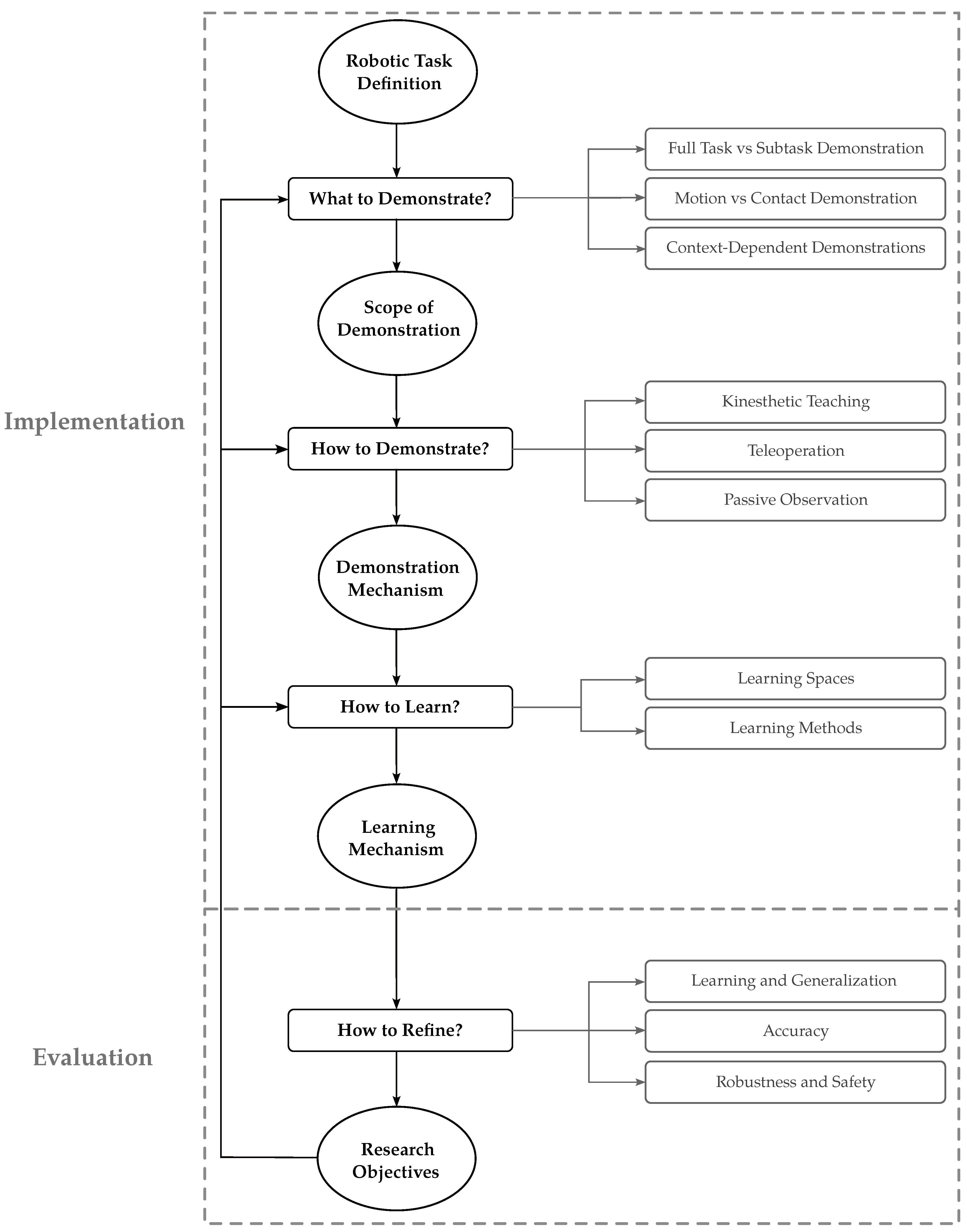

For this purpose, This paper explores how to integrate LfD into the robotization process using manipulators for manufacturing tasks, depicted in

Figure 1. First, the practitioner addresses the question of “What to demonstrate" to define the “Scope of Demonstration". Subsequently, the practitioner needs to answer the question of “How to Demonstrate" in order to devise a “Demonstration Mechanism". Accordingly, the question of “How to learn" equips the robotic manipulator with the proper “Learning mechanism". Even though the LfD process implementation is accomplished at this stage, the evaluation of LfD performance leads to the question of “How to Refine", which provides the research objectives and directions in which the performance of the LfD process can be further improved. Taking these points into account, the rest of the paper is structured as follows:

What to Demonstrate? (

Section 2): This section explores how to carefully define the task and identify certain features and characteristics that influence the design of the process.

How to Demonstrate? (

Section 3): Building upon the scope of demonstration, this section explores effective demonstration methods, considering task characteristics and their impact on robot learning.

How to Learn? (

Section 4): In this section, the focus shifts to implementing learning methods, enabling autonomous task execution based on human demonstrations.

How to Refine? (

Section 5): Concluding the structured approach, this section addresses refining LfD processes to meet industrial manufacturing requirements, outlining challenges and strategies for enhancing LfD solutions in manufacturing settings.

2. What to Demonstrate

This section focuses on the first step in developing an LfD solution, i.e., extracting the scope of the demonstration from the desired task. In this step, with a specific robotic task as the input, we explore how to determine the knowledge or skills a human teacher needs to demonstrate to the robot. The scope of demonstration establishes clear boundaries for what the robot should be able to accomplish after learning. Defining the scope is crucial because it sets the foundation for the entire LfD process. A well-defined scope ensures the provided demonstrations comprehensively and accurately capture the desired robot behavior. Conversely, a poorly defined scope can lead to incomplete or inaccurate demonstrations, ultimately limiting the effectiveness of the LfD solution.

To determine “What to demonstrate", three different aspects of the robotic task will be investigated as follows.

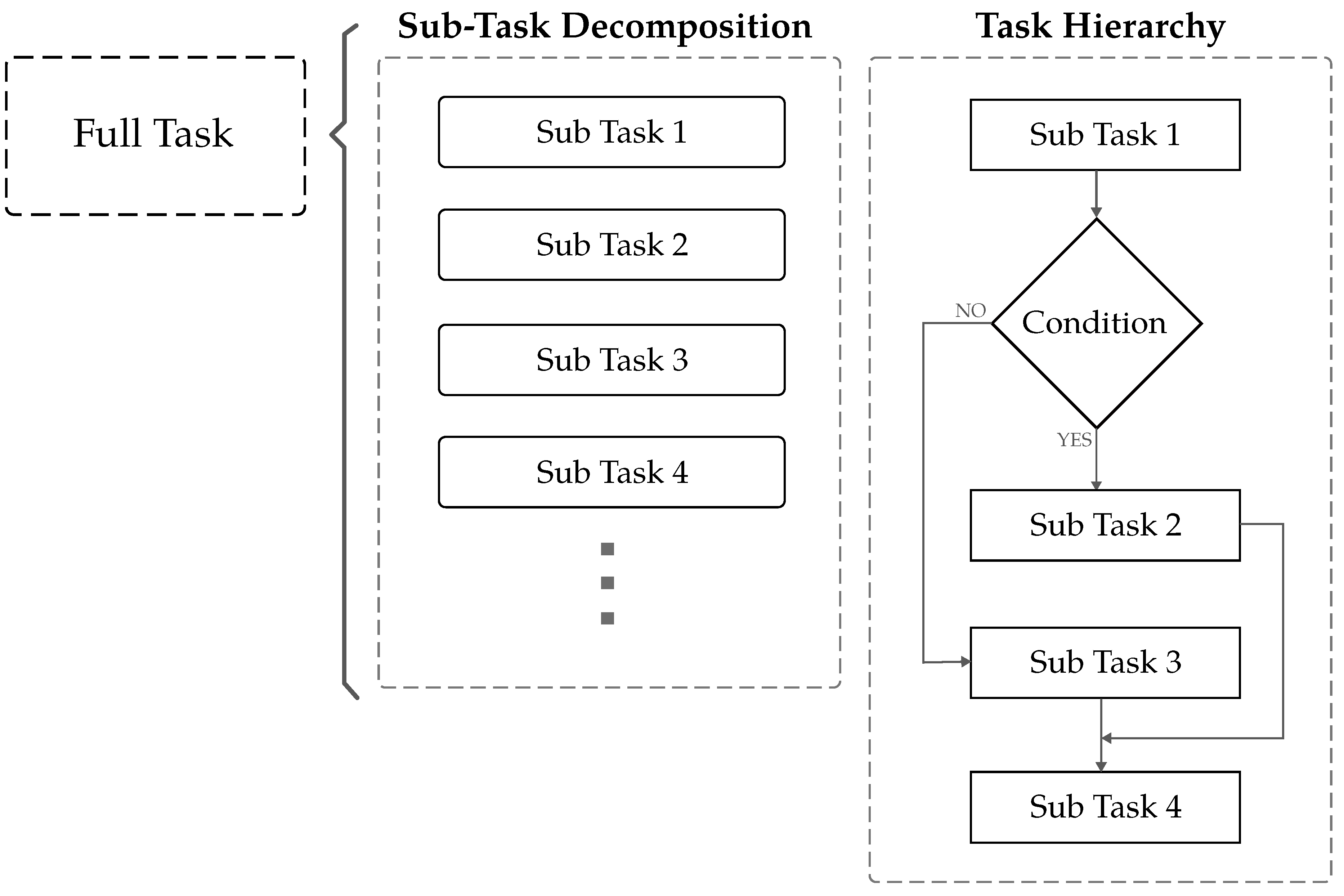

2.1. Full Task Versus Subtask Demonstration

A full robotic task can be decomposed into smaller steps called subtasks, along with their associated task hierarchy or their logical sequence (

Figure 2). In a full task demonstration, the human teacher demonstrates the entire process, including all subtasks in their logical order. In contrast, subtask demonstration focuses on teaching each subtask of the robotic task one at a time. Here we explore whether to demonstrate the full robotic task at once, or aim to demonstrate subtasks separately.

What Happens When Learning Full Task: In the case of full task demonstration, the LfD algorithm is required first to segment the task into smaller subtasks and learn them separately [

14,

15,

16,

17,

18,

19,

20,

21,

22,

23,

24]. Otherwise, training a single model on the entire task can lead to information loss and poor performance [

25]. Beyond identifying subtasks, a full robotic task involves the logical order in which they should be executed – the task hierarchy. The LfD algorithm is required to extract these relationships between subtasks to build the overall task logic [

14,

16,

17,

18,

22,

23]. This segmentation is achieved through spatial and temporal reasoning on demonstration data [

15,

17,

19,

20,

21,

22,

24,

26,

27,

28,

29,

30]. Spatial features help identify subtasks, while temporal features reveal the high-level structure and sequence.

For instance, consider demonstrating a pick-and-place task as a full task. The LfD algorithm can easily segment the demonstration into “reaching", “moving", and “placing" the object, along with their sequential task hierarchy. Because these subtasks involve clearly defined motions and follow a straightforward, linear order, the LfD algorithm can reliably extract the complete logic required to perform the entire pick-and-place task. On the other hand, consider a pick-and-insert task with tight tolerances. Precise insertion is challenging to demonstrate and requires retry attempts as recovery behavior. This creates a conditional task hierarchy. The successful insertion depends on achieving tight tolerances, and if the initial attempt fails, the LfD system needs to learn the recovery behavior of repositioning the object and attempting insertion again. Consequently, LfD’s reliance on automatic segmentation to extract the detailed task logic in such cases becomes less reliable. However, background information on the task characteristic can be provided as metadata by the human teacher and leveraged by the learning algorithm to improve the segmentation method in semantic terms [

20,

31].

What Happens When Learning Subtask: When subtasks are demonstrated individually, the human teacher manually breaks down the full task and provides separate demonstrations for each one, along with the associated task hierarchy. This isolates the LfD algorithm’s learning process, allowing it to focus on learning one specific subtask at a time [

32,

33,

34,

35,

36,

37,

38]. It is evident that this approach requires extra effort from the teacher compared to full task demonstration.

For complex or intricate tasks such as pick-and-insert with tight tolerances, the teacher can individually provide “reaching", “moving", and “inserting" subtasks along with recovery behaviors. While this requires the teacher to separately define the task hierarchy and provide demonstrations for each subtask, it yields several benefits. First, the teacher can focus on providing clear and accurate demonstrations for each isolated step. Second, it allows for a reliable demonstration of the conditional hierarchy involved in complex tasks [

39,

40].

When to Demonstrate Full Task versus Subtask: Demonstrating the entire robotic task at once can be efficient for teaching simple, sequential tasks, as the algorithm can segment and learn reliably. However, for complex tasks with conditional logic or unstructured environments, this approach struggles. Breaking the task into subtasks and demonstrating them individually is more effective in these cases. This removes the burden of segmentation from the learning algorithm and allows for better performance, especially when dealing with conditional situations. By teaching subtasks first, and then layering the task hierarchy on top, robots can handle more complex tasks and learn them more efficiently. In essence, full task demonstration is recommended for simple behaviors with linear task logic, while complex tasks are recommended to be decomposed into subtasks and demonstrated separately.

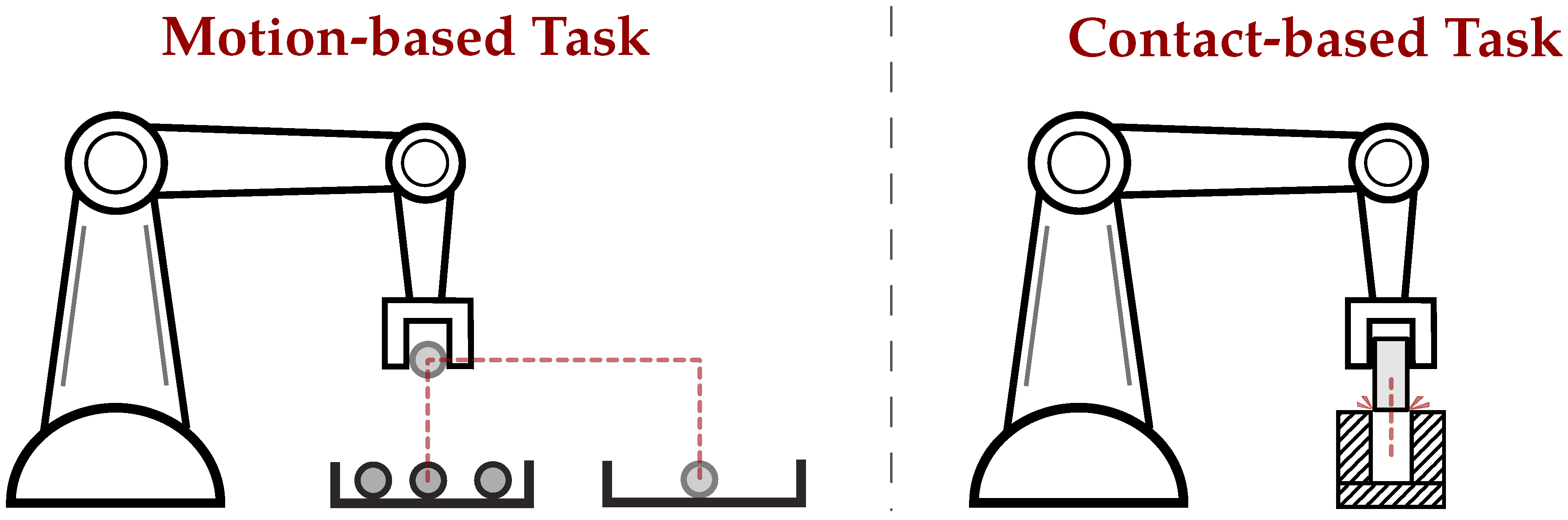

2.2. Motion-Based Versus Contact-Based Demonstration

Robot task demonstrations can be categorized into two main types: motion-based and contact-based. Motion-based tasks, like pick-and-place [

36,

37,

38], focus on teaching the robot’s movement patterns. The key information for success is captured in the robot’s trajectory and kinematics, with limited and controlled contact with the environment [

36,

37,

38,

41,

42,

43,

44,

45,

46,

47,

48]. Conversely, contact-based tasks, such as insertion [

33,

49,

50,

51,

52,

53,

54,

55,

56,

57,

58,

59,

60], require the robot to understand how to interact with objects. Here, task success also relies on understanding forces and contact points [

60]. Simply replicating the motion does not suffice and the robot needs to learn to apply appropriate force or adapt to tight tolerances to avoid failure. This highlights the importance of contact-based demonstrations for tasks where interaction with the environment is crucial. The comparison of motion-based and contact-based tasks is illustrated in

Figure 3.

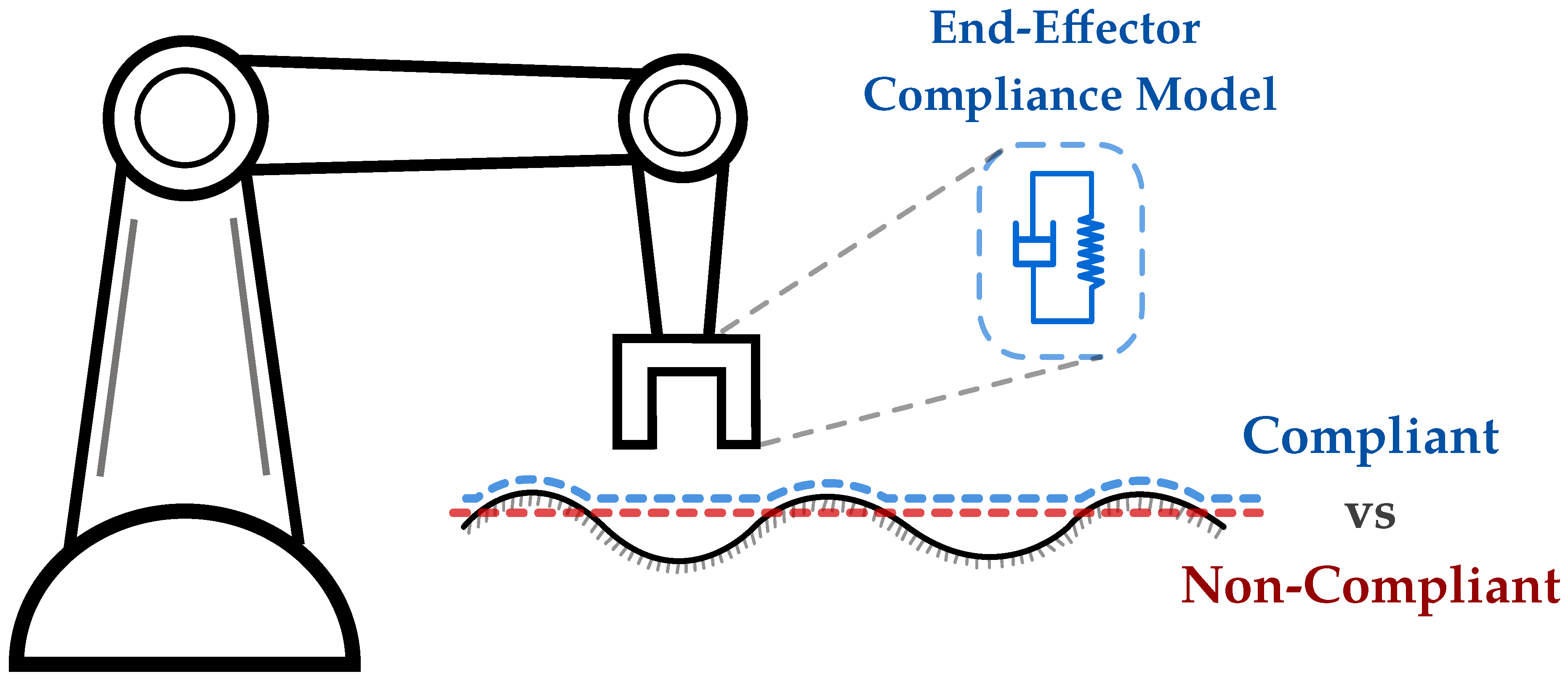

Notion of Compliance: To understand whether a task is motion-based or contact-based, it is necessary to understand the notion of compliance. Compliance in robotics refers to the capacity of a robotic system to yield or adjust its movements in response to external forces, ensuring a more adaptable and versatile interaction with its environment [

61]. This adaptability is typically achieved via Impedance Controllers, where the end effector of the robot is modeled as a spring-damper system to represent compliance (

Figure 4) [

62,

63]. In motion-based tasks, the robot prioritizes following a planned path with minimal adjustment (low compliance), i.e., external forces cannot alter the robot’s behavior, while contact-based tasks allow for more adaptation (high compliance) to better interact with the environment. It is important not to confuse compliance with collision avoidance. Collision avoidance involves actively preventing contact with the environment by adapting the behavior on the kinematic level, while compliance relates to the robot’s ability to adjust its behavior in response to external forces.

What is Learned and When to Teach: With motion-based demonstration, the LfD algorithm learns under the assumption of zero compliance and replicates the behavior on a kinematic level, i.e., strict motion. On the other hand, contact-based teaching enables the LfD algorithm to learn how to react when compliance is high, therefore it learns the skills on how to interact with the working environment.

One critical factor in selecting between motion-based and contact-based demonstration is the analysis of the desired task through the lens of its dependence on compliance. For example, in an insertion task with tight tolerances, if there are slight inaccuracies in measurements and the peg lands with a slight offset to the hole, compliance can be helpful to react skillfully to this misalignment and attempt to find the right insertion direction. Conversely, a pick-and-place task typically does not require compliance in the case when the grasping mechanism is simple and structured.

Practical Implications: From the point of view of practical implementation, the trade-off between motion and contact during the task execution is a key design factor to implement the task successfully [

15,

33,

59,

60,

64,

65,

66]. For example, in tasks such as rolling dough [

60], board wiping [

33], and grinding [

15], hybrid motion and force profile are learned as both are crucial for successful task execution i.e., a force profile is needed to be tracked alongside the motion. As mentioned, this factor is best encoded via Impedance Control, where at each point of task execution, it can be determined whether position or force requirements are in priority [

50,

67]. However, this method requires torque-controlled compliant robots and cannot be applied to many industrial robots which are position-controlled and stiff [

52]. For such robots, the alternative to Impedance Control is Admittance Control, which operates with an external force sensor and can be implemented on stiff industrial robots [

68].

2.3. Context-Dependent Demonstrations

In addition to the choice of scope between full task versus subtask and motion versus contact demonstration, the operation of the robot is influenced by various specific contexts. Such contextual settings are highly dependent on the requirements of the task and often need to be custom-designed and tailored for the task. Nonetheless, here we discuss several common contexts alongside the considerations required for each.

2.3.1. Collaborative Tasks

Tasks that involve collaborating with humans or other robots typically provide interaction through an observation or interaction interface, which serves as a channel for information exchange between the robot and its collaborators [

37,

69,

70,

71,

72,

73]. These interfaces can take various forms, such as physical interaction mechanisms or dedicated communication protocols [

72,

74,

75,

76], and are specifically designed and tailored to facilitate the collaborative task. Consequently, when providing demonstrations for such tasks, careful consideration must be given to ensure alignment with the interaction interface.

A typical example of a collaborative task is collaborative object transportation where one side of the object is held by the robot and the other part is held by the human [

70]. In this case, the interaction interface is physical Human-Robot Interaction (pHRI), where not only the motion is important, but also the compliance becomes relevant. Another aspect to consider in collaborative tasks is safety and collision avoidance since the robot’s operating environment is closely shared with the human collaborator [

37]. It means that the human teacher needs to further teach safety strategies to the robot. Moreover, collaborative tasks have a more complicated task logic since the execution can depend on the collaborator’s actions, which adds more conditions and more branching in the logic of the task. It also requires the robot to predict the intention of the human in order to follow the task hierarchy [

74].

2.3.2. Bi-Manual Tasks

Bi-manual tasks involve the coordinated use of both robot arms to manipulate objects or perform activities that require dual-handed dexterity [

27,

41,

64,

77,

78,

79]. Teaching a robot to perform bi-manual tasks through LfD should emphasize synchronization and coordination between the robot’s multiple arms. For instance, in tasks like assembling components or handling complex objects, the robot needs to learn how to distribute the workload efficiently between its arms. Also, in dual-arm assembly, the coordination of both arms played a crucial role in achieving the desired precision [

64,

78,

80]

Moreover, Bi-manual tasks often require specialized grasping strategies if both arms are simultaneously used for manipulating items [

79,

81]. Given the proximity of both arms in bi-manual tasks, safety considerations become of great importance. The teaching should emphasize safe practices, including collision avoidance strategies and safety-aware learning.

2.3.3. Via Points

In certain contexts, teaching robot-specific via-points within a task can be a highly effective way to convey nuanced information and refine the robot’s execution [

57,

82,

83]. Via points serve as intermediate locations or configurations within a task trajectory, guiding the robot through critical phases or ensuring precise execution. One notable scenario where demonstrating via points can enhance the learning process is in assembly processes [

84]. For complex machinery assembly, instructors can guide the robot through specific via points to ensure proper component alignment or correct part insertion. Additionally, via-points serve as an excellent measure of accuracy to optimize the robot motion and tool manipulation while ensuring successful task execution as long as the via point is passed throughout the path. Those enable the learning algorithm to understand optimal trajectories, adapt to changing conditions, and enhance its overall versatility.

2.3.4. Task Parameters

Some contexts require explicitly teaching a robot specific task parameters to enhance its understanding and performance in specialized scenarios. These task parameters go beyond the general actions and involve teaching the robot how to adapt to specific conditions or requirements. Here, the focus is on tailoring the robot’s learning to handle variations in the environment, object properties, or operational constraints [

72,

83,

85,

86,

87].

Teaching the robot about variations in object properties is essential for tasks where the characteristics of objects significantly impact the manipulation process. For instance, in material handling tasks, the robot needs to learn how to handle objects of different shapes, sizes, weights, and materials [

85,

88]. Demonstrations can be designed to showcase the manipulation of diverse objects, allowing the robot to generalize its learning across a range of scenarios. Additionally, robots operating in manufacturing settings often encounter specific operational constraints that influence task execution. Teaching the robot about these constraints ensures that it can adapt its actions accordingly. Examples of operational constraints include limited workspace, restricted joint movements, or specific safety protocols [

89].

3. How to Demonstrate

The next step after answering the question of “What to Demonstrate" and defining the scope of demonstration is to realize how to provide demonstrations to transfer the required knowledge and skills from the human teacher to the robot, i.e., the channel through which the information intended by the teacher could be efficiently mapped to the LfD algorithm on the robot. According to [

1], demonstration methods can be classified into three main categories: Kinesthetic Demonstration, Teleoperation, and Passive Observation. In this section, we discuss these categories and analyze their advantages and disadvantages with respect to the scope of demonstration, with a summary provided in

Table 1.

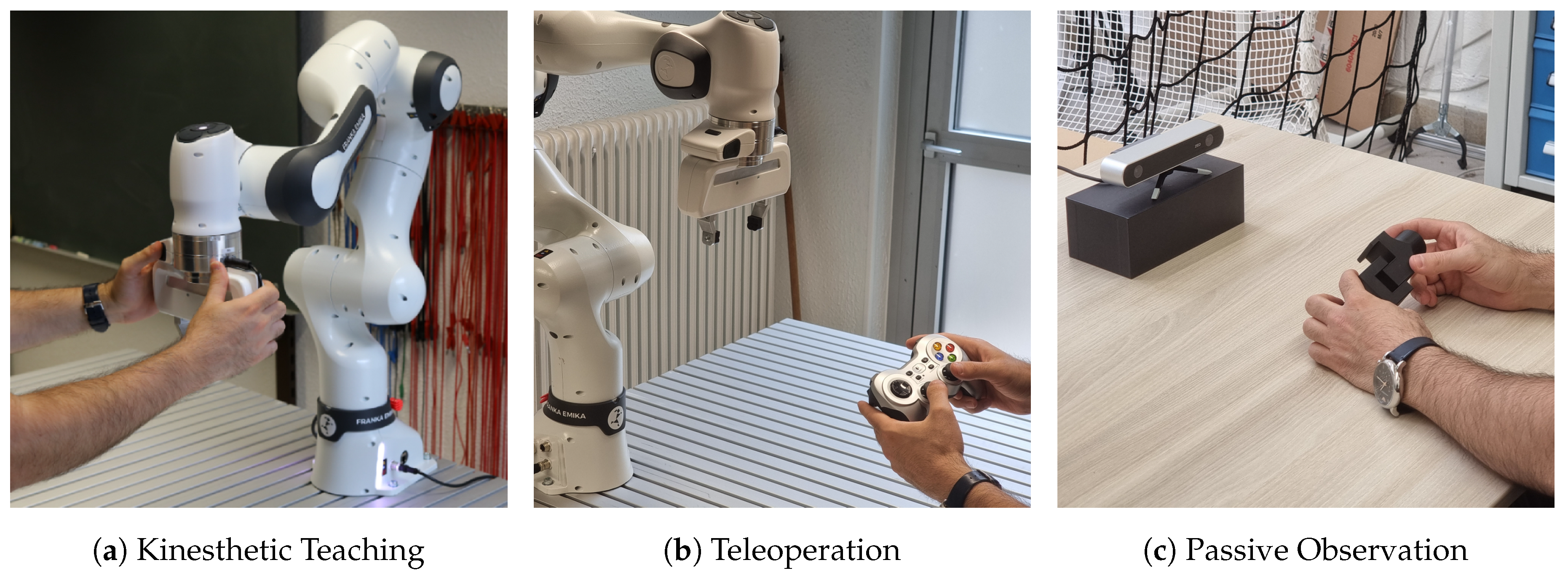

3.1. Kinesthetic Teaching

Kinesthetic teaching is a method wherein a human guides a robot through a desired motion within the robot’s configuration space. In this approach, a human physically guides the robot to perform a task, and the robot records the demonstration using its joint sensors (

Figure 5a) [

15,

32,

36,

37,

50,

52,

56,

58,

71,

90,

91,

92,

93]. The key aspect is the direct interaction between the human teacher and the robot in the robot’s configuration space.

What Setup is Required: Kinesthetic teaching offers a straightforward setup, requiring only the robot itself. This simplicity contributes to ease of implementation and reduces the complexity of the teaching process, as well as minimizing the associated costs. This makes kinesthetic teaching an affordable and cost-effective option for training robots. Moreover, the interaction with the robot is intuitive for the teacher, making it easier to convey complex tasks and subtle details.

However, the suitability of kinesthetic teaching can be limited by the physical demands it requires, particularly with larger or heavier robots. Safety concerns also arise, especially in scenarios involving rapid movements or the handling of hazardous materials. This limitation can affect the scalability of kinesthetic demonstrations in diverse manufacturing contexts.

How Demonstration Data is Obtained: Through kinesthetic teaching, the robot records the demonstration using its joint sensors. The recorded data forms the basis for training the robot, allowing it to learn and replicate the demonstrated motion. The mapping of training data into the learning algorithm is straightforward, which enhances the reliability of the demonstration framework. However, the recorded demonstration data contain noise, as it depends on the physical interaction between the human and the robot. This noise can affect the smoothness of the training data and require additional processing to improve the learning algorithm’s performance [

94]. Additionally, since the training data depends on the robot hardware, the scalability of the training data to another setup will be limited.

Recommendations: Kinesthetic teaching is effective for instructing both full task hierarchies and low-level subtasks, especially excelling in demonstrating complex and detailed subtasks with its precise physical guidance of the robot. However, for full task demonstrations, additional post-processing of training data is advised to enhance segmentation and eliminate noise. While kinesthetic teaching offers precise control for motion demonstrations, the recorded data often suffers from noise and lacks smoothness due to the physical interaction between human and robot. However, it becomes limiting for contact-based demonstrations because unreliable torque readings from joint sensors prevent teaching the desired force profile to the robot, as the human guides its movements.

3.2. Teleoperation

Teleoperation refers to the process in which the human teacher remotely controls the movements and actions of the robot (

Figure 5b). This control can be facilitated through different methods including joysticks, haptic interfaces, or other input devices, enabling the operator to teach the robot from a distance [

33,

60,

95,

96,

97,

98,

99]. This method differs from kinesthetic teaching as it allows for remote teaching to the robot.

What Setup is Required: Setting up teleoperation involves equipping the robotic manipulator with necessary sensors like cameras and force/torque sensors to relay feedback about the robot and its surroundings. On the human side, a well-designed control interface is required for intuitive and accurate control of the robot’s movements. A robust communication system, whether wired or wireless, is necessary for real-time transmission of control signals and feedback. Safety measures, including emergency stop mechanisms, are essential to prevent unexpected behaviors, especially since the robot is operated remotely and immediate access to the robot is not possible in case of a malfunction.

The teleoperation setup is inherently well-suited for the tasks being operated in dangerous or hard-to-reach environments. Moreover, the design of the teleoperation interface can be versatile according to the target task, to provide the operator with a teaching interface closest to human-like dexterity. On the downside, teleoperation requires a more sophisticated setup compared to kinesthetic teaching, and it requires further designing the control and the communication interface according to the task. While it can promote intuitive teaching, it often requires extra training for the operator on how to use the setup for their teaching and demonstration. The remote nature of teleoperation can also raise concerns over the communication latency of the setup and how it affects the task demonstration depending on the task.

How Demonstration Data is Obtained: Through teleoperation, the acquisition of the training data can be flexibly designed based on what information is required for learning. The training data can be obtained by joint sensor readings, force/torque sensors on the joints or the end effector, haptic feedback, etc. It is the decision of the robotic expert how to map the raw teaching data to processed and annotated training data suitable for the LfD algorithm. One main advantage of teleoperation is that it is possible to isolate the teaching to a certain aspect of the task. For example, in [

36], a joystick is used as the teleoperation device, where certain buttons only adjust the velocity of the robot, and other buttons directly affect the end-effector position and leave the execution velocity untouched. This benefit can enable better-tailored teaching or iterative feedback to increase the efficiency of the learning process.

Recommendations: Teleoperation is a suitable approach for demonstrating contact-rich tasks, since there is no physical interaction, and the joint torque sensors or the end effector force sensor on the robot can reliably record the contact-based demonstration as training data. It is also well-suited for tasks demanding real-time adjustments. One of the most common combinations of demonstration approaches is to use kinesthetic demonstration for motion demonstration, and use teleoperation for iterative refinements or providing contact-based demonstrations [

100].

3.3. Passive Observation

Passive observation refers to the process of a robot learning by observing and analyzing the actions performed by a human or another source without direct interaction or explicit guidance, i.e., the teaching happens with the robot outside the loop while passively observing via various sensors (

Figure 5c) [

21,

64,

78,

101,

102,

103,

104,

78]. During passive observation, the robot captures and analyzes the relevant data, such as the movements, sequences, and patterns involved in a particular task. The features and characteristics of the task are extracted from the observation and fed into the LfD algorithm as training data for learning and generalization.

What Setup is Required: Setting up the teaching framework for passive observation involves various sensors such as 2D/3D cameras, motion capture systems, etc. to enable the LfD algorithm to observe the environment and the actions performed by the human teacher. The information from raw observation is then processed by sophisticated machine learning and computer vision algorithms to extract key features of the demonstration and track them throughout the teaching. In this setup, humans often teach the task in their own configuration space (i.e., with their own hands and arms), which makes the teaching easy and highly intuitive for the humans. However, the setup is complicated and expensive due to the requirement of various sensory systems.

When it comes to the scalability of demonstration across numerous tasks and transferability from one robotic platform to another, passive observation stands out as a suitable option for large-scale collections of demonstration datasets for various tasks, making it preferable when extensive datasets are needed for training purposes. This is while kinesthetic teaching and teleoperation mainly rely on a specific robotic platform and often cannot be scaled across tasks or robots.

How Demonstration Data is Obtained: Acquisition of training data through passive observation mainly relies on the extraction of the key features, as the learning performance critically depends on how well the features represent the desired behavior of the robot on the task. This forms a bottleneck in learning, since the more complex the task, the less efficient the feature extraction. Consequently, passive observation can suffer from learning and performance issues when it comes to complex and detailed demonstrations. Overall, as the demonstration setup is complex for this approach and the correspondence problem limits the possibility of demonstrating complex tasks, this approach is not common for manufacturing use cases.

Recommendations Nonetheless, Passive observation has been used for demonstrating high-level full-task hierarchies. It is a suitable approach in scenarios where a diverse range of demonstrations across various tasks needs to be captured. For instance, in [

64], human demonstrations are observed via a Kinect motion tracker, and then a motion segmentation is applied to build the overall task logic. In terms of scalability, passive observation stands out as a fit option for large-scale data collection, making it particularly suitable when extensive datasets are needed for training purposes.

3.4. Remarks

There are alternative approaches to provide demonstrations tailored to a specific context or situation. For example, in [

105] the demonstration is in the form of comparison between trajectories, i.e., the robot produces a set of candidate trajectories, while the human provides preferences and comparison among them to imply which robot behavior is more suitable. In [

106], the human feedback has the form of a corrective binary signal in the action domain of the LfD algorithm during robot execution. The binary feedback signifies an increase or decrease in the current action magnitude.

4. How to Learn

This section focuses on the development of the LfD algorithm itself, after determining the scope of demonstration and the demonstration mechanism. The aim of this section is to consider how to design and develop a learning mechanism to meet the requirements of our desired task. We first discuss the possible learning spaces in which the robot can learn, and then we explore the most common learning methods used as the core of LfD algorithm.

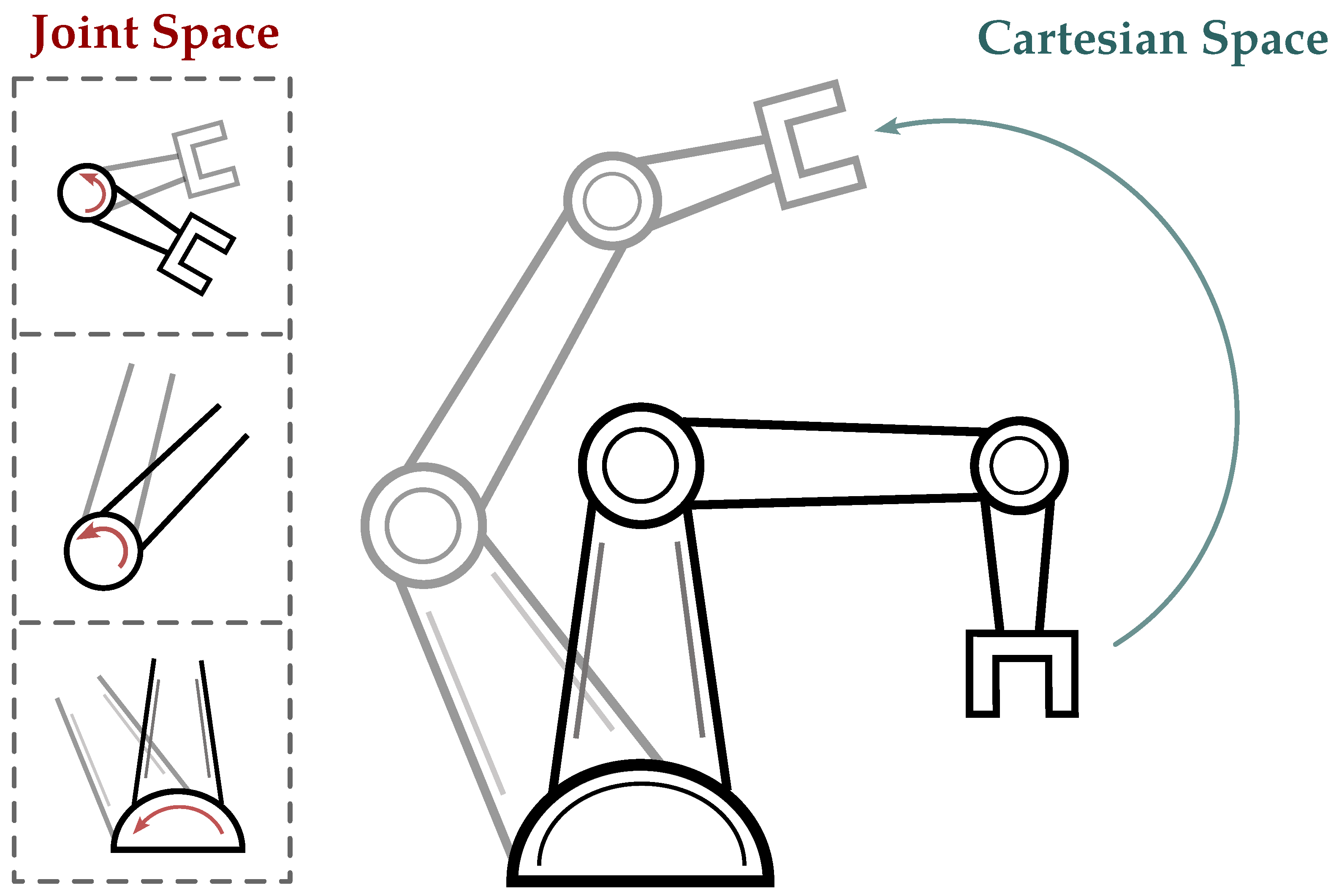

4.1. Learning Spaces

Here we discuss the concept of learning spaces when representing demonstration data. The learning space not only encompasses where the training data from demonstrations are represented but also serves as the environment where the learning algorithm operates and generalizes the learned behavior. The choice of learning space is important as it provides background knowledge to the algorithm, thereby facilitating better learning and generalization within that designated space. While there are several possibilities for learning spaces, here we focus on two main choices commonly used for robotic manipulators (

Figure 6).

4.1.1. Joint Space

The joint space of a robot is a comprehensive representation of its individual joint configurations. This space serves as the primary language of motor commands which offers a low-level and precise representation of the possible configuration of each joint [

32,

94,

107,

108].

Learning in Joint Space: Learning in joint space offers several advantages for LfD algorithms. Existing in Euclidean space makes the underlying math and data processing more efficient and straightforward. Additionally, since joint space directly corresponds to the robot’s control layer, learned behaviors can be seamlessly integrated into the controller, which further simplifies the process.

However, a potential downside of learning in joint space is the risk of overfitting to specific demonstrations, which limits the robot’s generalizability. Furthermore, focusing on joint space learning neglects to capture higher-level contextual information which relate to the semantics of the task. Finally, joint space learning is sensitive to hardware variations, making it difficult when it comes to scalability and transferring skills between different robots.

Demonstrating in Joint Space: One notable advantage of demonstrating in joint space is the richness of the information obtained during the demonstration process, including the precise configuration of each individual joint. This level of detail is particularly advantageous for kinematically redundant manipulators, which can optimize null-space motion and obstacle avoidance.

However, a drawback lies in the intuitiveness of the learning process for human teachers. While joint space offers rich data for the robot to learn from, understanding how the learning algorithm learns and generalizes from this data is not intuitive for humans. The complexity involved in translating joint configurations into meaningful task representations can pose challenges for human teachers in understanding the learning process and predicting how the robot generalizes its learned skills.

In terms of acquiring demonstration data, kinesthetic teaching and teleoperation are straightforward methods for obtaining joint space data since joint states can be directly recorded. However, passive observation requires an additional step to convert raw information into joint space data, making it less practical to operate directly in joint space for this approach. In such cases, adding unnecessary transformations to obtain joint data is not justified, as it complicates the demonstration process.

4.1.2. Cartesian Space

This section introduces Cartesian space, a mathematical representation of three-dimensional physical space using orthogonal axes. In robotics, Cartesian space is commonly employed to describe the position and orientation of a robot’s end-effector. LfD in Cartesian space involves training robots to imitate demonstrated tasks or movements by utilizing the coordinates of the end-effector [

34,

36,

50,

51,

52,

53,

54,

59,

65,

109].

Learning in Cartesian Space: Cartesian space offers a natural representation for tasks involving end-effector movements and positioning, simplifying the learning process by directly addressing the space where tasks are being operated and executed. This choice is particularly advantageous for applications requiring precise end-effector control and potentially leads to better generalization in new situations. The consistency in representation allows for more effective generalization across different robotic systems and tasks.

One significant advantage of Cartesian space is the consistency of the dimension of the end-effector pose state across various robot platforms, regardless of their joint configurations. This standardized representation facilitates a more uniform approach to learning compared to joint space, which can vary in dimensionality from one robot to another. However, challenges arise when dealing with rotations within Cartesian coordinates, as rotation resides in a different manifold. It is required to utilize the calculus in a non-Euclidean space. This can increase the computational complexity of learning algorithms compared to those in the straightforward calculations of joint space.

Furthermore, the outcome of learning in Cartesian space cannot be directly integrated into the robot’s controller. A transformation step is required to convert the learned information into joint space, adding an extra layer of complexity to ensure seamless integration into the robot’s control system.

Demonstrating in Cartesian Space: Cartesian space is an intuitive space for the human teacher, which facilitates a better understanding of how the learning process happens. Additionally, this intuitiveness enables easier inclusion of task parameters and better iterative feedback to the LfD outcome, allowing the instructor to refine and optimize the robot’s performance over successive teaching sessions.

However, a limitation arises in the encoding of end-effector behavior exclusively. In redundant manipulators, the representation in Cartesian space does not directly consider null space motion. The null space, which represents additional degrees of freedom beyond the end-effector behavior, is not explicitly encoded in Cartesian space. This limitation restricts the teacher’s ability to convey and refine complex motions involving redundant manipulators, potentially overlooking certain aspects of the robot’s capabilities.

Finally, acquiring training data in Cartesian space via kinesthetic teaching requires having the kinematic model of the robot including the end effector hand or tool, and applying forward kinematic to the raw joint readings. The approach is similar in teleoperation, although it depends on the design of the teleoperation interface. Passive observation, however, demands the development of a mapping between the human hand or held tool and the robot’s end effector. Leveraging pattern recognition and feature extraction techniques, the movements of the human hands are interpreted and translated into end-effector movements, serving as valuable Cartesian-space training data.

4.1.3. Remarks

While joint space and Cartesian space are the most common and fundamental spaces to represent a task, there are a variety of choices that can be particularly designed and developed for the designated task. For example, in [

33], the pixel space of the camera was used as a measure of distance between the peg and hole. End-to-end training on visual images was used in [

90]. Also, in [

15], while the task is learned in Cartesian space, the Cartesian frame changes at each time step in a way that the z-axis always points towards the direction in which the force has to be applied.

When choosing approaches where a cost or reward function is involved, the design of such functions depends on the choice of latent/feature space, i.e., the space that the reward or cost function has to represent. In [

105], the reward function is learned from demonstration, to represent as latent space of the training data. Later, the reward function is used by Reinforcement Learning to learn a suitable policy. Likewise, in [

110], a cost function is learned via Inverse Optimal Control, which represents the task’s requirement for successful execution.

4.2. Learning Methods

Here we provide a comparative analysis of the most common learning methods used for LfD. For each method, we discuss their learning concept and their training procedure, as well as their characteristics, strengths and weaknesses. Moreover, in

Table 2, we present a summary of our comparative analysis. The table evaluates the learning methods across several key metrics to provide insight into their respective practical strengths and weaknesses in the manufacturing context. The metrics assessed include the implementation effort, explainability, generalization capability, training data efficiency, and safety and robustness. Implementation effort helps evaluate the practicality and resource requirements of integrating a particular learning method into manufacturing processes. Explainability assesses how well the reasoning behind the learned behaviors can be easily understood and interpreted by operators. Generalization capability indicates the extent to which a learning method can adapt to new or unseen situations, enhancing its versatility and applicability. The efficiency of training data usage highlights how effectively a method can learn from limited datasets, optimizing resource utilization and reducing data acquisition costs. Finally, Safety and robustness metrics assess the reliability and resilience of learned behaviors in the face of uncertainties.

4.2.1. Movement Primitive (MP)

Learning Concept: A MP encapsulates a low-level robot behavior defined by a trajectory generator and an exit condition. The trajectory generator specifies the desired robot motion, while the exit condition determines when the movement should stop [

50,

51,

108,

111]. For instance, a typical MP involves moving a robot until contact with a surface, where the trajectory generator guides the robot until a predefined force threshold is reached, signaling the exit condition. MPs do not require demonstration at the subtask level. They are manually designed and optimized by robotic experts. Instead, LfD focuses on the task hierarchy, where the sequence in which to execute these pre-defined MPs is demonstrated to achieve a full task. Tasks composed of MPs can be structured using various methods such as state machines or task graphs. The essence of MPs is to offer a structured and optimized way to encode low-level robot behaviors that can be combined to achieve more complex tasks.

Training Procedure: The design, implementation, and optimization of MPs is performed by the robotic expert, while the human teacher demonstrates the task hierarchy composed of MPs. In this way, subtasks are structured and tailored to specific tasks, resulting in efficient, reliable, and predictable behavior, while the demonstration effort is minimized. However, the manual design and tuning of MPs by robotic experts limit flexibility and control over lower-level behaviors for teachers. While MPs enhance learning performance by constraining the learning space to predefined combinations, their effectiveness depends on expert tuning, making them less adaptable to new behaviors, especially at the subtask level. MPs restricts generalization at the subtask level, demanding expert intervention to encode, tune, and integrate new behaviors into the MPs.

4.2.2. Dynamic Movement Primitive (DMP)

Learning Concept: The DMP combines a spring damper dynamical system, known as an attractor model, with a nonlinear function to achieve a desired goal configuration [

112,

113]. The attractor model ensures convergence to the goal configuration, with its attractor point serving as the target goal. Without the nonlinear function, the model asymptotically converges to the goal. The role of the nonlinear function is to encode a certain behavior represented via the demonstration. By superposition of the nonlinear function and the attractor model, DMP replicates the demonstrated behavior. Essentially, the nonlinear function guides the attractor system towards the desired behavior, while eventually vanishing as the system reaches the goal [

15,

52,

60,

67,

76,

114,

115,

116,

117,

118].

Training Procedure: To effectively train a DMP, the training data should primarily exhibit temporal dependence, with time progression as the independent variable (x-axis) and the dependent variable (y-axis) representing aspects such as position, force, or stiffness. Subtask learning involves collecting training data by creating trajectories from joint readings, force sensors, or stiffness profiles. For whole task sequences, teaching data must first be segmented into subtasks, with each subtask fitted with its own DMP. While DMPs are more suited for learning subtasks, approaches exist where multiple DMPs can be integrated into a single model to represent entire task sequences [

119]. Motion-based and contact-based learning are feasible via DMPs, utilizing position trajectories [

52,

116], force trajectories [

120], or stiffness profiles [

121,

122] as training data. Notably, DMPs offer the advantage of requiring only a single demonstration to generate a training set and train the model, although multiple demonstrations can be utilized for a more comprehensive training set [

120,

123]. Moreover, DMPs have been employed in context-dependent learning scenarios for collaborative tasks via points or task parameters [

70,

124].

This training procedure involves representing time as a phase variable to make DMP systems autonomous from direct time dependence. Training data, along with their derivatives, are input into the DMP equation to generate target values for approximating the nonlinear term. Locally Weighted Regression (LWR) [

125] is a typical choice for the nonlinear function approximator. The learned outcome is a nonlinear function mapping the phase variable to scalar values. The attractor model of DMP is designed to ensure critical damping, facilitating convergence to the goal without oscillation. The core idea of DMP lies in representing behavior as a deterministic system augmented by forcing terms, enabling learning at a higher level. While DMPs offer simplicity and reliability in implementation and generalization, their generalization mechanism is limited to a region around the original demonstration’s configurations. Hyperparameters, although manually tuned, can be applied across various demonstrations without re-tuning. DMPs can be trained in joint space or Cartesian space, with multiple DMPs synchronized via the phase equation for joint space training, and a modified version for rotational values in Cartesian space represented using quaternions.

4.2.3. Reinforcement Learning (RL)

Learning Concept: RL in the context of LfD involves training robots to execute tasks by interacting with their environment, receiving feedback in the form of rewards or penalties, and adjusting their actions to maximize cumulative rewards [

53,

54,

57,

105,

109,

126,

127,

128,

129]. At its core, RL involves an agent (the robot) learning optimal actions to achieve a predefined objective within a given environment. This learning process hinges on the development of a policy, a strategy that guides the agent’s actions based on the current state of the environment. The policy is refined through the optimization of a value function, which estimates the expected cumulative reward for specific actions in particular states. Rewards serve as feedback, reinforcing desirable actions and discouraging undesired behavior, thereby shaping the agent’s learning trajectory. RL algorithms balance exploration and exploitation, enabling the robot to discover effective strategies while leveraging known successful actions. However, RL alone does not suffice for successful LfD without an accurate reward function encapsulating the task requirements.

Inverse Reinforcement Learning (IRL) acts as a bridge between RL and LfD. Via human demonstrations, IRL seeks the underlying implicit reward structure that guides those actions. The fundamental idea is to reverse engineer the decision-making process of the human teacher. Capturing the latent reward function enables the robot to replicate and generalize learned behavior to achieve similar goals in diverse contexts [

130,

131,

132].

Training Procedure: To Train and LfD algorithm via RL-based methods, two key components are essential: a reward function and a policy function. The reward function encapsulates the task’s definition, requirements, and success metrics, essentially encoding all the information provided by the teacher. Meanwhile, the policy function serves as the brain of the robot, dictating its behavior to execute the task. Training proceeds in two stages: first, designing or learning an appropriate reward function that accurately represents the desired task features and requirements, and second, training an RL algorithm to learn a policy function based on this reward function through interaction with the environment.

During the first stage, the focus lies on devising a reward function that encapsulates the teacher’s instructions regarding the task. While this function can be manually designed, a more comprehensive LfD solution involves learning the reward function from human demonstrations [

53,

56,

105,

133]. The effectiveness of the LfD solution is directly linked to how well the reward function encapsulates the task’s key aspects. In the second stage, assuming a reward function is already established, an RL algorithm learns a policy function by iteratively refining its behavior based on feedback from the environment and rewards obtained from the reward function.

Training via RL-based approaches offers flexibility in encoding information and learning skills, with training data ranging from raw images to robot trajectories. However, it requires careful engineering of the reward function and task analysis by robotic experts. Additionally, RL-based learning requires a dataset, not just a single demonstration, and involves modeling the environment, adding complexity to the implementation of LfD algorithms in this manner. Tuning hyperparameters associated with the policy and reward functions, as well as potentially modifying the environment model for each task, are the steps that require robotic experts to ensure successful and efficient learning.

4.2.4. Gaussian Process (GP)

Learning Concept: GPs are a probabilistic modeling approach in machine learning, capturing entire functions through mean and covariance functions known as kernel functions. The kernel function in GPs determines the similarity between function values at different points. This allows GPs to capture intricate patterns and relationships in data, while also estimating the uncertainty in those predictions. GPs are non-parametric, which means they are capable of learning from limited data points while being able to adjust their complexity with more data. Predictions from GPs include both anticipated function values and associated uncertainty, particularly useful in scenarios with sparse or noisy data [

34,

36,

82,

83].

The conceptual advantage of learning methods like GP is their ability to quantify uncertainty, which is important for understanding the model’s confidence in its predictions. This feature enhances human comprehension of the learning process and can serve as a safety mechanism for robots, where uncertain policies could lead to errors or damages. By monitoring the model’s uncertainty and providing feedback, human teachers can refine the GP’s behavior and adjust uncertainty bounds accordingly, ensuring safer and more robust execution.

Training Procedure: The training process for GP models involves learning from input-output pairs, where the independent variable can be any sequential variable, such as time or the position of the end effector. However, it is important to maintain the sequential nature of the data, e.g., restricting scenarios where the end effector revisits a location. GPs excel at learning subtasks with limited demonstration data, even one-shot demonstration input. To improve the generalization capability, it is advisable to train GP in Cartesian space. This is because small changes in joint values can result in significant changes in the end effector in joint space. Moreover, training in joint space makes the uncertainty measures less interpretable.

GP is not particularly demanding in terms of implementation and hyperparameter tuning. The robot expert needs to design a kernel function in the formulation of GP, as well as very few hyperparameters. Moreover, GP is flexible across various tasks, i.e., it does not often require significant hyperparameter tuning or design alterations when transitioning from one task to another.

4.2.5. Gaussian Mixture Model (GMM)

Learning Concept: GMMs offer a probabilistic method similar to GPs for modeling functions, representing them as a mixture of multiple Gaussian distributions. Each Gaussian component within a GMM represents a cluster in the dataset. In the context of LfD, GMMs can be used to model the underlying structure of human demonstrations. Due to their multi-modal nature, GMMs can capture complex behaviors while being flexible in terms of the number of learning variables [

33,

105,

134]. They can also encode variability in demonstrations, giving a measure of accuracy at each point. similar to GPs. Additionally, GMMs can be updated locally with new data without affecting other segments of the learned behavior. This allows GMMs to naturally cluster data and learn complex behaviors from human demonstrations.

Training Procedure: GMMs allow for flexible training variable dimensions through multivariate Gaussian distributions, enabling each distribution to represent and train on different aspects of a task or subtask. Unlike GPs which can learn from single demonstration, GMMs require multiple demonstrations to capture the statistics and effectively learn the task. Additionally, GMMs are typically more intuitive when learned in Cartesian space rather than joint space, similar to GPs.

4.2.6. Probabilistic Movement Primitive (ProMP)

Learning Concept: ProMP is a learning approach developed for LfD which employs Gaussian basis functions to model demonstrated behavior [

135]. The parameters of ProMP are typically weights associated with the basis functions. ProMP introduces a probabilistic aspect into learning, similar to GP and GMM, allowing the model to accommodate uncertainty in learned movements and generalize them to different conditions or contexts [

32,

37,

85,

136].

Training Procedure: The training procedure for ProMPs involves representing training data as time-series trajectories, with each trajectory corresponding to a specific demonstration of the task. These trajectories are often normalized or preprocessed to ensure consistency across different demonstrations. Through an optimization process, the model seeks to find the parameters that best fit the observed trajectories from demonstrations, constructing a probabilistic model capturing the distribution of trajectories and their likelihood at each point in time. However, ProMPs generally require a larger training dataset compared to other probability-based LfD methods.

4.2.7. Remarks

In addition to the mentioned methods, various other learning approaches serve as the core of LfD algorithm, with customization based on task-specific requirements. For example, Hidden Markov Model (HMMs) are commonly utilized for learning behaviors, as used in [

59]. Additionally, methods based on optimal control are also employed, which are conceptually similar to RL. For example, in [

110], Inverse Optimal Control (IOC) is used to learn the cost function of the task, similar to IRL. This function is later used to find an optimal policy to generalize the desired task.

5. How to Refine

The final step after completing the design and development of an LfD process, is to analyze and evaluate their performance, which guides the question of “How to Refine". This section dives into the main key trends and directions within the state-of-the-art that aim to refine LfD algorithms across various aspects. For each trend, we will explore how it can improve LfD performance and identify potential areas for further research. The goal is to provide insights into possible research objectives for evaluation and improvement. This analysis will provide a refined perspective on the previously discussed aspects, ultimately contributing to an iterative loop of improvement for the entire LfD process.

5.1. Learning and Generalization Performance

Although current LfD approaches can learn from human teachings and generalize the behavior to new situations, it is still far from the idea of learning behavior that can be seen from humans. If human learning capabilities are considered the ideal case, LfD approaches are not even close to cognitive learning capabilities. Therefore, it is a crucial line of refinement on the LfD approaches to get them closer to the cognitive learning power of human beings. The performance of learning and generalization refers to how well the algorithm can capture the essence of the desired behavior from human demonstration, and how intelligently the algorithm generates essentially the same behavior but adapts to the new environment or situation or context condition. Learning and generalization are two entangled factors that directly affect each other. A better the learning performance subsequently leads to a better generalization, and improving generalization essentially means that learning performance has been improved. While using typical state-of-the-art LfD approaches already performs well in learning and generalization, they operate under assumptions, and actually cannot generalize to every possible case or situation. That is why it is necessary to improve the LfD approach based on what we require for our task and what is missing in the current LfD approaches. Improving learning performance means improving how the human demonstrations are processed by the learning algorithm, as well as modifying the core algorithm of learning to better capture different aspects of the behavior from the demonstrations. One trend is the approach of incremental learning [

32,

34,

46,

52,

90]. Incremental learning refers to the ability of a robot to continuously acquire and refine its knowledge and skills over time as it interacts with its environment or receives additional demonstrations from a human operator. In [

34], A GP is learned via one demonstration, but more demonstrations are provided through the operation of the robot to further refine GP training and improve its learned behavior. As another example, authors in [

46] focused on continual learning for teaching letters in the alphabet incrementally without the algorithm forgetting the previously learned letters. The algorithm was able to write “Hello World" at the end, with the accumulated knowledge.

Building upon incremental learning, the concept of interactive learning emerges [

32,

60,

85,

106]. Interactive learning refers to a learning paradigm in which the robot actively engages with the human teacher or the learning environment to acquire knowledge or skills. Unlike passive learning methods where information is simply presented to the learner, interactive learning involves two-way communication and dynamic interaction between the learner and the learning material. In [

106], authors provide an interactive learning framework through which non-expert human teachers can advise the learner in their state-action domain. In [

32], the human teacher kinesthetically corrects the robot’s trajectory during execution to teach the robot to perform the task accurately. A joint probability distribution of the trajectories and the task context is built from interactive human corrections. This distribution is updated over time, and it is used to generalize to the best possible trajectory given a new context.

Another technique is Active Querying [

34,

85,

105]. In this technique, the learner dynamically decides which data points or demonstrations are most informative for the learning or decision-making process and requests the corresponding information from the teacher. This approach is particularly helpful for improving performance in an efficient way and acquiring the most relevant information. In [

85], they focused on the demonstration distribution when training ProMPs, and the fact that it is not trivial how to add a good demonstration in terms of improving generalization capabilities. So they learn a GMM over the demonstrations distribution. and use epistemic uncertainty to quantify where a new demonstration query is required. Their proposed active learning method iteratively improves its generalization capabilities by querying useful and good demonstrations to maximize the information gain. In a similar way, the uncertainty measure of GPs is used in [

34] to trigger a new demonstration request.

Aside from the mentioned learning paradigms, it is often required to modify learning algorithms based on the application use case or the context in which the LfD algorithm is employed. For example, there are several works to improve the performance of LfD with respect to contact-rich tasks [

50,

51,

60,

91]. In [

51], they proposed an approach to reduce the learning time of insertion tasks with tolerances of up to sub-millimeters, with application in manufacturing use cases. In [

50], a novel task similarity metric is introduced, and it is used to generalize the already-learned insertion skills to novel insertion tasks without depending on domain expertise. In another context, [

49] considers the assembly use cases and explores the idea that skillful assembly is best represented as dynamic sequences of manipulation primitives, and that such sequences can be automatically discovered by Reinforcement Learning. The authors in [

88] extended DMPs to manipulating deformable objects such as ropes or thin films, to account for the uncertainty and variability from the model parameters of the deformable object. Another important aspect of learning is to learn factor which are in non-euclidean spaces. In [

118], the formulation of DMP is modified to become geometry aware, so that the new formulation can be adapted to the geometric constraints of Reimanian manifold.

Finally, for certain contexts, some works attempt to design and develop control schemes to be learned in order to better learn and execute the behavior. The work in [

15] has focused on the fact that some tasks such as grinding, sanding, polishing, or wiping require a hybrid control strategy in order to accurately follow the motion and the force profile required for successful task execution. To enhance the learning process of these tasks, they proposed some pre-programmed control strategies with parameters to tune via non-expert demonstrations. Such parameters are extracted from one-shot demonstrations and learn the motion-force task more efficiently.

Aside from the context, several works have attempted to provide improvements on the learning performance of a certain algorithm. Such algorithm-based improvements are dedicated to resolve the performance issues inherent in a learning method. In [

117], They proposed a new formulation for DMPs in order to make it reversible in both directions and make it more generalizable. Authors in [

52] introduce Constant Speed Cartesian DMPs, which completely decouples the spatial and temporal components of the task, contributing to a more efficient learning. In their LfD approach based on IOC, the work in [

110] proposed an ensemble method which allow for more rapid learning of a powerful model by aggregating several simpler IOC models. The work in [

34] combined GPs with DMPs, as GPs do not guarantee convergance to an arbitrary goal. Therefore, a DMP is learned on the GP output to ensure that any arbitrary goal is reached. With respect to Deep learning based approaches, [

54] has focused on reducing the sample complexity by introducing self-supervised methods. For RL-based approaches, authors in [

56] have developed a method to use demonstrations for improving learning sparse-reward problems. Both demonstrations and interactions are used to enhance the performance of learning.

5.2. Accuracy

While accuracy and precision can be mainly improved by improving the learning performance, it is not necessarily sufficient to achieve the desired accuracy by focusing generally only on the learning performance. While improving learning can enhance the overall generalization performance of the algorithm in the case of new scenarios, it is not a guarantee that the generalization outcome is accurate in terms of the desired task’s metrics. Not only does the teaching process influence the accuracy of the outcome, but the execution strategy of the LfD is also the final stage that plays a major role in the outcome.

The notion of accuracy has a subjective nature, i.e., it can be defined differently from task to task. Therefore, there is no unified definition to describe the metric of accuracy across arbitrary tasks. However, several factors can be associated generally with the metrics of accuracy, to measure how accurate is the LfD algorithm, to some extent, with respect to the outcome. One of these factors is the success rate. Over various execution of the task via LfD policy, the success rate of the task to achieve a desired goal, can be a simple yet effective measure of how accurately a task is executed. If the accuracy requirements are not satisfied until a threshold, the task will not succeed at the end. Hence, success rate can be a measurable factor to describe the accuracy of a task, and a tangible metric to work towards improving by enhancing accuracy.

Besides focusing on the learning algorithm’s performance, there are more dedicated approaches and trends to improve the accuracy of an LfD algorithm. One direction is to focus on the question of how to modify the teaching and demonstration method to enable human teachers to teach the task more accurately [

32,

36,

58,

64,

70]. For example, In [

70], they separated the demonstration of the shape of the trajectory from the timing of the trajectory. While it is cognitively demanding to demonstrate a motion with high accuracy and a high velocity at the same time, the ability to independently demonstrate the path enables the teachers to solely focus on teaching and refining the robot path and define the behavior in a more precise way. Moreover, in [

58], they have combined incremental learning with variable stiffness of the robot during kinesthetic feedback. They used the variability of the already-captured demonstrations to adjust the stiffness of the robot. When there is low variation in a region, i.e., higher accuracy, the robot is more stiff, allowing the teacher to provide smaller adjustments, while regions with higher variability have lower stiffness, allowing the teacher to move the robot more freely.

Another approach is to focus on how the LfD output plan is executed finally with the robot. Here, the focus is on execution strategies with the goal of improving the success rate of a task [

51,

57,

65]. In [

65] they considered a small-parts assembly scenario, where the tolerances are comparatively low, which leads to more sensitivity to errors in misalignments due to tight tolerances. They have proposed an impedance control strategy to drive the robot along the assembly trajectory generated by LfD, but also record and track a required wrench profile so that tolerance misalignments can be resolved with the compliance of the contact, and in this way increasing the success rate of the task and avoiding failures when there is contact due to tolerance errors. In [

57], the authors claim that LfD output performs well in generalization but fails to maintain the required accuracy for a new context. Hence they use the LfD output as an initial guess for an RL algorithm designed according to the task’s model, and refine the LfD output find the optimal policy for the specific task.

5.3. Robustness and Safety

The general LfD process is mainly concerned with successfully learning and executing a desired task with a specific accuracy, while it is crucial to consider how the process lifecycle is aligned with safety requirements and how well the system can handle unexpected scenarios. This is of extra importance in manufacturing cases where the robot shares an industrial environment alongside humans, with more potential dangers. Although LfD can generalize to new scenarios, there is no guarantee that the devised task policy is aligned with safety requirements or passes through the safety region. It is also not guaranteed that in case of unforeseen situations during the task execution, the robot makes a safe decision to accommodate the new situation. Therefore, it is important to equip the LfD processes with proper mechanisms to ensure robustness and safety in the robot’s operational environment.

The concept of robustness gains meaning when there is a possibility of a fault, disturbance, or error throughout the process, that might mislead the learning process or cause the system to end up in an unknown state. In this case, a robust LfD system is capable of reliably recovering from the imposed state and successfully finishing the task whatsoever. Alongside robustness, the concept of safety ensures that the robot’s operations and decisions do not cause any harm to the humans working along, especially during the teachings and interactions. It also ensures that the operations remain in a safe region to prevent damage to the working environment, work objects, and the robot itself. It is evident that robustness and safety are essential components of LfD systems that must be carefully addressed to enable their effective deployment in real-world applications.

One main aspect of robustness and safety in LfD processes is related to the Human-Robot Interaction (HRI). Since LfD lifecycle is mainly concerned with interaction with humans, it is evident that focusing on HRI robustness and safety becomes a major trend on enhancing the reliability of LfD systems [

137].

One main trend of improving safety and robustness is focused on HRI [

36,

70,

74,

109,

127]. This includes the teaching and demonstration as well as any other form of interaction throughout the process. With respect to robustness, in [

127], to efficiently learn from suboptimal demonstrations, the paper proposes an RL-based optimization where the demonstrations serve as the constraints of the optimization framework. the objective was to outperform the suboptimal demonstrations and find the optimal trajectory in terms of length and smoothness. The work in [

109] proposes an interactive learning approach where instead of depending on perfect human demonstrations to proceed with learning, the human can interactively and incrementally provide evaluative as well as corrective feedback to enhance the robustness of learning against imperfect demonstrations. In terms of safety, in [

36] kinesthetic teaching is replaced with teleoperation to enhance safety while providing local corrections to the robot. This is because kinesthetic teaching can become more dangerous with increasing the robot’s velocity of execution. Moreover, in many works such as [

70,

74] the control scheme is based on impedance control to guarantee compliant interactions with humans, avoid sudden unexpected motions, and improve HRI safety.

Another trend for improving robustness is focused on the robustness against various disturbances and errors through out the LfD cycle [

33,

54,

71]. The work in [

71] has focused on the fact that while LfD can allow non-experts to teach new tasks in industrial manufacturing settings, exerts are still required to program fault recovery behaviors. They have proposed a framework where robots autonomously detect an anomaly in execution and learn how to tackle that anomaly by collaborating with human. They represent a task via task graphs, where a task is executed from start to end. If the robot detects an anomaly, it waits and asks humans whether to demonstrate a recovery behavior or refine the current execution behavior. In case of learning a new recovery behavior from the teacher, a conditional state is added to the graph at the point of anomaly, and next time the robot checks for the condition to see if it should continue the normal execution or switch to the recovery behavior. Similarly, the authors in [

33] proposed Bayesian GMM to quantify the uncertainty of the imitated policy at each state. They fuse the learned imitation policy with various conservative policies in order to make the final policy robust to perturbations, errors and unseen states. For example, in a board wiping task, the imitation policy learned the force profile required to wipe the board, while the conservative policy ensured circular motion on the board. together, they make the board wiping policy robust so that the motion is desirable while applied force is enough to actually wipe the board.

Lastly, there are several works focusing on robustness and safety considering the limitations in the robot’s operating environment [

115,

116]. In [

115], the problem of collision avoidance was considered. They proposed a modified formulation of DMP, where they incorporated a zeroing barrier function in the formulation and solved a nonconvex optimization in order to find a collision free path through their constrained DMP. From another perspective, the work in [

116] addressed the issue that there is no guarantee that LfD outcome respect kinematic constraints when generalizing. so they formed a QP optimization problem that enforces the kinematic constraints and finds the DMP weights where the optimal trajectory is closest to the original DMP trajectory while respecting the constraints.

6. Conclusions

This paper presented a structured approach to integrating LfD into the roboticization process using manipulators for manufacturing tasks. It addressed key questions of "What to Demonstrate," "How to Demonstrate," "How to Learn," and "How to Refine," providing practitioners with a clear roadmap to implement LfD-based robot manipulation. First, we identified the scope of demonstration based on the desired task’s characteristics and determined the knowledge and skill required to be demonstrated to the robot. Then, based on the scope of demonstration, we explored demonstration methods and how human teachers can provide demonstrations for the robot, providing insights to extract the demonstration mechanism. Next, we focused on the learning approaches to enable efficient task learning and execution from the provided demonstrations. We first explored the possible learning spaces, followed by the common learning methods along with their pros and cons. Finally, we provided trends and insights to evaluate and improve the LfD process from a practical point of view, giving research directions and objectives for building upon the state of the art.