Submitted: 27 June 2024

Posted: 27 June 2024


Abstract

Keywords:

1. Introduction

2. Plasma Model of Hollow Cathode

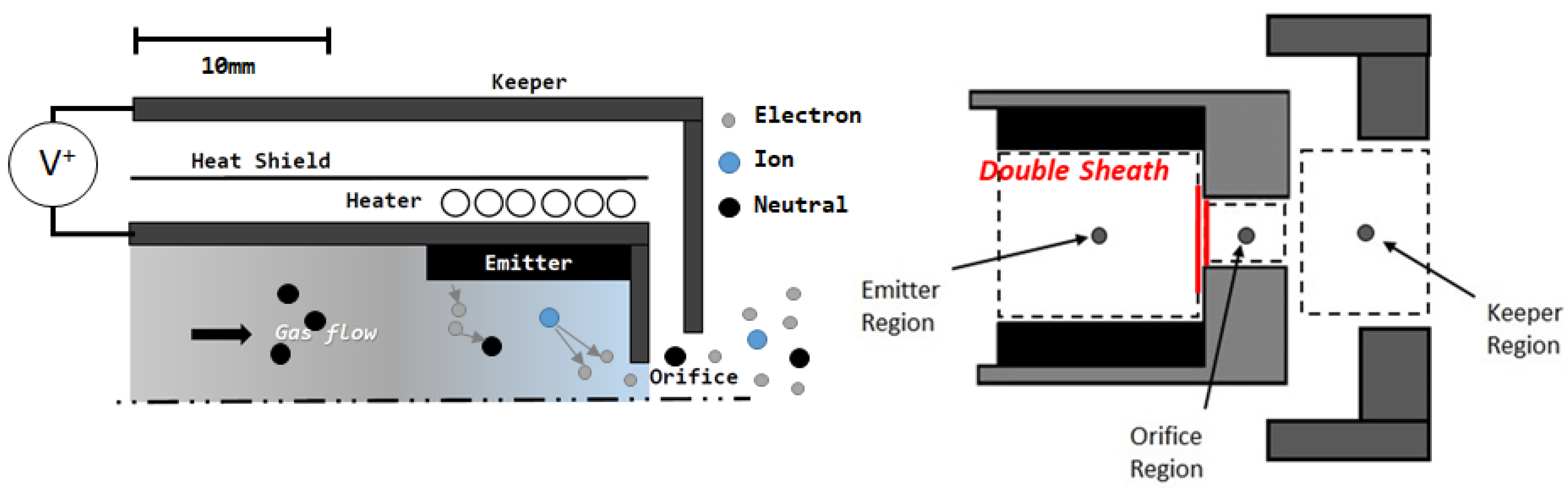

2.1. Cathode Architecture

2.2. Definition and Assumptions

- the heavy particles (ions and neutrals) are in thermal equilibrium with each other, and their temperature is assumed to be equal to the wall temperature;

- a choked gas flow model is used in the orifice regions of both the cathode tube and the keeper;

- a double sheath potential drop is recorded at the orifice entrance region, thus a planar double sheath is modelled (Figure 1).
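As an illustration of the choked-flow assumption listed above, the following minimal sketch uses the standard isentropic ideal-gas relation for sonic flow through an orifice. It is not the model equation of this paper: the function names, the unit discharge coefficient, the orifice diameter, the gas temperature and the mass flow rate are all assumptions chosen for illustration only.

```python
import math

R_UNIVERSAL = 8.314462618  # universal gas constant, J/(mol K)


def flow_factor(gamma):
    """Dimensionless choked-flow factor for a given specific heat ratio."""
    exponent = (gamma + 1.0) / (2.0 * (gamma - 1.0))
    return math.sqrt(gamma) * (2.0 / (gamma + 1.0)) ** exponent


def choked_mass_flow(p0, t0, area, gamma, molar_mass):
    """Mass flow rate [kg/s] through a choked orifice (ideal gas, isentropic)."""
    r_specific = R_UNIVERSAL / molar_mass  # specific gas constant, J/(kg K)
    return p0 * area * flow_factor(gamma) / math.sqrt(r_specific * t0)


def upstream_pressure(mdot, t0, area, gamma, molar_mass):
    """Upstream stagnation pressure [Pa] that passes mdot [kg/s] when choked."""
    r_specific = R_UNIVERSAL / molar_mass
    return mdot * math.sqrt(r_specific * t0) / (area * flow_factor(gamma))


# Illustrative inputs only (hypothetical, not taken from the paper).
GAMMA_XE = 5.0 / 3.0                      # monatomic gas
M_XE = 0.131293                           # xenon molar mass, kg/mol
orifice_area = math.pi * (0.25e-3) ** 2   # hypothetical 0.5 mm diameter orifice
gas_temperature = 1800.0                  # K, heavy particles at wall temperature
mdot = 1.0e-6                             # hypothetical 1 mg/s xenon flow

p0 = upstream_pressure(mdot, gas_temperature, orifice_area, GAMMA_XE, M_XE)
print(f"upstream pressure: {p0:.1f} Pa")
```

Because the heavy-particle temperature is tied to the wall temperature, the pressure upstream of a choked orifice follows directly from the mass flow rate and the geometry in a relation of this kind, which is why the two assumptions are convenient to use together.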

2.3. Plasma Model Equations

Keeper

Orifice


Insert

3. Particle Swarm Optimization Methodology

3.1. Overview

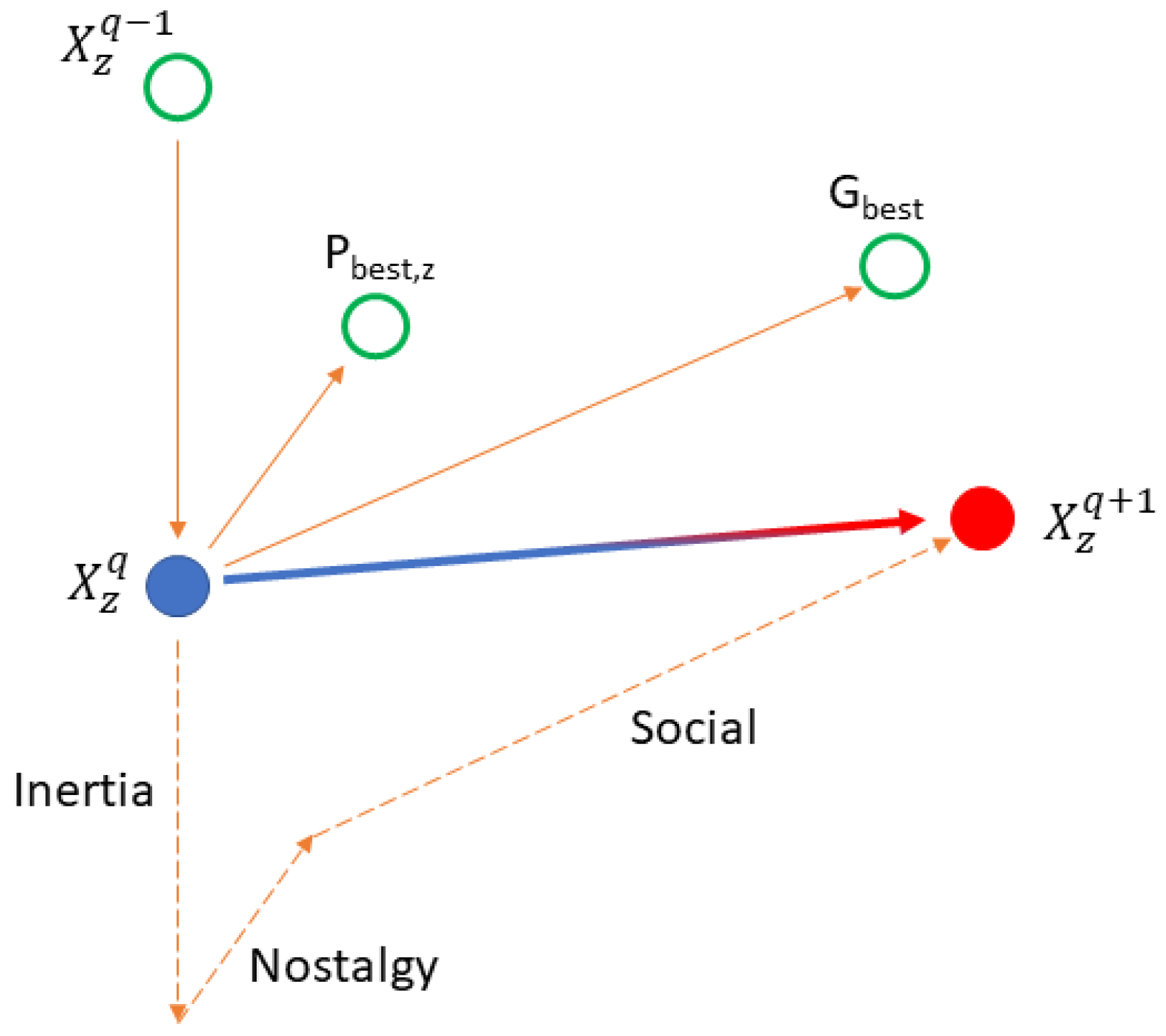

3.2. PSO General Model

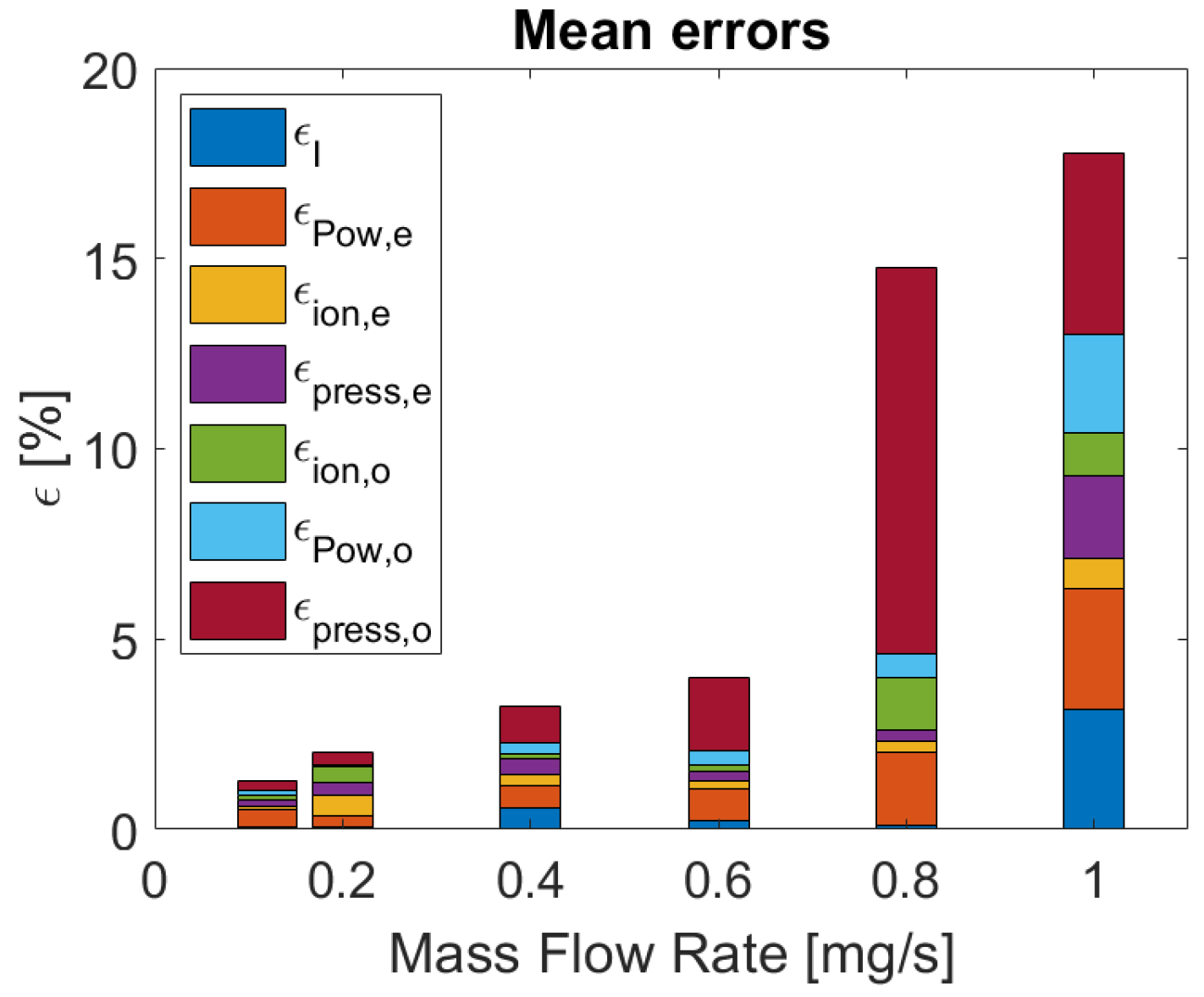

- current residual = RHS − LHS of Equation 9b;

- emitter power residual = RHS − LHS of Equation 9c;

- emitter ions residual = RHS − LHS of Equation 9a;

- emitter pressure residual = RHS − LHS of Equation 9d;

- orifice power residual = RHS − LHS of Equation 2b;

- orifice ions residual = RHS − LHS of Equation 2a;

- orifice pressure residual = RHS − LHS of Equation 2c.
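The residuals listed above (RHS − LHS of each balance equation) can be combined into a single objective that a particle swarm minimizes. The sketch below shows the idea on a toy two-equation system; it does not reproduce the solver of this paper. The placeholder equations, the sum-of-squares objective, the swarm size, the inertia and acceleration coefficients, and all function names are assumptions made for illustration. The index `z` denotes the swarm individual and `q` the optimization iteration, following the subscripts used in this document; personal best (PB) and global best (GB) positions are tracked explicitly.

```python
import random


def residuals(x):
    """RHS - LHS of a toy system (placeholder, NOT the cathode model):
    x0 + x1 = 3 and x0 * x1 = 2."""
    return [3.0 - (x[0] + x[1]), 2.0 - x[0] * x[1]]


def fitness(x):
    """Sum of squared residuals; zero only where every equation holds."""
    return sum(r * r for r in residuals(x))


def pso(fitness, lower, upper, n_particles=40, n_iter=300,
        inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Basic PSO with inertia weight; GB is updated as soon as it improves."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pb_pos = [p[:] for p in pos]              # personal best positions
    pb_val = [fitness(p) for p in pos]        # personal best values
    g = min(range(n_particles), key=pb_val.__getitem__)
    gb_pos, gb_val = pb_pos[g][:], pb_val[g]  # global best
    for q in range(n_iter):
        for z in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[z][d] = (inertia * vel[z][d]
                             + c_personal * r1 * (pb_pos[z][d] - pos[z][d])
                             + c_global * r2 * (gb_pos[d] - pos[z][d]))
                # Clamp the new position to the variable bounds.
                pos[z][d] = min(max(pos[z][d] + vel[z][d], lower[d]), upper[d])
            val = fitness(pos[z])
            if val < pb_val[z]:
                pb_pos[z], pb_val[z] = pos[z][:], val
                if val < gb_val:
                    gb_pos, gb_val = pos[z][:], val
    return gb_pos, gb_val


best, best_val = pso(fitness, lower=[0.0, 0.0], upper=[5.0, 5.0])
print(best, best_val)
```

In a sketch of this kind the lower and upper limits play the role of the min/max ranges assigned to each unknown, and a final objective value close to zero indicates that all equations are satisfied simultaneously. Residuals of very different magnitude would normally need scaling before being summed; that step is omitted here.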

3.3. PSO-Code Based Solver

4. Results

| Variable | Min | Max | Unit |
|---|---|---|---|
| | 1 × | 1 × | |
| | 1 × | 1 × | |
| | 5 | 50 | V |
| | 1 | 4 | |
| | 1 × | 1 × | |
| | 1 × | 1 × | |
| | 1 | 4 | |

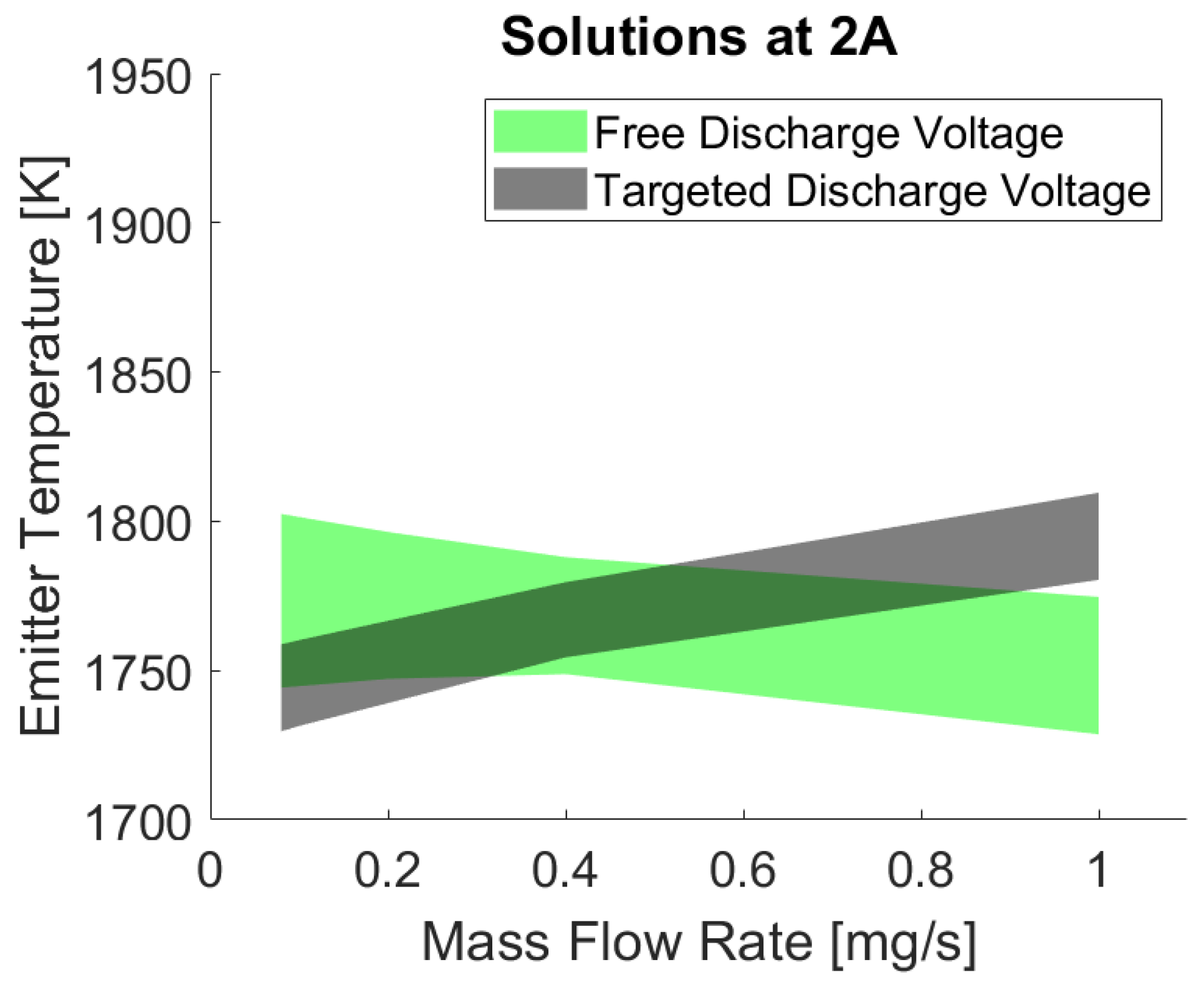

| | 1700 | 1950 | K |
| | 1 × | 6 × | m |
| | 1700 | 1950 | K |

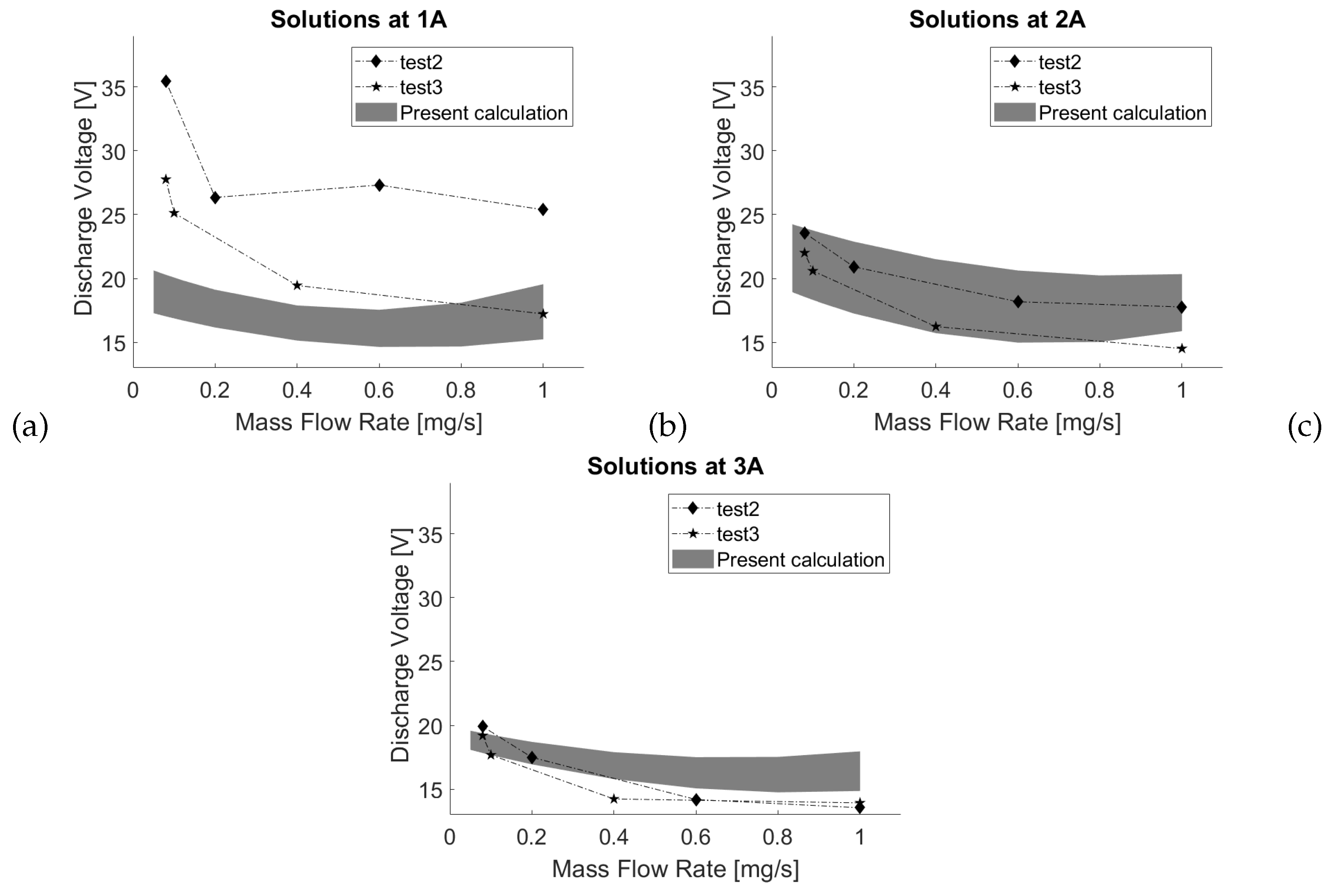

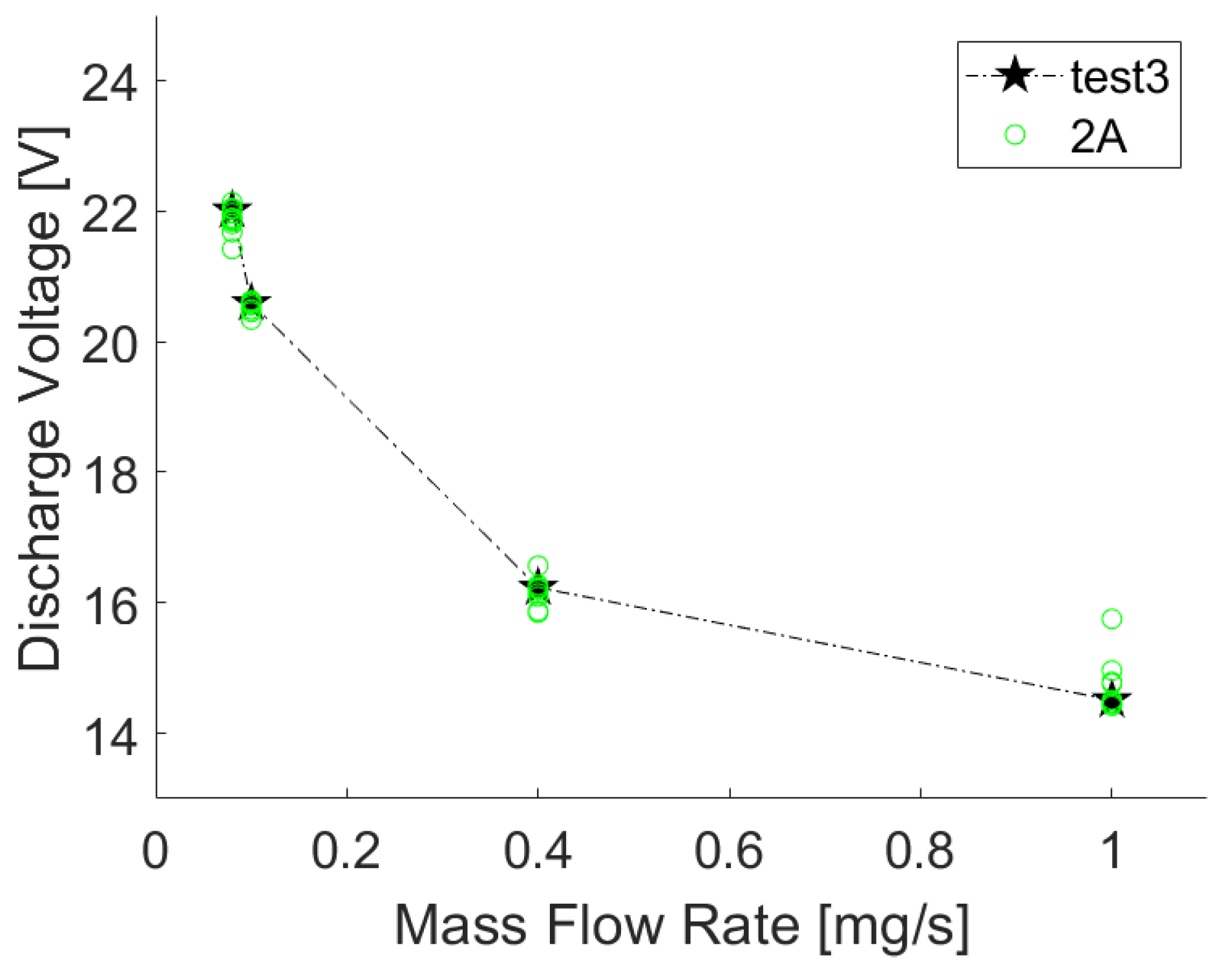

- the confidence bounds range between 2 V and 5 V over the examined current range;

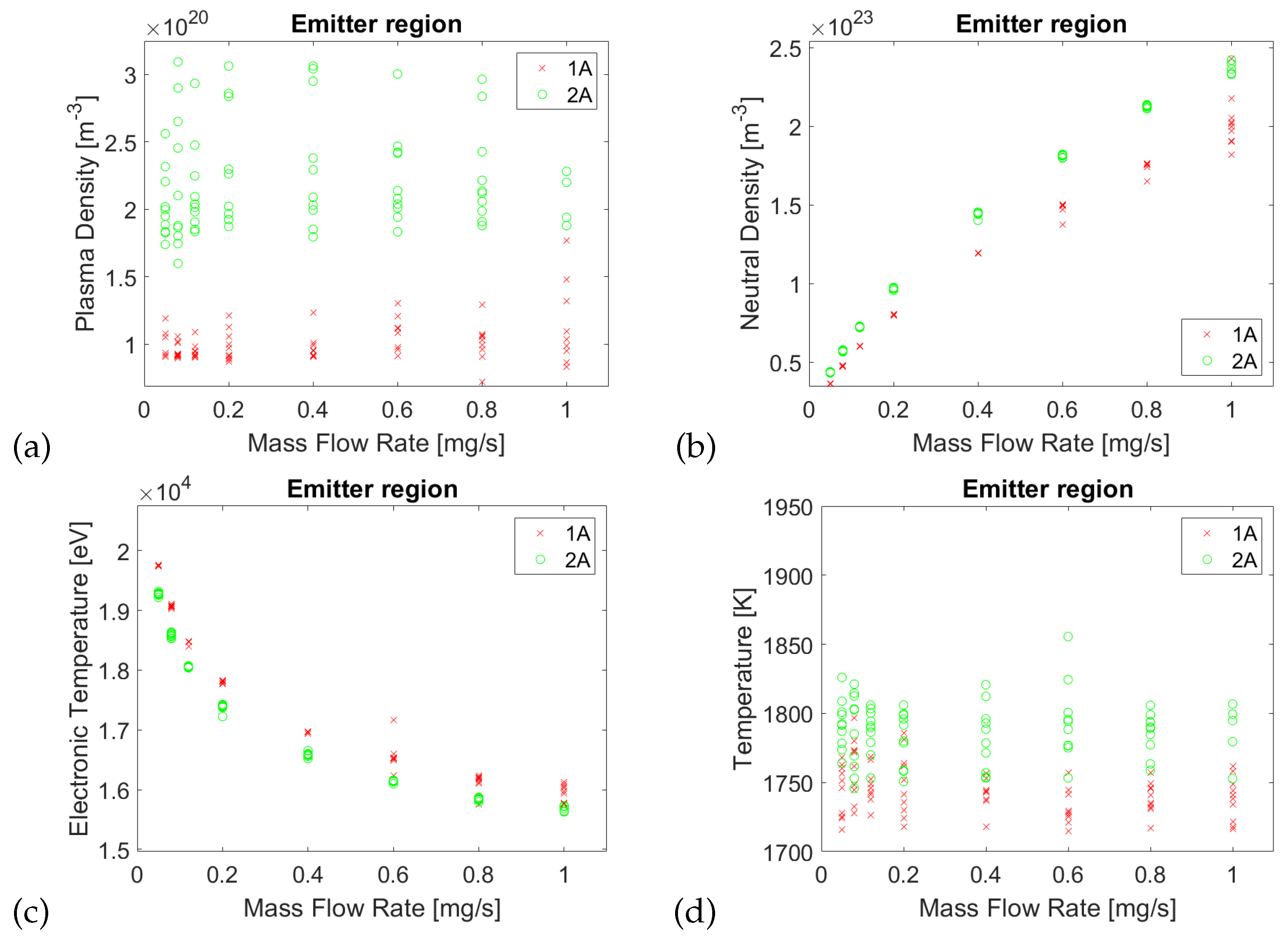

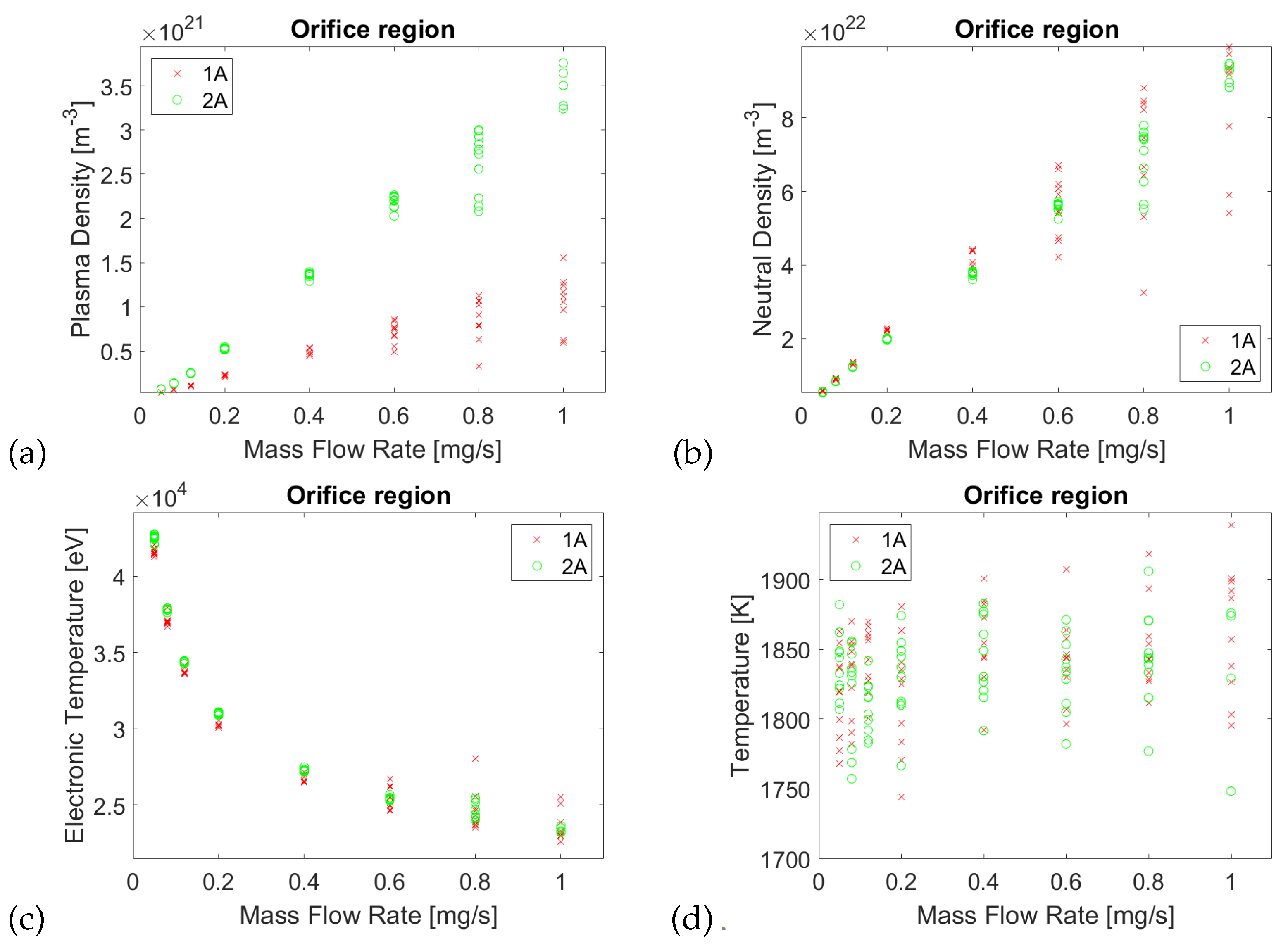

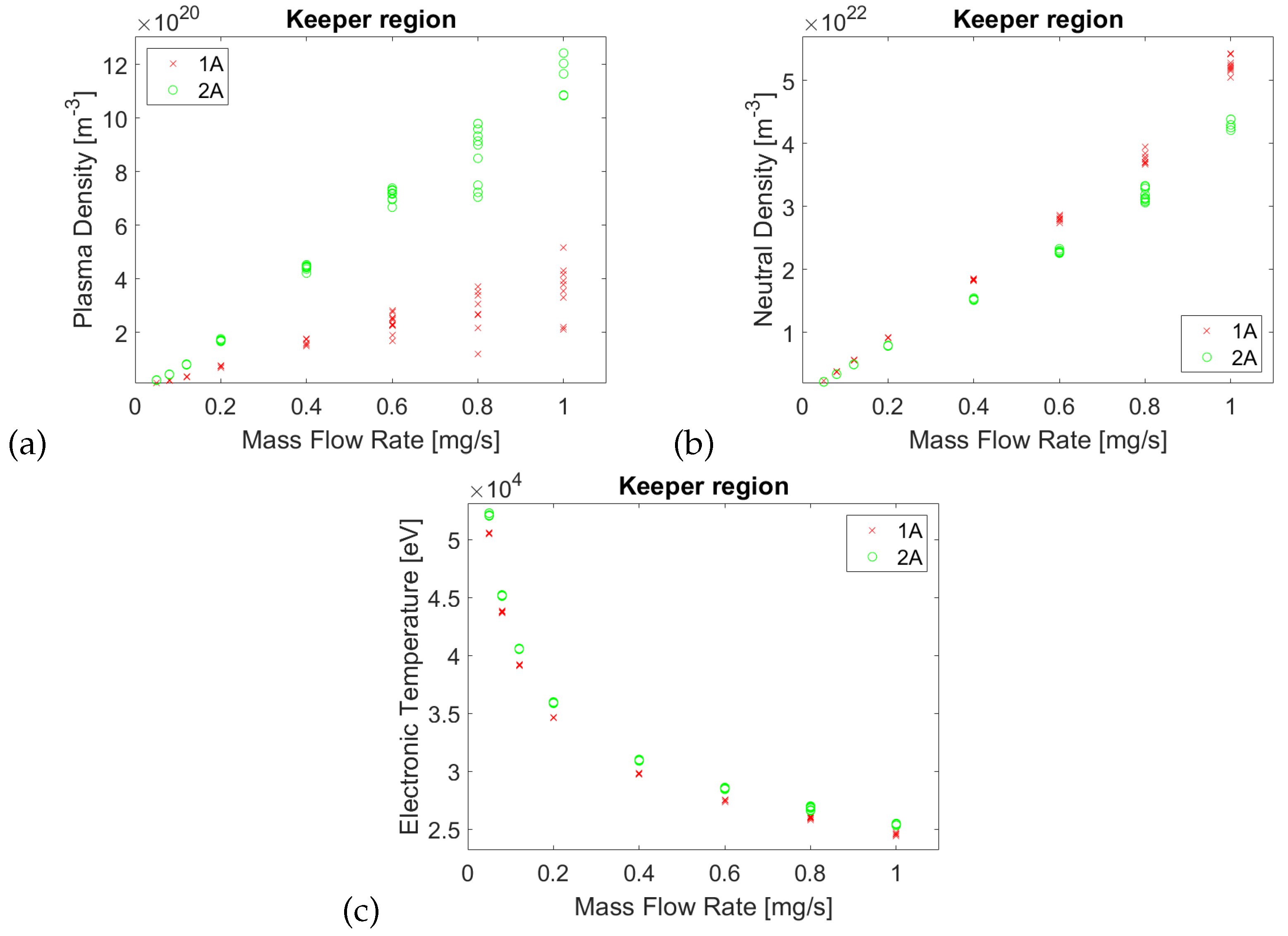

- the discharge current initially decreases with the mass flow rate, as expected, but increases at the highest mass flow rates; this could be attributed to the absence of a second double sheath at the cathode orifice exit, whose potential drop could be negative because the plasma density decreases from the orifice region to the keeper region (see Figures 6a and 7a);

- the shaded zone fully covers the experimental measurements obtained at a current of 2 A and lies slightly above them at 3 A; at 1 A and at the lowest mass flow rates, the current is up to 40% lower than the reference data, likely because the system of equations does not adequately describe the plasma physics under those conditions.

4.1. Computational Effort

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| OHCs | Orifice Hollow Cathodes |

| EP | Electric Propulsion |

| HETs | Hall Effect Thrusters |

| GITs | Gridded Ion Thrusters |

| HEMPTs | High Efficiency Multistage Plasma Thrusters |

| PSO | Particle Swarm Optimization |

| HPTs | Helicon Plasma Thrusters |

| CIRA | Italian Aerospace Research Centre |

| PlasMCat | Plasma Model for Cathodes |

| RHS | Right Hand Side |

| LHS | Left Hand Side |

| PB | Personal Best |

| GB | Global Best |

| Symbols | |
|---|---|
| A | = Area |
| L | = Length |
| r | = Radius |
| d | = Diameter |
| q | = Elementary charge |
| | = Boltzmann’s constant |
| | = Vacuum permittivity |
| | = Residual |
| | = Electric field at the cathode sheath |
| | = Discharge current |
| j | = Current density |
| | = Ionization rate coefficient |
| | = Electron mass |
| | = Ion mass |
| | = Mass flow rate |
| n | = Density |
| | = Ion rate |
| p | = Pressure |
| P | = Power |
| R | = Resistance |
| T | = Temperature |
| V | = Potential |
| | = Work function |
| | = Degree of ionization |
| | = Plasma resistivity |
| | = Collision frequency |
| | = Cross section, standard deviation |
| | = Specific heat ratio |
| M | = Molecular weight |
| | = Viscosity |
| | = Coulomb logarithm |
| D | = Material-specific Richardson-Dushman constant |
| | = Average ionization energy (12.12 eV for xenon) |

| Subscripts | |
|---|---|
| n | = neutral |
| p | = plasma |
| e | = emitter, electron |
| o | = orifice |
| k | = keeper |
| | = electron-neutral |
| | = electron-ion |
| i | = ion moving at Bohm speed |
| | = ion or ionization |
| | = electron recombination |
| | = thermionic emission |
| | = thermal |
| | = effective |
| s | = emitter surface |
| q | = optimization iterations |
| z | = swarm individual |
| w | = wall |
| | = double sheath |

Short Biography of Authors

Giovanni Coppola holds a Master's degree in Space and Astronautical Engineering from the University of Rome, La Sapienza, Italy. He works at CIRA (Italian Aerospace Research Centre), in the Space Division, as a researcher in the Space Propulsion Laboratory. He has worked as a researcher and system engineer on international projects related to hypersonic vehicles and electric propulsion systems. He is mainly involved, as a researcher, in the projects and activities of the CIRA electric propulsion laboratory. His recent activities concern the development of numerical tools for the preliminary design of Gridded Ion Thrusters (GITs), Helicon Plasma Thrusters (HPTs) and High Efficiency Multistage Plasma Thrusters (HEMPTs). Other activities relate to plasma modelling and optimization techniques.

Mario Panelli holds a PhD in Aerospace, Naval and Quality Management Engineering from the University of Naples, Federico II. He currently works at CIRA (Italian Aerospace Research Centre), in the Propulsion and Exploration Division, as a researcher in the Space Propulsion Laboratory. He has worked as a researcher, project engineer and system engineer on various national and international projects on methane-based, hybrid, solid and electric propulsion systems. He is involved in the CIRA electric propulsion projects as project engineer and researcher. His most recent activities concern the design and testing of Orifice Hollow Cathodes (OHCs) and Hall Effect Thrusters (HETs), plasma and erosion modelling, and the preliminary design of Gridded Ion Thrusters (GITs), Helicon Plasma Thrusters (HPTs) and High Efficiency Multistage Plasma Thrusters (HEMPTs).

Francesco Battista holds a PhD in Aerospace Engineering from the University of Pisa. He currently works at CIRA (Italian Aerospace Research Centre), in the Propulsion and Exploration Division, as head of the Space Propulsion Laboratory. He has worked as a system engineer and project manager on various national and international projects, mainly on methane-based, hybrid and electric propulsion systems. He is involved in the CIRA electric propulsion projects as project manager and technical lead. He is author or co-author of more than 100 papers published in international journals and conference proceedings.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).