Submitted: 19 June 2024

Posted: 21 June 2024

Abstract

Keywords:

Author Summary

Introduction

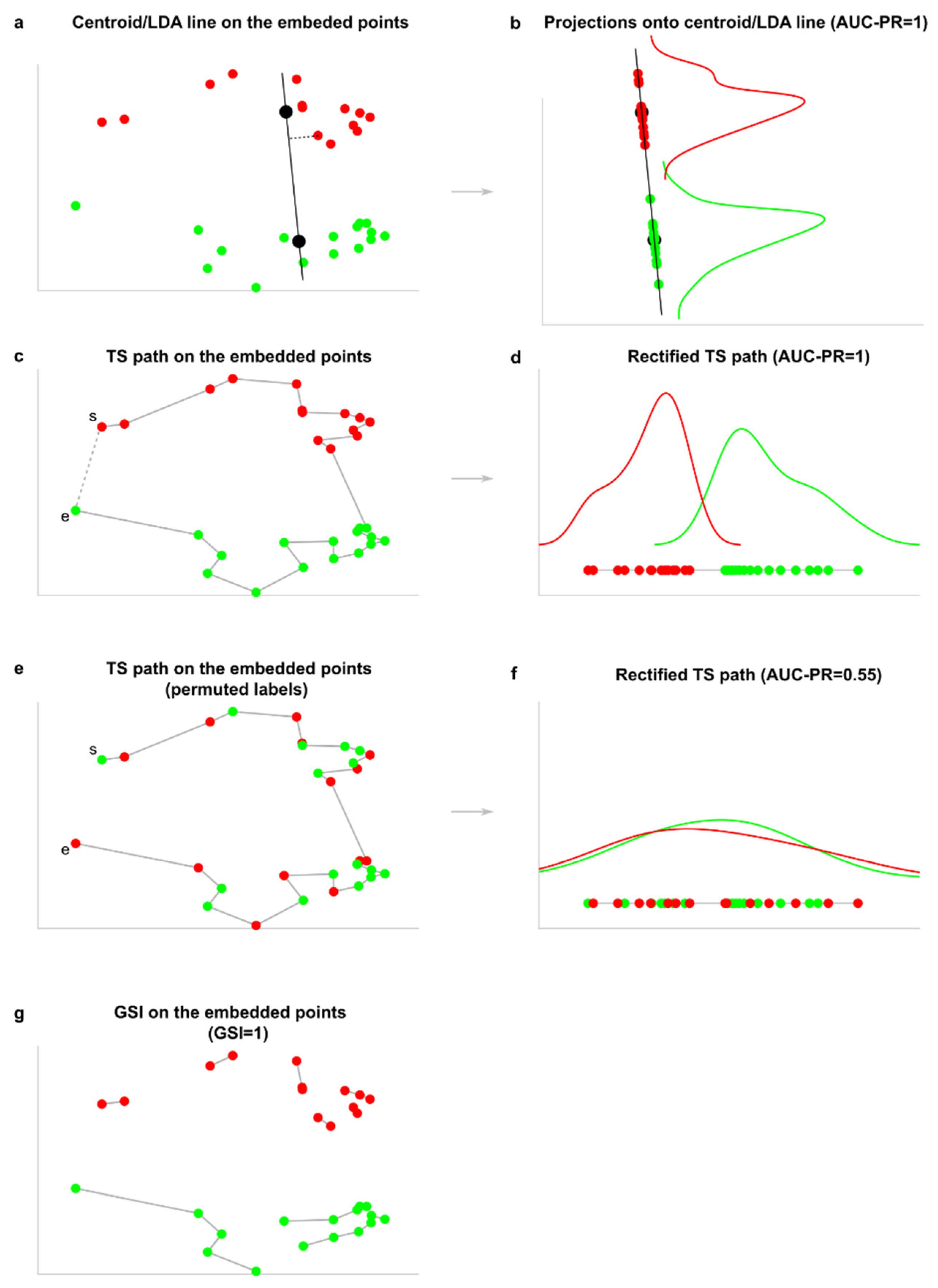

Geometric Separability of Mesoscale Patterns in Data Represented in a Two-Dimensional Space

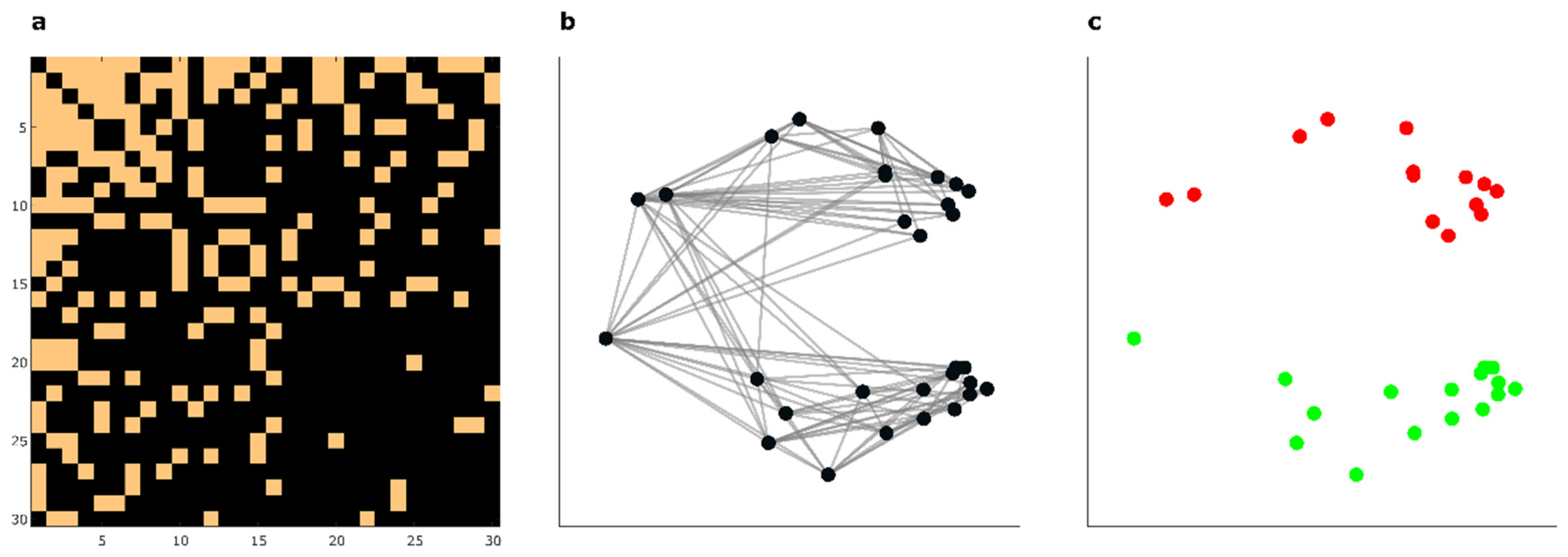

Geometric Separability of Mesoscale Patterns in Complex Networks Represented in a 2D Space

Results

Innovations of This Study

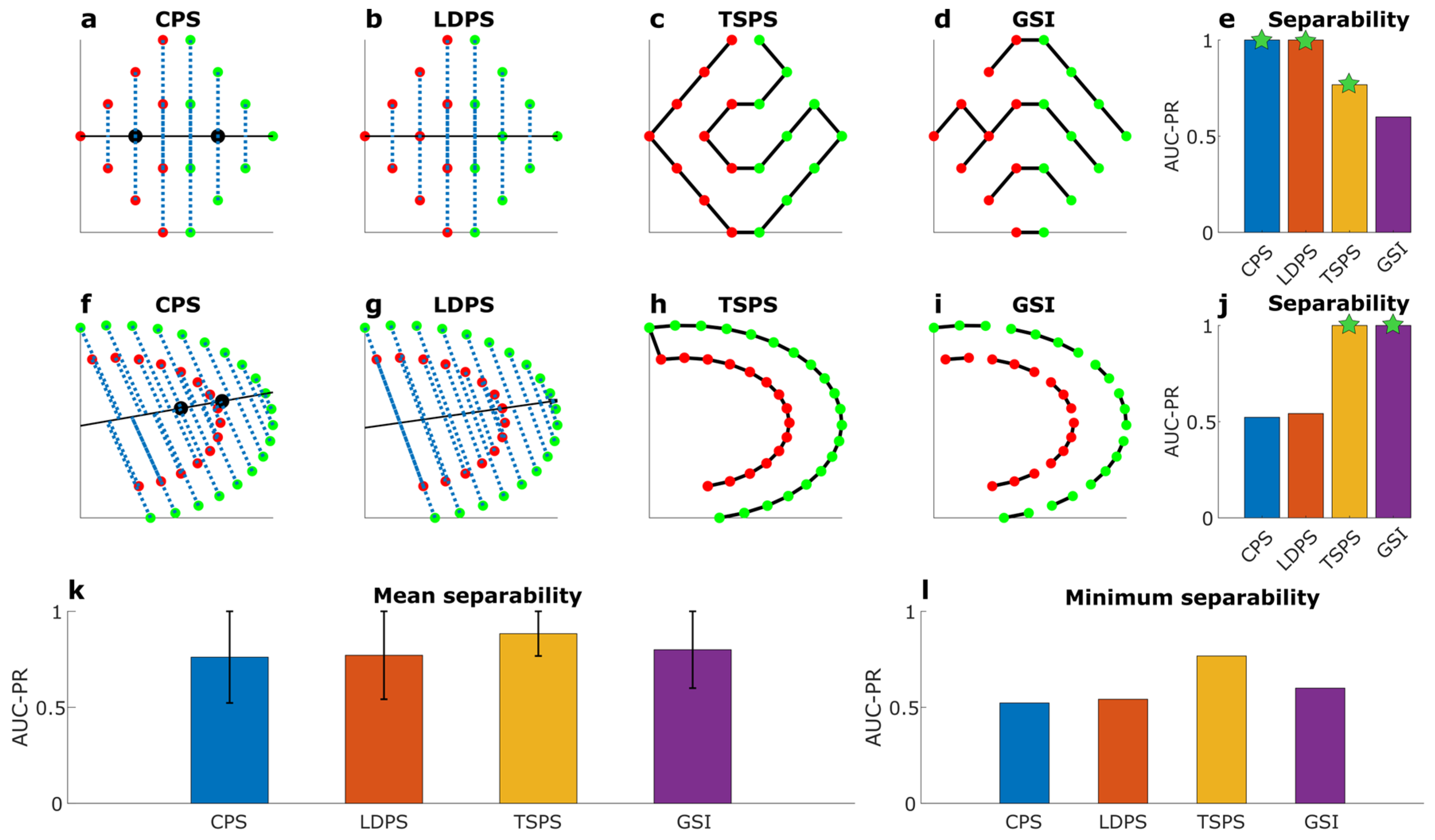

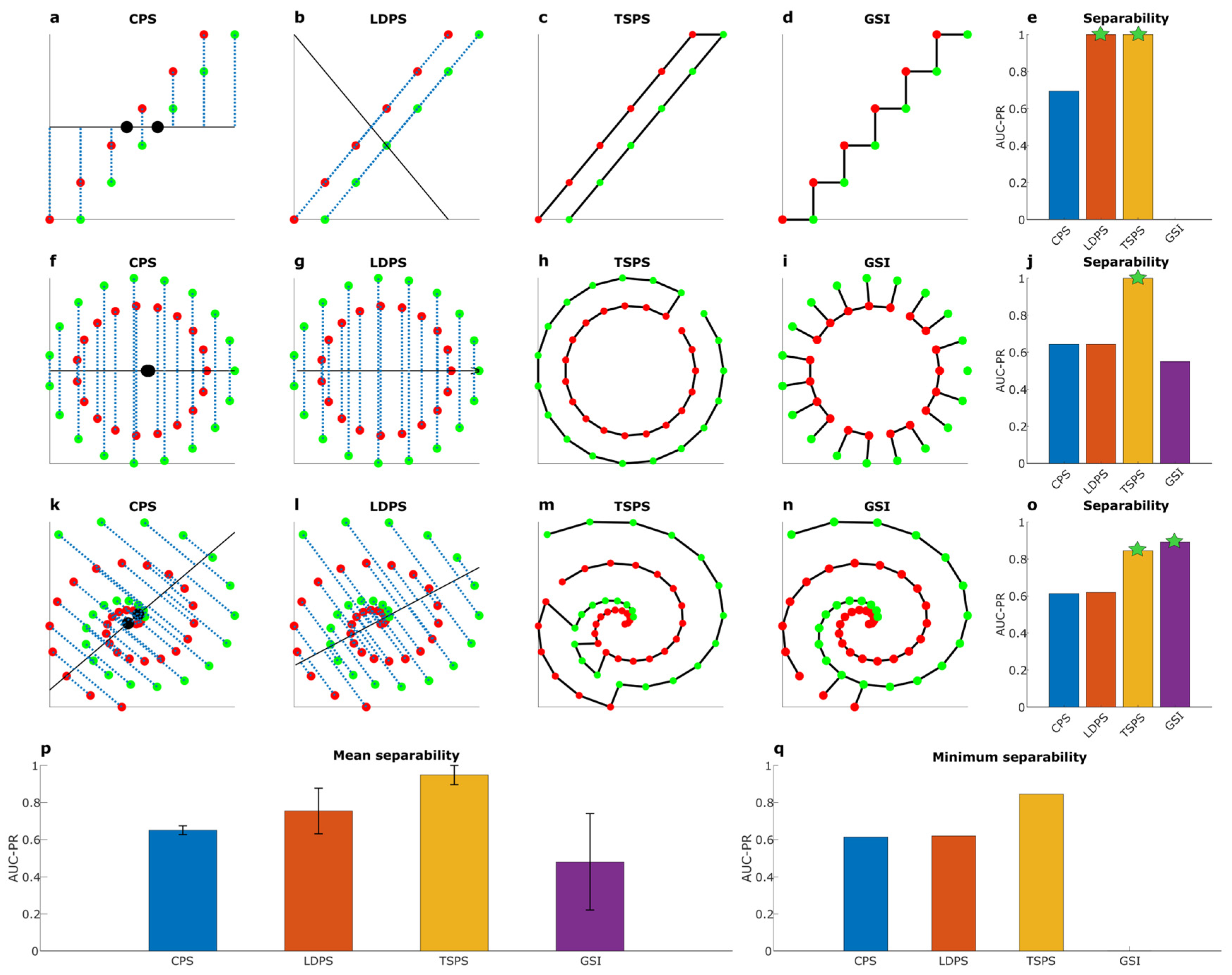

Empirical Evidence on Artificial Datasets and the Adaptive Geometrical Separability (AGS)

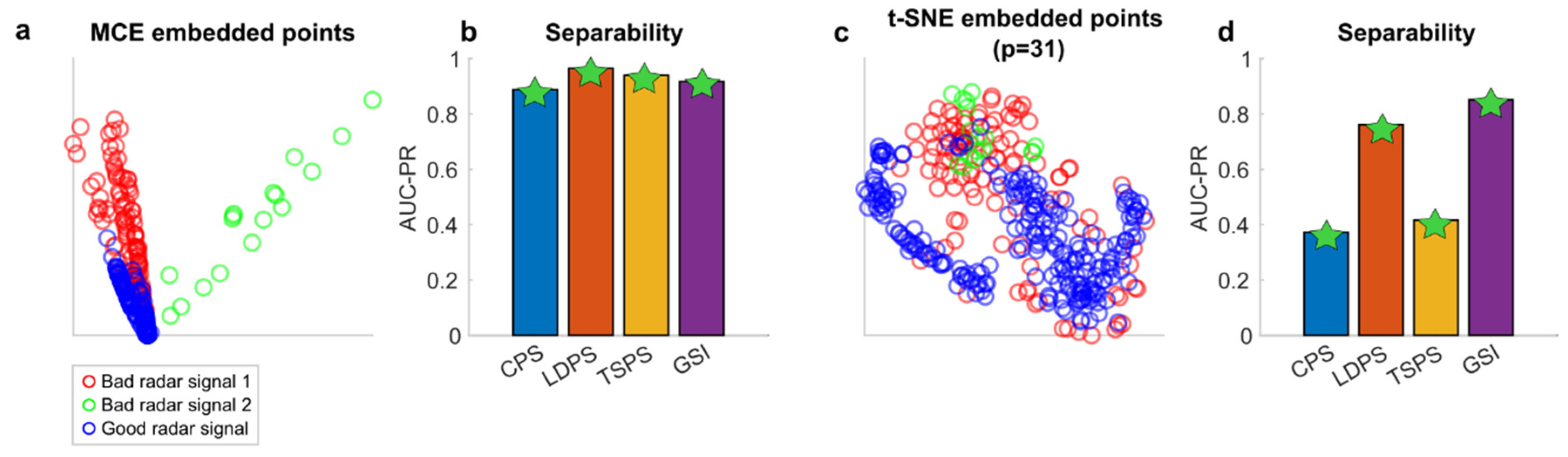

Empirical Evidence on Real Complex Multidimensional Data

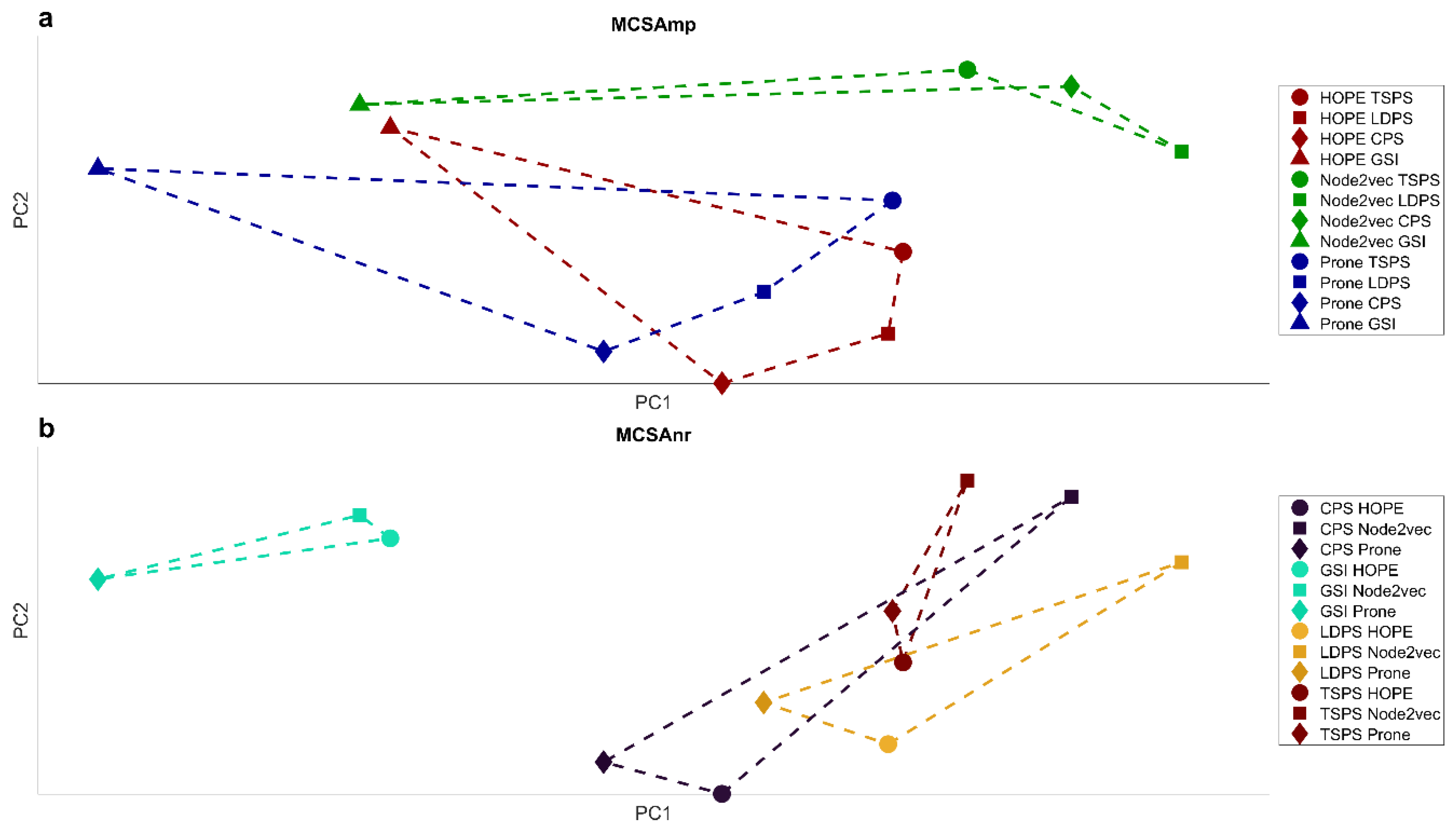

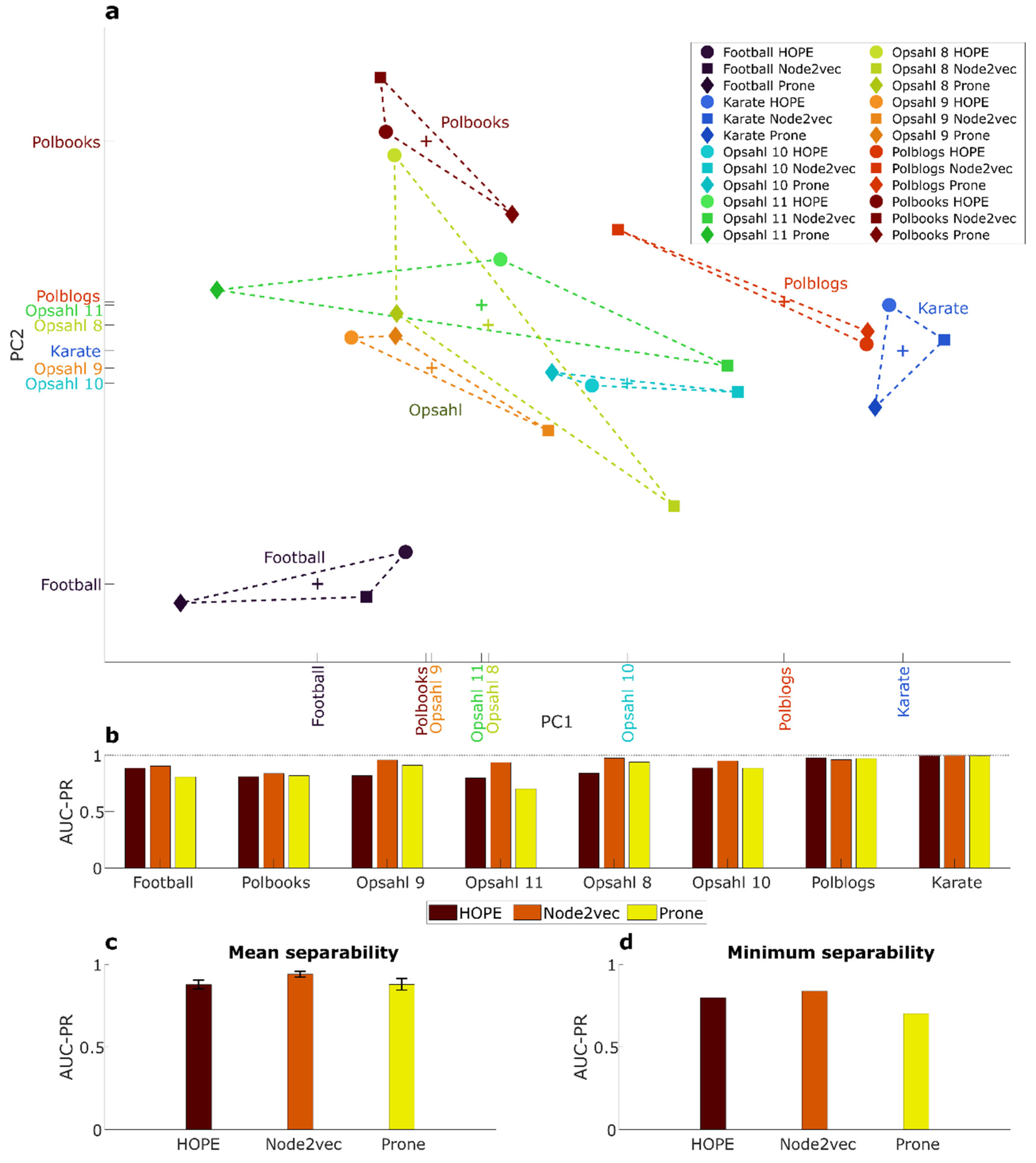

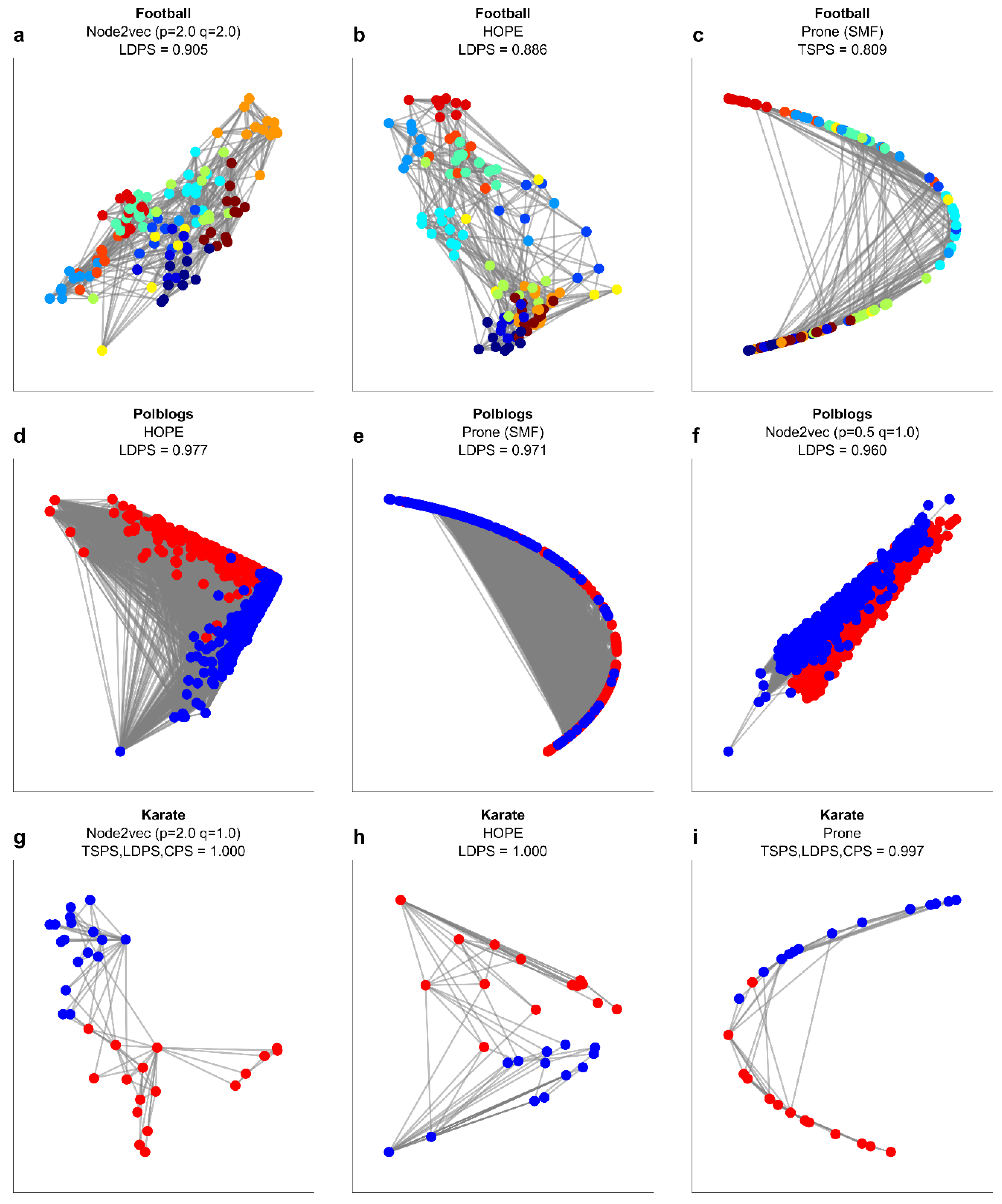

Empirical Evidence on Real Complex Networks

Discussion

Methods

Data and Algorithms

Synthetic Data Generation

Real Complex Multidimensional Data

Real Complex Networks

Network Embedding Methods

Geometric Separability Indices

Centroid Projection Separability (CPS) and Linear Discriminant Projection Separability (LDPS) Indices

Geometrical Separability Index (GSI)

Travelling Salesman Projection Separability (TSPS)

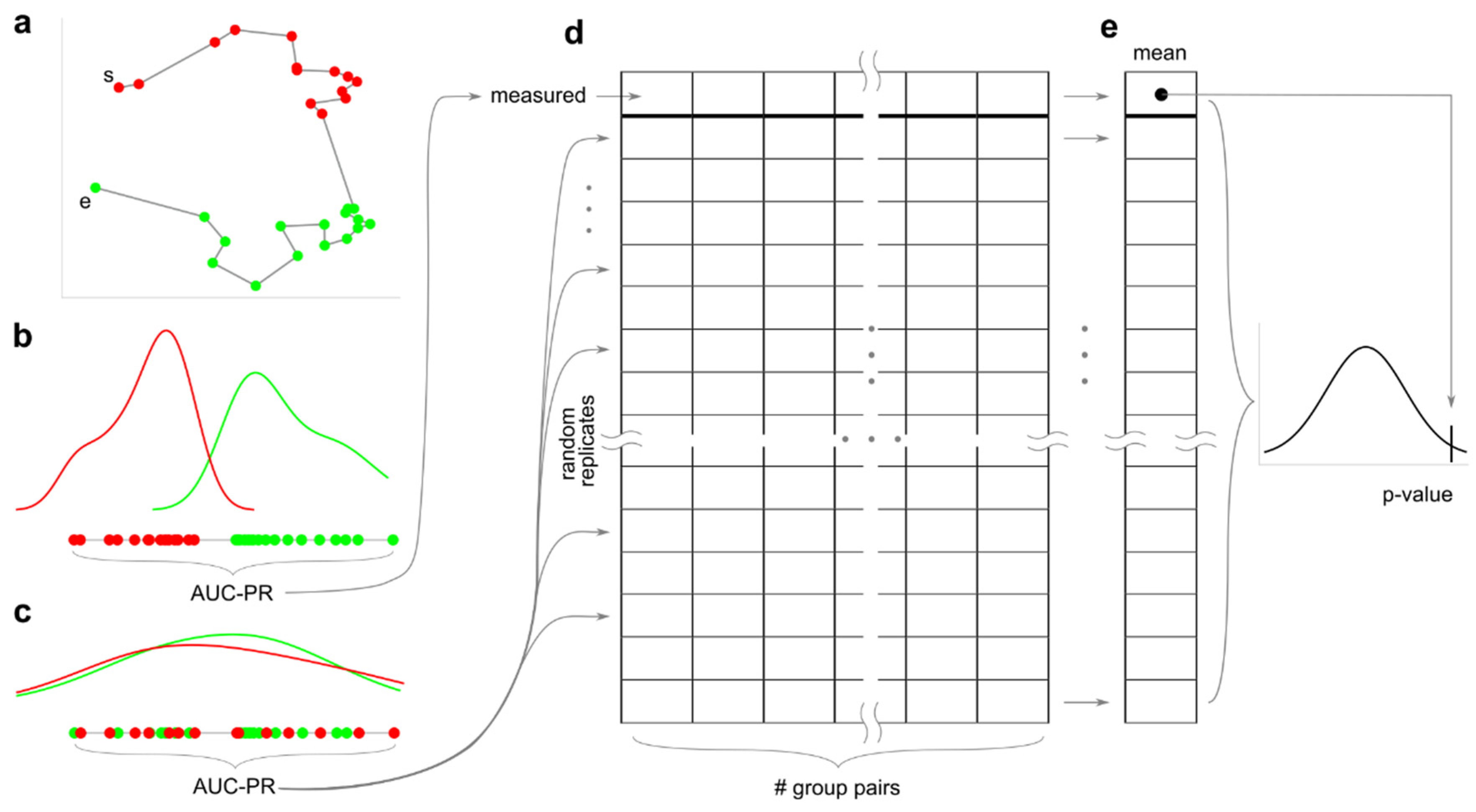

Statistical Significance of the Geometric Separability Measures

Adaptive Assessment of the Community Separability

Hardware and Software

Data Availability

- Football, Karate, Polbooks, and Polblogs: http://www-personal.umich.edu/~mejn/netdata/

- Opsahl (all networks): https://toreopsahl.com/datasets/

Code Availability

Author Contributions

Funding

Acknowledgements

Competing Interests

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).