1. Introduction

In the current data-rich environment, many predictors are often available in time series forecasting. However, conventional methods have encountered serious difficulties in exploiting the information contained in such high-dimensional data. In particular, the curse of dimensionality often leads to unreliable forecasts when the conventional methods are applied naively. To exploit the information in the high-dimensional predictors, the use of latent factors has emerged among the first successful approaches. See, for instance, Pena and Box [

1], Stock and Watson [

2,

3], Lam

et al. [

4], and Chapter 6 of Tsay [

5]. These factor-based methods are also widely used in the econometric analysis of high-dimensional time series [e.g.

6,

7,

8,

9].

Nevertheless, existing factor-based methods may still be inadequate, and several issues have been constantly observed in practice. For example, weak factors are prevalent in real-world data and extracting factors using all predictors may not be optimal [

10]. In addition, some factor models may still contain many parameters when the factor dimension is not small. On the other hand, Kim and Swanson [

11] reported, from an extensive experiment, that combining shrinkage methods with factor-based approaches can yield more accurate forecasts among many targeted macroeconomic time series. This implies that there might exist some irrelevant predictors in an empirical application. The recently proposed scaled principal component analysis of Huang

et al. [

12] and the supervised dynamic PCA (sdPCA) of Gao and Tsay [

13] partially remedy this issue by constructing factors in a supervised fashion, so that the effects of irrelevant predictors are reduced. Limited experience indicates that when the number of predictors far exceed the sample size or when the predictors are highly correlated, the performance of such supervised approaches can still be compromised.

To efficiently extract predictive factors from high-dimensional data, in this paper, we propose a new method that blends variable selection in factor estimation. The proposed method uses the group orthogonal greedy algorithm (GOGA, Chan

et al. 14) to screen variables that are relevant to the prediction problem at hand, and apply the sdPCA of Gao and Tsay [

13] to extract factors from the selected variables. The orthogonal greedy algorithm, as well as its variant GOGA for grouped predictors, have been employed for variable selection for high-dimensional linear models, especially with dependent data and highly correlated predictors [

14,

15,

16]. Since both GOGA and sdPCA serve to minimize the effects of noisy irrelevant variables, the combined procedure, which we call GO-sdPCA, can effectively construct highly predictive factors.

It is worth pointing out that the novelty of our method lies in a key step that successfully combines variable selection with factor-based methods. Indeed, variable selection techniques commonly employed such as the Lasso of Tibshirani [

17] or the OGA of Ing and Lai [

16] tend to select a sparse representation of the data. These methods will select only a few variables when many variables are driven by common factors and thereby highly correlated. For factor estimation, this can be undesirable since factor recovery typically benefits from the many variables that are loaded on the shared factors [see, e.g. [

4,

13]. This issue can be even more pronounced when the factors are strong, in which case the factors can be accurately recovered by employing many predictors. To circumvent this predicament, we propose to use a “peeling” technique, which repeatedly apply GOGA to the data after previously selected variables are dropped from the set of candidate predictors. In this way, our method can select more variables in the model and discriminate between relevant and irrelevant predictors. In

Section 4, we intoduce the proposed method in more details. To better motivate the proposed method, we briefly review the orthogonal greedy algorithm (OGA) and its variants in

Section 2. We also review the high-dimensional information criteria that are often used to balance the model complexity and the model fit along OGA iterations. The sdPCA is reviewed in

Section 3, where we particularly emphasize the method’s design principles.

We use simulation studies and some empirical analyses to examine the performance of the proposed GO-sdPCA approach. In addition to comparing with some factor-based methods, such as the diffusion index approach of Stock and Watson [

2,

3], the sdPCA, and the time series factor model of Lam

et al. [

4], we also compare GO-sdPCA against the Lasso and the random forests of Breiman [

18], both of which are widely-used and versatile tools in machine learning. We found that GO-sdPCA improves upon most of factor-based approaches and fares well with selected competing methods, even when the number of relevant variables is comparable with the sample size. The simulation results are discussed in

Section 5.

In

Section 6, we apply the proposed method to two real datasets. The first dataset is the well-known FRED-MD macroeconomic data [

9]. Time series forecasting has been a vital topic both in econometric research and in policy-making pertaining to this data set. The other dataset contains the hourly particulate matters (PM

2.5) measurements in Taiwan. The PM

2.5 data play an important role in the environmental studies as well as informing public health policies. However, since its measurements are taken over time and space, the dataset is naturally of high dimension and presents serious challenges to forecast. Our findings demonstrate that the proposed method consistently offers more accurate forecasts than the competing methods, validating its practical utility.

2. Orthogonal Greedy Algorithm

In this section, we briefly review the (group) orthogonal greedy algorithm. The OGA is an iterative algorithm that sequentially chooses predictors to form a regression model. Theoretically grounded in approximation theory [

19], the OGA is also easy to implement computationally. In the statistical learning and high-dimensional linear regression literature, Barron

et al. [

20] and Ing and Lai [

16] analyzed its convergence rate as well as variable screening capability. Its generalization to grouped predictors was studied by Chan

et al. [

14] with application to threshold autoregressive time series models.

Throughout this paper, we denote by

the data of the response variable we are interested in, where

n is the sample size. We also have data for the

p predictors,

, where

. The OGA is defined as follows. Starting with

and

, the OGA computes, at iteration

,

and updates

where

is the orthogonal projection matrix associated with the linear space spanned by

. Clearly, the OGA sequentially selects variable to include in the model; the set

denotes the index set corresponding to the predictors already selected at iteration

k. Intuitively, in each iteration OGA selects the variable that best explains the current residuals. There are numerical schemes to speed up the computation of the residuals such as using sequential orthogonalization. We refer to Ing and Lai [

16] and Chan

et al. [

14] for details.

For the purpose of this paper, we will make use of a slight generalization of the OGA that deals with grouped predictors. Chan

et al. [

14] studied the group OGA (GOGA) and applied the method to estimate threshold time series models. Consider the

j-th predictor,

. Instead of being a scalar predictor, it contains

component predictors,

, thereby forming a “group” predictor. Then, by substituting

in (

1), the procedure becomes the GOGA.

After

iterations, the GOGA selects the (group) predictors corresponding to

. Bias-variance trade-off manifests when selecting the number of predictors to be included, as a large

may lead to over-fitting. To select a desirable model complexity, Ing [

15] suggests using the high-dimensional Akaike information criterion (HDAIC). The HDAIC of the model at iteration

k is defined as

where

, and

C is a constant to be tuned. Then the model selected by HDAIC is

Theoretically, Ing [

15] proved that the resulting model selected by OGA+HDAIC adapts to the underlying sparsity structure. In particular, consider the high-dimensional regression model,

Then the conditional mean squared prediction error of OGA+HDAIC is of rate

for some

and positive constants

. See Theorem 3.1 of Ing [

15] for details. Note that these rates are minimax optimal, and, are automatically achieved by OGA+HDAIC without knowledge about the sparsity pattern. In this paper, we will leverage this property to select good predictors in constructing forecasts. In this way, the variables used in our method is carefully selected in a supervised fashion, which is more effective than employing all predictors in the high-dimensional data.

3. Supervised Dynamic PCA

Next, we review the supervised dynamic PCA (sdPCA) proposed by Gao and Tsay [

13] for forecasting. The sdPCA is a factor-based forecasting approach, which took a major role in the literature of time series forecasting. Tailored to incorporate dynamic information, the sdPCA has been shown to outperform some existing factor-based approaches such as the diffusion index model of Stock and Watson [

2,

3], and the scaled PCA of Huang

et al. [

12].

We first outline the sdPCA procedure. Given a forecast horizon

h, the sdPCA first constructs intermediate predictions using each individual predictor and its lagged values. For instance, one can regress

on

, where

is a user-specified lag value, and obtain the fitted values

where

is the intercept estimate and

are the slope estimates. For different

j’s, the number of lags

used in the regression can differ. For instance,

can be selected by the BIC. Then, with the constructed intermediate predictions,

’s, the sdPCA apply PCA to estimate a lower dimensional factor

, where

. Therefore, the data to which the PCA applies,

, are in the same unit (as

), which scales the variables according to their predictive power.

Finally, one may employ a linear model for the predictive equation,

where

and

are intercept and slope estimates respectively, and ∼ signifies that we run the linear regression while the underlying relationship of

and

may not be exactly linear. Gao and Tsay [

13] also suggests to use Lasso to estimate (

5) instead of the usual linear regression if the number of common factors is large. Additionally, one can also include some lags of

is (

5) and let Lasso perform variable selection.

The sdPCA has several advantages over the conventional PCA methods. First, the PCA is not scale-invariant. On the contrary, the sdPCA constructs predictors that are in the same unit, which naturally scales the predictors according to their predictive capabilities. Second, instead of performing PCA directly on contemporaneous data

, the sdPCA sources from the lagged information in

,

, where

. For the conventional PCA to use the lagged information, one often needs to augment the data by appending the lagged variables so that PCA is performed on

, which leads to even higher dimensionality. Lastly, the usual PCA is performed in an unsupervised fashion, whereas the sdPCA constructs the factors in a supervised fashion. While the usual principal component directions are not necessarily predictive of the response, factors extracted by sdPCA can potentially yield better forecasts. In fact, Gao and Tsay [

13] have showed that sdPCA has a lower mean square forecasting error than the approaches of Stock and Watson [

2,

3] and Huang

et al. [

12].

In this paper, we employ the sdPCA to capitalize on the aforementioned properties. However, for noisy high-dimensional data, the performance of sdPCA may be severely compromised. Hence, careful dimension reduction before applying the sdPCA is desirable. In the next section, we describe the proposed procedure, which combines GOGA and HDAIC with sdPCA to improve the accuracy of prediction.

4. The Proposed GO-sdPCA

In this section, we introduce the proposed method, GO-sdPCA, which screens variables by the GOGA and HDAIC and then estimates factors by the sdPCA approach. In order to facilitate factor recovery, we apply a “peeling” technique to select more factor-relevant variables in the new procedure.

To tackle the difficulties encountered by the factor-based approaches when applied to high-dimensional data, our method begins with dimension reduction by employing the GOGA introduced in

Section 2. Because of the serial dependence in the data, it is beneficial to select variables based not only on cross-sectional correlation but also on the lagged information. To this end, for each predictor

, we consider the group predictor,

where

is a pre-specified integer for the number of lagged values to consider, and

is the forecast horizon. Let

be the response vector. Then, we employ the GOGA algorithm in

Section 2, with

and

substituted by (

6) and (

7), respectively. Since each group predictor already incorporates past information, the GOGA algorithm can select variables while accounting for the dynamic information.

We also employ the HDAIC (defined in (

2)) after GOGA to prevent the inclusion of irrelevant variables. Since the above procedure depends on the number of GOGA iterations

, the HDAIC constant

C in (

2), the number of lags

used in forming the group predictors, and the pool of candidate predictors

, we conveniently denote it as a set-valued function,

where

is the index set outputted by GOGA+HDAIC, as in (

3). For completeness, we summarize the GOGA+HDAIC method introduced in Section (

Section 2) in Algorithm 1.

|

Algorithm 1:GOGA+HDAIC () |

|

Input: Number of maximum iterations , HDAIC parameter C, number of lags , candidate set

Initialization: ; selected index

-

1

for do

-

2

Select

where is defined in ( 6). -

3

Update

-

4

Update , where is the projection matrix associated with

-

5

end -

6

Choose, as in ( 3), where HDAIC is defined in ( 2) with C set to the inputted value.

Output: selected indices

|

Because the orthogonal residuals are used in the greedy search, GOGA tends to differentiate highly correlated predictors and only selects those predictors that explain distinct (close to orthogonal) directions of the response. However, in factor estimation, the relevant variables loaded on the common factors tend to be highly correlated and failing to employ these correlated predictors may lose some statistical efficiency for the inference of the underlying factors (the blessing of dimensionality, [

4,

13]). Therefore, to encourage GOGA to screen the factor-relevant, correlated predictors, we propose a technique, referred to as “peeling,” that allows careful inclusion of more variables. The idea is to repeatedly apply GOGA+HDAIC, with variables selected from previous implementations discarded from the candidate set. Hence, in each iteration, GOGA+HDAIC is forced to select a new set of variables that best predict

. Formally, the peeling algorithm is described in Algorithm 2. Let

M be the number of peeling iterations. In the following, we denote the peeling procedure, which depends on

M,

, and

C, by the set-valued function

. Namely,

|

Algorithm 2:Peeling () |

|

Input: Number of peeling iterations M, number of GOGA iterations , HDAIC parameter C, number of lags in group predictors

Initialization: ,

-

1

for do

-

2

-

3

Update

-

4

Discard selected variables from the candidate set

-

5

end

Output: selected indices

|

It is worth noting that peeling is different from simply running GOGA for many iterations. Although GOGA also selects distinct variables along its iteration because of orthogonalization (that is, GOGA does not revisit any previously selected variable), peeling, which discards previously selected variables from the candidate set, would produce very different results. For high-dimensional data, running many iterations of GOGA will lead to extremely small residuals, which may no longer carry sufficient variations to detect the remaining relevant variables (with finite sample). On the other hand, peeling

re-starts GOGA in every iteration, using

instead of the previous residuals in the GOGA algorithm. Since GOGA is re-started with a smaller pool of candidate variables, the residuals in peeling will not shrink to zero after many (potentially more than

n) variables are already contained in

. We also remark that the idea of peeling is akin to the random forest which uses randomly selected variables in building each tree. Hence, peeling can be used to detect the weak predictors in the case where a few of the predictors are highly predictive and many others are only weakly predictive [see, e.g. Chpt. 8 of [

21].

After variable selection by peeling, sdPCA is employed to estimate the factors

from the selected variables. Let

be the number of lags used in constructing the intermediate predictions (

4). Finally, the predictive model

is estimated by OLS or Lasso, where

is the number of autoregressive variables. The above procedure, which combines GOGA, peeling, and sdPCA, is called GO-sdPCA.

In closing this section, we briefly discuss the selection of the number of lags. In practice, the number of lags used in GOGA, used in the sdPCA step, and in the predictive equation can all differ. If is large, we can detect the predictors whose distant lags are predictive of the response. However, each step of GOGA iteration will then consume more useful variations, so GOGA will consequently select a smaller number of grouped predictors, which can be counterproductive for the subsequent factor estimation. Thus it is advisable to use a smaller in the screening step, which leads to more predictors selected, and use a larger in the sdPCA step to extract information from the past.

5. Simulation Studies

In this section, we assess the finite-sample performance of the proposed GO-sdPCA method via simulation studies. Some existing factor-based methods are employed as benchmarks, such as the diffusion index approach of Stock and Watson [

2,

3], the time series factor model of Lam

et al. [

4], and the supervised dynamic PCA of Gao and Tsay [

13]. These benchmarks are referred to as SW, LYB, and sdPCA, respectively.

After factor estimation, the predictive model

is estimated by OLS, where

q is an integer specified later, and

is constructed using different approaches. For the proposed GO-sdPCA (shorthanded as GsP* hereafter), we set

in the HDAIC definition (

2) and use 10 peeling iterations. In each peeling iteration, GOGA is applied with

set to 2 for the group predictors. Then, in the sdPCA step,

r factors are extracted with

lags used in constructing the intermediate predictions in (

4). To demonstrate the usefulness of the peeling technique, we also consider implementing GO-sdPCA by naively combining GOGA+HDAIC with sdPCA. That is, we only run one peeling iteration and the variables selected are exactly the ones selected by GOGA+HDAIC. This method is denoted as GsP in the sequel. Similarly, the forecasts of sdPCA are constructed by estimating (

10) with the factors estimated as in

Section 3. The time series factors of Lam

et al. [

4] are estimated using the eigenanalysis of a non-negative definite matrix computed from the autocovariance matrices at nonzero lags. In our implementation,

q lags of past predictors are used by LYB in the eigenanalysis. Finally, SW follows that of Stock and Watson [

2,

3] and uses PCA to extract the factors.

In addition, we employ some commonly used alternatives, including the Lasso of Tibshirani [

17] and the random forests of Breiman [

18] as competing methods. The Lasso is a versatile tool for building sparse linear regression models, while the random forest (RF) excels in capturing non-linear relationships. Recently, Chi

et al. [

22] investigated the asymptotic consistency of RF for high-dimensional data. See also Saha

et al. [

23] for application of RF to dependent data. The tuning parameters for the Lasso are selected by the BIC as suggested by Medeiros and Mendes [

24], whereas we adopt the hyper-parameters for RF as recommended by the

randomForest [

25] package in

R. Therefore, about one-third of the candidate variables is randomly selected at each split. For both methods,

q lags of the dependent variable and the predictors are used for fair comparison.

5.1. Simulation Designs and Results

In the simulations, we consider three data generating processes (DGP) to generate the synthetic data. Throughout the experiments, we use one-step-ahead forecasts () where each method makes a forecast for , which is not in the training sample. The root mean squared forecast errors, averaged over 500 Monte-Carlo simulations, are used for comparing different approaches.

DGP 1. Let

, where

is the number of underlying factors. The predictors

are generated by

where

are independent

p-dimensional

t-distributed random vectors with independent components and five degrees of freedom, and

has independent

entries, with

rows randomly set to zero. That is,

only has

s nonzero rows. Randomly generate

and

via

and

. Finally,

where

are independent standard Gaussian.

In this DGP, the parameter

s dictates both the number of relevant variables and the strength in recovering the factors. The larger

s is, the stronger the factors are. Factor strength plays a critical role in factor recovery [

2,

3,

4]. In practice,

s is seldom known. Therefore, we will consider the cases

, where

n is the sample size, to see whether the methods adapt well to various levels of factor strength.

Table 1 reports the root mean squared forecast error (RMSFE) averaged over 500 Monte Carlo simulations. Across all sparsity levels

, the proposed GsP* delivered the most accurate forecasts and the sdPCA ranks the second. This suggests that the proposed peeling procedure and GOGA improves accuracy in forecasting. The GsP, which naively combines GOGA+HDAIC with sdPCA, shows limited forecasting capabilities with RMSFE being higher than those of the Lasso. This indicates, again, the peeling strategy markedly improved the forecasting performance by selecting more factor-relevant variables. The other forecasting methods, including SW, LYB and RF, seem to suffer from the effect of employing many irrelevant variables in the high-dimensional data. We remark that DGP 1 is essentially a sparse model because

used is relatively small. Therefore, it is not surprising to see that Lasso fares reasonably well.

DGP 2. In this DGP,

is generated via a vector MA(1) model:

where

is a randomly drawn

matrix of rank

. The coefficients

and

are randomly generated via

and

. But, for a set of random indices

J with cardinality equal to

s (i.e.,

), we set

for

. In this way,

and

share the same nonzero coordinates. Finally,

In this example, the relevant predictors have a direct impact on the response, instead of through any common factors. Additionally, when

s is large, it is very difficult to recover the regression coefficients because of the lack of sparsity. Therefore, DGP 2 fits neither the factor model nor the sparse linear model frameworks. Nevertheless, the covariance matrix of

has a special structure. Observe that

That is, the covariance matrix of has the form of a spiked matrix.

Table 2 reports the RMSFEs of the competing methods considered. The sdPCA fares the best as it yields the smallest RMSFE, especially when

s is small. This reflects that the sdPCA can better utilize the spiked covariance structure by selecting contributions from relevant predictors. However, the performance of GsP* and sdPCA converge when

, and both of them compare favorably against the other alternatives. As expected, for non-sparse models, Lasso and random forest do not work well.

DGP 3. In this example, the predictors follow a VAR model:

where

are independent

p-dimensional standard Gaussian processes. Let

be a randomly generated

matrix of rank

. Then the AR coefficient matrix is constructed as

where

denotes the operator norm. The target variable is generated via

where

are independent standard Gaussian variates and

with

in which

.

In this DGP, the predictors, following a high-dimensional VAR(1) model, exhibit complex dynamics and correlations. Without simplifying structures such as latent factors, spiked covariance matrices, and sparsity, forecasting becomes difficult.

Table 3 reports the RMSFEs of various competing methods. With small

s, which corresponds to sparse models, Lasso can suitably choose the predictors and yield relatively accurate forecasts. However, as

s increases, its RMSFE quickly increases and becomes similar to those of the factor-based approaches. The factor-based approaches as well as the RF performed similarly under this DGP. This example also demonstrates that the sdPCA method may encounter loss in prediction accuracy if the number of selected common factors is under-specified; see the case of

and

.

Overall, our simulation studies show that no forecasting method always dominates the competing methods used in the study, but the proposed GsP* procedure can be effective in some cases.

6. Empirical Examples

In this section, we apply the proposed method to two real data sets. The first data set is the U.S. macroeconomic data and the other consists of Taiwan particulate matter (PM2.5) measurements. Forecasting plays an essential role in the applications pertaining to these two data sets, despite that both of them contain high-dimensional predictors. Moreover, these two datasets have different characteristics, which enable us to examine the forecasting performance of various forecasting methods available in the literature. In addition to the proposed GO-sdPCA approach, the competing methods used in the simulation studies, such as sdPCA, SW, LYB, Lasso, and RF, are also employed in this section as benchmarks.

For both datasets, we consider the rolling-window

h-step-ahead forecasting. Let

be the target variable of interest. For predicting

, the factor-based methods use the predictive equation

where

is the vector of estimated common factors by different methods. For Lasso and RF,

are used as potential predictors.

6.1. U.S. Macroeconomic Data

First, we consider the U.S. monthly macroeconomic data from January 1973 to June 2019. The data are from the FRED-MD database maintained by St. Louis Federal Reserve at

https://research.stlouisfed.org/econ/mccracken/fred-databases/. We transform the time series to stationarity according to McCracken and Ng [

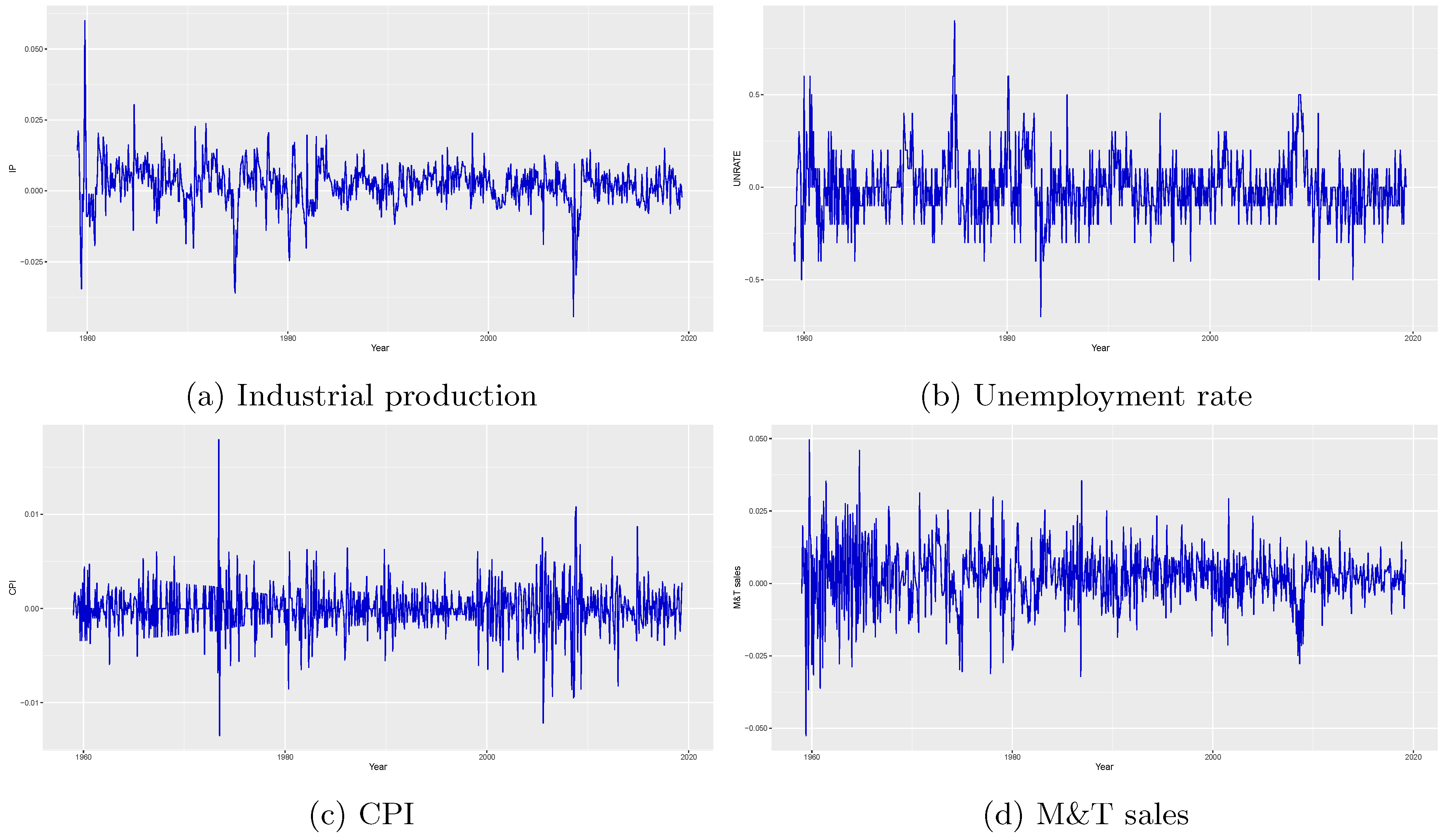

9], and, after discarding some variables containing missing values, there are 125 macroeconomic time series. Among them, we focus on predicting (1) industrial production index, (2) unemployment rate, (3) CPI-All, and (4) Real manufacturing and trade industries sales (M&T sales). Time plots of these four target series after transformations are depicted in

Figure 1.

For this data set, we consider

and different combinations of

q and

r (the number of factors extracted). Since

q lags of the predictors are used, there are

total predictors, which exceeds the sample size in each window. The last twenty years of data (240 time periods) are reserved for testing. In addition to the root mean squared forecasting errors, we also report the

p-values of the Diebold-Mariano test [

26,

27] against the alternative hypothesis that the proposed GsP* procedure is more accurate.

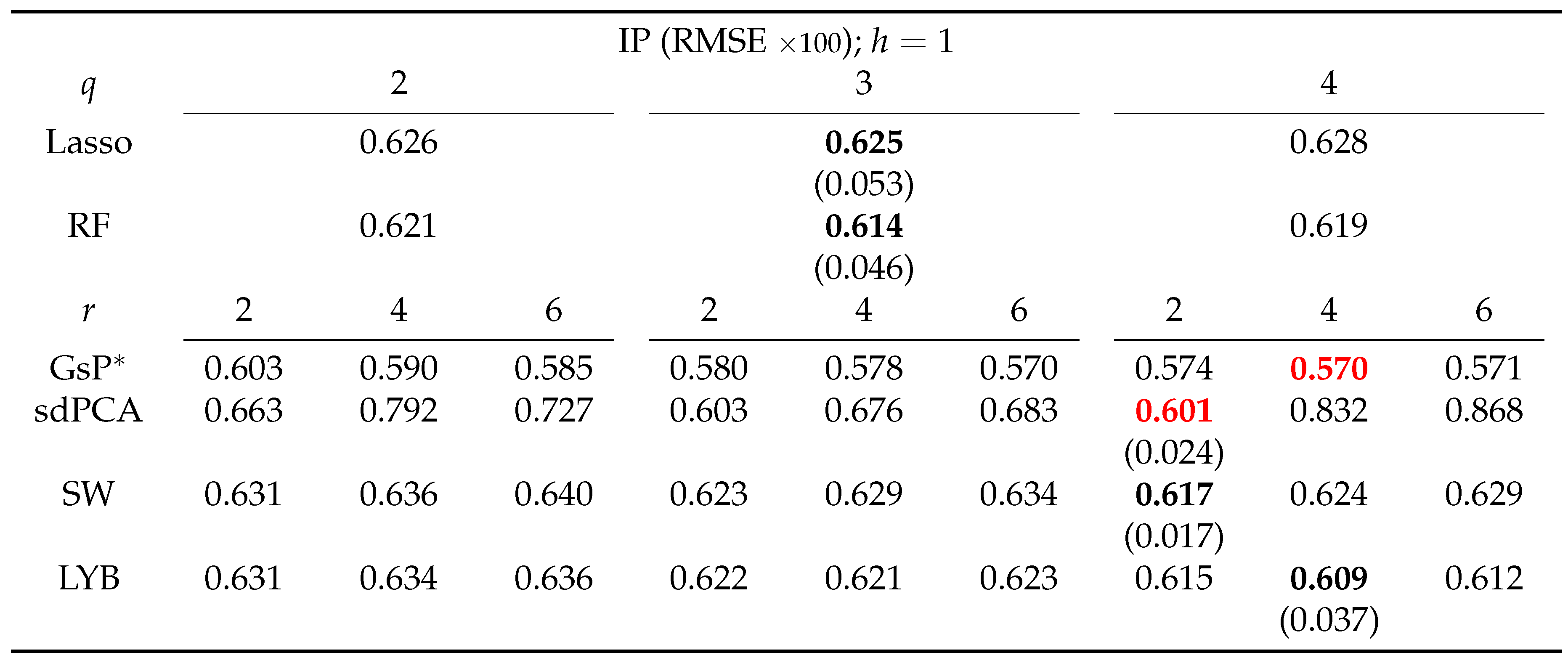

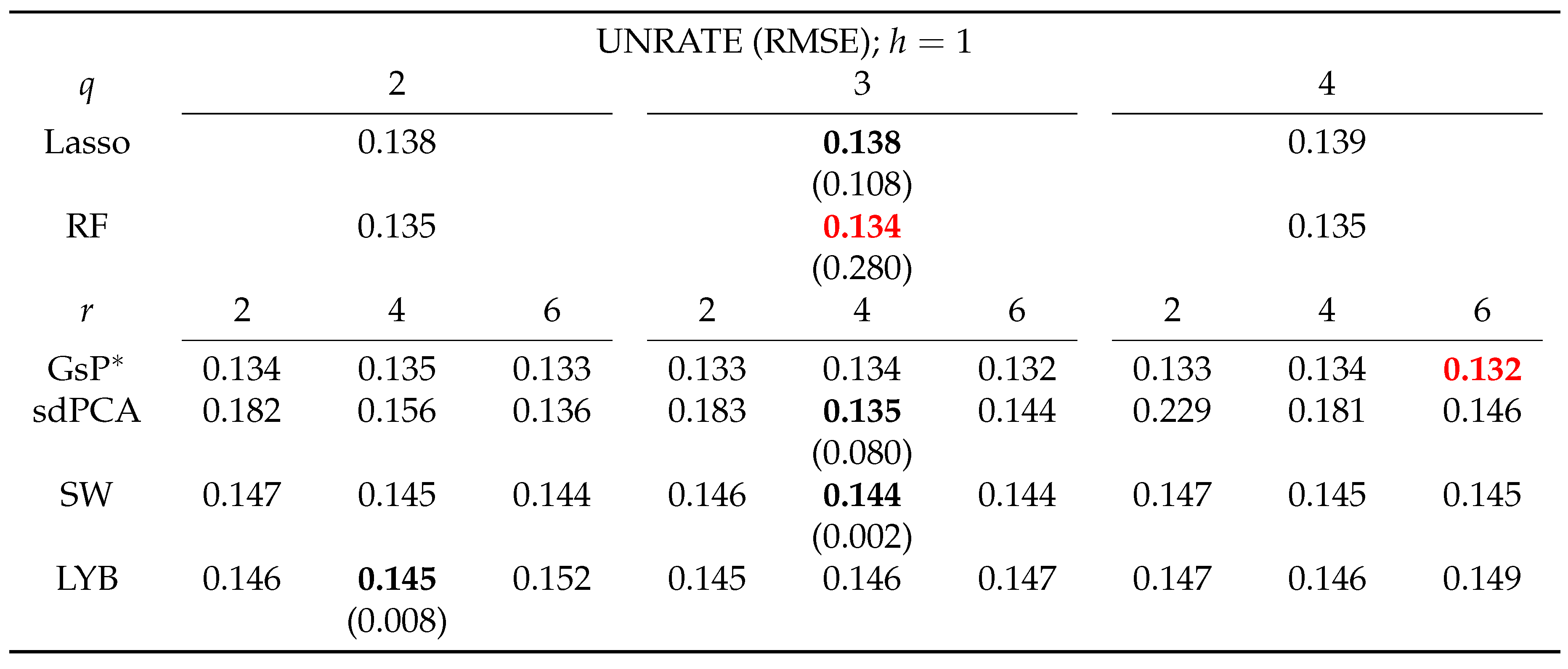

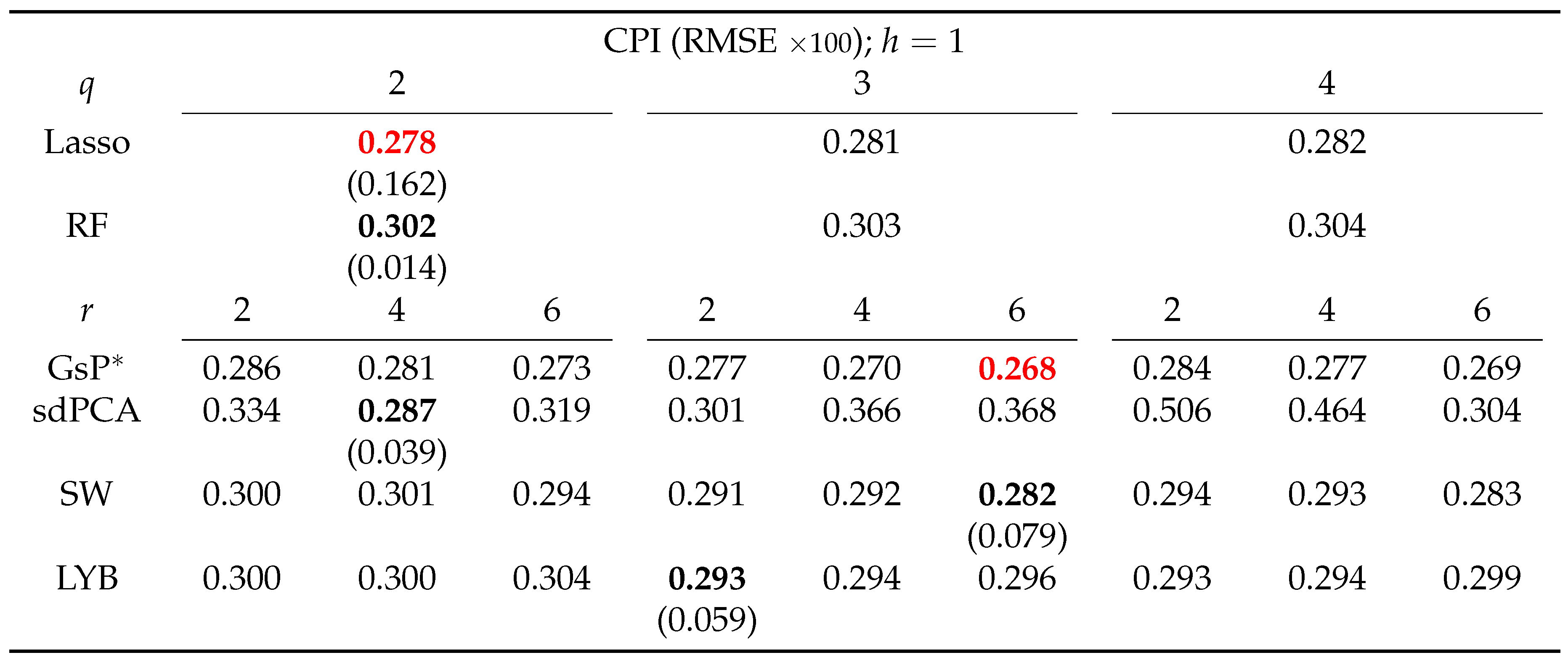

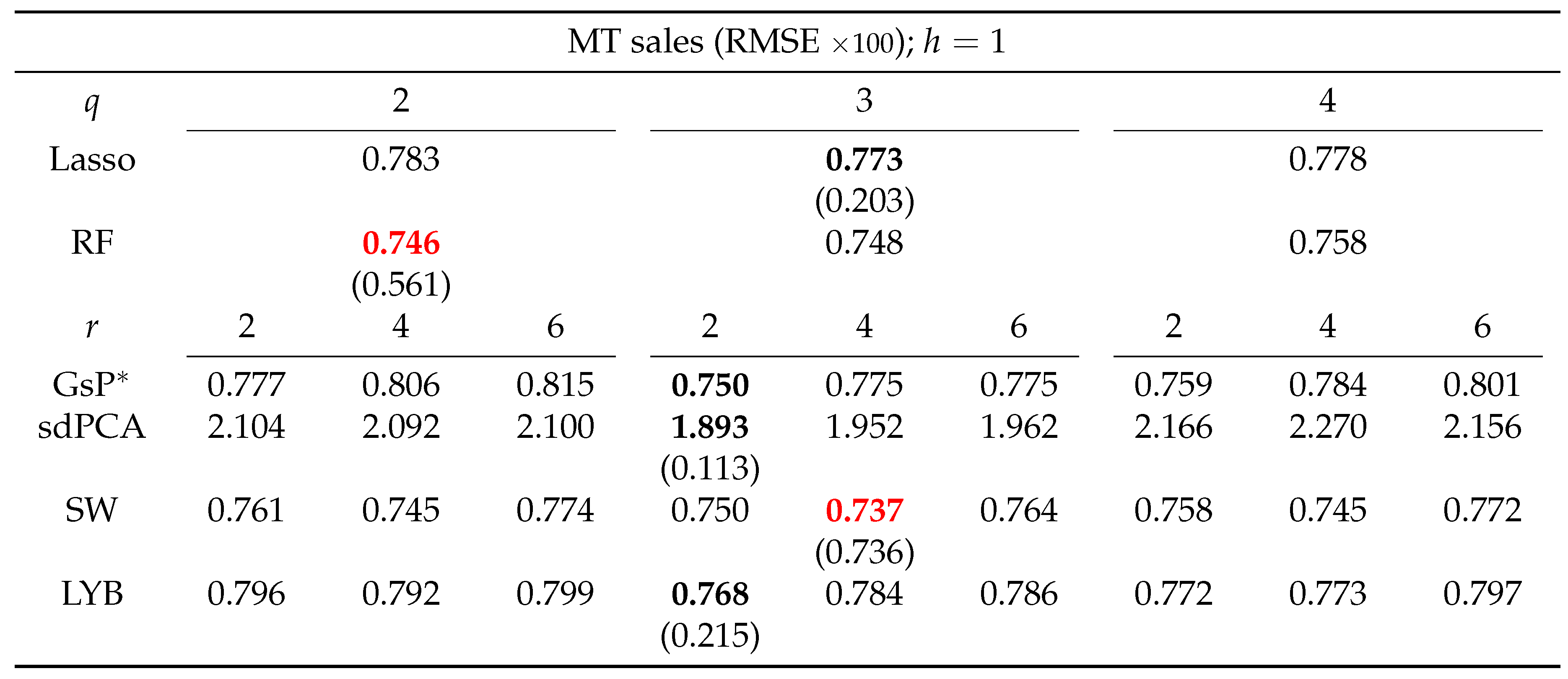

Table 4,

Table 5,

Table 6 and

Table 7 present the results. The proposed GO-sdPCA achieved the lowest RMSFE for three of the targeted series: industrial production index, unemployment rate, and CPI. It notably outperformed the factor-based alternatives sdPCA, SW, and LYB with high confidence in forecasting these three series. In addition, no other methods demonstrated such consistent effectiveness across the targeted series.

6.2. Particulate Matters in Taiwan

We next consider the data of hourly PM

2.5 measurements in Taiwan during March of 2017. The data are sourced from the

SLBDD package [

28] in

R. Each series in the data set represents measurements (in micrograms per cubic meters,

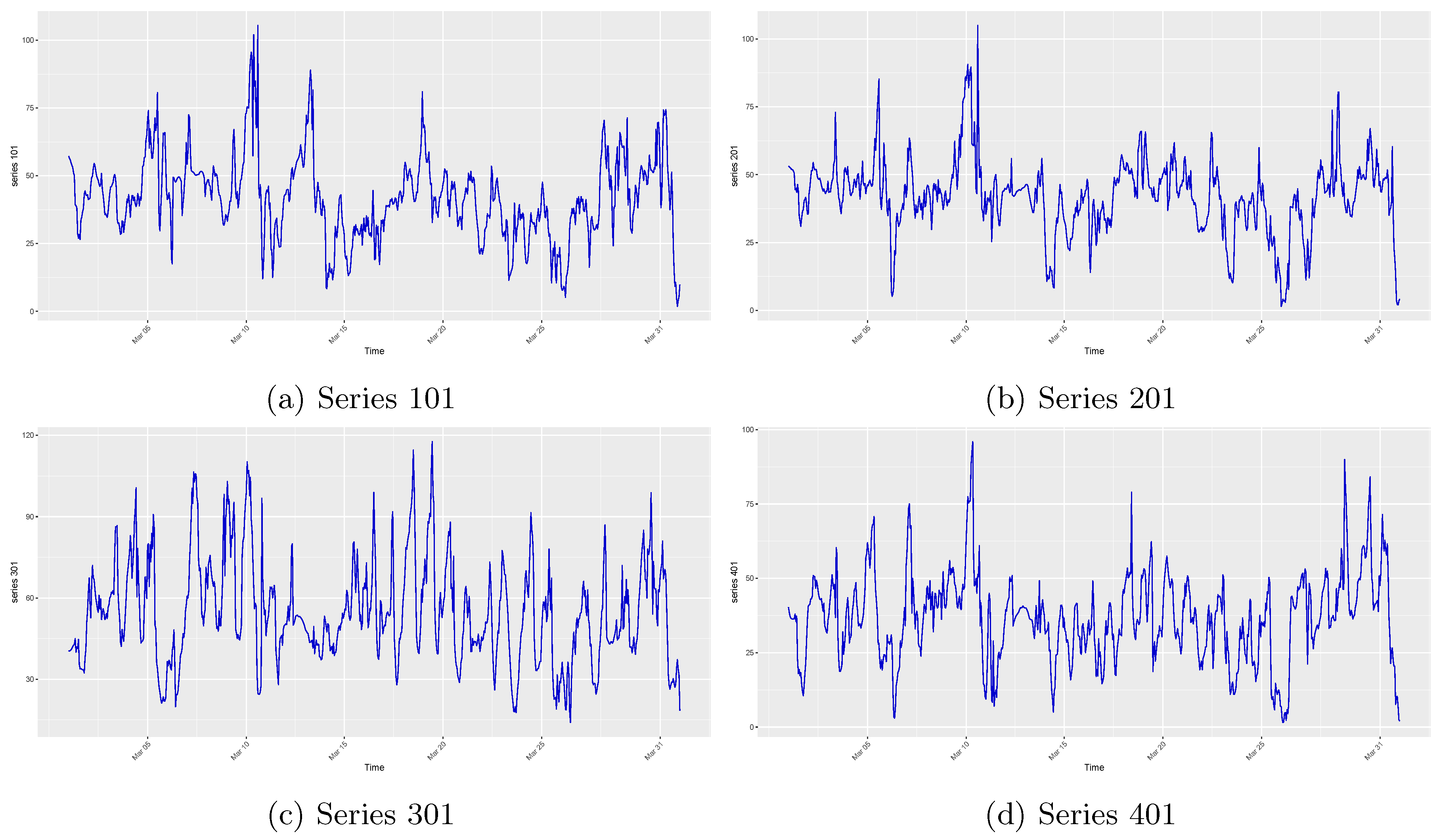

) taken by a novel device known as the AirBox. After an initial examination of these series, we remove series 29 and 70 because these series are near identically zero except at a few time points, offering no useful variations. Among the 516 series in the data set, we choose series 101, 201, 301, 401, as target series.

Figure 2 depicts their time plots.

We consider both one-step-ahead (

) and two-step-ahead (

) forecasts, and employ

lags and

factors in forecasting. The last ten days of data (240 time periods) are reserved for out-of-sample testing.

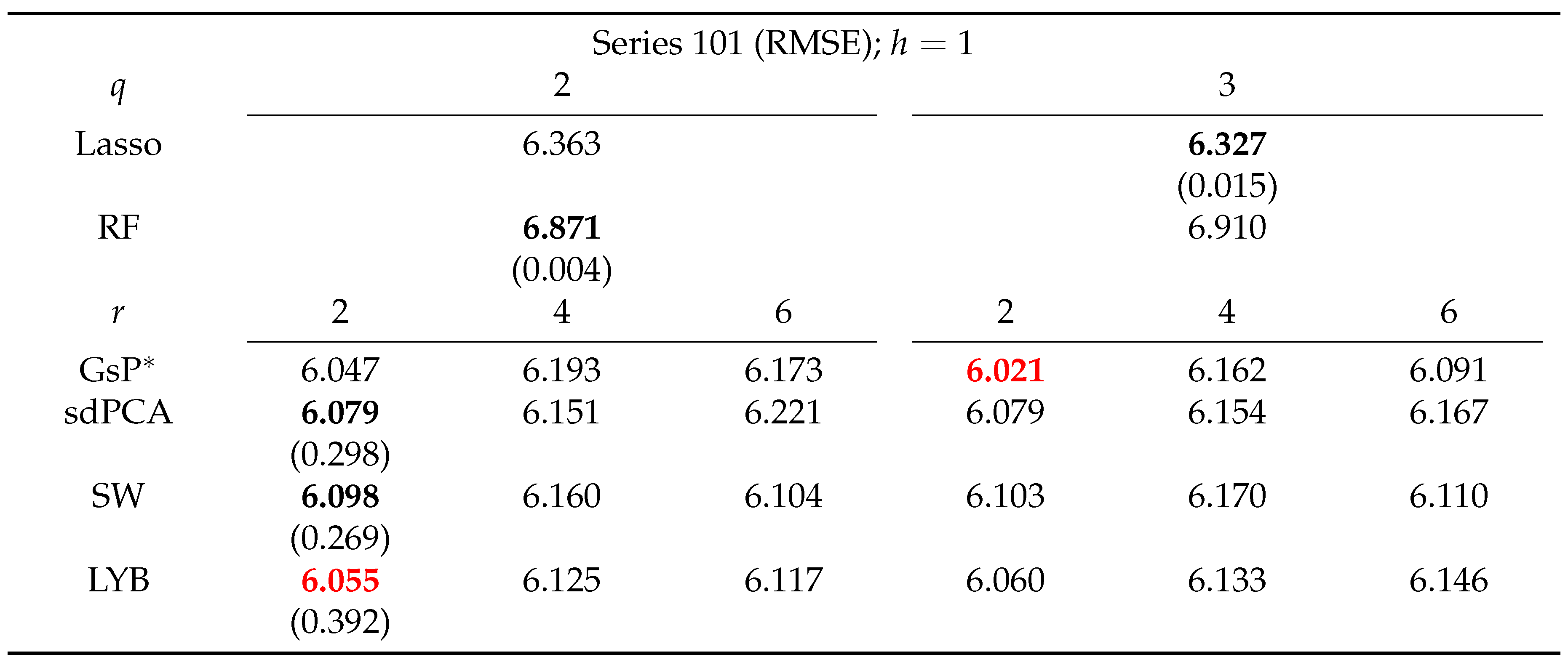

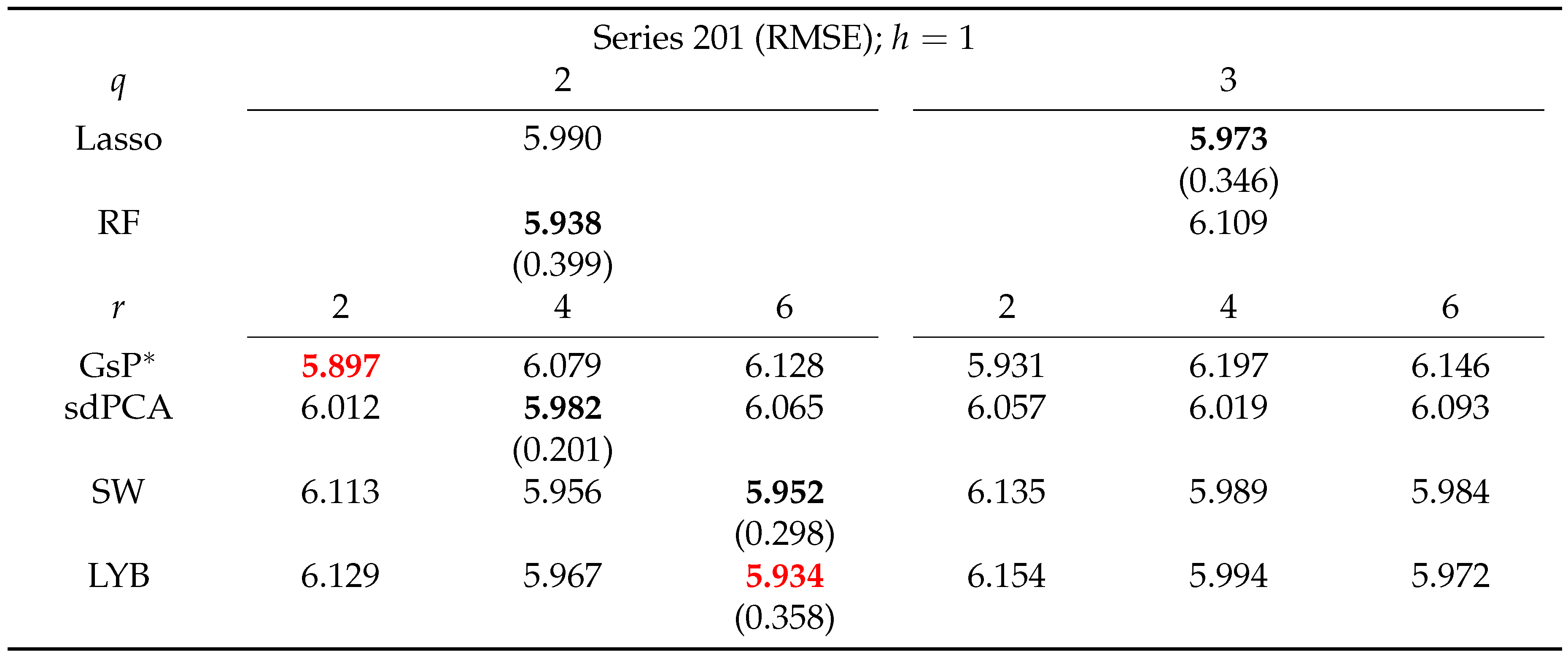

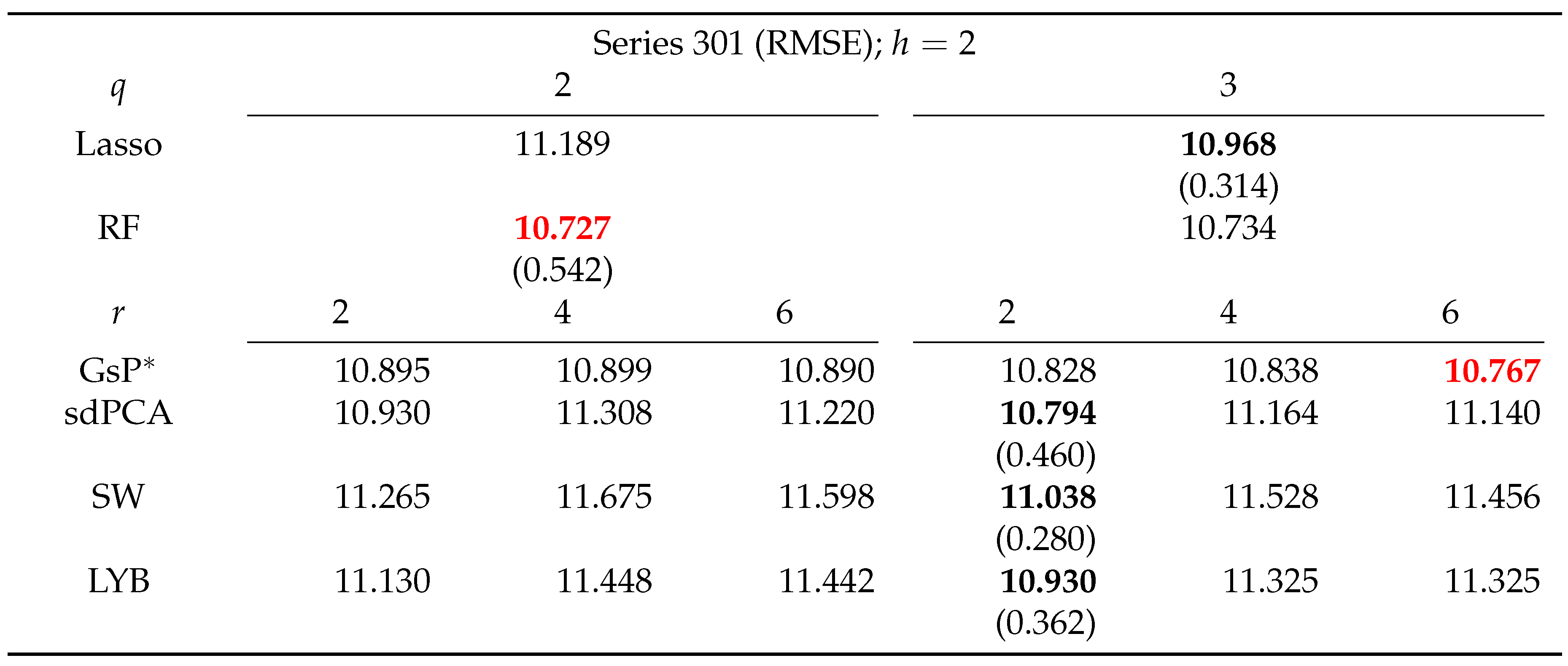

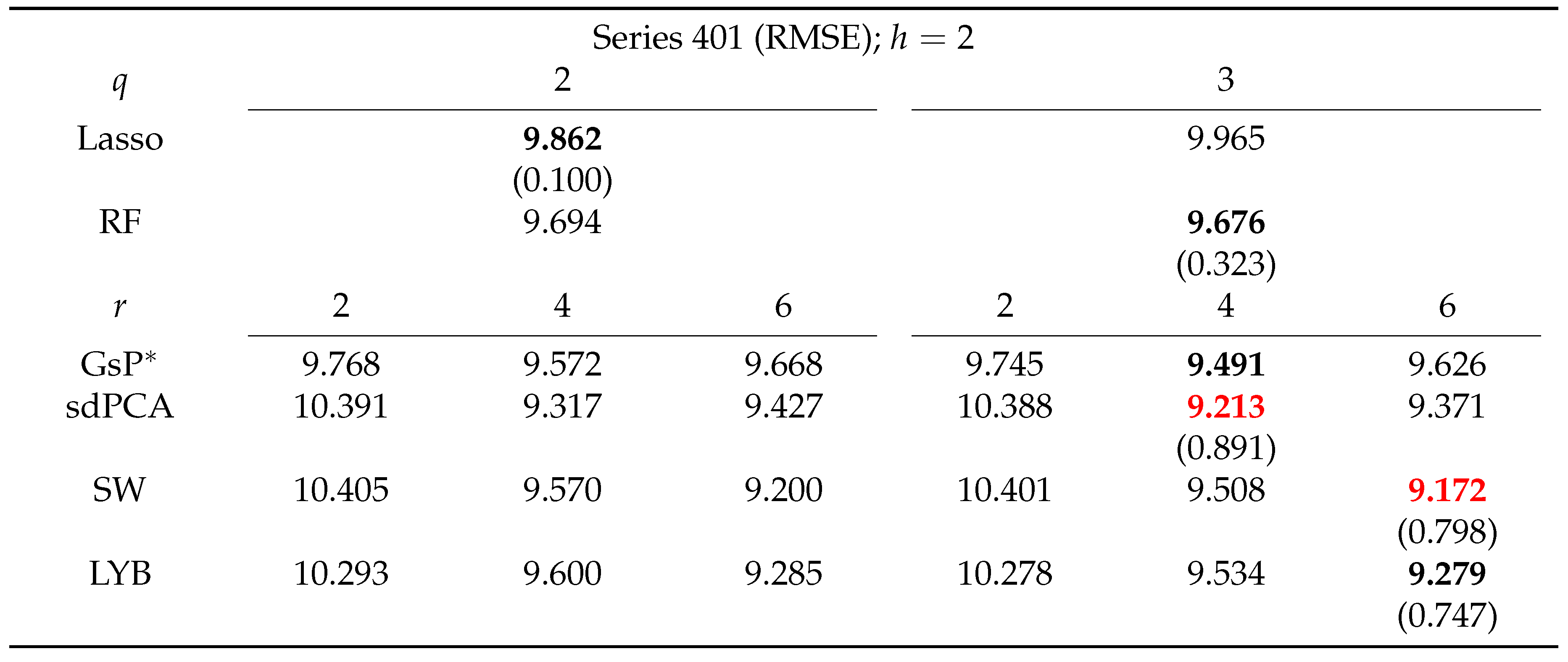

Table 8,

Table 9,

Table 10 and

Table 11 present the results for

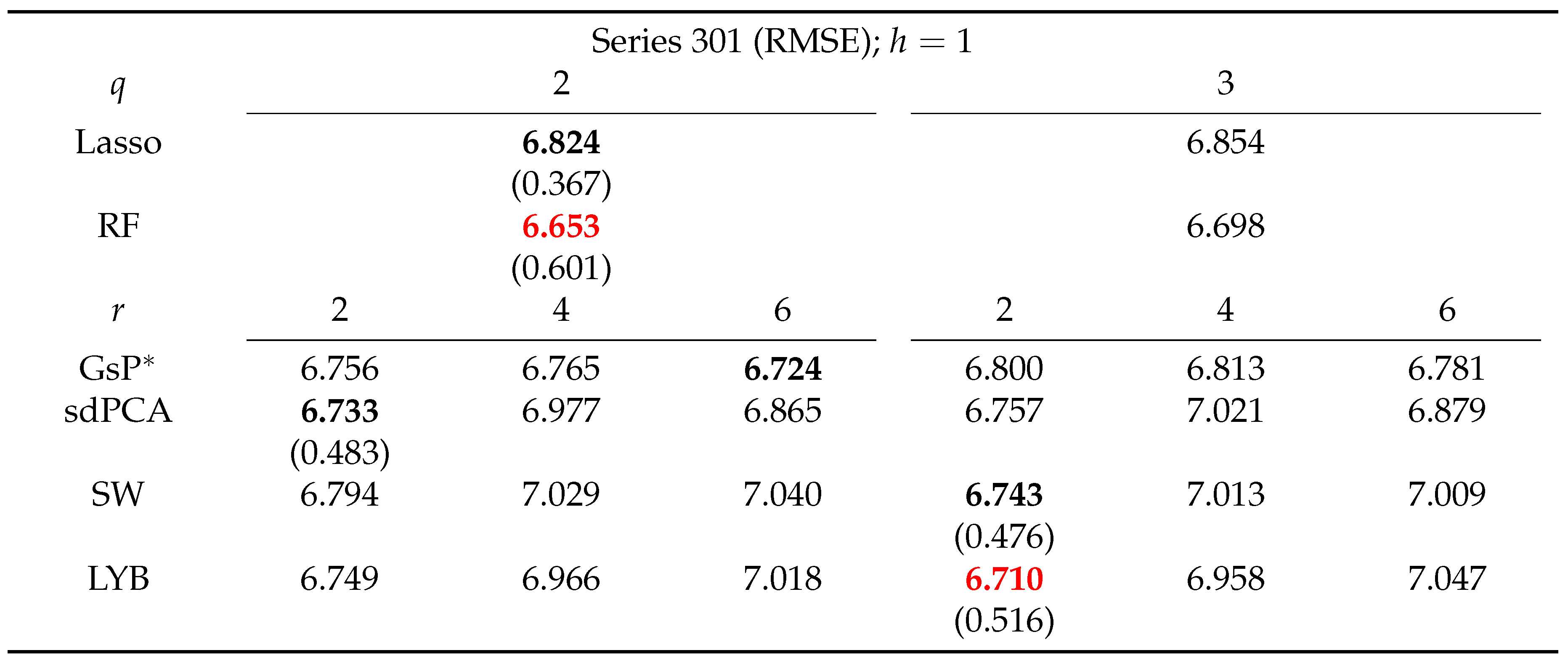

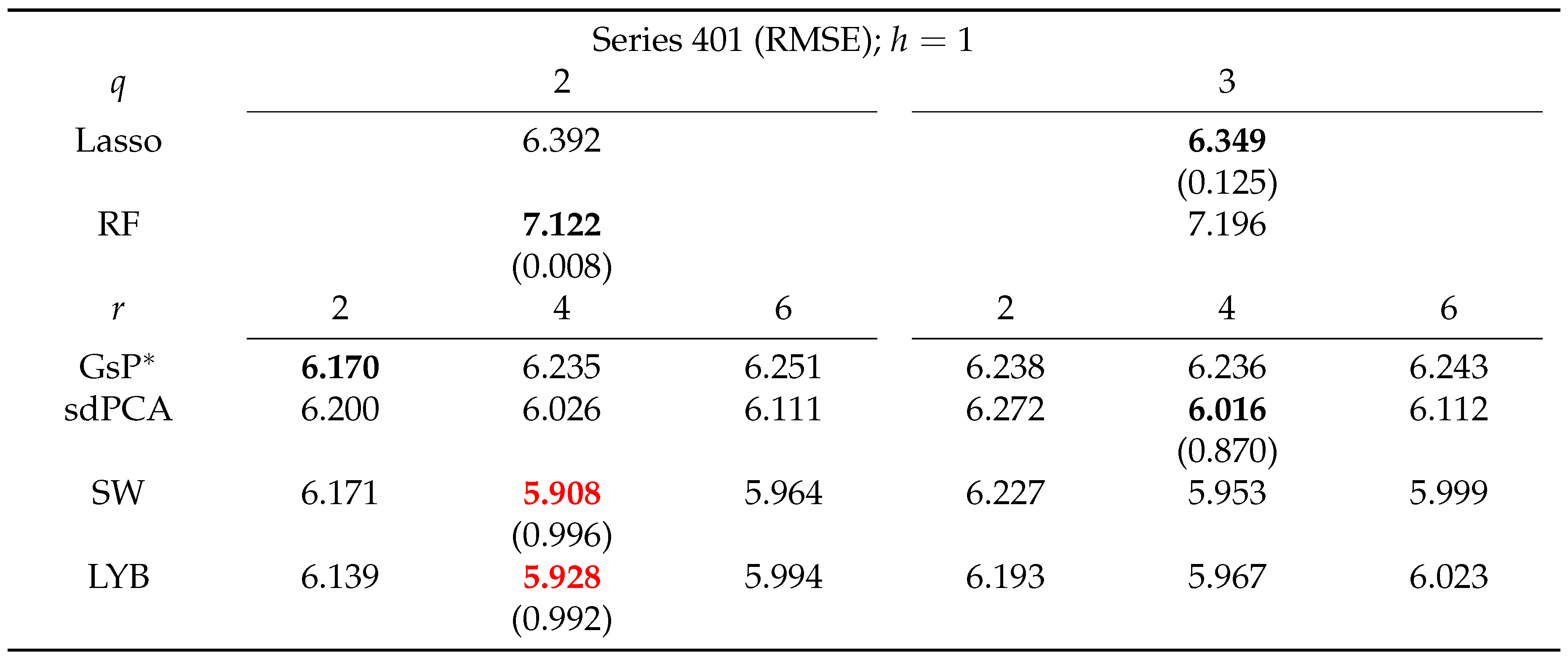

. The proposed GO-sdPCA ranks as the most predictive method in terms of RMSFE for two of the four targeted series: Series 101 and 201. It outperforms the Lasso in forecasting all four targeted series. The performance of LYB is also noteworthy. It ranks among the best two methods for all four targeted series. The DM test, on the other hand, indicates the difference in forecasting accuracy is not statistically significant. Contrary to the case in the macroeconomic data, the proposed GsP* only outperformed the factor-based methods, including the sdPCA, with some weak confidence. For the two-step-ahead forecasts, for which the results are reported in

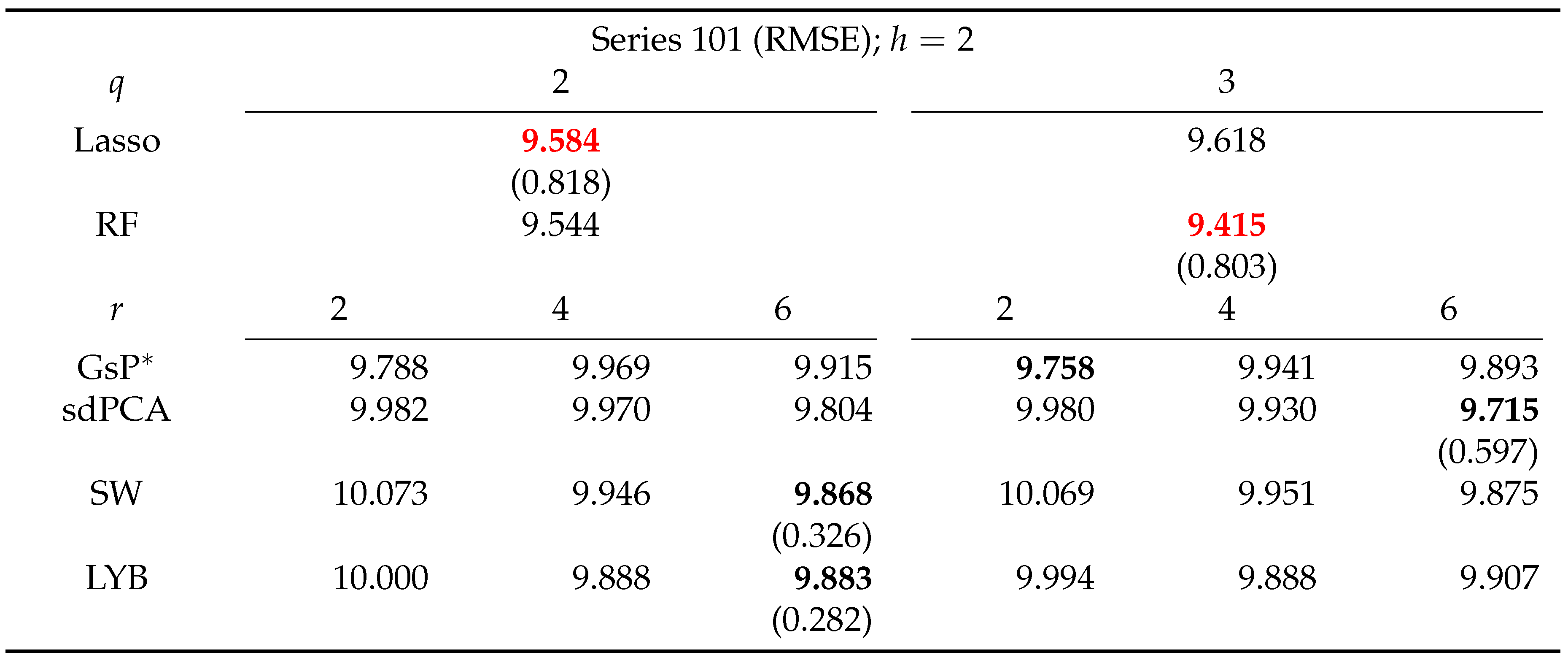

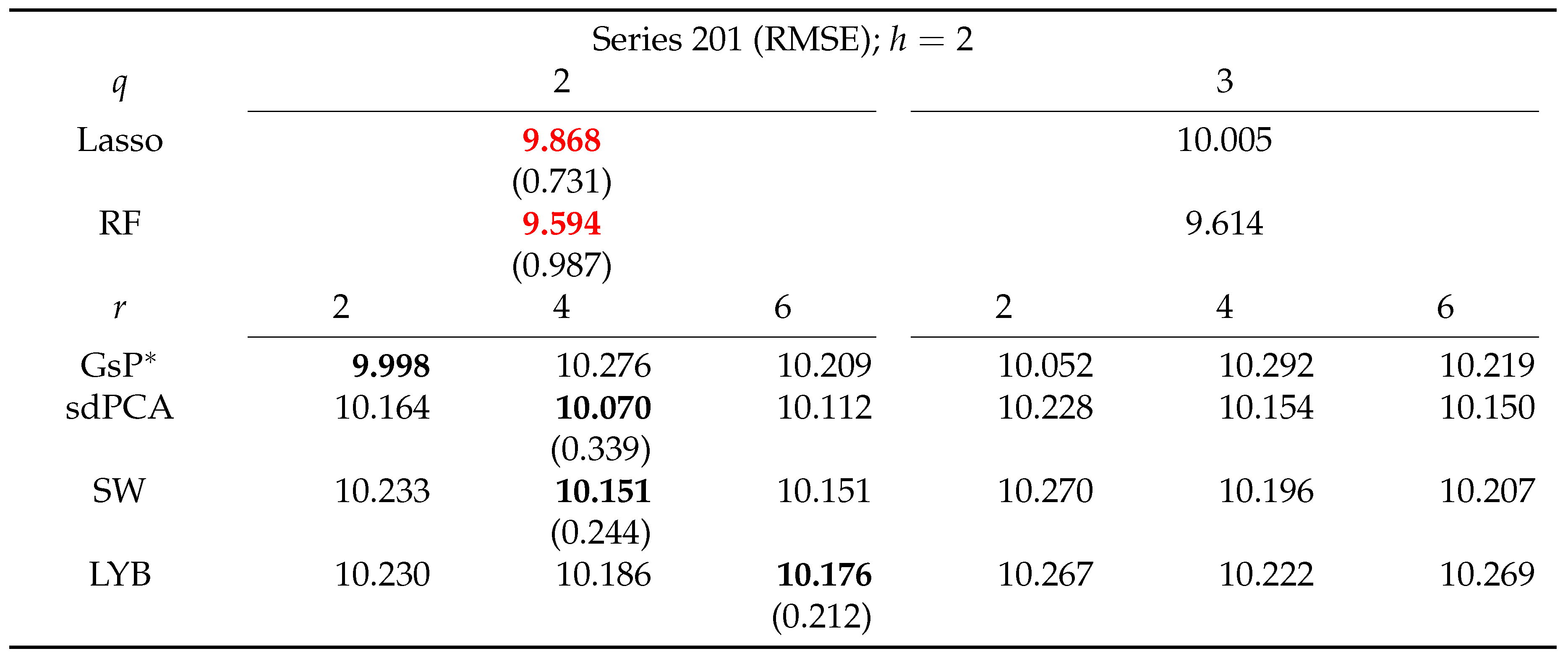

Table 12,

Table 13,

Table 14 and

Table 15, the performance of various methods is more entangled, with the RF consistently ranked among the top two methods for three of the four targeted series. With some weaker confidence, GsP* is the best performing factor-based method for two of the four targeted series. Again, the DM test fails to separate significantly various forecasting methods. We believe this result might be caused by the substantial uncertainty in the PM

2.5 measurements.

In sum, for , GsP* is effective in forecasting PM2.5 data as well as the U.S. macroeconomic data. This implies the proposed procedure is able to capture highly predictive factors across various applications. On the other hand, for , the dynamic nature of the data may be much more involved. The performance of the proposed method becomes similar to those of the other forecasting methods.

Figure 1.

Time plots of the (transformed) macroeconomic time series of interest.

Figure 1.

Time plots of the (transformed) macroeconomic time series of interest.

Figure 2.

Time plots of the four targeted hourly PM2.5 measurements on Taiwan in March 2017.

Figure 2.

Time plots of the four targeted hourly PM2.5 measurements on Taiwan in March 2017.

Table 1.

Root mean squared forecast error of various competing methods. The data are generated from DGP 1 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 1, and r is the number of factors extracted using various methods.

Table 1.

Root mean squared forecast error of various competing methods. The data are generated from DGP 1 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 1, and r is the number of factors extracted using various methods.

| |

GsP* |

GsP |

sdPCA |

SW |

LYB |

Lasso |

RF |

|

|

| (5, 50) |

1.894 |

2.443 |

1.993 |

2.657 |

2.136 |

2.207 |

2.860 |

| (10, 100) |

2.406 |

3.299 |

2.488 |

3.288 |

2.909 |

2.831 |

4.353 |

| (15, 150) |

3.315 |

3.908 |

3.584 |

5.368 |

5.201 |

3.537 |

5.776 |

|

| (10, 100) |

2.391 |

2.947 |

2.590 |

3.482 |

2.077 |

2.544 |

4.428 |

| (20, 200) |

3.020 |

4.355 |

3.242 |

4.626 |

3.657 |

3.679 |

6.604 |

| (30, 300) |

3.818 |

5.581 |

4.155 |

5.666 |

4.651 |

4.450 |

8.253 |

Table 2.

Root mean squared forecast error of the competing methods, divided by 1000. Data are generated from DGP 2 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 2, r is the number of factors extracted using various methods, and s is a sparsity parameter.

Table 2.

Root mean squared forecast error of the competing methods, divided by 1000. Data are generated from DGP 2 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 2, r is the number of factors extracted using various methods, and s is a sparsity parameter.

| |

GsP* |

GsP |

sdPCA |

SW |

LYB |

Lasso |

RF |

|

|

| (5, 50) |

0.857 |

0.883 |

0.695 |

1.017 |

0.966 |

0.870 |

2.017 |

| (10, 100) |

2.833 |

3.137 |

2.834 |

3.436 |

3.436 |

3.802 |

6.971 |

| (15, 150) |

6.559 |

7.068 |

6.379 |

7.801 |

7.761 |

9.532 |

16.264 |

|

| (10, 100) |

4.091 |

4.547 |

2.259 |

5.224 |

4.917 |

5.118 |

8.977 |

| (20, 200) |

15.891 |

17.357 |

8.411 |

20.012 |

19.411 |

20.961 |

35.132 |

| (30, 300) |

34.557 |

37.956 |

34.543 |

42.904 |

42.905 |

50.996 |

70.180 |

Table 3.

Root mean squared forecast error of various competing methods. Data are generated from DGP 3 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 3, and r is the number of factors extracted using various methods.

Table 3.

Root mean squared forecast error of various competing methods. Data are generated from DGP 3 and the results are averaged over 500 Monte Carlo simulations. n and p stand for the sample size and number of observed predictors. is defined in DGP 3, and r is the number of factors extracted using various methods.

| |

GsP* |

GsP |

sdPCA |

SW |

LYB |

Lasso |

RF |

|

|

| (5, 50) |

12.255 |

12.166 |

12.347 |

12.565 |

12.469 |

7.537 |

12.813 |

| (10, 100) |

17.818 |

18.857 |

17.608 |

17.371 |

17.701 |

16.670 |

18.427 |

| (15, 150) |

23.142 |

24.417 |

23.041 |

21.777 |

22.027 |

21.125 |

22.660 |

|

| (10, 100) |

16.523 |

17.540 |

17.767 |

18.647 |

18.968 |

14.833 |

18.602 |

| (20, 200) |

27.266 |

29.110 |

26.063 |

25.291 |

25.717 |

25.953 |

26.503 |

| (30, 300) |

33.763 |

35.635 |

32.438 |

31.672 |

31.827 |

31.340 |

32.427 |

Table 4.

Root mean squared forecast errors for predicting industrial production index. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 4.

Root mean squared forecast errors for predicting industrial production index. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 5.

Root mean squared forecast errors for predicting unemployment rate. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 5.

Root mean squared forecast errors for predicting unemployment rate. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 6.

Root mean squared forecast errors for predicting CPI. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 6.

Root mean squared forecast errors for predicting CPI. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 7.

Root mean squared forecast errors for predicting MT sales. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 7.

Root mean squared forecast errors for predicting MT sales. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 8.

Root mean squared forecast errors for predicting series 101 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 8.

Root mean squared forecast errors for predicting series 101 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 9.

Root mean squared forecast errors for predicting series 201 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 9.

Root mean squared forecast errors for predicting series 201 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 10.

Root mean squared forecast errors for predicting series 301 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 10.

Root mean squared forecast errors for predicting series 301 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 11.

Root mean squared forecast errors for predicting series 401 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 11.

Root mean squared forecast errors for predicting series 401 in Taiwan PM2.5 data. The forecast horizon h is 1. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 12.

Root mean squared forecast errors for predicting series 101 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 12.

Root mean squared forecast errors for predicting series 101 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 13.

Root mean squared forecast errors for predicting series 201 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 13.

Root mean squared forecast errors for predicting series 201 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 14.

Root mean squared forecast errors for predicting series 301 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 14.

Root mean squared forecast errors for predicting series 301 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 15.

Root mean squared forecast errors for predicting series 401 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.

Table 15.

Root mean squared forecast errors for predicting series 401 in Taiwan PM2.5 data. The forecast horizon h is 2. The lowest RMSFE achieved by each method is in boldface. Among these boldfaced values, the lowest two values are marked in red. q denotes the number of lags used in estimation and r is the number of factors extracted. GsP* denotes the proposed GO-sdPCA. The figures in the parentheses are the p-values of the Diebold-Mariano test of whether GsP* is more accurate.