Submitted: 22 June 2024

Posted: 24 June 2024


Abstract

Keywords:

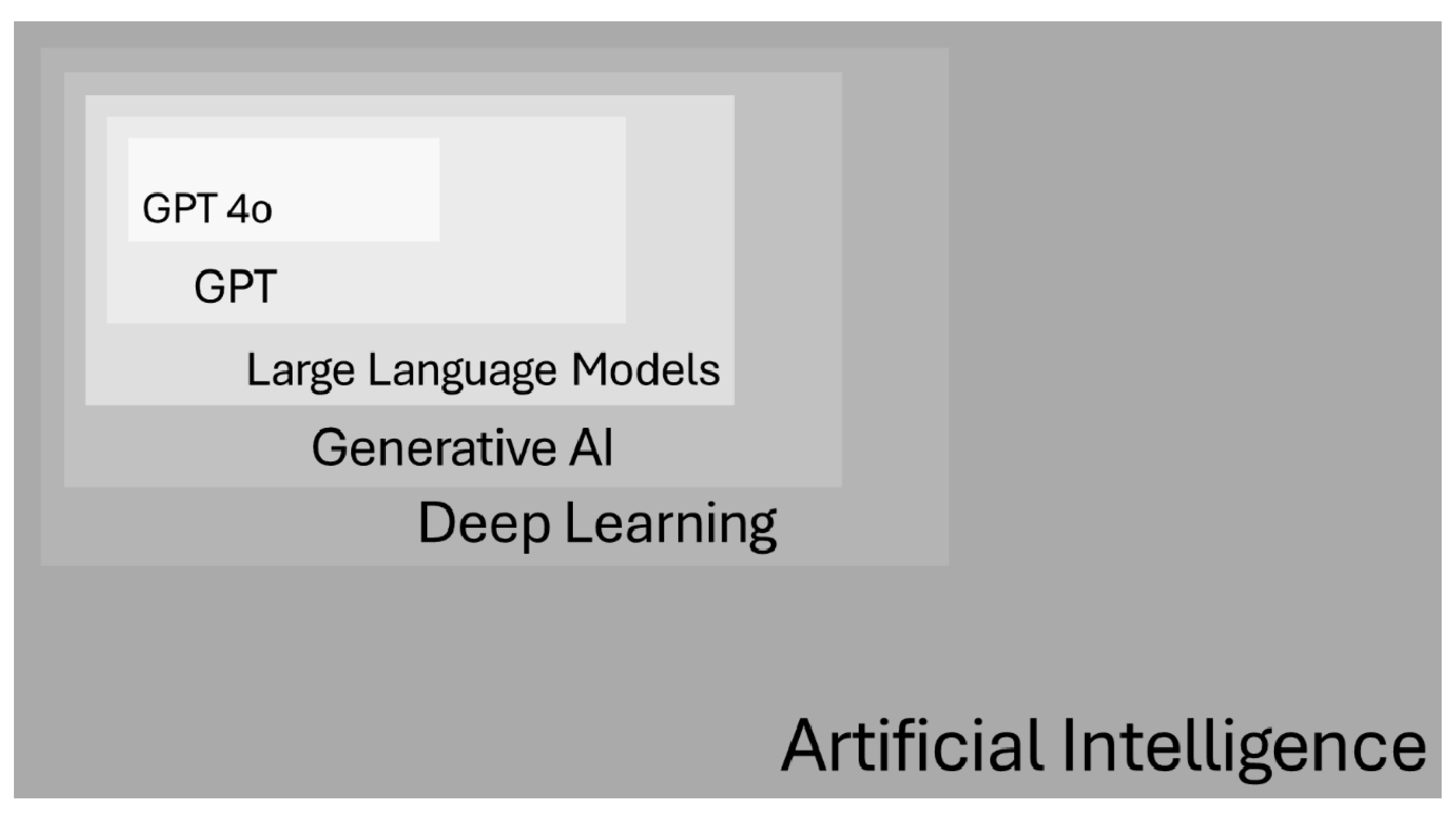

1. Introduction

1.1. Research Purpose

1.2. Related Work

2. Language Capacity of GPT-4o

2.1. Performance on Exams

2.1.1. Performance on USMLE

2.1.2. Performance on CFA

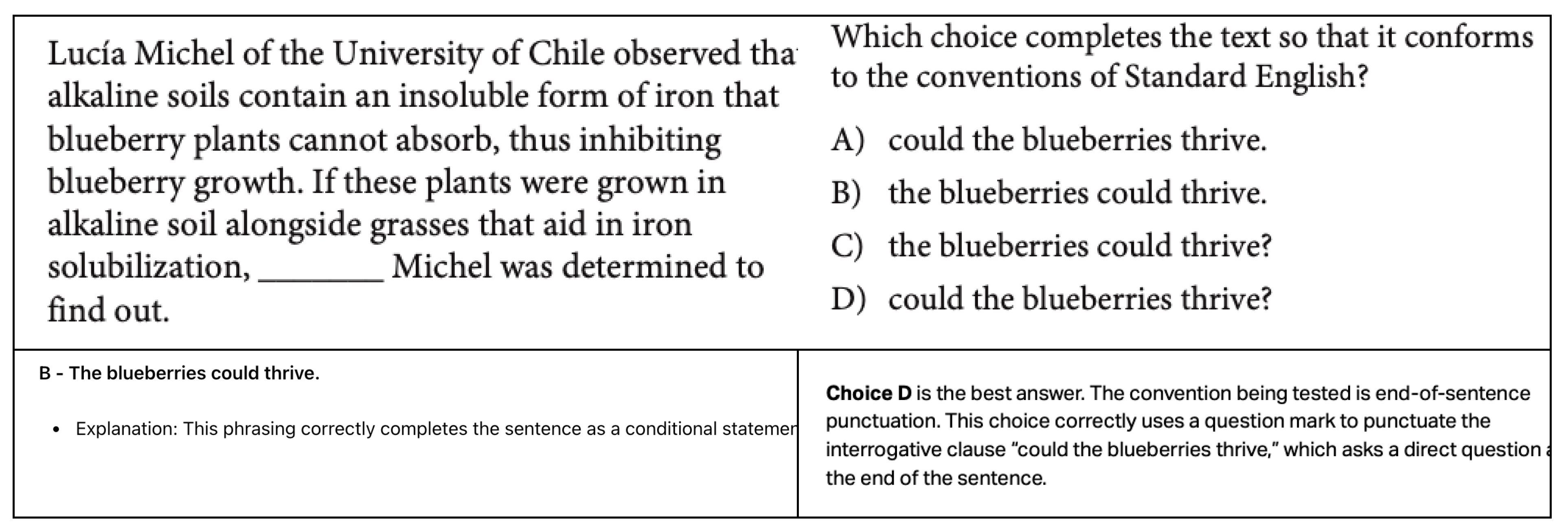

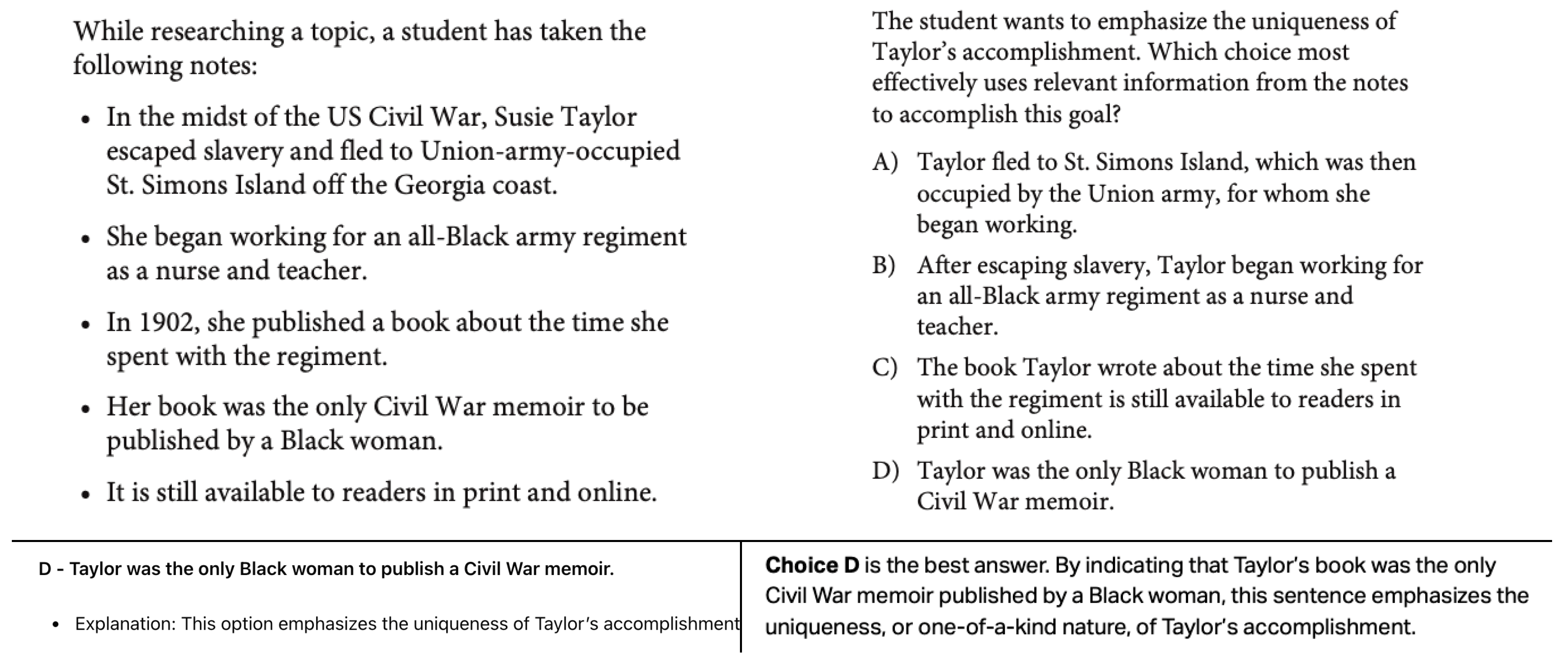

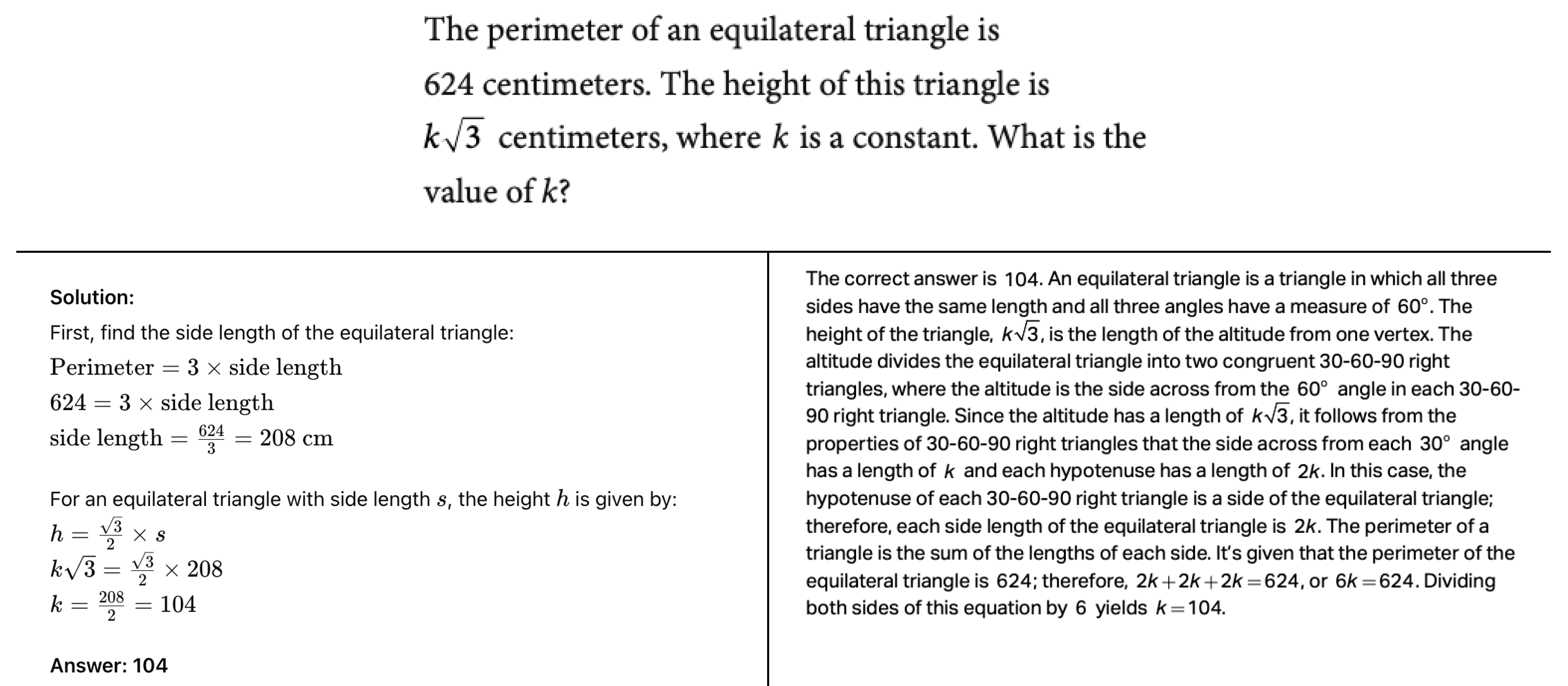

2.1.3. Performance on SAT

2.1.4. Performance on MBE

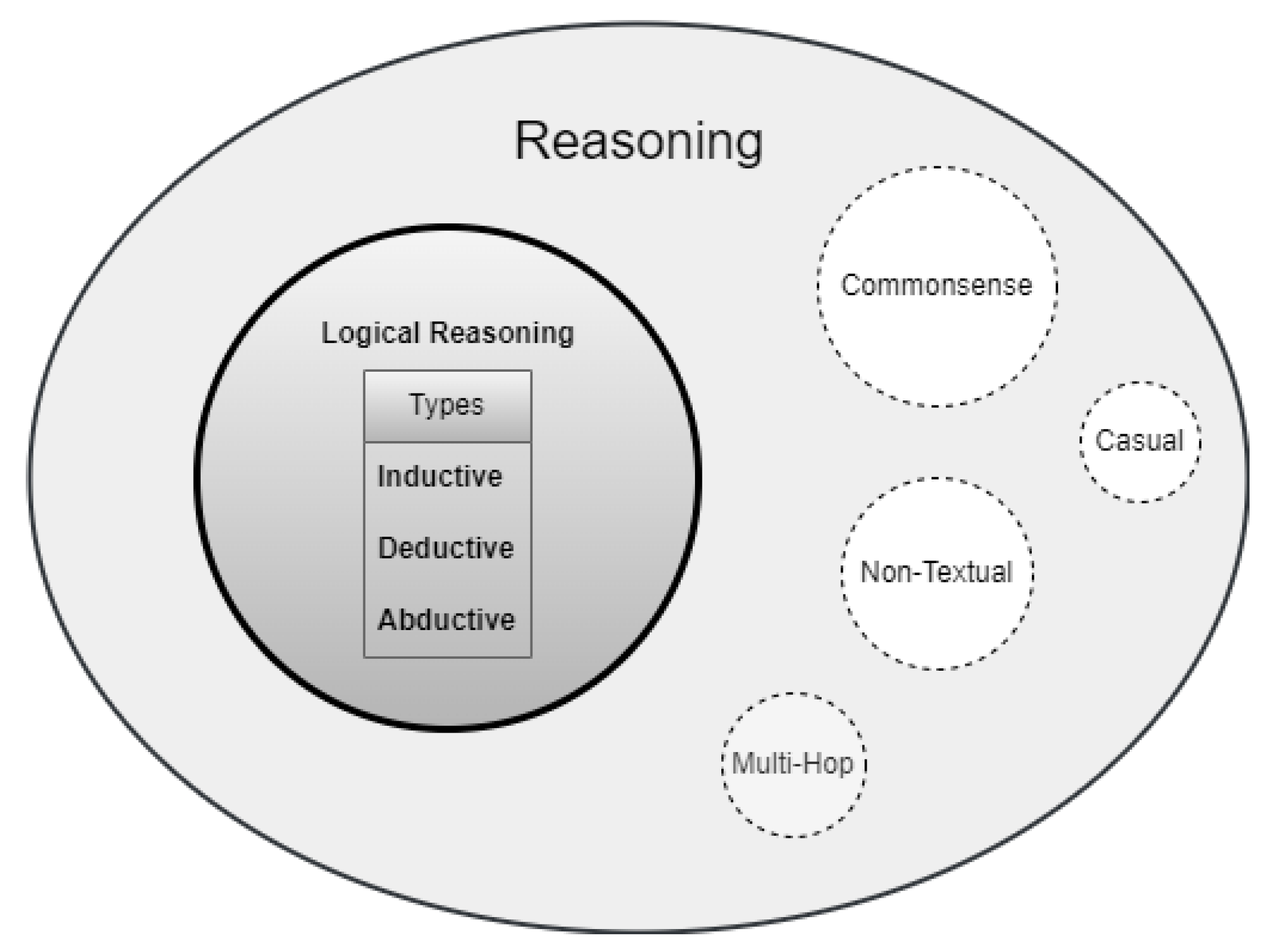

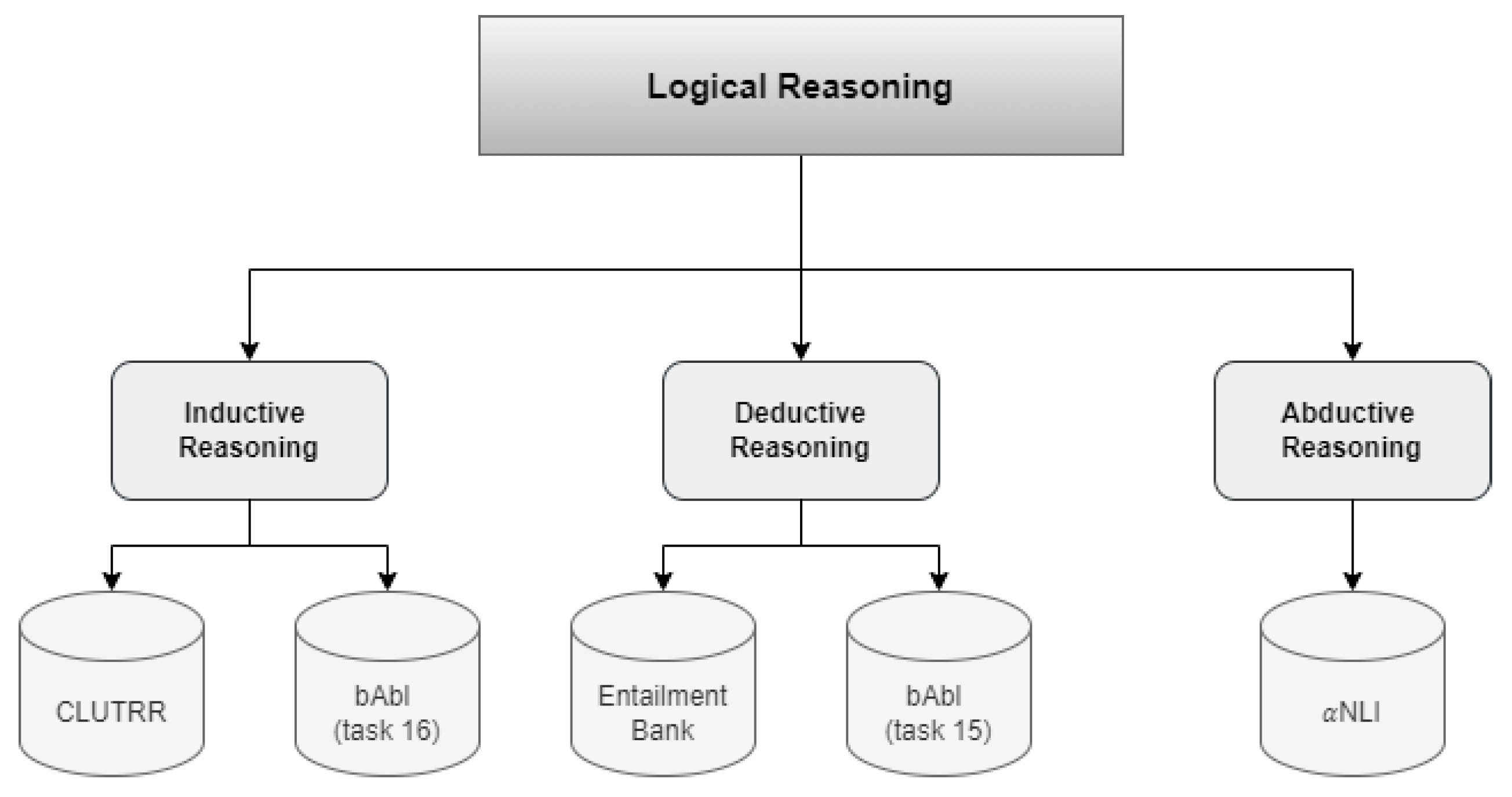

2.2. Reasoning

2.3. Language Translation

2.3.1. Data

2.3.2. Evaluation Method

- 1 indicates that the vectors are identical.

- 0 indicates that the vectors are orthogonal (i.e., no similarity).

- -1 indicates that the vectors point in exactly opposite directions.
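These three cases span the range of the cosine similarity, cos θ = (u · v) / (‖u‖ ‖v‖). The sketch below computes it for two plain Python lists. It assumes the model translation and the reference translation have already been turned into numeric vectors (for example, sentence embeddings); that embedding step, and any rule used to turn similarities into the translation accuracies reported for each language, are not specified here and are left out.

```python
import math


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # The angle (and hence the similarity) is undefined for a zero vector.
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


# Toy 2-D vectors standing in for embeddings of a translation and its reference.
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))   # orthogonal -> 0.0
print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))  # opposite   -> -1.0
```

In practice the vectors would have hundreds of dimensions; the formula is unchanged.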

2.3.3. Results

3. Vision Capacity of GPT-4o

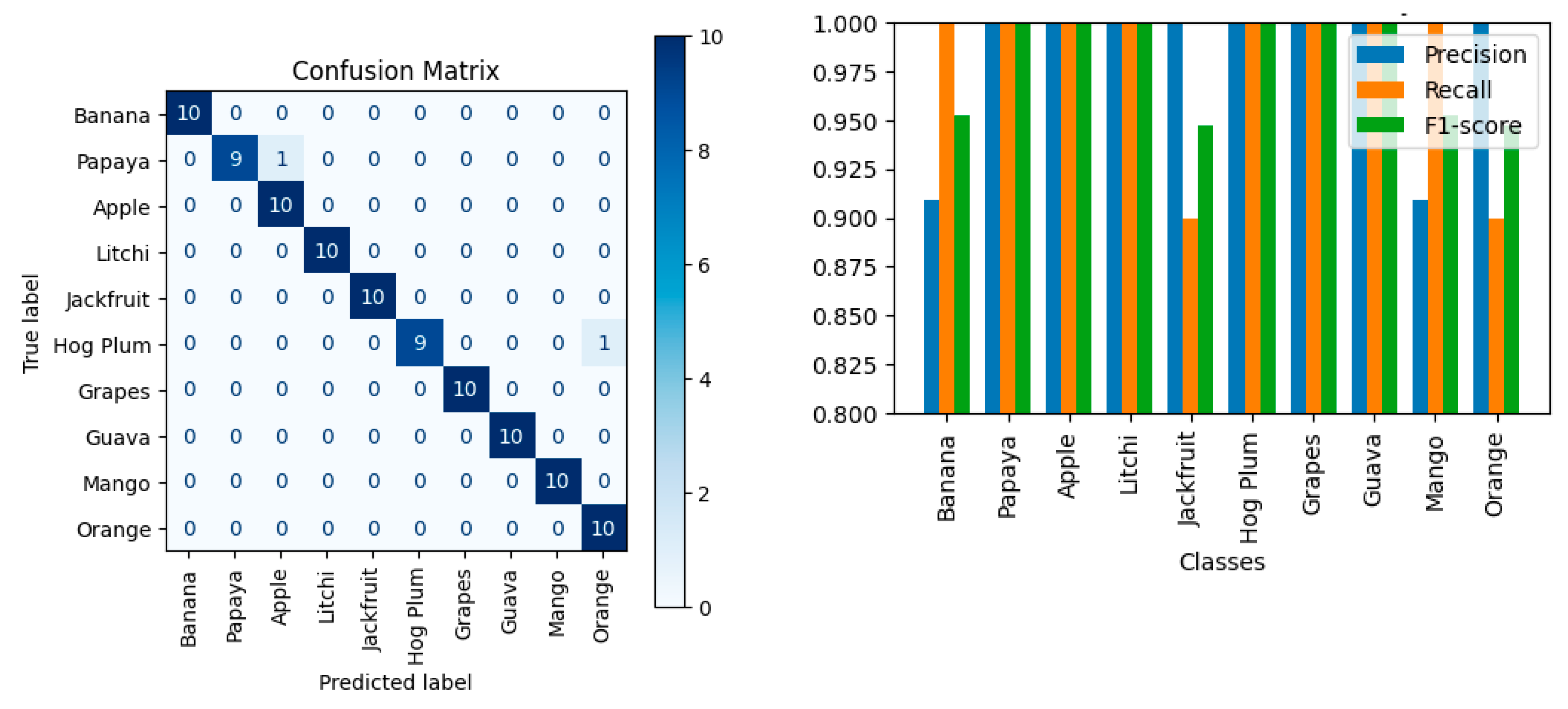

3.1. Fruits Classification

3.2. Driver Drowsiness Detection

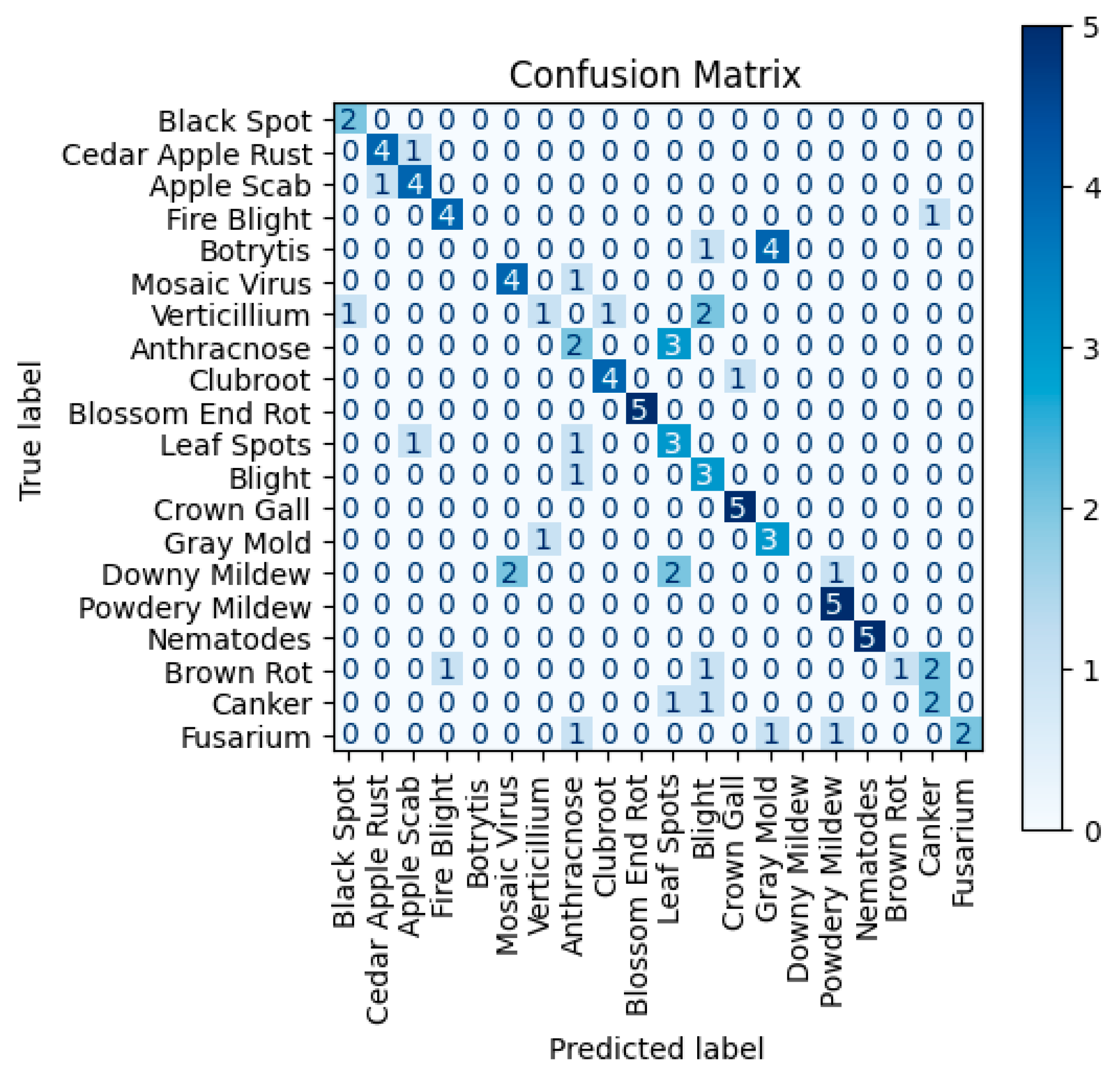

3.3. Crop Disease Classification

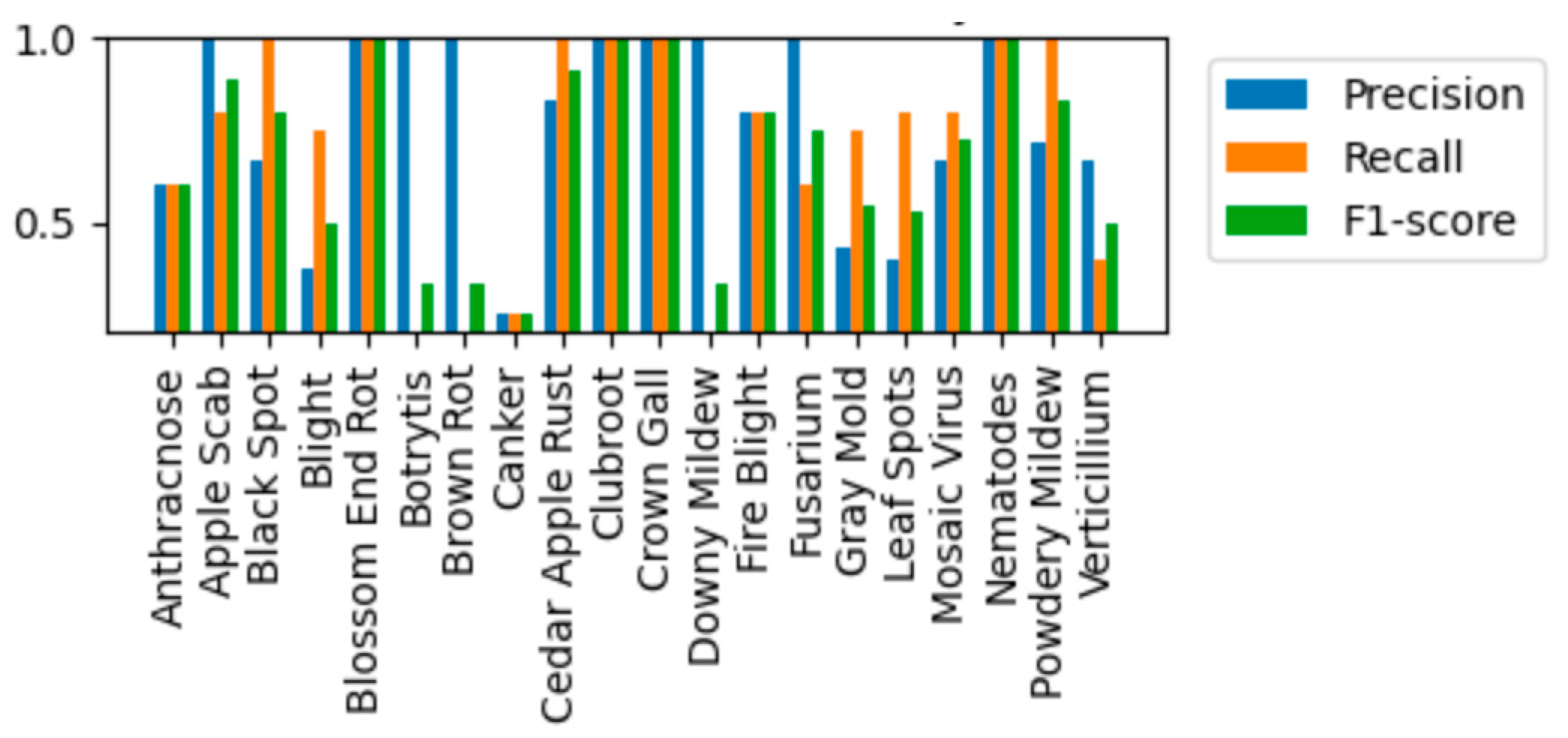

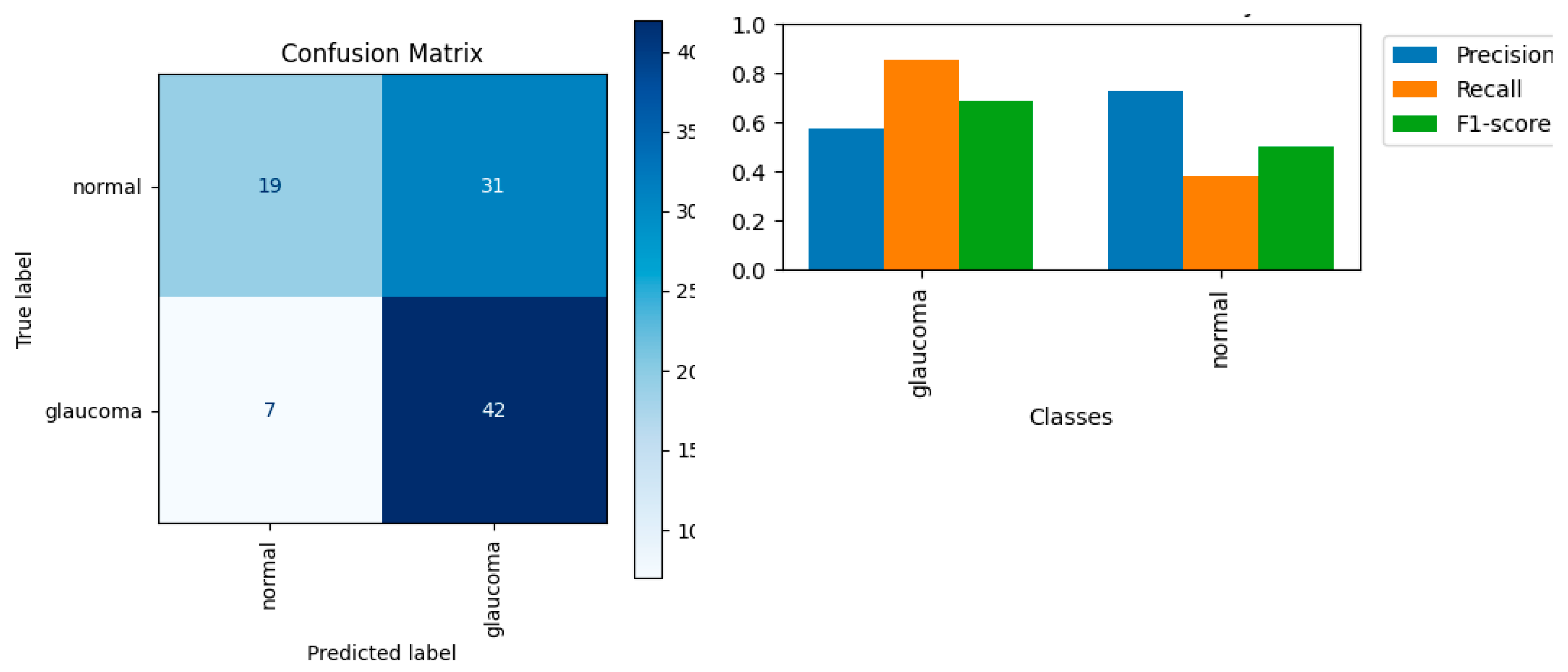

3.4. Glaucoma Detection

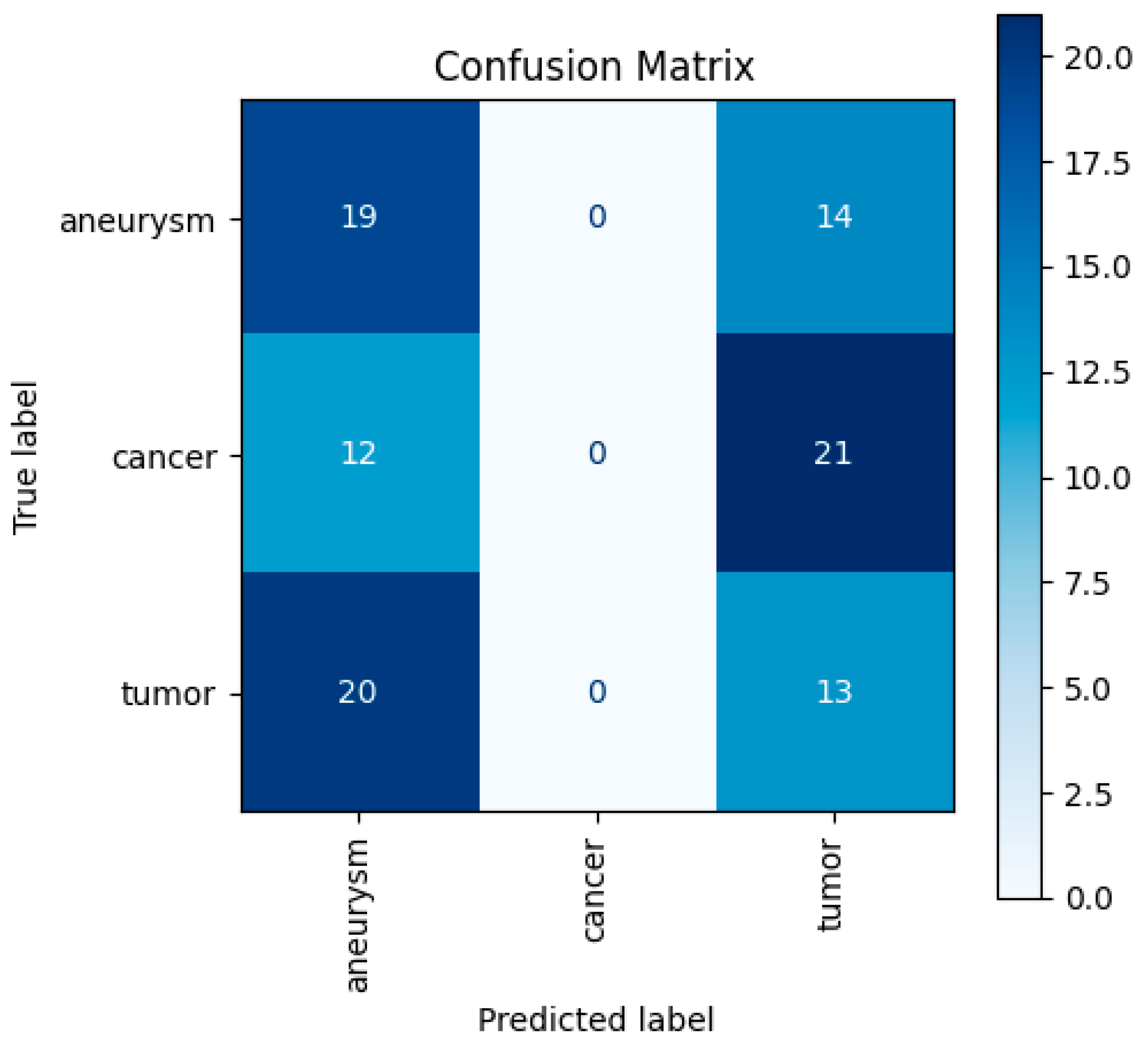

3.5. Cancer, Tumor, and Aneurysm Detection

3.6. Image Captioning

4. Speech Capacity of GPT-4o

4.1. Emotion Detection

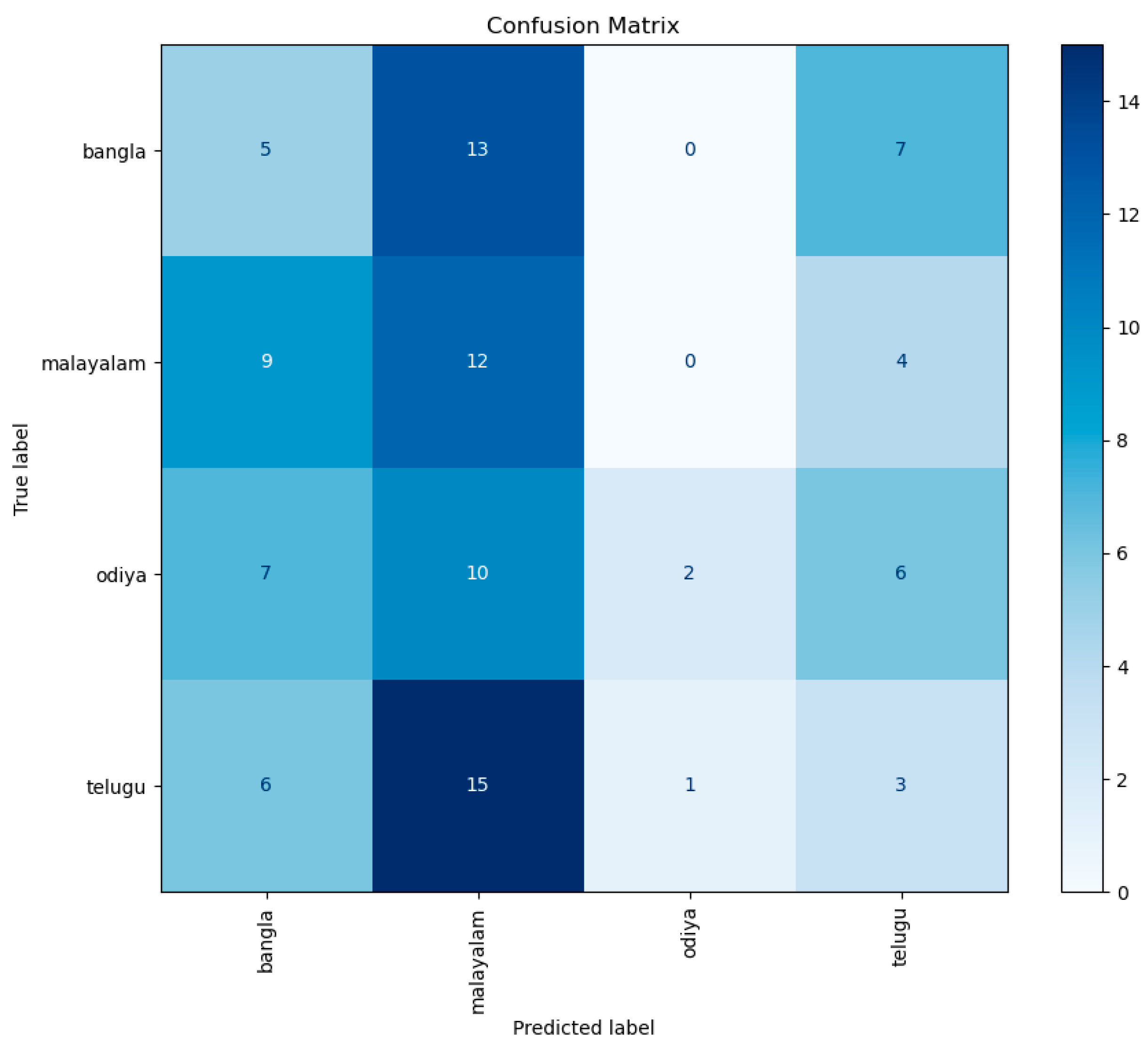

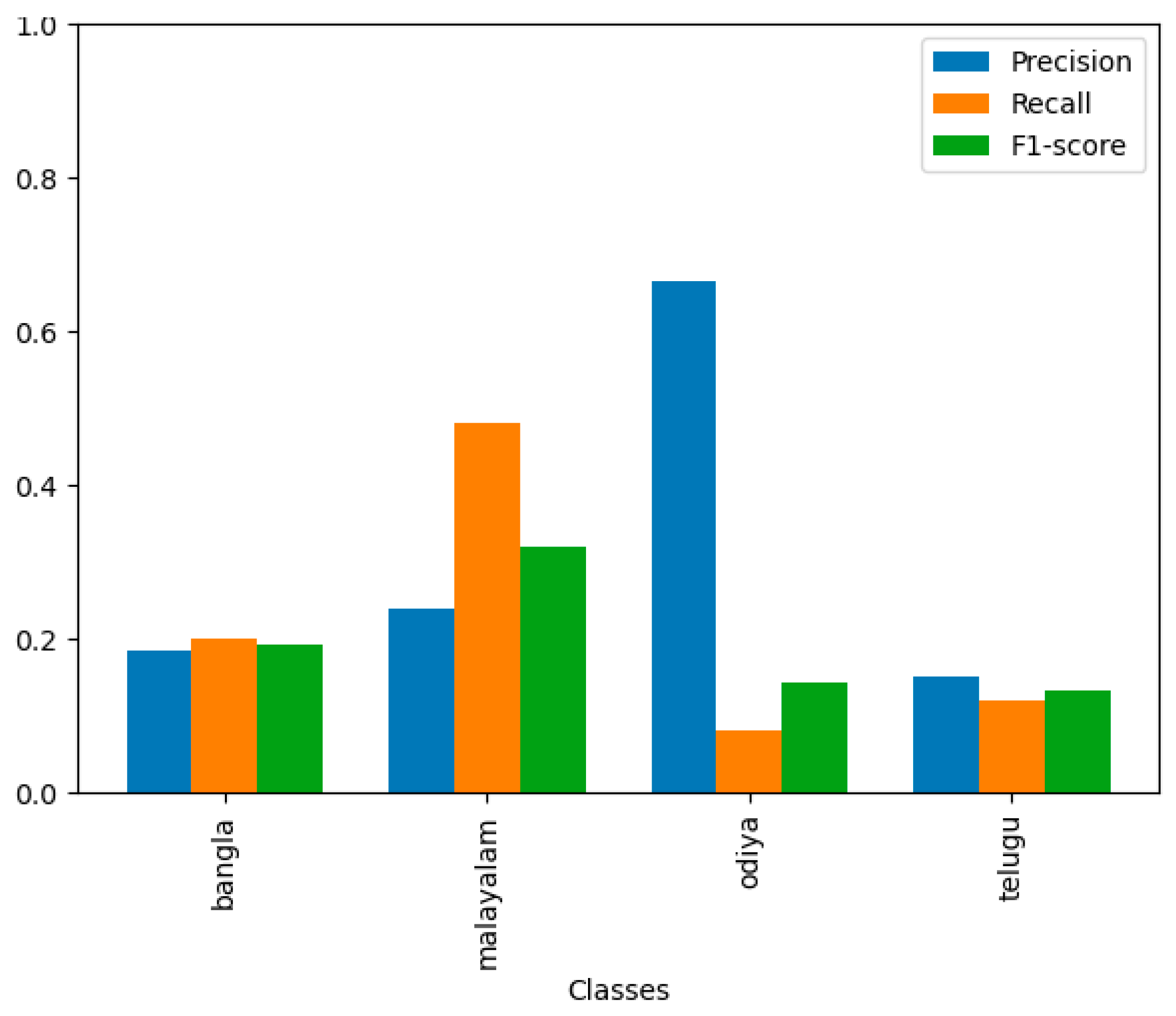

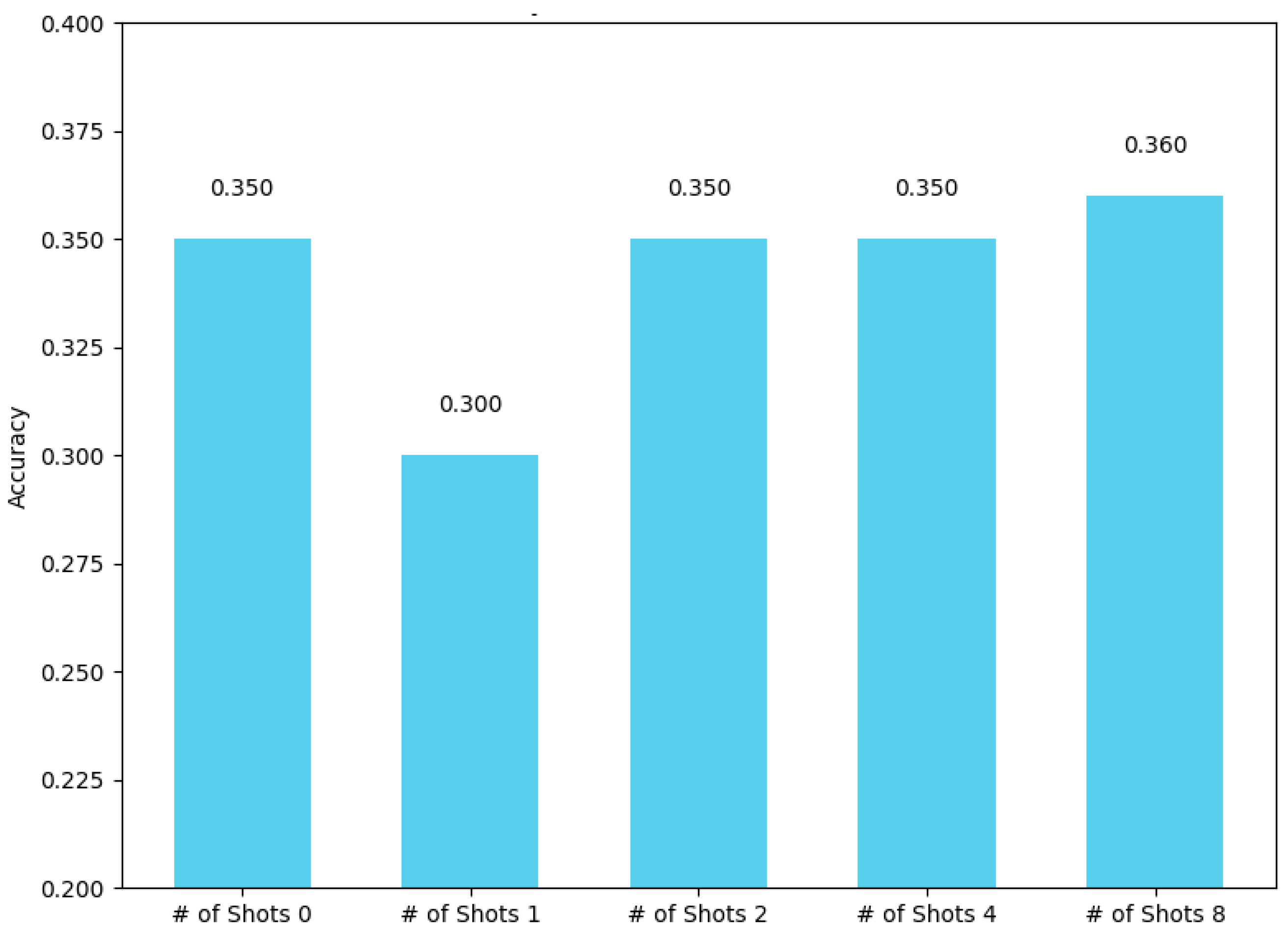

4.2. Accent Detection

5. Multimodal Capacity of GPT-4o

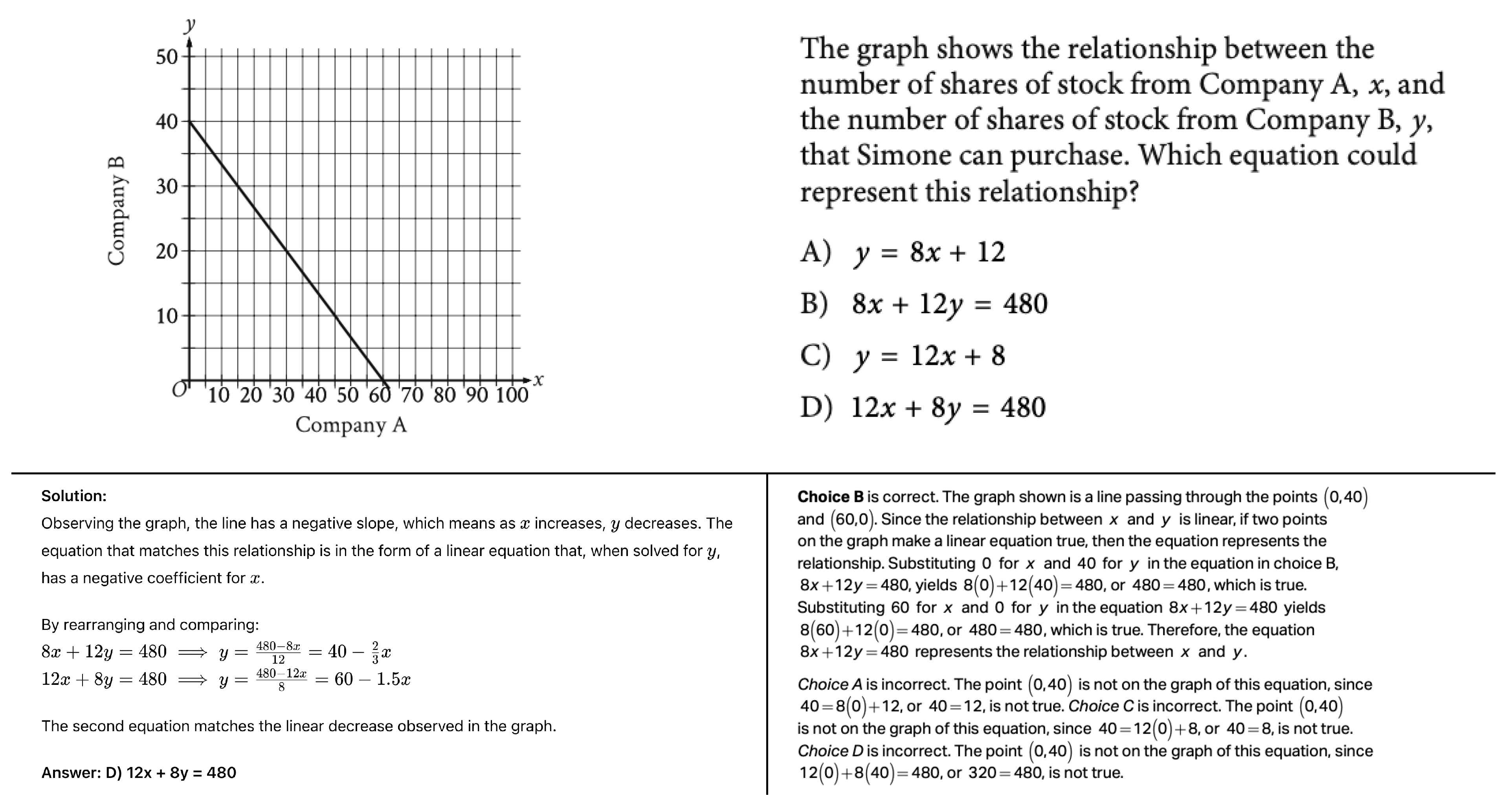

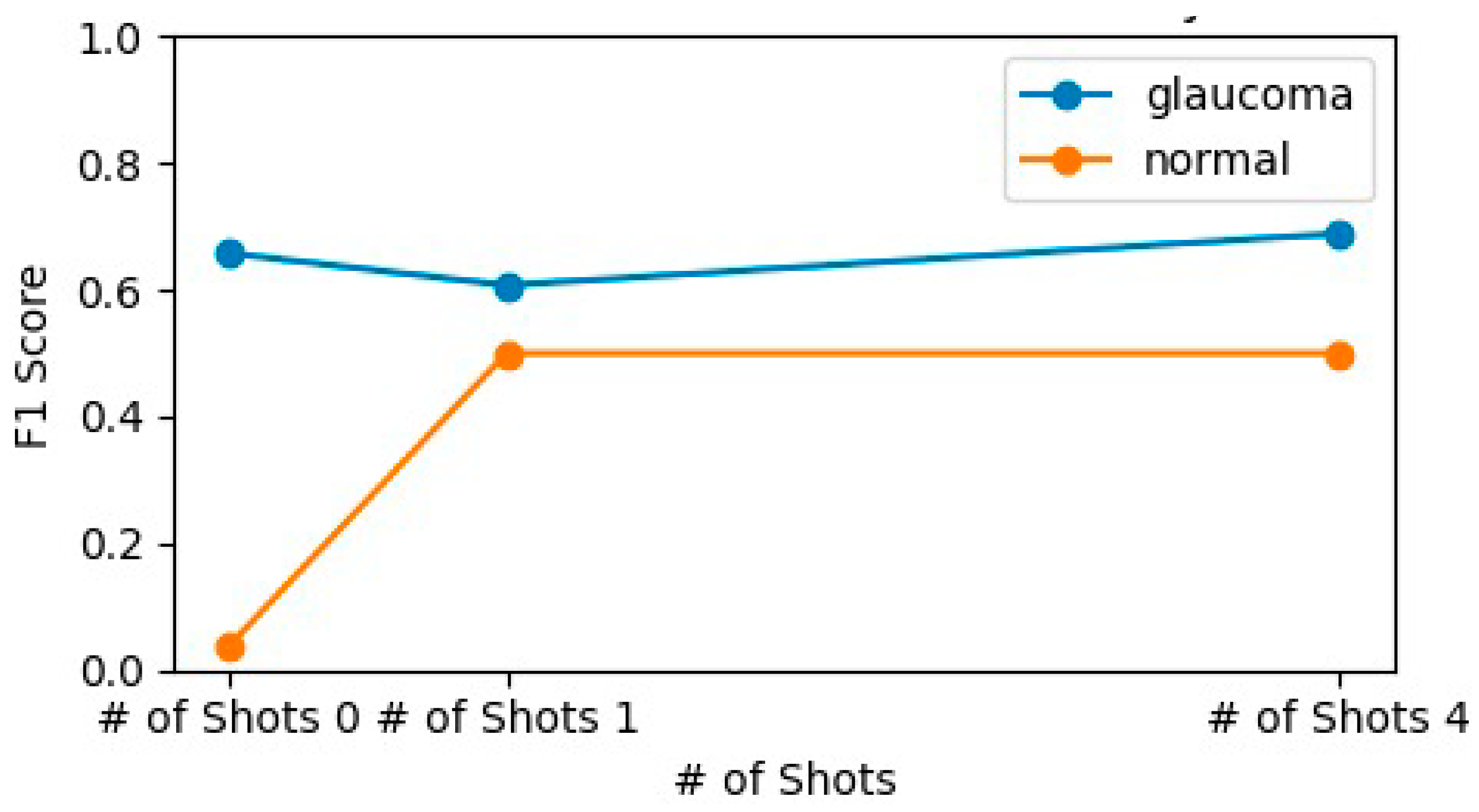

5.1. Visual Question Answering

5.2. Vision-Language Capabilities

6. Implications, Limitations, and Future Work

6.1. Implications

6.2. Limitations

6.3. Future Work

References

- Ahamad, A., Anand, A., & Bhargava, P. (2020). AccentDB: A database of non-native English accents to assist neural speech recognition. Proceedings of the 12th Language Resources and Evaluation Conference, 5351–5358. https://www.aclweb.org/anthology/2020.lrec-1.659

- Aher, G. V., Arriaga, R. I., & Kalai, A. T. (2023). Using Large Language Models to Simulate Multiple Humans and Replicate Human Subject Studies. Proceedings of the 40th International Conference on Machine Learning, 337–371. https://proceedings.mlr.press/v202/aher23a.html

- Allyn, B. (2024). Scarlett Johansson says she is “shocked, angered” over new ChatGPT voice. https://www.npr.org/2024/05/20/1252495087/openai-pulls-ai-voice-that-was-compared-to-scarlett-johansson-in-the-movie-her.

- Bhagavatula, C., Bras, R. L., Malaviya, C., Sakaguchi, K., Holtzman, A., Rashkin, H., Downey, D., Yih, W., & Choi, Y. (2019, September 25). Abductive Commonsense Reasoning. International Conference on Learning Representations. https://openreview.net/forum?id=Byg1v1HKDB

- Brin, D., Sorin, V., Vaid, A., Soroush, A., Glicksberg, B. S., Charney, A. W., Nadkarni, G., & Klang, E. (2023). Comparing ChatGPT and GPT-4 performance in USMLE soft skill assessments. Scientific Reports, 13(1), 16492. [CrossRef]

- Callanan, E., Mbakwe, A., Papadimitriou, A., Pei, Y., Sibue, M., Zhu, X., Ma, Z., Liu, X., & Shah, S. (2023). Can GPT models be Financial Analysts? An Evaluation of ChatGPT and GPT-4 on mock CFA Exams (arXiv:2310.08678). arXiv. [CrossRef]

- CFA Institute. (n.d.). CFA program curriculum level I. https://www.cfainstitute.org.

- College Board. (n.d.). The SAT suite of assessments. https://www.collegeboard.org.

- Coyne, S., Sakaguchi, K., Galvan-Sosa, D., Zock, M., & Inui, K. (2023). Analyzing the Performance of GPT-3.5 and GPT-4 in Grammatical Error Correction (arXiv:2303.14342). arXiv. [CrossRef]

- Cubero, S., Aleixos, N., Moltó, E., Gómez-Sanchis, J., & Blasco, J. (2011). Advances in machine vision applications for automatic inspection and quality evaluation of fruits and vegetables. Food and Bioprocess Technology, 4, 487–504. [CrossRef]

- Dalvi, B., Jansen, P., Tafjord, O., Xie, Z., Smith, H., Pipatanangkura, L., & Clark, P. (2021). Explaining Answers with Entailment Trees. In M.-F. Moens, X. Huang, L. Specia, & S. W. Yih (Eds.), Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (pp. 7358–7370). Association for Computational Linguistics. [CrossRef]

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), 4171–4186.

- Dillion, D., Mondal, D., Tandon, N., & Gray, K. (2024). Large Language Models as Moral Experts? GPT-4o Outperforms Expert Ethicist in Providing Moral Guidance. OSF. [CrossRef]

- Floridi, L., & Chiriatti, M. (2020). GPT-3: Its Nature, Scope, Limits, and Consequences. Minds and Machines, 30(4), 681–694. [CrossRef]

- Gemini Team, Anil, R., Borgeaud, S., Alayrac, J.-B., Yu, J., Soricut, R., Schalkwyk, J., Dai, A. M., Hauth, A., Millican, K., Silver, D., Johnson, M., Antonoglou, I., Schrittwieser, J., Glaese, A., Chen, J., Pitler, E., Lillicrap, T., Lazaridou, A., … Vinyals, O. (2024). Gemini: A Family of Highly Capable Multimodal Models (arXiv:2312.11805). arXiv. [CrossRef]

- Gilson, A., Safranek, C. W., Huang, T., Socrates, V., Chi, L., Taylor, R. A., & Chartash, D. (2023). How Does ChatGPT Perform on the United States Medical Licensing Examination (USMLE)? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Medical Education, 9(1), e45312. [CrossRef]

- Graham, C., & Roll, N. (2024). Evaluating OpenAI’s Whisper ASR: Performance analysis across diverse accents and speaker traits. JASA Express Letters, 4(2). [CrossRef]

- Griggs, M. (2019). Building a Better Bar Exam. Texas A&M Law Review, 7, 1.

- Haleem, A., Javaid, M., Qadri, M. A., & Suman, R. (2022). Understanding the role of digital technologies in education: A review. Sustainable Operations and Computers, 3, 275–285. [CrossRef]

- Hayawi, K., & Shahriar, S. (2024). AI Agents from Copilots to Coworkers: Historical Context, Challenges, Limitations, Implications, and Practical Guidelines (2024040709). Preprints. [CrossRef]

- Hayawi, K., Shahriar, S., & Mathew, S. S. (2024). The imitation game: Detecting human and AI-generated texts in the era of ChatGPT and BARD. Journal of Information Science, 01655515241227531. [CrossRef]

- Hayes, B. K., Heit, E., & Swendsen, H. (2010). Inductive reasoning. WIREs Cognitive Science, 1(2), 278–292. [CrossRef]

- Hu, B., Sheng, Q., Cao, J., Shi, Y., Li, Y., Wang, D., & Qi, P. (2024). Bad actor, good advisor: Exploring the role of large language models in fake news detection. Proceedings of the AAAI Conference on Artificial Intelligence, 38, Article 20. [CrossRef]

- Huang, J., & Chang, K. C.-C. (2023). Towards Reasoning in Large Language Models: A Survey. In A. Rogers, J. Boyd-Graber, & N. Okazaki (Eds.), Findings of the Association for Computational Linguistics: ACL 2023 (pp. 1049–1065). Association for Computational Linguistics. [CrossRef]

- Jebraeily, Y., Sharafi, Y., & Teshnehlab, M. (2024). Driver drowsiness detection based on convolutional neural network architecture optimization using genetic algorithm. IEEE Access, 12, 45709–45726. [CrossRef]

- Johnson-Laird, P. (2010). Deductive reasoning. WIREs Cognitive Science, 1(1), 8–17. [CrossRef]

- Katz, D. M., Bommarito, M. J., Gao, S., & Arredondo, P. (2024). GPT-4 passes the bar exam. Philosophical Transactions of the Royal Society A, 382(2270), 20230254. [CrossRef]

- Khoshafah, F. (2023). ChatGPT for Arabic-English Translation: Evaluating the Accuracy. [CrossRef]

- Korinek, A. (2023). Language models and cognitive automation for economic research. National Bureau of Economic Research. [CrossRef]

- Koubaa, A. (2023). GPT-4 vs. GPT-3.5: A Concise Showdown (2023030422). Preprints. [CrossRef]

- Kunchukuttan, A., Mehta, P., & Bhattacharyya, P. (2018). The IIT Bombay English-Hindi parallel corpus. Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018).

- Li, H., Ding, W., Kang, Y., Liu, T., Wu, Z., & Liu, Z. (2021). CTAL: Pre-training cross-modal transformer for audio-and-language representations. Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 3966–3977.

- López Espejel, J., Ettifouri, E. H., Yahaya Alassan, M. S., Chouham, E. M., & Dahhane, W. (2023). GPT-3.5, GPT-4, or BARD? Evaluating LLMs reasoning ability in zero-shot setting and performance boosting through prompts. Natural Language Processing Journal, 5, 100032. [CrossRef]

- Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., & Wang, Z. (2023). ChatGPT and a new academic reality: Artificial Intelligence-written research papers and the ethics of the large language models in scholarly publishing. Journal of the Association for Information Science and Technology, 74(5), 570–581. [CrossRef]

- Mannuru, N. R., Shahriar, S., Teel, Z. A., Wang, T., Lund, B. D., Tijani, S., Pohboon, C. O., Agbaji, D., Alhassan, J., Galley, J., & others. (2023). Artificial intelligence in developing countries: The impact of generative artificial intelligence (AI) technologies for development. Information Development, 02666669231200628. [CrossRef]

- National Conference of Bar Examiners. (n.d.). MBE sample test questions. https://www.ncbex.org.

- Federation of State Medical Boards, & National Board of Medical Examiners. (2024). USMLE Step 1 content description and general information. https://www.usmle.org.

- Ongsulee, P. (2017). Artificial intelligence, machine learning and deep learning. 2017 15th International Conference on ICT and Knowledge Engineering (ICT&KE), 1–6.

- OpenAI, Achiam, J., Adler, S., Agarwal, S., Ahmad, L., Akkaya, I., Aleman, F. L., Almeida, D., Altenschmidt, J., Altman, S., Anadkat, S., Avila, R., Babuschkin, I., Balaji, S., Balcom, V., Baltescu, P., Bao, H., Bavarian, M., Belgum, J., … Zoph, B. (2024). GPT-4 Technical Report (arXiv:2303.08774). arXiv. [CrossRef]

- Oura, T., Tatekawa, H., Horiuchi, D., Matsushita, S., Takita, H., Atsukawa, N., Mitsuyama, Y., Yoshida, A., Murai, K., Tanaka, R., Shimono, T., Yamamoto, A., Miki, Y., & Ueda, D. (2024). Diagnostic Accuracy of Vision-Language Models on Japanese Diagnostic Radiology, Nuclear Medicine, and Interventional Radiology Specialty Board Examinations (p. 2024.05.31.24308072). medRxiv. [CrossRef]

- Pang, S., Nol, E., & Heng, K. (2024). ChatGPT-4o for English language teaching and learning: Features, applications, and future prospects (SSRN Scholarly Paper 4837988). [CrossRef]

- Ray, S. (2024). Google CEO says Gemini AI’s ‘unacceptable’ responses offended users and showed bias. https://www.forbes.com/sites/siladityaray/2024/02/28/google-ceo-says-gemini-ais-unacceptable-responses-offended-users-and-showed-bias/?sh=250e1a1b1103.

- Salman, S., & Liu, X. (2019). Overfitting Mechanism and Avoidance in Deep Neural Networks (arXiv:1901.06566). arXiv. [CrossRef]

- Shahriar, S. (2022). GAN computers generate arts? A survey on visual arts, music, and literary text generation using generative adversarial network. Displays, 73, 102237. [CrossRef]

- Shahriar, S., & Al Roken, N. (2022). How can generative adversarial networks impact computer generated art? Insights from poetry to melody conversion. International Journal of Information Management Data Insights, 2(1), 100066. [CrossRef]

- Shahriar, S., Al Roken, N., & Zualkernan, I. (2023). Classification of Arabic poetry emotions using deep learning. Computers, 12(5), 89. [CrossRef]

- Shen, X., Wu, Y., Backes, M., & Zhang, Y. (2024). Voice Jailbreak Attacks Against GPT-4o (arXiv:2405.19103). arXiv. [CrossRef]

- Singgalen, Y. A. (2024). Analyzing an Interest in GPT 4o through Sentiment Analysis using CRISP-DM. Journal of Information Systems and Informatics, 6(2), Article 2. [CrossRef]

- Sinha, K., Sodhani, S., Dong, J., Pineau, J., & Hamilton, W. L. (2019). CLUTRR: A Diagnostic Benchmark for Inductive Reasoning from Text. In K. Inui, J. Jiang, V. Ng, & X. Wan (Eds.), Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP) (pp. 4506–4515). Association for Computational Linguistics. [CrossRef]

- Sivarajkumar, S., Kelley, M., Samolyk-Mazzanti, A., Visweswaran, S., & Wang, Y. (2024). An Empirical Evaluation of Prompting Strategies for Large Language Models in Zero-Shot Clinical Natural Language Processing: Algorithm Development and Validation Study. JMIR Medical Informatics, 12(1), e55318. [CrossRef]

- Sonoda, Y., Kurokawa, R., Nakamura, Y., Kanzawa, J., Kurokawa, M., Ohizumi, Y., Gonoi, W., & Abe, O. (2024). Diagnostic Performances of GPT-4o, Claude 3 Opus, and Gemini 1.5 Pro in “Diagnosis Please” Cases (p. 2024.05.26.24307915). medRxiv. [CrossRef]

- Thirunavukarasu, A. J., Ting, D. S. J., Elangovan, K., Gutierrez, L., Tan, T. F., & Ting, D. S. W. (2023). Large language models in medicine. Nature Medicine, 29(8), 1930–1940. [CrossRef]

- Tiedemann, J. (2012). Parallel data, tools and interfaces in OPUS. Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12), 2214–2218.

- Walton, D. (2014). Abductive reasoning. University of Alabama Press.

- Wang, H., Xu, J., Xie, S., Wang, R., Li, J., Xie, Z., Zhang, B., Xiong, C., & Chen, X. (2024). M4U: Evaluating Multilingual Understanding and Reasoning for Large Multimodal Models (arXiv:2405.15638). arXiv. [CrossRef]

- Weston, J., Bordes, A., Chopra, S., Rush, A. M., van Merriënboer, B., Joulin, A., & Mikolov, T. (2015). Towards AI-Complete Question Answering: A Set of Prerequisite Toy Tasks (arXiv:1502.05698). arXiv. [CrossRef]

- Xu, S., Wang, Y., Liu, D., & Xu, C. (2024). Collage Prompting: Budget-Friendly Visual Recognition with GPT-4V (arXiv:2403.11468). arXiv. [CrossRef]

- Ying, Z., Liu, A., Liu, X., & Tao, D. (2024). Unveiling the Safety of GPT-4o: An Empirical Study using Jailbreak Attacks (arXiv:2406.06302). arXiv. [CrossRef]

- Yu, W., Yang, Z., Li, L., Wang, J., Lin, K., Liu, Z., Wang, X., & Wang, L. (2023). MM-Vet: Evaluating Large Multimodal Models for Integrated Capabilities (arXiv:2308.02490). arXiv. [CrossRef]

- Zhang, N., Sun, Z., Xie, Y., Wu, H., & Li, C. (n.d.). The latest version ChatGPT powered by GPT-4o: What will it bring to the medical field? International Journal of Surgery. https://doi.org/10.1097/JS9.0000000000001754 [CrossRef]

- Zhou, Y., Ong, H., Kennedy, P., Wu, C. C., Kazam, J., Hentel, K., Flanders, A., Shih, G., Peng, Y., Moy, L., & Atzen, S. (2024). Evaluating GPT-4V (GPT-4 with Vision) on Detection of Radiologic Findings on Chest Radiographs. Radiology, 311(2), e233270. [CrossRef]

- Zhu, N., Zhang, N., Shao, Q., Cheng, K., & Wu, H. (2024). OpenAI’s GPT-4o in surgical oncology: Revolutionary advances in generative artificial intelligence. European Journal of Cancer, 206. [CrossRef]

| Model | Total Questions | Correct Answers | Accuracy |
|---|---|---|---|
| GPT-3.5 | 389 | 201 | 51.67% |
| GPT-4 | 80 | 72 | 90.00% |
| GPT-4o | 118 | 98 | 83.05% |

| Model | Accuracy |
|---|---|
| GPT-3.5 | 58.80% |
| GPT-4 | 73.20% |
| GPT-4o | 85.39% |

| Test | Total Questions | Correct Answers | Accuracy |
|---|---|---|---|
| Reading & Writing M1 | 33 | 31 | 93.94% |
| Reading & Writing M2 | 33 | 29 | 87.88% |
| Math M1 | 27 | 25 | 92.59% |
| Math M2 | 27 | 22 | 81.48% |
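The GPT-4o section scores in the comparison table that follows (90.91% for Reading & Writing, 87.04% for Math) are consistent with pooling the two modules of each section, i.e. 60/66 and 47/54 correct. That pooling is our reading of the numbers rather than something stated in the text; the short check below recomputes it from the module results. The helper names are illustrative only.

```python
def accuracy(correct, total):
    """Accuracy as a percentage."""
    return 100 * correct / total


def pooled_accuracy(results):
    """Micro-average: sum correct and total answers across modules first."""
    correct = sum(c for c, _ in results)
    total = sum(t for _, t in results)
    return accuracy(correct, total)


# (correct, total) per module, taken from the GPT-4o module table.
modules = {
    "Reading & Writing": [(31, 33), (29, 33)],
    "Math": [(25, 27), (22, 27)],
}

for section, results in modules.items():
    print(f"{section}: {pooled_accuracy(results):.2f}%")
```

Because both modules in each section have the same number of questions, the pooled accuracy here coincides with the plain mean of the two module accuracies; with unequal module sizes the two would differ.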

| Model | Reading & Writing | Math |
|---|---|---|
| GPT-3.5 | 83.75% | 73.75% |
| GPT-4 | 88.75% | 87.50% |
| GPT-4 (no vision) | 88.75% | 86.25% |
| GPT-4o | 90.91% | 87.04% |

| Model | Accuracy |
|---|---|
| GPT-3.5 | 45.10% |
| GPT-4 | 75.70% |
| GPT-4o | 75.00% |

| Model | Deductive: EntailmentBank | Deductive: bAbI (task 15) | Inductive: CLUTRR | Inductive: bAbI (task 16) | Abductive: αNLI |
|---|---|---|---|---|---|
| GPT-3.5 | 25/30 | 26/30 | 2/30 | 14/30 | 19/30 |
| GPT-4 | 27/30 | 30/30 | 11/30 | 28/30 | 25/30 |
| GPT-4o | 29/30 | 30/30 | 17/30 | 30/30 | 27/30 |

| Language | Translation Accuracy (%) |
|---|---|
| Spanish | 88 |
| Arabic | 78 |
| Hindi | 82 |
| French | 75 |
| Portuguese | 86 |
| Russian | 80 |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Banana | 0.91 | 1.00 | 0.95 |
| Papaya | 1.00 | 1.00 | 1.00 |
| Apple | 1.00 | 1.00 | 1.00 |
| Litchi | 1.00 | 1.00 | 1.00 |
| Jackfruit | 1.00 | 0.90 | 0.95 |
| Hog Plum | 1.00 | 1.00 | 1.00 |
| Grapes | 1.00 | 1.00 | 1.00 |
| Guava | 1.00 | 1.00 | 1.00 |
| Mango | 0.91 | 1.00 | 0.95 |
| Orange | 1.00 | 0.90 | 0.95 |
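Per-class precision, recall, and F1-score of the kind reported above are obtained by comparing the model's predicted label with the true label for each image. A minimal sketch, assuming the true and predicted labels are available as two parallel lists of class names; the function name and toy labels are hypothetical, and in practice a library routine such as scikit-learn's `precision_recall_fscore_support` would normally be used instead.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label that appears."""
    labels = sorted(set(y_true) | set(y_pred))
    true_count = Counter(y_true)   # how many images truly belong to each class
    pred_count = Counter(y_pred)   # how many times each class was predicted
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct hits
    report = {}
    for label in labels:
        precision = tp[label] / pred_count[label] if pred_count[label] else 0.0
        recall = tp[label] / true_count[label] if true_count[label] else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = (precision, recall, f1)
    return report


# Toy example (not data from this study): one banana is mistaken for a mango.
y_true = ["Banana", "Banana", "Mango", "Mango"]
y_pred = ["Banana", "Mango", "Mango", "Mango"]
for label, (p, r, f1) in per_class_metrics(y_true, y_pred).items():
    print(f"{label}: precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

A misclassification lowers the recall of the true class and the precision of the predicted class at the same time, which is why the Banana/Mango and Jackfruit/Orange rows above move in pairs.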

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Drowsy | 0.8 | 0.8 | 0.8 |
| Natural | 0.8 | 0.8 | 0.8 |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Anthracnose | 0.60 | 0.60 | 0.60 |
| Apple Scab | 1.00 | 0.80 | 0.89 |
| Black Spot | 0.67 | 1.00 | 0.80 |
| Blight | 0.38 | 0.75 | 0.50 |
| Blossom End Rot | 1.00 | 1.00 | 1.00 |
| Botrytis | 1.00 | 0.20 | 0.33 |
| Brown Rot | 1.00 | 0.20 | 0.33 |
| Canker | 0.25 | 0.25 | 0.25 |
| Cedar Apple Rust | 0.83 | 1.00 | 0.91 |
| Clubroot | 1.00 | 1.00 | 1.00 |
| Crown Gall | 1.00 | 1.00 | 1.00 |
| Downy Mildew | 1.00 | 0.20 | 0.33 |
| Fire Blight | 0.80 | 0.80 | 0.80 |
| Fusarium | 1.00 | 0.60 | 0.75 |
| Gray Mold | 0.43 | 0.75 | 0.55 |
| Leaf Spots | 0.40 | 0.80 | 0.53 |
| Mosaic Virus | 0.67 | 0.80 | 0.72 |
| Nematodes | 1.00 | 1.00 | 1.00 |
| Powdery Mildew | 0.71 | 1.00 | 0.83 |
| Verticillium | 0.67 | 0.40 | 0.50 |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Glaucoma | 0.58 | 0.86 | 0.69 |
| Normal | 0.73 | 0.38 | 0.50 |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Aneurysm | 0.3725 | 0.5758 | 0.4524 |
| Cancer | 0.0000 | 0.0000 | 0.0000 |
| Tumor | 0.2708 | 0.3939 | 0.3210 |
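Because the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), the reported F1 values can be recomputed from the other two columns as a consistency check. The sketch below does this for the three classes above; the helper name is illustrative. It follows the usual convention of setting F1 to 0 when precision and recall are both 0, which is the situation in the Cancer row (no correct Cancer predictions at all).

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
reported = {
    "Aneurysm": (0.3725, 0.5758, 0.4524),
    "Cancer": (0.0000, 0.0000, 0.0000),
    "Tumor": (0.2708, 0.3939, 0.3210),
}

for label, (p, r, f1) in reported.items():
    print(f"{label}: reported {f1:.4f}, recomputed {f1_from_pr(p, r):.4f}")
```

Small differences in the last digit are expected in general, since the table's precision and recall are themselves rounded.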

| Metric | Score |
|---|---|
| BLEU-1 | 0.193 |
| BLEU-2 | 0.095 |
| BLEU-3 | 0.058 |
| BLEU-4 | 0.031 |
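BLEU-n compares a generated caption with one or more reference captions through clipped n-gram precisions, combined by a geometric mean and multiplied by a brevity penalty that punishes captions shorter than the references. The sketch below assumes, as is common in captioning work, that BLEU-n means cumulative BLEU with uniform weights over 1- to n-grams. It is an unsmoothed, sentence-level illustration of the standard definition, not necessarily the implementation used for the scores above: toolkits such as NLTK or the COCO caption evaluation code aggregate at corpus level and may apply smoothing, so numbers from this sketch need not match the table.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Counter of all n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Clipped n-gram precision: a candidate n-gram is credited at most as
    many times as it occurs in any single reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def bleu(candidate, references, max_n=4):
    """Cumulative BLEU-max_n (uniform weights, brevity penalty, no smoothing)."""
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # any zero precision makes the geometric mean zero
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c = len(candidate)
    # Effective reference length: the reference length closest to the candidate's.
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_mean)


refs = ["a dog runs across the grass".split(), "a brown dog is running on grass".split()]
for n in range(1, 5):
    print(f"BLEU-{n}: {bleu('a dog is running on the grass'.split(), refs, max_n=n):.3f}")
```

Because higher orders require longer exact matches, cumulative BLEU typically falls as n grows, which matches the pattern from BLEU-1 to BLEU-4 in the table.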

| Model | Recognition | OCR | Knowledge | Language Generation | Spatial Awareness | Math | Total |
|---|---|---|---|---|---|---|---|
| GPT-3.5 | 22.3 | 31.4 | 15.6 | 16.6 | 32.9 | 24.0 | 27.6 |
| GPT-4 | 34.3 | 66.3 | 25.6 | 36.6 | 60.6 | 72.0 | 48.1 |
| GPT-4V | 67.5 | 68.3 | 56.2 | 60.7 | 69.4 | 58.6 | 67.7 |
| GPT-4o | 80.6 | 87.5 | 75.7 | 77.1 | 84.7 | 88.1 | 83.9 |

| Input Image | Questions|Assessed Capabilities | GPT-4o Responses | |

|---|---|---|---|

|

a) Q: How many gallons of supreme gasoline can I get with $50? | GPT-4o: a) With $50, you can get approximately 13.70 gallons of supreme gasoline. | |

| b) Q: What is the price difference between diesel and regular gasoline? Required capabilities: OCR, Math |

GPT-4o: The price difference between diesel and regular gasoline is $0.11 per gallon. | ||

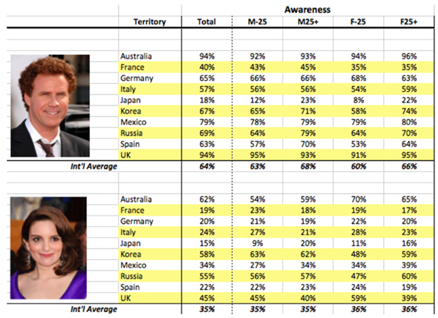

|

a) Q: Based on the image, what is the total international awareness of Tina Fey? | GPT-4o: Based on the image, the total international awareness of Tina Fey is 35%. This percentage is located at the bottom row under the column labeled "Total" for Tina Fey. | |

| b) Q: Based on the image, in which country does Tina Fey have the highest level of total awareness? Required capabilities: OCR, Spatial awareness |

GPT-4o: Based on the image, Tina Fey has the highest level of total awareness in Australia, with an awareness level of 62%. | ||

|

a) Q: What is the price for tomatoes? | GPT-4o: The price for tomatoes is 8.00, as indicated by the sign placed above the pile of tomatoes in the image. | |

| b) Q: What fruit is the the right of plums? Required capabilities: Recognition, Spatial awareness |

GPT-4o: The fruit to the right of the plums in the image is pears. | ||

|

a) Q: Which car is on the parking spot 33? | GPT-4o: The parking spot labeled 33 is empty. | |

| b) Q: Which spot is the red car parking on? Required capabilities: OCR, Recognition, Spatial awareness |

GPT-4o: The red car is parked in spot number 30. | ||

|

a) Q: Which category did the worst job in plastic recycling, considering the amount of plastic made and thrown out? | GPT-4o: The category that did the worst job in plastic recycling, considering the amount of plastic made and thrown out, is "Packaging." In 2015, 146 million metric tonnes of plastic were made for packaging, and 141 million metric tonnes were thrown out. This shows that almost all the plastic made for packaging was discarded as waste. | |

| b) Q: What is the difference in metric tonnes between the amount of plastic produced and the amount discarded? Required capabilities: OCR, Spatial awareness |

The difference between the amount of plastic produced (407 million metric tonnes) and the amount discarded (302 million metric tonnes) is: 407 million metric tonnes – 302 million metric tonnes = 105 million metric tonnes So, the difference is 105 million metric tonnes. |

||

|

Q: What does this factory make? Required capabilities: OCR |

GPT-4o: The factory in the image belongs to Leica, which is a company known for manufacturing cameras, lenses, and other optical equipment. | |

|

Q: In which country was this photo taken? Required capabilities: Recognition, Knowledge |

GPT-4o: The photo shows Novak Djokovic kissing a trophy that appears to be the Australian Open trophy. Therefore, this photo was likely taken in Australia. | |

|

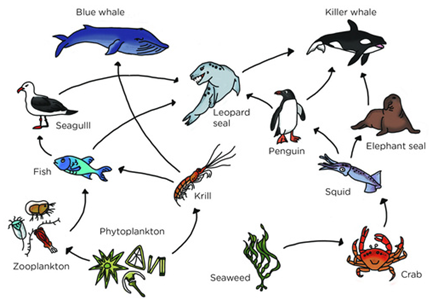

Q: Which are producers in this food web? Required capabilities: OCR, Knowledge, Spatial Awareness |

GPT-4o: In this food web, the producers are: Phytoplankton Seaweed These are the organisms that produce energy through photosynthesis and serve as the base of the food web. |

|

|

Q: Is it summertime in the scene? Answer it and give the rationale. Required capabilities: Recognition, Knowledge, Language generation |

GPT-4o: No, it is not summertime in the scene. The presence of large pumpkins suggests that it is likely fall, as pumpkins are typically associated with the autumn harvest season. Additionally, the characters are wearing long-sleeved clothing, which is more common in cooler weather. | |

|

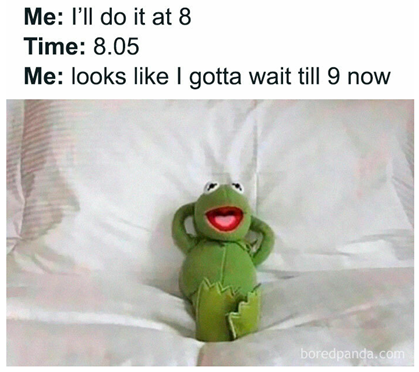

Q: Can you explain this meme? Required capabilities: Recognition, OCR, Knowledge, Language generation |

GPT-4o: This meme humorously illustrates the common tendency to procrastinate. The text shows an intention to start a task at a specific time (8:00), but when the time is slightly missed (8:05), the person decides to delay the task further, waiting until the next hour (9:00) to start. The image of Kermit the Frog relaxing on a bed adds to the humor by depicting the relaxed attitude towards procrastination. The meme pokes fun at the irrational yet relatable habit of postponing tasks due to missing an arbitrary starting time by a few minutes. | |

|

Q: What is funny about this image? Required capabilities: Recognition, Knowledge, Language generation |

GPT-4o: This image is a popular meme that combines two unrelated photos to create a humorous juxtaposition. On the left side, there is an image of a woman (Taylor Armstrong from "The Real Housewives of Beverly Hills") angrily pointing and shouting, while another woman (Kyle Richards) tries to calm her down. On the right side, there is a photo of a white cat (known as "Smudge the Cat") sitting at a dinner table with a confused and displeased expression in front of a plate of vegetables. The humor comes from the contrast between the woman's intense emotion and the cat's indifferent and bemused reaction, as if the cat is unbothered by the woman's outburst. This combination creates a relatable and funny depiction of exaggerated reactions and misunderstandings. |

|

|

Q: How to cook this dish? Required capabilities: Recognition, Knowledge, Language generation |

GPT-4o: This image shows a pie with a lattice crust. Here is a basic recipe to make a lattice-top pie, such as a strawberry or mixed berry pie: Ingredients: For the Filling: 4 cups fresh strawberries or mixed berries, 3/4 cup granulated sugar, 1/4 cup cornstarch, 1 tablespoon lemon juice, 1 teaspoon vanilla extract, A pinch of salt For the Crust: 2 1/2 cups all-purpose flour, 1 teaspoon salt, 1 teaspoon sugar, 1 cup unsalted butter, chilled and cut into small pieces, 1/4 to 1/2 cup ice water Instructions: In a large bowl, combine the flour, salt, and sugar. Add the chilled butter pieces and cut them into the flour mixture using a pastry blender or your fingers until the mixture resembles coarse crumbs. Gradually add ice water, a tablespoon at a time, mixing until the dough begins to come together. Do not overwork the dough. Divide the dough into two equal parts, shape them into disks, wrap in plastic wrap, and refrigerate for at least 1 hour. In a large bowl, combine the berries, sugar, cornstarch, lemon juice, vanilla extract, and salt. Stir gently to combine. On a lightly floured surface, roll out one disk of dough to fit a 9-inch pie pan. Place the rolled-out dough into the pie pan and trim the edges. Pour the berry mixture into the crust-lined pie pan. Roll out the second disk of dough and cut it into 1-inch-wide strips. Lay 4 to 5 strips over the filling, parallel to each other. Lay another 4 to 5 strips perpendicular to the first set, weaving them to create a lattice pattern. Trim and crimp the edges to seal. Preheat the oven to 375°F (190°C). Bake: Place the pie on a baking sheet to catch any drips and bake for 45-55 minutes, or until the crust is golden brown and the filling is bubbly. Cool: Let the pie cool completely before serving to allow the filling to set. Enjoy your homemade lattice-top pie! |

|

|

Q: Can you give a short introduction to this painting? Required capabilities: Recognition, Knowledge, Language generation |

GPT-4o: This painting is a historical Chinese artwork titled "Emperor Taizong Receiving the Tibetan Envoy," created by Yan Liben during the Tang Dynasty. The piece illustrates a significant diplomatic event where Emperor Taizong of the Tang Dynasty meets a Tibetan envoy. This event highlights the political and cultural exchanges between the Tang Dynasty and Tibet.The painting is notable for its detailed depiction of the figures, the hierarchical arrangement, and the ceremonial aspects of the meeting. Yan Liben, a renowned court artist of the Tang Dynasty, is celebrated for his ability to capture the grandeur and solemnity of imperial events. This artwork not only serves as a historical record but also exemplifies the artistic sophistication of the Tang Dynasty. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).