Submitted: 24 June 2024

Posted: 24 June 2024

Abstract

Keywords:

1. Introduction

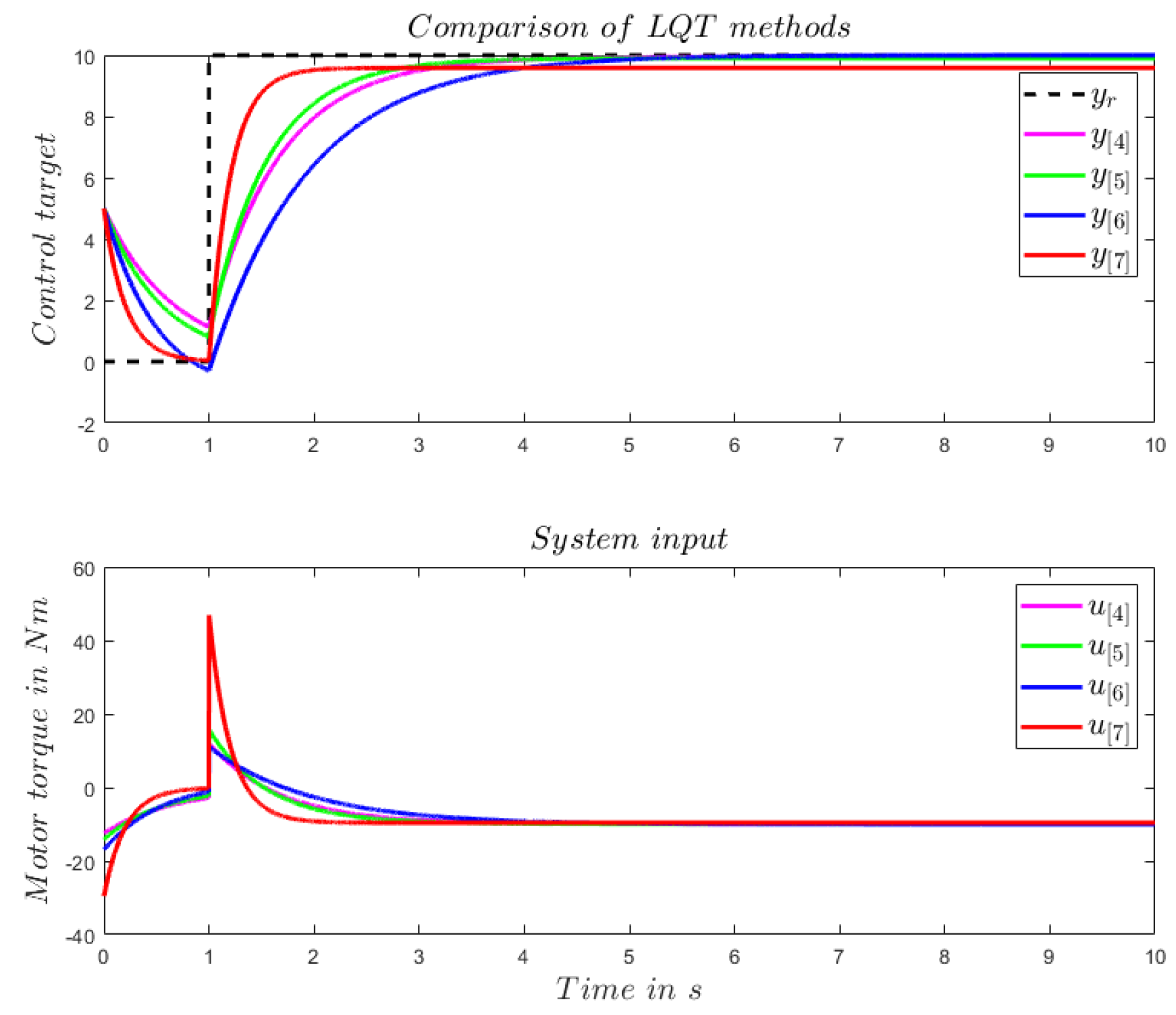

2. LQT Control Framework with Augmented System

2.1. Problem Description

2.1.1. Zero Steady-State Error

2.1.2. Formulation as a System with Exogenous Input

2.2. General LQT Control Framework

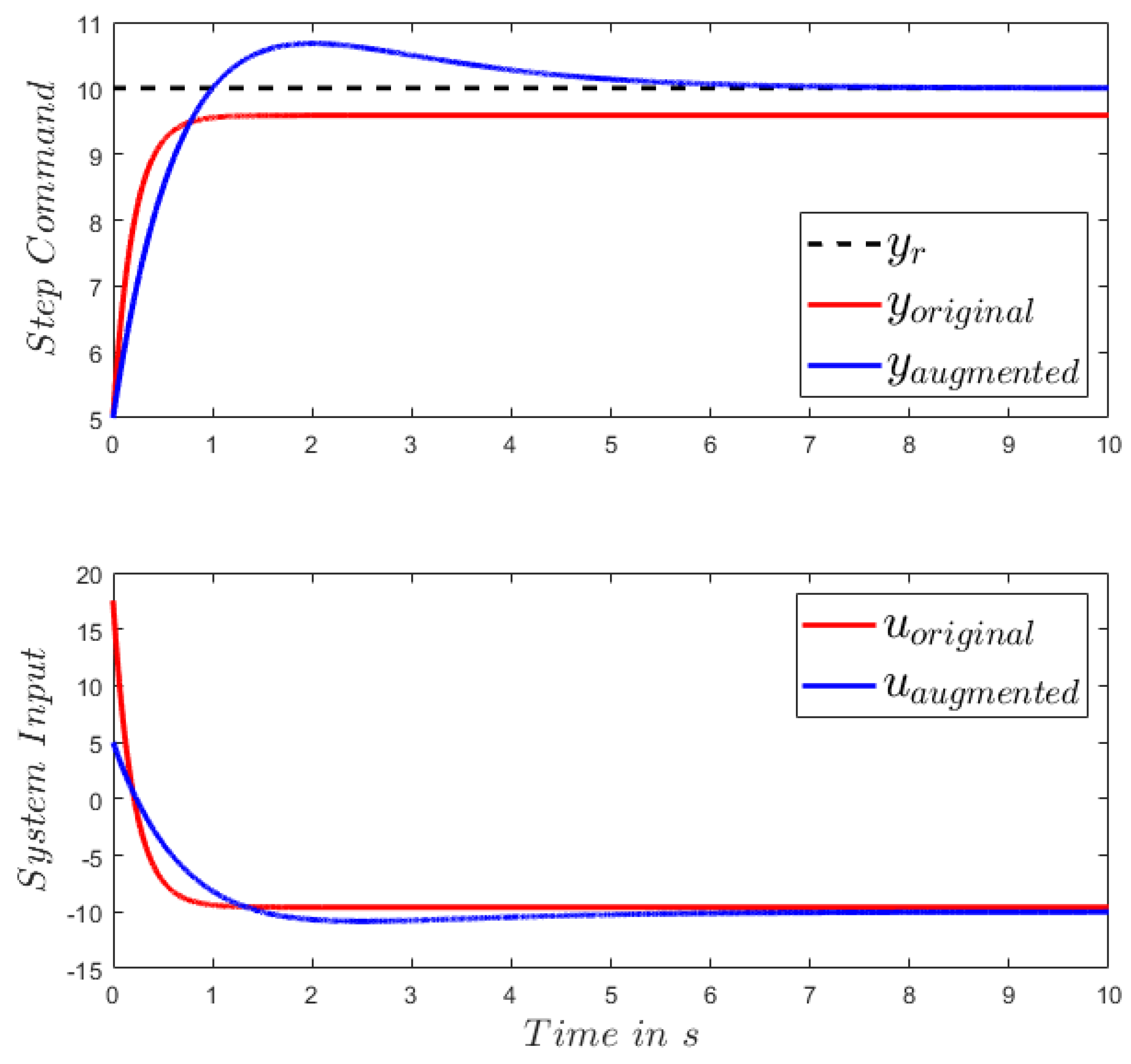

2.3. LQT Control Framework with Original System Model

2.4. LQT Control Framework with Augmented System Model

2.5. Simple Numerical Example

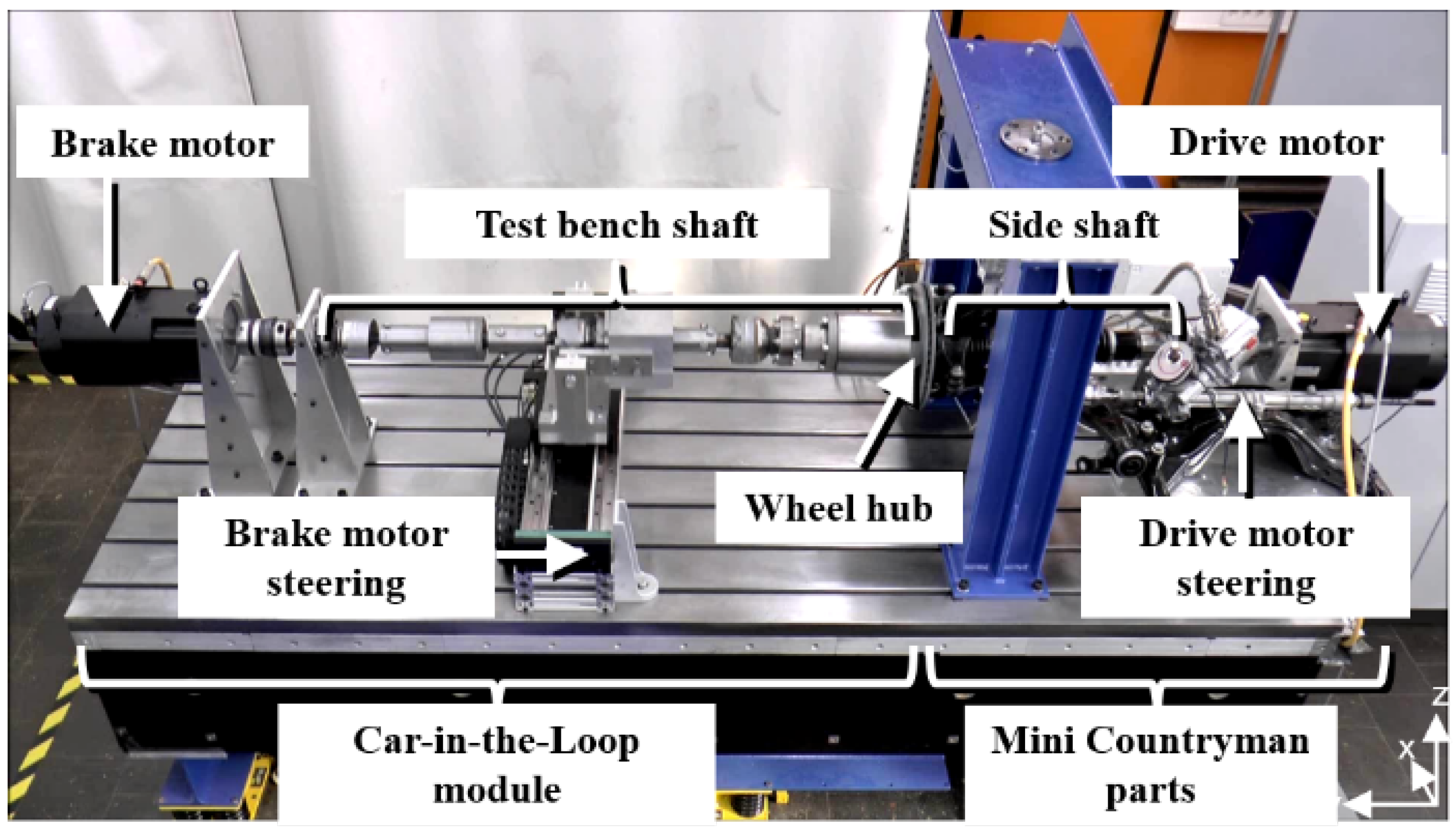

3. LQT Implementation on Car-in-the-Loop

3.1. Car-in-the-Loop Test Bench Prototype

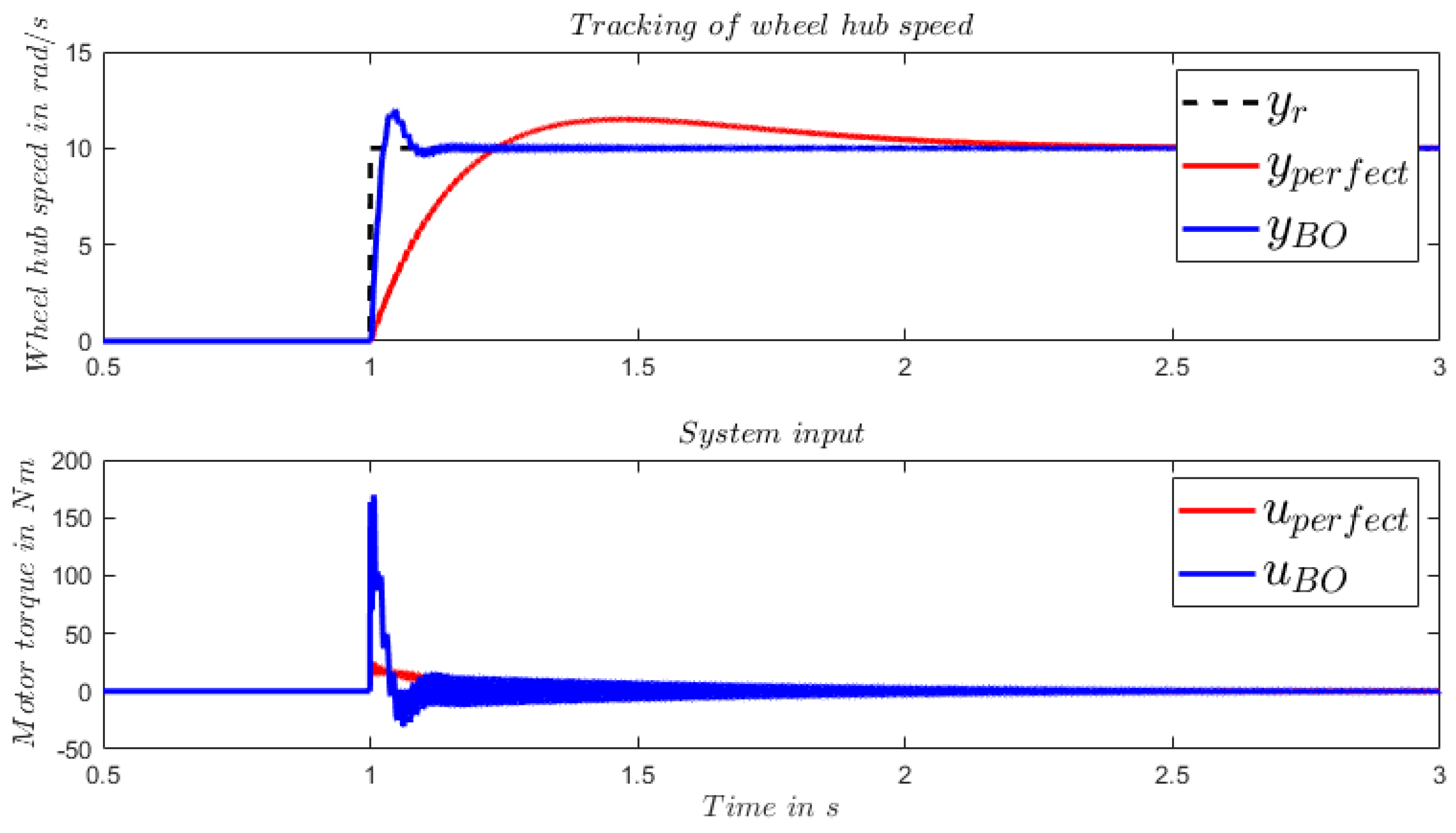

3.2. LQT Control with Perfect Model of the Real System

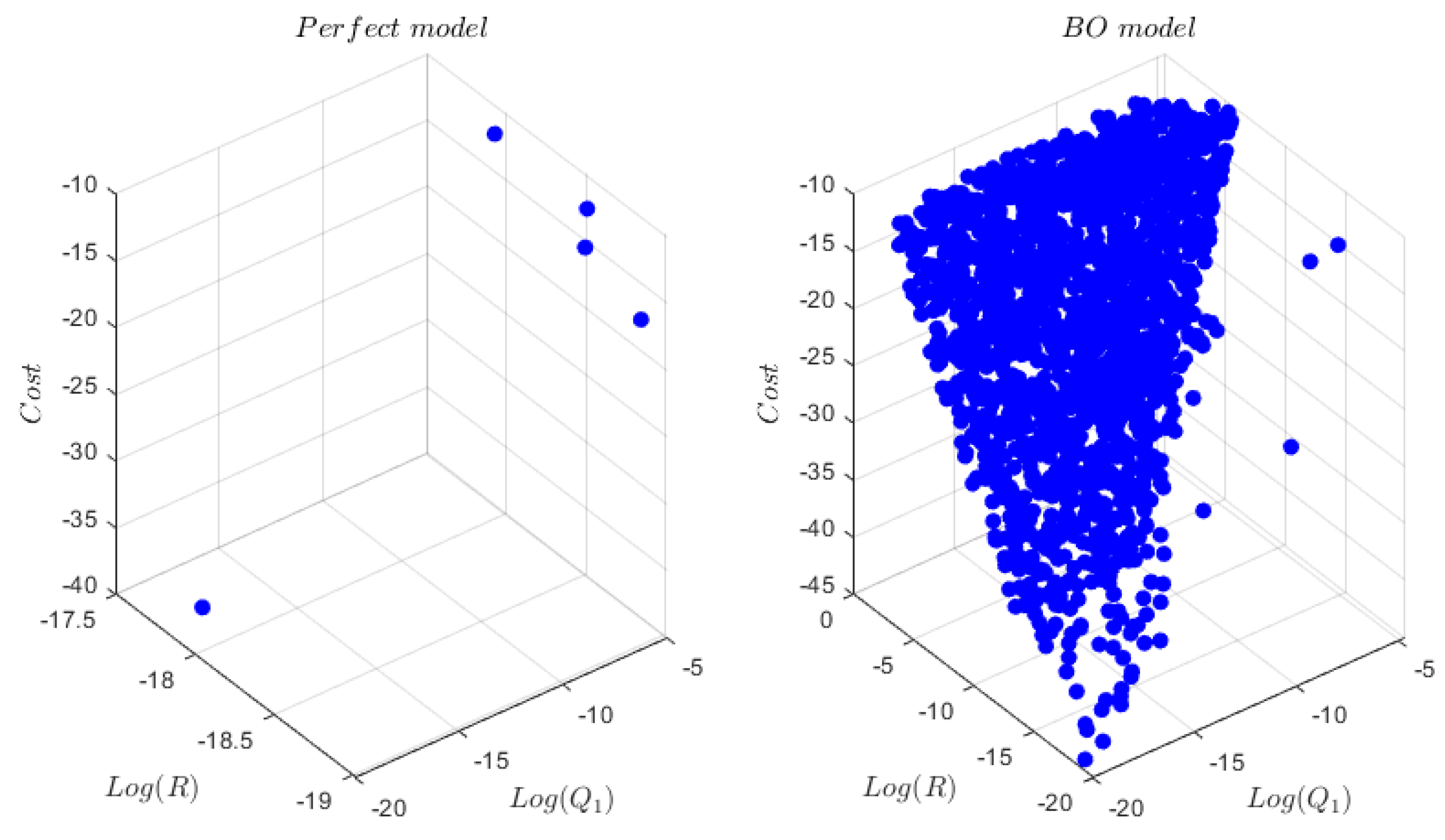

4. Model Learning via Bayesian Optimization

4.1. A Short Discussion on Model Learning for Control

4.2. Bayesian Optimization with Gaussian Process as Surrogate Model

5. LQT Implementation on Car-in-the-Loop Revisited

Algorithm 1: Model learning via Bayesian Optimization

| Step | Procedure |
|---|---|
| 1 | Initialize GP with |
| 2 | for |
| | find |
| | conduct closed-loop experiment with |
| | measure and ; |
| | compute cost function |
| | update GP and D with |
| 3 | Compute optimal parameter , where . |
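The loop in Algorithm 1 can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the model parameter is taken as a scalar, the GP uses a squared-exponential kernel with fixed hyperparameters, the "find" step minimizes a lower confidence bound, and `closed_loop_cost` is a hypothetical quadratic stand-in for running the closed-loop experiment and evaluating the cost function.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.3, variance=1.0):
    # Squared-exponential kernel between two sets of scalar parameters.
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    # GP regression posterior mean and std; the prior mean is the data mean.
    y_mean = y_train.mean()
    K = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    mu = K_s.T @ alpha + y_mean
    v = np.linalg.solve(L, K_s)
    # Prior variance is 1.0 (the kernel's default variance).
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mu, np.sqrt(var)


def closed_loop_cost(theta):
    # HYPOTHETICAL stand-in for "conduct closed-loop experiment, measure,
    # compute cost function"; a real run would use test-bench measurements.
    return (theta - 0.62) ** 2


def bayesian_optimization(cost, bounds, n_init=3, n_iter=15, kappa=2.0, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(bounds[0], bounds[1], 501)  # candidate parameters
    # Step 1: initialize the data set D (and hence the GP) with random samples.
    thetas = rng.uniform(bounds[0], bounds[1], n_init)
    costs = np.array([cost(t) for t in thetas])
    # Step 2: iterate experiments.
    for _ in range(n_iter):
        mu, sigma = gp_posterior(thetas, costs, grid)
        # Find the next parameter (assumed acquisition: lower confidence bound).
        theta_next = grid[np.argmin(mu - kappa * sigma)]
        # Run the experiment and compute its cost.
        j_next = cost(theta_next)
        # Update D; the GP is refit from D on the next iteration.
        thetas = np.append(thetas, theta_next)
        costs = np.append(costs, j_next)
    # Step 3: return the best parameter among those evaluated.
    best = np.argmin(costs)
    return thetas[best], costs[best]


theta_star, j_star = bayesian_optimization(closed_loop_cost, bounds=(0.0, 1.0))
print(f"theta* = {theta_star:.3f}, J(theta*) = {j_star:.4f}")
```

The lower confidence bound trades off exploitation (low predicted cost `mu`) against exploration (high uncertainty `sigma`) through `kappa`; expected improvement is a common alternative acquisition function.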

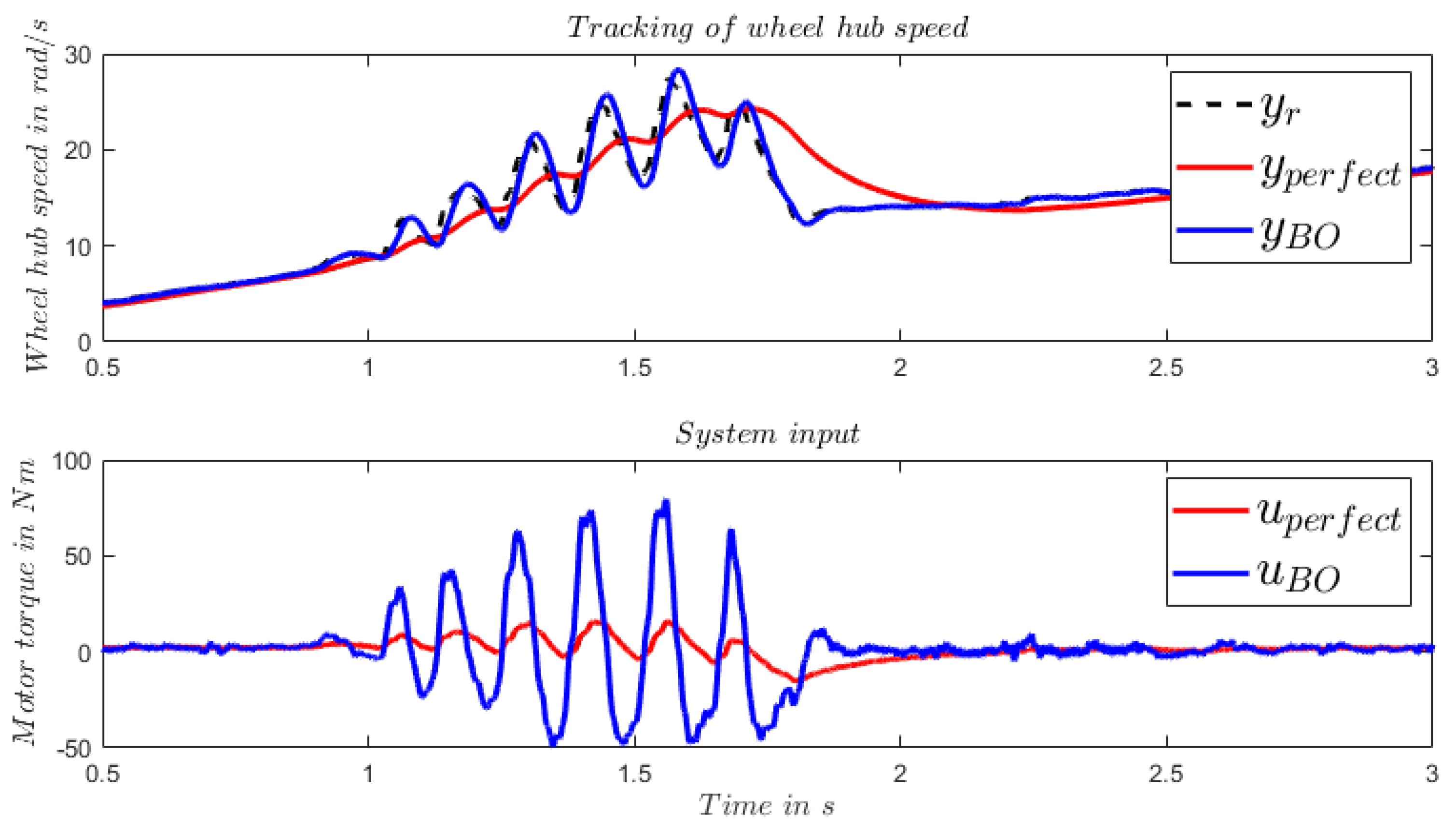

5.1. LQT with Model Learnt via BO

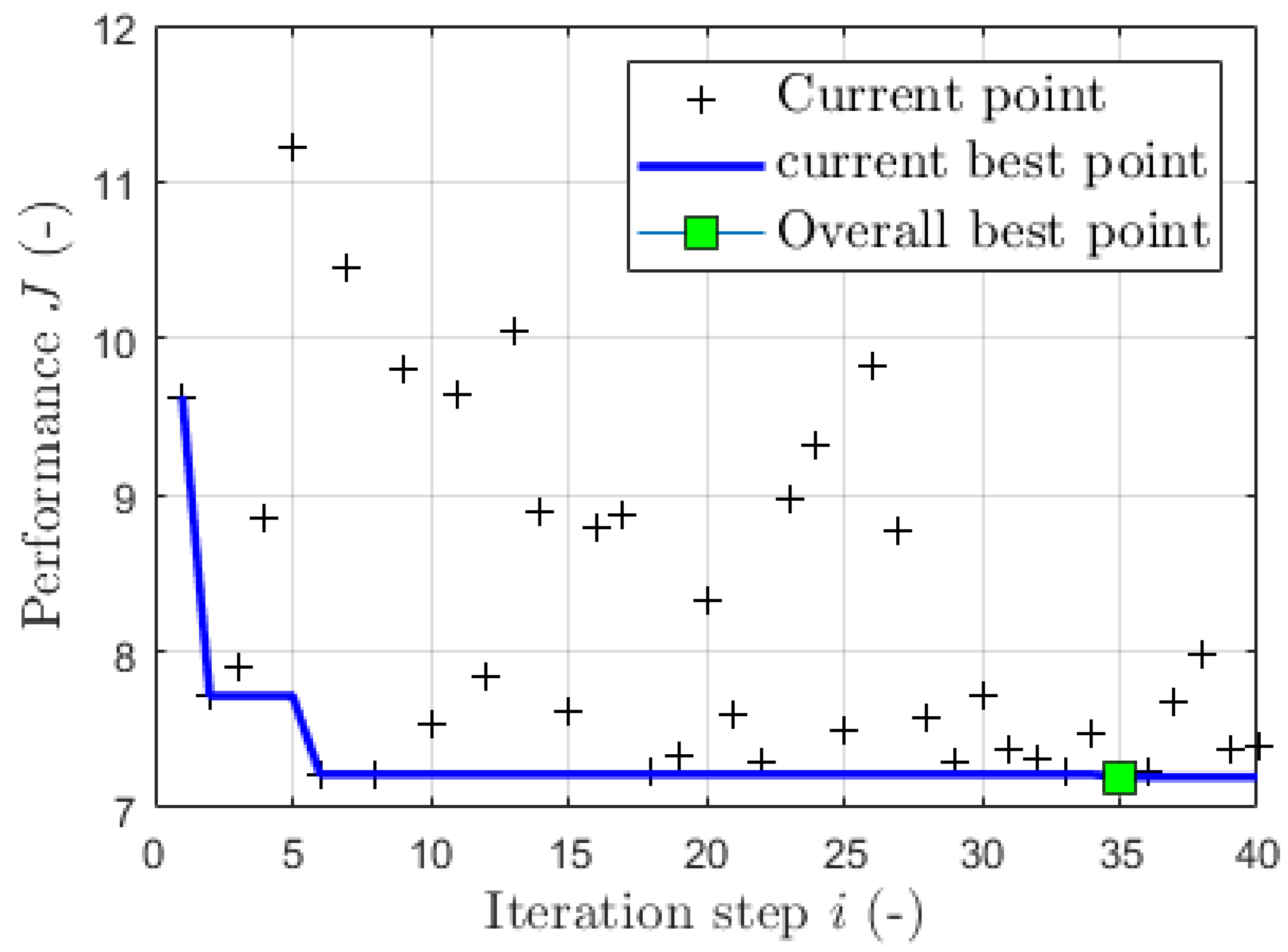

5.2. Effectiveness of Bayesian Learning

5.3. Limitations

6. Conclusion

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).