Submitted: 23 June 2024

Posted: 24 June 2024

Abstract

Keywords:

1. Introduction

1.1. Overview

1.2. Background of Study

1.3. Problem Statement

1.4. Research Objectives

- To understand how speech recognition works when it is implemented with a deep learning model.

- To develop a prototype capable of transcribing Myanmar (Burmese) speech into text.

- To build a website that integrates the prototype so that the system is easy to use.

2. Literature Review

2.1. Overview

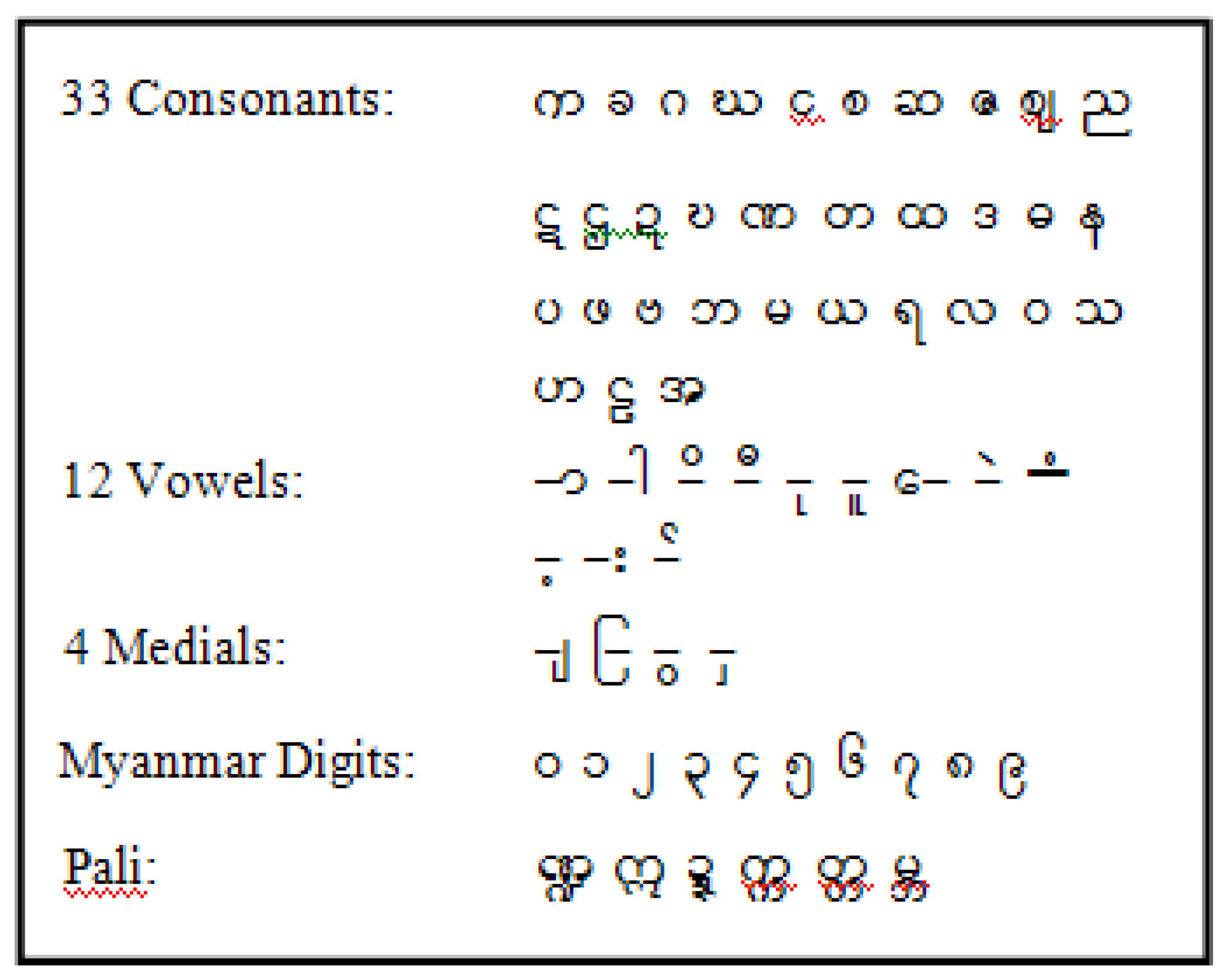

2.2. Burmese Language

2.3. Related Work

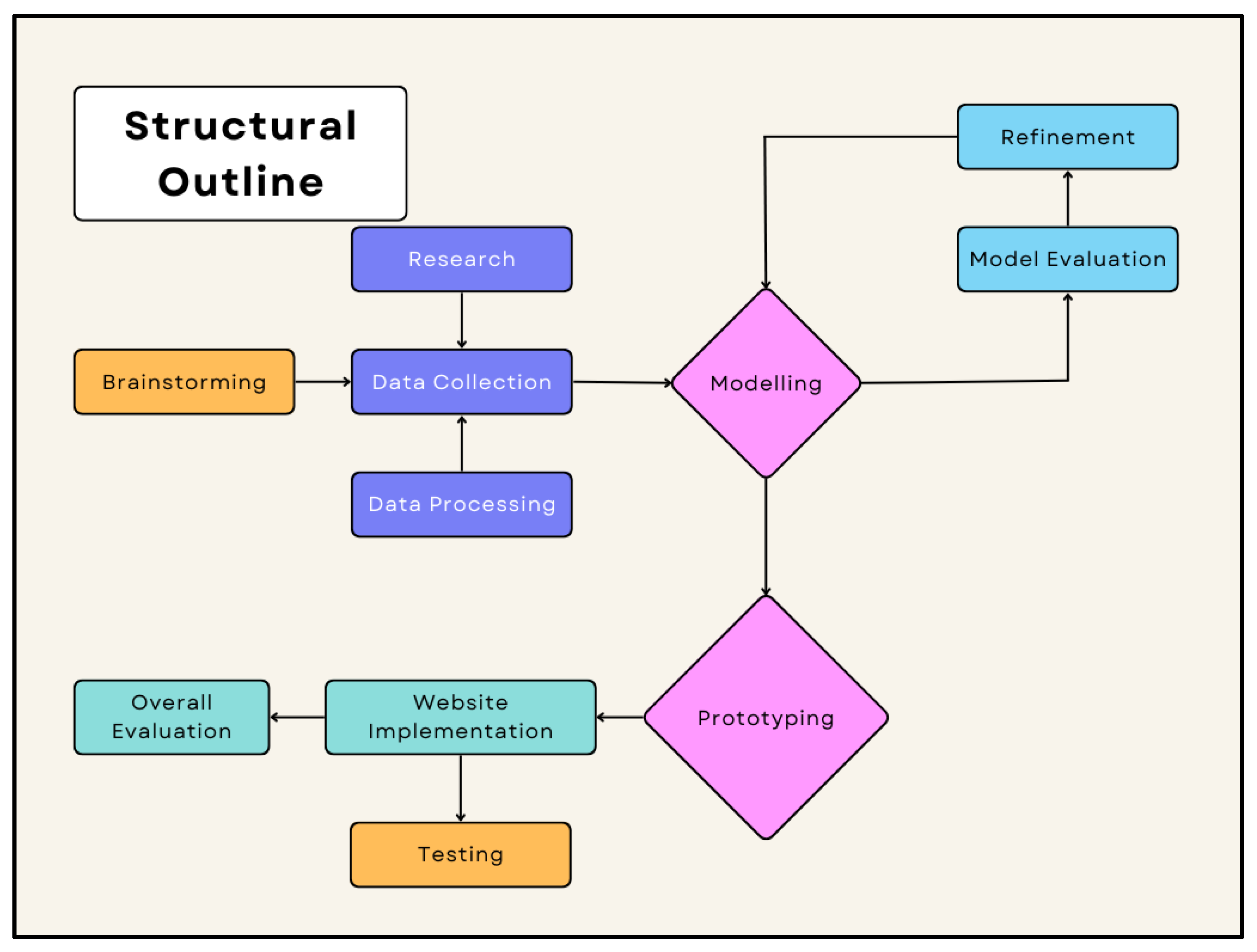

3. Methodology

3.1. Overview

3.2. Hardware Requirements

| Component | Specification | Value |
|---|---|---|
| Laptop or Desktop | Operating System | Windows 10 Home |
| | Central Processing Unit | AMD Ryzen 5 |
| | Memory | 16 GB |
| | Hard Disk Drive | 1 TB |
| | Solid-state Drive | 256 GB |

3.3. Software Requirements

| Software | Version |
|---|---|
| Anaconda | 2021.11 |
| Microsoft Visual Studio Code | 1.74.2 |
| Command Prompt (cmd) | 10.0.19045.2546 |

3.4. Programming Languages

| Programming Language | Usage |
|---|---|
| Python | Main programming language, used from start to finish: model building, training, evaluation, and deployment are all done in this single language. |

3.5. Project Timeline

| Week | Description |
|---|---|
| Week 1 | Article Collection |
| Week 2 | Article Analysis |
| Week 3 | Literature Review |
| Week 4 | Methodology |
| Week 5 | Proposal Submission |
| Week 6 | Project Pitching |
| Week 7 | Report Submission |
| Week 8 | Speech Dataset Collection |
| Week 9 | Speech Dataset Collection |
| Week 10 | Data Pre-processing |
| Week 11 | Data Pre-processing |
| Week 12 | Modelling |
| Week 13 | Modelling |
| Week 14 | Prototyping |
| Week 15 | Website Creation |
| Week 16 | Prototype Implementation into Website |
| Week 17 | Prototype Implementation into Website |
| Week 18 | Evaluation |
| Week 19 | Project Pitching |
| Week 20 | Overall Refining |
| Week 21 | Final Report Submission |

3.6. Project Development

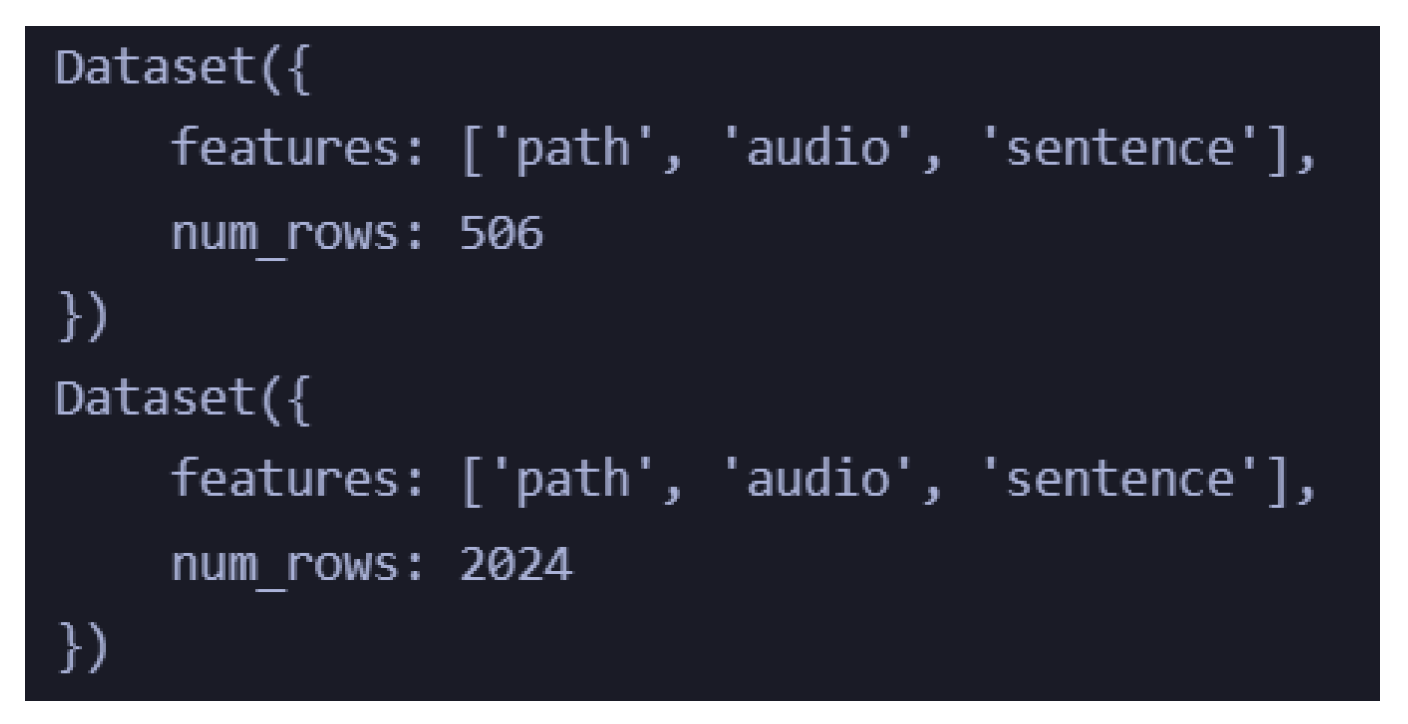

3.6.1. Data Collection

3.6.2. Data Processing

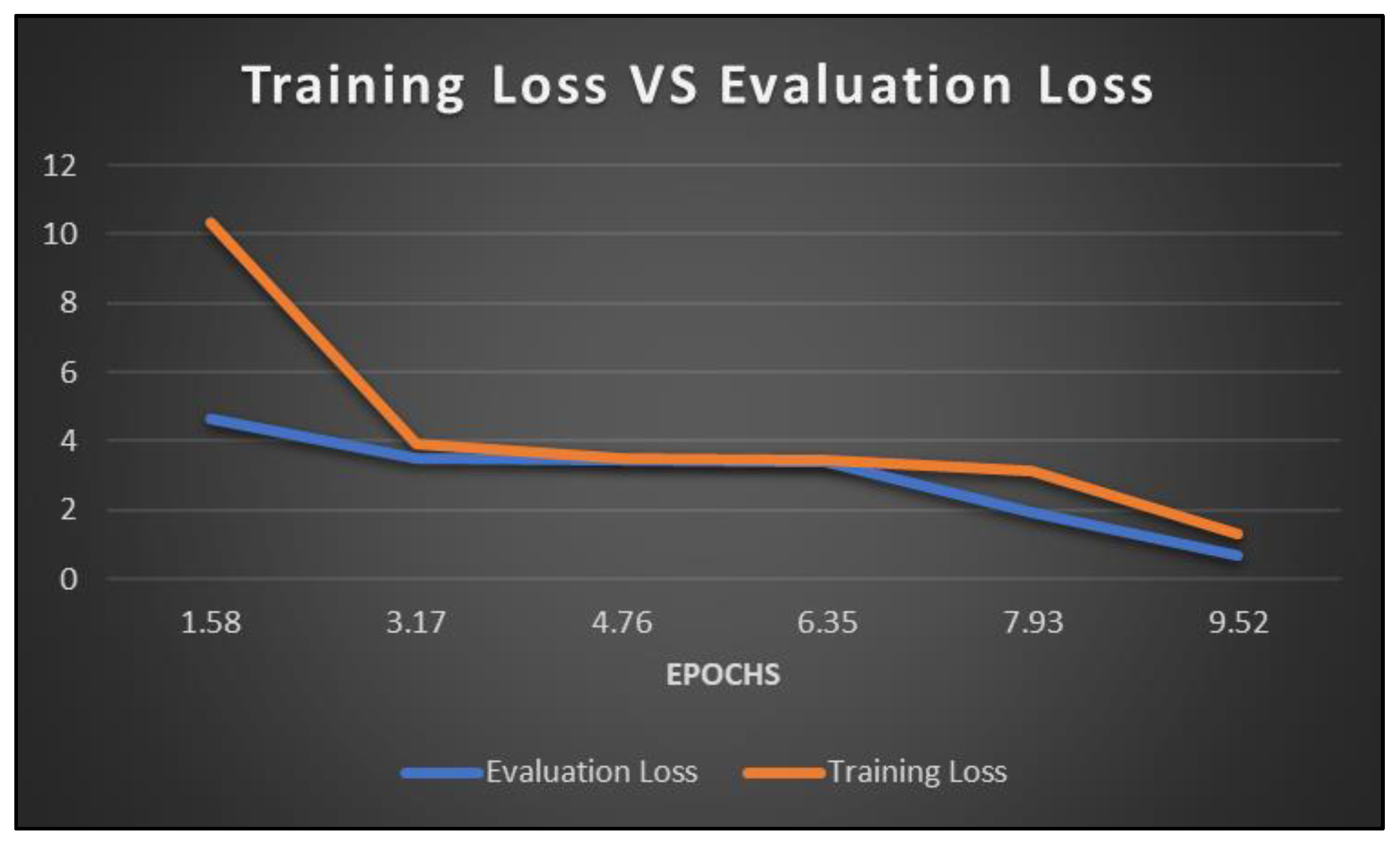

3.6.3. Modelling

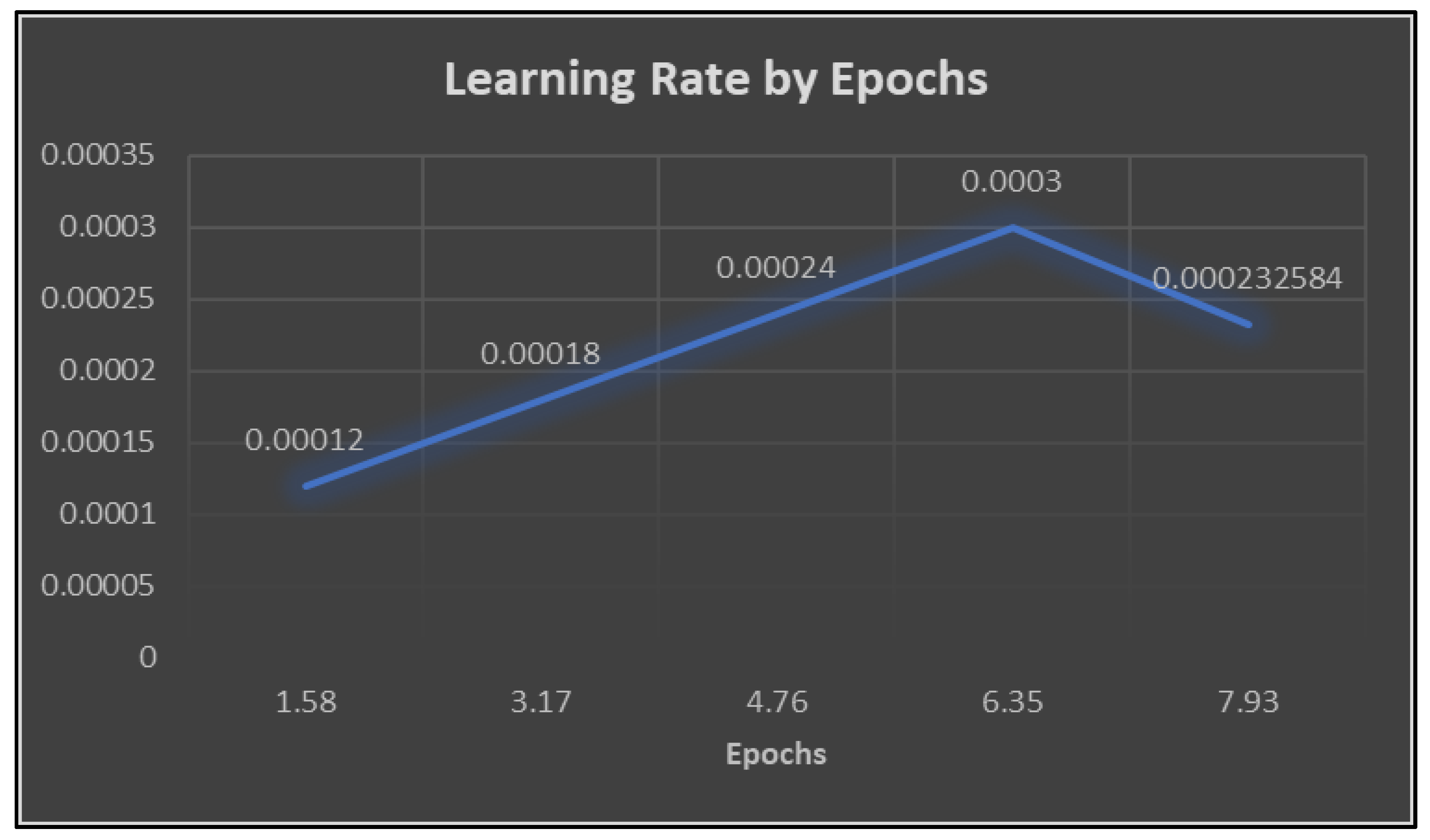

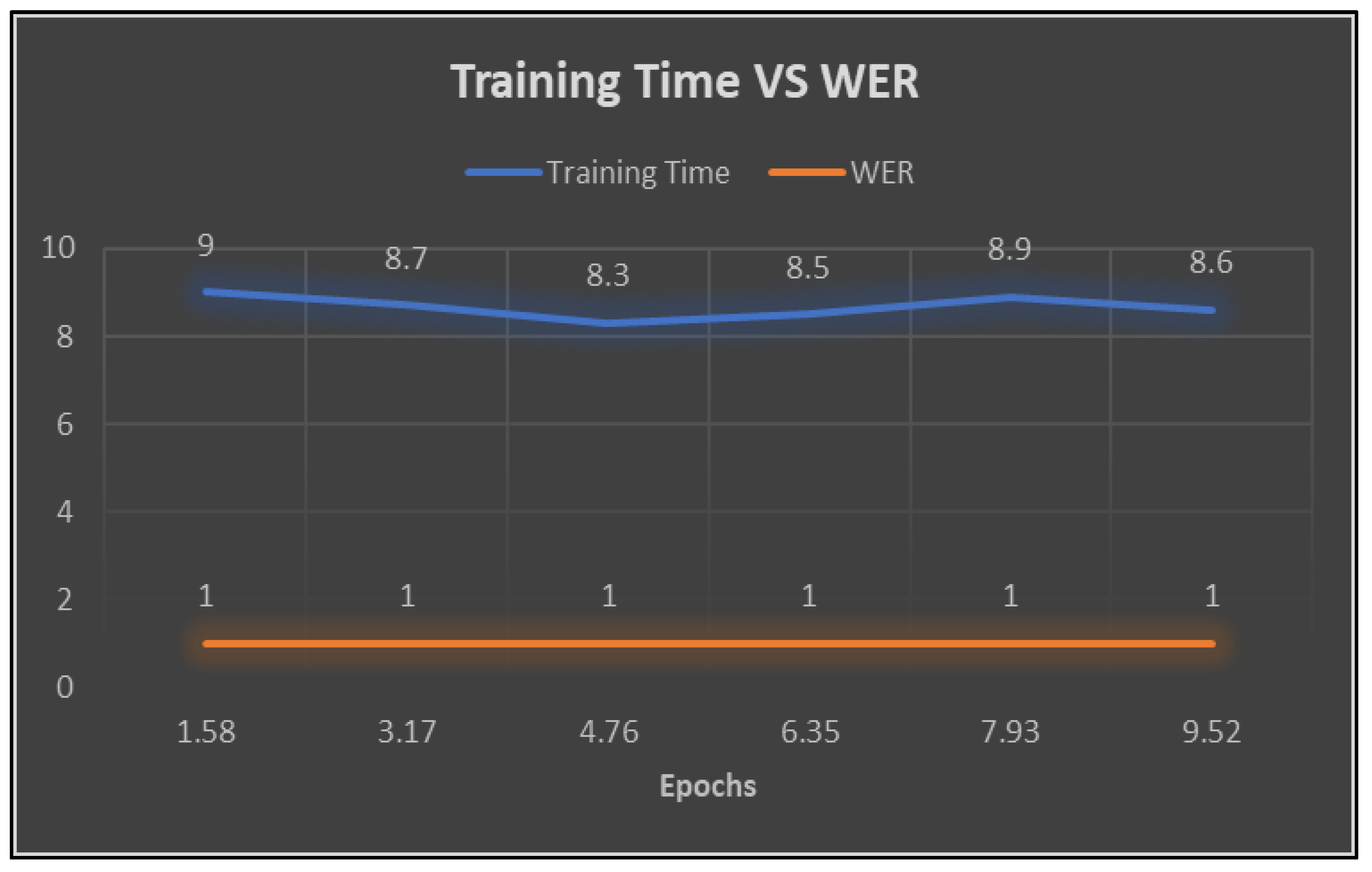

| Epoch | Training Loss | Learning Rate | Evaluation Loss | WER | Training Time |
|---|---|---|---|---|---|
| 1.58 | 10.3152 | 0.00006 | 4.660822 | 1 | 8-9 hours |
| 3.17 | 3.9002 | 0.00012 | 3.500513 | 1 | 8-9 hours |
| 4.76 | 3.4987 | 0.00018 | 3.450787 | 1 | 8-9 hours |
| 6.35 | 3.4524 | 0.00024 | 3.372131 | 1 | 8-9 hours |
| 7.93 | 3.128 | 0.0003 | 1.946949 | 1 | 8-9 hours |
| 9.52 | 1.3368 | 0.000233 | 0.684924 | 1 | 8-9 hours |
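
The Learning Rate column in the training log rises in equal increments to 0.0003 and then falls, which is the shape a linear warmup followed by a linear decay would produce. The sketch below illustrates that kind of schedule. The step counts (one log row per 100 steps, 500 warmup steps, 945 total steps) are assumptions chosen for illustration because they happen to reproduce the logged values; the source does not state the actual training configuration.

```python
def linear_warmup_decay(step: int, peak_lr: float,
                        warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if total_steps <= warmup_steps:
        raise ValueError("total_steps must be greater than warmup_steps")
    if step < warmup_steps:
        # Warmup phase: scale linearly from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    # Decay phase: scale linearly from peak_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


# Hypothetical settings, NOT taken from the source document.
PEAK_LR = 3e-4
WARMUP_STEPS = 500
TOTAL_STEPS = 945

if __name__ == "__main__":
    # One value per assumed logging step (every 100 steps).
    for step in range(100, 700, 100):
        lr = linear_warmup_decay(step, PEAK_LR, WARMUP_STEPS, TOTAL_STEPS)
        print(f"step {step}: lr = {lr:.6f}")
```

Under these assumed settings the schedule passes through 0.00006, 0.00012, 0.00018, 0.00024, and 0.0003 during warmup and drops to roughly 0.000233 one logging interval later, which is consistent with the six logged rows but does not confirm the configuration that was actually used.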

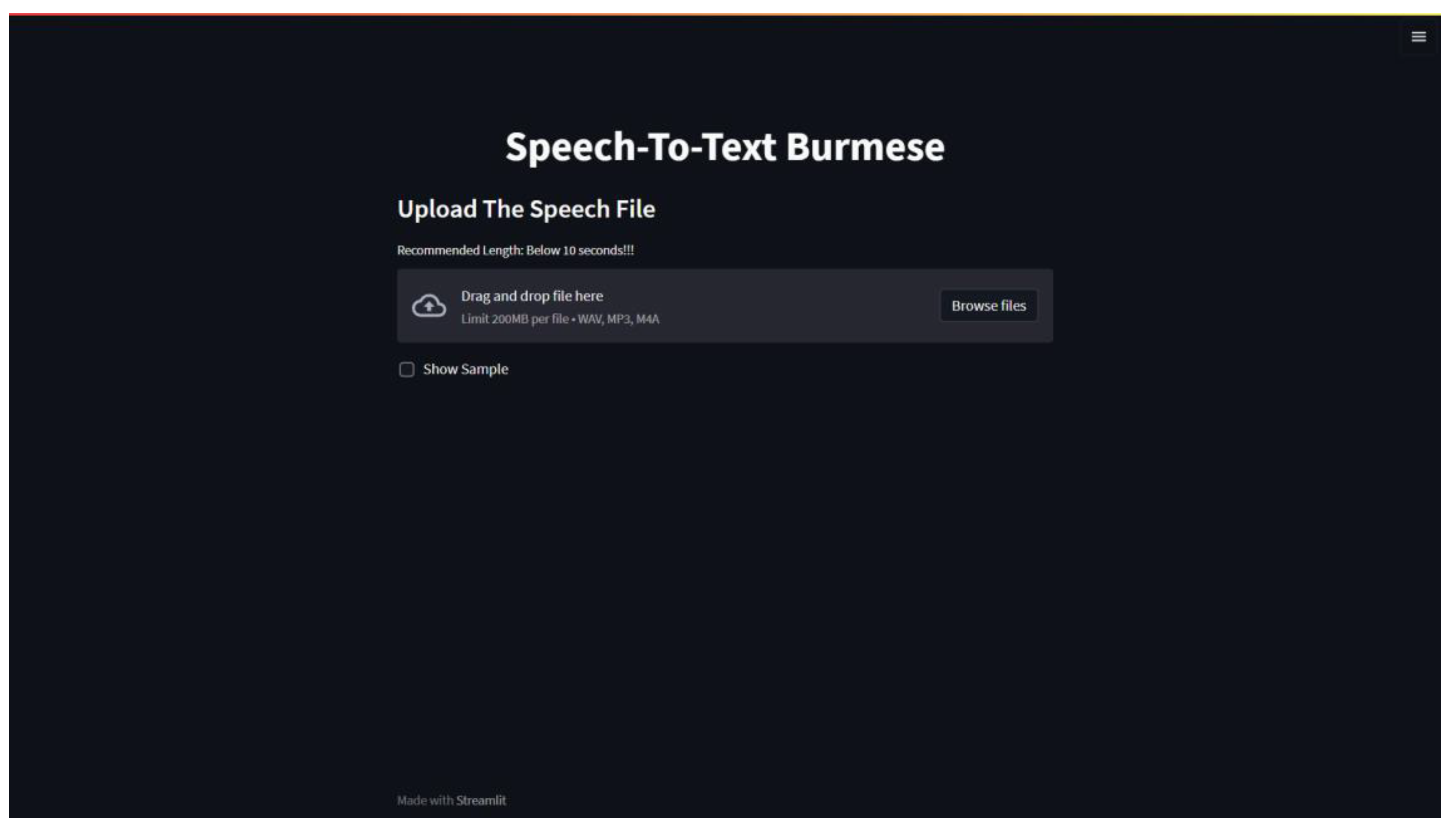

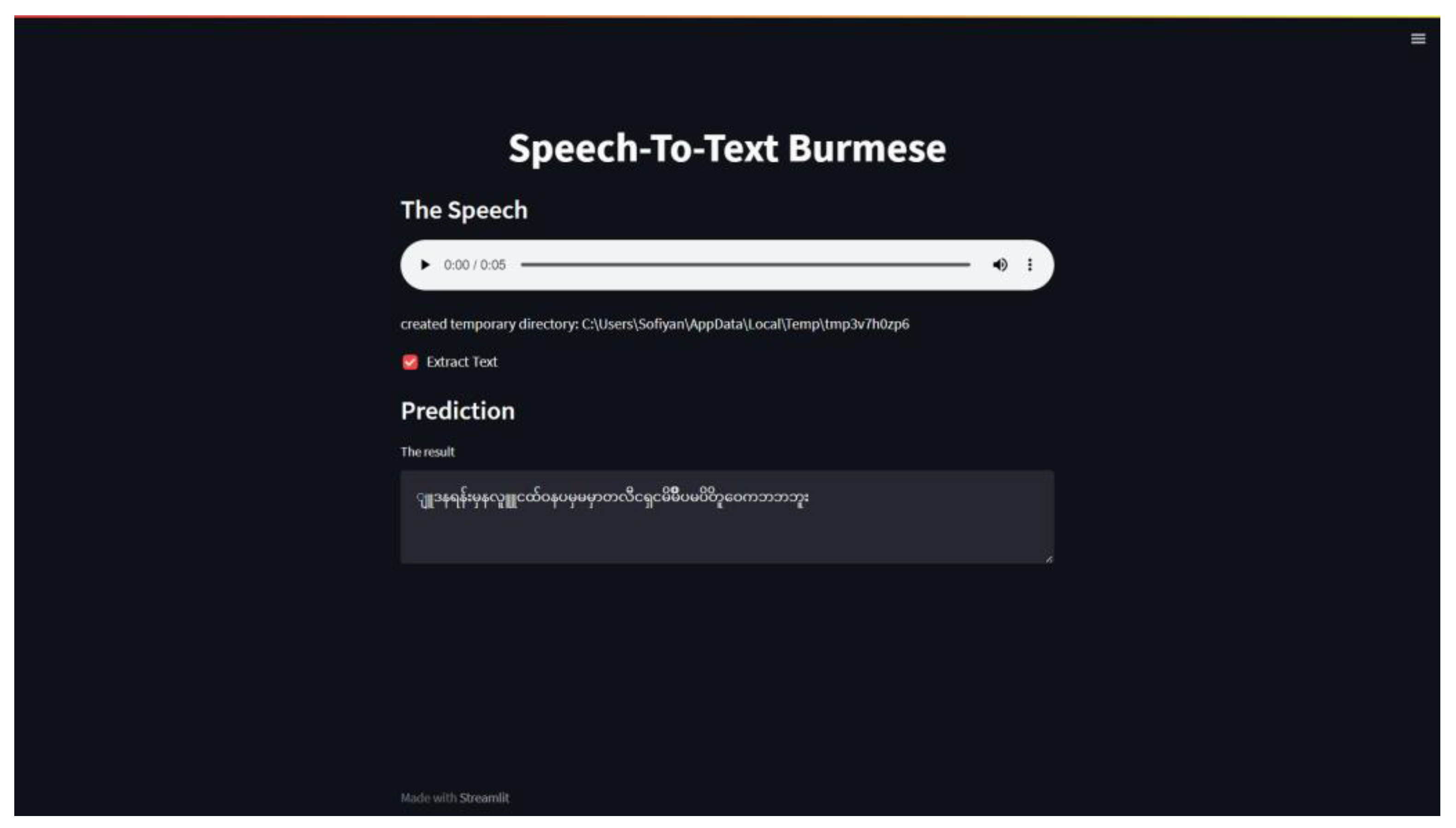
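
The training log in this section reports a word error rate (WER) for each evaluation. As a reminder of what that metric measures, the sketch below computes WER as the word-level edit distance (substitutions, deletions, and insertions) divided by the number of reference words. The function names are illustrative, and splitting on whitespace is an assumption: Burmese text is not consistently space-delimited, so a real evaluation would depend on how the transcripts were segmented.

```python
from typing import List


def edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Levenshtein distance between two token lists."""
    # prev[j] holds the distance between the first i-1 ref tokens and first j hyp tokens.
    prev = list(range(len(hyp) + 1))
    for i, ref_tok in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_tok in enumerate(hyp, start=1):
            cost = 0 if ref_tok == hyp_tok else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference token
                curr[j - 1] + 1,     # insertion of a hypothesis token
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref_tokens = reference.split()  # assumption: whitespace-delimited tokens
    hyp_tokens = hypothesis.split()
    if not ref_tokens:
        raise ValueError("reference must contain at least one token")
    return edit_distance(ref_tokens, hyp_tokens) / len(ref_tokens)


if __name__ == "__main__":
    print(word_error_rate("a b c d", "a x c"))  # one substitution, one deletion
    print(word_error_rate("a b c", ""))         # empty hypothesis
```

With this definition, a hypothesis that shares no words with the reference, including an empty transcript, scores a WER of 1 or higher, while a perfect transcript scores 0.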

3.6.4. Prototyping

3.6.5. Deployment

4. Result and Discussion

4.1. Overview

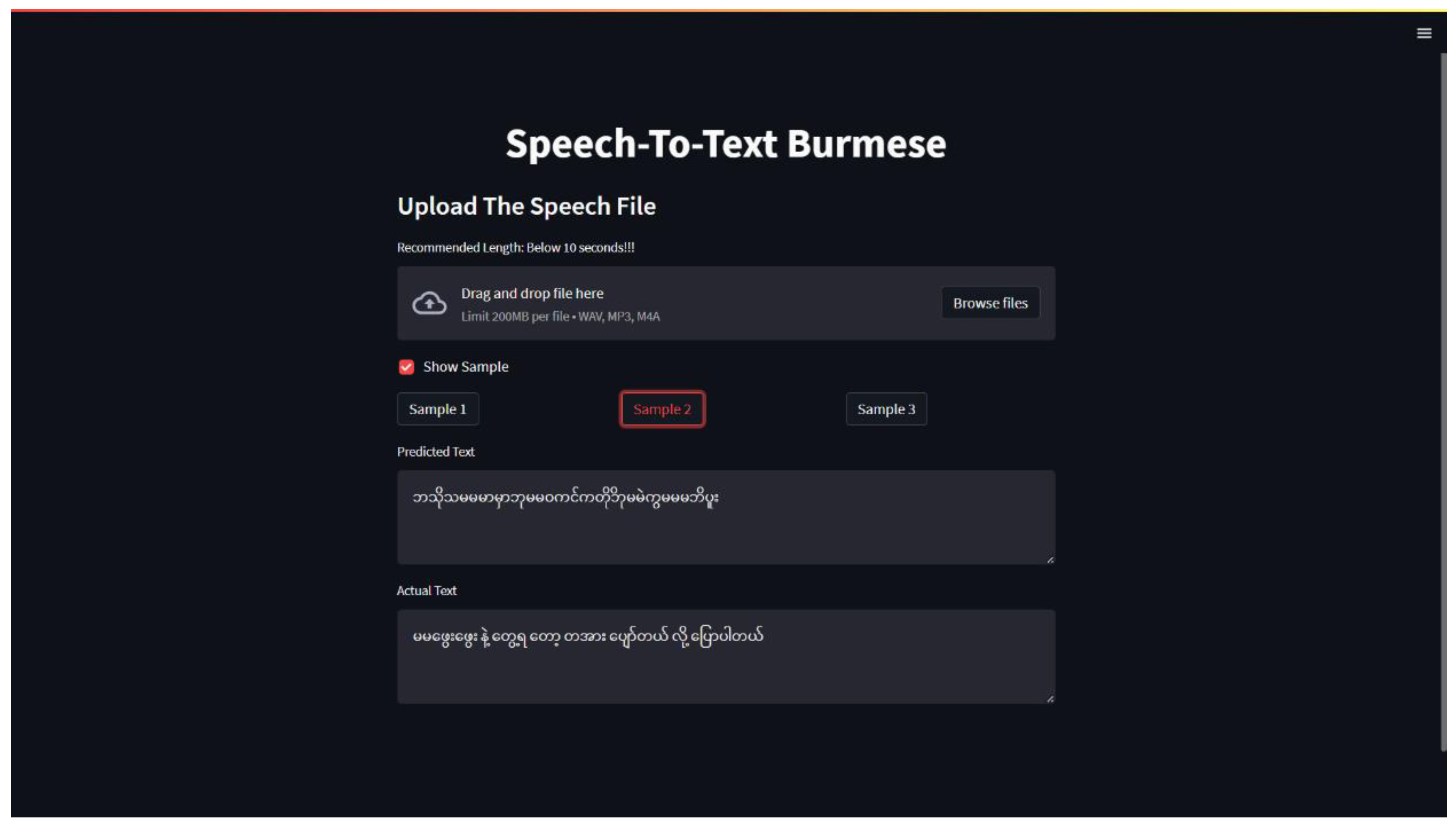

4.2. Website Deployment

5. Conclusion

5.1. Overview

5.2. Social Innovation

5.3. Future Enhancement

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).