FireSonic: Design and Implementation of an Ultrasound Sensing-Based Fire Type Identification System

A peer-reviewed article of this preprint also exists.

This version is not peer-reviewed

Abstract

Accurate and prompt determination of fire types is essential for effective firefighting and for reducing damage. However, traditional methods based on smoke detection, visual analysis, or wireless signals cannot identify fire types. This paper introduces FireSonic, an acoustic sensing system that uses commercial speakers and microphones to actively probe a fire with acoustic signals and identify indoor fire types. FireSonic first applies beamforming to enhance signal clarity and reliability, mitigating signal attenuation and distortion. To establish a reliable correlation between fire types and sound propagation, FireSonic quantifies the Heat Release Rate (HRR) of flames by analyzing the relationship between fire-heated areas and sound wave propagation delays. The system also extracts fire-related spatiotemporal features from channel measurements. Experimental results demonstrate that FireSonic attains an average fire type classification accuracy of 95.5% and a detection latency of less than 400 ms, satisfying the requirements for real-time monitoring. The system supports the formulation of targeted firefighting strategies, improving fire response effectiveness and public safety.

Subject: Computer Science and Mathematics - Signal Processing

1. Introduction

Fire is one of the most significant global threats to life and property. Every year, fire incidents such as electrical and kitchen fires cause billions of dollars in property damage and a substantial number of casualties [1]. Different types of fire exhibit distinct combustion characteristics and spread rates, and therefore require specific firefighting methods [2]. Accurately identifying the type of fire is crucial for public safety. Timely and effective determination of the fire type enables the implementation of targeted firefighting strategies, thereby minimizing fire-related damage and safeguarding lives and property. Studying different types of fires also gives a deeper understanding of their combustion mechanisms and development processes. This knowledge facilitates the development of more scientific and effective firefighting techniques and equipment [3]. It not only enhances firefighting efficiency but also provides essential theoretical foundations and practical guidelines for fire prevention.

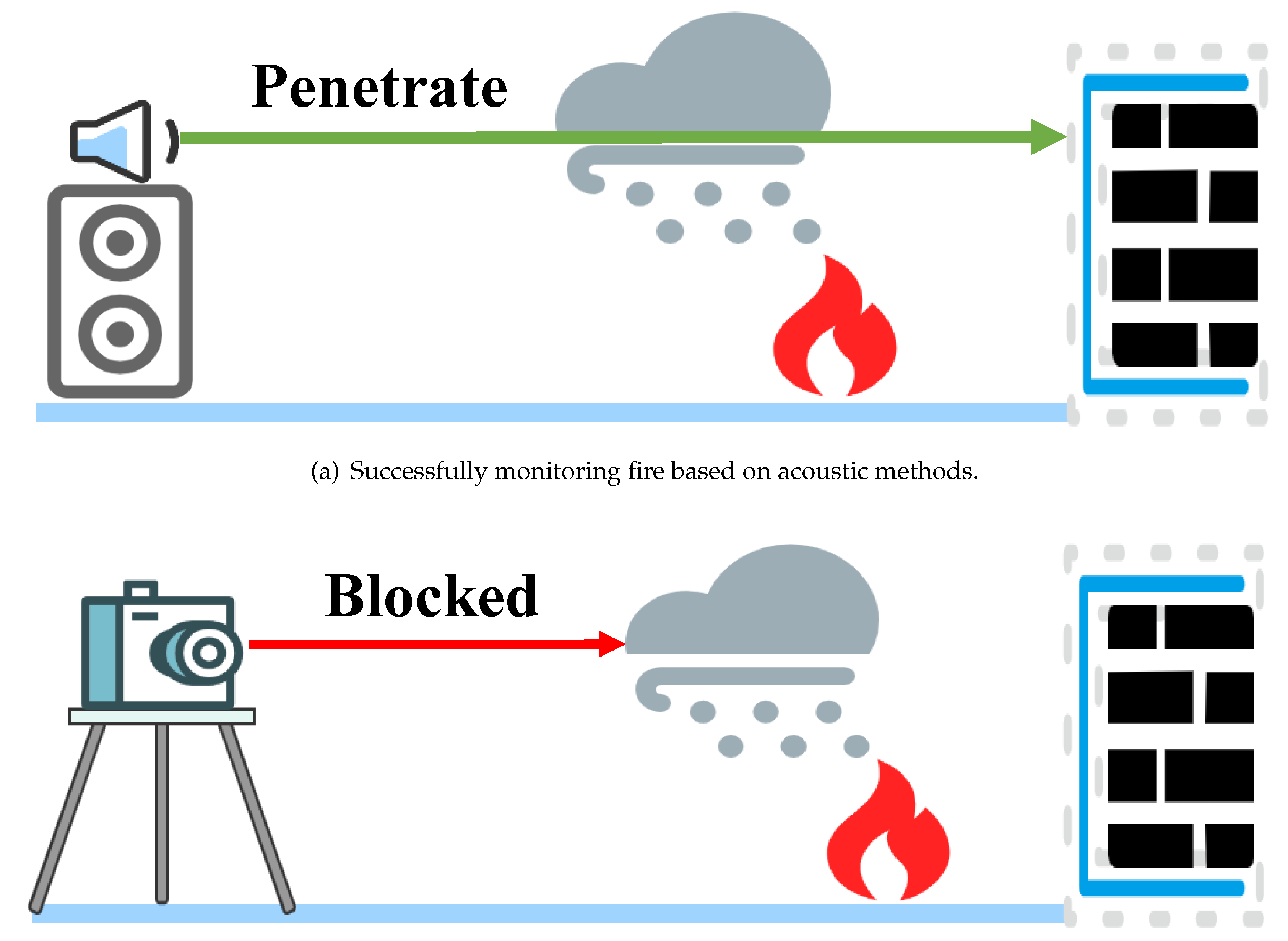

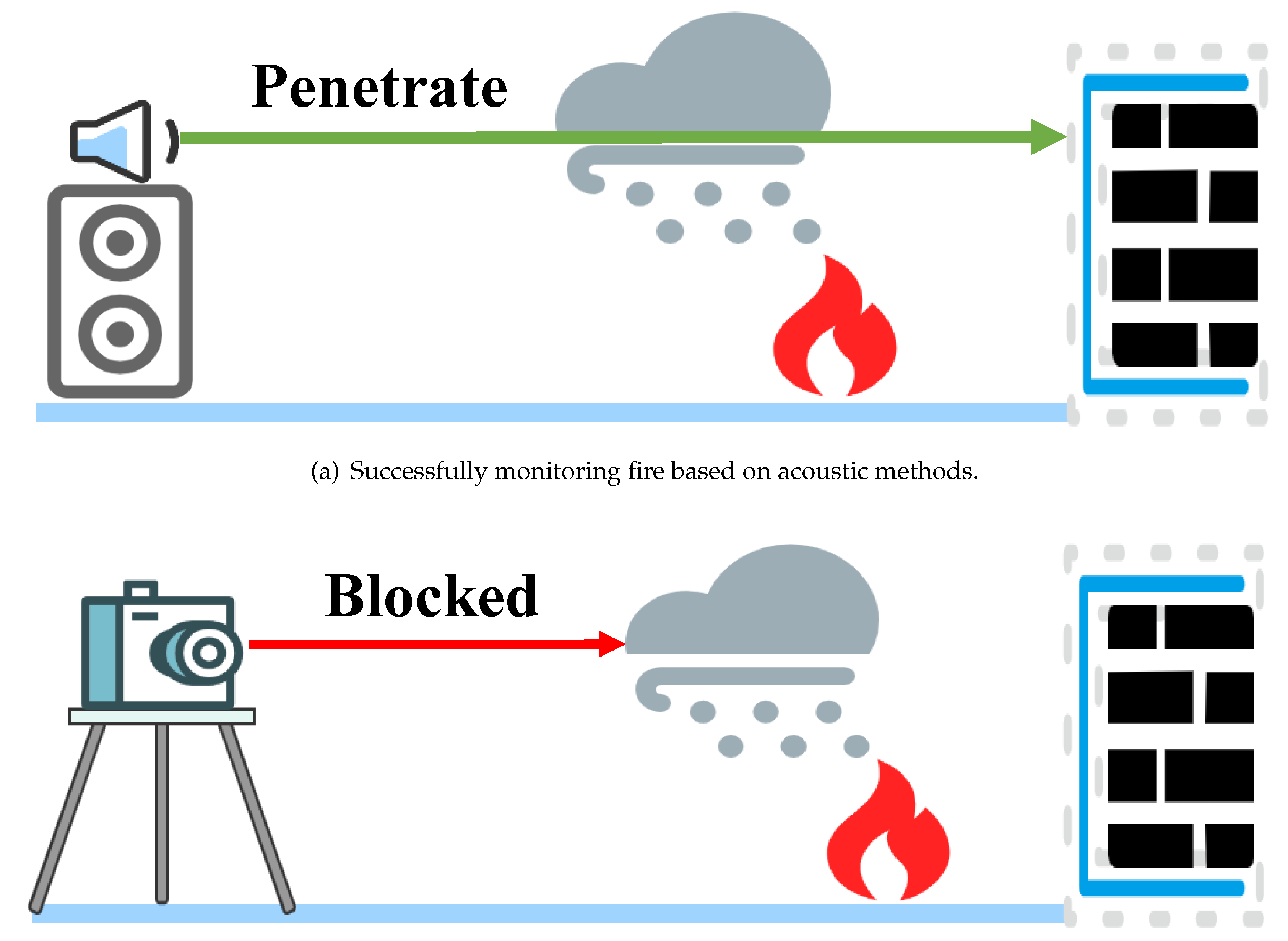

With advances in sensor technology, the development of new types of sensing systems has become possible [4,5]. Existing fire detection methods primarily rely on smoke [6,7,8,9], vision [10,11,12,13,14], radio frequency (RF) [15,16,17], and acoustics [18,19,20,21,22]. However, each of these methods has its limitations. Smoke detectors, while widely used, are prone to false alarms triggered by environmental impurities and may pose health risks due to the radioactive materials they contain [9,23]. Vision-based detection systems require an unobstructed line of sight and may raise privacy concerns. Furthermore, as illustrated in Figure 1, vision-based methods struggle when cameras are contaminated or obscured by smoke, making it difficult to gather relevant information about the fire’s severity and type. In contrast, methods using wireless signals, such as RF and acoustics, can still capture pertinent data even in smoky conditions. RF sensing has recently been employed in fire detection tasks. However, RF detection typically requires specialized and expensive equipment and may be inaccessible in remote areas [15,17].

Acoustic-based methods for determining fire types offer several advantages [24,25,26,27,28,29,30,31]. First, they provide a non-contact detection method, which avoids direct contact with the fire. Second, they enable real-time monitoring and rapid response, facilitating timely firefighting measures. Third, they adapt well to the environment and continue to work under smoke diffusion or low visibility, providing a more reliable means for fire monitoring [32,33,34,35,36]. However, the state-of-the-art acoustic sensing system in [19] has a limited sensing range, is vulnerable to everyday interference, and cannot classify fire types.

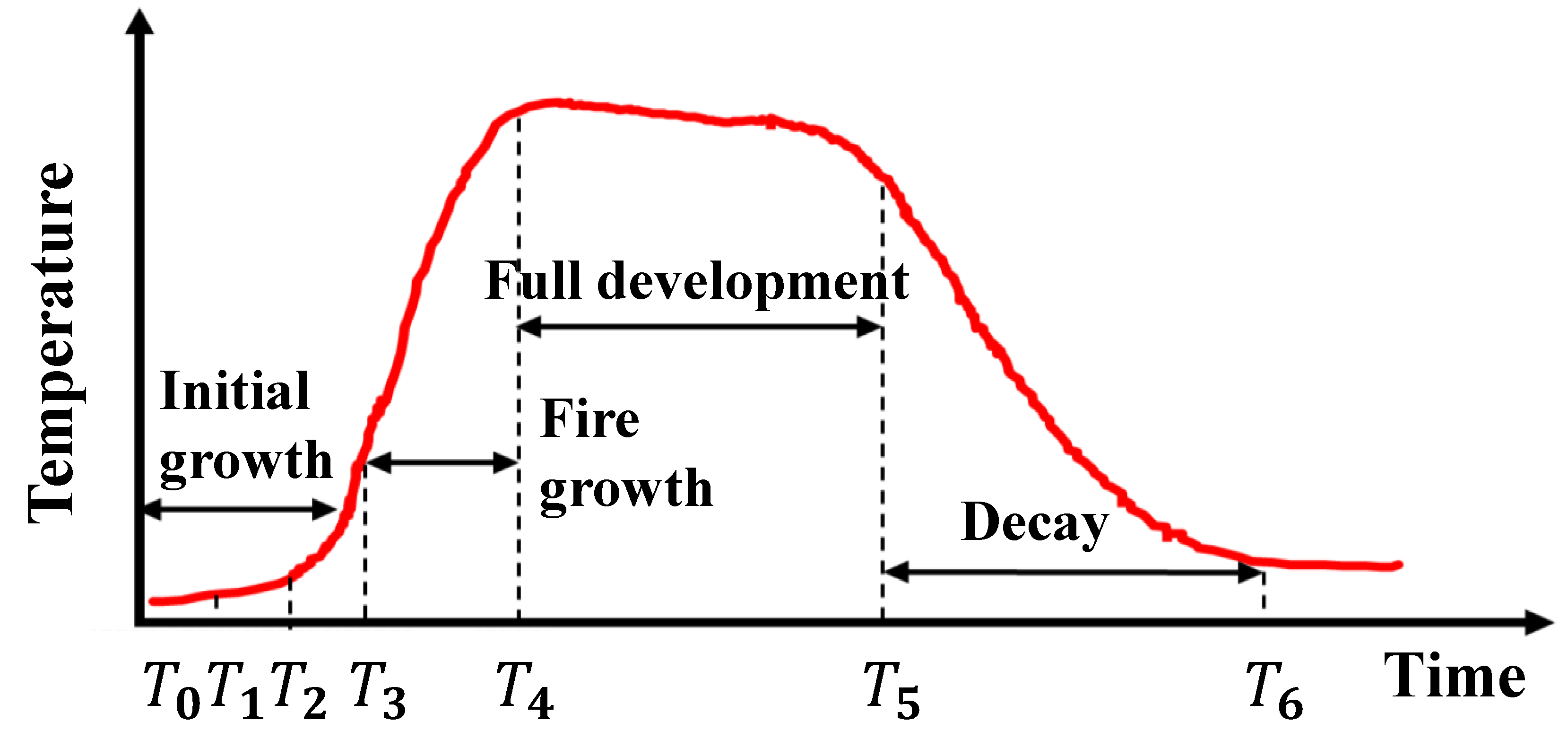

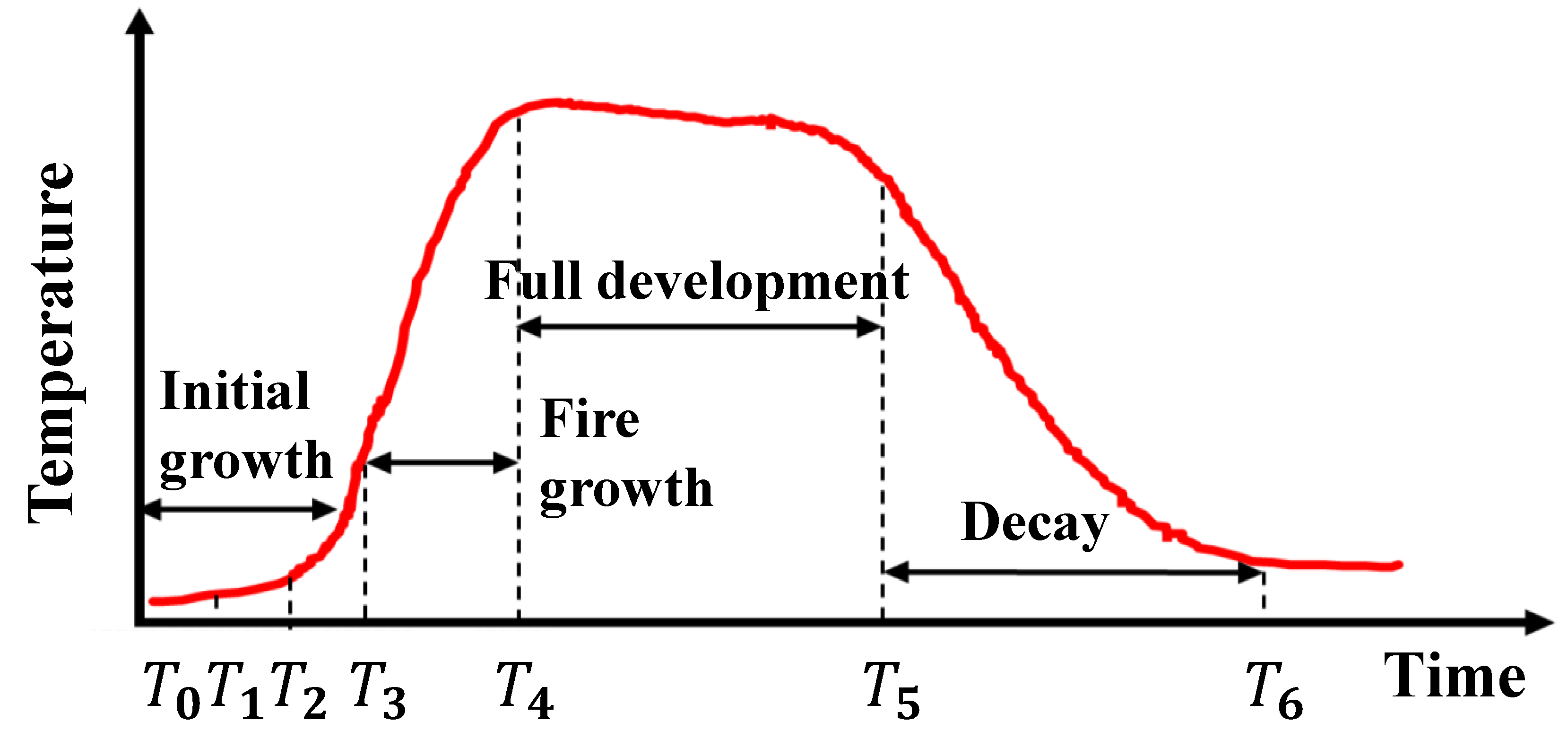

To overcome the drawbacks of the aforementioned approaches, this paper introduces FireSonic, an acoustic-based system capable of discriminating fire types, characterized by a low false alarm rate, effective signal detection in smoke-filled environments, rapid detection speed, and low cost. Unlike conventional reflective acoustic sensing technologies [24,25,26,27,28,37,38,39,40], FireSonic operates by detecting changes in sound waves as they pass through flames, thereby offering a novel approach to acoustic sensing. This method enhances the practical applicability and reliability of acoustic sensors in fire detection scenarios. Figure 2 shows the standard curve of temperature over time for combustion with sufficient oxygen, which comprises four stages: initial growth, fire growth, full development, and decay. Because different types of fires exhibit different temperature variations at these stages, the objective of this study is to develop an acoustic method that monitors the changes in this curve and thereby determines the fire type. Research on this technology may in the future also provide technical insights into aspects such as the rate of spread of forest fires [41].

However, implementing such a system is a daunting task. The first challenge is obtaining high-quality signals for monitoring fires. The fire environment poses a significant challenge due to its complexity, with factors such as smoke, flames, thermal radiation, and combustion by-products interfering with sound wave propagation, resulting in signal attenuation and distortion. In addition, diverse materials like building materials and furniture affect sound waves differently, causing signal reflection, refraction, and scattering, further complicating accurate signal interpretation.

To address the first challenge, we utilize beamforming technology, adjusting the phase and amplitude of multiple sensors to concentrate on signals from specific directions, thereby reducing interference and noise from other directions. By aligning and combining signals from these sensors, beamforming enhances target signals, improving signal-to-noise ratio, clarity, and reliability. In addition, we employ spatial filtering techniques to mitigate multipath effects caused by sound waves propagating through different media in a fire, thereby minimizing signal distortion and enhancing detection accuracy.

The second challenge lies in establishing a correlation between fire types and acoustic features. Traditional passive acoustic methods are influenced by the varying acoustic signal characteristics generated by different types of fire. Factors such as the type of burning material, flame size, and shape result in diverse spectral and amplitude features of sound waves, making it difficult to accurately match acoustic signals with specific fire types. Moreover, while the latest active acoustic sensing methods can detect the occurrence of fires, the inability of flames to reflect sound waves poses a challenge in associating active acoustic signals with fire types using conventional reflection approaches.

To address the second challenge, rather than directly associating fire types with sound waves, we propose a heat-release-based monitoring scheme that quantifies the correlation between the region of fire heat release and sound propagation delays. This quantification is based on the fact that sound speed increases with temperature, and that larger flames generate broader high-temperature areas. We discern fire types based on real-time changes in heat production during the combustion process.

Contributions. In a nutshell, our main contributions are summarized as follows:

- We address a shortcoming of current fire detection systems by adding a critical capability to fire monitoring: fire type identification. To the best of our knowledge, FireSonic is the first system that leverages acoustic signals for determining fire types.

- We employ beamforming technology to enhance signal quality by reducing interference and noise, while our flame HRR monitoring scheme utilizes acoustic signals to quantify the correlation between fire heat release regions and sound propagation delays, facilitating fire type determination and accuracy enhancement.

- We implement a prototype of FireSonic using low-cost commodity acoustic devices. Our experiments indicate that FireSonic achieves an overall accuracy of 95.5% in determining fire types.

2. Background

2.1. Heat Release Rate

The relationship between the Heat Release Rate (HRR) and acoustic sensing can be derived through a series of physical principles. The relationship between sound speed $c$ and temperature $T$ in air can be expressed by the following formula [42]:

$$c = c_0 \sqrt{\frac{T}{T_0}}$$

where $c_0$ is the sound speed at a reference temperature $T_0$ (usually 293 K, which is about 20 °C, where the sound speed is approximately 343 m/s).

HRR is a measure of the energy released by a fire source per unit time, typically expressed in kilowatts (kW). It can be indirectly calculated by measuring the rate of oxygen consumption by the flames. To establish the relationship between HRR and acoustic sensing, we need to consider how thermal energy affects the propagation of sound waves. As the fire releases heat, the surrounding air heats up, leading to an increase in the sound speed in that area. This change in sound speed can be perceived through changes in the propagation time of sound waves detected by sensors.
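To make the link between heating and propagation time concrete, the sketch below evaluates the ideal-gas relation $c = c_0\sqrt{T/T_0}$ and the travel time over a path that is partly occupied by hot air. The path length, the heated length, and the hot-region temperature are hypothetical values chosen for illustration only; they are not measurements from this work.

```python
import math

C0 = 343.0   # sound speed (m/s) at the reference temperature
T0 = 293.0   # reference temperature (K), about 20 °C


def sound_speed(temp_k: float) -> float:
    """Sound speed in air at absolute temperature temp_k (ideal-gas approximation)."""
    return C0 * math.sqrt(temp_k / T0)


def travel_time(path_m: float, hot_m: float, hot_temp_k: float) -> float:
    """Travel time (s) over path_m metres, of which hot_m metres are at hot_temp_k."""
    cold_m = path_m - hot_m
    return cold_m / sound_speed(T0) + hot_m / sound_speed(hot_temp_k)


# Hypothetical scenario: a 3 m path, 0.3 m of which is heated to 800 K.
baseline = travel_time(3.0, 0.0, T0)
heated = travel_time(3.0, 0.3, 800.0)
delay_shift_ms = (baseline - heated) * 1e3

print(f"sound speed at 800 K: {sound_speed(800.0):.1f} m/s")
print(f"signal arrives earlier by {delay_shift_ms:.3f} ms")
```

A wider or hotter region shortens the travel time further, which is the effect the delay-based monitoring relies on.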

2.2. Channel Impulse Response

As signals traverse through the air, they undergo various paths due to reflections from objects in the environment, posing challenges for accurately capturing fire-related information. Consequently, the receiver picks up multiple versions of the signal, each with its own unique delay and attenuation, a phenomenon known as multipath propagation, which can lead to signal distortion and errors in fire monitoring tasks [43,44,45,46,47,48,49]. To address this issue, we utilize Channel Impulse Response (CIR) to segment these multipath signals into discrete segments or taps, enabling more precise identification of signals influenced by fire incidents.

To capture the CIR, a predefined frame is transmitted to probe the channel, subsequently received by the receiver. In the case of a complex baseband signal, measuring the CIR involves computing the cyclic cross-correlation between the transmitted and received baseband signals as:

$$h_t(\tau) = \frac{1}{N} \sum_{n=0}^{N-1} s(n)\, r_t^{*}\big((n+\tau) \bmod N\big)$$

where $h_t(\tau)$ is the CIR measurement, $s(n)$ signifies the transmitted complex baseband signal, $r_t^{*}(n)$ denotes the conjugate of the signal $r_t(n)$ at the receiver, and $\tau$ corresponds to the multipath delays. $N$ is the length of $s(n)$. Collected over successive frames, $h_t(\tau)$ acts as a matrix illustrating the attenuation of signals with varying delays $\tau$ over time $t$.
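As a minimal sketch of CIR estimation by cyclic cross-correlation, the example below probes a simulated two-path channel with a root Zadoff-Chu sequence and recovers the path delays. The channel (its delays and gains) is hypothetical, and the correlation conjugates the received signal as described in the text; since only tap magnitudes are inspected, the conjugation convention does not affect the result here.

```python
import cmath


def zadoff_chu(u: int, n_zc: int) -> list[complex]:
    """Root Zadoff-Chu sequence of odd length n_zc with root index u."""
    return [cmath.exp(-1j * cmath.pi * u * n * (n + 1) / n_zc) for n in range(n_zc)]


def simulate_channel(tx, paths):
    """Cyclic multipath channel: each path is a (delay_in_taps, gain) pair."""
    n = len(tx)
    return [sum(g * tx[(i - d) % n] for d, g in paths) for i in range(n)]


def cyclic_cir(tx, rx):
    """Cyclic cross-correlation of the probe with the conjugated received frame."""
    n = len(tx)
    return [
        sum(tx[i] * rx[(i + tau) % n].conjugate() for i in range(n)) / n
        for tau in range(n)
    ]


tx = zadoff_chu(u=64, n_zc=127)
paths = [(5, 1.0), (12, 0.4)]          # hypothetical direct path and one echo
rx = simulate_channel(tx, paths)

magnitudes = [abs(h) for h in cyclic_cir(tx, rx)]
strongest = sorted(range(len(magnitudes)), key=magnitudes.__getitem__, reverse=True)[:2]
print("taps with the most energy:", sorted(strongest))
```

Because the periodic autocorrelation of a Zadoff-Chu sequence vanishes at every non-zero lag, each tap of the estimate isolates the path with the matching delay.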

2.3. Beamforming

In complex multipath channels, directing signal energy towards specific points of interest minimizes noise and interference, thereby enhancing the target signal. Beamforming involves steering sensor arrays’ beams to predefined angular ranges to detect signals from various directions [50]. This technique is crucial when signals originate from multiple directions and require sensing. Specifically, beamforming selectively amplifies or suppresses incoming signals by controlling the phase and amplitude of sensor array elements. To generate a beam pattern, signal amplification is focused in a desired direction while attenuating noise and interference from other directions, achieved by applying a set of weights to each sensor’s signal. These weights are optimized based on the Signal-to-Noise Ratio (SNR) or desired radiation pattern.

3. System Design

3.1. Overview

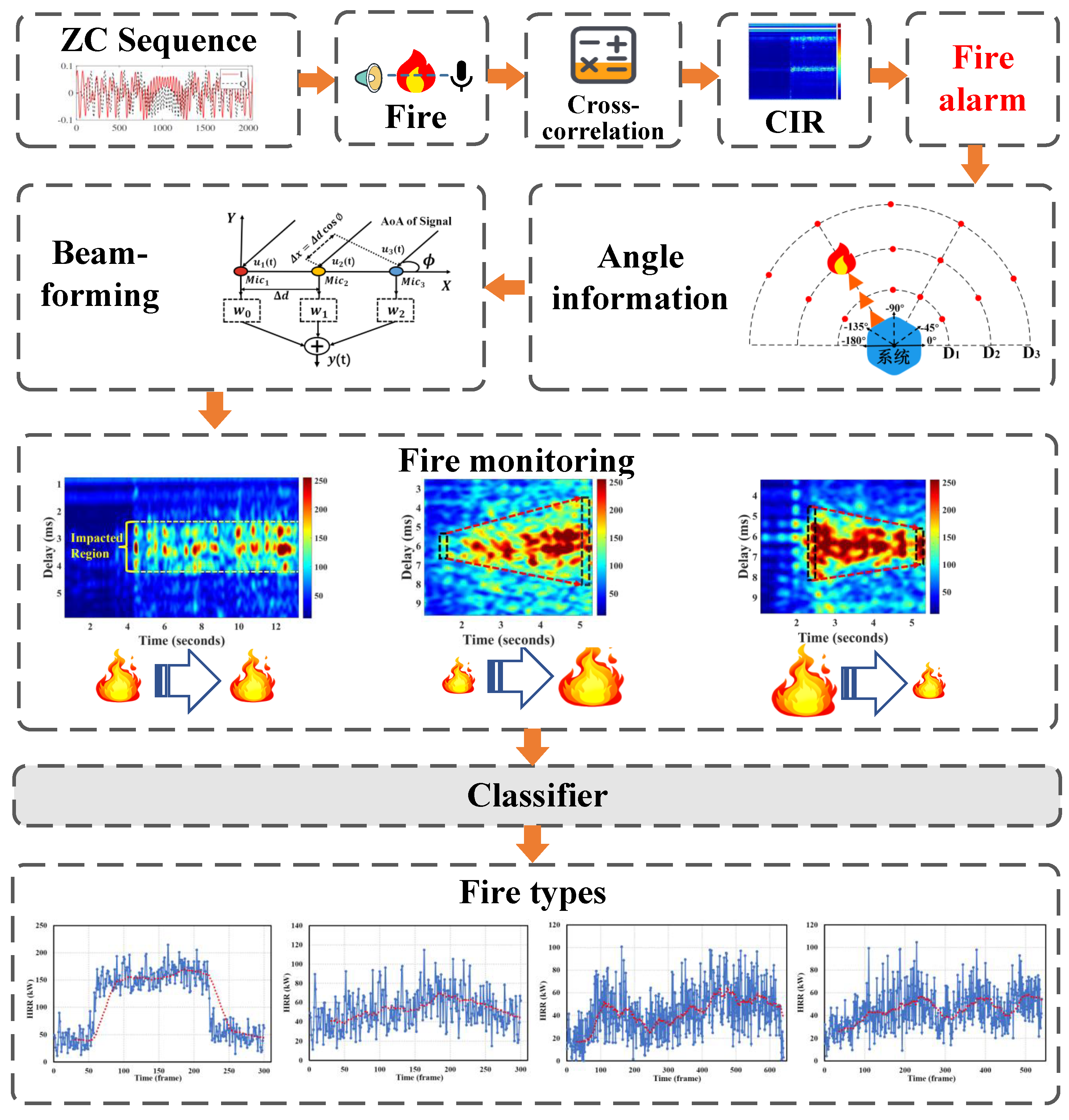

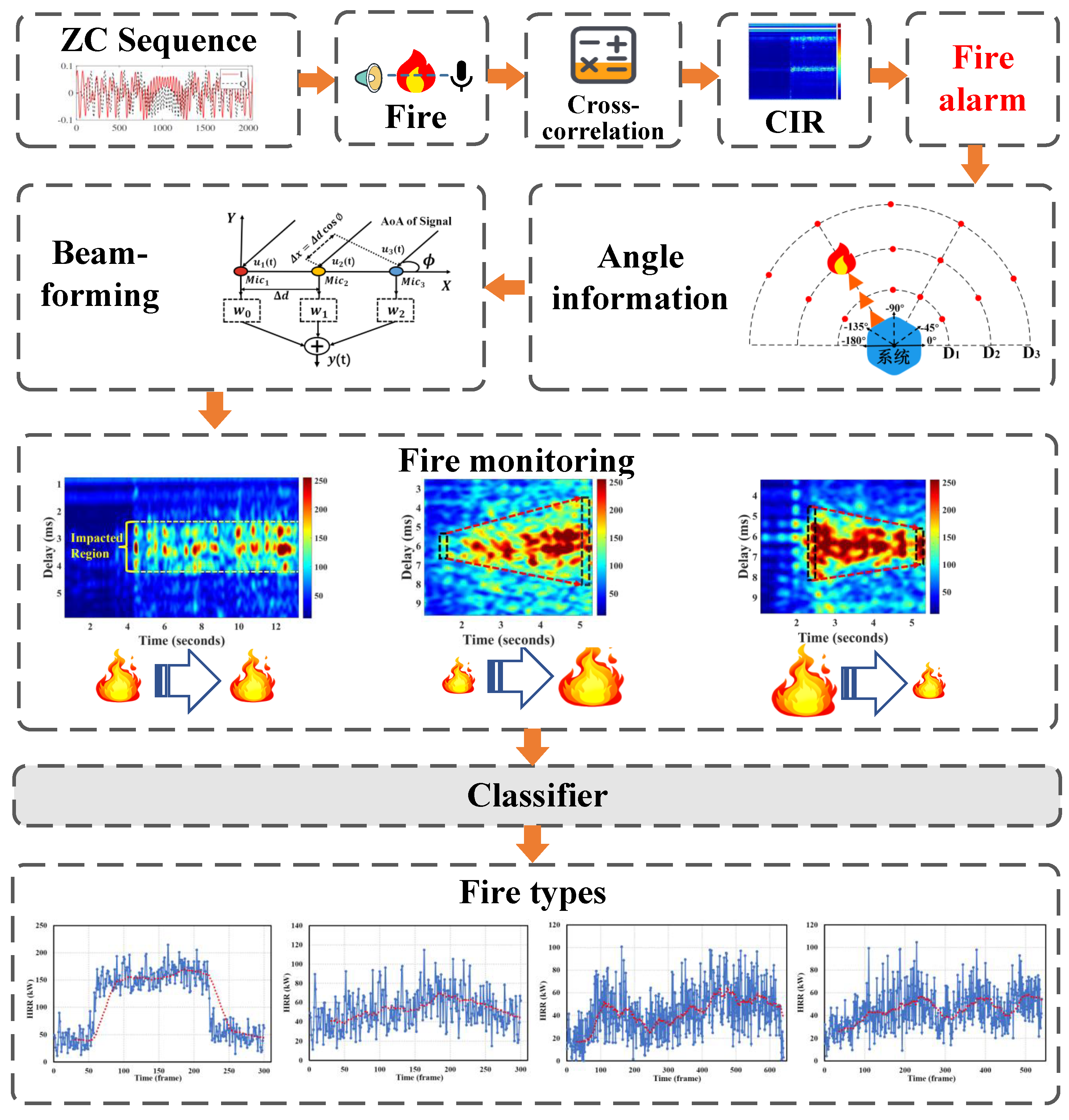

Figure 3 gives an overview of the system. The CIR estimator sequentially measures the transmitted and received Zadoff-Chu (ZC) sequences. Specifically, a ZC sequence is used as the probing signal for its good autocorrelation characteristics [48], while a cross-correlation-based approach is adopted for estimating the CIR affected by fire combustion. Based on the captured CIR, the system assesses the occurrence of fire by considering the differences in sound velocity induced by temperature changes and the acoustic energy absorption effects, and it triggers alarms as needed. Subsequently, beamforming is applied to pinpoint the direction of the fire, producing high-quality thermal maps of fire types. Finally, based on the captured patterns of heat release rate features, a classifier is used for fire type identification.

3.2. Transceiver

The high-frequency signal generation module utilizes a root Zadoff-Chu sequence as the transmission signal. The root ZC sequence has a length of 128 samples with an auto-correlation coefficient using u=64 and Nzc=127. Different settings of u and Nzc parameters are suited for signal output requirements under various bandwidths. The settings here are designed to meet the limitations of human hearing and hardware sampling rates. After frequency domain interpolation, the sequence extends to 2048 points, achieving a detection range of 7 meters, and is up-converted to the ultrasonic frequency band. The signal transmission and reception module stores the high-frequency signal in a Raspberry Pi system equipped with controllable speakers and controls playback via a Python program. The signals are received by a microphone array with a sampling rate of 48 kHz.
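The probing frame described above can be sketched as follows. The values u=64, Nzc=127, the 2048-point frame, and the 48 kHz sampling rate come from the text; the carrier frequency `FC` and the use of spectral zero-padding as the frequency domain interpolation are assumptions made for this illustration, since the text specifies neither.

```python
import cmath

FS = 48_000      # sampling rate (Hz), from the text
N_ZC = 127       # ZC length parameter, from the text
U = 64           # ZC root index, from the text
N_FRAME = 2048   # frame length after interpolation, from the text
FC = 20_000      # ASSUMED carrier frequency (Hz); not stated in the text


def zadoff_chu(u, n_zc):
    """Root Zadoff-Chu sequence of odd length n_zc with root index u."""
    return [cmath.exp(-1j * cmath.pi * u * n * (n + 1) / n_zc) for n in range(n_zc)]


def dft(x):
    """Naive discrete Fourier transform (adequate for a 127-point sequence)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
            for m in range(n)]


def interpolate(x, n_out):
    """Interpolate by zero-padding the centre of the spectrum (assumed method)."""
    n_in = len(x)
    spec = dft(x)
    half = (n_in + 1) // 2   # bins 0..half-1 hold DC and positive frequencies
    # Keep positive-frequency bins in place; move negative ones to the top end.
    bins = [(m if m < half else m - n_in + n_out, spec[m]) for m in range(n_in)]
    return [sum(v * cmath.exp(2j * cmath.pi * m * k / n_out) for m, v in bins) / n_in
            for k in range(n_out)]


def upconvert(baseband, fc, fs):
    """Shift the complex baseband frame to a real passband signal centred on fc."""
    return [(s * cmath.exp(2j * cmath.pi * fc * k / fs)).real
            for k, s in enumerate(baseband)]


frame = upconvert(interpolate(zadoff_chu(U, N_ZC), N_FRAME), FC, FS)
frames_per_second = FS / N_FRAME       # about 23 frames per second
bandwidth_hz = FS * N_ZC / N_FRAME     # occupied bandwidth around FC
print(len(frame), round(frames_per_second, 1), round(bandwidth_hz))
```

Under these assumptions the frame occupies roughly 3 kHz around the carrier, and a 2048-sample frame at 48 kHz yields the frame rate of about 23 frames per second.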

3.3. Signal Enhancement Based on Beamforming

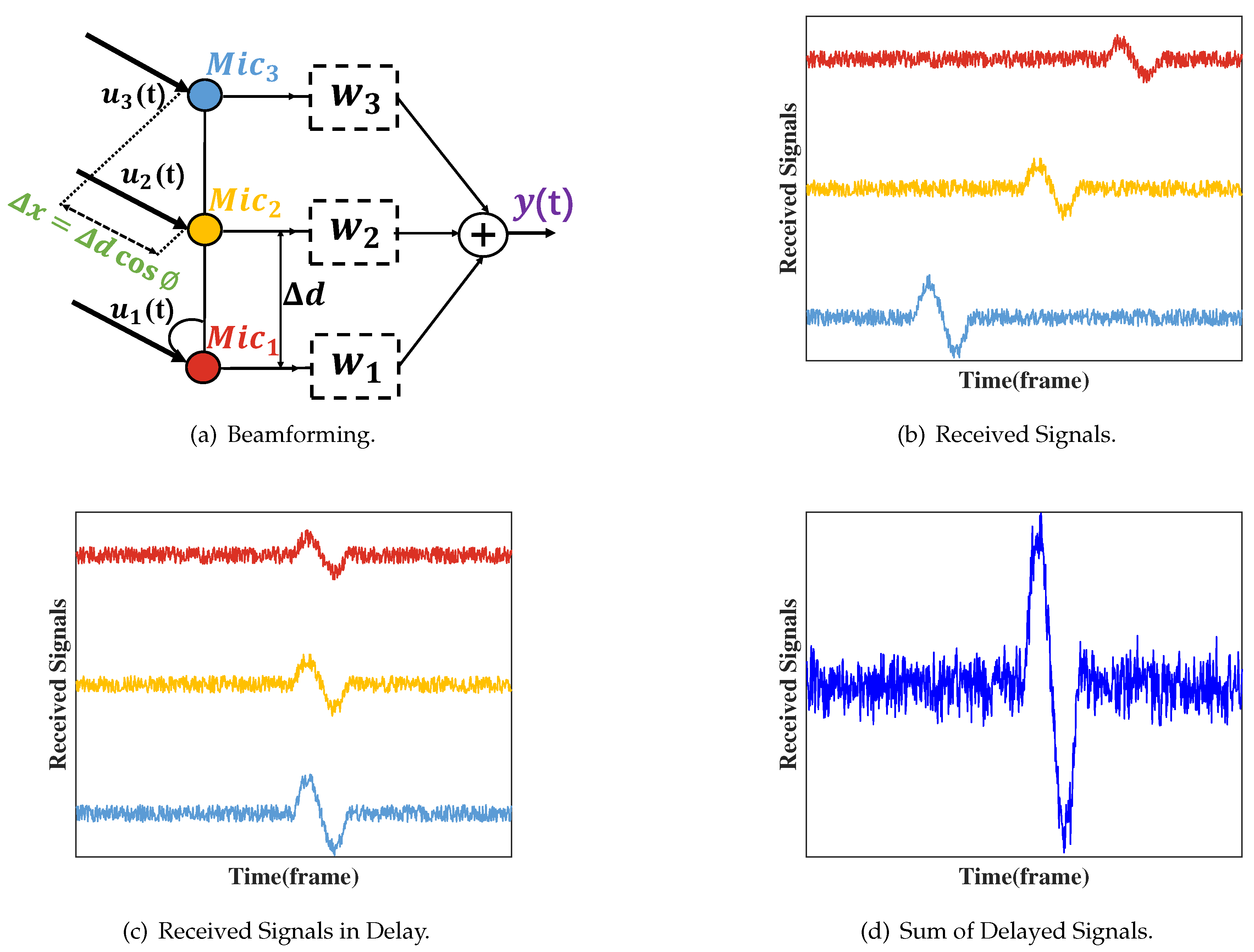

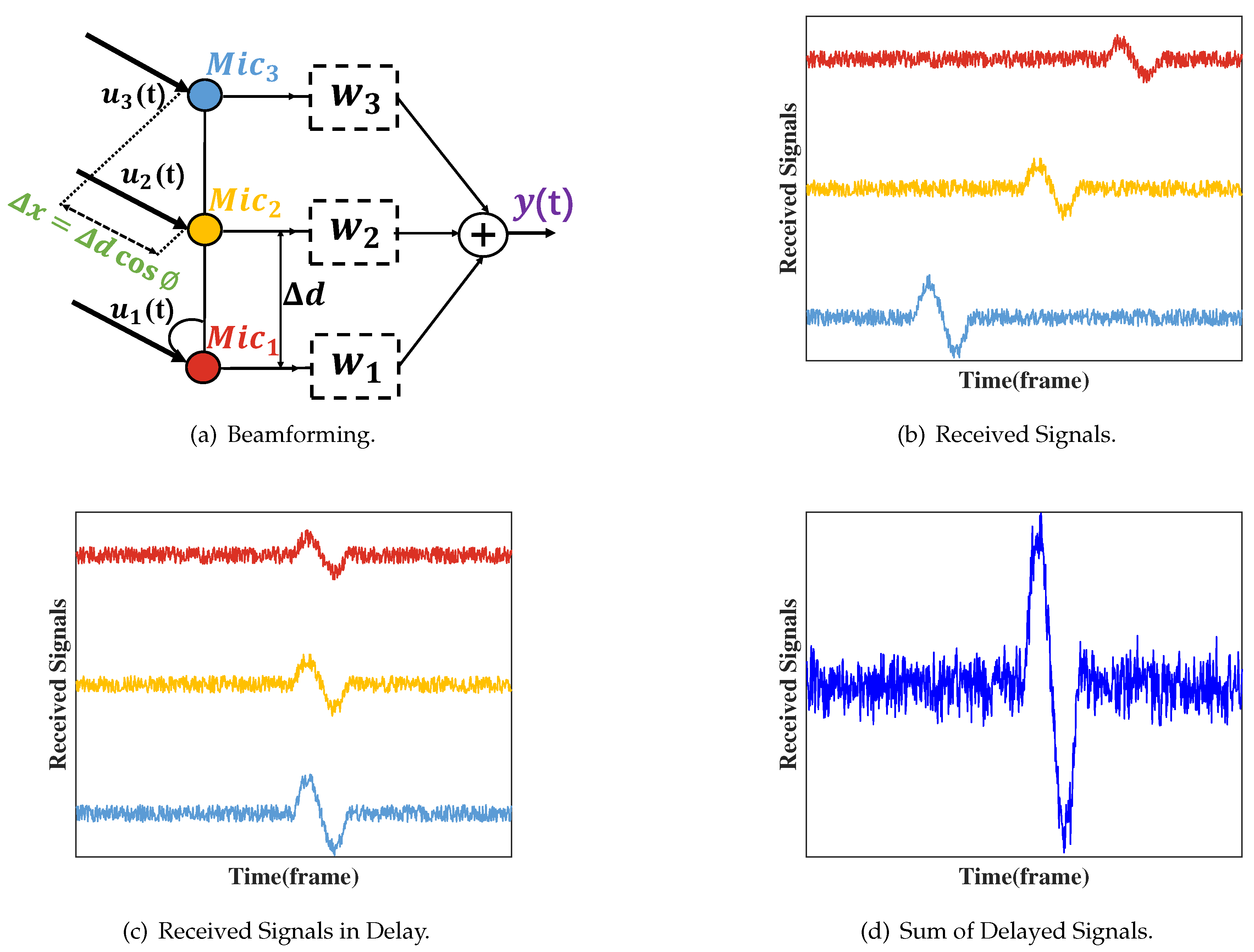

In fire monitoring, beamforming technology plays a crucial role. In complex fire environments, signals often undergo multiple paths due to various obstacles, resulting in signal attenuation and interference [50]. To address this issue, we can utilize beamforming technology to steer the receiver’s beam towards specific directions, thereby maximizing the capture of target signals and minimizing noise and interference. As illustrated in Figure 4, in a microphone array with Mic1, Mic2, and Mic3, each microphone receives signals with different delays. By using the signal from Mic2 as a reference and adjusting the phases of Mic1 and Mic3, signals can be aligned, thus concentrating the combined signal energy. By controlling the phase and amplitude of the signals, beamforming technology can selectively enhance signals in the direction of the fire source, thereby improving the sensitivity and accuracy of the fire monitoring system.
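The alignment step can be illustrated with a time-domain delay-and-sum sketch for three microphones, using Mic2 as the reference. The arrival lags, the test tone, and the noise level are hypothetical, and the lags are assumed to be known whole numbers of samples; a practical system would estimate them and might apply phase shifts instead.

```python
import math
import random


def shift(signal, lag):
    """Circularly delay a signal by lag samples (a negative lag advances it)."""
    n = len(signal)
    return [signal[(i - lag) % n] for i in range(n)]


def delay_and_sum(channels, lags):
    """Undo each channel's lag relative to the reference mic, then average."""
    aligned = [shift(ch, -lag) for ch, lag in zip(channels, lags)]
    return [sum(samples) / len(aligned) for samples in zip(*aligned)]


def power(x):
    """Mean squared value of a sequence."""
    return sum(v * v for v in x) / len(x)


random.seed(0)
n = 480
source = [math.sin(2 * math.pi * 20 * i / n) for i in range(n)]
lags = [-3, 0, 3]   # hypothetical lags (samples) of Mic1, Mic2, Mic3 relative to Mic2

# Each microphone hears a shifted copy of the source plus independent noise.
noisy = [[s + random.gauss(0.0, 1.0) for s in shift(source, lag)] for lag in lags]

beamformed = delay_and_sum(noisy, lags)
noise_single = power([a - b for a, b in zip(noisy[1], source)])
noise_beam = power([a - b for a, b in zip(beamformed, source)])
print(f"residual noise power: Mic2 alone {noise_single:.2f}, beamformed {noise_beam:.2f}")
```

After alignment the source adds coherently while the independent noise averages down, which is why the combined signal is cleaner than any single microphone.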

3.4. Mining Fire Related Information in CIR

So far, we have applied beamforming to enhance signals from the direction of the fire while suppressing interference from other directions. Next, we focus on extracting information related to the extent of the fire’s impact. As sound waves travel through the high-temperature region, their propagation speed increases. The complex chemical and physical reactions during the fire make the temperature distribution and shape of the high-temperature region uneven, resulting in a non-uniform spatial temperature field. To characterize this distribution, we conduct the following study.

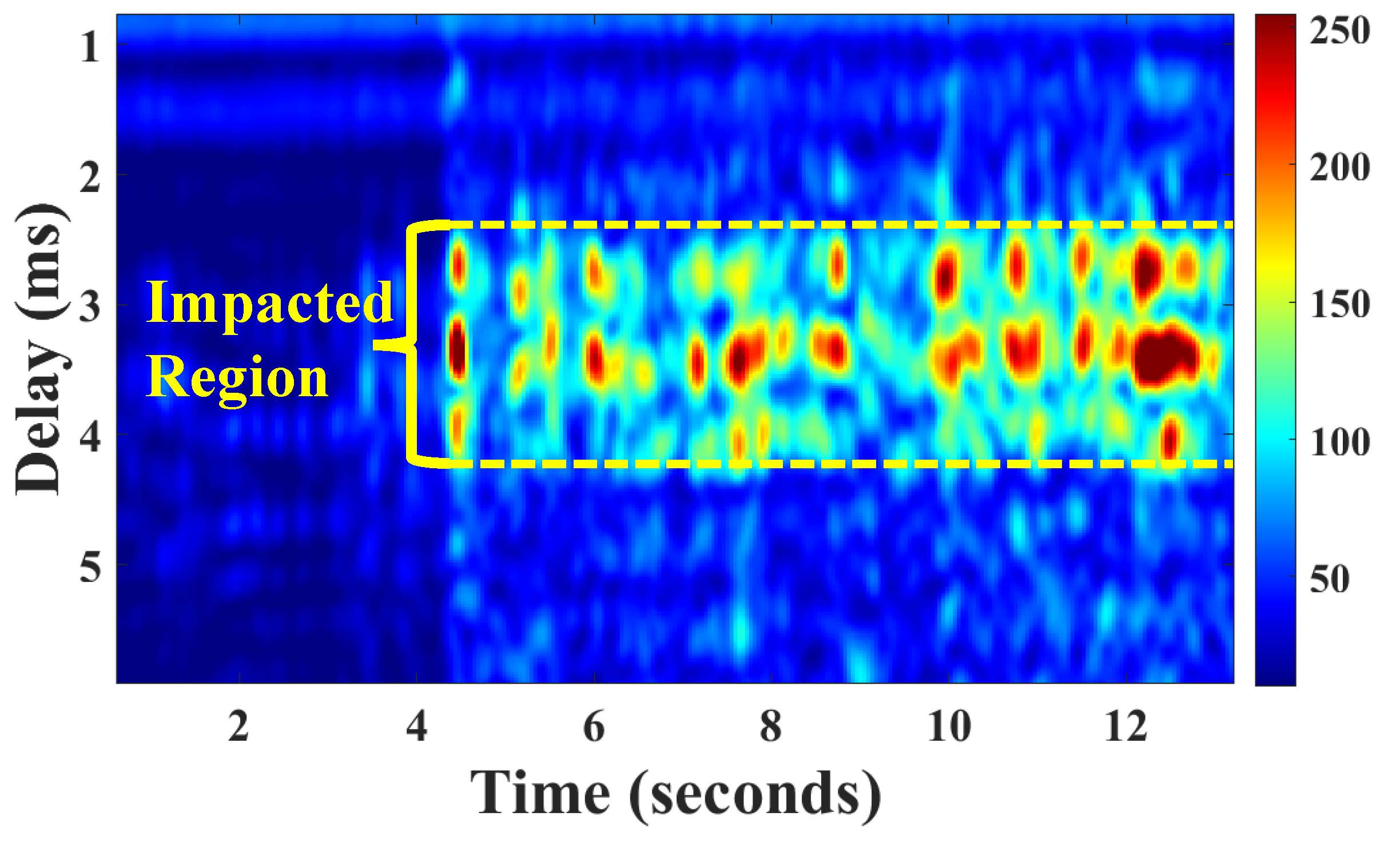

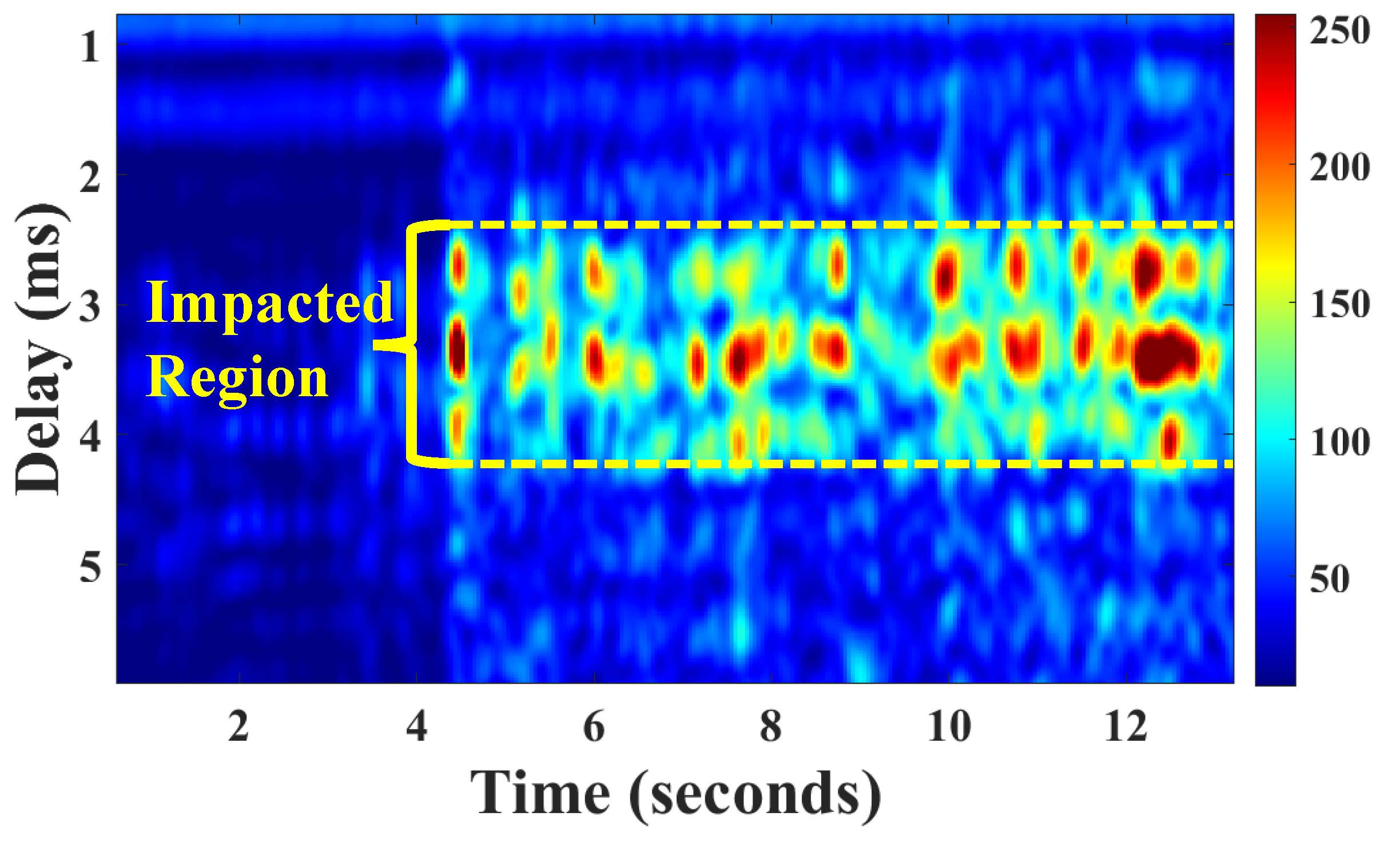

The number of taps is directly related to the spatial propagation distance of the ultrasonic signal. A smaller number of taps indicates a shorter signal propagation distance, while a larger number of taps indicates a longer propagation distance. Therefore, the sensing range $s$ can be determined by the number of taps $L$, specifically expressed as $s = L \times d$, where $d = v / f_s$ represents the distance corresponding to each tap, i.e., the propagation distance of the signal between two adjacent sampling points. $f_s$ is the sampling rate of the signal, and $v$ is the sound speed. Specifically, we conduct CIR measurements frame by frame, where each frame represents a single CIR trace. For ease of visualization, we normalize all signal energy values across the taps to a range between 0 and 255 and represent them using a CIR heatmap, as depicted in Figure 5. The horizontal axis represents time in frames, with hardware devices capturing 23 frames of data per second at a sampling rate of 48 kHz. The vertical axis denotes the sensing range, indicated by acoustic signal delays.
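The two quantities behind the heatmap axes can be sketched as below: the distance and delay represented by one tap, and the scaling of tap energies to the 0 to 255 range. Min-max scaling over the whole heatmap is an assumption, since the text does not say whether normalization is global or per frame, and the sample energies are made up.

```python
FS = 48_000   # sampling rate (Hz), from the text
V = 343.0     # sound speed (m/s) at about 20 °C


def tap_distance(v: float = V, fs: float = FS) -> float:
    """Propagation distance (m) covered between two adjacent samples."""
    return v / fs


def tap_delay_ms(tap_index: int, fs: float = FS) -> float:
    """Delay (ms) represented by a given tap index."""
    return tap_index / fs * 1e3


def normalize_heatmap(frames):
    """Scale all tap energies to integers in 0..255 (assumed global min-max)."""
    lo = min(min(f) for f in frames)
    hi = max(max(f) for f in frames)
    span = (hi - lo) or 1.0   # avoid division by zero for a flat heatmap
    return [[round(255 * (e - lo) / span) for e in f] for f in frames]


# Hypothetical tap energies for two frames of three taps each.
heat = normalize_heatmap([[0.0, 1.0, 2.0], [4.0, 3.0, 2.0]])
print(f"one tap spans {tap_distance() * 1e3:.2f} mm")
print(heat)
```

At 48 kHz one tap corresponds to about 7 mm of propagation and about 0.02 ms of delay, so a delay difference of a few milliseconds spans on the order of a hundred taps or more.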

4. Results

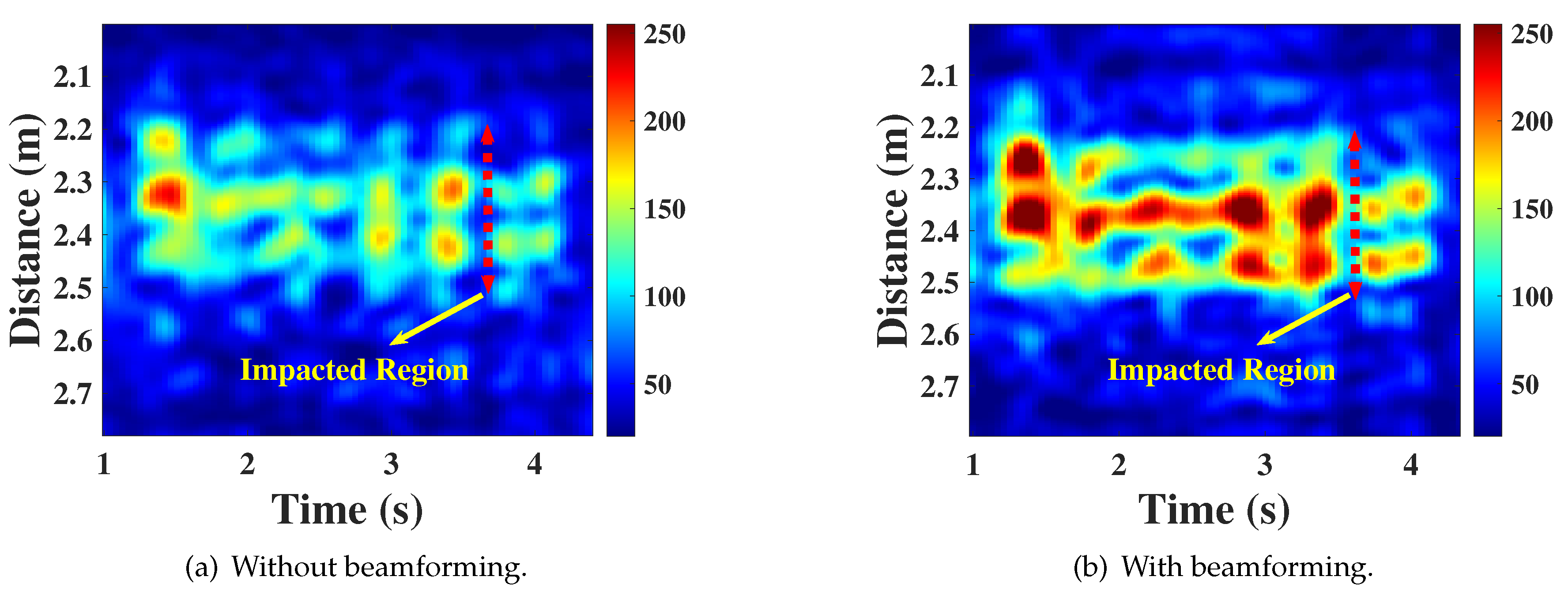

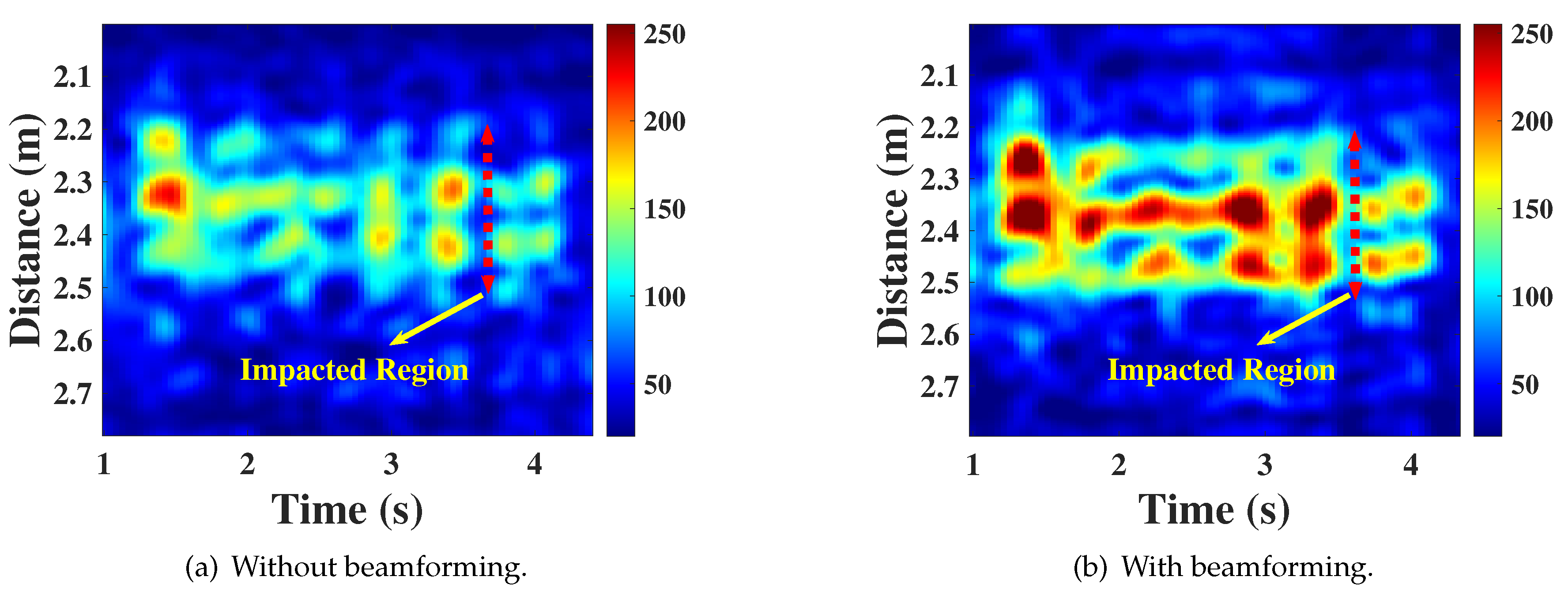

4.1. CIR Measurements before and after Using Beamforming

Figure 6(a) and Figure 6(b) illustrate the results of CIR measurements for an impacted region of 0.3 meters in diameter before and after using beamforming, respectively. It can be observed that the thermal image obtained after beamforming is more accurate. This is because beamforming technology allows for a more comprehensive capture of the characteristics of the affected region, thereby enhancing the accuracy and reliability of the measurements. We empirically set a threshold value, Th (e.g., Th=150), to select taps truly affected by high temperatures, i.e., those taps whose signal energy values exceed the threshold. Subsequently, for the selected taps, we sum their energy values and record it as the instantaneous Heat Release Rate (HRR) for that frame. It is noteworthy that during the fire combustion process, the HRR closely aligns with the temperature variation trend caused by the fire [51,52]. Therefore, we utilize HRR to describe the combustion patterns of different fire types.
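The thresholding step above amounts to a few lines of code. In this sketch each frame is a list of normalized tap energies (0 to 255), the threshold Th=150 is the example value from the text, and the sample frames are made up. The result is in normalized energy units; how these map to kilowatts is not described here, so no conversion is attempted.

```python
TH = 150  # empirical threshold from the text (normalized energy units)


def instantaneous_hrr(frame, th=TH):
    """Sum the energies of the taps whose value exceeds the threshold."""
    return sum(energy for energy in frame if energy > th)


# Hypothetical normalized CIR frames (one list of tap energies per frame).
frames = [
    [10, 160, 200, 149, 151],
    [0, 0, 150, 20, 30],
    [255, 180, 90, 170, 60],
]
hrr_curve = [instantaneous_hrr(f) for f in frames]
print(hrr_curve)
```

Applying the function frame by frame yields one value per frame, which is the raw curve used to describe combustion patterns.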

4.2. Fire Type Identification

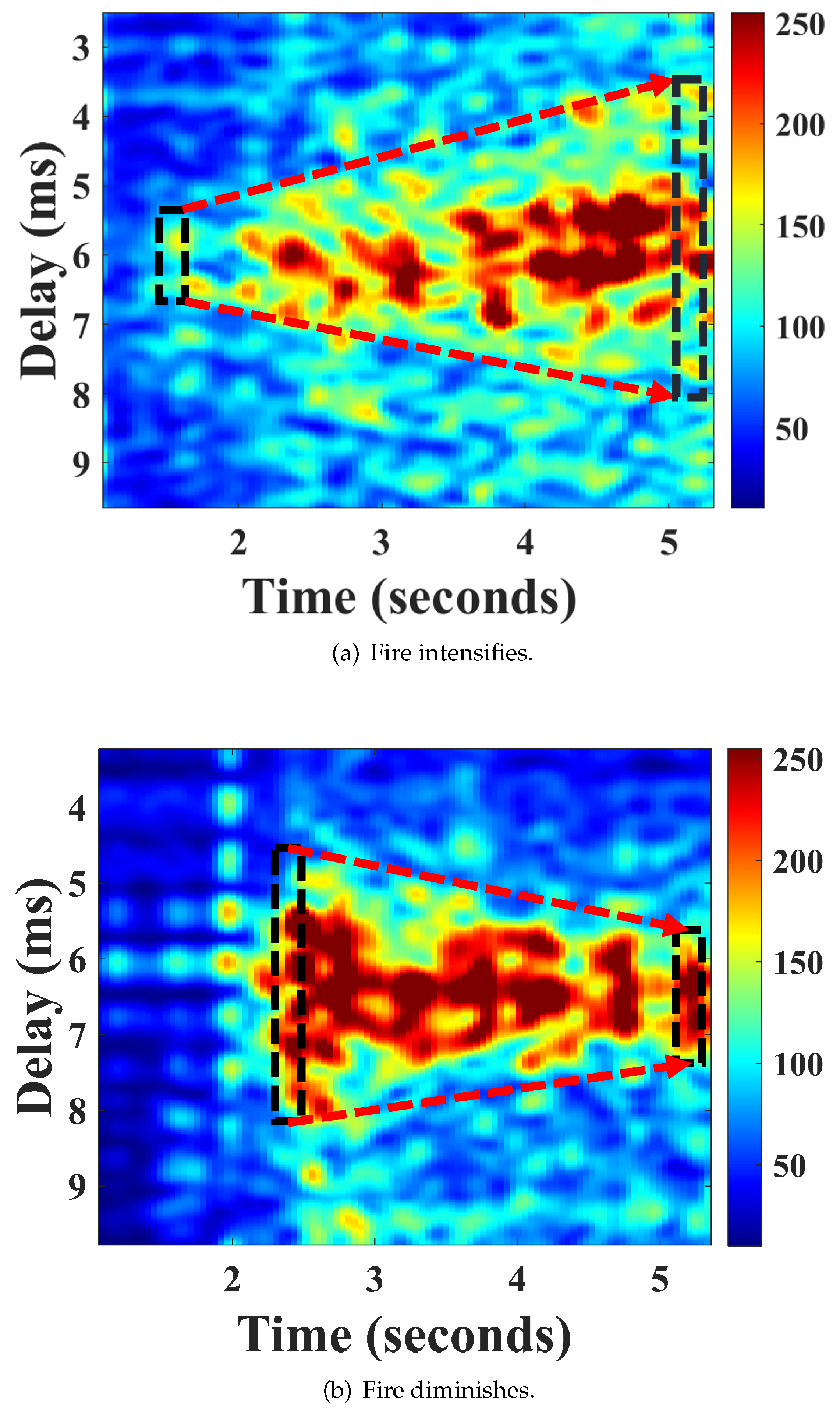

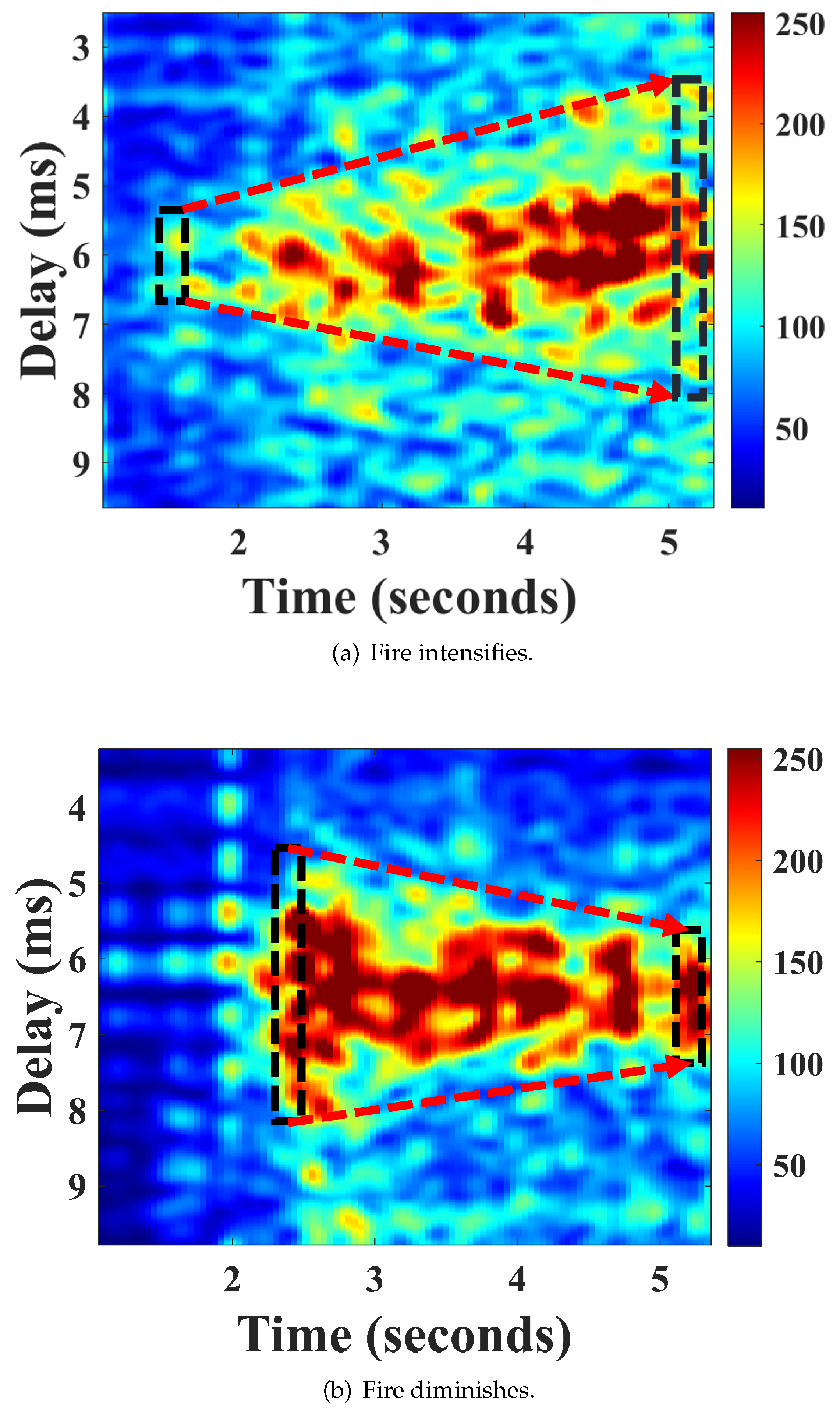

To achieve a gradual increase in flame size, we arranged four alcohol swabs in a straight line at intervals of 0.2 meters within a container, interconnected by a thread soaked in alcohol. These alcohol swabs were sequentially ignited via the thread, producing a progressively intensifying fire scenario. The captured CIR measurement results are presented in Figure 7(a). From the ignition of the first alcohol swab at the 1st second to the 4th second, the delay difference increased from an initial 1 ms to 4.5 ms. Similarly, by adjusting the valve of a cassette burner, we simulated a scenario of decreasing fire intensity, where the delay difference decreased from 3.5 ms to 1.5 ms in Figure 7(b). Our experimental results indicate that the delay difference is an effective indicator of fire severity and can be utilized to predict the fire’s propagation tendency.

Each trace represents a frame, and the affected area within each frame changes continuously, which facilitates the determination of the fire type. To accurately assess the areas affected by different types of fires, we define the region between the two farthest taps in each frame whose normalized values exceed the set threshold as the area affected by the fire. By summing the taps contained in this high-temperature region (HTR) of each frame, we obtain the energy variation that describes the heat release rate curve. Subsequently, Moving Average (MA) processing is applied to smooth the data. This step is particularly useful because the sound energy influenced by the flames fluctuates significantly between adjacent frames: averaging over a period suppresses these short-term fluctuations, captures the variation in fire intensity over that period, and highlights the long-term development trend of the fire, allowing us to match the characteristics of different fire types accordingly [53]. Next, we conduct detailed studies on different fire types.
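A minimal sketch of these two steps follows: the energy of the high-temperature region (every tap between the first and last tap above the threshold) and a trailing moving average over the per-frame values. The window length and the sample frames are hypothetical, since the text does not give the window size.

```python
def htr_energy(frame, th=150):
    """Sum all taps between the first and last tap that exceed the threshold."""
    above = [i for i, energy in enumerate(frame) if energy > th]
    if not above:
        return 0
    first, last = above[0], above[-1]
    return sum(frame[first:last + 1])


def moving_average(values, window):
    """Trailing moving average; early outputs average the samples seen so far."""
    smoothed = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]   # drop the sample leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


# Hypothetical normalized frames; WINDOW is an assumed smoothing length.
WINDOW = 3
frames = [
    [10, 160, 100, 200, 20],
    [5, 20, 170, 30, 10],
    [0, 180, 190, 210, 220],
    [0, 0, 40, 60, 10],
]
raw_curve = [htr_energy(f) for f in frames]
smooth_curve = moving_average(raw_curve, WINDOW)
print(raw_curve)
print(smooth_curve)
```

Unlike a sum over only the above-threshold taps, the region sum also counts weaker taps enclosed by the region, so it tracks the extent of the heated area as well as its intensity.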

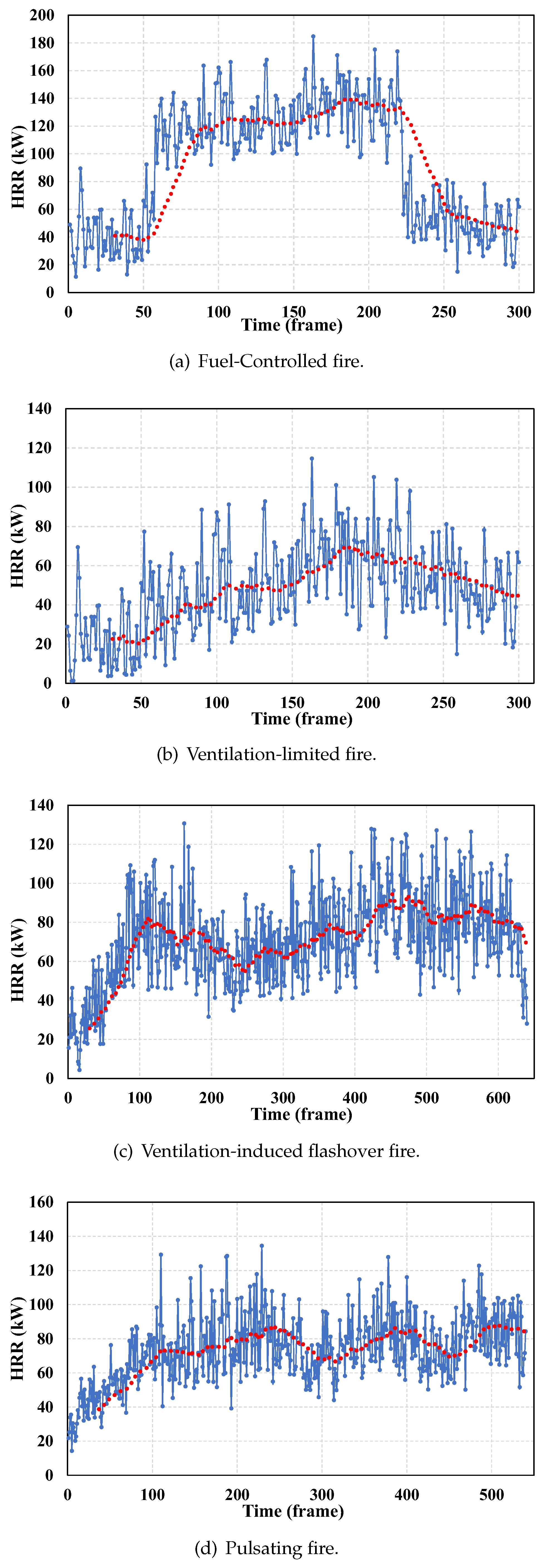

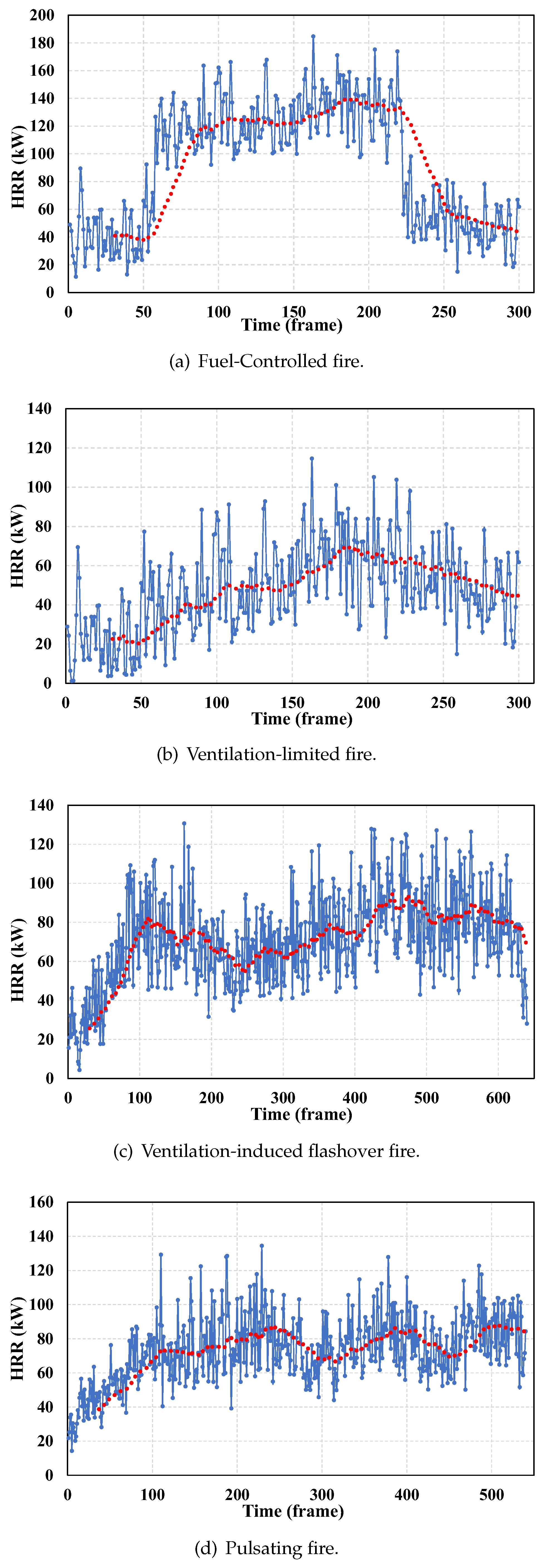

A fuel-controlled fire occurs when the development and spread of the fire are primarily determined by the quantity and arrangement of the combustible materials, given an ample supply of oxygen. In our experiment, we placed a suitable amount of paper and charcoal into a metal box as fuel, with the container top left open to provide sufficient oxygen, simulating the conditions of a real fuel-controlled fire. The experimental results are shown in Figure 8(a). Analysis of the experimental curve reveals that with ample oxygen, the HRR increases rapidly between frames 50 and 100, then stabilizes at around 140 kW. However, at frame 220, the HRR rapidly decreases due to the reduction of combustibles.

A ventilation-limited fire is one whose development depends primarily on the amount of oxygen supplied rather than the amount of fuel. This type of fire typically occurs in enclosed or semi-enclosed spaces. In our simulation, we placed sufficient fuel in the container and covered the top with a thin metal lid to simulate the oxygen supply conditions of a real ventilation-limited fire. The experimental results are shown in Figure 8(b). Analysis indicates that even with ample fuel, the limitation of oxygen causes the HRR to increase gradually, peaking at frame 180 with an HRR of approximately 70 kW, and then to decrease gradually.

A ventilation-induced flashover fire, also known as backdraft, occurs when flames in an oxygen-deficient environment produce a large amount of incompletely burned products. When suddenly exposed to fresh air, these combustion products ignite rapidly, causing the fire to flare up violently. In our experiment, we initially placed sufficient charcoal and paper in the container as fuel, and covered the top with a thin metal lid to simulate the conditions of oxygen deficiency. As the flame within the container diminished, we used a metal wire to open the lid and introduce a large amount of oxygen, simulating the real-life oxygen supply during a flashover. The HRR curve shown in Figure 8(c) indicates that in the initial oxygen-deficient phase (before frame 230), the HRR increases and then decreases, remaining below 80 kW. After introducing sufficient oxygen by opening the lid, the HRR rapidly increases, reaching a peak of around 90 kW at frame 450, before gradually decreasing.

A pulsating fire refers to a fire where the intensity of combustion fluctuates rapidly during its development, creating a pulsating phenomenon in the combustion intensity. This is caused by the instability of oxygen supply. In our experiment, we placed an appropriate amount of paper and charcoal in the container as fuel, and used a metal lid to control the oxygen supply. After the fire intensified, we repeatedly moved the metal lid back and forth with a metal wire to simulate the instability of oxygen supply during a real fire. As shown in Figure 8(d), the HRR gradually increases to around 85 kW when both fuel and oxygen are sufficient. After frame 250, the instability in oxygen supply, caused by moving the metal lid, leads to the HRR fluctuating around 80 kW.

4.3. Classifier

We chose Support Vector Machine (SVM) as the classifier for fire type identification, with the smoothed curves as inputs. The fundamental principle is to find an optimal hyperplane that maximizes the margin between sample points of different fire classes. This approach of maximizing margins enhances the generalization capability of the model, leading to more accurate classification of new samples. Moreover, SVM focuses mainly on support vectors rather than all samples during model training. This sensitivity to support vectors enables SVM to effectively address classification issues in imbalanced datasets.

5. Experiments and Evaluation

5.1. Experiment Setup

5.1.1. Hardware

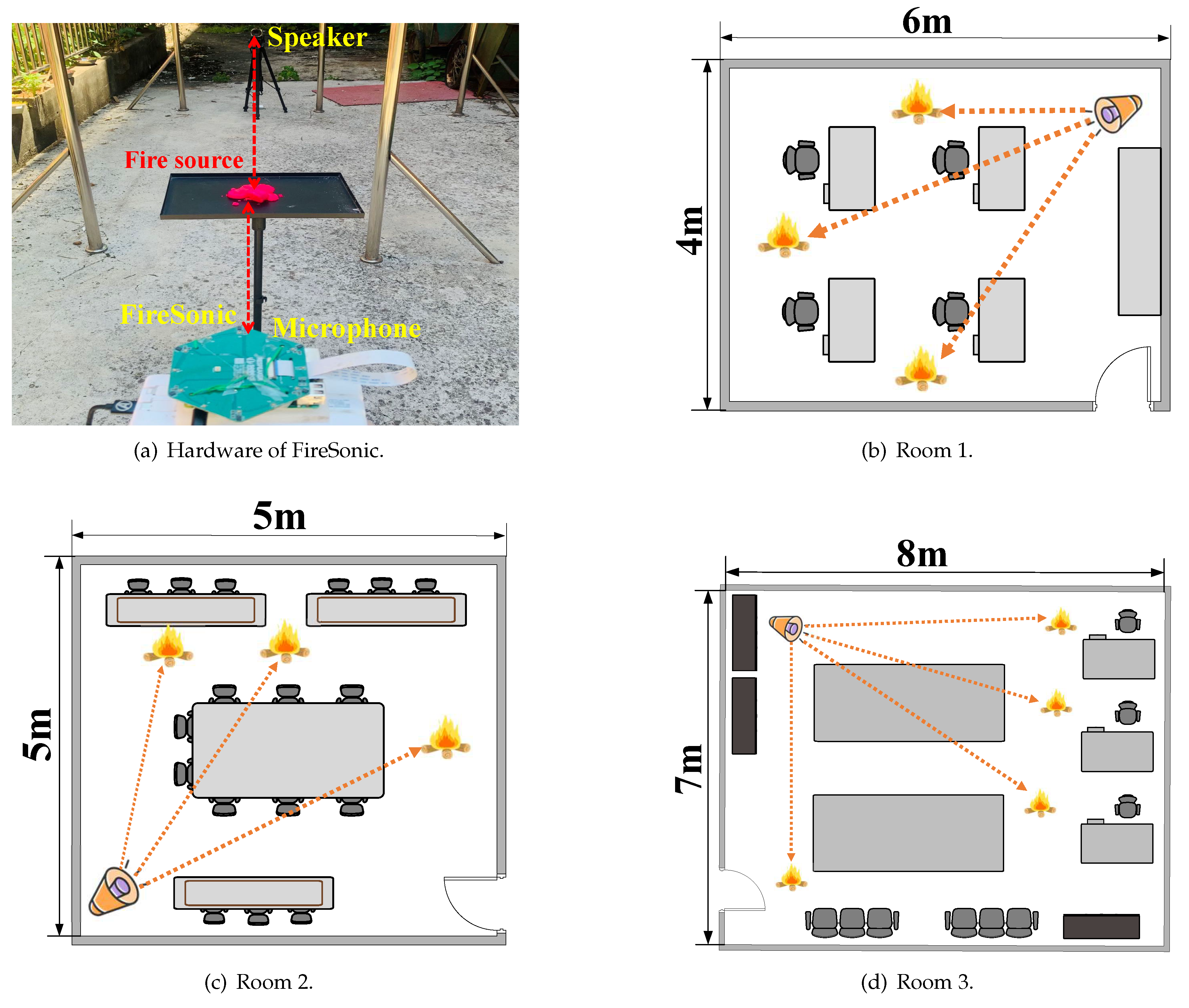

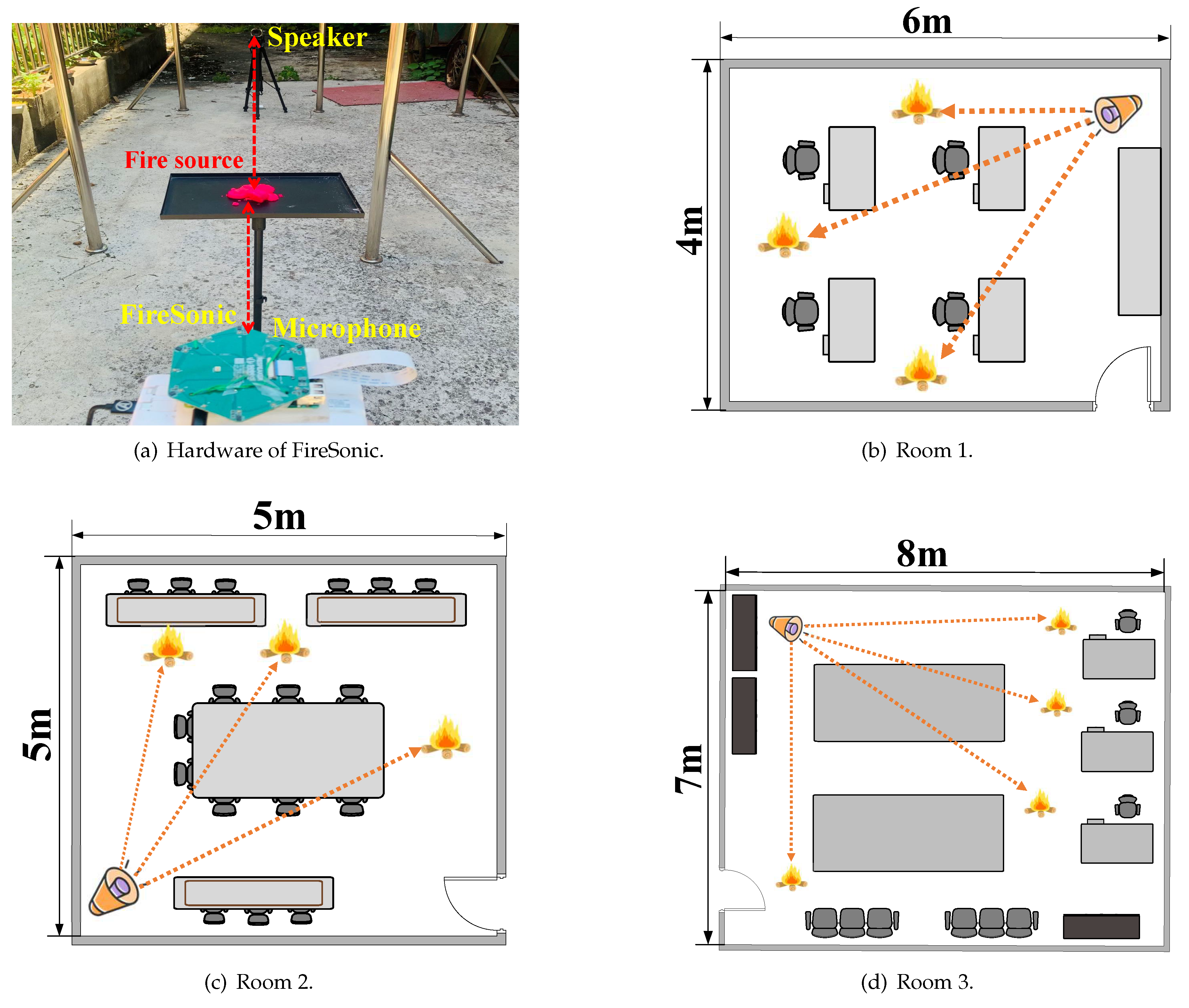

The hardware of our system is depicted in Figure 9(a) and consists of a commercial-grade speaker and a circular arrangement of microphones. The acoustic signals emitted by the speaker are partially absorbed by the flames before being captured by the microphones. To transmit acoustic signals precisely, we employ the Google AIY Voice Kit 2.0, which includes a speaker controlled by a Raspberry Pi Zero. To receive signals, we use the ReSpeaker 6-Mic Circular Array Kit operating at a sampling rate of 48 kHz, which ensures accurate capture of audio data in the inaudible frequency range. The FireSonic system costs less than 30 USD.

5.1.2. Data Collection

We configure the root ZC sequence with the parameters u=64 and Nzc=127, and then upscale it to a sequence length of 2048 to ensure coverage of a 7 m sensing range. The data was gathered across three distinct rooms of different dimensions, whose layouts are depicted in Figure 9(b) - 9(d). In each room, 1000 fire type measurements were conducted, resulting in a total of 3000 complete measurements.

5.1.3. Model Training, Testing and Validating

We utilize the HRR curve after MA as the model’s input, providing ample information for fire monitoring. For the offline training process, we leverage a computing system equipped with 32 GB of RAM, an Intel Core i7-13700K CPU from the 13th Generation lineup, and an NVIDIA GeForce RTX 4070 GPU. Following training, the model is stored on a Raspberry Pi for subsequent online testing. The trained model has a file size of less than 6 megabytes. It’s worth noting that we also conducted tests on unfamiliar datasets to further validate the system’s performance.

5.2. Evaluation

5.2.1. Overall Performance

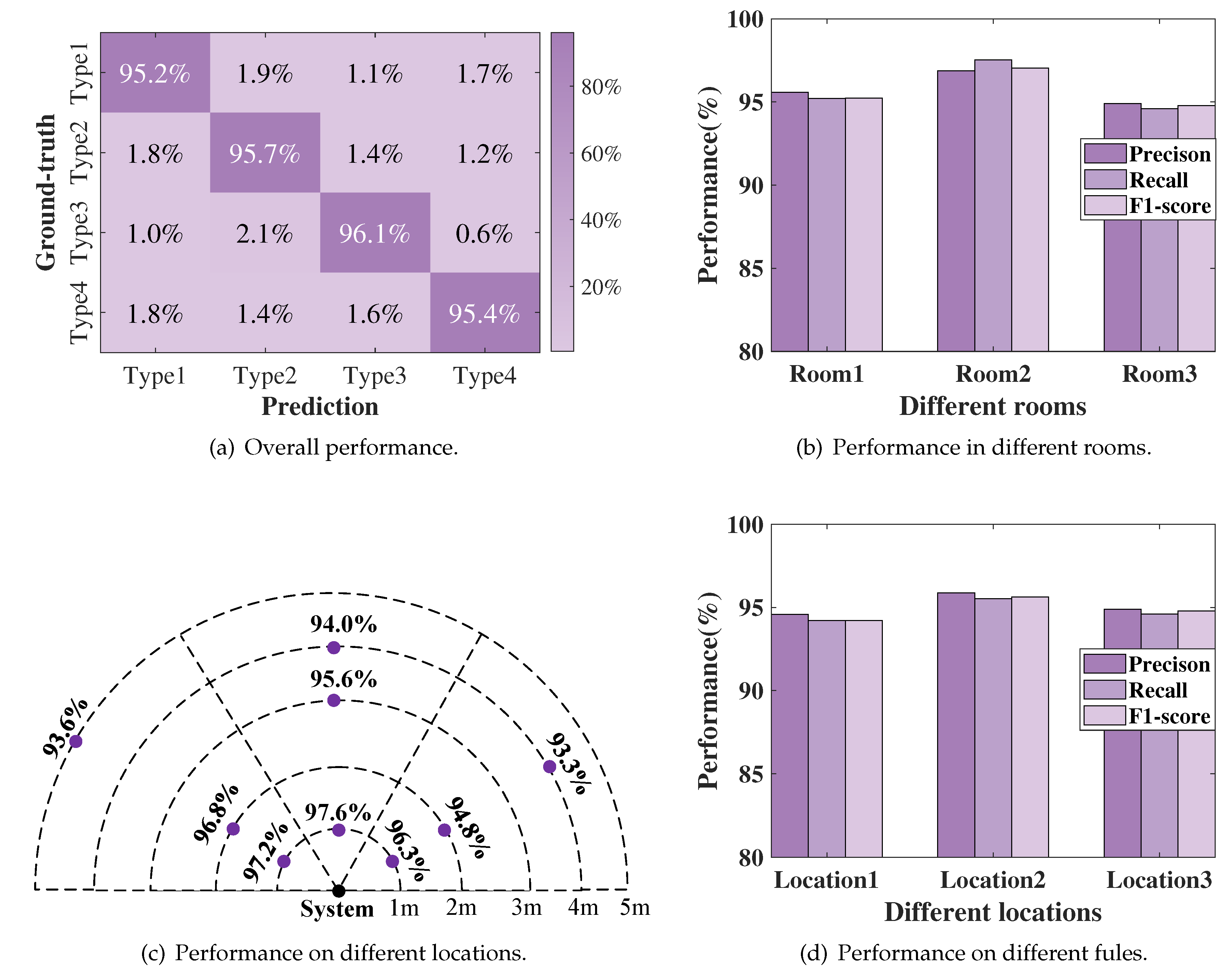

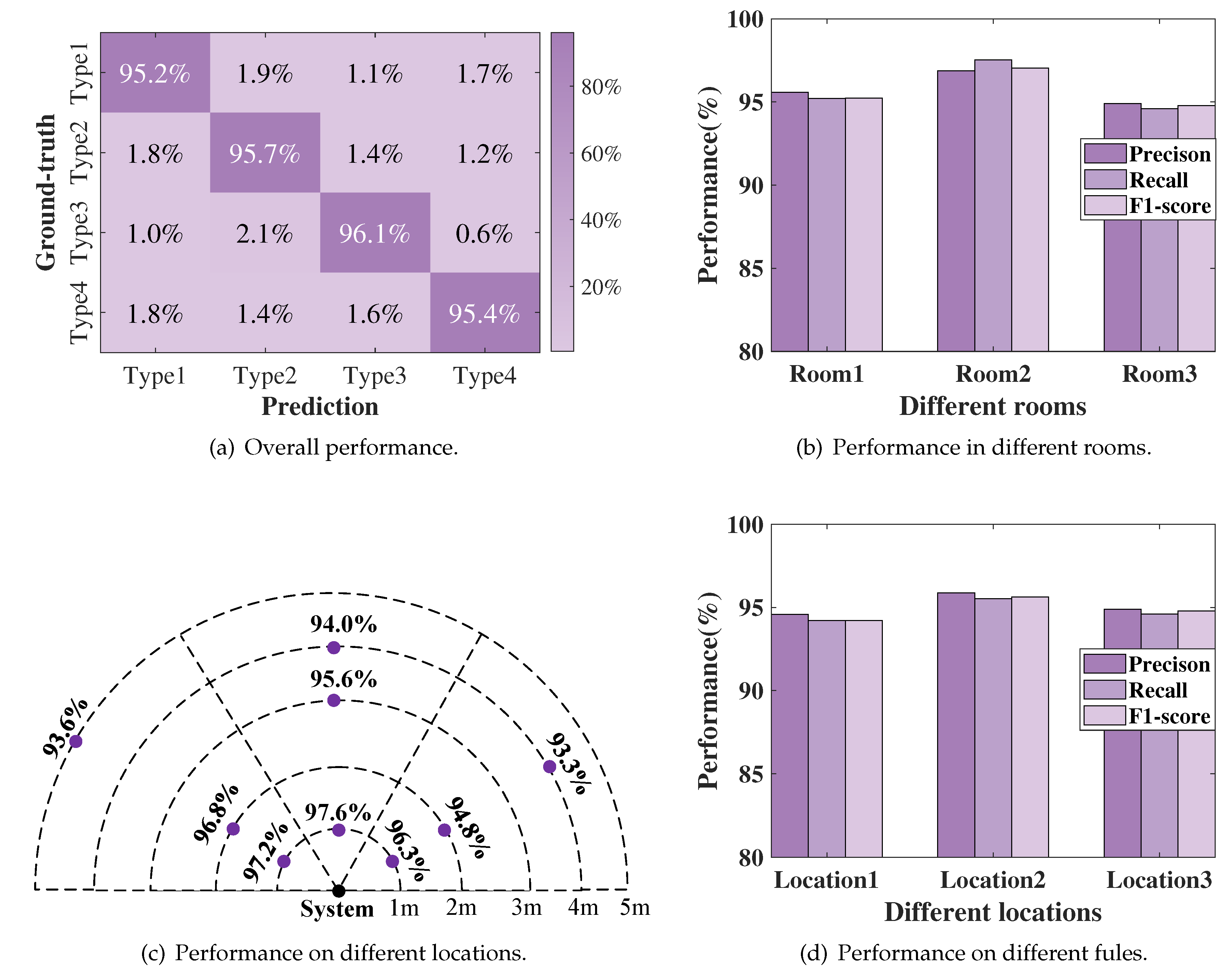

We name the four types of fires corresponding to Figure 8 as Type 1, Type 2, Type 3, and Type 4, respectively. Figure 10(a) shows the system’s judgment results on the four types of fires in three rooms, achieving an average recognition accuracy of 95.5%. As shown in Figure 10(b), the accuracy remains high even when a fire breaks out in a large room such as Room 3. With our holistic design, the system is able to provide accurate fire state information to firefighters, allowing them to take appropriate countermeasures.

5.2.2. Performance on Different Classifiers

To better demonstrate the effectiveness of capturing fire type features, we compared SVM with a BP neural network (BP), Random Forest (RF), and K-Nearest Neighbors (KNN), as illustrated in Table 2. SVM achieved the highest identification accuracy of the four classifiers, with an average of 95.5%. The overall data demonstrated high discriminability. However, the nonlinearity introduced by the BP neural network did not translate into an advantage. RF and KNN were significantly influenced by outliers and noise, leading to inferior performance compared to SVM.

5.2.3. Performance on Different Locations

We randomly selected 9 locations in Room 3, as shown in Figure 10(c). We obtained the average classification accuracy for each location, and all were above . This performance validates the rationality of our technique in capturing fire type differences from various angles and distances, as well as corroborates the judiciousness of our feature selection based on smoothing via moving averages. This substantiates the efficacy and robustness of our holistic design for accurate fire situation awareness under diverse scenarios.

5.2.4. Performance in Smoky Environments

We randomly selected three locations within Room 1 to simulate the performance in a smoke-filled fire scenario. The system’s performance, as shown in Figure 10(d), indicates that FireSonic can achieve an accuracy rate of over even in complex smoke environments. This high level of accuracy is due to the system fundamentally operating on the principle of monitoring heat release.

5.2.5. Performance on Different Fuels

To address potential biases associated with testing using a single fuel source, we expanded our evaluation to include six diverse fuels: ethanol, paper, charcoal, wood, plastics, and liquid fuel. The results, as presented in Table 1, demonstrate that the system consistently maintains a high average classification accuracy, between 94.3% and 98.6%, across three different locations and all fuel scenarios. This uniformly high accuracy confirms that the fire evolution features captured by the system are both discriminative and robust. Furthermore, these outcomes affirm the system’s ability to generalize effectively across a variety of fuel types, thereby underscoring the design’s overall effectiveness in providing precise fire situation awareness.

Table 1. Performance on different fuels.

| Types of fuels | Ethanol | Paper | Charcoal | Wood | Plastics | Liquid fuel |
|---|---|---|---|---|---|---|
| Performance | 97.2% | 98.6% | 95.1% | 94.3% | 98.2% | 97.5% |

Table 2. Performance on different classifiers.

| Classifier | SVM | BP | RF | KNN |
|---|---|---|---|---|
| Best performance | 97.6% | 95.2% | 89.3% | 75.9% |
| Worst performance | 93.3% | 90.3% | 78.5% | 71.5% |
| Average performance | 95.5% | 93.5% | 84.7% | 72.8% |

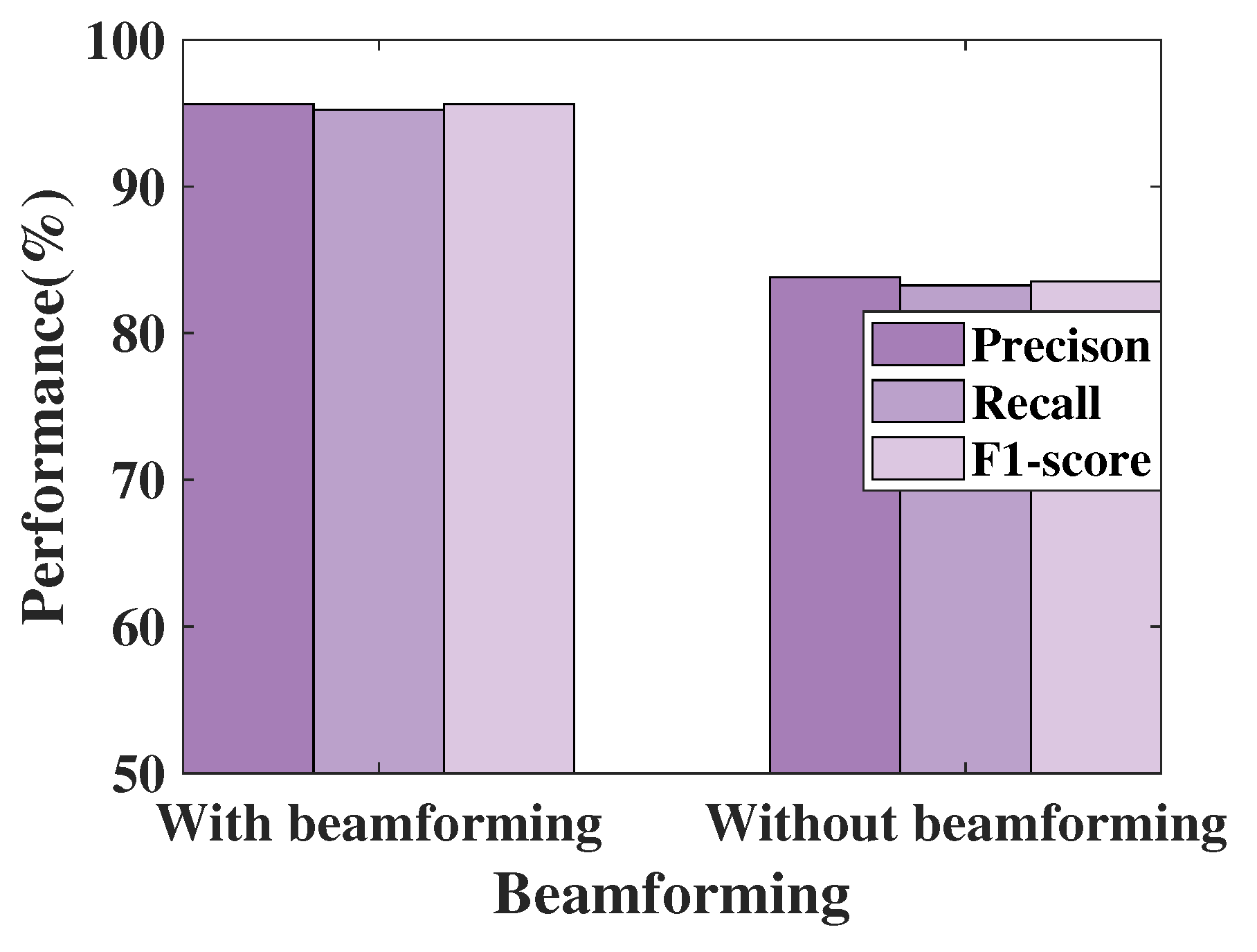

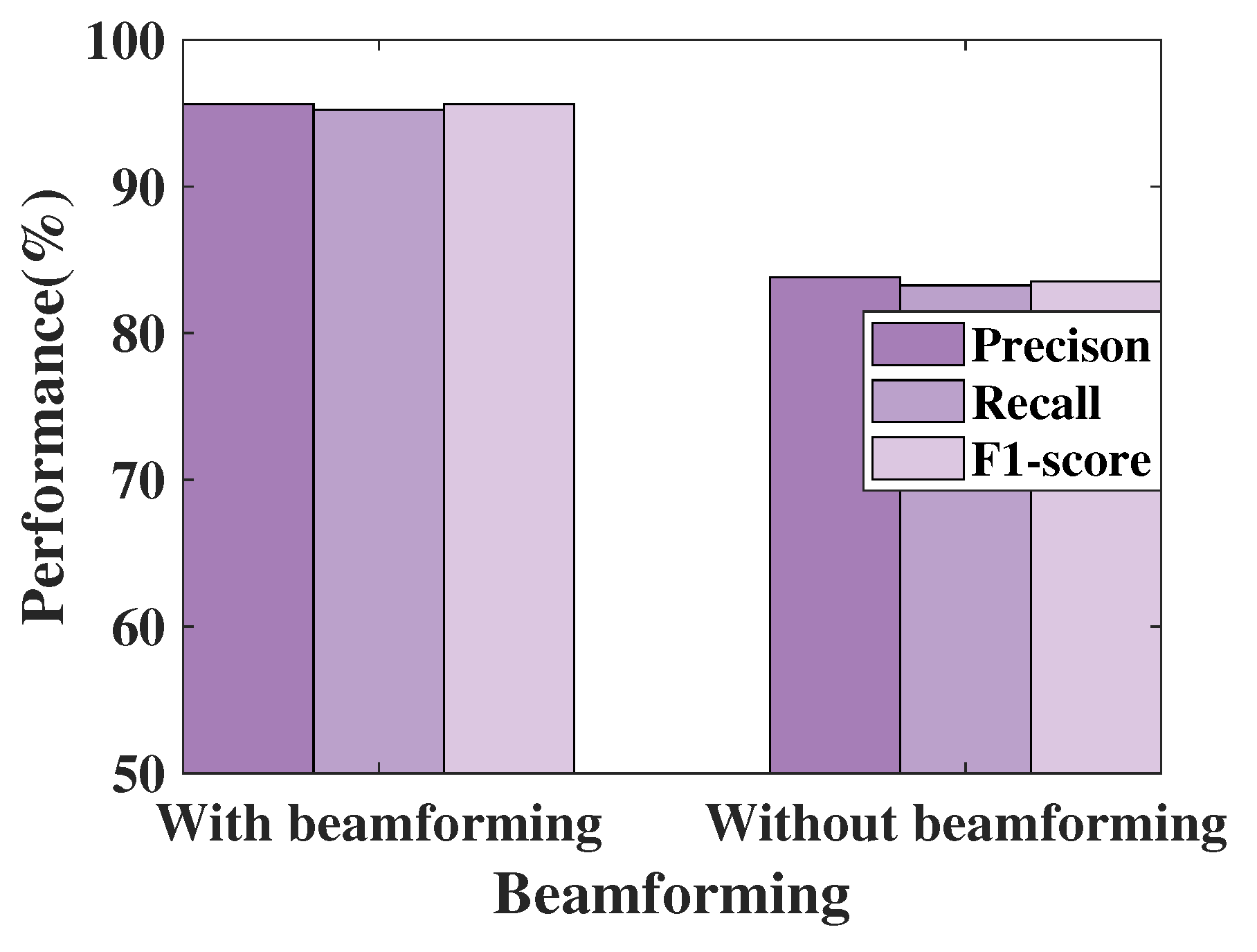

5.2.6. Comparison of Performance with and without Beamforming

To further investigate the extent of performance enhancement by beamforming, we conducted 300 measurements each with and without beamforming using FireSonic in Room3. The results, as depicted in Figure 11, show that Precision, Recall, and F1-score values are all above when beamforming is employed. In stark contrast, experiments without beamforming show performance below . This confirms the beneficial role of beamforming in capturing fire related features via CIR.

5.2.7. Detection Time

The system detection time includes a frame detection time of less than 200 ms, a downconversion time of 20 ms, a CIR measurement time of 30 ms, and a fire type judgment computation time of 130 ms. The cumulative duration of these four steps is therefore less than 200 + 20 + 30 + 130 = 380 ms, within the 400 ms bound, which meets the real-time requirements of urgent fire scenarios.

5.2.8. Comparison with State-of-the-Art Work

To benchmark against state-of-the-art acoustic-based fire detection approaches, we employ the system in [19] as a baseline, which also leverages acoustic signals for fire detection. As illustrated in Table 3, the comparison highlights significant differences in detection capabilities. Current advanced technologies can only effectively detect fire-related sounds within a range of less than 1m. In contrast, FireSonic extends this detection range to seven meters, significantly broadening the scope of detection. We also evaluated the accuracy of the system under conditions where the range is less than one meter, and FireSonic achieves an average accuracy of , which is higher than the most recent technologies. Existing technologies show varying sensitivities to the acoustic characteristics of different materials, often performing poorly with certain types. Conversely, FireSonic primarily detects flames through monitoring heat release, thus adapting to a wider variety of materials and ignition scenarios, more closely aligning with the real demands of fire incident contexts. Additionally, FireSonic uses ultrasonic waves to detect flames, ensuring it is not affected by common low-frequency noise in the environment, such as conversations, thereby exhibiting enhanced noise immunity. Moreover, FireSonic includes the capability to identify types of fires, a significant advantage that current technologies do not offer.

6. Conclusion

In this study, we developed and validated FireSonic, an ultrasound-based system for accurately identifying indoor fire types. Key achievements include an extended sensing range of up to 7 meters, an average classification accuracy of 95.5%, robust performance across various materials, resistance to environmental noise, real-time monitoring with a detection latency of less than 400 milliseconds, and the ability to identify fire types. By incorporating beamforming technology, FireSonic enhances signal quality and establishes a reliable correlation between heat release and sound propagation. These advancements demonstrate FireSonic’s scientific and practical contributions to fire detection and safety.

References

- Kodur, V.; Kumar, P.; Rafi, M.M. Fire hazard in buildings: Review, assessment and strategies for improving fire safety. PSU research review 2019, 4, 1–23.

- Kerber, S.; et al. Impact of ventilation on fire behavior in legacy and contemporary residential construction; Underwriters Laboratories, Incorporated, 2010.

- Drysdale, D. An introduction to fire dynamics; John wiley & sons, 2011.

- Foroutannia, A.; Ghasemi, M.; Parastesh, F.; Jafari, S.; Perc, M. Complete dynamical analysis of a neocortical network model. Nonlinear Dynamics 2020, 100, 2699–2714.

- Ghasemi, M.; Foroutannia, A.; Nikdelfaz, F. A PID controller for synchronization between master-slave neurons in fractional-order of neocortical network model. Journal of Theoretical Biology 2023, 556, 111311.

- Perera, E.; Litton, D. A Detailed Study of the Properties of Smoke Particles Produced from both Flaming and Non-Flaming Combustion of Common Mine Combustibles. Fire Safety Science 2011, 10, 213–226.

- Drysdale, D.D., Thermochemistry. In SFPE Handbook of Fire Protection Engineering; Hurley, M.J.; Gottuk, D.; Hall, J.R.; Harada, K.; Kuligowski, E.; Puchovsky, M.; Torero, J.; Watts, J.M.; Wieczorek, C., Eds.; Springer New York: New York, NY, 2016; pp. 138–150.

- Gaur, A.; Singh, A.; Kumar, A.; Kulkarni, K.S.; Lala, S.; Kapoor, K.; Srivastava, V.; Kumar, A.; Mukhopadhyay, S.C. Fire Sensing Technologies: A Review. IEEE Sensors Journal 2019, 19, 3191–3202.

- Vojtisek-Lom, M. Total Diesel Exhaust Particulate Length Measurements Using a Modified Household Smoke Alarm Ionization Chamber. Journal of the Air & Waste Management Association 2011, 61, 126–134.

- Muhammad, K.; Ahmad, J.; Baik, S.W. Early fire detection using convolutional neural networks during surveillance for effective disaster management. Neurocomputing 2018, 288, 30–42. Learning System in Real-time Machine Vision.

- Çelik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Safety Journal 2009, 44, 147–158.

- Liu, Z.; Kim, A.K. Review of Recent Developments in Fire Detection Technologies. Journal of Fire Protection Engineering 2003, 13, 129–151.

- Chen, J.; He, Y.; Wang, J. Multi-feature fusion based fast video flame detection. Building and Environment 2010, 45, 1113–1122.

- Kahn, J.M.; Katz, R.H.; Pister, K.S.J. Emerging challenges: Mobile networking for “Smart Dust”. Journal of Communications and Networks 2000, 2, 188–196. [CrossRef]

- Zhong, S.; Huang, Y.; Ruby, R.; Wang, L.; Qiu, Y.X.; Wu, K. Wi-fire: Device-free fire detection using WiFi networks. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), May 2017, pp. 1–6.

- Li, J.; Sharma, A.; Mishra, D.; Seneviratne, A. Fire Detection Using Commodity WiFi Devices. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM), 2021, pp. 1–6. [CrossRef]

- Radke, D.; Abari, O.; Brecht, T.; Larson, K. Can Future Wireless Networks Detect Fires? In Proceedings of the Proceedings of the 7th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, New York, NY, USA, 2020; BuildSys ’20, p. 286–289.

- Park, K.H.; Lee, S.Q. Early stage fire sensing based on audible sound pressure spectra with multi-tone frequencies. Sensors and Actuators A: Physical 2016, 247, 418–429.

- Martinsson, J.; Runefors, M.; Frantzich, H.; Glebe, D.; McNamee, M.; Mogren, O. A Novel Method for Smart Fire Detection Using Acoustic Measurements and Machine Learning: Proof of Concept. Fire Technology 2022, 58, 3385–3403.

- Zhang, F.; Niu, K.; Fu, X.; Jin, B. AcousticThermo: Temperature Monitoring Using Acoustic Pulse Signal. In Proceedings of the 2020 16th International Conference on Mobility, Sensing and Networking (MSN), Dec 2020, pp. 683–687.

- Cai, C.; Pu, H.; Ye, L.; Jiang, H.; Luo, J. Active Acoustic Sensing for “Hearing” Temperature Under Acoustic Interference. IEEE Transactions on Mobile Computing 2023, 22, 661–673.

- Wang, Z.; et al. HearFire: Indoor Fire Detection via Inaudible Acoustic Sensing. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2023, 6, 1–25.

- Savari, R.; Savaloni, H.; Abbasi, S.; Placido, F. Design and engineering of ionization gas sensor based on Mn nano-flower sculptured thin film as cathode and a stainless steel ball as anode. Sensors and Actuators B: Chemical 2018, 266, 620–636.

- Wang, Y.; Shen, J.; Zheng, Y. Push the Limit of Acoustic Gesture Recognition. IEEE Transactions on Mobile Computing 2022, 21, 1798–1811. [CrossRef]

- Yang, Y.; Wang, Y.; Cao, J.; Chen, J. HearLiquid: Non-intrusive Liquid Fraud Detection Using Commodity Acoustic Devices. IEEE Internet of Things Journal 2022, pp. 1–1. [CrossRef]

- Chen, H.; Li, F.; Wang, Y. EchoTrack: Acoustic device-free hand tracking on smart phones. In Proceedings of the IEEE INFOCOM 2017 - IEEE Conference on Computer Communications, 2017, pp. 1–9. [CrossRef]

- Sun, K.; Zhao, T.; Wang, W.; Xie, L. VSkin: Sensing Touch Gestures on Surfaces of Mobile Devices Using Acoustic Signals. In Proceedings of the Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 2018; MobiCom ’18, p. 591–605. [CrossRef]

- Tung, Y.C.; Bui, D.; Shin, K.G. Cross-Platform Support for Rapid Development of Mobile Acoustic Sensing Applications. In Proceedings of the Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, New York, NY, USA, 2018; MobiSys ’18, p. 455–467. [CrossRef]

- Liu, C.; Wang, P.; Jiang, R.; Zhu, Y. AMT: Acoustic Multi-target Tracking with Smartphone MIMO System. In Proceedings of the IEEE INFOCOM 2021 - IEEE Conference on Computer Communications, 2021, pp. 1–10. [CrossRef]

- Li, D.; Liu, J.; Lee, S.I.; Xiong, J. LASense: Pushing the Limits of Fine-Grained Activity Sensing Using Acoustic Signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6. [CrossRef]

- Yang, Q.; Zheng, Y. Model-Based Head Orientation Estimation for Smart Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5. [CrossRef]

- Chen, H.; Li, F.; Wang, Y. EchoTrack: Acoustic device-free hand tracking on smart phones. In Proceedings of the IEEE INFOCOM 2017-IEEE Conference on Computer Communications. IEEE, 2017, pp. 1–9.

- Liu, C.; Wang, P.; Jiang, R.; Zhu, Y. Amt: Acoustic multi-target tracking with smartphone mimo system. In Proceedings of the IEEE INFOCOM 2021-IEEE Conference on Computer Communications. IEEE, 2021, pp. 1–10.

- Tung, Y.C.; Bui, D.; Shin, K.G. Cross-platform support for rapid development of mobile acoustic sensing applications. In Proceedings of the Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, 2018, pp. 455–467.

- Yang, Y.; Wang, Y.; Cao, J.; Chen, J. Hearliquid: Nonintrusive liquid fraud detection using commodity acoustic devices. IEEE Internet of Things Journal 2022, 9, 13582–13597.

- Wang, Y.; Shen, J.; Zheng, Y. Push the limit of acoustic gesture recognition. IEEE Transactions on Mobile Computing 2020, 21, 1798–1811.

- Mao, W.; Wang, M.; Qiu, L. AIM: Acoustic Imaging on a Mobile. In Proceedings of the Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, New York, NY, USA, 2018; MobiSys ’18, p. 468–481. [CrossRef]

- Nandakumar, R.; Gollakota, S.; Watson, N. Contactless Sleep Apnea Detection on Smartphones. In Proceedings of the Proceedings of the 13th Annual International Conference on Mobile Systems, Applications, and Services, New York, NY, USA, 2015; MobiSys ’15, p. 45–57. [CrossRef]

- Nandakumar, R.; Iyer, V.; Tan, D.; Gollakota, S. FingerIO: Using Active Sonar for Fine-Grained Finger Tracking. In Proceedings of the Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 2016; CHI ’16, p. 1515–1525. [CrossRef]

- Ruan, W.; Sheng, Q.Z.; Yang, L.; Gu, T.; Xu, P.; Shangguan, L. AudioGest: Enabling Fine-Grained Hand Gesture Detection by Decoding Echo Signal. In Proceedings of the Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, New York, NY, USA, 2016; UbiComp ’16, p. 474–485. [CrossRef]

- Ahmad, A.D.; Abubaker, A.M.; Salaimeh, A.; Akafuah, N.K.; Finney, M.; Forthofer, J.M.; Saito, K. Ignition and burning mechanisms of live spruce needles. Fuel 2021, 304, 121371.

- Erez, G.; Collin, A.; Parent, G.; Boulet, P.; Suzanne, M.; Thiry-Muller, A. Measurements and models to characterise flame radiation from multi-scale kerosene fires. Fire Safety Journal 2021, 120, 103179.

- Wang, Y.; Shen, J.; Zheng, Y. Push the Limit of Acoustic Gesture Recognition. In Proceedings of the IEEE INFOCOM 2020 - IEEE Conference on Computer Communications, July 2020, pp. 566–575.

- Wang, W.; Liu, A.X.; Sun, K. Device-free gesture tracking using acoustic signals. In Proceedings of the Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 2016; MobiCom ’16, p. 82–94.

- Li, D.; Liu, J.; Lee, S.I.; Xiong, J. LASense: Pushing the Limits of Fine-grained Activity Sensing Using Acoustic Signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6.

- Wang, P.; Jiang, R.; Liu, C. Amaging: Acoustic Hand Imaging for Self-adaptive Gesture Recognition. In Proceedings of the IEEE INFOCOM 2022 - IEEE Conference on Computer Communications, May 2022, pp. 80–89.

- Ling, K.; Dai, H.; Liu, Y.; Liu, A.X.; Wang, W.; Gu, Q. UltraGesture: Fine-Grained Gesture Sensing and Recognition. IEEE Transactions on Mobile Computing 2022, 21, 2620–2636. [CrossRef]

- Sun, K.; Zhao, T.; Wang, W.; Xie, L. VSkin: Sensing Touch Gestures on Surfaces of Mobile Devices Using Acoustic Signals. In Proceedings of the Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 2018; MobiCom ’18, p. 591–605.

- Tian, M.; Wang, Y.; Wang, Z.; Situ, J.; Sun, X.; Shi, X.; Zhang, C.; Shen, J. RemoteGesture: Room-scale Acoustic Gesture Recognition for Multiple Users. In Proceedings of the 2023 20th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Sep. 2023, pp. 231–239.

- Licitra, G.; Artuso, F.; Bernardini, M.; Moro, A.; Fidecaro, F.; Fredianelli, L. Acoustic beamforming algorithms and their applications in environmental noise. Current Pollution Reports 2023, 9, 486–509.

- Martinka, J.; Rantuch, P.; Martinka, F.; Wachter, I.; Štefko, T. Improvement of Heat Release Rate Measurement from Woods Based on Their Combustion Products Temperature Rise. Processes 2023, 11, 1206.

- Ingason, H.; Li, Y.Z.; Lönnermark, A. Fuel and Ventilation-Controlled Fires. In Tunnel Fire Dynamics; Springer, 2024; pp. 23–45.

- Hartin, E. Extreme Fire Behavior: Understanding the Hazards. CFBT-US. com 2008.

Footnote 1: Experiments were conducted in the presence of professional firefighters and were approved by the Institutional Review Board.

Figure 1. Monitoring in the presence of smoke.

Figure 2. Fire evolution.

Figure 3. Overview.

Figure 4. Beamforming.

Figure 5. High-temperature region in CIR measurement.

Figure 6. The comparison before and after using beamforming.

Figure 7. Fire monitoring in CIR.

Figure 8. Combustion curves of different fire types.

Figure 9. Commercial speaker and microphone applied in our system and three rooms.

Figure 10. System performance.

Figure 11. Comparison of performance with and without beamforming.

Table 3. Comparison with State-of-the-art Work

| System | Sensing range | Accuracy (1 m) | Performance across different materials | Resistance to daily interference | Ability to classify fire types |
|---|---|---|---|---|---|
| FireSonic | 7 m | 98.7% | Varies minimally | Strong | Yes |
| State-of-the-art Work | 1 m | 97.3% | Varies significantly | Weak | No |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Copyright: This open access article is published under a Creative Commons CC BY 4.0 license, which permits free download, distribution, and reuse, provided that the author and preprint are cited in any reuse.

Submitted:

27 June 2024

Posted:

27 June 2024


1. Introduction

Fire is one of the most significant global threats to life and property. Annually, fire incidents, including electrical and kitchen fires, result in billions of dollars in property damage and a substantial number of casualties [1]. Different types of fire exhibit distinct combustion characteristics and spread rates, and therefore require specific firefighting methods [2]. Accurately identifying the type of fire is crucial for public safety. Timely and effective determination of the fire type enables targeted firefighting strategies, thereby minimizing fire-related damage and safeguarding lives and property. The study of different types of fires also enables a deeper understanding of their combustion mechanisms and development processes. This knowledge facilitates the development of more scientific and effective firefighting techniques and equipment [3]. Not only does this enhance firefighting efficiency, but it also provides essential theoretical foundations and practical guidelines for fire prevention.

With advances in sensor technology, the development of new types of sensing systems has become possible [4,5]. Existing fire detection methods primarily rely on smoke [6,7,8,9], vision [10,11,12,13,14], radio frequency (RF) [15,16,17], and acoustics [18,19,20,21,22]. However, each of these methods has its limitations. Smoke detectors, while widely used, are prone to false alarms triggered by environmental impurities and may pose health risks due to the radioactive materials they contain [9,23]. Vision-based detection systems require an unobstructed line of sight and may raise privacy concerns. Furthermore, as illustrated in Figure 1, vision-based methods struggle when cameras are contaminated or obscured by smoke, making it difficult to gather relevant information about the fire’s severity and type. In contrast, methods using wireless signals, such as RF and acoustics, can still capture pertinent data even in smoky conditions. RF sensing has recently been applied to fire detection tasks. However, RF detection typically requires specialized and expensive equipment and may be inaccessible in remote areas [15,17].

Acoustic-based methods for determining fire types offer several advantages [24,25,26,27,28,29,30,31]. First, they provide a non-contact detection method, which avoids direct contact with the fire. Second, they enable real-time monitoring and rapid response, facilitating timely firefighting measures. Third, they adapt well to harsh environments and work reliably under smoke diffusion or low visibility, providing a more dependable means of fire monitoring [32,33,34,35,36]. However, the state-of-the-art acoustic sensing system in [19] has a limited sensing range, is vulnerable to daily interference, and cannot classify fire types.

To overcome the drawbacks of the aforementioned approaches, this paper introduces FireSonic, an acoustic system capable of discriminating fire types, characterized by a low false alarm rate, effective signal detection in smoke-filled environments, rapid detection speed, and low cost. Unlike conventional reflective acoustic sensing technologies [24,25,26,27,28,37,38,39,40], FireSonic operates by detecting changes in sound waves as they pass through flames, thereby offering a novel approach to acoustic sensing. This method enhances the practical applicability and reliability of acoustic sensors in fire detection scenarios. Figure 2 shows the standard temperature curve over time for burning with sufficient oxygen, which comprises four stages: initial growth, fire growth, full development, and decay. Because different types of fires exhibit different temperature variations at these stages, the objective of this study is to develop a method that uses acoustic means to monitor the changes in this curve, thereby facilitating the determination of fire types. Research on this technology may even provide technical insights into aspects such as the rate of fire spread in forest fires in the future [41].

However, implementing such a system is a daunting task. The first challenge is obtaining high-quality signals for monitoring fires. The fire environment poses a significant challenge due to its complexity, with factors such as smoke, flames, thermal radiation, and combustion by-products interfering with sound wave propagation, resulting in signal attenuation and distortion. In addition, diverse materials like building materials and furniture affect sound waves differently, causing signal reflection, refraction, and scattering, further complicating accurate signal interpretation.

To address the first challenge, we utilize beamforming technology, adjusting the phase and amplitude of multiple sensors to concentrate on signals from specific directions, thereby reducing interference and noise from other directions. By aligning and combining signals from these sensors, beamforming enhances target signals, improving signal-to-noise ratio, clarity, and reliability. In addition, we employ spatial filtering techniques to mitigate multipath effects caused by sound waves propagating through different media in a fire, thereby minimizing signal distortion and enhancing detection accuracy.

The second challenge lies in establishing a correlation between fire types and acoustic features. Traditional passive acoustic methods are influenced by the varying acoustic signal characteristics generated by different types of fire. Factors such as the type of burning material, flame size, and shape result in diverse spectral and amplitude features of sound waves, making it difficult to accurately match acoustic signals with specific fire types. Moreover, while the latest active acoustic sensing methods can detect the occurrence of fires, the inability of flames to reflect sound waves poses a challenge in associating active acoustic signals with fire types using conventional reflection approaches.

To address the second challenge, rather than directly associating fire types with sound waves, we propose a heat-release-based monitoring scheme that quantifies the correlation between the region of fire heat release and sound propagation delays. This quantification rests on two facts: sound speed increases with temperature, and larger flames generate broader high-temperature areas. We discern fire types based on real-time changes in heat production during the combustion process.

Contributions. In a nutshell, our main contributions are summarized as follows:

- We address the shortcomings of current fire detection systems by incorporating a critical feature into fire monitoring systems. To the best of our knowledge, FireSonic is the first system that leverages acoustic signals for determining fire types.

- We employ beamforming technology to enhance signal quality by reducing interference and noise, while our flame HRR monitoring scheme utilizes acoustic signals to quantify the correlation between fire heat release regions and sound propagation delays, facilitating fire type determination and accuracy enhancement.

- We implement a prototype of FireSonic using low-cost commodity acoustic devices. Our experiments indicate that FireSonic achieves an overall accuracy of 95.5% in determining fire types (see Footnote 1).

2. Background

2.1. Heat Release Rate

The relationship between the Heat Release Rate (HRR) and acoustic sensing can be derived through a series of physical principles. The relationship between sound speed $c$ and temperature $T$ in air can be expressed by the following formula [42]:

$$c = c_0\sqrt{\frac{T}{T_0}}$$

where $c_0$ is the sound speed at a reference temperature $T_0$ (usually 293 K, which is about 20°C, where $c_0$ is approximately 343 m/s).

HRR is a measure of the energy released by a fire source per unit time, typically expressed in kilowatts (kW). It can be indirectly calculated by measuring the rate of oxygen consumption by the flames. To establish the relationship between HRR and acoustic sensing, we need to consider how thermal energy affects the propagation of sound waves. As the fire releases heat, the surrounding air heats up, leading to an increase in the sound speed in that area. This change in sound speed can be perceived through changes in the propagation time of sound waves detected by sensors.
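As a rough illustration of this effect, the following sketch computes the sound speed in heated air from the square-root temperature law (with 343 m/s at 293 K) and the resulting reduction in propagation delay when part of the path is hot. The function names, the 0.3 m heated width, and the 600 K temperature are illustrative assumptions rather than measured values.

```python
import math

C0 = 343.0  # sound speed at the reference temperature, in m/s
T0 = 293.0  # reference temperature, in K


def sound_speed(temp_k: float) -> float:
    """Sound speed in air at absolute temperature temp_k (ideal-gas approximation)."""
    return C0 * math.sqrt(temp_k / T0)


def delay_reduction(hot_width_m: float, hot_temp_k: float) -> float:
    """Seconds saved when hot_width_m of the path is heated from T0 to hot_temp_k."""
    return hot_width_m / C0 - hot_width_m / sound_speed(hot_temp_k)


if __name__ == "__main__":
    # Hypothetical heated region: 0.3 m wide at 600 K.
    print(f"sound speed at 600 K: {sound_speed(600.0):.1f} m/s")
    print(f"delay reduction: {delay_reduction(0.3, 600.0) * 1e3:.3f} ms")
```

Under this simplified model with a uniform hot region, the delay reduction grows linearly with the width of the heated region, which is the kind of dependence a propagation-delay measurement can pick up.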

2.2. Channel Impulse Response

As signals traverse through the air, they undergo various paths due to reflections from objects in the environment, posing challenges for accurately capturing fire-related information. Consequently, the receiver picks up multiple versions of the signal, each with its own unique delay and attenuation, a phenomenon known as multipath propagation, which can lead to signal distortion and errors in fire monitoring tasks [43,44,45,46,47,48,49]. To address this issue, we utilize Channel Impulse Response (CIR) to segment these multipath signals into discrete segments or taps, enabling more precise identification of signals influenced by fire incidents.

To capture the CIR, a predefined frame is transmitted to probe the channel and is subsequently picked up by the receiver. For a complex baseband signal, measuring the CIR involves computing the cyclic cross-correlation between the transmitted and received baseband signals as:

$$h(\tau) = \frac{1}{N}\sum_{n=0}^{N-1} x(n)\, y^{*}\big((n+\tau) \bmod N\big)$$

where $h(\tau)$ is the CIR measurement, $x(n)$ signifies the transmitted complex baseband signal, $y^{*}(n)$ denotes the conjugate of the signal $y(n)$ at the receiver, and $\tau$ corresponds to the multipath delays. $N$ is the length of $x(n)$. Stacked over successive frames, $h(\tau)$ acts as a matrix illustrating the attenuation of signals with varying delays over time $t$.
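A minimal sketch of CIR estimation by cyclic cross-correlation is given below, assuming NumPy and a Zadoff-Chu probe with u=64 and Nzc=127. The helper names `zadoff_chu` and `estimate_cir` are hypothetical. The sketch conjugates the transmitted frame; conjugating the received frame instead yields the complex conjugate of the same taps, so the tap energies are identical.

```python
import numpy as np


def zadoff_chu(u: int, n_zc: int) -> np.ndarray:
    """Root Zadoff-Chu sequence of odd length n_zc with root index u."""
    n = np.arange(n_zc)
    return np.exp(-1j * np.pi * u * n * (n + 1) / n_zc)


def estimate_cir(tx: np.ndarray, rx: np.ndarray) -> np.ndarray:
    """Cyclic cross-correlation of rx with tx, normalised by the frame length.

    Computed in the frequency domain: for each delay tau it evaluates
    (1/N) * sum_n conj(tx[n]) * rx[(n + tau) mod N].
    """
    n = len(tx)
    return np.fft.ifft(np.fft.fft(rx) * np.conj(np.fft.fft(tx))) / n


if __name__ == "__main__":
    tx = zadoff_chu(64, 127)
    # Toy two-path channel: a direct path at tap 3 and a weaker echo at tap 10.
    rx = 1.0 * np.roll(tx, 3) + 0.5 * np.roll(tx, 10)
    cir = estimate_cir(tx, rx)
    print(np.round(np.abs(cir[:12]), 3))
```

Because a Zadoff-Chu sequence has an ideal periodic autocorrelation, each propagation path appears as a single tap whose magnitude equals the path gain.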

2.3. Beamforming

In complex multipath channels, directing signal energy towards specific points of interest minimizes noise and interference, thereby enhancing the target signal. Beamforming involves steering sensor arrays’ beams to predefined angular ranges to detect signals from various directions [50]. This technique is crucial when signals originate from multiple directions and require sensing. Specifically, beamforming selectively amplifies or suppresses incoming signals by controlling the phase and amplitude of sensor array elements. To generate a beam pattern, signal amplification is focused in a desired direction while attenuating noise and interference from other directions, achieved by applying a set of weights to each sensor’s signal. These weights are optimized based on the Signal-to-Noise Ratio (SNR) or desired radiation pattern.

3. System Design

3.1. Overview

Figure 3 gives an overview of the system. The CIR estimator sequentially measures the transmitted and received Zadoff-Chu (ZC) sequences. Specifically, the ZC sequence is used as the probing signal for its good autocorrelation characteristics [48], while a cross-correlation-based approach is adopted to estimate the CIR affected by fire combustion. Based on the captured CIR, the system assesses the occurrence of fire by considering the differences in sound velocity induced by temperature changes together with the acoustic energy absorption effects, and it triggers alarms as needed. Subsequently, beamforming is applied to pinpoint the direction of the fire, producing high-quality thermal maps of fire types. Finally, based on the captured patterns of heat release rate features, a classifier is used for fire type identification.

3.2. Transceiver

The high-frequency signal generation module uses a root Zadoff-Chu sequence as the transmission signal. The root ZC sequence has a length of 128 samples and is generated with u=64 and Nzc=127, which gives it good auto-correlation properties. Different settings of u and Nzc suit the signal output requirements of different bandwidths. The settings here are chosen to respect the limits of human hearing and of the hardware sampling rate. After frequency-domain interpolation, the sequence extends to 2048 points, achieving a detection range of 7 meters, and is up-converted to the ultrasonic frequency band. The signal transmission and reception module stores the high-frequency signal on a Raspberry Pi system equipped with controllable speakers and controls playback via a Python program. The signals are received by a microphone array with a sampling rate of 48 kHz.
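The signal generation steps above can be sketched as follows, assuming NumPy. The 21 kHz carrier is an assumption chosen only for illustration, since the carrier frequency is not stated here, and the helper names are hypothetical. The interpolation is implemented as zero-padding of the spectrum, which is one common way to realize frequency-domain interpolation.

```python
import numpy as np


def zadoff_chu(u: int, n_zc: int) -> np.ndarray:
    """Root Zadoff-Chu sequence of odd length n_zc with root index u."""
    n = np.arange(n_zc)
    return np.exp(-1j * np.pi * u * n * (n + 1) / n_zc)


def interpolate_frame(seq: np.ndarray, out_len: int) -> np.ndarray:
    """Band-limited interpolation by zero-padding the spectrum to out_len bins."""
    n = len(seq)
    spec = np.fft.fft(seq)
    half = (n + 1) // 2  # number of non-negative frequency bins
    padded = np.zeros(out_len, dtype=complex)
    padded[:half] = spec[:half]                   # non-negative frequencies
    padded[out_len - (n - half):] = spec[half:]   # negative frequencies
    return np.fft.ifft(padded) * (out_len / n)    # keep the sample amplitude


def upconvert(baseband: np.ndarray, fc: float, fs: float) -> np.ndarray:
    """Shift the complex baseband frame to carrier fc and keep the real part."""
    t = np.arange(len(baseband)) / fs
    return np.real(baseband * np.exp(2j * np.pi * fc * t))


if __name__ == "__main__":
    fs = 48_000.0
    frame = interpolate_frame(zadoff_chu(64, 127), 2048)
    passband = upconvert(frame, fc=21_000.0, fs=fs)  # assumed carrier
    print(len(passband), "samples,", 1e3 * len(passband) / fs, "ms per frame")
```

With these settings, a 2048-sample frame at 48 kHz lasts about 42.7 ms, which is consistent with roughly 23 frames per second, and the occupied bandwidth is about 127/2048 of the sampling rate, or roughly 3 kHz.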

3.3. Signal Enhancement Based on Beamforming

In fire monitoring, beamforming technology plays a crucial role. In complex fire environments, signals often undergo multiple paths due to various obstacles, resulting in signal attenuation and interference [50]. To address this issue, we can utilize beamforming technology to steer the receiver’s beam towards specific directions, thereby maximizing the capture of target signals and minimizing noise and interference. As illustrated in Figure 4, in a microphone array with Mic1, Mic2, and Mic3, each microphone receives signals with different delays. By using the signal from Mic2 as a reference and adjusting the phases of Mic1 and Mic3, signals can be aligned, thus concentrating the combined signal energy. By controlling the phase and amplitude of the signals, beamforming technology can selectively enhance signals in the direction of the fire source, thereby improving the sensitivity and accuracy of the fire monitoring system.
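The alignment of Mic1 and Mic3 to the Mic2 reference can be illustrated with a simple delay-and-sum sketch, assuming NumPy. It uses integer-sample circular shifts estimated from the cross-correlation peak, which is a simplification of phase-and-amplitude weighting; the function names are hypothetical, and a practical system would likely need fractional delays and per-channel weights.

```python
import numpy as np


def lag_to_reference(ref: np.ndarray, sig: np.ndarray) -> int:
    """Integer-sample lag by which sig trails ref (circular cross-correlation peak)."""
    corr = np.fft.ifft(np.fft.fft(sig) * np.conj(np.fft.fft(ref)))
    lag = int(np.argmax(np.abs(corr)))
    n = len(ref)
    return lag if lag <= n // 2 else lag - n  # map to a signed lag


def delay_and_sum(channels, ref_index: int = 1) -> np.ndarray:
    """Align every channel to the reference channel, then average them."""
    ref = channels[ref_index]
    aligned = [np.roll(ch, -lag_to_reference(ref, ch)) for ch in channels]
    return np.mean(aligned, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    source = rng.standard_normal(512)
    # Mic1 hears the source 3 samples late, Mic3 hears it 2 samples early.
    mics = [np.roll(source, 3), source.copy(), np.roll(source, -2)]
    noisy = [m + 0.5 * rng.standard_normal(512) for m in mics]
    combined = delay_and_sum(noisy, ref_index=1)
    print("single-mic error:", np.mean((noisy[1] - source) ** 2))
    print("beamformed error:", np.mean((combined - source) ** 2))
```

Once the channels are aligned, the target signal adds coherently while independent noise averages out, which is the mechanism behind the signal-to-noise improvement described above.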

3.4. Mining Fire Related Information in CIR

So far, we have applied beamforming techniques to enhance signals from the direction of the fire while suppressing interference from other directions. Next, we focus on extracting information related to the extent of the fire’s impact. As sound waves travel through the high-temperature region, their propagation speed increases. The complex chemical and physical reactions during the fire make the temperature distribution and shape of the high-temperature region uneven, resulting in a non-uniform spatial temperature field. To characterize this distribution, we conduct the following study.

The number of taps is directly related to the spatial propagation distance of the ultrasonic signal. A smaller number of taps indicates a shorter signal propagation distance, while a larger number of taps indicates a longer propagation distance. Therefore, the sensing range $s$ can be determined by the number of taps $L$, specifically expressed as $s = L \cdot d$, where $d = v / f_s$ represents the distance corresponding to each tap, i.e., the propagation distance of the signal between two adjacent sampling points. $f_s$ is the sampling rate of the signal, and $v$ is the sound speed. Specifically, we conduct CIR measurements frame by frame, where each frame represents a single CIR trace. For ease of visualization, we normalize all signal energy values across the taps to a range between 0 and 255 and represent them using a CIR heatmap, as depicted in Figure 5. The horizontal axis represents time in frames, with the hardware capturing 23 frames of data per second at a sampling rate of 48 kHz. The vertical axis denotes the sensing range, indicated by acoustic signal delays.
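The tap-to-distance mapping and the heatmap normalization can be sketched as below, assuming NumPy, a sound speed of 343 m/s, and that the sensing range is the number of taps multiplied by the per-tap distance. The global min-max scaling is one plausible reading of the normalization step, and all function names are hypothetical.

```python
import numpy as np


def tap_distance(v: float = 343.0, fs: float = 48_000.0) -> float:
    """Distance travelled by sound between two adjacent samples, in metres."""
    return v / fs


def sensing_range(num_taps: int, v: float = 343.0, fs: float = 48_000.0) -> float:
    """Propagation distance covered by num_taps taps, in metres."""
    return num_taps * tap_distance(v, fs)


def to_heatmap(cir_energy) -> np.ndarray:
    """Scale a (taps x frames) energy matrix to integers in the range 0..255."""
    e = np.asarray(cir_energy, dtype=float)
    span = e.max() - e.min()
    if span == 0:
        return np.zeros(e.shape, dtype=np.uint8)
    return np.round(255 * (e - e.min()) / span).astype(np.uint8)


if __name__ == "__main__":
    print(f"distance per tap: {tap_distance() * 1e3:.2f} mm")
    rng = np.random.default_rng(1)
    demo = rng.random((64, 23))  # hypothetical energies: 64 taps, 23 frames
    heatmap = to_heatmap(demo)
    print(heatmap.min(), heatmap.max(), heatmap.dtype)
```

At 48 kHz each tap corresponds to roughly 7 mm of propagation, so a change in the high-temperature region shows up as a shift of only a few taps per few centimetres.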

4. Results

4.1. CIR Measurements before and after Using Beamforming

Figure 6(a) and Figure 6(b) illustrate the results of CIR measurements for an impacted region of 0.3 meters in diameter before and after using beamforming, respectively. It can be observed that the thermal image obtained after beamforming is more accurate. This is because beamforming technology allows for a more comprehensive capture of the characteristics of the affected region, thereby enhancing the accuracy and reliability of the measurements. We empirically set a threshold value, Th (e.g., Th=150), to select taps truly affected by high temperatures, i.e., those taps whose signal energy values exceed the threshold. Subsequently, for the selected taps, we sum their energy values and record it as the instantaneous Heat Release Rate (HRR) for that frame. It is noteworthy that during the fire combustion process, the HRR closely aligns with the temperature variation trend caused by the fire [51,52]. Therefore, we utilize HRR to describe the combustion patterns of different fire types.
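The thresholding step can be illustrated with a short sketch, assuming NumPy and a heatmap laid out as taps by frames with values in 0..255. The function name `frame_hrr` is hypothetical, and the returned value is an energy sum that serves as an HRR indicator rather than a calibrated HRR.

```python
import numpy as np


def frame_hrr(heatmap, th: float = 150) -> np.ndarray:
    """Per-frame sum of the normalised tap energies that exceed th.

    heatmap has shape (taps, frames); the result has one value per frame.
    """
    h = np.asarray(heatmap, dtype=float)
    return np.where(h > th, h, 0.0).sum(axis=0)


if __name__ == "__main__":
    # Toy heatmap with 3 taps and 2 frames.
    toy = [[10, 200],
           [160, 100],
           [151, 150]]
    print(frame_hrr(toy, th=150))
```

Only taps strictly above the threshold contribute, so a tap sitting exactly at Th=150 is treated as unaffected by the high-temperature region.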

4.2. Fire Type Identification

To achieve a gradual increase in flame size, we arranged four alcohol swabs in a straight line at intervals of 0.2 meters within a container, interconnected by a thread soaked in alcohol. These alcohol swabs were sequentially ignited via the thread, producing a progressively intensifying fire scenario. The captured CIR measurement results are presented in Figure 7(a). From the ignition of the first alcohol swab at the 1st second to the 4th second, the delay difference increased from an initial 1 ms to 4.5 ms. Similarly, by adjusting the valve of a cassette burner, we simulated a scenario of decreasing fire intensity, where the delay difference decreased from 3.5 ms to 1.5 ms, as shown in Figure 7(b). Our experimental results indicate that the delay difference is an effective indicator of fire severity and can be utilized to predict the fire’s propagation tendency.

Each trace represents a frame, and the affected area within each frame changes continuously, which facilitates the determination of the fire type. To accurately assess the areas affected by different types of fires, we define the region between the two farthest taps in each frame with normalized values greater than the set threshold as the area affected by the fire, i.e., the high-temperature region (HTR). By summing the energy values of the taps contained in the HTR of each frame, we obtain the energy variation that describes the heat release rate curve. Subsequently, Moving Average (MA) processing is applied to smooth the data. This step is particularly useful because the sound energy influenced by the flames fluctuates significantly between adjacent frames. By calculating the average value over a period, we suppress short-term fluctuations and capture the long-term variations in fire intensity over that period, allowing us to match the characteristics of different fire types accordingly [53]. Next, we conduct detailed studies on different fire types.
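A minimal sketch of the HTR extraction and moving-average smoothing follows. It assumes that each frame is a list of normalized tap energies, that the HTR spans every tap between the first and last tap above the threshold (inclusive), and that a trailing window is used for the moving average. The window length of 5 frames and all function names are assumptions for illustration; the text does not specify them.

```python
def high_temperature_region(frame, threshold=150):
    """Return (first, last) indices of the two farthest taps above threshold.

    Returns None when no tap exceeds the threshold.
    """
    hot = [i for i, energy in enumerate(frame) if energy > threshold]
    if not hot:
        return None
    return hot[0], hot[-1]


def htr_energy(frame, threshold=150):
    """Sum the energies of all taps inside the HTR (inclusive bounds)."""
    region = high_temperature_region(frame, threshold)
    if region is None:
        return 0
    first, last = region
    return sum(frame[first:last + 1])


def moving_average(values, window=5):
    """Trailing moving average; early samples average what is available."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            # Drop the sample that just left the window.
            running -= values[i - window]
        smoothed.append(running / min(i + 1, window))
    return smoothed


if __name__ == "__main__":
    frames = [[0, 200, 100, 180, 0], [0, 160, 170, 0, 0], [0, 0, 0, 0, 0]]
    raw = [htr_energy(frame) for frame in frames]
    print(raw, moving_average(raw, window=2))
```

A longer window gives a smoother curve at the cost of a slower response to sudden changes, such as the rapid HRR rise after a lid is opened.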

A fuel-controlled fire occurs when the development and spread of the fire are primarily determined by the quantity and arrangement of the combustible materials, given an ample supply of oxygen. In our experiment, we placed a suitable amount of paper and charcoal into a metal box as fuel, with the container top left open to provide sufficient oxygen, simulating the conditions of a real fuel-controlled fire. The experimental results are shown in Figure 8(a). Analysis of the experimental curve reveals that with ample oxygen, the HRR increases rapidly between frames 50 and 100, then stabilizes at around 140 kW. However, at frame 220, the HRR rapidly decreases due to the reduction of combustibles.

A ventilation-limited fire is characterized by the fire’s development being primarily dependent on the amount of oxygen supplied, rather than the amount of fuel. This type of fire typically occurs in enclosed or semi-enclosed spaces. In our simulation, we placed sufficient fuel in the container and covered the top with a thin metal lid to simulate the oxygen supply conditions of a real ventilation-limited fire. The experimental results are shown in Figure 8(b). Analysis indicates that even with ample fuel, the limitation of oxygen causes the HRR to gradually increase, peaking at frame 180 with an HRR of approximately 70 kW, and then gradually decreasing.

A ventilation-induced flashover fire, also known as backdraft, occurs when flames in an oxygen-deficient environment produce a large amount of incompletely burned products. When suddenly exposed to fresh air, these combustion products ignite rapidly, causing the fire to flare up violently. In our experiment, we initially placed sufficient charcoal and paper in the container as fuel, and covered the top with a thin metal lid to simulate the conditions of oxygen deficiency. As the flame within the container diminished, we used a metal wire to open the lid and introduce a large amount of oxygen, simulating the real-life oxygen supply during a flashover. The HRR curve shown in Figure 8(c) indicates that in the initial oxygen-deficient phase (before frame 230), the HRR increases and then decreases, remaining below 80 kW. After introducing sufficient oxygen by opening the lid, the HRR rapidly increases, reaching a peak of around 90 kW at frame 450, before gradually decreasing.

A pulsating fire refers to a fire where the intensity of combustion fluctuates rapidly during its development, creating a pulsating phenomenon in the combustion intensity. This is caused by the instability of oxygen supply. In our experiment, we placed an appropriate amount of paper and charcoal in the container as fuel, and used a metal lid to control the oxygen supply. After the fire intensified, we repeatedly moved the metal lid back and forth with a metal wire to simulate the instability of oxygen supply during a real fire. As shown in Figure 8(d), the HRR gradually increases to around 85 kW when both fuel and oxygen are sufficient. After frame 250, the instability in oxygen supply, caused by moving the metal lid, leads to the HRR fluctuating around 80 kW.

4.3. Classifier

We chose Support Vector Machine (SVM) as the classifier for fire type identification, with the smoothed curves as inputs. The fundamental principle is to find an optimal hyperplane that maximizes the margin between sample points of different fire classes. This approach of maximizing margins enhances the generalization capability of the model, leading to more accurate classification of new samples. Moreover, SVM focuses mainly on support vectors rather than all samples during model training. This sensitivity to support vectors enables SVM to effectively address classification issues in imbalanced datasets.
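For reference, the margin-maximization principle described above corresponds to the standard soft-margin SVM problem for a pair of classes. The text does not state the kernel, the regularization constant, or how the four fire classes are combined, so the notation below is an assumption: $\mathbf{x}_i$ denotes the smoothed HRR curve of sample $i$, $y_i \in \{-1, +1\}$ its label in a two-class subproblem, $\xi_i$ a slack variable, and $C$ the regularization constant.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;
  \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{N} \xi_i
\quad \text{s.t.} \quad
  y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \ge 1 - \xi_i,
  \qquad \xi_i \ge 0, \quad i = 1, \dots, N .
```

The geometric margin equals $2 / \lVert \mathbf{w} \rVert$, so minimizing $\lVert \mathbf{w} \rVert^{2}$ maximizes the margin. Only samples with an active constraint (the support vectors) determine the resulting hyperplane.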

5. Experiments and Evaluation

5.1. Experiment Setup

5.1.1. Hardware

The hardware of our system is depicted in Figure 9(a), consisting of a commercial-grade speaker and a circular arrangement of microphones. The acoustic signals emitted by the speaker are partially absorbed by the flames before being captured by the microphones. To facilitate the precise transmission of acoustic signals, we employ the Google AIY Voice Kit 2.0, which includes a speaker controlled by an efficient Raspberry Pi Zero. For the reception of signals, we selected the ReSpeaker 6-Mic Circular Array Kit, which operates at a sampling rate of 48 kHz, ensuring the accurate capture of audio data in the inaudible frequency range. The FireSonic system costs less than 30 USD.

5.1.2. Data Collection

We configure the root ZC sequence with the parameters and , and then upscaled it to a sequence length of to ensure coverage of a m sensing range. The data was gathered across three distinct rooms, each with different dimensions: , , . These room layouts are depicted in Figure 9(b) - 9(d). 1000 fire type measurements were conducted in each room, resulting in a total of 3000 complete measurements.

5.1.3. Model Training, Testing and Validating

We utilize the HRR curve after MA as the model’s input, providing ample information for fire monitoring. For the offline training process, we leverage a computing system equipped with 32 GB of RAM, an Intel Core i7-13700K CPU from the 13th Generation lineup, and an NVIDIA GeForce RTX 4070 GPU. Following training, the model is stored on a Raspberry Pi for subsequent online testing. The trained model has a file size of less than 6 megabytes. It’s worth noting that we also conducted tests on unfamiliar datasets to further validate the system’s performance.

5.2. Evaluation

5.2.1. Overall Performance

We name the four types of fires corresponding to Figure 8 as Type 1, Type 2, Type 3, and Type 4, respectively. Figure 10(a) shows the system’s judgment results on the four types of fires in three rooms, achieving an average recognition accuracy of 95.5%. As shown in Figure 10(b), even when a fire breaks out in a large room such as Room 3, the accuracy rate can reach above . With our holistic design, the system is able to provide accurate fire state information to firefighters, allowing them to take appropriate countermeasures.

5.2.2. Performance on Different Classifiers

To better demonstrate the effectiveness of capturing fire type features, we compared SVM with a BP neural network (BP), Random Forest (RF), and K-Nearest Neighbors (KNN), as illustrated in Table 2. SVM achieved the highest identification accuracy, averaging 95.5%. The overall data demonstrated high discriminability. However, the nonlinearity introduced by the BP neural network did not translate into an advantage. RF and KNN were significantly influenced by outliers and noise, leading to inferior performance compared to SVM.

5.2.3. Performance on Different Locations

We randomly selected 9 locations in Room 3, as shown in Figure 10(c). We obtained the average classification accuracy for each location, and all were above . This performance validates the rationality of our technique in capturing fire type differences from various angles and distances, as well as corroborates the judiciousness of our feature selection based on smoothing via moving averages. This substantiates the efficacy and robustness of our holistic design for accurate fire situation awareness under diverse scenarios.

5.2.4. Performance in Smoky Environments

We randomly selected three locations within Room 1 to simulate the performance in a smoke-filled fire scenario. The system’s performance, as shown in Figure 10(d), indicates that FireSonic can achieve an accuracy rate of over even in complex smoke environments. This high level of accuracy is due to the system fundamentally operating on the principle of monitoring heat release.

5.2.5. Performance on Different Fuels

To address potential biases associated with testing using a single fuel source, we expanded our evaluation to include six diverse fuels: ethanol, paper, charcoal, wood, plastics, and liquid fuel. The results, as presented in Table 1, demonstrate that the system consistently maintains an average classification accuracy exceeding across three different locations and all fuel scenarios. This uniform high accuracy confirms that the fire evolution features captured by the system are both discriminative and robust. Furthermore, these outcomes affirm the system’s ability to generalize effectively across a variety of fuel types, thereby underscoring the design’s overall effectiveness in providing precise fire situation awareness.

Table 1.

Performance on different fuels.

| Fuel type | Ethanol | Paper | Charcoal | Wood | Plastics | Liquid fuel |
|---|---|---|---|---|---|---|
| Classification accuracy | 97.2% | 98.6% | 95.1% | 94.3% | 98.2% | 97.5% |

Table 2.

Performance on different classifiers.

| Classifier | SVM | BP | RF | KNN |
|---|---|---|---|---|
| Best performance | 97.6% | 95.2% | 89.3% | 75.9% |
| Worst performance | 93.3% | 90.3% | 78.5% | 71.5% |
| Average performance | 95.5% | 93.5% | 84.7% | 72.8% |

5.2.6. Comparison of Performance with and without Beamforming

To further investigate the extent of performance enhancement by beamforming, we conducted 300 measurements each with and without beamforming using FireSonic in Room 3. The results, as depicted in Figure 11, show that Precision, Recall, and F1-score values are all above when beamforming is employed. In stark contrast, experiments without beamforming show performance below . This confirms the beneficial role of beamforming in capturing fire-related features via CIR.
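For readers unfamiliar with the three metrics, the following sketch shows how Precision, Recall, and F1-score are conventionally computed for one fire type from true and predicted labels. The labels and the function name are hypothetical, and the toy data in the usage example is invented purely to exercise the code; it is not measurement data from this study.

```python
def per_class_scores(y_true, y_pred, label):
    """Return (precision, recall, f1) for one class label.

    precision = TP / (TP + FP), recall = TP / (TP + FN),
    f1 = harmonic mean of precision and recall.
    Each ratio is reported as 0.0 when its denominator is zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Toy labels for illustration only.
    truth = ["Type 1", "Type 1", "Type 2", "Type 2"]
    guess = ["Type 1", "Type 2", "Type 2", "Type 2"]
    for fire_type in ("Type 1", "Type 2"):
        print(fire_type, per_class_scores(truth, guess, fire_type))
```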

5.2.7. Detection Time

The system detection time includes a frame detection time of less than 200 ms, a downconversion time of 20 ms, a CIR measurement time of 30 ms, and a fire type judgment computation time of 130 ms. The cumulative detection duration of these four steps is less than 400 ms, which sufficiently meets the real-time requirements in urgent fire scenarios.
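The latency budget can be checked with a few lines of arithmetic. The sketch below uses the four stage times reported above, treating the frame detection figure as its 200 ms upper bound; the dictionary keys and function names are hypothetical.

```python
# Per-stage times in milliseconds as reported in the text; frame detection
# is stated as "less than 200 ms", so 200 is used as an upper bound.
STAGE_BUDGET_MS = {
    "frame_detection": 200,
    "downconversion": 20,
    "cir_measurement": 30,
    "fire_type_judgment": 130,
}


def total_latency_ms(stages):
    """Worst-case end-to-end latency: the sum of all stage times."""
    return sum(stages.values())


def meets_deadline(stages, deadline_ms=400):
    """True when the summed stage times stay strictly below the deadline."""
    return total_latency_ms(stages) < deadline_ms


if __name__ == "__main__":
    print(total_latency_ms(STAGE_BUDGET_MS), meets_deadline(STAGE_BUDGET_MS))
```

With these values the upper bound is 380 ms, which leaves about 20 ms of headroom under the 400 ms target.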

5.2.8. Comparison with State-of-the-Art Work

To benchmark against state-of-the-art acoustic-based fire detection approaches, we employ the system in [19] as a baseline, which also leverages acoustic signals for fire detection. As illustrated in Table 3, the comparison highlights significant differences in detection capabilities. Current advanced technologies can only effectively detect fire-related sounds within a range of less than 1m. In contrast, FireSonic extends this detection range to seven meters, significantly broadening the scope of detection. We also evaluated the accuracy of the system under conditions where the range is less than one meter, and FireSonic achieves an average accuracy of , which is higher than the most recent technologies. Existing technologies show varying sensitivities to the acoustic characteristics of different materials, often performing poorly with certain types. Conversely, FireSonic primarily detects flames through monitoring heat release, thus adapting to a wider variety of materials and ignition scenarios, more closely aligning with the real demands of fire incident contexts. Additionally, FireSonic uses ultrasonic waves to detect flames, ensuring it is not affected by common low-frequency noise in the environment, such as conversations, thereby exhibiting enhanced noise immunity. Moreover, FireSonic includes the capability to identify types of fires, a significant advantage that current technologies do not offer.

6. Conclusion

In this study, we developed and validated FireSonic, an ultrasound-based system for accurately identifying indoor fire types. Key achievements include an extended sensing range of up to 7 meters, an average classification accuracy of 95.5%, robust performance across various materials, resistance to environmental noise, real-time monitoring with a detection latency of less than 400 milliseconds, and the ability to identify fire types. By incorporating beamforming technology, FireSonic enhances signal quality and establishes a reliable correlation between heat release and sound propagation. These advancements demonstrate FireSonic’s scientific and practical contributions to fire detection and safety.

References

- Kodur, V.; Kumar, P.; Rafi, M.M. Fire hazard in buildings: Review, assessment and strategies for improving fire safety. PSU Research Review 2019, 4, 1–23.

- Kerber, S.; et al. Impact of ventilation on fire behavior in legacy and contemporary residential construction; Underwriters Laboratories, Incorporated, 2010.

- Drysdale, D. An introduction to fire dynamics; John Wiley & Sons, 2011.

- Foroutannia, A.; Ghasemi, M.; Parastesh, F.; Jafari, S.; Perc, M. Complete dynamical analysis of a neocortical network model. Nonlinear Dynamics 2020, 100, 2699–2714.

- Ghasemi, M.; Foroutannia, A.; Nikdelfaz, F. A PID controller for synchronization between master-slave neurons in fractional-order of neocortical network model. Journal of Theoretical Biology 2023, 556, 111311.

- Perera, E.; Litton, D. A Detailed Study of the Properties of Smoke Particles Produced from both Flaming and Non-Flaming Combustion of Common Mine Combustibles. Fire Safety Science 2011, 10, 213–226.

- Drysdale, D.D., Thermochemistry. In SFPE Handbook of Fire Protection Engineering; Hurley, M.J.; Gottuk, D.; Hall, J.R.; Harada, K.; Kuligowski, E.; Puchovsky, M.; Torero, J.; Watts, J.M.; Wieczorek, C., Eds.; Springer New York: New York, NY, 2016; pp. 138–150.

- Gaur, A.; Singh, A.; Kumar, A.; Kulkarni, K.S.; Lala, S.; Kapoor, K.; Srivastava, V.; Kumar, A.; Mukhopadhyay, S.C. Fire Sensing Technologies: A Review. IEEE Sensors Journal 2019, 19, 3191–3202.