1. Introduction

Annually, more than 1,414,259 cases of prostate cancer (PCa) are diagnosed, with a staggering 375,304 individuals succumbing to the disease [

1]. Timely detection and prompt intervention play pivotal roles in efficiently managing prostate cancer and enhancing the prospects of favorable results. Prostate cancer (PCa) often presents a challenging scenario due to its propensity for non-aggressive behavior, posing a dilemma regarding the necessity of escalated treatment measures to mitigate the risk of disease progression in patients. Addressing this challenge, the Gleason Grading System categorizes tumors into distinct risk groups based on their pathological characteristics, a framework endorsed by both the International Society of Urological Pathology (ISUP) and the World Health Organization (WHO) [

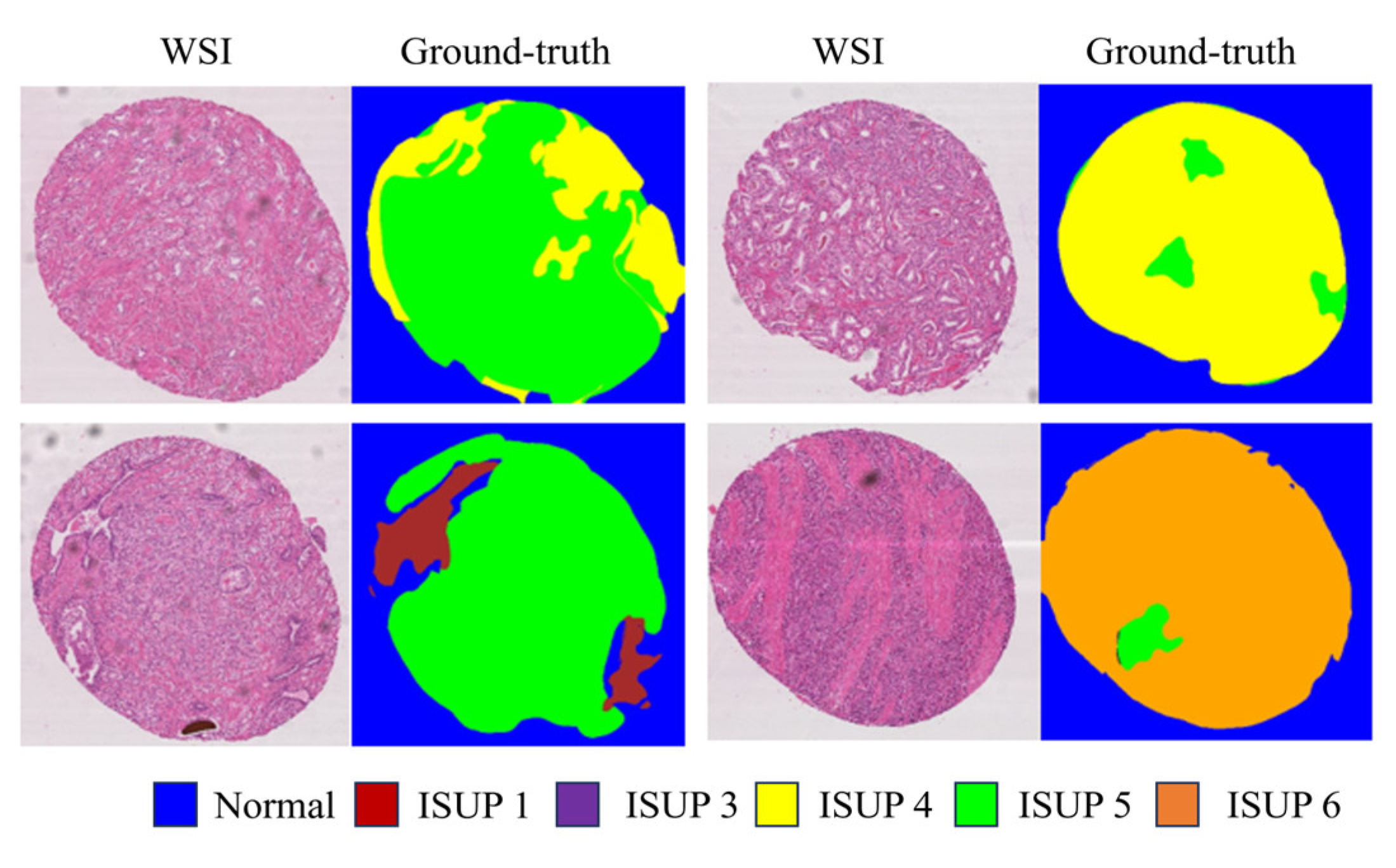

2]. Typically, pathologists perform manual examination and assessment of slides stained with Hematoxylin and Eosin (HE) to ascertain tumor status. The Gleason grading system for prostate cancer originated in the late 1960s and underwent further refinement throughout the subsequent decade. By the late 1980s, it had established itself as the predominant pathological grading system for prostate cancer diagnosis. Staging and grading are paramount in determining prognosis and devising tailored treatment strategies based on the outcomes observed in comparable patients [

3]. Different methods are available for PCa staging and detection. However, the most accurate approach is for skilled pathologists to examine stained biopsy tissue under a microscope. A Gleason grade of 1 to 5 is given to each tissue location based on the visible histological patterns. A tissue with the most prominent pattern (Gleason grade of 4) and the second most significant pattern (Gleason grade of 3) will have a Gleason score of 4+3. The final Gleason score is reported as the sum of the most and second most prominent patterns. Pathologists commonly conduct manual examination and assessment of slides stained with Hematoxylin and Eosin (HE). Nevertheless, the manual quantification of tumor sections, especially across various Gleason scores, may entail subjective judgments and prove challenging to consistently execute among diverse observers. This inherent subjectivity has the potential to introduce discrepancies in slide interpretation (refer to

Figure 1), thereby compromising the accuracy and dependability of final diagnoses and treatment strategies. Hence, the adoption of computerized pathology and deep learning methodologies holds promise for prostate cancer diagnosis from biopsies, offering potential relief from manual workload for pathologists and mitigating issues associated with individual subjectivity. In recent studies [

4], both deep learning and non-deep learning approaches have been employed by researchers for anomaly detection in medical images. Deep learning has elevated the state-of-the art scores across a multitude of domains [

5], including image processing, video analysis, as well asspeech and audio recognition. In medical domain, it has shown promising results for tumor detection and breast cancer [

6,

7]. The paper [

8] involved training deep learning models such as DeepLabv3, U-Net, and PSPNet on 60 the MICCAI dataset. The reported results indicate that PSPNet achieved the highest F1 score of 0.827 and Jaccard Index score of 0.836 among the models evaluated. On the other hand, DeepLabv3 achieved an F1 score of 0.798 and a Jaccard Index score of 0.821. Another work [

9] utilized the DeepLabV3+ model with MobileNetV2 backbone on the Gleason 2019 challenge dataset, achieving a mean Cohen’s quadratic kappa score of 0.56. [

10] leveraged two datasets—Harvard and Gleason Challenge 2019—to train and validate the model. Using Unet based architecture with different encoders produced average cohen’s kappa score of 0.728 and F1 score of 0.732 on both the dataset. Xu et al. [

11] proposed a weakly supervised approach for histopathology cancer image segmentation and classification, demonstrating significant improvements in the accuracy and efficiency of cancer detection in medical images. This method effectively leverages limited annotated data to train robust models for accurate cancer diagnosis. The study underscores the potential of weakly supervised learning in enhancing histopathological image analysis.
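The scoring rule described earlier in this section (the Gleason score as the sum of the most prominent and second most prominent patterns) can be illustrated with a short sketch. The function names are illustrative, and the mapping to ISUP grade groups is standard background knowledge rather than something stated in this paper.

```python
def gleason_score(primary: int, secondary: int) -> int:
    """Sum of the most prominent and second most prominent Gleason patterns."""
    for grade in (primary, secondary):
        if not 1 <= grade <= 5:
            raise ValueError(f"Gleason grade must be between 1 and 5, got {grade}")
    return primary + secondary


def isup_grade_group(primary: int, secondary: int) -> int:
    """ISUP grade group (1-5). Assumption: standard ISUP mapping, not from the text."""
    total = gleason_score(primary, secondary)
    if total <= 6:
        return 1
    if total == 7:
        # 3+4 and 4+3 share a score of 7 but fall into different grade groups.
        return 2 if primary == 3 else 3
    if total == 8:
        return 4
    return 5


if __name__ == "__main__":
    for primary, secondary in [(3, 4), (4, 3)]:
        score = gleason_score(primary, secondary)
        group = isup_grade_group(primary, secondary)
        print(f"{primary}+{secondary}: score {score}, grade group {group}")
```

The 3+4 versus 4+3 case shows why the order of the two patterns is reported alongside the sum: the totals are equal, but the dominant pattern differs.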

2. Proposed Methods

Image segmentation involves partitioning an image into multiple segments, assigning each pixel to a specific object type. Typically, this process employs a fundamental architecture comprising an encoder and a decoder. The encoder discerns features from the image using filters, while the decoder produces the ultimate output, often a segmentation mask outlining the objects. Many architectures adopt this standard structure or its modifications for image segmentation tasks.

After image processing, five architectures were trained: UNet, DeepLabV3, UNet++, DeepLabV3+, and the proposed GAN-based network. Following hyperparameter tuning, a cross-entropy loss function was used with a learning rate of 0.0005, and the models were initialized with ImageNet pre-trained weights. The results were evaluated and compared using two metrics: F1 score and Jaccard Index. The code was based on the PyTorch library, and the models were trained on an A100 GPU for 20 epochs.
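As a minimal sketch of the two evaluation metrics named above, the functions below compute the F1 score and Jaccard Index for a single pair of binary masks. They assume flattened masks of 0/1 values; how the study aggregates the metrics across classes and images is not stated here, so the helper names and the single-mask setting are assumptions.

```python
def confusion_counts(pred, target):
    """Count true positives, false positives, and false negatives."""
    tp = fp = fn = 0
    for p, t in zip(pred, target):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t and not p:
            fn += 1
    return tp, fp, fn


def f1_score(pred, target):
    """F1 (Dice) score: 2*TP / (2*TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def jaccard_index(pred, target):
    """Jaccard Index (IoU): TP / (TP + FP + FN)."""
    tp, fp, fn = confusion_counts(pred, target)
    denom = tp + fp + fn
    return tp / denom if denom else 1.0


if __name__ == "__main__":
    predicted = [1, 1, 1, 0, 0, 0]  # flattened predicted mask
    reference = [1, 1, 0, 1, 0, 0]  # flattened ground-truth mask
    print("F1:", f1_score(predicted, reference))
    print("Jaccard:", jaccard_index(predicted, reference))
```

Both metrics ignore true negatives, which makes them less sensitive than plain pixel accuracy to large background regions.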

1) UNet: The UNet architecture is designed for semantic segmentation tasks, particularly in biomedical image analysis. It comprises a contracting pathway for context extraction and an expanding pathway for precise localization. The contracting pathway uses standard convolutional and pooling layers, while the expanding pathway combines upsampling and convolutional layers. Skip connections fuse feature maps from both pathways, preserving spatial information and refining the segmentation results. Training UNet produced an F1 score of 0.843 and a Jaccard Index of 0.66 on the test dataset.

2) DeepLabV3: DeepLabV3’s pivotal advancement is its use of atrous convolution, also referred to as dilated convolution, applied at multiple rates within the atrous spatial pyramid pooling (ASPP) module. This design captures multi-scale context information efficiently, improving object segmentation across various scales. DeepLabV3 has attained state-of-the-art performance on established benchmark datasets for semantic segmentation tasks [14,15]. DeepLabV3 produced an F1 score of 0.86 and a Jaccard Index of 0.70 on the test dataset.
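To make the idea of atrous (dilated) convolution concrete, here is a toy one-dimensional sketch. It is not the DeepLabV3 implementation: real ASPP operates on 2D feature maps with learned kernels and concatenates the branches, whereas the function names, the fixed kernel, and the 1D signal here are illustrative assumptions.

```python
def dilated_conv1d(signal, kernel, rate):
    """Valid 1D convolution whose kernel taps are spaced `rate` samples apart."""
    if rate < 1:
        raise ValueError("rate must be a positive integer")
    # Number of input samples covered by one kernel placement.
    span = (len(kernel) - 1) * rate + 1
    return [
        sum(w * signal[start + k * rate] for k, w in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]


def multi_rate_branches(signal, kernel, rates):
    """Apply the same kernel at several rates, one branch per rate."""
    return {rate: dilated_conv1d(signal, kernel, rate) for rate in rates}


if __name__ == "__main__":
    signal = [1, 2, 3, 4, 5, 6, 7]
    kernel = [1, 0, -1]
    for rate, output in multi_rate_branches(signal, kernel, [1, 2]).items():
        print(f"rate={rate}: {output}")
```

With the same three-tap kernel, rate 1 covers 3 input samples and rate 2 covers 5, so a larger rate widens the receptive field without adding parameters.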

3) UNet++: UNet++ extends the original UNet architecture, addressing its limitations by introducing a nested and densely connected U-shaped network. It replaces the single skip connections of the original UNet with nested and dense skip pathways. UNet++ employs multiple skip connections at various scales, forming a nested architecture that improves the network’s ability to capture multi-scale contextual information for more precise segmentation. These connections promote feature reuse and gradient flow, mitigating the vanishing gradient issue during training and increasing the network’s capacity to learn intricate features. UNet++ produced an F1 score of 0.853 and a Jaccard Index score of 0.680.

4) DeepLabV3+: DeepLabV3+ builds upon DeepLabV3 by introducing an encoder-decoder architecture. This addition addresses the loss of spatial information during encoding in DeepLabV3. While DeepLabV3 relies on ASPP for capturing multi-scale context, DeepLabV3+ uses the decoder module to refine the segmentation maps and achieve more precise boundary delineation. DeepLabV3+ produced an F1 score of 0.824 and a Jaccard Index score of 0.622.

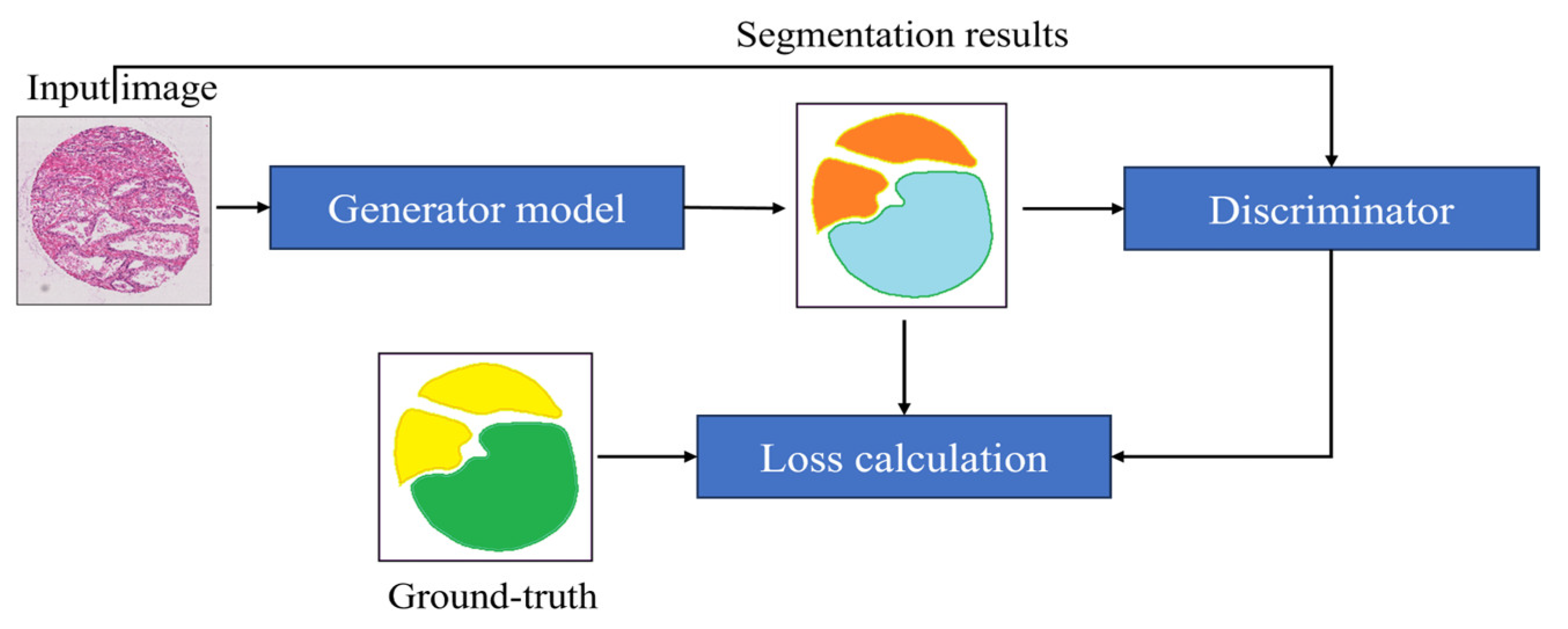

5) Proposed segmentation: We used a generative segmentation approach that combines two techniques, generative adversarial networks (GANs) and image segmentation, to improve the accuracy of segmenting images. In this method, a UNet model acts as the generator, transforming low-resolution images into detailed segmented images. Instead of comparing generated images with real images, the discriminator evaluates how close the generated segmented images are to the actual segmented images. The process is guided by a loss function that pushes the generated segmentations to match the real ones as closely as possible. By iteratively refining the generator based on feedback from the discriminator, this approach produces accurate segmentations with realistic details.

The generator loss $L_G$ and the discriminator loss $L_D$ are defined in terms of the following quantities: MSE stands for Mean Squared Error, MAE stands for Mean Absolute Error, Dis is the discriminator, λ is a weight parameter for balancing the MAE term in the generator loss, $\hat{S}$ represents the segmented image, $I$ represents the input image, $Y$ represents the ground-truth image, 1 represents a tensor of ones, and 0 represents a tensor of zeros.
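One pair of losses consistent with these definitions can be written as follows. This is a sketch under stated assumptions, not necessarily the exact form used in this work: it assumes a least-squares adversarial term, a discriminator that sees only the segmentation map, and equal weighting of the two discriminator terms. The symbols $L_G$, $L_D$, $\hat{S}$, $Y$, and the generator name $\mathrm{Gen}$ are notation chosen here for illustration.

```latex
\begin{align}
\hat{S} &= \mathrm{Gen}(I), \\
L_G &= \mathrm{MSE}\big(\mathrm{Dis}(\hat{S}),\, \mathbf{1}\big)
       + \lambda \, \mathrm{MAE}\big(\hat{S},\, Y\big), \\
L_D &= \mathrm{MSE}\big(\mathrm{Dis}(Y),\, \mathbf{1}\big)
       + \mathrm{MSE}\big(\mathrm{Dis}(\hat{S}),\, \mathbf{0}\big).
\end{align}
```

Under this reading, the generator is rewarded when the discriminator scores its output close to 1 and when the output stays close to the ground truth, while the discriminator is trained to score ground-truth masks as 1 and generated masks as 0.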

Figure 3. The Block Diagram of the Proposed Segmentation Algorithm.


4. Results

The performance of various deep learning architectures for prostate cancer segmentation and grading was evaluated on the MICCAI-2019 dataset.

Table 1 summarizes the performance metrics for each model, including the Jaccard Index, F1 Score, and Accuracy.

The UNet architecture achieved an F1 score of 0.843 and a Jaccard Index of 0.660. While this is a solid result, UNet was outperformed by DeepLabV3, UNet++, and the proposed model. DeepLabV3, which uses atrous convolution to capture multi-scale context, attained an F1 score of 0.860 and a Jaccard Index of 0.700. Its ability to handle objects at different scales proved beneficial for this task, improving segmentation performance over UNet. UNet++, an extension of the original UNet with nested and densely connected skip pathways, achieved an F1 score of 0.853 and a Jaccard Index of 0.680. Its nested architecture captured multi-scale contextual information more effectively than the original UNet, resulting in better segmentation accuracy. DeepLabV3+, which builds upon DeepLabV3 by incorporating an encoder-decoder architecture, produced an F1 score of 0.824 and a Jaccard Index of 0.622. Although the decoder module adds a refinement step, its performance did not surpass that of DeepLabV3, indicating the need for further optimization.

The proposed GAN-based segmentation model outperformed all other architectures with an F1 score of 0.872 and a Jaccard Index of 0.777. These results suggest that adversarial learning gives the GAN-based model an advantage in segmentation accuracy. The models were further assessed on their accuracy in predicting the correct Gleason grades. The GAN-based model achieved the highest accuracy of 0.935, and DeepLabV3 and UNet++ also reached high accuracies of 0.920 and 0.919, respectively.

In conclusion, the proposed GAN-based segmentation model performed well in the automated Gleason grading of prostate cancer, outperforming the other models evaluated on F1 score, Jaccard Index, and accuracy. The integration of adversarial training within the segmentation framework appears to be a key factor in improving segmentation accuracy and reliability. Future work will aim to further refine these models and explore additional strategies to improve their performance, ultimately contributing to more accurate and efficient prostate cancer diagnosis.
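The figures reported in this section can be collected in one place and ranked, as in the sketch below. The values are copied from the text above; accuracy is not stated for UNet or DeepLabV3+, so those entries are left as `None`. The dictionary layout and the function name `rank_by` are illustrative.

```python
# Metrics as reported in the text; None marks values the text does not state.
reported = {
    "UNet": {"f1": 0.843, "jaccard": 0.660, "accuracy": None},
    "DeepLabV3": {"f1": 0.860, "jaccard": 0.700, "accuracy": 0.920},
    "UNet++": {"f1": 0.853, "jaccard": 0.680, "accuracy": 0.919},
    "DeepLabV3+": {"f1": 0.824, "jaccard": 0.622, "accuracy": None},
    "GAN-based (proposed)": {"f1": 0.872, "jaccard": 0.777, "accuracy": 0.935},
}


def rank_by(metric, results=reported):
    """Model names sorted from best to worst, skipping models without a value."""
    available = {
        name: scores[metric]
        for name, scores in results.items()
        if scores[metric] is not None
    }
    return sorted(available, key=available.get, reverse=True)


if __name__ == "__main__":
    for metric in ("f1", "jaccard", "accuracy"):
        print(metric, "->", rank_by(metric))
```

The F1 and Jaccard rankings agree with each other, which matches the ordering of the models discussed above.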

5. Discussion

The findings from this study highlight the potential of deep learning techniques for automating the Gleason grading of prostate cancer. Pre-training the encoder components of segmentation models on large datasets such as ImageNet has been instrumental: it allows the models to extract transferable features that can be fine-tuned for specific tasks using smaller datasets. This transfer learning technique proved effective in improving the performance of the models evaluated in this study.

The GAN-based segmentation model achieved the best performance metrics, highlighting the efficacy of adversarial training in improving segmentation accuracy. The results indicate that traditional models like UNet and DeepLabV3, while effective, do not capture intricate details as efficiently as the GAN-based model. The GAN’s ability to generate more precise segmentations can be attributed to the adversarial training process, which continuously pushes the generator to produce outputs that are indistinguishable from the ground truth. This capability is crucial in medical image analysis, where accurate segmentation is vital for reliable diagnosis and treatment planning.

Future research will focus on exploring alternative architectures and strategies to further improve automated Gleason grading models. One promising direction is the use of ensemble methods, which combine the strengths of multiple models to improve overall accuracy and robustness. Incorporating domain-specific knowledge, in the form of expert insights and annotations, could provide further gains. Expanding the dataset by integrating data from multiple sources will be crucial for developing models that generalize across diverse patient populations. This diversification will help the models capture domain-invariant features more effectively, leading to robust performance in real-world clinical settings.

The ultimate objective is to turn these models into practical tools that can assist pathologists in clinical practice. A web-based application built on these models would provide an accessible and user-friendly platform for automated Gleason grading. Such a tool could improve clinical workflows by reducing the manual workload for pathologists, improving diagnostic consistency, and providing timely diagnostic support. Once satisfactory performance metrics are achieved, the next steps will involve rigorous validation of the models in clinical settings to ensure their reliability and efficacy. Collaborating with medical professionals and integrating their feedback will be essential in refining the application and ensuring it meets the needs of clinical practice.

6. Conclusions

The proposed approach, framed as a classification problem and utilizing ResNet models in conjunction with the Diagset dataset, highlights its potential as a robust tool for prostate cancer diagnosis and Gleason grading. The integration of machine learning and deep learning techniques represents a pivotal advancement in prostate cancer diagnosis and grading. The proposed methodology, centered around convolutional neural networks operating on histopathological scans at multiple magnification levels, presents a comprehensive and robust approach to prostate cancer diagnosis and Gleason grading. Leveraging the rich resource provided by the Diagset dataset, this research offers insights into the intricate features underlying prostate cancer pathology across different Gleason grades and tissue types. The comprehensive evaluation of the proposed model’s performance across various magnification levels reaffirms its adaptability and reliability in accurately assessing prostate cancer pathology. The consistent high accuracies achieved by the ResNet models further validate their stability and efficacy, positioning them as valuable assets in clinical practice for prostate cancer diagnosis and Gleason grading.

Moving forward, an intriguing prospect involves transforming these models into an accessible web application, which would democratize access to advanced diagnostic tools. This application would allow healthcare professionals to seamlessly integrate machine learning into clinical practice, offering features such as real-time analysis of histopathological images and automated Gleason grading. Additionally, the application would facilitate integration with electronic medical record systems, enabling streamlined patient management and improved clinical workflows. By providing an intuitive interface, the web application would ensure that sophisticated machine learning tools are accessible to clinicians without requiring extensive technical expertise, thereby enhancing diagnostic accuracy and efficiency in diverse healthcare settings.

This study establishes a solid foundation for advancing prostate cancer diagnosis and Gleason grading through machine learning. Future research should explore additional algorithms, such as Vision Transformers, and improve model efficiency to further enhance accuracy, scalability, and clinical utility. By addressing these areas, future work can expand the capabilities and applications of these models, ultimately benefiting healthcare providers and patients alike.

Supplementary Materials

Not applicable.

Funding

This research received no funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rice-Stitt, T., Valencia-Guerrero, A., Cornejo, K. M., & Wu, C. L. Updates in Histologic Grading of Urologic Neoplasms. Archives of Pathology & Laboratory Medicine 2020, 144(3), 335–343. [CrossRef]

- Goldenberg, S. L., Nir, G., & Salcudean, S. E. A new era: artificial intelligence and machine learning in prostate cancer. Nature Reviews Urology 2019, 16(7), 391-403. [CrossRef]

- Ström, P., et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: a population-based, diagnostic study. The Lancet Oncology 2020, 21(2), 222-232. [CrossRef]

- Bhattacharjee, S., et al. Quantitative Analysis of Benign and Malignant Tumors in Histopathology: Predicting Prostate Cancer Grading Using SVM. Applied Sciences 2019, 9(15), 2969. [CrossRef]

- Abraham, B., & Nair, M. S. Automated grading of prostate cancer using convolutional neural network and ordinal class classifier. Informatics in Medicine Unlocked 2019, 17, 100256. [CrossRef]

- Alzubaidi, L., et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. Journal of Big Data 2021, 8(1), 53. [CrossRef]

- Wang, J., et al. Cnn-rnn: A unified framework for multi-label image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, 2285-2294.

- Kumar, M. D., Babaie, M., Zhu, S., Kalra, S., & Tizhoosh, H. R. A comparative study of CNN, BoVW and LBP for classification of histopathological images. In 2017 IEEE Symposium Series on Computational Intelligence (SSCI), 2017, 1-7. [CrossRef]

- LeCun, Y., Bengio, Y., & Hinton, G. Deep learning. Nature 2015, 521(7553), 436-444. [CrossRef]

- Pati, P.; et al. Weakly supervised joint whole-slide segmentation and classification in prostate cancer. Medical Image Analysis 2023, 89, 102915. [Google Scholar] [CrossRef] [PubMed]

- Fakoor, R.; Ladhak, F.; Nazi, A.; Huber, M. Using deep learning to enhance cancer diagnosis and classification. In Proceedings of the International Conference on Machine Learning 2013, 28, 3937–3949. [Google Scholar]

- Xu, W.; Fu, Y.L.; Zhu, D. ResNet and its application to medical image processing: Research progress and challenges. Computer Methods and Programs in Biomedicine 2023, 2023, 107660. [Google Scholar] [CrossRef]

- Almoosawi, N.M.; Khudeyer, R.S. ResNet-34/DR: a residual convolutional neural network for the diagnosis of diabetic retinopathy. Informatica 2021, 45. [Google Scholar]

- Guo, M., & Du, Y. Classification of thyroid ultrasound standard plane images using ResNet-18 networks. In 2019 IEEE 13th International Conference on Anti-counterfeiting, Security, and Identification (ASID), 2019, 324-328.

- Yurtkulu, S. C., Şahin, Y. H., & Unal, G. Semantic segmentation with extended DeepLabv3 architecture. In 2019 27th Signal Processing and Communications Applications Conference (SIU), 2019, 1-4.

- Heryadi, Y., et al. The effect of ResNet model as feature extractor network to performance of DeepLabV3 model for semantic satellite image segmentation. In 2020 IEEE Asia-Pacific Conference on Geoscience, Electronics and Remote Sensing Technology (AGERS), 2020, 74-77.

- Kayalibay, B., Jensen, G., & van der Smagt, P. CNN-based segmentation of medical imaging data. arXiv preprint arXiv:1701.03056 2017.

- Mortazi, A., & Bagci, U. Automatically designing CNN architectures for medical image segmentation. In Machine Learning in Medical Imaging: 9th International Workshop, MLMI 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 16, 2018, Proceedings 9, pp. 98–106. Springer International Publishing.

- Liu, F.; Lin, G.; Shen, C. CRF learning with CNN features for image segmentation. Pattern Recognition 2015, 48, 2983–2992. [Google Scholar] [CrossRef]

- Sharma, P.; Berwal YP, S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Information Processing in Agriculture 2020, 7, 566–574. [Google Scholar] [CrossRef]

- Dolz, J.; et al. HyperDense-Net: a hyper-densely connected CNN for multi-modal image segmentation. IEEE Transactions on Medical Imaging 2019, 38, 1116–1126. [Google Scholar] [CrossRef]

- Sultana, F.; Sufian, A.; Dutta, P. Evolution of image segmentation using deep convolutional neural network: A survey. Knowledge-Based Systems 2020, 201, 106062. [Google Scholar] [CrossRef]

- Frontiers in Radiology. Cardiothoracic Imaging. Vol. 3. January 12, 2024. [CrossRef]

- He, K., Zhang, X., Ren, S., & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, 770–778. [CrossRef]

- Wang, X., et al. Weakly supervised framework for detecting lesions in medical images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, 3514-3522.

- Liu, F.; et al. Deep residual network for joint analysis of breast and optical images in cancer detection. IEEE Journal of Biomedical and Health Informatics 2019, 23, 1395–1405. [Google Scholar]

- Zhu, H.; et al. ResNet-50 architecture for breast cancer histopathological image classification. Journal of Medical Systems 2020, 44, 77. [Google Scholar]

- Cao, R., et al. Prostate cancer detection and segmentation in multi-parametric MRI via CNN and conditional random field. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), 2019, pp. 1900–1904. [CrossRef]

- Soni, M., et al. Light weighted healthcare CNN model to detect prostate cancer on multiparametric MRI. Computational Intelligence and Neuroscience 2022. [CrossRef]

- Tolkach, Y.; et al. High-accuracy prostate cancer pathology using deep learning. Nature Machine Intelligence 2020, 2, 411–418. [Google Scholar] [CrossRef]

- Abbasi, A.A.; et al. Detecting prostate cancer using deep learning convolution neural network with transfer learning approach. Cognitive Neurodynamics 2020, 14, 523–533. [Google Scholar] [CrossRef]

- De Vente, C.; et al. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Transactions on Biomedical Engineering 2020, 68, 374–383. [Google Scholar] [CrossRef] [PubMed]

- Anguita, D., et al. The ‘K’ in K-fold Cross Validation. In ESANN 2012, 102, 441–446. [Google Scholar]

- Li, Z.; et al. A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Transactions on Neural Networks and Learning Systems 2021, 33, 6999–7019. [Google Scholar] [CrossRef] [PubMed]

- Albawi, S., Mohammed, T. A., & Al-Zawi, S. Understanding of a convolutional neural network. In 2017 International Conference on Engineering and Technology (ICET) 2017, 1-6. [CrossRef]

- Shah, R.B. Current perspectives on the Gleason grading of prostate cancer. Archives of Pathology & Laboratory Medicine 2009, 133, 1810–1816. [Google Scholar]

- Tabesh, A.; et al. Multifeature prostate cancer diagnosis and Gleason grading of histological images. IEEE Transactions on Medical Imaging 2007, 26, 1366–1378. [Google Scholar] [CrossRef] [PubMed]

- Kott, O.; et al. Development of a deep learning algorithm for the histopathologic diagnosis and Gleason grading of prostate cancer biopsies: a pilot study. European Urology Focus 2021, 7, 347–351. [Google Scholar] [CrossRef] [PubMed]

- Sarwinda, D.; et al. Deep learning in image classification using residual network (ResNet) variants for detection of colorectal cancer. Procedia Computer Science 2021, 179, 423–431. [Google Scholar] [CrossRef]

- Hou, L., et al. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, 2424–2433. [Google Scholar]

- Koziarski, M.; et al. Combined cleaning and resampling algorithm for multi-class imbalanced data with label noise. Knowledge-Based Systems 2020, 204, 106223. [Google Scholar] [CrossRef]

- Deng, J., et al. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, 248-255.

- Samaratunga, H.; et al. From Gleason to International Society of Urological Pathology (ISUP) grading of prostate cancer. Scandinavian Journal of Urology 2016, 50, 325–329. [Google Scholar] [CrossRef] [PubMed]

- Nir, G.; et al. Automatic grading of prostate cancer in digitized histopathology images: Learning from multiple experts. Medical Image Analysis 2018, 50, 167–180. [Google Scholar] [CrossRef]

- Gleason, D.F. Classification of prostatic carcinomas. Cancer Chemotherapy Reports 1966, 50, 125–128. [Google Scholar]

- Nagpal, K.; et al. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digital Medicine 2019, 2, 48. [Google Scholar] [CrossRef]

- Litjens, G.; et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Scientific Reports 2016, 6, 26286. [Google Scholar] [CrossRef]

- Wang, R., Yang, S., Wang, M., Zhou, Y., Li, X., Chen, W., Liu, W., Huang, Y., Wu, J., Cao, J., Feng, L., Wan, J., Wang, J., Huang, L., & Qian, K. (2024). A sustainable approach to universal metabolic cancer diagnosis. Nature Sustainability, 7(5), 602–615. [CrossRef]

- Srinidhi, C.L.; Ciga, O.; Martel, A.L. Deep neural network models for computational histopathology: A survey. Medical Image Analysis 2021, 67, 101813. [Google Scholar] [CrossRef] [PubMed]

- Lu, L.; et al. Deep learning and convolutional neural networks for medical image computing. Advances in Computer Vision and Pattern Recognition 2017, 10, 978–3. [Google Scholar]

- Milosevic, M., Jin, Q., Singh, A., & Amal, S. (2024). Applications of AI in multi-modal imaging for cardiovascular disease. Frontiers in Radiology, 3. [CrossRef]

- Rice-Stitt, T., Valencia-Guerrero, A., Cornejo, K. M., & Wu, C. L. (2020). Updates in Histologic Grading of Urologic Neoplasms. Archives of Pathology & Laboratory Medicine, 144(3), 335–343. [CrossRef]

- Singh, A., Wan, M., Harrison, L., Breggia, A., Christman, R., Winslow, R. L., & Amal, S. (2023). Visualizing Decisions and Analytics of Artificial Intelligence based Cancer Diagnosis and Grading of Specimen Digitized Biopsy: Case Study for Prostate Cancer. In Companion Proceedings of the 28th International Conference on Intelligent User Interfaces (pp. 166–170). Association for Computing Machinery. [CrossRef]

- Liu, B., Wang, Y., Weitz, P., Lindberg, J., Hartman, J., Wang, W., Egevad, L., Grönberg, H., Eklund, M., & Rantalainen, M. (2022). Using deep learning to detect patients at risk for prostate cancer despite benign biopsies. iScience, 25(7), 104663. [CrossRef]

- Wang, R., Yang, S., Wang, M. et al. A sustainable approach to universal metabolic cancer diagnosis. Nat Sustain 7, 602–615 (2024). [CrossRef]

- Gan, Y., Li, L., Zhang, L., Yan, S., Gao, C., Hu, S., Qiao, Y., Tang, S., Wang, C., & Lu, Z. (2018). Association between shift work and risk of prostate cancer: a systematic review and meta-analysis of observational studies. Carcinogenesis, 39(2), 87–97. [CrossRef]

- Huang, R., Li, Y., Wu, H., Liu, B., Zhang, X., & Zhang, Z. (2023). 68Ga-PSMA-11 PET/CT versus 68Ga-PSMA-11 PET/MRI for the detection of biochemically recurrent prostate cancer: a systematic review and meta-analysis. Frontiers in Oncology, 13, 1216894. [CrossRef]

- Yang, C., Sheng, D., Yang, B., Zheng, W., & Liu, C. (2024). A Dual-Domain Diffusion Model for Sparse-View CT Reconstruction. IEEE Signal Processing Letters, 31, 1279–1283. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).