1. Introduction and Summary

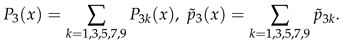

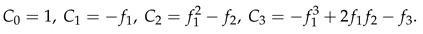

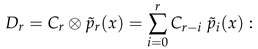

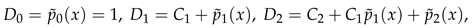

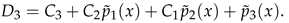

Suppose that we have a non-lattice estimate of an unknown parameter of a statistical model, based on a sample of size n. The distribution of a standard estimate is determined by the coefficients obtained by expanding its cumulants in powers of . In §2 we summarise the extended Edgeworth-Cornish-Fisher expansions of Withers (1984) for when . Then we give the multivariate Edgeworth expansions to . We show that the distribution of has the form where the normal distribution with , and for where has terms, reducible using symmetry. Its density has a similar form. We argue that these expansions may be valid even if is bounded.

§3 gives these expansions in complete detail when .

In §4 we suppose that and partition as of dimensions and We derive expansions for the conditional density and distribution of given to . §5 specialises to bivariate estimates.

§6 gives the extended Cornish-Fisher expansions for the quantiles of the conditional distribution when . An example is the distribution of a sample mean given the sample variance.

2. Extended Edgeworth-Cornish-Fisher theory

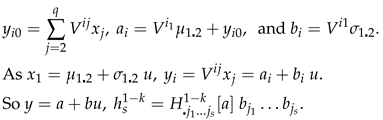

Univariate estimates. Suppose that is a standard estimate of with respect to n, typically the sample size. That is, as , and its rth cumulant can be expanded as

where the cumulant coefficients may depend on n but are bounded as , and is bounded away from 0. Here and below ≈ indicates an asymptotic expansion that need not converge. So (1) holds in the sense that

where means that is bounded in n. Withers (1984) extended Cornish and Fisher (1937) and Fisher and Cornish (1960) to show that the distribution and quantiles of have asymptotic expansions in powers of :

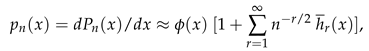

where is a unit normal random variable with density , and are polynomials in x and the standardized cumulant coefficients

and so on, where is the kth Hermite polynomial,

See Withers (1984) for , Withers (2000) for (6) and §6 for relations between . Also,

For , is a polynomial of order only , while is of order .
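The Hermite polynomials entering these expansions can be generated numerically. The sketch below assumes the probabilists' convention, with recurrence $He_{k+1}(x) = x\,He_k(x) - k\,He_{k-1}(x)$, and also evaluates the moment form $He_k(x) = E\,(x + iZ)^k$ for a unit normal $Z$. Whether this moment form coincides with the paper's equation (6) is an assumption, since that display is not reproduced here; the function names are illustrative only.

```python
from math import comb


def hermite_coeffs(k):
    """Coefficients [c0, ..., ck] of He_k(x) = sum_j c_j * x**j.

    Uses He_0 = 1, He_1 = x and He_{m+1} = x*He_m - m*He_{m-1}.
    """
    prev, cur = [1], [0, 1]
    if k == 0:
        return prev
    for m in range(1, k):
        nxt = [0] + cur                  # multiply He_m by x
        for j, c in enumerate(prev):
            nxt[j] -= m * c              # subtract m * He_{m-1}
        prev, cur = cur, nxt
    return cur


def hermite(k, x):
    """Evaluate He_k at x from its coefficients."""
    return sum(c * x**j for j, c in enumerate(hermite_coeffs(k)))


def hermite_via_moments(k, x):
    """He_k(x) = E (x + iZ)^k for Z ~ N(0, 1), using E Z^(2j) = (2j - 1)!!."""
    total, double_factorial = 0, 1
    for j in range(k // 2 + 1):
        if j > 0:
            double_factorial *= 2 * j - 1
        total += comb(k, 2 * j) * (-1) ** j * double_factorial * x ** (k - 2 * j)
    return total
```

For integer arguments both routes use exact integer arithmetic, so they can be compared without rounding error.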

The original Edgeworth expansion was for the mean of n independent identically distributed random variables from a distribution with rth cumulant . So (1) holds with , and other . An explicit formula for its general term was given in Withers and Nadarajah (2009) using Bell polynomials.
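As a numerical illustration of the sample-mean case, the following sketch evaluates the classical two-term Edgeworth approximation for the standardized mean, $\Phi(x) - \phi(x)\{n^{-1/2}\lambda_3 He_2(x)/6 + n^{-1}[\lambda_4 He_3(x)/24 + \lambda_3^2 He_5(x)/72]\}$, where $\lambda_r$ is the rth cumulant divided by $\sigma^r$. The unit exponential test case (for which $\lambda_3 = 2$, $\lambda_4 = 6$ and the exact distribution of the sum is gamma) and all function names are choices made for this illustration, not taken from the paper.

```python
from math import exp, factorial, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def edgeworth_cdf_mean(x, n, lam3, lam4):
    """Two-term Edgeworth approximation to P(Y_n <= x),
    where Y_n = sqrt(n) * (sample mean - mu) / sigma and
    lam3, lam4 are the standardized 3rd and 4th cumulants."""
    he2 = x**2 - 1
    he3 = x**3 - 3 * x
    he5 = x**5 - 10 * x**3 + 15 * x
    h1 = lam3 * he2 / 6
    h2 = lam4 * he3 / 24 + lam3**2 * he5 / 72
    return STD_NORMAL.cdf(x) - STD_NORMAL.pdf(x) * (h1 / sqrt(n) + h2 / n)


def exact_cdf_exponential_mean(x, n):
    """Exact P(Y_n <= x) for the mean of n unit exponentials.
    The sum is Gamma(n, 1), whose cdf is a finite Poisson sum."""
    t = n + x * sqrt(n)
    if t <= 0:
        return 0.0
    return 1.0 - exp(-t) * sum(t**k / factorial(k) for k in range(n))


n, x = 10, 0.5
exact = exact_cdf_exponential_mean(x, n)
normal_error = abs(STD_NORMAL.cdf(x) - exact)
edgeworth_error = abs(edgeworth_cdf_mean(x, n, lam3=2.0, lam4=6.0) - exact)
print(f"normal error {normal_error:.4f}, Edgeworth error {edgeworth_error:.5f}")
```

Comparing the two printed errors shows how much the first two correction terms improve on the normal approximation at this sample size.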

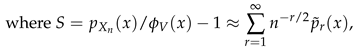

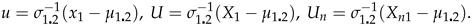

Ordinary Bell polynomials. For a sequence the partial ordinary Bell polynomial , is defined by the identity

where for

They are tabled on p309 of Comtet (1974).
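A small sketch may help make the definition concrete. It assumes the standard convention in which the partial ordinary Bell polynomial with indices $(r, j)$ is the coefficient of $z^r$ in $S^j$ for $S = \sum_{i \ge 1} S_i z^i$. The function name and the argument layout (`s[i]` standing for $S_{i+1}$) are illustrative.

```python
def partial_ordinary_bell(r, j, s):
    """Coefficient of z**r in (s[0]*z + s[1]*z**2 + ...)**j.

    s[i] stands for S_{i+1}; entries beyond degree r are ignored and
    missing entries are treated as 0.
    """
    # base[m] is the coefficient of z**m in S, truncated at degree r.
    base = [0] + list(s[:r]) + [0] * max(0, r - len(s))
    power = [1] + [0] * r                # S**0 = 1
    for _ in range(j):
        nxt = [0] * (r + 1)
        for a, pa in enumerate(power):
            if pa == 0:
                continue
            for b in range(1, r - a + 1):
                nxt[a + b] += pa * base[b]   # multiply by S, keep degree <= r
        power = nxt
    return power[r]
```

For example, with `s = [1, 2, 3, 4]` the call `partial_ordinary_bell(4, 2, s)` evaluates $2 S_1 S_3 + S_2^2$.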

The complete ordinary Bell polynomial, is defined in terms of S by

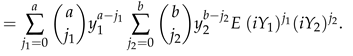

Multivariate estimates. Suppose that is a standard estimate of with respect to n. That is, as , and for , the rth order cumulants of can be expanded as

where the cumulant coefficients may depend on n but are bounded as . So the bar replaces by j:

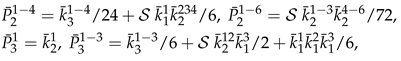

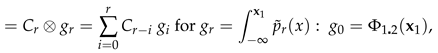

with density and distribution

V may depend on n, but we assume that is bounded away from 0. Set

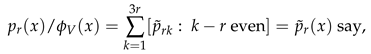

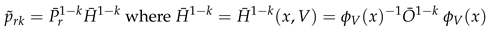

where for is a function of given for the 1st time in Appendix A. In (13), (14) and below, we use the tensor summation convention of implicitly summing over their range . We make symmetric in using the operator that symmetrizes over :

The terms involving are given in Appendix A. By Withers and Nadarajah (2010b) or Withers (2024), has distribution and density

is

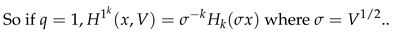

the multivariate Hermite polynomial. For their dual form see Withers and Nadarajah (2014). By Withers (2000), for ,

where is the element of and is the element of . This gives in terms of the moments of Y. For example

This gives the Edgeworth expansion for the distribution of to . See Withers (2024) for more terms.

For large q, of (21) and (23) have terms. So if and , then where . So if for example is bounded, then the Edgeworth series should converge if

The log density can be expanded as

See Withers and Nadarajah (2016). Also for of (6),

Example 1.

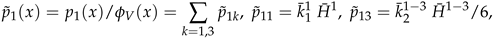

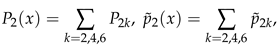

Let be a sample mean. Then , and only the leading coefficients in (11) are non-zero. So . In the order needed, the non-zero are

and have terms but many are duplicates. We now show how symmetry reduces this to terms. We use the multinomial coefficient . For example .
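The principle behind this reduction can be sketched in general terms. A sum over r indices, each ranging over 1, ..., q, has $q^r$ ordered index tuples, but a symmetric summand takes only $\binom{q+r-1}{r}$ distinct values, one per unordered index set, each counted with a multinomial weight. The helper names below are illustrative, and the specific counts quoted in the text are not reproduced here.

```python
from collections import Counter
from itertools import combinations_with_replacement
from math import comb, factorial


def multinomial(counts):
    """Number of distinct orderings of a multiset with these multiplicities."""
    out = factorial(sum(counts))
    for c in counts:
        out //= factorial(c)
    return out


def symmetric_term_count(q, r):
    """Return (number of distinct unordered index sets, total ordered tuples).

    The second entry is recovered by summing the multinomial weights and
    should equal q**r.
    """
    multisets = list(combinations_with_replacement(range(1, q + 1), r))
    weights = [multinomial(Counter(m).values()) for m in multisets]
    return len(multisets), sum(weights)
```

For q = 3 and r = 4, for instance, the 81 ordered tuples collapse to 15 distinct index sets.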

Set

where tensor summation is not used. By (22),

where all are distinct. Similarly we can write out for . This reduces the number of terms in from to for , to for , to for and to for . If we reinterpret as , where again tensor summation is not used, then we can reinterpret the above expression for , as an expression for . For example,

These results can be extended to Type B estimates, that is to with cumulant expansions not of type (11), but

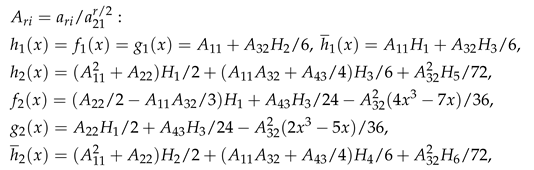

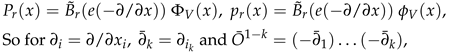

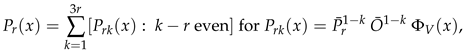

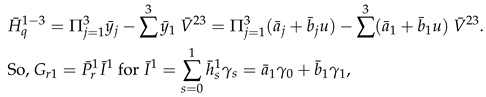

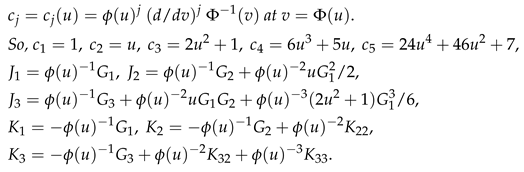

3. The Distribution of for

We first give of (22) for , and then of (21).

for of (16), (17), where we use the dual notation,

So and are given by (29) with and 2,

For more examples see Withers (2000).

is just with 1 and 2 reversed. The other needed in (31) for are as follows.

(18) and (22) now give the distribution and density of to . Set

Then of (21) is given by replacing by in the expressions above for . That is,

(18) and (21) now give to for .

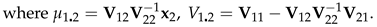

4. The Conditional Density and Distribution

For and partition and , as where are vectors of length . Partition as where are .
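The leading (normal) term of the conditional law under such a partition follows the standard conditional-normal formulas of the kind given in §2.5 of Anderson (1958): for a zero-mean normal vector with covariance blocks $V_{11}, V_{12}, V_{22}$, the law of the first block given the second equal to $x_2$ is normal with mean $V_{12}V_{22}^{-1}x_2$ and covariance $V_{11} - V_{12}V_{22}^{-1}V_{21}$. The sketch below covers only the bivariate case with scalar blocks; the function name and the numerical values are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist


def conditional_normal_bivariate(v11, v12, v22, x2):
    """Law of X1 given X2 = x2 when (X1, X2) is N(0, [[v11, v12], [v12, v22]])."""
    if v22 <= 0 or v11 * v22 - v12**2 <= 0:
        raise ValueError("covariance matrix must be positive definite")
    mean = v12 / v22 * x2                 # V12 V22^{-1} x2
    variance = v11 - v12**2 / v22         # V11 - V12 V22^{-1} V21
    return NormalDist(mu=mean, sigma=sqrt(variance))


# Hypothetical covariance entries, chosen only for illustration.
cond = conditional_normal_bivariate(v11=2.0, v12=0.6, v22=1.0, x2=1.5)
print(cond.mean, cond.variance)
```

The higher-order terms of the conditional expansions in this section are corrections to this leading normal term.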

The conditional density of given , is

is of (22) for , and is the density of . By (37)–(39), §2.5 of Anderson (1958),

The distribution of is

By (22), for and of (14)–(16),

and is given by replacing and in by and now implicit summation in (41) is for over . So,

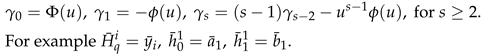

, and so on. For of (7), set

So the conditional density of (34), relative to of (37), is

So now we have the conditional density to . The expansion for the conditional distribution about of (39), is

This gives in terms of , given by (54) in terms of and derivatives of . (51) now gives in terms of of (36). So and (50) give the conditional distribution to . Alternatively, as is a polynomial in , by (37), is linear in for where

We now illustrate this.

By (39),

By (50), for of (46),

given by (52) in terms of . For of (53), by (37),

Set

By (53),

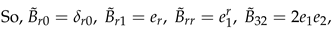

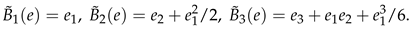

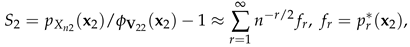

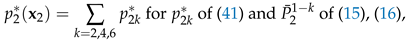

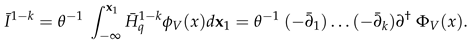

5. The Case

In this case and for of (42) and u of (55), and of (6). For example by (30),

and are given in §3 in terms of . (47) gives in terms of and , which are given for by (22) in terms of of §3. For of (32), set

for of (59) and (60). For example

and is given by reversing 1 and 2 in . Alternatively, we can use

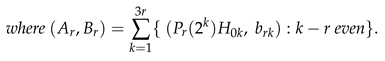

Theorem 1.

Set For even, of (53) is given by

PROOF For of (54),

This gives , and so of (52) and so to , in terms of the coefficients . So (67) gives in terms of of §3 via

The explicit form for (66), despite the work needed to obtain of (64).

Example 2. If the distribution of is symmetric about w, then for r odd, , and the non-zero are

Example 3.

Let be a sample mean. Then , and only the leading coefficients in (11) are non-zero. So . The non-zero were given in Example 1. For are given by §3 with these non-zero , and

needed for of §3 does not simplify. Nor does of §3 needed for .

Example 4.

Consider the classical problem of the distribution of a sample mean, given the sample variance. So Let be the usual unbiased estimates of the 1st 2 cumulants from a univariate random sample of size n from a distribution with rth cumulant . So By the last 2 equations of §12.15 and (12.35)–(12.38) of Stuart and Ord (1991), the cumulant coefficients needed for of (14) for , that is, the coefficients needed for the conditional density to are

(47) gives in terms of and , that is, in terms of and of §3 in terms of of (31). In this example, many of these are 0. By (15)–(17) and Appendix A, the non-zero are in the order needed,

(24)–(26) now give and for . By (18) and (47), this gives the conditional density to . (67) gives needed for the conditional distribution to in terms of of (68). So

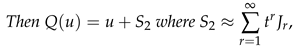

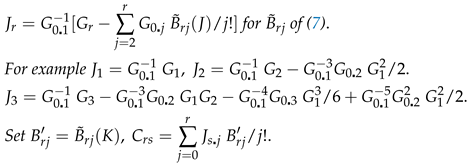

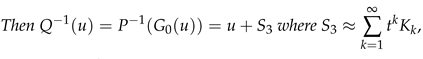

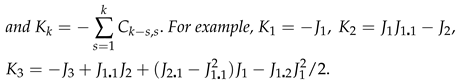
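The leading joint cumulants of the sample mean $k_1$ and the unbiased sample variance $k_2$ used in Example 4 can be checked exactly on a small discrete distribution. The sketch below enumerates every sample of size n and compares the results with the standard identities $\mathrm{var}(k_1) = \kappa_2/n$, $\mathrm{cov}(k_1, k_2) = \kappa_3/n$ and $\mathrm{var}(k_2) = \kappa_4/n + 2\kappa_2^2/(n-1)$. The three-point distribution and the function names are hypothetical choices for illustration.

```python
from fractions import Fraction
from itertools import product


def population_cumulants(values, probs):
    """Return (kappa_2, kappa_3, kappa_4) of a finite distribution."""
    mu = sum(p * v for v, p in zip(values, probs))
    m2, m3, m4 = (
        sum(p * (v - mu) ** r for v, p in zip(values, probs)) for r in (2, 3, 4)
    )
    return m2, m3, m4 - 3 * m2**2


def exact_moments_of_k1_k2(values, probs, n):
    """Exact (var k1, cov(k1, k2), var k2) by enumerating all samples of size n."""
    e1 = e2 = e11 = e12 = e22 = Fraction(0)
    for sample in product(range(len(values)), repeat=n):
        weight = Fraction(1)
        for i in sample:
            weight *= probs[i]            # probability of this ordered sample
        xs = [Fraction(values[i]) for i in sample]
        k1 = sum(xs) / n                  # sample mean
        k2 = sum((x - k1) ** 2 for x in xs) / (n - 1)   # unbiased variance
        e1 += weight * k1
        e2 += weight * k2
        e11 += weight * k1 * k1
        e12 += weight * k1 * k2
        e22 += weight * k2 * k2
    return e11 - e1**2, e12 - e1 * e2, e22 - e2**2


# Hypothetical three-point distribution, used only for this check.
values = [0, 1, 3]
probs = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
n = 4
kappa2, kappa3, kappa4 = population_cumulants(values, probs)
var_k1, cov_k1_k2, var_k2 = exact_moments_of_k1_k2(values, probs, n)
print(var_k1 == kappa2 / n, cov_k1_k2 == kappa3 / n)
```

Because rational arithmetic is used throughout, the identities hold as exact equalities rather than to within rounding error.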

6. Conditional Cornish-Fisher Expansions

Suppose that . Here we invert the conditional distribution (56), to obtain its extended Cornish-Fisher expansions similar to (4). For any function with finite derivatives, set

Lemma 1.

Suppose that is 1 to 1 increasing with jth derivative , and for some ,

PROOF Set

One obtains similarly.

A different form for (73) was given in Theorem A2 of Withers (1983). So

We can now give the quantiles of the conditional distribution (56).

Theorem 2.

A simpler formula for is

PROOF Apply Lemma 1 to (56) with . Take and for given by (52) in terms of of (59). So

and for of (56), and are given by (70) and (72) in terms of and their derivatives. These are given by

(74) follows from (3.2) of Withers (1984). □

We had hoped to read the conditional off the conditional density. But the expansion (47) cannot be put into the form (3) if , as the coefficient of in of (5) is 0. So the conditional estimate is generally not a standard estimate. (An exception is when since then and by (50), of (39). We have yet to see what exponential families this extends to.) It might be possible to remedy this by extending the results here to Type B estimates. But there seems little point in doing so.
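The kind of inversion used in this section, from a distribution expansion to a quantile expansion, can be illustrated in the simplest unconditional case. The sketch below evaluates the classical Cornish-Fisher quantile for the standardized mean of n independent identically distributed variables, $z + n^{-1/2}\lambda_3(z^2-1)/6 + n^{-1}[\lambda_4(z^3-3z)/24 - \lambda_3^2(2z^3-5z)/36]$, and compares it with the exact quantile for unit exponentials found by bisection. It is not the conditional expansion of Theorem 2; the test distribution and function names are assumptions made for illustration.

```python
from math import exp, factorial, sqrt
from statistics import NormalDist


def cornish_fisher_quantile_mean(p, n, lam3, lam4):
    """Two-term Cornish-Fisher approximation to the p-quantile of
    Y_n = sqrt(n) * (sample mean - mu) / sigma, with standardized
    cumulants lam3 and lam4."""
    z = NormalDist().inv_cdf(p)
    g1 = lam3 * (z**2 - 1) / 6
    g2 = lam4 * (z**3 - 3 * z) / 24 - lam3**2 * (2 * z**3 - 5 * z) / 36
    return z + g1 / sqrt(n) + g2 / n


def exact_quantile_exponential_mean(p, n, iterations=80):
    """Exact p-quantile of Y_n for unit exponentials, by bisection on the
    Gamma(n, 1) cdf written as a finite Poisson sum."""
    def cdf(x):
        t = n + x * sqrt(n)
        if t <= 0:
            return 0.0
        return 1.0 - exp(-t) * sum(t**k / factorial(k) for k in range(n))

    lo, hi = -sqrt(n), 20.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


p, n = 0.9, 10
approx = cornish_fisher_quantile_mean(p, n, lam3=2.0, lam4=6.0)
exact = exact_quantile_exponential_mean(p, n)
print(f"Cornish-Fisher {approx:.4f}, exact {exact:.4f}")
```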

7. Conclusions

§2 and Appendix A give the density and distribution of to , for any standard estimate, in terms of certain functions of the cumulant coefficients of (11), the coefficients of (14)–(17). Most estimates of interest are standard estimates, including functions of sample moments, like the sample correlation, and any multivariate function of k-statistics. §3 gave the density and distribution of in more detail when using the dual notation . §4 gave the conditional density and distribution of given to where is any partition of . The expansion (47) gives the conditional density of a standard estimate in terms of of (47). The conditional distribution (50) to requires the function of (54), or its expansion (59) or (65). §6 gave the extended Cornish-Fisher expansions for the quantiles of the conditional distribution when .

8. Discussion

A good approximation for the distribution of an estimate is vital for statistical inference. It enables one to explore the distribution’s dependence on underlying parameters, such as correlation. Our analytic method avoids the need for simulation or jack-knife or bootstrap methods while providing greater accuracy than they do. Hall (1992) uses the Edgeworth expansion to show that the bootstrap gives accuracy to . Hall (1988) says that “2nd order correctness usually cannot be bettered”. Fortunately this is not true for our analytic method. Simulation, while popular, can at best shine a light on behaviour when there is only a small number of parameters.

Estimates based on a sample of independent but not identically distributed random vectors are also generally standard estimates. For example for a univariate sample mean where has rth cumulant , then where is the average rth cumulant. For some examples, see Skovgaard (1981a, 1981b) and Withers and Nadarajah (2010a, 2020). The last is for a function of a weighted mean of complex random matrices.
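The sample-mean statement above rests on two standard facts: cumulants of independent summands add, and the rth cumulant scales by $c^r$ under multiplication by a constant $c$. Together they give $\kappa_r(\bar X) = n^{1-r}\bar\kappa_r$, with $\bar\kappa_r$ the average rth cumulant. A minimal sketch, with an illustrative function name and hypothetical Poisson rates (every cumulant of a Poisson variable equals its rate):

```python
from fractions import Fraction


def cumulant_of_sample_mean(r, rth_cumulants):
    """rth cumulant of the mean of independent X_1, ..., X_n, given the
    rth cumulant of each X_i."""
    n = len(rth_cumulants)
    average = Fraction(sum(rth_cumulants), n)     # average rth cumulant
    return average / Fraction(n) ** (r - 1)       # n^(1-r) * average


# Hypothetical Poisson rates for four independent observations.
rates = [1, 2, 3, 6]
print(cumulant_of_sample_mean(2, rates))          # variance of the mean
```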

A promising approach is the use of conditional cumulants. §6.2 of McCullagh (1984) uses conditional cumulants to give the conditional density of a sample mean to . §5.6 of McCullagh (1987) gave formulas for the 1st 4 cumulants conditional on when and are uncorrelated. He says that this assumption can be removed but gives no details of how. That might give an alternative to our approach, but seems unlikely as the conditional estimate is generally not a standard estimate.

(7.5) of Barndorff-Nielsen and Cox (1989) gave the 3rd order expansion for the conditional density of a sample mean to , but did not attempt to integrate it.

Here we have only considered expansions about the normal. However expansions about other distributions can greatly reduce the number of terms by matching the leading bias coefficient. The framework for this is Withers and Nadarajah (2010a). For expansions about a matching gamma, see Withers and Nadarajah (2011, 2014).

The results here can be extended to tilted (saddlepoint) expansions by applying the results of Withers and Nadarajah (2010a). Tilting was 1st used in statistics by Daniels (1954). He gave an approximation to the density of a sample mean. A conditional distribution by tilting was first given by Skovgaard (1987) up to for the distribution of a sample mean conditional on correlated sample means. For some examples, see Barndorff-Nielsen and Cox (1989). For some other results on conditional distributions, see Pfanzagl (1979), Booth et al. (1992), DiCiccio et al. (1993), Hansen (1994), Moreira (2003), Chapter 4 of Butler (2007), and Klüppelberg and Seifert (2020). The results given here form the basis for constructing confidence intervals and confidence regions. See Withers (1989).

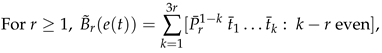

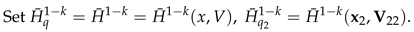

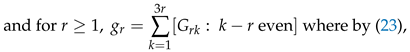

Appendix A. The Coefficients $\bar{P}_r^{1-k}$ Needed for (14)

Here we give the coefficients needed for (14) for using the symmetrising operator . They are given for by (15), and for by (16)–(17) and the following.

References

- Anderson, T.W. (1958) An introduction to multivariate analysis. John Wiley, New York.

- Barndorff-Nielsen, O.E. and Cox, D.R. (1989) Asymptotic techniques for use in statistics. Chapman and Hall, London.

- Booth, J., Hall, P. and Wood, A. (1992) Bootstrap estimation of conditional distributions. Annals Statistics, 20 (3), 1594–1610.

- Butler, R.W. (2007) Saddlepoint approximations with applications, pp. 107–144. Cambridge University Press.

- Comtet, L. (1974) Advanced combinatorics. Reidel, Dordrecht.

- Cornish, E.A. and Fisher, R.A. (1937) Moments and cumulants in the specification of distributions. Rev. de l’Inst. Int. de Statist. 5, 307–322. Reproduced in the collected papers of R.A. Fisher, 4.

- Daniels, H.E. (1954) Saddlepoint approximations in statistics. Ann. Math. Statist. 25, 631–650.

- DiCiccio, T.J., Martin, M.A. and Young, G.A. (1993) Analytical approximations to conditional distribution functions. Biometrika, 80 (4), 781–790.

- Fisher, R.A. and Cornish, E.A. (1960) The percentile points of distributions having known cumulants. Technometrics, 2, 209–225.

- Hall, P. (1988) Rejoinder: Theoretical comparison of bootstrap confidence intervals. Annals Statistics, 16 (3), 981–985.

- Hall, P. (1992) The bootstrap and Edgeworth expansion. Springer, New York.

- Hansen, B.E. (1994) Autoregressive conditional density estimation. International Economic Review, 35 (3), 705–730.

- Klüppelberg, C. and Seifert, M.I. (2020) Explicit results on conditional distributions of generalized exponential mixtures. Journal Applied Prob., 57 (3), 760–774.

- McCullagh, P. (1984) Tensor notation and cumulants of polynomials. Biometrika, 71 (3), 461–476.

- McCullagh, P. (1987) Tensor methods in statistics. Chapman and Hall, London.

- Moreira, M.J. (2003) A conditional likelihood ratio test for structural models. Econometrica, 71 (4), 1027–1048.

- Pfanzagl, P. (1979) Conditional distributions as derivatives. Annals Probability, 7 (6), 1046–1050.

- Stuart, A. and Ord, K. (1991) Kendall’s advanced theory of statistics, 2. 5th edition. Griffin, London.

- Skovgaard, I.M. (1981a) Edgeworth expansions of the distributions of maximum likelihood estimators in the general (non i.i.d.) case. Scand. J. Statist., 8, 227–236.

- Skovgaard, I.M. (1981b) Transformation of an Edgeworth expansion by a sequence of smooth functions. Scand. J. Statist., 8, 207–217.

- Skovgaard, I.M. (1987) Saddlepoint expansions for conditional distributions. Journal of Applied Prob., 24 (4), 875–887.

- Withers, C.S. (1983) Accurate confidence intervals for distributions with one parameter. Ann. Instit. Statist. Math. A, 35, 49–61.

- Withers, C.S. (1984) Asymptotic expansions for distributions and quantiles with power series cumulants. Journal Royal Statist. Soc. B, 46, 389–396. Corrigendum (1986) 48, 256. For typos, see p23–24 of Withers (2024).

- Withers, C.S. (1989) Accurate confidence intervals when nuisance parameters are present. Comm. Statist. - Theory and Methods, 18, 4229–4259.

- Withers, C.S. (2000) A simple expression for the multivariate Hermite polynomials. Statistics and Prob. Letters, 47, 165–169.

- Withers, C.S. (2024) 5th-Order multivariate Edgeworth expansions for parametric estimates. Mathematics, 12, 905, Advances in Applied Prob. and Statist. Inference. https://www.mdpi.com/2227-7390/12/6/905/pdf.

- Withers, C.S. and Nadarajah, S. (2010a) Tilted Edgeworth expansions for asymptotically normal vectors. Annals of the Institute of Statistical Mathematics, 62 (6), 1113–1142. For typos, see p25 of Withers (2024).

- Withers, C.S. and Nadarajah, S. (2010b) The bias and skewness of M-estimators in regression. Electronic Journal of Statistics, 4, 1–14. http://projecteuclid.org/DPubS/Repository/1.0 /Disseminate?view=bodyid=pdfview1handle=euclid.ejs/1262876992 For typos, see p25 of Withers (2024).

- Withers, C.S. and Nadarajah, S. (2011) Generalized Cornish-Fisher expansions. Bull. Brazilian Math. Soc., New Series, 42 (2), 213–242.

- Withers, C.S. and Nadarajah, S. (2014) Expansions about the gamma for the distribution and quantiles of a standard estimate. Methodology and Computing in Applied Prob., 16 (3), 693–713. For typos, see p25–26 of Withers (2024).

- Withers, C.S. and Nadarajah, S. (2016) Expansions for log densities of multivariate estimates. Methodology and Computing in Applied Prob., 18, 911–920.

- Withers, C.S. and Nadarajah, S. (2020) The distribution and percentiles of channel capacity for multiple arrays. Sadhana, SADH, Indian Academy of Sciences, 45 (1), 1–25.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).