1. Introduction

In today's dynamic financial landscape, the integration of deep learning models has revolutionized the approach to risk prediction and analysis. Traditional quantitative methods often rely on metrics like maximum drawdown to evaluate risk control capabilities. However, with the advent of deep learning, there's a growing recognition that risk assessment requires a more nuanced understanding, extending beyond simplistic measures. Deep learning models offer unparalleled sophistication in capturing complex patterns and dynamics within financial data. Yet, their efficacy in managing risks hinges not only on statistical metrics but also on their generalization ability—their capacity to adapt to unforeseen market conditions, especially during crises such as stock market crashes.

While metrics like maximum drawdown, Sharpe ratio, and Sortino ratio provide valuable insights, their effectiveness in gauging a model's resilience to extreme events depends heavily on its ability to generalize. A model may perform exceptionally well in backtesting or even during a limited live trading period. Still, its true test lies in its response to unprecedented market turmoil.

This article delves into the critical considerations for evaluating the risk control capabilities of deep learning models in finance. It underscores the importance of not only statistical metrics but also a comprehensive understanding of a model's generalization ability, particularly in the face of adverse market conditions.By exploring how deep learning models fare in scenarios such as stock market crashes and emphasizing the significance of cross-validation techniques, this article aims to provide practitioners with insights into building robust risk management systems. Ultimately, it advocates for a holistic approach that combines quantitative analysis with an understanding of macroeconomic factors to enhance financial risk prediction and analysis in today's volatile markets.

2. Related work

2.1. Deep learning

The origins of deep learning can be traced back to the 1950s, and Bloom's classification of cognitive dimensions in the Classification of Educational Goals reflects the idea that there are deep and shallow learning levels (Lorraine W. Anderson et al., 2009). In the early days of research, most researchers considered deep learning to be the opposite of superficial learning. Biggs (2001) pointed out that deep learning is a kind of learning mode in which knowledge is cognitively processed at a high level or actively, while the corresponding shallow learning is cognitively processed at a low level, such as repetitive memory or mechanical recitation. With the further research and development of deep learning theory, Beattie et al. (1997) proposed that deep learning means that learners actively learn in order to understand and apply knowledge, which is mainly manifested by critical understanding and deep processing of knowledge, and emphasizes the connection with previous knowledge and experience.

The differences between deep learning and shallow learning in memory style, knowledge system, focus of attention, learner's learning motivation, learning engagement, reflective state in learning, thinking level and transfer ability of learning results, etc. It is concluded that deep learning has five characteristics: focusing on the cultivation of critical thinking ability, emphasizing the correlation and integration of information, promoting the construction and reflection of knowledge, the transfer and application of conscious knowledge and ability, and the cultivation of problem-solving. It is also pointed out that these five characteristics of deep learning are not isolated, but interrelated as a whole, which jointly promote the realization of deep learning.

2.2. Deep learning model

Deep learning is an important branch of the field of artificial intelligence, which has made remarkable progress in recent years. Among them, RNN, CNN, Transformer, BERT and GPT are five commonly used deep learning models, which have made important breakthroughs in computer vision, natural language processing and other fields. RNN is a kind of neural network model, its basic structure is a cyclic body, which can process sequence data. RNNS are characterized by their ability to process the current input while remembering previous information. This structure makes RNNS well suited for tasks such as natural language processing and speech recognition, which require processing data with temporal relationships.

CNN is a kind of neural network model, its basic structure is composed of multiple convolutional layers and pooling layers. The convolution layer can extract the local features in the image, while the pooling layer can reduce the number of features and improve the computational efficiency. This structure of CNN makes it well suited for computer vision tasks such as image classification, object detection, and so on. Compared with RNN, CNN is better at processing image data because it can automatically learn local features in the image without the need to manually design a feature extractor.

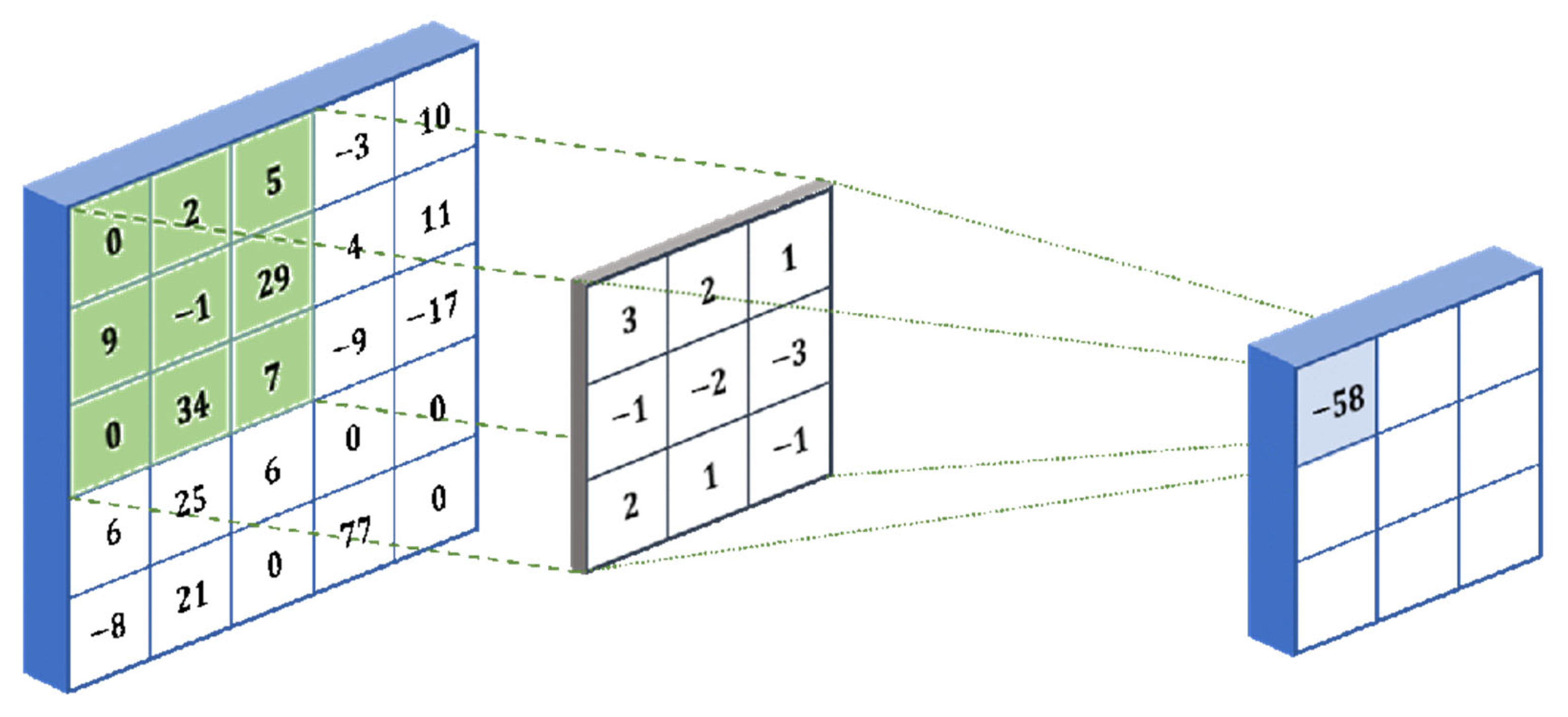

Figure 1.

Convolutional network model frame diagram.

Figure 1.

Convolutional network model frame diagram.

How convolutional neural networks work:

CNN applies filters (small rectangles) to the input image to detect features such as edges or shapes. The filter slides over the width and height of the input image and calculates the dot product between the filter and the input to generate the activation graph.

The activation graph is fed into the pooling layer, and the graph is downsampled to reduce the dimension. This makes the model more efficient and robust. The final layer is the fully connected layer, which classifies the input images into categories such as "dog" or "cat."

Some popular CNN architectures include AlexNet, VGGNet, ResNet, and Inception. These have been used to solve complex problems, such as identifying thousands of objects or detecting disease through medical scans.

To build a CNN, you can define the architecture by selecting hyperparameters, such as the number of filters, filter size, stride length, and pool size.

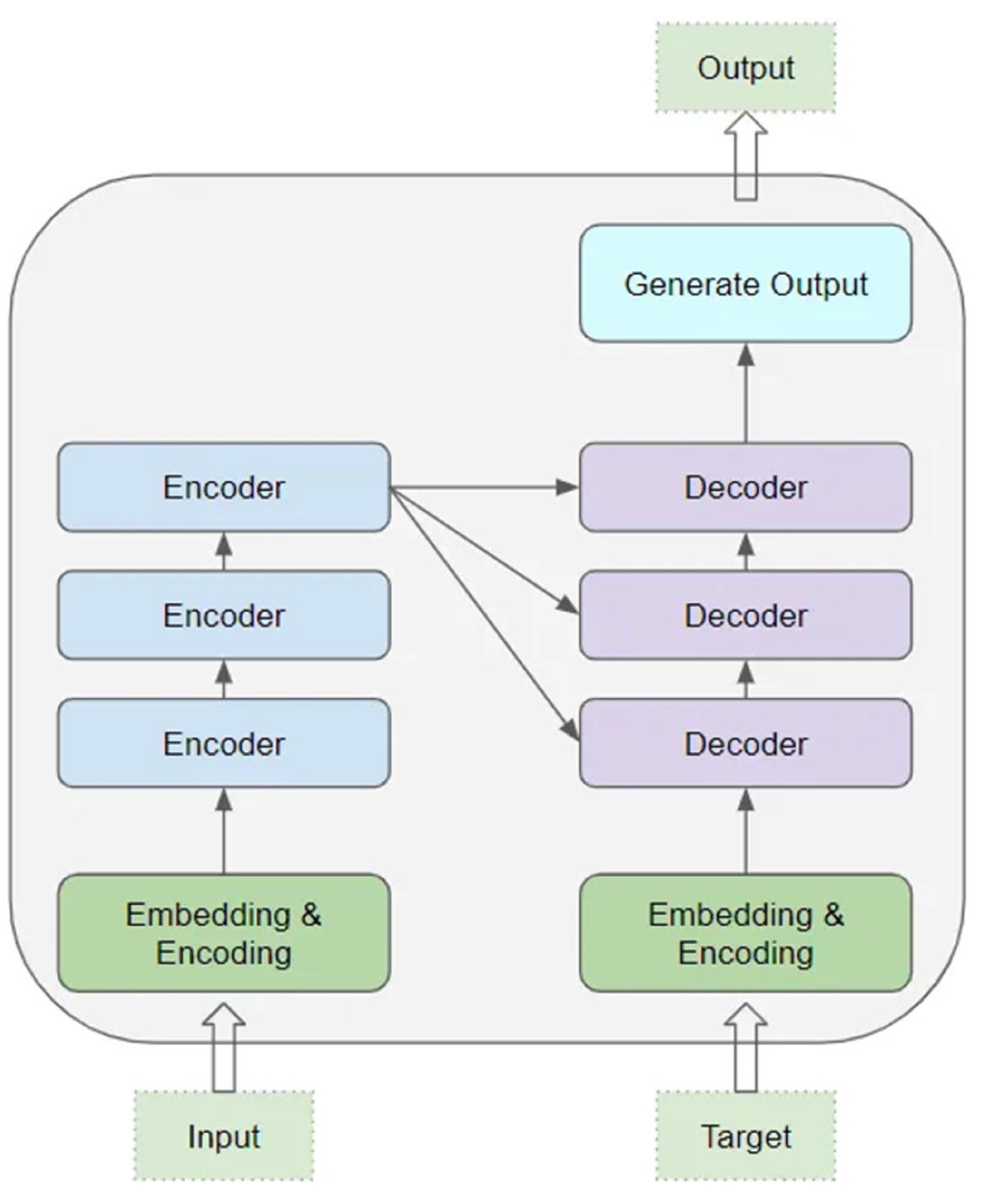

Transformer is a neural network model based on self-attention mechanism, and its basic structure is composed of multiple encoders and decoders. The encoder can convert an input sequence into a vector representation, while the decoder can convert that vector representation back into an output sequence. Transformer's biggest innovation is the introduction of a self-attention mechanism, which allows the model to better capture long-distance dependencies in the sequence. Transformer has seen great success in natural language processing, such as machine translation, text generation and other tasks.

As can be seen from

Figure 2, the core of Transformer is composed of multiple Encoder and Decoder layers. Here, we refer to a single layer as an encoder or decoder, and a group of such layers as an encoder or decoder group. The encoder group and the decoder group each have their respective Embedding layers to process their own input data. Finally, the final result is generated through an output layer.

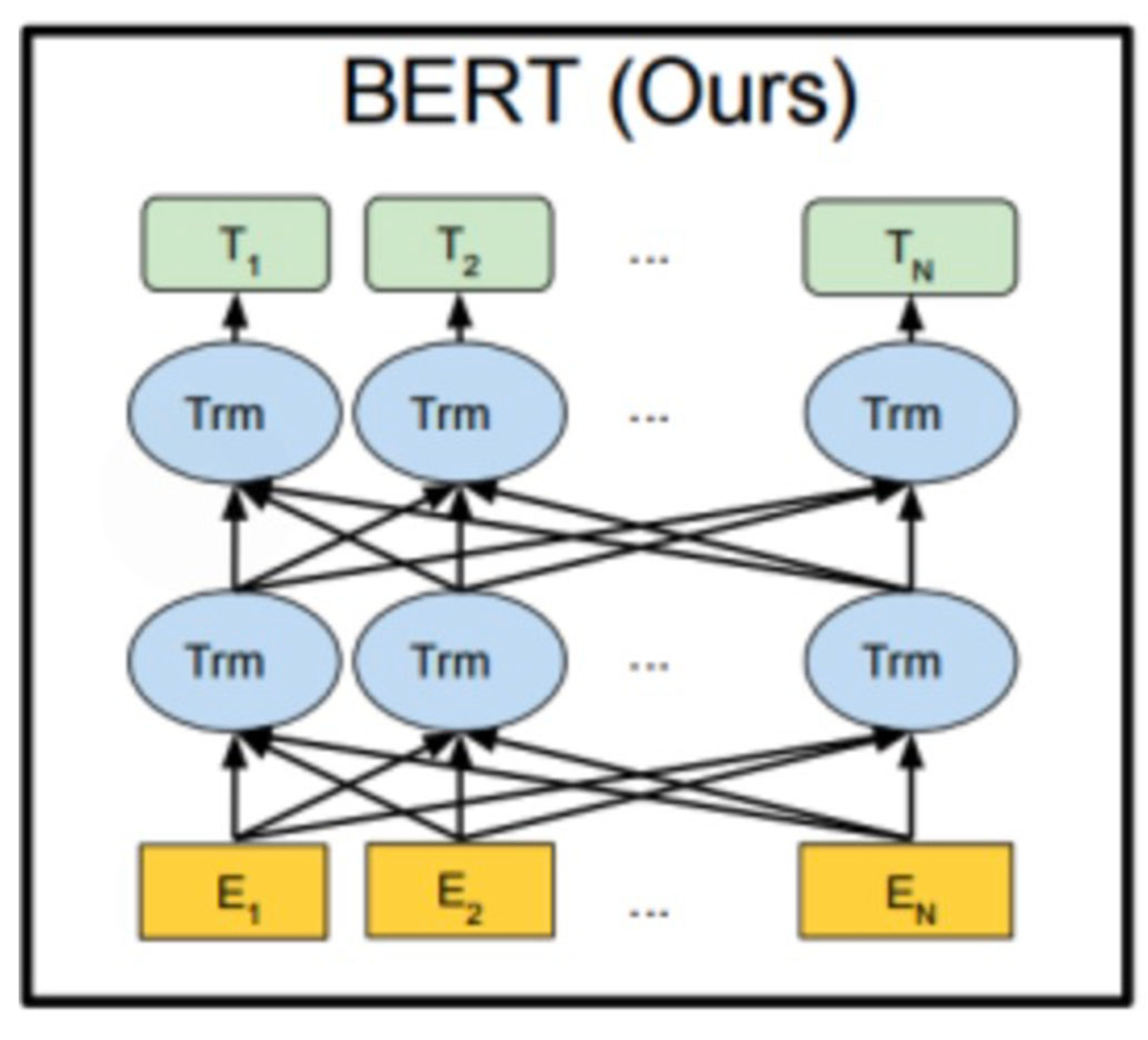

BERT is a pre-trained language model based on Transformer, and its biggest innovation is the introduction of a bi-directional Transformer encoder, which allows the model to consider both the contextual information of the input sequence. BERT learns a wealth of language knowledge by pre-training on large amounts of text data, and then fine-tunes it on specific tasks, such as text classification, sentiment analysis, and more. BERT has achieved great success in the field of natural language processing and is widely used for a variety of NLP tasks.

BERT uses Transformer, an architecture based on a Self-Attention Mechanism. Transformer allows the model to process input sequences taking into account contextual information from all locations simultaneously. The advantages of BERT model in forecasting are mainly reflected in the following aspects:

Context understanding: BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained language model whose biggest feature is the introduction of bidirectional context understanding. While traditional language models such as Word2Vec and GloVe can only predict words based on information from the left or right side of the context, BERT is able to consider both the left and right side of the context, better capturing meaning and relationships within the context.

Richness of semantic representation: BERT models are pre-trained on large-scale corpora and learn rich semantic representations. This makes BERT have good universality and transferability in various natural language processing tasks, and can deal with language understanding problems in different fields and different contexts.

Fine-tuning mechanism: BERT models can translate general language understanding into task-specific performance improvements through fine-tuning on specific tasks. By fine-tuning domain-specific datasets, BERT can be personalized optimized for different tasks, improving prediction accuracy.

Multi-task learning: BERT models support multi-task learning and can be trained on multiple related tasks at the same time, thus improving the generalization ability and applicability of the model. This multi-tasking approach to learning allows BERT models to better understand the diversity and complexity of languages.

The effect of transfer learning: BERT model has a strong transfer learning ability because it is pre-trained on a large-scale corpus. Even in the task facing a small amount of labeled data, BERT can adapt quickly and achieve good results through fine-tuning technology.

GPT is also a pre-trained language model based on Transformer, and its biggest innovation is the use of a one-way Transformer encoder, which allows the model to better capture contextual information about the input sequence. GPT learns a wealth of language knowledge by pre-training on large amounts of text data, and then fine-tuning on specific tasks, such as text generation, summarization, and so on. GPT has also achieved great success in the field of natural language processing and is widely used for a variety of NLP tasks.

2.3. Risk prediction model

Financial risk prediction plays a vital role in the financial field. Machine learning methods have been widely used to automatically detect potential risks, thereby saving labor costs. However, developments in the field in recent years have lagged behind two facts: 1) the algorithms used are somewhat outdated, especially in the context of rapid advances in generative AI and large language models (LLMs); 2) The lack of a unified and open source financial benchmark has hampered research for years. To address these issues, we propose FinPT and FinBench: the former is a novel approach to financial risk prediction through Profile Tuning of large pre-trained base models, while the latter is a high-quality dataset of financial risk such as default, fraud, and customer churn. In FinPT, we fill financial table data into predefined instruction templates, obtain natural language customer descriptions by prompting LLMs, and use these descriptions to fine-tune large underlying models for predictions. We demonstrate the validity of the proposed FinPT by conducting experiments with a series of representative strong benchmark models on FinBench. The analysis further deepens the understanding of LLMs in financial risk prediction.

The predictive model of time series in deep learning models has changed dramatically in the last two years, especially since the MAE of kaiming appears. Now the model of time series can also be pre-trained without supervision using a method similar to MAE. The Makridakis M-Competitions series (known as the M4 and M5 respectively) took place in 2018 and 2020 respectively (the M6 also took place this year). For those who do not know, M-series games can be thought of as a summary of an existing state of the time series ecosystem, providing empirical and objective evidence for the current theory and practice of prediction.

The 2018 M4 results showed that pure "ML" methods outperformed traditional statistical methods by a large margin, which was unexpected at the time. In M5, two years later, the highest score was having only the "ML" method. And all of the top 50 are basically ML-based (mostly tree models). The game saw the debut of LightGBM (used for time series forecasting) as well as Amazon's Deepar and N-Beats. The N-Beats model was released in 2020 and outperformed the winner of the M4 contest by 3%!

Therefore, this paper proposes a deep generation modeling method for financial time series and applies it to VaR (Value at Risk) estimation. The method is based on deep learning techniques to generate models to simulate the probability distribution of financial time series, so as to achieve predictions of future risks. In order to introduce this method, the paper first introduces the characteristics and challenges of financial time series, including nonlinearity, non-normal distribution and volatility aggregation. Then, the paper puts forward the concept of deep generative modeling, that is, using deep learning models to generate samples that conform to the characteristics of financial time series. Then, the paper introduces the definition and importance of VaR, and points out the limitations of traditional methods in VaR estimation.

3. Methodology

3.1. Data preprocessing

When pre-processing financial time series data, it is usually necessary to perform operations such as cleaning, smoothing and standardization to improve the stability and accuracy of the model. In order to adapt to the requirements of deep learning models, we divide the time series data into fixed size time Windows, treating each window as a two-dimensional array, similar to images in image applications. This approach helps the model to capture complex patterns and dynamic features in time series.

These time Windows are stitched together into a three-dimensional array for training and fitting the model. The first 10 days of each window are used as conditions and the last 10 days are used as targets. Thus, each two-dimensional array can be called a data frame, window, slice, or sequence. Generally speaking, the sequence of dimension for (p + q) x d, including p length is condition, q is the target sequence length, d is the number of time series.

This data processing approach not only helps to improve the performance of the model, but also enables the model to better understand the correlations and trends in the time series data. By converting time series data into two-dimensional arrays and leveraging the powerful properties of deep learning models, we can more accurately predict changes in financial markets, thereby better managing risk and making investment decisions.

3.2. Build Deep Learning Model

In this experiment, deep learning models (such as generative adversarial network, variational autoencoder, diffusion model, etc.) are used to build a generative model of financial time series, and new samples are generated by learning the distribution characteristics of data.

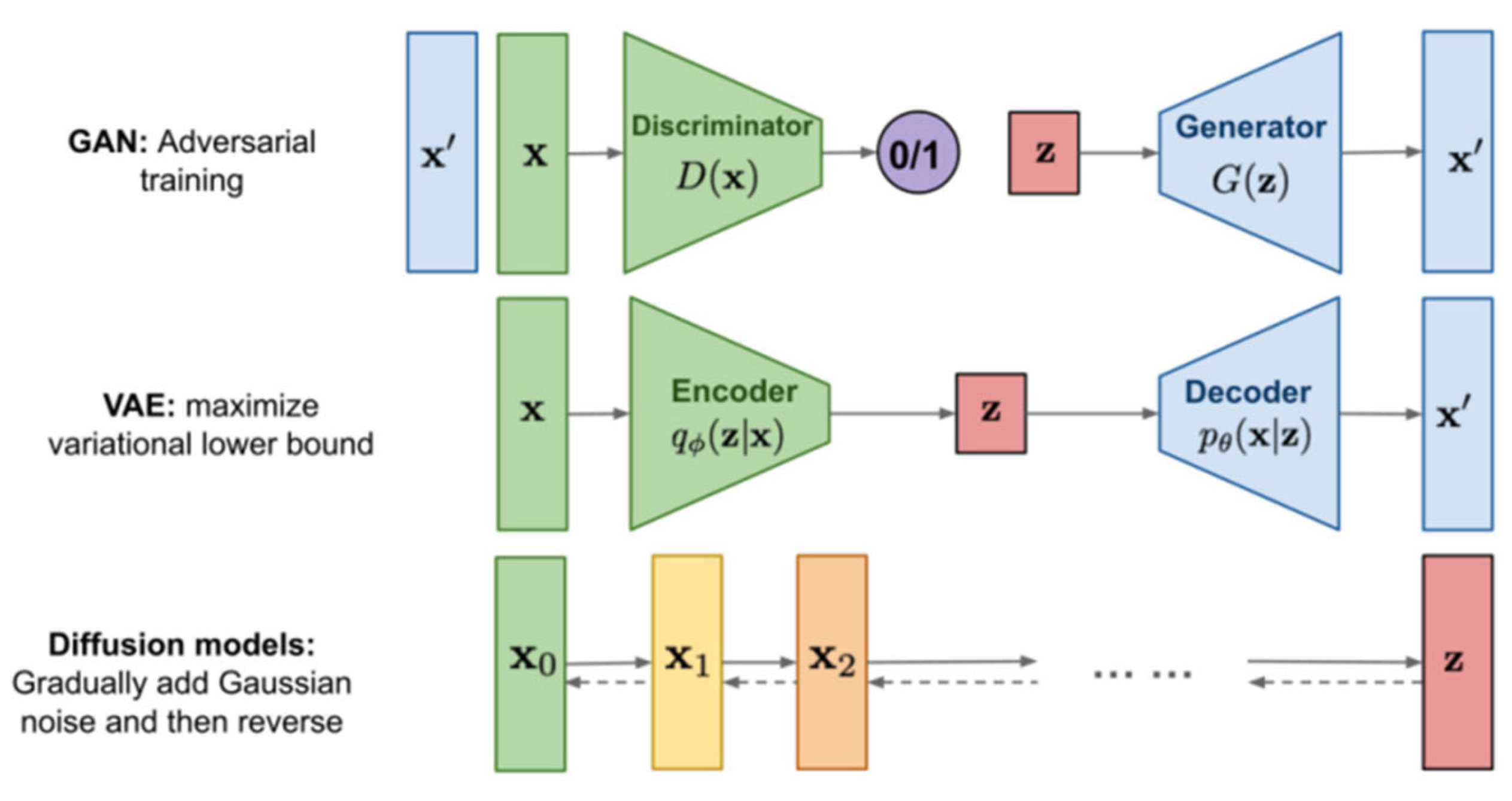

Figure 4.

Architecture comparison among three group of generatimodis.

Figure 4.

Architecture comparison among three group of generatimodis.

In this experiment, each model has its own unique role and advantages (Figure 5).

Generative adversarial networks (GANs) learn the distribution features of data by competitively training generators and discriminators. The generator tries to generate a sample that is similar to the real data, while the discriminator tries to distinguish between the real data and the sample generated by the generator. Through continuous adversarial learning, the generator is able to generate high-quality financial time series data, thus providing an effective way to model market fluctuations and trends.

Variational autoencoders (VAE) are probabilistic generation models that generate new samples by learning the potential variable space of the data. VAE maps the input data into the potential space via an encoder and the potential variables back into the original data space via a decoder. By modeling the data in the underlying space, VAE is able to generate financial time series data with diversity and continuity to better capture the complex features of the market.

The diffusion model is a generative model based on the principles of physics that simulates the random walk process of asset prices in financial markets. The diffusion model takes into account the volatility and randomness of prices, and describes the changes of asset prices through stochastic differential equations. By simulating the random process in the market, the diffusion model can generate financial time series data with fidelity and interpretability, which provides an important tool for the modeling and analysis of the financial market.

To sum up, by using generative adversarial network, variational autoencoder, diffusion model and other deep learning models to build financial time series generative model, we can better understand and simulate the volatility and complexity of the market, and provide an important reference for financial market prediction and decision-making.

3.3. Model training and optimization

Training the generated model with training data and optimizing the model parameters through optimization algorithms (such as stochastic gradient descent) to improve the fitting ability and generalization ability of the model are key steps. Through continuous iteration of the training data, the generation model can gradually learn the distribution characteristics of the data, so as to generate more realistic samples. The selection of optimization algorithm and parameter adjustment are very important to improve the performance of the model. Algorithms such as stochastic gradient descent can effectively search the optimal solution in the parameter space, so as to improve the performance of the model.

VaR estimation is a commonly used risk measurement method in the financial field. By using the trained generation model, we can simulate and generate a large number of samples, and calculate VaR values based on these samples, so as to realize the estimation of future risks. The samples generated by the deep generation modeling method can better reflect the complex features and tail risks of financial time series, so as to improve the accuracy and stability of VaR estimation.

In this paper, the empirical research and comparison with traditional methods show that the deep generation modeling method has significant effectiveness and advantages in financial time series analysis. Compared with traditional methods, the deep generation modeling method can capture the nonlinear characteristics and tail risks of financial time series more accurately, and improve the accuracy and stability of risk measurement. The advantages of this approach lie in its better ability to model the distribution of data, as well as the fidelity and diversity of the generated samples, thus providing a more reliable basis for risk management and decision-making in financial markets.

3.4. Experimental design

1. Data sets: This paper uses three financial time series data sets, namely S&P 500, Nikkei 225 and Euro Stoxx 50.

2. Concept and definition: This paper uses deep generation model to model financial time series, including autoencoder, variational autoencoder, generative adversarial network and other models. This paper also introduces the concept and definition of value risk (VaR), which is an important index to measure financial risk.

3. Experimental indicators: This paper introduces a method for comparing the performance of different generation models, and measures the similarity between the generated synthetic data and the real data through quantitative and qualitative indicators. Qualitative indicators include visual comparison of empirical distribution, t-SNE, PCA and UMAP. The quantitative indexes include distribution distance, ACF and backtest. The authors use short - and long-path composite samples to evaluate model performance and provide a comprehensive score to compare the performance of different models. The model ranking depends on the KPIs used and the way the scores are combined.

3.5. Experimental result

In this paper, we compare the performance of different depth generation models on three different financial datasets. We find that generative adversarial networks (Gans) perform best in predicting VaR (Value at Risk). By competitively training generators and discriminators, Gans can effectively capture the features of data distribution and generate high-quality samples. This ability makes Gans outstanding in simulating volatility and tail risk in financial markets, thus improving the forecasting accuracy of VaR.

When it comes to predicting returns, we find that variational autoencoders (VAE) perform best. VAE is a probabilistic generation model that generates new samples by learning the potential variable space of the data. Its ability to generate samples with diversity and continuity gives VAE an advantage in predicting the return rate of financial assets. VAE can improve the forecasting accuracy of financial time series data by effectively modeling the rate of return.

In addition, we found that integrating multiple models can further improve predictive performance. By weighted average or voting the prediction results of multiple models, the prediction error of a single model can be reduced and the overall prediction performance can be improved. This integrated approach makes full use of the strengths of different models and compensates for their weaknesses, resulting in more robust and accurate prediction results.

In summary, the results of this paper show that different deep generation models have their own advantages and applicable scenarios in financial time series data prediction. By reasonably selecting and integrating multiple models, the forecasting performance can be further improved to provide more reliable support for risk management and decision making in financial markets.

4. Conclusions

The application of deep generation model in the financial field has extensive potential and important significance. With the continuous development and maturity of deep learning technology, deep generation models can better capture complex patterns and dynamic characteristics in financial time series data, and provide new ideas and tools for financial market prediction, risk management and decision-making. Deep generation models can be applied to risk measurement and management in financial markets. In addition to VaR estimates, deep generation models can also be used to predict other important Risk indicators, such as Conditional Value at Risk, Expected Shortfall, etc. Through comparative and empirical studies of different depth-generating models, their performance and applicability to different risk measures can be evaluated more comprehensively.

Deep generation models can also be used to enhance and extend financial time series data. Traditional time series data are often affected by noise and missing values, which limits the performance and predictive power of the model. The synthesized data generated by the deep generation model can be used to increase the diversity and richness of the original data, thereby improving the robustness and generalization of the model.

Deep generation models can also be used in simulations and simulations of financial markets. The simulation generates a large amount of financial time series data, which can evaluate the performance of different strategies and trading rules in different market environments, and provide references for portfolio optimization and risk management. In the future, the deep generation model can also be combined with other deep learning technologies, such as reinforcement learning, transfer learning, etc., to further improve the performance and application range of the model. Through continuous innovation and exploration, the deep generation model will play an increasingly important role in the financial field and provide more powerful support for the stable and healthy development of the financial market.

References

- Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT press.

- Menghani, G. (2023). Efficient deep learning: A survey on making deep learning models smaller, faster, and better. ACM Computing Surveys, 55(12), 1-37.

- Janiesch, C., Zschech, P., & Heinrich, K. (2021). Machine learning and deep learning. Electronic Markets, 31(3), 685-695.

- Mosavi, A., Ardabili, S., & Varkonyi-Koczy, A. R. (2019, September). List of deep learning models. In International conference on global research and education (pp. 202-214). Cham: Springer International Publishing.

- Tian, J.; Li, H.; Qi, Y.; Wang, X.; Feng, Y. Intelligent medical detection and diagnosis assisted by deep learning. Appl. Comput. Eng. 2024, 64, 116–121. [CrossRef]

- Shi, Y., Li, L., Li, H., Li, A., & Lin, Y. (2024). Aspect-Level Sentiment Analysis of Customer Reviews Based on Neural Multi-task Learning. Journal of Theory and Practice of Engineering Science, 4(04), 1-8.

- Guo, L., Li, Z., Qian, K., Ding, W., & Chen, Z. (2024). Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees. Journal of Economic Theory and Business Management, 1, 24–30.

- Li, Zihan, et al. "Robot Navigation and Map Construction Based on SLAM Technology." (2024).

- Fan, C., Ding, W., Qian, K., Tan, H., & Li, Z. (2024). Cueing Flight Object Trajectory and Safety Prediction Based on SLAM Technology. Journal of Theory and Practice of Engineering Science, 4(05), 1-8.

- Dhand, A., Lang, C. E., Luke, D. A., Kim, A., Li, K., McCafferty, L.,... & Lee, J. M. (2019). Social network mapping and functional recovery within 6 months of ischemic stroke. Neurorehabilitation and neural repair, 33(11), 922-932.

- Wang, Yong, et al. "Machine Learning-Based Facial Recognition for Financial Fraud Prevention." Journal of Computer Technology and Applied Mathematics 1.1 (2024): 77-84.

- Lin, Y., Li, A., Li, H., Shi, Y., & Zhan, X. (2024). GPU-Optimized Image Processing and Generation Based on Deep Learning and Computer Vision. Journal of Artificial Intelligence General science (JAIGS) ISSN: 3006-4023, 5(1), 39-49.

- Shi, Y.; Yuan, J.; Yang, P.; Wang, Y.; Chen, Z. Implementing intelligent predictive models for patient disease risk in cloud data warehousing. Appl. Comput. Eng. 2024, 67, 34–40i, Y. [CrossRef]

- Zhan, T.; Shi, C.; Shi, Y.; Li, H.; Lin, Y. Optimization techniques for sentiment analysis based on LLM (GPT-3). Appl. Comput. Eng. 2024, 67, 41–47. [CrossRef]

- Allman, R., Mu, Y., Dite, G. S., Spaeth, E., Hopper, J. L., & Rosner, B. A. (2023). Validation of a breast cancer risk prediction model based on the key risk factors: family history, mammographic density and polygenic risk. Breast Cancer Research and Treatment, 198(2), 335-347.

- Wu, B., Xu, J., Zhang, Y., Liu, B., Gong, Y., & Huang, J. (2024). Integration of computer networks and artificial neural networks for an AI-based network operator. Applied and Computational Engineering, 64, 115-120.

- Yu, D., Xie, Y., An, W., Li, Z., & Yao, Y. (2023, December). Joint Coordinate Regression and Association For Multi-Person Pose Estimation, A Pure Neural Network Approach. In Proceedings of the 5th ACM International Conference on Multimedia in Asia (pp. 1-8).

- Song, Jintong, et al. "LSTM-Based Deep Learning Model for Financial Market Stock Price Prediction." Journal of Economic Theory and Business Management 1.2 (2024): 43-50.

- Jiang, Wei, et al. "Applications of generative AI-based financial robot advisors as investment consultants." Applied and Computational Engineering 67 (2024): 28-33.

- Bai, Xinzhu, Wei Jiang, and Jiahao Xu. "Development Trends in AI-Based Financial Risk Monitoring Technologies." Journal of Economic Theory and Business Management 1.2 (2024): 58-63.

- Li, Huixiang, et al. "AI Face Recognition and Processing Technology Based on GPU Computing." Journal of Theory and Practice of Engineering Science 4.05 (2024): 9-16.

- Yuan, J., Lin, Y., Shi, Y., Yang, T., & Li, A. (2024). Applications of Artificial Intelligence Generative Adversarial Techniques in the Financial Sector. Academic Journal of Sociology and Management, 2(3), 59-66.

- Fan, C.; Li, Z.; Ding, W.; Zhou, H.; Qian, K. Integrating artificial intelligence with SLAM technology for robotic navigation and localization in unknown environments. Appl. Comput. Eng. 2024, 67, 22–27. [CrossRef]

- Chen, Zhou, et al. "Application of Cloud-Driven Intelligent Medical Imaging Analysis in Disease Detection." Journal of Theory and Practice of Engineering Science 4.05 (2024): 64-71.

- Wang, B., Lei, H., Shui, Z., Chen, Z., & Yang, P. (2024). Current State of Autonomous Driving Applications Based on Distributed Perception and Decision-Making.

- Song, Jintong, et al. "LSTM-Based Deep Learning Model for Financial Market Stock Price Prediction." Journal of Economic Theory and Business Management 1.2 (2024): 43-50.

- Bai, Xinzhu, Wei Jiang, and Jiahao Xu. "Development Trends in AI-Based Financial Risk Monitoring Technologies." Journal of Economic Theory and Business Management 1.2 (2024): 58-63.

- Shi, Y.; Yuan, J.; Yang, P.; Wang, Y.; Chen, Z. Implementing intelligent predictive models for patient disease risk in cloud data warehousing. Appl. Comput. Eng. 2024, 67, 34–40. [CrossRef]

- Yang, P.; Chen, Z.; Su, G.; Lei, H.; Wang, B. Enhancing traffic flow monitoring with machine learning integration on cloud data warehousing. Appl. Comput. Eng. 2024, 67, 15–21. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).