3.5.2. Analysis of Ablation Experiment Results

In this experiment, the predictions of the TCN, GRU, TCN-GRU, and BiTCN-BiGRU methods served as the experimental control group and were compared with the predictions of the proposed model to confirm the following two points:

(1) The feature-capture efficiency and prediction accuracy of BiTCN-BiGRU are superior to those of the basic BiTCN, BiGRU, TCN, and GRU prediction methods.

(2) KOA, as a population-based optimization algorithm, can effectively improve the efficiency of hyperparameter tuning and the resulting prediction accuracy.

Therefore, on the experimental platform, we conducted relevant experiments and obtained the following results

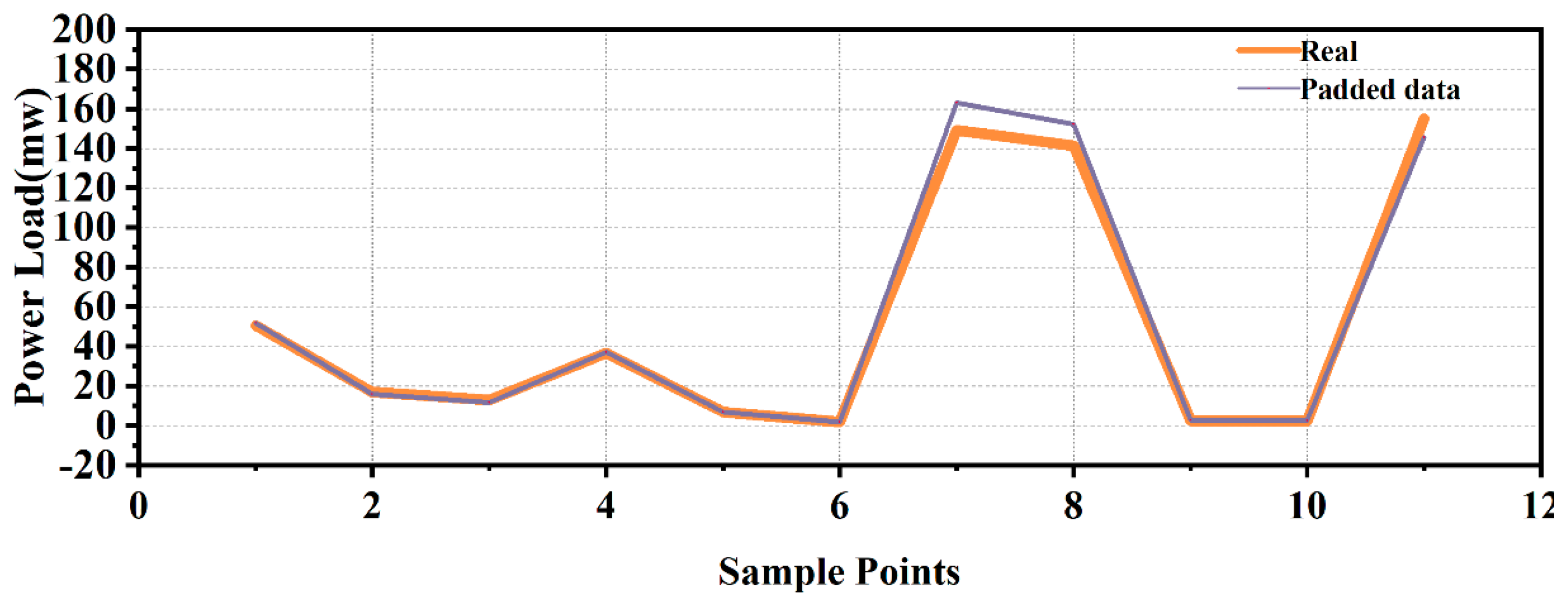

Table 5 and

Figure 9:

P.S: BB means BiGRU-BiTCN, BBA means BiGRU-BiTCN-Attention

The experimental results show that:

The initial model is a network based on the gated recurrent unit (GRU), which performs worst on all evaluation indicators: RMSE (31.71%), MAPE (39.53%), and MAE (31.99). After the temporal convolutional network (TCN) is introduced, the RMSE, MAPE, and MAE of the model improve by 11.38%, 19.48%, and 24.04%, respectively, indicating that TCN has a significant advantage in capturing long-term dependencies in time series. The bidirectional GRU (BiGRU) and bidirectional TCN (BiTCN) structures further improve model performance. Compared with the unidirectional TCN, the BiTCN model improves RMSE, MAPE, and MAE by 8.08%, 3.31%, and 6.23%, respectively. The TCN-GRU model, which combines TCN and GRU, also outperforms the single BiTCN model, with RMSE improved by 6.81%. Further, the BiTCN-BiGRU-Attention model, formed by combining BiTCN and BiGRU and introducing the attention mechanism, improves significantly on all evaluation indicators: RMSE, MAPE, and MAE improve by 8.92%, 28.04%, and 24.49%, respectively. This indicates that the attention mechanism can effectively enhance the model's ability to recognize essential features in time series, thus improving the prediction accuracy. The KOA-BiTCN-BiGRU-Attention model, which adds KOA, achieves the largest improvement on all indicators: RMSE, MAPE, and MAE improve by 22.87%, 43.48%, and 30.61%, respectively. These experiments verify the performance of the proposed model.
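The three indicators and the percentage improvements quoted above can be sketched as follows. This is a minimal sketch, not the authors' evaluation code: the text does not state how "improved by X%" is computed, so the relative error reduction in `improvement_pct` is an assumption, and the sample values are toy numbers rather than entries from Table 5.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (assumes no zero in y_true)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def improvement_pct(baseline_error, new_error):
    """Assumed definition: relative reduction of an error metric, in percent."""
    return 100.0 * (baseline_error - new_error) / baseline_error

# Toy load values, purely illustrative.
y_true = [100.0, 200.0, 400.0]
y_pred = [110.0, 190.0, 400.0]
print(rmse(y_true, y_pred), mae(y_true, y_pred), mape(y_true, y_pred))
print(improvement_pct(40.0, 30.0))
```

Under this definition, a positive value means the error decreased relative to the baseline model, which is how "improved" is read throughout this section.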

At the same time, to verify the optimization efficiency of KOA, this paper compares it with BiTCN-BiGRU-Attention models optimized by the TSA, SMA, GWO, and WOA algorithms. These algorithms are introduced as follows:

The Tunicate Swarm Algorithm (TSA) is an optimization algorithm proposed by Kaur et al. It is inspired by the swarm behavior that allows tunicates to survive in the deep sea. TSA simulates the jet propulsion and swarm behavior of tunicates during navigation and foraging, and it can solve problems with unknown search spaces [43].

The slime mold algorithm (SMA) is an intelligent optimization algorithm proposed in 2020, which mainly simulates the foraging behavior and state changes of the slime mold Physarum polycephalum under different food concentrations. The slime mold primarily secretes enzymes to digest food. Its front end extends into a fan shape, and its back end is followed by a network of interconnected veins. Different food concentrations in the environment affect the cytoplasmic flow in the vein network, producing different foraging states [30].

The Grey Wolf Optimizer (GWO) is a swarm intelligence optimization algorithm proposed in 2014 by Mirjalili et al. of Griffith University, Australia. It is an optimization search method inspired by the hunting behavior of grey wolves [44].

The Whale Optimization Algorithm (WOA) is a swarm intelligence optimization algorithm proposed in 2016 by Mirjalili and Lewis of Griffith University, Australia. WOA is inspired by, and simulates, the bubble-net attacking behavior that humpback whales use when hunting prey [45].

According to the experimental results, the KOA-BiTCN-BiGRU-Attention model outperforms the other variants on all evaluation indicators. Specifically, compared with the TSA-BiTCN-BiGRU-Attention model, the KOA variant improves RMSE, MAPE, and MAE by 10.53%, 15.95%, and 11.92%, respectively. Similarly, compared with the SMA-BiTCN-BiGRU-Attention model, the KOA variant improves these three metrics by 13.15%, 35.32%, and 20.51%, respectively. In addition, the KOA variant improves RMSE, MAPE, and MAE by 12.32%, 14.47%, and 12.21% over the GWO-BiTCN-BiGRU-Attention model and by 16.19%, 25.58%, and 17.06% over the WOA-BiTCN-BiGRU-Attention model, respectively. This indicates that KOA has a clear advantage over the compared optimization algorithms.

Next, this paper selects several classical models for comparative experiments, with the relevant parameters set consistently with the proposed model. The models are as follows:

Decision Tree: A decision tree builds a tree-like model of decision rules by recursively splitting the data set into smaller subsets. Each internal node represents a test on an attribute, each branch represents an outcome of the test, and each leaf node represents a prediction result. In time series forecasting, a decision tree can predict the target value at a future time point from the feature values at past time points.
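To illustrate how past values become the features from which a tree (or any other regressor) predicts future values, the sketch below builds sliding-window training pairs. The function name `make_windows` and the parameters `n_lags` and `horizon` are hypothetical; the paper does not describe its windowing code. For a 24-step forecast, `horizon` would presumably be 24.

```python
def make_windows(series, n_lags, horizon):
    """Turn a 1-D series into (past window, future window) training pairs."""
    inputs, targets = [], []
    last_start = len(series) - n_lags - horizon
    for start in range(last_start + 1):
        # Features: n_lags consecutive past values.
        inputs.append(series[start:start + n_lags])
        # Targets: the next `horizon` values that follow the window.
        targets.append(series[start + n_lags:start + n_lags + horizon])
    return inputs, targets

# Toy series; real load data would replace this.
series = list(range(10))
X, Y = make_windows(series, n_lags=3, horizon=2)
print(X[0], Y[0])
print(len(X))
```

Each row of `X` can then be passed to a regressor as the feature vector, with the matching row of `Y` as the target.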

XGBoost: XGBoost is an efficient gradient boosting library built on the gradient boosting machine (GBM) framework. XGBoost adds a tree at each step to minimize the loss function, which measures the difference between the model's predicted values and the actual values. XGBoost includes regularization, which helps reduce overfitting and thereby improves prediction accuracy in time series forecasting.

Gaussian Regression: Gaussian regression is a regression method based on Gaussian processes. It places a prior distribution over the infinite-dimensional space of functions and learns the posterior distribution from observations in the training data. In time series forecasting, Gaussian regression can take advantage of its smoothing properties to predict continuous time series data.

Extreme Learning Machine Regression (ELM): ELM is a learning algorithm proposed for single-hidden-layer feedforward neural networks. Its core idea is to initialize the weights and biases of the hidden layer randomly and then compute the output-layer weights directly with an analytical method. This reduces the number of parameters that need to be tuned and speeds up learning, which makes ELM especially suitable for fast prediction of time series data.
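The two ELM steps described above (random hidden layer, analytical output weights) can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the configuration used in the experiments: the scalar input, the tanh activation, the 20 hidden units, and the small ridge term added for numerical stability are all assumptions, and every function name is hypothetical.

```python
import math
import random

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def hidden_output(x, weights, biases):
    """Hidden-layer activations for a scalar input x."""
    return [math.tanh(w * x + b) for w, b in zip(weights, biases)]

def train_elm(xs, ys, n_hidden=20, ridge=1e-3, seed=0):
    rng = random.Random(seed)
    # Step 1: hidden weights and biases are drawn at random and never trained.
    weights = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    biases = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    H = [hidden_output(x, weights, biases) for x in xs]
    # Step 2: output weights solve (H^T H + ridge * I) beta = H^T y in closed form.
    A = [[sum(h[i] * h[j] for h in H) + (ridge if i == j else 0.0)
          for j in range(n_hidden)] for i in range(n_hidden)]
    rhs = [sum(h[i] * y for h, y in zip(H, ys)) for i in range(n_hidden)]
    beta = solve_linear(A, rhs)
    return weights, biases, beta

def predict_elm(model, x):
    weights, biases, beta = model
    return sum(b * h for b, h in zip(beta, hidden_output(x, weights, biases)))

# Toy data: one period of a sine wave.
xs = [i * 2 * math.pi / 49 for i in range(50)]
ys = [math.sin(x) for x in xs]
model = train_elm(xs, ys)
mse = sum((predict_elm(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
baseline = sum(y * y for y in ys) / len(ys)  # error of always predicting zero
print(mse, baseline)
```

Only `beta` is fitted, and it is obtained in a single linear solve, which is why ELM training is fast compared with iterative gradient-based training.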

GRNN (Generalized Regression Neural Network): A GRNN is a neural network based on kernel regression. It calculates the distance from each input vector to every sample in the training set and then estimates the output value from those distances. GRNN is particularly well suited to noisy datasets and nonlinear problems, making it a powerful tool for time series prediction.
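The distance-based estimate described above can be sketched as a Gaussian-kernel weighted average of the training targets. The smoothing parameter `sigma` and the fallback for a query far from all samples are assumptions made for illustration; the paper does not report its GRNN settings.

```python
import math

def grnn_predict(x, train_x, train_y, sigma=0.5):
    """Kernel-weighted average of training targets (Gaussian kernel)."""
    weights = []
    for xi in train_x:
        # Squared Euclidean distance from the query to one training sample.
        sq_dist = sum((a - b) ** 2 for a, b in zip(x, xi))
        weights.append(math.exp(-sq_dist / (2.0 * sigma ** 2)))
    total = sum(weights)
    if total == 0.0:
        # Query is so far from every sample that all weights underflow:
        # fall back to the plain mean of the targets (an assumption).
        return sum(train_y) / len(train_y)
    return sum(w * y for w, y in zip(weights, train_y)) / total

# Toy training set with one feature per sample.
train_x = [[0.0], [1.0], [2.0]]
train_y = [0.0, 1.0, 2.0]
print(grnn_predict([1.0], train_x, train_y))
print(grnn_predict([0.0], train_x, train_y))
```

A smaller `sigma` makes the estimate follow the nearest samples more closely, while a larger one smooths over more of the training set.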

Generalized Additive Models Regression (GAM): GAM models the relationship between the response and the predictor variables as a sum of smooth functions, one for each predictor. This structure makes GAM both flexible and easy to interpret, making it well suited to modeling complex nonlinear relationships in time series data.

SVR (Support Vector Regression): SVR uses the same principles as support vector machines, but in regression the goal is for as many data points as possible to fall within the ε band around the regression function defined by the support vectors. SVR makes the model more robust by introducing slack variables to handle points that cannot fall entirely within the ε band.
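The role of the ε band can be made concrete with the ε-insensitive loss, which is zero inside the band and grows linearly outside it; the nonzero values correspond to the slack that SVR penalizes. The value `epsilon=0.1` and the sample data are illustrative assumptions.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Per-sample loss: zero inside the epsilon band, linear outside it."""
    return [max(0.0, abs(t - p) - epsilon) for t, p in zip(y_true, y_pred)]

# Toy values: the first point lies inside the band, the other two outside.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.05, 2.5, 2.0]
losses = epsilon_insensitive_loss(y_true, y_pred)
print(losses)
```

Points with zero loss do not influence the fitted function, which is the source of SVR's sparsity in support vectors.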

BP (Back Propagation Neural Network): The BP algorithm updates the weights and biases of the network by calculating the error between the predicted and actual outputs and propagating this error backward through the network. This allows multilayer feedforward neural networks to learn complex nonlinear relationships, making BP a powerful tool in time series prediction.

LSTM (Long Short-Term Memory): LSTM controls the flow of information through three gates (input gate, forget gate, and output gate), which mitigates the vanishing gradient problem of traditional RNNs and thus allows long-term dependencies to be captured effectively. This property makes LSTM suitable for time series prediction, especially for long time series.

LSTM-CNN (Long Short-Term Memory Convolutional Neural Network): LSTM-CNN exploits LSTM’s ability to process sequence data and CNN’s advantage in feature extraction by combining LSTM with CNN. This combination can effectively process the spatio-temporal features in the time series data, thus improving the prediction accuracy.

MLP (Multi-Layer Perceptron): MLP is able to capture complex nonlinear relationships by introducing one or more hidden layers and using nonlinear activation functions to increase the expressive power of the network. This makes MLP a powerful tool for dealing with time series prediction problems, especially when complex non-linear patterns are present in the data.

MLP-DT (Ensemble Learning): The MLP-DT ensemble learning method combines the strengths of the Multilayer Perceptron (MLP) and Decision Tree (DT) regression models to enhance overall predictive performance and generalization ability. First, MLP and DT are selected as base models due to their superior predictive performance. Next, a hyperparameter space is defined for each model; each model is trained on the training set while its hyperparameters are tuned on the validation set to find the optimal configuration, and the validation errors are recorded. Finally, the validation errors of the two models are compared. If the errors differ significantly, the model with the smaller error is selected. If the errors are within a certain threshold of each other, the final prediction is the weighted average of the two models' predictions.
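The final selection-or-averaging step can be sketched as below. The paper gives neither the threshold nor the weighting scheme, so the relative-gap test, the `threshold=0.05` default, and the inverse-error weights are all assumptions, and `combine_predictions` is a hypothetical name.

```python
def combine_predictions(pred_a, pred_b, err_a, err_b, threshold=0.05):
    """Pick the better model, or blend both when their validation errors are close."""
    worst = max(err_a, err_b)
    if worst == 0.0:
        # Both models are perfect on validation data: use a plain average.
        return [(a + b) / 2.0 for a, b in zip(pred_a, pred_b)]
    gap = abs(err_a - err_b) / worst  # assumed: relative error difference
    if gap > threshold:
        # Errors differ significantly: keep only the lower-error model.
        return list(pred_a) if err_a < err_b else list(pred_b)
    # Errors are close: weight each model by the other model's error,
    # so the lower-error model receives the larger weight (assumed scheme).
    w_a = err_b / (err_a + err_b)
    w_b = err_a / (err_a + err_b)
    return [w_a * a + w_b * b for a, b in zip(pred_a, pred_b)]

# Toy predictions from two hypothetical base models.
mlp_pred = [10.0, 20.0]
dt_pred = [20.0, 40.0]
print(combine_predictions(mlp_pred, dt_pred, err_a=1.0, err_b=3.0))
print(combine_predictions(mlp_pred, dt_pred, err_a=2.0, err_b=2.0))
```

The weights always sum to one, so the blended prediction stays between the two base predictions.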

P.S: DT means Decision Tree

On all of the above evaluation indicators, the KOA-BiTCN-BiGRU-Attention model shows significant performance improvement over the other models. Compared with the ELM model, KOA-BiTCN-BiGRU-Attention improves RMSE, MAPE, and MAE by 76.80%, 73.46%, and 79.67%, respectively, showing excellent performance on very complex time series data. Compared with the classical LSTM model, it improves these three indicators by 71.12%, 74.02%, and 76.01%, respectively, and it also improves on the other models to varying degrees. This further supports the effectiveness and efficiency of the model proposed in this paper.

The performance improvements are visualized in Figure 12:

P.S: TG means TCN-GRU, CL means CNN-LSTM

In summary, this section verifies the performance of the proposed 24-step multivariable time series short-term load forecasting model. In future work, we will study the model further to improve its performance and computation speed.