1. Introduction

In everyday life, individuals often need to prove statements to others. The simplest method is to state the claim plainly, explain it, or show evidence that can be verified. For instance, when purchasing age-restricted goods, a customer might show an identity document to prove their age to a cashier. However, this process can expose more information than necessary, such as the customer’s exact birth date and other personal details. In digital environments, the risk is even higher, as servers can store copies of sensitive information. Zero-Knowledge Proofs (ZKPs), first introduced by Goldwasser et al. [1], are a cryptographic technique that could solve these problems. ZKPs allow a prover to prove a given statement such that a verifier can check the proof without learning anything beyond the facts implied by the correctness of the statement itself. However, traditional ZKPs are interactive, meaning that they require multiple rounds of interaction between the prover and verifier before the verifier can accept or reject the statement. Additionally, other parties cannot verify the same proof afterward, since this would require additional interactions. This limits the practicality of standard ZKPs. To address this, Blum et al. proposed Non-Interactive Zero-Knowledge Proofs (NIZKPs) [2]. NIZKPs enable a verifier to verify a claim in a single interaction while also allowing other verifiers to verify the truth of the proven statement at another point in time.
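To make the difference between interactive and non-interactive proofs concrete, the following minimal sketch shows a classic Schnorr proof of knowledge made non-interactive with the Fiat-Shamir transform. It is not one of the three protocols studied in this work, and the group parameters `P`, `Q`, and `G` are toy values chosen purely for illustration; they are assumptions and offer no security.

```python
import hashlib
import secrets

# Toy group parameters (assumption, NOT secure): G generates the subgroup
# of prime order Q in the multiplicative group modulo P = 2*Q + 1.
P, Q, G = 23, 11, 2


def challenge(y: int, t: int) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    data = f"{P}:{Q}:{G}:{y}:{t}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int) -> tuple[int, tuple[int, int]]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)   # one-time secret nonce
    t = pow(G, r, P)           # commitment
    c = challenge(y, t)        # replaces the verifier's random message
    s = (r + c * x) % Q        # response
    return y, (t, s)


def verify(y: int, proof: tuple[int, int]) -> bool:
    """Anyone holding (y, proof) can run this check, at any later time."""
    t, s = proof
    return pow(G, s, P) == (t * pow(y, challenge(y, t), P)) % P


y, proof = prove(x=7)
print(verify(y, proof))        # the honest proof is accepted
```

Because the challenge is computed from a hash instead of being sent by the verifier, the proof is a single message that any number of verifiers can check independently.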

Notably, ZKPs, especially the non-interactive variants, have gained prominence in cryptocurrencies like ZCash [3] and Ethereum [4]. In these contexts, they facilitate transaction verification without disclosing sensitive transaction details, thereby preserving privacy. Although cryptocurrencies have been the main source of interest in ZKPs due to their surge in popularity alongside other blockchain technologies, the utility of ZKPs extends far beyond this domain. In our previous Systematic Literature Review (SLR) work [5], a summary of which we detail later, we collected applications of the three main NIZKP protocols relating to privacy-preserving authentication. Specifically, we investigated the applications and performance of the zk-SNARK (zero-knowledge Succinct Non-Interactive Argument of Knowledge) [6] [7], zk-STARK (zero-knowledge Scalable Transparent Argument of Knowledge) [8], and Bulletproof [9] protocols. In the SLR work, we examined a total of 41 works that applied NIZKP protocols in a diverse set of applications. However, we found high variability in protocol performance metrics between the applications, which we believed to be attributable in large part to the differences in applications and benchmarking procedures. This result indicated that a research gap exists for a comparison of the three main NIZKP protocols benchmarked in an equal, real-world applicable use case.

Our aim in this work is to address the observed research gap by benchmarking the three main NIZKP protocols implemented in an equal, real-world, privacy-preserving application. The relevance of this lies mostly in giving researchers and application designers a meaningful overview of the main NIZKP protocols, the situations in which they excel, and their performance characteristics. Insights from this work can furthermore guide researchers to the main aspects of concern when applying NIZKP protocols to real-world applications. This, in turn, can stimulate research into mathematical improvements and newly designed NIZKP protocols that reduce the deficiencies of existing protocols.

To define our aims and objectives for this research, we first outline the key research questions that we intend to address as a result of this research work. These questions serve to guide the main direction of this research investigating the differences between the zk-SNARK, zk-STARK, and Bulletproof protocols:

What are the performance differences between the three included NIZKP protocols, as observed from a real-world implementation of each protocol in an application that is as equal as possible, expressed in efficiency and security level?

What use case contexts are most beneficial for each NIZKP protocol, given the unique combination of its features and performance metrics?

In our previous SLR work [5], the applications described in the included research works were each implemented with a single protocol. This meant that the research works were hard to compare on common grounds because of the dissimilar applications, benchmark procedures, and results. The objective of this research is therefore to implement a single application for the three protocols in a manner that is as similar as possible, with the direct purpose of making comparisons between the three protocols more straightforward. As a result, the comparison outcomes should be more informative. This objective is deeply embedded in the previously stated research questions, meaning that these questions will guide us towards a deep exploration of the three NIZKP protocols in a manner that aims to expose and clarify their associated differences.

We now reflect on the aims we set for our overall research, specifying the aims that we were unable to fulfill to our expectation in the SLR. These aims were to fill the research gap in comparing the three most used NIZKP protocols and to provide recommendations on the settings in which each protocol is most advantageous. The objectives we therefore set to achieve in this research work were:

Create an implementation and evaluate the protocols in a practical setting, using a common benchmark for a real-world use case.

Create a comparison of the efficiency and security of these three protocols, including their trade-offs between efficiency and security.

Describe recommendations for the use of these protocols in different applications, based on their strengths and weaknesses.

While we made advances on these objectives in our previous SLR work, we intend to further progress in the development of understanding related to these aims. This specific research work therefore aims to more comprehensively achieve the stated objectives to determine conclusive answers to the research questions from the previous section. To conclude, our aims and objectives for this research are to further detail the performance characteristics of the three most prevalent NIZKP protocols. We aim to do so by more comprehensively comparing those protocols in a benchmark, where we implemented each protocol in an application that is as equal as possible between the three implementations. We can then thoroughly answer which aspects of each NIZKP protocol should be considered when choosing a protocol to be applied in a particular environment.

The scope of our research is twofold. First, we briefly describe the mathematical and cryptographic primitives underlying each of the three main NIZKP protocols, the intention of which is to provide a concise understanding of the fundamental techniques that differentiate them. We do not, however, aim to accomplish a comprehensive mathematical and cryptographic manual that can be used as the basis for implementing the protocol itself in code or to create a new protocol from scratch. Furthermore, we describe the security model of each protocol, next to some vulnerabilities that have surfaced in at least some of the NIZKPs included in this work. The intention for these is, again, not to be comprehensive; instead, the information should serve as a general overview of security aspects and security vulnerabilities to consider when choosing a NIZKP protocol. Second, this work designs and performs a benchmark comparing the three NIZKP protocols zk-SNARK, zk-STARK, and Bulletproofs on their performance and security level. In the benchmark, each protocol implements an as equal as possible, privacy-preserving authentication-related application using general-purpose programming libraries that implement each protocol. There are several limitations to this part of our scope. First, we intend to implement each protocol in an application to enable straightforwardly comparing their performance. For this, the application should be as equal as possible. The application, however, does not have to consider and implement each aspect that a production-ready real-world application would, as long as the benchmark results are representative. Second, we implement each protocol in a single application. We do not implement multiple application benchmarks and will not implement the benchmark application for an exhaustive selection of programming languages and NIZKP protocol libraries. 
Provided that our benchmark implements the application with each of the three NIZKP protocols, we consider this scope fulfilled. Finally, while we aspire to benchmark the security level of each protocol, we will not designate time for an in-depth attempt at breaking the security of each protocol. We leave this to other researchers, as this is more meaningful to perform in the context of an actual production-ready application than in our representative benchmark application.

As mentioned before, the relevance of this work lies mostly in providing other researchers and application designers with a meaningful overview of the three most prevalent NIZKP protocols and the situations in which they excel. The description of their mathematical and cryptographic primitives, as well as their security aspects and trade-offs, should provide researchers with a concise reference for understanding each protocol. Next, the benchmark results should provide researchers and application designers with a novel comparison of the three NIZKP protocols in an equal setting. This, in turn, should help them make informed decisions about which protocols to apply in which real-world applications, given the performance characteristics we detailed. While our previous SLR work was a first step in achieving this, this research takes it a step further, helping researchers and application designers to choose the best-fitting NIZKP protocol for their requirements.

Therefore, we believe that our work benefits multiple groups. First, it serves as an additional work for researchers just entering the field of NIZKPs next to our previous SLR work [5]. Second, it should help individuals and organizations interested in applying NIZKP protocols to real-world applications by providing them with insights into each protocol’s performance and suitability in privacy-preserving applications. Ultimately, we believe that our work will benefit academia, industry, and society as a whole by advancing the understanding and application of NIZKP protocols.

We organized this work as follows. First, we summarize our previous SLR work, detailing its findings and the rationale for this follow-up research. Second, we describe our methodology for performing a benchmark comparison of NIZKP protocols, including the design and approach used for analyzing our results. Third, we provide a brief overview of the mathematical and cryptographic primitives for each of the three NIZKP protocols. Fourth, we detail the setup used for the benchmark, including the software, hardware, and specifics of our implementation. Fifth, we present the results from our benchmark and analyze them. Sixth, we discuss our results by answering our research questions and detailing the strengths and limitations of this research, as well as highlighting the significance of our results. Finally, we conclude this research with the main findings and recommendations, as well as a description of potential future research directions.

2. Related Work

In our previous SLR work, we analyzed a broad spectrum of research works that described diverse use cases related to authentication. All included works were related through our requirement that the use case applied at least one of the three NIZKP protocols, zk-SNARK, zk-STARK, or Bulletproofs, for some privacy-preserving use within the application context. Ultimately, we examined 41 research works that resulted from our collection and filtering criteria, discussing their implementation of the NIZKP protocol and comparing these implementations on their use case. Furthermore, we discussed the performance and security of the NIZKP in the application when a work included benchmarked figures for these. For a more detailed description of our SLR intentions, collection and filtering process, results, and discussion, we recommend consulting the full research document [5]. We limit the remainder of this section to the key findings from the SLR.

To start, 31 of the 41 works included in our SLR employed the zk-SNARK protocol in their described application, whereas the other 10 works utilized the Bulletproof protocol. This means that our work did not end up including any works that based their application on the zk-STARK protocol. While this prevented us from drawing definitive conclusions on the proportionate use of the zk-STARK protocol compared to the other protocols, we did remark that this finding signifies that the zk-STARK protocol was not commonly deployed in privacy-preserving authentication-related applications. More specifically, applications adhering to the search and filtering criteria from the SLR do not seem to utilize the zk-STARK protocol. We are confident that the reason for this will be more evident by the end of this work.

We also want to reiterate the observation that only two works mentioned the quantum resistance of their implementation. We find this interesting, especially since none of the 41 included works applied the only quantum-resistant protocol, zk-STARK. This clearly emphasizes a lack of consideration regarding this security aspect, despite quantum computing and quantum-resistant cryptographic protocols having been an important ongoing topic for the past few years [10].

Of the 41 works included in the SLR, 30 works included some form of performance analysis of the implementation. Among those, 22 employed the zk-SNARK protocol, with the remaining eight works utilizing Bulletproofs. In the SLR we discussed the performance results in several categories, though here we will only review the overall performance differences between all works. We observed highly varying measures in multiple categories of performance metrics, including the proof size, proof generation time, and proof verification times. These variations were significant, with several orders of magnitude performance difference between the same protocol applied in different works. Considering this extreme variance in observed metrics, we concluded that it was impossible to draw any definitive conclusions from comparing the performance between applications. The research works would have to specifically perform their benchmarks in a related way to another research work for us to draw any revealing conclusions from the comparison.

We had to draw a similar conclusion for the security comparison, which proved even more difficult to perform in a meaningful way. The main reason for this difficulty was the diverse ways in which researchers described the security of each implementation. Some works described the security by proving mathematical theorems in either natural language or as mathematical statements, whereas others described the security requirements of their application and mentioned either how they were achieved or how attacks were mitigated through implemented security measures, to name just a few of the encountered possibilities. Altogether, our SLR work had a particularly challenging time inferring any reliable security comparison outcomes from the 31 works that included some form of security analysis.

2.1. Research gaps

To remediate the current impossibilities of comparing different applications and their applied protocols on their performance and security, as described in Section 2, we suggested future research into a benchmarking standard. More concretely, we stated that the following actionable question arose from our SLR:

"How can future security analyses of non-interactive zero-knowledge proof application implementations be standardized to facilitate better comparison?" If every research work utilizing NIZKP protocols followed such a standard, it would facilitate a more uniform benchmarking procedure that enables an equitable and in-depth performance comparison between works. Yet, as our SLR found multiple research gaps stemming from limitations in current research works, this is not the research direction that we took for this work.

The research gap that we intend to address in this work is the lack, to the best of our knowledge, of a comprehensive applied performance comparison of the three main NIZKP protocols. Such a benchmark should utilize each of the zk-SNARK, zk-STARK, and Bulletproof protocols in an identical application to allow anyone to extract meaningful metrics from it. In the next section, we explain how we approach addressing this research gap.

2.2. Addressing research gaps

This work intends to perform the benchmark described in Section 2.1 to fill the previously stated research gap. This means that we will describe, in detail, the design and implementation of a benchmark application that we implemented as equally as possible for each of the three NIZKP protocols. To achieve such an implementation, we select at least one programming library for each of the zk-SNARK, zk-STARK, and Bulletproof protocols, and use these libraries to implement an identical application design. We can then conduct the benchmarking procedure, which we meticulously define in this document, and thereby obtain metrics on the performance of each protocol implementation. We then use this data to compare the protocols on their performance facets, to draw conclusions, and to provide recommendations on which situations warrant the usage of each protocol given their features, performance, and security characteristics.

The design of our benchmark will inherently incur some limitations on the results that we obtain, in turn limiting the indications we can provide from a comparison using these metrics. We, however, express our conviction that the benchmark results will be beneficial for improving scientific knowledge on the NIZKP protocols regardless of the limitations and that the comparison will furthermore help many researchers obtain knowledge on the performance and security aspects embedded in each protocol.

Overall, we considered the stated knowledge gap to be important to fill given the rise in popularity of NIZKPs, which we previously observed in our SLR from the increasing number of published research works per year utilizing NIZKP protocols (see Figure 5 in our SLR [5]). Being well-informed on the performance and security characteristics of each protocol is an important first aspect of selecting the right protocol for a given application. A comparison between the three main NIZKP protocols implemented in an identical application, as proposed by this work, could therefore strengthen the current corpus of scientific knowledge on this topic.

3. Methodology

In this section, we detail the methodology that we applied to answer the research questions. In Section 3.1, we describe our approach to achieving the defined objective. Then, in Section 3.2, we describe in detail the design of our benchmark, as well as the application on which we benchmark the three NIZKP protocols. Finally, we outline the results that we intend to obtain from the benchmark and the analyses that we will conduct on the acquired data in Section 3.3 and provide a schematic overview of our work in Section 3.4.

3.1. Approach

As we previously stated, the main approach of this research was to design a benchmark that implements the same application, or as close as possible, for each of the NIZKP protocols. For this, we used general-purpose programming libraries that implement the three types of NIZKPs of interest: zk-SNARK, zk-STARK, and Bulletproofs. This would give us the ability to directly compare the metrics collected from the benchmark between the protocols, or at minimum the metrics available for all three. The benchmark should preferably use a full-featured, stable programming library to implement the NIZKP application since this provided us with the most options, stable performance, and a hopefully somewhat optimized codebase. Additionally, we preferred for all three protocol libraries to use the same programming language, since this would remove the variable of different performance and options of different programming languages. We also expressed a preference for low-level compiled languages over higher-level interpreted languages, to reduce runtime overhead and performance variability. We required the NIZKP libraries to be intended for general-purpose use, meaning that they were usable for all kinds of proofs in various application settings. While it would have technically been possible to implement a custom NIZKP protocol implementation for one specific application, enabling optimisations for that specific application, we wanted our benchmark to be representative of all kinds of different applications. Furthermore, while we only implemented a single application in our benchmark, by using general-purpose NIZKP libraries for each protocol the performance differences between the protocols can be generalized for many other applications. We implemented the benchmark in code using the same programming language that the NIZKP libraries were written in, which enabled us to perform benchmarks directly on individual parts of the code. 
This was a requirement for us because we needed to benchmark the separate phases of the protocol, namely the setup, proving, and verification phases. Implementing the benchmark in this manner furthermore allowed us to access the size and security level metrics provided by the programming languages and NIZKP libraries. Both metrics would have been harder to benchmark accurately when running a benchmark using just compiled binaries as input.
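The idea of timing the setup, proving, and verification phases separately from within the code can be sketched as follows. This is a minimal illustration, not the benchmark used in this work: `ToyBackend` is a hypothetical stand-in for a NIZKP library, provides no zero-knowledge or soundness whatsoever, and exists only so that the harness is runnable. Python is used here for brevity only.

```python
import hashlib
import statistics
import time
from typing import Any, Callable


def timed(fn: Callable[..., Any], *args: Any) -> tuple[Any, float]:
    """Run fn(*args) once and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


class ToyBackend:
    """Hypothetical placeholder for a NIZKP library (no real proofs)."""

    def setup(self) -> bytes:
        return b"public-parameters"

    def prove(self, params: bytes, witness: bytes) -> bytes:
        return hashlib.sha256(params + witness).digest()

    def verify(self, params: bytes, proof: bytes) -> bool:
        return len(proof) == 32


def benchmark(backend: Any, witness: bytes, runs: int = 5) -> dict[str, float]:
    """Time each phase separately and record the proof size in bytes."""
    setup_t, prove_t, verify_t = [], [], []
    proof = b""
    for _ in range(runs):
        params, dt = timed(backend.setup)
        setup_t.append(dt)
        proof, dt = timed(backend.prove, params, witness)
        prove_t.append(dt)
        ok, dt = timed(backend.verify, params, proof)
        verify_t.append(dt)
        if not ok:
            raise RuntimeError("verification failed")
    return {
        "setup_s": statistics.median(setup_t),
        "prove_s": statistics.median(prove_t),
        "verify_s": statistics.median(verify_t),
        "proof_bytes": float(len(proof)),
    }


print(benchmark(ToyBackend(), b"secret"))
```

Measuring inside the program, as opposed to timing a compiled binary as a whole, is what makes the per-phase split and the proof size directly observable.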

3.2. Design

As outlined in our approach, our goal was to design an application, preferably related to privacy-preserving authentication, that could be implemented equally across the three NIZKP protocols. This allowed us to benchmark their performance differences effectively. Initially inspired by Cloudflare’s concept of using Hardware Security Keys (HSKs) for personhood attestation [11], further elaborated by Whalen et al. [12], our design aimed to replace CAPTCHAs with HSK-based signature validation. This concept evolved into zkAttest by Faz-Hernández et al. [13], using sigma-protocol ZKPs to attest personhood while preserving HSK certificate privacy. Due to implementation constraints and time limitations, we simplified our benchmark application to a hash function across all protocols, which still yields foundational performance insights despite not directly targeting privacy-preserving authentication scenarios. This approach allowed scalable benchmarking, offering insights into protocol performance across varying computational loads.
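As an illustration of what a hash-based benchmark statement can look like, the sketch below expresses the relation "I know a secret preimage whose iterated hash equals a public digest" in plain code. The choice of SHA-256 and of scaling the computational load through the number of hash iterations are assumptions made for this example; the text above only states that the application was simplified to a hash function.

```python
import hashlib


def hash_chain(preimage: bytes, rounds: int) -> bytes:
    """Apply SHA-256 `rounds` times; more rounds means a larger computation."""
    digest = preimage
    for _ in range(rounds):
        digest = hashlib.sha256(digest).digest()
    return digest


def relation_holds(public_digest: bytes, secret_preimage: bytes, rounds: int) -> bool:
    """Relation a proof system would attest to without revealing the preimage."""
    return hash_chain(secret_preimage, rounds) == public_digest


secret = b"example witness"
for rounds in (1, 16, 256):
    public = hash_chain(secret, rounds)
    print(rounds, public.hex()[:16], relation_holds(public, secret, rounds))
```

In a NIZKP setting, the prover would convince the verifier that `relation_holds` evaluates to true for the public digest while keeping `secret_preimage` hidden; here the check runs in the clear only to show the statement itself.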

3.3. Results analysis

Now that we have defined our approach for the benchmark, we conclude the methodology by outlining the metrics we aimed to collect and the analyses we intended to conduct on those metrics.

Regarding the metrics, it’s important to note that they varied between the protocols. For instance, the zk-SNARK protocol necessitates a trusted setup, unlike zk-STARK and Bulletproofs. Therefore, for zk-SNARK, we additionally recorded the size of the Common Reference String (CRS), a metric not applicable to the other protocols. Common metrics across all three protocols included proof size, proof generation time, proof verification time, and the theoretical security levels of the proofs, although achieving uniform data across all protocols proved challenging, as clarified in Section 5.3.

Additionally, certain metrics were contingent on how each library implemented the ZKP protocol, such as additional compilation requirements or inclusion of commitments in the proof. Our aim was to provide comprehensive metrics relevant to each protocol, enabling a robust comparison on data transfer, storage size, and computation times.

In terms of analysis, we evaluated several key aspects across the protocols:

Setup requirements and time: What are the trusted setup requirements for each protocol? How long does setup take, and what is the data size involved?

Proof generation: How long does it take to generate a proof? What is the resulting data size necessary for proof verification?

Verification: What is the verification time for the proofs?

Security aspects: How do the security levels differ between protocols? How does altering security levels impact other metrics?

Furthermore, we provided qualitative insights into aspects of the protocols and their library implementations that transcend exact metrics. Specifically, we discussed practical considerations where certain implementations may excel or falter based on situational demands.

3.4. Overview

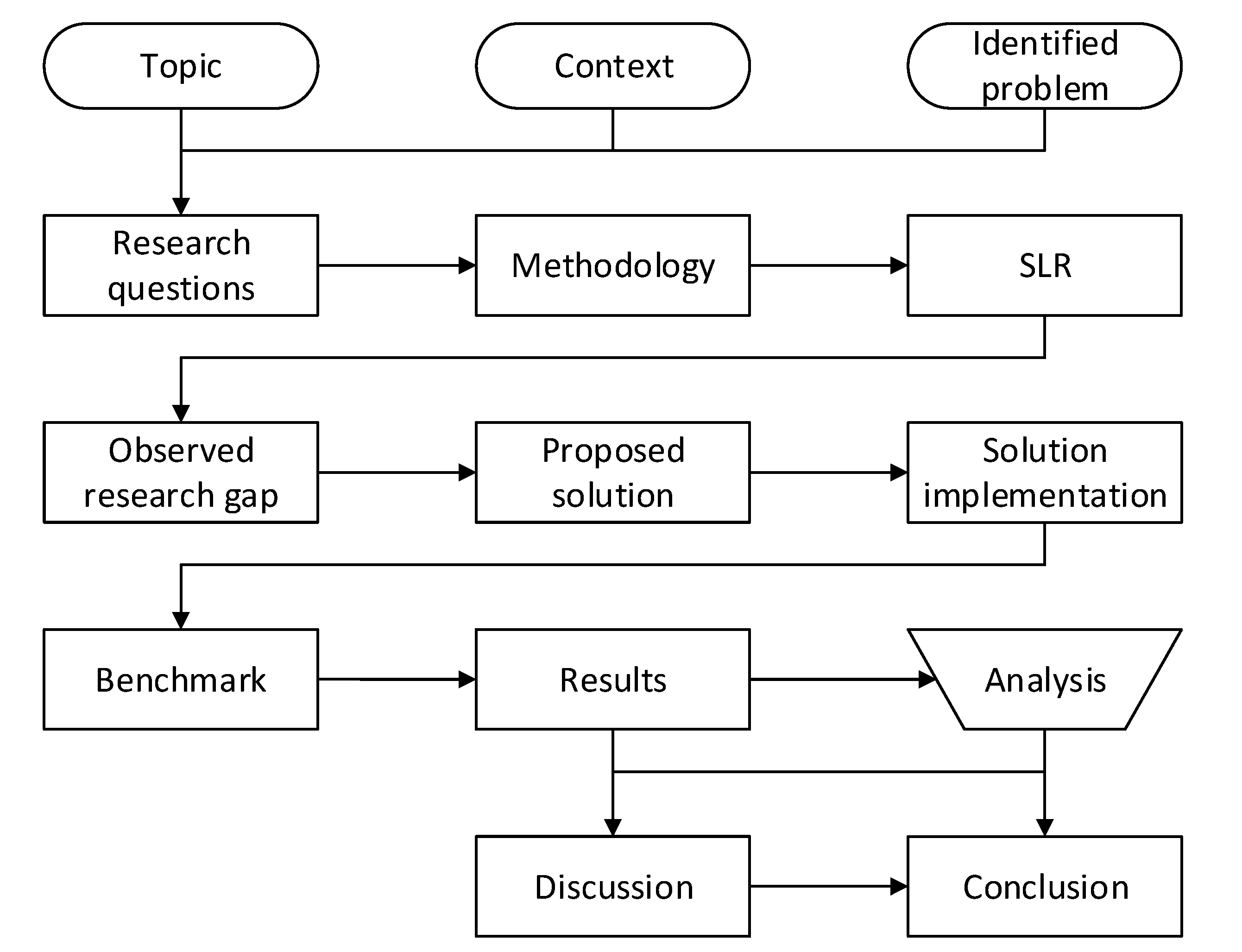

To conclude this section, we provide a schematic overview of the entire process for our research work, including the previously performed SLR, in Figure 1.

4. Protocol Comparison

To start off this section, we emphasize the inclusion of this comparison to understand the origins of performance and security differences among the various protocols. The mathematical and cryptographic primitives underlying a NIZKP protocol not only enable the functionality of proving statements succinctly and with privacy but also define their core features, strengths, and limitations. These foundational elements significantly influence the performance and security characteristics of each protocol. Therefore, comprehending these underlying differences is crucial for gaining a comprehensive understanding of this study, including the benchmarks performed and the subsequent conclusions drawn. In addition to the performance primitives, this chapter also briefly touches upon the security models and assumptions inherent to each protocol. Understanding these models and assumptions is essential for anyone integrating NIZKPs into their applications. Deviations from these models can compromise the expected security levels, posing risks in critical scenarios such as medical data protection or financial transaction integrity. Hence, familiarity with these aspects is vital for informed protocol selection and implementation. Furthermore, we underscore the importance of understanding the historical implementation pitfalls of NIZKP protocols. By outlining past vulnerabilities—describing their nature, affected protocols, and remedial measures—we aim to prevent recurrent errors and enhance overall implementation security. This highlights the necessity for implementers to possess a foundational understanding of the mathematical and cryptographic underpinnings of NIZKPs. Such knowledge mitigates the risks associated with flawed implementations and contributes to the robustness of applications leveraging zero-knowledge proofs. Given these considerations, we argue that a grasp of NIZKP protocol primitives is advantageous, especially for readers less versed in the field. 
To aid comprehension, this chapter includes a concise overview of these primitives, facilitating a clearer understanding of subsequent discussions and analyses. We summarize the defining characteristics of the zk-SNARK, zk-STARK, and Bulletproof protocols.

Table 1 shows this comparison. Additionally, we briefly describe how we obtained the values listed in that table.

First, for zk-SNARK, the values for "Proof size", "Proof generation", and "Proof verification" were obtained from the introduction of the Pinocchio paper by Parno et al. [6] and the Groth16 SNARK paper by Groth [7]. They emphasize that the proof size is constant and the generation and verification times are linear relative to the computation size. Second, for zk-STARK, Ben-Sasson et al. [8] provided details on the complexities of "Proof generation" and "Proof verification" in their paper. The proof size complexity, stated to be polylogarithmic, was confirmed through references and documentation from StarkWare [14]. Third, for Bulletproofs, the proof size complexity was obtained from Bünz et al. [9], where it is stated to be logarithmic in the number of multiplication gates. The linear complexities for proof generation and verification were confirmed through the detailed explanations in the Bulletproof paper. The values for "Trusted setup", "Quantum secure", and "Assumptions" were collected from the overview of the mathematical foundations and security assumptions of the three protocols. It’s important to note that the complexities of proof size, generation, and verification may vary slightly due to the specific implementations and details of each protocol. For precise details, we recommend consulting the cited works directly.
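To give a feel for how the proof size complexities named above diverge as the computation grows, the following sketch evaluates the three growth rates in arbitrary units. All constant factors are set to 1 and the polylogarithm is taken as the square of the base-2 logarithm; both are illustrative assumptions, so only the growth trend is meaningful and the numbers must not be read as byte counts or as measured results.

```python
import math


def proof_size_growth(n: int) -> dict[str, float]:
    """Growth of proof size in the computation size n, in arbitrary units.

    Constants are fixed to 1 and polylog is modeled as log2(n)^2; these are
    illustrative assumptions, not values taken from any implementation.
    """
    log_n = math.log2(n)
    return {
        "zk-SNARK (constant)": 1.0,
        "Bulletproofs (logarithmic)": log_n,
        "zk-STARK (polylogarithmic)": log_n ** 2,
    }


for exponent in (10, 20):
    n = 2 ** exponent
    print(f"n = 2^{exponent}:", proof_size_growth(n))
```

Squaring the computation size from 2^10 to 2^20 leaves the constant term unchanged, doubles the logarithmic term, and quadruples the squared-logarithm term in this model.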

5. Proposed Solution

In this section, we describe the proposed solution according to the methodology described in Section 3. First, in Section 5.1 we restate our implementation for the proposed solution and link this to the research gap observed in our SLR. In Section 5.2, we then describe in detail the software and hardware that were used to perform the benchmark, while in Section 5.3 we comprehensively describe the implementation of the benchmark design as outlined in Section 3.2. After that, we detail the benchmark procedure that we followed to obtain the actual results from our implementation in Section 5.4. Finally, we justify our proposed solution in Section 5.5, where we briefly state how it addresses our research questions, and present a schematic overview of our proposed solution in Section 5.6.

5.1. Solution

In Section 2.1, we stated which of the research gaps observed in our previous SLR we intend to address in this work. To summarize in a single sentence, we intend to address the lack of a comprehensive applied performance comparison of the three main NIZKP protocols in existing research works. We described our methodology, that is, how we intend to resolve our chosen research gap, in Section 3. Specifically, in Section 3.2 we decided to implement a hash function application using each of the three protocols. Using these equivalent application implementations, we can benchmark the performance and subsequently compare the resulting metrics between the protocols. To link our implementation back to the observed research gap: by implementing each of the three protocols of interest, we provide the comparison between the zk-SNARK, zk-STARK, and Bulletproof protocols that is absent in current literature. We additionally go one step further by implementing these protocols in an equivalent application, which removes the difficulty of comparing performance across different protocol use cases, a significant limitation of the protocol comparison in our SLR. By benchmarking each protocol in an identical application, we provide the closest possible comparison between the NIZKP protocols.

5.2. Software & Hardware

This section describes the software and hardware that we used to implement and perform the benchmark. Knowing the exact version of each piece of software is important, because different software, and even different versions of the same software, can lead to vastly different implementations with vastly different performance characteristics. By providing the exact version of each piece of software used, we strive to make our benchmark repeatable by other researchers. Likewise, knowing the hardware used in a benchmark is important because different hardware can produce vastly different benchmark results. While we would expect different hardware to produce metrics that are proportionate to the speed of the hardware, with the metrics for each protocol changing according to the performance of the hardware, this is by no means guaranteed. Such expectations may in particular not hold when using different processor designs, including different implemented instruction sets (e.g. AVX, AVX2) or an entirely different processor architecture (e.g. ARM instead of x86-64). For this reason, we list the hardware that we used to perform the benchmark, intending to make the benchmark repeatable for other researchers. The list also allows other researchers to explain performance differences observed when they reproduce the benchmark on different hardware.
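One lightweight way to support this kind of repeatability is to store a machine-readable record of the environment next to the benchmark results. The sketch below uses only Python standard-library calls and is an illustration, not the procedure followed in this work; the field names are chosen for this example. Instruction-set extensions such as AVX2 are not exposed by these calls and would need an operating-system-specific source.

```python
import json
import os
import platform
import sys


def environment_record() -> dict[str, str]:
    """Collect basic software and hardware facts to publish with results."""
    return {
        "os": platform.platform(),
        "architecture": platform.machine(),    # e.g. x86_64 or arm64
        "processor": platform.processor(),     # may be empty on some systems
        "logical_cpus": str(os.cpu_count()),
        "python": sys.version.split()[0],
    }


print(json.dumps(environment_record(), indent=2))
```

For compiled NIZKP libraries, the equivalent record would additionally pin the compiler version and the exact library versions, for example through the dependency lock file of the language's package manager.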

5.2.1. Software

For the software, the most important components in the benchmark are of course the ZKP libraries used to implement the three protocols. For this reason, these libraries were the first software that we decided on.

Initially, we started looking at ZKP libraries implemented in the Go language since this was the language with which we were most familiar. It also satisfied our requirement of being a compiled and performant language. We found, however, that only a full-featured zk-SNARK library named Gnark [

15] was available in Go. Because the requirements we set in

Section 3.1 state that we should preferably choose a library for each protocol in the same programming language, this would not work. However, we noticed that the Gnark package was well documented and had implemented more primitive building blocks than other libraries we found for the three protocols. For this reason, we found this package interesting to use for initial proof-of-concept implementations of our ideas. Additionally, we expected that it would be useful to implement our benchmark application in the Gnark package as well, next to the zk-SNARK implementation in the language of the other two protocol libraries. This SNARK implementation in Go could then indicate, when compared to the other SNARK implementation, what performance differences a library implementation in a different programming language can make.

This led us to perform a more general cursory search for ZKP libraries, through which we found that Rust had a well-implemented Bulletproof library [

16]. We also found and examined several JavaScript libraries, but these did not fulfill our requirement of being written in a compiled and high-performance language. For example, the bulletproof-js library [

17] includes a benchmark comparison to other Bulletproof libraries in their documentation, including a comparison to the aforementioned Rust Bulletproof library. This comparison demonstrated that the performance of the bulletproof-js library is several orders of magnitude lower than that of the comparable Rust Bulletproof library, which indicated to us that Rust might be a suitable candidate language to find an implementation for the other ZKP protocols. We also noticed, by not finding any STARK libraries written in either Go or JavaScript, that a full-featured zk-STARK library would be the most difficult to find. Therefore, we focused our attention on finding a good STARK library first. We found a library called libSTARK [

18], which is a STARK implementation in C++ by the authors of the original STARK paper. However, our initial impression was that it seemed that this library uses a special notation to design circuits and that we would not be able to freely implement it with the main programming language. We furthermore found the Rust Winterfell crate [

19], which seemed well-implemented, provided documentation, and was in active development. There were some limitations to this library though, including that it does not implement perfect zero-knowledge and focuses on succinctly proving computations instead of knowledge. We will describe these limitations in more detail in

Section 5.3. However, even with these limitations in mind, it was the best option we found. We already identified the Rust Bulletproof crate earlier, which meant that we only had to find a SNARK library to have discovered a library for each protocol in the Rust language. We found this in the Rust Bellman crate [

20]. Having found a full-featured library for all three protocols written in Rust, we decided to implement our benchmark in Rust. Besides covering each protocol, these libraries were each well-implemented, at least somewhat documented, and well-known. In summary, we found that implementing the ZKP application in Rust using the Bellman, Bulletproof, and Winterfell crates was the best option for our benchmark.

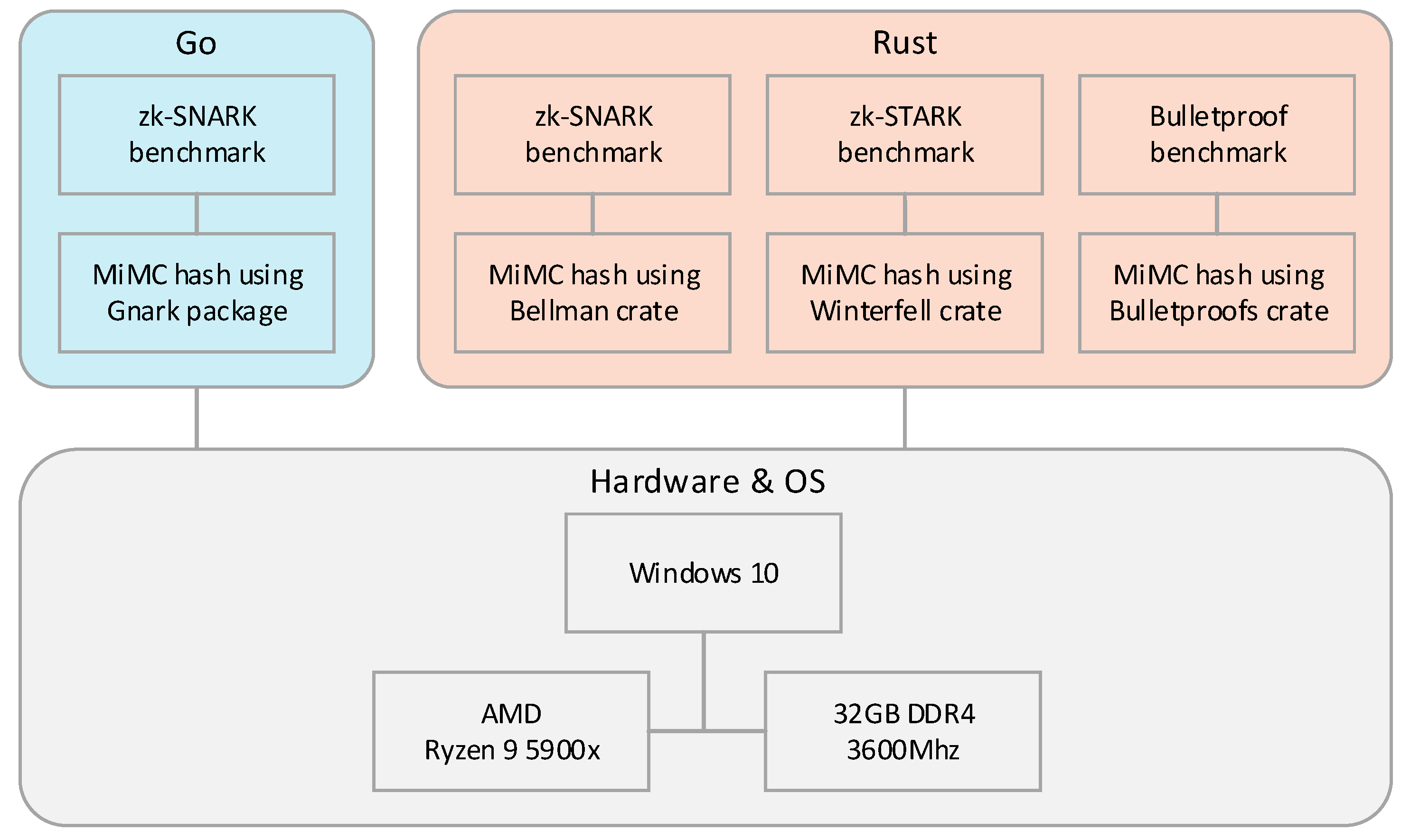

To summarize, we ended up using four ZKP libraries written in two different programming languages. Since our benchmark implementation depended on these ZKP protocol libraries, we included those as our main dependencies. We additionally depended on several cryptographic libraries required for using the mentioned NIZKP libraries. We detail the full list of (direct) dependencies by language in

Table 2.

Because of our chosen ZKP libraries, we required the two programming languages Go and Rust, as well as the Rust package manager Cargo. The version used for each piece of software is listed in

Table 3.

5.2.2. Hardware

As for the used hardware, we performed the benchmarks on a desktop computer with the following specifications:

The computer ran Windows 10 version 22H2 as the operating system and we configured it to run in the better performance power mode. The D.O.C.P. (Direct Overclock Profile) setting was enabled in the motherboard settings to attain the intended speeds as specified for the memory modules. We did not apply any further overclock or undervolt, meaning that the processor ran at stock speeds.

5.3. Implementation

Now that we have determined which software and dependencies to use for the benchmark, we describe the actual implementation of the benchmark using the chosen ZKP libraries.

Our initial idea for the implementation, as described in

Section 3.2, consisted of a zero-knowledge proof which proved that a given public Elliptic Curve Digital Signature Algorithm (ECDSA) key verified a signature and was included on a list of trusted keys. The intention for such a proof was to prove that the user utilized a hardware security key from a trusted manufacturer to sign a message, without leaking the manufacturer details or batch information of the hardware security key. Our benchmark application would have implemented such a proof for each of the three ZKP protocols, albeit without communicating with a real hardware security key, generating the public keys in code instead. Our first step in creating the implementation was to create a proof of concept using the Gnark zk-SNARK library. We chose to implement the proof of concept in Gnark because of the great documentation, our familiarity with the language, and the numerous existing cryptographic primitives that the codebase contained. We started out with an implementation using the Edwards-curve Digital Signature Algorithm (EdDSA) to get familiar with the Gnark library, since creating a Gnark circuit for proving the verification of an EdDSA signature was explained in a tutorial [

21]. We expanded this proof to additionally verify that the used public key was included in a provided list of trusted public keys. We defined the public key as a secret input to the circuit, while we set the message, signature, and trusted key list as public inputs. The code for this implementation can be found in the Git repository for this research [

22]. With a working implementation for EdDSA, we re-implemented the same approach in Gnark for ECDSA. This process was more involved, because we had to use more primitive cryptographic building blocks, yet eventually we got the ECDSA-proof circuit working identically to the EdDSA circuit. We should note though that, since we ended up not using this implementation, we did not fully implement some aspects of the proof that did not impact functionality but would have impacted security in any real use cases. The corresponding code can be found in our Git repository [

22].

Now that we had a working zk-SNARK implementation using the Gnark library, we knew that the idea would technically be possible to implement. With that said, we did have to implement the same application for each of the three ZKP protocol libraries in Rust, which is where we hit some difficulties. First, while we implemented the proof-of-concept idea in Gnark because it provided a tutorial, documentation, and many cryptographic primitives, this was not the case for the Rust ZKP libraries. This meant that we would have had to implement these primitives ourselves, leading to more opportunities for security issues. More importantly, we expected that this would take more time than we had available for the research. Even more critically, the creators of the zk-STARK library geared it towards succinctly proving computations, as opposed to proving knowledge like the zk-SNARK and Bulletproof libraries. This meant that the application would require a completely different approach in the STARK implementation compared to the other two protocols. On top of this, at the time of implementation, the STARK library did not provide perfect zero-knowledge. This meant that there was no option for us to provide the used public key to the circuit, as required in our proof of concept, since the proof would not keep this key private. While it sounds strange to have to keep a public key secret, we reiterate that openly providing this key would reveal some privacy-sensitive information about the used hardware security key. As a result, doing so would invalidate the entire reason for utilizing a NIZKP in the application in the first place. For these reasons, we decided to abandon this idea for our benchmark application. Instead, we opted to use a more rudimentary application.

For the basic ZKP application idea that we could implement more equally for all three protocols, we decided to implement a hash function. Our application would ensure this hash either had a variable number of rounds or would use the hash as part of a hash chain, to enable some way to increase the required amount of work in the proof. After some deliberation between the MiMC [

23], Poseidon [

24], and Rescue [

25] hashes, we eventually chose the MiMC hash function. Namely, this hash function is well-optimized for zero-knowledge proofs [

26], has a simple algorithm that is easy to implement in proof circuits, and example implementations we could adapt and build on were available for the SNARK and Bulletproof Rust ZKP libraries. The number of rounds used in the MiMC hash can be varied in our benchmark, where each round requires a different round constant for security. This enabled us to implement the hash for all three protocols, since, at least for our intents and purposes, proving knowledge of the pre-image of a public hash is the same as proving the computation of calculating the required hash from a pre-image provided by the prover. In the latter case, which applies to the STARK implementation, the pre-image would not necessarily remain private, however. To keep the implementations equivalent, we therefore did not focus on these variables remaining private in the other protocols either. This is a limitation of our benchmark, for which we decided that the most important aim was to keep the proof as similar as possible. Since this limitation is important to consider for real-world implementations using ZKPs, we further discuss this limitation in

Section 7.4.
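To make the structure of the benchmarked statement concrete, the following is a minimal Python sketch of a MiMC-style hash with a variable number of rounds and one round constant per round. It is an illustration under stated assumptions, not the code from the repository: the cubing exponent, the small prime `P`, the single-input keyed form, and the way `round_constants` derives constants are all choices made here for readability, and the Rust and Go implementations may differ in each of them.

```python
import hashlib

# Illustrative prime (assumption). gcd(3, P - 1) == 1, so cubing permutes the field.
P = 1_000_000_007


def round_constants(n_rounds, label=b"mimc-demo"):
    """Derive one public constant per round (hypothetical derivation)."""
    consts = []
    for i in range(n_rounds):
        digest = hashlib.sha256(label + i.to_bytes(4, "big")).digest()
        consts.append(int.from_bytes(digest, "big") % P)
    return consts


def mimc(x, key, constants):
    """Apply one cubing round per constant, then add the key once more."""
    state = x % P
    for c in constants:
        state = pow((state + key + c) % P, 3, P)
    return (state + key) % P


# The prover knows the pre-image; the verifier only sees the image and constants.
constants = round_constants(16)
image = mimc(123456, 0, constants)
```

Increasing the length of `constants` increases the work per hash, which mirrors how the number of MiMC rounds scales the workload in the benchmark.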

To summarize, our actual implementation consisted of a proof that verifies that the prover knows a pre-image to a certain MiMC hash image. The MiMC hash had a variable number of rounds, and we provided the round constants as input to the circuit. We implemented this application in each of the three chosen Rust protocol libraries. Our implementation adapted and built upon example implementations for both the Rust SNARK library [

27] and Bulletproof library [

28], while we created the Winterfell STARK library implementation from scratch. Moreover, we implemented the application in the Go Gnark zk-SNARK library as well, for comparison reasons described in

Section 5.2. We conjecture that this implementation provided the best possible comparison between the three protocols. Where significant for real-world implementations, we provide additional protocol-specific context in

Section 6 and

Section 7. We also present additional justification for our implementation idea in

Section 3.2. The code for all implementations can be found in the Git repository for this research [

22].

An important consideration for the Bulletproof implementation was that we did not apply any form of batch verification, even though this is one of the beneficial aspects of the Bulletproof protocol that the Bulletproof library implements. While such batching verification could reduce the total verification time compared to performing each proof verification separately, it required an application where such batching is viable. In this work, we benchmarked the process of generating and verifying a single proof, which means that batching did not apply to our benchmark. We will discuss the implications of this in

Section 7.

Finally, when inspecting our implementation, one should consider that we used seeded randomness for our benchmark. This means that the randomness we used in our implementation is not secure. Any real-world implementation should at minimum replace the seeded randomness with a cryptographically secure randomness source.
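The difference between the two kinds of randomness can be sketched as follows. This is a Python illustration only; the function names `benchmark_input` and `production_input` are hypothetical and do not appear in the benchmark code, which is written in Rust and Go.

```python
import random
import secrets


def benchmark_input(seed, upper):
    """Seeded generator: the same seed always yields the same value.

    Useful for repeatable benchmarks, but predictable and therefore insecure.
    """
    rng = random.Random(seed)
    return rng.randrange(upper)


def production_input(upper):
    """Draw from the operating system's cryptographically secure source."""
    return secrets.randbelow(upper)


# Two runs with the same seed produce identical inputs.
repeatable = benchmark_input(42, 10**9) == benchmark_input(42, 10**9)
```

A seeded generator keeps every benchmark run on identical inputs, which is why it is convenient for measurement and unsuitable for deployment.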

5.4. Benchmark procedure

With the implementation code completely written, we commenced the benchmark procedure. First, we restarted the hardware which we performed the benchmark on to clear as many resources as possible. After this restart we waited a minute for the operating system and all initiated startup processes to settle. We then opened a separate terminal window in the Rust and Go implementation directories.

The first benchmark we performed was the benchmark comparing the protocols on several numbers of rounds. For the number of rounds, we settled on the numbers corresponding to

with

, since this formula is a requirement for the zk-STARK implementation as described in

Section 5.3. This gave us the set of MiMC rounds

, which we believe provided a nice range to represent the performance differences between the NIZKP protocols for various amounts of required work. We ensured that we applied the correct default configurations and had set the desired number of MiMC rounds in the benchmark code. We then issued the `cargo bench` command, which compiled the Rust code as a release target for the best performance and used this compiled binary to run the benchmark for each of the three protocols sequentially. When the benchmark for the Rust implementations was complete, we logged the benchmark results and other metric outputs in an Excel sheet for each protocol under the set number of MiMC rounds. With the Rust benchmark results recorded, we switched to the other terminal for the Go implementation and repeated the process, only using the `go test -bench . ./internal/hash/.` command instead. This command, like the `cargo bench` command for Rust, compiled the Go SNARK MiMC implementation and ran the benchmark outputting the results. When we performed all benchmarks for a given number of MiMC rounds, we repeated the process for each other number of rounds, noting down all the results in the same Excel sheet. We additionally ran a benchmark comparing the performance of the zk-STARK implementation for different options. The process for this benchmark resembled the procedure described above, yet instead of using fixed option parameters with a dynamic number of rounds, we fixed the number of rounds and modified the default option parameters by a single option at a time. By initiating the `cargo bench stark` command, we conducted the benchmark for just the zk-STARK implementation and obtained the performance difference caused by a single option parameter change. We then recorded the benchmark results and metrics in the Excel sheet and subsequently reverted the option parameter to the default, repeating this process for all options and several parameters for each option. 
Finally, we performed one last benchmark for the STARK, in which we set the option parameters to the combination of values that provided the best performance according to the individual parameter benchmarks. Having performed all benchmarks, we processed the metrics in the Excel sheet into the benchmark result tables and graphs found in

Section 6.1. The code that we wrote to implement all benchmarks can be found in the Git repository corresponding to this work [

22].
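The option benchmark described above changes one parameter at a time and reverts it before moving on. A minimal Python sketch of how such a list of configurations can be generated is given below. Apart from the default of 42 queries, which is mentioned elsewhere in this work, the parameter names and candidate values are hypothetical placeholders, not the values used in the benchmark.

```python
from itertools import product

# Hypothetical defaults and candidate values (assumptions for illustration).
DEFAULTS = {"num_queries": 42, "blowup_factor": 8, "grinding_factor": 16}
CANDIDATES = {
    "num_queries": [41, 42, 43],
    "blowup_factor": [4, 8, 16],
    "grinding_factor": [0, 16],
}


def one_at_a_time(defaults, candidates):
    """Return configurations that differ from the defaults in exactly one option."""
    configs = []
    for name, values in candidates.items():
        for value in values:
            if value == defaults[name]:
                continue  # the default itself is the baseline run
            cfg = dict(defaults)
            cfg[name] = value
            configs.append(cfg)
    return configs


def full_factorial(candidates):
    """Return every combination of candidate values, for comparison."""
    names = list(candidates)
    return [dict(zip(names, combo))
            for combo in product(*(candidates[n] for n in names))]
```

With these placeholder values, the one-at-a-time sweep yields 5 runs next to the baseline, whereas the full combination grid yields 18, and the gap widens quickly as options and values are added.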

5.5. Justification

Now that we depicted our proposed solution in-depth, we succinctly provide a justification for how this proposed solution addresses the research questions as stated in

Section 1. We address the first research question, "What are the performance differences between the three included NIZKP protocols, as observed from a real-world implementation of each protocol in an application that is as equal as possible, expressed in efficiency and security level?", with our proposed solution. By implementing the identical MiMC hash application utilizing a real-world library implementation for each of the three included NIZKP protocols, we will be able to observe the performance metrics related to the efficiency and security level for each. While the performance and security metrics available in each protocol will limit our scope, we can compare the metrics that we were able to obtain for each protocol to provide an answer to this first research question. By extracting the strengths of each included NIZKP protocol from the performance metrics, and cross-referencing these with the unique requirements of several applications, we can distil knowledge on the use case contexts that are most beneficial for each protocol. Using this extracted knowledge, we will then be able to answer the second research question, which should provide researchers with recommendations on the situations in which a given NIZKP protocol is best applied. To conclude, we are confident that by implementing the proposed application we will be able to provide a comprehensive answer to the research questions stated at the start of this work. We consider this to constitute sufficient justification to implement our proposed solution.

5.6. Overview

To conclude this section, we provide a schematic overview of our proposed solution in

Figure 2.

7. Discussion

In this section, we discuss the research and benchmark performed as described in previous chapters. Starting in

Section 7.1, we discuss the results achieved from the benchmark, including a discussion on our findings as well as a general discussion on the implementation and the used ZKP protocol libraries. With the achieved results discussed, we aim to answer our research questions in

Section 7.2. We continue the discussion by talking about the strengths of our research in

Section 7.3, and subsequently contrast these strengths by examining the limitations of our work in

Section 7.4. Finally, we discuss the significance of our work and the potential use cases for the contained knowledge.

7.1. Achieved results

In our work, we benchmarked four general purpose NIZKP libraries implementing the zk-SNARK, zk-STARK, and Bulletproof protocols for use in real applications. We benchmarked these libraries in an equivalent application related to the privacy-preserving authentication context. From the benchmark results, detailed in

Section 6, we observed the following ordering between the protocols regarding proof size, proof generation time, and proof verification time:

Proof size: We found that the SNARK protocol produced the smallest proofs, with the zk-STARK protocol producing the largest proofs. The Bulletproof implementation produced proofs that were somewhere in the middle, yet closer to the proof size from the SNARK. The Bulletproof proof size was within one order of magnitude from the two SNARK implementations, while the STARK implementation proof was at least one order of magnitude larger than the two other protocols. We note that this observation considers just the proof size, not including the verifying key size in the SNARK protocol.

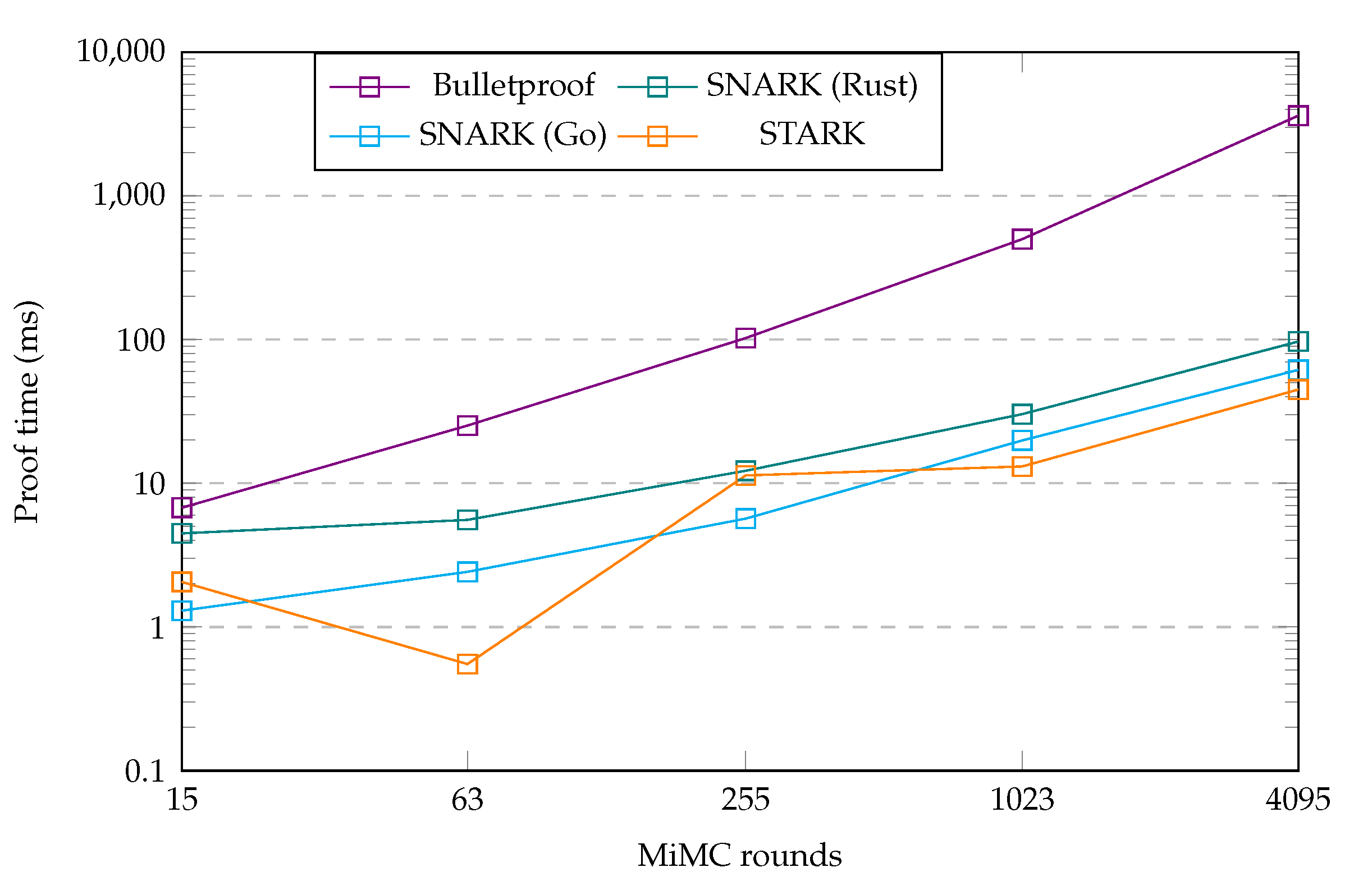

Proof generation time: Though with some fluctuations in the duration metrics, we overall observed the STARK implementation to be the fastest in generating a proof. The two SNARK implementations came in at the second place, with the proof times for these three implementations remaining within one order of magnitude difference. Generating a proof using the Bulletproof implementation took longer than for the other protocols, with a proof time that was more than an order of magnitude larger for the upper MiMC round numbers.

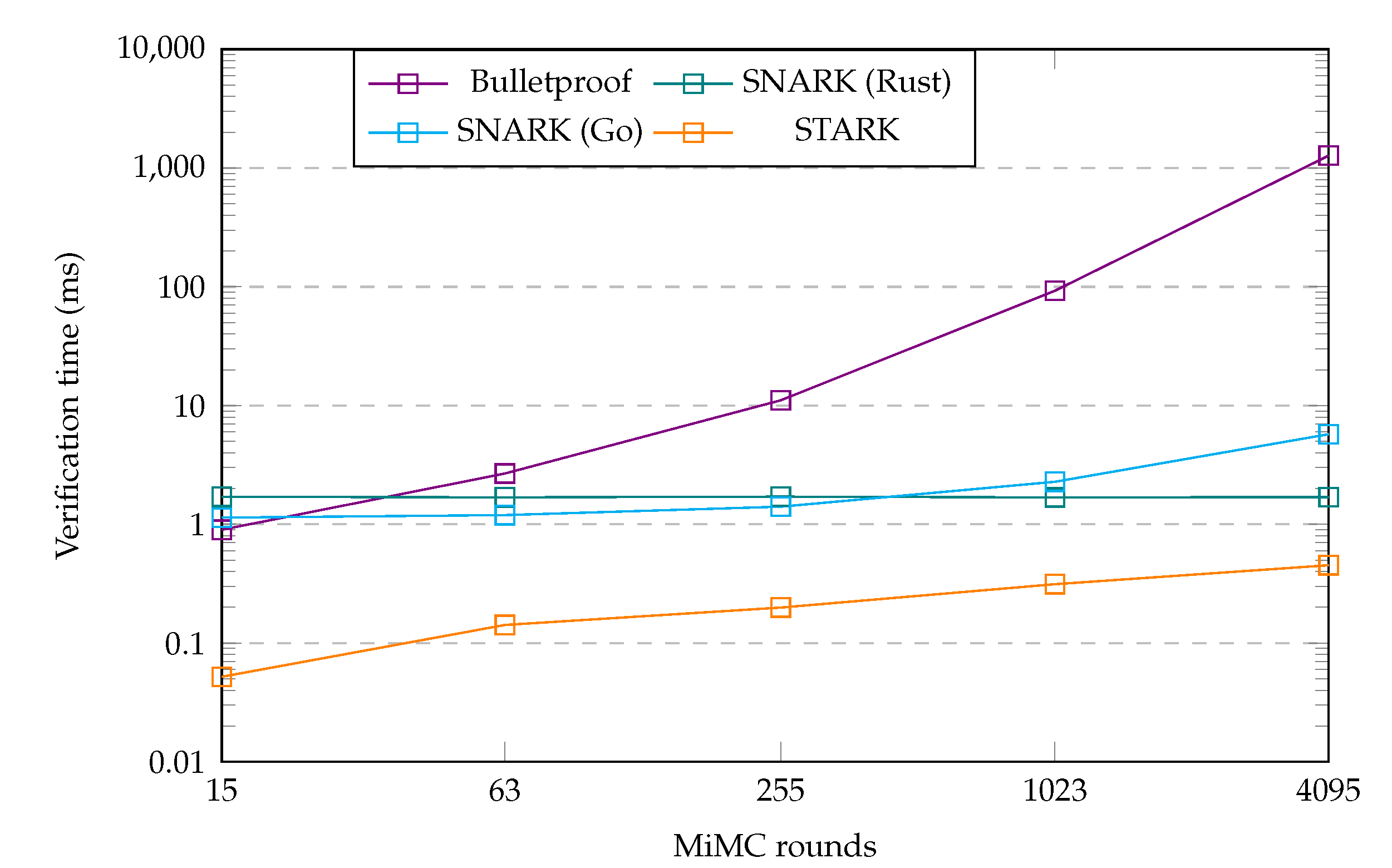

Proof verification time: When verifying a proof, the STARK protocol performed the verification fastest. The Bulletproof proof verified the slowest, except at the lowest number of MiMC rounds where the proof verified slightly faster than the two SNARK proofs. Interestingly, the verification times for the STARK and Bulletproof proofs increased much more rapidly with the number of MiMC rounds than the SNARK proofs. While the STARK implementation was well over an order of magnitude faster at lower MiMC round numbers, this difference had shrunk to just around or even within an order of magnitude difference compared to the Go or Rust SNARK implementations, respectively, at the largest number of MiMC rounds. In the same way, the Bulletproof proof went from verifying slightly faster than the SNARK proofs at the lowest number of MiMC rounds, to verifying more than two orders of magnitude slower than the SNARK proof by the largest number of benchmarked MiMC rounds.
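Since the comparisons above are phrased in orders of magnitude, the following minimal Python sketch shows how such a difference can be computed from two measurements. The helper names and the example figures are hypothetical and are not taken from the benchmark results.

```python
import math


def orders_of_magnitude(a, b):
    """Return the base-10 logarithmic distance between two positive values."""
    return abs(math.log10(a / b))


def within_one_order(a, b):
    """True when the two values differ by less than a factor of ten."""
    return orders_of_magnitude(a, b) < 1.0


# Hypothetical timings in milliseconds, for illustration only.
gap_small = orders_of_magnitude(5.0, 20.0)      # a factor of 4
gap_large = orders_of_magnitude(1000.0, 10.0)   # a factor of 100
```

Under this definition, a factor of four counts as well within one order of magnitude, while a factor of one hundred corresponds to two orders of magnitude.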

We included these metrics for reference in

Table 8. Assuming the found metrics are valid, and disregarding that the hardware used to obtain them is unknown, we cross-referenced these metrics with the results from our benchmark and observed that our results indicated a corresponding performance ordering for most metrics. The ordering for the proof size matched, and even the exact figures were comparable to the ones we obtained at higher numbers of MiMC rounds. We remark that a match in the exact figures is not particularly meaningful, though, since we expect the found comparison to be obtained from an entirely different application benchmarked on different hardware. We therefore expect this correspondence to be coincidental. For the proof time, the ordering of the best performing protocols also matched, even with the SNARK and STARK metrics being much closer to each other than to the Bulletproof at higher MiMC round numbers. Only for the verification time was the ordering in our benchmark different from the cross-reference source. Whereas in our benchmark the STARK implementation verified faster than the SNARK implementations, the cross-referenced comparison stated the inverse. What did match, however, at least for the results we obtained at larger numbers of MiMC rounds, was that the SNARK and STARK times were much closer together, with the Bulletproof proof verifying significantly slower.

Regarding the cross-check for the proof size, this only included the actual proof size. When we included the verification key as well, as required by the verifier to verify a proof in the two SNARK protocol implementations, the outcome changed. In that case, the Rust implementation had a combined size almost as large as the proof size for the Bulletproof protocol, and at lower MiMC round numbers the combined size for the Go SNARK implementation became larger than the Bulletproof proof. At higher numbers of MiMC rounds, the combined verification key and proof size of the Go SNARK implementation even became larger than the proof of the STARK implementation. That was the case without even including the witness size, which the verifier additionally required in the Go SNARK implementation. Including the verifying key in the comparison would therefore not only alter the performance ordering between the different protocols, it also unveiled a clear contrast between the performance of two implementations of the same protocol, a contrast which manifested itself to a significantly smaller degree in the time-based metrics. We found this difference, a verifying key that is constant in size versus one that increases almost exponentially in size with the number of MiMC rounds, intriguing at the very least. While we aimed to limit such contrast between the different implementations of the three different protocols by using libraries written in the same programming language for each protocol, these observations not only tell us that this was the right thing to do, but also show the importance of optimized protocol libraries. Such optimization can make a substantial difference in the performance, even when both library implementations use the same Groth16 backend [

7] underneath.

Lastly, we want to discuss the results achieved in the benchmark comparing the configuration parameter values for the zk-STARK protocol implementation. We examined the performance when configured using the settings that individually provided optimal performance, as described in

Section 5.4. We found that this improved the performance compared to our default configuration for all metrics except the conjectured security level. We could argue that this means that we initially chose the wrong default configuration parameters. However, as mentioned in

Section 6.2, we achieved even better performance metrics when using the default configuration adjusting only the FRI maximum remainder degree. This demonstrated that the ’optimal’ configuration parameter values when combined are not necessarily ’optimal’ at all, and that the combination of different parameters forms a complex system of trade-offs. To truly inspect the impact of each parameter and the best performing configuration, in that case, would require more than benchmarking all combinations of parameters. Just to benchmark all combinations of our selected individual parameter changes would require benchmarking

configurations. Considering all parameter values would significantly increase this value. Even then, we would have benchmarked for just a single number of MiMC rounds, which as seen from our benchmark can significantly influence the performance of the STARK protocol implementation. And even at that point, we still would have only performed the benchmarks on a single hardware configuration, while different hardware configurations may benefit from different software configuration settings. Because of this, we still consider our approach of choosing the initial configuration using parameter values somewhere in the middle to be a safe choice, which enabled us to inspect the impact each parameter has on the protocol performance. In addition, we observed that the proof size, verification time, and conjectured security level did not differ greatly. Even the proof time, for which our default number of queries of 42 was a bad pick, decreased only by a factor of six when choosing 41 as the number of queries. While such a performance improvement is not negligible, it remains within one order of magnitude, even though it constitutes a larger improvement than the threefold improvement achieved by the combination benchmark. Given that the zk-STARK protocol had a proof size more than an order of magnitude larger than the second largest proof size created by the Bulletproof protocol, not to mention that the STARK implementation already showed the best performance for the proof time and proof verification time, a more optimal configuration would ultimately not have altered our conclusions. We therefore conclude that our findings are still valid, despite the sub-optimal default configuration that we used for the zk-STARK protocol.

7.2. Research Question Answers

Based on the achieved results, we can now attempt to answer the research questions from

Section 1. The two research questions stated for this work were:

What are the performance differences between the three included NIZKP protocols, as observed from a real-world implementation of each protocol in an application that is as equal as possible, expressed in efficiency and security level?

What use case contexts are most beneficial for each NIZKP protocol, given the unique combination of its features and performance metrics?

The first question we can conveniently answer for the performance by using

Table 9, which includes the averaged performance for each protocol over the five benchmarks with different number of MiMC rounds. Important to note for this table is that we calculated the average using the original, exact, numbers, then rounded the average for the proof and verification times to three decimals.
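The order of averaging and rounding matters for small timings. The following minimal Python sketch contrasts the two orders; the sample values are hypothetical and are not measurements from the benchmark.

```python
from statistics import fmean


def average_then_round(values, digits=3):
    """Average the exact values first, then round the result once."""
    return round(fmean(values), digits)


def round_then_average(values, digits=3):
    """Round every value first, then average (loses precision)."""
    return fmean([round(v, digits) for v in values])


# Hypothetical timings in seconds, for illustration only.
samples = [0.0004, 0.0004, 0.0004, 0.0004, 0.0034]
exact_first = average_then_round(samples)
rounded_first = round_then_average(samples)
```

Here the exact average is 0.001, whereas rounding each value first discards the four small timings entirely and gives roughly 0.0006.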

From this table we can clearly observe that the SNARK protocol generates the smallest proofs, whereas the generated proofs from the Bulletproof and STARK protocols are slightly larger or significantly larger, respectively. This contrasts sharply with the proof and verification times, for which we observed the shortest average proof generation and verification times from the STARK protocol. The SNARK and Bulletproof protocols took longer to create and verify their proofs. This observation answers the research question regarding the performance aspect, yet it is not a comprehensive perspective on its own. The SNARK protocol, as implemented in our benchmark, required a trusted setup. There exist situations where this is not desirable, as it requires trust in the party that performs the setup. Similarly, the STARK protocol in our benchmark involved limitations in using private data in the proofs, whereas for the Bulletproof protocol we did not apply some specific benefits not found in other protocols. We refer the reader to other sections in this chapter for more discussion on this aspect. Given the limited availability of security level metrics from the libraries we used to implement the benchmark applications, we were unfortunately, as likewise discussed in other sections in this chapter, unable to answer the security level component of this question. While other sources for these metrics indicated that the security level was comparable for the used configurations, this was no guarantee and would require additional research and implementation work to confirm.

The second research question we answer in detail through our recommendations in

Section 8.2. To summarize: The zk-SNARK protocol is a good overall choice for performance, provided that a trusted setup is acceptable for the specific use case. The small proof sizes make the protocol particularly beneficial for Internet of Things (IoT) usage, where notable storage, bandwidth, or processing power limitations apply. The Bulletproof protocol is a viable alternative for the zk-SNARK in these applications when a trusted setup is unacceptable and can furthermore be a great option for applications that require proofs that values lie within a pre-determined range. This suitability, however, comes at the cost of much larger proof creation and verification times, though the latter can be reduced significantly when the application allows batching of proof verifications. The zk-STARK protocol, finally, is currently best applied to succinctly prove the correctness of computations. This makes the STARK protocol applicable to, for example, cloud computing and distributed learning applications. The STARK protocol can quickly generate a proof for large statements, and is even quicker in verifying the generated proofs, though there exists a significant trade-off in the substantial size of the generated proofs. Finally, the zk-STARK protocol is the only viable option when the quantum resistance of the protocols is an important requirement, given that the other two protocols use cryptographic primitives that are not quantum resistant.

With the research questions answered, we reflect on the aims and objectives from

Section 1, in which we presented the following objectives:

Create an implementation and evaluate the protocols in a practical setting, using a common benchmark for a real-world use case.

Create a comparison of the efficiency and security of these three protocols, including their trade-offs between efficiency and security.

Describe recommendations for the use of these protocols in different applications, based on their strengths and weaknesses.

Regarding the first objective, we consider it fully achieved, given that our benchmark indeed evaluated the protocols in a practical setting for a real-world use case. Regarding the second objective, while we were able to compare the efficiency of the three NIZKP protocols including their efficiency trade-offs, we were not sufficiently able to do the same for the security aspects of the protocols. Given the limitations of the libraries that we used to benchmark the three protocols, we could only obtain the security level metrics for a single protocol. While this work did include an attempt to complement these metrics using works by other authors, this did not provide the comparison of the actual implementations that we had in mind. A partial consolation is our inclusion of the security primitives and limitations for each protocol in Section 4, which provides alternative knowledge on the security of each protocol that should partly offset the limited security comparison in the practical setting. This aspect constitutes a potential direction for future research. The third objective we adequately address in Section 8.2. While it was infeasible to enumerate all potential applications best suited to each protocol, we believe that we provided a fair number of categories and applications that are well suited to each protocol. We leave the ideation of other applications to other researchers, who can derive them from the information conveyed in this work, with the potential to unearth entirely new application categories.

7.3. Strengths

The main strength of this work lies in the benchmark procedure performed on the three main NIZKP protocols: zk-SNARK, zk-STARK, and Bulletproofs. The benchmark application that we implemented for this procedure was relevant to real-life applications focusing on privacy preservation and authentication. Additionally, we performed the benchmark using four existing general purpose NIZKP libraries, which are applicable to all kinds of zero-knowledge proof applications. This is an important aspect of our work, since these libraries enable using ZKPs in applications without the extensive knowledge that would be required to securely realize a custom implementation of one of the NIZKP protocols. Altogether, this means that our benchmark provides a helpful indication of the performance differences between the ZKP protocols when utilized in practice. To the best of our knowledge, our work constitutes the first research that directly compares the three main NIZKP protocols using results from an equivalent benchmark implemented with existing general purpose ZKP programming libraries. We argue that our decision to use general purpose NIZKP libraries increases the relevance of the obtained benchmark results for researchers aiming to implement an application, since the libraries allow researchers to implement a ZKP in their application faster and more securely without deep knowledge of the cryptography behind each protocol. In situations where the overhead of general purpose NIZKP libraries is known to be unacceptable, the exact ZKP protocol that one should use is undoubtedly known. In the unlikely event that this statement does not apply, the relative speed with which general purpose NIZKP libraries allow a ZKP to be implemented will quickly surface this requirement from the proof-of-concept implementation. Affected researchers can then pivot to a custom NIZKP implementation, or a different protocol altogether, without having wasted too much research time.

While in Section 7.1 we detailed some metrics circulating on the internet that compare the three main NIZKP protocols, we were unable to find the source of these metrics. As a result, we could not determine which application they benchmarked and which hardware and software they used in the process. This left us with uncertainty regarding how the metrics were obtained. In contrast, one of the main strengths of our work is the detailed documentation of the benchmarking procedure. Not only does this enable other researchers to reproduce our efforts, it furthermore allows them to extend this research work to fill additional knowledge gaps and advance knowledge on the topic of ZKPs.

Another strength of our work is that it not only provides a comparison benchmark between the three main NIZKP protocols, but also describes the cryptographic primitives forming each protocol in Section 4. This not only allows researchers to gain insights into the right ZKP protocol to use in their application regarding performance, but also provides them with a source of knowledge on the cryptographic primitives behind each of the ZKP protocols. From our perspective, this makes our work an ideal starting point for any researcher to obtain more knowledge on the three NIZKP protocols, especially when they intend to utilize one of them for a privacy-preserving application.

7.4. Limitations

In view of the strengths as discussed in Section 7.3, it is just as important to discuss the many limitations of this work. Discussing these limitations accentuates where our work leaves something to be desired, and where other researchers can step in to fill the knowledge gaps. Most of the limitations described in this section were a direct result of the scoping of the work and the decisions we made in the process. Some of these decisions were a compromise, where we deliberately chose to accept a limitation mentioned in this section to further increase one of the strengths of this work as mentioned in Section 7.3.

The main limitation of this work is that the results obtained from the benchmark do not necessarily indicate the performance of the protocol alone. The metrics partially reflect the performance of the ZKP implementation library, which may or may not be well optimized, and to a lesser degree that of the programming language in which it is written. This is a direct trade-off of our aim to benchmark a real-world implementation of an application using zero-knowledge proofs, which necessarily involved an implementation of each NIZKP protocol that can impact the performance. We further increased the impact of the implementation on the protocol performance through our decision to benchmark general purpose NIZKP libraries. While we justified this decision by stating that this is how most applications will implement ZKPs, namely through a general purpose NIZKP library that removes the extensive knowledge requirement for a custom implementation, it did mean that the obtained performance metrics were even further removed from the theoretical performance that the protocol could provide. We observed this impact first hand when inspecting and discussing the performance differences between the Rust and Go implementations of the zk-SNARK protocol. These two libraries showed vastly different performance, even though we ensured that both used the BLS12-381 elliptic curve [38] and the Groth16 backend [7]. To reduce the impact of this limitation, we decided early on to implement the benchmark using a library for each ZKP protocol written in the same programming language. As discussed in Section 5, we chose the Rust language for this, while we also included a single library in another language as a means for comparison. The comparison enabled us to show, with numbers, how the library can impact the performance of a protocol, as discussed in Section 7.1. While we expect this decision to have benefited the conclusiveness of the obtained benchmark results, we also admit that we cannot guarantee this. There are simply not enough libraries that implement zero-knowledge proof protocols to include multiple libraries written in the same programming language for the same ZKP protocol in this research. This is another limitation of our work, which other researchers have the potential to rectify in the future when alternative NIZKP libraries have emerged for each protocol. However, the comparison with the metrics for each protocol circulating on the internet, which we used to show that our benchmark achieved comparable results, contributed to our confidence that the overall performance observations from our benchmark were accurate despite these limitations.

8. Conclusion

In this section, we conclude our research in which we performed a benchmark for the zk-SNARK, zk-STARK, and Bulletproof ZKP protocols. First, in Section 8.1 we recollect the results from Section 6 and reiterate our key findings. Following our key findings, we provide some recommendations on the utilization of NIZKPs that followed from our benchmark in Section 8.2. Subsequently, we provide some promising future research directions on all kinds of NIZKP aspects that we would like to see realized in Section 8.3. Finally, we close our work with a conclusion and some final remarks in Section 8.4.

8.1. Key Findings

In this section, we concisely reiterate the key takeaways from our NIZKP protocol benchmark. For more in-depth findings, we refer the reader to Section 6 and Section 7, corresponding to the results and discussion chapters. We first recollect the results of the performance metrics found for all three NIZKP protocols, averaged over the five benchmarks on different numbers of hash rounds, listed in Table 9. From this table we clearly observed that the SNARK protocol generated the smallest proofs, while the STARK protocol generated by far the largest proofs. Regarding the proof generation and verification times, the STARK protocol was faster in both metrics than the two SNARK protocol implementations, while the Bulletproof protocol turned out to be by far the slowest for these metrics. We furthermore observed these findings to be analogous to the externally sourced protocol comparison, for which we could not determine how the metrics were benchmarked, included for reference in Table 8. The exception to this equivalence was the protocol ordering in the proof verification times between the SNARK and STARK protocols, which switched places in our results. Given that the absolute difference between these reversed metrics was small for both our results and the external results, especially compared to the difference with the Bulletproof protocol, this does not constitute an alarming difference.

Given the many configuration settings in the zk-STARK protocol library, we found it sensible to benchmark the performance differences between these configurations. While we discovered that our default configuration may not have been optimal, we remarked that this realistically did not impact the conclusions from the comparisons between the protocols. Furthermore, we observed that the configuration parameter values that were individually optimal did not provide the best possible performance when combined. We attributed this to the complexity of the inner workings of the protocol and suggested evaluating several configurations that fit the context when utilizing zk-STARKs in an application use case.
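The observation that individually optimal parameter values need not be jointly optimal can be illustrated with a minimal Python sketch. Everything in it is a stated assumption: `param_a` and `param_b` are placeholder names rather than options of the zk-STARK library, and `synthetic_cost` is a made-up cost function with an interaction term, not a measurement from our benchmark.

```python
from itertools import product

# Placeholder parameter grid and default configuration (hypothetical values).
GRID = {"param_a": [1, 2, 4], "param_b": [1, 2, 4]}
DEFAULT = {"param_a": 1, "param_b": 1}


def synthetic_cost(param_a, param_b):
    """Made-up cost (lower is better); the product term makes parameters interact."""
    return 8 / param_a + 12 / param_b + 0.6 * param_a * param_b


def best_individually(grid, default, cost):
    """Tune one parameter at a time while the others stay at their defaults."""
    best = {}
    for name, values in grid.items():
        best[name] = min(values, key=lambda v: cost(**{**default, name: v}))
    return best


def best_jointly(grid, cost):
    """Evaluate every combination in the grid and keep the cheapest one."""
    names = list(grid)
    combos = (dict(zip(names, vals)) for vals in product(*grid.values()))
    return min(combos, key=lambda cfg: cost(**cfg))


if __name__ == "__main__":
    individual = best_individually(GRID, DEFAULT, synthetic_cost)
    joint = best_jointly(GRID, synthetic_cost)
    print("individually tuned:", individual, synthetic_cost(**individual))
    print("jointly tuned:", joint, synthetic_cost(**joint))
```

In this toy setting the combination of the individually best values is more expensive than the jointly best configuration, which is why evaluating several complete configurations is the safer approach when the grid is small enough to enumerate.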

Regarding the security level of the protocols, we identified evidence that the security level did not differ notably between the protocols for our chosen configurations. With that said, this finding was inconclusive given that three of the four protocol-implementing libraries did not include a method to obtain such a security level metric. As such, we had to supplement our findings with complementary data from research works by other authors.

8.2. Recommendations

Reflecting on the obtained results from Section 6, and the discussion that subsequently ensued in Section 7, in this section we provide some recommendations on the application contexts in which we would recommend utilizing each protocol.

We start with the zk-SNARK protocol. The two implementations for this protocol showed the smallest proof size, in addition to the proof size itself being constant. The small proof size makes this protocol a great contender for applications where either storage space is limited, or where the network connection has a restricted capacity or transfer speed. An example of a situation where storage space is limited is in blockchain systems, for which we can see the zk-SNARK protocol already in use in, e.g., ZCash [45]. Limited network connections, on the other hand, are a reality for Low Power Wide Area Networks (LPWANs), often used in Internet of Things (IoT) applications and sensor networks where the devices are in a remote location and have low power requirements [46]. The small and constant size of the SNARK proofs, especially those created by the Rust implementation, makes the zk-SNARK protocol a good protocol to consider for these kinds of applications. Furthermore, as benchmarked, creating a SNARK proof is not much more compute intensive than creating a STARK proof, which is beneficial for IoT applications, where devices and sensors are often low-powered with little compute power. The most important consideration to make before applying the zk-SNARK protocol, even for these applications, is whether the requirement for a trusted setup is acceptable. There are sparks of hope for applying the zk-SNARK protocol in situations where a trusted setup is unacceptable. Researchers have recently created new SNARK backend techniques, including SuperSonic [47] and Halo [48], that do not require a trusted setup in certain situations. ZCash currently uses a Halo 2 zk-SNARK backend [