Submitted: 03 July 2024

Posted: 04 July 2024

Abstract

Keywords:

1. Introduction

1.1. FR3 Band: The Goldilocks Spectrum

1.2. A Shared Spectrum Economy

1.3. The Need for Real-Time AI-Spectral Perception

1.4. Spectrum Sensors with Omnipresent Perception

2. Review

2.1. Dynamic Spectrum Access: Signal Processing and ML/DL Approaches

2.2. Radio Astronomy and RFI

2.3. Approximate DFT

3. System Overview

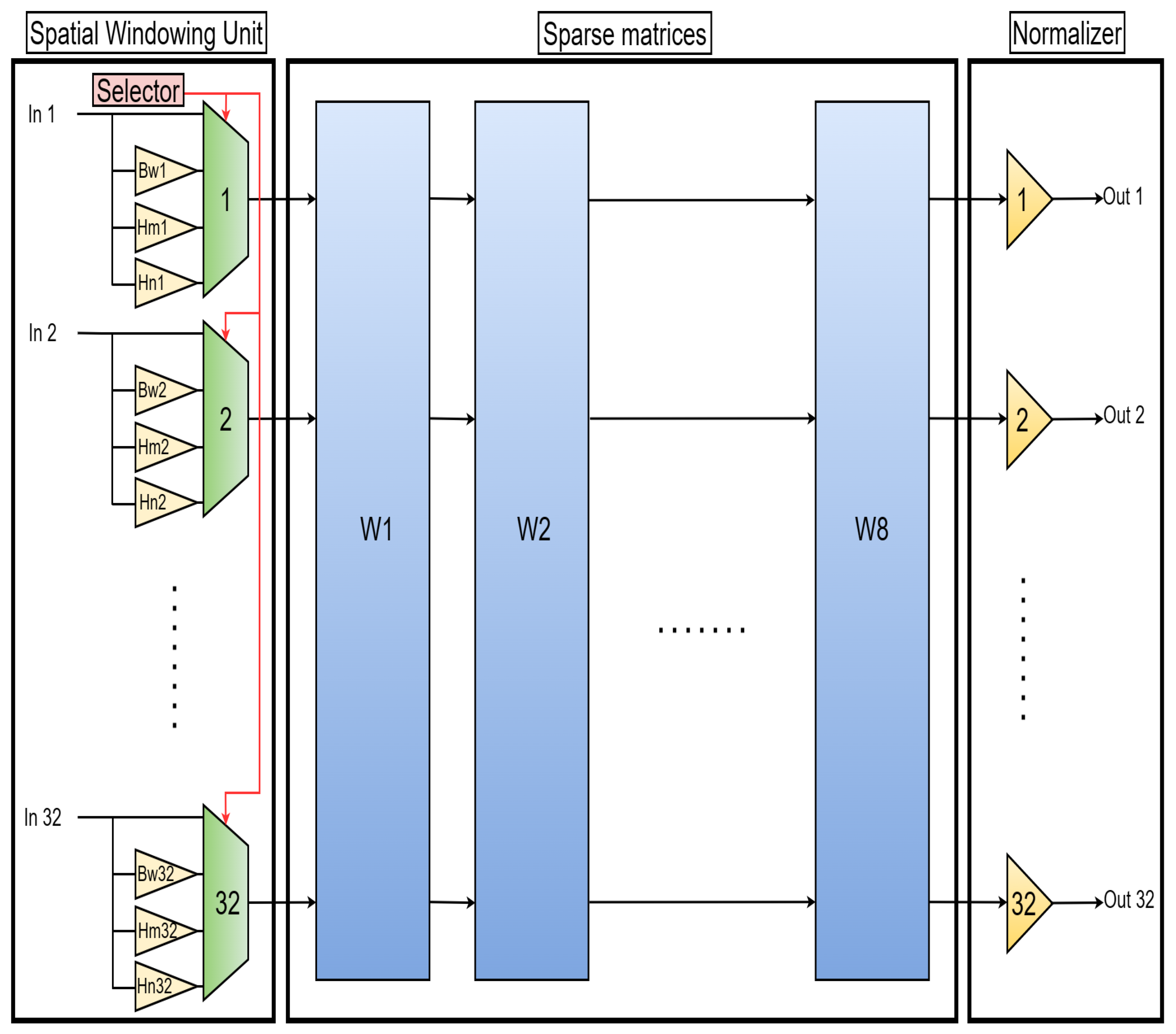

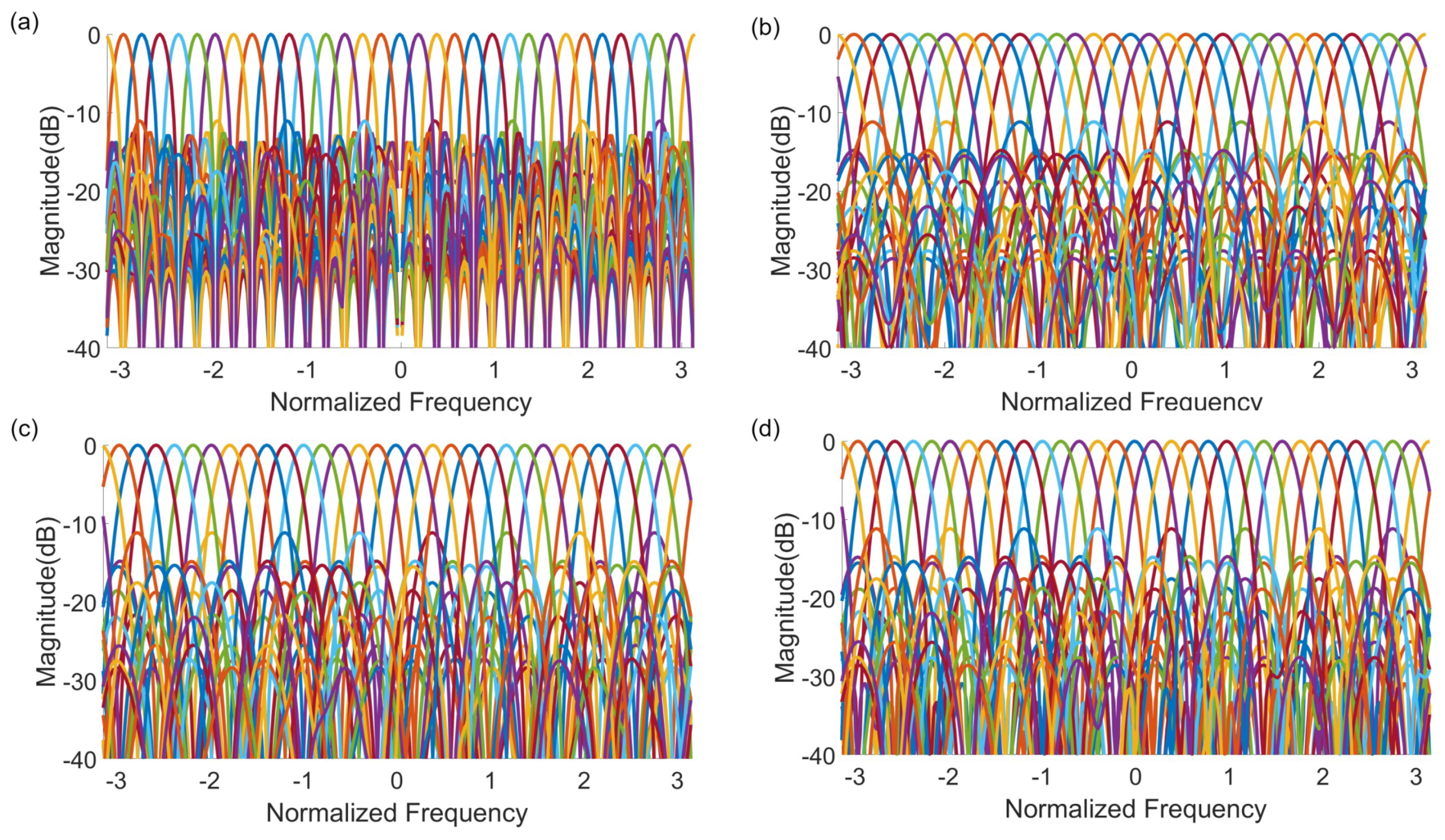

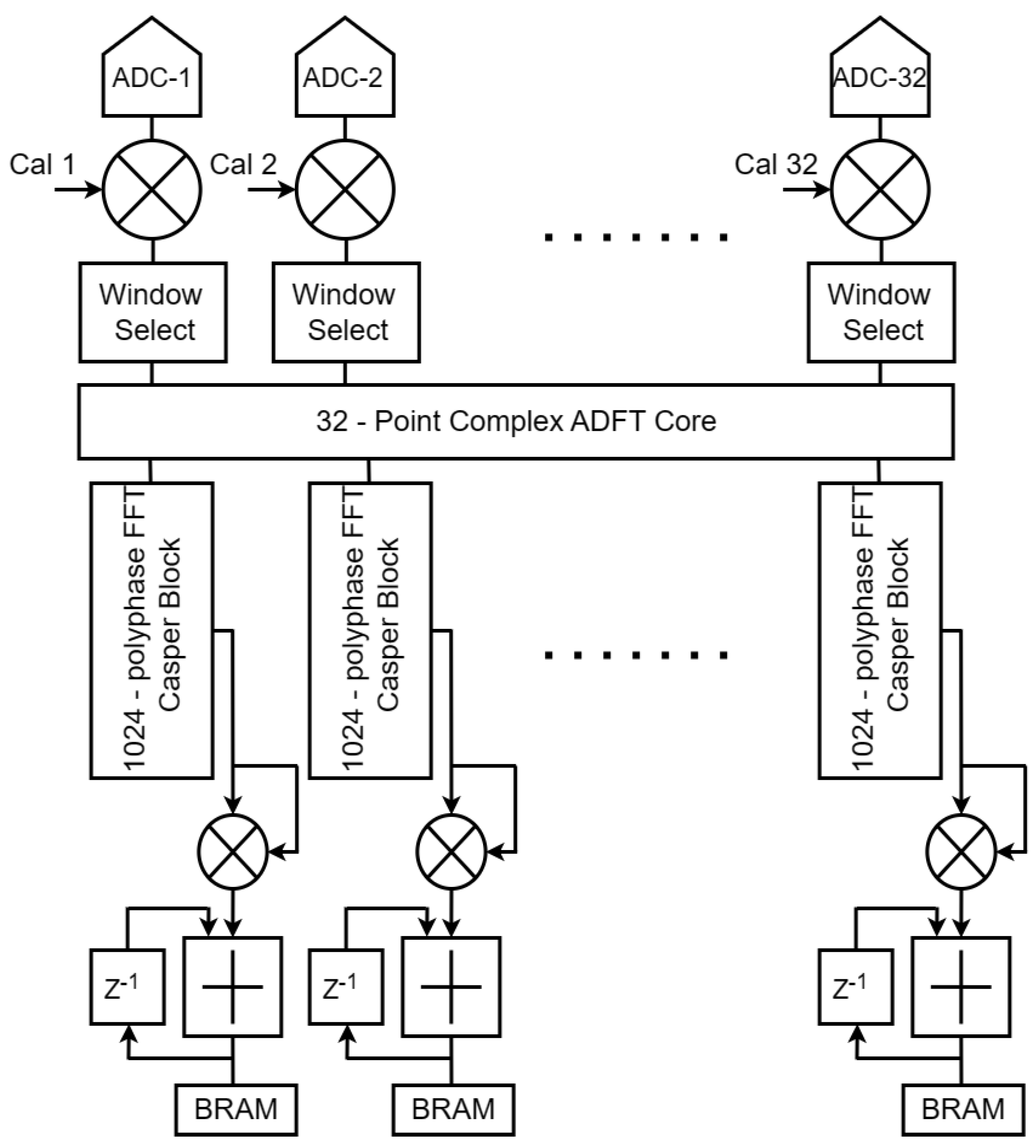

3.1. Proposed Architecture

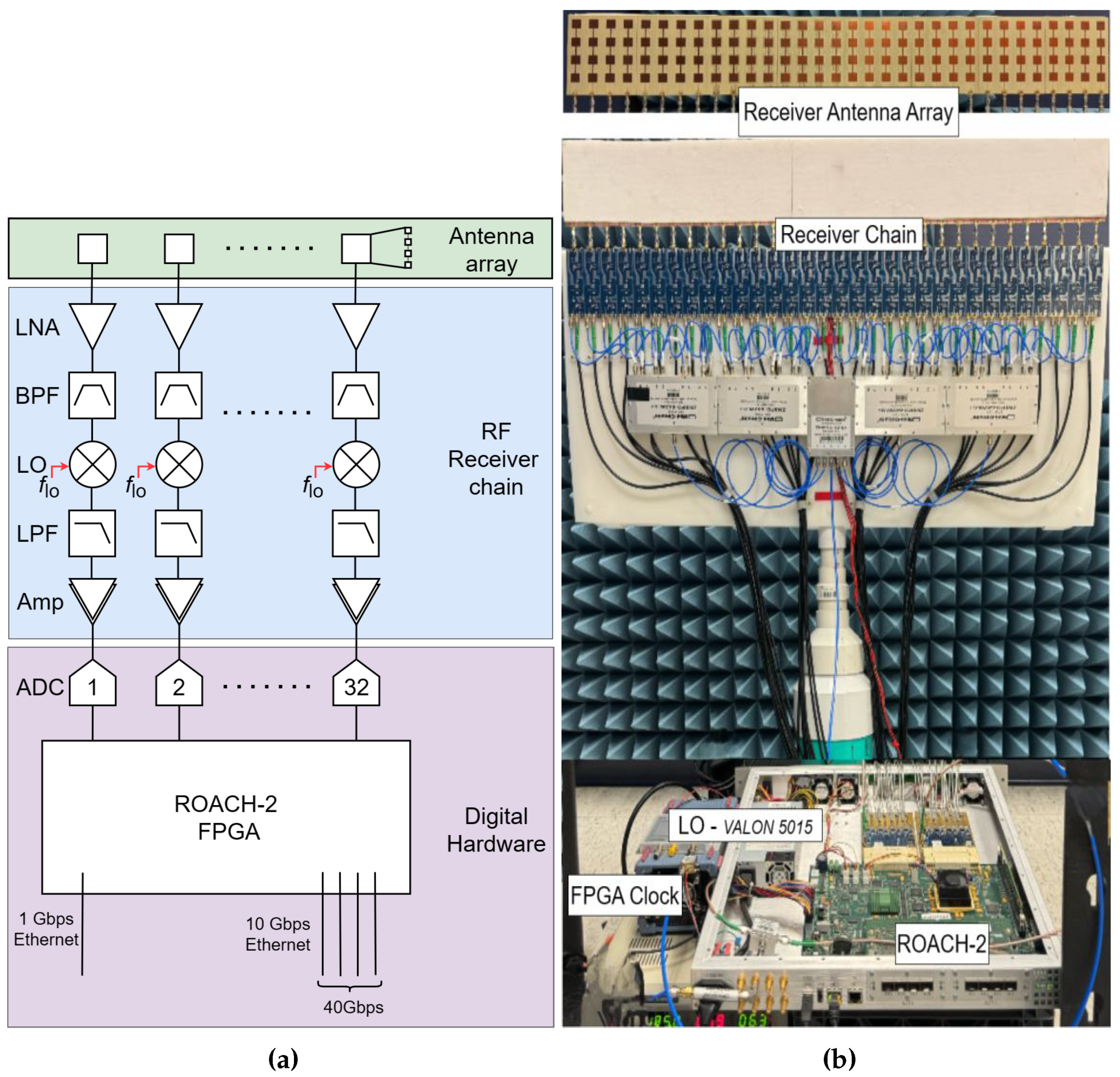

3.2. Analog Front-Ends

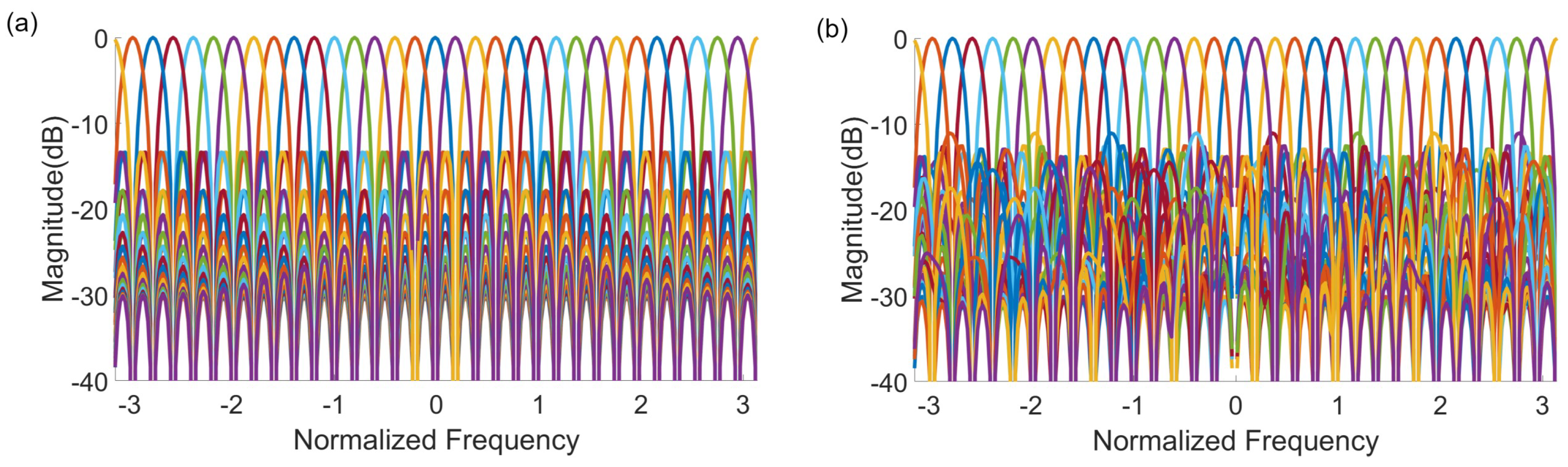

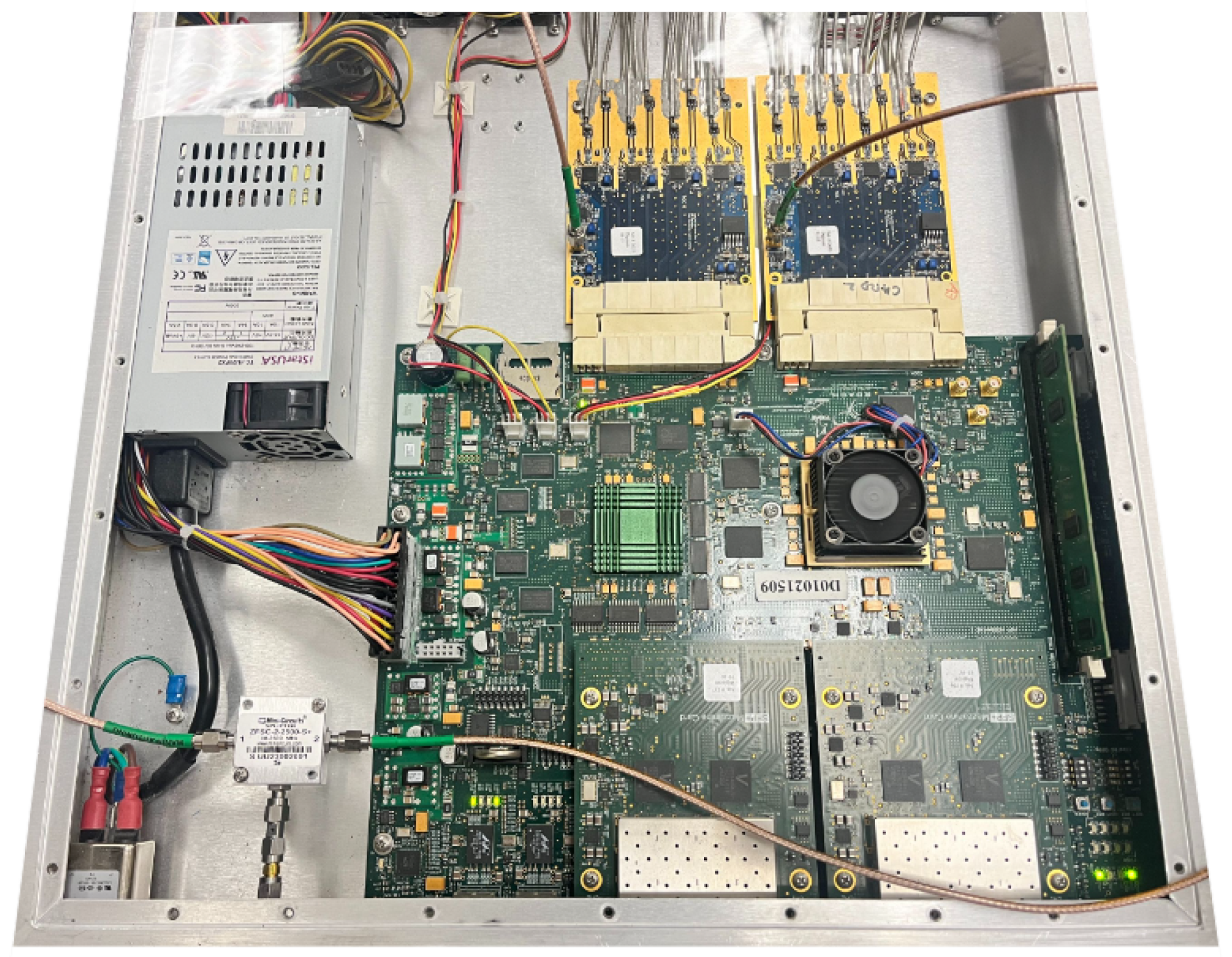

3.3. ADFT Cores and Digital ADFT Spectral Estimation

3.4. Digital FFT Spectrometers

3.5. Digital High-Speed Connectivity

4. Experimental Results

4.1. Calibration

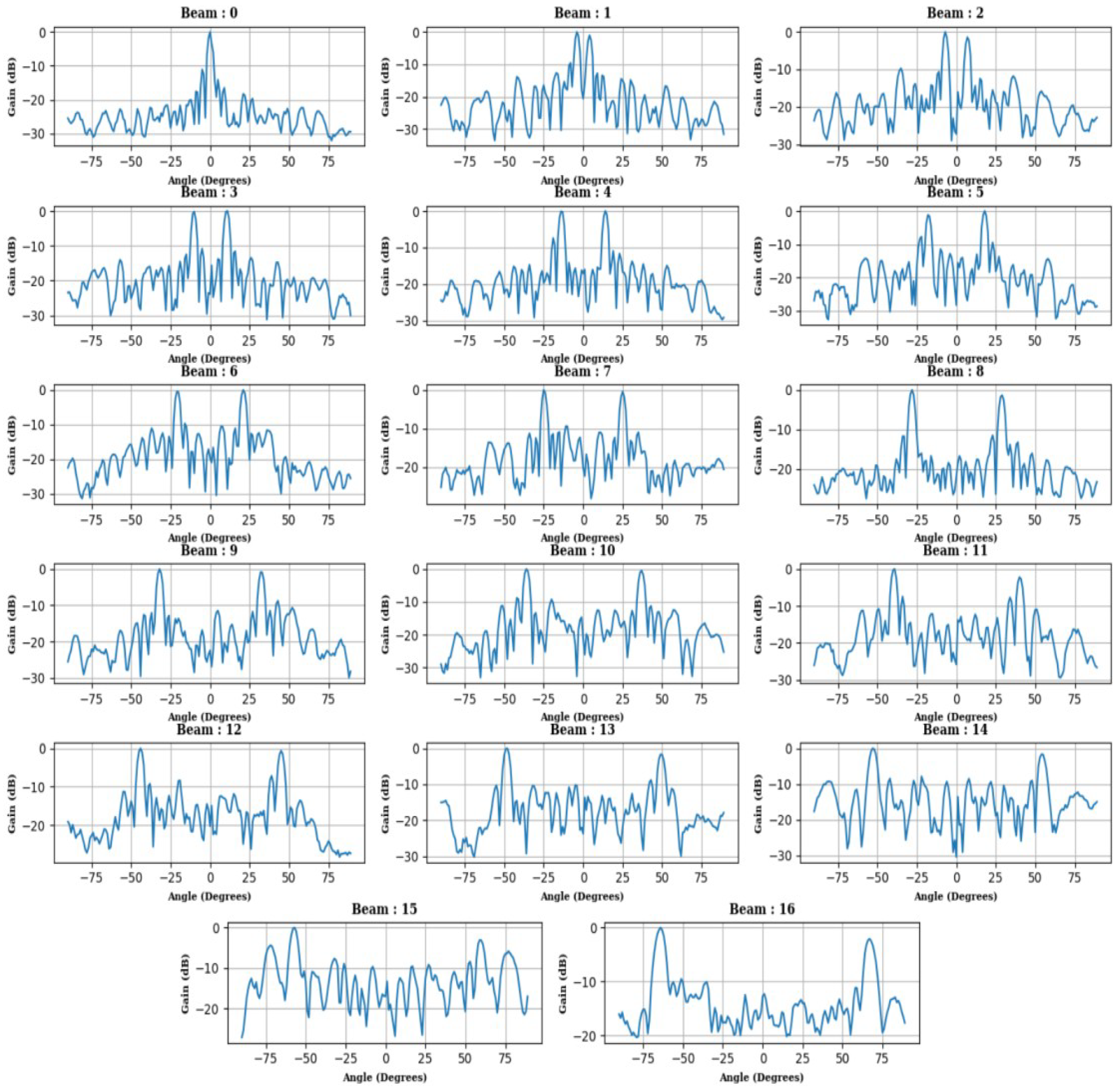

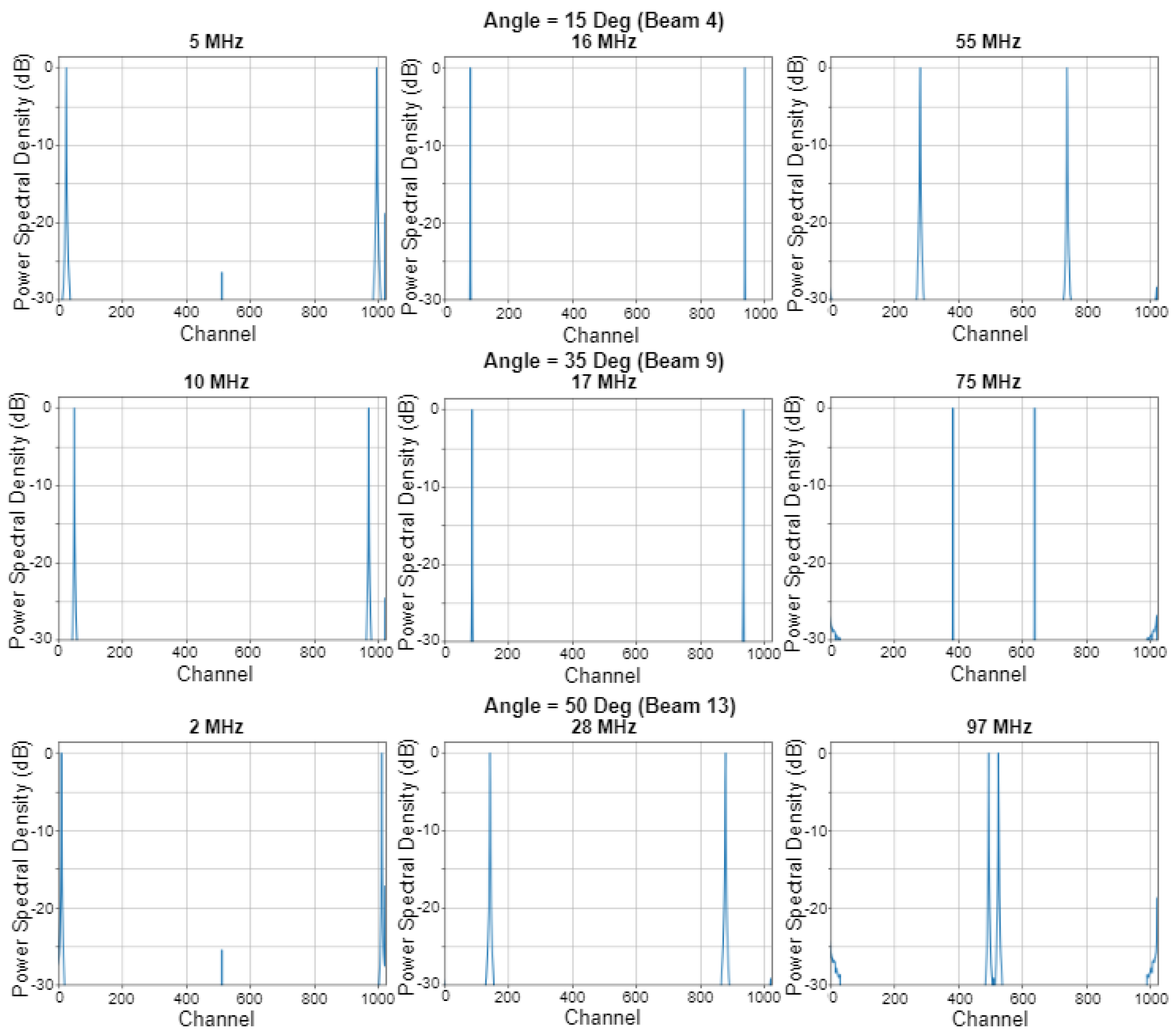

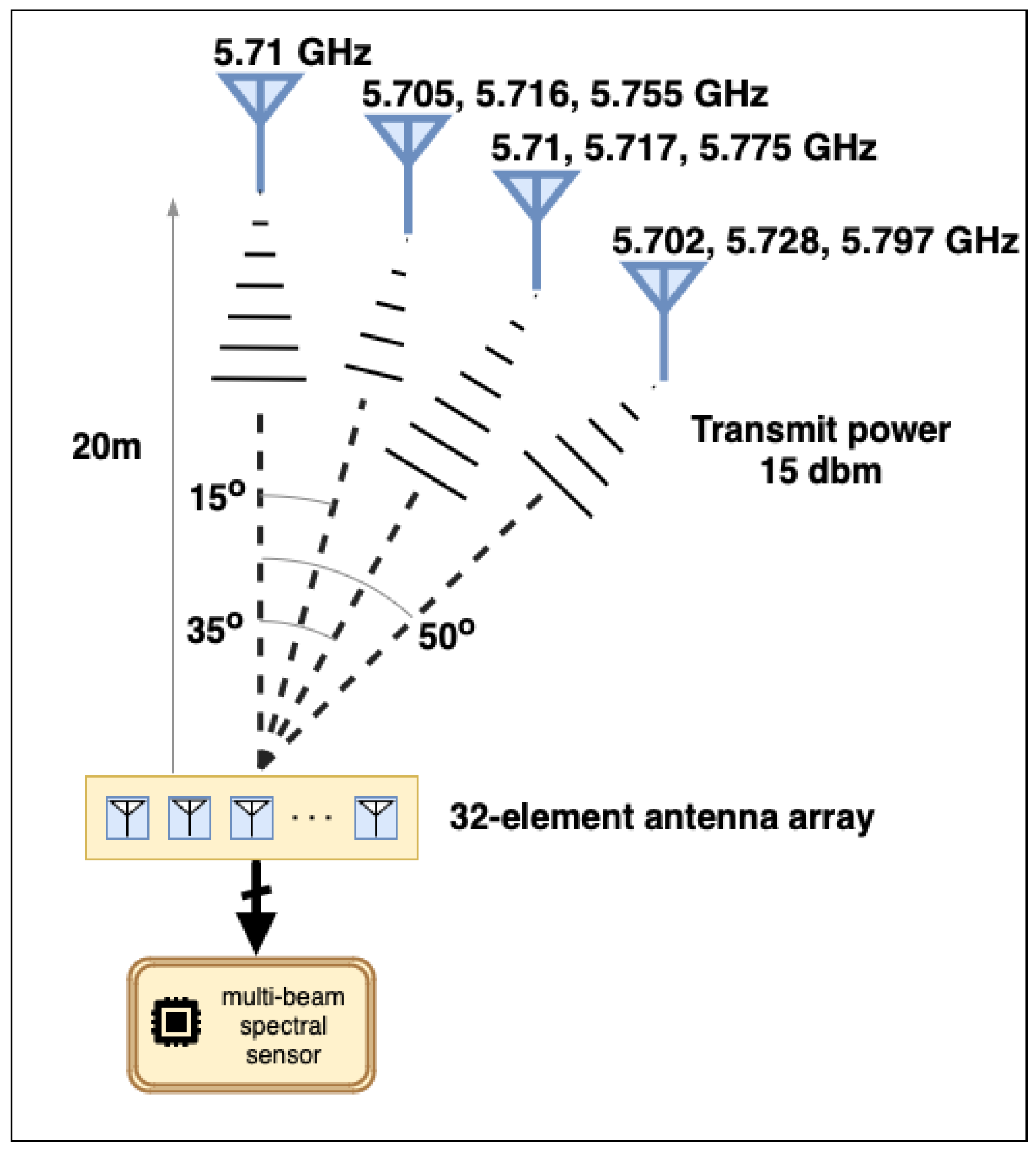

4.2. Beam Measurements

5. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Analog-to-digital converter |
| ADFT | Approximate discrete Fourier transform |
| AI | Artificial intelligence |
| ALMA | Atacama Large Millimeter Array |
| BRAM | Block random access memory |
| CASPER | Collaboration for Astronomy Signal Processing and Electronics Research |
| CSI | Channel state information |
| CW | Continuous wave |
| DFT | Discrete Fourier transform |
| DL | Deep learning |
| DSA | Dynamic spectrum access |
| DSP | Digital signal processing |
| FCC | Federal Communications Commission |
| FFT | Fast Fourier transform |
| FPGA | Field programmable gate array |
| GNSS | Global navigation satellite system |
| IF | Intermediate frequency |
| IOT | Internet of things |
| ISI | Inter-symbol interference |
| ITU | International Telecommunication Union |
| LNA | Low noise amplifier |
| LO | Local oscillator |
| ML | Machine learning |
| ngVLA | Next Generation Very Large Array |
| NSF | National Science Foundation |
| OET | Office of Engineering and Technology |
| PCB | Printed circuit board |
| PSD | Power spectral density |
| PU | Primary user |
| RF | Radio frequency |
| RFI | Radio frequency interference |
| RL | Reinforcement learning |
| ROACH | Reconfigurable Open Architecture Computing Hardware |
| SDR | Software defined radio |
| SFP | Small form-factor pluggable |
| SNR | Signal-to-noise ratio |
| SU | Secondary user |
| ULA | Uniform linear array |
| WSU | Wideband Sensitivity Upgrade |

Appendix A

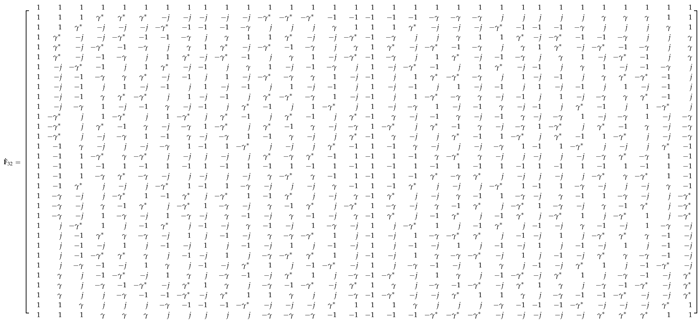

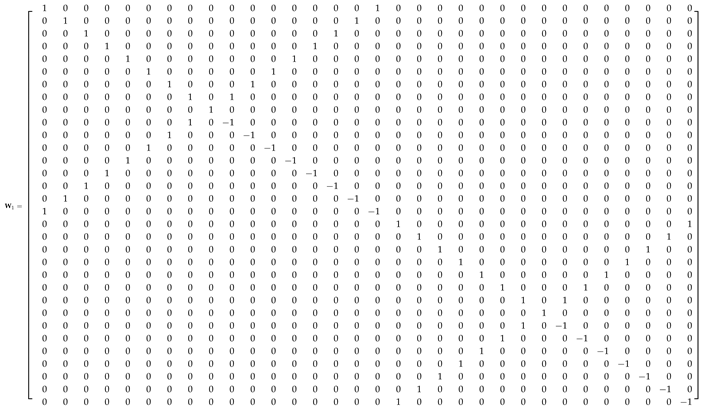

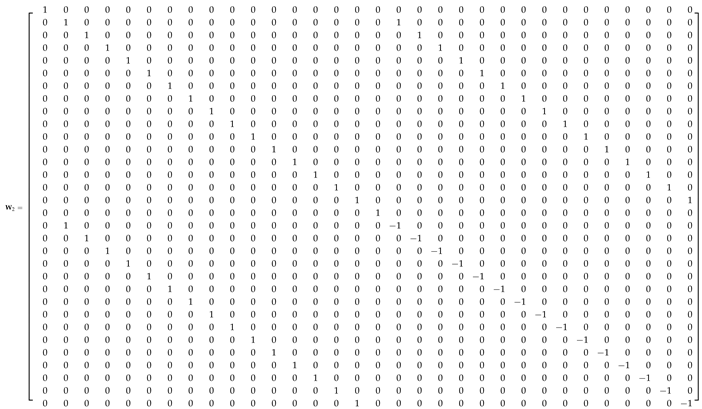

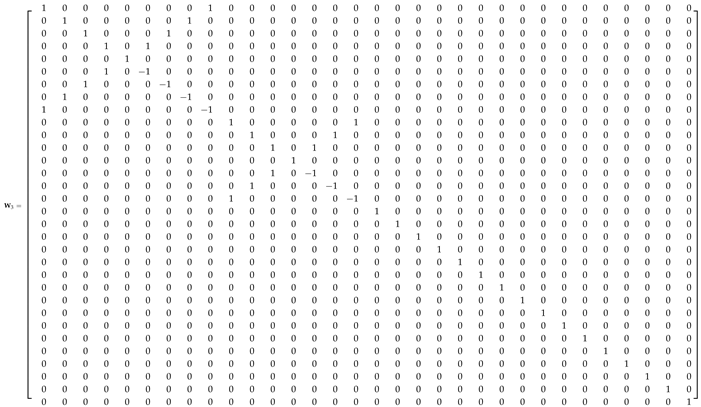

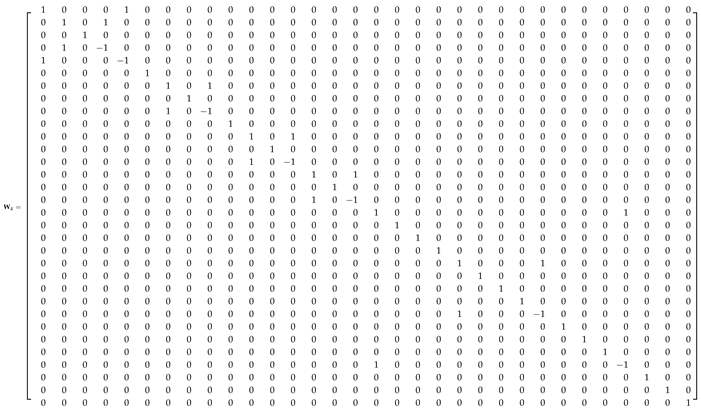

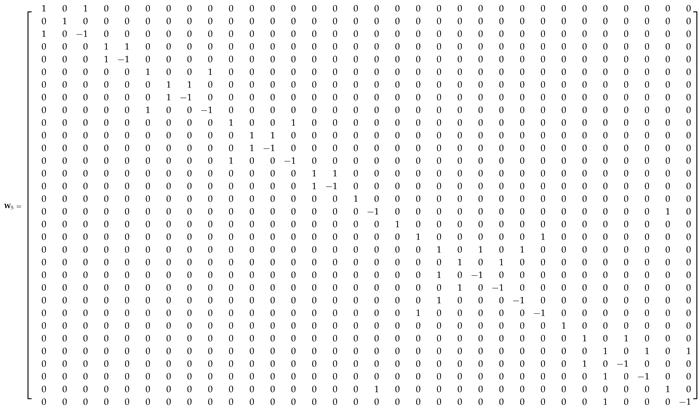

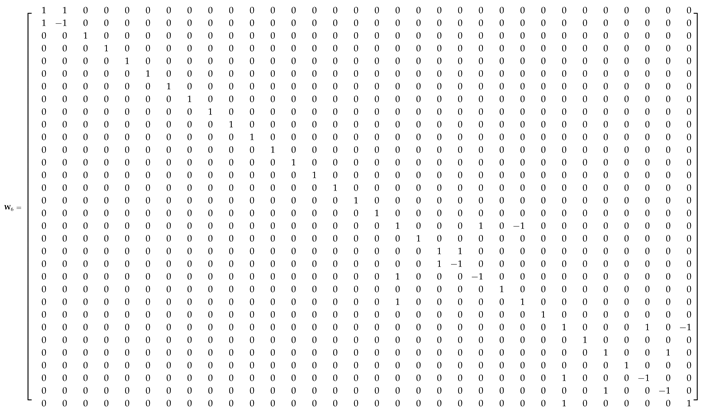

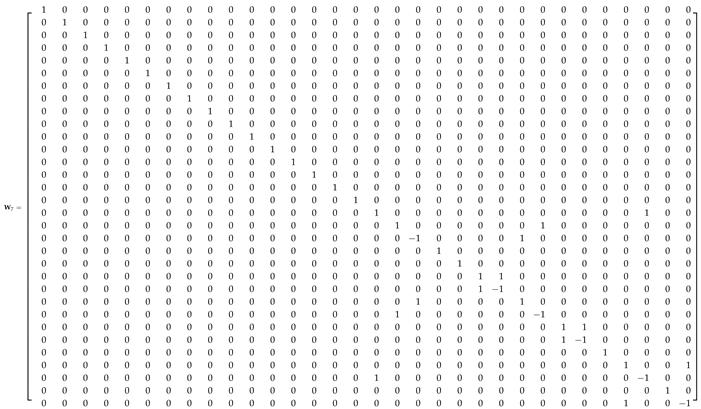

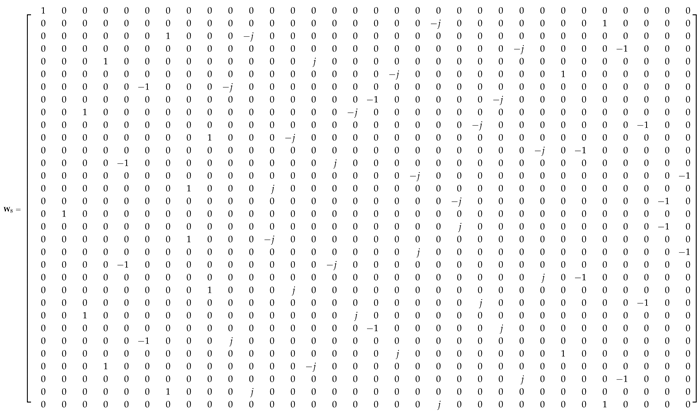

Appendix A.1. 32-Point ADFT Fast Algorithm

References

- Tolkien, J. The Hobbit & The Lord of the Rings; Houghton Mifflin Harcourt, 1954.

- Kang, S.; Mezzavilla, M.; Rangan, S.; Madanayake, A.; Venkatakrishnan, S.B.; Hellbourg, G.; Ghosh, M.; Rahmani, H.; Dhananjay, A. Cellular Wireless Networks in the Upper Mid-Band. IEEE Open Journal of the Communications Society 2024, pp. 1–1. [CrossRef]

- The White House. National Spectrum Strategy, 2023.

- Restuccia, F.; Melodia, T. Deep Learning at the Physical Layer: System Challenges and Applications to 5G and Beyond. IEEE Communications Magazine 2020, 58, 58–64. [CrossRef]

- Triaridis, K.; Doumanidis, C.; Chatzidiamantis, N.D.; Karagiannidis, G.K. MM-Net: A Multi-Modal approach towards Automatic Modulation Classification. IEEE Communications Letters 2023, pp. 1–1. [CrossRef]

- Baldesi, L.; Restuccia, F.; Melodia, T. ChARM: NextG Spectrum Sharing Through Data-Driven Real-Time O-RAN Dynamic Control. In Proceedings of the IEEE International Conference on Computer Communications (INFOCOM). IEEE, 2022, pp. 240–249.

- Zhang, L.; Xiao, M.; Wu, G.; Alam, M.; Liang, Y.C.; Li, S. A Survey of Advanced Techniques for Spectrum Sharing in 5G Networks. IEEE Wireless Communications 2017, 24, 44–51. [CrossRef]

- Hu, F.; Chen, B.; Zhu, K. Full Spectrum Sharing in Cognitive Radio Networks Toward 5G: A Survey. IEEE Access 2018, 6, 15754–15776. [CrossRef]

- Shokri-Ghadikolaei, H.; Boccardi, F.; Fischione, C.; Fodor, G.; Zorzi, M. Spectrum Sharing in mmWave Cellular Networks via Cell Association, Coordination, and Beamforming. IEEE Journal on Selected Areas in Communications 2016, 34, 2902–2917. [CrossRef]

- Lv, L.; Chen, J.; Ni, Q.; Ding, Z.; Jiang, H. Cognitive Non-Orthogonal Multiple Access with Cooperative Relaying: A New Wireless Frontier for 5G Spectrum Sharing. IEEE Communications Magazine 2018, 56, 188–195. [CrossRef]

- Agarwal, S.; De, S. eDSA: Energy-efficient Dynamic Spectrum Access Protocols for Cognitive Radio Networks. IEEE Transactions on Mobile Computing 2016, 15, 3057–3071. [CrossRef]

- Chiwewe, T.M.; Hancke, G.P. Fast Convergence Cooperative Dynamic Spectrum Access for Cognitive Radio Networks. IEEE Transactions on Industrial Informatics 2017. [CrossRef]

- Chang, H.H.; Song, H.; Yi, Y.; Zhang, J.; He, H.; Liu, L. Distributive Dynamic Spectrum Access Through Deep Reinforcement Learning: A Reservoir Computing-based Approach. IEEE Internet of Things Journal 2018, 6, 1938–1948. [CrossRef]

- Nguyen, H.Q.; Nguyen, B.T.; Dong, T.Q.; Ngo, D.T.; Nguyen, T.A. Deep Q-Learning with Multiband Sensing for Dynamic Spectrum Access. In Proceedings of the IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Oct 2018, pp. 1–5. [CrossRef]

- Naparstek, O.; Cohen, K. Deep Multi-user Reinforcement Learning for Distributed Dynamic Spectrum Access. IEEE Transactions on Wireless Communications 2019, 18, 310–323. [CrossRef]

- Wang, S.; Liu, H.; Gomes, P.H.; Krishnamachari, B. Deep Reinforcement Learning for Dynamic Multichannel Access in Wireless Networks. IEEE Transactions on Cognitive Communications and Networking 2018, 4, 257–265. [CrossRef]

- Yu, Y.; Wang, T.; Liew, S.C. Deep-Reinforcement Learning Multiple Access for Heterogeneous Wireless Networks. IEEE Journal on Selected Areas in Communications 2019, 37, 1277–1290. [CrossRef]

- Ligado Networks. Ligado Networks Sues U.S. Government for Unlawful and Uncompensated Taking of Ligado’s Exclusive FCC-Licensed Spectrum. Reston, VA, 2023.

- Barden, S.; Dewdney, P.; Friesen, R.; Murowinski, R.; Savanandam, S. ATD Strategic R&D Review Report - Signal Processing Portfolio. Herzberg Astronomy and Astrophysics Research Centre, National Research Council Canada, BC, Canada, March 2022.

- FCC Spectrum Policy Task Force. Report of the spectrum efficiency working group, Nov. 2002. Accessed on May 28, 2024.

- Mitola, J.; Maguire, G. Cognitive radio: making software radios more personal. IEEE Personal Communications 1999, 6, 13–18. [CrossRef]

- Haykin, S. Cognitive radio: brain-empowered wireless communications. IEEE Journal on Selected Areas in Communications 2005, 23, 201–220. [CrossRef]

- Liang, Y.C.; Chen, K.C.; Li, G.Y.; Mahonen, P. Cognitive radio networking and communications: an overview. IEEE Transactions on Vehicular Technology 2011, 60, 3386–3407. [CrossRef]

- Wang, B.; Liu, K.R. Advances in cognitive radio networks: A survey. IEEE Journal of Selected Topics in Signal Processing 2011, 5, 5–23. [CrossRef]

- Zhao, Q.; Sadler, B.M. A Survey of Dynamic Spectrum Access. IEEE Signal Processing Magazine 2007, 24, 79–89. [CrossRef]

- Ji, Z.; Liu, K.R. Dynamic Spectrum Sharing: A Game Theoretical Overview. IEEE Communications Magazine 2007, 45, 88–94. [CrossRef]

- Bhattarai, S.; Park, J.M.J.; Gao, B.; Bian, K.; Lehr, W. An Overview of Dynamic Spectrum Sharing: Ongoing Initiatives, Challenges, and a Roadmap for Future Research. IEEE Transactions on Cognitive Communications and Networking 2016, 2, 110–128. [CrossRef]

- Zeng, Y.; Liang, Y.C.; Zhang, R. Blindly Combined Energy Detection for Spectrum Sensing in Cognitive Radio. IEEE Signal Processing Letters 2008, 15, 649–652. [CrossRef]

- Ling, X.; Wu, B.; Wen, H.; Ho, P.H.; Bao, Z.; Pan, L. Adaptive Threshold Control for Energy Detection Based Spectrum Sensing in Cognitive Radios. IEEE Wireless Communications Letters 2012, 1, 448–451. [CrossRef]

- Ranjan, A.; Anurag; Singh, B. Design and analysis of spectrum sensing in cognitive radio based on energy detection. In Proceedings of the International Conference on Signal and Information Processing, 2016, pp. 1–5.

- Arjoune, Y.; Mrabet, Z.E.; Ghazi, H.E.; Tamtaoui, A. Spectrum sensing: Enhanced energy detection technique based on noise measurement. In Proceedings of the IEEE Annual Computing and Communication Workshop and Conference, 2018, pp. 828–834.

- Jiang, C.; Li, Y.; Bai, W.; Yang, Y.; Hu, J. Statistical matched filter based robust spectrum sensing in noise uncertainty environment. In Proceedings of the International Conference on Communication Technology, 2012, pp. 1209–1213.

- Zhang, X.; Chai, R.; Gao, F. Matched filter based spectrum sensing and power level detection for cognitive radio network. In Proceedings of the IEEE Global Conference on Signal and Information Processing, 2014, pp. 1267–1270.

- Lv, Q.; Gao, F. Matched filter based spectrum sensing and power level recognition with multiple antennas. In Proceedings of the IEEE China Summit and International Conference on Signal and Information Processing, 2015, pp. 305–309.

- Ilyas, I.; Paul, S.; Rahman, A.; Kundu, R.K. Comparative evaluation of cyclostationary detection based cognitive spectrum sensing. In Proceedings of the IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference, 2016, pp. 1–7.

- Cohen, D.; Eldar, Y.C. Compressed cyclostationary detection for Cognitive Radio. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 3509–3513.

- Zeng, Y.; Liang, Y.C. Spectrum-Sensing Algorithms for Cognitive Radio Based on Statistical Covariances. IEEE Transactions on Vehicular Technology 2009, 58, 1804–1815. [CrossRef]

- Zeng, Y.; Liang, Y.C. Eigenvalue-based spectrum sensing algorithms for cognitive radio. IEEE Transactions on Communications 2009, 57, 1784–1793. [CrossRef]

- Zayen, B.; Hayar, A.; Kansanen, K. Blind Spectrum Sensing for Cognitive Radio Based on Signal Space Dimension Estimation. In Proceedings of the 2009 IEEE International Conference on Communications, 2009, pp. 1–5.

- Farhang-Boroujeny, B. Filter Bank Spectrum Sensing for Cognitive Radios. IEEE Transactions on Signal Processing 2008, 56, 1801–1811. [CrossRef]

- Quan, Z.; Cui, S.; Sayed, A.H.; Poor, H.V. Optimal Multiband Joint Detection for Spectrum Sensing in Cognitive Radio Networks. IEEE Transactions on Signal Processing 2009, 57, 1128–1140. [CrossRef]

- Raghu, I.; Chowdary, S.S.; Elias, E. Efficient spectrum sensing for Cognitive Radio using Cosine Modulated Filter Banks. In Proceedings of the IEEE Region 10 Conference, 2016, pp. 2086–2089.

- Kumar, A.; Saha, S.; Bhattacharya, R. Improved wavelet transform based edge detection for wide band spectrum sensing in Cognitive Radio. In Proceedings of the USNC-URSI Radio Science Meeting, 2016, pp. 21–22.

- Polo, Y.L.; Wang, Y.; Pandharipande, A.; Leus, G. Compressive wide-band spectrum sensing. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, 2009, pp. 2337–2340.

- Arjoune, Y.; Kaabouch, N. Wideband Spectrum Sensing: A Bayesian Compressive Sensing Approach. Sensors 2018, 18, 1–15. [CrossRef] [PubMed]

- Li, Z.; Chang, B.; Wang, S.; Liu, A.; Zeng, F.; Luo, G. Dynamic Compressive Wide-Band Spectrum Sensing Based on Channel Energy Reconstruction in Cognitive Internet of Things. IEEE Transactions on Industrial Informatics 2018, 14, 2598–2607. [CrossRef]

- Yang, L.; Fang, J.; Duan, H.; Li, H. Fast Compressed Power Spectrum Estimation: Toward a Practical Solution for Wideband Spectrum Sensing. IEEE Transactions on Wireless Communications 2020, 19, 520–532. [CrossRef]

- Ding, G.; Wu, Q.; Yao, Y.D.; Wang, J.; Chen, Y. Kernel-Based Learning for Statistical Signal Processing in Cognitive Radio Networks: Theoretical Foundations, Example Applications, and Future Directions. IEEE Signal Processing Magazine 2013, 30, 126–136. [CrossRef]

- Thilina, K.M.; Choi, K.W.; Saquib, N.; Hossain, E. Machine Learning Techniques for Cooperative Spectrum Sensing in Cognitive Radio Networks. IEEE Journal on Selected Areas in Communications 2013, 31, 2209–2221. [CrossRef]

- Mikaeil, A.M.; Guo, B.; Wang, Z. Machine Learning to Data Fusion Approach for Cooperative Spectrum Sensing. In Proceedings of the International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery, 2014, pp. 429–434.

- Ghazizadeh, E.; Nikpour, B.; Moghadam, D.A.; Nezamabadi-pour, H. A PSO-based weighting method to enhance machine learning techniques for cooperative spectrum sensing in CR networks. In Proceedings of the Conference on Swarm Intelligence and Evolutionary Computation, 2016, pp. 113–118.

- Khalfi, B.; Zaid, A.; Hamdaoui, B. When machine learning meets compressive sampling for wideband spectrum sensing. In Proceedings of the International Wireless Communications and Mobile Computing Conference, 2017, pp. 1120–1125.

- Lee, W.; Kim, M.; Cho, D.H. Deep Cooperative Sensing: Cooperative Spectrum Sensing Based on Convolutional Neural Networks. IEEE Transactions on Vehicular Technology 2019, 68, 3005–3009. [CrossRef]

- Liu, C.; Wang, J.; Liu, X.; Liang, Y.C. Deep CM-CNN for Spectrum Sensing in Cognitive Radio. IEEE Journal on Selected Areas in Communications 2019, 37, 2306–2321. [CrossRef]

- Soni, B.; Patel, D.K.; López-Benítez, M. Long Short-Term Memory Based Spectrum Sensing Scheme for Cognitive Radio Using Primary Activity Statistics. IEEE Access 2020, 8, 97437–97451. [CrossRef]

- Peng, Q.; Gilman, A.; Vasconcelos, N.; Cosman, P.C.; Milstein, L.B. Robust Deep Sensing Through Transfer Learning in Cognitive Radio. IEEE Wireless Communications Letters 2020, 9, 38–41. [CrossRef]

- Zhang, W.; Wang, Y.; Chen, X.; Cai, Z.; Tian, Z. Spectrum Transformer: An Attention-based Wideband Spectrum Detector. IEEE Transactions on Wireless Communications 2024, pp. 1–1.

- Sarikhani, R.; Keynia, F. Cooperative Spectrum Sensing Meets Machine Learning: Deep Reinforcement Learning Approach. IEEE Communications Letters 2020, 24, 1459–1462. [CrossRef]

- Cai, P.; Zhang, Y.; Pan, C. Coordination Graph-Based Deep Reinforcement Learning for Cooperative Spectrum Sensing Under Correlated Fading. IEEE Wireless Communications Letters 2020, 9, 1778–1781. [CrossRef]

- Gao, A.; Du, C.; Ng, S.X.; Liang, W. A Cooperative Spectrum Sensing With Multi-Agent Reinforcement Learning Approach in Cognitive Radio Networks. IEEE Communications Letters 2021, 25, 2604–2608. [CrossRef]

- Ngo, Q.T.; Jayawickrama, B.A.; He, Y.; Dutkiewicz, E. Multi-Agent DRL-Based RIS-Assisted Spectrum Sensing in Cognitive Satellite–Terrestrial Networks. IEEE Wireless Communications Letters 2023, 12, 2213–2217. [CrossRef]

- Ali, A.; Hamouda, W. Advances on Spectrum Sensing for Cognitive Radio Networks: Theory and Applications. IEEE Communications Surveys & Tutorials 2017, 19, 1277–1304.

- Arjoune, Y.; Kaabouch, N. A Comprehensive Survey on Spectrum Sensing in Cognitive Radio Networks: Recent Advances, New Challenges, and Future Research Directions. Sensors 2019, 19, 1–32. [CrossRef]

- Zhang, Y.; Luo, Z. A Review of Research on Spectrum Sensing Based on Deep Learning. Electronics 2023, 12, 1–42. [CrossRef]

- Wijenayake, C.; Madanayake, A.; Kota, J.; Bruton, L. Space-Time Spectral White Spaces in Cognitive Radio: Theory, Algorithms, and Circuits. IEEE Journal on Emerging and Selected Topics in Circuits and Systems 2013, 3, 640–653. [CrossRef]

- Madanayake, A.; Wijenayake, C.; Tran, N.; Cooklev, T.; Hum, S.; Bruton, L.T. Directional spectrum sensing using tunable multi-D space-time discrete filters. In Proceedings of the IEEE International Symposium on a World of Wireless, Mobile and Multimedia Networks, 2012, pp. 1–6.

- Wijenayake, C.; Madanayake, A.; Bruton, L.T.; Devabhaktuni, V. DOA-estimation and source-localization in CR-networks using steerable 2-D IIR beam filters. In Proceedings of the IEEE International Symposium on Circuits and Systems, 2013, pp. 65–68.

- Gunaratne, T.K.; Bruton, L.T. Adaptive complex-coefficient 2D FIR trapezoidal filters for broadband beamforming in cognitive radio systems. Circuits, Systems, and Signal Processing 2011, 30, 587–608. [CrossRef]

- Wilcox, D.; Tsakalaki, E.; Kortun, A.; Ratnarajah, T.; Papadias, C.B.; Sellathurai, M. On Spatial Domain Cognitive Radio Using Single-Radio Parasitic Antenna Arrays. IEEE Journal on Selected Areas in Communications 2013, 31, 571–580. [CrossRef]

- Qian, R.; Sellathurai, M.; Ratnarajah, T. Directional spectrum sensing for cognitive radio using ESPAR arrays with a single RF chain. In Proceedings of the 2014 European Conference on Networks and Communications (EuCNC), 2014, pp. 1–5.

- Yazdani, H.; Vosoughi, A. On cognitive radio systems with directional antennas and imperfect spectrum sensing. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 3589–3593.

- Pulipati, S.; Ariyarathna, V.; Edussooriya, C.U.S.; Wijenayake, C.; Wang, X.; Madanayake, A. Real-Time FPGA-Based Multi-Beam Directional Sensing of 2.4 GHz ISM RF Sources. In Proceedings of the 2019 Moratuwa Engineering Research Conference (MERCon), 2019, pp. 129–134.

- Richard Thompson, A.; Gergely, T.E.; Vanden Bout, P.A. Interference and Radioastronomy. Physics Today 1991, 44, 41–49. [CrossRef]

- Science Goals - Square Kilometre Array Observatory. https://www.skao.int/en/explore/science-goals. Accessed: 2024-05-27.

- Carpenter, J.; Brogan, C.; Iono, D.; Mroczkowski, T. The ALMA2030 Wideband Sensitivity Upgrade. ALMA Memo 621.

- Science with a Next-Generation Very Large Array. https://ngvla.nrao.edu/page/science. Accessed: 2024-05-27.

- Sihlangu, I.; Oozeer, N.; Bassett, B.A. Nature and Evolution of UHF and L-band Radio Frequency Interference at the MeerKAT Radio Telescope. In Proceedings of the RFI Workshop 2022, European Centre for Medium-Range Weather Forecasts (ECMWF) [Online], February 2022.

- Galt, J. Contamination from satellites. Nature 1990, 345, 483–483. [CrossRef]

- De Pree, C.G.; Anderson, C.R.; Zheleva, M. Astronomy is under threat by radio interference from satellites - here’s what can be done about it. https://www.weforum.org/agenda/2023/03/astronomy-radio-interference-satellites-technology/, 2023. Accessed: 2024-05-27.

- Preferred frequency bands for radio astronomical measurements below 1 THz. Recommendation ITU-R RA.314-11, International Telecommunication Union - Radiocommunication Sector, https://www.itu.int/dms_pubrec/itu-r/rec/ra/R-REC-RA.314-11-202312-I!!PDF-E.pdf, 2023.

- Protection criteria used for radio astronomical measurements. Recommendation ITU-R RA.769-2, International Telecommunication Union - Radiocommunication Sector, https://www.itu.int/dms_pubrec/itu-r/rec/ra/R-REC-RA.769-2-200305-I!!PDF-E.pdf, 2003.

- Fridman, P.A.; Baan, W.A. RFI mitigation methods in radio astronomy. Astronomy & Astrophysics 2001, 378, 327–344. [CrossRef]

- Zhang, L.; Jin, R.; Zhang, Q.; Wang, R.; Zhang, H.; Wen, Z. Fusion Method of RFI Detection, Localization, and Suppression by Combining One-Dimensional and Two-Dimensional Synthetic Aperture Radiometers. Remote Sensing 2024, 16, 667. [CrossRef]

- Proakis, J.G.; Manolakis, D.G. Digital Signal Processing; Prentice Hall International Editions, Pearson Prentice Hall, 2007. [CrossRef]

- Oppenheim, A.V.; Schafer, R.W.; Buck, J. Discrete-time Signal Processing; Prentice Hall International Editions, Prentice Hall, 1999.

- Baghaie, R.; Dimitrov, V. DHT algorithm based on encoding algebraic integers. Electronics Letters 1999, 35, 1303–1305. [CrossRef]

- Britanak, V.; Yip, P.; Rao, K.R. Discrete Cosine and Sine Transforms; Academic Press, 2007.

- Feig, E.; Winograd, S. On the multiplicative complexity of discrete cosine transforms. IEEE Transactions on Information Theory 1992, 38, 1387–1391. [CrossRef]

- Malvar, H.S.; Hallapuro, A.; Karczewicz, M.; Kerofsky, L. Low-complexity transform and quantization in H.264/AVC. IEEE Transactions on Circuits and Systems for Video Technology 2003, 13, 598–603. [CrossRef]

- Winograd, S. Arithmetic Complexity of Computations; Vol. 33, CBMS-NSF Regional Conference Series in Applied Mathematics, SIAM, 1980.

- Winograd, S. On Computing the Discrete Fourier Transform. Mathematics of Computation 1978, 32, 175–199. [CrossRef]

- Heideman, M.T. Multiplicative Complexity, Convolution, and the DFT; Springer-Verlag, 1988.

- Oppenheim, A.V.; Verghese, G.C. Signals, Systems & Inference; Prentice-Hall Signal Processing Series, Pearson, 2016.

- Cintra, R.J. An integer approximation method for discrete sinusoidal transforms. Circuits, Systems, and Signal Processing 2011, 30, 1481–1501, [2007.02232]. [CrossRef]

- Suárez Villagrán, D.M. Discrete Fourier transform approximations with applications in detection and estimation. Master’s thesis, Universidade Federal de Pernambuco, Recife, Brazil, 2015. Advisors: Dr R J Cintra and Dr F M Bayer.

- Madanayake, A.; Ariyarathna, V.; Madishetty, S.; Pulipati, S.; Cintra, R.J.; Coelho, D.; Oliveira, R.; Bayer, F.M.; Belostotski, L.; Mandal, S.; et al. Towards a Low-SWaP 1024-Beam Digital Array: A 32-Beam Subsystem at 5.8 GHz. IEEE Transactions on Antennas and Propagation 2020, 68, 900–912, [2207.09054]. [CrossRef]

- Madanayake, A.; Cintra, R.J.; Akram, N.; Ariyarathna, V.; Mandal, S.; Coutinho, V.A.; Bayer, F.M.; Coelho, D.; Rappaport, T.S. Fast Radix-32 Approximate DFTs for 1024-Beam Digital RF Beamforming. IEEE Access 2020, 8, 96613–96627. [CrossRef]

- Blahut, R.E. Fast Algorithms for Signal Processing; Cambridge University Press, 2010. [CrossRef]

- Madanayake, A.; Ariyarathna, V.; Madishetty, S.; Pulipati, S.; Cintra, R.J.; Coelho, D.; Oliveira, R.; Bayer, F.M.; Belostotski, L.; Mandal, S.; et al. Towards a Low-SWaP 1024-Beam Digital Array: A 32-Beam Subsystem at 5.8 GHz. IEEE Transactions on Antennas and Propagation 2020, 68, 900–912. [CrossRef]

- The Collaboration for Astronomy Signal Processing and Electronics Research, 2024. [Accessed 23-05-2024].

- The CASPER Toolflow. GitHub, 2024. [Accessed 24-05-2024].

- Proakis, J.G.; Manolakis, D.G. Digital Signal Processing; Prentice Hall, 2022; chapter 10, pp. 664–670.

- Stutzman, W.L.; Thiele, G.A. Antenna Theory and Design; Wiley: Hoboken, NJ, USA, 2012.

| Method | Real multiplications | Real additions |
|---|---|---|
| Exact 32-point DFT | 1408 | 1666 |
| Radix-2 Cooley-Tukey FFT [98] | 88 | 408 |
| Approximate DFT [95] | 0 | 1282 |
| Fast algorithm for the approximate DFT [99] | 0 | 144 |
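The operation counts in the table above can be compared directly. The following is a minimal sketch, not part of the authors' toolflow: the dictionary keys and the helper name `addition_saving` are illustrative assumptions, and the percentages are derived here by arithmetic from the tabulated counts rather than reported in the source.

```python
# Operation counts copied from the complexity table above:
# method label -> (real multiplications, real additions).
# The labels are illustrative shorthand, not the authors' notation.
counts = {
    "Exact 32-point DFT": (1408, 1666),
    "Radix-2 Cooley-Tukey FFT": (88, 408),
    "Approximate DFT": (0, 1282),
    "Fast ADFT algorithm": (0, 144),
}


def addition_saving(method: str, baseline: str) -> float:
    """Fraction of real additions saved by `method` relative to `baseline`."""
    return 1.0 - counts[method][1] / counts[baseline][1]


# Fast ADFT versus the radix-2 FFT, and versus the direct (unfactored) ADFT.
fft_saving = addition_saving("Fast ADFT algorithm", "Radix-2 Cooley-Tukey FFT")
direct_saving = addition_saving("Fast ADFT algorithm", "Approximate DFT")

print(f"Additions saved vs. radix-2 FFT: {fft_saving:.1%}")    # 64.7%
print(f"Additions saved vs. direct ADFT: {direct_saving:.1%}")  # 88.8%
```

Under these counts, the fast algorithm needs no real multiplications and roughly a third of the real additions of the radix-2 FFT. This comparison covers arithmetic cost only and says nothing about the approximation error of the ADFT.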

| Operation | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Real multiplications | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Real additions | 30 | 30 | 14 | 14 | 30 | 14 | 12 | 0 |
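As a consistency check, the per-column counts in the table above can be summed and compared with the fast-algorithm total of 144 real additions given in the complexity table. This is a minimal sketch: the column headings did not survive in this copy, so treating each column as one stage (matrix factor) of the fast algorithm is an assumption, and the variable names are illustrative.

```python
# Per-column counts copied from the table above. Assumption: each column
# corresponds to one stage (matrix factor) of the 32-point ADFT fast
# algorithm; the original column labels are not available here.
multiplications_per_stage = [0, 0, 0, 0, 0, 0, 0, 0]
additions_per_stage = [30, 30, 14, 14, 30, 14, 12, 0]

total_multiplications = sum(multiplications_per_stage)
total_additions = sum(additions_per_stage)

print(total_multiplications)  # 0
print(total_additions)        # 144
```

The totals agree with the fast-algorithm row of the complexity table (0 real multiplications, 144 real additions).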

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).