Submitted: 04 July 2024

Posted: 08 July 2024


Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Attack Traffic Detection Technology Based on Machine Learning

2.2. Attack Traffic Detection Technology Based on Deep Learning

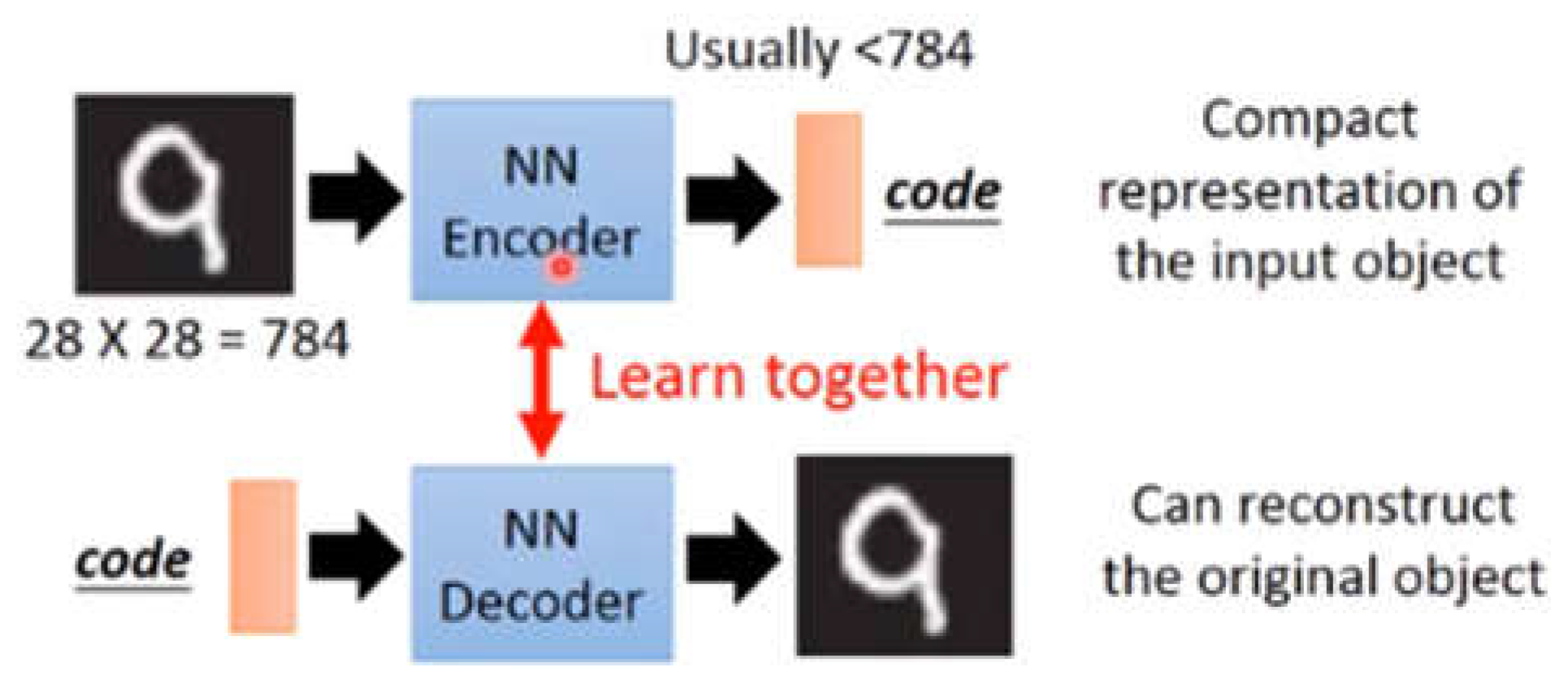

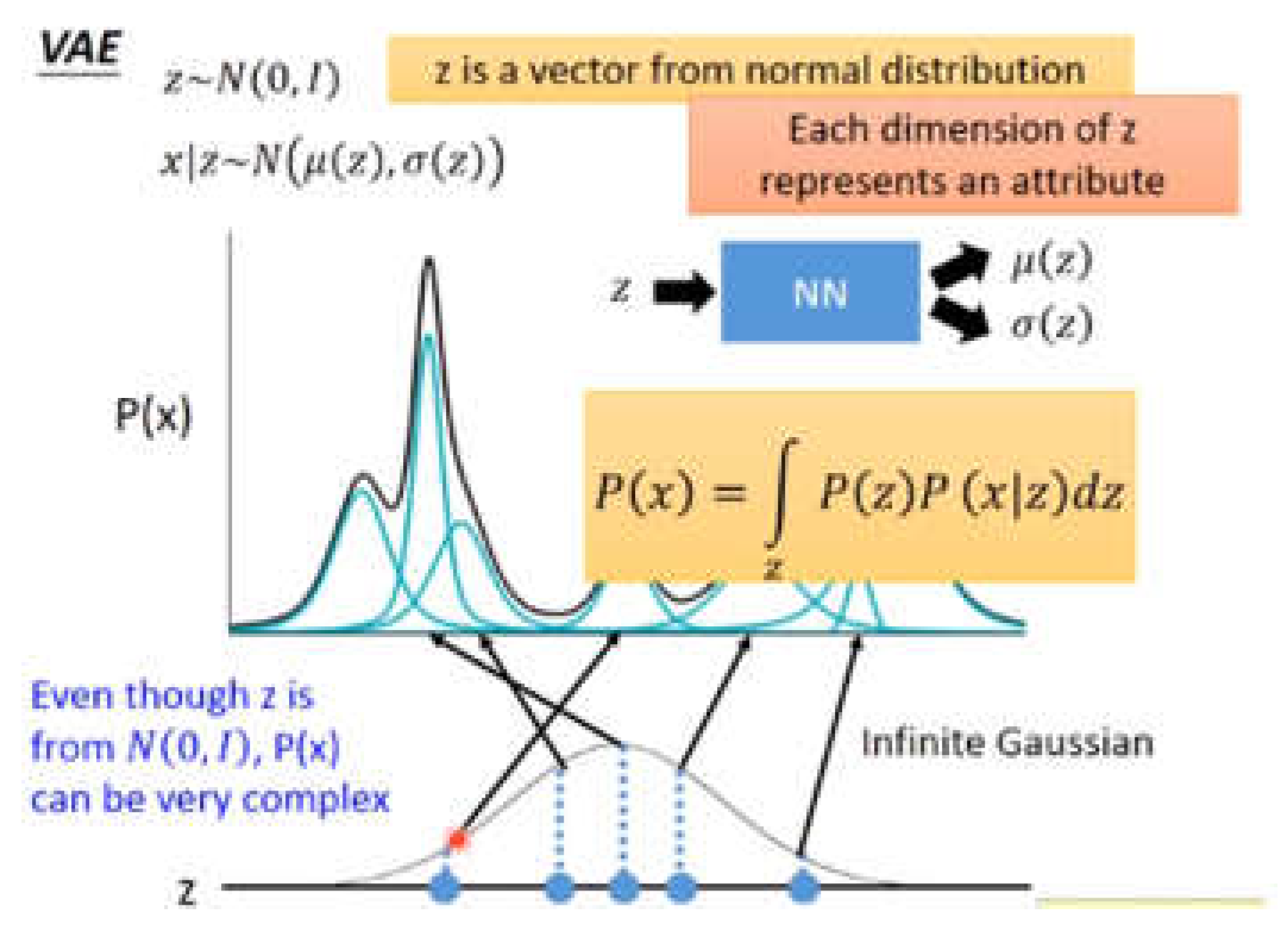

2.3. Variational Autoencoder (VAE)[7]

3. Methodology

3.1. Data Set

| Parameter | Value |
| --- | --- |
| Dataset Path | /root/yolov8-main/dataset/data2/images |
| Training Set | train |
| Validation Set | val |
| Test Set | test |
| Number of Classes | 10 |
| Class Names | |
| 0: pedestrian | Pedestrian |
| 1: person | Person |
| 2: car | Car |
| 3: van | Van |
| 4: bus | Bus |
| 5: truck | Truck |
| 6: motor | Motor |
| 7: bicycle | Bicycle |
| 8: awning-tricycle | Awning-tricycle |
| 9: tricycle | Tricycle |

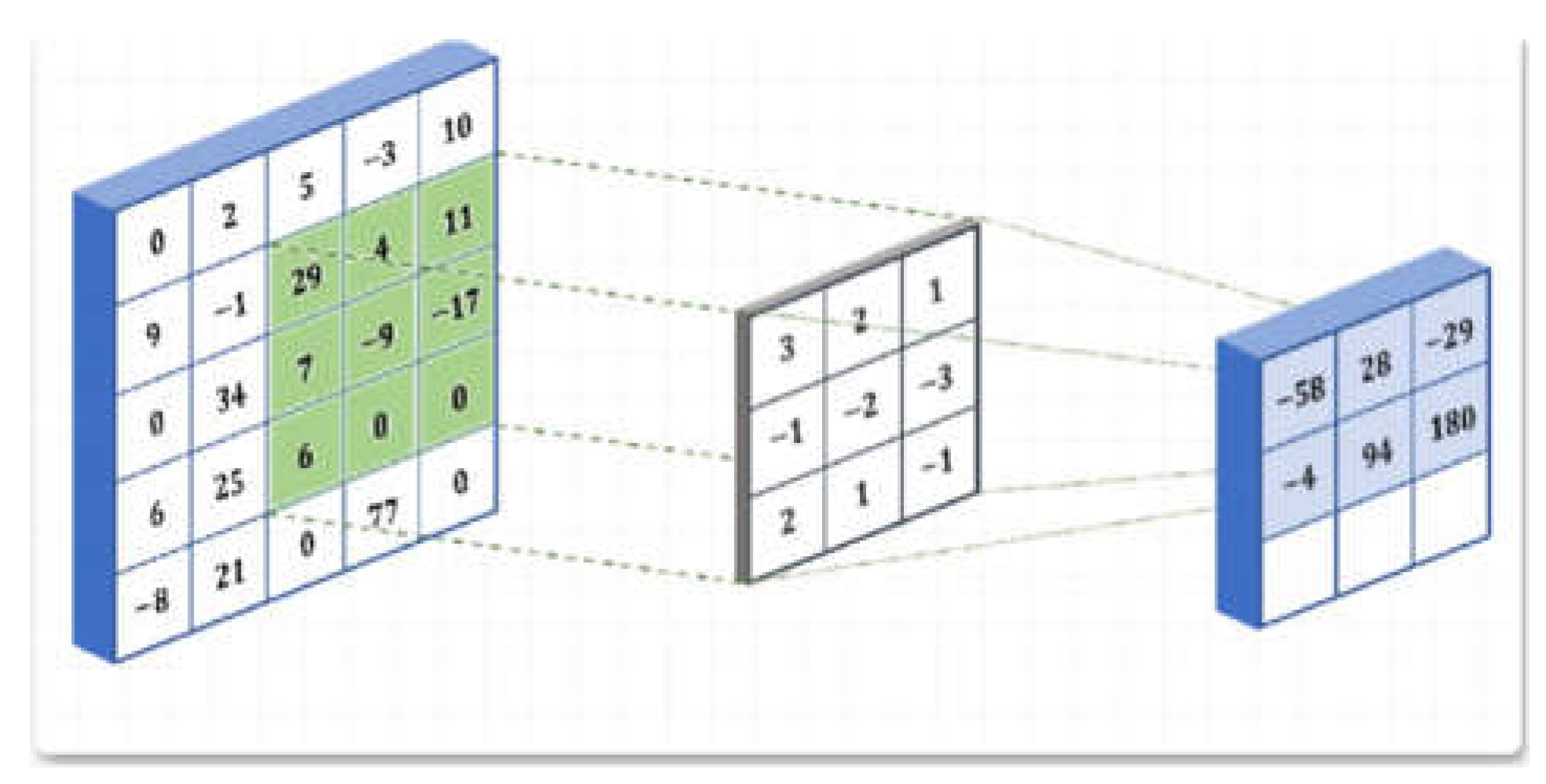

3.2. CNN Model

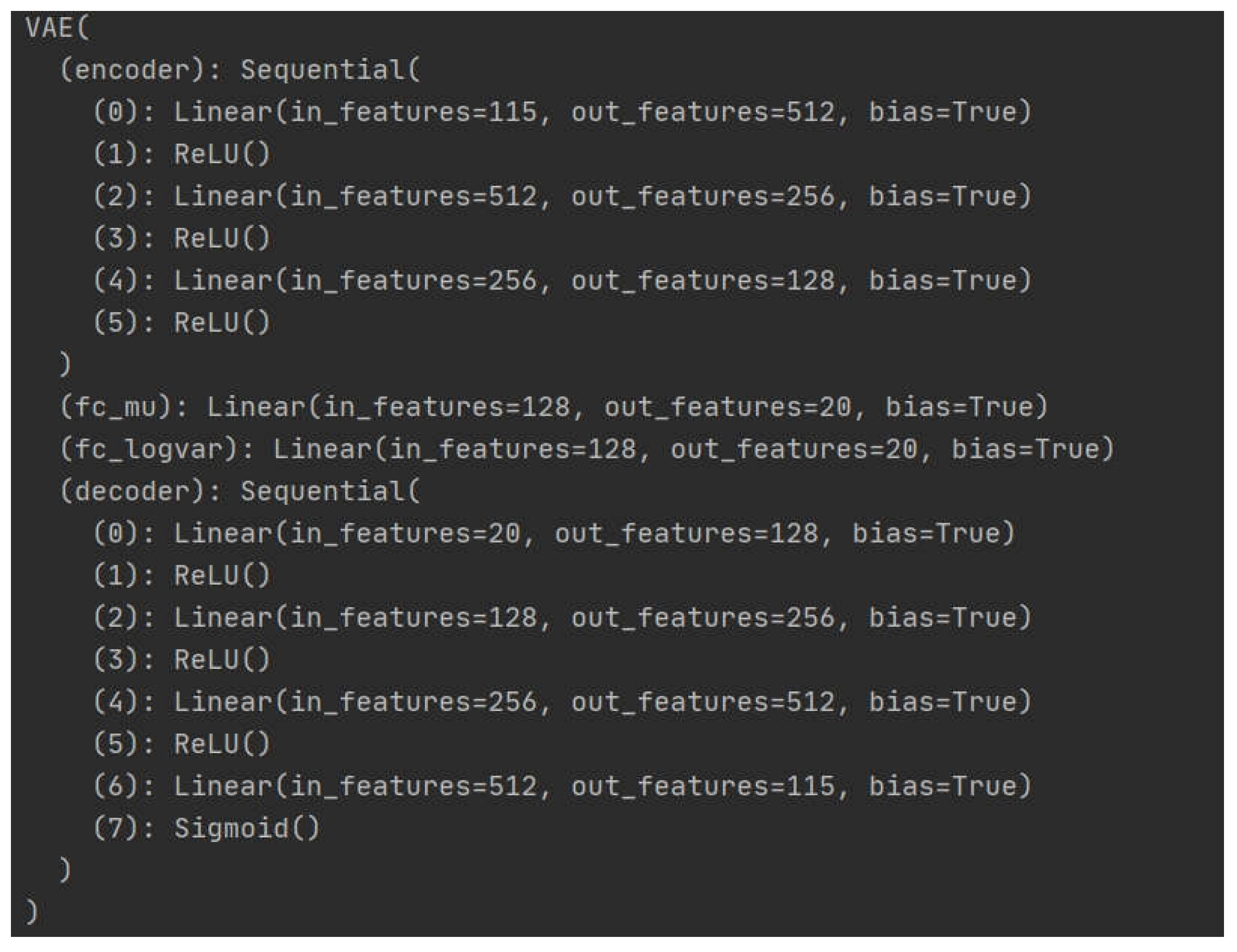

3.3. Variational Autoencoder (VAE) Model

3.4. Anomaly Detection Method

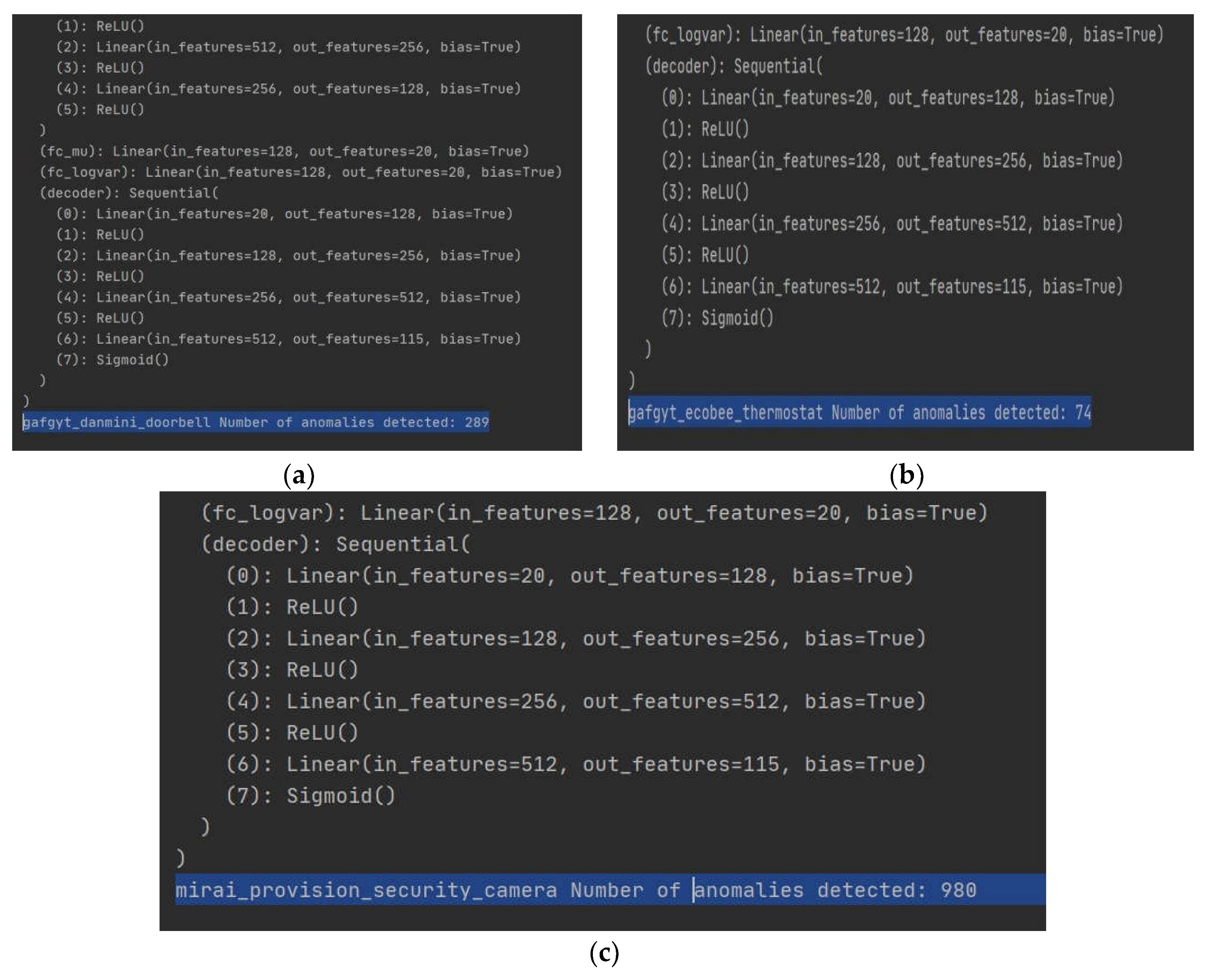

4. Experiment

4.1. Experimental Design

| Component | Details |
| --- | --- |
| CPU | Intel Core i7 |
| GPU | NVIDIA GTX 1080 Ti |
| RAM | 16 GB |
| Storage | 500 GB SSD |

| Component | Details |
| --- | --- |
| Operating System | Ubuntu 20.04 |
| Programming Language | Python 3.8 |
| Deep Learning Framework | PyTorch 1.10.0 |
| Other Libraries | pandas, numpy, matplotlib |

| Dataset Type | Details |
| --- | --- |
| Training Set | Stored in the train folder, includes traffic data from multiple IoT devices |
| Test Set | Stored in the test folder, used to evaluate model performance |

4.2. Model Architecture and Hyperparameters

| Component | Details |
| --- | --- |
| Convolutional Layers | Conv1: Input 1, Output 32, Kernel size 3, Padding 1 <br> Conv2: Input 32, Output 64, Kernel size 3, Padding 1 <br> Conv3: Input 64, Output 128, Kernel size 3, Padding 1 <br> Conv4: Input 128, Output 128, Kernel size 3, Padding 1 |
| Pooling Layers | Max Pooling, Kernel size 2, Stride 2 |
| Fully Connected Layers | FC1: Input 128 * 7, Output 128 <br> FC2: Input 128, Output 9 |
| Activation Function | ReLU |
| Optimizer | Adam, Learning rate 0.01 |
| Loss Function | CrossEntropyLoss |
| Batch Size | 32 |
| Training Epochs | 10 |
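
The CNN table fixes the input of FC1 at 128 * 7 but does not state the input length or where pooling is applied. As a rough consistency check, the sketch below assumes 1D convolutions and one max-pooling step after each of the four convolutional layers (both are assumptions, not stated in the table) and lists the input lengths that would produce a flattened size of 128 * 7. The helper names `conv_out_len`, `pool_out_len`, and `flattened_size` are illustrative only.

```python
def conv_out_len(length, kernel=3, padding=1, stride=1):
    """Output length of a 1D convolution (standard formula, dilation 1)."""
    return (length + 2 * padding - kernel) // stride + 1


def pool_out_len(length, kernel=2, stride=2):
    """Output length of 1D max pooling without padding."""
    return (length - kernel) // stride + 1


def flattened_size(input_len, channels=(32, 64, 128, 128)):
    """Flattened feature count after four conv + pool stages.

    Assumption: one pooling step follows every convolution;
    the table does not say where pooling is applied.
    """
    length = input_len
    for _ in channels:
        length = conv_out_len(length)  # kernel 3, padding 1 keeps the length
        length = pool_out_len(length)  # kernel 2, stride 2 halves it (floor)
    return channels[-1] * length


# Input lengths whose flattened size matches FC1's stated input of 128 * 7.
matching = [n for n in range(16, 257) if flattened_size(n) == 128 * 7]
print(matching[0], matching[-1])
```

Under these assumptions, any per-sample input length from 112 to 127 features is compatible with the stated FC1 input; a different pooling placement would change this range.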

| Component | Details |
| --- | --- |
| Encoder | Linear Layers: Input dimension input_dim, Output 512 <br> Output 256 <br> Output 128 |
| Activation Function | ReLU |
| Latent Variables | Mean Layer: Input 128, Output latent_dim <br> Log-Variance Layer: Input 128, Output latent_dim |
| Decoder | Linear Layers: Input latent_dim, Output 128 <br> Output 256 <br> Output 512 <br> Output input_dim, Activation Function Sigmoid |
| Optimizer | Adam, Learning rate 0.01 |
| Loss Function | Reconstruction Loss (MSE Loss) and KL Divergence |
| Batch Size | 128 |
| Training Epochs | 50 |
| Latent Dimension | 20 |
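
The VAE table names the loss as an MSE reconstruction term plus a KL divergence but gives no formula or weighting. The following is a minimal sketch of one common form of that objective, written in plain Python for readability. It assumes a diagonal Gaussian posterior, a standard normal prior, and an equal weighting `beta=1.0`; none of these are confirmed by the table, and the function names are illustrative. In practice the same arithmetic would be applied to PyTorch tensors.

```python
import math


def mse_loss(x, x_hat):
    """Mean squared reconstruction error between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def kl_divergence(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_hat, mu, logvar, beta=1.0):
    """Reconstruction loss plus KL term; beta=1.0 is an assumed weighting."""
    return mse_loss(x, x_hat) + beta * kl_divergence(mu, logvar)


latent_dim = 20  # latent dimension from the table
x = [0.2, 0.4, 0.6, 0.8]  # toy input, already scaled to [0, 1] for the Sigmoid output

# A perfect reconstruction with a posterior equal to the prior gives zero loss.
print(vae_loss(x, x, [0.0] * latent_dim, [0.0] * latent_dim))

# Shifting one latent mean to 1.0 adds 0.5 to the KL term.
print(kl_divergence([1.0], [0.0]))
```

The KL term grows as the encoder's mean and log-variance move away from the prior, so it regularizes the latent space while the MSE term drives reconstruction quality.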

4.3. Experimental Procedure

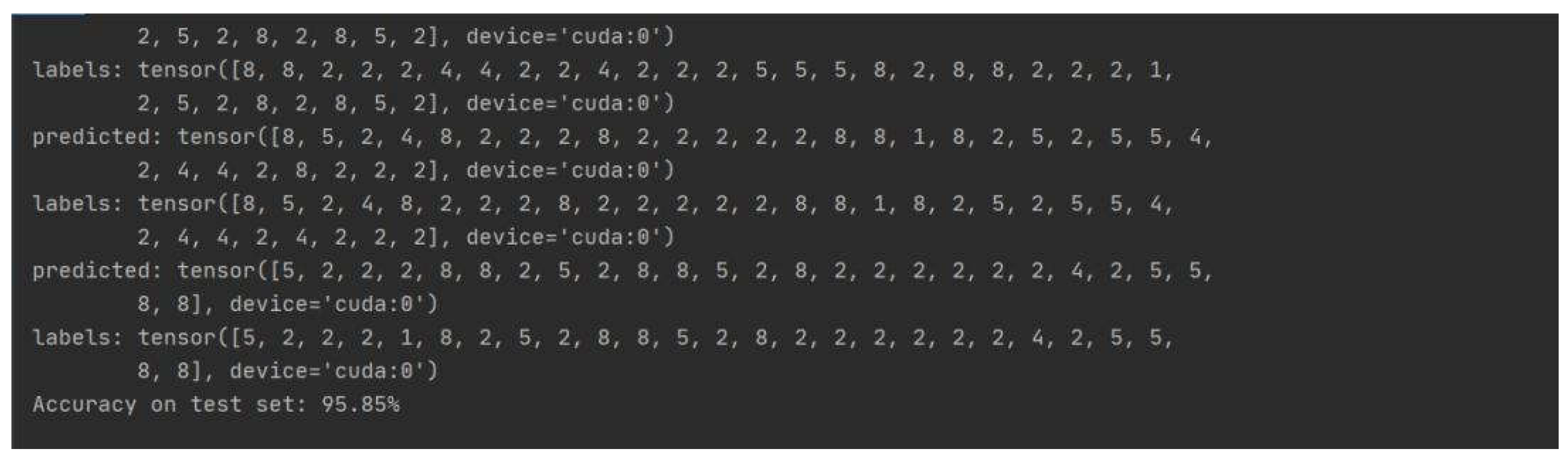

4.4. Experimental Result

4.5. Experimental Discussion

4.6. Improvement Strategy

5. Conclusion

References

- Zhan, X., Shi, C., Li, L., Xu, K., & Zheng, H. (2024). Aspect category sentiment analysis based on multiple attention mechanisms and pre-trained models. Applied and Computational Engineering, 71, 21-26.

- Wu, B., Xu, J., Zhang, Y., Liu, B., Gong, Y., & Huang, J. (2024). Integration of computer networks and artificial neural networks for an AI-based network operator. Applied and Computational Engineering, 64, 115-120.

- Liang, P., Song, B., Zhan, X., Chen, Z., & Yuan, J. (2024). Automating the training and deployment of models in MLOps by integrating systems with machine learning. Applied and Computational Engineering, 67, 1-7.

- Li, A., Yang, T., Zhan, X., Shi, Y., & Li, H. (2024). Utilizing Data Science and AI for Customer Churn Prediction in Marketing. Journal of Theory and Practice of Engineering Science, 4(05), 72-79.

- Wu, B., Gong, Y., Zheng, H., Zhang, Y., Huang, J., & Xu, J. (2024). Enterprise cloud resource optimization and management based on cloud operations. Applied and Computational Engineering, 67, 8-14.

- Xu, J., Wu, B., Huang, J., Gong, Y., Zhang, Y., & Liu, B. (2024). Practical applications of advanced cloud services and generative AI systems in medical image analysis. Applied and Computational Engineering, 64, 82-87.

- Huang, J., Zhang, Y., Xu, J., Wu, B., Liu, B., & Gong, Y. Implementation of Seamless Assistance with Google Assistant Leveraging Cloud Computing.

- Zhang, Y., Liu, B., Gong, Y., Huang, J., Xu, J., & Wan, W. (2024). Application of machine learning optimization in cloud computing resource scheduling and management. Applied and Computational Engineering, 64, 9-14.

- Shi, Y., Li, L., Li, H., Li, A., & Lin, Y. (2024). Aspect-Level Sentiment Analysis of Customer Reviews Based on Neural Multi-task Learning. Journal of Theory and Practice of Engineering Science, 4(04), 1-8.

- Shi, Y., Yuan, J., Yang, P., Wang, Y., & Chen, Z. Implementing Intelligent Predictive Models for Patient Disease Risk in Cloud Data Warehousing.

- Zhan, T., Shi, C., Shi, Y., Li, H., & Lin, Y. (2024). Optimization Techniques for Sentiment Analysis Based on LLM (GPT-3). arXiv preprint arXiv:2405.09770.

- Li, H., et al. (2024). AI Face Recognition and Processing Technology Based on GPU Computing. Journal of Theory and Practice of Engineering Science, 4(05), 9-16.

- Yuan, J., Lin, Y., Shi, Y., Yang, T., & Li, A. (2024). Applications of Artificial Intelligence Generative Adversarial Techniques in the Financial Sector. Academic Journal of Sociology and Management, 2(3), 59-66.

- Lin, Y., Li, A., Li, H., Shi, Y., & Zhan, X. (2024). GPU-Optimized Image Processing and Generation Based on Deep Learning and Computer Vision. Journal of Artificial Intelligence General Science (JAIGS), 5(1), 39-49.

- Chen, Z., et al. (2024). Application of Cloud-Driven Intelligent Medical Imaging Analysis in Disease Detection. Journal of Theory and Practice of Engineering Science, 4(05), 64-71.

- Wang, B., Lei, H., Shui, Z., Chen, Z., & Yang, P. (2024). Current State of Autonomous Driving Applications Based on Distributed Perception and Decision-Making.

- Jiang, W., Qian, K., Fan, C., Ding, W., & Li, Z. (2024). Applications of generative AI-based financial robot advisors as investment consultants. Applied and Computational Engineering, 67, 28-33.

- Ding, W., Zhou, H., Tan, H., Li, Z., & Fan, C. (2024). Automated Compatibility Testing Method for Distributed Software Systems in Cloud Computing.

- Fan, C., Li, Z., Ding, W., Zhou, H., & Qian, K. Integrating Artificial Intelligence with SLAM Technology for Robotic Navigation and Localization in Unknown Environments.

- Guo, L., Li, Z., Qian, K., Ding, W., & Chen, Z. (2024). Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees. Journal of Economic Theory and Business Management, 1(3), 24-30.

- Li, Z., et al. (2024). Robot Navigation and Map Construction Based on SLAM Technology.

- Fan, C., Ding, W., Qian, K., Tan, H., & Li, Z. (2024). Cueing Flight Object Trajectory and Safety Prediction Based on SLAM Technology. Journal of Theory and Practice of Engineering Science, 4(05), 1-8.

- Srivastava, S., Huang, C., Fan, W., & Yao, Z. (2023). Instance Needs More Care: Rewriting Prompts for Instances Yields Better Zero-Shot Performance. arXiv preprint arXiv:2310.02107.

- Xiao, J., Wang, J., Bao, W., Deng, T., & Bi, S. Application progress of natural language processing technology in financial research.


- Ding, W., Tan, H., Zhou, H., Li, Z., & Fan, C. Immediate Traffic Flow Monitoring and Management Based on Multimodal Data in Cloud Computing.

- Qian, K., Fan, C., Li, Z., Zhou, H., & Ding, W. (2024). Implementation of Artificial Intelligence in Investment Decision-making in the Chinese A-share Market. Journal of Economic Theory and Business Management, 1(2), 36-42.

- Tian, J., Li, H., Qi, Y., Wang, X., & Feng, Y. (2024). Intelligent Medical Detection and Diagnosis Assisted by Deep Learning. Applied and Computational Engineering, 64, 121-126.

- Zhou, Y., Zhan, T., Wu, Y., Song, B., & Shi, C. (2024). RNA Secondary Structure Prediction Using Transformer-Based Deep Learning Models. arXiv preprint arXiv:2405.06655.

- Liu, B., Cai, G., Ling, Z., Qian, J., & Zhang, Q. (2024). Precise Positioning and Prediction System for Autonomous Driving Based on Generative Artificial Intelligence. Applied and Computational Engineering, 64, 42-49.

- Cui, Z., Lin, L., Zong, Y., Chen, Y., & Wang, S. (2024). Precision Gene Editing Using Deep Learning: A Case Study of the CRISPR-Cas9 Editor. Applied and Computational Engineering, 64, 134-141.

- Yu, D., Xie, Y., An, W., Li, Z., & Yao, Y. (2023, December). Joint Coordinate Regression and Association For Multi-Person Pose Estimation, A Pure Neural Network Approach. In Proceedings of the 5th ACM International Conference on Multimedia in Asia (pp. 1-8).

- Wang, B., He, Y., Shui, Z., Xin, Q., & Lei, H. (2024). Predictive Optimization of DDoS Attack Mitigation in Distributed Systems using Machine Learning. Applied and Computational Engineering, 64, 95-100.

- He, Z., et al. Application of K-means clustering based on artificial intelligence in gene statistics of biological information engineering.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).