In this section, we first conduct a comprehensive quantitative comparison between CrackScopeNet and the most advanced segmentation models in various metrics, visualize the results, and comprehensively analyze the detection performance. Subsequently, we explore the transfer learning capability of our model on crack datasets specific to other scenarios. Finally, we perform ablation studies to meticulously examine the significance and impact of each component within CrackScopeNet.

4.1. Comparative Experiments

The primary objective is to achieve an exceptional balance between the accuracy of crack region extraction and inference speed. Thus, we compare CrackScopeNet with three types of models: classical general semantic segmentation models, advanced lightweight semantic segmentation models, and the latest models designed explicitly for crack segmentation, totaling 13 models. Specifically, U-Net [

51], PSPNet [

37], SegNet [

52], DeeplabV3+ [

23], SegFormer [

19], and SegNext [

24] are selected as six classical high-accuracy segmentation models. BiSeNet [

28], BiSeNetV2 [

53], STDC [

54], TopFormer [

55], and SeaFormer [

56] are chosen for their advantage in inference speed as lightweight semantic segmentation models. Notably, SegFormer, TopFormer, and SeaFormer are Transformer-based methods that have demonstrated outstanding performance on large datasets such as Cityscapes [

57]. Additionally, we compare two specialized crack segmentation models, U2Crack [

50] and HrSegNet [

29], which have been optimized for the crack detection scenario based on general semantic segmentation models.

It is important to note that to ensure the models could be easily converted to ONNX format and deployed on edge devices with limited computational resources and memory, we select lightweight backbones: MobileNetV2 [

58] and ResNet-18 [

59] for the DeepLabV3+ and BiSeNet models, respectively. For SegFormer and SegNext, we choose the lightweight versions SegFormer-B0 [

19] and SegNext_MSCAN_Tiny [

24], which are suited for real-time semantic segmentation as proposed by the authors. For TopFormer and SeaFormer, we discover during training that the tiny versions are difficult to converge, so we only utilize their base versions.

Quantitative Results. Table 4 presents the performance of each baseline network and the proposed CrackScopeNet on the CrackSeg9k dataset, with the best values highlighted in bold. Analyzing the accuracy of different types of segmentation networks in the table reveals that larger models generally achieve higher mIoU scores than lightweight models. Specifically, compared to classical high-accuracy models, the proposed CrackScopeNet achieves the best performance in terms of mIoU, Recall, and F1 scores. Although our model’s precision is 1.26% lower than U-Net, U-Net has a poor recall performance (-2.24%), and our model’s parameters and FLOPs are reduced by 12 and 48 times, respectively.

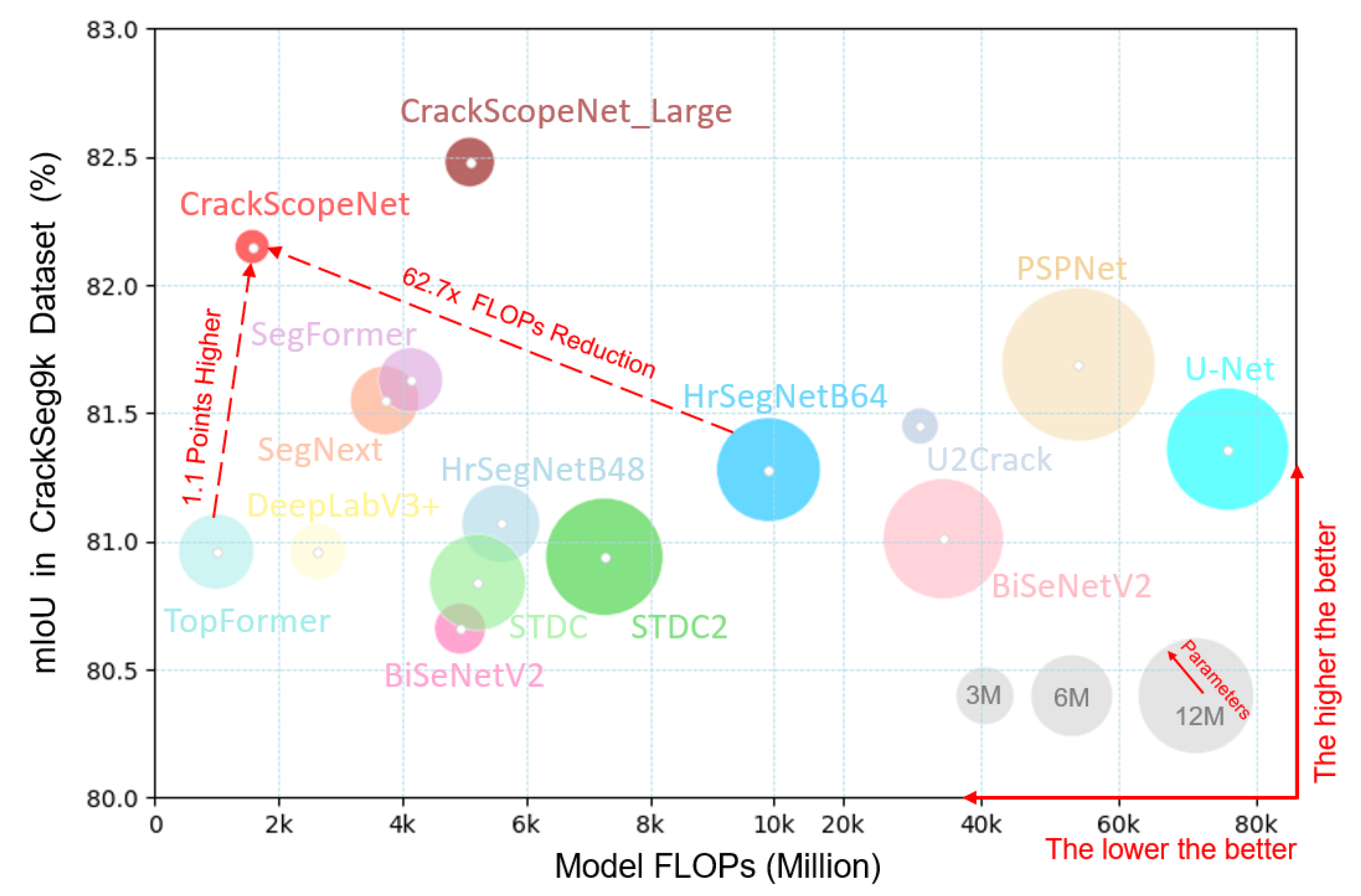

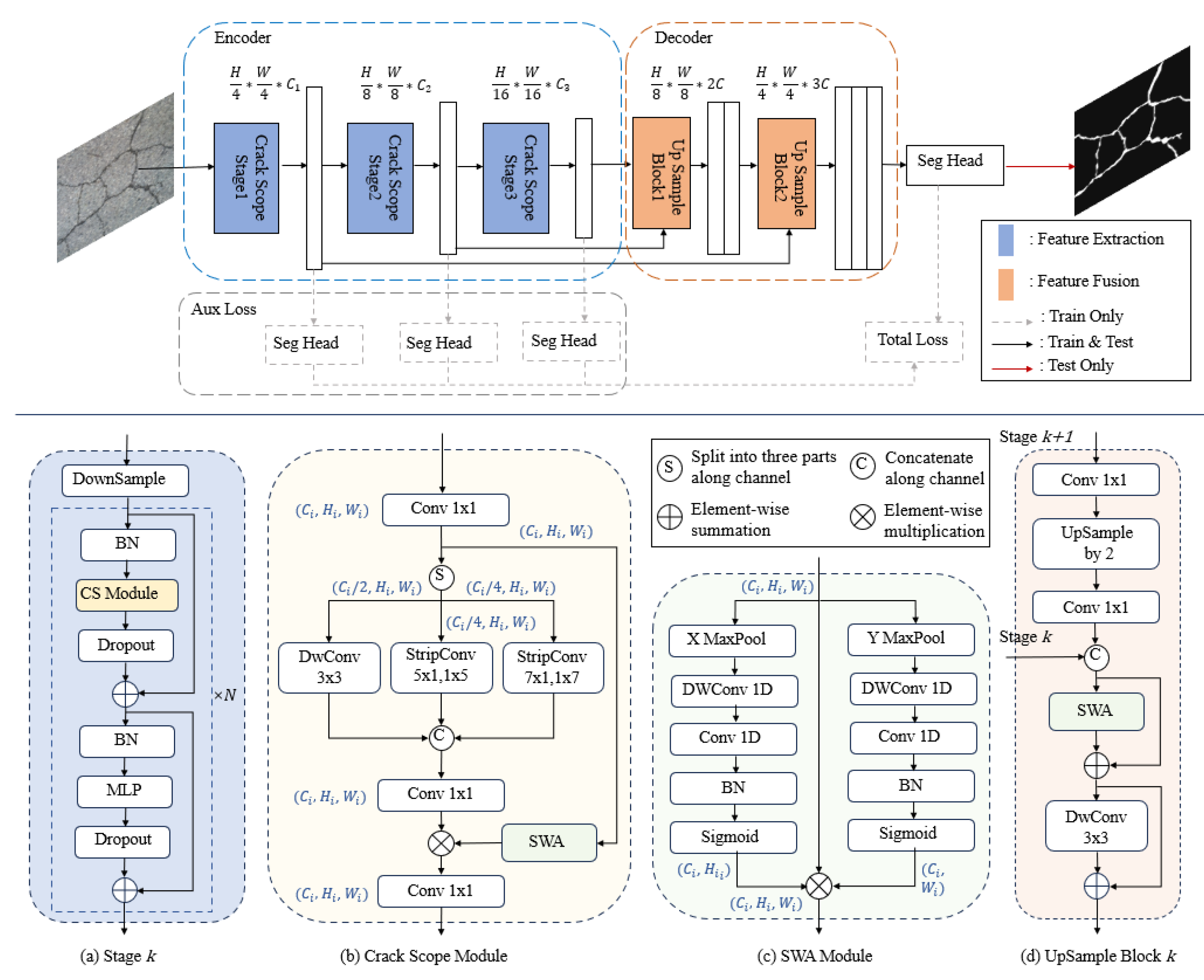

In terms of network lightweightness, the CrackScopeNet proposed in this paper achieves the best accuracy-lightweight balance on the CrackSeg9k dataset, as more intuitively illustrated in

Figure 1. Our model achieves the highest mIoU with only 1.047M parameters and 1.58G FLOPs, making it incredibly lightweight. CrackScopeNet’s FLOPs are slightly higher than those of TopFormer and SeaFormer but lower than all other small models. Notably, due to the small size of crack dataset, the learning capability of lightweight segmentation networks is evidently limited, as mainstream lightweight segmentation models do not consider the unique characteristics of cracks, resulting in poor performance. The CrackScopeNet architecture, while maintaining superior segmentation performance, successfully achieves the design goal of a lightweight network structure, making it easily deployable on resource-constrained edge devices.

Moreover, compared to the state-of-the-art crack image segmentation algorithms, the proposed method achieves a mIoU score of 82.15% with only 1.047M parameters and 1.58G FLOPs, surpassing the highest-accuracy versions of U2Crack and HrSegNet models. Notably, the HrSegNet model employ an Online Hard Example Mining (OHEM) technique during training to improve accuracy. In contrast, we only use a cross-entropy loss function for model parameter updates without deliberately employing training tricks to enhance performance, showcasing the significant benefits of considering crack morphology in our model design.

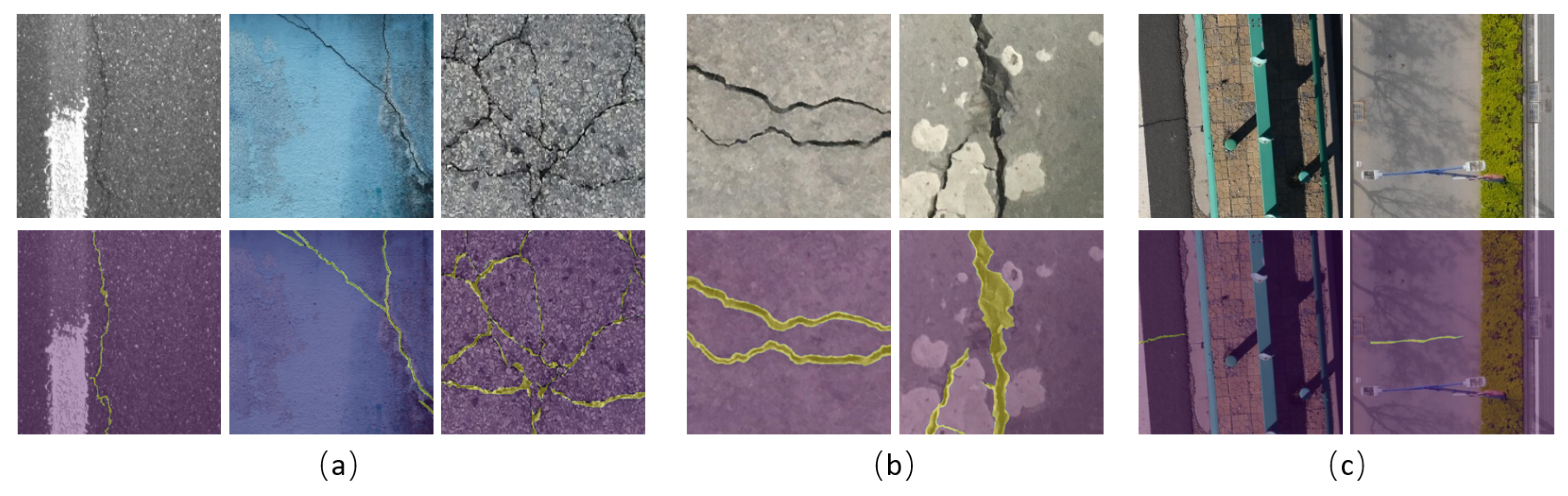

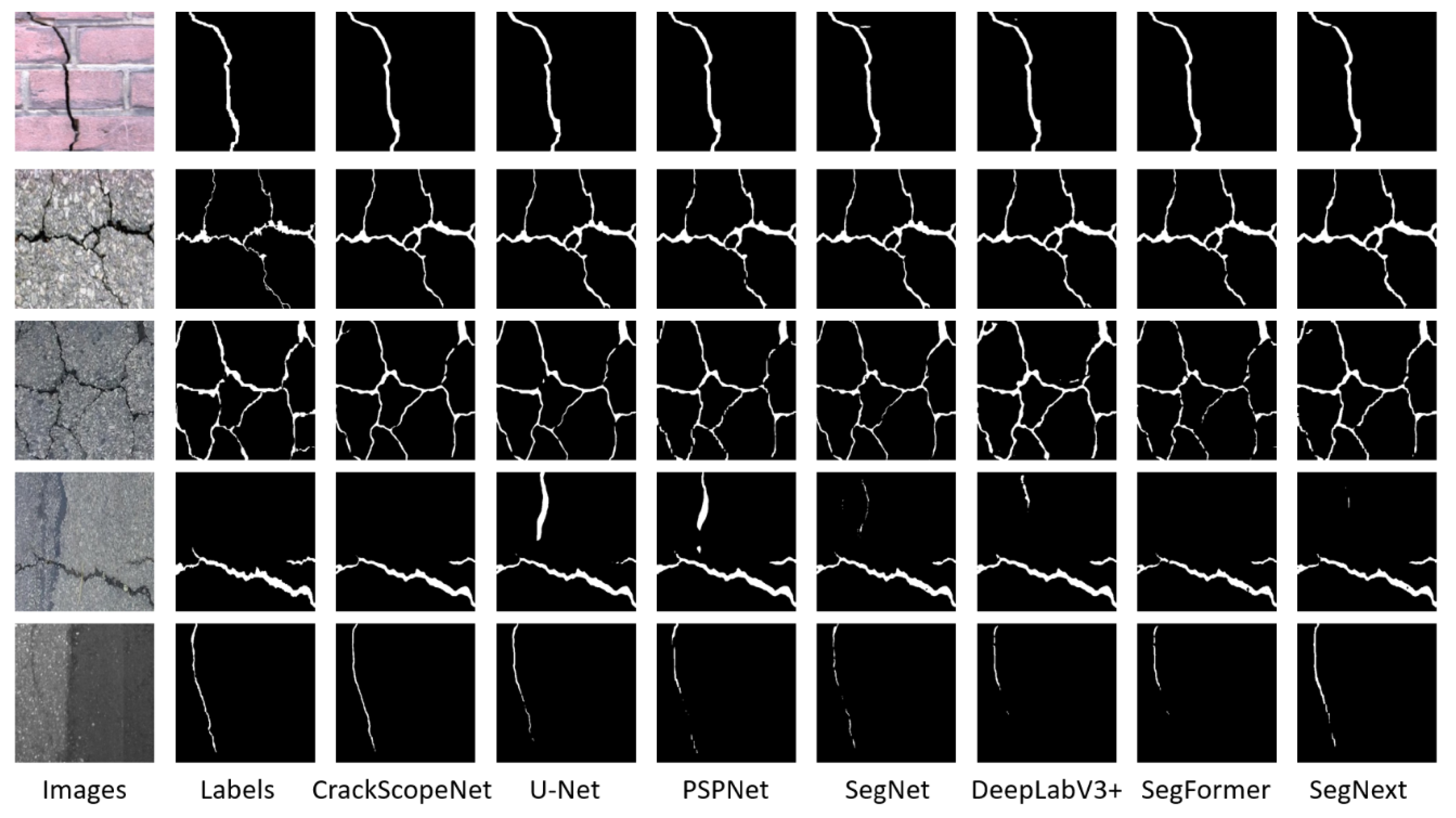

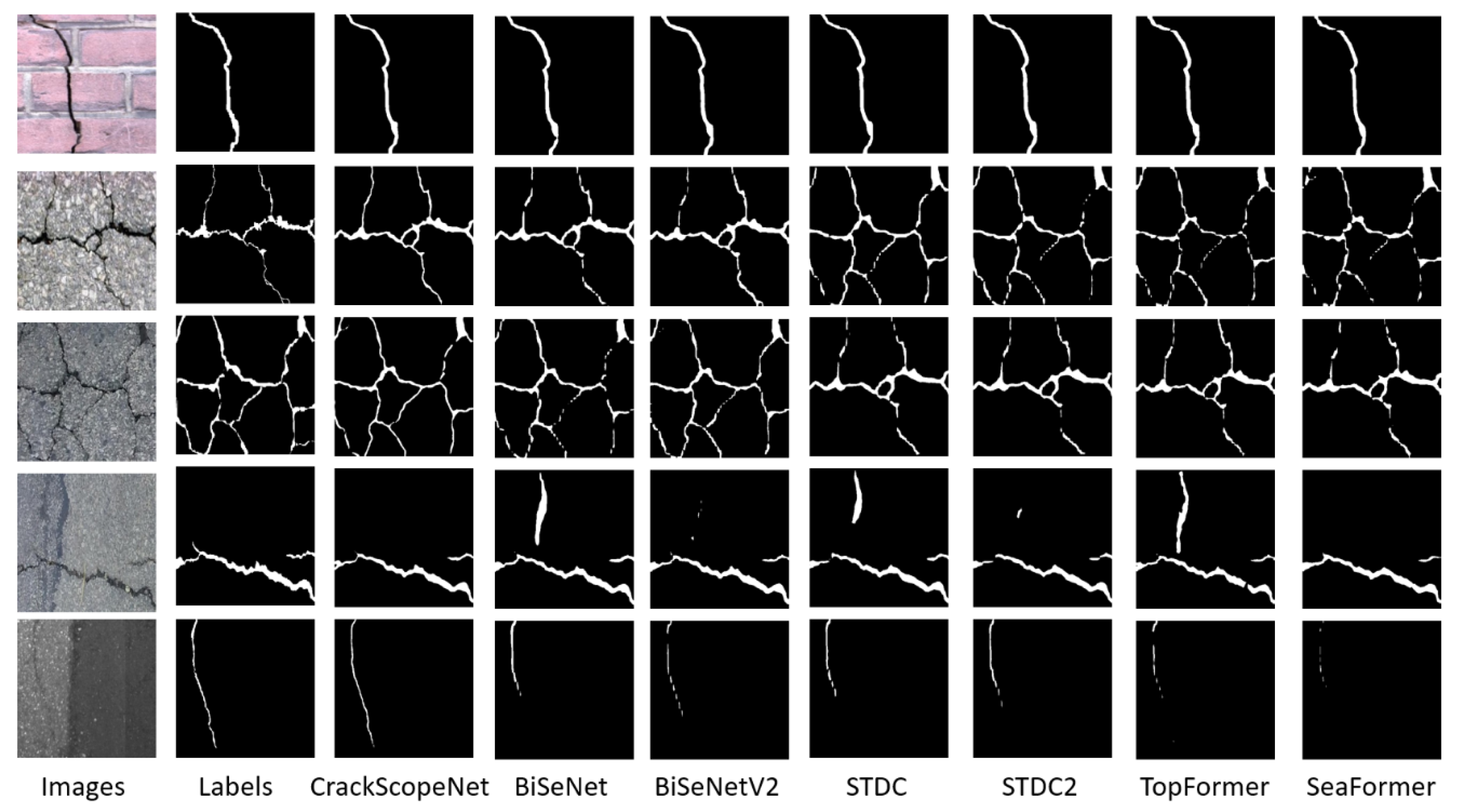

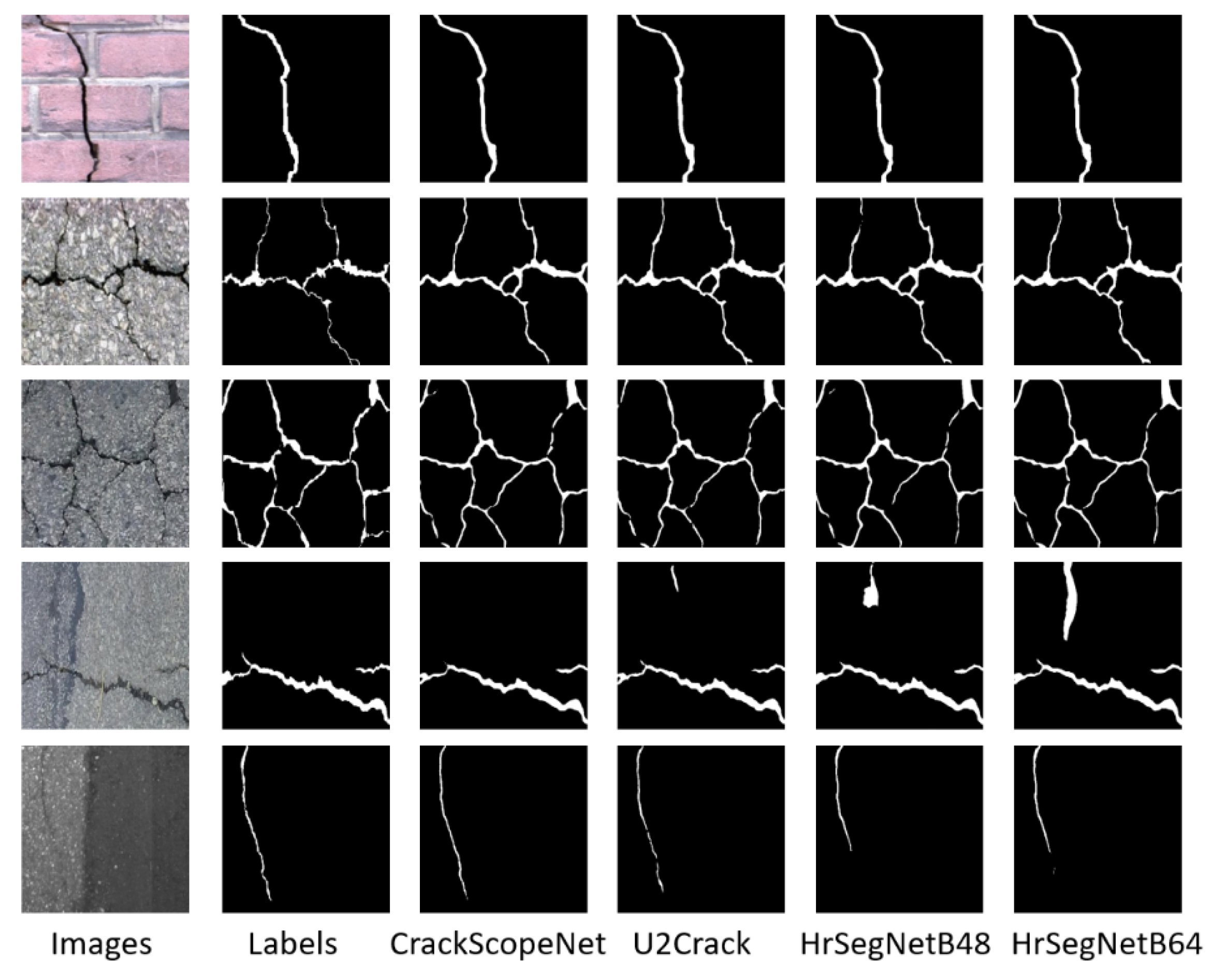

Qualitative Results.Figure 4,

Figure 5, and

Figure 6 display the qualitative results of all models. CrackScopeNet achieves superior visual performance compared to other models. From the first, second, and third rows of

Figure 4, it can be observed that CrackScopeNet and the more significant parameter segmentation algorithms achieve satisfactory results for high-resolution images with apparent crack features. In the fourth row, where the original image contains asphalt with color and texture similar to cracks, CrackScopeNet and SegFormer successfully overcome such background noise interference. This is attributed to their long-range contextual dependencies, effectively capturing relationships between cracks. From the fifth row, CrackScopeNet exhibits robust performance even under uneven illumination conditions. This can be attributed to the design of CrackScopeNet, which considers both local and global features of cracks, effectively suppressing other noises.

Figure 5 clearly shows that lightweight networks struggle to eliminate background noise interference and produce fragmented segmentation results for fine cracks. This outcome is due to the limited parameters learned by lightweight models. Finally,

Figure 6 presents the visualization results of the most advanced crack segmentation models. U2Crack [

50], based on the ViT [

17] architecture, achieves a broader receptive field, somewhat alleviating background noise but at the cost of significant computational overhead. HrSegNet [

29] maintains a high-resolution branch to capture rich, detailed features. As seen in the last two columns of

Figure 6, with increased channels in the HrSegNet network, more detailed information is extracted, but this also leads to misclassifying background information as cracks, explaining why HrSegNet’s precision score is high while the recall score is low. In summary, CrackScopeNet outperforms other segmentation models with lower parameters and FLOPs by demonstrating excellent crack detection performance under various noise conditions.

Inference on Navio2-based Drones.

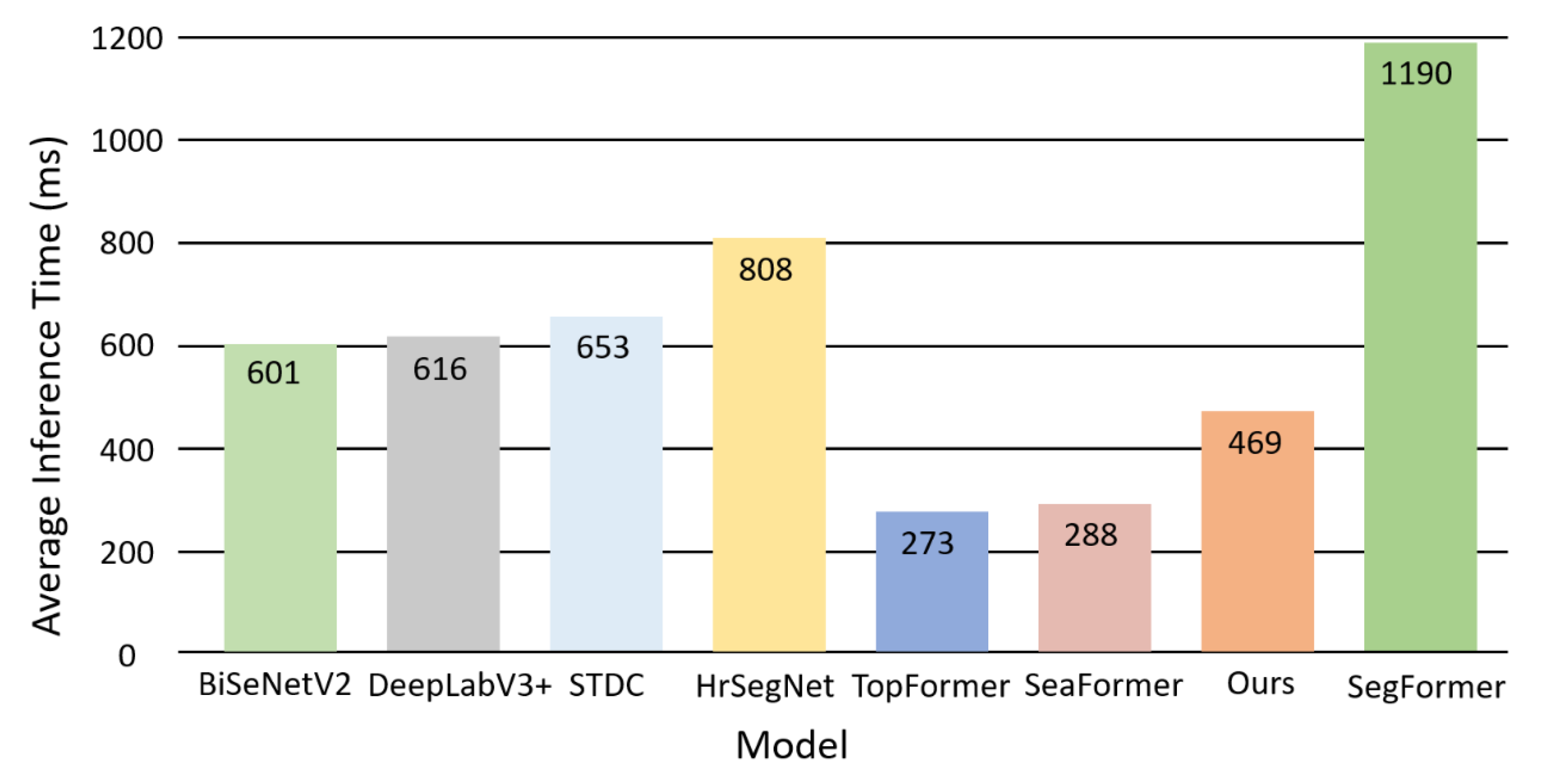

In practical applications, there remains a substantial gap for real-time semantic segmentation algorithms designed and validated for mobile and edge devices, which face challenges such as limited memory resources and low computational efficiency. To better simulate edge devices used for outdoor structural health monitoring, we explore the inference speed of models without GPU acceleration. We convert the models to ONNX format and test the inference speed on Navio2-based drones equipped with a representative Raspberry Pi 4B, focusing on models with tiny FLOPs and parameter counts: BiSeNetV2, DeepLabV3+, STDC, HrSegNetB48, SegFormer, TopFormer, SeaFormer, and our proposed model. The test settings are input image size of 3×400×400, batch size of 1, and 2000 testing epochs. To ensure fair comparisons, we do not optimize or prune any models during deployment, meaning the actual inference delay in practical applications could be further reduced based on these test results.

As shown in

Figure 7, the test results indicate that when running on highly resource-constrained drone platform, the proposed CrackScopeNet architecture achieves faster inference speed compared to other real-time or lightweight semantic segmentation networks based on convolutional neural networks, such as BiSeNet, BiSeNetV2, and STDC. Additionally, TopFormer and SeaFormer are designed with deployment on resource-limited edge devices in mind, resulting in extremely low inference latency. However, these two models perform poorly on the crack datasets due to inadequate data volume. Our proposed CrackScopeNet model, while maintaining rapid inference speed, achieves remarkable crack segmentation accuracy, establishing its advantage over competing models.

These results confirm the efficacy of deploying the CrackScopeNet model on outdoor mobile devices, where high-speed inference and lightweight architecture are crucial for the real-time processing and analysis of infrastructure surface cracks. By outperforming other state-of-the-art models, CrackScopeNet proves to be a suitable solution for addressing challenges associated with outdoor edge computing.

4.2. Scaling Study

To explore the adaptability of our model, we will adjust the number of channels and stack different numbers of Crack Scope Modules to cater to a broader range of application scenarios. Since CrackSeg9k is composed of multiple crack datasets, we will also investigate the model’s transferability to specific application scenarios.

We adjust the base number of channels after the stem from 32 to 64. Correspondingly, the number of channels in the remaining three feature extraction stages increase from (32, 64, 128) to (64, 128, 160) to capture more features. Meanwhile, the number of Crack Scope Modules stacked in each stage is adjusted from (3, 3, 4) to (3, 3, 3). We refer to the adjusted model as CrackScopeNet_Large. First, we train CrackScopeNet_Large on CrackSeg9k in the same parameter settings as the base version and evaluate the model on the test set. Furthermore, we use the training parameters and weights obtained from CrackSeg9k for these two models as the basis for transferring the models to downstream tasks in two specific scenarios. Images in the Ozgenel dataset are cropped to 448x448 and are high-resolution concrete crack images, similar to some scenarios in CrackSeg9k. The Aerial Track Dataset consists of low-altitude drone-captured images of post-earthquake highway cracks, cropped to 512x512, a type of scene not present in CrackSeg9k.

Table 5 presents the mIoU scores, parameter counts, and FLOPs of the base model CrackScopeNet and the high-accuracy version CrackScopeNet_Large on the CrackSeg9k dataset and two specific scenario datasets. In this table, mIoU(F) represents the mIoU score obtained after pre-training the model on CrackSeg9k and fine-tuning it on the respective dataset. It is evident that the large version of the model achieves higher segmentation accuracy across all datasets, but with approximately double the parameters and three times the FLOPs. Therefore, if computational resources and memory are sufficient, and higher accuracy in crack segmentation is required, the large version or further stacking of Crack Scope Modules can be employed.

For specific scenario training, whether from scratch or fine-tuning, our models are trained for only 20 epochs. It can be seen that even when training from scratch, our models converge quickly. We attribute this phenomenon to the initial design of CrackScopeNet, which consider the morphology of cracks and could successfully capture the necessary contextual information. For training using transfer learning, both versions of the model achieve remarkable mIoU scores on the Ozgenel dataset, with 90.1% and 92.31%, respectively. Even for the Aerial Track dataset, which includes low-altitude remote sensing images of highway cracks not seen in CrackSeg9k, our models still perform exceptionally well, achieving mIoU scores of 83.26% and 84.11%. These results demonstrate the proposed model’s rapid adaptability to small datasets, aligning well with real-world tasks.

4.3. Diagnostic Experiments

To gain more insights into CrackScopeNet, a set of ablative studies on CrackSeg9k are conducted. All the methods mentioned in this section are trained with the same parameters for efficiency in 200 epochs.

Stripe-wise Context Attention. First, we examine the role of the critical SWA module in CrackScopeNet by replacing it with two advanced attention mechanisms, CBAM [

36] and CA [

35]. The results are shown in

Table 6. It demonstrates that without any attention mechanism, merely stacking convolutional neural networks for feature extraction yields poor performance due to the limited receptive field. Then, the SWA attention mechanism, based on stripe pooling and one-dimensional convolution, is adopted, allowing the network structure to capture long-range contextual information. Under this configuration, the model exhibit the best performance.

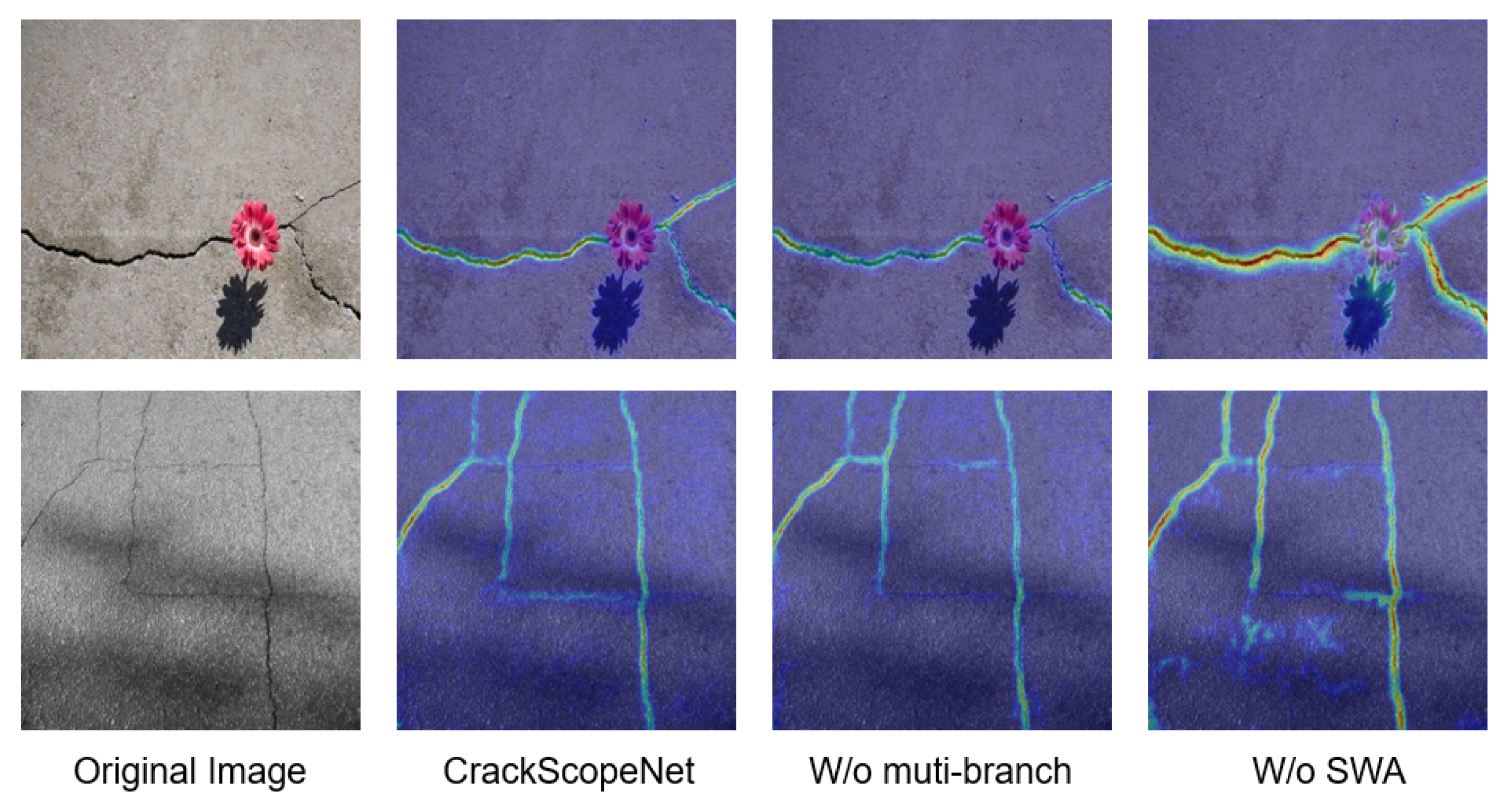

Figure 8 shows the class activation maps (CAM) [

60] before the segmentation head of CrackScopeNet. It can be observed that without SWA, the model is easily disturbed by shadows, whereas with the SWA module, the model can focus on the global crack areas. Next, we sequentially replace the SWA module with the channel-spatial feature-based CBAM attention mechanism and the coordinate attention (CA) mechanism, which also uses stripe pooling. The model parameters do not change significantly, but the performance decline by 0.2% and 0.17%, respectively.

Furthermore, we explore the benefits of different attention mechanisms for other models by optimizing the advanced lightweight crack segmentation network HrSegNetB48 [

29]. HrSegNetB48 consists of high-resolution and auxiliary branches, merging shallow detail information with deep semantic information at each stage. Therefore, we add SWA, CBAM, and CA attention mechanisms after feature fusion to capture richer features.

Table 6 shows the performance of HrSegNetB48 with different attention mechanisms, clearly indicating that introducing the SWA attention mechanism to capture long-range contextual information provides the most significant benefit.

Multi-scale Branch. Then, we examine the effect of the Multi-scale Branch in our Crack Scope Module. To ensure fairness, we replace the multi-scale branch with a convolution of larger kernel size, 5x5 instead of 3x3. The results with or without the multi-scale branch are shown in

Table 6. It is evident that using a 5x5 kernel size convolution instead of the multi-scale branch, even with more floating-point computations, decreases the mIoU score (-0.16%). This demonstrates that blindly adopting large kernel convolutions increases computational overhead without significant performance improvement. The benefits brought by multi-scale branch are further analyzed through the CAM. As shown in the third column of

Figure 8, when multi-scale branch is not used, it is obvious that the network misses the feature information of small cracks, while our model can perfectly capture the features of cracks of various shapes and sizes.

Decoder. CrackScopeNet uses a simple decoder to fuse feature information of different scales, complete the compression of channel features and the fusion of features at different stages. At present, the most popular decoders combine Atrous Spatial Pyramid Pooling (ASPP) [

23] module to introduce multi-scale information. In order to explore whether the introduction of ASPP module can bring benefits to our model and whether our proposed lightweight decoder is effective, we replace decoder with the ASPP method adopted by DeepLabV3+ [

23], and the results are shown in the last two rows of

Table 6. It can be seen that the computational overhead is large because of the need to perform parallel dilated convolution operations on deep semantic information, but the performance of the model is not improved. We believe that this is because local feature information and long-distance context information have been taken into account when feature extraction is carried out in each stage, so it does not need to be complicated for the design of decoder.