Submitted: 09 July 2024

Posted: 10 July 2024


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Methodology

3.1. ARIMA Models
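The Python below is a minimal sketch of the autoregressive core behind models such as the AR(9) and AR(12) benchmarks in the results tables. It differences a series d times (the "I" in ARIMA) and fits an AR(p) with intercept by ordinary least squares. It rests on simplifying assumptions: moving-average terms are omitted, least squares stands in for the maximum-likelihood estimation that statistical packages typically use, and the helper names (`difference`, `fit_ar_ols`, `forecast_one_step`) are hypothetical.

```python
import numpy as np


def difference(y, d=1):
    """Apply d rounds of first differencing to the series."""
    y = np.asarray(y, dtype=float)
    for _ in range(d):
        y = np.diff(y)
    return y


def fit_ar_ols(y, p):
    """Fit y_t = c + phi_1*y_{t-1} + ... + phi_p*y_{t-p} + e_t by least squares.

    Returns (c, phi), where phi[i] is the coefficient on lag i+1.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Row for time t (t = p..n-1) holds [1, y_{t-1}, ..., y_{t-p}]
    X = np.column_stack([np.ones(n - p)] + [y[p - k : n - k] for k in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef[0], coef[1:]


def forecast_one_step(y, c, phi):
    """One-step-ahead forecast from the most recent p observations."""
    p = len(phi)
    recent = np.asarray(y, dtype=float)[-1 : -p - 1 : -1]  # y_{n-1}, ..., y_{n-p}
    return c + float(np.dot(phi, recent))
```

For a series with a trend, one would fit `fit_ar_ols(difference(y, 1), p)` and then add the forecast difference back onto the last observed level.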

3.2. Artificial Neural Networks (ANN)
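A minimal sketch, assuming a feed-forward multilayer perceptron (MLP) with one tanh hidden layer and a single linear output that predicts the next value from a window of lagged observations. The helper names are hypothetical, and the weights are passed in as arguments because training (for example by backpropagation) is not shown.

```python
import numpy as np


def make_windows(y, n_lags):
    """Build one-step-ahead training pairs from a series.

    Row i of X is [y_i, ..., y_{i+n_lags-1}] (oldest to newest) and
    target[i] is y_{i+n_lags}.
    """
    y = np.asarray(y, dtype=float)
    n = len(y) - n_lags
    X = np.stack([y[i : i + n_lags] for i in range(n)])
    return X, y[n_lags:]


def mlp_forward(X, W1, b1, w2, b2):
    """Single hidden layer with tanh activation and one linear output unit.

    X: (n_samples, n_lags), W1: (n_lags, n_hidden), b1: (n_hidden,),
    w2: (n_hidden,), b2: scalar. Returns predictions of shape (n_samples,).
    """
    hidden = np.tanh(X @ W1 + b1)
    return hidden @ w2 + b2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    series = np.sin(np.linspace(0, 20, 200))
    X, target = make_windows(series, n_lags=4)
    # Untrained random weights, used only to show the shapes involved
    W1, b1 = rng.normal(size=(4, 8)), np.zeros(8)
    w2, b2 = rng.normal(size=8), 0.0
    print(X.shape, target.shape, mlp_forward(X, W1, b1, w2, b2).shape)
```

The number of lags plays the same role as the order p of an AR model: it fixes how much history each prediction sees.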

3.3. Forecast Accuracy Metrics

- Mean Absolute Deviation (MAD)

- Mean Squared Error (MSE)

- Mean Absolute Percent Error (MAPE):

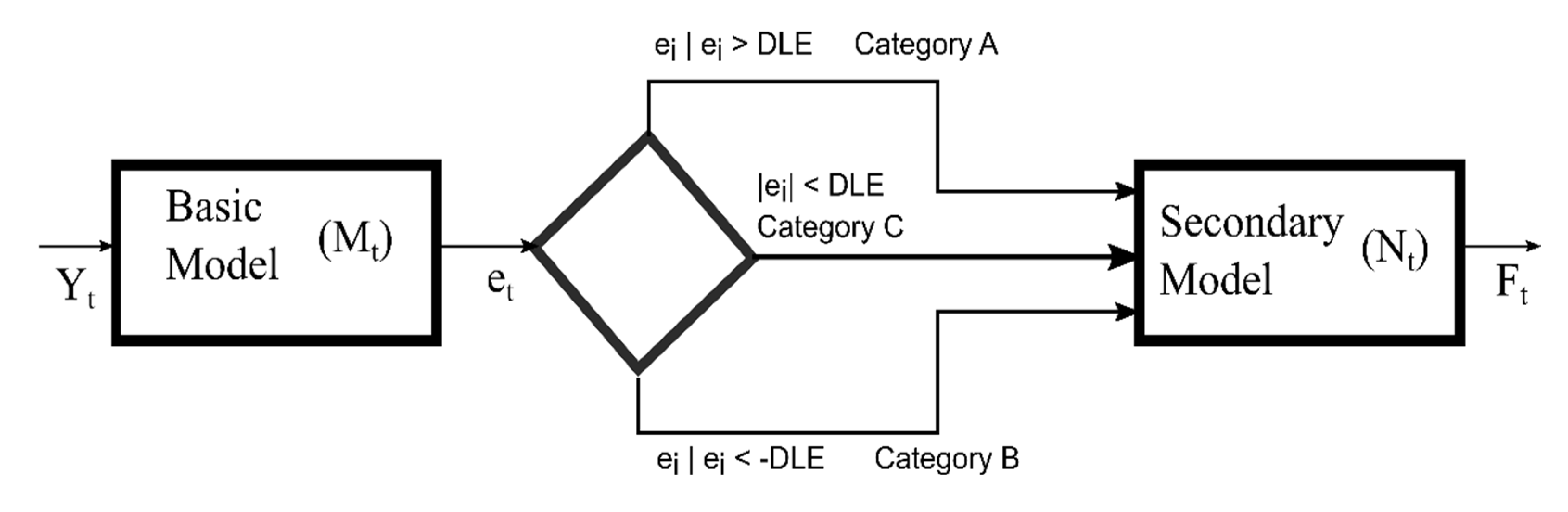
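The three accuracy measures can be sketched as follows, assuming the conventional definitions (as in Hyndman and Koehler) with forecast error e_t = y_t − ŷ_t: MAD is the mean of |e_t|, MSE is the mean of e_t², and MAPE is 100 times the mean of |e_t / y_t|. The function names are illustrative.

```python
import numpy as np


def mad(actual, forecast):
    """Mean absolute deviation of the forecast errors e_t = y_t - yhat_t."""
    e = np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float)
    return float(np.mean(np.abs(e)))


def mse(actual, forecast):
    """Mean squared forecast error."""
    e = np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float)
    return float(np.mean(e ** 2))


def mape(actual, forecast):
    """Mean absolute percentage error, in percent."""
    y = np.asarray(actual, dtype=float)
    if np.any(y == 0):
        # MAPE divides by the actual values, so it is undefined at zero
        raise ValueError("MAPE is undefined when an actual value is zero")
    e = y - np.asarray(forecast, dtype=float)
    return float(100.0 * np.mean(np.abs(e / y)))
```

For actual values [100, 200, 400] and forecasts [110, 190, 400], the errors are [−10, 10, 0], giving MAD = 20/3, MSE = 200/3 and MAPE = 5%. MSE penalizes large errors more heavily than MAD, while MAPE is scale-free, which is why it is the only one of the three comparable across data sets.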

3.4. Hybrid Model

- , Category A

- , Category B

- , Category C
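The classic additive hybrid of Zhang (2003), listed in the references, treats a series as y_t = L_t + N_t. A linear ARIMA-type model captures L_t, a neural network fitted to the linear model's lagged residuals captures N_t, and the two one-step forecasts are summed. The sketch below illustrates only that generic scheme. It does not implement the Category A–C rules of the proposed model. Assumptions: the linear part is an AR(p) fitted by least squares, and, as a swapped-in simplification, the residual network is a one-hidden-layer tanh MLP whose hidden weights are random and frozen, with only the output layer fitted by least squares (an extreme-learning-machine-style shortcut rather than backpropagation). All function names are hypothetical.

```python
import numpy as np


def lagged(y, p):
    """Rows [y_{t-1}, ..., y_{t-p}] and targets y_t for t = p, ..., n-1."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    X = np.column_stack([y[p - k : n - k] for k in range(1, p + 1)])
    return X, y[p:]


def fit_linear_ar(y, p):
    """Linear component L_t: AR(p) with intercept, fitted by least squares.

    Returns [c, phi_1, ..., phi_p] and the in-sample residuals,
    which are aligned with times p, ..., n-1.
    """
    X, target = lagged(y, p)
    A = np.column_stack([np.ones(len(target)), X])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return coef, target - A @ coef


def fit_residual_net(e, q, n_hidden=20, seed=0):
    """Nonlinear component N_t: one tanh hidden layer on q lagged residuals.

    Hypothetical simplification: hidden weights are random and frozen, and
    only the linear output layer (with bias) is fitted by least squares.
    """
    rng = np.random.default_rng(seed)
    X, target = lagged(e, q)
    W = rng.normal(size=(q, n_hidden))
    b = rng.normal(size=n_hidden)
    H = np.column_stack([np.ones(len(target)), np.tanh(X @ W + b)])
    w_out, *_ = np.linalg.lstsq(H, target, rcond=None)
    return W, b, w_out, target - H @ w_out


def hybrid_forecast(y, p=2, q=2):
    """One-step forecast: linear AR forecast plus the network's residual forecast."""
    y = np.asarray(y, dtype=float)
    coef, e = fit_linear_ar(y, p)
    W, b, w_out, _ = fit_residual_net(e, q)
    linear = coef[0] + coef[1:] @ y[-1 : -p - 1 : -1]  # newest lag first
    nonlinear = w_out[0] + np.tanh(e[-1 : -q - 1 : -1] @ W + b) @ w_out[1:]
    return float(linear + nonlinear)
```

Because the residual network is fitted by least squares with a bias term, its in-sample squared error can never exceed that of simply forecasting zero residual, so the additive combination never fits worse in-sample than the linear model alone. Out-of-sample gains are not guaranteed and have to be checked on held-out data.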

4. Results

4.1. Sunspot Data

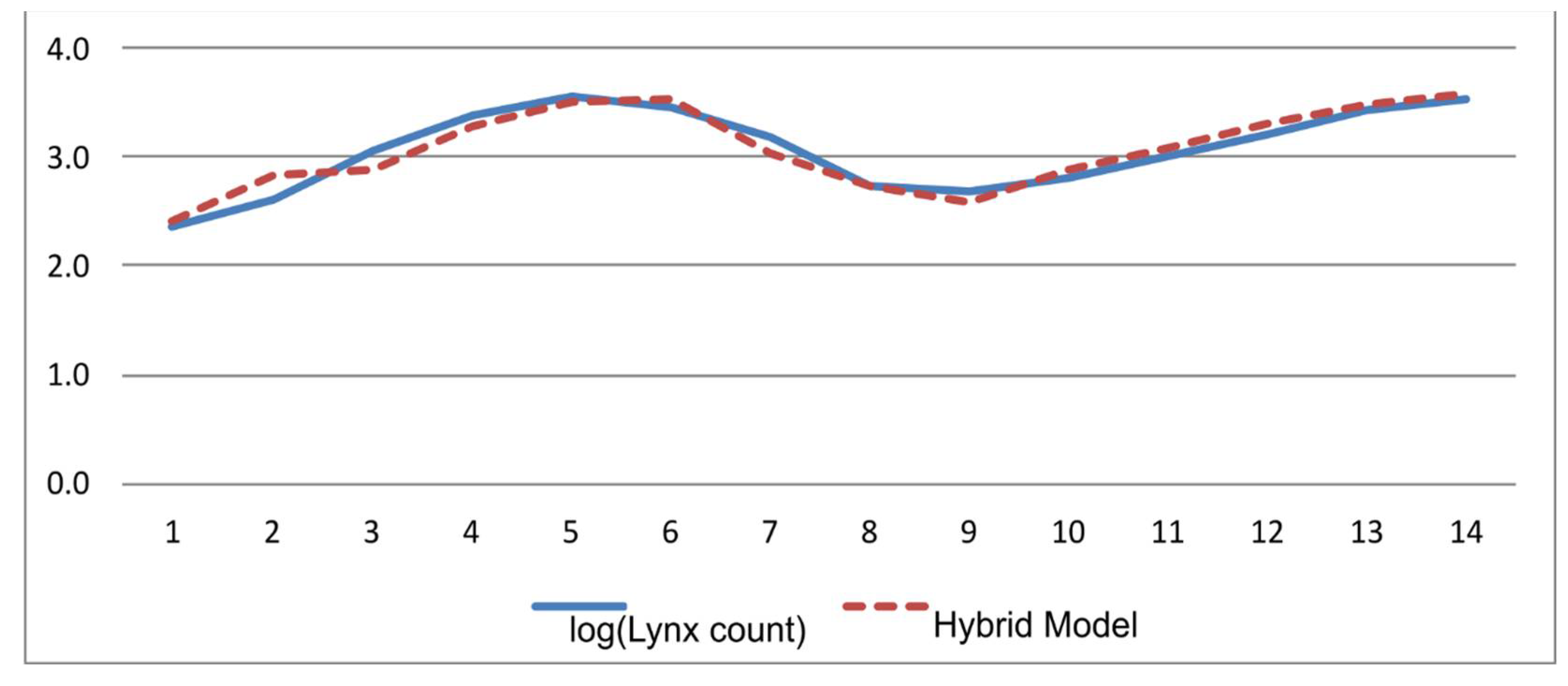

4.2. Canadian Lynx Trapping

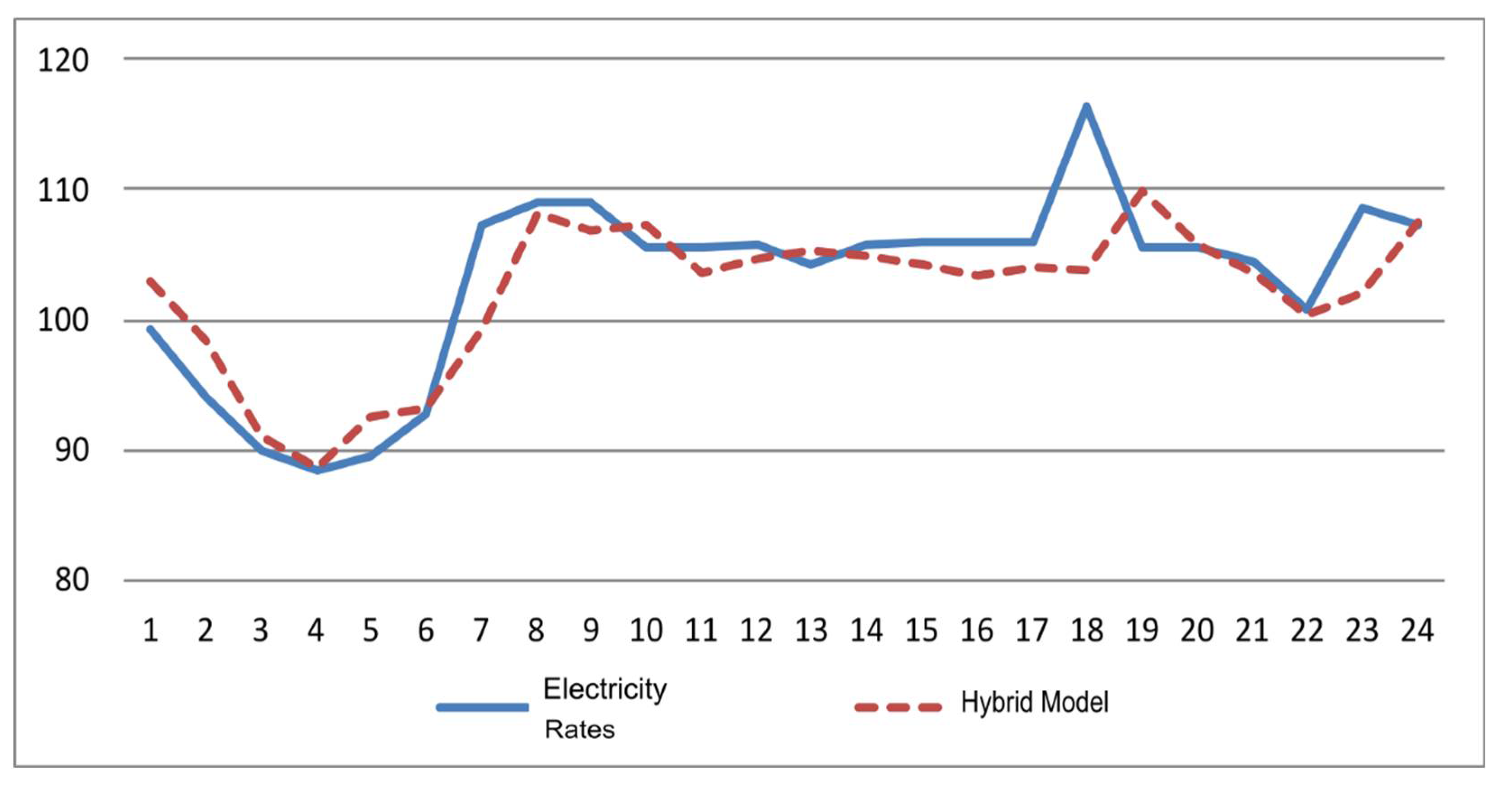

4.3. Hourly Electricity Rates

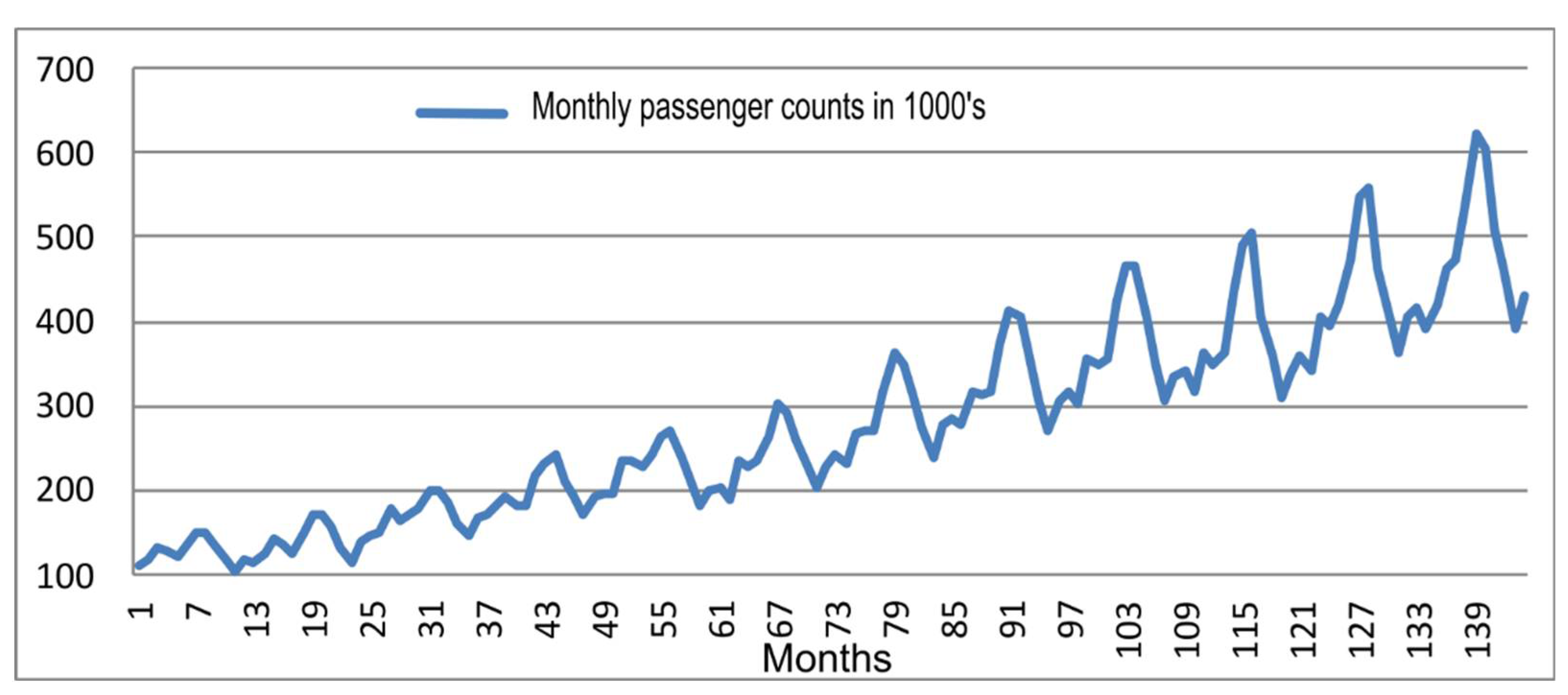

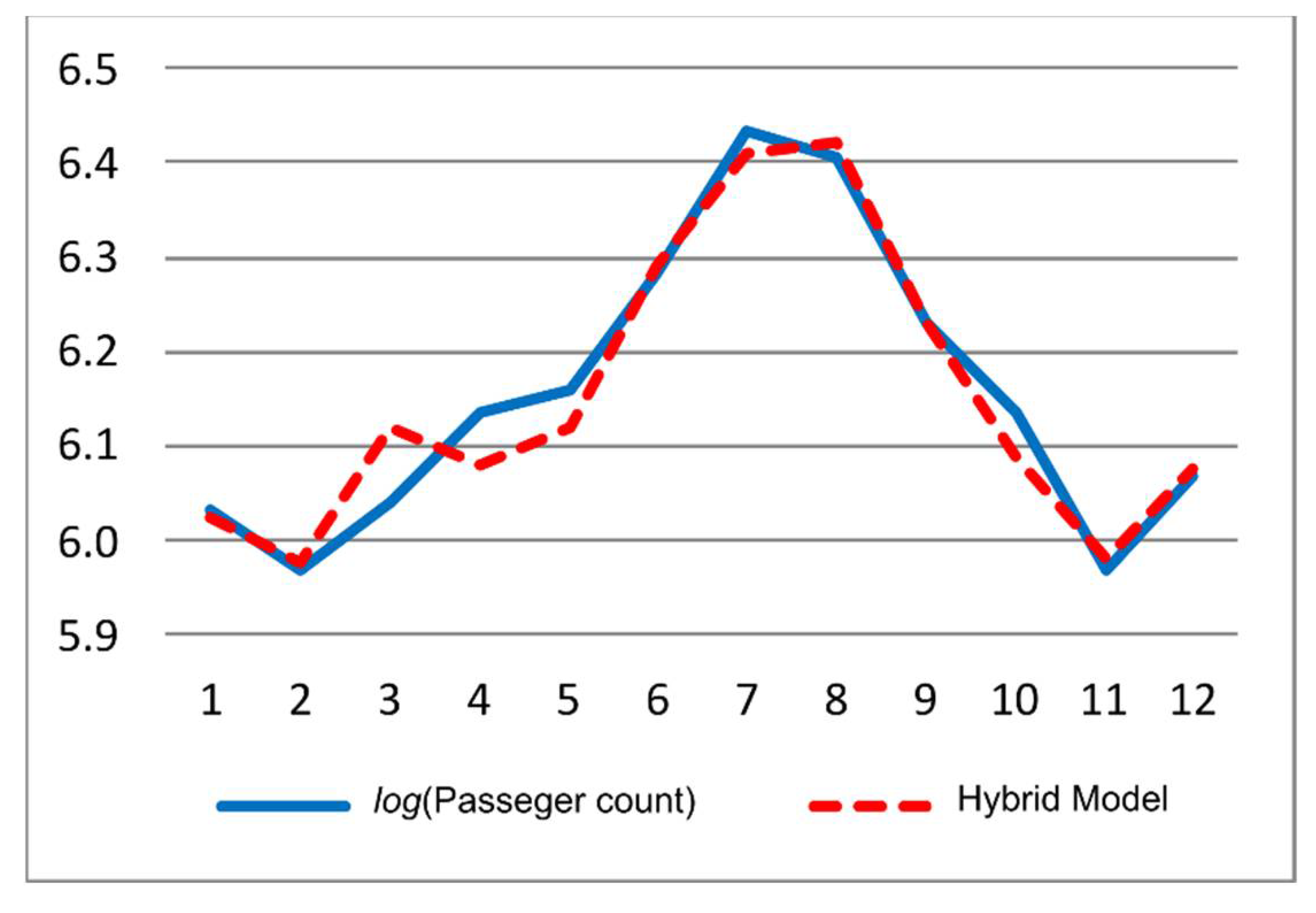

4.4. Airline Passenger Data

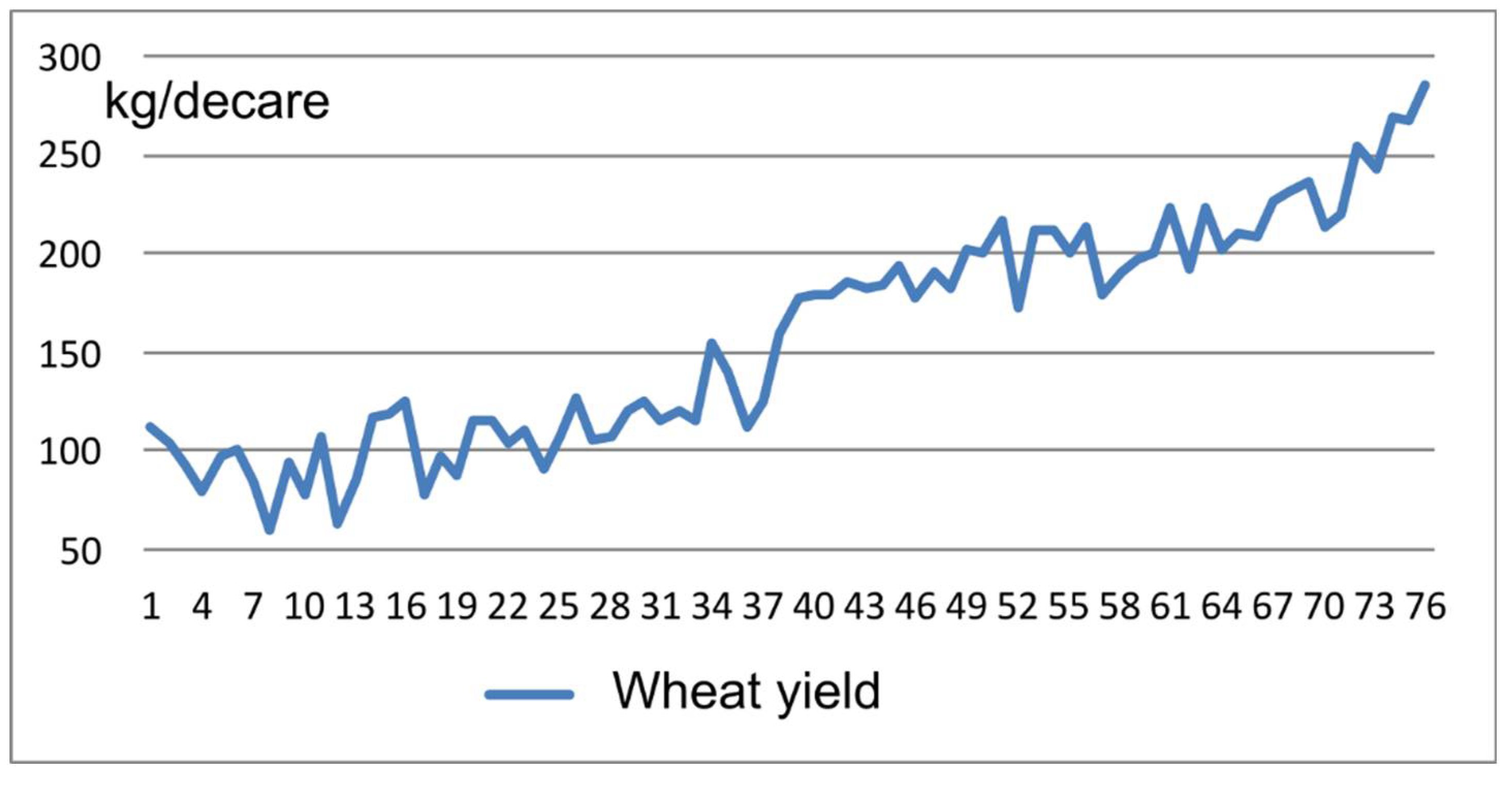

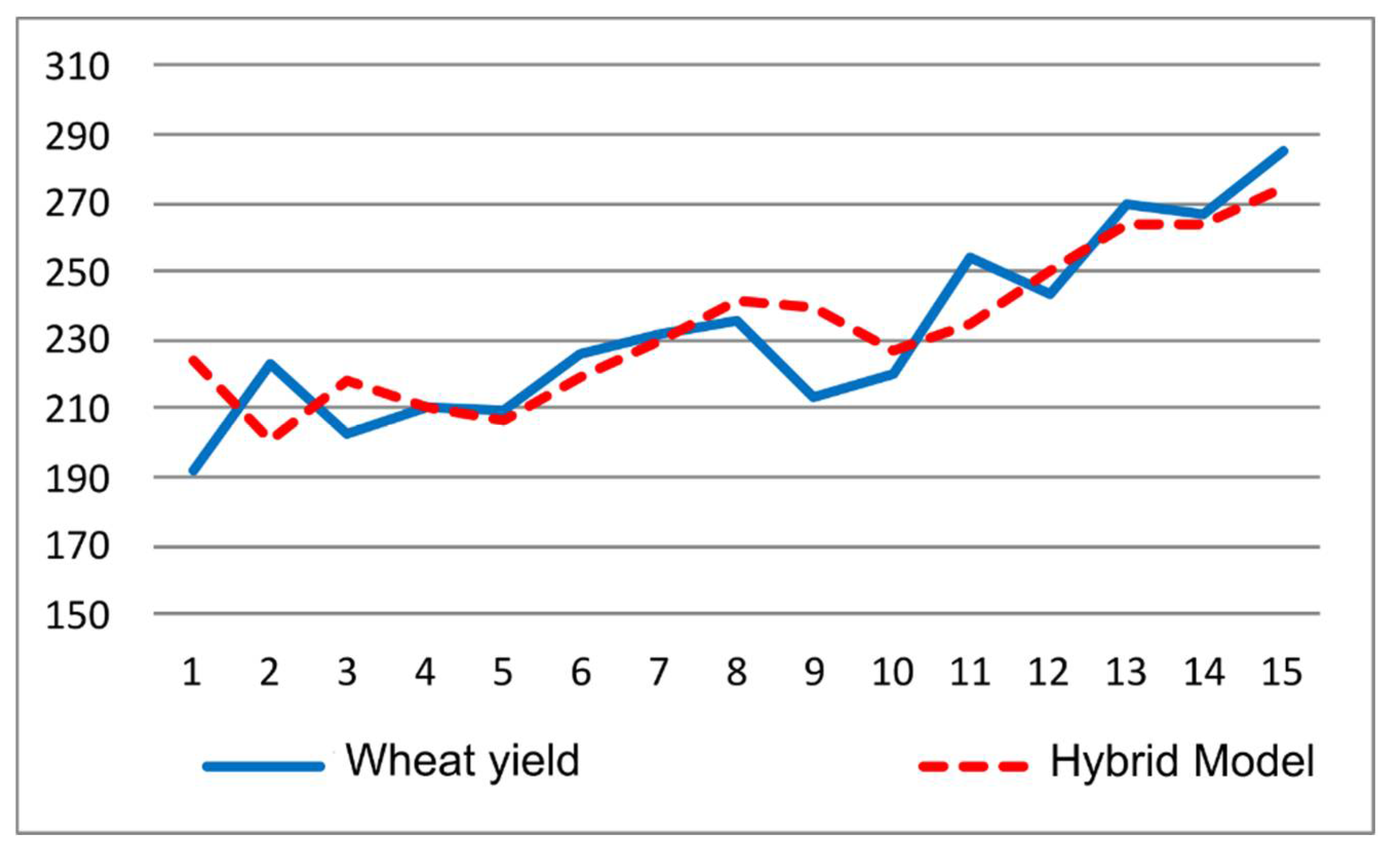

4.5. Wheat Yield in Turkey

5. Conclusion

Funding

Conflicts of Interest

References

- Tsay, R.S.; Chen, R. Nonlinear Time Series Analysis; John Wiley & Sons, 2018; ISBN 978-1-119-26405-7.

- Bates, J.M.; Granger, C.W.J. The Combination of Forecasts. Operational Research Quarterly 1969, 20, 19.

- Smyl, S. A Hybrid Method of Exponential Smoothing and Recurrent Neural Networks for Time Series Forecasting. International Journal of Forecasting 2020, 36, 75–85.

- Zhang, G.P. Time Series Forecasting Using a Hybrid ARIMA and Neural Network Model. Neurocomputing 2003, 50, 159–175.

- Khashei, M.; Bijari, M. An Artificial Neural Network (p,d,q) Model for Timeseries Forecasting. Expert Systems with Applications 2010, 37, 479–489.

- Banihabib, M.E.; Ahmadian, A.; Valipour, M. Hybrid MARMA-NARX Model for Flow Forecasting Based on the Large-Scale Climate Signals, Sea-Surface Temperatures, and Rainfall. Hydrology Research 2018, 49, 1788–1803.

- Camelo, H. do N.; Lucio, P.S.; Leal Junior, J.B.V.; Carvalho, de P.C.M.; Santos, dos D. von G. Innovative Hybrid Models for Forecasting Time Series Applied in Wind Generation Based on the Combination of Time Series Models with Artificial Neural Networks. Energy 2018, 151, 347–357.

- Farajzadeh, J.; Alizadeh, F. A Hybrid Linear-Nonlinear Approach to Predict the Monthly Rainfall over the Urmia Lake Watershed Using Wavelet-SARIMAX-LSSVM Conjugated Model. Journal of Hydroinformatics 2018, 20, 246–262.

- Moeeni, H.; Bonakdari, H. Impact of Normalization and Input on ARMAX-ANN Model Performance in Suspended Sediment Load Prediction. Water Resources Management 2018, 32, 845–863.

- Buyuksahin, U.C.; Ertekin, S. Improving Forecasting Accuracy of Time Series Data Using a New ARIMA-ANN Hybrid Method and Empirical Mode Decomposition. Neurocomputing 2019, 361, 151–163.

- Chakraborty, T.; Chattopadhyay, S.; Ghosh, I. Forecasting Dengue Epidemics Using a Hybrid Methodology. Physica A: Statistical Mechanics and Its Applications 2019, 527, 121266.

- Safari, A.; Davallou, M. Oil Price Forecasting Using a Hybrid Model. Energy 2018, 148, 49–58.

- Aasim; Singh, S.N.; Mohapatra, A. Repeated Wavelet Transform Based ARIMA Model for Very Short-Term Wind Speed Forecasting. Renewable Energy 2019, 136, 758–768.

- Liu, H.-H.; Chang, L.-C.; Li, C.-W.; Yang, C.-H. Particle Swarm Optimization-Based Support Vector Regression for Tourist Arrivals Forecasting. Computational Intelligence and Neuroscience 2018, 2018, 6076475.

- Madan, R.; Mangipudi, P.S. Predicting Computer Network Traffic: A Time Series Forecasting Approach Using DWT, ARIMA and RNN. In 2018 Eleventh International Conference on Contemporary Computing (IC3); Aluru, S., Kalyanaraman, A., Bera, D., Kothapalli, K., Abramson, D., Altintas, I., Bhowmick, S., Govindaraju, M., Sarangi, S.R., Prasad, S., Bogaerts, S., Saxena, V., Goel, S., Eds.; IEEE: New York, 2018; ISBN 978-1-5386-6835-1.

- Babu, C.N.; Reddy, B.E. A Moving-Average Filter Based Hybrid ARIMA–ANN Model for Forecasting Time Series Data. Applied Soft Computing 2014, 23, 27–38.

- Hyndman, R.J.; Koehler, A.B. Another Look at Measures of Forecast Accuracy. International Journal of Forecasting 2006, 22, 679–688.

- Makridakis, S.G.; Wheelwright, S.C.; Hyndman, R.J. Forecasting: Methods and Applications; Wiley, 1998; ISBN 978-0-471-53233-0.

- Khashei, M.; Bijari, M.; Raissi Ardali, G.A. Hybridization of Autoregressive Integrated Moving Average (ARIMA) with Probabilistic Neural Networks (PNNs). Computers & Industrial Engineering 2012, 63, 37–45.

- Sunspot Number | SILSO. Available online: http://www.sidc.be/silso/datafiles (accessed on 10 March 2021).

- Bulmer, M.G. A Statistical Analysis of the 10-Year Cycle in Canada. Journal of Animal Ecology 1974, 43, 701–718.

- Brown, R.G. Smoothing, Forecasting and Prediction of Discrete Time Series; Prentice Hall: Englewood Cliffs, NJ, 1962.

- Babu, C.N.; Reddy, B.E. A Moving-Average Filter Based Hybrid ARIMA–ANN Model for Forecasting Time Series Data. Applied Soft Computing 2014, 23, 27–38.

- Turkish Grain Board (TMO). Turkish Grain Board Statistics. Available online: https://www.tmo.gov.tr/bilgi-merkezi/tablolar (accessed on 5 June 2021).

- The Sun: History. Available online: https://pwg.gsfc.nasa.gov/Education/whsun.html (accessed on 10 March 2021).

- Izenman, A.J. J. R. Wolf and H. A. Wolfer: An Historical Note on the Zurich Sunspot Relative Numbers. Journal of the Royal Statistical Society. Series A (General) 1983, 146, 311–318.

- Suyal, V.; Prasad, A.; Singh, H.P. Nonlinear Time Series Analysis of Sunspot Data. Solar Physics 2009, 260, 441–449.

- Khashei, M.; Bijari, M. An Artificial Neural Network (p,d,q) Model for Timeseries Forecasting. Expert Systems with Applications 2010, 37, 479–489.

- Zhang, G.P. Time Series Forecasting Using a Hybrid ARIMA and Neural Network Model. Neurocomputing 2003, 50, 159–175.

- Cottrell, M.; Girard, B.; Girard, Y.; Mangeas, M.; Muller, C. Neural Modeling for Time Series: A Statistical Stepwise Method for Weight Elimination. IEEE Transactions on Neural Networks 1995, 6, 1355–1364.

- Rao, T.S.; Gabr, M.M. An Introduction to Bispectral Analysis and Bilinear Time Series Models; Springer Science & Business Media, 2012; ISBN 978-1-4684-6318-7.

- Australian Energy Market Operator (AEMO). Available online: https://aemo.com.au/ (accessed on 13 June 2024).

- R Manual. R: Monthly Airline Passenger Numbers 1949–1960. Available online: https://stat.ethz.ch/R-manual/R-patched/library/datasets/html/AirPassengers.html (accessed on 25 March 2021).

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control, 5th ed.; Wiley: Hoboken, NJ, 2015; ISBN 978-1-118-67502-1.

- Faraway, J.; Chatfield, C. Time Series Forecasting with Neural Networks: A Comparative Study Using the Air Line Data. Journal of the Royal Statistical Society: Series C (Applied Statistics) 1998, 47, 231–250.

- Hamzaçebi, C. Improving Artificial Neural Networks’ Performance in Seasonal Time Series Forecasting. Information Sciences 2008, 178, 4550–4559.

- Dunya Economic Daily. Decline in Land Use for Strategic Commodity, Wheat. Available online: https://www.dunya.com/sehirler/stratejik-urun-bugday-ekim-alanlari-daraliyor-haberi-470018 (accessed on 3 May 2021).

- World Bank. Cereal Production (Metric Tons) | Data. Available online: https://data.worldbank.org/indicator/AG.PRD.CREL.MT (accessed on 22 March 2021).

- Tiryakioglu, M.; Demirtas, B.; Tutar, H. Comparison of Wheat Yield in Turkey: Hatay and Şanlıurfa Study Case. Süleyman Demirel Üniversitesi Ziraat Fakültesi Dergisi 2017, 12, 56–67.

| MODEL | MSE | MAD |
|---|---|---|
| Zhang Hybrid AR(9)-MLP | 280.160 | 12.780 |
| Khashei, Bijari AR(9)-MLP I | 234.206 | 12.117 |
| Khashei, Bijari AR(9)-MLP II | 218.642 | 11.447 |
| Khashei, Bijari, Ardali AR(9)-PNN | 234.775 | 11.549 |
| Proposed Hybrid AR(9)-MLP | 240.896 | 11.416 |

| MODEL | MSE | MAD |
|---|---|---|
| Zhang Hybrid AR(12)-MLP [2] | 0.0172 | 0.1040 |
| Khashei, Bijari AR(12)-MLP I [3] | 0.0136 | 0.0896 |
| Khashei, Bijari AR(12)-MLP II [31] | 0.0100 | 0.0851 |
| Khashei, Bijari, Ardali AR(12)-PNN [17] | 0.0115 | 0.0844 |
| Proposed Hybrid AR(12)-MLP* | 0.0129 | 0.0986 |

| MODEL | MSE | MAD |
|---|---|---|
| Babu, Reddy ANN | 22.4304 | 3.7374 |
| Zhang Hybrid ARIMA(1,0,1)-MLP | 27.0377 | 3.9204 |
| Khashei, Bijari ARIMA(1,0,1)-MLP | 26.1396 | 3.8346 |
| Babu, Reddy ARIMA(1,1,1)-MLP | 18.2793 | 3.2342 |
| Proposed Hybrid ARIMA(1,0,1)-MLP | 14.7566 | 2.5634 |

| MODEL | MSE | MAD |
|---|---|---|
| Faraway, Chatfield ANN | 0.024167 | - |
| Hamzacebi Seasonal-ANN | 0.001083 | 0.0280 |
| Proposed Hybrid SARIMA-MLP | 0.001133 | 0.0242 |

| MODEL | MSE | MAD | MAPE (%) |
|---|---|---|---|
| ARIMA (0,1,0) – stand-alone | 375.33 | 16.00 | 6.99 |
| ANN – stand-alone | 348.39 | 16.29 | 6.81 |
| Proposed hybrid model, ARIMA-MLP | 212.24 | 11.09 | 5.00 |
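As a worked reading of the table above, the snippet computes the relative reduction in each error measure achieved by the proposed ARIMA-MLP hybrid over the two stand-alone models, using 100 × (baseline − proposed) / baseline and only the reported values. The variable names are illustrative.

```python
# Error measures copied from the table above
reported = {
    "ARIMA(0,1,0)": {"MSE": 375.33, "MAD": 16.00, "MAPE": 6.99},
    "ANN": {"MSE": 348.39, "MAD": 16.29, "MAPE": 6.81},
}
hybrid = {"MSE": 212.24, "MAD": 11.09, "MAPE": 5.00}


def pct_reduction(baseline, proposed):
    """Relative reduction of the proposed model's error versus a baseline, in percent."""
    return 100.0 * (baseline - proposed) / baseline


reductions = {
    model: {metric: pct_reduction(value, hybrid[metric]) for metric, value in metrics.items()}
    for model, metrics in reported.items()
}

for model, by_metric in reductions.items():
    print(model, {metric: f"{r:.2f}%" for metric, r in by_metric.items()})
```

On these figures, MSE drops by roughly 43% relative to ARIMA(0,1,0) and roughly 39% relative to the stand-alone ANN, while MAPE falls by about 28% and 27% respectively.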

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).