1. Introduction

Data-intensive artificial intelligence models have proven their potential in research and professional workflows related to computer vision for geospatial feature detection and extraction. Here, aerial image data plays a fundamental role, but due to computational requirements, researchers divide the available imagery into smaller image tiles. Some of the most popular works in the field use tile data with sizes of 64 × 64, 128 × 128, 256 × 256, 512 × 512, or 1024 × 1024 pixels. The tile size (also referred to as “image size/resolution” in some parts of the manuscript to represent the dimensions of an image) represents the pixel count in an image, and it is important to note that larger tiles contain more scene information and provide more semantic context. Another key component is the tile overlap, which represents the amount (expressed as a percentage) by which an image tile includes the area of an adjacent tile.

It can be considered that higher tile sizes and overlap levels could enhance the learning process of deep learning (DL) models. However, this aspect has not been properly explored, although additional scene information and continuity have the potential to increase the performance of the trained models. The overlap could be considered as a natural data augmentation technique, as it exposes the model to more aspects of the orthoimage tiles, while the additional scene information from higher tile sizes could impact the generalisation capacity of DL implementations by providing more learning context. This could be beneficial, especially for road classification models (a continuous geospatial element that is complex in nature), as they can learn from slightly different perspectives of the area, potentially improving their ability to generalise.

Therefore, the objective of this work is to study the effects of the tile size and overlap levels on the prediction performance of road classification models on novel test data and to identify the optimal combination of size and overlap that would enable a higher generalisation performance. The authors believe that the study could provide relevant insights applicable to the experimental designs of subsequent geospatial studies, as the identification of the optimal tile size and tile overlap level can contribute to achieving higher DL performance with a lower number of experiments, leading to a decrease in the energy consumption required for training. The starting premise of the study is, “For the classification of continuous geospatial elements in aerial imagery with DL techniques, models trained on data with higher tile size and overlap achieve a higher generalisation capacity”. The study involves the binary classification of aerial orthophotos divided into image tiles labelled ‘Road’ or ‘No Road (Background)’ using deep learning implementations.

The road classification task involves classifying aerial imagery divided into tiles into ‘Road’ or ‘No Road’ classes (a supervised, binary classification task). In binary approaches based on supervised learning, $n$ independent samples $(X_1, Y_1), \ldots, (X_n, Y_n)$ of $(X, Y)$ are observed. The feature $X$ exists in an abstract space $\mathcal{X}$, while the labels $Y \in \{0, 1\}$ (representing “Road”/“No_Road”, in this case). The rule (called a classifier) built to predict $Y$ given $X$ is a function $h: \mathcal{X} \to \{0, 1\}$, and a classifier with a low classification error, $R(h) = P(h(X) \neq Y)$, is desired. Since $Y \in \{0, 1\}$, $Y$ follows a Bernoulli distribution, but assumptions for the conditional distribution of $Y$ given $X$ cannot be made.

However, the regression function of $Y$ onto $X$ can be written as $\eta(X) = E[Y \mid X]$, where $E[Y \mid X] = P(Y = 1 \mid X)$. Then, $Y = \eta(X) + \varepsilon$, where $\varepsilon = Y - \eta(X)$ is the noise responsible for the fact that $X$ may not contain enough information to predict $Y$ perfectly. The presence of this noise means that the classification error $R(h)$ cannot be driven to zero, regardless of what classifier $h$ is used. However, if $\eta(x) = 1/2$, it can be considered that $X$ contains no information about $Y$, and if $\eta(x) > 1/2$, “1” is more likely to be the correct label. A Bayes classifier, $h^*$, can be used as a function defined by the rule in Equation 1.

$$h^*(x) = \begin{cases} 1 & \text{if } \eta(x) > 1/2 \\ 0 & \text{otherwise} \end{cases} \tag{1}$$

This rule, although optimal, cannot be computed because the regression function $\eta$ is not known. Instead, the algorithm has access to the input data $(X_1, Y_1), \ldots, (X_n, Y_n)$, which contains information about $\eta$ and, thus, information about $h^*$. The discriminative approach described in [1] states that assumptions on what image predictors are likely to perform correctly cannot be made; this allows the elimination of image classifiers that do not generalise well. The measure of performance for any classifier $\hat{h}_n$ is its classification error, and it is expected that with enough observations, the excess risk, $R(\hat{h}_n) - R(h^*)$, of a classifier $\hat{h}_n$ will approach zero (by getting as close as possible to $h^*$). In other words, the excess risk can be driven towards zero as the size of the training dataset increases ($n \to \infty$) (if $n$ is too small, it is unlikely that a classifier with a performance close to that of the Bayes classifier $h^*$ will be found). In this way, it is expected to find a classifier that performs well in predicting the classes, even though only a finite number of observations is available (and thus, a partial knowledge of the distribution $P_{X,Y}$) (the mathematical description of the task was adapted from [2]).
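The Bayes rule and the notion of excess risk can be illustrated with a minimal Python sketch. The values of the regression function used below are hypothetical and chosen only for illustration (in practice, this function is unknown), and the helper names are not taken from the study.

```python
def bayes_classifier(eta_x):
    """Bayes rule: predict 1 when eta(x) = P(Y = 1 | X = x) exceeds 1/2."""
    return 1 if eta_x > 0.5 else 0


def pointwise_error(eta_x, predicted_label):
    """Probability of a wrong prediction at a point with regression value eta(x)."""
    # Predicting 1 is wrong with probability 1 - eta(x); predicting 0 with eta(x).
    return 1.0 - eta_x if predicted_label == 1 else eta_x


def risk(etas, classifier):
    """Average classification error of `classifier` over the given points."""
    return sum(pointwise_error(e, classifier(e)) for e in etas) / len(etas)


# Hypothetical values of eta(x) for five tiles (illustrative only).
etas = [0.95, 0.80, 0.50, 0.30, 0.05]

bayes_risk = risk(etas, bayes_classifier)       # lowest achievable error
naive_risk = risk(etas, lambda eta_x: 1)        # classifier that always predicts 1
excess_risk = naive_risk - bayes_risk           # non-negative by construction
```

In this toy setting the Bayes risk is greater than zero because of the label noise, while the excess risk of any other rule measures how far it is from the optimal one.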

Supervised learning tasks also enable the application of transfer learning techniques to reuse the weights resulting from the training of neural networks on large datasets (such as the ImageNet Large Scale Visual Recognition Challenge, or ILSVRC [3]). Transfer learning allows a model to start from pre-learned weights (instead of random weight initialisation) and to make use of the learned feature maps (in computer vision applications, earlier layers extract generic features such as edges, colours, and textures, while later layers contain more abstract features) [4].

A large dataset with high variability is fundamental for obtaining DL models that have a high generalisation capacity. The use of a high-quality dataset is also important for the statistical analysis of their performance. For this reason, the SROADEX data [5] (containing binary road information covering approximately 8650 km² of the Spanish territory) was used to generate new datasets featuring tiles with sizes of 256 × 256, 512 × 512, or 1024 × 1024 pixels and 0% or 12.5% overlap. The DL models were trained under twelve scenarios based on the combination of different tile sizes, overlap levels, and Convolutional Neural Network (CNN) architectures. Except for these factors, the training of the road classification models was carried out under the same conditions (same hyperparameters, and data augmentation and transfer learning parameters), so that differences in performance metrics are mainly caused by the considered factors. The experiments were repeated three times to reduce the randomness of convergence associated with DL models and enable the statistical analysis, as ANOVA is valid with as few as three samples (a higher number of repetitions would have resulted in unrealistic training times).

To evaluate the generalisation capability of the models, a test set containing new tiles from a single orthoimage from a north-western region of Spain (Galicia, unseen during training and validation) was generated. The test area covers approximately 825 km² and can be considered highly representative of the Spanish geography. Afterwards, multiple descriptive, inferential, and main and interaction effect tests were applied to statistically analyse the performance and assess the impact of the tile size, tile overlap, and convolutional neural network (CNN) architecture on the computed metrics. The results show that a higher tile size and overlap enable the development of models that achieve improved road classification performance on unseen data. The findings could guide future work in optimising the mentioned aspects for better model performance.

The main contributions are summarised as follows.

The impact of the tile size and overlap levels on the binary classification of roads was studied on a very large-scale dataset containing aerial imagery covering approximately 8650 km² of the Spanish territory. Two popular CNN models were trained on datasets with different combinations of tile sizes (256 × 256, 512 × 512, or 1024 × 1024 pixels) and tile overlaps (0% and 12.5%) to isolate their effect on performance. The evaluation was later carried out on a new orthoimage of approximately 825 km² containing novel data.

An in-depth descriptive and inferential statistical analysis and evaluation was performed next. The main effects of tile size, tile overlap and CNN architecture on the performance metrics obtained on testing data, were found to be highly significant (with computed p-values lower than 0.001). Their joint two-way and three-way interaction effects on the performance had different levels of significance and varied from highly significant to non-significant.

Additional perspectives on the impact of these factors on the performance are provided through an extensive discussion, where additional insights and limitations are described and recommendations for similar geo-studies are proposed.

The rest of the manuscript is organised as follows. Section 2 presents similar studies that are found in the specialized literature. Section 3 describes the data used for training and evaluating the DL models. Section 4 details the training method applied. In Section 5, the performance metrics on unseen data are reported and statistically analysed. The results are extensively discussed in Section 6. Finally, Section 7 presents the conclusions of the study.

2. Related Works

Given the expected rise of autonomous vehicles and their need for higher-definition road cartography and better road decision support systems, road classification is becoming one of the more important geo-computer vision applications for public agencies. Nonetheless, roads, as continuous geospatial elements, present several challenges that make their classification in aerial imagery difficult: different spectral signatures caused by the varied materials used for pavement, the high variance of road types (highways, secondary roads, urban roads, etc.), the absence of clear markings, and differences in widths. Furthermore, the analysis of remotely sensed images also presents associated challenges such as the presence of occlusions or shadows in the scenes. Therefore, the task of road classification can be considered complex.

Recent work on this topic takes the deep learning approach to model the input-output relations of the data and obtain a more complex classification function capable of describing road-specific features and achieving a higher generalisation capacity (indicated by a high performance on testing data that was not modelled during training).

In the specialized literature, authors such as Reina et al. [6] and Lee et al. [7], among others, identify the need to tile large scenes from medical or remote sensing images due to the memory limitations of GPUs (mainly for semantic segmentation tasks). It was observed that the tiling procedure introduces artifacts in the feature map learning of the models, and the analysis of optimal tile sizes becomes necessary.

After evaluating ten tile sizes varying from 296 × 296 to 10,000 × 10,000 pixels, Lee et al. [7] conclude that the best tile sizes for lung cancer detection are between 500 and 1000 pixels. It is important to note that the number of images used in medical imaging rarely surpasses a few dozen, and training models like VGGNet [8] (featuring tens of millions of parameters) on such small datasets can be considered a strong indicator of overfitting (where DL models “memorize” the noise in the training data to achieve a higher performance). A higher occurrence of prediction errors near the borders of the tiles was also identified in relevant geo-studies such as [9] or [10]. For these reasons, and considering the size of the dataset, we considered it appropriate to evaluate three popular tile sizes found in relevant geo-studies (namely, 256 × 256, 512 × 512 and 1024 × 1024 pixels).

Ünel et al. [11] recognised the benefit of image tiling in surveillance applications and proposed a PeleeNet model for real-time detection of pedestrians and vehicles from high-resolution imagery. Similarly, Akyon et al. [12] proposed the Slicing Aided Hyper Inference (SAHI) framework for surveillance applications to detect small objects and objects that are far away in the scene.

In addition, relevant studies in the medical field [13] have also noted the convenience of having overlap between tiles in the training dataset. Some authors consider that an optimal overlap percentage of 50% [14] can be applied as a data augmentation technique to improve the performance of the models. Nonetheless, we only selected the 12.5% level of overlap for this study because it ensures that information near tile edges can be correctly processed during training and avoids the lower data variability that would be introduced by generating tiles with higher overlap (possibly leading to a biased model, as it would be exposed to many similar data points). In addition, a smaller level of overlap also avoids the processing of an excessive amount of information resulting from higher overlap levels.

Recently, Abrahams et al. [15] proposed a data augmentation strategy based on random rotations and reflections of the training tiles (without overlap between tiles) called “Flip-n-Slide” to perform semantic segmentation of images where the orientation of the objects in the scenes is important. These studies indicate the relevance of this work in the current geo-computer vision landscape.

Since our DL task is to identify parts of large high-resolution aerial images that contain road elements (at country level, for a subsequent semantic segmentation of the tiles that contain roads), the purpose of our research is to study the optimal tile size for division (tiling) and tile overlap strategy. We consider this aspect to be a topic of great interest for current geo-studies and projects.

As no additional references relevant to our study were identified, articles related to the use of CNNs on image data for road applications that were published after 2018 in peer-reviewed scientific journals will be described and commented on next. In this regard, one of the most discussed areas in the literature is the detection of road defects for safety and maintenance purposes. Chun and Ryu [16] proposed the use of CNNs and autoencoders to classify oblique images acquired by a circulating vehicle and identify asphalt defects that can cause accidents. Semi-supervised methods were applied to create a novel dataset and data augmentation techniques were used to train the models that demonstrated their effectiveness on 450 test datasets. Maeda et al. [17] identified the lack of datasets of road deficiencies that would allow road managers to be aware of the defects and evaluate their state for use or repair without compromising safety. The authors generated a dataset of approximately 9000 images and labelled approximately 14,000 instances with eight types of defects. Object detection models were trained afterward to locate the defects in images and additional tests were performed in various scenarios in Japan. Liang et al. [18] proposed the Lightweight Attentional Convolutional Neural Network to detect road damage in real time on vehicles.

Rajendran et al. [19] proposed the use of a CNN to identify potholes and road cracks in images taken from a camera connected to a Universal Serial Bus (USB) to create an IoT system that informs the authorities responsible for such defects (so further actions or repair planning can be taken). Zhang et al. [20] benchmarked different CNN models based on AlexNet, ResNet, SqueezeNet, or ConvNet to detect faults and compare their performance. Fu et al. [21] proposed a CNN architecture (StairNet) and compared different trained network models based on EfficientNet, GoogLeNet, VGG, ResNet, and MobileNet to identify defects in the concrete pavement. For validation, a platform to run the algorithms was created and a proof of concept on the campus of Nanjing University was developed. Finally, Guzmán-Torres et al. [22] proposed an improvement in the VGGNet architecture to classify defects in road asphalt. The training was carried out on the dataset containing 1198 image samples that they generated (HWTBench2023). Transfer learning was used during training to achieve accuracy and F1 score metrics of over 89%.

The works of He et al. [23], Fakhri and Shah-Hosseini [24], Zhu et al. [25] and Jiang Y. [26] use CNNs for the detection of roads from satellite or very high resolution images. In the first article, the authors seek to optimise the hyperparameters of the models. In the second work, in addition to the RGB (Red, Green, Blue) images, prediction data obtained from a previous binary road classification with Random Forest is also incorporated as input for the CNN models to achieve F1 score metrics of 92% on the Massachusetts dataset. In the third paper, qualitative improvements in the results are obtained by replacing the ReLU function in the fully connected network (FCN) with a Maximum Feature Mapping (MFM) function, so that the suppression of a neuron is not done by threshold, but by a competitive relation. In the fourth case, the authors propose a post-processing of the results of the trained CNN network based on a Wavelet filter to eliminate the noise of the areas without roads, obtaining as a result a binary “Road/No road” classification.

There are also studies aimed at identifying road intersections. Higuchi and Fujimoto [27] implemented a system that acquires information with a two-dimensional laser range finder (2D LRF), allowing the determination of the movement direction of the autonomous navigating robot to detect road intersections. Eltaher et al. [28] generated a novel dataset by labelling approximately 7550 road intersections in satellite images and trained the EfficientDet object detection model to obtain the centre of the intersections with average accuracy and recall levels of 82.8% and 76.5%, respectively.

Many existing studies focus on differentiating the types of road surfaces. Dewangan and Sahu [29] used computer vision techniques to classify the road surface into five classes (curvy, dry, ice, rough, and wet) and obtained accuracies of over 99.9% on the Oxford RobotCar dataset. Lee et al. [30] proposed a model based on signal processing using a continuous wavelet transform, acoustic sensor information, and a CNN to differentiate thirteen distinct types of pavements in real time. The model was trained on a novel dataset containing seven types of samples (with around 4000 images per category) and delivered an accuracy superior to 95%.

Another important task is the processing of aerial imagery with road information to assign a binary label [31] or a continuous value [32] to the tile. Cira et al. [33] proposed two frameworks based on CNNs to classify image tiles of size 256 × 256 pixels that facilitate the discrimination of image regions where no roads are present to avoid applying semantic segmentation to image tiles when roads are not expected. de la Fuente Castillo et al. [34] proposed the use of grammar-guided genetic programming to obtain new CNN networks for binary classification of image tiles that achieve performance metrics similar to those achieved by other state-of-the-art models. In [32], CNNs were trained to process aerial tiles with road information to predict the orientation of straight arrows on marked road pavement.

In the literature review, it was noted that most existing works focus on processing reduced datasets that cover smaller areas and generally feature ideal scenes (where road elements are grouped into clearly defined regions [35]). Nonetheless, the use of reduced datasets may not be suitable if models capable of large-scale classification are pursued (as also discussed in [36]). For this reason, data from the SROADEX dataset [5] (containing orthoimagery covering approximately 8650 km² of the Spanish territory that was labelled with road information) was used in this study. This adds real-world complexity to the road classification task, to avoid focusing on ideal study scenes and to achieve DL models with a high generalisation capacity.

3. Data

The data used for this study are RGB aerial orthoimages from the Spanish regions covered by the SROADEX dataset [5], binary labelled with the “Road” and “No road” classes. More details regarding the procedure applied for labelling the data and tile samples can be found in the SROADEX data paper [5]. As mentioned above, the orthoimages forming the SROADEX dataset cover approximately 8650 km² of the Spanish territory.

The digital images within SROADEX have a spatial resolution of 0.5 m and are produced and openly provided by the National Geographical Institute of Spain through the National Plan of Aerial Orthophotography product (Spanish: “Plan Nacional de Ortofotografía Aérea”, or PNOA [37]). They are produced by Spanish public agencies that acquired the imagery in photogrammetric flights performed under optimal meteorological conditions. The resulting imagery was orthorectified to remove geometric distortions, radiometrically corrected to balance the histograms, and topographically corrected using terrestrial coordinates of representative ground points, following the same standardised procedure defined by their producers.

Taking advantage of this labelled information, the full orthoimages were divided into datasets featuring tiles with (1) a size of 256 × 256 pixels and 0% overlap, (2) a size of 256 × 256 pixels and 12.5% overlap, (3) a size of 512 × 512 pixels and 0% overlap, (4) a size of 512 × 512 pixels and 12.5% overlap, (5) a size of 1024 × 1024 pixels and 0% overlap, and (6) a size of 1024 × 1024 pixels and 12.5% overlap. The tiling strategy applied involved a sequential division of the full orthoimage with the different combinations of tile overlap and tile size selected. To ensure a correct training, tiles with road elements shorter than 25 m were deleted (in the case of tiles of 512 × 512 pixels, this means that the sets only contain tiles where roads occupy at least 50 pixels; while in the case of 1024 × 1024 pixels, they only contain tiles where roads occupy more than 21 pixels). Afterwards, each combination of tile size and tile overlap was split into training and validation sets by applying the division criterion of 95:5%. In this way, six training and six validation sets, corresponding to the combination of each tile size and tile overlap, were generated.
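The sequential tiling with overlap described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used to build the datasets: the function names are hypothetical, the overlap is assumed to be implemented as a reduced stride between consecutive tiles, and incomplete tiles at the image border are assumed to be dropped.

```python
def tile_origins(length, tile, overlap):
    """Start offsets of tiles of `tile` pixels along an axis of `length` pixels.

    `overlap` is the fraction of a tile shared with its neighbour
    (0.0 for no overlap, 0.125 for 12.5%).
    """
    stride = int(tile * (1.0 - overlap))
    if stride <= 0:
        raise ValueError("overlap must be lower than 1")
    # Assumption: tiles that would extend beyond the image border are skipped.
    return list(range(0, length - tile + 1, stride))


def tile_grid(width, height, tile, overlap):
    """Top-left (x, y) corners of all tiles covering a width x height image."""
    return [(x, y)
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]


# Stride in pixels for each tile size at 12.5% overlap.
strides = {size: int(size * (1.0 - 0.125)) for size in (256, 512, 1024)}

# Hypothetical axis of 10,000 pixels (not a real orthoimage dimension).
n_without_overlap = len(tile_origins(10_000, 256, 0.0))
n_with_overlap = len(tile_origins(10_000, 256, 0.125))

# At a spatial resolution of 0.5 m, a 25 m road segment spans 50 pixels.
min_road_pixels = int(25 / 0.5)
```

Under these assumptions, the 12.5% overlap yields more tiles from the same image, which is why it can be seen as a form of data augmentation.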

The test set is formed by approximately 825 km² of binary road data from four novel regions that were divided into image tiles with 0% overlap at the three tile sizes considered. The test areas were selected because they contain diverse types of representative Spanish scenery and enable the statistical validity of the tests applied to objectively evaluate the generalisation capacity of the models.

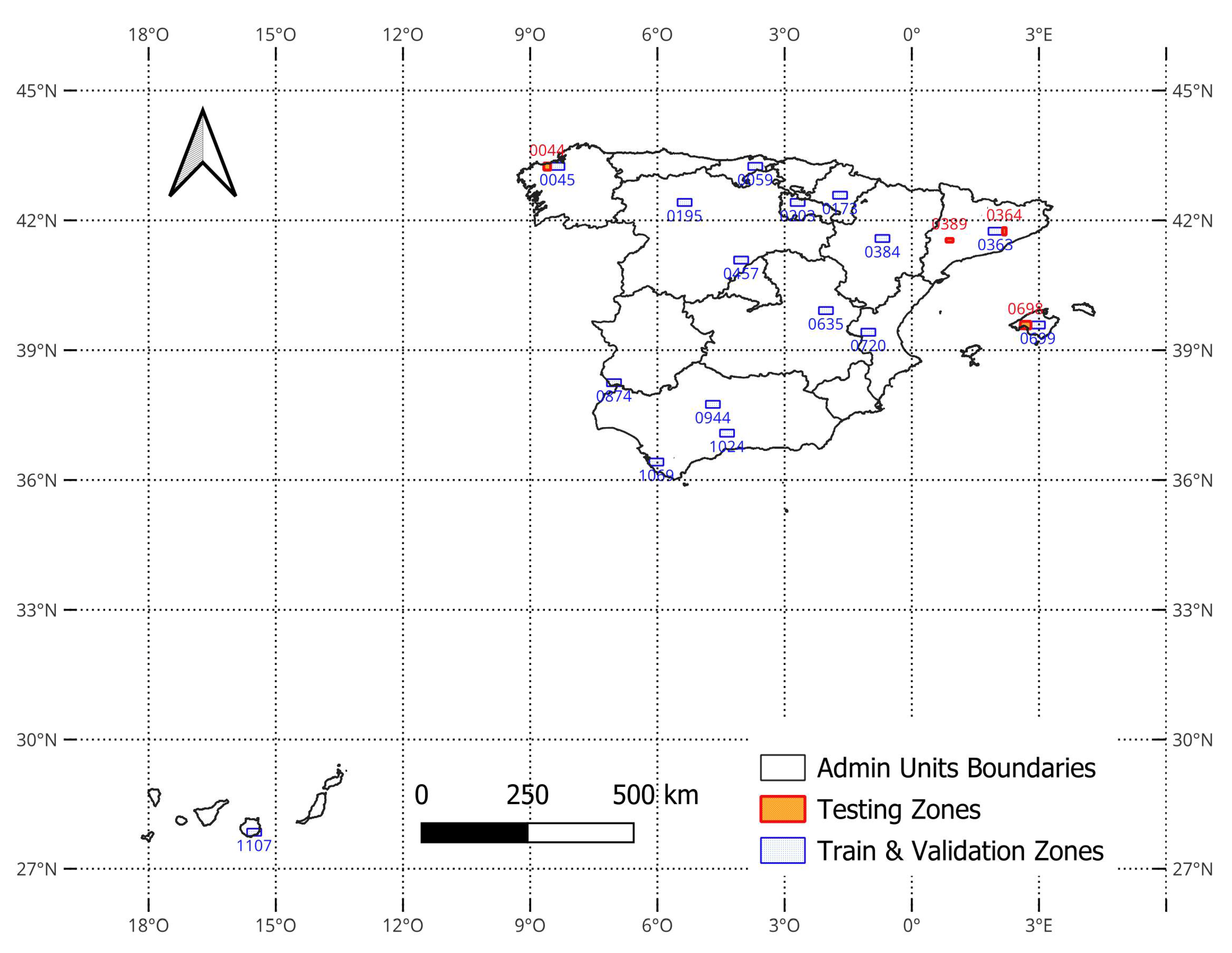

Figure 1 shows the territorial distribution of the train and validation sets (SROADEX data, signalled with blue rectangles) and the test area (signalled with orange rectangles), while Table 1 shows the number of images and pixels used for training, validation, and testing sets across the different tile sizes and overlaps considered.

In Table 1, it can be observed that the percentage of tiles containing roads increases as the tile size increases, to the detriment of tiles that do not contain road elements. For instance, at a tile size of 256 × 256 pixels, the dataset is balanced in terms of the two binary classes (approximately 47.5% of data is labelled with the positive class and 52.5% is labelled with the negative class), whereas, at a tile size of 1024 × 1024 pixels, the data labelled with the “Road” class represents approximately 90% of the samples. This is to be expected, given that, as the scene area increases, the probability of a tile not containing a road decreases. As a result, the class imbalance between the “Road” and “No Road” classes increases as the tile size increases. This implies that the training procedure must incorporate balancing techniques to ensure a correct training and prevent models that are biased towards the positive class.

Regarding the normality of the data, given the size of the sample data (approximately 16 billion pixels × 3 RGB channels, organized in approximately 527,000 images in SROADEX), and following the Central Limit Theorem [38], which states that the distribution of the mean of a large number of independent samples approaches a normal distribution as the sample size becomes larger, regardless of the actual distribution shape of the population, it was assumed that the training and testing data follow a normal distribution. Therefore, given the large dataset size, instead of conducting an empirical test of normality, which would be computationally and practically challenging, we proceeded with the analysis based on the assumption of normality explained, which is a common practice in such scenarios.

4. Training Method

To carry out a comparative study of the effect that different neural network architectures trained for the same task have on performance, two classification models, VGG-v1 and VGG-v2 (proposed in Table 1 of [39]), that have demonstrated their appropriateness in relevant works related to geospatial object classification [10,40], were selected for training. Briefly, the models are based on the convolutional base of VGG16 [8], followed by a global average pooling layer, two dense layers (with [512, 512] units for VGG-v1 and [3072, 3072] units for VGG-v2) with ReLU [41] activations, a dropout layer with a ratio of 0.5 for regularisation, together with a final dense layer with one unit and sigmoid activation that enables the binary classification.
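To give a sense of how the two classification heads differ in capacity, the following sketch counts their trainable parameters. It assumes that the global average pooling layer outputs 512 features (the number of filters in the last convolutional block of VGG16) and that every dense layer includes a bias term; the helper names are illustrative and not part of the published code.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def head_params(hidden_units, n_features=512):
    """Trainable parameters of the dense head placed after global average pooling.

    The dropout layer has no parameters, so it does not appear here.
    """
    total = 0
    previous = n_features
    for units in hidden_units:
        total += dense_params(previous, units)
        previous = units
    # Final dense layer with one unit and sigmoid activation.
    total += dense_params(previous, 1)
    return total


vgg_v1_head = head_params([512, 512])
vgg_v2_head = head_params([3072, 3072])
```

Under these assumptions, the VGG-v2 head has roughly twenty times more trainable parameters than the VGG-v1 head, while both share the same convolutional base.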

Table 2 shows that the road classification models were trained under twelve different scenarios, each with a different combination of CNN architecture (VGG-v1 and VGG-v2), size (256 × 256 pixels, 512 × 512 pixels, and 1024 × 1024 pixels), and overlap (0% and 12.5%). This approach enables a detailed understanding of how these factors interact and impact the performance of the trained models and which combinations deliver the best results.
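The factorial design can be enumerated with a short sketch. The ordering produced below is arbitrary and does not necessarily match the scenario numbering of Table 2; the variable names are illustrative.

```python
from itertools import product

architectures = ["VGG-v1", "VGG-v2"]
tile_sizes = [256, 512, 1024]      # pixels per side
overlaps = [0.0, 12.5]             # percent
repetitions = 3                    # each scenario is trained three times

# One scenario per combination of architecture, tile size, and overlap.
scenarios = list(product(architectures, tile_sizes, overlaps))

# One training run per scenario and repetition.
runs = [(scenario, repetition)
        for scenario in scenarios
        for repetition in range(1, repetitions + 1)]
```

The 2 × 3 × 2 combinations give the twelve training scenarios, and the three repetitions result in thirty-six training runs in total.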

To reduce the sources of uncertainty, the standard procedure for training DL models for classification was applied. In this regard, the pixel values of the orthoimage tiles from the training and validation sets were normalised (rescaled from the range [0, 255] to the range [0, 1]) to avoid calculations on large numbers. Afterwards, in-memory data augmentation techniques with small parameter values of up to 5–10% were applied to the training images in the form of random rotations, height and width shifts, or zooming inside tiles (if empty pixels resulted from these operations, they were filled with the values of the nearest boundary pixel). Furthermore, random vertical and horizontal flips were applied to expose the convolutional models to more data aspects and to control the overfitting behaviour specific to models with a large number of trainable parameters. Given the structured approach to data collection, instead of a random weight initialisation approach, the weights were initialised by applying transfer learning from ILSVRC [3]. This enables the re-use of the features learned on the large-scale data for the road classification task.

In Section 3, it was discussed that the probability of a tile not containing a road decreases as the tile size increases (there is an inherently higher probability that a larger area contains at least one road), which resulted in a higher class imbalance in favour of the “Road” class. To tackle the class imbalance observed in Table 1 and the associated overfitting behaviour, a weight matrix was applied to penalise the road classification model when wrongly predicting the over-represented class. The weight matrix contains class weights that were computed with Equation 2.

$$w_j = \frac{n}{k \cdot n_j} \tag{2}$$

In Equation 2, $w_j$ represents the weight for class $j$, $n$ represents the total number of samples in the training set, $k$ is the number of classes (in this case, $k = 2$), while the term $n_j$ represents the number of samples in class $j$. The formula ensures that the underrepresented “No_Road” class will have a higher influence on the training evolution to balance the overrepresentation of the “Road” class at higher tile sizes.
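As an illustration, the class weights can be computed with the common “balanced” heuristic, in which the weight of a class is the total number of samples divided by the product of the number of classes and the number of samples in that class. This form is assumed here because it matches the terms described for Equation 2; the sample counts below are hypothetical and only mimic the roughly 90% “Road” share reported for the largest tile size.

```python
def class_weights(counts):
    """Balanced class weights: n / (k * n_j) for every class j."""
    n = sum(counts.values())   # total number of training samples
    k = len(counts)            # number of classes
    return {label: n / (k * n_j) for label, n_j in counts.items()}


# Hypothetical class counts (illustrative only, not taken from Table 1).
example_counts = {"Road": 900, "No_Road": 100}
weights = class_weights(example_counts)
```

With these counts, the under-represented “No_Road” class receives a weight of 5.0, while the “Road” class receives a weight below 1, so errors on the minority class contribute more to the loss.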

The loss function is the binary cross-entropy, defined in Equation 3.

$$L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \cdot \log(\hat{y}_i) + (1 - y_i) \cdot \log(1 - \hat{y}_i) \right] \tag{3}$$

In the context of binary road classification, the loss from Equation 3 measures the “closeness” between the expected “Road” and “No Road” labels and the predictions delivered by the road classification model; $N$ represents the number of available samples in the training scenario, $y_i$ is the true label of the $i$-th sample ($y_i$ is either 0 or 1), and $\hat{y}_i$ represents the predicted probability of the $i$-th sample being in the positive class (a value between 0 and 1 that represents the model’s confidence that the label of the $i$-th sample is “Road”; a decision threshold of 0.5 is applied to infer the positive or negative class label). The dot symbol “·” indicates an element-wise multiplication between the corresponding vectors.
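A plain-Python sketch of the class-weighted binary cross-entropy is given below. It is a simplified illustration and not the Keras implementation used in the experiments: the clipping constant, the function name, and the averaging over the number of samples are assumptions made for this example.

```python
import math


def weighted_bce(y_true, y_prob, w_pos=1.0, w_neg=1.0, eps=1e-7):
    """Mean binary cross-entropy with each sample scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip the probability to avoid log(0).
        p = min(max(p, eps), 1.0 - eps)
        sample_loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += (w_pos if y == 1 else w_neg) * sample_loss
    return total / len(y_true)


# Uninformative predictions give a loss of ln(2) when both weights are 1.
uninformative = weighted_bce([1, 0], [0.5, 0.5])

# Confident, correct predictions give a much lower loss.
confident = weighted_bce([1, 0], [0.9, 0.1])

# A higher weight on the negative class increases the cost of a missed "No Road" tile.
penalised = weighted_bce([0], [0.9], w_neg=5.0)
unweighted = weighted_bce([0], [0.9])
```

The last two values show the intended effect of the class weights: the same mistake on a minority-class sample costs five times more when that class is weighted by 5.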

The resulting weighted loss function is minimised with the Adam optimiser [42] (with a learning rate of 0.001) by applying the stochastic gradient descent approach (as the selected loss function is differentiable), the loss for each sample being scaled by the class weight defined earlier. Intuitively, a model that predicts the expected labels will achieve a low loss value.

In the experimental design, it was established that the DL model configurations associated with each training scenario from Table 2 would be trained in three different iterations for thirty epochs over the entire dataset. It is important to note that although higher sizes provide more scene information, they also require more computational resources and, consequently, a smaller batch size. The batch size selected for each training scenario was the maximum allowed by the available graphics card. All training experiments were carried out on a Linux server with the Ubuntu 22.04 operating system that featured a dedicated NVIDIA V100-SXM2 graphics card with 16 gigabytes of video random access memory (VRAM). As for the software, the training and evaluation scripts were built with Keras [43] and TensorFlow [44], together with their required library dependencies. The code featuring the training and evaluation of the DL implementations, the test data, and the resulting road classification models are available in the Zenodo repository [45] and are distributed under a CC-BY 4.0 license.

5. Results

The performance metrics of the road classification models trained under the scenarios described in Table 2 are reported in Appendix A. The performance is expressed in terms of loss, accuracy, ROC-AUC score, and precision, recall, and F1 score for the training, validation, and test sets, for each of the three training iterations carried out. The decision threshold for the probability predicted by the model was 0.5 (as discussed in the “Introduction”): a predicted probability higher than or equal to 0.5 is considered a positive sample, while tiles with a predicted value lower than the threshold are assigned to the negative class.

The loss is calculated with Equation 3. Accuracy is computed using the confusion matrix of the model (expressed in terms of true positive (TP), true negative (TN), false positive (FP) and false negative (FN) predictions) and measures the proportion of correctly predicted samples over the total set size (Equation 4). The precision (proportion of TP among the positive predictions of the model, Equation 5), recall (proportion of TP among the actual number of positive samples, Equation 6) and F1 score (harmonic mean of precision and recall, Equation 7) metrics offer a more comprehensive perspective of the misclassified cases compared to accuracy, as they consider both FP and FN predictions. The ROC-AUC score is an indicator of the capacity of a model to distinguish between the positive and negative classes and measures the area under the receiver operating characteristic curve (a plot of the TP rate against the FP rate at various thresholds from (0,0) to (1,1)) by using the prediction scores and the true labels.
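The threshold-based metrics described above can be sketched in a few lines of Python. The labels and probabilities below are hypothetical, the function name is illustrative, and the ROC-AUC score is left out because it requires sweeping the threshold rather than fixing it at 0.5.

```python
def classification_metrics(y_true, y_prob, threshold=0.5):
    """Accuracy, precision, recall, and F1 score from thresholded probabilities."""
    # A probability higher than or equal to the threshold is a positive prediction.
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"tp": tp, "tn": tn, "fp": fp, "fn": fn,
            "accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels (1 = "Road") and predicted probabilities for eight tiles.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
probabilities = [0.9, 0.6, 0.4, 0.2, 0.7, 0.1, 0.5, 0.3]
metrics = classification_metrics(labels, probabilities)
```

In this example, one “Road” tile is missed and one “No Road” tile is wrongly flagged, so accuracy, precision, recall, and F1 score all equal 0.75.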

The metrics on the training set range from 0.0719 to 0.2270 in the case of the loss, from 0.9072 to 0.9715 in terms of accuracy, from 0.8738 to 0.9190, 0.9073 to 0.9650, and 0.8223 to 0.9137 for the F1 score, precision, and recall metrics, respectively, and from 0.9703 to 0.9909 in the case of the ROC-AUC score. The metrics on the validation set range from 0.0878 to 0.2574 in terms of loss, from 0.8959 to 0.9673 for accuracy, from 0.8475 to 0.9030, 0.8930 to 0.9650, and 0.7939 to 0.9021 for the F1 score, precision, and recall, respectively, and from 0.9628 to 0.9853 in terms of the ROC-AUC score. The metrics on the test set range from 0.0947 to 0.4951 in terms of loss, from 0.8222 to 0.9763 in terms of accuracy, from 0.7924 to 0.8862, 0.8051 to 0.9764, and 0.7662 to 0.8360 in the case of the F1 score, precision, and recall, respectively, with the AUC-ROC score ranging from 0.8939 to 0.9840.

The values of these metrics also vary across different training scenarios and their experiment iterations. For instance, the validation loss in training scenario 3 ranges from 0.1978 to 0.2152, while for scenario 6 it ranges from 0.0941 to 0.1035. Other examples are the training F1 scores from scenario 8 (ranging from 0.9104 to 0.9133) and scenario 9 (with values ranging from 0.9051 to 0.9134), or the test ROC-AUC scores that range from 0.9700 to 0.9706 in training scenario 6 and from 0.9356 to 0.9517 in the case of training scenario 5.

Therefore, the values obtained present important differences across the training scenarios considered and across the experiment iterations and suggest that the trained road classification models were learning at different rates, probably due to the difference in the model architectures and the tile overlap and tile size levels considered (given that the variability of the training and test sets is similar and that the test set covers the same area). This indicates that a more in-depth analysis is needed to better understand the differences in the performance metrics. This study is centred on statistically studying the performance metrics from Appendix A and uses the metrics obtained by the models on unseen test data to identify the factors that have the greatest effect on the generalisation capacity of the road classification models. The statistical analysis was performed with the SPSS software [46].

5.1. Mean Performance on Testing Data Grouped by Training Scenarios

First, to explore the relationship between the performance metrics and the training IDs, detailed descriptive statistics were obtained (including means and their standard deviations) and the analysis of variance (ANOVA) was applied to analyse the differences between group means. The dependent variables are the performance metrics on the test set (loss, accuracy, F1 score, precision, recall and ROC-AUC score), while the training scenarios act as fixed factors. The objective was to verify whether statistically significant differences are present between the metrics grouped by training scenario ID (N=3 samples within each training scenario).

The results are presented in

Table 3 in terms of mean performance metrics and their standard deviations, and the ANOVA F-statistics and their p-values. An F-statistic is the result of the ANOVA test applied to verify whether the means of two or more groups are significantly different and represents the ratio of the variance between the group means to the mean variance within the groups. The associated p-value indicates the probability of observing differences between the group means at least as large as those obtained if they were due to chance alone, with a p-value lower than 0.05 being considered statistically significant. The Eta (η) and Eta squared (η2) measures of association are also provided. Eta (η) is a correlation ratio that measures the degree of association between a categorical independent variable and a continuous dependent variable, ranging from 0 (no association) to 1 (perfect association). Eta squared (η2) is an ANOVA measure of effect size that represents the proportion of the total variance in the dependent variable that is associated with the groups defined by the independent variable.
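The quantities described above can be sketched for a one-way design as follows. This is a minimal illustration under the textbook definitions (F as the ratio of the between-group to the within-group mean square, η2 as the between-group share of the total sum of squares); it is not the SPSS procedure used in the study, and the function name and the scores are hypothetical.

```python
def one_way_anova(groups):
    """Return (F, eta, eta_squared) for a list of groups of observations."""
    all_values = [v for g in groups for v in g]
    n_total = len(all_values)
    k = len(groups)
    grand_mean = sum(all_values) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)

    ms_between = ss_between / (k - 1)        # k - 1 degrees of freedom
    ms_within = ss_within / (n_total - k)    # N - k degrees of freedom
    f_stat = ms_between / ms_within

    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared ** 0.5, eta_squared


# Hypothetical test-set scores for three scenarios with N = 3 runs each.
groups = [[0.80, 0.81, 0.82], [0.84, 0.85, 0.86], [0.88, 0.89, 0.90]]
f_stat, eta, eta_squared = one_way_anova(groups)
```

With only three runs per group, a small within-group spread is enough to produce a large F-statistic and an η2 close to 1, which is the pattern described for the training scenarios.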

In

Table 3, it can be observed that all corresponding p-values of the F-statistic are smaller than 0.001 and indicate statistically significant differences in the loss, accuracy, F1 score, precision, recall and ROC-AUC score obtained by the trained models across the different training scenario IDs. These values imply that, for all the studied performance metrics, the variation between groups (different training IDs) is much larger than the variation within groups (same training ID) and suggest that the training ID has a significant effect on the performance of the road classification model across all the metrics considered.

Regarding the η and η2 measures of association, the values are close to 1 and indicate that the training ID had a strong effect on all the metrics considered and that a considerable proportion of the variance in each metric can be explained by the training ID. The values from Table 3 indicate an extremely strong positive association between the accuracy and the training ID of the road classification model, a very strong positive association between the loss, the F1 score, precision and ROC-AUC score and the training ID, and a strong positive association between the recall and the training ID.

The F-statistics and their p-values do not reveal which training IDs are different from the others when there is a significant difference. To reduce the length of the study, the analysis of the boxplots of the performance metrics grouped by training ID was carried out on the loss, F1 score and ROC-AUC score. These metrics are considered appropriate for evaluating the performance on the test set with imbalanced data (in Table 1, it can be observed that the test set features very different numbers of positive and negative images at larger tile sizes), as the F1 score represents the harmonic mean of precision and recall and reflects both false positives and false negatives. The ROC-AUC score is based on the predicted probabilities, indicates the capability of a model to distinguish between the classes, and is widely used for imbalanced datasets. As an additional comment, accuracy is more suitable for evaluating balanced datasets, as it can give a misleading measure of the actual performance in scenarios with class imbalance. A comparison of the training scenarios in terms of performance metrics can be found in

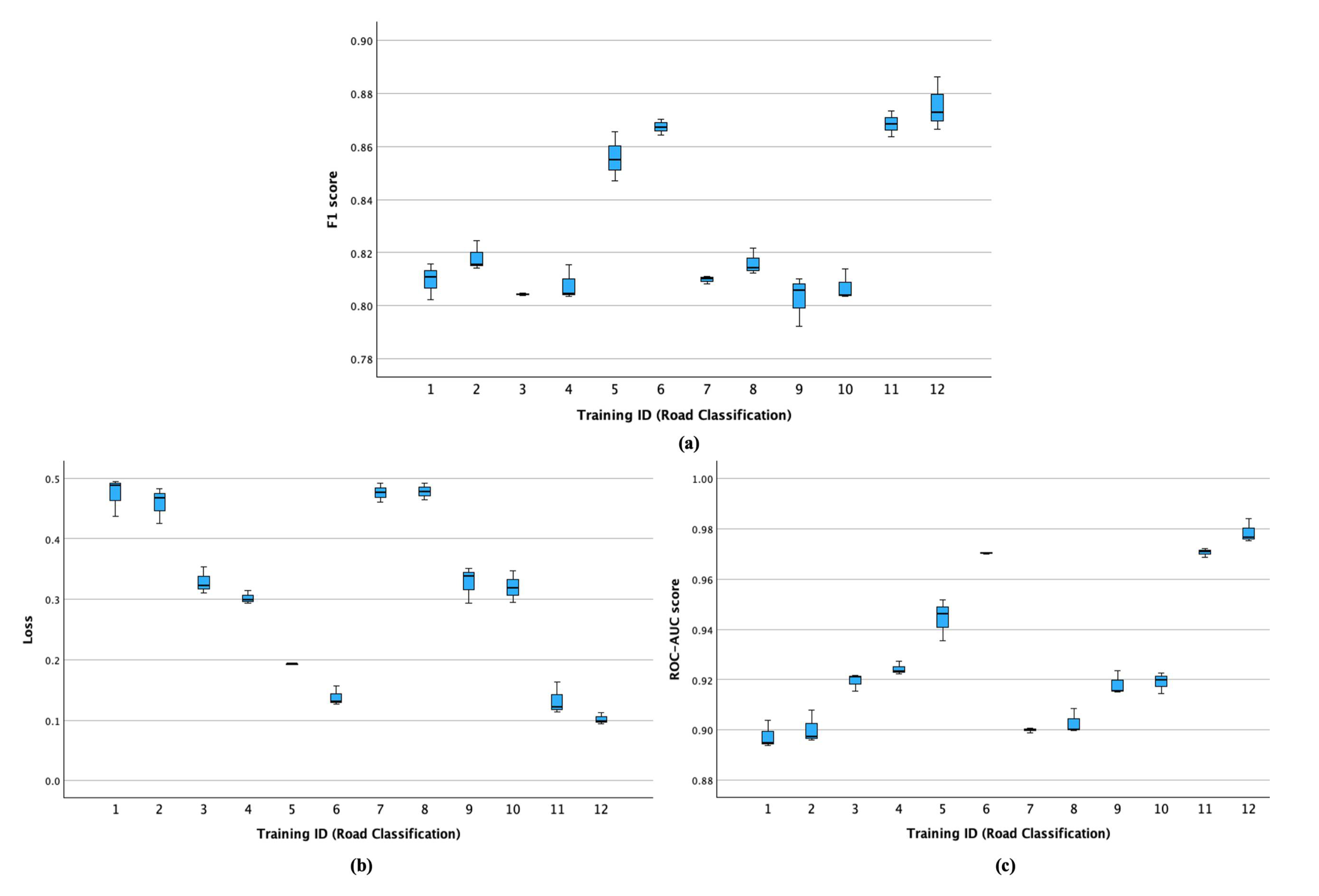

Figure 2.

In

Figure 2, the boxplots of the training scenarios with IDs 1 to 6 present the performance metrics obtained by the VGG-v1 model trained for road classification on datasets featuring tiles with a size of 256 × 256 and 0% overlap (scenario 1) to 1024 × 1024 and 12.5% overlap (scenario 6), while scenarios 7 to 12 contain the same information for the VGG-v2 model. The performance of the two configurations follows a similar pattern. The loss progressively decreases for scenarios 1 to 6 and 7 to 12, while the F1 and ROC-AUC scores seem to increase in the same way (indicating an increase in performance as larger tiles are used). Also, from the overlap perspective, by comparing pairs of consecutive scenarios, the F1 and ROC-AUC scores seem to be higher in scenarios with an even training ID (which feature tiles with an overlap of 12.5%), while the loss values seem to be smaller (possibly indicating a higher performance in scenarios featuring a 12.5% overlap). The highest median F1 and ROC-AUC scores and lowest median loss seem to belong to scenario 12, which is closely followed by scenarios with IDs 11 and 6. Training ID 12 features high variability in the F1 score but a low loss value computed on the unseen test data.

Next, given that the test sample sizes vary greatly (the test sets of larger tile sizes feature a lower number of images), the Scheffe test was applied to compare the training scenarios in terms of the F1 and ROC-AUC scores and loss values and to identify the best-performing ones. Scheffe’s method is a statistical test used for post-hoc analysis after ANOVA, where a comparison is made between each pair of training ID means using a t-test adjusted for the overall variability of the data, while maintaining the level of significance at 5% (it is more conservative in controlling the Type I error rate for all possible comparisons). It is used to make all possible contrasts between group means and outputs the homogeneous subsets that can be obtained. All groups from this analysis feature a sample size of N = 3. In Table 4, the post-hoc test results are presented in terms of homogeneous subsets of configurations for the F1 and ROC-AUC scores and loss metrics grouped by the training scenario ID after applying Scheffe’s method as described above.
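The pairwise comparison underlying the homogeneous subsets can be sketched as follows. This minimal example uses the common textbook F-based formulation of Scheffe’s criterion, in which a pair of means differs significantly when its squared difference, scaled by the within-group mean square and divided by (k − 1), exceeds the critical F value; it is not the SPSS implementation used in the study. The function name, the group labels and the scores are hypothetical, and the critical value is supplied by the caller (5.14 is the tabulated 5% value for 2 and 6 degrees of freedom).

```python
from itertools import combinations


def scheffe_pairs(groups, f_crit):
    """Pairwise Scheffe comparisons.

    groups: dict mapping a group label to its list of observations.
    f_crit: critical value of F with (k - 1, N - k) degrees of freedom.
    Returns a dict mapping (label_a, label_b) to (statistic, is_significant).
    """
    k = len(groups)
    n_total = sum(len(values) for values in groups.values())
    means = {name: sum(values) / len(values) for name, values in groups.items()}

    # Pooled within-group mean square from the one-way ANOVA.
    ss_within = sum((x - means[name]) ** 2
                    for name, values in groups.items() for x in values)
    ms_within = ss_within / (n_total - k)

    results = {}
    for a, b in combinations(groups, 2):
        scale = ms_within * (1 / len(groups[a]) + 1 / len(groups[b]))
        statistic = (means[a] - means[b]) ** 2 / scale / (k - 1)
        results[(a, b)] = (statistic, statistic > f_crit)
    return results


# Hypothetical F1 scores for three scenarios with N = 3 runs each.
scores = {
    "A": [0.80, 0.81, 0.82],
    "B": [0.81, 0.82, 0.83],
    "C": [0.88, 0.89, 0.90],
}
results = scheffe_pairs(scores, f_crit=5.14)
```

In this toy case, groups A and B are not significantly different and would fall into the same homogeneous subset, while C differs from both.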

The homogeneous subsets reported in

Table 4 contain proposed configurations whose performances are not significantly different from each other at a level of significance of 5%. For example, configurations 6, 11 and 12 do not have significantly different F1 and ROC-AUC scores (highest values) or loss (lowest values). These configurations are not common between the different homogeneous subsets obtained, implying significantly different performance compared to the rest of the configurations, with the models obtained from training scenario 12 being the best performers. These post-hoc test results support the observations of the boxplots from

Figure 2.

5.2. Performance of the Best Model

The performance achieved by each of the trained models is presented in

Appendix A. In

Table 3, the computed metrics were grouped by scenario ID and their overall descriptive statistics on the test set were presented. It can be found that all road classification models achieved a high generalisation capacity, as their performance on unseen data reaches mean levels of 0.3111, 0.9043, 0.8286, 0.8700, 0.8019, and 0.9288 in terms of loss, accuracy, F1 score, precision, recall and ROC-AUC scores, respectively, with associated standard deviations of 0.1396, 0.0599, 0.0284, 0.0666, 0.0177, and 0.0292, respectively.

In the statistical analysis carried out in

Section 5.1, a significant variability in the metrics was observed across different training iterations and subsets of the data and models. The best training scenario was 12, as it obtained the highest mean performance on unseen data. The three models trained in this scenario achieved mean values of loss, accuracy, F1 score, precision, recall and ROC-AUC score of 0.1018, 0.9749, 0.8751, 0.9659, 0.8195 and 0.9786, respectively. By crossing this information with the data from

Appendix A, it can be observed that the best training iteration from this scenario was the third one, where loss values of 0.0948, 0.0948 and 0.0984, F1 score values of 0.8871, 0.8871 and 0.8728, and ROC-AUC scores of 0.9808, 0.9808 and 0.9766, were achieved on the train, validation, and test set, respectively. In

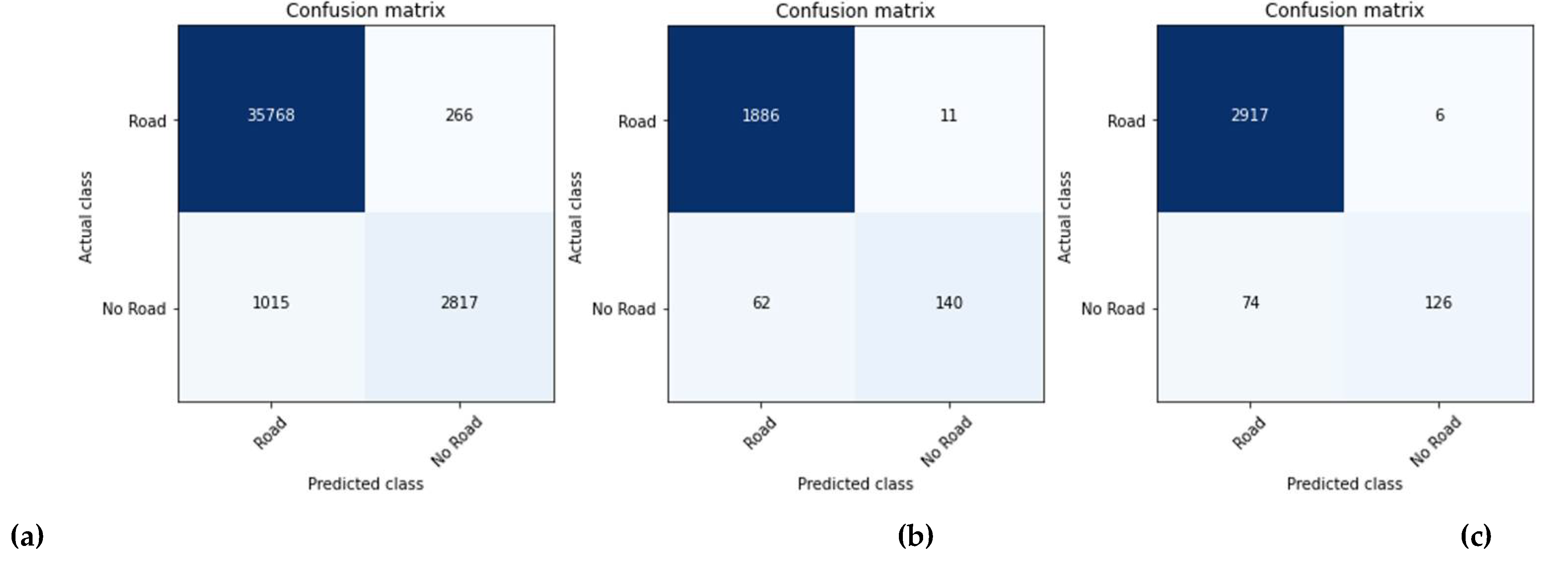

Figure 3, the confusion matrix of the best CNN model (VGG-v2, trained with images of 1024 × 1024, with an overlap of 12.5%) computed on the train, validation, and test set can be found.

By analysing the error rates from the confusion matrices obtained by the best VGG-v2 model, it can be found that the resulting model correctly classified 38,585 training samples (35,768 as the positive class and 2,817 as the negative class), while incorrectly predicting 266 negative samples as belonging to the “Road” class and 1015 “Road” samples as belonging to the negative class, the error rate (ratio between incorrect predictions and total samples) on the training set being 3.2% (

Figure 3(a)). On the validation set, the model correctly predicted 1886 positive and 140 negative samples while incorrectly predicting 11 negative samples as positives and 62 positive samples as negatives (as observed in

Figure 3(b)). The corresponding error rate is 3.5%. On the test set, the best performance model correctly predicted 2917 positive and 126 negative samples, while incorrectly predicting 6 negative samples as positive and 74 positive samples as belonging to the negative class (

Figure 3(c)). The associated error rate is 2.6% and supports the high generalisation capability of the CNN model, as the error rate is slightly lower than on the training and validation sets.
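The error rates quoted above can be checked directly from the reported confusion-matrix counts. The short sketch below recomputes them; the counts are those stated in the text, while the function and variable names are illustrative only.

```python
def error_rate(tp, tn, fp, fn):
    """Ratio between incorrect predictions and total samples."""
    return (fp + fn) / (tp + tn + fp + fn)


# Counts reported in the text for the best model (scenario 12, third iteration).
reported = {
    "train": dict(tp=35768, tn=2817, fp=266, fn=1015),
    "validation": dict(tp=1886, tn=140, fp=11, fn=62),
    "test": dict(tp=2917, tn=126, fp=6, fn=74),
}

# Error rates in percent, rounded to one decimal place.
rates = {name: round(100 * error_rate(**counts), 1)
         for name, counts in reported.items()}
```

The rounded values agree with the 3.2%, 3.5% and 2.6% figures given for the training, validation and test sets.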

In

Figure 2(a), it can be observed that the best scenario (ID=12) shows a higher variance in the F1 score. One cause could be the inherent randomness associated with the training process of DL models (for example, the random component in the initialisation of the weights or the random selection of mini-batches). This randomness can introduce variability in convergence and performance (even if the models are trained on the same representative, large-scale data) and result in F1 scores that are not entirely consistent across runs. In some experiments, the model might be making more accurate positive class predictions (“Road” class), while in others, it might be better at predicting the negative (“No road”) class, a behaviour conditioned by the precision-recall tradeoff. It is important to mention that performance metrics should be evaluated globally, not at the level of a single metric. Scenario 12 does not show higher variability in the loss or the ROC-AUC score.

5.3. Mean Performance on Unseen Test Data Grouped by Tile Size, Overlap and Neural Network Architecture

In this section, the selected fixed factors are the tile size, tile overlap, and the DL architecture trained. The loss, accuracy, F1 score, precision, recall and AUC-ROC scores on the test set (performance metrics) act again as the dependent variables. ANOVA was applied to obtain the mean and standard deviation of the dependent variables and the inferential statistics (F statistic and its p-value, together with η and η

2). The results are grouped by tile size (N=12 samples for each of the three tile sizes considered), tile overlap (0% and 12.5%, N=18 samples for each group) and trained CNN architecture (VGG-v1 and VGG-v2, N=18 for each group). The results are presented in

Table 5.

In

Table 5, it can be observed that the mean loss values decrease as the tile size increases (from 0.1415 to 0.32 and 0.1415, in the case of the 256 × 256, 512 × 512, and 1024 × 1024 tile sizes, respectively). The standard deviations of the loss are small for each size considered (from 0.0222 to 0.0371). The same steady improvement is observed for the accuracy, precision, and ROC-AUC score. The F1 score and its recall component do not display this constant pattern. One of the more plausible explanations for this situation could be the significant class imbalance in the data. Given the class weights applied, it seems that the models trained on tiles with a size of 512 × 512 pixels favoured a higher precision (a good identification of the minority class) at the expense of recall (where the majority class is frequently misclassified), resulting in a lower F1 score.

Nonetheless, the p-values in the ANOVA table (corresponding to the F statistic for the tile size as a fixed factor) are smaller than 0.001 for all the dependent variables and indicate that the differences in the metrics across the tile sizes are statistically significant (the observed trends are unlikely to be random). The values of the measures of effect size (η and η2) suggest that tile size has a large effect on these metrics and a very strong positive association between the tile size and the performance metrics (values for η and η2 superior to 0.90, and even approaching 0.99 in the case of loss and accuracy), except for the recall, where the measures of effect indicate a strong positive association in the correlation ratio η (eta) of approximately 0.8 and a substantial effect size of approximately 0.63 (implying that 63% of the variation in recall is attributable to the variation in tile size).

In relation to the overlap as a fixed factor, the mean performance metrics present a slight increase in the “12.5% overlap” level when compared to the “No overlap” group, for all the metrics except for loss (where the average value decreases, which signals a better performance). This indicates a slight increase in the performance of the models trained on tiles featuring an overlap. The standard deviation of the “12.5% overlap” group indicates a slightly more variable performance when there is an overlap between adjacent images. As for the inferential statistics, the computed p-values are higher than 0.05 and indicate that the differences in performance metrics between the two levels of tile overlap are not statistically significant. The η values indicate a weak relationship between tile overlap and each of the performance metrics, while the η2 values show that only a very small proportion of the variance in each performance metric can be explained by tile overlap (for example, the η2 value of 0.015 for the F1 score implies that only 1.5% of the variance in F1 score can be attributed to the level of tile overlap).

When considering the CNN architectures as a fixed factor, similarly to the “overlap” as an independent variable, the means of the performance metrics slightly increase for every metric, except for loss (where a slight decrease can be observed), indicating a slight increase in performance for the VGG-v2 model trained for road classification. They suggest that, on average, the two models perform similarly. The standard deviations of performance metrics of VGG-v2 present slightly higher values when compared to those obtained by the VGG-v1 group and indicate slightly higher variability in the performance of VGG-v2 (for example, the standard deviation of accuracy is 0.0602 for VGG-v1 and 0.0613 for VGG-v2). All the p-values are higher than 0.05 and indicate that the differences in the mean metrics between the two models are not statistically significant. The η values are low and indicate a weak relationship between CNN architectures and the dependent variables (performance metrics). The η2 values are even lower and indicate that an insignificant proportion of the variance in the metrics can be attributed to the model (in the case of accuracy, it approaches zero).

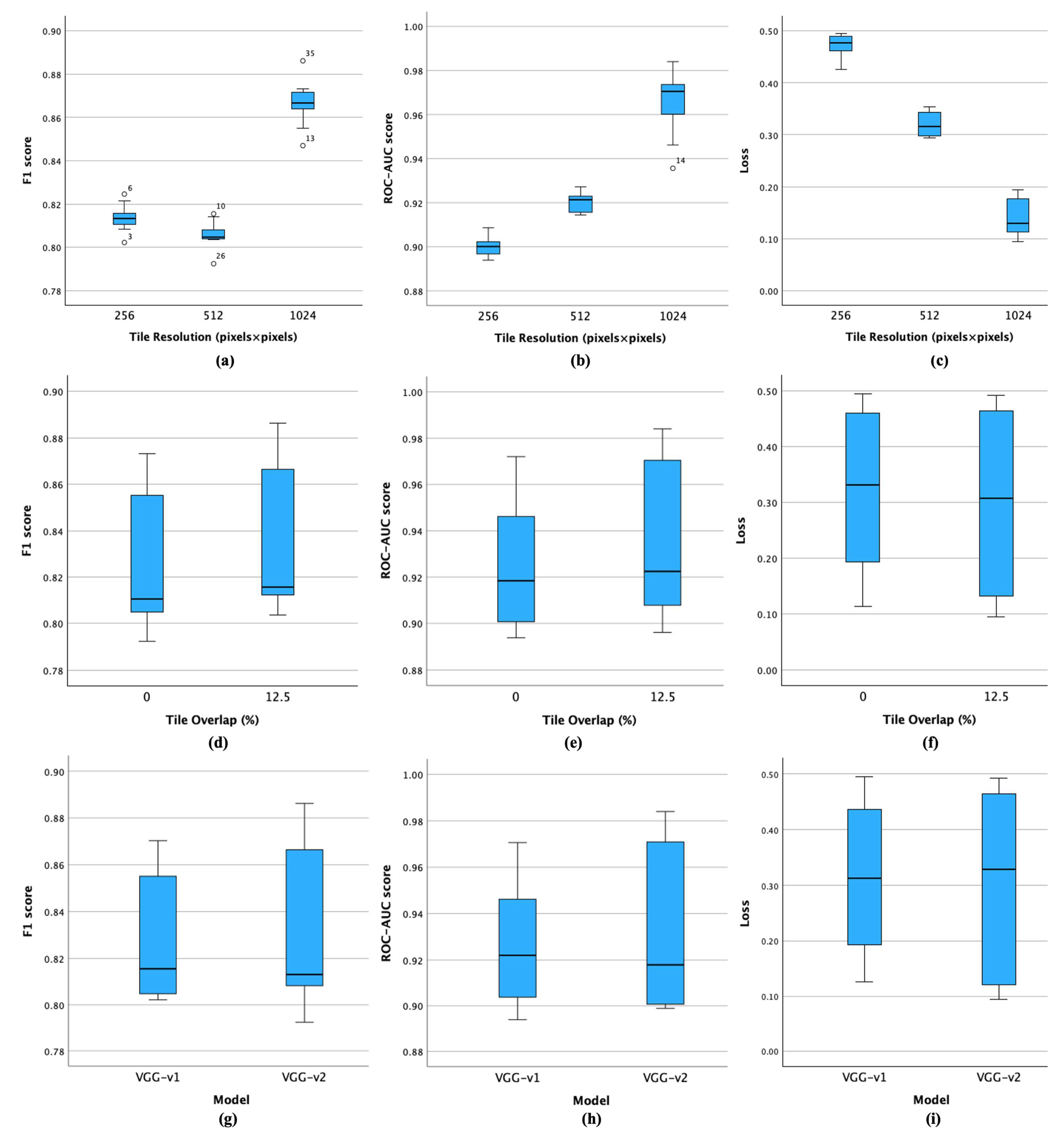

However, the p-values from the ANOVA table do not reveal which groups of the fixed terms are different from the others when there is a significant difference. For this reason, the analysis of the boxplots of the performance metrics grouped by the tile size, tile overlap, and CNN architecture was carried out next for the loss, F1 score and ROC-AUC score values (as illustrated in

Figure 4).

When grouping the metrics by the tile size, an increase in the median performance metrics can be observed at larger tile sizes (together with a decrease in the loss, which also indicates better performance), with the exception of the F1 score for the tile size of 512 × 512 pixels which, as discussed before, could be caused by the class imbalance present in the data or by a generally more pronounced sensitivity of the CNN models to predicting the positive class at this particular size (a higher mean precision was observed in

Table 5). The results are aligned with those obtained in similar works [

10].

When grouping the performance metrics by the tile overlap, it can be observed that the median F1 and ROC-AUC scores increase in models trained over data with 12.5% overlap (and subsequently, the loss values decrease) when compared to the boxplot featuring models trained on data with no overlap.

Finally, in the case of the boxplots grouped by the trained CNN model in

Figure 4, it can be observed that, although the median F1 and ROC-AUC scores are slightly higher in the case of VGG-v1 (and the median loss value is smaller), the variability of the VGG-v2 is higher. The upper whiskers corresponding to the F1 and ROC-AUC score performance values reach a considerably higher value (and a considerably smaller loss value) when compared to VGG-v1. These values were all computed on unseen, testing data; the boxplot results support the observations from

Table 5. Post hoc tests are not performed in this section, because of the reduced number of groups within the fixed factors.

Next, to quantify the impact of the independent variables on the performance, factorial ANOVA was applied for analysing the main and interaction effects of the fixed factors on the metrics.

5.4. Main and Interaction Effects with Factorial ANOVA

In this section, factorial ANOVA is applied to examine whether the means of F1 and ROC-AUC scores and loss metrics as dependent variables are significantly different across the groups from the training ID, CNN architecture (model), tile size and overlap as fixed factors and whether there are significant interactions between two or more independent variables on the dependent variables. This type of analysis is applied to understand the influence of different categorical independent variables (fixed factors) on a dependent variable.

Factorial ANOVA studies the main effect of each factor (ignoring the effects of the other factors) and studies their interaction effect (combined effect of two or more factors, which could be different from the sum of their main effects) on each dependent variable. For the interaction effect, the null hypothesis states that “the effect of one independent variable on the dependent variable does not differ depending on the level of another independent variable”. A rejected null hypothesis (p-value <0.05) indicates that significant differences exist between the means of two or more independent groups.
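The decomposition into main and interaction effects can be illustrated with a two-factor sketch. The example below assumes a balanced design with the same number of replicates in every cell and uses the textbook sums of squares; the study itself considers three fixed factors and was analysed in SPSS, so this is a simplified illustration only. The function name and the data (a hypothetical metric for three tile sizes and two overlap levels) are not taken from the study.

```python
def two_way_anova(cells):
    """Balanced two-way ANOVA.

    cells[i][j] holds the replicate observations for level i of factor A
    and level j of factor B. Returns (F statistics, degrees of freedom).
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    cell_mean = [[sum(obs) / n for obs in row] for row in cells]
    grand = sum(sum(row) for row in cell_mean) / (a * b)
    mean_a = [sum(row) / b for row in cell_mean]
    mean_b = [sum(cell_mean[i][j] for i in range(a)) / a for j in range(b)]

    # Main effects: variation of the marginal means around the grand mean.
    ss_a = b * n * sum((m - grand) ** 2 for m in mean_a)
    ss_b = a * n * sum((m - grand) ** 2 for m in mean_b)
    # Interaction: what the cell means show beyond the sum of main effects.
    ss_ab = n * sum((cell_mean[i][j] - mean_a[i] - mean_b[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    # Error: variation of the replicates around their own cell mean.
    ss_err = sum((y - cell_mean[i][j]) ** 2
                 for i in range(a) for j in range(b) for y in cells[i][j])

    dof = {"A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1),
           "error": a * b * (n - 1)}
    ms_err = ss_err / dof["error"]
    ss = {"A": ss_a, "B": ss_b, "AxB": ss_ab}
    f_stats = {name: (ss[name] / dof[name]) / ms_err for name in ss}
    return f_stats, dof


# Hypothetical, purely additive data: factor A = tile size, factor B = overlap.
size_effect = [0.80, 0.84, 0.88]
overlap_effect = [0.00, 0.01]
cells = [[[s + o - 0.01, s + o, s + o + 0.01] for o in overlap_effect]
         for s in size_effect]
f_stats, dof = two_way_anova(cells)
```

Because the toy data are additive, the interaction F-statistic is essentially zero: the effect of one factor does not depend on the level of the other, which is exactly the interaction null hypothesis stated above.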

The results of the factorial ANOVA test are presented in

Table 6.

Table 6 is divided into the various sources of variation; each source of variation being tested against the three dependent variables (the performance metrics considered) at a significance level of 0.05. The assumptions of factorial ANOVA have been met in this study, as the observations are independent, the residuals follow a normal distribution, and the variance of the observations is homogeneous.

“Corrected Model” and “Intercept” are statistical terms used in the context of regression analysis in “Between-Subjects” factorial ANOVA tables and provide details related to the relationship between the studied variables. In

Table 6, “Corrected Model” (source ID = 1) refers to the sums of squares that can be attributed to all the effects in the model (fixed and random factors, covariates, and their interactions), excluding the intercept. The F-test for the corrected model indicates whether the model explains any variance in the dependent variable (whether the variation in the performance metrics can be explained by the independent variables). The p-values are lower than 0.001, therefore the model is highly statistically significant. The “intercept” (source ID = 2) represents the mean value of the dependent variable when all independent variables are zero; the associated p-values for the three dependent variables (F1 score, ROC-AUC score, and loss) are lower than 0.001, showing that the model intercepts are significantly different from zero.

The main effect null hypothesis studies the marginal effect of a factor when all other factors are kept at a fixed level and states that the effect is not significant on the dependent variables. As can be observed in

Table 6, the effect of the fixed factors “Size” (source ID = 4) and “Overlap” (source ID = 5) on the performance metrics is statistically significant (p-values lower than 0.05 in all cases). This indicates that the tile size and tile overlap significantly explain the variation in the dependent variables (F1 and ROC-AUC scores and loss) and that there is a highly significant difference in performance due to different tile sizes (p-values lower than 0.001 for each performance metric) and significant differences caused by tile overlap levels (p-values of 0.0038, <0.001, and 0.0055 for the respective dependent variables). As for the main effect of the CNN models (source ID = 3) on the dependent variables, the p-values for the F1 score and loss are greater than 0.05, indicating that the effect of CNN architecture (“Model”) on these variables is not statistically significant. However, the effect of the CNN architecture on the ROC-AUC score is significant (p-value < 0.05).

As for the interaction effect between tile size and tile overlap (source ID = 6) on the performance metrics, the p-value for F1 score is greater than 0.05, indicating that the interaction effect is not significant for this variable. However, the interaction effect is significant for the ROC-AUC score (p-value <0.05). A similar behaviour (non-significant interaction effect for the F1 and loss metrics, but significant for the ROC-AUC score) is displayed by the interaction effect between the CNN model and the overlap (source ID = 8). The interaction effect between the CNN architecture and size pair of fixed factors (source ID = 8) is statistically significant for the ROC-AUC score, but not statistically significant for the loss and F1 score as dependent variables (the computed p-values are higher than 0.05). Nonetheless, the p-value of approximately 0.06 for the F1 score is only slightly above the 0.05 threshold and can be considered to suggest a trend in the data.

In the case of the interaction effect between the three fixed factors (CNN architecture, tile size and tile overlap—source ID = 9), the difference in performance is not significant (p-values of 0.8685, 0.0601 and 0.9805 for the F1 and ROC-AUC scores and loss, respectively). Again, the p-value corresponding to 0.06 for the ROC-AUC score is only slightly above the 0.05 threshold and can suggest a trend in data. In

Table 6, “error” (source ID = 10) represents the unexplained variation in the dependent variables. Finally, “total” (source ID = 11) represents the total variation in the dependent variables, while “Corrected Total” (source ID = 12) represents the total variation in the dependent variables after removing the variation due to the model.

As a post-hoc analysis following the factorial ANOVA, the Estimated Marginal Means (EMMs), or predicted marginal means, were computed to help interpret the results from

Table 6. EMMs represent the means of the dependent variables across distinct levels of each factor, averaged over the other factors (to control for the effects of other factors), and are useful for understanding the interaction effects of multiple fixed factors on the performance metrics. In this case, EMMs provide the mean performance metric (F1 score, ROC-AUC score, and loss) at each level of the fixed factors averaged over the levels of the considered factors. For the two-way interaction between tile size and overlap (Size * Overlap, Source ID = 7 in

Table 6), the metrics are averaged over the levels of tile size (256 × 256, 512 × 512, and 1024 × 1024 pixels), and tile overlap (0% and 12.5%). For the three-way interaction between the tile size, overlap, and CNN architecture (VGG-v1 and VGG-v2), the metrics are averaged over the levels of the three considered factors (Model * Size * Overlap, Source ID = 9 in

Table 6). The plot of the EMMs from

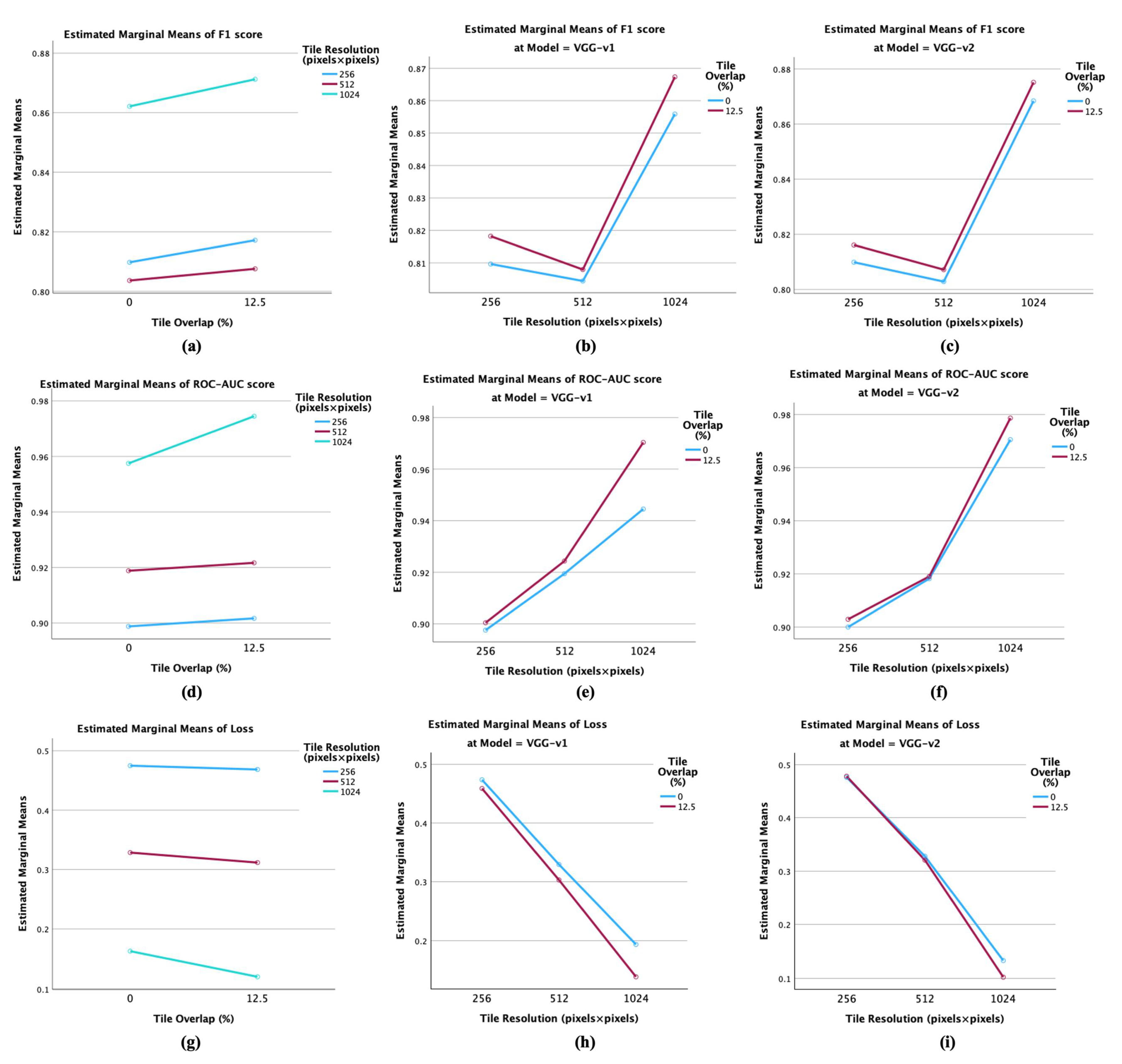

Figure 5 illustrates the means for the interaction effects of the two and three fixed factors mentioned on the dependent variables.
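The idea behind the EMMs can be sketched as an unweighted average of cell means over the factors that are not of interest. For a balanced full-factorial design this simple average coincides with the estimated marginal means; SPSS derives them from the fitted model, so the sketch below is an approximation of the concept rather than the procedure used in the study. The function name and all numerical values are hypothetical.

```python
from collections import defaultdict


def marginal_means(cell_means, keep):
    """Average cell means over every factor whose position is not in `keep`.

    cell_means: dict mapping (model, size, overlap) to a mean metric value.
    keep: tuple with the positions of the factors to retain in the result.
    """
    buckets = defaultdict(list)
    for levels, value in cell_means.items():
        key = tuple(levels[i] for i in keep)
        buckets[key].append(value)
    return {key: sum(values) / len(values) for key, values in buckets.items()}


# Hypothetical mean F1 scores per (model, tile size, overlap in %) cell.
cell_means = {
    ("VGG-v1", 256, 0.0): 0.80, ("VGG-v1", 256, 12.5): 0.81,
    ("VGG-v1", 1024, 0.0): 0.86, ("VGG-v1", 1024, 12.5): 0.87,
    ("VGG-v2", 256, 0.0): 0.82, ("VGG-v2", 256, 12.5): 0.83,
    ("VGG-v2", 1024, 0.0): 0.88, ("VGG-v2", 1024, 12.5): 0.89,
}

# Two-way Size * Overlap means, averaged over the CNN architecture.
size_overlap = marginal_means(cell_means, keep=(1, 2))
# Main-effect means for tile size, averaged over model and overlap.
by_size = marginal_means(cell_means, keep=(1,))
```

Keeping all three positions, `keep=(0, 1, 2)`, returns the cell means themselves, which corresponds to the three-way Model * Size * Overlap case.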

Appendix B presents the numerical values of the EMMs for the two-way interaction between the tile size and overlap (Size * Overlap) on the F1 and AUC-ROC scores and loss values.

Appendix C presents the EMMs values of the three-way interaction effect between the CNN architecture, tile size, and overlap (Model * Size * Overlap) on the same performance metrics.

Subplots (a), (d) and (g) of

Figure 5 present the EMMs of the two-way interaction between the tile size and tile overlap. The graphics suggest that the values of the mean F1 and ROC-AUC scores increase as the tile size and tile overlap increase, while the mean loss values decrease as the size increases (an indicator of a better performance). When accounting for the three-way interaction (the rest of

Figure 5’s subplots), it can be observed that the VGG-v2 model displays better performance when compared to VGG-v1 across all dependent variables for all tile sizes and overlaps. For both CNN architectures, the F1 score generally increases as the tile size increases from 256 × 256 to 1024 × 1024 pixels and it slightly improves when the tiles present a 12.5% overlap. This behaviour is also displayed for the ROC-AUC score, where the value of the model trained on tiles of 1024 × 1024 pixels with a 12.5% overlap is considerably higher. Additionally, VGG-v2 achieved a lower loss when compared to the VGG-v1 model across all tile sizes and overlaps (a lower loss value is an indicator of better performance). As found in

Figure 5, the loss decreases as the tile size increases from 256 × 256 to 1024 × 1024 pixels for both models and is slightly lower on models trained with tiles featuring a 12.5% overlap.

6. Discussion

This work was focused on statistically studying the generalisation capacity of road classification models using unseen testing data and was centred on assessing the impact of different tile size and overlap levels on the performance metrics. The indicators of performance considered were the loss, F1 score, and ROC-AUC score, as these metrics ensure robustness and class distinction (accuracy may be misleading in the scenarios of imbalanced data present at larger tile sizes).

In this study, a significance level of 0.05 was applied for testing the null hypotheses. If the p-value is lower than 0.05, it can be concluded that the corresponding result is statistically significant. For p-values lower than 0.001, the result is considered highly significant (the observed data has less than 0.1% chance to occur under a correct null hypothesis). Conversely, a p-value > 0.05 (not significant) can be interpreted as there being no robust evidence to reject the null hypothesis. Finally, a p-value slightly above 0.05 can be considered indicative of a trend in the data.
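The interpretation rules above can be summarised in a small helper. The thresholds of 0.05 and 0.001 come from the text; the upper bound used to label a p-value as “slightly above 0.05” is not specified in the study, so the value of 0.10 below is an assumption made only for illustration, as is the function name.

```python
def interpret_p_value(p, alpha=0.05, highly=0.001, trend_upper=0.10):
    """Label a p-value following the conventions described in the text.

    trend_upper is an assumed cut-off for "slightly above 0.05";
    the study does not define a numerical bound for this case.
    """
    if p < highly:
        return "highly significant"
    if p < alpha:
        return "significant"
    if p < trend_upper:
        return "not significant (possible trend)"
    return "not significant"


# p-values quoted in the factorial ANOVA discussion.
labels = {p: interpret_p_value(p) for p in (0.0038, 0.0601, 0.8685)}
```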

6.1. On the Homogeneity of the Performance and Differences Between Training, Validation, and Testing Results

The data distribution of the training, validation, and test sets can be found in

Table 1, the performance metrics being presented in

Appendix A. The metrics from the “Train” columns of

Appendix A indicate the performance of the models on data they were trained on. As expected, the values are higher on this set, as this data was directly used to model the classification function of the road classification model. At the end of each training iteration, the model had access to its corresponding validation set to compute the loss and tune its training parameters—the performance on the validation data is generally lower than on the training data but is expected to be close if the model generalises well. The testing data has not been processed during training or validation and provides an unbiased evaluation of the resulting model that reflects the real-world usage performance. A considerably better performance on the training data compared to the validation and test data would indicate overfitting. Underfitting would be indicated by low scores across all sets.

The results from

Appendix A and

Table 1 show a high degree of homogeneity in the metrics from the same scenario settings. The results do not present marked overfitting or underfitting behaviour: the performance is good and consistent across sets, which indicates well-fitted models. The performance generally decreases from the training set to the validation set and to the test set: the metrics are highest on the training set, slightly lower on the validation set, and lowest on the test set.

The loss value is higher for the test set compared to the training and validation sets across all experiments; this is expected, as the model had no access to the test data during training. Nonetheless, the relatively low loss values suggest that the model can make reasonably accurate predictions on the test data. Across all experiments, the F1 score is consistently highest on the training set, followed by the validation set, and lowest on the test set. The F1 score and ROC-AUC score, which are measures of the performance and discriminative ability of a model, respectively, show trends consistent with the loss values. The ROC-AUC scores are high for all sets across all experiments, indicating that the models have a high capacity to discriminate between classes. A higher performance on the training set is a common pattern (a model is best tuned to the data it was trained on), but the performance on the validation and test sets is also high, which suggests that the model is not overfitting.

6.2. On the Training Scenarios and the Best Model

The descriptive statistics from Table 3 show that the models present a reduced standard deviation within the same training scenario. For example, within the same scenario ID, the highest standard deviation value for the loss is present in Training ID 1 (±0.0322), in Training ID 12 (±0.0101) for the F1 score, and in Training ID 5 (±0.0082) for the ROC-AUC score. However, noticeable variations in performance were observed across different training scenarios. For example, Training ID 12 yields the lowest mean loss value (0.1018), while Training ID 8 yields the highest mean loss (0.4783; smaller values indicate better performance). For the F1 performance metric, the minimum mean value is present in Training ID 3 (0.804), while the maximum mean value corresponds to Training ID 12 (0.8751). As for the ROC-AUC metric, Training ID 1 obtained the minimum mean value (0.8976), while the maximum mean value (0.9786) was obtained by Training ID 12. Therefore, the training scenario significantly impacted the performance of a model, with the variation between groups (different training IDs) being much larger than the variation within groups (same training ID). This can also be noted in Figure 2. All the corresponding p-values are smaller than 0.001 and indicate highly significant statistical differences; the training scenario significantly impacted the ability of the model to generalise to unseen data.

The η and η² measures of association from Table 3 have values close to 1 and indicate a very strong association between the training ID and the loss, F1, and ROC-AUC scores. The values imply that the training scenario had a significant effect on the performance and that a considerable proportion of the variance in each metric can be explained by the training ID.
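For readers less familiar with these measures, the sketch below computes η² as the ratio of the between-group sum of squares to the total sum of squares, and η as its square root, which is the standard one-way definition. The function name and the sample values are illustrative assumptions, not data from Table 3.

```python
from math import sqrt


def eta_measures(groups):
    """Return (eta, eta_squared) for a list of groups of metric values.

    eta_squared = SS_between / SS_total, i.e. the share of the variance
    in the metric that is explained by group membership.
    """
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    ss_total = sum((v - grand_mean) ** 2 for v in values)
    if ss_total == 0:
        raise ValueError("all values are identical; eta is undefined")
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    eta2 = ss_between / ss_total
    return sqrt(eta2), eta2


# Hypothetical F1 scores for two training scenarios, three runs each.
scenario_a = [0.80, 0.81, 0.79]
scenario_b = [0.87, 0.88, 0.86]
eta, eta2 = eta_measures([scenario_a, scenario_b])
print(f"eta = {eta:.3f}, eta squared = {eta2:.3f}")
```

With small within-group spread and clearly separated group means, as in this made-up example, η² approaches 1, which mirrors the pattern described for the training ID.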

The results of the post-hoc Scheffé test (Table 4) revealed that Training IDs 5, 6, 11, and 12 consistently performed better across the three metrics considered for the homogeneous sets and suggested that these scenarios are likely the best performing ones. Conversely, the models from Training IDs 1 and 2 appear to display worse performance across the considered metrics.

Figure 2 shows that the highest median F1 and ROC-AUC scores and lowest median loss are associated with scenario 12. The best performing model was the VGG-v2 model trained in scenario 12 (on tiles of 1024 × 1024 pixels and 12.5% overlap), which achieved a loss value of 0.0984, an F1 score of 0.8728, and an ROC-AUC score of 0.9766, together with an error rate of 3.5% on the test set (as described in Section 5.3).

The loss values show some degree of homogeneity across different experiments and iterations, especially within the same training scenario. The F1 scores vary more significantly across different training scenarios and indicate that the precision and recall components were influenced by the training scenario ID. The ROC-AUC scores (indicating the ability of a model in distinguishing between classes) proved to be relatively consistent regardless of the specific experiment or iteration.

6.3. On the Tile Size and Tile Overlap

The increasing class imbalance between the positive and negative classes at higher tile sizes (from approximately 47.5:52.5% to 90:10% for the 256 × 256 and 1024 × 1024 tile sizes, respectively, as presented in Table 1) was tackled by applying a class weight matrix during training (as described in Section 4) to prevent the models from becoming biased towards the overrepresented class.
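As an illustration of how class weights can counteract such an imbalance, the sketch below uses the common inverse-frequency ("balanced") heuristic. This is an assumption: the exact weighting scheme used in the study is the one described in Section 4 and may differ. The function name, class labels, and tile counts are hypothetical.

```python
def balanced_class_weights(counts):
    """Inverse-frequency weights: total / (n_classes * class_count).

    ASSUMED heuristic for illustration; not necessarily the study's scheme.
    counts maps each class label to its number of training tiles.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Hypothetical 90:10 split, matching the ratio reported for 1024 x 1024 tiles.
weights = balanced_class_weights({"majority": 900, "minority": 100})
print(weights)
```

Under this heuristic, each error on the underrepresented class contributes more to the loss, so that both classes carry the same total weight.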

Table 5 shows that the loss performance for size levels ranges from 0.4717 (256 × 256 pixels) to 0.1415 (1024 × 1024 pixels). When analysing the F1 score metric, the mean values range between 0.8056 (512 × 512 pixels) and 0.8667 (1024 × 1024 pixels), while for the ROC-AUC metric, the mean values are between the value 0.9002 (256 × 256 pixels) and 0.9660 (1024 × 1024 pixels). It can also be highlighted that the maximum standard deviation for the loss metric (0.037) is observed for the 1024 × 1024 size. For the F1 and ROC-AUC scores, the standard deviations are generally lower than 0.01 across each size level and indicate a similar performance of the models across the training scenarios. The 1024 × 1024 pixels size obtained the highest mean performance on unseen data for every considered metric. The dependent variables indicate a higher performance of the trained models at higher sizes. The results suggest that more semantic information from a scene helps the models in making more accurate predictions (considerably higher mean precision) but might also make the correct identification of all actual positive cases more difficult (slightly lower mean recall).

In relation to the tile overlap levels, the analysis of the results shows that the mean values of the loss range from 0.3001 (12.5% overlap) to 0.3221 (no overlap). The mean values also increase from 0.8252 (no overlap) to 0.8320 (12.5% overlap) for the F1 score metric and from 0.9250 (no overlap) to 0.9326 (12.5% overlap) for the ROC-AUC score metric. The standard deviations indicate a slightly higher variability at higher overlap. The differences in median performance between the two overlaps can also be identified in Figure 4. It can be considered that a tile overlap of 12.5% results in better performance than 0% overlap across all metrics. This might suggest that the use of overlapping tiles could help the models in making more accurate predictions due to the additional context and continuity provided.
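To make the notion of overlap concrete, the following sketch lists the start offsets of tiles along one image axis. It assumes that overlap is expressed as a fraction of the tile size shared with the next tile, so that the stride is `tile * (1 - overlap)`; the tiling procedure actually used in the study may differ, and the function name and image length are hypothetical.

```python
def tile_origins(length, tile, overlap):
    """Start offsets of full tiles along one axis of an image.

    ASSUMPTION: overlap is the fraction of a tile shared with its
    neighbour, so consecutive tiles are tile * (1 - overlap) pixels apart.
    Partial tiles at the image border are skipped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must lie in [0, 1)")
    stride = int(round(tile * (1.0 - overlap)))
    if stride <= 0:
        raise ValueError("overlap too large for this tile size")
    return list(range(0, length - tile + 1, stride))


# Hypothetical 8192-pixel axis cut into 1024-pixel tiles.
no_overlap = tile_origins(8192, 1024, 0.0)
with_overlap = tile_origins(8192, 1024, 0.125)
print(len(no_overlap), len(with_overlap))  # 8 9
```

Under this assumption, a 12.5% overlap yields a stride of 896 pixels for 1024 × 1024 tiles, so the same area produces slightly more tiles, each sharing a strip of context with its neighbours.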

When accounting for the results grouped by the CNN architecture levels (Table 5 and Figure 4), it can be observed that the VGG-v1 model performed slightly worse than VGG-v2 (mean loss values of 0.3159 and 0.3062, mean F1 scores of 0.8272 and 0.8299, and mean ROC-AUC scores of 0.9261 and 0.9315, respectively). The variability in performance metrics is slightly higher for the better CNN architecture. Both VGG-v1 and VGG-v2 models perform better with higher tile sizes (1024 × 1024 pixels) across all performance metrics.

The η and η² measures of association indicate the strength of the relationship between the independent variables and the performance metrics (values closer to one suggest a stronger relationship), with η² representing the proportion of the variance in each performance metric that can be explained by the independent variable. For tile size, the η values (between 0.796 and 0.999) and the η² values (between 0.634 and 0.997) are very high and suggest that tile size strongly affects the performance metrics. For tile overlap and CNN architecture, the η and η² values are relatively low, suggesting a weaker relationship. This indicates that, while tile overlap and the choice of model do have some effect on the performance metrics, their impact is smaller than that of the tile size.

The results from Section 5.2 indicate that the use of larger tiles leads to a better average road classification performance, with a highly significant p-value of less than 0.001 (the models trained on tiles of 1024 × 1024 pixels delivered the best results). The tile overlap of 12.5% slightly outperforms the 0% overlap, and VGG-v2 slightly outperforms VGG-v1. The p-values might suggest that the differences in the means of the performance metrics between the two levels of tile overlap (0% and 12.5%) and CNN architectures (VGG-v1 and VGG-v2) might not have a substantial impact on the performance and could be caused by randomness. However, it is important to note that statistical significance does not always equate to practical significance. In this case, given the reduced number of training repetitions at the scenario level (due to the high computational cost required), the results might imply that the significance cannot be identified by analysing the mean values alone. This aspect is also indicated by the median results from Figure 4, with better performances being achieved by a model with a higher number of trainable parameters at a higher overlap. For this reason, further statistical analysis was carried out to study the main and interaction effects on these metrics by applying factorial ANOVA tests.

6.4. On the Main and Interaction Effects of Tile Size, Tile Overlap and Neural Network Architecture

The null hypothesis can be rejected if the p-value is lower than 0.05 (this implies that a significant effect on the performance metrics can be observed when all other factors are kept at a fixed level). As found in Table 6, the main effect of the tile size is highly significant (p-value lower than 0.001). The main effect of the tile overlap is also highly significant for the ROC-AUC score (p-value less than 0.001) and significant for the F1 score and loss metrics (p-values of 0.0038 and 0.0055, respectively). The main effect of the CNN architecture as a fixed term on the performance proved to be non-significant for the F1 score and loss as dependent variables (p-values higher than 0.05) and significant for the ROC-AUC score (p-value of 0.0014).

The effect on the performance metrics of the interactions between the combined factors analysed in this study was also evaluated. The corresponding p-values test whether the effect of one factor on the dependent variables changes at different levels of another factor. A significant p-value would suggest that the effect of one of the independent factors on the dependent variables depends on the level of a second independent factor, and vice versa. A p-value higher than 0.05 means that the combined effect on the performance is not significantly different from what would be expected based on their individual effects and that there is not enough evidence to reject the null interaction effect hypothesis.
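The idea of an interaction effect can be illustrated with a reduced, two-factor version of the analysis. The sketch below computes the interaction sum of squares and its F statistic for a balanced two-way design; it is a simplification of the three-factor ANOVA applied in the study, it stops at the F statistic (the p-value would follow from the F distribution with the returned degrees of freedom), and the function name and scores are hypothetical.

```python
def interaction_f(cells):
    """F statistic of the A x B interaction in a balanced two-way design.

    cells[i][j] holds the replicate scores for level i of factor A and
    level j of factor B; every cell must contain the same number (>= 2)
    of replicates. Returns (ss_interaction, f_value, df_interaction, df_error).
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])

    def mean(xs):
        return sum(xs) / len(xs)

    cell_means = [[mean(c) for c in row] for row in cells]
    a_means = [mean(row) for row in cell_means]
    b_means = [mean([cell_means[i][j] for i in range(a)]) for j in range(b)]
    grand = mean(a_means)

    # Interaction: what remains of each cell mean after removing both main effects.
    ss_ab = n * sum(
        (cell_means[i][j] - a_means[i] - b_means[j] + grand) ** 2
        for i in range(a) for j in range(b)
    )
    # Error: spread of the replicates around their own cell mean.
    ss_err = sum(
        (x - cell_means[i][j]) ** 2
        for i in range(a) for j in range(b) for x in cells[i][j]
    )
    df_ab, df_err = (a - 1) * (b - 1), a * b * (n - 1)
    if ss_err == 0:
        raise ValueError("no within-cell variation; F is undefined")
    return ss_ab, (ss_ab / df_ab) / (ss_err / df_err), df_ab, df_err


# Made-up scores: rows are two models, columns are two tile sizes.
additive = [[[1, 2], [3, 4]], [[2, 3], [4, 5]]]      # effects simply add up
interacting = [[[1, 2], [3, 4]], [[2, 3], [8, 9]]]   # size effect depends on model
print(interaction_f(additive)[1], interaction_f(interacting)[1])  # 0.0 16.0
```

In the additive case, the gain from the second tile size is the same for both models and the interaction term vanishes; in the second case, the gain depends on the model, which is what a significant interaction p-value signals.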

The p-values for the interaction effect between model and tile size (Model * Size) are highly significant for the ROC-AUC score (p-value < 0.001), significant for loss (p-value of 0.0034), but not significant for the F1 score (p-value of 0.0649). These p-values test whether the effect of the model on the dependent variables changes at different tile sizes. The significant p-value suggests that the effect of the model on the loss and ROC-AUC metric depends on the tile size, and vice versa.

The interaction effects of the tile size and tile overlap factors (“Size * Overlap”) and of the CNN architecture and tile overlap factors (“Model * Overlap”) are not significant for the F1 score (p-value > 0.05), but they are significant for the ROC-AUC score. This means that the effect of the tile size on the ROC-AUC score depends on the tile overlap level (in the case of the “Size * Overlap” interaction effect) and that the effect of the CNN architecture on the ROC-AUC metric differs between the two overlap levels (for the “Model * Overlap” interaction effect), and vice versa.

The p-values for the three-way interaction among CNN architecture, tile size, and tile overlap (Model * Size * Overlap) are not statistically significant for any of the dependent variables (p > 0.05), but in the case of the ROC-AUC score, the p-value of 0.06 is low enough to indicate a trend. Nonetheless, the values imply that the combined effect of model, size and overlap on the performance is not significantly different from what would be expected based on their individual effects and their two-way interactions.

Therefore, while the main effects of tile size and overlap are significant, their interaction effects with the model are not consistently significant across all performance metrics. These statistical interpretations nonetheless suggest that higher tile sizes and a small amount of overlap can improve the performance of these models. The graphics from Figure 5 support these findings.

6.5. A Qualitative Ranking of the Contributions of the Factors to the Performance

Although the statistical tests applied in this study do not rank the contributions of the tile size, tile overlap, and CNN model to the DL model performance, the experimental and analysis designs that provided the results from Table 3, Table 4, Table 5 and Table 6 and Figure 2, Figure 3, Figure 4 and Figure 5 offer significant insights into the effects of these factors on the performance of the models and enable a global, qualitative ranking of the importance of the factors. However, it is important to note that the ranking is based on the road classification results achieved in this study for the specific dataset, tile overlap levels, and CNN models used. The relative contributions of these factors may vary for other tasks, datasets, or DL models, and further research is needed to generalise these findings (these aspects are discussed in more depth in Section 6.6).

In this work, the factor with the highest impact on the performance of road classification models proved to be the tile size. In

Table 5 and