Submitted: 12 July 2024

Posted: 15 July 2024


Abstract

Keywords:

1. Introduction

- identify the data and methodologies most used in recent papers, namely those related to model calibration;

- identify the most common deviations from consensual best practices and the information most often omitted from methodological descriptions;

- assess the extent to which the shortcomings referred to above are acknowledged and discussed in the reviewed papers;

- propose new recommendations to improve species distribution model (SDM) results and make them clearer and more comprehensive.

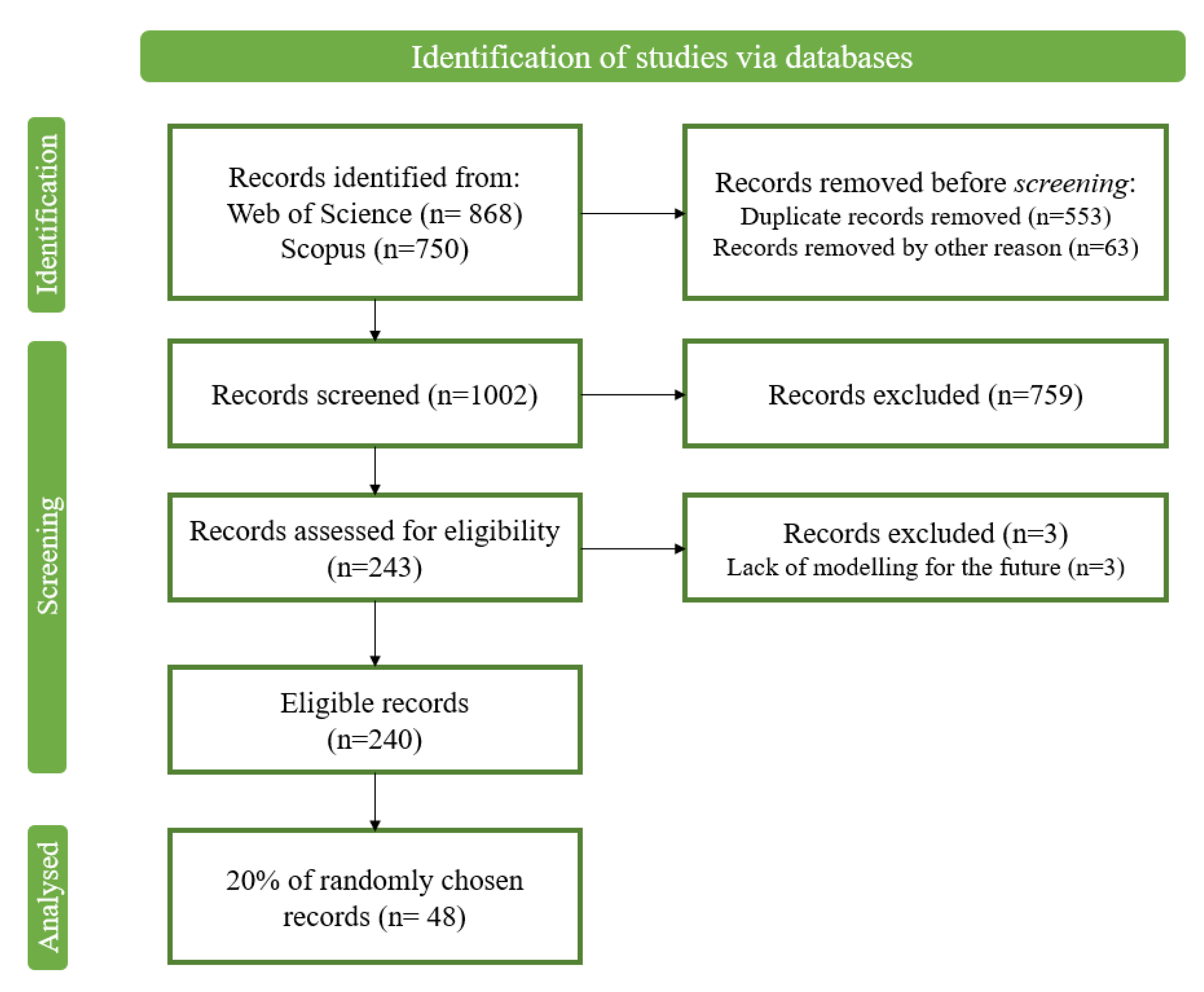

2. Materials and Methods

3. Results

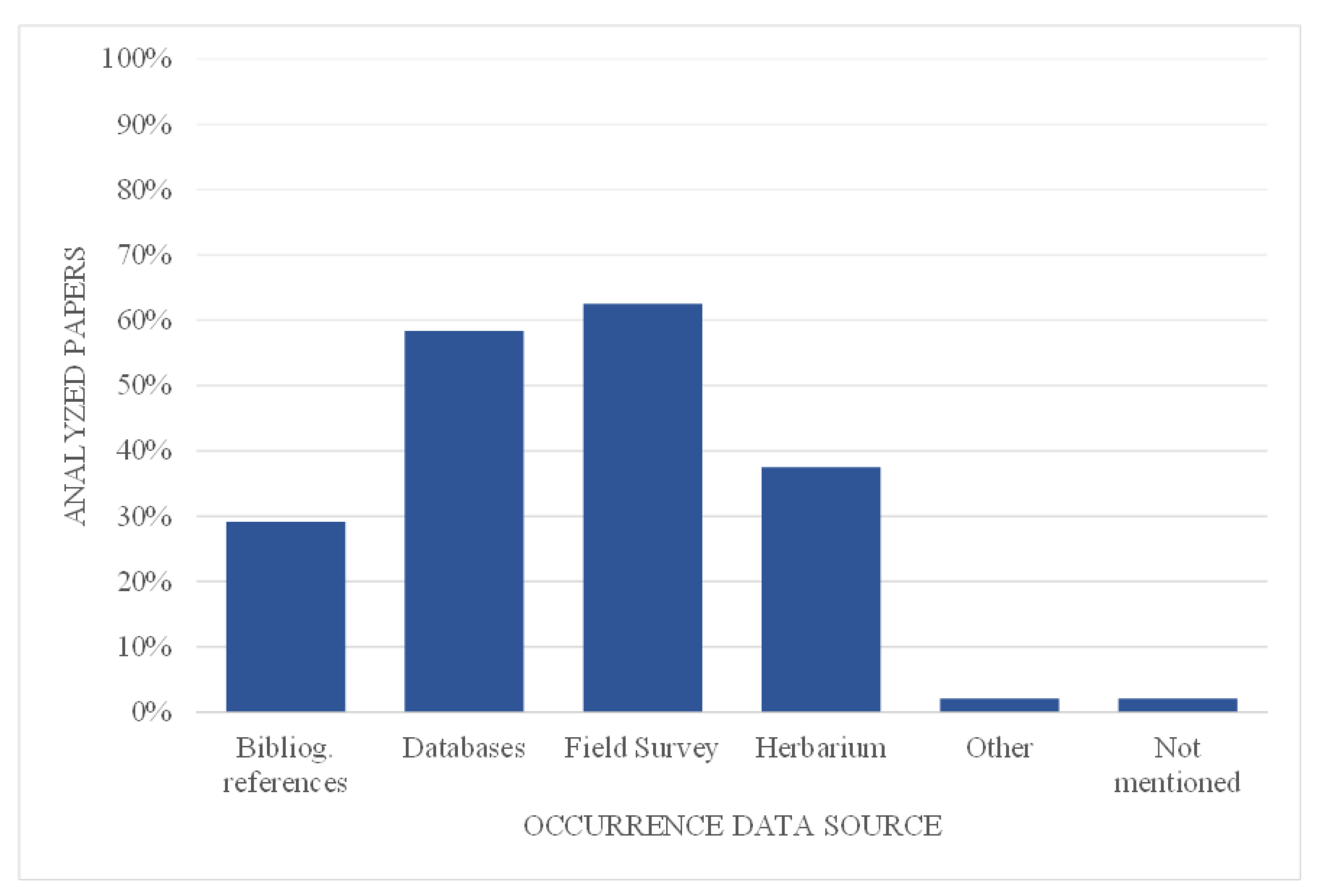

3.1. Species Occurrence Data

3.2. Abiotic Variables

3.2.1. Climate Variables

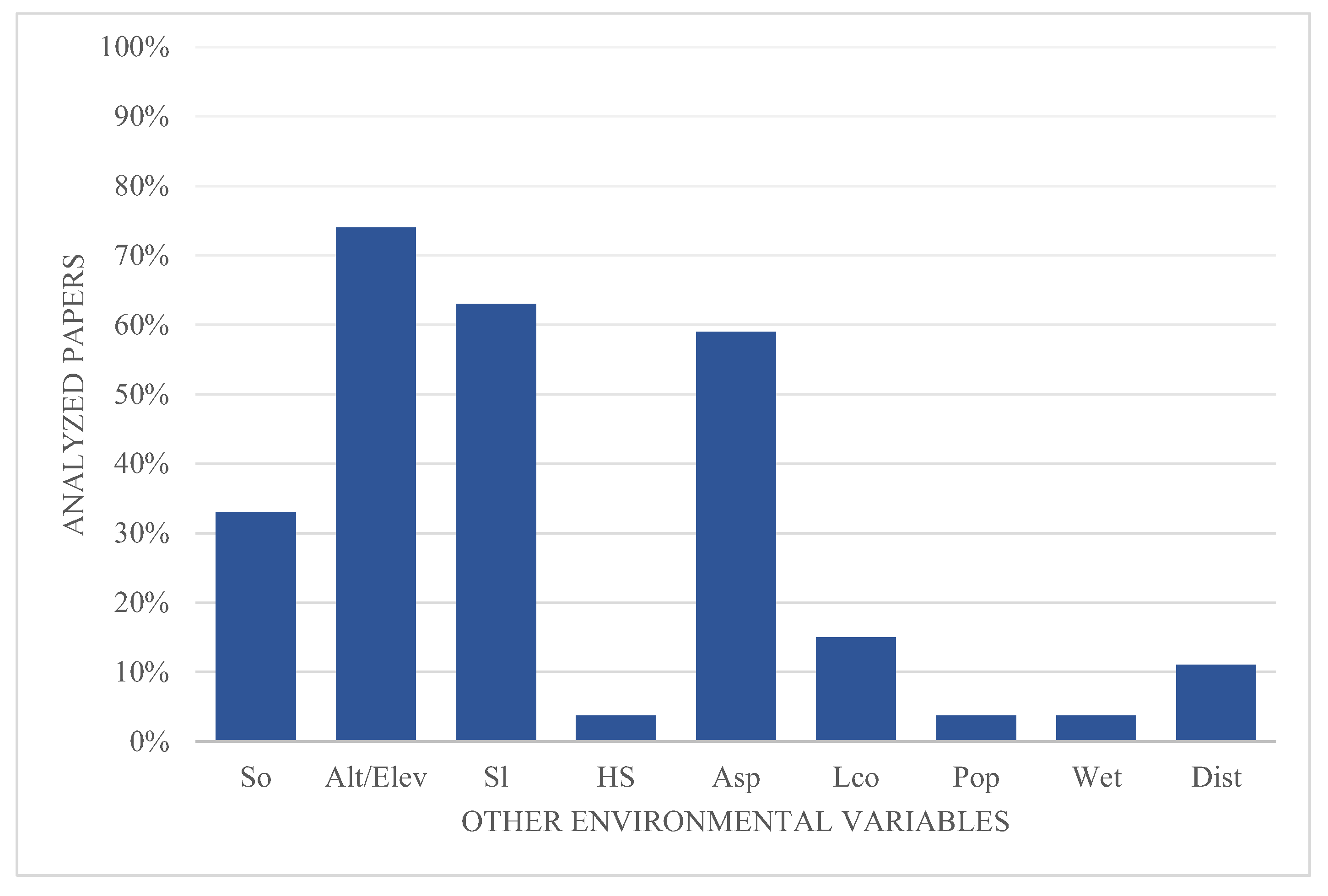

3.2.2. Other Environmental Variables

3.2.3. Variable Selection

3.3. Modelling Algorithm

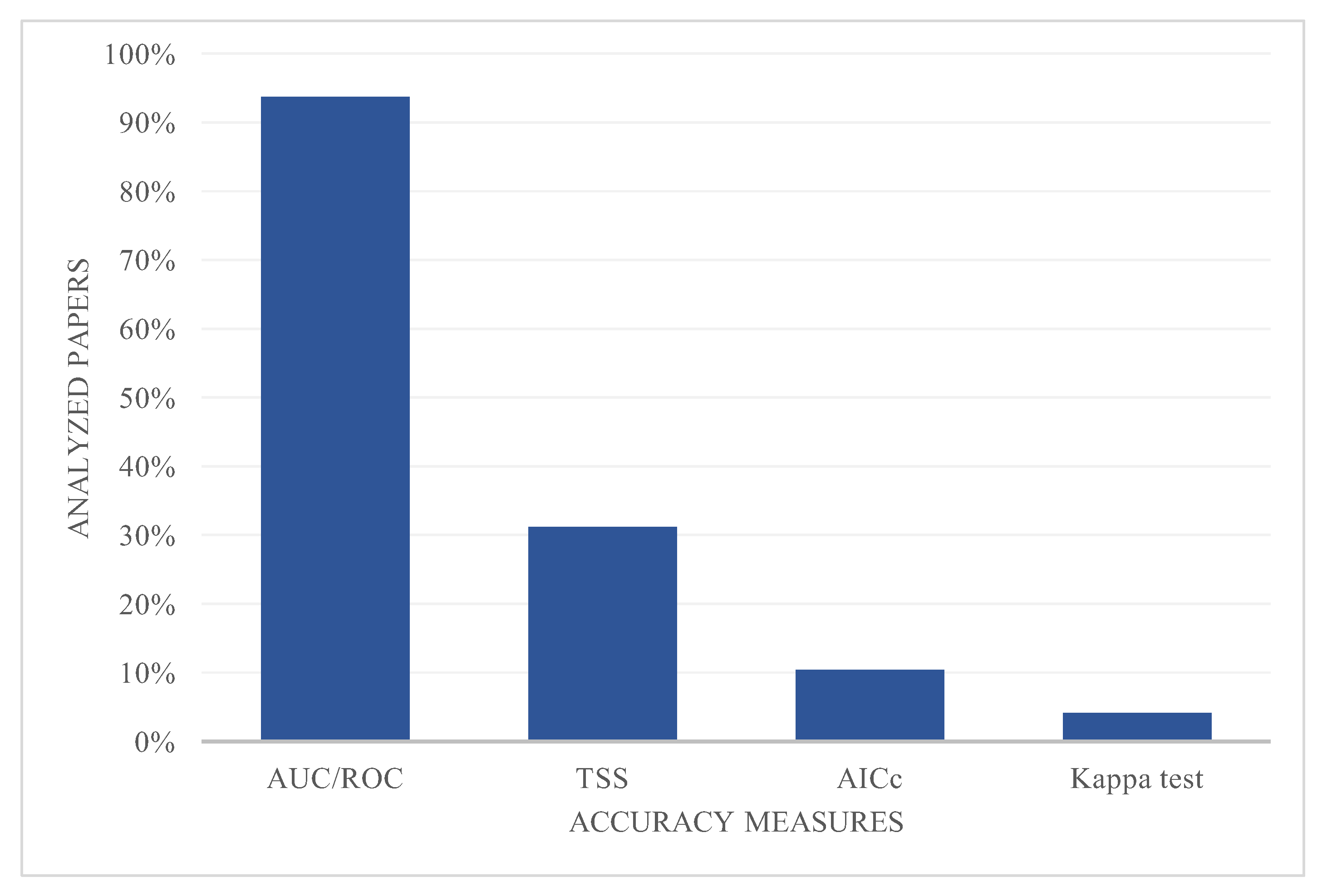

3.4. Model Performance

3.5. Ensemble Models

3.6. Future Climate Projections

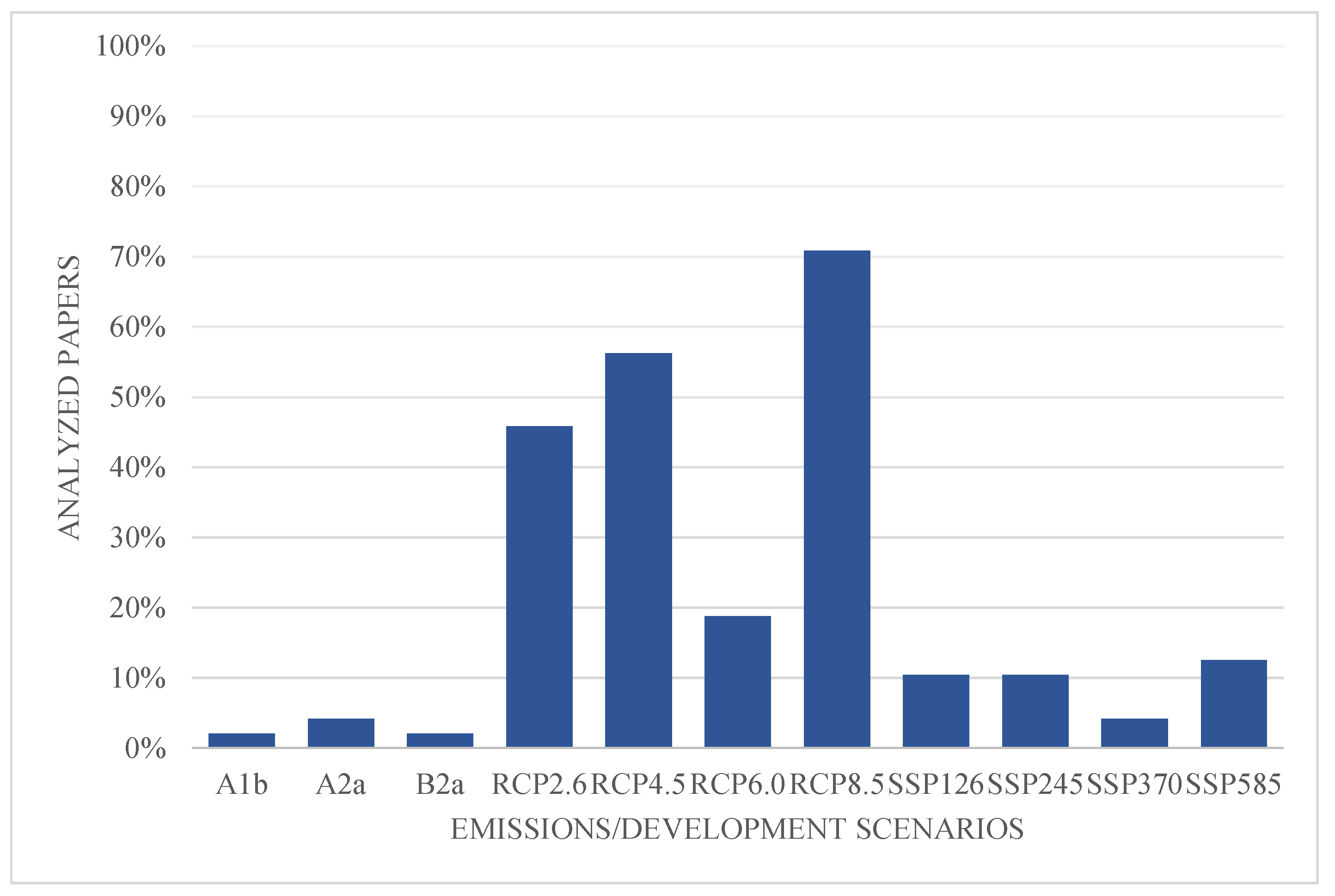

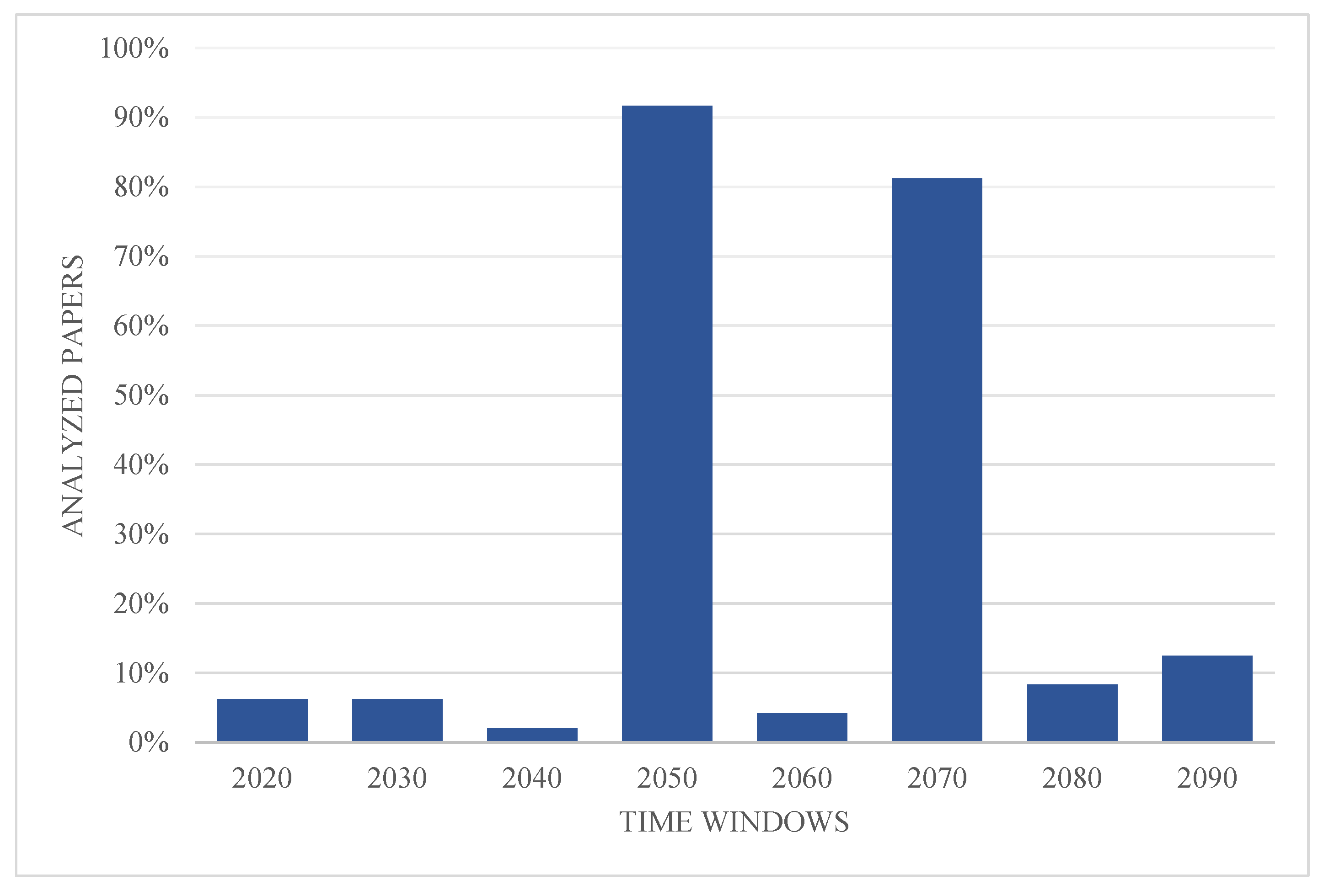

3.6.1. Climate Scenarios

4. Discussion

5. Conclusions

- Target species' natural range;

- The species' total range in the study area, including a buffer to ensure that different environmental conditions are included;

- Comparison of the study area with the species' natural range, and justification for excluding any areas from the model, where applicable;

- Species' ecological preferences according to the literature, to support variable selection;
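
The buffer and study-area comparison recommended above can be made concrete with a small check. The sketch below is a minimal illustration, not the authors' procedure: it assumes occurrence records are (longitude, latitude) pairs in decimal degrees and approximates both the species' range and the study area with rectangular extents. The names `Extent`, `buffered_extent` and `fraction_outside`, the 1° buffer and the example records are all hypothetical; real analyses would more likely use range polygons and a projected coordinate system.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float]  # (longitude, latitude) in decimal degrees (assumed)


@dataclass(frozen=True)
class Extent:
    """Hypothetical rectangular extent in decimal degrees."""
    min_lon: float
    min_lat: float
    max_lon: float
    max_lat: float

    def contains(self, lon: float, lat: float) -> bool:
        return (self.min_lon <= lon <= self.max_lon
                and self.min_lat <= lat <= self.max_lat)


def buffered_extent(points: Iterable[Point], buffer_deg: float) -> Extent:
    """Bounding box of the occurrence records, widened by a fixed buffer.

    The buffer is in degrees and clamped to valid coordinates. A degree-based
    buffer is a simplification: a degree of longitude shrinks towards the poles.
    """
    pts = list(points)
    if not pts:
        raise ValueError("at least one occurrence record is required")
    lons = [lon for lon, _ in pts]
    lats = [lat for _, lat in pts]
    return Extent(
        min_lon=max(min(lons) - buffer_deg, -180.0),
        min_lat=max(min(lats) - buffer_deg, -90.0),
        max_lon=min(max(lons) + buffer_deg, 180.0),
        max_lat=min(max(lats) + buffer_deg, 90.0),
    )


def fraction_outside(points: Iterable[Point], study_area: Extent) -> float:
    """Share of range-wide occurrence records lying outside the study area."""
    pts = list(points)
    if not pts:
        raise ValueError("at least one occurrence record is required")
    outside = sum(1 for lon, lat in pts if not study_area.contains(lon, lat))
    return outside / len(pts)


# Made-up records: one inside a small national study area, three elsewhere
# in the species' (hypothetical) range.
records = [(-8.0, 40.0), (-3.5, 37.2), (2.1, 41.4), (10.5, 43.8)]
national_area = Extent(-9.5, 36.9, -6.2, 42.2)

print(buffered_extent(records, buffer_deg=1.0))
print(fraction_outside(records, national_area))  # 0.75
```

A share of records outside the study area well above zero is the situation the third recommendation targets: the model would be fitted to only part of the species' environmental niche, so the exclusion should be stated and justified.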

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


| General Circulation Model (GCM) | Climate Research Centre (CRC) | Country | Documents by GCM (%) | Documents by CRC (%) |
|---|---|---|---|---|
| ACCESS1-0 | Australian Community Climate and Earth System Simulator Coupled Model | Australia | 2.1 | 2.1 |
| AFRICLIM | York Institute for Tropical Ecosystems (KITE) and Kenya Meteorological Service | Kenya | 4.3 | 4.3 |
| BCC-CSM1.1 | Beijing Climate Centre Climate System Model | China | 12.8 | 25.5 |
| BCC-CSM2-MR | | | 12.8 | |
| CanESM5 | Canadian Earth System Model | Canada | 2.1 | 2.1 |
| CCAFS | CCAFS-Climate Statistically Downscaled Delta Method | Colombia | 6.4 | 6.4 |
| CCCMA | Canadian Centre for Climate Modelling and Analysis | Canada | 2.1 | 2.1 |
| CCSM4 | National Science Foundation (NSF) and National Centre for Atmospheric Research (NCAR) | United States | 29.8 | 31.9 |
| CCSM5 | | | 2.1 | |
| CGCM3.1-T63 | Canadian Centre for Climate Modelling and Analysis | Canada | 2.1 | 2.1 |
| CNRM-CM5-1 | CNRM (Centre National de Recherches Météorologiques, Groupe d'études de l'Atmosphère Météorologique) and Cerfacs (Centre Européen de Recherche et de Formation Avancée en Calcul Scientifique) | France | 2.1 | 12.8 |
| CNRM-CM6-1 | | | 4.3 | |
| CNRM-ESM2-1 | | | 6.4 | |
| CSIRO | Commonwealth Scientific and Industrial Research Organisation | Australia | 2.1 | 6.4 |
| CSIRO-MK3.6 | | | 4.3 | |
| GFDL-CM3 | Geophysical Fluid Dynamics Laboratory (GFDL) | United States | 4.3 | 4.3 |
| GISS-E2-R | Goddard Institute for Space Studies (GISS - NASA) | United States | 2.1 | 2.1 |
| HadCM3 | UK Meteorological Office | United Kingdom | 2.1 | 40.4 |
| HadGEM2-AO | | | 4.3 | |
| HadGEM2-ES | | | 26.1 | |
| HadGEM-CC | | | 4.3 | |
| HadGEM-IS | | | 2.1 | |
| IPSL-CM5A-LR | Institut Pierre-Simon Laplace (IPSL) | France | 2.1 | 4.3 |
| IPSL-CM6A-LR | | | 2.1 | |
| MIROC5 | Center for Climate System Research (CCSR), National Institute for Environmental Studies (NIES) and Japan Agency for Marine-Earth Science and Technology | Japan | 6.4 | 14.9 |
| MIROC6 | | | 2.1 | |
| MIROC-ES2L | | | 4.3 | |
| MIROC-ESM | | | 2.1 | |
| MPI-ESM-LR | Max Planck Institute for Meteorology | Germany | 2.1 | 2.1 |
| MRI-CGCM3 | Meteorological Research Institute (MRI) | Japan | 8.5 | 12.8 |
| MRI-ESM2-0 | | | 4.3 | |
| NorESM1-M | Norwegian Earth System Model (NorESM) | Norway | 2.1 | 2.1 |
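
The table reports one percentage per GCM and one per research centre. The sketch below shows one plausible way the two columns relate, assuming that each document is counted once per centre even when it uses several of that centre's GCMs; the source does not state its counting rule, and the function name `usage_shares`, the data layout and the example records are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Mapping, Set, Tuple


def usage_shares(
    documents: Mapping[str, Set[str]],
    centre_of: Mapping[str, str],
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Percentage of documents that use each GCM and each research centre.

    documents: document id -> set of GCM names used in that document.
    centre_of: GCM name -> climate research centre that developed it.
    A document is counted once per centre, however many of that centre's
    GCMs it uses.
    """
    n_docs = len(documents)
    docs_by_gcm: Dict[str, Set[str]] = defaultdict(set)
    docs_by_centre: Dict[str, Set[str]] = defaultdict(set)
    for doc_id, gcms in documents.items():
        for gcm in gcms:
            docs_by_gcm[gcm].add(doc_id)
            docs_by_centre[centre_of[gcm]].add(doc_id)

    def as_percent(groups: Dict[str, Set[str]]) -> Dict[str, float]:
        # Rounded to one decimal place, as in the table.
        return {key: round(100 * len(ids) / n_docs, 1) for key, ids in groups.items()}

    return as_percent(docs_by_gcm), as_percent(docs_by_centre)


# Made-up example: four documents, two of which use several Met Office models.
centre_of = {
    "HadGEM2-ES": "UK Meteorological Office",
    "HadGEM2-AO": "UK Meteorological Office",
    "MIROC5": "CCSR/NIES/JAMSTEC",
}
documents = {
    "doc1": {"HadGEM2-ES", "HadGEM2-AO"},
    "doc2": {"HadGEM2-ES"},
    "doc3": {"MIROC5"},
    "doc4": {"MIROC5", "HadGEM2-ES"},
}
by_gcm, by_centre = usage_shares(documents, centre_of)
print(by_gcm["HadGEM2-ES"], by_gcm["HadGEM2-AO"])  # 75.0 25.0
print(by_centre["UK Meteorological Office"])        # 75.0
```

Under this counting rule, a centre's share lies between the largest share among its GCMs and the sum of their shares; it equals the sum only when no document uses more than one model from that centre.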

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).