Submitted: 14 July 2024

Posted: 16 July 2024

Abstract

Keywords:

1. Introduction

1.1. Background and Motivation

1.2. Literature Overview

1.3. Problem Statement

2. Technical Exposition

2.1. Description of Dataset

2.2. Data Preprocessing

Missing Value Handling

Outlier Handling

Algorithm 1 Nested Clustered Optimization

return: final optimal weights allocated on each asset

3. Feature Engineering

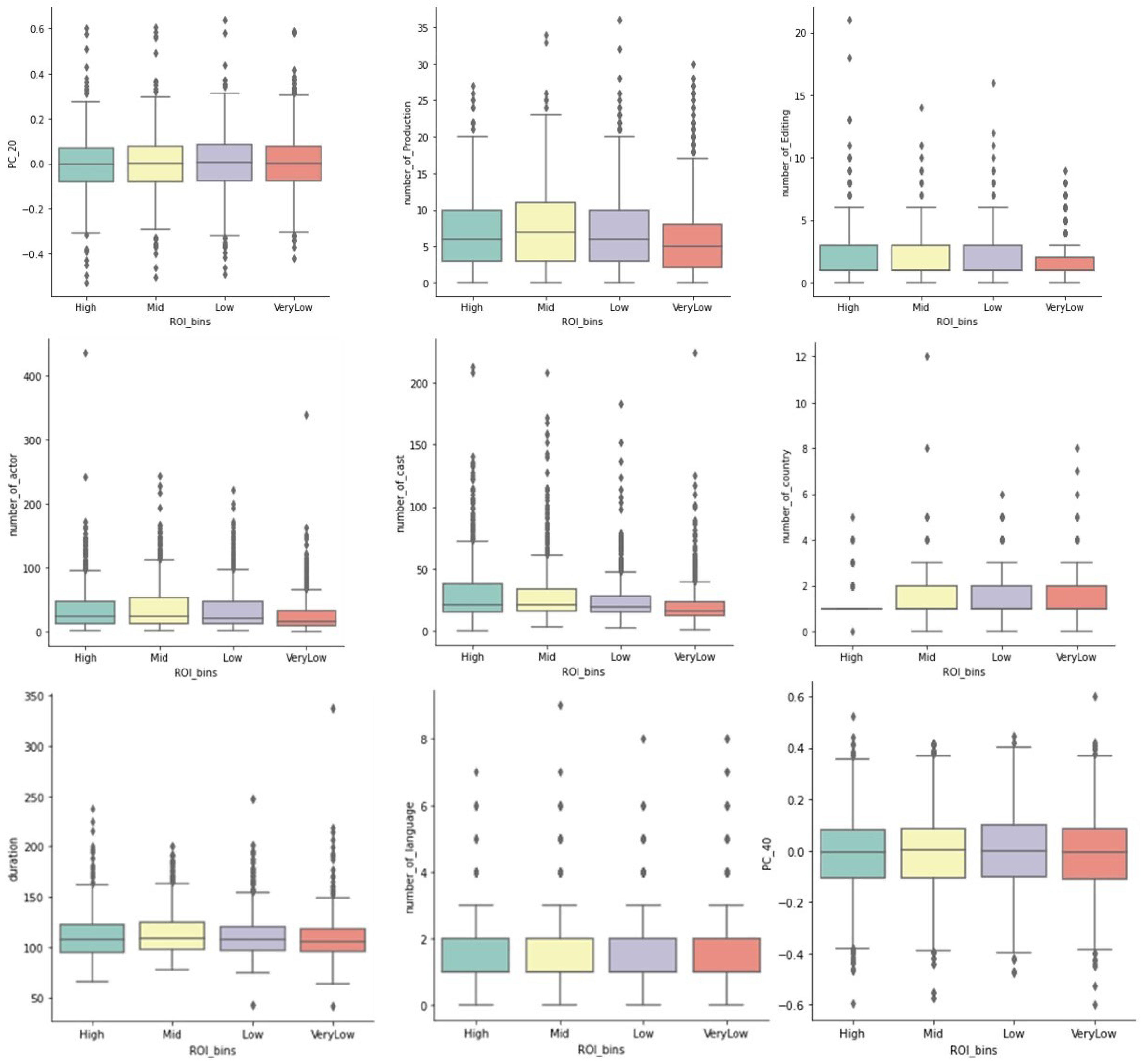

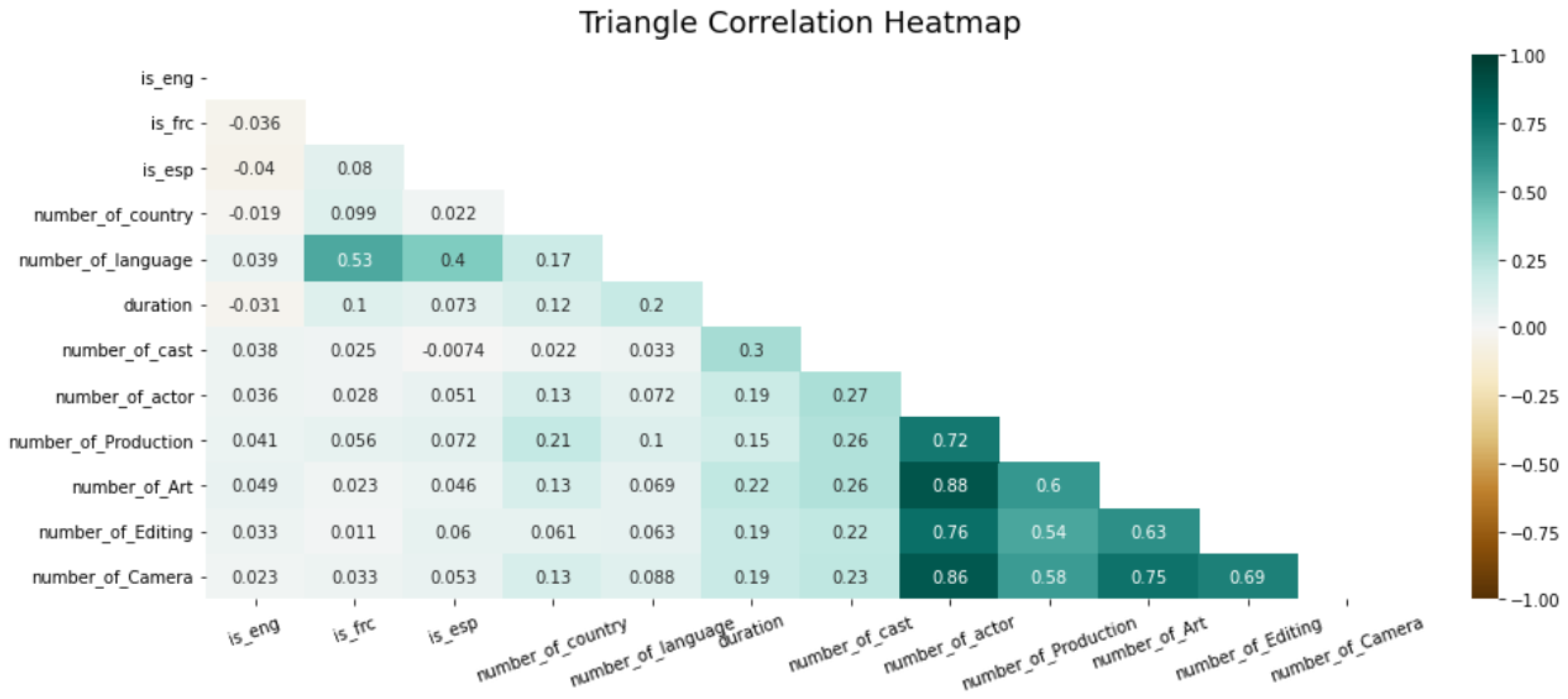

3.1. Exploratory Data Analysis

4. Methodology

4.1. Independent and Dependent Variables

4.2. Models and Model Selection

4.2.1. Evaluation Metrics

4.3. Latent Semantic Analysis

Algorithm 2 LSA based on TF-IDF and PCA
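The steps of Algorithm 2 are not reproduced here, so the following is only a minimal sketch of what an LSA pipeline built from TF-IDF and PCA could look like. It assumes whitespace tokenization, a plain `tf * log(N / df)` weighting, and PCA computed by power iteration with deflation; the function names `tfidf_matrix` and `pca_scores`, the toy documents, and the choice of two components are illustrative assumptions, not the authors' implementation.

```python
import math
import random
from collections import Counter

def tfidf_matrix(docs):
    """Return (vocab, rows); rows[i][j] is the TF-IDF weight of term j in document i."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    # Document frequency: in how many documents each term appears.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    n_docs = len(docs)
    rows = []
    for toks in tokenized:
        tf = Counter(toks)
        # Relative term frequency times log inverse document frequency.
        rows.append([tf[t] / len(toks) * math.log(n_docs / df[t]) for t in vocab])
    return vocab, rows

def pca_scores(rows, n_components, n_iter=200, seed=0):
    """Score each row on the leading principal components (power iteration + deflation)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    x = [[r[j] - means[j] for j in range(d)] for r in rows]  # column-centered data
    # Sample covariance matrix of the features (d x d).
    cov = [[sum(xi[a] * xi[b] for xi in x) / (n - 1) for b in range(d)] for a in range(d)]
    rng = random.Random(seed)
    components = []
    for _component in range(n_components):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        start_norm = math.sqrt(sum(c * c for c in v))
        v = [c / start_norm for c in v]
        for _step in range(n_iter):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(c * c for c in w))
            if norm < 1e-12:
                break  # no variance left to explain
            v = [c / norm for c in w]
        # Rayleigh quotient gives the eigenvalue for the converged direction.
        eigval = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        components.append(v)
        # Deflate so the next pass finds the next-largest component.
        cov = [[cov[a][b] - eigval * v[a] * v[b] for b in range(d)] for a in range(d)]
    return [[sum(xi[j] * comp[j] for j in range(d)) for comp in components] for xi in x]

# Toy documents (made up for illustration).
docs = [
    "space alien ship",
    "space alien war",
    "love wedding family",
    "love family drama",
]
vocab, weights = tfidf_matrix(docs)
scores = pca_scores(weights, n_components=2)
for doc, row in zip(docs, scores):
    print(doc, [round(s, 4) for s in row])
```

On this toy corpus the first component separates the two science-fiction texts from the two romance texts, which is the kind of latent topic score that could then be fed to a classifier as a numerical feature.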

4.4. Logistic Regression

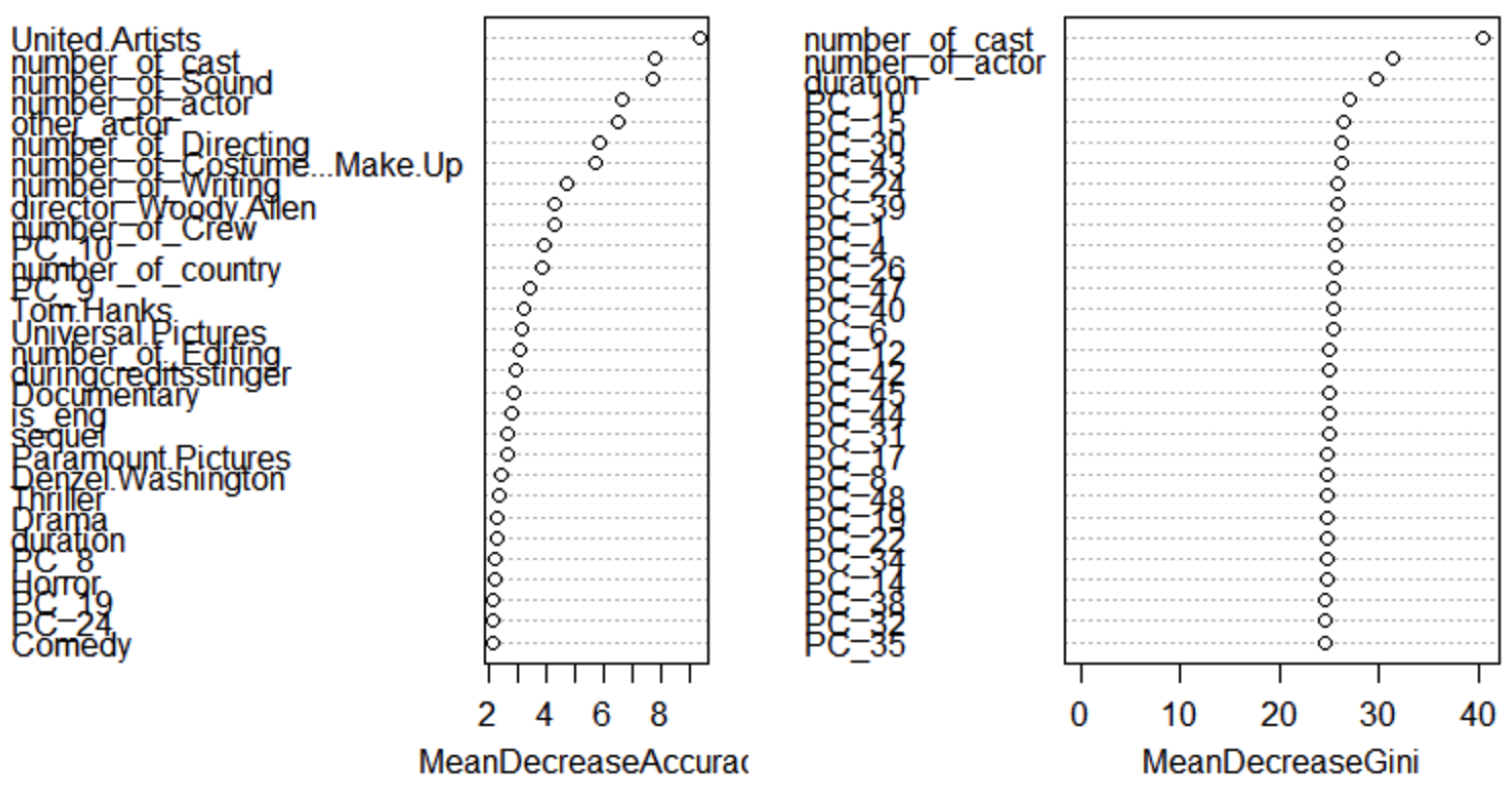

4.5. Tree-Based Models

4.6. Bayesian Model

5. Analysis of Results

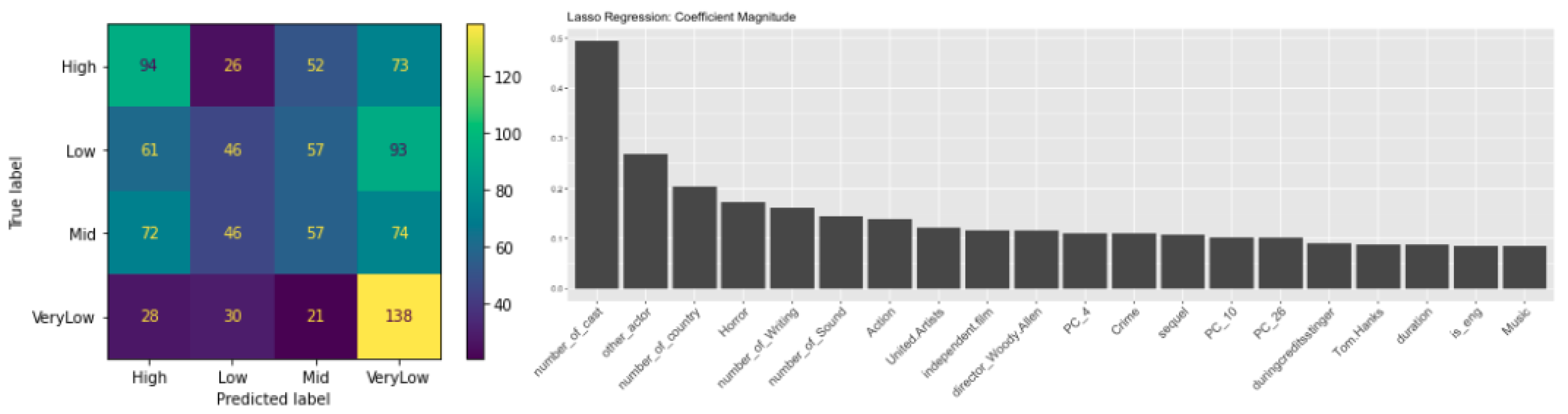

5.1. Linear Models

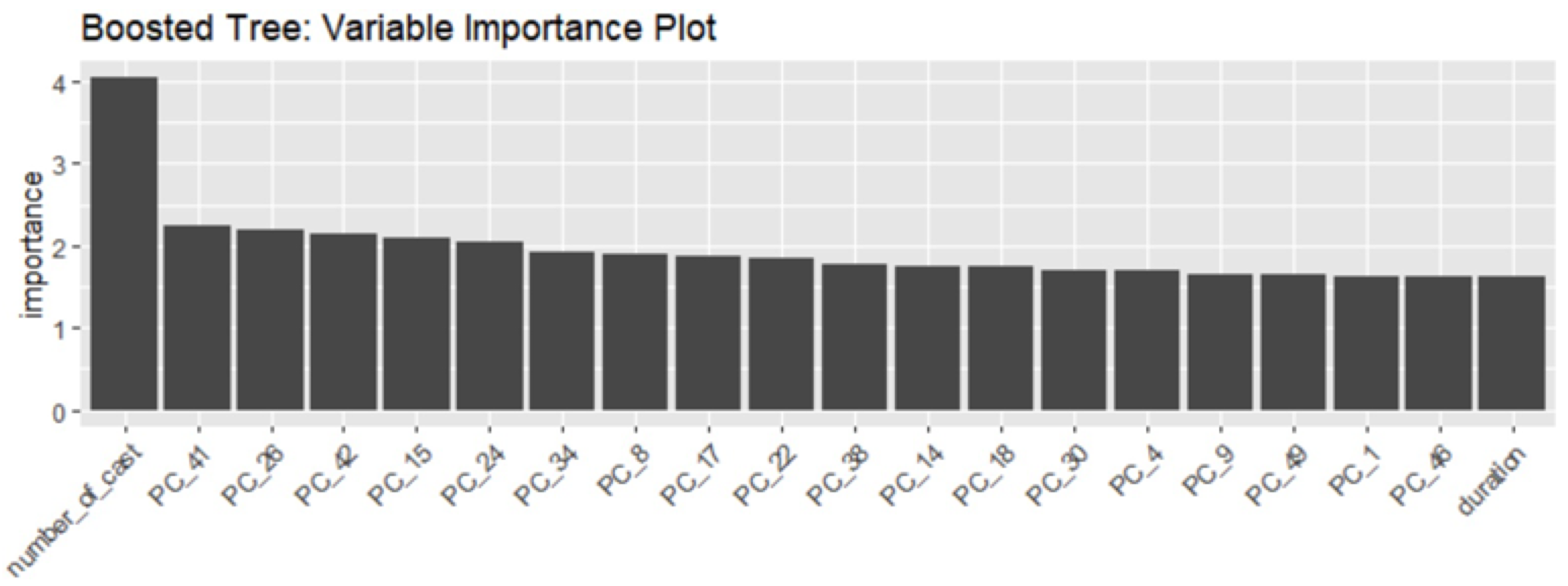

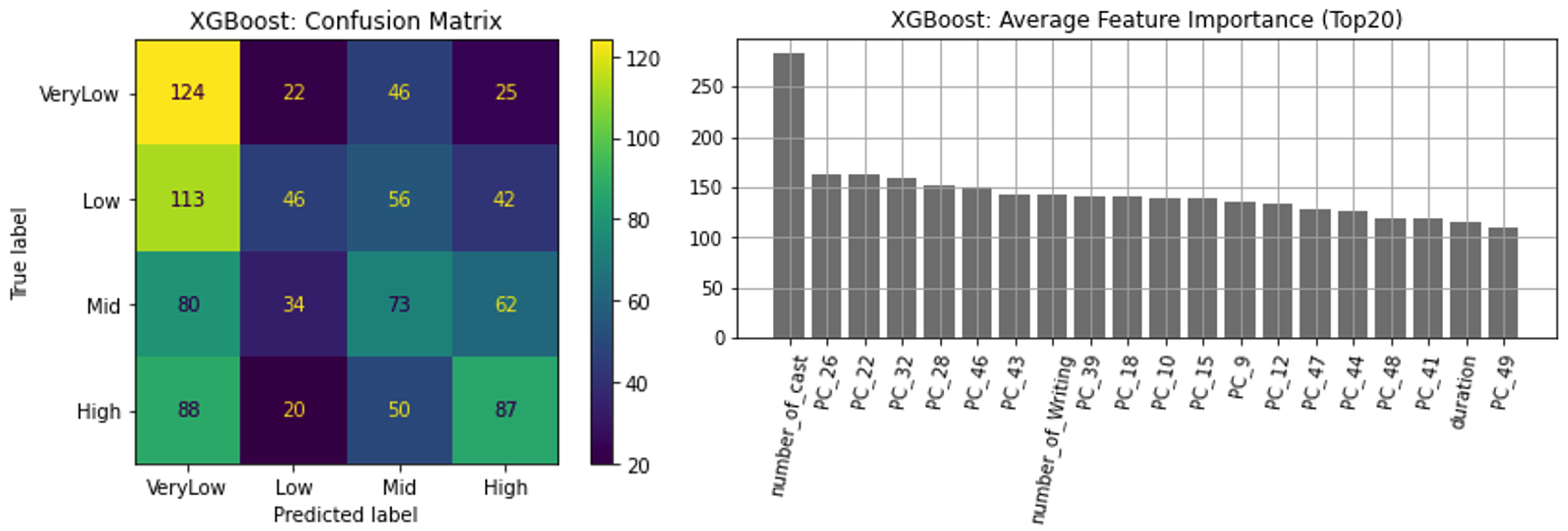

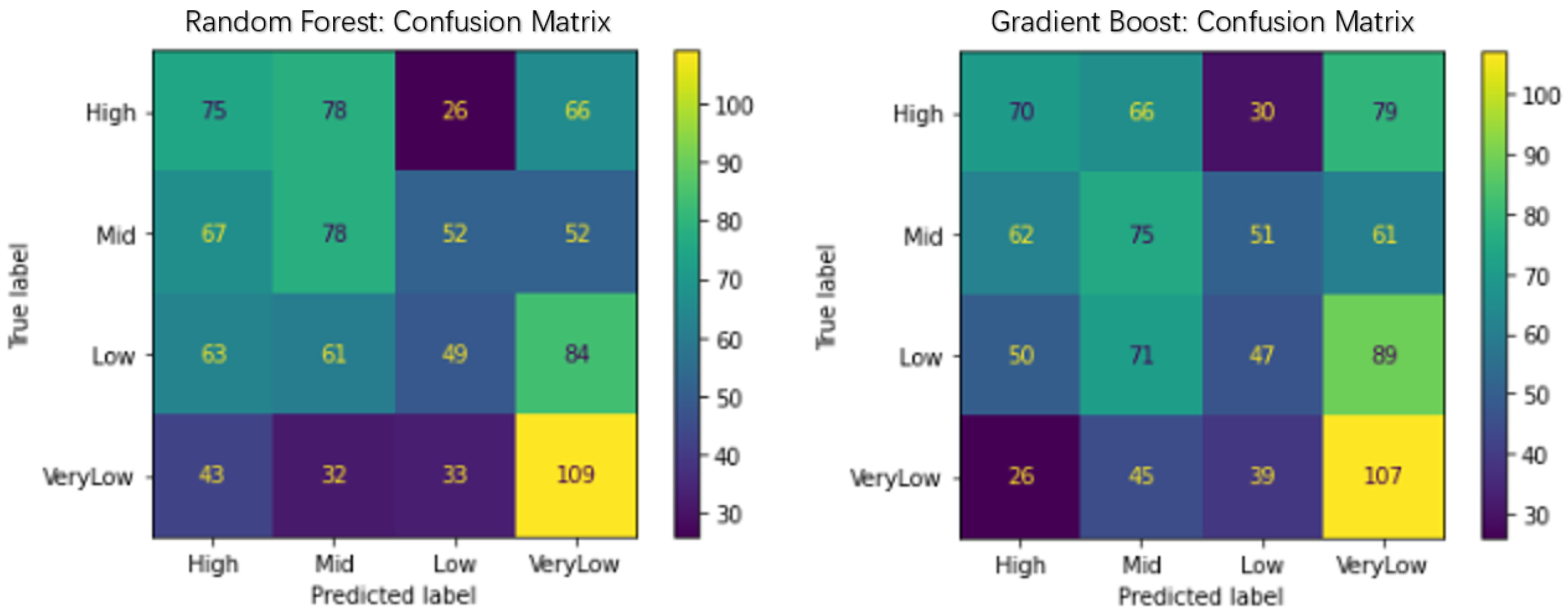

5.2. Tree-Based Models

5.3. Comparison of Models

6. Conclusion



| Variables | Total | Fraction |
|---|---|---|
| homepage | 3091 | 0.643556 |
| companies_3 | 2479 | 0.516136 |
| companies_2 | 1417 | 0.295024 |
| tagline | 844 | 0.175724 |
| plot_keywords | 412 | 0.085780 |
| companies_1 | 351 | 0.073079 |
| actor_3_name | 63 | 0.013117 |
| actor_2_name | 53 | 0.011035 |
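A per-variable summary of this kind (a count and its share of all records) can be produced with a few lines of code. The sketch below assumes the table counts missing entries and that `None` and empty strings both count as missing; the helper name `missing_summary` and the toy records are hypothetical and only reuse three column names from the table.

```python
def missing_summary(rows, columns):
    """Count missing entries per column and express them as a share of all rows."""
    n = len(rows)
    summary = []
    for col in columns:
        # Assumption: None and empty strings are both treated as missing.
        total = sum(1 for row in rows if row.get(col) in (None, ""))
        summary.append((col, total, total / n if n else 0.0))
    # Most incomplete variables first, matching the ordering of the table.
    summary.sort(key=lambda item: item[1], reverse=True)
    return summary

# Toy records; the values are made up for illustration.
movies = [
    {"homepage": None, "tagline": "A tale", "actor_3_name": "A"},
    {"homepage": "http://example.com", "tagline": "", "actor_3_name": "B"},
    {"homepage": None, "tagline": "Another", "actor_3_name": "C"},
    {"homepage": None, "tagline": None, "actor_3_name": None},
]
result = missing_summary(movies, ["homepage", "tagline", "actor_3_name"])
for name, total, share in result:
    print(f"{name:15s} {total:5d} {share:.6f}")
```

The third column is a fraction between 0 and 1 rather than a percentage, which matches the scale of the values reported in the table.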

| Role | Variable |
|---|---|
| Dependent | ROI Levels (categorical): divided by quartiles, {'very low', 'low', 'mid', 'high'} |
| Independent | Basic Information (numerical): duration, number of cast members, etc. |
| | Production Company (dummy): top 10 most frequent companies |
| | Actor (dummy): top 100 most frequent actors |
| | Director (dummy): top 18 most frequent directors |
| | PC (numerical): 50 scores of the corresponding PCs derived from LSA |
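Two of the constructions in this table lend themselves to a short illustration: cutting ROI into four quartile-based levels, and building dummy variables for the most frequent categories. The sketch below is one possible reading, not the authors' code. It assumes ROI values are already computed, uses the default (exclusive) method of `statistics.quantiles` for the cut points, and sets a dummy to 1 when a movie lists the company at all; `roi_levels`, `top_k_dummies`, and the toy data are hypothetical.

```python
from collections import Counter
from statistics import quantiles

LEVELS = ["very low", "low", "mid", "high"]

def roi_levels(roi_values):
    """Label each ROI value by the quartile it falls into."""
    q1, q2, q3 = quantiles(roi_values, n=4)  # three quartile cut points
    labels = []
    for value in roi_values:
        if value <= q1:
            labels.append(LEVELS[0])
        elif value <= q2:
            labels.append(LEVELS[1])
        elif value <= q3:
            labels.append(LEVELS[2])
        else:
            labels.append(LEVELS[3])
    return labels

def top_k_dummies(groups, k):
    """Build 0/1 indicator columns for the k most frequent names across all groups."""
    counts = Counter(name for group in groups for name in group)
    top = [name for name, _ in counts.most_common(k)]
    rows = [[1 if name in group else 0 for name in top] for group in groups]
    return top, rows

# Toy data, made up for illustration.
roi = [0.1, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 8.0]
labels = roi_levels(roi)
print(list(zip(roi, labels)))

companies = [["A", "B"], ["A"], ["C", "A"], ["B"]]
top, dummies = top_k_dummies(companies, k=2)
print(top, dummies)
```

Restricting the dummies to the top k names keeps the feature count bounded; every less frequent company, actor, or director simply maps to an all-zero row.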

| Models | Total Accuracy | HPR |
|---|---|---|
| Logistic Regression | 33.68% | 62.98% |
| Lasso Logistic Regression | 34.61% | 65.10% |
| Random Forest | 32.13% | 57.26% |
| Gradient Boost | 30.89% | 63.46% |
| LightGBM (XGBoost) | 34.10% | 68.98% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).