1. Introduction

Time series forecasting with Artificial Intelligence has been a prominent research area for many years. Historical data on electricity, traffic, finance, and weather are frequently used to train models for various applications. Some of the earlier techniques in time series forecasting relied on statistics and mathematical models like SARIMA [

1,

2,

3], and TBATs [

4]. These used moving average and seasonal cycles to capture the patterns for future prediction. With the advent of machine learning, new approaches using Linear Regression [

5] were developed. Here, a grouping-based quadratic mean loss function is incorporated to improve linear regression performance in time series prediction. Another approach in machine learning is based on an ensemble of decision trees termed XGBoost [

6]. This uses Gradient Boosted Decision Trees (GBDT) where each new tree focuses on correcting the prediction errors of the preceding trees.

Deep learning introduced some newer approaches. Some of the earlier techniques used, Recurrent Neural Networks (RNNs) [

7] with varying architectures based on Elman RNN, LSTM (Long Short-Term Memory), and GRU (Gated Recurrent Units). These designs capture sequential dependencies and long-term patterns in the data [

8]. The recurrent approaches were followed by use of Convolutional Neural Networks (CNNs) in time series e.g., [

9,

10,

11]. In recent years, transformer-based architectures have become the most popular approach for Natural Language Processing (NLP). Their success in NLP has given rise to the possibility of using them in other domains such as image processing, speech recognition, as well as time series forecasting. Some of the popular transformer-based approached to time series include [

12,

13,

14,

15,

16,

17,

18]. Of these, Informer [

12] introduces a ProbSparse self-attention mechanism with distillation techniques for efficient key extraction. Autoformer [

13] incorporates decomposition and auto-correlation concepts from classic time series analysis. FEDformer [

14] leverages a Fourier enhanced structure for linear complexity. One of the recent transformer-based architectures termed as PatchTST [

16] breaks down a time series into smaller segments to be used as input tokens for the model. Another recent design iTransformer [

18] independently inverts the embedding of each time series variate. The time points of individual series are embedded into variate tokens which are utilized by the attention mechanism to capture multivariate correlations. Further, the feed-forward network is applied for each variate token to learn nonlinear representations. While the above mention designs have shown effective results, transformers face challenges in time series forecasting due to their difficulty in modeling non-linear temporal dynamics, order sensitivity, and high computational complexity for long sequences. Noise susceptibility and handling long-term dependencies further complicate their use in fields involving volatile data such as financial forecasting. Different transformer-based designs such as Autoformer, Informer, and FEDformer aim to mitigate the above issues, but often at the cost of some information loss and interpretability.

As a result, some recent time series research tried to explore approaches other than Transformer based designs. These include LTSF-Linear [

19], ELM [

20], and Timesnet [

21]. LTSF-Linear is extremely simple and uses a single linear layer. It outperforms many Transformer-based models such as Informer, Autoformer, and FEDformer [

12,

13,

14] on the popular time series forecasting benchmarks. TimesNet [

20] uses modular TimesBlocks and an inception block to transform 1D time series into 2D, effectively handling variations within and across periods for multi-periodic analysis. ELM further improves the LTSF-Linear by incorporating dual pipelines with batch normalization and reversible instance normalization. With the recent popularity of state-space approaches [

22], some research in time series has explored these ideas and have achieved promising results e.g., SpaceTime [

23], captures autoregressive processes and includes a “closed-loop” variation for extended forecasting.

The success of LTSF-Linear [

19] and ELM [

20], with straightforward linear architectures, in outperforming more complex transformer-based models has prompted a reevaluation of approaches to time series forecasting. This unexpected outcome challenges the assumption that increasingly sophisticated architectures necessarily lead to better prediction performance. In light of these findings, we propose enhancements to a recently proposed improved LSTM based architecture termed xLSTM. We adapt and improve xLSTM for time series forecasting and term our architecture as xLSTMTime. This model incorporates exponential gating and a revised memory structure, designed to improve performance and scalability in time series forecasting tasks. We compare our xLSTMTime against various state-of-the-art time series prediction models across multiple real-world datasets, and demonstrate its superior performance highlighting the potential of refined recurrent architectures in this domain.

2. Related Work

While LSTM was one of first popular deep learning approaches with applications to NLP, it was over shadowed by the success of transformers. Recently, this architecture was revisited and greatly improved. The revised LSTM is termed as xLSTM - Extended Long Short-Term Memory [

24]. It presents enhancements to the traditional LSTM architecture aimed at boosting its performance and scalability for large language models. Key advancements include the introduction of exponential gating for better normalization and stabilization, a revised memory structure featuring scalar and matrix variants, and the integration into residual block backbones. These improvements allow xLSTM to perform competitively with state-of-the-art Transformers [

25], and State Space Models [

22]. xLSTM has two architecture variations which are termed sLSTM and mLSTM, as explain below.

2.1. sLSTM

The stabilized Long Short-Term Memory (sLSTM) [

24] model is an advanced variant of the traditional LSTM architecture that incorporates exponential gating, memory mixing, and stabilization mechanisms. These enhancements improve the model’s ability to make effective storage decisions, handle rare token prediction in NLP, capture complex dependencies, and maintain robustness during training and inference. The equations describing sLSTM are as described in [

24]. We present these here for the sake of completeness of our work before describing the adaptation of these to the time series forecasting domain.

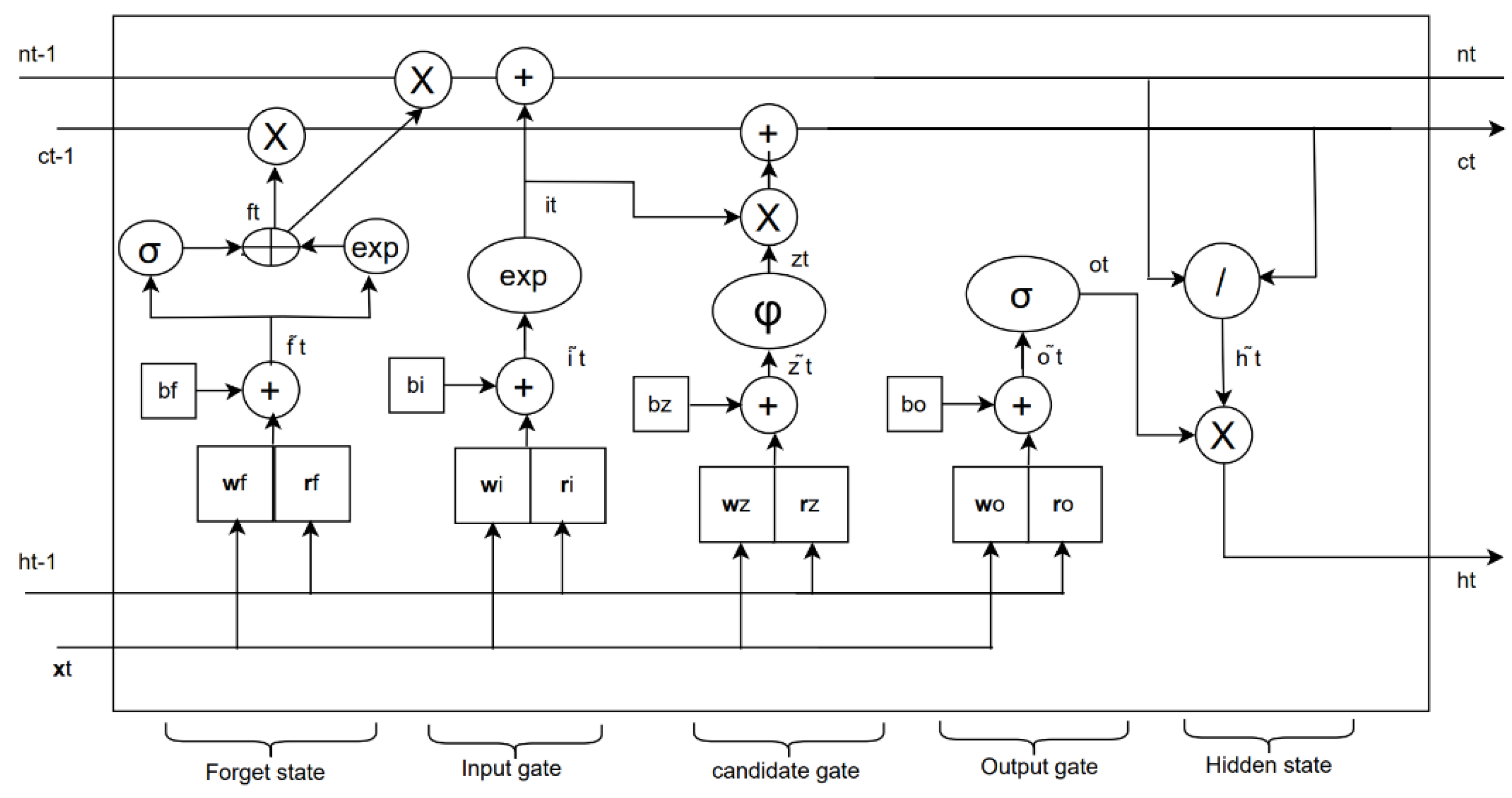

The architecture of sLSTM is depicted in

Figure 1.

For sLSTM, the recurrent relationship between the input and the state is described as:

where

is the cell state at time step

. It retains long-term memory of the network,

is the forget gate,

is the input gate, and

controls the amount of input and the previous hidden state

to be added to the cell state, as described below.

In above equations,

is input vector,

is an activation function,

is the weight matrix,

is the recurrent weight matrix, and.

represents bias.

The model also uses a normalization state as:

where

is the normalized state at time step

. It helps in normalizing the cell state updates. Hidden state

is used for recurrent connections as:

where

is the output gate. The input gate

controls the extent to which new information is added to the cell state as:

Similarly the forget gate

controls the extent to which the previous cell state

is retained.

The output gate

controls the flow of information from the cell state to the hidden state as:

where

is the weight matrix that is applied to the current input

,

is the recurrent weight matrix for the output gate that is applied to the previous hidden state

and

is the bias term for the output gate.

To provide numerical stability for exponential gates, the forget and input gates are combined into another state

as:

Where

is stabilized input gate which is a rescaled version of the original input gate. Similarly, forget gate is stabilized via

which is a rescaled version of the original forget gate as:

To summarize, compared to the original LSTM, the sLSTM adds exponential gating as indicated by equations 5 and 6. Further, use of normalization via equation 3, and finally the stabilization achieved via equations, 8, 9 and 10. These provide considerable improvements to the canonical LSTM.

2.2. mLSTM

The Matrix Long Short-Term Memory (mLSTM) model [

24] introduces a matrix memory cell along with a covariance update mechanism for key-value pair storage which significantly increases the model’s memory capacity. The gating mechanisms work in tandem with the covariance update rule to manage memory updates efficiently. By removing hidden-to-hidden connections, mLSTM operations can be executed in parallel, which speeds up both training and inference processes. These improvements make mLSTM highly efficient for storing and retrieving information, making it ideal for sequence modeling tasks that require substantial memory capacities, such as language modeling, speech recognition, and time series forecasting. mLSTM represents a notable advancement in recurrent neural networks, addressing the challenges of complex sequence modeling effectively.

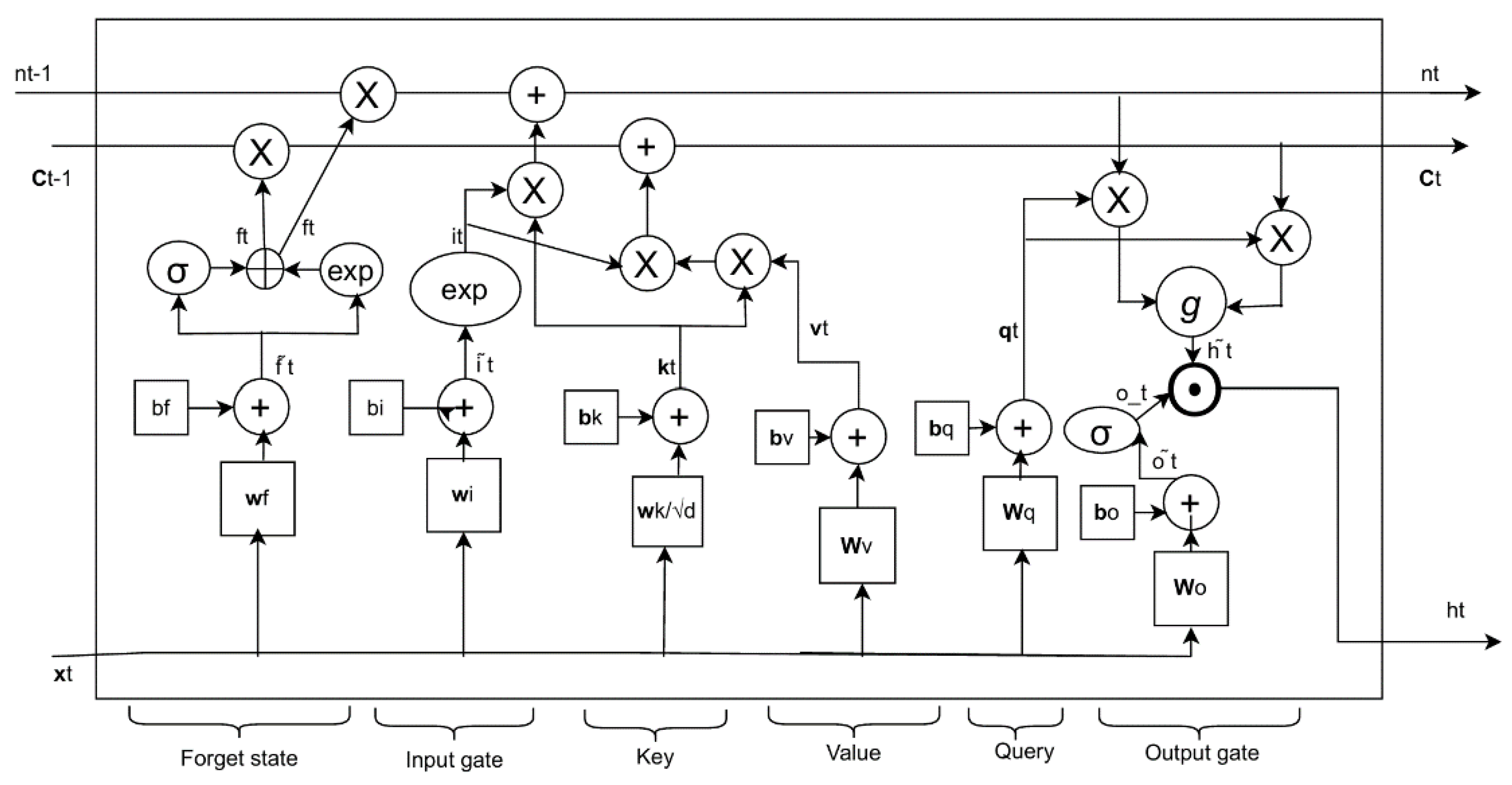

Figure 2 shows the architecture of mLSTM.

Equations 11-19 describe the operations of mLSTM [

24].

is the matrix memory that stores information in a more complex structure than the scalar cell state in a traditional LSTM. Normalization is carried out similar to sLSTM as:

Similar to the transformer architecture, query

, key

, and value

are created as:

where

is the input gate that controls the incorporation of new information into the memory. The forget gate is slightly different as compared to sLSTM as shown below. It determines how much of the previous memory

is to be retained.

The output gate is also slightly different in mLSTM as shown below.

The output gate controls how much of the retrieved memory is passed to the hidden state.

In the next section, we describe how we adapt the sLSTM and mLSTM to the time series domain.

3. Proposed Method

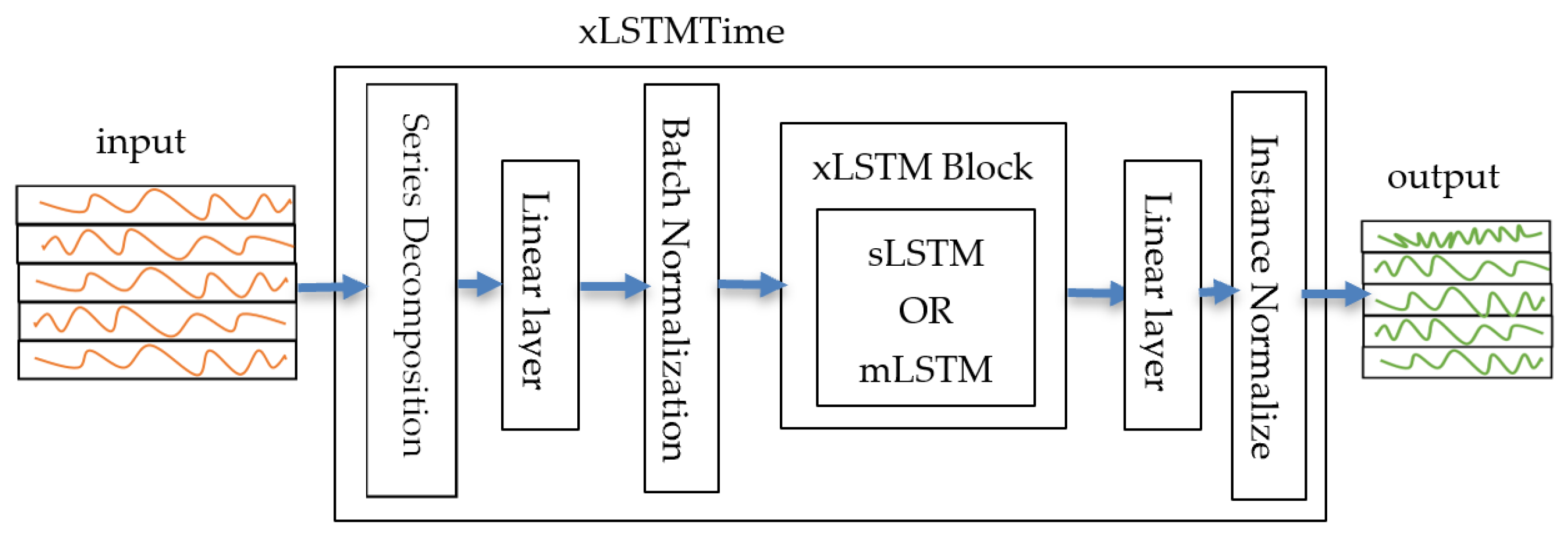

Our proposed xLSTMTime based model combines several key components to effectively handle time series forecasting tasks.

Figure 3 provides an overview of the model architecture.

The input to the model is a time series comprising of multiple sequences. The Series Decomposition block splits the input time series data into two components for each series to capture trend and seasonal information. We implement the approach as proposed in [

13] and described as follows. For the input sequence with context length of

and

number of features, i.e.,

, we apply learnable moving averages on each feature via 1-D convolutions. Then the trend and seasonal components are extracted as:

After decomposition, the data passes through a linear transformation layer to transform it to the dimensionality needed for the xLSTM modules. We further do a batch normalization [

26] to provide stability in learning before feeding the data to the xLSTM modules. Batch Normalization is a transformative technique in deep learning that stabilizes the distribution of network inputs by normalizing the activations of each layer. It allows for higher learning rates, accelerates training, and reduces the need for strict initialization and some forms of regularization like Dropout. By addressing internal covariate shift, Batch Normalization improves network stability and performance across various tasks. It introduces minimal overhead with two additional trainable parameters per layer, enabling deeper networks to train faster and more effectively. [

26]

The xLSTM block contains both the sLSTM and the mLSTM components. The sLSTM component uses scalar memory and exponential gating to manage long-term dependencies and controlling the appropriate memory for the historical information. The mLSTM component uses matrix memory and a covariance update rule to enhance storage capacity and relevant information retrieval capabilities. Depending upon the attributes of the dataset, we choose either the sLSTM or the mLSTM component. For smaller datasets such as ETTm1, ETTm2, ETTh1, ETTh2, ILI and weather, we use sLSTM, whereas for larger datasets such as Electricity, Traffic, and PeMS , the mLSTM is chosen due to its higher memory capacity in better learning for time series patterns. The output from the xLSTM block goes through another linear layer. This layer further transforms the data, preparing it for the final output via instance normalization. The Instance Normalization operates on each channel of time series independently. It normalizes the data within each channel of each component series to have a mean of 0 and a variance of 1. The formula for instance normalization for a given feature map is as follows:

where x represents the input feature map, μ(x) is the mean of the feature map and σ(x) is the standard deviation of the feature map [

27].

4. Results

We test our proposed xLSTM-based architecture on 12 widely used datasets from real-world applications. These datasets include the Electricity Transformer Temperature (ETT) series, which are divided into ETTh1 and ETTh2 (hourly intervals), and ETTm1 and ETTm2 (5-minute intervals). Additionally, we analyze datasets related to Traffic (hourly), Electricity (hourly), Weather (10-minute intervals), Influenza-Like Illness (ILI) (weekly), and Exchange Rate (daily). Another dataset PeMS (PEMS03, PEMS04, PEMS07 and PEMS08) traffic is sourced from the California Transportation Agencies (CalTrans) Performance Measurement System (PeMS).

Table 1.

Characteristics of the different datasets used.

Table 1.

Characteristics of the different datasets used.

| Datasets |

Features |

Timesteps |

Granularity |

| Weather |

21 |

52,696 |

10 min |

| Traffic |

862 |

17,544 |

1 h |

| ILI |

7 |

966 |

1 week |

| ETTh1/ ETTh2 |

7 |

17,420 |

1 h |

| ETTm1/ETTm2 |

7 |

69,680 |

5 min |

| PEMS03 |

358 |

26,209 |

5 min |

| PEMS04 |

307 |

16,992 |

5 min |

| PEMS07 |

883 |

28,224 |

5 min |

| PEMS08 |

170 |

17,856 |

5 min |

| Electricity |

321 |

26,304 |

1 h |

Each model follows a consistent experimental setup, with prediction lengths T of {96, 192, 336, 720} for all datasets except the ILI dataset. For the ILI dataset, we use prediction lengths of {24, 36, 48, 60}. The look-back window

L is 512 for all datasets except ILI dataset, for which we use

L of 96 [

16]. We use Mean Absolute Error (MAE) during the training. For evaluation, the metrics used are MSE (Mean Squared Error) and MAE (Mean Absolute Error).

Table 2 presents the results for the different benchmarks, comparing our results to the recent works in the time series field.

Table 2: Multivariate long-term forecasting outcomes with prediction intervals T = {24, 36, 48, 60} for the ILI dataset and T = {96, 192, 336, 720} for other datasets. The best results are highlighted in red, and the next best results are in blue. The lower number is better.

As can be seen from

Table 2, for a vast majority of the benchmarks, we outperform existing approaches. Only in case of Electricity and ETTh2, in a few of the prediction lengths, our results are second best.

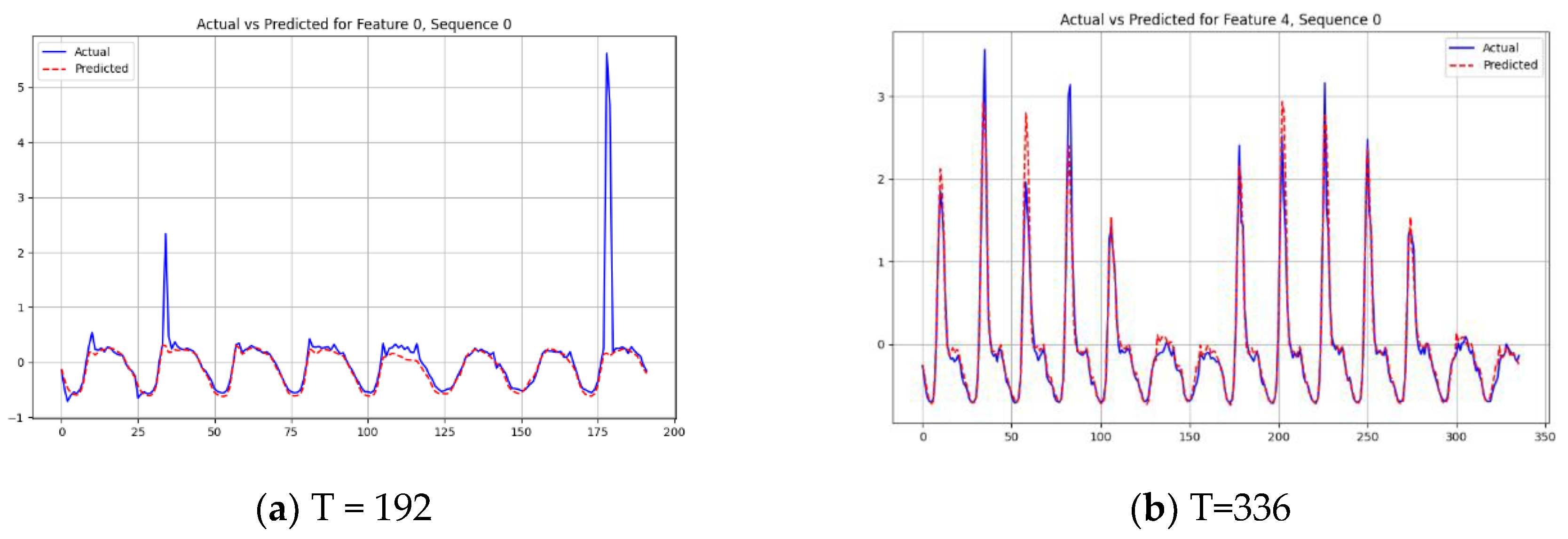

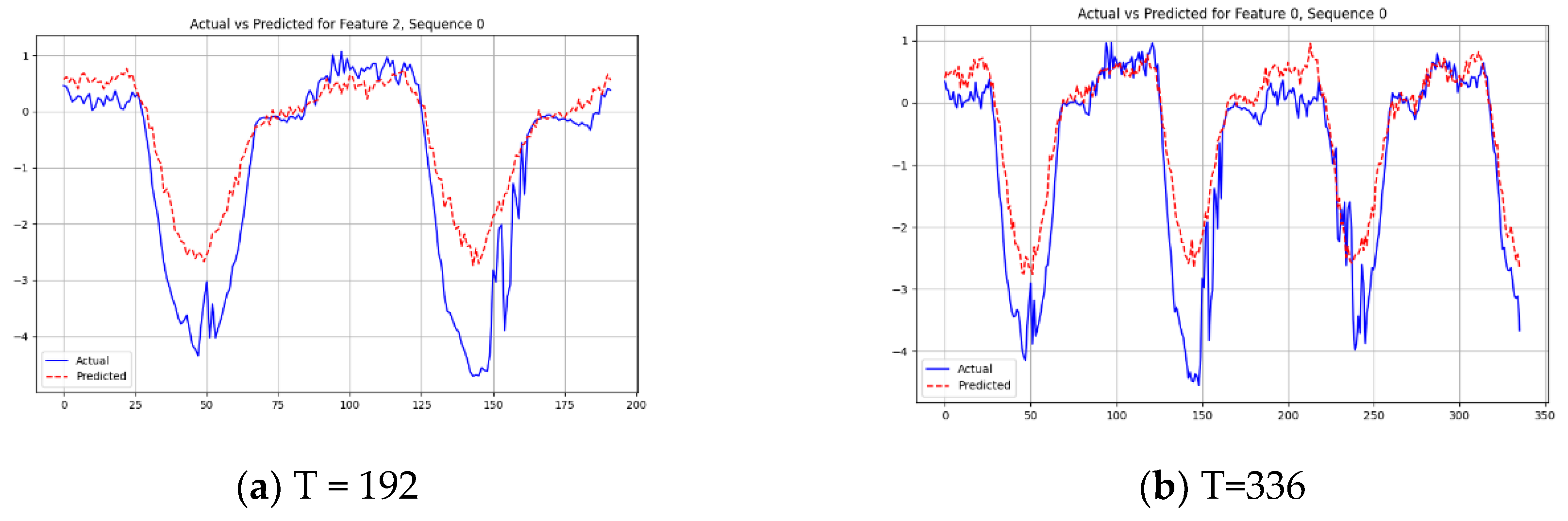

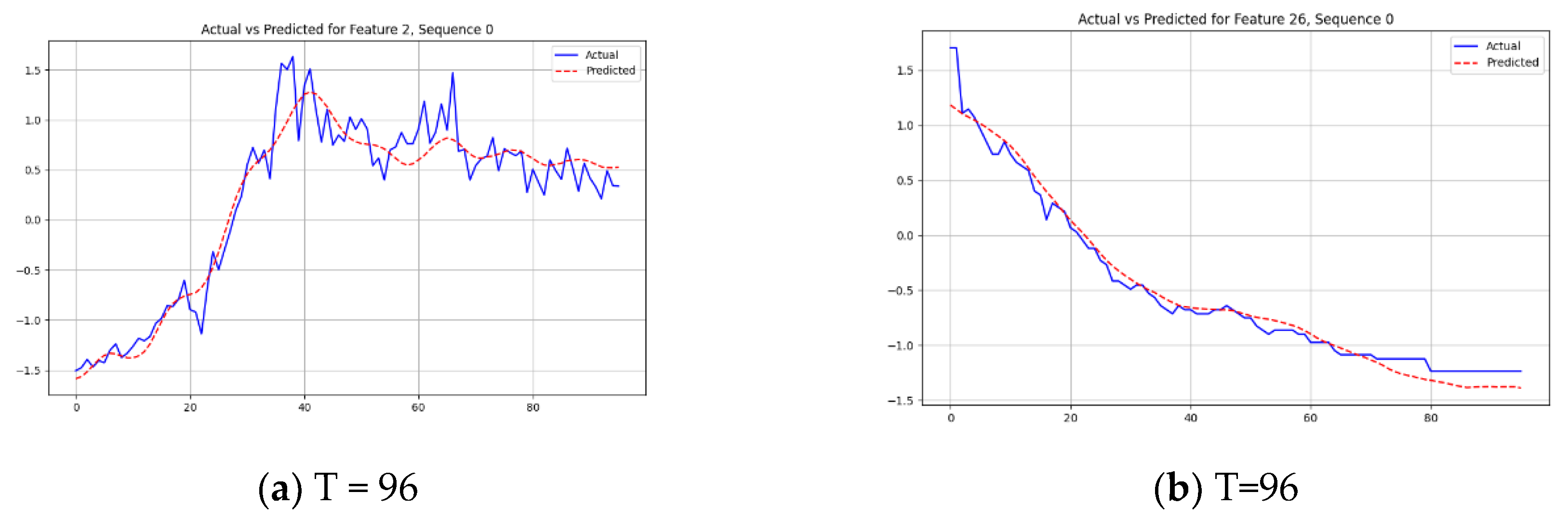

Figure 4 and

Figure 5 show the graphs for actual versus predicted time series values for a few of the datasets. As can be seen, our model learns the periodicity and the variations in the data very nicely for the most part.

Table 3 presents the comparison results for the PeMS datasets. Here our model produces either the best or the second best results when compared with the recent state of the art models.

Figure 6 shows the actual versus predicted graphs for some of the PeMS datasets.

5. Discussion

One of the recent most effective models for time series forecasting is Dlinear. When we compare our approach to the Dlinear model, we obtain substantial improvements across various datasets as indicated by the results in

Table 2. The most significant enhancements are seen in the Weather dataset, with improvements of 18.18% for T=96 and 12.73% for T=192. Notable improvements are also observed in the Illness dataset (22.62% fort T=36) and ETTh2 dataset (11.23% for T=192). These results indicate that our xLSTMTime model consistently outperforms DLinear, especially in complex datasets for varying prediction lengths.

Another notable recent model for time series forecasting is PatchTST. The comparison between our xLSTMTime model and PatchTST reveals a nuanced performance landscape. xLSTMTime demonstrates modest but consistent improvements over PatchTST in several scenarios, particularly in the Weather dataset, with enhancements ranging from 1.03% to 3.36%. The most notable improvements were observed in Weather forecasting at T=96 and T=336, as well as in the ETTh1 dataset for T=720 (1.34% improvement). In the Electricity dataset, xLSTMTime shows slight improvements at longer prediction lengths (T=336 and T=720). However, xLSTMTime also shows some limitations. In the Illness dataset, for shorter prediction lengths, it underperforms PatchTST by 14.78% for T=24, although it outperforms for T=60 by 3.54%. Mixed results were also observed in the ETTh2 dataset, with underperformance for T=336 but better performance at other prediction lengths. Interestingly, for longer prediction horizons (T=720), the performance of xLSTMTime closely matches or slightly outperforms PatchTST across multiple datasets, with differences often less than 1%. This could be attributed to the better long term memory capabilities of the xLSTM approach.

Overall, the comparative analysis suggests that while xLSTMTime is highly competitive with PatchTST, a state-of-the-art model for time series forecasting, its advantages are specific to certain datasets and prediction lengths. Moreover, its consistent outperformance of DLinear across multiple scenarios underscores its robustness. The overall performance profile of xLSTMTime, showing significant improvements in most cases over DLinear and PatchTST, establishes its potential in the field of time series forecasting. Our model demonstrates particular strengths at longer prediction horizons in part due to the long context capabilities of xLSTM coupled with extraction of seasonal and trend information in our implementation.

In comparing the xLSTMTime model with iTransformer, RLinear, PatchTST, Crossformer, DLinear, and SCINet on the PeMS datasets (

Table 3), we also achieve superior performance. For instance, in the PEMS03 dataset, for a 12-step prediction, xLSTMTime achieves approximately 9% better MSE, and 5% better MAE. This trend continues across other prediction intervals and datasets, highlighting xLSTMTime’s effectiveness in multivariate forecasting. Notably, xLSTMTime often achieves the best or second-best results in almost all cases, underscoring its effectiveness in various forecasting scenarios.

6. Conclusions

In this paper, we adapt the recently enhanced recurrent architecture of xLSTM which has demonstrated competitive results in the NLP domain for time series forecasting. Since xLSTM with its improved stabilization, exponential gating and higher memory capacity offer potentially a better deep learning architecture, by properly adapting it to the time series domain via series decomposition, batch and instance normalization, we develop the xLSTMTime architecture for LTSF. Our xLSTMTime model demonstrates excellent performance against state-of-the-art transformer-based models as well as other recently proposed time series models. Through extensive experiments on diverse datasets, the xLSTMTime showed superior accuracy in terms of MSE and MAE, making it a viable alternative to more complex models. We highlight the potential of xLSTM architectures in the time series forecasting arena, paving the way for more efficient and interpretable forecasting solutions, and further exploration using recurrent models.

Author Contributions

Conceptualization, M.A. and A.M.; methodology, M.A.; software, M.A.; validation, M.A., A.M..; formal analysis, M.A and A.M.; investigation, M.A and A.M.; resources, M.A.; data curation, M.A.; writing—original draft preparation, M.A. and A.M.; writing—review and editing, M.A. and A.M; visualization, M.A.; supervision, A.M; project administration, A.M. All authors have read and agreed to the published version of the manuscript.

Funding

The authors received no financial support for this research.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015. [Google Scholar]

- Dubey, Ashutosh Kumar, Abhishek Kumar, Vicente García-Díaz, Arpit Kumar Sharma, and Kishan Kanhaiya. “Study and analysis of SARIMA and LSTM in forecasting time series data.” Sustainable Energy Technologies and Assessments 47 (2021): 101474. [CrossRef]

- Zhang, Guoqiang Peter. “An investigation of neural networks for linear time-series forecasting.” Computers & Operations Research 28, no. 12 (2001): 1183-1202. [CrossRef]

- De Livera, A.M.; Hyndman, R.J.; Snyder, R.D. Forecasting time series with complex seasonal patterns using exponential smoothing. J. Am. Stat. Assoc. 2011, 106, 1513–1527. [Google Scholar] [CrossRef]

- Ristanoski, Goce, Wei Liu, and James Bailey. “Time series forecasting using distribution enhanced linear regression.” In Advances in Knowledge Discovery and Data Mining: 17th Pacific-Asia Conference, PAKDD 2013, Gold Coast, Australia, April 14–17, 2013, Proceedings, Part I 17, pp. 484-495. Springer Berlin Heidelberg, 2013. [CrossRef]

- Chen, Tianqi, and Carlos Guestrin. “Xgboost: A scalable tree boosting system.” In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining, pp. 785-794. 2016. [CrossRef]

- Hewamalage, Hansika, Christoph Bergmeir, and Kasun Bandara. “Recurrent neural networks for time series forecasting: Current status and future directions.” International Journal of Forecasting 37, no. 1 (2021): 388-427. [CrossRef]

- Petneházi, G. Recurrent neural networks for time series forecasting. arXiv 2019, arXiv:1901.00069. [Google Scholar]

- Zhao, Bendong, Huanzhang Lu, Shangfeng Chen, Junliang Liu, and Dongya Wu. “Convolutional neural networks for time series classification.” Journal of Systems Engineering and Electronics 28, no. 1 (2017): 162-169. [CrossRef]

- Borovykh, Anastasia, Sander Bohte, and Cornelis W. Oosterlee. “Conditional time series forecasting with convolutional neural networks.” arXiv 2017, arXiv:1703.04691. arXiv:1703.04691.

- Koprinska, Irena, Dengsong Wu, and Zheng Wang. “Convolutional neural networks for energy time series forecasting.” In 2018 international joint conference on neural networks (IJCNN), pp. 1-8. IEEE, 2018. [CrossRef]

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. Proc. AAAI Conf. Artif. Intell. 2021, 35, 11106–11115. [Google Scholar] [CrossRef]

- Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021); Neural Information Processing Systems Foundation, Inc. (NeurIPS): San Diego, CA, USA, 2021; pp. 22419–22430. [Google Scholar]

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the 39th International Conference on Machine Learning PMLR 2022, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286. [Google Scholar]

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019); Neural Information Processing Systems Foundation, Inc. (NeurIPS): San Diego, CA, USA, 2019. [Google Scholar]

- Nie, Y.; Nguyen, N.H.; Sinthong, P.; Kalagnanam, J. A Time Series is worth 64 words: Long-term forecasting with Transformers. arXiv arXiv:2211.14730, 2022.

- Liu, S.; Yu, H.; Liao, C.; Li, J.; Lin, W.; Liu, A.X.; Dustdar, S. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In Proceedings of the International Conference on Learning Representations 2022, Online, 25–29 April 2022. [Google Scholar]

- Liu, Yong, Tengge Hu, Haoran Zhang, Haixu Wu, Shiyu Wang, Lintao Ma, and Mingsheng Long. “itransformer: Inverted transformers are effective for time series forecasting.” arXiv preprint arXiv:2310.06625 (2023).

- Zeng, Ailing, Muxi Chen, Lei Zhang, and Qiang Xu. “Are transformers effective for time series forecasting?.” In Proceedings of the AAAI conference on artificial intelligence, vol. 37, no. 9, pp. 11121-11128. 2023. [CrossRef]

- Alharthi, Musleh, and Ausif Mahmood. “Enhanced Linear and Vision Transformer-Based Architectures for Time Series Forecasting.” Big Data and Cognitive Computing 8, no. 5 (2024): 48. [CrossRef]

- Wu, Haixu, Tengge Hu, Yong Liu, Hang Zhou, Jianmin Wang, and Mingsheng Long. “Timesnet: Temporal 2d-variation modeling for general time series analysis.” arXiv preprint arXiv:2210.02186 (2022).

- Gu, Albert, Karan Goel, and Christopher Ré. “Efficiently modeling long sequences with structured state spaces.” arXiv preprint arXiv:2111.00396 (2021).

- Zhang, Michael, Khaled K. Saab, Michael Poli, Tri Dao, Karan Goel, and Christopher Ré. “Effectively modeling time series with simple discrete state spaces.” arXiv preprint arXiv:2303.09489 (2023).

- Beck, Maximilian, Korbinian Pöppel, Markus Spanring, Andreas Auer, Oleksandra Prudnikova, Michael Kopp, Günter Klambauer, Johannes Brandstetter, and Sepp Hochreiter. “xLSTM: Extended Long Short-Term Memory.” arXiv preprint arXiv:2405.04517 (2024).

- Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. “Attention is all you need.” Advances in neural information processing systems 30 (2017).

- Ioffe, Sergey, and Christian Szegedy. “Batch normalization: Accelerating deep network training by reducing internal covariate shift.” In International conference on machine learning, pp. 448-456. pmlr, 2015.

- Kim, Taesung, Jinhee Kim, Yunwon Tae, Cheonbok Park, Jang-Ho Choi, and Jaegul Choo. “Reversible instance normalization for accurate time-series forecasting against distribution shift.” In International Conference on Learning Representations. 2021.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).