Submitted:

16 July 2024

Posted:

17 July 2024


Abstract

Keywords:

1. Introduction

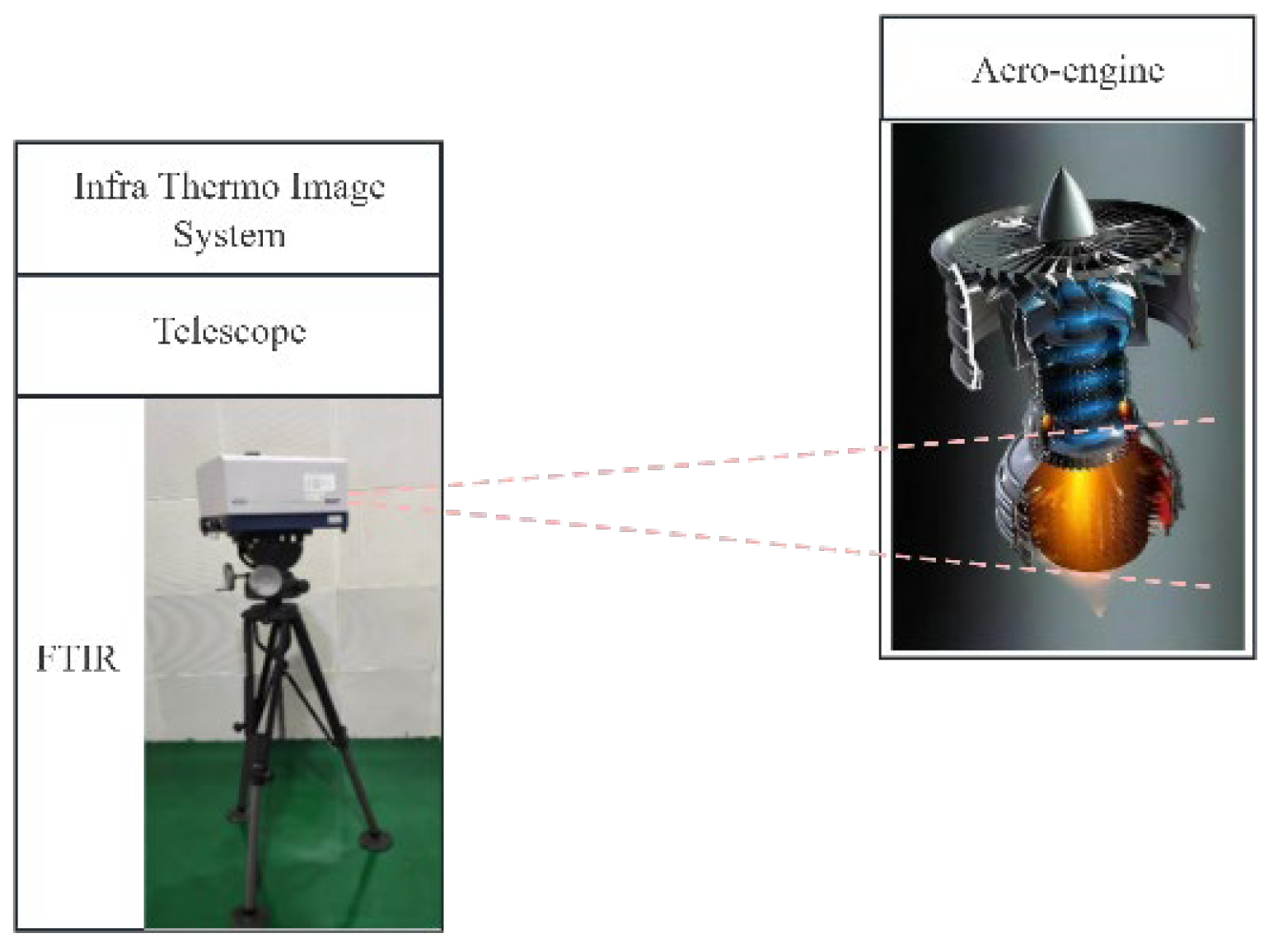

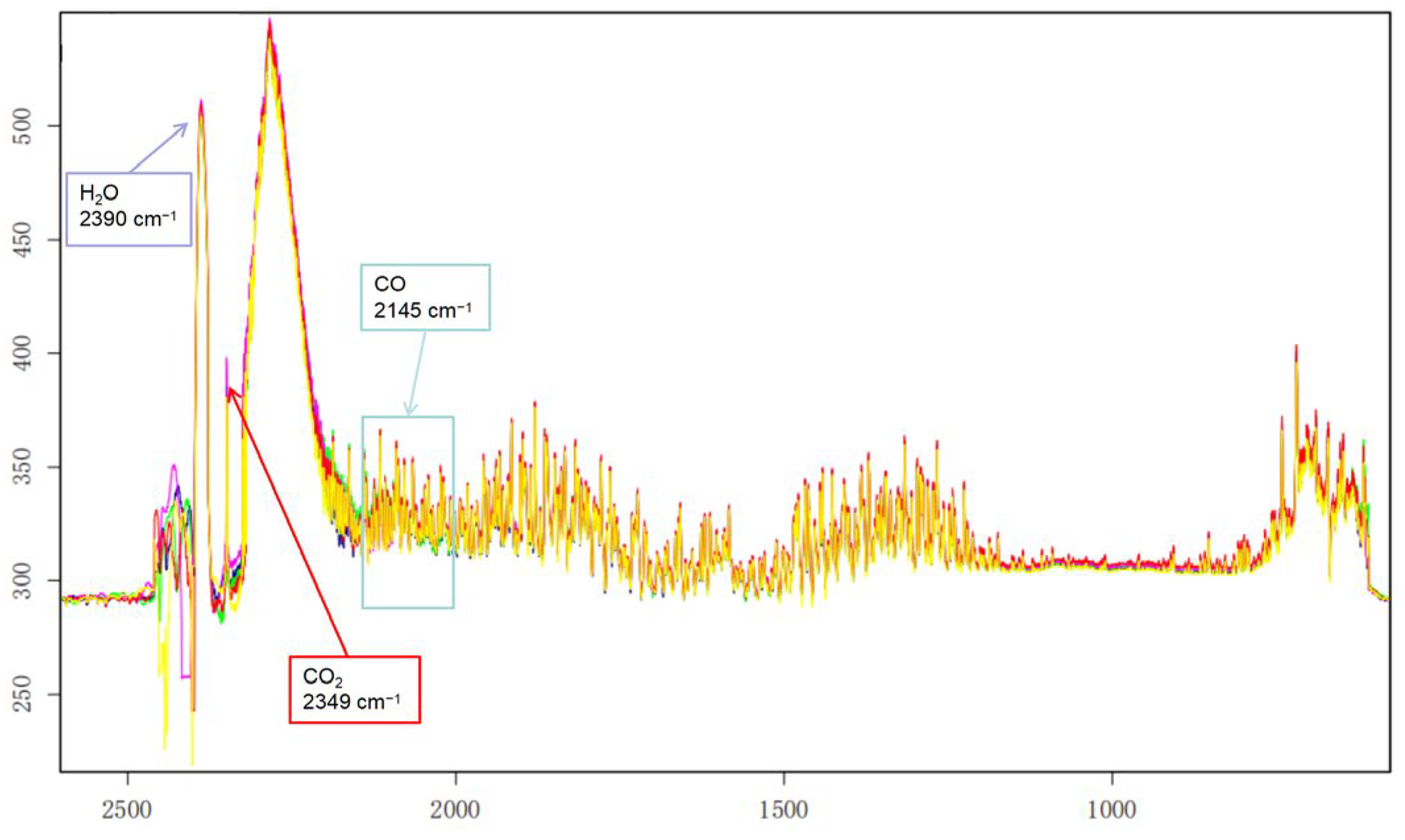

- To classify aero-engines, this paper uses infrared spectroscopy: an FT-IR spectrometer measures the spectra of the engines’ hot jets, which are a significant source of aero-engine infrared radiation. Because the infrared spectrum carries characteristic molecular-level information about substances, it gives a sound scientific basis for classifying aero-engines by their infrared spectra.

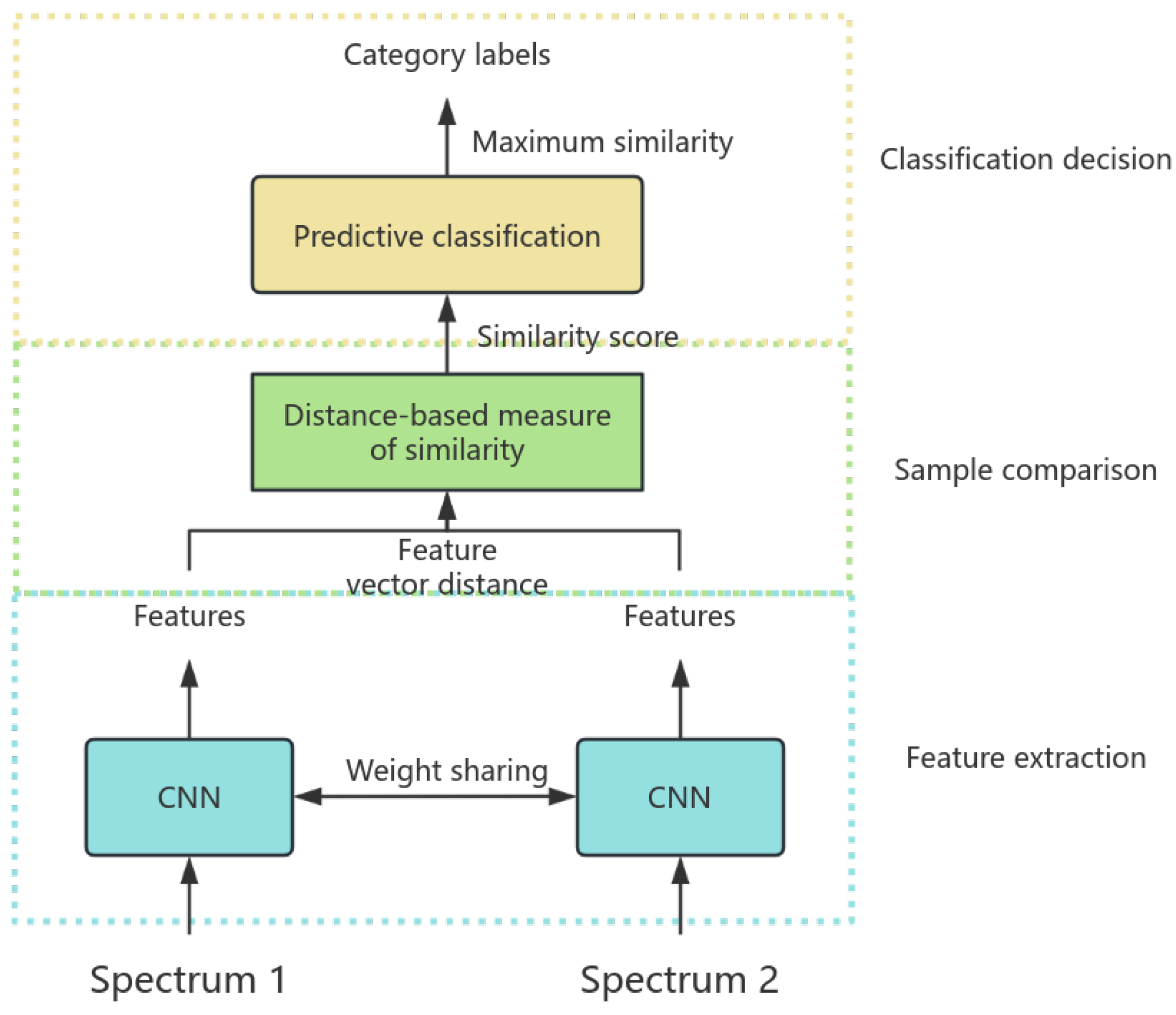

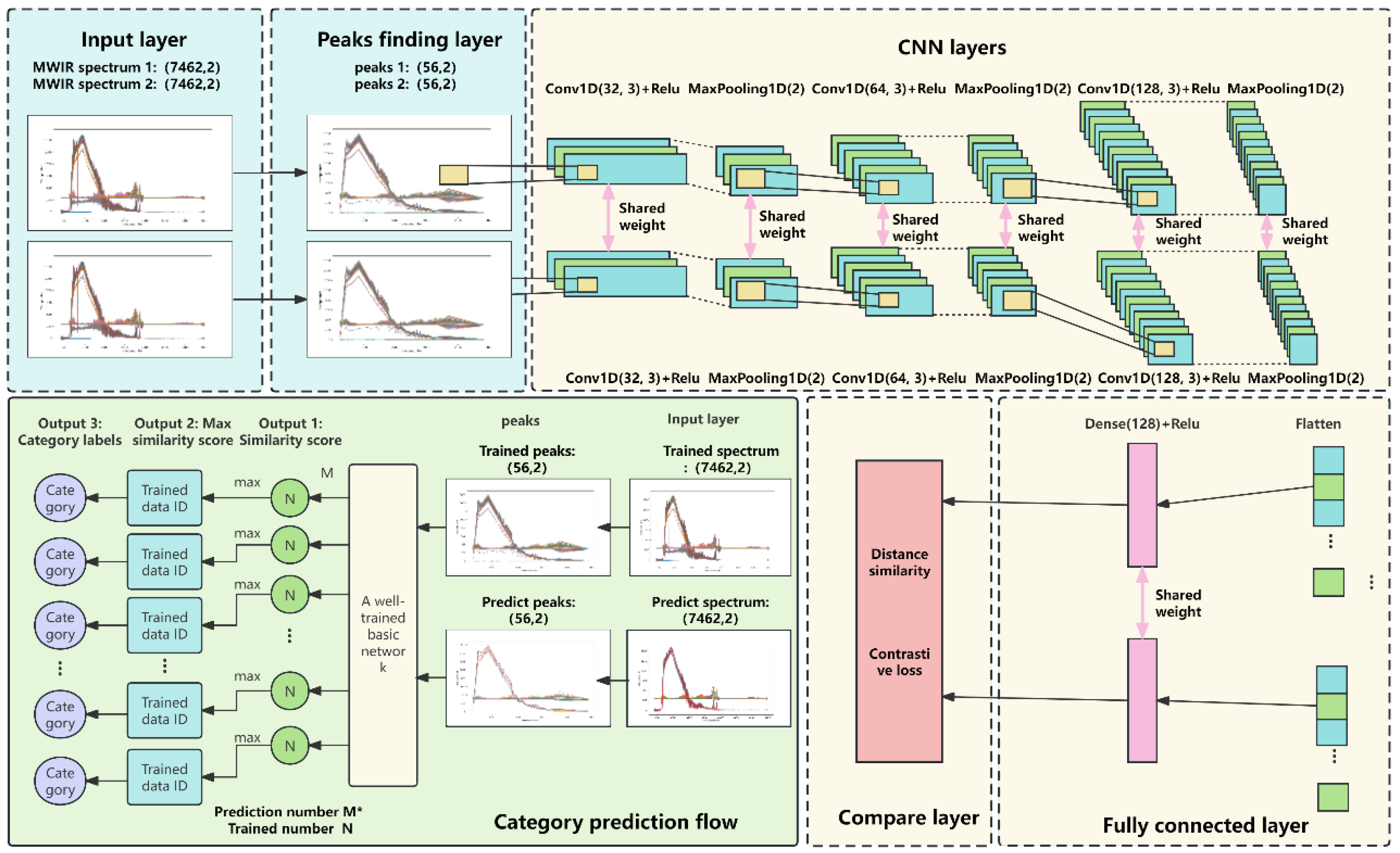

- This paper presents a Siamese convolutional neural network (SCNN) that classifies aero-engine hot jet spectra by data matching. The network is built on a one-dimensional CNN (1DCNN), and feature similarity is measured with the Euclidean distance. Classification is then performed by spectral comparison.

- This paper also proposes a peak-finding algorithm that speeds up SCNN training and prediction. The algorithm detects the peaks in the mid-infrared spectrum data, counts how often each peak position occurs, and uses the high-frequency peaks as the input to the SCNN.
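The matching step described above can be illustrated with a minimal sketch. It assumes that the 1DCNN branch has already mapped each spectrum to a feature vector; the label names, the three-dimensional vectors, and the nearest-reference decision rule are hypothetical choices for illustration, not details confirmed by the paper.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify_by_matching(query, references):
    """Return (label, distance) of the reference closest to the query.

    `references` maps a class label to a list of feature vectors.
    Picking the single nearest reference is an assumed decision rule.
    """
    best_label, best_dist = None, math.inf
    for label, vectors in references.items():
        for vec in vectors:
            dist = euclidean_distance(query, vec)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label, best_dist


# Hypothetical embeddings; real ones would come from the trained network.
references = {
    "engine_A": [[0.1, 0.9, 0.2], [0.2, 0.8, 0.1]],
    "engine_B": [[0.9, 0.1, 0.7]],
}
label, dist = classify_by_matching([0.15, 0.85, 0.2], references)
print(label, round(dist, 4))
```

A smaller distance means the two spectra are judged more similar, so the query takes the label of its closest reference.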

2. Experimental Design and Dataset Production for Hot Jet Infrared Spectrum Measurement of Aero-Engines

2.1. Aero-Engine Spectrum Measurement Experiment Design

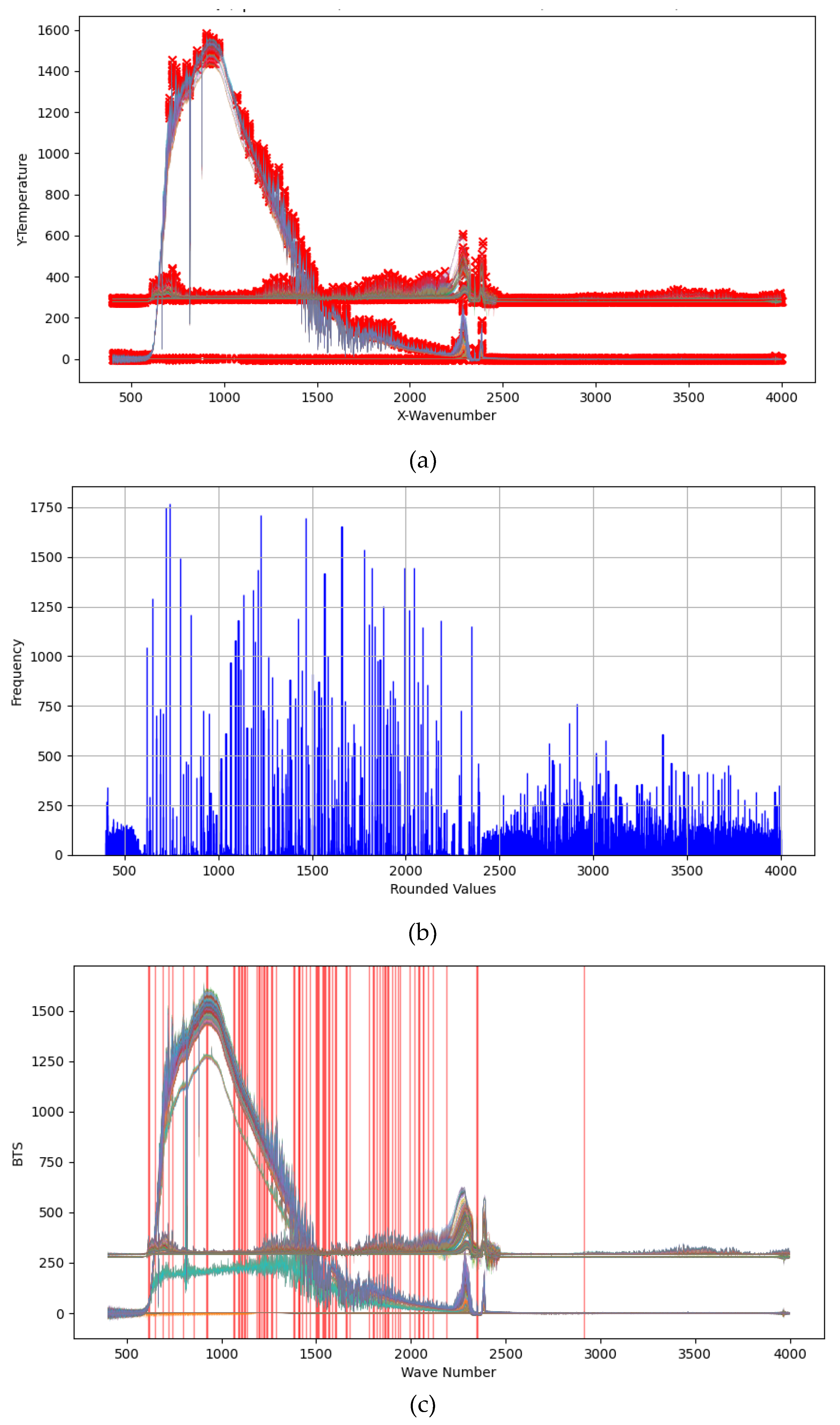

2.2. Spectrum Data Preprocessing and Dataset Production

3. Architectural Design of Peak Finding Siamese Convolutional Neural Network

3.1. Overall Network Structure Design

3.2. Base Network Architecture

3.3. Peak Finding Algorithm

| Algorithm 1: Peak finding and peak statistics algorithm |
|---|
| Input: Spectrum data |
| Output: Peak data |
| ① Crop the mid-infrared band (400-4000 cm−1) of the spectrum data. |
| ② Smooth the data. |
| ③ Set the parameters of the sliding-window peak-finding algorithm. |
| ④ Count the wavenumber position of each peak. |
| ⑤ Identify the wavenumber positions that occur with high frequency by applying a threshold proportion. |
| ⑥ For each peak wavenumber position, extract the nearby data points as the peak data points. |
| ⑦ Output the peak data for each spectrum. |
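The steps of Algorithm 1 can be sketched in plain Python as follows. This is an illustrative reading, not the authors' implementation: the moving-average smoother, the window sizes, the threshold proportion, and the number of neighbouring points are all assumed values, and every spectrum is assumed to share the same wavenumber grid.

```python
from collections import Counter


def crop_band(wavenumbers, intensities, low=400.0, high=4000.0):
    """Step 1: keep only the points inside the mid-infrared band."""
    pairs = [(w, y) for w, y in zip(wavenumbers, intensities) if low <= w <= high]
    return [w for w, _ in pairs], [y for _, y in pairs]


def moving_average(values, width=3):
    """Step 2: smooth with a centred moving average (assumed smoother)."""
    half = width // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def sliding_window_peaks(values, half_window=2):
    """Step 3: indices that are the unique maximum of their window."""
    peaks = []
    for i in range(half_window, len(values) - half_window):
        window = values[i - half_window:i + half_window + 1]
        if values[i] == max(window) and window.count(values[i]) == 1:
            peaks.append(i)
    return peaks


def frequent_peak_positions(peak_lists, min_fraction=0.5):
    """Steps 4-5: keep positions found in at least min_fraction of spectra."""
    counts = Counter(i for peaks in peak_lists for i in set(peaks))
    needed = min_fraction * len(peak_lists)
    return sorted(i for i, c in counts.items() if c >= needed)


def extract_peak_points(values, positions, neighbours=1):
    """Step 6: collect the points around every retained peak position."""
    out = []
    for p in positions:
        lo, hi = max(0, p - neighbours), min(len(values), p + neighbours + 1)
        out.extend(values[lo:hi])
    return out


def peak_data(wavenumbers, spectra, smooth_width=3, half_window=2,
              min_fraction=0.5, neighbours=1):
    """Step 7: run the whole pipeline and return peak data per spectrum."""
    cropped = [crop_band(wavenumbers, s)[1] for s in spectra]
    smoothed = [moving_average(s, smooth_width) for s in cropped]
    peak_lists = [sliding_window_peaks(s, half_window) for s in smoothed]
    positions = frequent_peak_positions(peak_lists, min_fraction)
    return [extract_peak_points(s, positions, neighbours) for s in smoothed]


# Toy example with a made-up 9-point grid; real spectra are far longer.
grid = [300, 500, 600, 700, 800, 900, 1000, 1100, 4200]
toy = [[9, 0, 1, 5, 1, 0, 2, 0, 9], [9, 0, 1, 4, 1, 0, 0, 0, 9]]
print(peak_data(grid, toy, smooth_width=1, half_window=1, min_fraction=1.0))
```

Because only the points around frequently occurring peaks are kept, the network input is much shorter than the full cropped band, which is the source of the speed-up the algorithm aims for.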

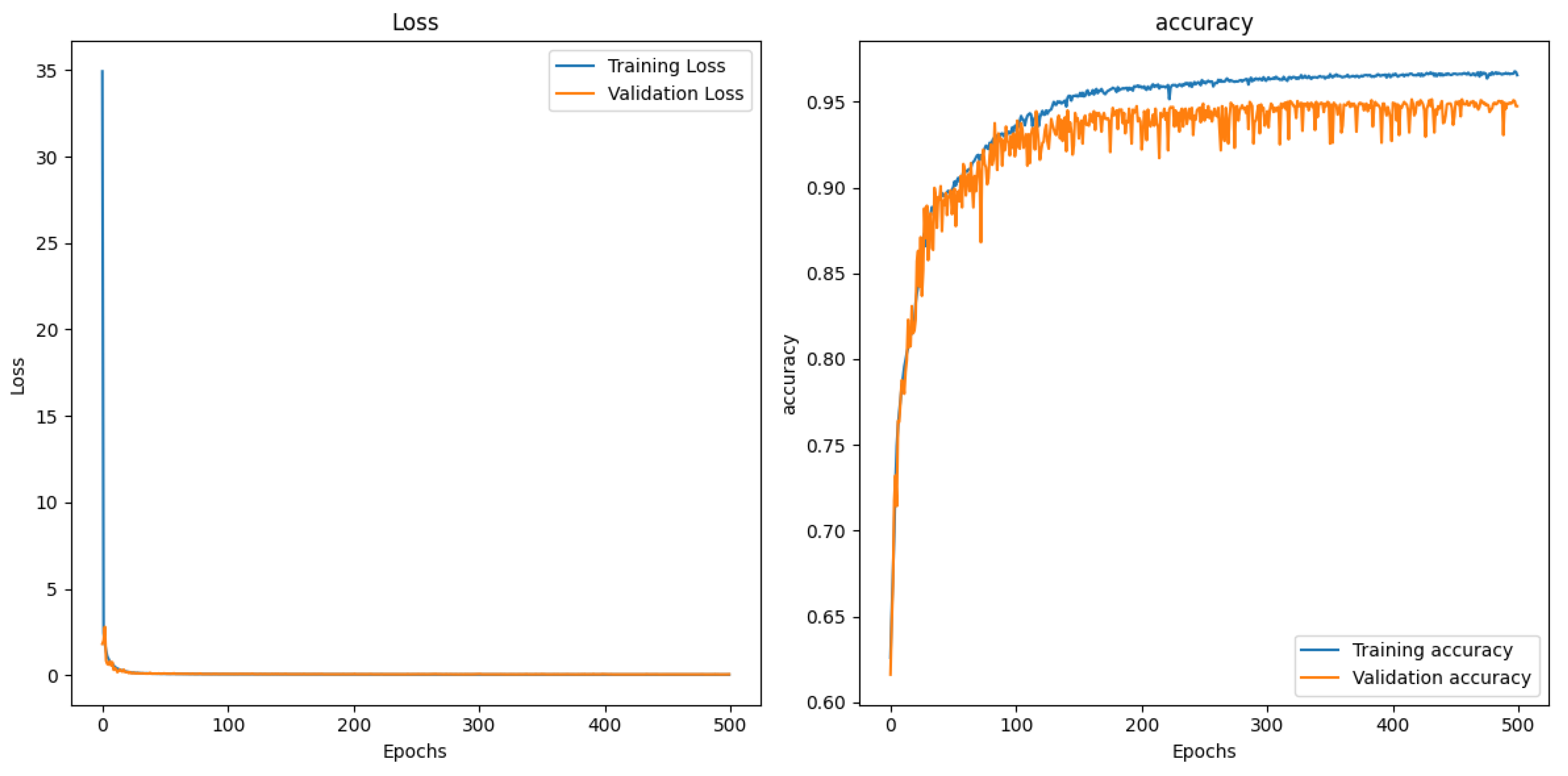

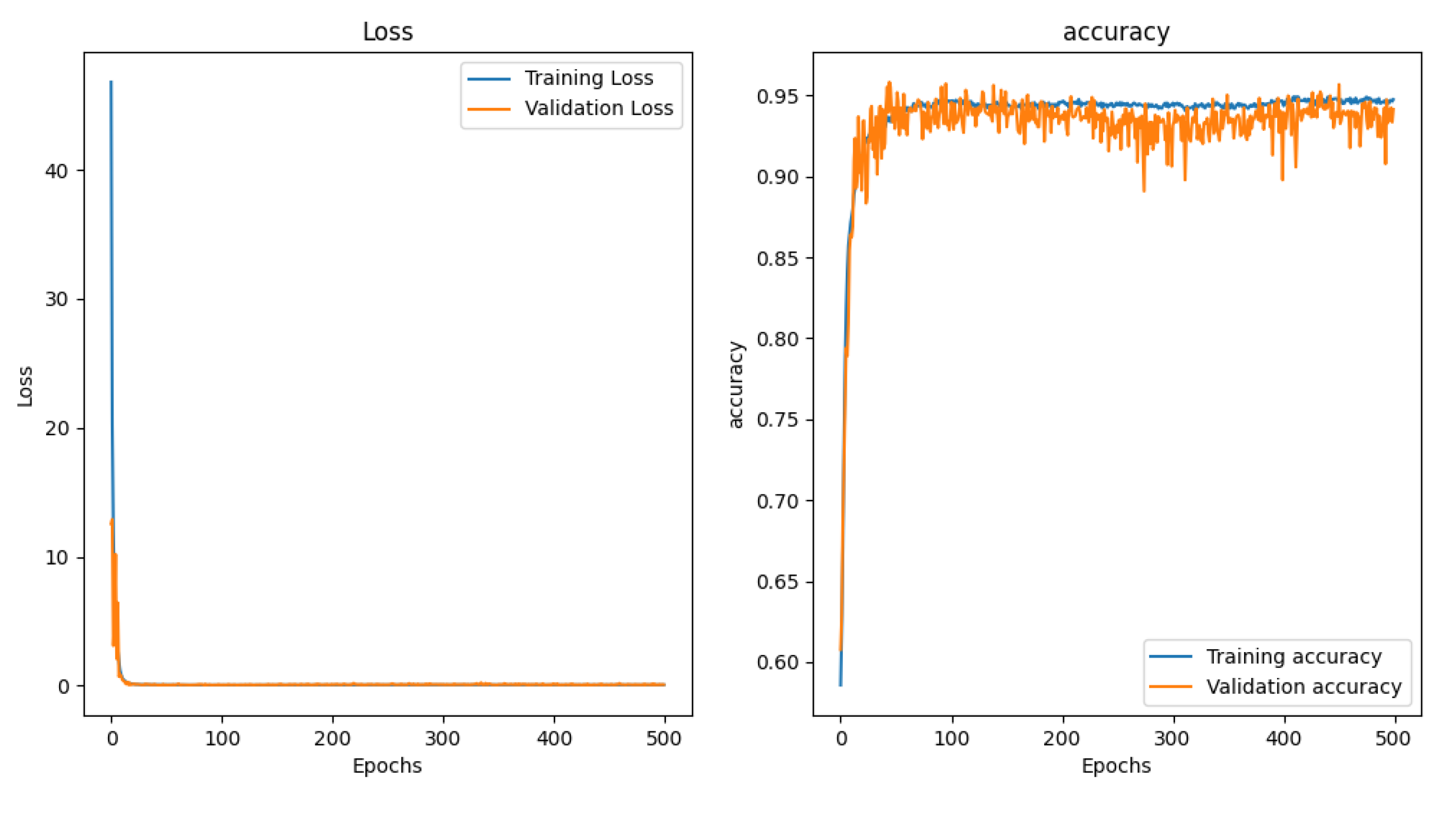

3.4. Network Training Methodology

4. Experiments and Results

4.1. Performance Measures and Experiment Results

4.2. Comparison with Traditional Classification Methods

4.3. Ablation Experiment Analysis

5. Summary

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Name | Measurement Pattern | Spectral Resolution (cm−1) | Spectral Measurement Range (µm) | Full Field of View Angle |
|---|---|---|---|---|
| EM27 | Active/Passive | Active: 0.5/1; Passive: 0.5/1/4 | 2.5~12 | 30 mrad (no telescope) (1.7°) |
| Telemetry Fourier Transform Infrared Spectrometer | Passive | 1 | 2.5~12 | 1.5° |

| Aero-Engine Serial Number | Environmental Temperature | Environmental Humidity | Detection Distance |
|---|---|---|---|
| Engine 1 (Turbofan) | 19 °C | 58.5% RH | 5 m |
| Engine 2 (Turbofan) | 16 °C | 67% RH | 5 m |
| Engine 3 (Turbojet) | 14 °C | 40% RH | 5 m |
| Engine 4 (Turbojet UAV) | 30 °C | 43.5% RH | 11.8 m |
| Engine 5 (Turbojet UAV with propeller at tail) | 20 °C | 71.5% RH | 5 m |
| Engine 6 (Turbojet) | 19 °C | 73.5% RH | 10 m |

| Dataset | Type | Number of data pieces | Number of error data | Full band data volume | Medium wave range data volume |
|---|---|---|---|---|---|
| 1 | Engine 1 (Turbojet UAV) | 193 | 0 | 16384 | 7464 |
| 2 | Engine 2 (Turbojet UAV with propeller at tail) | 48 | 0 | 16384 | 7464 |
| 3 | Engine 3 (Turbojet) | 202 | 3 | 16384 | 7464 |
| 4 | Engine 4 (Turbofan) | 792 | 17 | 16384 | 7464 |
| 5 | Engine 5 (Turbofan) | 258 | 2 | 16384 | 7464 |
| 6 | Engine 6 (Turbojet) | 384 | 4 | 16384 | 7464 |

| Real results | Forecast results: positive samples | Forecast results: negative samples |
|---|---|---|
| Positive samples | TP | FN |
| Negative samples | FP | TN |

| Methods | Parameter Settings |
|---|---|
| PF-SCNN | Conv1D(32, 3), Conv1D(64, 3), Conv1D(128, 3), activation=‘relu’ |
| | MaxPooling1D(2) |
| | Dense(128, activation=‘relu’) |
| | optimizer=RMSProp(learning_rate=0.0001) |
| | loss=contrastive_loss, metrics=[accuracy] |
| | epochs=500 |
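The settings above name a contrastive loss but do not spell it out. A minimal sketch of the commonly used form is given below, assuming pairs labelled 1 when both spectra come from the same engine and 0 otherwise, with a margin of 1.0; both the label convention and the margin are assumptions, not values taken from the paper.

```python
def contrastive_loss(distances, labels, margin=1.0):
    """Mean contrastive loss over a batch of pairs.

    distances: Euclidean distance between the two branch outputs of each pair.
    labels:    1 for a same-class pair, 0 for a different-class pair (assumed).
    margin:    different-class pairs farther apart than this cost nothing.
    """
    if len(distances) != len(labels):
        raise ValueError("distances and labels must have the same length")
    total = 0.0
    for d, y in zip(distances, labels):
        same_term = y * d ** 2                          # pull same-class pairs together
        diff_term = (1 - y) * max(margin - d, 0.0) ** 2  # push other pairs apart
        total += same_term + diff_term
    return total / len(distances)


# A same-class pair at distance 0 and a different-class pair beyond the margin
# both contribute zero loss.
print(contrastive_loss([0.0, 2.0], [1, 0]))
```

Minimising this loss shrinks the distance between spectra of the same engine and enlarges it for different engines, which is what makes the Euclidean distance usable as a similarity score at prediction time.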

| Evaluation criterion | Accuracy | Precision | Recall | Confusion matrix | F1-score |
|---|---|---|---|---|---|
| Dataset | 99.46% | 99.77% | 99.56% | [27 0 0 0 0 0] [ 0 72 0 0 0 0] [ 0 0 21 0 0 0] [ 0 1 0 37 0 0] [ 0 0 0 0 20 0] [ 0 0 0 0 0 6] | 99.66% |
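The figures in the table above are consistent with macro-averaged metrics computed from the confusion matrix, reading rows as true classes and columns as predicted classes. Both the macro averaging and the row/column orientation are assumptions inferred from the numbers, not statements from the paper. A short sketch that recomputes them:

```python
def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall and F1.

    cm[i][j] is the number of samples of true class i predicted as class j
    (assumed orientation).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as another class
        fp = sum(cm[r][k] for r in range(n)) - tp  # another class predicted as class k
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Confusion matrix reported for the method in the table above.
cm = [
    [27, 0, 0, 0, 0, 0],
    [0, 72, 0, 0, 0, 0],
    [0, 0, 21, 0, 0, 0],
    [0, 1, 0, 37, 0, 0],
    [0, 0, 0, 0, 20, 0],
    [0, 0, 0, 0, 0, 6],
]
result = {name: round(100 * value, 2) for name, value in macro_metrics(cm).items()}
print(result)  # accuracy 99.46, precision 99.77, recall 99.56, f1 99.66
```

The single off-diagonal entry (one sample of the fourth class predicted as the second) accounts for every metric falling just short of 100%.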

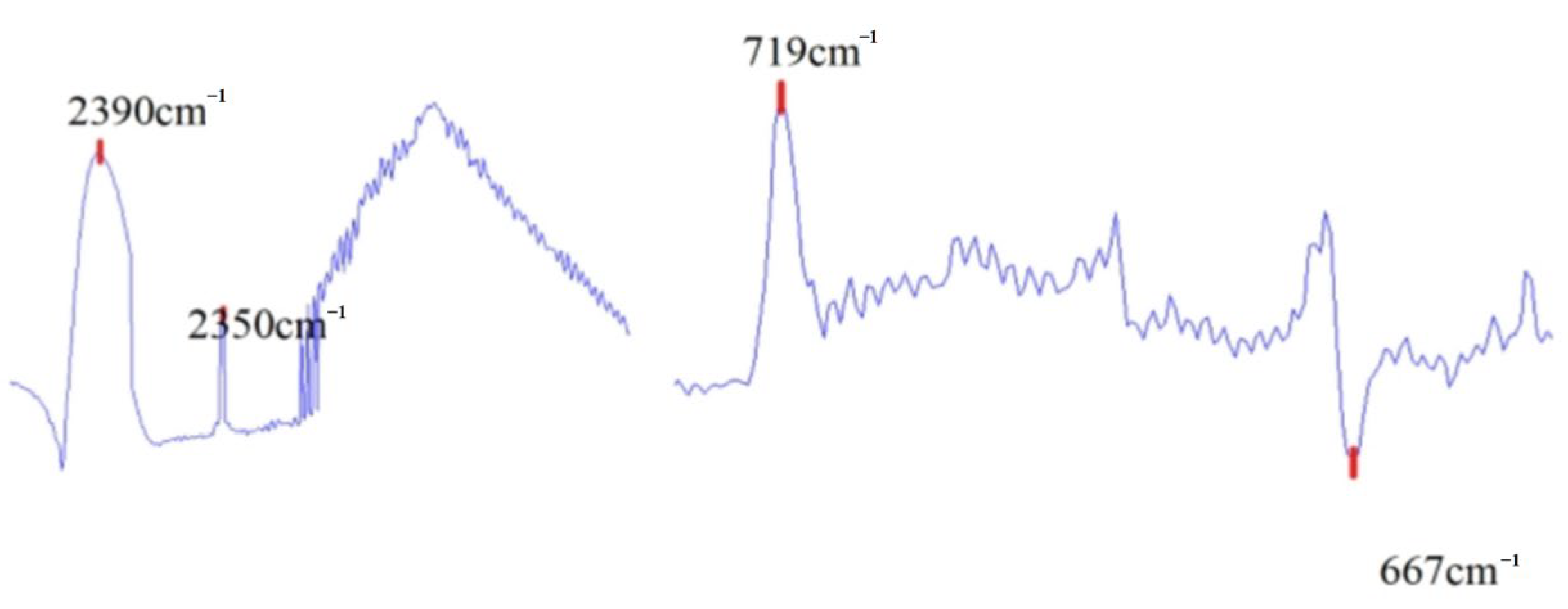

| Characteristic peak type | Emission peak (cm−1) | Emission peak (cm−1) | Absorption peak (cm−1) | Absorption peak (cm−1) |
|---|---|---|---|---|
| Standard feature peak value | 2350 | 2390 | 720 | 667 |
| Feature peak range value | 2348-2350.5 | 2377-2392 | 718-722 | 666.7-670.5 |
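One way such wavenumber ranges could be turned into a feature vector is sketched below: for each range, take the largest intensity for an emission peak and the smallest for an absorption peak. This construction is an assumption made for illustration; the source does not describe how the CO2 feature vector is actually assembled, and the sample values are invented.

```python
# Ranges copied from the table above, as (peak type, low, high) in cm-1.
CO2_BANDS = [
    ("emission", 2348.0, 2350.5),
    ("emission", 2377.0, 2392.0),
    ("absorption", 718.0, 722.0),
    ("absorption", 666.7, 670.5),
]


def band_extreme(wavenumbers, intensities, kind, low, high):
    """Strongest point inside one band: max for emission, min for absorption."""
    inside = [y for w, y in zip(wavenumbers, intensities) if low <= w <= high]
    if not inside:
        raise ValueError(f"no data points between {low} and {high} cm-1")
    return max(inside) if kind == "emission" else min(inside)


def co2_feature_vector(wavenumbers, intensities, bands=CO2_BANDS):
    """One value per characteristic CO2 band (hypothetical feature layout)."""
    return [band_extreme(wavenumbers, intensities, kind, low, high)
            for kind, low, high in bands]


# Invented sample points, only to show the call.
wn = [667.0, 668.0, 720.0, 2349.0, 2350.0, 2380.0, 2390.0]
sig = [0.4, 0.2, 0.3, 1.5, 1.8, 0.9, 1.1]
features = co2_feature_vector(wn, sig)
print(features)
```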

| Methods | Parameter Settings |
|---|---|
| SVM | decision_function_shape = ‘ovr’, kernel = ‘rbf’ |
| XGBoost | objective = ‘multi:softmax’, num_class = num_classes |
| CatBoost | loss_function = ‘MultiClass’ |
| AdaBoost | n_estimators = 200 |
| Random Forest | n_estimators = 300 |
| LightGBM | ‘objective’: ‘multiclass’, ‘num_class’: num_classes |
| Neural Network | hidden_layer_sizes = (100), activation = ‘relu’, solver = ‘adam’, max_iter = 200 |

| Classification methods | Accuracy | Precision | Recall | Confusion matrix | F1-score |
|---|---|---|---|---|---|
| CO2 feature vector + SVM | 59.78% | 44.15% | 47.67% | [ 8 0 3 0 0 0] [ 0 3 0 0 0 0] [ 9 1 12 0 0 0] [ 0 3 1 84 22 33] [ 0 0 0 0 0 0] [ 0 0 0 0 0 0] | 42.38% |
| CO2 feature vector + XGBoost | 94.97% | 92.44% | 93.59% | [15 0 3 0 0 0] [ 0 7 0 0 0 0] [ 2 0 13 0 0 0] [ 0 0 0 83 3 0] [ 0 0 0 1 19 0] [ 0 0 0 0 0 33] | 92.95% |
| CO2 feature vector + CatBoost | 94.41% | 90.35% | 93.52% | [15 0 2 0 0 0] [ 0 6 0 0 0 0] [ 2 0 14 0 0 0] [ 0 0 0 83 4 0] [ 0 1 0 1 18 0] [ 0 0 0 0 0 33] | 91.81% |
| CO2 feature vector + AdaBoost | 79.89% | 63.66% | 71.49% | [17 5 6 0 0 0] [ 0 2 0 0 0 0] [ 0 0 10 0 0 0] [ 0 0 0 84 18 3] [ 0 0 0 0 0 0] [ 0 0 0 0 4 30] | 62.56% |
| CO2 feature vector + Random Forest | 94.41% | 91.40% | 92.70% | [15 0 4 0 0 0] [ 0 7 0 0 0 0] [ 2 0 12 0 0 0] [ 0 0 0 83 3 0] [ 0 0 0 1 19 0] [ 0 0 0 0 0 33] | 91.91% |
| CO2 feature vector + LightGBM | 94.41% | 90.68% | 92.40% | [14 0 2 0 0 0] [ 0 6 0 0 0 0] [ 3 0 14 0 0 0] [ 0 0 0 82 2 0] [ 0 1 0 2 20 0] [ 0 0 0 0 0 33] | 91.42% |
| CO2 feature vector + Neural Networks | 84.92% | 76.79% | 76.57% | [17 0 2 0 0 0] [ 0 6 0 0 0 0] [ 0 0 12 0 0 0] [ 0 0 2 84 18 0] [ 0 1 0 0 0 0] [ 0 0 0 0 4 33] | 76.02% |

| Methods | Accuracy | Precision | Recall | Confusion Matrix | F1-score | Running time |
|---|---|---|---|---|---|---|
| Peaks+SVM | 58.15 | 48.09 | 43.58 | [ 0 0 0 0 0 0] [13 42 0 0 0 0] [ 0 0 20 0 13 6] [14 30 0 38 0 0] [ 0 0 1 0 7 0] [ 0 0 0 0 0 0] | 41.02 | 0.54 |
| Peaks+XGBoost | 98.91 | 96.78 | 99.01 | [27 0 0 0 0 0] [ 0 72 0 1 0 0] [ 0 0 21 0 0 1] [ 0 0 0 37 0 0] [ 0 0 0 0 20 0] [ 0 0 0 0 0 5] | 97.76 | 1.27 |

| Evaluation criterion | Accuracy | Precision | Recall | Confusion matrix | F1-score |
|---|---|---|---|---|---|
| Dataset | 99.46% | 99.24% | 99.56% | [27 0 0 0 0 0] [ 0 72 0 0 0 0] [ 0 0 21 0 0 0] [ 0 0 1 37 0 0] [ 0 0 0 0 20 0] [ 0 0 0 0 0 6] | 99.39% |

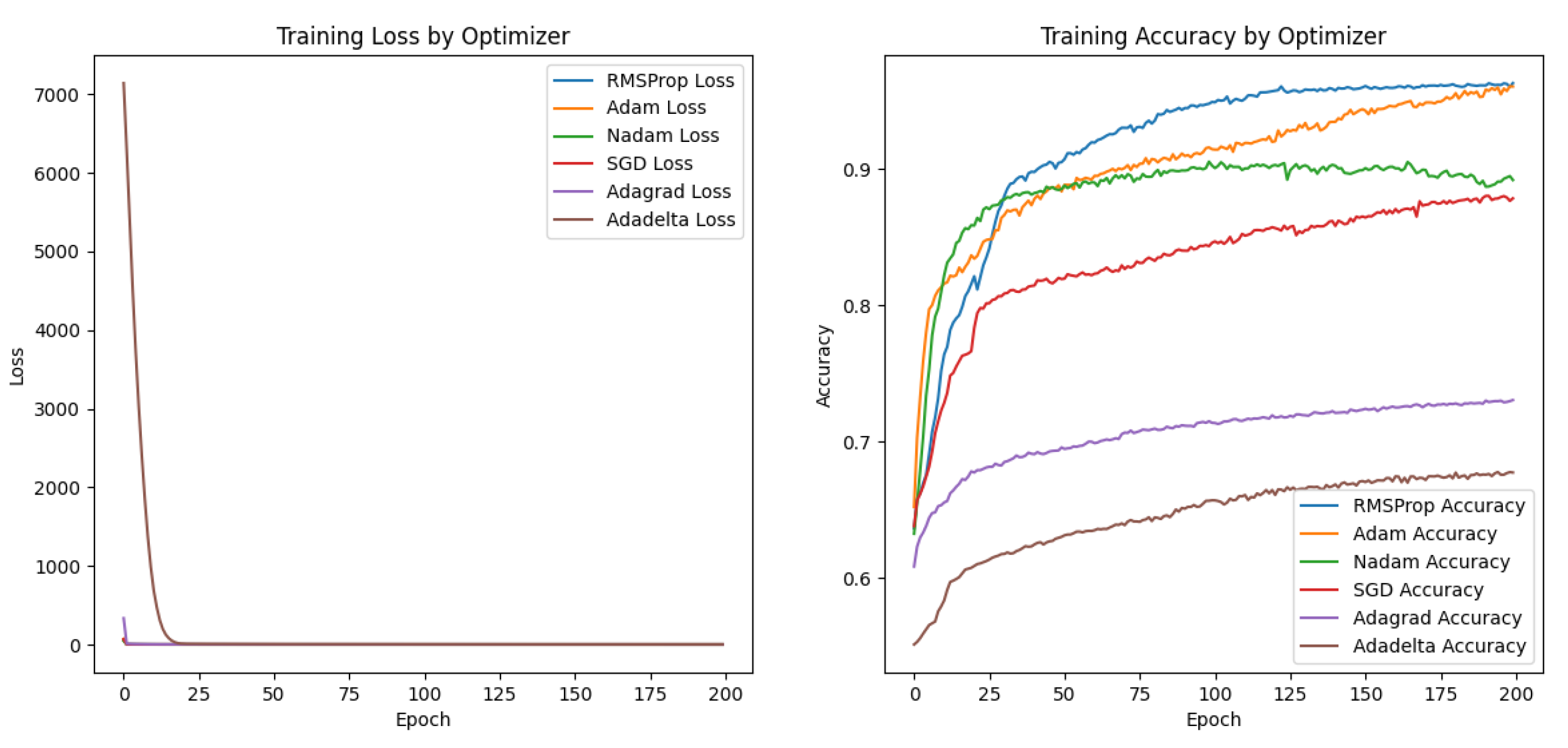

| Methods | Parameter Settings |
|---|---|
| RMSProp | learning rate=0.0001, clipvalue=1.0 |
| Adam | learning rate=0.0001, clipvalue=1.0 |
| Nadam | learning rate=0.0001, clipvalue=1.0 |
| SGD | learning rate=0.0001, clipvalue=1.0 |
| Adagrad | learning rate=0.0001, clipvalue=1.0 |
| Adadelta | learning rate=0.0001, clipvalue=1.0 |
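To make the two shared settings concrete, the sketch below applies one RMSProp update with element-wise gradient clipping. The learning rate and clip value come from the table; the decay factor `rho=0.9` and `epsilon=1e-7` are assumed defaults, and the function is a simplified illustration rather than the framework code used in the experiments.

```python
import math


def clip_by_value(grads, clipvalue=1.0):
    """Limit every gradient component to the range [-clipvalue, clipvalue]."""
    return [max(-clipvalue, min(clipvalue, g)) for g in grads]


def rmsprop_step(weights, grads, cache, learning_rate=0.0001, rho=0.9,
                 epsilon=1e-7, clipvalue=1.0):
    """One RMSProp update; returns the new weights and the new running average.

    cache holds the running average of squared gradients for each weight.
    rho and epsilon are assumed values, not taken from the paper.
    """
    grads = clip_by_value(grads, clipvalue)
    new_cache = [rho * c + (1.0 - rho) * g * g for c, g in zip(cache, grads)]
    new_weights = [w - learning_rate * g / (math.sqrt(c) + epsilon)
                   for w, g, c in zip(weights, grads, new_cache)]
    return new_weights, new_cache


# A raw gradient of 2.0 is clipped to 1.0 before the update is applied.
w, cache = rmsprop_step([1.0], [2.0], [0.0])
print(w, cache)
```

Dividing by the running root-mean-square of recent gradients gives each weight its own effective step size, while clipping bounds the influence of any single large gradient.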

| Optimizers | Prediction accuracy | Training time/s |
|---|---|---|
| RMSProp | 96% | 1180.96 |
| Adam | 96% | 1014.82 |
| Nadam | 89% | 1523.14 |
| SGD | 88% | 1101.65 |
| Adagrad | 73% | 1021.90 |
| Adadelta | 68% | 991.90 |

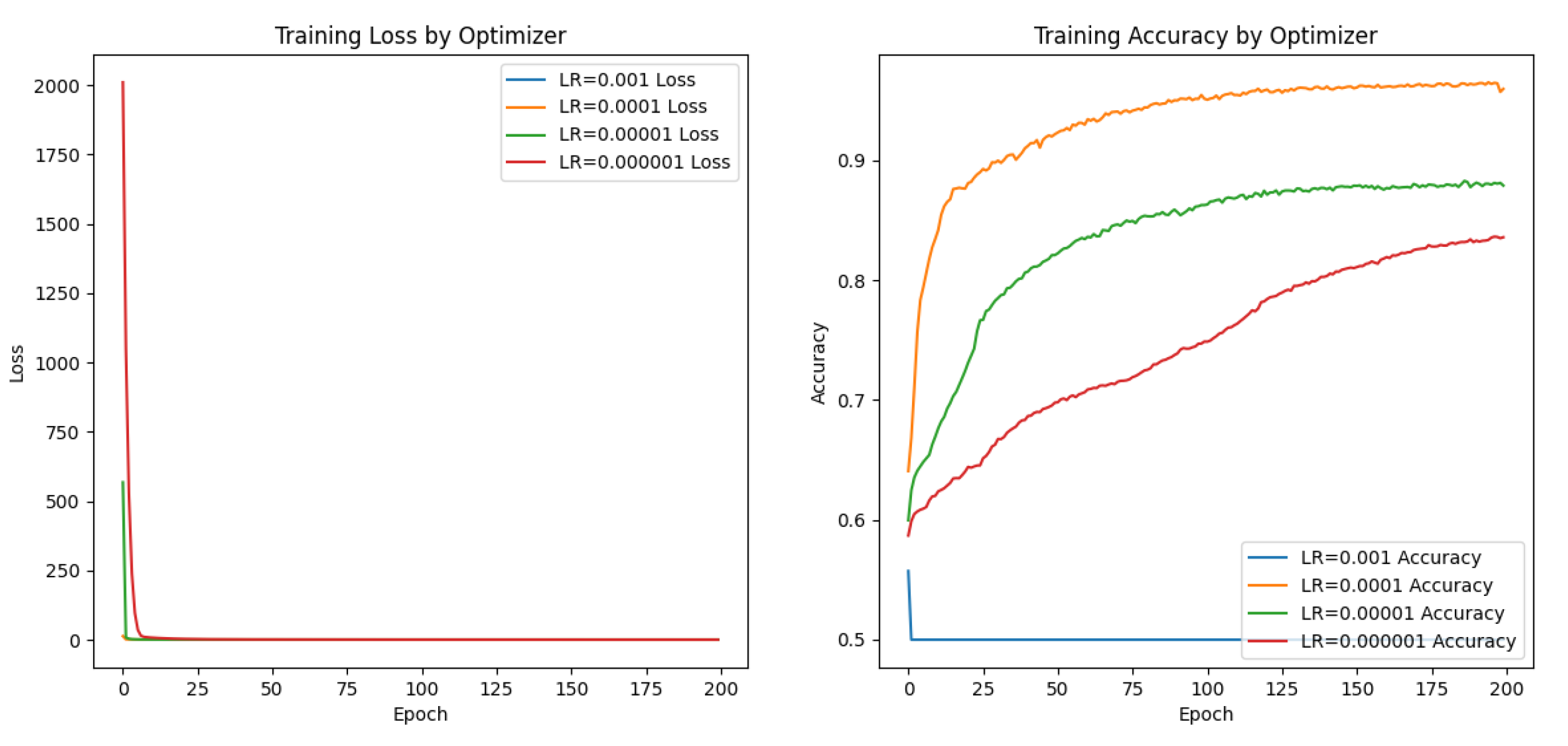

| Learning rate | Prediction accuracy | Training time/s |
|---|---|---|
| 0.001 | 0.50 | 1283.49 |
| 0.0001 | 0.96 | 1252.83 |
| 0.00001 | 0.88 | 1171.39 |
| 0.000001 | 0.84 | 1193.72 |

| Methods | Prediction time |
|---|---|
| PF-SCNN | 71 s per sample; 3:44:17 total |
| SCNN | 79 s per sample; 4:30:45.78 total |
| CO2 feature vector + SVM | 0.12 s |
| CO2 feature vector + XGBoost | 0.30 s |
| CO2 feature vector + CatBoost | 4.74 s |
| CO2 feature vector + AdaBoost | 0.39 s |
| CO2 feature vector + Random Forest | 0.56 s |
| CO2 feature vector + LightGBM | 0.44 s |
| CO2 feature vector + Neural Networks | 0.85 s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).