Submitted: 13 July 2024

Posted: 18 July 2024


Abstract

Keywords:

1. Introduction

2. Related Works

2.1. Color Standardization

2.2. Vitamin C Determination

2.3. Multivariate Regression Algorithms

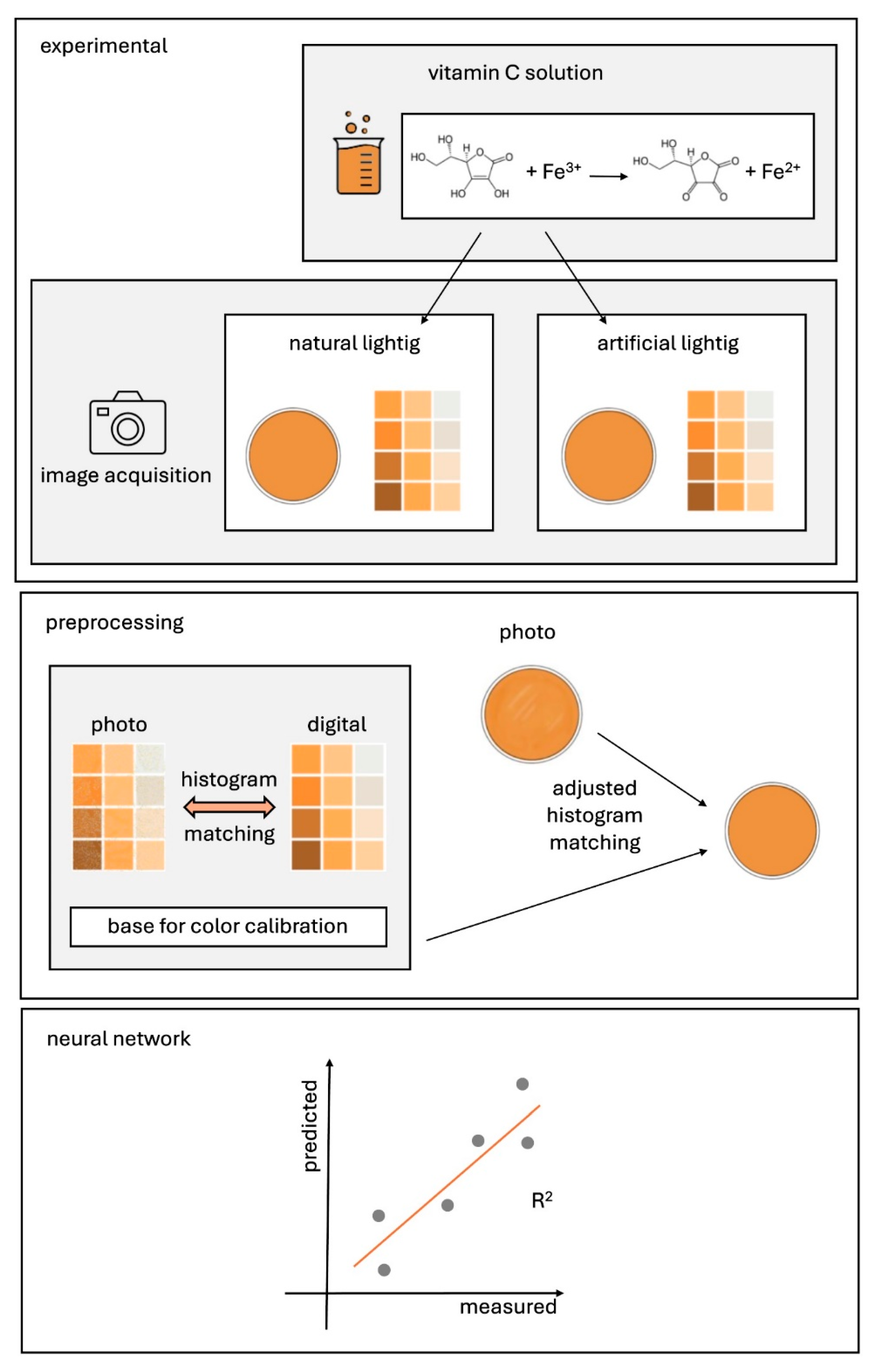

3. Proposed Method

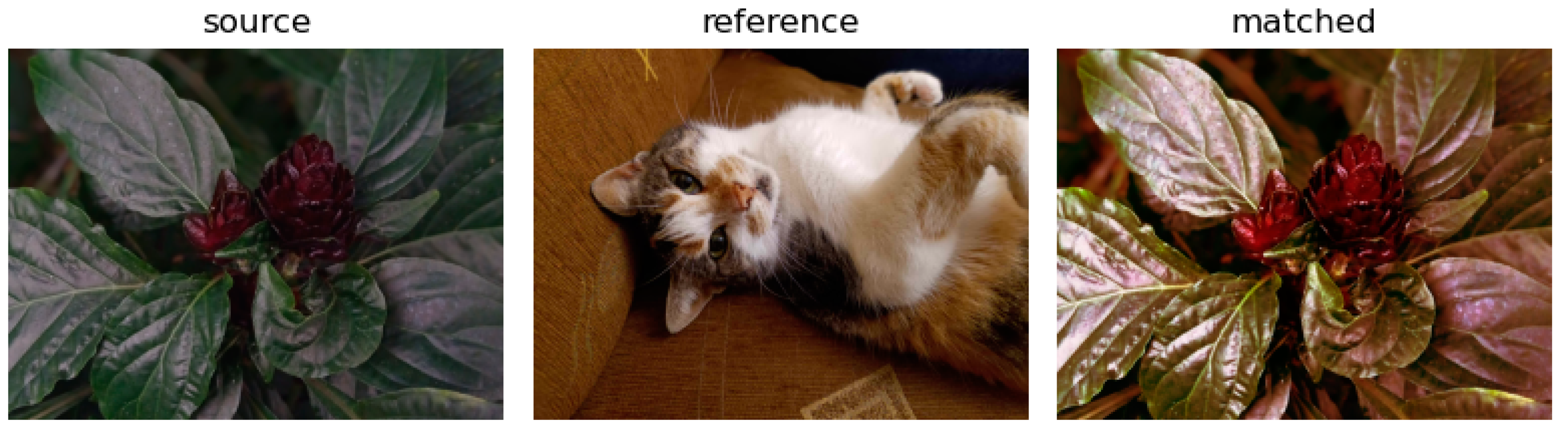

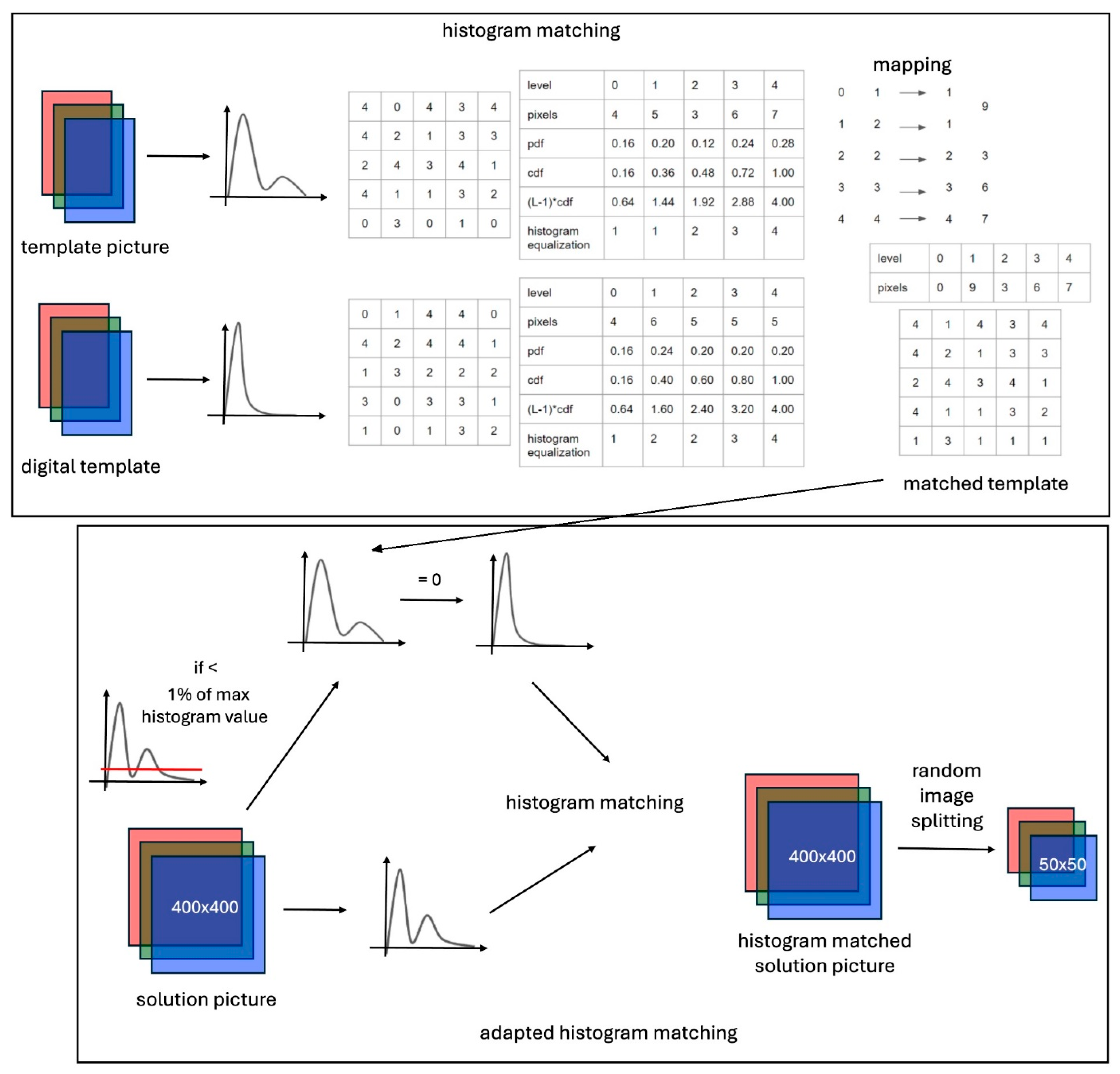

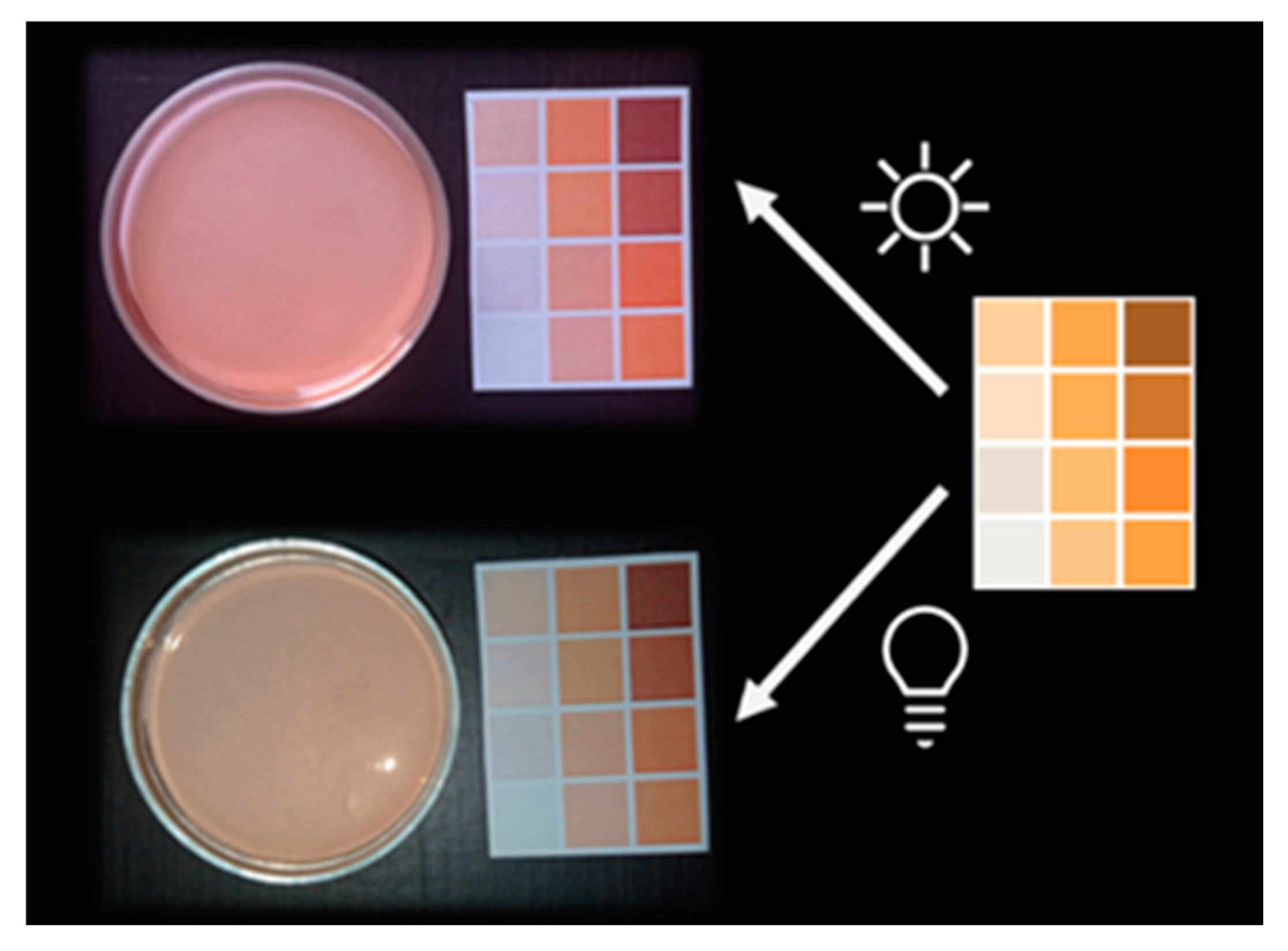

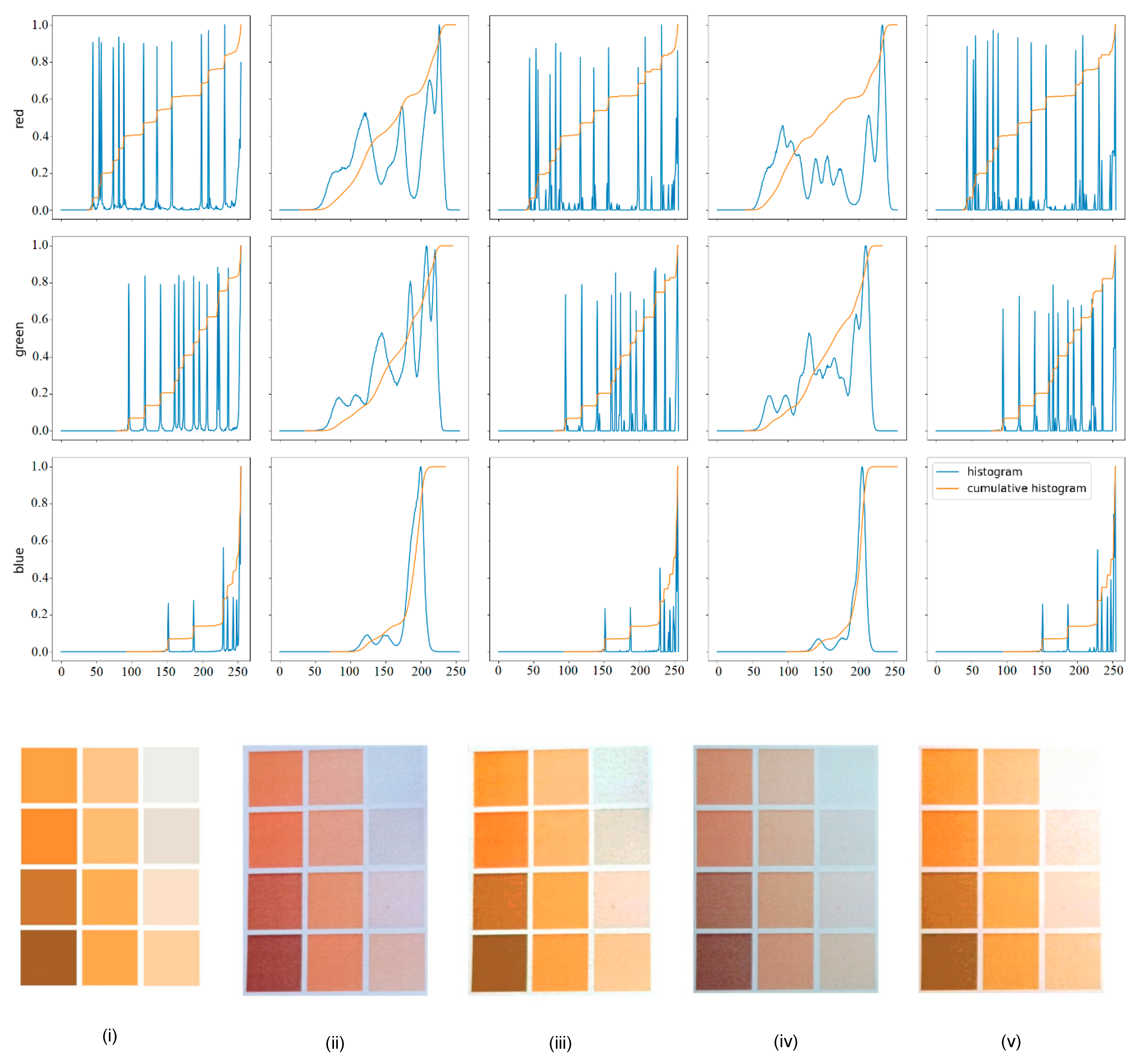

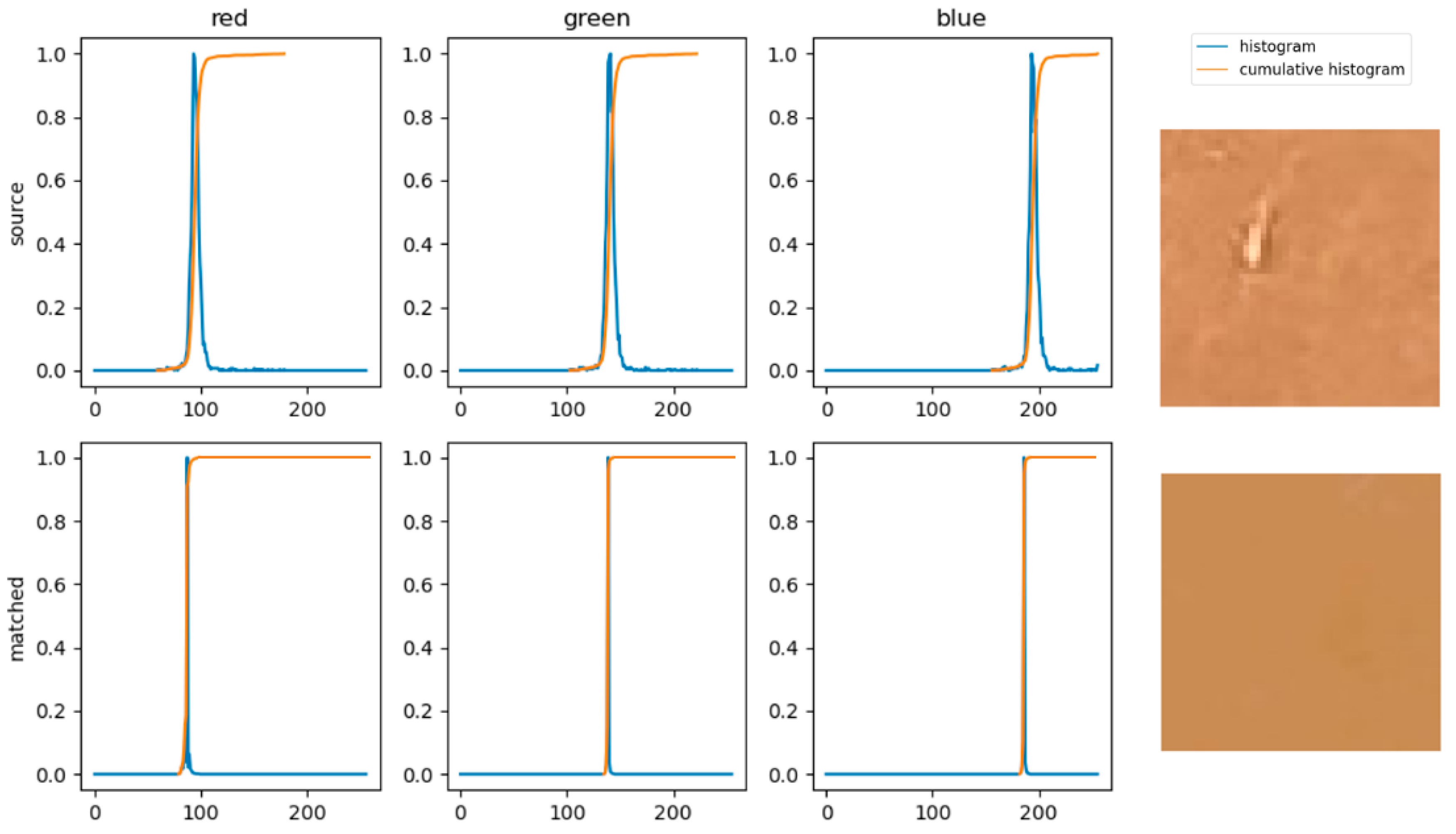

3.1. Color Matching Algorithm

3.2. Software

4. Results and Discussion

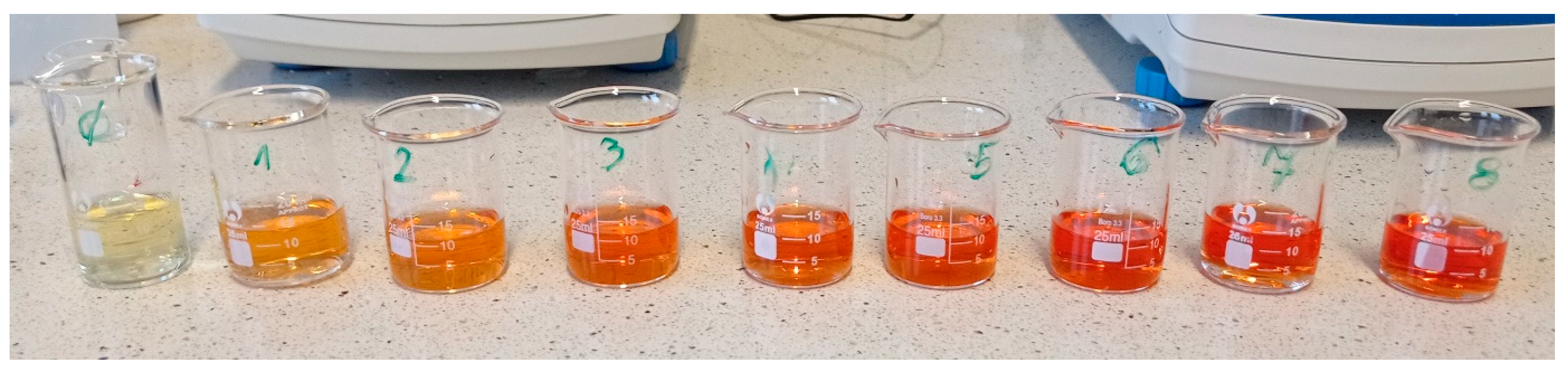

4.1. Reagents, Laboratory Equipment and Measurement Procedure

4.2. Preparation of a Color Template and Picture Acquisition

- training set: 60416 images

- validation set: 20480 images

- test set: 20480 images.
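As a quick sanity check on the split sizes listed above, the following sketch computes the total number of images and the share of each subset. The variable names are illustrative and are not taken from the authors' software.

```python
# Split sizes as listed above; the dictionary name is illustrative only.
split_sizes = {"training": 60416, "validation": 20480, "test": 20480}

# Total number of images across the three subsets.
total_images = sum(split_sizes.values())

# Fraction of the whole data set that falls into each subset.
split_fractions = {
    name: count / total_images for name, count in split_sizes.items()
}

for name, fraction in split_fractions.items():
    print(f"{name}: {split_sizes[name]} images ({fraction:.1%})")
print(f"total: {total_images} images")
```

Under these numbers the split is close to 60/20/20.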

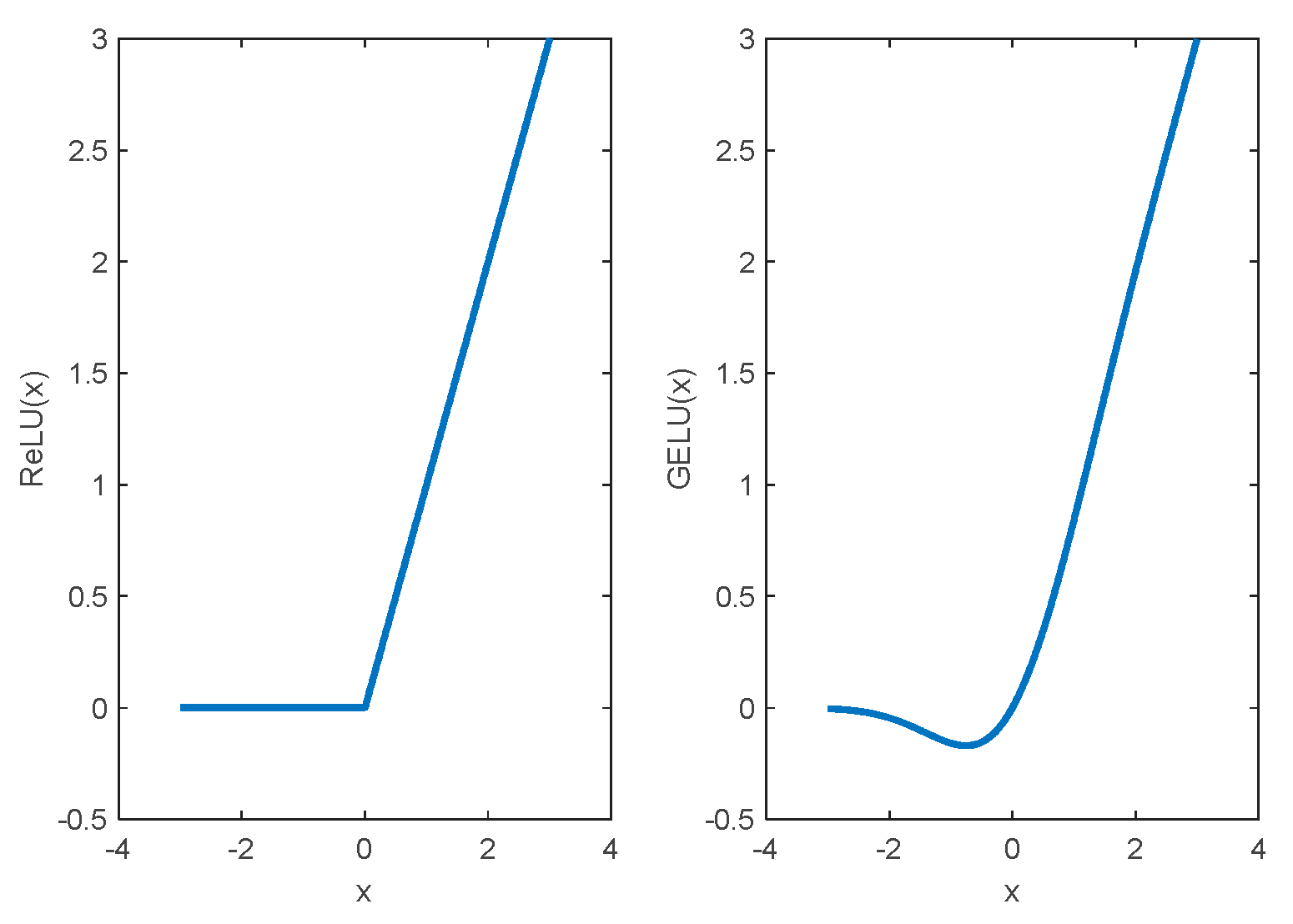

4.3. Network Architecture

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layer (type) | Output shape | Param # |
|---|---|---|
| Input layer | (None, 50, 50, 3) | 0 |
| Conv2D | (None, 50, 50, 32) | 128 |
| Activation | (None, 50, 50, 32) | 0 |
| Conv2D | (None, 50, 50, 64) | 2112 |
| Activation | (None, 50, 50, 64) | 0 |
| AveragePooling2D | (None, 46, 46, 64) | 0 |
| LayerNormalization | (None, 46, 46, 64) | 128 |
| Conv2D | (None, 46, 46, 64) | 4160 |
| Activation | (None, 46, 46, 64) | 0 |
| AveragePooling2D | (None, 44, 44, 64) | 0 |
| GlobalMaxPooling2D | (None, 64) | 0 |
| Flatten | (None, 64) | 0 |
| Dense | (None, 150) | 9750 |
| Dropout | (None, 150) | 0 |
| Dense | (None, 50) | 7550 |
| Dense | (None, 1) | 51 |

Total params: 23879 (93.28 KB). Trainable params: 23879 (93.28 KB). Non-trainable params: 0 (0.00 Byte).
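The parameter counts in the layer summary can be cross-checked with a few lines of arithmetic. The sketch below rests on assumptions that the table does not state explicitly: 1 × 1 convolution kernels (inferred because 3·32 + 32 = 128), pooling windows of 5 and 3 with stride 1 and no padding (inferred from the 50 → 46 → 44 shape change), and 32-bit floating-point weights. All function names are illustrative.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel_size * kernel_size * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return in_units * out_units + out_units


def layer_norm_params(channels):
    """One scale and one offset per channel (normalization over the last axis)."""
    return 2 * channels


def pooled_size(size, window, stride=1):
    """Output width of an unpadded pooling layer."""
    return (size - window) // stride + 1


# Assumed kernel size of 1 for every convolution (see the note above).
layer_params = [
    ("Conv2D (3 -> 32)", conv2d_params(1, 3, 32)),
    ("Conv2D (32 -> 64)", conv2d_params(1, 32, 64)),
    ("LayerNormalization (64)", layer_norm_params(64)),
    ("Conv2D (64 -> 64)", conv2d_params(1, 64, 64)),
    ("Dense (64 -> 150)", dense_params(64, 150)),
    ("Dense (150 -> 50)", dense_params(150, 50)),
    ("Dense (50 -> 1)", dense_params(50, 1)),
]

total_params = sum(count for _, count in layer_params)
size_kb = total_params * 4 / 1024  # assumes 4 bytes per float32 weight

for name, count in layer_params:
    print(f"{name}: {count}")
print(f"total: {total_params} parameters, about {size_kb:.2f} KB")
print("spatial size after pooling:", pooled_size(50, 5), pooled_size(46, 3))
```

Under these assumptions the per-layer counts and the total agree with the summary table.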

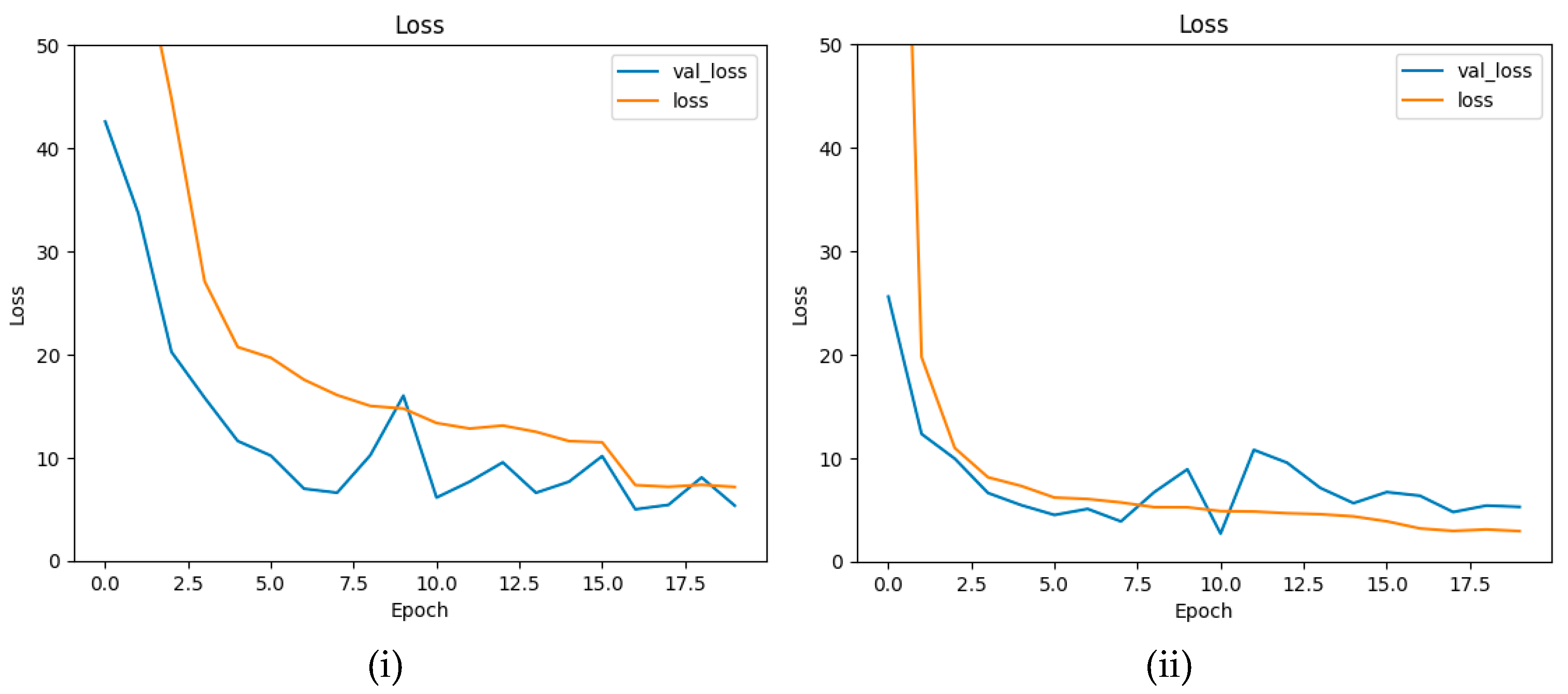

| Parameter | Setting |
|---|---|
| Image size | 50 × 50 × 3 |
| Loss function | MSE + MAE |
| Optimizer | Adam |
| Initial learning rate | 0.001 |
| Metric | RMSE |
| Batch size | 30 |
| Epochs | 20 |
| Shuffle | Every epoch |
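The table lists "MSE + MAE" as the loss and RMSE as the metric. A minimal sketch of these quantities in plain Python follows. It assumes the two loss terms are summed with equal weight, which the table suggests but does not confirm, and the function names are illustrative rather than the authors' implementation.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def combined_loss(y_true, y_pred):
    """Unweighted sum of MSE and MAE (the equal weighting is an assumption)."""
    return mse(y_true, y_pred) + mae(y_true, y_pred)


def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))


# Toy concentrations (arbitrary values, for illustration only).
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 5.0]
print(combined_loss(y_true, y_pred), rmse(y_true, y_pred))
```

The squared term penalizes large errors more strongly, while the absolute term keeps the gradient informative for small errors, which is one common motivation for combining the two.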

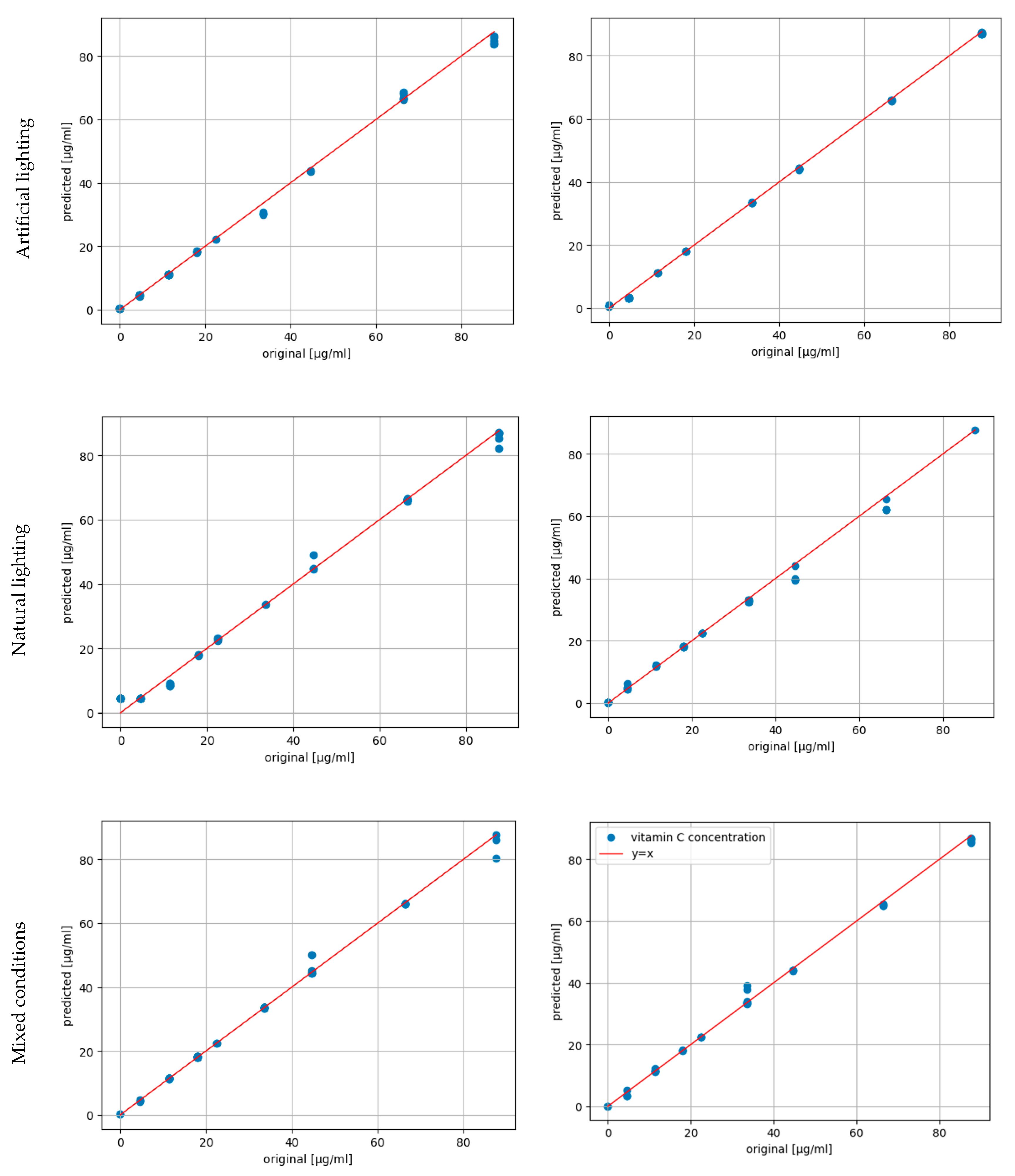

| Metric | Artificial lighting, original | Artificial lighting, matched | Natural lighting, original | Natural lighting, matched | Mixed lighting, original | Mixed lighting, matched |
|---|---|---|---|---|---|---|
| Slope | 0.9799 | 0.9961 | 0.9706 | 0.9452 | 0.9784 | 0.9825 |
| Intercept | -0.0036 | -0.2091 | 1.1888 | 0.6051 | 0.5832 | 0.4086 |
| RMSE / µg·mL⁻¹ | 1.5769 | 0.7525 | 2.2900 | 1.9542 | 1.4828 | 1.3510 |
| R² score | 0.9979 | 0.9999 | 0.9953 | 0.9956 | 0.9966 | 0.9968 |

| Metric | Artificial lighting, original | Artificial lighting, matched | Natural lighting, original | Natural lighting, matched | Mixed lighting, original | Mixed lighting, matched |
|---|---|---|---|---|---|---|
| Slope | 0.9058 | 1.0138 | 0.9538 | 1.0071 | 1.0005 | 1.0112 |
| Intercept | 0.6891 | -0.3598 | -0.8347 | 0.2263 | 0.8747 | 0.0203 |
| RMSE / µg·mL⁻¹ | 5.8415 | 1.7077 | 3.9504 | 2.5151 | 4.3488 | 2.8211 |
| R² score | 0.9557 | 0.9962 | 0.9798 | 0.9918 | 0.9755 | 0.9897 |
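The four rows of the tables above (slope, intercept, RMSE, R² score) can be obtained from paired reference and predicted concentrations. The sketch below shows one plausible way to compute them. It assumes that slope and intercept come from an ordinary least-squares line of predicted against reference values, and that R² is the coefficient of determination of the predictions. The source does not state how the authors computed these figures, and the function name and toy data are hypothetical.

```python
import math


def calibration_summary(reference, predicted):
    """Slope/intercept of predicted vs. reference, plus RMSE and R² of predictions."""
    n = len(reference)
    mean_ref = sum(reference) / n
    mean_pred = sum(predicted) / n

    # Ordinary least-squares fit: predicted = slope * reference + intercept.
    sxx = sum((x - mean_ref) ** 2 for x in reference)
    sxy = sum((x - mean_ref) * (y - mean_pred) for x, y in zip(reference, predicted))
    slope = sxy / sxx
    intercept = mean_pred - slope * mean_ref

    # Prediction errors measured directly against the reference values.
    ss_res = sum((y - x) ** 2 for x, y in zip(reference, predicted))
    rmse = math.sqrt(ss_res / n)
    r2 = 1.0 - ss_res / sxx  # sxx is the total sum of squares of the reference

    return {"slope": slope, "intercept": intercept, "rmse": rmse, "r2": r2}


# Toy data in µg/mL, invented purely for illustration.
summary = calibration_summary([10.0, 20.0, 30.0, 40.0], [11.0, 19.0, 31.0, 39.0])
print(summary)
```

A slope near 1 and an intercept near 0 indicate predictions that track the reference values without systematic bias, which is how the table rows are usually read.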

| Change in architecture | RMSE / µg·mL⁻¹ | R² score | Comparison |
|---|---|---|---|
| Batch size = 120 | 2.3535 | 0.9947 | Lower R² score and higher RMSE than the final model. |
| Image size = 16 | 4.0146 | 0.9825 | Significantly lower R² score and higher RMSE than the final model. Lower decline of initial rate in comparison to the final version. |
| Loss function modification | 3.8203 | 0.9856 | Lower R² score and significantly higher RMSE than the final model. Smaller differences between training and validation loss during training. |
| Optimizer = Adagrad | 3.1625 | 0.9877 | Lower R² score and higher RMSE than the final model. |
| Optimizer = SGD | - | - | The loss value did not change between epochs. The model did not learn to predict the concentration of vitamin C solutions. |
| Initial learning rate = 0.01 or 0.0001 | - | - | No significant change in the results was detected compared with the final model. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).