1. Introduction

The rapid advancement of artificial intelligence (AI) in financial services has transformed traditional banking and finance paradigms, ushering in an era of unprecedented financial inclusion. AI-driven technologies offer a unique promise to democratize access to financial services, thereby enhancing customer satisfaction and broadening the economic participation of traditionally underserved or marginalized groups [1]. However, as the penetration of AI into these critical sectors deepens, it raises significant ethical concerns and challenges related to the transparency, accountability, and legitimacy of algorithmic decisions, factors that fundamentally influence user trust and the perceived fairness of these systems [2,3].

Drawing upon organizational justice theory, which emphasizes the importance of fairness perceptions in shaping individual attitudes and behaviors [4], this study investigates how the ethical dimensions of AI algorithms influence user satisfaction and recommendation in the context of financial inclusion. Additionally, we employ the heuristic-systematic model [5] to understand how users process information about AI systems and form perceptions of algorithmic fairness.

Despite the extensive deployment of AI in financial services, significant gaps remain in our understanding of how these algorithms affect user responses, particularly through the lens of ethical operations and fairness. While the current literature extensively explores the utility and efficiency of AI-driven systems in expanding access to financial inclusion services [6,7], little attention has been paid to the relationships between the ethical dimensions of AI algorithms and users' satisfaction and recommendation behavior. This oversight persists despite growing concerns over algorithmic bias, which can exacerbate financial exclusion instead of alleviating it [8,9].

To address this research gap, this paper examines how the ethical constructs of algorithm transparency, accountability, and legitimacy collectively influence perceived algorithmic fairness and, consequently, users' satisfaction with AI-driven financial inclusion and their recommendation of it. Grounded in organizational justice theory and the heuristic-systematic model, we propose a conceptual model that links these ethical constructs to user satisfaction and recommendation [10] through the mediating role of perceived algorithmic fairness, thereby providing a holistic view of the ethical implications of AI in financial services.

The contributions of this study are multifaceted. First, by quantitatively demonstrating how ethical considerations in AI deployment influence perceived fairness and user responses, it highlights the potential of ethical AI to enhance financial inclusion outcomes. Second, through its quantitative data, this study offers a richer, more nuanced understanding of user perceptions and behaviors in relation to AI-driven services, advancing the application of organizational justice theory and the heuristic-systematic model in the context of AI and financial inclusion. Third, by linking ethical constructs and users' perceived algorithmic fairness directly to user responses, this paper extends existing research [11,12] on financial technology behavior to include ethical dimensions, providing a more comprehensive framework for studying AI in financial services. Finally, the findings provide actionable insights for financial institutions and fintech developers, emphasizing the importance of ethical practices in algorithm design to foster satisfaction and recommendation among users.

2. Theoretical Background

2.1. AI in Financial Inclusion

The integration of Artificial Intelligence (AI) in financial services has revolutionized the landscape of financial inclusion. AI-driven technologies, such as machine learning algorithms, predictive analytics, and automated decision-making systems, have made it possible to extend financial services to previously underserved populations. These technologies enable financial institutions to assess creditworthiness, detect fraud, and personalize financial products with unprecedented accuracy and efficiency. Studies have shown that AI can significantly reduce the cost of delivering financial services, thereby making it feasible to serve low-income and remote populations [13,14,15]. As a result, AI has the potential to bridge the financial inclusion gap and promote economic empowerment for marginalized communities [16].

However, despite the promising benefits of AI in financial inclusion, there are also significant concerns about fairness, transparency, and accountability that need to be addressed [17,18]. AI systems can perpetuate and even amplify existing biases if not properly managed [19]. For instance, if the data used to train these algorithms contain biases, the resulting decisions may unfairly disadvantage certain groups [20]. This can lead to a vicious cycle where the very people who are supposed to benefit from financial inclusion are instead further marginalized [21].

While several studies have investigated the ethical implications of AI in various domains [22,23], there is a notable research gap in understanding the specific ethical challenges and considerations in the context of AI-driven financial inclusion. Given the potential impact of AI on marginalized communities and the unique characteristics of the financial inclusion landscape, it is crucial to investigate how ethical principles and algorithmic fairness can be operationalized in this specific context.

AI systems must therefore be designed and implemented with ethical considerations in mind, promoting fairness and equity in financial services. This requires a proactive approach that involves diverse stakeholders, including regulators, financial institutions, and civil society organizations, in developing guidelines and best practices for responsible AI deployment in financial inclusion [24,25]. By addressing this research gap and prioritizing the investigation of ethical issues and algorithmic fairness in AI-driven financial inclusion, we can help ensure that the benefits of these technologies are distributed equitably and that the rights and interests of marginalized communities are protected.

2.2. Organizational Justice Theory

Organizational justice theory provides a useful framework for understanding user responses to AI-driven financial inclusion. This theory posits that individuals evaluate the fairness of organizational processes and outcomes based on three dimensions: distributive justice, procedural justice, and interactional justice [26]. Distributive justice refers to the perceived fairness of outcomes, procedural justice to the fairness of processes used to determine outcomes, and interactional justice to the quality of interpersonal treatment during the process.

Applying organizational justice theory to AI in financial inclusion provides a robust framework for understanding how users perceive the fairness of AI-driven financial services, especially in terms of algorithm transparency, accountability, and legitimacy. Algorithm transparency aligns closely with procedural justice, as it refers to the extent to which users can see and understand the processes behind algorithmic decisions. When users can comprehend how an algorithm works and on what basis decisions are made, they are more likely to perceive the process as fair [27]. This perceived fairness can increase trust in the system and overall satisfaction with the service.

Algorithm accountability involves establishing mechanisms through which algorithmic decisions can be audited, explained, and, if necessary, contested [28,29]. This concept is tied to both procedural and interactional justice. Procedural justice is addressed when users know that there are checks and balances to ensure that the algorithms operate as intended and can be corrected if errors occur. Interactional justice comes into play when users feel that they are treated with respect and given adequate explanations for decisions that affect them.

Algorithm legitimacy, closely related to distributive justice, refers to the extent to which users perceive the use of algorithms as appropriate, justified, and in line with societal norms and values. Distributive justice focuses on the perceived fairness of outcomes. When users believe that algorithmic decisions are legitimate, they are more likely to accept the outcomes as fair, even if the outcomes are not in their favor [30,31].

In conclusion, organizational justice theory, which encompasses distributive, procedural, and interactional justice, provides a comprehensive framework for understanding the relationship between algorithm transparency, accountability, and legitimacy, and user responses to AI-driven financial inclusion. By ensuring that AI-driven financial services adhere to these principles of organizational justice, institutions can foster trust, satisfaction, and acceptance among users.

2.3. Heuristic-Systematic Model

The heuristic-systematic model explains how individuals process information and make judgments. According to this model, people use two types of cognitive processing: heuristic processing, which is quick and based on simple cues or rules of thumb, and systematic processing, which is slower and involves more deliberate and thorough analysis of information [5]. In the context of AI-driven financial inclusion, the heuristic-systematic model helps us understand the pathways through which algorithm transparency, accountability, and legitimacy affect perceived algorithmic fairness and, ultimately, user satisfaction.

Algorithm transparency can influence both heuristic and systematic processing. Users may heuristically trust transparent algorithms if they are provided with clear, easy-to-understand summaries or visualizations of how the algorithm works [2]. Simple explanations and transparency badges (e.g., "This decision was made by a transparent AI") can serve as heuristic cues that enhance perceived fairness. For users who engage in systematic processing, detailed documentation, access to algorithmic audits, and the ability to explore the decision-making process enhance perceived fairness [32]. Transparency allows these users to thoroughly evaluate and verify the fairness of the algorithmic processes.

Similarly, algorithm accountability can affect both types of cognitive processing. Accountability cues, such as the presence of customer support for AI-related queries or the availability of a clear appeals process, can act as heuristics that assure users of the algorithm's fairness. Users who process information systematically will appreciate detailed accountability mechanisms, such as audit trails, regular performance reviews of the AI system, and clear protocols for rectifying erroneous decisions [33]. These elements provide the depth needed for users to perceive the system as accountable and fair.

Lastly, algorithm legitimacy can also influence heuristic and systematic processing. Simple endorsements from regulatory bodies or ethical certifications can serve as heuristic cues that bolster the perceived legitimacy of the algorithm [34]. Users engaging in systematic processing will look for deeper validation, such as evidence of bias mitigation efforts, compliance with ethical standards, and comprehensive impact assessments. These factors contribute to a robust perception of legitimacy, reinforcing the overall fairness of the algorithm.

To summarize, the heuristic-systematic model offers a comprehensive framework for understanding the impact of algorithm transparency, accountability, and legitimacy on perceived algorithmic fairness. By addressing users' cognitive processing routes, financial institutions can effectively foster trust and satisfaction in AI-driven financial services. Ensuring that algorithmic systems cater to both heuristic and systematic processing can lead to a more inclusive and user-friendly experience, ultimately promoting the success of AI-driven financial inclusion initiatives.

2.4. Perceived Algorithmic Fairness

Perceived algorithmic fairness is a critical determinant of user response to AI-driven financial inclusion. It encompasses users' perceptions of the transparency, accountability, and legitimacy of algorithmic decisions. Research suggests that when users perceive algorithms to be fair, they are more likely to trust the technology and feel satisfied with the services provided [35,36,37]. For instance, a study by Shin [2] examined how perceived fairness of a personalized AI system influenced user trust and perceived usefulness; ultimately, these factors influenced user satisfaction with the system.

To enhance perceived algorithmic fairness, it is essential to focus on transparency, accountability, and legitimacy. Algorithm transparency involves providing users with clear information about how decisions are made, including the data used and the logic of the algorithm. Grimmelikhuijsen [38] stated that algorithmic transparency encompasses key aspects of procedural fairness. Ideally, explaining an algorithmic decision demonstrates that it was made impartially and thoughtfully. A study by Starke [39] highlighted the importance of algorithmic accountability in algorithmic decision-making, showing that perceived fairness is related to attributes such as transparency and accountability. Qin et al. [40] found that favorable attitudes towards AI-driven employee performance evaluations enhance perceived legitimacy, which in turn fosters a sense of fairness in the assessment process.

In the context of financial inclusion, studies have begun to explore the role of perceived algorithmic fairness. For example, Adeoye et al. [16] pointed out that leveraging AI and data analytics to enhance financial inclusion requires addressing various challenges and considerations, key among them ensuring data privacy and security and promoting fairness and transparency in AI algorithms. However, empirical studies of fairness in AI-driven financial inclusion and its antecedents are still scarce.

To sum up, the successful integration of AI in financial inclusion hinges on addressing ethical considerations and fostering perceived algorithmic fairness. By enhancing transparency, accountability, and legitimacy, financial institutions can improve user trust and satisfaction, thereby advancing the goal of inclusive financial services. As the field of AI-driven financial inclusion continues to evolve, further research is needed to understand the nuances of perceived algorithmic fairness and develop effective strategies for promoting it.

3. Hypotheses Development

3.1. Ethical Considerations and Users’ Perceived Algorithmic Fairness

The ethical dimensions of AI algorithms—transparency, accountability, and legitimacy—play a crucial role in shaping users' perceptions of algorithmic fairness. These factors work in concert to create an overall perception of fairness, which is critical for user acceptance and trust in AI-driven financial inclusion services.

Transparency enables users to understand how algorithmic decisions are made [41]. When AI systems provide clear explanations and insights into their decision-making processes, users are more likely to perceive them as fair [42].

Accountability is another critical ethical dimension that ensures AI systems can be audited, questioned, and rectified when necessary [43]. When users know that there are mechanisms in place to hold AI systems accountable for their decisions, they are more likely to perceive them as fair [44]. Accountable AI systems afford users a sense of fairness, which, in turn, promotes satisfaction and recommendation. In the financial inclusion context, accountability measures such as clear dispute resolution processes and human oversight can enhance users' perceptions of algorithmic fairness.

Legitimacy refers to the extent to which users perceive the use of AI algorithms as appropriate and justified within a given context. When AI systems align with societal norms and values, users are more likely to accept their decisions as fair (Martin and Waldman, 2023). In the case of AI-driven financial inclusion, legitimacy can be established through compliance with ethical standards, regulatory approval, and alignment with financial inclusion goals. Studies have shown that perceived legitimacy enhances trust and continuous usage intention of AI systems [31]. Hence, based on the above discussion, we propose the following hypotheses:

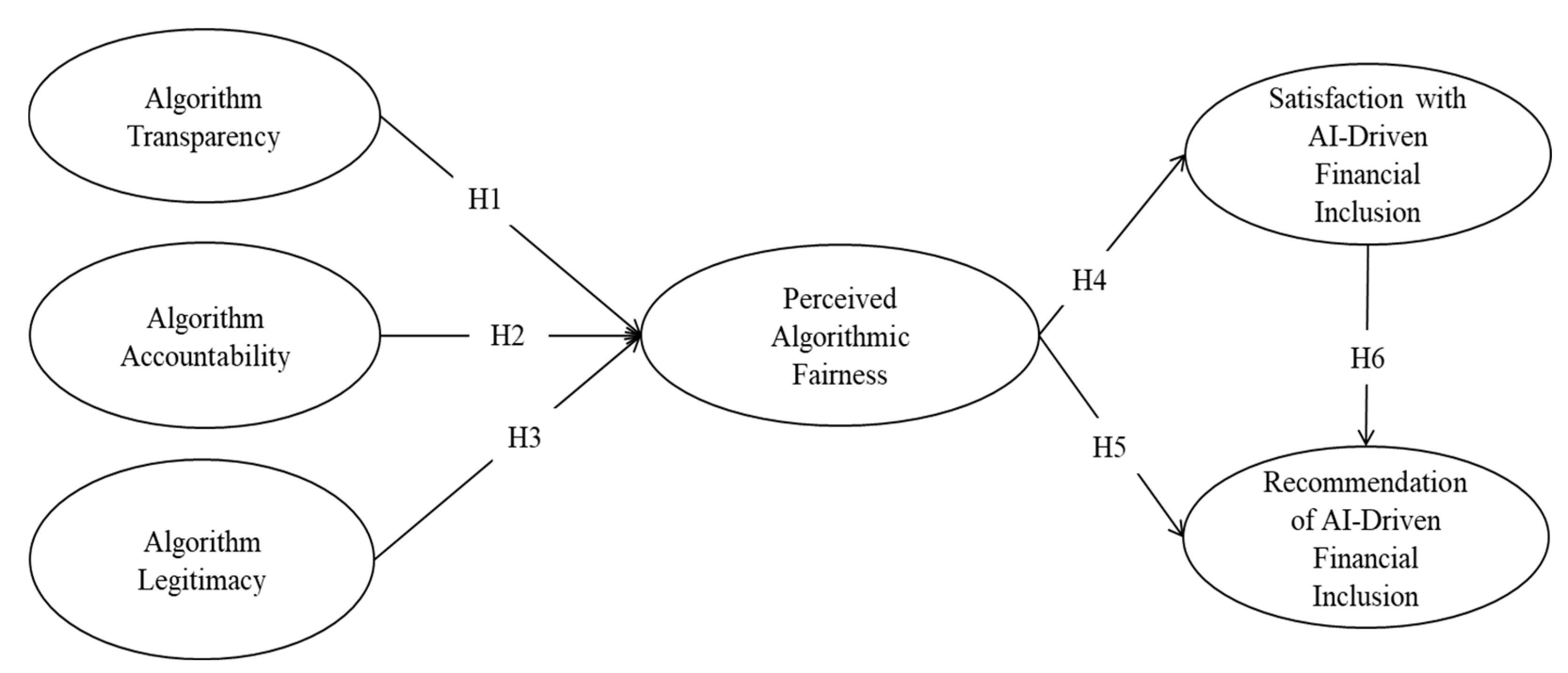

H1: Algorithm transparency positively influences perceived algorithmic fairness.

H2: Algorithm accountability positively influences perceived algorithmic fairness.

H3: Algorithm legitimacy positively influences perceived algorithmic fairness.

These three ethical considerations (transparency, accountability, and legitimacy) work together to shape users' overall perceptions of algorithmic fairness. By addressing these aspects in AI system design and implementation, financial institutions can foster positive responses among users of AI-driven financial inclusion services.

3.2. Perceived Algorithmic Fairness, Users’ Satisfaction and Recommendation

Perceived algorithmic fairness is a critical factor that influences users’ attitudes and behaviors towards AI-driven systems. When users perceive AI algorithms as fair, they are more likely to be satisfied with the services provided and recommend them to others [45,46].

In the context of financial inclusion, perceived algorithmic fairness can significantly affect users’ satisfaction with AI-driven services. Users who view an AI system as fair tend to be more satisfied with its outputs. Conversely, those who perceive the AI system as unfair are likely to be dissatisfied with the results it generates [47]. Such satisfaction can stem from the belief that the AI system treats users equitably and makes unbiased decisions [48].

Furthermore, perceived algorithmic fairness can also influence users’ likelihood to recommend AI-driven financial inclusion services to others. Users who perceive an AI system as fair are more inclined to recommend it to others. In contrast, those who view it as unfair tend to respond negatively, potentially spreading unfavorable opinions [49]. This phenomenon may be explained by reciprocity. Users who believe AI-driven financial inclusion systems employ fair evaluation processes are likely to respond positively in return. Conversely, those who feel fairness principles have been violated are prone to express negative reactions [50]. We propose that users' perception of fairness in the algorithmic evaluation process directly influences their likelihood of reciprocating positively. Specifically, the fairer users perceive the process to be, the more likely they are to recommend the AI-driven financial inclusion system to others. Thus, we hypothesize:

H4: Perceived algorithmic fairness positively influences users’ satisfaction with AI-driven financial inclusion.

H5: Perceived algorithmic fairness positively influences users’ recommendation of AI-driven financial inclusion.

These hypotheses reflect the different ways in which perceived fairness can affect user behavior. While satisfaction is a personal response to the service, recommendation involves sharing one's positive experience with others, potentially expanding the reach of AI-driven financial inclusion services.

3.3. Satisfaction with AI-Driven Financial Inclusion and Recommendation

Users' satisfaction with AI-driven financial inclusion services can have a significant impact on their recommendation behavior. When users are satisfied with their experiences with an AI system, they are more likely to engage in positive word-of-mouth and recommend the services to others [51,52].

In the context of financial inclusion, user satisfaction can stem from various factors, such as the ease of access to financial services, the quality of personalized offerings, and the overall experience with the AI-driven system. When users feel that the AI-driven financial services meet their needs and expectations, they are more likely to be satisfied and, in turn, recommend these services to others [53]. This recommendation behavior can help expand the reach of financial inclusion initiatives and attract new users [54]. Therefore, based on this evidence, we hypothesize:

H6: Users’ satisfaction with AI-driven financial inclusion positively influences their recommendation of it.

The relationship between users’ satisfaction and their likelihood to recommend completes the logical chain from ethical considerations to perceived fairness, satisfaction, and ultimately, recommendation. It emphasizes the importance of not only ensuring algorithmic fairness but also delivering a satisfying user experience to promote the wider use of AI-driven financial inclusion services. The research model based on the research hypotheses so far is shown in Figure 1.

4. Research Methodology and Research Design

4.1. Questionnaire Design and Measurements

Our investigation began with the development of a questionnaire designed to capture the data required for our analysis. We then sought evaluations from professors in the Finance and Information Technology departments, whose feedback led to refinements that improved the questionnaire's precision and relevance.

The questionnaire was structured to assess six key dimensions of AI-driven financial inclusion services. These included the extent to which the algorithms used in the evaluation process were transparent, accountable, and legitimate; users' perceived fairness of the algorithms; their satisfaction with the AI-driven financial inclusion services; and their likelihood to recommend the services to others.

The introductory section of the questionnaire outlined the study's purpose and assured participants of confidentiality and anonymity. It also provided survey instructions. The first part collected basic demographic information such as age, gender, income level, and education to establish a foundational understanding of respondents' backgrounds. The second part comprised items carefully crafted to assess the six constructs under investigation.

The measurement items for algorithmic transparency, accountability, legitimacy, and perceived fairness [31,55] evaluated respondents' perceptions of AI-driven financial inclusion in terms of openness, responsibility, and morality. These items assessed how users viewed the fairness, explainability, and trustworthiness of AI systems used in financial inclusion services. The satisfaction construct [45] evaluated respondents' views on the services' ability to meet their needs and expectations. This included assessing users' overall contentment, perceived value, and the effectiveness of AI-driven solutions in addressing financial requirements. Lastly, the recommendation construct [56] measured the extent to which respondents were likely to endorse or suggest these services to others, assessing their willingness to recommend based on their experiences.

4.2. Sampling and Data Collection

This study targeted users of AI-driven financial inclusion services. To ensure a diverse and representative sample, participants were recruited from various demographics, including different age groups, income levels, educational backgrounds, and geographical locations. The inclusion criteria required participants to have experience with AI-facilitated financial services, ensuring informed responses regarding algorithmic fairness, satisfaction, and recommendation likelihood.

A stratified random sampling technique was employed to ensure representation across key demographic segments, including age, gender, income, education level, and geographical region. This approach helped obtain a balanced sample reflecting the diversity of the AI-driven financial inclusion services user base.
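As a minimal sketch of proportional stratified random sampling, the Python function below groups a sampling frame into strata and draws from each stratum at random, without replacement, in proportion to its share of the frame. The record structure, the `region` key, and the function name are hypothetical and used only for illustration; the study does not report its exact allocation rule, so proportional allocation is an assumption here.

```python
import random
from collections import defaultdict


def proportional_stratified_sample(frame, stratum_of, n, seed=None):
    """Draw a proportional stratified random sample from a sampling frame.

    frame:      list of records (hypothetical structure, e.g. dicts)
    stratum_of: function mapping a record to its stratum key
    n:          target sample size; the realized size can differ slightly
                from n because each stratum's allocation is rounded
    """
    rng = random.Random(seed)

    # Group the frame by stratum.
    strata = defaultdict(list)
    for record in frame:
        strata[stratum_of(record)].append(record)

    sample = []
    for members in strata.values():
        # Proportional allocation: the stratum's share of the frame times n.
        k = min(len(members), round(n * len(members) / len(frame)))
        # Simple random sampling without replacement within the stratum.
        sample.extend(rng.sample(members, k))
    return sample


# Usage with a hypothetical frame: 600 first-tier and 400 second-tier users.
frame = [{"id": i, "region": "tier1" if i < 600 else "tier2"} for i in range(1000)]
sample = proportional_stratified_sample(frame, lambda r: r["region"], n=100, seed=42)
```

When several demographic variables are stratified at once (for example age group and region), the stratum key can simply be a tuple of those attributes, which crosses the stratification variables.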

Data collection utilized a reliable online survey platform, enabling wide reach and participant convenience. The survey was distributed via email and social media channels to maximize participation. Partnerships with financial service providers further facilitated survey dissemination to their customers, enhancing the response rate.

Prior to participation, respondents were informed about the study's purpose, the voluntary nature of their participation, and the confidentiality of their responses. Informed consent was obtained from all participants. The survey was designed to take approximately 15-20 minutes, with assurances that responses would be anonymized to protect privacy.

This rigorous methodology and design aimed to provide robust and reliable insights into the influence of ethical considerations and perceived algorithmic fairness on user satisfaction and recommendation in the context of AI-driven financial inclusion. By carefully structuring the questionnaire, selecting diverse participants, and employing stratified sampling, the study sought to capture a comprehensive and accurate picture of user perceptions and experiences with these innovative financial services.

The survey, conducted from late April to early May 2024, targeted users with experience of AI-driven financial inclusion services in China. Of the 697 questionnaires received, 22 with incomplete responses were excluded, leaving 675 valid questionnaires.

Table 1 presents the demographic profile of the respondents. The sample comprised 57% male (n=385) and 43% female (n=290) participants. Age distribution skewed younger, with 21-30 year-olds representing the largest group (40.6%), followed by 31-40 year-olds (23.7%). These two cohorts accounted for over 64% of the sample, while only 7.9% were over 50. The respondents were predominantly well-educated, with 58.8% holding bachelor's degrees and 23.4% possessing master's degrees or higher. Merely 4% had a high school education or below. Regarding monthly income, 57.6% earned less than 5,000 RMB, 33.6% between 5,000-10,000 RMB, and 8.8% over 10,000 RMB. Most participants demonstrated substantial experience with AI-driven financial services: 59.1% had used them for over a year, 29.8% for 6-12 months, and 11.1% for less than 6 months. Geographically, respondents were concentrated in first-tier (38.1%) and second-tier (40.1%) cities, totaling 78.2% of the sample, while 21.7% resided in third-tier cities or below.
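The valid sample size and the percentages above follow from simple counting. The sketch below assumes, hypothetically, that each questionnaire is stored as a dict of item responses with `None` marking an unanswered item; it applies listwise exclusion and reproduces two of the reported shares from the counts given in the text (385 male and 290 female respondents out of 675).

```python
def valid_questionnaires(responses):
    """Listwise exclusion: keep only questionnaires with every item answered.

    Assumes each questionnaire is a dict mapping item IDs to answers,
    with None marking a missing answer (a hypothetical storage format).
    """
    return [q for q in responses if all(answer is not None for answer in q.values())]


def percentage(count, total, digits=1):
    """Share of `total` represented by `count`, in percent, rounded."""
    return round(100 * count / total, digits)


received, incomplete = 697, 22
valid_n = received - incomplete        # 675 valid questionnaires
male_pct = percentage(385, valid_n)    # 57.0
female_pct = percentage(290, valid_n)  # 43.0
```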

5. Data Analysis and Results

This study utilized covariance-based structural equation modeling (CB-SEM) to examine the complex relationships among multiple independent and dependent variables. CB-SEM is a sophisticated statistical approach that allows for the concurrent analysis of intricate interrelationships between constructs. This methodology is particularly well-suited for testing theoretical models with multiple pathways and latent variables, offering a comprehensive framework for assessing both direct and indirect effects within a single analytical model.

The research model was evaluated using a two-step approach, comprising a measurement model and a structural model. Factor analysis and reliability tests were conducted to assess the factor structure and dimensionality of the key constructs: algorithm transparency, accountability, legitimacy, perceived algorithmic fairness, satisfaction with AI-driven financial inclusion, and recommendation of AI-driven financial inclusion services. Convergent validity was examined to determine how effectively items reflected their corresponding factors, while discriminant validity was assessed to ensure statistical distinctiveness between factors. Mediation analysis was employed to investigate the intermediary role of perceived algorithmic fairness. The following sections detail the results of these analyses, providing a comprehensive overview of the model's validity and the relationships between constructs.

5.1. Measurement Model

In the measurement model, we evaluated the convergent and discriminant validity of the measures. As shown in Table 2, standardized item loadings ranged from 0.662 to 0.894, exceeding the minimum acceptable threshold of 0.60 proposed by Hair et al. [57]. Cronbach's α values for each construct ranged from 0.801 to 0.838, surpassing the recommended 0.7 threshold [58], thus providing strong evidence of scale reliability. Composite reliability (CR) was also employed to assess internal consistency, with higher values indicating greater reliability. According to Raza et al. [59], CR values between 0.6 and 0.7 are considered acceptable, while values between 0.7 and 0.9 are deemed satisfactory to good. In this study, all CR values exceeded 0.80, indicating satisfactory composite reliability. Furthermore, all average variance extracted (AVE) values surpassed 0.50, meeting the criteria established by Fornell and Larcker [60] for convergent validity. These results collectively demonstrate that our survey instrument possesses robust reliability and convergent validity.

Table 3 presents Pearson's correlation coefficients for all research variables, revealing significant correlations among most respondent perceptions. To establish discriminant validity, we employed the Fornell-Larcker criterion, comparing the square root of the Average Variance Extracted (AVE) with factor correlation coefficients. As illustrated in Table 3, the square root of AVE for each factor substantially exceeds its correlation coefficients with other factors. This aligns with Fornell and Larcker's [60] assertion that constructs are distinct if the square root of the AVE for a given construct surpasses the absolute value of its standardized correlation with other constructs in the analysis. These findings provide robust evidence of the scale's discriminant validity, confirming that each construct captures a unique aspect of the phenomenon under investigation and is empirically distinguishable from other constructs in the model.

Self-reported data inherently carries the potential for common method bias or variance, which can stem from multiple sources, including social desirability [61,62]. To address this concern, we implemented statistical analyses as recommended by Podsakoff and Organ [61] to assess the presence and extent of common method bias. Specifically, we employed the Harman one-factor test to evaluate whether the measures were significantly affected by common method bias, which can either inflate or deflate intercorrelations among measures depending on various factors. This approach allows us to gauge the potential impact of method effects on our findings and ensure the robustness of our results.

The Harman one-factor test involves conducting an exploratory factor analysis on all relevant variables without rotation. In our analysis, the first unrotated factor explained only 39.116% of the total variance, well below the 50% threshold that would indicate problematic common method bias. Consequently, we can reasonably conclude that our data are not substantially affected by common method bias. This finding enhances the validity of our results and mitigates concerns about systematic measurement error influencing the observed relationships between constructs in our study.
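Harman's test is often run as an unrotated principal component analysis of all items, and that variant is what this sketch assumes: the share of variance captured by the first component equals the largest eigenvalue of the item correlation matrix divided by the number of items. It uses NumPy; a principal-axis factor extraction, if that is what was used, would give somewhat different figures. The two toy data sets are constructed so that the answer is known in advance.

```python
import numpy as np


def first_factor_variance_share(data):
    """Share of total variance explained by the first unrotated principal component.

    data: array of shape (n_respondents, n_items) with item scores.
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)      # ascending order for a symmetric matrix
    return eigenvalues[-1] / eigenvalues.sum()  # the sum equals the number of items


# Three mutually uncorrelated items: each component explains one third.
uncorrelated = np.array([[1, 1, 1], [-1, 1, -1], [1, -1, -1], [-1, -1, 1]])
# Three perfectly correlated items: one component explains everything.
x = np.array([1.0, 2.0, 3.0, 4.0])
collinear = np.column_stack([x, 2 * x, 3 * x + 1])

share = first_factor_variance_share(uncorrelated)
flagged = share >= 0.5  # the 50% rule of thumb described in the text
```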

5.2. Structural Model

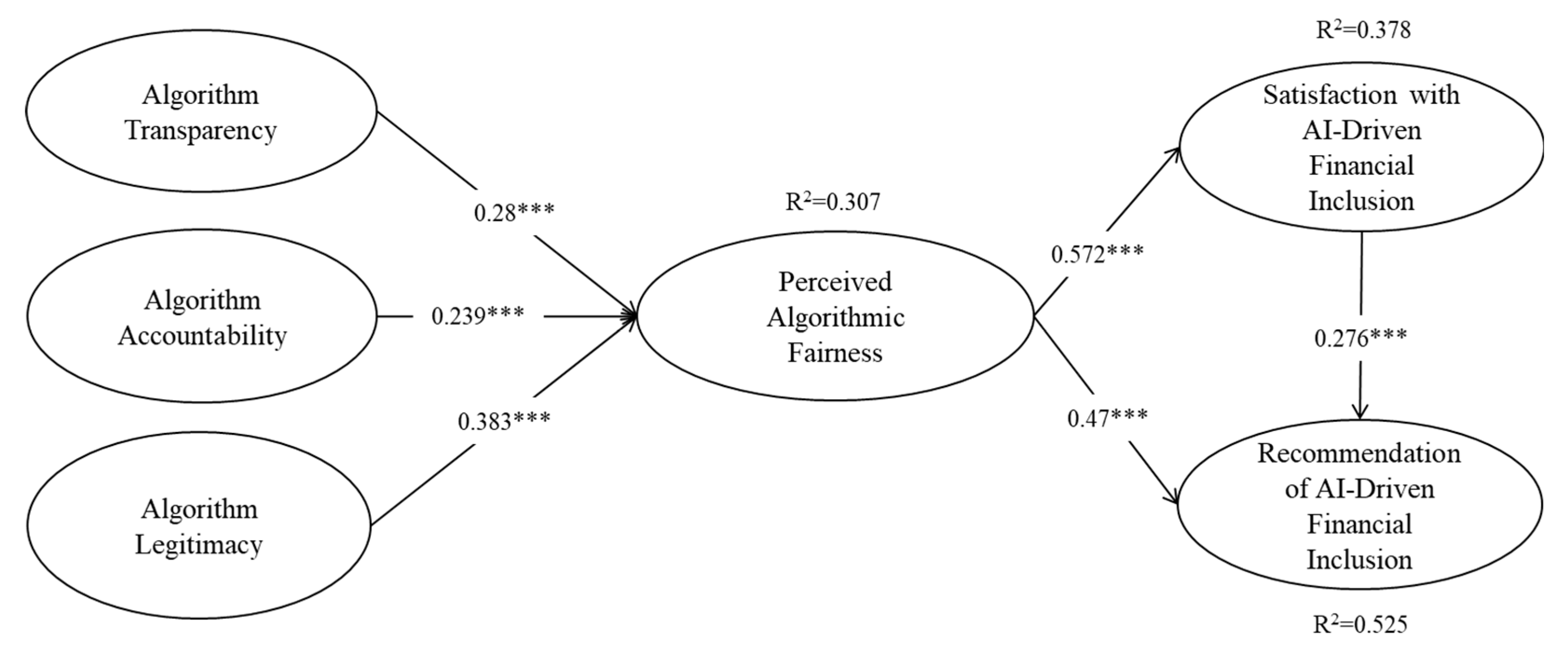

We evaluated the structural model to validate the relationships between constructs in the research model. The analysis revealed all paths were positive and significant at the 0.05 level, with Table 4 presenting standardized path coefficients, significance levels, and explanatory power (R²) for each construct. The R² values for perceived algorithmic fairness (30.7%), satisfaction with AI-driven financial inclusion (37.8%), and recommendation of AI-driven financial inclusion (52.5%) indicated acceptable levels of explanation. Our findings supported all hypotheses: algorithm transparency (β = 0.28, p < 0.001), accountability (β = 0.239, p < 0.001), and legitimacy (β = 0.383, p < 0.001) positively influenced perceived algorithm fairness, collectively explaining 30.7% of its variance (H1-H3). Perceived algorithm fairness significantly affected users' satisfaction (β = 0.572, p < 0.001, R² = 37.8%) and recommendation (β = 0.47, p < 0.001) of AI-driven financial inclusion services (H4-H5). Additionally, users' satisfaction positively impacted their recommendation of these services (β = 0.276, p < 0.001), with perceived fairness and satisfaction jointly explaining 52.5% of the variance in recommendations (H6).

Figure 2 presents the standardized path coefficients and significance levels for each hypothesis. The structural model also demonstrated acceptable fit; detailed hypothesis-testing results and model fit indices are presented in Table 4 and Table 5.

5.3. Mediating Effect of Perceived Algorithmic Fairness between Ethical Considerations, Users’ Satisfaction, and Recommendation

The mediating effect of perceived algorithmic fairness was analyzed using the PROCESS macro in SPSS, which extends the basic linear regression model by introducing a mediator variable. A mediating effect is considered significant when its 95% confidence interval does not include zero; the results are presented in Table 6. We employed a three-step approach: first testing the relationship between the independent (X) and dependent (Y) variables, then examining the relationship between X and the mediating (M) variable, and finally assessing the combined effect of X and M on Y. This process determines whether full or partial mediation occurs based on the relative magnitudes of the coefficients (β11, β21, β31). In this study, we investigated the mediating role of perceived algorithmic fairness in the relationships between the ethical considerations of AI-driven financial inclusion services and users' satisfaction and recommendation. Our findings demonstrate that perceived algorithmic fairness positively mediated both the relationship between ethical considerations and users' satisfaction with AI-driven financial inclusion services and the relationship between ethical considerations and users' recommendation of these services. These results highlight the crucial role of perceived fairness in shaping user attitudes and behaviors toward AI-driven financial inclusion services and underscore its importance for the ethical implementation and user acceptance of such technologies.
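To make the three-step logic and the confidence-interval criterion concrete, the sketch below reimplements a simple single-mediator model with NumPy. It is not the PROCESS macro and does not reproduce Table 6; it only mirrors the same logic under stated assumptions: ordinary least squares for each step, a percentile bootstrap (5,000 resamples by default) for the indirect effect a·b, and simulated data whose variable names (`ethics`, `fairness`, `satisfaction`) and effect sizes are hypothetical.

```python
import numpy as np


def ols_slopes(y: np.ndarray, *predictors: np.ndarray) -> np.ndarray:
    """OLS with an intercept; returns the slope coefficients only."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coefs[1:]


def mediation_paths(x, m, y) -> dict:
    """The three regression steps of a simple (single-mediator) model."""
    (c,) = ols_slopes(y, x)           # step 1: total effect of X on Y
    (a,) = ols_slopes(m, x)           # step 2: effect of X on M
    c_prime, b = ols_slopes(y, x, m)  # step 3: direct effect of X and effect of M on Y
    return {"c": c, "a": a, "b": b, "c_prime": c_prime, "indirect": a * b}


def bootstrap_indirect_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect a*b."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        draws[i] = mediation_paths(x[idx], m[idx], y[idx])["indirect"]
    tail = (1 - level) / 2
    lower, upper = np.quantile(draws, [tail, 1 - tail])
    return float(lower), float(upper)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    n = 400                                       # hypothetical sample size
    ethics = rng.normal(size=n)                   # X: simulated ethical-consideration score
    fairness = 0.6 * ethics + rng.normal(size=n)  # M: simulated perceived fairness
    satisfaction = 0.5 * fairness + 0.1 * ethics + rng.normal(size=n)  # Y
    paths = mediation_paths(ethics, fairness, satisfaction)
    lower, upper = bootstrap_indirect_ci(ethics, fairness, satisfaction, n_boot=2000)
    print({k: round(float(v), 3) for k, v in paths.items()})
    print(f"95% CI for indirect effect: [{lower:.3f}, {upper:.3f}]; "
          f"significant: {not (lower <= 0 <= upper)}")
```

In ordinary least squares the total effect decomposes exactly as c = c' + a·b, so comparing c' with c is one way to read full versus partial mediation. The bootstrap interval is used instead of a normal-theory test because the sampling distribution of a·b is typically skewed.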

6. Discussion and Implications for Research and Practice

6.1. Discussion of Key Findings

Through the lens of organizational justice theory and the heuristic-systematic model, this study examines the impact of ethical considerations on user perceptions, satisfaction, and recommendation behavior in AI-driven financial inclusion services. We adopt an ethics-centered approach to assess the effects of algorithm transparency, accountability, and legitimacy on perceived algorithmic fairness, user satisfaction, and the likelihood of recommending the service. This framework treats ethical considerations as key determinants of user experience in AI-driven financial inclusion services. Moreover, we posit that perceived algorithmic fairness serves as a crucial psychological mediator between these ethical considerations and user responses (satisfaction and recommendation). By investigating these relationships, our study aims to enhance understanding of how ethical considerations shape user perceptions and behaviors in the rapidly evolving domain of AI-driven financial services. This research contributes to the growing body of knowledge at the intersection of AI ethics, user experience, and financial inclusion, offering insights for both practitioners and policymakers in this field.

This study's findings align with existing research that underscores the importance of algorithmic fairness, accountability, and transparency as key determinants of individual behavior in the context of AI technology [33,45]. To the best of our knowledge, this research represents the first empirical investigation into ethical considerations within the domain of AI-driven financial inclusion and their impact on user responses. Our study uniquely highlights the mediating role of perceived algorithmic fairness, offering a novel perspective on the relationship between users' ethical perceptions of algorithms and their subsequent satisfaction with and recommendation of these services. This approach contributes to a more nuanced understanding of the interplay between AI ethics and user experience in the context of financial inclusion technologies.

The results of this study yield several significant findings and contributions to the field. First, our results demonstrate that ethical considerations (algorithm transparency, accountability, and legitimacy) in AI-driven financial inclusion are significant predictors of perceived algorithmic fairness (β = 0.28, 0.239, and 0.383, respectively; p < 0.001). This aligns with and extends previous research findings [31] in the context of AI-driven technologies. Second, our findings reveal that perceived algorithmic fairness significantly predicts both users' satisfaction and their likelihood of recommending AI-driven financial inclusion services (β = 0.572 and 0.47, respectively; p < 0.001). This provides a valuable contribution to the existing literature [47,48], as few studies have empirically addressed this issue in the context of AI-powered financial inclusion, despite growing interest in AI ethics. Third, we found that perceived algorithmic fairness positively mediates the relationship between ethical considerations and user responses. This suggests that ethical considerations are crucial factors affecting users' perceived algorithmic fairness in AI-driven financial inclusion, which in turn influences users' satisfaction and recommendation behavior.

Our research contributes to the literature by demonstrating the value of an ethics-centered approach to AI-driven financial inclusion, developing constructs to measure ethical considerations, and empirically validating the role of perceived algorithmic fairness as a psychological mediator. This study provides a foundation for further research into the ethical aspects of AI-driven financial inclusion and offers valuable insights for service providers and policymakers. It highlights the importance of ethical considerations in designing and implementing AI-driven financial inclusion services to enhance user satisfaction and promote wider adoption.

6.2. Implications for Research

This study offers several theoretical implications for research on AI technology and financial inclusion. First, this research broadens the application of organizational justice theory [26] beyond traditional organizational settings and into the realm of digital finance. By demonstrating the relevance of justice principles in understanding user perceptions of algorithmic fairness in AI-driven financial services, we extend the theory's scope and applicability. This aligns with the growing need to understand fairness in technological contexts, as highlighted by recent work on algorithmic fairness [63,64]. Our empirical validation of perceived algorithmic fairness as a mediator between ethical considerations and user responses contributes to this literature, offering insights into how fairness perceptions influence user behavior in AI-driven systems.

Furthermore, our findings reinforce the importance of the heuristic-systematic model [65] in explaining how users process information about AI systems. The significant impact of ethical considerations on perceived algorithmic fairness supports the idea that users employ both heuristic and systematic processing when evaluating AI-driven services. This extends our understanding of user cognitive processes in the context of complex technological systems.

Our research also bridges a crucial gap between the AI ethics literature [66] and user experience studies in Fintech [7,67]. By providing a holistic framework that connects ethical considerations, user perceptions, and behavioral outcomes in AI-driven financial services, we offer a more comprehensive approach to studying these interconnected aspects. This addresses the need for interdisciplinary research in the rapidly evolving field of AI-driven financial technology. Moreover, by offering concrete evidence of how ethical considerations in AI design can influence user perceptions and behaviors in the critical area of financial inclusion, we contribute to the growing body of literature on AI's broader societal implications [68,69]. This work helps ground theoretical discussions of AI ethics in empirical evidence, offering insights for both researchers and practitioners working to ensure that AI technologies are developed and deployed in socially beneficial ways.

Lastly, the significant relationship we found between perceived algorithmic fairness and user satisfaction and recommendation behavior aligns with and extends previous work [47]. Our results suggest that ethical considerations could be incorporated into technology adoption frameworks, particularly for AI-driven services. This provides a more nuanced understanding of the factors influencing technology adoption in ethically sensitive contexts.

6.3. Implications for Practice

In addition to theoretical implications, several practical implications emerge for stakeholders in the AI-driven financial inclusion sector. First, financial service providers and AI developers should prioritize ethical considerations in the design and implementation of AI-driven financial inclusion services. This includes focusing on algorithm transparency, accountability, and legitimacy. By embedding these ethical principles into their systems from the outset, companies can enhance user trust and satisfaction, potentially leading to higher adoption rates and customer loyalty. Organizations should strive to make their AI algorithms more transparent to users, providing clear, understandable explanations of how AI systems make decisions, particularly in areas such as loan approvals or credit scoring. Implementing user-friendly interfaces that offer insights into the decision-making process can help build trust and improve user perceptions of fairness.
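One way to provide the kind of applicant-level explanation described above is to report, for each decision, which inputs pushed the score down the most. The sketch below assumes a hypothetical linear credit-scoring model; the class name, feature names, weights, and baseline values are all invented for illustration. For a linear score, the per-feature contributions relative to a baseline applicant add up exactly to the difference in scores, which makes them straightforward to communicate; non-linear models would need a dedicated attribution method instead.

```python
from dataclasses import dataclass


@dataclass
class LinearCreditModel:
    """Hypothetical linear scoring model: score = intercept + sum(weight * feature)."""
    intercept: float
    weights: dict[str, float]
    baseline: dict[str, float]  # hypothetical reference applicant, e.g. portfolio averages

    def score(self, applicant: dict[str, float]) -> float:
        return self.intercept + sum(w * applicant[f] for f, w in self.weights.items())

    def contributions(self, applicant: dict[str, float]) -> dict[str, float]:
        """Each feature's push on the score relative to the baseline applicant."""
        return {f: w * (applicant[f] - self.baseline[f]) for f, w in self.weights.items()}

    def reason_codes(self, applicant: dict[str, float], top_k: int = 2) -> list[str]:
        """Features that lowered the score the most, largest effect first."""
        negatives = sorted(
            (c, f) for f, c in self.contributions(applicant).items() if c < 0
        )
        return [f for _, f in negatives[:top_k]]


if __name__ == "__main__":
    # All names and numbers below are hypothetical.
    model = LinearCreditModel(
        intercept=-1.0,
        weights={"on_time_payment_rate": 2.0, "debt_to_income": -3.0, "months_of_history": 0.05},
        baseline={"on_time_payment_rate": 0.9, "debt_to_income": 0.3, "months_of_history": 24},
    )
    applicant = {"on_time_payment_rate": 0.7, "debt_to_income": 0.5, "months_of_history": 6}
    print(model.contributions(applicant))
    print("Main reasons for a lower score:", model.reason_codes(applicant))
```

The reason codes could then be mapped to plain-language messages (for example, "short credit history") in the user interface.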

Second, financial institutions should develop robust accountability frameworks for their AI systems, including regular audits of AI decision-making processes, clear channels for users to contest decisions, and mechanisms to rectify errors or biases identified in the system. To enhance perceptions of algorithm legitimacy, organizations should ensure their AI systems comply with relevant regulations and industry standards. They should also actively engage with regulatory bodies and participate in the development of ethical guidelines for AI in financial services. Communicating these efforts to users can further reinforce the legitimacy of their AI-driven services.

Third, developers and providers should adopt a user-centric approach in designing AI-driven financial inclusion services. This involves conducting regular user surveys, focus groups, and usability tests to understand user perceptions of algorithmic fairness and to identify areas for improvement in the user experience. Additionally, financial service providers should invest in training programs that help their staff understand the ethical implications of AI in financial inclusion. This knowledge can then be translated into better customer service and more informed interactions with users, potentially improving overall satisfaction and trust in the services. Organizations should also implement comprehensive fairness metrics and monitoring systems for their AI algorithms. Regular assessment and reporting on these metrics can help identify potential biases or unfair practices early, allowing for timely interventions and adjustments to maintain high levels of perceived fairness. Service providers should also develop personalized communication strategies to explain AI-driven decisions to users, especially when those decisions might be perceived as unfavorable. Clear, empathetic, and individualized explanations can help maintain user trust and satisfaction, even in challenging situations.
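Organizations setting up the fairness monitoring described above might start with something as simple as comparing approval rates across applicant groups. The sketch below assumes hypothetical group labels and decision data and uses two common group-fairness metrics: the demographic-parity gap (the difference between the highest and lowest approval rates) and the impact ratio (the lowest rate divided by the highest). The 0.8 alert threshold mirrors the "four-fifths" heuristic from U.S. employment-selection guidance and is only an illustrative default, not a regulatory standard for credit decisions.

```python
from collections import defaultdict
from collections.abc import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def fairness_report(decisions: Iterable[tuple[str, bool]],
                    ratio_threshold: float = 0.8) -> dict:
    """Demographic-parity gap and impact ratio across groups, with an alert flag."""
    rates = approval_rates(decisions)
    lowest, highest = min(rates.values()), max(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_gap": highest - lowest,    # difference in approval rates
        "impact_ratio": ratio,             # lowest rate / highest rate
        "alert": ratio < ratio_threshold,  # illustrative "four-fifths" heuristic
    }


if __name__ == "__main__":
    # Hypothetical batch of loan decisions for two applicant groups.
    batch = ([("group_a", True)] * 80 + [("group_a", False)] * 20
             + [("group_b", True)] * 56 + [("group_b", False)] * 44)
    print(fairness_report(batch))
```

In practice such a report would be computed per reporting period and per protected attribute. A parity gap alone does not establish unfairness, since approval rates can legitimately differ with financial factors; it is a prompt for further review.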

Lastly, financial institutions, technology companies, and regulatory bodies should collaborate to establish industry-wide standards for ethical AI in financial inclusion. This could include developing shared guidelines for algorithm transparency, accountability, and fairness, which can help create a more consistent and trustworthy ecosystem for users. Furthermore, organizations should conduct regular assessments of the long-term impacts of their AI-driven financial inclusion services on user financial health and overall well-being. This can help ensure that the services are truly beneficial and align with the broader goals of financial inclusion and ethical AI deployment.

7. Limitations and Future Research Directions

Based on our findings and discussions, several limitations of the current study and potential directions for future research can be identified. The sample characteristics, focused on users of AI-driven financial inclusion services in a specific geographic context, may not fully represent the diverse global population that could benefit from such services. The cross-sectional design of our study limits our ability to infer causality and observe changes in perceptions and behaviors over time. Additionally, while we focused on algorithm transparency, accountability, and legitimacy, there may be other ethical dimensions relevant to AI-driven financial inclusion that were not captured in our study.

To address these limitations and further advance the field, we propose several directions for future research. Longitudinal studies could track changes in user perceptions, satisfaction, recommendation behavior, and other responses over time, providing insights into how ethical considerations and perceived fairness evolve as users become more familiar with AI-driven financial services. Cross-cultural comparisons could reveal how cultural values and norms influence perceptions of algorithmic fairness and ethical considerations in AI-driven financial inclusion. Future studies could also explore additional ethical dimensions, such as privacy concerns, data ownership, or the potential for algorithmic discrimination based on protected characteristics. Experimental designs could help establish causal relationships between specific ethical design features and user perceptions or behaviors, providing more concrete guidance for designing ethical AI systems in financial services.

In addition, expanding the research to include perspectives from other stakeholders, such as regulators, policymakers, and AI developers, could offer a more comprehensive understanding of the challenges and opportunities in implementing ethical AI in financial inclusion. Investigating the role of user education and AI literacy in shaping perceptions of algorithmic fairness and ethical considerations could also inform the development of effective user education programs. Finally, studies exploring how different regulatory approaches to AI in financial services influence user perceptions, provider behaviors, and overall market dynamics could inform policy development in this rapidly evolving sector.

Appendix. Measurement Items

| Constructs | Measurements | Source(s) |
|---|---|---|
| Algorithm Transparency | The criteria and evaluation processes of AI-driven financial inclusion services are publicly disclosed and easily understandable to users. | Shin (2021); Liu and Sun (2024) |
| | The AI-driven financial inclusion services provide clear explanations for its decisions and outputs that are comprehensible to affected users. | |
| | The AI-driven financial inclusion services provide insight into how its internal processes lead to specific outcomes or decisions. | |
| Algorithm Accountability | The AI-driven financial inclusion services have a dedicated department responsible for monitoring, auditing, and ensuring the accountability of its algorithmic systems. | Liu and Sun (2024) |
| | The AI-driven financial inclusion services are subject to regular audits and oversight by independent third-party entities, such as market regulators and relevant authorities. | |
| | The AI-driven financial inclusion services have established clear mechanisms for detecting, addressing, and reporting any biases or errors in its algorithmic decision-making processes. | |
| Algorithm Legitimacy | I believe that the AI-driven financial inclusion services align with industry standards and societal expectations for fair and inclusive financial practices. | Shin (2021) |
| | I believe that the AI-driven financial inclusion services comply with relevant financial regulations, data protection laws, and ethical guidelines for AI use in finance. | |
| | I believe that the AI-driven financial inclusion services operate in an ethical manner, promoting fair access to financial services without bias or discrimination. | |
| Perceived Algorithmic Fairness | I believe the AI-driven financial inclusion services treat all users equally and does not discriminate based on personal characteristics unrelated to financial factors. | Shin (2021); Liu and Sun (2024) |
| | I trust that the AI-driven financial inclusion services use reliable and unbiased data sources to make fair decisions. | |
| | I believe the AI-driven financial inclusion services make impartial decisions without prejudice or favoritism. | |
| Satisfaction with AI-Driven Financial Inclusion | Overall, I am satisfied with the AI-driven financial inclusion services I have experienced. | Shin and Park (2019) |
| | The AI-driven financial inclusion services meet or exceed my expectations in terms of accessibility, efficiency, and fairness. | |
| | I am pleased with the range and quality of services provided through AI-driven financial inclusion platforms. | |
| Recommendation of AI-Driven Financial Inclusion | I will speak positively about the benefits and features of AI-driven financial inclusion services to others. | Mukerjee (2020) |
| | I would recommend AI-driven financial inclusion services to someone seeking my advice on financial services. | |
| | I will encourage my friends, family, and colleagues to consider using AI-driven financial inclusion services. | |

References

1. Mhlanga, D. Industry 4.0 in finance: the impact of artificial intelligence (AI) on digital financial inclusion. Int. J. Financ. Stud. 2020, 8(3), 45.

2. Shin, D. User perceptions of algorithmic decisions in the personalized AI system: Perceptual evaluation of fairness, accountability, transparency, and explainability. J. Broadcast. Electron. Media 2020, 64, 541–565.

3. Martin, K.; Waldman, A. Are algorithmic decisions legitimate? The effect of process and outcomes on perceptions of legitimacy of AI decisions. J. Bus. Ethics 2023, 183, 653–670.

4. Colquitt, J.A. On the dimensionality of organizational justice: a construct validation of a measure. J. Appl. Psychol. 2001, 86(3), 386–400.

5. Todorov, A.; Chaiken, S.; Henderson, M.D. The heuristic-systematic model of social information processing. In The Persuasion Handbook: Developments in Theory and Practice; Dillard, J.P., Pfau, M., Eds.; Sage: Thousand Oaks, CA, USA, 2002; pp. 195–211.

6. Yasir, A.; Ahmad, A.; Abbas, S.; Inairat, M.; Al-Kassem, A.H.; Rasool, A. How Artificial Intelligence Is Promoting Financial Inclusion? A Study On Barriers Of Financial Inclusion. In Proceedings of the 2022 International Conference on Business Analytics for Technology and Security (ICBATS), Dubai, UAE, February 2022; pp. 1–6.

7. Kshetri, N. The role of artificial intelligence in promoting financial inclusion in developing countries. J. Glob. Inf. Technol. Manag. 2021, 24(1), 1–6.

8. Jejeniwa, T.O.; Mhlongo, N.Z.; Jejeniwa, T.O. AI solutions for developmental economics: opportunities and challenges in financial inclusion and poverty alleviation. Int. J. Adv. Econ. 2024, 6(4), 108–123.

9. Uzougbo, N.S.; Ikegwu, C.G.; Adewusi, A.O. Legal accountability and ethical considerations of AI in financial services. GSC Adv. Res. Rev. 2024, 19(2), 130–142.

10. Hentzen, J.K.; Hoffmann, A.; Dolan, R.; Pala, E. Artificial intelligence in customer-facing financial services: a systematic literature review and agenda for future research. Int. J. Bank Mark. 2022, 40(6), 1299–1336.

11. Max, R.; Kriebitz, A.; Von Websky, C. Ethical considerations about the implications of artificial intelligence in finance. In Handbook on Ethics in Finance; Springer: Cham, Switzerland, 2021; pp. 577–592.

12. Aldboush, H.H.; Ferdous, M. Building Trust in Fintech: An Analysis of Ethical and Privacy Considerations in the Intersection of Big Data, AI, and Customer Trust. Int. J. Financ. Stud. 2023, 11(3), 90.

13. Telukdarie, A.; Mungar, A. The impact of digital financial technology on accelerating financial inclusion in developing economies. Procedia Comput. Sci. 2023, 217, 670–678.

14. Ozili, P.K. Financial inclusion, sustainability and sustainable development. In Smart Analytics, Artificial Intelligence and Sustainable Performance Management in a Global Digitalised Economy; Springer: Cham, Switzerland, 2023; pp. 233–241.

15. Lee, C.C.; Lou, R.; Wang, F. Digital financial inclusion and poverty alleviation: Evidence from the sustainable development of China. Econ. Anal. Policy 2023, 77, 418–434.

16. Adeoye, O.B.; Addy, W.A.; Ajayi-Nifise, A.O.; Odeyemi, O.; Okoye, C.C.; Ofodile, O.C. Leveraging AI and data analytics for enhancing financial inclusion in developing economies. Finance Account. Res. J. 2024, 6, 288–303.

17. Owolabi, O.S.; Uche, P.C.; Adeniken, N.T.; Ihejirika, C.; Islam, R.B.; Chhetri, B.J.T. Ethical implication of artificial intelligence (AI) adoption in financial decision making. Comput. Inf. Sci. 2024, 17(1), 1–49.

18. Mhlanga, D. The role of big data in financial technology toward financial inclusion. Front. Big Data 2024, 7, 1184444.

19. Akter, S.; McCarthy, G.; Sajib, S.; Michael, K.; Dwivedi, Y.K.; D’Ambra, J.; Shen, K.N. Algorithmic bias in data-driven innovation in the age of AI. Int. J. Inf. Manag. 2021, 60, 102387.

20. Ntoutsi, E.; Fafalios, P.; Gadiraju, U.; Iosifidis, V.; Nejdl, W.; Vidal, M.E.; Staab, S. Bias in data-driven artificial intelligence systems—An introductory survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1356.

21. Stănescu, C.G.; Gikay, A.A. (Eds.) Discrimination, Vulnerable Consumers and Financial Inclusion: Fair Access to Financial Services and the Law; Routledge: London, UK, 2020.

22. Munoko, I.; Brown-Liburd, H.L.; Vasarhelyi, M. The ethical implications of using artificial intelligence in auditing. J. Bus. Ethics 2020, 167, 209–234.

23. Schönberger, D. Artificial intelligence in healthcare: a critical analysis of the legal and ethical implications. Int. J. Law Inf. Technol. 2019, 27, 171–203.

24. Agarwal, A.; Agarwal, H.; Agarwal, N. Fairness Score and process standardization: framework for fairness certification in artificial intelligence systems. AI Ethics 2023, 3, 267–279.

25. Purificato, E.; Lorenzo, F.; Fallucchi, F.; De Luca, E.W. The use of responsible artificial intelligence techniques in the context of loan approval processes. Int. J. Hum.-Comput. Interact. 2023, 39, 1543–1562.

26. Greenberg, J. Organizational justice: Yesterday, today, and tomorrow. J. Manag. 1990, 16(2), 399–432.

27. Robert, L.P.; Pierce, C.; Marquis, L.; Kim, S.; Alahmad, R. Designing fair AI for managing employees in organizations: a review, critique, and design agenda. Hum.-Comput. Interact. 2020, 35(5–6), 545–575.

28. Novelli, C.; Taddeo, M.; Floridi, L. Accountability in artificial intelligence: what it is and how it works. AI Soc. 2023, 1–12.

29. Busuioc, M. Accountable artificial intelligence: Holding algorithms to account. Public Adm. Rev. 2021, 81(5), 825–836.

30. Morse, L.; Teodorescu, M.H.M.; Awwad, Y.; Kane, G.C. Do the ends justify the means? Variation in the distributive and procedural fairness of machine learning algorithms. J. Bus. Ethics 2021, 1–13.

31. Liu, Y.; Sun, X. Towards more legitimate algorithms: A model of algorithmic ethical perception, legitimacy, and continuous usage intentions of e-commerce platforms. Comput. Hum. Behav. 2024, 150, 108006.

32. Shin, D. Embodying algorithms, enactive artificial intelligence and the extended cognition: You can see as much as you know about algorithm. J. Inf. Sci. 2023, 49(1), 18–31.

33. Shin, D.; Zhong, B.; Biocca, F.A. Beyond user experience: What constitutes algorithmic experiences? Int. J. Inf. Manag. 2020, 52, 102061.

34. König, P.D.; Wenzelburger, G. The legitimacy gap of algorithmic decision-making in the public sector: Why it arises and how to address it. Technol. Soc. 2021, 67, 101688.

35. Cabiddu, F.; Moi, L.; Patriotta, G.; Allen, D.G. Why do users trust algorithms? A review and conceptualization of initial trust and trust over time. Eur. Manag. J. 2022, 40(5), 685–706.

36. Shulner-Tal, A.; Kuflik, T.; Kliger, D. Fairness, explainability and in-between: understanding the impact of different explanation methods on non-expert users’ perceptions of fairness toward an algorithmic system. Ethics Inf. Technol. 2022, 24(2), 1–13.

37. Narayanan, D.; Nagpal, M.; McGuire, J.; Schweitzer, S.; De Cremer, D. Fairness perceptions of artificial intelligence: A review and path forward. Int. J. Hum.-Comput. Interact. 2024, 40(1), 4–23.

38. Grimmelikhuijsen, S. Explaining why the computer says no: Algorithmic transparency affects the perceived trustworthiness of automated decision-making. Public Adm. Rev. 2023, 83(2), 241–262.

39. Starke, C.; Baleis, J.; Keller, B.; Marcinkowski, F. Fairness perceptions of algorithmic decision-making: A systematic review of the empirical literature. Big Data Soc. 2022, 9, 1–16.

40. Qin, S.; Jia, N.; Luo, X.; Liao, C.; Huang, Z. Perceived fairness of human managers compared with artificial intelligence in employee performance evaluation. J. Manag. Inf. Syst. 2023, 40(4), 1039–1070.

41. Sonboli, N.; Smith, J.J.; Cabral Berenfus, F.; Burke, R.; Fiesler, C. Fairness and transparency in recommendation: The users’ perspective. In Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, Utrecht, The Netherlands, June 2021; pp. 274–279.

42. Shin, D.; Lim, J.S.; Ahmad, N.; Ibahrine, M. Understanding user sensemaking in fairness and transparency in algorithms: algorithmic sensemaking in over-the-top platform. AI Soc. 2024, 39, 477–490.

43. Kieslich, K.; Keller, B.; Starke, C. Artificial intelligence ethics by design. Evaluating public perception on the importance of ethical design principles of artificial intelligence. Big Data Soc. 2022, 9, 1–15.

44. Cabiddu, F.; Moi, L.; Patriotta, G.; Allen, D.G. Why do users trust algorithms? A review and conceptualization of initial trust and trust over time. Eur. Manag. J. 2022, 40(5), 685–706.

45. Shin, D.; Park, Y.J. Role of fairness, accountability, and transparency in algorithmic affordance. Comput. Hum. Behav. 2019, 98, 277–284.

46. Ababneh, K.I.; Hackett, R.D.; Schat, A.C. The role of attributions and fairness in understanding job applicant reactions to selection procedures and decisions. J. Bus. Psychol. 2014, 29, 111–129.

47. Ochmann, J.; Michels, L.; Tiefenbeck, V.; Maier, C.; Laumer, S. Perceived algorithmic fairness: An empirical study of transparency and anthropomorphism in algorithmic recruiting. Inf. Syst. J. 2024, 34(2), 384–414.

48. Wu, W.; Huang, Y.; Qian, L. Social trust and algorithmic equity: The societal perspectives of users' intention to interact with algorithm recommendation systems. Decis. Support Syst. 2024, 178, 114115.

49. Bambauer-Sachse, S.; Young, A. Consumers’ intentions to spread negative word of mouth about dynamic pricing for services: Role of confusion and unfairness perceptions. J. Serv. Res. 2023, 27(3), 364–380.

50. Schinkel, S.; van Vianen, A.E.; Ryan, A.M. Applicant reactions to selection events: Four studies into the role of attributional style and fairness perceptions. Int. J. Sel. Assess. 2016, 24(2), 107–118.

51. Yun, J.; Park, J. The effects of chatbot service recovery with emotion words on customer satisfaction, repurchase intention, and positive word-of-mouth. Front. Psychol. 2022, 13, 922503.

52. Jo, H. Understanding AI tool engagement: A study of ChatGPT usage and word-of-mouth among university students and office workers. Telemat. Inform. 2023, 85, 102067.

53. Li, Y.; Ma, X.; Li, Y.; Li, R.; Liu, H. How does platform's fintech level affect its word of mouth from the perspective of user psychology? Front. Psychol. 2023, 14, 1085587.

54. Barbu, C.M.; Florea, D.L.; Dabija, D.C.; Barbu, M.C.R. Customer experience in fintech. J. Theor. Appl. Electron. Commer. Res. 2021, 16(5), 1415–1433.

55. Shin, D. Why does explainability matter in news analytic systems? Proposing explainable analytic journalism. Journalism Stud. 2021, 22(8), 1047–1065.

56. Mukerjee, K. Impact of self-service technologies in retail banking on cross-buying and word-of-mouth. Int. J. Retail Distrib. Manag. 2020, 48(5), 485–500.

57. Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.; Tatham, R. Multivariate Data Analysis, 6th ed.; Pearson Prentice-Hall: Upper Saddle River, NJ, USA, 2006.

58. Hair, J.F.; Gabriel, M.; Patel, V. AMOS Covariance-Based Structural Equation Modeling (CBSEM): Guidelines on its Application as a Marketing Research Tool. Braz. J. Mark. 2014, 13, 44–55.

59. Raza, S.A.; Qazi, W.; Khan, K.A.; Salam, J. Social isolation and acceptance of the learning management system (LMS) in the time of COVID-19 pandemic: an expansion of the UTAUT model. J. Educ. Comput. Res. 2021, 59(2), 183–208.

60. Fornell, C.; Larcker, D.F. Structural equation models with unobservable variables and measurement error: Algebra and statistics. J. Mark. Res. 1981, 18(3), 382–388.

61. Podsakoff, P.M.; Organ, D.W. Self-reports in organizational research: Problems and prospects. J. Manag. 1986, 12(4), 531–544.

62. Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.Y.; Podsakoff, N.P. Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88(5), 879.

63. Newman, D.T.; Fast, N.J.; Harmon, D.J. When eliminating bias isn’t fair: Algorithmic reductionism and procedural justice in human resource decisions. Organ. Behav. Hum. Decis. Process. 2020, 160, 149–167.

64. Birzhandi, P.; Cho, Y.S. Application of fairness to healthcare, organizational justice, and finance: A survey. Expert Syst. Appl. 2023, 216(15), 119465.

65. Chen, S.; Chaiken, S. The heuristic-systematic model in its broader context. In Dual-process Theories in Social Psychology; Chaiken, S., Trope, Y., Eds.; Guilford Press: New York, NY, USA, 1999; pp. 73–96.

66. Shi, S.; Gong, Y.; Gursoy, D. Antecedents of trust and adoption intention toward artificially intelligent recommendation systems in travel planning: a heuristic-systematic model. J. Travel Res. 2021, 60(8), 1714–1734.

67. Belanche, D.; Casaló, L.V.; Flavián, C. Artificial Intelligence in FinTech: understanding robo-advisors adoption among customers. Ind. Manag. Data Syst. 2019, 119(7), 1411–1430.

68. Bao, L.; Krause, N.M.; Calice, M.N.; Scheufele, D.A.; Wirz, C.D.; Brossard, D.; Xenos, M.A. Whose AI? How different publics think about AI and its social impacts. Comput. Hum. Behav. 2022, 130, 107182.

69. Khogali, H.O.; Mekid, S. The blended future of automation and AI: Examining some long-term societal and ethical impact features. Technol. Soc. 2023, 73, 102232.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).