Submitted: 22 July 2024

Posted: 23 July 2024


Abstract

Keywords:

1. Introduction

2. Research Focus

- RQ1: How can digital forensic readiness (DFR) be effectively implemented in an existing Linux-Hadoop big data wireless network without disrupting its operations?

- RQ2: Can digital forensics investigations within a Linux-Hadoop cluster be simplified for both experienced and inexperienced investigators, considering the various configurations of different Hadoop environments?

3. Literature Review

3.1. Background

3.2. Review of Related Work

3.3. Conclusion

4. Research Methodology

- Prototype development.

- Prototype evaluation.

4.1. Prototype Development

4.2. Prototype Testing and Evaluation

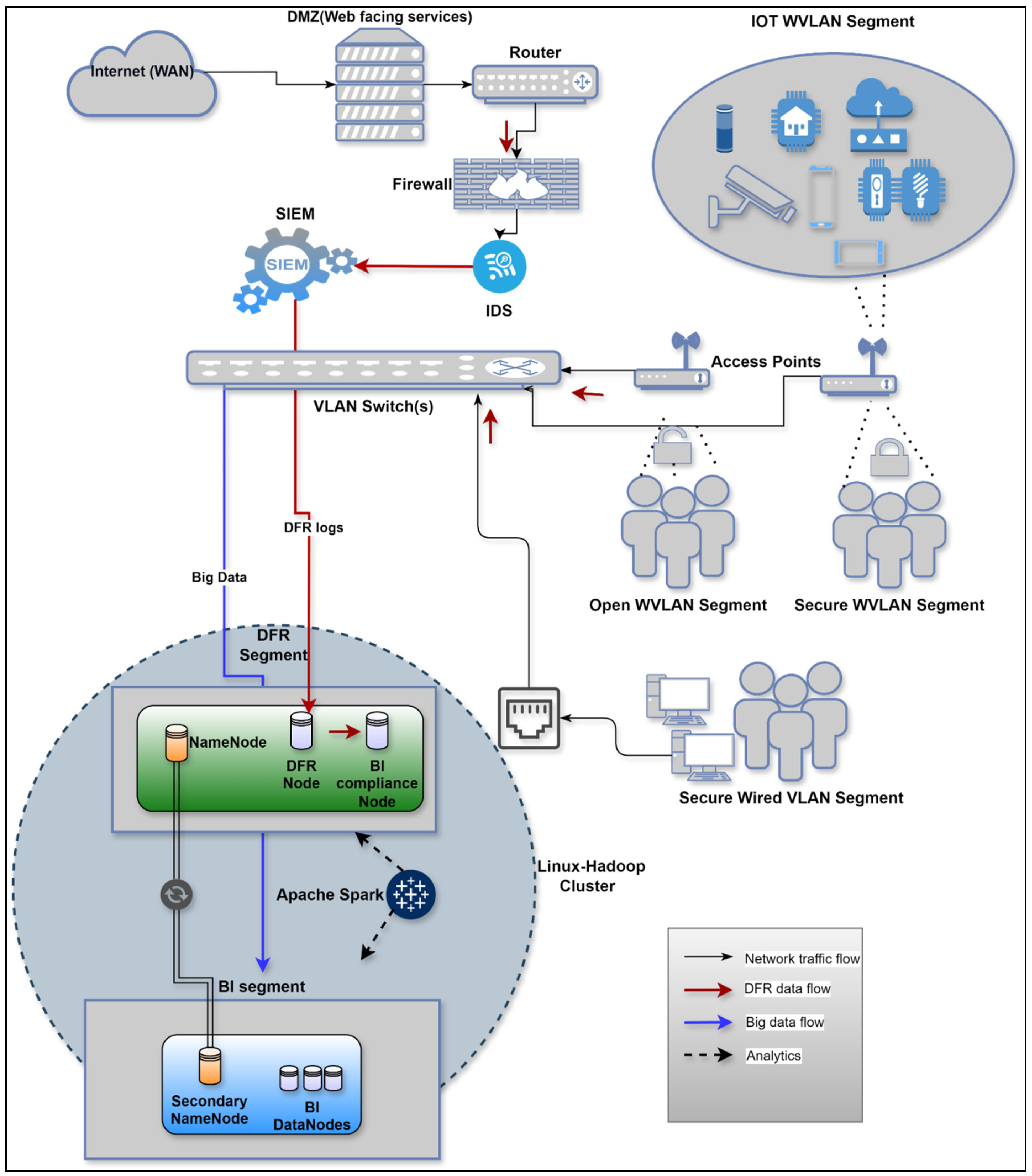

5. Proposed Framework

5.1. Requirements Analysis

- Architecture: Research on DFR and big data frameworks informed the identification of necessary system components and their functionalities.

- Operational needs: The practical needs of digital forensics investigations, such as data collection, secure storage, efficient processing, and access control, were key drivers in defining the system requirements.

- Technological capabilities: Leveraging existing technologies such as Hadoop and Apache Spark, and integrating advanced hardware such as graphics processing units (GPUs), ensured that the system could meet the performance and efficiency demands of both business intelligence (BI) and forensics analytics.

- Compliance and security: Ensuring compliance with legal standards and maintaining robust security measures were paramount in defining the system requirements.

- Data Collection and Storage: The system must be capable of capturing and securely storing network access logs, firewall logs, IDS logs, SIEM logs, and Hadoop cluster data. It should centralise the storage of all relevant forensic data to facilitate easy management and analysis.

- Network Segmentation and Security: Both the big data network and its DFR segment must be segmented and secured.

- Efficient Data Processing: The system must leverage technologies that effectively support both BI and forensics analytics, ensuring efficient processing and analysis of large data volumes.

- Metadata Management: The system should provide efficient metadata management through a secure centralised node, crucial for precise and efficient forensic analysis.

- Access Control and Compliance: Strict access control measures must be enforced so that only authorised personnel can interact with the forensic data. Furthermore, the system should log all security policies and maintain an up-to-date big data collection policy, including its terms and conditions.

- Time Synchronisation: Synchronisation with the network’s Network Time Protocol (NTP) server is essential to meet digital forensics admissibility requirements.

- Resource Allocation: The forensic nodes must have sufficient storage and processing capabilities to handle the volume of data collected.
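The requirements above call for secure storage of the collected logs and for NTP-synchronised time. One common way to support both, shown here as a minimal Python sketch, is to record a SHA-256 digest and a UTC collection timestamp for every evidence file. The hashing step, the manifest layout and the function names `sha256_of` and `build_manifest` are illustrative assumptions, not part of the framework as described.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path) -> list[dict]:
    """Hash every file under evidence_dir and stamp each entry with the UTC collection time.

    The timestamps are only as trustworthy as the host clock, so this assumes the
    host is already synchronised with the network's NTP server.
    """
    manifest = []
    for path in sorted(p for p in evidence_dir.rglob("*") if p.is_file()):
        manifest.append({
            "file": str(path.relative_to(evidence_dir)),
            "sha256": sha256_of(path),
            "collected_utc": datetime.now(timezone.utc).isoformat(),
        })
    return manifest
```

Re-hashing a file later and comparing it with the stored digest gives a simple check that the evidence has not changed since collection.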

5.2. Framework Overview

5.3. Research Question 1 Insights

- First, reconfigure the wireless network to place the forensic nodes on the same segment as the NameNode using VLANs or subnetting to achieve network segmentation.

- Next, prepare the forensic nodes by installing a Linux OS, Java, and Hadoop, setting up SSH access, and updating host name resolution.

- Configure Hadoop on the forensic nodes by synchronising the configuration files from the NameNode, then adjust these configurations to accurately reflect the nodes' role as forensic nodes.

- Start necessary Hadoop services on the forensic nodes, such as DataNode and any additional services related to forensic readiness.

- Finally, verify the integration by ensuring the forensic nodes are recognised by the NameNode and can communicate efficiently, and by testing data retrieval and forensic tool operations to confirm the setup.
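Part of the final verification step can be automated. The Python sketch below assumes the Hadoop client is on the PATH of the node running it and that the forensic nodes use the hypothetical host names `dfr1` and `dfr2`. It parses the output of `hdfs dfsadmin -report`, relying on the report's "Live datanodes (N):" and "Hostname:" lines, and returns any expected forensic node that the NameNode does not list as live. The helper names are illustrative only.

```python
import re
import subprocess

# Section headers printed by `hdfs dfsadmin -report`.
LIVE_HEADER = re.compile(r"^Live datanodes \(\d+\):")
OTHER_HEADER = re.compile(r"^(Dead|Decommissioning|Entering maintenance|In maintenance) datanodes")


def live_datanodes(report: str) -> set[str]:
    """Extract the host names listed under 'Live datanodes' in a dfsadmin report."""
    hosts: set[str] = set()
    in_live = False
    for raw in report.splitlines():
        line = raw.strip()
        if LIVE_HEADER.match(line):
            in_live = True
        elif OTHER_HEADER.match(line):
            in_live = False
        elif in_live and line.startswith("Hostname:"):
            hosts.add(line.split(":", 1)[1].strip())
    return hosts


def missing_forensic_nodes(report: str, expected: set[str]) -> set[str]:
    """Return the expected forensic nodes that the NameNode does not report as live."""
    return expected - live_datanodes(report)


def fetch_report() -> str:
    """Run the report; needs the hdfs CLI on PATH and HDFS superuser rights."""
    return subprocess.run(["hdfs", "dfsadmin", "-report"],
                          capture_output=True, text=True, check=True).stdout
```

For example, `missing_forensic_nodes(fetch_report(), {"dfr1", "dfr2"})` should return an empty set once both forensic nodes have registered with the NameNode.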

5.4. Research Question 2 Insights

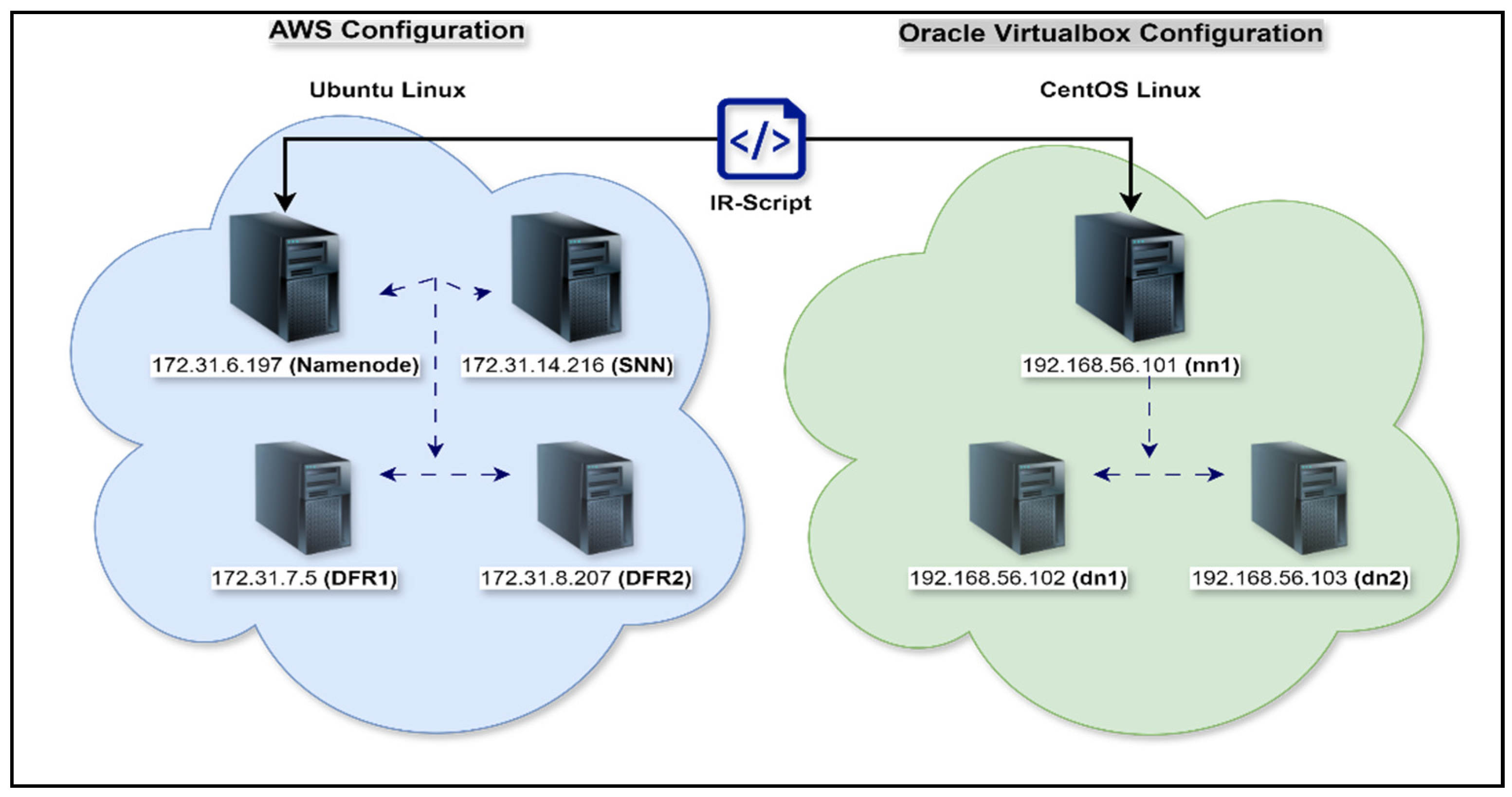

6. Prototype Configuration and Evaluation

6.1. AWS Configuration

- OS details: Ubuntu Server 22.04 LTS.

- Virtualisation environment: Amazon Web Services (AWS).

- Four EC2 instances: NameNode, Secondary NameNode (SSN), and two DataNodes (DFR1 and DFR2).

- All instances were configured to run on the same subnet to ensure network connectivity and efficient data transfer within the Hadoop cluster.

- A key pair was created to enable secure connections to the AWS instances and was used to SSH into them.

- A security group was configured to allow necessary traffic:

- IAM configuration: an IAM role was configured and attached to the instances.

- Java and Hadoop were downloaded and installed on each instance.

- Password-less SSH was set up on all EC2 nodes.

- The /home/ubuntu/.bashrc shell script was modified in all the nodes to configure the required environment for Java and Hadoop.

- Hadoop core files on the NameNode were configured and copied to the other nodes using the scp command.

- The Hadoop Distributed File System (HDFS) on the NameNode was then formatted and the Hadoop daemons were started.

- Lastly, the IR-script was developed within the Ubuntu environment, then tested and improved iteratively. The results are discussed in Section 6.4.
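The step of copying the Hadoop core files from the NameNode to the other nodes with scp can be scripted once password-less SSH is in place. This Python sketch only builds (and, optionally, runs) one `scp` command per target node. The configuration directory, the `ubuntu` login, the host names `SSN`, `DFR1` and `DFR2` being resolvable, and the exact list of files copied are assumptions for illustration.

```python
import shlex
import subprocess

# Standard Hadoop configuration files under $HADOOP_HOME/etc/hadoop; which of these
# were actually copied in the prototype is an assumption.
CONFIG_FILES = ["core-site.xml", "hdfs-site.xml", "mapred-site.xml", "yarn-site.xml", "workers"]


def scp_commands(conf_dir: str, hosts: list[str], user: str = "ubuntu") -> list[list[str]]:
    """Build one scp command per host that copies the config files into the same directory."""
    sources = [f"{conf_dir}/{name}" for name in CONFIG_FILES]
    return [["scp", *sources, f"{user}@{host}:{conf_dir}/"] for host in hosts]


def distribute(conf_dir: str, hosts: list[str], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them; relies on password-less SSH."""
    for cmd in scp_commands(conf_dir, hosts):
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

For example, `distribute("/home/ubuntu/hadoop/etc/hadoop", ["SSN", "DFR1", "DFR2"])` (a hypothetical path) prints the three commands without executing them; passing `dry_run=False` runs them.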

6.2. Oracle VB Configuration

- OS details: CentOS 8.

- Virtualisation environment: Oracle VM VirtualBox (Oracle VB) installed on a Windows 11 Dell laptop.

- Three virtual machines (VMs) were created: the NameNode nn1 and two DataNodes (dn1 and dn2).

- All VMs were configured to run on the same internal network using host-only adaptors for internal communication and network address translation (NAT) adaptors for effective external communication.

- Password-less SSH was then set up on all VMs.

- The /home/hadoop/.bashrc shell script was modified in all the nodes to configure the required environment for Java and Hadoop.

- Hadoop core files on the NameNode (nn1) were configured and copied to the other nodes.

- HDFS on nn1 was formatted and the Hadoop daemons were started.

- The IR-script was then executed and the results were examined as discussed in the evaluation section below.
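The Hadoop core files configured on nn1 are XML "*-site" files. As a hedged illustration, the Python sketch below renders such a file from a dictionary of properties. The NameNode port (9000) and the replication factor of 2 are assumed values chosen to fit a cluster with two DataNodes; they are not taken from the prototype.

```python
import xml.etree.ElementTree as ET


def hadoop_site_xml(properties: dict[str, str]) -> str:
    """Render a Hadoop *-site.xml document from a name -> value mapping."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


# Assumed values: nn1 serves HDFS on port 9000, and each block is replicated
# to both DataNodes (dn1, dn2).
CORE_SITE = {"fs.defaultFS": "hdfs://nn1:9000"}
HDFS_SITE = {"dfs.replication": "2"}
```

Writing `hadoop_site_xml(CORE_SITE)` to `core-site.xml` on nn1 and then copying it to dn1 and dn2 keeps every node pointed at the same NameNode.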

6.3. IR-script Overview

6.4. IR-script Evaluation

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Ahmed, Hameeza, Muhammad Ali Ismail, and Muhammad Faraz Hyder. "Performance optimization of hadoop cluster using linux services." In 17th IEEE International Multi Topic Conference 2014, pp. 167-172. IEEE, 2014.

- Akinbi, Alex Olushola. "Digital forensics challenges and readiness for 6G Internet of Things (IoT) networks." Wiley Interdisciplinary Reviews: Forensic Science 5, no. 6 (2023): e1496.

- Asim, Mohammed, Dean Richard McKinnel, Ali Dehghantanha, Reza M. Parizi, Mohammad Hammoudeh, and Gregory Epiphaniou. "Big data forensics: Hadoop distributed file systems as a case study." Handbook of Big Data and IoT Security (2019): pp. 179-210.

- Beloume, Amy. 2023. “The Problems of Internet Privacy and Big Tech Companies.” The Science Survey. February 28, 2023. https://thesciencesurvey.com/news/2023/02/28/the-problems-of-internet-privacy-and-big-tech-companies/.

- Elgendy, Nada, and Ahmed Elragal. "Big data analytics: a literature review paper." In Advances in Data Mining. Applications and Theoretical Aspects: 14th Industrial Conference, ICDM 2014, St. Petersburg, Russia, July 16-20, 2014. Proceedings 14, pp. 214-227. Springer International Publishing, 2014.

- “Evaluating Prototypes.” https://www.tamarackcommunity.ca/hubfs/Resources/Tools/Aid4Action%20Evaluating%20Prototypes%20Mark%20Cabaj.pdf (accessed on 20 May 2024).

- Häggman, Anders, Tomonori Honda, and Maria C. Yang. "The influence of timing in exploratory prototyping and other activities in design projects." In International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, vol. 55928, p. V005T06A023. American Society of Mechanical Engineers, 2013.

- Harshany, Edward, Ryan Benton, David Bourrie, and William Glisson. 2020. “Big Data Forensics: Hadoop 3.2.0 Reconstruction.” Forensic Science International: Digital Investigation 32 (April): 300909.

- Joshi, Pramila. "Analyzing big data tools and deployment platforms." International Journal of Multidisciplinary Approach & Studies 2, no. 2 (2015): pp. 45-56.

- Kumar, Yogendra, and Vijay Kumar. "A Systematic Review on Intrusion Detection System in Wireless Networks: Variants, Attacks, and Applications." Wireless Personal Communications (2023): pp. 1-58.

- Messier, Ric, and Michael Jang. Security strategies in Linux platforms and applications. Jones & Bartlett Learning, 2022.

- Mpungu, Cephas, Carlisle George, and Glenford Mapp. "Developing a novel digital forensics readiness framework for wireless medical networks using specialised logging." In Cybersecurity in the Age of Smart Societies: Proceedings of the 14th International Conference on Global Security, Safety and Sustainability, London, September 2022, pp. 203-226. Cham: Springer International Publishing, 2023.

- Nazeer, Sumat, Faisal Bahadur, Arif Iqbal, Ghazala Ashraf, and Shahid Hussain. "A Comparison of Window 8 and Linux Operating System (Android) Security for Mobile Computing." International Journal of Computer (IJC) 17, no. 1 (2015): pp. 21-29.

- Olabanji, Samuel Oladiipo, Oluseun Babatunde Oladoyinbo, Christopher Uzoma Asonze, Tunboson Oyewale Oladoyinbo, Samson Abidemi Ajayi, and Oluwaseun Oladeji Olaniyi. "Effect of adopting AI to explore big data on personally identifiable information (PII) for financial and economic data transformation." Available at SSRN 4739227 (2024).

- Oo, Myat Nandar. "Forensic Investigation on Hadoop Big Data Platform." PhD diss., MERAL Portal, 2019.

- Russom, Philip. "Big data analytics." TDWI best practices report, fourth quarter 19, no. 4 (2011): pp. 1-34.

- Sachowski, Jason. Implementing Digital Forensic Readiness: From Reactive to Proactive Process, 2nd ed. Boca Raton, FL: CRC Press, Taylor & Francis Group, 2019.

- Sadia, Halima. 2022. “10 Prototype Testing Questions a Well-Experienced Designer Need to Ask.” Webful Creations. November 28, 2022. https://www.webfulcreations.com/10-prototype-testing-questions-a-well-experienced-designer-need-to-ask/.

- Sarker, Iqbal H. AI-Driven Cybersecurity and Threat Intelligence: Cyber Automation, Intelligent Decision-Making and Explainability. Springer Nature, 2024.

- Shoderu, Gabriel, Stacey Baror, and Hein Venter. "A Privacy-Compliant Process for Digital Forensics Readiness." In International Conference on Cyber Warfare and Security, vol. 19, no. 1, pp. 337-347. 2024.

- Singh, Dilpreet, and Chandan K. Reddy. "A survey on platforms for big data analytics." Journal of Big Data 2 (2015): pp. 1-20.

- Taylor, Ronald C. "An overview of the Hadoop/MapReduce/HBase framework and its current applications in bioinformatics." BMC Bioinformatics 11 (2010): pp. 1-6.

- Thakur, Manikant. "Cyber security threats and countermeasures in digital age." Journal of Applied Science and Education (JASE) 4, no. 1 (2024): pp. 1-20.

- Thanekar, Sachin Arun, K. Subrahmanyam, and A. B. Bagwan. "A study on digital forensics in Hadoop." Indonesian Journal of Electrical Engineering and Computer Science 4, no. 2 (2016): pp. 473-478.

- Yaman, Okan, Tolga Ayav, and Yusuf Murat Erten. "A Lightweight Self-Organized Friendly Jamming." International Journal of Information Security Science 12, no. 1 (2023): pp. 13-20.

| IR-script generated file | Forensics artefacts | AWS | Oracle VB | Results summary |
|---|---|---|---|---|
| OS_Info.txt | | √ √ √ √ √ √ √ √ √ ⍻ √ | √ √ √ √ √ √ √ √ √ ⍻ √ | All the target OS digital forensics artefacts were retrieved on both systems. However, the IDS checks were inconclusive (see the Conclusions section for details). |
| Hadoop_Info.txt | | √ √ √ √ √ √ √ √ √ √ | √ √ √ √ √ √ √ √ √ √ | All the target Hadoop cluster digital forensics artefacts were retrieved on both systems. |
| Script_Log.txt | | √ | √ | Time-stamped log files were generated on both systems. |
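The three output files in the table suggest the overall shape of the IR-script. The Python sketch below is a hypothetical approximation, not the authors' script: the commands it runs are placeholders, and it simply writes command output to OS_Info.txt and Hadoop_Info.txt while recording UTC-timestamped steps in Script_Log.txt. The `runner` parameter is an illustrative addition so that the collection logic can be exercised without a live cluster.

```python
import subprocess
from datetime import datetime, timezone
from pathlib import Path

# Placeholder checks; the actual artefact list of the IR-script is not reproduced here.
OS_COMMANDS = [["uname", "-a"], ["hostname"], ["who"], ["ss", "-tulpn"]]
HADOOP_COMMANDS = [["hdfs", "version"], ["hdfs", "dfsadmin", "-report"]]


def run(cmd: list[str]) -> str:
    """Run a command and return its output, or an error note if it cannot be run."""
    try:
        res = subprocess.run(cmd, capture_output=True, text=True, timeout=60)
        return res.stdout + res.stderr
    except (OSError, subprocess.TimeoutExpired) as exc:
        return f"[error] {exc}\n"


def collect(out_dir: Path, runner=run) -> None:
    """Write OS and Hadoop artefacts to text files and a UTC-timestamped step log."""
    out_dir.mkdir(parents=True, exist_ok=True)
    log_lines: list[str] = []

    def log(msg: str) -> None:
        log_lines.append(f"{datetime.now(timezone.utc).isoformat()} {msg}")

    for filename, commands in (("OS_Info.txt", OS_COMMANDS), ("Hadoop_Info.txt", HADOOP_COMMANDS)):
        with (out_dir / filename).open("w") as fh:
            for cmd in commands:
                log(f"running: {' '.join(cmd)}")
                fh.write(f"===== {' '.join(cmd)} =====\n{runner(cmd)}\n")
        log(f"wrote {filename}")
    (out_dir / "Script_Log.txt").write_text("\n".join(log_lines) + "\n")
```

On a cluster node, `collect(Path("ir_output"))` would run the placeholder commands for real; missing tools are recorded as errors in the output files rather than aborting the run.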

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).