Submitted: 23 July 2024

Posted: 25 July 2024


Abstract

Keywords:

1. Introduction

2. Preliminaries

2.1. The Elo Rating System

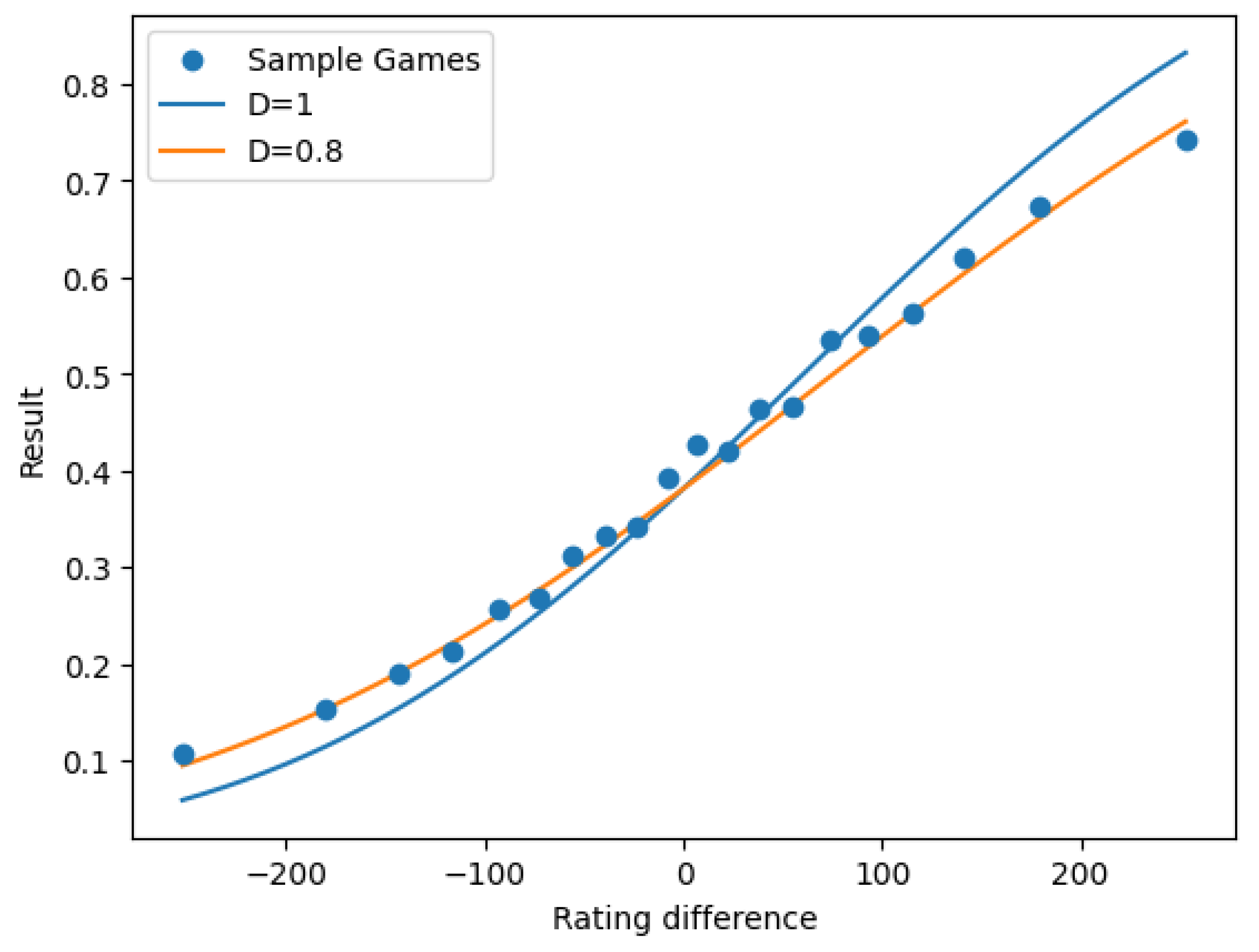

2.2. Basis of the Static Elo Model

2.3. Statistical Inference in the Static Model

2.4. Basis of the Elo Adjustment Formula

2.5. Asymmetric Games

2.6. Accuracy Metrics for Elo Rating Systems

3. Stochastic Elo Models

3.1. Continuous Models

3.2. Accuracy Metrics for the Stochastic Model

3.3. Non-Homogeneous Process

3.4. A Discrete Model

4. Computational Study

4.1. Experimental Setup

- The expected result is determined by Eq. (8) with constant L.

- In each season, we call "entering teams" the teams that did not play in the previous season (in the first season, all teams are entering teams).

- We divide each season into two parts (I and II): part I comprises the games that start before every entering team has played at least m games, and part II comprises the remaining games.

- During part I, in each match between two non-entering teams, we update their ratings (from the previous season) according to Eq. (1) using a fixed factor K.

- When part I ends, we compute the rating estimator for each entering team using the games in part I and the current ratings of non-entering teams.

- During part II, since ratings are available for all teams, we update them after every match using Eq. (1) with the same K-factor.
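
The procedure above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: Eq. (8) and Eq. (1) are not reproduced here, so `expected_score` assumes the usual logistic Elo curve on a 400-point scale with L added to the home-minus-away rating difference, and `elo_update` assumes the usual adjustment K(S - E). The entering-team estimator is likewise assumed to be the rating at which the expected total score in part I equals the observed total, found by bisection. All function names, the default rating, and the choice to ignore games between two entering teams are hypothetical.

```python
def expected_score(r_home, r_away, L, scale=400.0):
    """Assumed form of Eq. (8): logistic expected score of the home team."""
    return 1.0 / (1.0 + 10.0 ** (-(r_home - r_away + L) / scale))


def elo_update(r_home, r_away, score_home, K, L):
    """Assumed form of Eq. (1): move both ratings by K * (score - expected)."""
    delta = K * (score_home - expected_score(r_home, r_away, L))
    return r_home + delta, r_away - delta


def estimate_entering_rating(obs, L, default=0.0, lo=-4000.0, hi=4000.0):
    """Assumed estimator: rating whose expected total equals the observed total.

    obs holds (opponent_rating, is_home, score) from the entering team's view.
    With only wins or only losses the estimate runs to the search bounds.
    """
    if not obs:
        return default  # assumption: fall back to a default rating
    observed = sum(score for _, _, score in obs)

    def expected_total(r):
        return sum(
            expected_score(r, opp, L) if is_home
            else 1.0 - expected_score(opp, r, L)
            for opp, is_home, _ in obs
        )

    for _ in range(100):  # bisection; expected_total is increasing in r
        mid = (lo + hi) / 2.0
        if expected_total(mid) < observed:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def run_season(games, ratings, K, L, m):
    """games: chronological (home, away, score_home); ratings: dict, mutated."""
    entering = {t for home, away, _ in games for t in (home, away)
                if t not in ratings}
    played = {t: 0 for t in entering}
    pending = {t: [] for t in entering}
    part_two = not entering
    for home, away, s in games:
        if part_two or (home not in entering and away not in entering):
            # Rated teams only: ordinary update with the fixed K-factor.
            ratings[home], ratings[away] = elo_update(
                ratings[home], ratings[away], s, K, L)
            continue
        # Part I game involving an entering team: store it for the estimator.
        # Games between two entering teams are ignored in this sketch.
        if home in entering and away not in entering:
            pending[home].append((away, True, s))
        elif away in entering and home not in entering:
            pending[away].append((home, False, 1.0 - s))
        for t in (home, away):
            if t in played:
                played[t] += 1
        if all(n >= m for n in played.values()):
            # Part I ends: estimate entering teams from current ratings.
            for t, stored in pending.items():
                obs = [(ratings[o], h, sc) for o, h, sc in stored]
                ratings[t] = estimate_entering_rating(obs, L)
            part_two = True
    return ratings
```

For example, `run_season([("A", "C", 0.0), ("C", "B", 0.0), ("A", "B", 1.0)], {"A": 0.0, "B": 0.0}, K=20.0, L=0.0, m=2)` treats C as an entering team, estimates its rating from its first two games, and then updates A and B in the third game.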

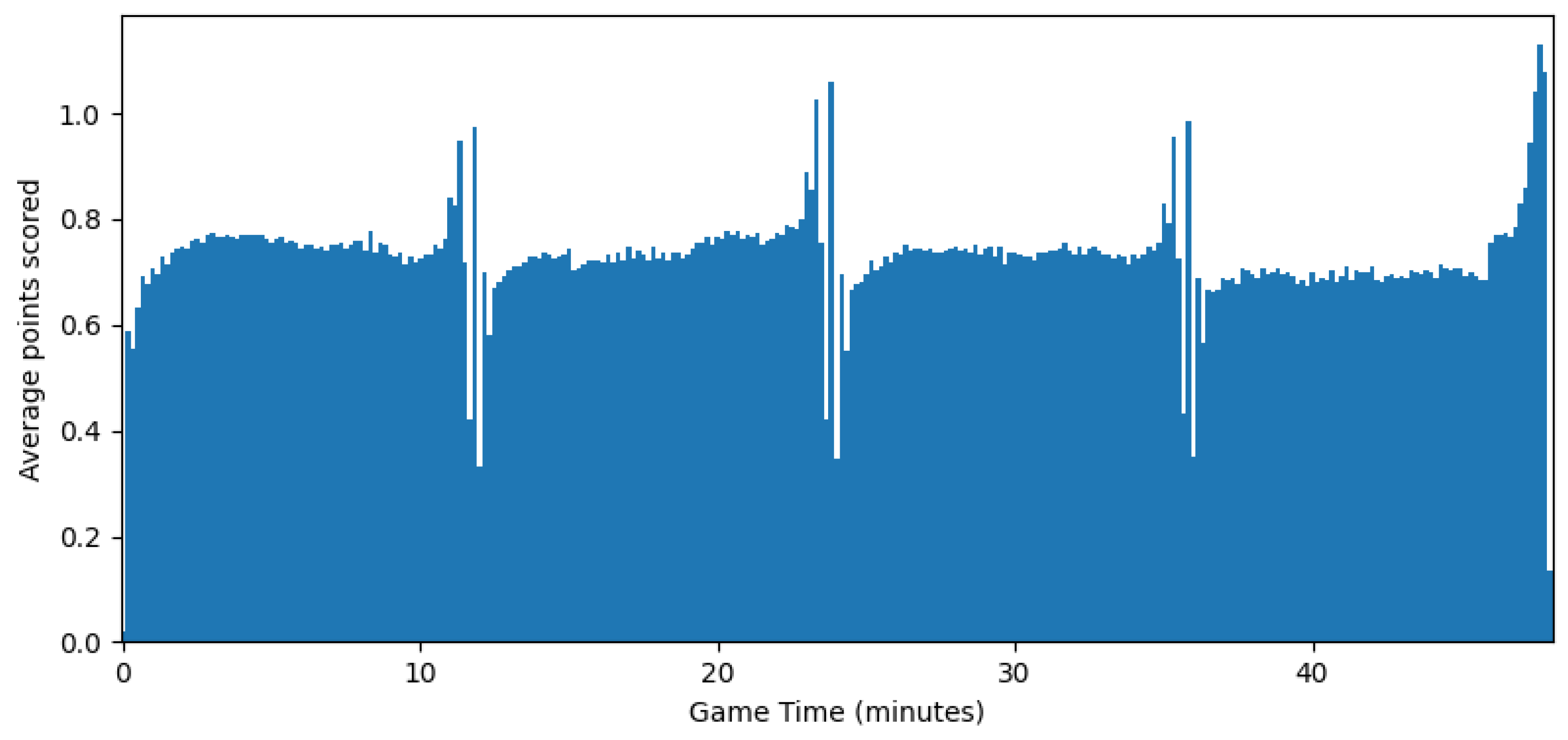

4.2. Basketball Results

4.2.1. Static Elo

- Without Elo, simply assuming .

- Fixing K = 0 and minimizing as a function of L (no change in strength).

- Fixing L = 0 and minimizing as a function of K (no home advantage).

- Minimizing as a function of K and L (standard Elo system).
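
The three Elo-based fits in this list can be illustrated with a simple grid search. The sketch below is hedged throughout: the objective being minimized is not stated in this list, so the code assumes a mean squared error between the expected and the actual result; the expected score and the update rule are the standard logistic Elo forms (assumed, since Eq. (8) and Eq. (1) are not reproduced here); and all teams start at rating 0, which ignores the entering-team procedure of Section 4.1. The names `mean_squared_error` and `grid_search` are hypothetical.

```python
def expected_score(r_home, r_away, L, scale=400.0):
    """Assumed logistic expected score of the home team, with offset L."""
    return 1.0 / (1.0 + 10.0 ** (-(r_home - r_away + L) / scale))


def mean_squared_error(games, K, L):
    """Run Elo over chronological (home, away, score_home) games.

    Returns the average squared gap between expected and actual result.
    Assumption: every team starts at rating 0.
    """
    ratings = {}
    total = 0.0
    for home, away, s in games:
        r_home = ratings.get(home, 0.0)
        r_away = ratings.get(away, 0.0)
        e = expected_score(r_home, r_away, L)
        total += (s - e) ** 2
        delta = K * (s - e)
        ratings[home] = r_home + delta
        ratings[away] = r_away - delta
    return total / len(games)


def grid_search(games, K_grid, L_grid):
    """Return (error, K, L) with the smallest error over the grid."""
    return min(
        (mean_squared_error(games, K, L), K, L)
        for K in K_grid
        for L in L_grid
    )
```

Passing `K_grid=[0.0]` corresponds to the fit with no change in strength, `L_grid=[0.0]` to the fit with no home advantage, and two full grids to the standard Elo system. A grid search is used here only for transparency; any one- or two-dimensional minimizer would serve the same purpose.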

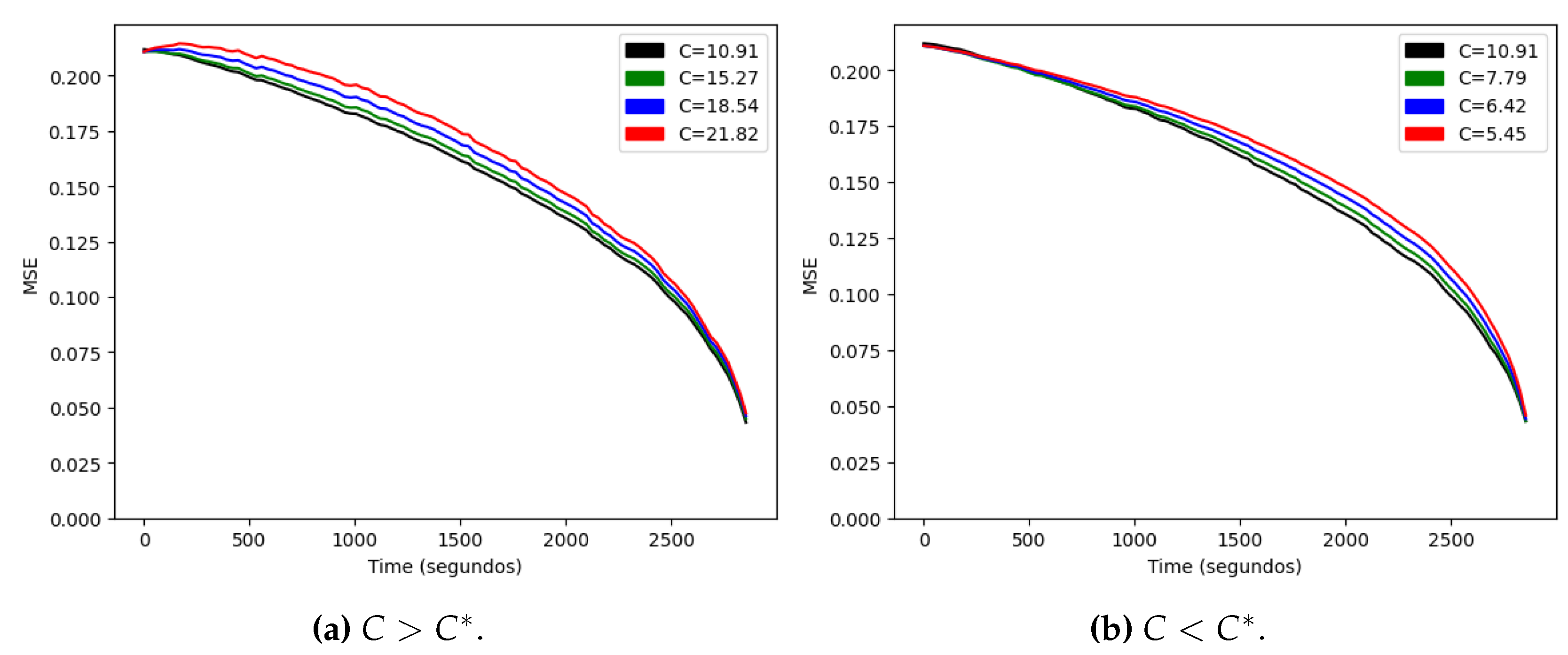

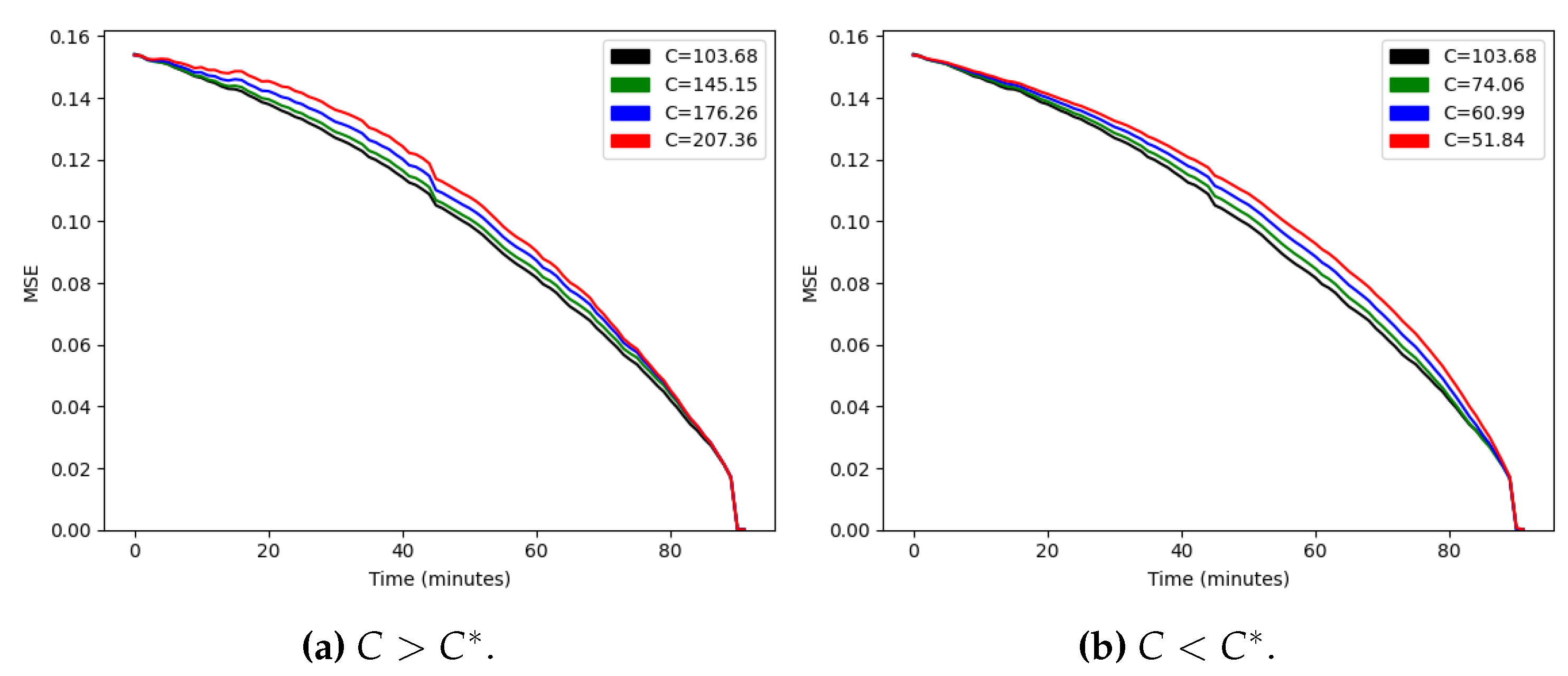

4.2.2. Stochastic Elo

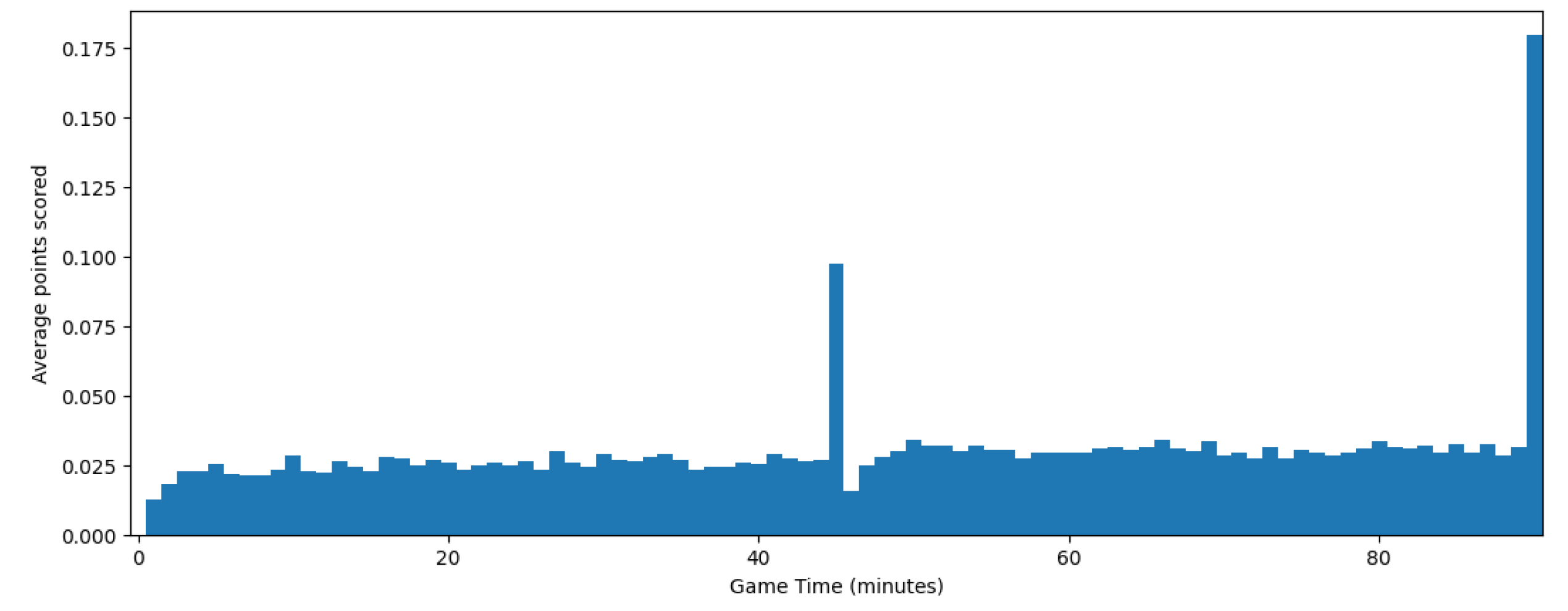

4.3. Soccer Results

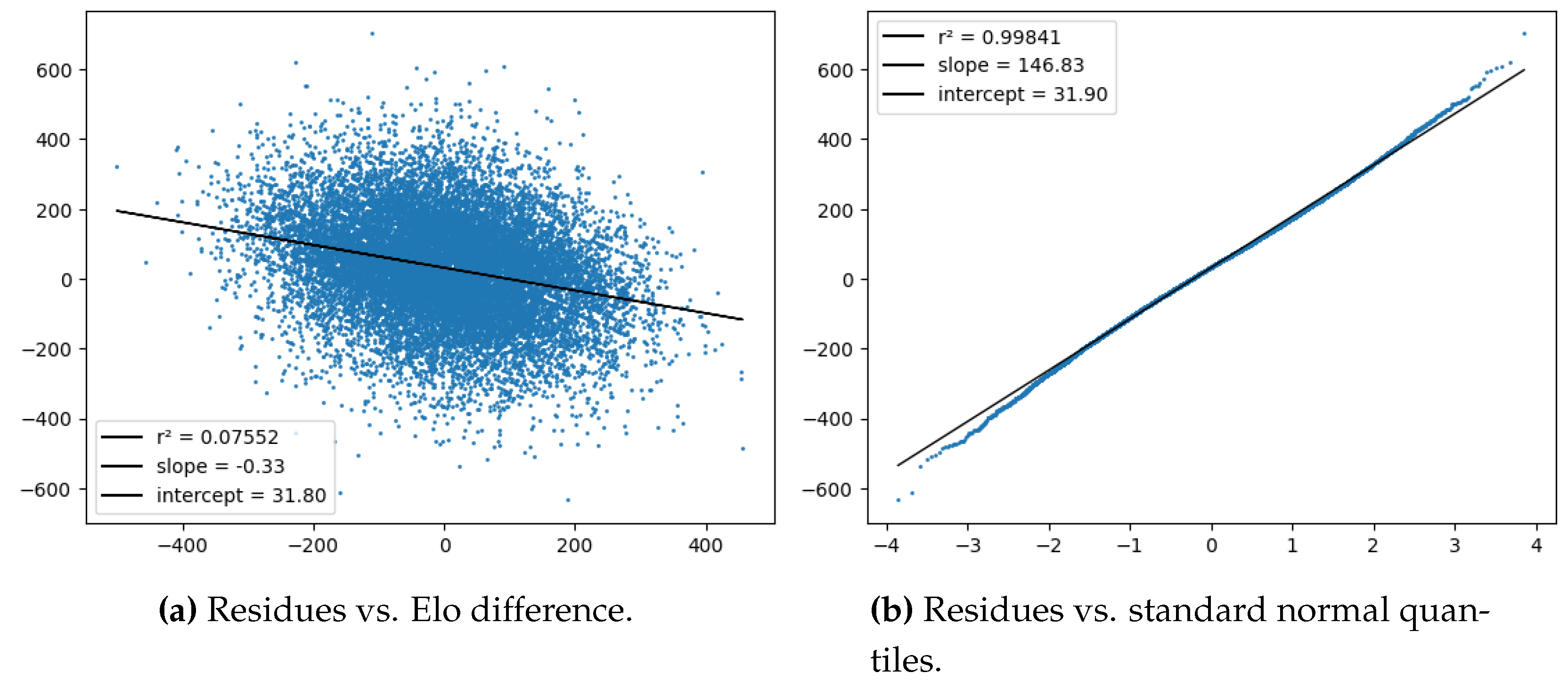

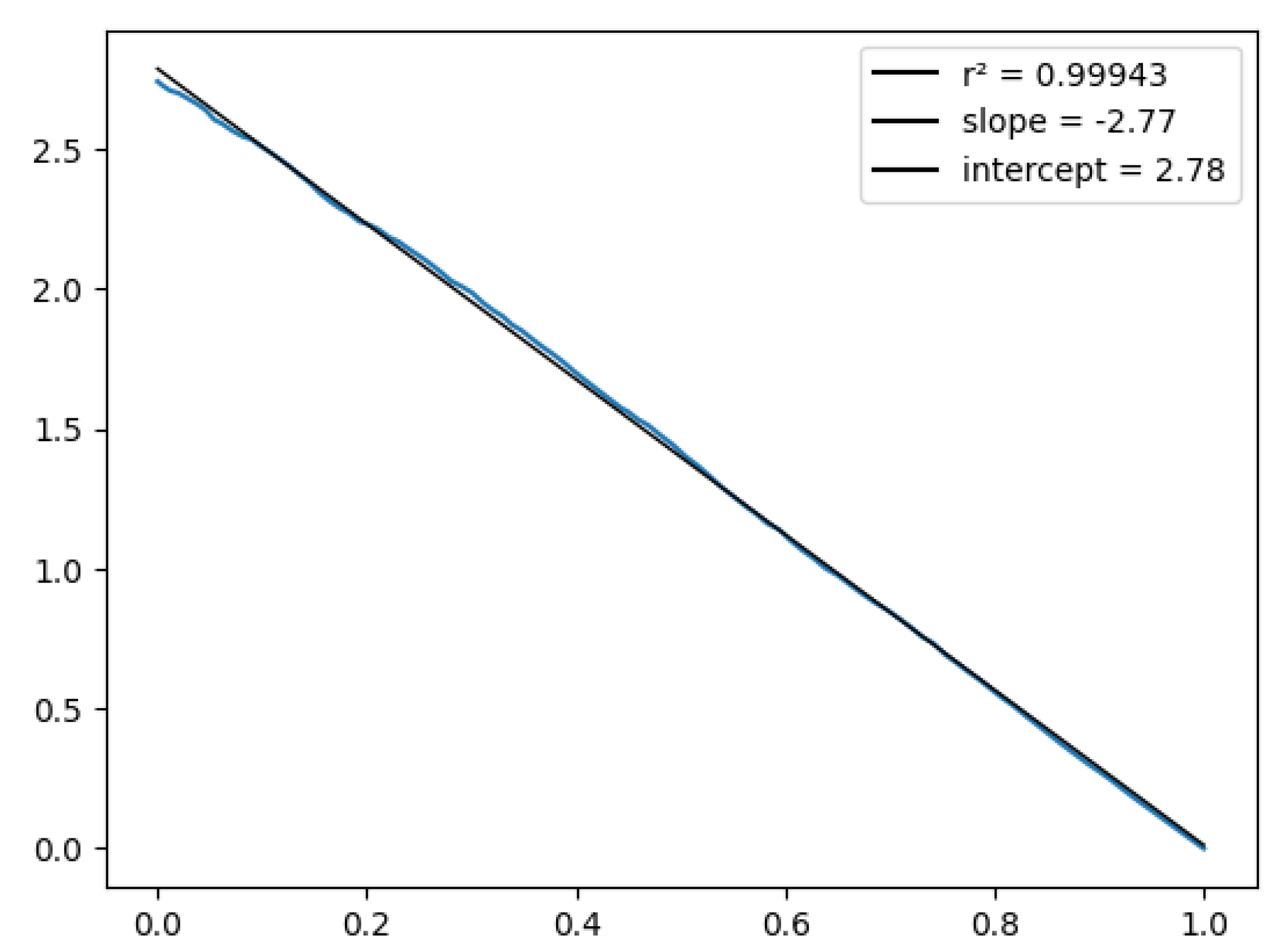

4.3.1. Static Elo

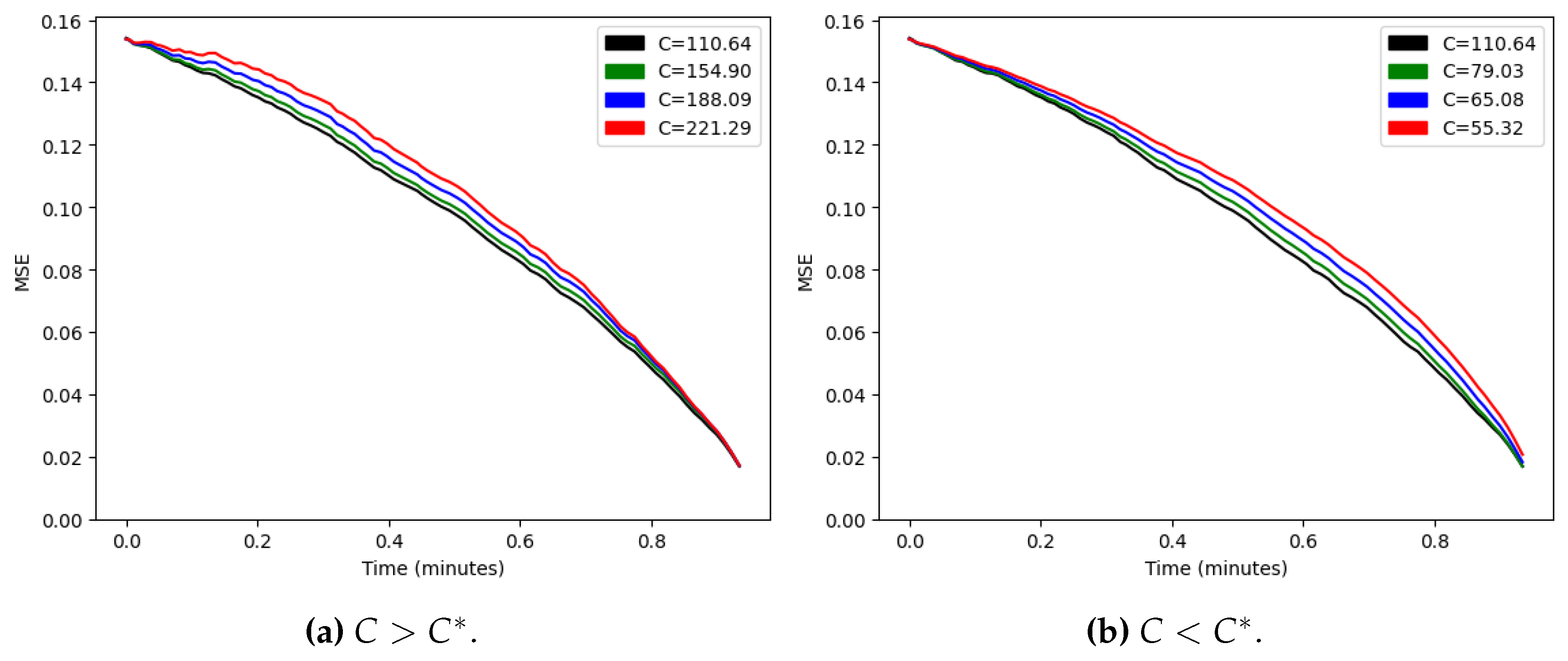

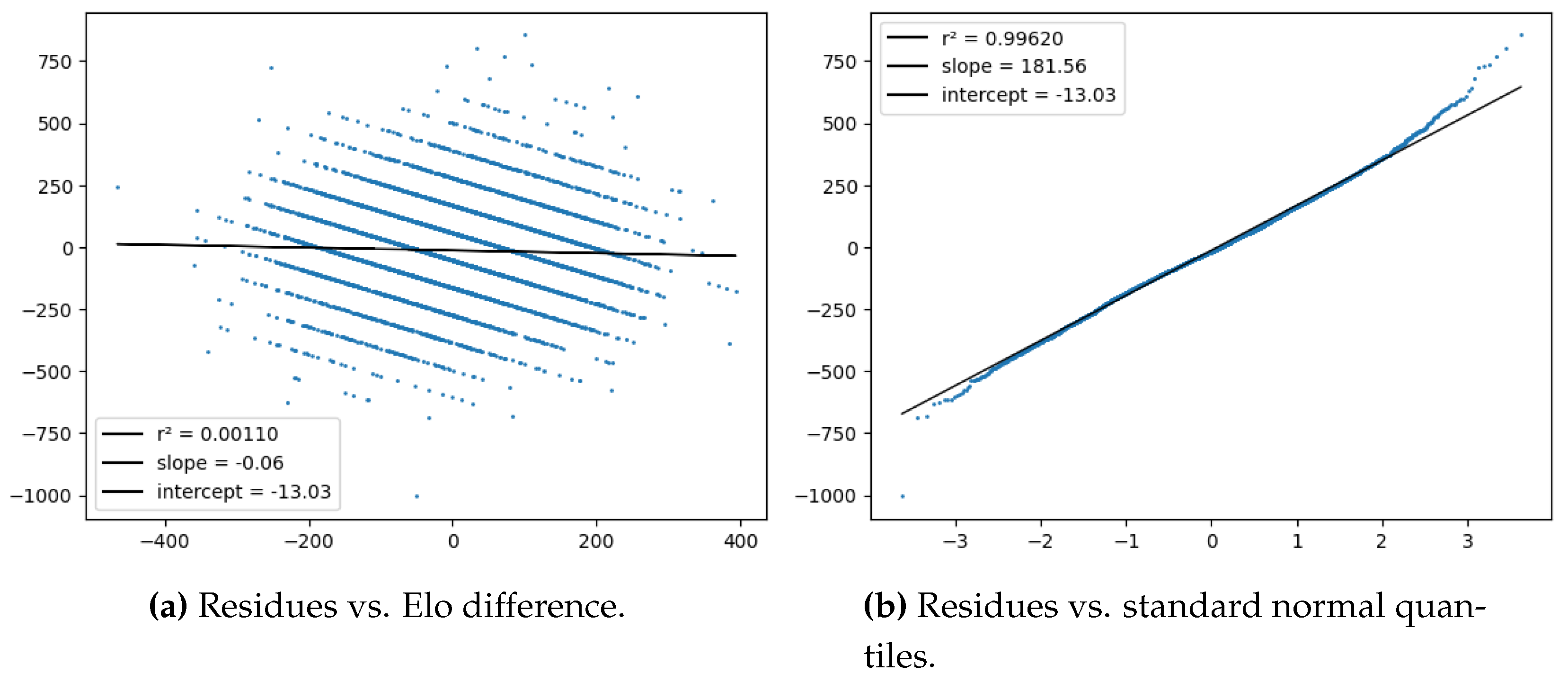

4.3.2. Stochastic Elo

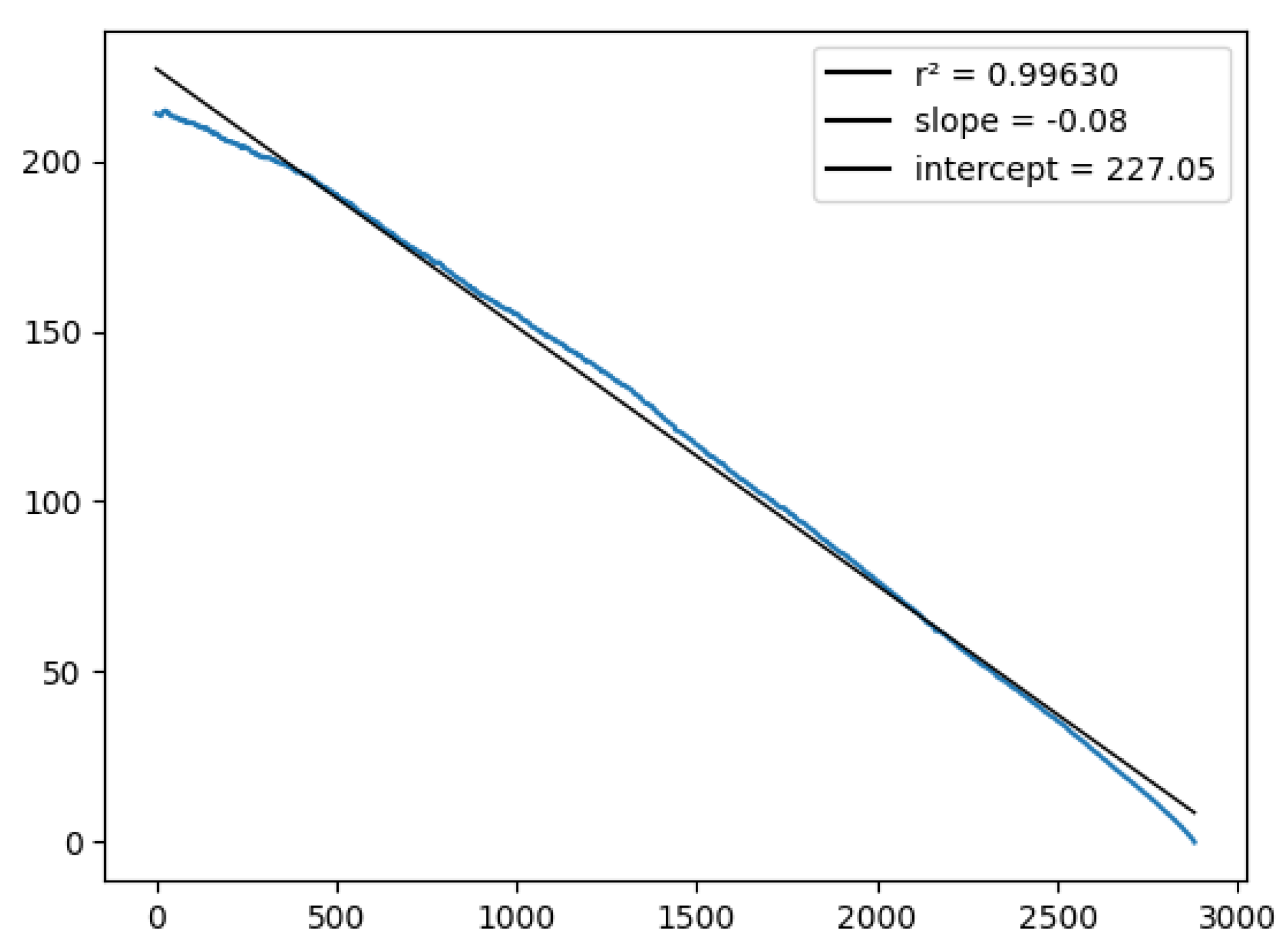

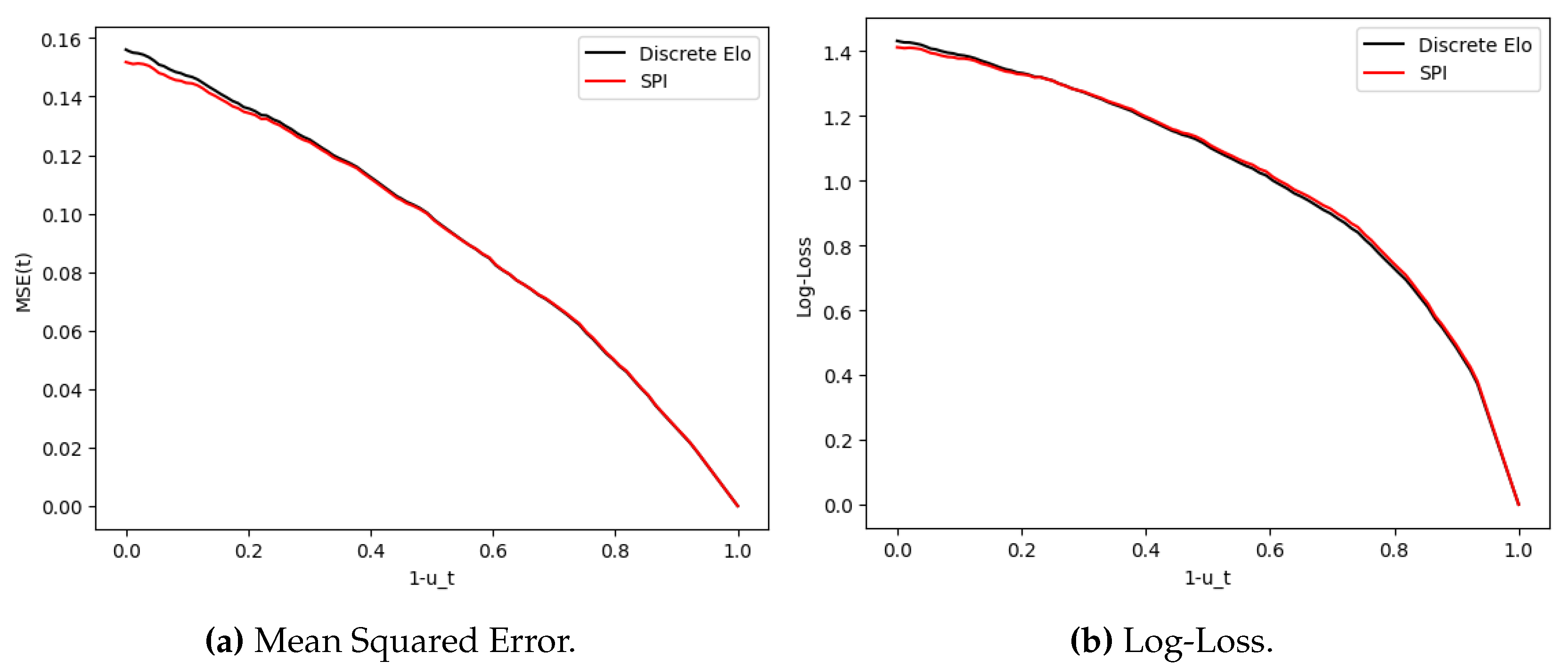

4.3.3. Discrete Elo Model

5. Conclusions

5.1. Future Work

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A. Proofs Regarding the Static Rating Estimators

Appendix B. Proof That the Discrete Model Is an Elo Model

Appendix C. Implementation of the Rating System from SPI

References

- Aldous, D. Elo Ratings and the Sports Model: A Neglected Topic in Applied Probability? Statistical Science 2017, No. 4, 616–629.

- Elo, A.E. The Rating of Chessplayers, Past and Present, 2nd ed.; Arco Publishing Inc.: New York, USA, 1986.

- Glickman, M.E. Paired Comparison Models with Time-Varying Parameters. PhD Thesis, Harvard University, Cambridge, Massachusetts, May 1993.

- Steele, J.M. Stochastic Calculus and Financial Applications, 1st ed.; Springer-Verlag: New York, USA, 2010.

- Jabin, P.E.; Junca, S. A Continuous Model For Ratings. SIAM Journal on Applied Mathematics, 2015, 75 (2), 420–442.

- Bondesson, L. Generalized gamma convolutions and related classes of distributions and densities, Lecture Notes in Stat. 76, Springer, New York, 1992.

- Cramér, H. Über eine Eigenschaft der normalen Verteilungsfunktion. Math Z, 1936, Vol. 41, 405–414.

- Glickman, M.E.; Jones, A.C. Rating the Chess Rating System. Chance, 1999, 12 (2), 21–28.

- Skellam, J. G. The frequency distribution of the difference between two Poisson variates belonging to different populations. Journal of the Royal Statistical Society, 1946, Vol. 109 Issue 3 296.

- Ferri, C.; Hernandez-Orallo, J.; Modroiu, R. An Experimental Comparison of Performance Measures for Classification. Pattern Recognition Letters, 2009, 30, 27–38.

- Karlis, D.; Ntzoufras, I. Analysis of sports data by using bivariate Poisson models. The Statistician, 2003, 52, Part 3, 381–393.

- How Our Club Soccer Predictions Work. Available online: https://fivethirtyeight.com/methodology/how-our-club-soccer-predictions-work/ (accessed on 14 July 2024).

- A guide to ESPN’s SPI ratings. Available online: https://www.espn.com/world-cup/story/_/id/4447078/ce/us/guide-espn-spi-ratings (accessed on 14 July 2024).

| No Elo | -53.78 | 0.23876 | 0.24394 |
| 0 | -60.89 | 0.26498 | 0.29590 |
| 14.71 | 0 | 0.22333 | 0.22308 |
| 16.19 | -60.74 | 0.21179 | 0.21744 |

| No Elo | 48.11 | - | 0.17881 | 0.18188 |
| 0 | 52.12 | 1 | 0.17248 | 0.19252 |
| 9.90 | 0 | 1 | 0.16346 | 0.16136 |
| 10.80 | 52.68 | 1 | 0.15420 | 0.15396 |
| 11.82 | 52.50 | 0.874 | 0.15387 | 0.15341 |

| No Elo | 0.56115 | - | 0.17881 | 0.18188 |
| 0 | 0.61525 | 1 | 0.17256 | 0.19258 |
| 0.11702 | 0 | 1 | 0.16348 | 0.16141 |
| 0.12888 | 0.61560 | 1 | 0.15424 | 0.15397 |
| 0.14781 | 0.62004 | 0.86536 | 0.15389 | 0.15334 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).