1. Introduction

Autonomous vehicles (AVs) are envisioned to become integral to future transportation systems, offering numerous benefits such as improved mobility, reduced emissions, and enhanced safety by minimizing human-related errors [1,2,3]. Additionally, AVs offer users the opportunity to engage in non-driving-related tasks (NDRTs) while being transported, thereby transforming the concept of private vehicle driving [4]. To better understand the benefits, it is essential to recognize that each automation level has distinct characteristics, allowing for specific advantages. SAE International’s “Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles” provides a well-known categorization of automation, dividing autonomous driving into six levels. While Levels 0 to 2 require human drivers to monitor and control the vehicle, Levels 4 and 5 presuppose high and full automation, respectively. Between them, Level 3 refers to conditional automation, often referred to as semi-autonomous driving, which requires the driver to take vehicle control in situations unmanageable by the autonomous system [5]. At every level of automation, AVs benefit from automation capabilities but also face challenges that could potentially undermine their benefits.

Semi-autonomous vehicles (Level 3) have attracted attention for their potential to allow interaction between drivers and autonomous systems [2,6,7]. This level of automation has advantages and disadvantages, making it a contentious design choice. On the positive side, interaction with semi-autonomous vehicles enhances their acceptance, addressing one of the key cognitive barriers to widespread adoption [8,9,10,11]. However, requiring drivers to be ready to take over control conflicts with one of the main benefits of AVs: allowing drivers to engage in non-driving-related tasks. Asking users to remain alert throughout the ride due to potential critical situations negatively impacts their experience, ultimately reducing AV acceptance [8]. Thus, the primary issue with such vehicles is often categorized as the "out-of-the-loop" problem. The problem is defined as the absence of appropriate perceptual information and motor processes to manage the driving situation [12,13]. The out-of-the-loop problem occurs when sudden take-over requests (TORs) by the vehicle disrupt drivers’ motor calibration and gaze patterns, ultimately delaying reaction times, deteriorating driving performance, and increasing the likelihood of accidents [12,13,14,15]. Appropriate interface designs that facilitate driver-vehicle interaction and prepare drivers to take over control can mitigate the out-of-the-loop problem.

To ensure a safe and efficient transition from autonomous to manual control, it is crucial to provide drivers with appropriate Human-Machine Interfaces (HMIs) that bring them back into the loop. Inappropriate or delayed responses to TORs can lead to accidents that pose considerable harm to drivers, passengers, and other road users. HMIs that provide appropriate warning signals can facilitate this transition, making it faster and more efficient by enhancing situational awareness and performance [1,3,16,17]. For instance, multimodal warning signals allow users to perform non-driving-related tasks while ensuring their attention can be quickly recaptured when necessary, thereby preventing dangerous driver behavior [18]. Auditory, visual, and tactile warning signals convey semantic and contextual information about the driving situation that is crucial when retrieving control [18,19,20]. The process of taking control of the vehicle involves several information processing stages, including the perception of stimuli (visual, auditory, or tactile), cognitive processing of the traffic situation, decision-making, resuming motor readiness, and executing the action [17,21,22]. Effective multimodal warning signals play an indispensable role in moderating information processing during take-over by enhancing situational awareness and performance.

Situational awareness and reaction time are two critical factors within the process of taking over control. Situational awareness is defined as "the perception of elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future" [23]. According to this definition, the perception of potential threats and their comprehension constitute the first stages of information processing, where the driver’s attention is captured and the context of the critical situation is understood [18,23]. A unimodal or multimodal signal that is able to convey urgency can already have an impact at these early stages [18,24]. For instance, non-verbal auditory signals have been shown to enhance perceived urgency and draw attention back to the driving task [25,26,27]. Similarly, visual warnings alone or combined with auditory signals are successful in retrieving attention and increasing awareness of a critical situation [20,25,28]. Besides situational awareness, reaction times are commonly used metrics to evaluate drivers’ behavior during TOR. In general, reaction time encompasses the entire take-over process, from perceiving the stimuli (perception reaction time) to performing the motor action (movement reaction time) [22,29]. Differentiating between these stages is crucial for calculating the effect of a warning signal on reaction time. Divergent results in reaction time studies have revealed that the method of calculation should be specified precisely [22]. Perception reaction time is defined as the time required to perceive the stimuli, cognitively process the information, and make a decision. Movement reaction time is the time needed to perform the motor action [29]. While perception reaction time can be influenced by the modality and characteristics of a signal, movement reaction time is driven more by the complexity of the situation and the expected maneuver [22,30]. As a result, the visual modality can affect movement reaction times by alerting the driver while simultaneously providing complex spatial information, such as distance and direction [31]. Understanding the roles of situational awareness and reaction times in the take-over process, and the effects of warning signals on each of them, allows us to determine when and where different types of signals are beneficial or detrimental to ensure a safer transition during TORs.
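The two reaction time components defined above can be written as a simple decomposition. This is only a sketch: the timestamps $t_0$ (onset of the warning signal or critical event), $t_d$ (moment the decision is made), and $t_m$ (moment the motor action is performed) are illustrative symbols, not notation taken from the cited studies.

```latex
RT_{\text{total}}
  = \underbrace{(t_d - t_0)}_{\text{perception reaction time}}
  + \underbrace{(t_m - t_d)}_{\text{movement reaction time}}
  = t_m - t_0
```

Because $t_d$ is not directly observable, studies that report only $t_m - t_0$ and studies that approximate $t_d$ with a behavioral proxy are not measuring the same quantity, which is one reason the method of calculation matters.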

In addition to safe transitions that facilitate the integration of AVs into everyday life, acceptance factors play a crucial role in determining the feasibility of such integration. Increasing user acceptance can be effectively achieved through collaborative control and shared decision-making strategies, such as TORs [7,8,32]. However, providing TORs in semi-autonomous vehicles has a dual effect on their acceptance. While such requests can increase trust through enhanced interaction, they also induce anxiety during the take-over process [8,33]. Therefore, measuring perceived feelings of anxiety and trust is essential when studying conditional AVs. Subjective measures, like acceptance questionnaires, have been used to determine acceptance, with recent examples specifically designed for AVs. The Autonomous Vehicle Acceptance Model (AVAM) provides a multi-dimensional approach to quantifying acceptance aspects across different levels of automation [34].

The measuring methods used to understand drivers’ situational awareness and reaction times during TOR are as crucial as their definition. Differences in measuring methods lead to incommensurable results that make reproducibility difficult [35,36]. For instance, studies have used different metrics to understand drivers’ situational awareness and reaction time, including gaze-related reaction time, gaze-on-road duration, hands-on-wheel reaction time, actual intervention reaction time, and left-right car movement time [17,35,37,38]. As a consequence, most research on driver behavior during critical events has produced mixed results. Additionally, most studies have been conducted in risk-free simulated environments due to the dangerous nature of the situations [39,40], usually in laboratory-based setups that are costly, space-consuming, and lacking in realism. As a result, research has questioned the extent to which findings under such conditions generalize to the real world [41]. One of the main reasons is that participants in conventional driving simulations remain conscious of their surroundings, reducing the perceived realism of the driving scenario. For results to be generalizable to the real world, it is particularly important to simulate driving conditions that elicit natural driving behavior, including realistic ambience, increased workload, prolonged driving, meaningful signals, various behavioral measures, and a diverse population sample [41,42]. To mitigate these limitations, virtual reality (VR) has been proposed as an inexpensive and compact solution that provides a greater feeling of immersion [43,44,45,46]. Unlike conventional driving simulations, VR offers a more immersive driving experience, allowing researchers to isolate and fully engage drivers in critical situations. By enhancing perceived realism, VR makes simulated events feel more real [47,48], resulting in driver behavior that is closer to real life and findings that are more ecologically valid than those obtained through conventional driving simulations [49,50]. In addition, VR-based driving simulations offer other advantages that make them preferable to traditional systems, including integrated eye-tracking to collect visual metrics for understanding situational awareness [38,51], controllability and repeatability [52], as well as usability for education and safety training [53,54,55]. Hence, VR holds the potential to investigate drivers’ behavior in a safe, efficient, and reproducible manner, which is of particular interest when designing multimodal interfaces for future autonomous transport systems.

In this study we used VR to investigate the impact of signal modalities—audio, visual, and audio-visual—on situational awareness and reaction time during TOR in semi-autonomous vehicles. We collected quantitative data on both objective behavioral metrics (visual and motor behavior) and subjective experience (AVAM questionnaire) from a large and diverse population sample, encompassing varying ages and driving experience. We hypothesized that the presence of any warning signal (audio, visual, or audio-visual) would lead to a higher success rate compared to a no-warning condition. Specifically, we posited that the audio warning signal would enhance awareness of the critical driving situation, while a visual signal would lead to a faster motor response. Furthermore, we predicted that the audio-visual modality, by combining the benefits of both signals, would result in the fastest reaction times and the highest level of situational awareness. Additionally, we expected that seeing the object of interest, regardless of the warning modality, would directly improve the success rate. Finally, we expected a positive correlation between self-reported preferred behavior and actual behavior during critical events and hypothesized that the presence of warning signals would be associated with increased perceived trust and decreased anxiety levels in drivers. By examining drivers’ behavior under these conditions, we can gain a better understanding of the effect of signal modalities on performance.

4. Discussion

The aim of this experiment was to investigate the effect of unimodal and multimodal warning signals on reaction time and situational awareness during the take-over process by collecting visual metrics, motor behavior, and subjective scores in a VR environment. The results demonstrated distinct effects of audio and visual warning signals during the take-over process. The visual warning signal effectively reduced reaction time and increased dwell time on the object of interest, indicating enhanced situational awareness. In contrast, the audio warning signal increased situational awareness but did not affect reaction time. The multimodal (audio-visual) signal did not exceed the effectiveness of the visual signal alone, suggesting its impact is primarily due to the visual component rather than the audio. Additionally, the positive impact of both unimodal and multimodal signals on successful maneuvering during critical events demonstrates the complex moderating role of these modalities in decreasing reaction time and increasing situational awareness. The results indicate that when visual warnings are present, the negative effect of longer reaction time on success is lessened. In other words, visual warnings, by providing more robust cues, help mitigate the adverse impact of longer reaction time during take-over. Furthermore, the impact of dwell time on success is moderated by the presence of visual or audio-visual warning signals, possibly because the warnings offer sufficient information or cues, reducing the need for additional dwell time for successful maneuvering. Finally, the positive impact of providing unimodal and multimodal warning signals on reducing anxiety and enhancing trust was confirmed.
In conclusion, various signal modalities impact reaction time and situational awareness differently, thereby influencing the take-over process, while their effect on anxiety and trust components is evident but independent of situational awareness and reaction time.
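The moderating role of the visual warning described above can be illustrated with a logistic regression that includes an interaction term. This is a simplified sketch under stated assumptions, not the fitted model reported in this study: $V$ is a hypothetical indicator that equals 1 when a visual warning is present, $RT$ is the reaction time, and the $\beta$ coefficients are placeholders.

```latex
\operatorname{logit} P(\text{success})
  = \beta_0 + \beta_1 V + \beta_2\, RT + \beta_3\, (V \times RT)
```

In this form, the effect of reaction time on success is $\beta_2$ without a visual warning and $\beta_2 + \beta_3$ with one. A negative $\beta_2$ combined with a positive $\beta_3$ would correspond to the pattern described above, in which the negative effect of longer reaction time on success is lessened when visual warnings are present. An analogous interaction term could be written for dwell time.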

Despite conducting our experiment in a realistic VR environment, this study has some limitations. Although past studies have suggested that it is important to lower the frequency of critical events [41], here we used 12 critical events in succession. However, the continuous driving session designed in this study was relatively long-lasting in order to compensate for this. Another issue is that being in a VR environment for a prolonged period of time can induce motion sickness [63], which can negatively influence the participant’s experience. Yet, the wooden car was designed to provide a higher feeling of immersion to mitigate these side effects. For this research study, we used specific types of warning signals: the audio signal was speech-based rather than a generic tone, and the visual signal was sign-based with potential hazards highlighted. Given this design, our findings may not be directly comparable with other studies in which different types of signals were used [64,65]. Regarding the reaction time calculation, we used a particular approach in which three different types of reactions (steering, braking, or acceleration) were accounted for. In contrast, most studies usually consider only one type of reaction or a combination of them when calculating reaction time [66]. Similarly, when calculating dwell time of gaze, we accounted for the entire event duration rather than the duration until the first gaze or actual response [35,38,67]. Thus, for reproducibility of the results, the aforementioned limitations should be considered. Despite these limitations, reaction time and situational awareness are commonly used metrics for evaluating the effect of warning signals on take-over processes in AVs [37], and the immersive VR environment made it possible to assess ecologically valid driver responses over a large and diverse population sample [49,50,68].
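The two measurement choices discussed above can be sketched in a few lines of Python. This is a minimal illustration and not the analysis code used in this study: the `CriticalEvent` record, the rule of taking the earliest of the three reaction types, and the fixed gaze sampling period are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CriticalEvent:
    """Hypothetical record of one critical event (all times in seconds)."""
    start: float
    end: float
    steering_onset: Optional[float] = None
    braking_onset: Optional[float] = None
    acceleration_onset: Optional[float] = None


def reaction_time(event: CriticalEvent) -> Optional[float]:
    """Time from event start to the earliest reaction of any type.

    Assumption: the first steering, braking, or acceleration onset inside
    the event window counts as the reaction. Returns None if none occurred.
    """
    candidates = (event.steering_onset, event.braking_onset, event.acceleration_onset)
    onsets = [t for t in candidates if t is not None and event.start <= t <= event.end]
    return min(onsets) - event.start if onsets else None


def dwell_time(
    gaze_samples: List[Tuple[float, bool]],
    event: CriticalEvent,
    sample_period: float,
) -> float:
    """Total gaze time on the object of interest over the entire event.

    gaze_samples holds (timestamp, on_object) pairs recorded at a fixed
    sampling period. Samples outside the event window are ignored.
    """
    hits = sum(
        1 for t, on_object in gaze_samples
        if on_object and event.start <= t <= event.end
    )
    return hits * sample_period


# Made-up values for illustration only.
event = CriticalEvent(start=10.0, end=20.0, steering_onset=12.5, braking_onset=11.0)
gaze = [(9.5, True), (10.0, True), (10.5, False), (11.0, True), (11.5, True), (20.5, True)]

print(reaction_time(event))                        # 1.0 (braking came first)
print(dwell_time(gaze, event, sample_period=0.5))  # 1.5 (three samples inside the event)
```

Restricting the count to samples before the first response, instead of the whole event window, would yield a different dwell time from the same recording, which is why such choices need to be reported when comparing results across studies.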

The take-over process consists of several cognitive and motor stages, from becoming aware of a potential threat to the actual behavior leading to complete take-over [17,21,69]. This procedure is influenced by various factors, and the effect of signal modality can be examined at each stage. Broadly, this procedure can be divided into two main phases, an initial and a later phase, which overlap with situational awareness and reaction time, respectively. In the initial stage of the take-over process, stimuli such as warning signals alert the driver about the critical situation. The driver perceives this message and cognitively processes the transferred information [17,22]. Endsley’s perception and comprehension components of situational awareness align with the initial stages of perceiving and cognitively processing information during the early stage of the take-over process [23,70]. Despite the differences between auditory and visual signals [24,71], the current study aligns with previous findings demonstrating the ability of unimodal and multimodal signals to enhance situational awareness [18], thereby fostering the initial stage of the reaction time. While situational awareness defines the early stages of cognitive and motor processes during take-over, reaction time establishes the later stages.

Following the initial stage, the subsequent stage involves decision-making and selecting an appropriate response, which is heavily dependent on previous driving experience. Thereafter, the driver’s motor actions are calibrated and transitioned to a ready phase, involving potential adjustments to the steering wheel or pedals. The process culminates with the execution of the reaction required to manage a critical situation [17,22]. By definition, reaction time comprises two steps: comprehension and movement [29]. Comprehension reaction time overlaps with the concept of situational awareness, while movement reaction time refers more to the later stages of the take-over process, including calibration and actual motor action. Our calculation of reaction time focused on measuring the actual hand or foot movements from the start of a critical event (including driver calibration) to the movement reaction time. Our findings confirm that both visual and audio-visual signals reduce reaction time to the same extent. The audio modality, however, was not able to extend its effect to the reaction time phases. Although the multimodal (audio-visual) signal helps decrease reaction time, it was not a significant moderator of reaction time in ensuring success. This is explainable when considering the late stages of the take-over process. Before movement calibration and actual action, there is a decision-making stage where visual warning signals are crucial [17,22]. A fundamental characteristic of visual warning signals is the type of information they convey [31]. Indeed, visual warnings can provide additional information in comparison to auditory signals, including spatial information (e.g., depth and speed) and distractor information (e.g., type, kind) [24,31,72]. This complex information enhances decision-making on the more appropriate type of reaction for the situation, compensating for the faster effect of auditory signals. While audio signals impact the early stages of take-over, visual signals provide valuable information that assists throughout the process.

Enhancing user experience is a critical aspect in the development and adoption of AV technology [36]. Therefore, the ways users subjectively perceive each aspect of the technology should be developed alongside technical advancements. Our experiment showed that all types of signal modalities could create a calmer and more trustworthy experience for the driver of an AV. However, we were unable to connect this finding to the cognitive process drivers undergo during the take-over. Further studies should extend subjective measurements to encompass the cognitive and behavioral processes that arise during the drive.

Author Contributions

Conceptualization, A.H., S.D., and J.M.C.; methodology, A.H., S.D., and J.M.C.; software, A.H., S.D., J.M.C., F.N.N. and M.A.W.; validation, A.H., S.D., J.M.C., F.N.N. and M.A.W.; formal analysis, S.D.; investigation, F.N.N., M.A.W.; resources, F.N.N., M.A.W.; data curation, A.H., J.M.C.; writing – original draft preparation, S.D., A.H. and J.M.C.; writing – review and editing, A.H., S.D. and J.M.C.; visualization, S.D. and J.M.C.; supervision, G.P. and P.K.; project administration, S.D., F.N.N. and M.A.W.; funding acquisition, G.P. and P.K. All authors have read and agreed to the published version of the manuscript.

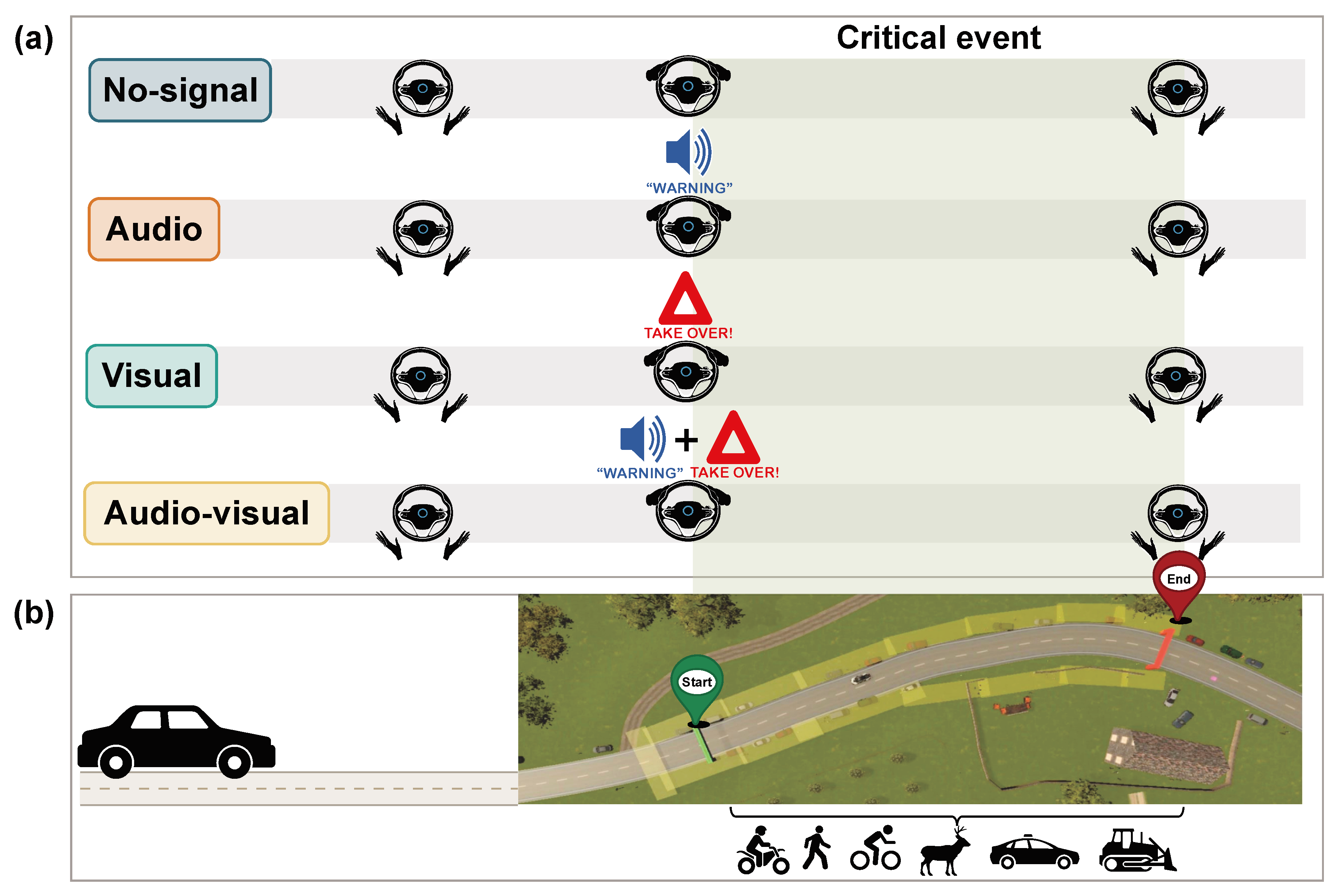

Figure 1.

(a) The figure illustrates the four experimental conditions during the semi-autonomous drive, with the green area depicting a critical event from start to end. During critical events, participants transitioned from “automatic drive” (hands off wheel) to “manual drive” (hands on wheel). In the no-signal condition, no signal prompted participants to take over vehicle control. In the audio condition, they would hear a "warning" sound, and in the visual condition, they would see a red triangle on the windshield. In the audio-visual condition, participants would hear and see the audio and visual signals together. (b) Aerial view of the implementation of a critical traffic event in the VR environment.

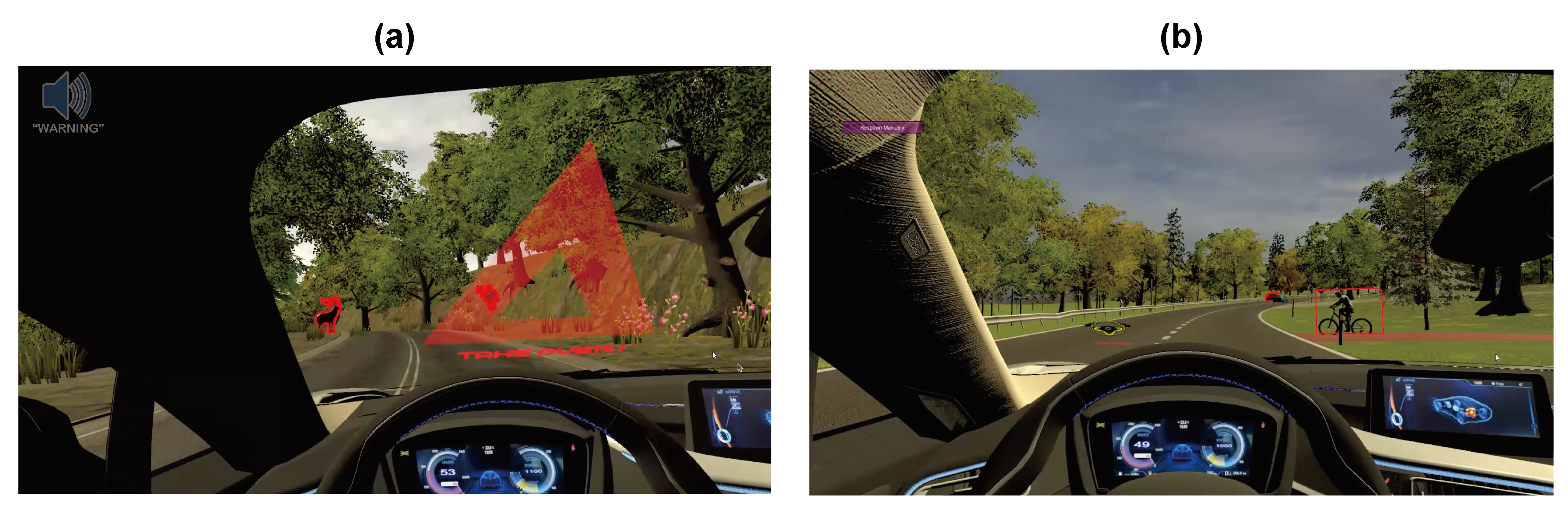

Figure 2.

Exemplary images of the driver’s view in the audio-visual condition during two different critical events. (a) Auditory and visual feedback alerting the driver at the beginning of a critical event, and the view of the windshield after take-over for the subsequent drive (b). The “warning” logo on the top left was not visible to participants.

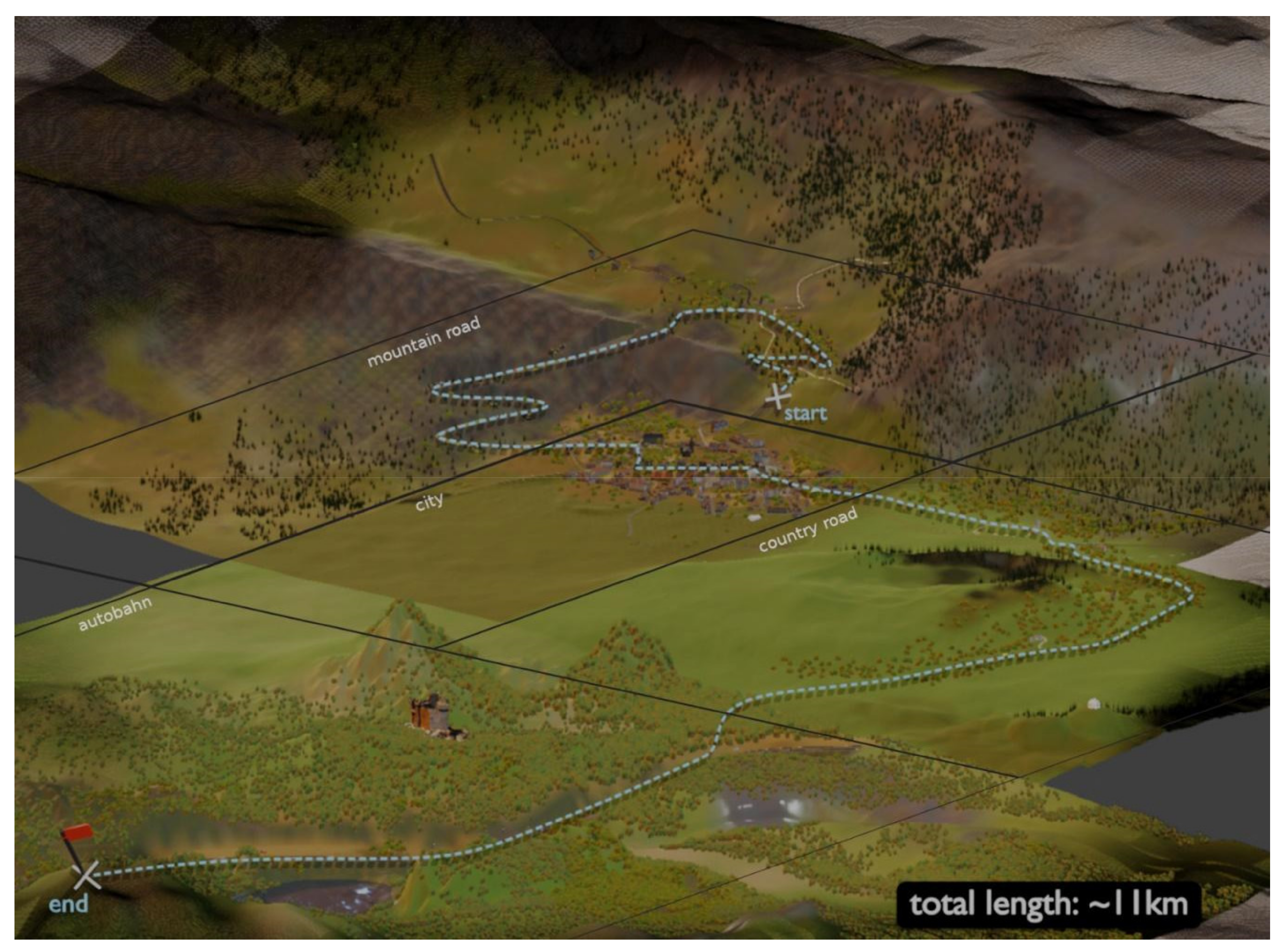

Figure 3.

Bird's-eye view of the LoopAR road map from start to end. Image from [57].

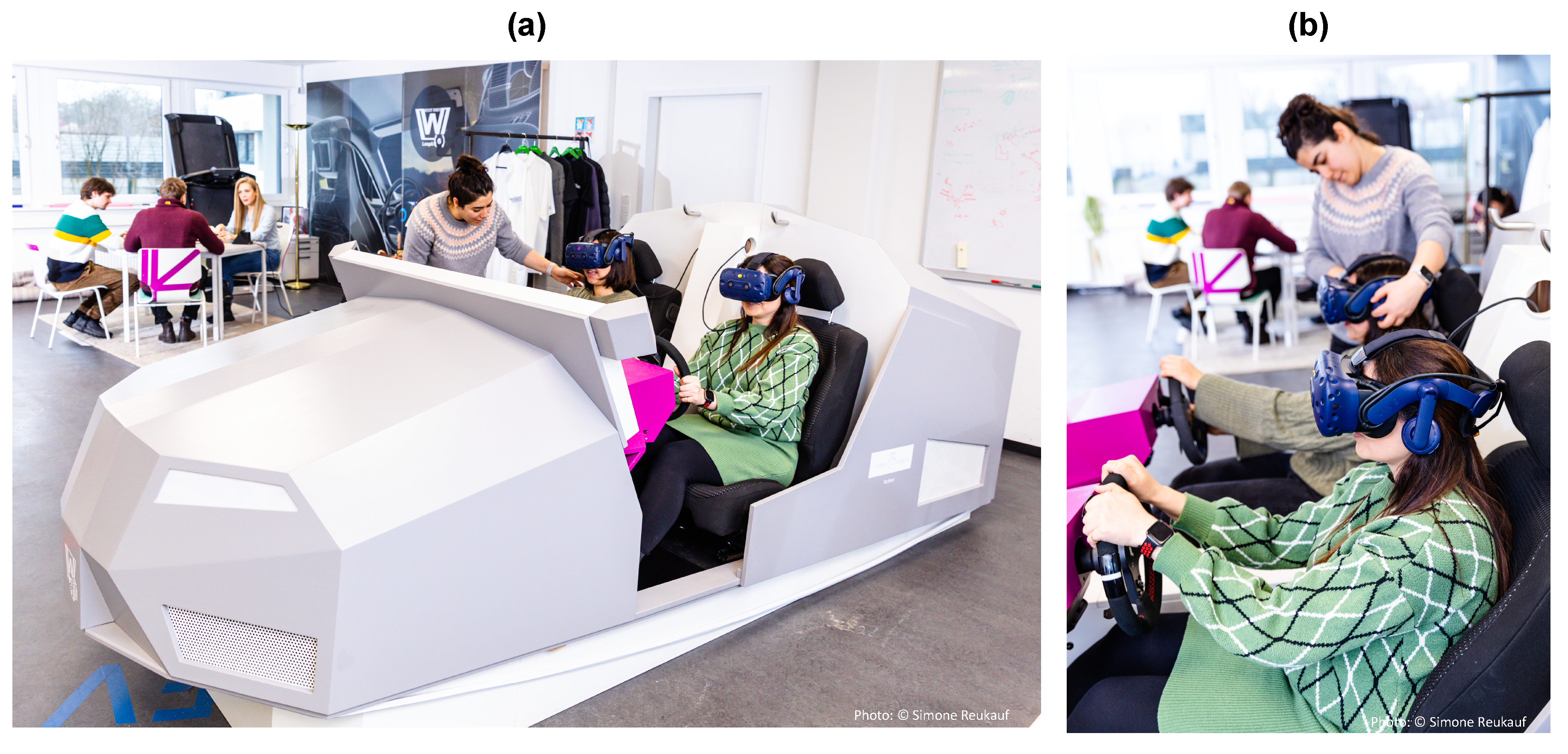

Figure 4.

Two figures illustrating the experimental setup during measurement. (a) Two participants sitting on the wooden car simulator wearing the VR Head Mounted Display. (b) Experimenter ensuring correct positioning of the Head Mounted Display and steering wheel.

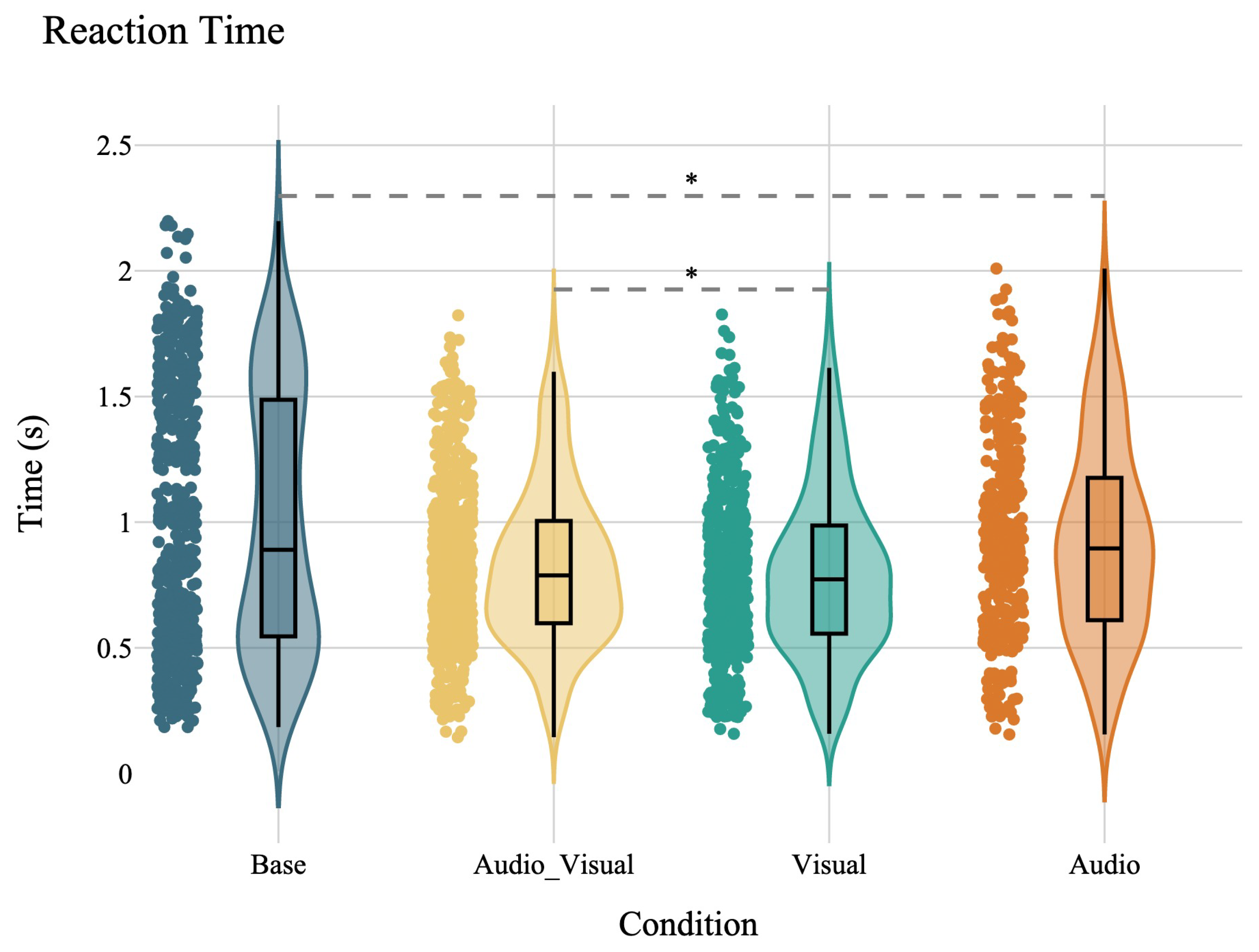

Figure 5.

The plot depicts the distribution of reaction times across different conditions: base, audio_visual, visual, and audio. The y-axis shows the reaction time in seconds, while the x-axis lists the different conditions. The violin plots illustrate the density of each variable, with the box plots inside showing the median. The scatter points overlay the distribution, indicating individual data points. The gray dashed line connects the conditions that are not significantly different.

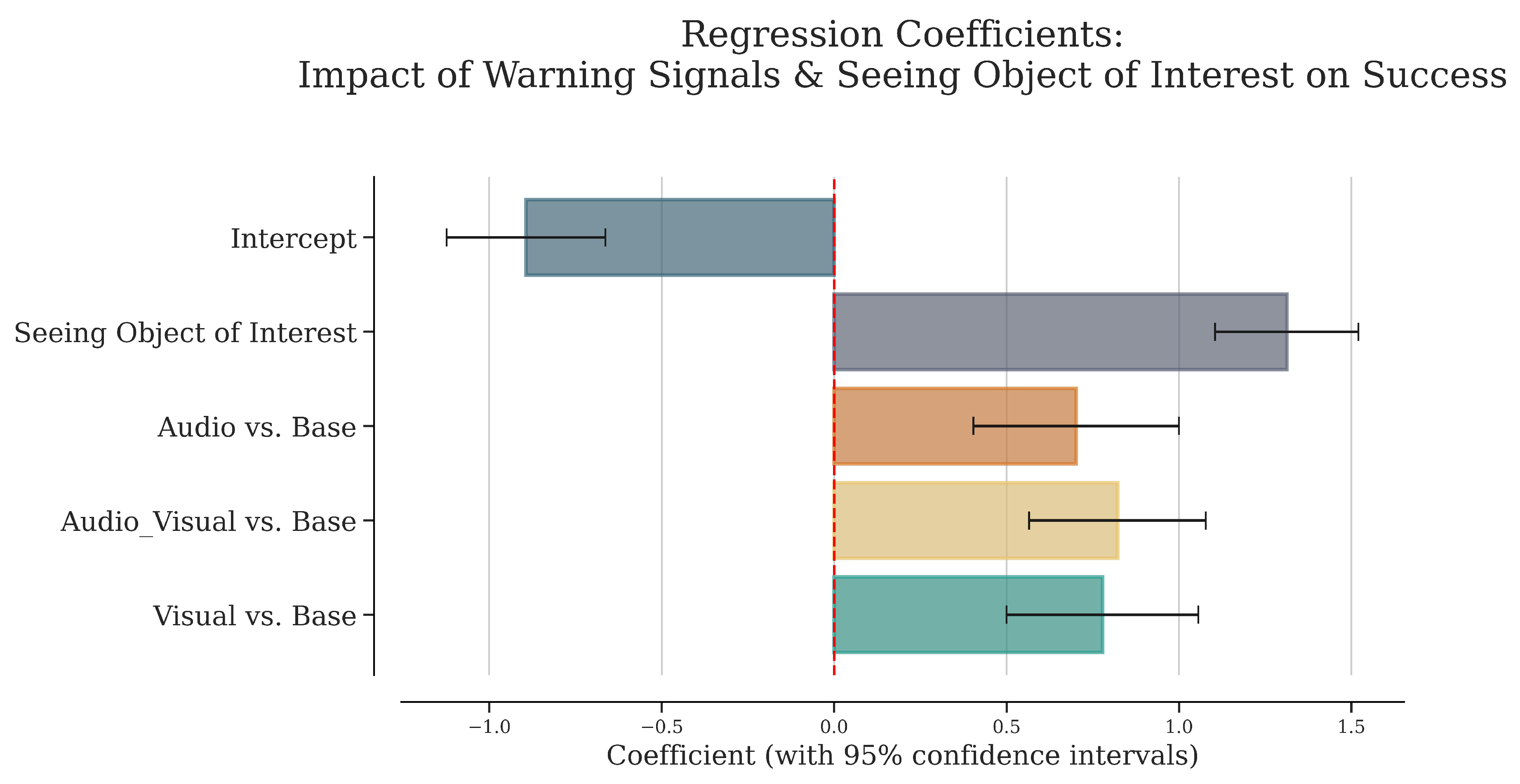

Figure 6.

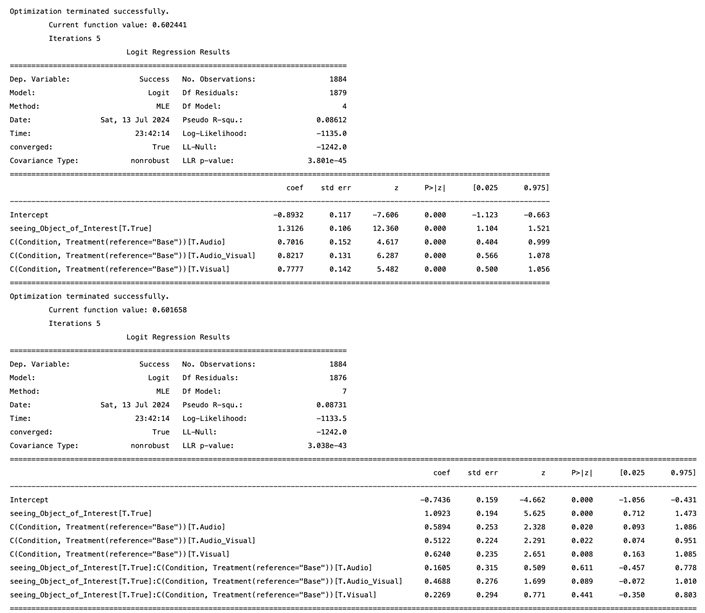

The plot represents the coefficient estimates of the effect of warning signals and seeing objects of interest on success. The Intercept represents the reference level (base condition). The horizontal x-axis represents the regression coefficient, which shows the strength and direction of the relationship between the predictors (each type of warning signal and seeing objects of interest) and the probability of success. The vertical y-axis lists the warning signal types. Each bar represents the estimated coefficient for the predictor. The horizontal lines through the bars (error bars) represent the 95% confidence intervals for the coefficient estimates. The red dashed line at 0 represents the null hypothesis (coefficient = 0).

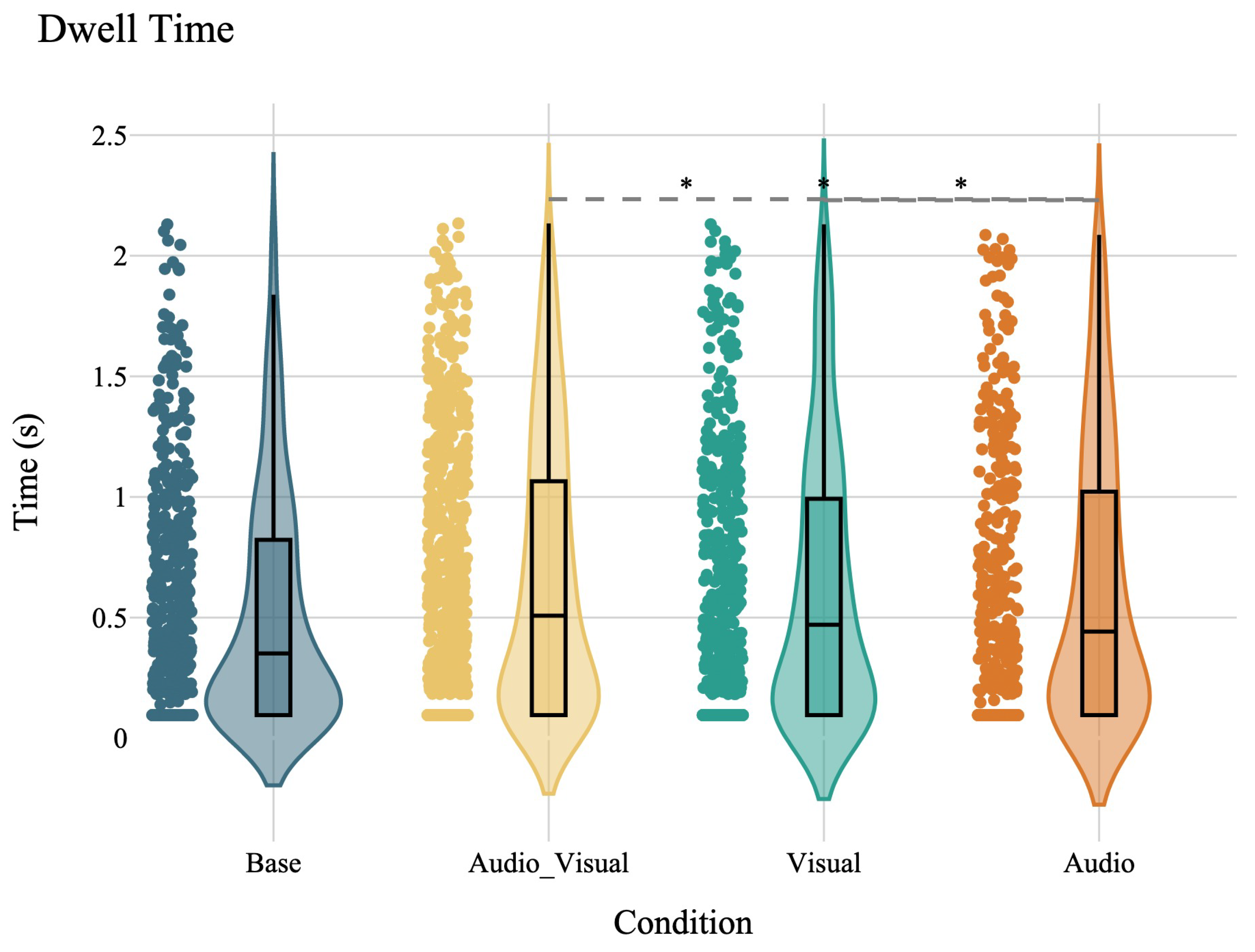

Figure 7.

The plot depicts the distribution of dwell time across different conditions: base, audio_visual, visual, and audio. The y-axis shows the dwell time in seconds, while the x-axis lists the different conditions. The violin plots illustrate the density of each variable, with the box plots inside showing the median. The scatter points overlay the distribution, indicating individual data points. The gray dashed line connects the conditions that are not significantly different.

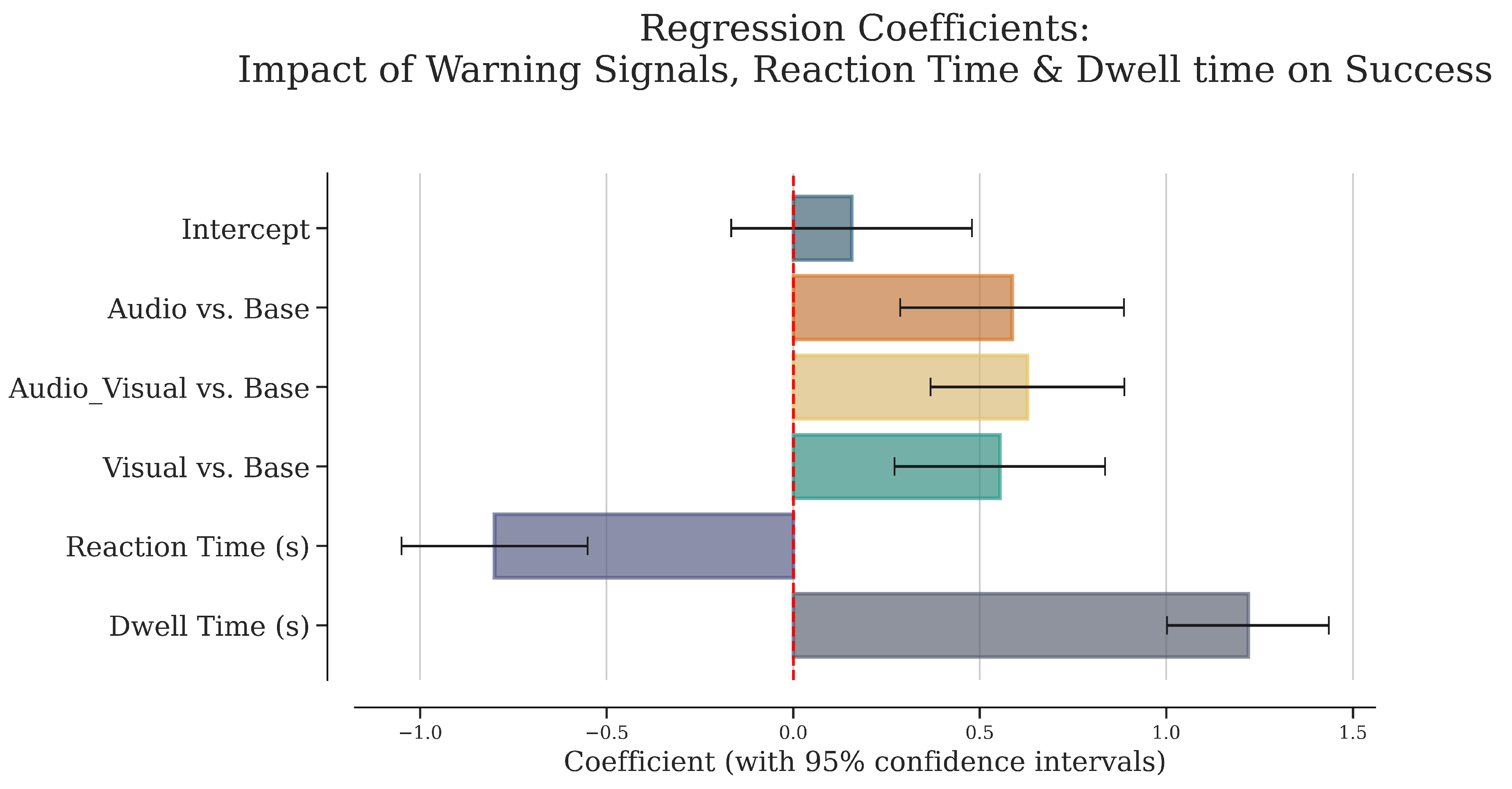

Figure 8.

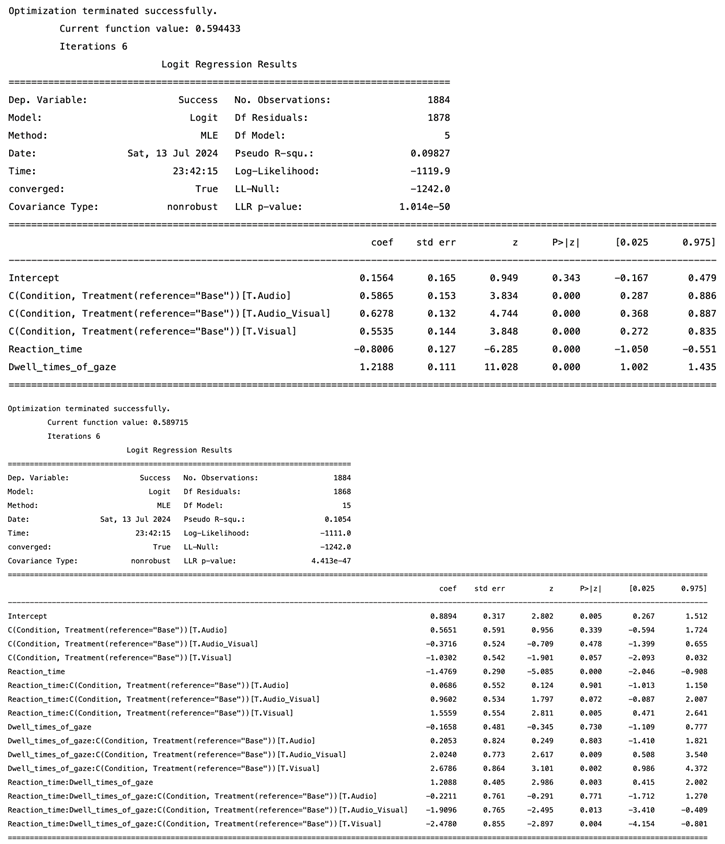

The plot represents the coefficient estimates of the effects of warning signal, reaction time, and dwell time on success. The Intercept represents the reference level (base condition). The horizontal x-axis represents the regression coefficient, which shows the strength and direction of the relationship between each predictor and the probability of success. The vertical y-axis lists the warning signal types. Each bar represents the estimated coefficient for the predictor. The horizontal lines through the bars (error bars) represent the 95% confidence intervals for the coefficient estimates. The red dashed line at 0 represents the null hypothesis (coefficient = 0).

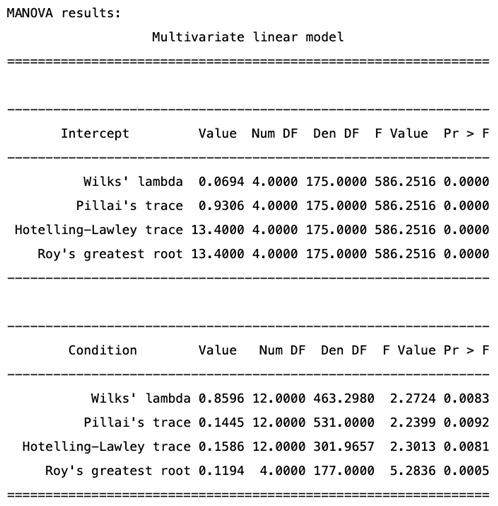

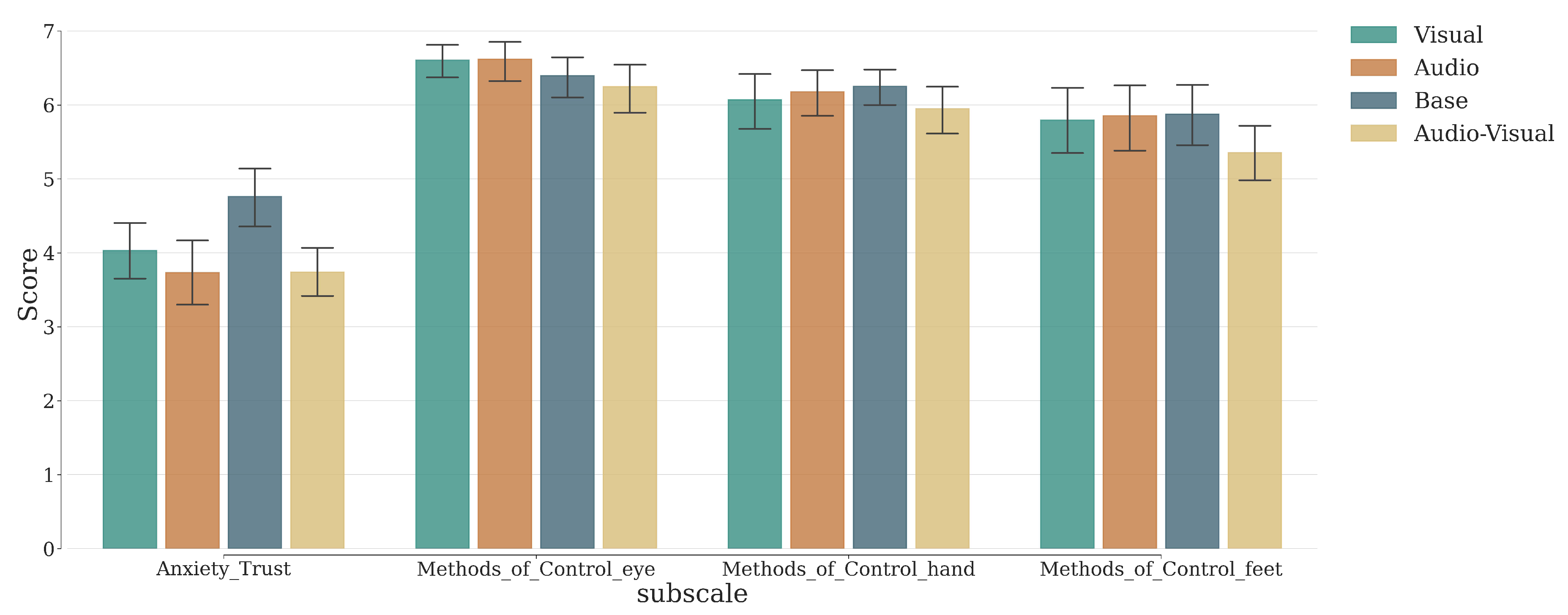

Figure 9.

Anxiety_Trust and Method of Control Analysis Results. The plot shows the results of post-hoc tests for each dependent variable (questionnaire subscales), comparing the mean scores of each level of the independent variable (condition). The error bars represent the standard error of the mean (SEM), illustrating the variability of each mean score across different conditions.

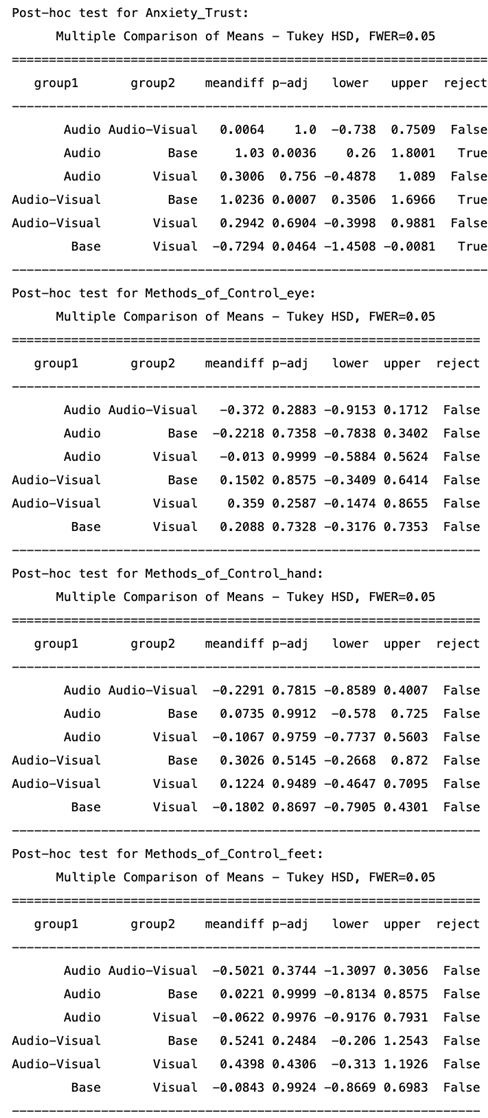

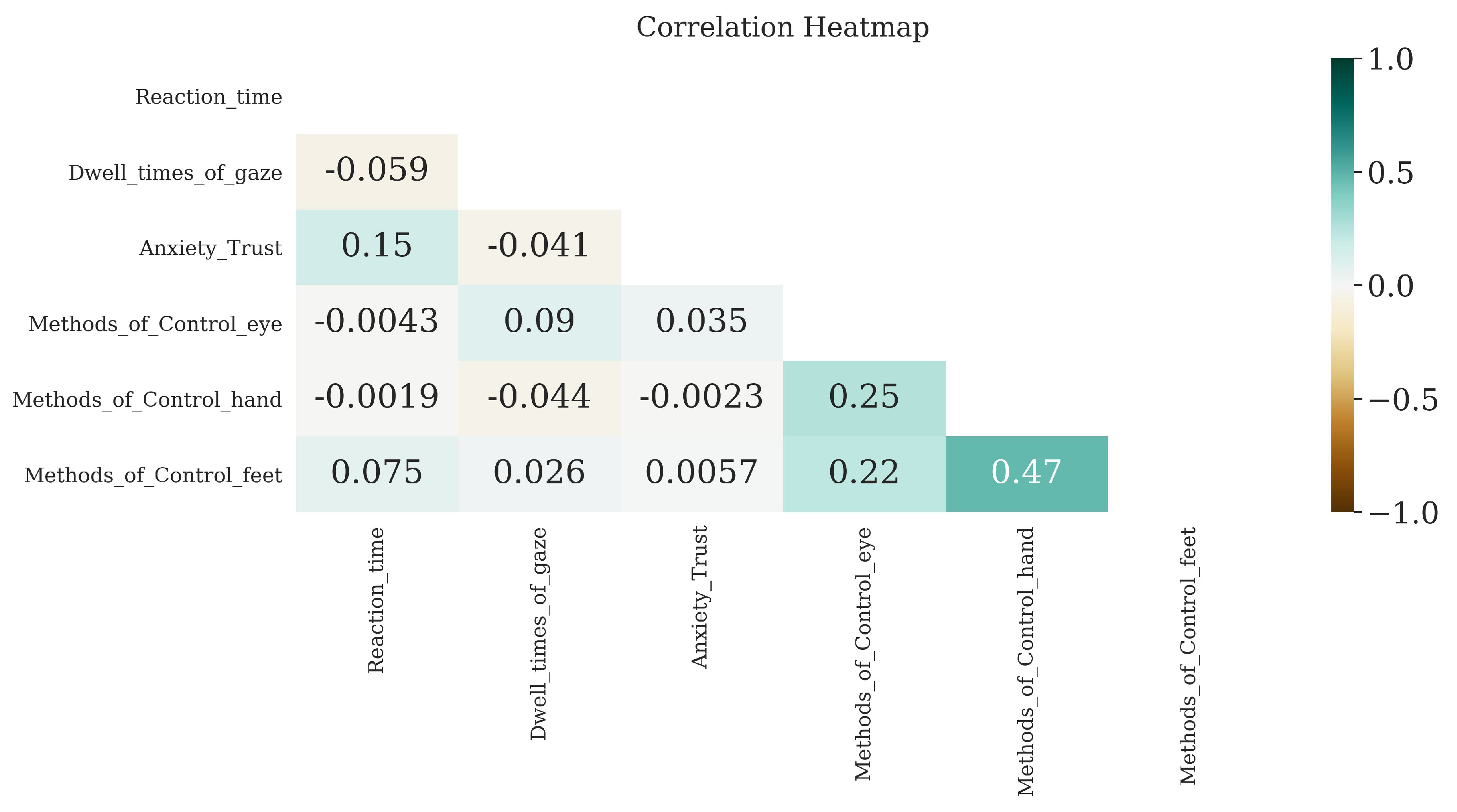

Figure 10.

Correlation matrix. The plot shows the result of the Spearman rank correlation between reaction time, dwell time and gaze hits count with the questionnaire subscales. Dwell times with Anxiety_Trust (, ), with Methods_of_Control_eye (, ), with Methods_of_Control_hand (, ) and with Methods_of_Control_feet (, ).

Table 1.

Exploratory factor analysis result.

| Dimension | Items | Factor 1 | Factor 2 | Communality |
| --- | --- | --- | --- | --- |
| Anxiety_Trust | Anxiety_1 | .77 | .11 | .60 |
| | Anxiety_2 | .66 | -.12 | .45 |
| | Anxiety_3 | .45 | -.01 | .20 |
| | Perceived_safety_1 | .78 | -.00 | .61 |
| | Perceived_safety_2 | -.78 | -.12 | .62 |
| | Perceived_safety_3 | -.69 | -.05 | .47 |
| Methods of Control | Eye | .02 | .38 | .15 |
| | Hand | .03 | .66 | .44 |
| | Feet | .00 | .57 | .32 |