Submitted: 26 July 2024

Posted: 26 July 2024


Abstract

Keywords:

1. Introduction

1.1. Statement of the Problem

1.2. Research Aim and Objectives

- To identify the risk factors that influence accident severity in traffic accidents in the United Kingdom.

- To compare the performance of Random Forest and Logistic Regression in the prediction of traffic accident fatalities using an accident dataset from the United Kingdom.

- To determine whether traffic accident fatalities differ by road type, light conditions, weather conditions, and road surface conditions, using an accident dataset from the United Kingdom.

2. Theoretical Background

2.1. Logistic Regression

2.1.1. Binary Logistic Regression

2.1.2. Multinomial Logistic Regression

2.1.3. Ordinal Logistic Regression

2.1.4. Baseline Category Logit Model

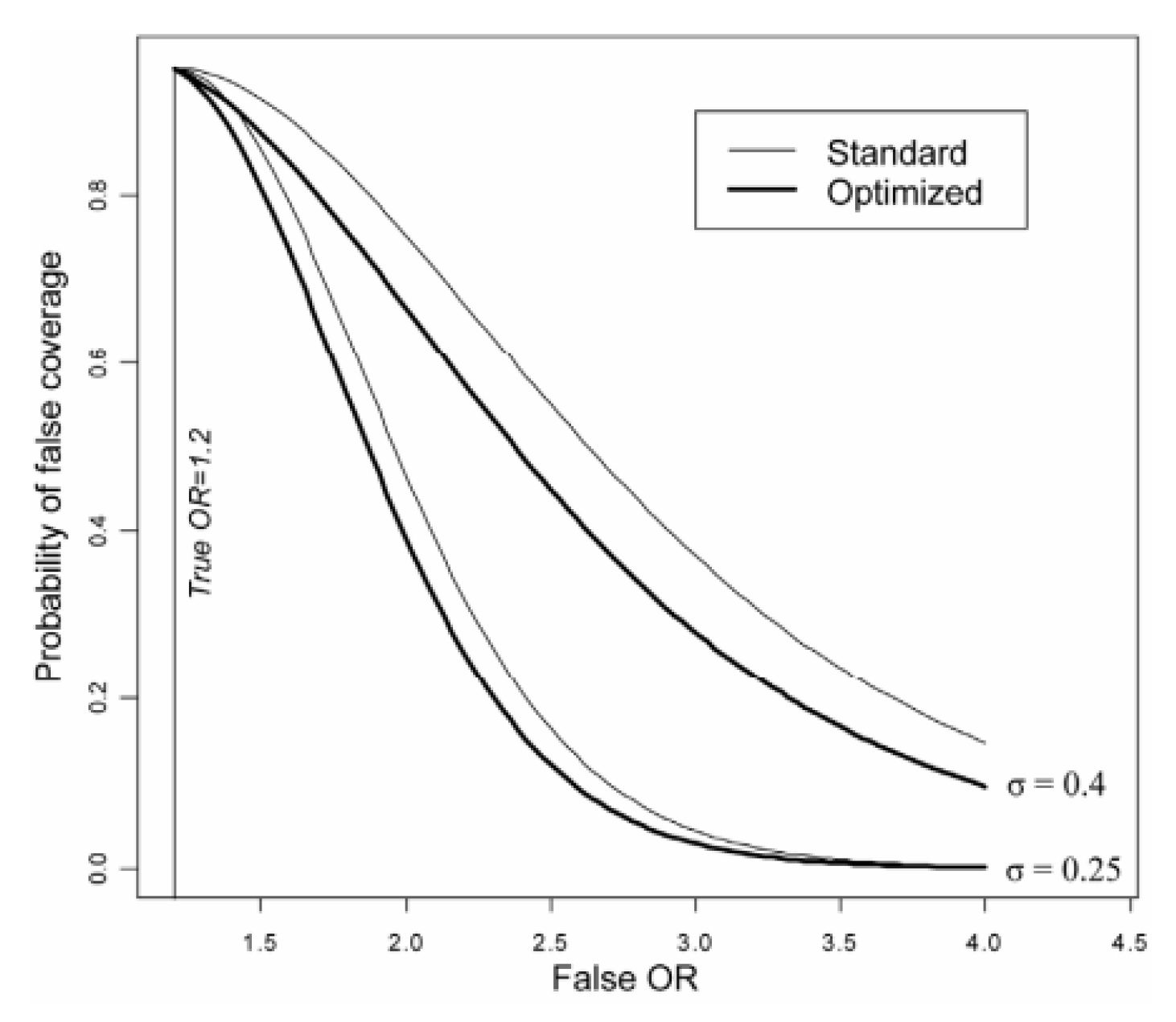

2.1.5. Confidence Intervals for Logistic Regression

2.2. Model Adequacy Checks

2.2.1. The Coefficient of Determination

2.2.2. Wald Test

2.2.3. Deviance

2.3. Akaike Information Criterion

2.4. Other Statistical Methods

2.4.1. Bayes Theorem for Classification

2.4.2. Linear Discriminant Analysis

2.5. Machine Learning Methods

2.5.1. Random Forest

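- $m_{try} \in \{1, \dots, p\}$, which is the number of preselected directions for splitting.

- $a_n \in \{1, \dots, n\}$, which is the number of sampled data points in each tree.

- $t_n \in \{1, \dots, a_n\}$, which is the number of leaves in each tree.

- Uniformly choose $a_n$ points in $\mathcal{D}_n$ without replacement.

- Set $\mathcal{P}_0 = \{[0,1]^p\}$, the partition associated with the root of the tree.

- For all $1 \le \ell \le a_n$, set $\mathcal{P}_\ell = \emptyset$.

- Set $n_{nodes} = 1$ and level $= 0$.

- While $n_{nodes} < t_n$: if $\mathcal{P}_{level} = \emptyset$, then level $=$ level $+ 1$.

- Otherwise, let $B$ be the first element in $\mathcal{P}_{level}$. If $B$ contains exactly one point, then $\mathcal{P}_{level} \leftarrow \mathcal{P}_{level} \setminus \{B\}$ and $\mathcal{P}_{level+1} \leftarrow \mathcal{P}_{level+1} \cup \{B\}$. If $B$ contains more than one point, it is split along the best of $m_{try}$ randomly selected directions, the two resulting cells are added to $\mathcal{P}_{level+1}$, and $n_{nodes}$ increases by one.

- Calculate the predicted value $m_n(t; \Theta_j, \mathcal{D}_n)$ at $t$, equal to the average of the $Y_i$ falling in the cell of $t$ in the partition $\mathcal{P}_{level} \cup \mathcal{P}_{level+1}$.

- Calculate the random forest estimate $m_{M,n}(t; \Theta_1, \dots, \Theta_M, \mathcal{D}_n)$ at the query point $t$.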

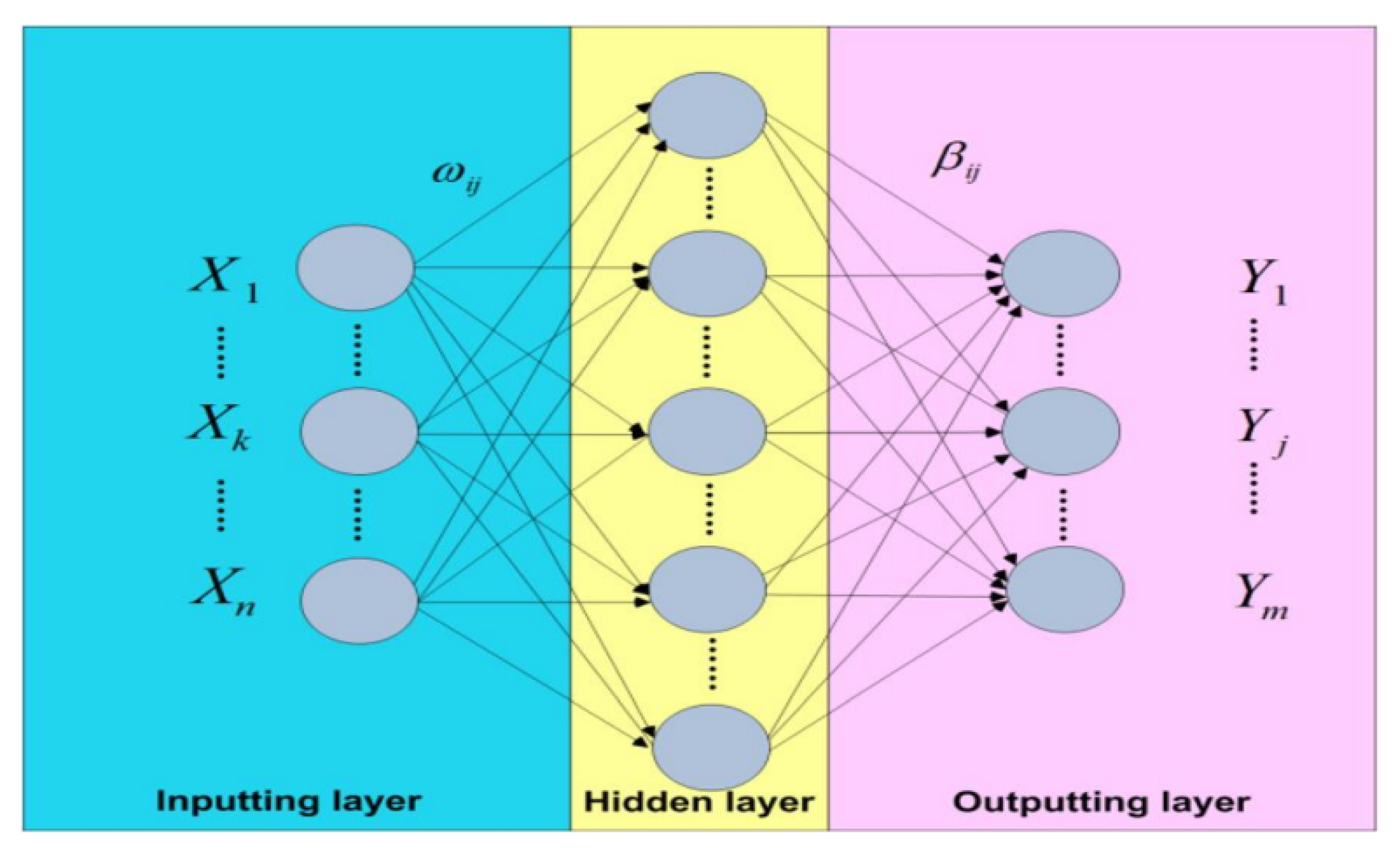

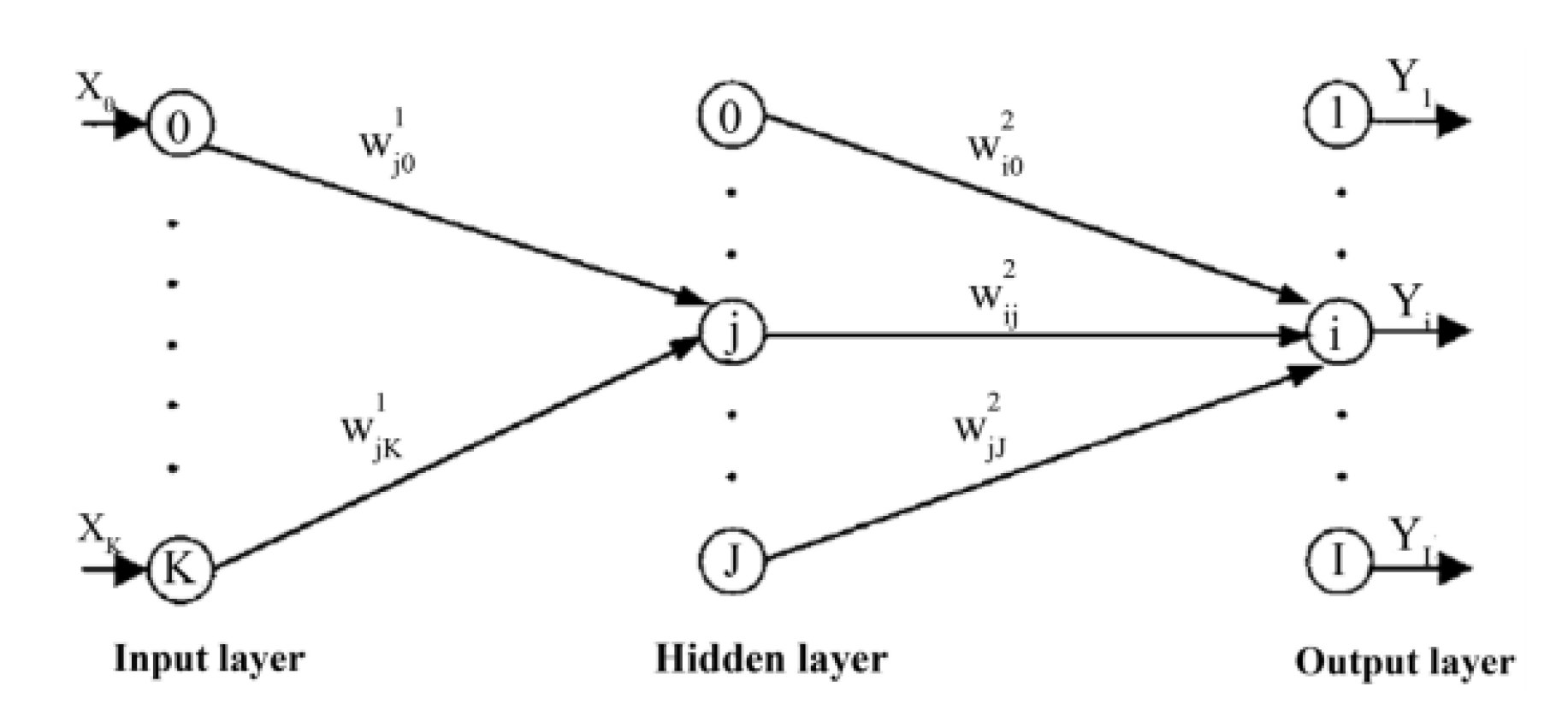

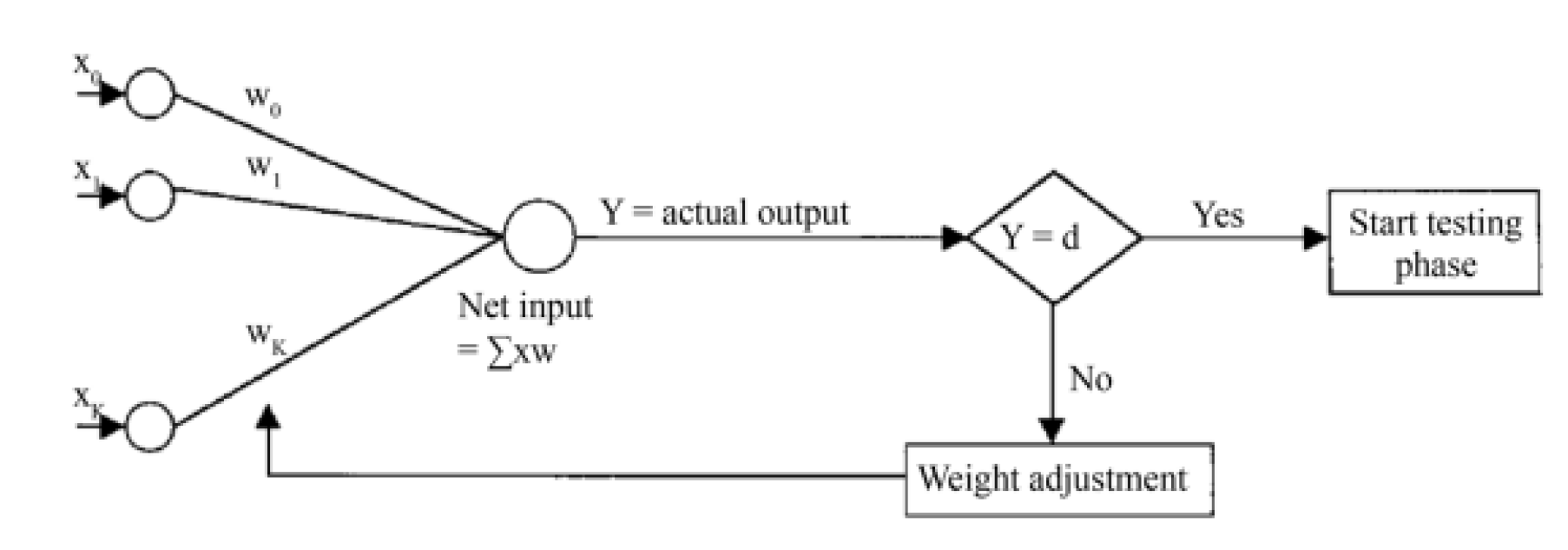
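
The tree-growing steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names `best_split`, `grow_tree`, `predict_tree`, and `forest_predict` are hypothetical, the parameter names `m_try` (directions tried per split), `a_n` (points sampled per tree), and `t_n` (maximum number of leaves) are assumed labels for the three quantities in the list, and the split rule is assumed to be the CART squared-error criterion.

```python
import random
from collections import deque
from statistics import mean


def best_split(cell, features):
    """Return (feature, threshold) minimising the summed squared error of the two children."""
    best = None
    for f in features:
        values = sorted({x[f] for x, _ in cell})
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [y for x, y in cell if x[f] <= thr]
            right = [y for x, y in cell if x[f] > thr]
            ml, mr = mean(left), mean(right)
            sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
            if best is None or sse < best[0]:
                best = (sse, f, thr)
    return None if best is None else (best[1], best[2])


def grow_tree(data, m_try, a_n, t_n, rng):
    """Grow one tree on a_n points drawn without replacement, stopping at t_n leaves."""
    p = len(data[0][0])
    root = {"cell": rng.sample(data, a_n)}
    queue, n_leaves = deque([root]), 1
    while queue and n_leaves < t_n:
        node = queue.popleft()  # first cell of the current level (breadth-first order)
        features = rng.sample(range(p), m_try)
        split = best_split(node["cell"], features) if len(node["cell"]) > 1 else None
        if split is None:  # single point or no valid cut: the cell stays a leaf
            continue
        f, thr = split
        node["feature"], node["threshold"] = f, thr
        node["left"] = {"cell": [(x, y) for x, y in node["cell"] if x[f] <= thr]}
        node["right"] = {"cell": [(x, y) for x, y in node["cell"] if x[f] > thr]}
        queue.extend([node["left"], node["right"]])
        n_leaves += 1
    return root


def predict_tree(node, x):
    """Average response of the sampled points that share the leaf cell of x."""
    while "feature" in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return mean(y for _, y in node["cell"])


def forest_predict(data, x, n_trees, m_try, a_n, t_n, seed=0):
    """Random forest estimate: the average of the individual tree predictions at x."""
    rng = random.Random(seed)
    return mean(predict_tree(grow_tree(data, m_try, a_n, t_n, rng), x) for _ in range(n_trees))


# Hypothetical toy data: one feature, response jumps from 0 to 10 at x = 0.5.
toy = [((i / 20,), 0.0 if i < 10 else 10.0) for i in range(20)]
low = forest_predict(toy, (0.1,), n_trees=25, m_try=1, a_n=15, t_n=2)
high = forest_predict(toy, (0.9,), n_trees=25, m_try=1, a_n=15, t_n=2)
print(low, high)
```

A first-in, first-out queue is used so that cells are visited level by level, mirroring the level counter in the listed steps.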

2.5.2. Artificial Neural Network

- $E(W)$ = the error function to be minimised,

- $W$ = the weight vector,

- $P$ = the number of training patterns,

- $I$ = the number of output nodes,

- $d_{pi}$ = the desired output of node $i$ when pattern $p$ is introduced to the MLP,

- $o_{pi}$ = the actual output of node $i$ when pattern $p$ is introduced to the MLP,

- $\Delta W$ = the change of the weight vector,

- $\eta$ = the learning parameter, and

- $\nabla E(W)$ = the gradient vector of $E$ with respect to the weight vector $W$.
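
The quantities defined in this list describe gradient-descent training. The sketch below assumes, since the formulas themselves are not recoverable from this section, that the error is the sum of squared differences between desired and actual outputs over all patterns and output nodes, and that the weight change is the negative gradient scaled by the learning parameter. To keep it short it uses a single linear layer rather than a full MLP, and all function names and the training patterns are hypothetical.

```python
def forward(W, x):
    """Outputs o_i = sum_j W[i][j] * x[j] of a single linear layer."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def error(W, patterns):
    """Error function: squared (desired - actual) summed over patterns and output nodes."""
    return sum((d_i - o_i) ** 2
               for x, d in patterns
               for d_i, o_i in zip(d, forward(W, x)))


def gradient(W, patterns):
    """Gradient of the error: dE/dW[i][j] = -2 * sum_p (d_pi - o_pi) * x_pj."""
    grad = [[0.0] * len(W[0]) for _ in W]
    for x, d in patterns:
        o = forward(W, x)
        for i, (d_i, o_i) in enumerate(zip(d, o)):
            for j, xj in enumerate(x):
                grad[i][j] += -2.0 * (d_i - o_i) * xj
    return grad


def step(W, patterns, eta):
    """Apply W <- W + delta_W, where delta_W = -eta * gradient."""
    grad = gradient(W, patterns)
    return [[w - eta * g for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(W, grad)]


# Hypothetical training patterns (input vector, desired output vector);
# the desired output follows d = 2 * x1 - x2.
patterns = [((1.0, 0.0), (2.0,)), ((0.0, 1.0), (-1.0,)), ((1.0, 1.0), (1.0,))]
W = [[0.0, 0.0]]
history = [error(W, patterns)]
for _ in range(200):
    W = step(W, patterns, eta=0.05)
    history.append(error(W, patterns))
print(history[0], history[-1], W)
```

The learning parameter controls the step size: with these patterns a value of 0.05 converges steadily, while a much larger value would overshoot and diverge.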

2.6. Classification Evaluation Metrics

2.6.1. Confusion Matrix

- Kappa is a measure of agreement between predicted and observed classes that accounts for the possibility that the agreement occurred by chance. A value of 1 indicates perfect agreement, and a value of 0 indicates agreement no better than chance.

- The No Information Rate (NIR) is the accuracy achievable by always predicting the majority class label. A lower NIR indicates more equal class representation, and a useful classifier should achieve an accuracy above the NIR.

- Accuracy is the number of correct predictions divided by the total number of predictions. The higher the better.

- Balanced accuracy is the average of the true positive and true negative rates; again, the higher the better.

- Specificity measures the ability of a classifier to identify negative labels.

- The F-score is the harmonic mean of recall and precision.
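
The measures in this list can all be computed from the four cells of a binary confusion matrix. The following is a minimal sketch with hypothetical counts; the function name `classification_metrics` and its argument names are assumptions made for illustration.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute common evaluation measures from a 2x2 confusion matrix.

    tp/fn are actual positives predicted positive/negative;
    fp/tn are actual negatives predicted positive/negative.
    """
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)          # true positive rate (recall)
    specificity = tn / (tn + fp)          # true negative rate
    precision = tp / (tp + fp)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)
    # Accuracy obtained by always predicting the larger actual class.
    no_information_rate = max(tp + fn, fp + tn) / total
    # Agreement expected by chance, from the row and column margins.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "accuracy": accuracy,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "specificity": specificity,
        "f_score": f_score,
        "no_information_rate": no_information_rate,
        "kappa": kappa,
    }


# Hypothetical counts, not taken from the study.
metrics = classification_metrics(tp=40, fn=10, fp=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```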

2.6.2. Accuracy, Precision, and F-Score

2.7. Cohen’s Kappa

2.7.1. Receiver Operating Characteristic Curve

2.8. Class Imbalance

3. Literature Review

3.1. Statistical Learning Methods

3.2. Machine Learning Methods

3.3. Discussion of Important Variables

4. Materials and Methods

4.1. The Data

5. Data Analysis

5.1. Introduction

5.2. Exploratory Data Analysis (EDA)

5.2.1. Handling Imbalances

| Category | Fatal, f (%) | Non-Fatal, f (%) | Row Total, f (%) | Chi-square P-value |
| --- | --- | --- | --- | --- |
| Weekdays | 16097 (72.07%) | 95264 (85.55%) | 111361 (76.11%) | 0.000 |
| Weekends | 6237 (27.93%) | 28724 (82.16%) | 34961 (23.89%) | |
| Carriageway | 20604 (92.25%) | 110406 (84.27%) | 131010 (89.54%) | 0.000 |
| One way/Slip | 586 (2.62%) | 3891 (86.91%) | 4477 (3.06%) | |
| Roundabout | 1066 (4.77%) | 9263 (89.68%) | 10329 (7.06%) | |
| Others | 78 (0.35%) | 428 (84.59%) | 506 (0.35%) | |
| Day | 15595 (69.83%) | 92476 (85.57%) | 108071 (73.86%) | 0.000 |
| Night | 6739 (30.17%) | 31512 (82.38%) | 38251 (26.14%) | |
| Fine | 18869 (84.49%) | 101585 (84.34%) | 120454 (82.32%) | 0.000 |
| Fog/Mist | 140 (0.63%) | 613 (81.41%) | 753 (0.52%) | |
| Raining | 2718 (12.17%) | 17280 (86.41%) | 19998 (13.67%) | |
| Snowing | 40 (0.18%) | 265 (86.89%) | 305 (0.21%) | |
| Other | 567 (2.54%) | 4245 (88.22%) | 4812 (3.29%) | |
| Dry | 15658 (70.11%) | 86361 (84.65%) | 102019 (69.72%) | 0.038 |
| Snow | 6354 (28.45%) | 35567 (84.84%) | 41921 (28.65%) | |
| Wet | 322 (1.44%) | 2060 (86.48%) | 2382 (1.63%) | |
| Rural | 9760 (43.70%) | 40275 (80.49%) | 50035 (34.20%) | 0.000 |
| Urban | 12574 (56.30%) | 83713 (86.94%) | 96287 (65.81%) | |
| Police Absent | 1961 (8.78%) | 24754 (92.66%) | 26715 (18.26%) | 0.000 |
| Police Present | 20373 (91.22%) | 99234 (82.97%) | 119607 (81.74%) | |
| 1 Quarter | 5043 (22.58%) | 29738 (85.50%) | 34781 (23.77%) | 0.000 |
| 2 Quarter | 5666 (25.37%) | 30177 (84.19%) | 35843 (24.50%) | |
| 3 Quarter | 5879 (26.32%) | 31087 (84.10%) | 36966 (25.26%) | |
| 4 Quarter | 5746 (25.73%) | 32986 (85.17%) | 38732 (26.47%) | |
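
As an illustration of how the chi-square column can be obtained, the sketch below applies a chi-square test of independence to the weekday and weekend counts from the table. It assumes Pearson's statistic without a continuity correction, which the section does not state, and the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square statistic and p-value (1 df, no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # Upper tail of a chi-square distribution with one degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: weekdays, weekends; columns: fatal, non-fatal (counts from the table).
day_of_week = [[16097, 95264], [6237, 28724]]
statistic, p_value = chi_square_2x2(day_of_week)
print(f"chi-square = {statistic:.1f}, p-value = {p_value:.3g}")
```

With samples of this size even modest differences in the fatal share produce very small p-values, so the test indicates association rather than the strength of the effect.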

5.3. Logistic Regression Analysis

| Covariates | Df | Deviance | AIC |
| --- | --- | --- | --- |
| None | | 230649 | 230687 |
| Quarter | 3 | 230732 | 230764 |
| The Speed limit | 1 | 230742 | 230778 |
| The Road surface condition | 2 | 230788 | 230822 |
| The Weather conditions | 4 | 230819 | 230849 |
| The Day of week | 1 | 230848 | 230884 |
| The Road type | 3 | 231059 | 231091 |
| The Light conditions | 1 | 231046 | 231082 |
| Place of accident | 1 | 231304 | 231340 |
| The Number of vehicles | 1 | 232902 | 232938 |
| Police attendance | 1 | 234040 | 234076 |
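
The AIC column appears consistent with the relation AIC = deviance + 2k, where k is the number of estimated parameters, which holds for a binary response with ungrouped observations. The sketch below checks this against the reported values. It assumes the full model has 19 parameters (an intercept plus 18 slope terms) and that each row reports the model obtained by dropping the named covariate; both are inferences from the tables rather than statements in the text.

```python
# (dropped covariate, Df removed, deviance, reported AIC); "None" is the full model.
rows = [
    ("None", 0, 230649, 230687),
    ("Quarter", 3, 230732, 230764),
    ("Speed limit", 1, 230742, 230778),
    ("Road surface condition", 2, 230788, 230822),
    ("Weather conditions", 4, 230819, 230849),
    ("Day of week", 1, 230848, 230884),
    ("Road type", 3, 231059, 231091),
    ("Light conditions", 1, 231046, 231082),
    ("Place of accident", 1, 231304, 231340),
    ("Number of vehicles", 1, 232902, 232938),
    ("Police attendance", 1, 234040, 234076),
]

FULL_MODEL_PARAMETERS = 19  # assumption: intercept plus 18 slope coefficients


def aic(deviance, n_parameters):
    """AIC for ungrouped binary data, where the deviance equals -2 log-likelihood."""
    return deviance + 2 * n_parameters


for name, df, deviance, reported in rows:
    computed = aic(deviance, FULL_MODEL_PARAMETERS - df)
    print(f"{name:24s} computed={computed} reported={reported}")
```

Because the full model ("None") has the lowest AIC, removing any single covariate worsens the criterion.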

5.4. Binomial Logistic Regression Model

| Covariates | Estimate | Odds Ratio | Std. Error | Z-Value | P-value |
| --- | --- | --- | --- | --- | --- |
| Intercept | -0.2110327 | 0.8097476 | 0.0326297 | -6.468 | 0.0000 |
| Number of Vehicles | -0.3284669 | 0.7200268 | 0.0070883 | -46.339 | 0.0000 |
| Day: Weekends | 0.1610077 | 1.1746941 | 0.0114158 | 14.104 | 0.0000 |
| Road Type: Slip | -0.1888540 | 0.8279073 | 0.0297239 | -6.354 | 0.0000 |
| Road Type: Roundabout | -0.4055796 | 0.6665904 | 0.0210848 | -19.236 | 0.0000 |
| Road Type: Others | 0.1125592 | 1.1191385 | 0.0845239 | 1.332 | 0.182964 |
| Speed limit | 0.0046902 | 1.0047012 | 0.0004875 | 9.621 | 0.0000 |
| Light Conditions: Night | 1.2657635 | 3.5457989 | 0.0118409 | 19.904 | 0.0000 |
| Weather Conditions: Fog/Mist | -0.1769851 | 0.8377922 | 0.0674142 | -2.625 | 0.008656 |
| Weather Conditions: Raining | -0.2391297 | 0.7873128 | 0.0186169 | -12.845 | 0.0000 |
| Weather Conditions: Snowing | -0.0793410 | 0.9237249 | 0.1135330 | -0.699 | 0.484654 |
| Weather Conditions: Others | -0.1058903 | 0.8995233 | 0.0303448 | -3.490 | 0.000484 |
| Road Surface: Snow | -0.0155717 | 0.9845489 | 0.0147245 | -1.058 | 0.290266 |
| Road Surface: Wet | -0.5092450 | 0.6009491 | 0.0435462 | -11.694 | 0.0000 |
| Place of Accident: Urban | -0.3623045 | 0.6960704 | 0.0141845 | -25.542 | 0.0000 |
| Police Attendance: Yes | 0.8546232 | 2.3504886 | 0.0151043 | 56.581 | 0.0000 |
| 2nd Quarter | 0.1260474 | 1.1343359 | 0.0148897 | 8.465 | 0.0000 |
| 3rd Quarter | 0.1066344 | 1.1125274 | 0.0148262 | 7.192 | 0.0000 |
| 4th Quarter | 0.0500855 | 1.0513610 | 0.0141083 | 3.550 | 0.000385 |
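
To show how the estimates translate into odds ratios and predicted probabilities, the sketch below evaluates the fitted logit for a hypothetical accident profile using a subset of the coefficients in the table. It assumes the outcome is coded 1 for a fatal accident and that the unlisted levels (weekday, carriageway, daylight, fine weather, dry surface, rural, police absent, first quarter) are the reference categories; the dictionary keys and function name are hypothetical.

```python
import math

# Subset of the reported estimates; all other covariates are held at their
# assumed reference levels and therefore contribute nothing to the logit.
COEF = {
    "intercept": -0.2110327,
    "number_of_vehicles": -0.3284669,
    "weekend": 0.1610077,
    "speed_limit": 0.0046902,
    "urban": -0.3623045,
    "police_present": 0.8546232,
}


def fatal_probability(n_vehicles, speed_limit, weekend=False, urban=False, police_present=False):
    """Inverse-logit of the linear predictor for the given accident profile."""
    eta = (COEF["intercept"]
           + COEF["number_of_vehicles"] * n_vehicles
           + COEF["speed_limit"] * speed_limit
           + COEF["weekend"] * weekend
           + COEF["urban"] * urban
           + COEF["police_present"] * police_present)
    return 1.0 / (1.0 + math.exp(-eta))


def odds(p):
    """Convert a probability into odds."""
    return p / (1.0 - p)


# Hypothetical profile: two vehicles on a 30 mph road, all else at reference levels.
p_absent = fatal_probability(n_vehicles=2, speed_limit=30)
p_present = fatal_probability(n_vehicles=2, speed_limit=30, police_present=True)
odds_ratio = odds(p_present) / odds(p_absent)
print(f"p(absent) = {p_absent:.4f}, p(present) = {p_present:.4f}, odds ratio = {odds_ratio:.4f}")
```

The ratio of the two odds equals the exponential of the police-attendance estimate, which is how the Odds Ratio column relates to the Estimate column.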

5.5. Analysis of Variance (ANOVA) for Traffic Accident Fatalities

| Covariates | Df | Deviance | Residual Df | P-value |
| --- | --- | --- | --- | --- |
| The Number of Vehicles | 1 | 1839.3 | 173582 | 0.000 |
| The Day of Week | 1 | 428.4 | 173581 | 0.000 |
| The Road Type | 3 | 588.9 | 173578 | 0.000 |
| The Speed limit | 1 | 2061.2 | 173577 | 0.000 |
| The Light Conditions | 1 | 262.3 | 173576 | 0.000 |
| The Weather Conditions | 4 | 414 | 173572 | 0.000 |
| The Road Surface | 2 | 128.3 | 173570 | 0.000 |
| Place of Accident | 1 | 750 | 173569 | 0.000 |
| Police Attendance | 1 | 3433.9 | 173568 | 0.000 |
| Quarter | 3 | 83.2 | 173565 | 0.000 |
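
Each row of this table can be read as a likelihood-ratio test: the reduction in deviance from adding a term is compared with a chi-square distribution whose degrees of freedom equal the Df column. The sketch below reproduces that comparison with the standard library only. It assumes the chi-square reference distribution, which is the usual choice for a sequential analysis of deviance; the helper `chi2_sf` is a hypothetical name and handles integer degrees of freedom only.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    z = x / 2.0
    if df % 2 == 0:
        shape, tail = 1.0, math.exp(-z)                 # closed form for df = 2
    else:
        shape, tail = 0.5, math.erfc(math.sqrt(z))      # closed form for df = 1
    # Raise the shape parameter in unit steps until it reaches df / 2.
    while shape < df / 2.0:
        tail += z ** shape * math.exp(-z) / math.gamma(shape + 1.0)
        shape += 1.0
    return tail


# (term, Df, deviance reduction) as reported in the table.
terms = [
    ("Number of Vehicles", 1, 1839.3), ("Day of Week", 1, 428.4),
    ("Road Type", 3, 588.9), ("Speed limit", 1, 2061.2),
    ("Light Conditions", 1, 262.3), ("Weather Conditions", 4, 414.0),
    ("Road Surface", 2, 128.3), ("Place of Accident", 1, 750.0),
    ("Police Attendance", 1, 3433.9), ("Quarter", 3, 83.2),
]

p_values = {name: chi2_sf(deviance, df) for name, df, deviance in terms}
for name, p in p_values.items():
    print(f"{name:20s} p = {p:.3g}")
```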

5.6. Wald Test Results for Traffic Accident Fatalities

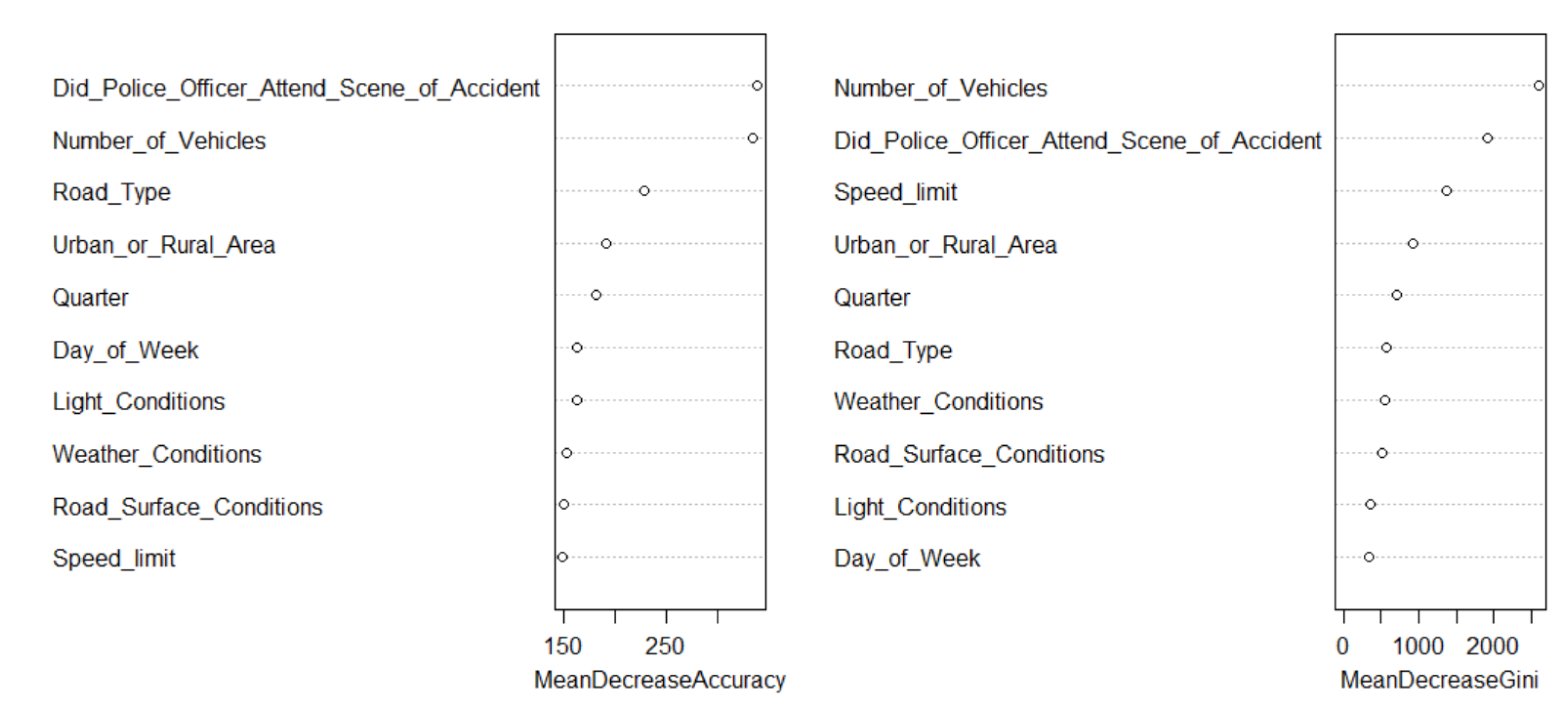

5.7. Random Forest Model

| Covariates | Mean Decrease Accuracy |
| --- | --- |
| The Number of Vehicles | 248.5413 |
| The Day of week | 118.059 |
| The Road Type | 159.1584 |
| The Speed limit | 102.1669 |
| The Light Conditions | 119.8348 |
| The Weather Conditions | 103.2044 |
| The Road Surface Conditions | 108.9508 |
| The Place of Accident | 140.143 |
| The Police Attendance | 221.5322 |
| The Quarter | 120.894 |

| Random forest summary | Value |
| --- | --- |
| Type of random forest | Classification |
| Number of trees | 500 |
| OOB estimate of error rate | 36.28% |

| Confusion Matrix | 0 | 1 | class.error |
| --- | --- | --- | --- |
| 0 | 55548 | 31244 | 0.3599871 |
| 1 | 31734 | 55058 | 0.3656328 |
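
The out-of-bag (OOB) error rate and the per-class errors follow directly from the confusion matrix counts. The short sketch below recomputes them; it assumes rows are observed classes and columns are predicted classes, which matches the reported class errors, and the variable names are hypothetical.

```python
# Rows: observed class 0 and 1; columns: predicted class 0 and 1.
confusion = [[55548, 31244],
             [31734, 55058]]

total = sum(sum(row) for row in confusion)
misclassified = confusion[0][1] + confusion[1][0]

# Share of all out-of-bag predictions that were wrong.
oob_error = misclassified / total

# Share of each observed class that was assigned to the other class.
class_error = [confusion[0][1] / sum(confusion[0]),
               confusion[1][0] / sum(confusion[1])]

print(f"OOB error rate: {oob_error:.2%}")
print(f"class errors: {class_error[0]:.7f}, {class_error[1]:.7f}")
```

Both observed classes contain 86792 cases, so the forest was evidently trained on a balanced sample.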

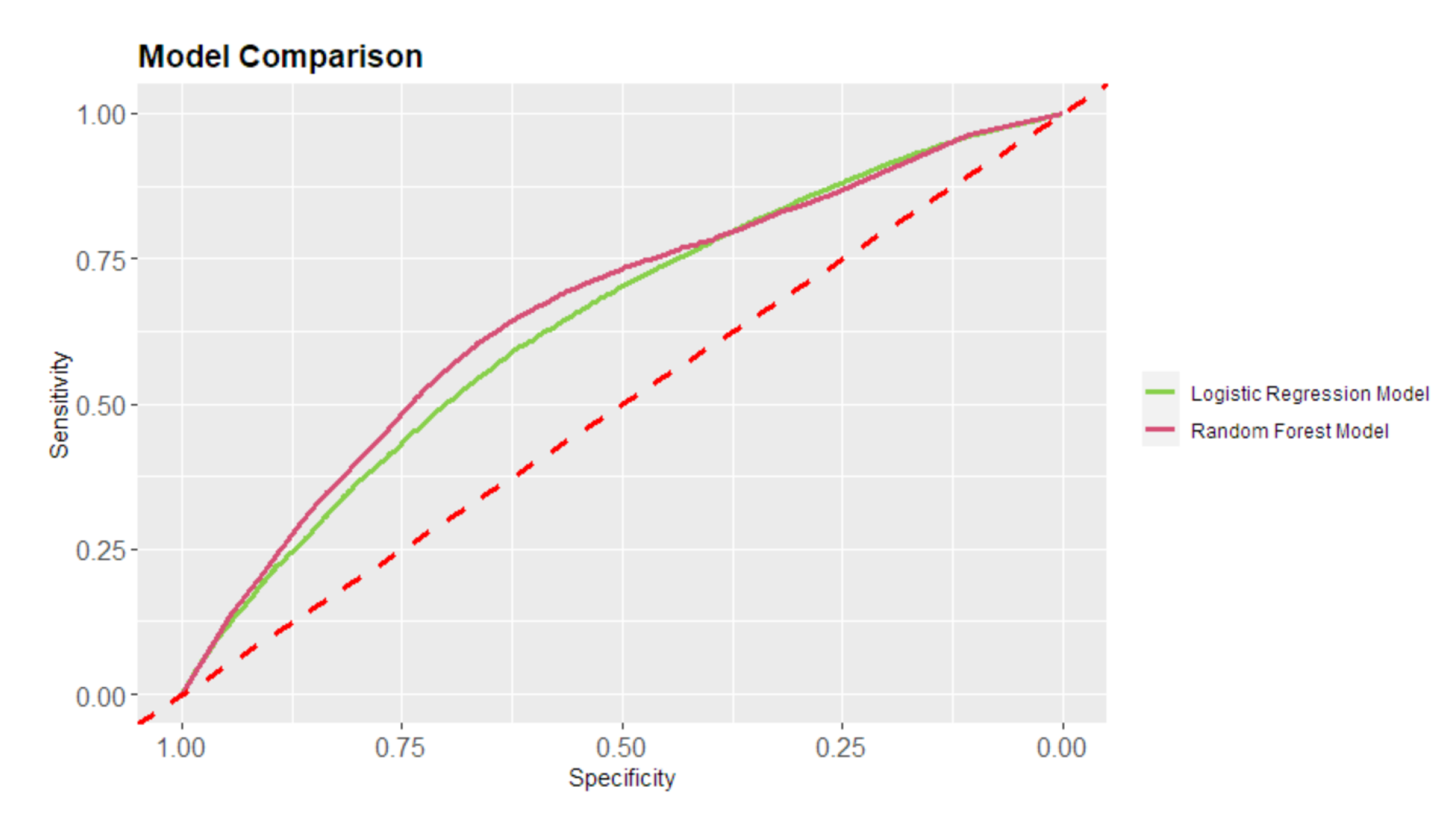

5.8. Model Performance Comparison for Random Forests and Logistic Regression

| Metric | Logistic Regression Model | Random Forest Model |
| --- | --- | --- |
| Accuracy | 0.7985 | 0.640 |
| Recall | 0.1935 | 0.6429 |
| 95% Confidence Interval | (0.7964, 0.8005) | (0.6376, 0.642) |
| No Information Rate | 0.8920 | 0.6021 |
| Kappa | 0.1147 | 0.1611 |
| McNemar's Test P-Value | 0.0000 | 0.0000 |
| Sensitivity | 0.8620 | 0.9048 |
| Precision | 0.2736 | 0.9048 |
| Specificity | 0.2736 | 0.2395 |
| Prevalence | 0.8920 | 0.6021 |
| Balanced Accuracy | 0.5678 | 0.5721 |
| Gini Index | 0.2801 | 0.3179 |
| F1 Score | 0.2267 | 0.7517 |
| AUC | 0.64 | 0.6589 |
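
Several rows of this table are linked by standard identities: the F1 score is the harmonic mean of precision and recall, the Gini index equals 2 × AUC − 1, and balanced accuracy is the mean of sensitivity and specificity. The sketch below recomputes those three rows from the other reported values. Whether the authors derived them this way is an assumption; the dictionary layout and function names are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def gini_from_auc(auc):
    """Gini index implied by the area under the ROC curve."""
    return 2 * auc - 1


def balanced_accuracy(sensitivity, specificity):
    """Mean of the true positive and true negative rates."""
    return (sensitivity + specificity) / 2


# Values as reported in the comparison table.
reported = {
    "Logistic Regression": dict(precision=0.2736, recall=0.1935, sensitivity=0.8620,
                                specificity=0.2736, auc=0.64,
                                f1=0.2267, gini=0.2801, balanced=0.5678),
    "Random Forest": dict(precision=0.9048, recall=0.6429, sensitivity=0.9048,
                          specificity=0.2395, auc=0.6589,
                          f1=0.7517, gini=0.3179, balanced=0.5721),
}

recomputed = {}
for model, r in reported.items():
    recomputed[model] = {
        "f1": f1_score(r["precision"], r["recall"]),
        "gini": gini_from_auc(r["auc"]),
        "balanced": balanced_accuracy(r["sensitivity"], r["specificity"]),
    }
    print(model, {k: round(v, 4) for k, v in recomputed[model].items()})
```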

5.9. Confidence Intervals

| Covariates | Estimate | 95% Shortest-Width CI | Std. Error | 95% Standard CI |
| --- | --- | --- | --- | --- |
| Number of Vehicles | -0.3284669 | 0.7100925; 0.73010000 | 0.0070883 | -0.3424; -0.3146 |
| Day: Weekends | 0.1610077 | 1.148702141; 1.2021274009 | 0.0114158 | 0.1386; 0.1834 |
| Road Type: Slip | -0.1888540 | 0.7810525515; 0.8775729826 | 0.0297239 | |
| Road Type: Roundabout | -0.4055796 | 0.639604151; 0.6947151372 | 0.021084 | |
| Road Type: Others | 0.1125592 | 0.9482779306; 1.320784719 | 0.0845239 | -0.0531; 0.2782 |
| Speed limit | 0.0046902 | 1.003741683; 1.005661667 | 0.0004875 | 0.0037; 0.0056 |
| Light Conditions: Night | 1.2657635 | 3.46445501; 3.629052751 | 0.0118409 | 0.2125; 0.2589 |
| Weather Conditions: Raining | -0.2391297 | 0.759102227; 0.816571684 | 0.0186169 | |
| Weather Conditions: Snowing | -0.0793410 | 0.7394373794; 1.153941738 | 0.1135330 | -0.3019; 0.1432 |
| Road Surface: Snow | -0.0155717 | 0.9565409105; 1.013377002 | 0.0147245 | -0.0444; 0.0133 |
| Road Surface: Wet | -0.5092450 | 0.551785689; 0.654492961 | 0.0435462 | |
| Place of Accident: Urban | -0.3623045 | 0.676985029; 0.7156937836 | 0.0141845 | -0.3901; -0.3345 |
| Police Attendance: Yes | 0.8546232 | 2.281923601; 2.42111366 | 0.0151043 | 0.8250; 0.8842 |
| 4th Quarter | 0.0500855 | 1.022686749; 1.080839191 | 0.0141083 | 0.0224; 0.0777 |
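
The standard interval column is consistent with the usual Wald construction, estimate ± 1.96 × standard error, on the log-odds scale. The sketch below recomputes it for three rows and also exponentiates the bounds to place them on the odds-ratio scale. The multiplier 1.96 and the function name `wald_interval` are assumptions, and the sketch does not attempt to reproduce the shortest-width method.

```python
import math


def wald_interval(estimate, std_error, z=1.96):
    """Symmetric 95% Wald interval on the log-odds scale."""
    half_width = z * std_error
    return estimate - half_width, estimate + half_width


# (covariate, estimate, standard error) as reported in the table.
rows = [
    ("Number of Vehicles", -0.3284669, 0.0070883),
    ("Place of Accident: Urban", -0.3623045, 0.0141845),
    ("Police Attendance: Yes", 0.8546232, 0.0151043),
]

intervals = {}
for name, estimate, std_error in rows:
    lower, upper = wald_interval(estimate, std_error)
    intervals[name] = (lower, upper)
    # Exponentiating the bounds gives an interval for the odds ratio.
    print(f"{name}: log-odds ({lower:.4f}; {upper:.4f}), "
          f"odds ratio ({math.exp(lower):.4f}; {math.exp(upper):.4f})")
```

An interval that excludes 0 on the log-odds scale, or equivalently 1 on the odds-ratio scale, corresponds to a coefficient that is significant at the 5% level.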

| McFadden pseudo R-square | Cox and Snell R-square | Nagelkerke R-square |
| --- | --- | --- |
| 0.04151248 | 0.05592391 | 0.07456521 |
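
These three pseudo R-square values can be recovered from quantities reported elsewhere in the analysis. The sketch below assumes that the residual deviance of the full model is 230649, that the null deviance equals this value plus the sum of the sequential deviance reductions in the analysis of deviance table, and that the sample size is 173584 (inferred from the residual degrees of freedom). The variable names are hypothetical.

```python
import math

RESIDUAL_DEVIANCE = 230649.0   # full model, from the AIC table
N_OBSERVATIONS = 173584        # assumption: first residual Df (173582) + 2

# Sequential deviance reductions from the analysis of deviance table.
deviance_reductions = [1839.3, 428.4, 588.9, 2061.2, 262.3,
                       414.0, 128.3, 750.0, 3433.9, 83.2]

explained = sum(deviance_reductions)
null_deviance = RESIDUAL_DEVIANCE + explained

# McFadden: proportional reduction in deviance relative to the null model.
mcfadden = 1.0 - RESIDUAL_DEVIANCE / null_deviance

# Cox and Snell: based on the likelihood ratio per observation.
cox_snell = 1.0 - math.exp(-explained / N_OBSERVATIONS)

# Nagelkerke: Cox and Snell rescaled by its maximum attainable value.
nagelkerke = cox_snell / (1.0 - math.exp(-null_deviance / N_OBSERVATIONS))

print(f"McFadden = {mcfadden:.5f}")
print(f"Cox and Snell = {cox_snell:.5f}")
print(f"Nagelkerke = {nagelkerke:.5f}")
```

All three values are small, which indicates that the covariates explain only a modest share of the variation in the outcome even though most are statistically significant.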

| Covariates | Estimate | Wald | DF | P-value |
| --- | --- | --- | --- | --- |
| Intercept | -0.2110327 | 41.8 | 1 | 0.00 |
| Number of Vehicles | -0.3284669 | 2147.3 | 1 | 0.0 |
| Day: Weekends | 0.1610077 | 198.9 | 1 | 0.00 |
| Road Type: Slip | -0.1888540 | 40.4 | 1 | 0.00 |
| Road Type: Roundabout | -0.4055796 | 1.8 | 1 | 0.18 |
| Road Type: Others | 0.1125592 | 370.0 | 1 | 0.00 |
| Speed limit | 0.0046902 | 92.6 | 1 | 0.00 |
| Light Conditions: Night | 1.2657635 | 396.2 | 1 | 0.00 |
| Weather Conditions: Fog/Mist | -0.1769851 | 6.9 | 1 | 0.0087 |
| Weather Conditions: Raining | -0.2391297 | 12.2 | 1 | 0.00048 |
| Weather Conditions: Snowing | -0.0793410 | 165.0 | 1 | 0.00 |
| Weather Conditions: Others | -0.1058903 | 1 | 1 | 0.48 |
| Road Surface: Snow | -0.0155717 | 1.1 | 1 | 0.29 |
| Road Surface: Wet | -0.5092450 | 136.8 | 1 | 0.00 |
| Place of Accident: Urban | -0.3623045 | 652.4 | 1 | 0.00 |
| Police Attendance: Yes | 0.8546232 | 3201.5 | 1 | 0.00 |
| 2nd Quarter | 0.1260474 | 71.7 | 1 | 0.0 |
| 3rd Quarter | 0.1066344 | 51.7 | 1 | 0.00 |
| 4th Quarter | 0.0500855 | 12.6 | 1 | 0.00039 |
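
For a single coefficient, the Wald statistic is the squared ratio of the estimate to its standard error and is compared with a chi-square distribution with one degree of freedom. The sketch below recomputes the statistic for three rows, taking the standard errors from the binomial logistic regression table; the function name `wald_test` is hypothetical.

```python
import math


def wald_test(estimate, std_error):
    """Wald chi-square statistic (1 df) and its p-value for one coefficient."""
    statistic = (estimate / std_error) ** 2
    # Upper tail of a chi-square distribution with one degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# (covariate, estimate, standard error) as reported.
rows = [
    ("Number of Vehicles", -0.3284669, 0.0070883),
    ("Police Attendance: Yes", 0.8546232, 0.0151043),
    ("4th Quarter", 0.0500855, 0.0141083),
]

results = {name: wald_test(estimate, se) for name, estimate, se in rows}
for name, (statistic, p_value) in results.items():
    print(f"{name}: Wald = {statistic:.1f}, p-value = {p_value:.2g}")
```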

6. Summary, Conclusions and Recommendations


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).