1. Introduction

The current development of artificial intelligence requires exhaustive computation [1], and the reinforcement learning (RL) area is no exception. Algorithms such as Deep Q-Network (DQN) [2] have achieved significant results in advancing RL by integrating neural networks. However, the requirement for thousands or millions of training episodes persists [3]. Training agents in parallel has proven to be an effective strategy [4] to reduce the number of episodes [5]. This is feasible in simulated environments but poses an implementation challenge in real-world physical training environments.

In the context of reinforcement learning, Markov Decision Processes (MDPs) are typically the mathematical model used to describe the working environment [7]. MDPs have enabled the development of classical algorithms such as Value Iteration [8], Monte Carlo, SARSA, and Q-Learning, among others.

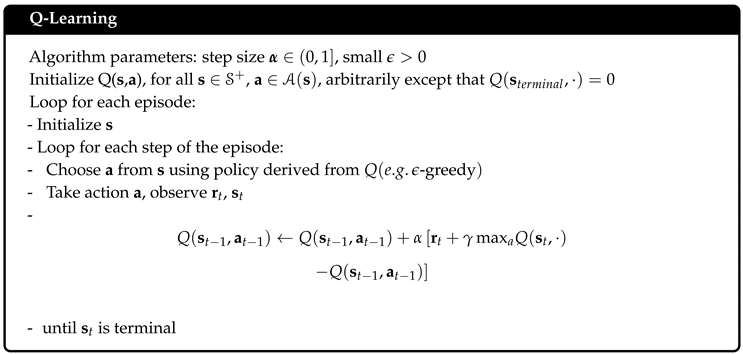

One of the algorithms most widely used is Q-Learning proposed by Christopher Watkins in 1989 [

9]. Different approaches have been proposed based on the Q-Learning algorithm [

10]. Its main advantage focuses on an optimal policy that maximizes the reward obtained by the agent if the action-state set is visited by a number infinitely many times [

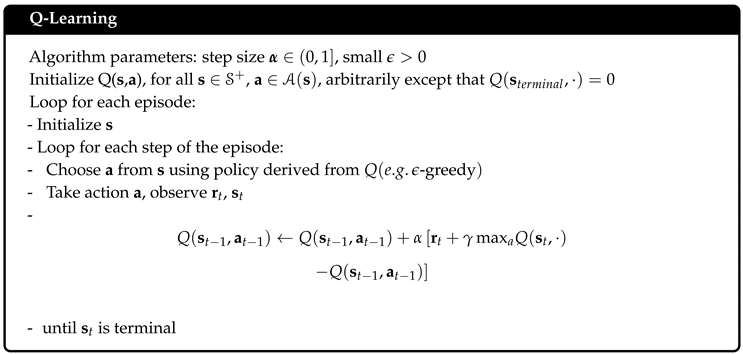

9]. The algorithm Q-Learning implemented in this work was taken from [

9] and shown in Figure 1.
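For reference, the standard tabular update of [9], $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$, with learning rate $\alpha$ and discount factor $\gamma$, can be sketched in Python as follows. This is a generic illustration rather than the exact code used in this work; the function name and the dictionary-of-lists table layout are assumptions.

```python
def q_learning_update(q, state, action, reward, next_state, alpha, gamma, terminal=False):
    """Apply one tabular Q-Learning update and return the new Q(state, action).

    `q` maps each state to a list of action values (an assumed layout).
    """
    # A terminal transition has no future value to bootstrap from.
    future = 0.0 if terminal else max(q[next_state])
    td_target = reward + gamma * future
    # Move the current estimate a fraction alpha of the way toward the target.
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]
```

For example, with $Q(s',\cdot) = [0.5, 1.0]$, $\alpha = 0.5$, $\gamma = 0.9$ and zero reward, a zero-initialized entry moves to $0.5 \times 0.9 = 0.45$.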

Some disadvantages of Q-Learning include the rapid growth of the table size as the number of states and actions increases [11], known as the problem of dimensional explosion. Furthermore, this algorithm tends to require a large number of iterations to find a solution to a given problem, and tuning its multiple parameters can prolong this process.

Regarding the parameters of Q-Learning, the algorithm directly requires assigning the learning rate $\alpha$ and the discount factor $\gamma$, which are generally tuned empirically, through trial and error, by observing the rewards obtained in each training session [12]. The learning rate $\alpha$ directly influences the magnitude of the updates that the agent makes to its memory table. In [13], eight different methods for tuning this parameter are explored, including iteration-dependent variations and changes based on positive or negative fluctuations of the table. Experimental results from [13] show that, for the Frozen Lake environment, rewards converge only after more than 10,000 iterations in all conducted experiments.

In Q-Learning, the exploration-exploitation dilemma is commonly addressed using the $\varepsilon$-greedy policy, in which the agent selects a random action with probability $\varepsilon$ and the action with the highest Q-value otherwise. Current research around the algorithm focuses extensively on the exploration factor $\varepsilon$, as described in [14], because of its potential to cause exploration imbalance and slow convergence [15,16,17].
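The $\varepsilon$-greedy rule can be sketched as follows. The function name and the tie-breaking rule (the lowest index wins among equal values) are assumptions made for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index using the epsilon-greedy rule.

    With probability epsilon a uniformly random action is chosen (exploration);
    otherwise the highest-valued action is chosen (exploitation).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # max() keeps the first maximal index, so ties go to the lowest index.
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

Passing a seeded `random.Random` instance as `rng` makes the choices reproducible across runs.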

The reward function significantly impacts the agent's performance, as reported in various applications. For instance, in path planning tasks for mobile robots [18], routing protocols in unmanned robotic networks [19], and scheduling of gantry workstation cells [20], the reward function plays a crucial role in the observed variations in agent performance. Regarding the initial values of the Q-table, the algorithm does not provide recommendations for their assignment. Some works, such as [21], which uses the whale optimization algorithm proposed in [22], aim to optimize the initial values of the Q-table to address the slow convergence caused by Q-Learning initialization issues.

The reward function directly impacts the agent's training time. It can guide the agent through each state, or it can be sparse across the state space, requiring the agent to learn from delayed rewards. As concluded in [23] when analyzing the effect of dense and sparse rewards in Q-Learning: "An important lesson here is that sparse rewards are indeed difficult to learn from, but not all dense rewards are equally good."

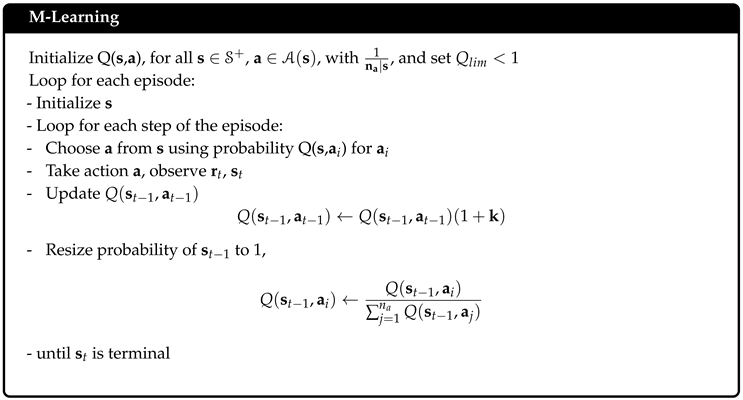

The proposed heuristic takes some conceptual ideas from Q-Learning and its variants in search of alternatives aimed at applications in real physical environments. This heuristic reduces the tuning complexity and the time involved in using Q-Learning. Moreover, like Q-Learning, the proposed algorithm stores its knowledge in a table and relates states and actions through a numerical value. This algorithm is termed M-Learning; it is explained in detail below and referred to throughout the document.

2. Definition of the M-Learning Algorithm

The main idea behind the M-Learning approach is to reflect on what it means for an agent to solve a task and what this implies in terms of the elements that make up the Markov decision process. In Q-Learning, this is not defined directly; instead, it rests on the premise that the proposed reward function is consistent with the task, so that the agent, by seeking to maximize the reward, will eventually complete it. This perspective merges two different problems into the same element to tune: the reward function and the way to reach the solution.

In general, when a task or problem is formulated, its solution is implicitly defined; conversely, if a solution is described, the problem is implicit, even though there is no notion of how to reach it, like two sides of a coin of which only one can be seen at a time. For example, if the goal is to control the angle in a dynamic system, the solution is the state in which the angle reaches its desired value. When a task for an agent is formulated within the MDP framework, the solution must also be described by the elements of the MDP. Specifically, the solution to a task described as an MDP is a particular state $s$ of the state space $S$, which we will call the objective state.

In the reinforcement learning cycle, the reward signal guides the agent in its search for a solution. Through it, the agent obtains feedback from the environment about whether or not its actions are favorable. Q-Learning uses the reward to update the value of each state-action pair. However, in environments where the reward is sparse and the agent receives no feedback from the environment most of the time, Q-Learning must spend time exploring in search of the reward, which leads to the exploration-exploitation dilemma, usually addressed with an $\varepsilon$-greedy policy.

Using the $\varepsilon$-greedy policy as a decision-making mechanism requires setting the decay rate of $\varepsilon$, which determines how long the agent can explore in search of feedback from the environment, with the aim of reducing the number of iterations needed to find a solution. This setting turns out to be decisive: because it is fixed before training and is not part of the learning process itself, a favorable value can only be found by interacting with the problem computationally, varying the decay rate and the number of iterations, while keeping in mind that it also depends on the remaining parameters.
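To make the role of the decay rate concrete, the sketch below counts how many decay steps it takes for $\varepsilon$ to fall from 1 to a floor. It assumes a multiplicative schedule $\varepsilon \leftarrow d\,\varepsilon$ applied once per step and an illustrative floor of 0.005; both the schedule and the floor are assumptions, not necessarily the configuration used in this work.

```python
def episodes_until_floor(decay, eps_start=1.0, eps_min=0.005):
    """Count multiplicative decay steps until epsilon reaches eps_min.

    Assumes epsilon is multiplied by `decay` once per step (e.g., per episode).
    """
    if not 0.0 < decay < 1.0:
        raise ValueError("decay must lie strictly between 0 and 1")
    eps, steps = eps_start, 0
    while eps > eps_min:
        eps *= decay
        steps += 1
    return steps


for d in (0.8, 0.987, 0.996, 0.998):
    print(d, episodes_until_floor(d))
```

Small changes in the decay rate translate into very different exploration budgets (for instance, 24 steps for $d = 0.8$ versus 405 for $d = 0.987$), which is why this parameter usually has to be searched jointly with the number of training episodes.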

If the reward is regarded as the only source of stimulus for the agent in an environment with sparse reward, the agent must explore the state space in search of states where it obtains a reward and, based on these, modify its behavior. As these states are visited more often, the agent should be able to reduce its level of exploration and exploit more of its knowledge about the rewarded states. The agent explores in search of stimuli and, as it acquires knowledge, it reduces arbitrary decision-making. The exploration-exploitation balance can therefore be considered separately for each state in the state space. In this way, the agent can identify regions of the state space that it knows and, depending on how favorable or unfavorable they are, reinforce behaviors that lead it to its objective or explore other regions.

As stated, exploration and decision-making are strongly linked to the way the reward function is interpreted. The specific proposal in M-Learning consists of first limiting the reward function to values between -1 and 1, assigning the maximum value to the reward for reaching the goal state

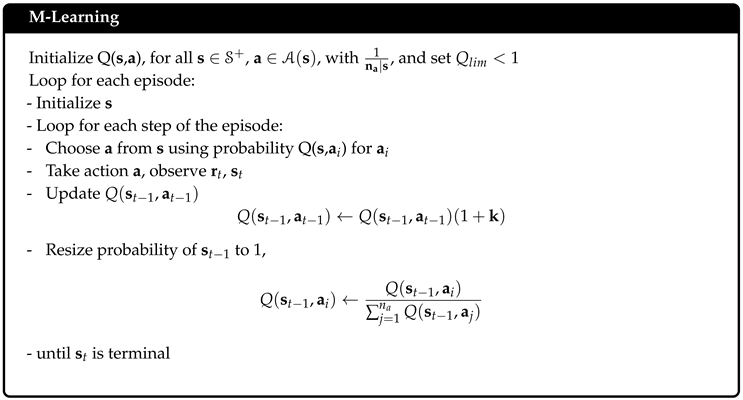

. Second, each action in a state has a percentage value that represents the level of certainty about the favorableness of that action and, therefore, the sum of all actions in a state is 1. The M-Learning algorithm is shown below.

The first step in the M-Learning algorithm is the initialization of the table. Unlike in Q-Learning, where the initial values can be set arbitrarily, the value of each state-action pair is initialized as an equal share of the state according to the number of available actions. In this way, every action in a state starts with the same value, as shown in (1).
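A minimal sketch of the initialization in (1), under our reading that a state with $n$ available actions assigns $1/n$ to each of them. The function name and the dictionary layout (state to number of actions) are hypothetical.

```python
def init_m_table(actions_per_state):
    """Build an M-Learning-style table with a uniform row for every state.

    `actions_per_state` maps each state to its number of available actions;
    each action starts at 1 / n, so every row sums to 1.
    """
    return {s: [1.0 / n] * n for s, n in actions_per_state.items()}
```

For Frozen Lake, where every state offers four actions, each entry would start at 0.25.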

Once the table is initialized, the episode loop begins, and the agent's initial state is established from an observation of the environment. The step loop then begins within the episode and, starting from its initial state, the agent chooses an action. To select an action, the agent looks up the current state in its table, obtains the value of each action, and selects one at random, using each value as its probability of being chosen. Thus, the value of each action represents its probability of selection in a given state, as presented in (2).
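Because each row already sums to 1, selection in (2) amounts to sampling from a categorical distribution. The sketch below uses Python's `random.choices`; the function name is hypothetical.

```python
import random


def select_action(m_row, rng=random):
    """Sample an action index, using the row's values as selection probabilities."""
    # random.choices draws in proportion to the weights; since an M-Learning row
    # sums to 1, the weights are exactly the selection probabilities.
    return rng.choices(range(len(m_row)), weights=m_row, k=1)[0]
```

Note that `random.choices` raises `ValueError` when all weights are zero (Python 3.9+), so a row whose actions have all been driven to zero needs separate handling.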

After selecting an action, the agent acts on the environment and observes its response in the form of a reward and a new state. Once the agent has moved to the new state, it evaluates the action taken and modifies the value of that state-action pair according to (3).

The percentage modification $k$ of the value of the previous state-action pair is computed according to whether a reward exists, as given in (4). If a reward exists, $k$ is calculated from the current reward or from the current and previous rewards. If there is no reward stimulus, $k$ is calculated as the information differential between the current state and the previous state.

When the environment returns a reward for the agent's action, $k$ measures how much the agent approaches or moves away from the objective state. Specifically, in the best case the new state is the objective state itself, with the maximum positive reward of 1; in the opposite case, the new state is radically opposite to the objective state, and its reward is maximal in magnitude but negative, -1. For cases where the reward is not maximal, the value of $k$ may be calculated by a function that maps the reward differential onto the range of $k$; for this case, a first-order (linear) relation is proposed.

If the reward function of the environment is defined such that its only possible values are 1, 0, and -1, the calculation of $k$ can be rewritten by a direct substitution in terms of these values. This represents environments where it is difficult to establish a continuous signal that correctly guides the agent through the state space to its goal. In these situations, it may be convenient to generate a stimulus only when the agent achieves the objective or causes the episode to end. Accordingly, Equation (4) can be rewritten as (6).

When the environment does not return a reward, the agent evaluates how correct its action was based on the difference between the information stored for its current and previous states, as depicted in Equation (7). This is how the agent propagates the reward stimulus.

To calculate the information of a state, Eq. (8) evaluates the difference between the value of its maximum action and the average value of the possible actions in that state. The average action value is also the initial value, which represents the absence of any knowledge in that state that the agent could use to select an action. In other words, the deviation from the mean is a percentage indicator of the agent's level of certainty in selecting a particular action.

Since the number of actions available in the previous and current states may differ, which changes the average action value, the two must be put on the same scale before they can be compared. Once the average action value is established, its maximum variation of information is also established, that is, how much an action's value must increase to reach a probability of 1. The maximum information is then the difference between the maximum possible value, 1, and the average action value (9).

Since we want to modify the previous state-action pair, we adjust the information value of the current state to the information scale of the previous state, using (10).

It is possible that, for a given state, all its actions have been restricted so that all of their values are zero, which makes it impossible to calculate the maximum information of that state. For this reason, a negative constant value is established to indicate that the action taken leads to a state to be avoided. With this, the information differential is defined according to (11).
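The no-reward branch can be sketched as follows. This is our reading of the prose, not a transcription of Eqs. (7) to (11): information is taken as the maximum action value minus the mean, the maximum information as $1 - 1/n$ for $n$ actions, the rescaling as linear, and the negative constant (here `dead_end_penalty = -0.1`) is a hypothetical placeholder. The magnitude parameter that limits saturation through propagation is not modeled.

```python
def information(row):
    """Information of a state: maximum action value minus the mean action value."""
    return max(row) - sum(row) / len(row)


def max_information(n_actions):
    """Gap between probability 1 and the uniform initial value 1 / n."""
    return 1.0 - 1.0 / n_actions


def information_differential(prev_row, curr_row, dead_end_penalty=-0.1):
    """Information differential between the current and the previous state.

    The current state's information is rescaled to the previous state's scale
    before subtracting. If every action of the current state has been driven
    to zero, a negative constant flags a state to avoid (hypothetical value).
    """
    if len(prev_row) < 2 or len(curr_row) < 2:
        raise ValueError("this sketch assumes at least two actions per state")
    if not any(curr_row):
        return dead_end_penalty
    scale = max_information(len(prev_row)) / max_information(len(curr_row))
    return information(curr_row) * scale - information(prev_row)
```

A move from an untouched state into a state where one action already holds 0.7 of four actions yields a positive differential of 0.45, so the stimulus flows backward toward the previous state-action pair.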

A magnitude parameter controls the maximum level of saturation that an action can reach through propagation. With it, it is possible to control how close the value of an action can get to the extremes 0 and 1.

After calculating $k$ and modifying the value of the selected state-action pair, the probabilities within the previous state must be redistributed by applying (12) to the actions of that state, so that they still sum to 1.

In this way, the variation in the value of the selected action is distributed over the rest of the actions. The underlying principle is that when the agent gains certainty about one action, it reduces the probability of selecting the other actions; conversely, reducing the probability of selecting an action increases the level of certainty about the remaining actions.

Similar to the $\varepsilon$-greedy policy, maintaining a minimum level of exploration requires restricting the maximum value that an action can reach. This is achieved through a saturation-limit parameter, which conditions the redistribution of probabilities when the maximum action would exceed the saturation limit: if this happens, the modification is reduced so that, after applying (12), the action's value equals the saturation limit.
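The update, redistribution, and saturation steps can be sketched together. Since (3) and (12) are not reproduced here, this is a hypothetical reading: the selected value is scaled by $(1 + k)$, capped at a saturation limit (an illustrative 0.95), and the other actions share the remaining probability mass in proportion to their previous values.

```python
def update_row(row, action, k, saturation=0.95):
    """Change one action's value by a percentage k and renormalize the state.

    Hypothetical reading: new value = old value * (1 + k), clipped to
    [0, saturation]; the other actions are rescaled proportionally so that
    the row still sums to 1.
    """
    if len(row) < 2:
        raise ValueError("this sketch assumes at least two actions per state")
    new_value = min(max(row[action] * (1.0 + k), 0.0), saturation)
    rest_old = sum(v for a, v in enumerate(row) if a != action)
    rest_new = 1.0 - new_value
    updated = []
    for a, v in enumerate(row):
        if a == action:
            updated.append(new_value)
        elif rest_old > 0.0:
            updated.append(v * rest_new / rest_old)  # proportional share of the remainder
        else:
            updated.append(rest_new / (len(row) - 1))  # others were all zero: split evenly
    return updated
```

For example, `update_row([0.25] * 4, 0, 0.6)` gives `[0.4, 0.2, 0.2, 0.2]`. This sketch caps only the selected action; after a negative update, another action in the row could still end up above the limit.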

Finally, the algorithm returns to the beginning of the step loop and continues executing until it reaches the step limit or a terminal state.

3. Experimental Setup

To compare the performance of M-Learning against Q-Learning, agents are trained with both algorithms in two different environments. Both groups of agents have the same number of training episodes and the same step limit per episode.

To measure the performance of the agents, a test is carried out at the end of each training episode until the episode limit is reached. During a training episode, the agent can modify its table according to its algorithm. At the end of the episode, either by reaching a terminal state or by reaching the step limit, the agent moves to the test stage, where it cannot modify the values of its table. In the test stage, the agent plays 100 episodes with the table obtained at the end of that training episode. For both agents, the policy used to select an action during the test always takes the action with the highest value. In this way, we seek to measure the agent's learning process and its ability to retain the acquired knowledge.
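The test stage can be sketched as a read-only greedy evaluation. The `reset`/`step` callables, the default step limit of 100, and the scoring rule (counting episodes that end with a positive reward, so a perfect agent scores 100) are assumptions made for illustration.

```python
def greedy_score(table, reset, step, episodes=100, max_steps=100):
    """Count test episodes that end in success under a purely greedy policy.

    `reset()` returns an initial state and `step(state, action)` returns
    (next_state, reward, done). The table is only read, never modified.
    """
    successes = 0
    for _ in range(episodes):
        state = reset()
        for _ in range(max_steps):
            row = table[state]
            action = max(range(len(row)), key=row.__getitem__)  # highest-valued action
            state, reward, done = step(state, action)
            if done:
                if reward > 0:
                    successes += 1
                break
    return successes
```

With the same table and a deterministic environment, every test episode follows the same path, so the score is either 0 or 100; in the stochastic variant, intermediate scores reflect how robust the learned table is to slipping.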

The selected environment is Frozen Lake (Figure 1), which consists of crossing a frozen lake without falling through a hole. Frozen Lake is available in the Gymnasium library, a maintained fork of OpenAI's Gym [24] library, which its developers describe as "An API standard for reinforcement learning." This environment has two variants, which represent two different problems for the agent. In the first, the environment always moves the agent in the desired direction, so the state transition function of the environment is deterministic. In the second, when the agent tries to move in a certain direction, the environment may or may not move it in that direction, with a certain probability, so the state transition function of the environment is stochastic.

In the Frozen Lake environment, the agent receives a reward of 1 for reaching the other end of the lake, -1 for falling into a hole, and 0 for the rest of the states. In the stochastic environment, the agent moves in the desired direction with a probability of 1/3; otherwise, it moves in one of the two perpendicular directions, each with a probability of 1/3.

Table 1 summarizes the configuration of the experimental methodology.

3.1. Parameter Tuning

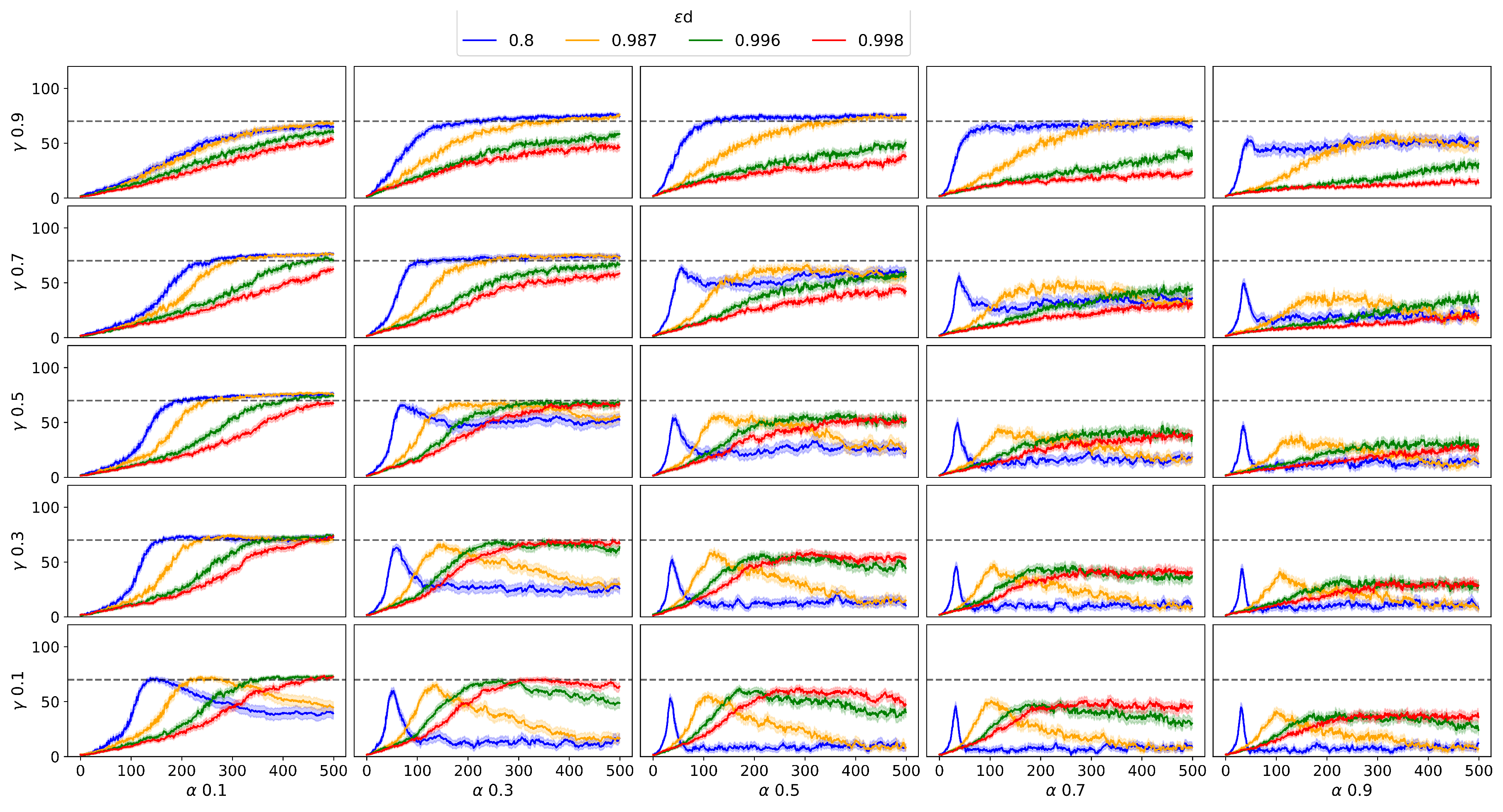

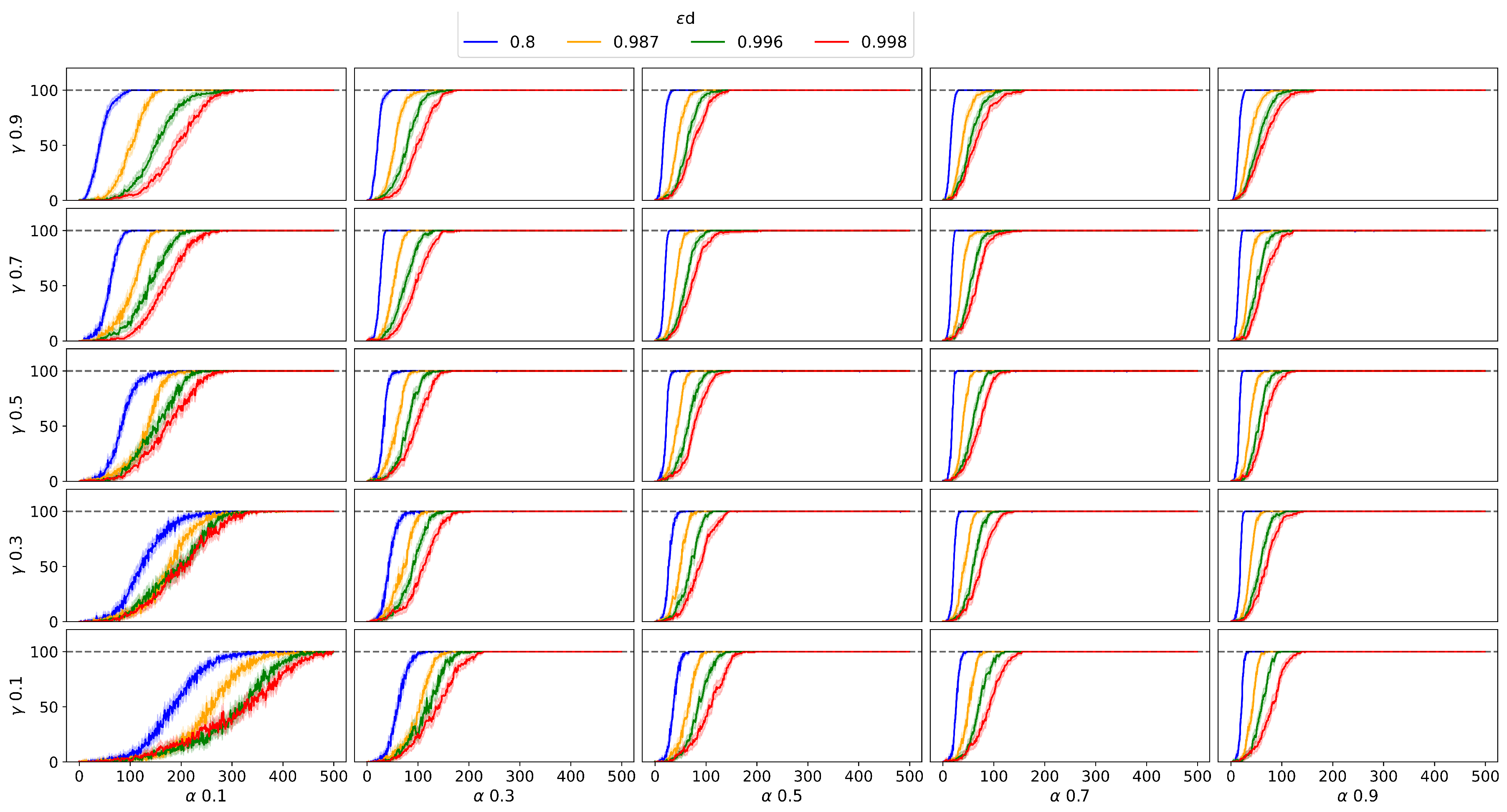

To train agents using Q-Learning, it is necessary to set the parameters of the algorithm. The initial values of the table are set to pseudo-random values between 0 and 1. The minimum value of $\varepsilon$ for the $\varepsilon$-greedy policy is set to 0.005. Since the decay rate of $\varepsilon$, the learning rate $\alpha$, and the discount factor $\gamma$ directly impact the performance of the algorithm, a grid search is performed over these three parameters:

Decay rate of $\varepsilon$: 0.8, 0.987, 0.996, 0.998

$\alpha$: 0.1, 0.3, 0.5, 0.7, 0.9

$\gamma$: 0.1, 0.3, 0.5, 0.7, 0.9
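The grid search above can be enumerated with `itertools.product`. We assume that the first list holds decay rates of $\varepsilon$ and the other two hold $\alpha$ and $\gamma$; since the last two lists are identical, their order does not change the grid. The dictionary keys are illustrative.

```python
from itertools import product

decay_rates = [0.8, 0.987, 0.996, 0.998]
alphas = [0.1, 0.3, 0.5, 0.7, 0.9]
gammas = [0.1, 0.3, 0.5, 0.7, 0.9]

# One configuration per combination of the three parameter lists.
grid = [
    {"decay": d, "alpha": a, "gamma": g}
    for d, a, g in product(decay_rates, alphas, gammas)
]
print(len(grid))  # prints 100 (4 * 5 * 5)
```

Every configuration must then be trained and tested separately, which is where most of the Q-Learning computational cost comes from.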

Training agents with the proposed M-Learning method requires setting only a single parameter.

6. Conclusions

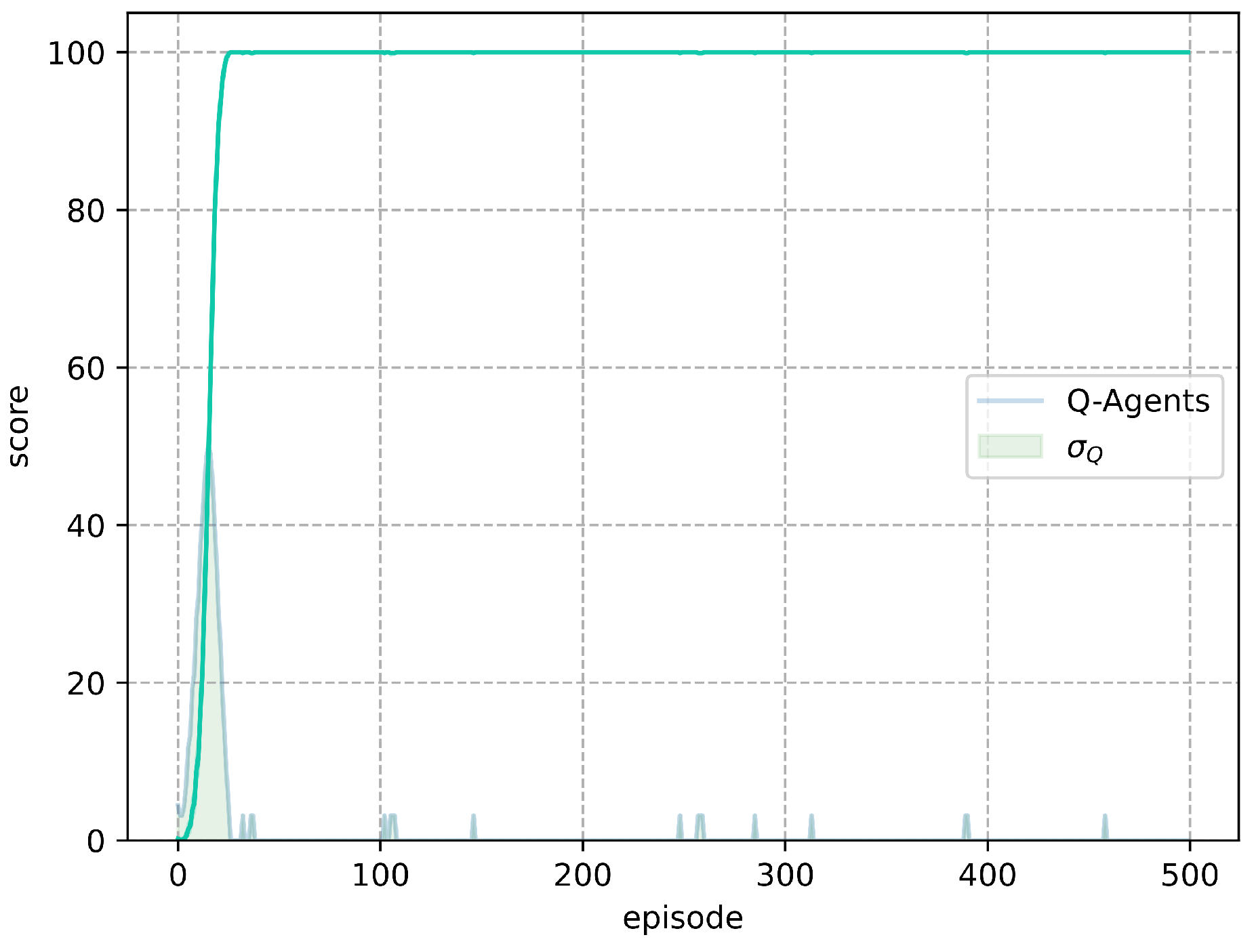

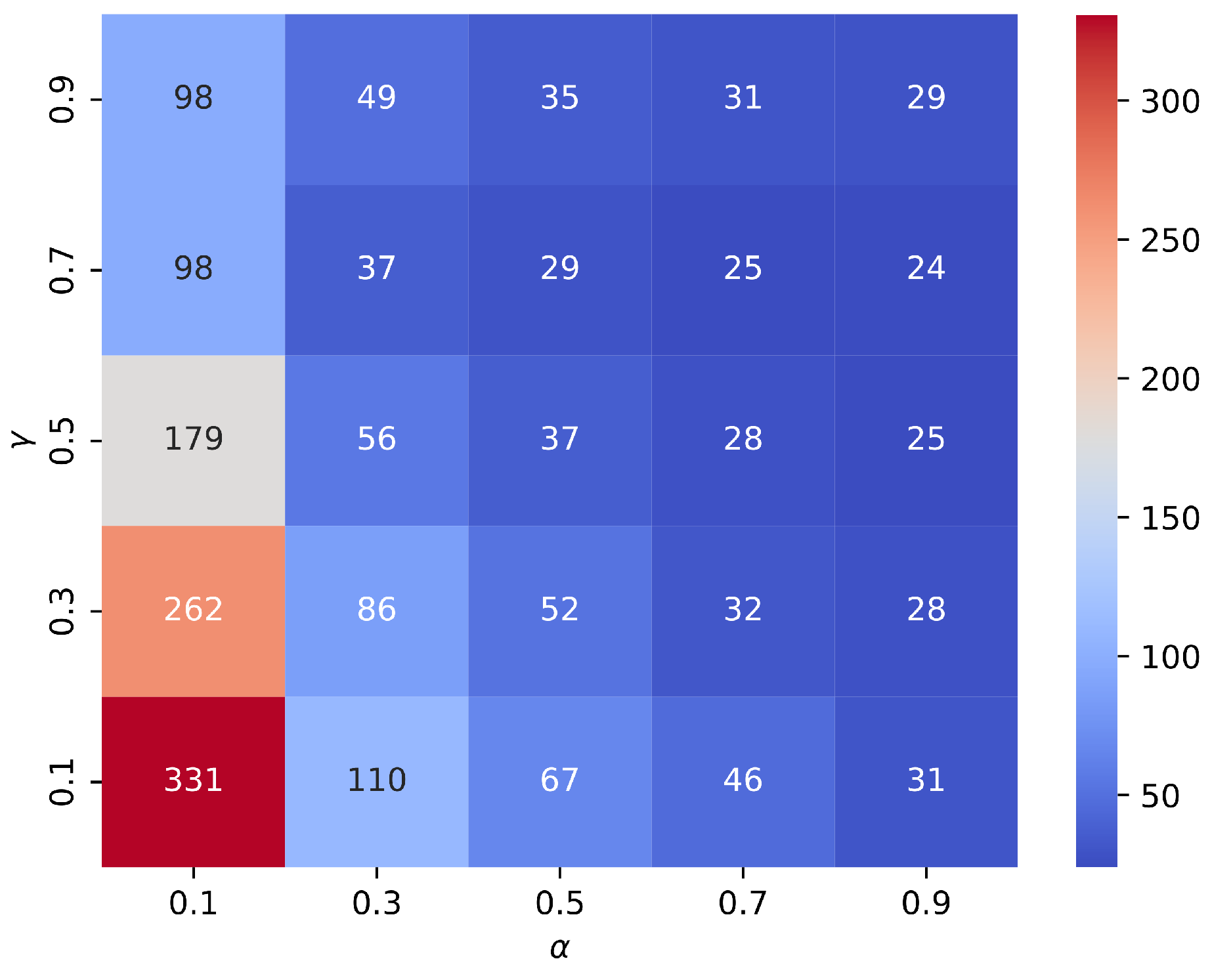

Applying reinforcement learning to an unknown problem with Q-Learning requires a broad exploration of its parameters, as presented in Figure 2 and Figure 5, with very diverse results and, in some cases, non-convergence to a solution. This entails computing multiple times or performing a systematic exploration with different parameters in the same environment. In this work, a total of 42,000 agents were trained over 21,000,000 episodes with Q-Learning, compared with 2,000 agents and 1,000,000 episodes with M-Learning.
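As a quick consistency check, these totals imply the same per-agent training budget for both algorithms, in line with the experimental setup, while Q-Learning required 21 times as many agents:

$$\frac{21{,}000{,}000}{42{,}000} = 500 \ \text{episodes per agent}, \qquad \frac{1{,}000{,}000}{2{,}000} = 500 \ \text{episodes per agent}, \qquad \frac{42{,}000}{2{,}000} = 21$$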

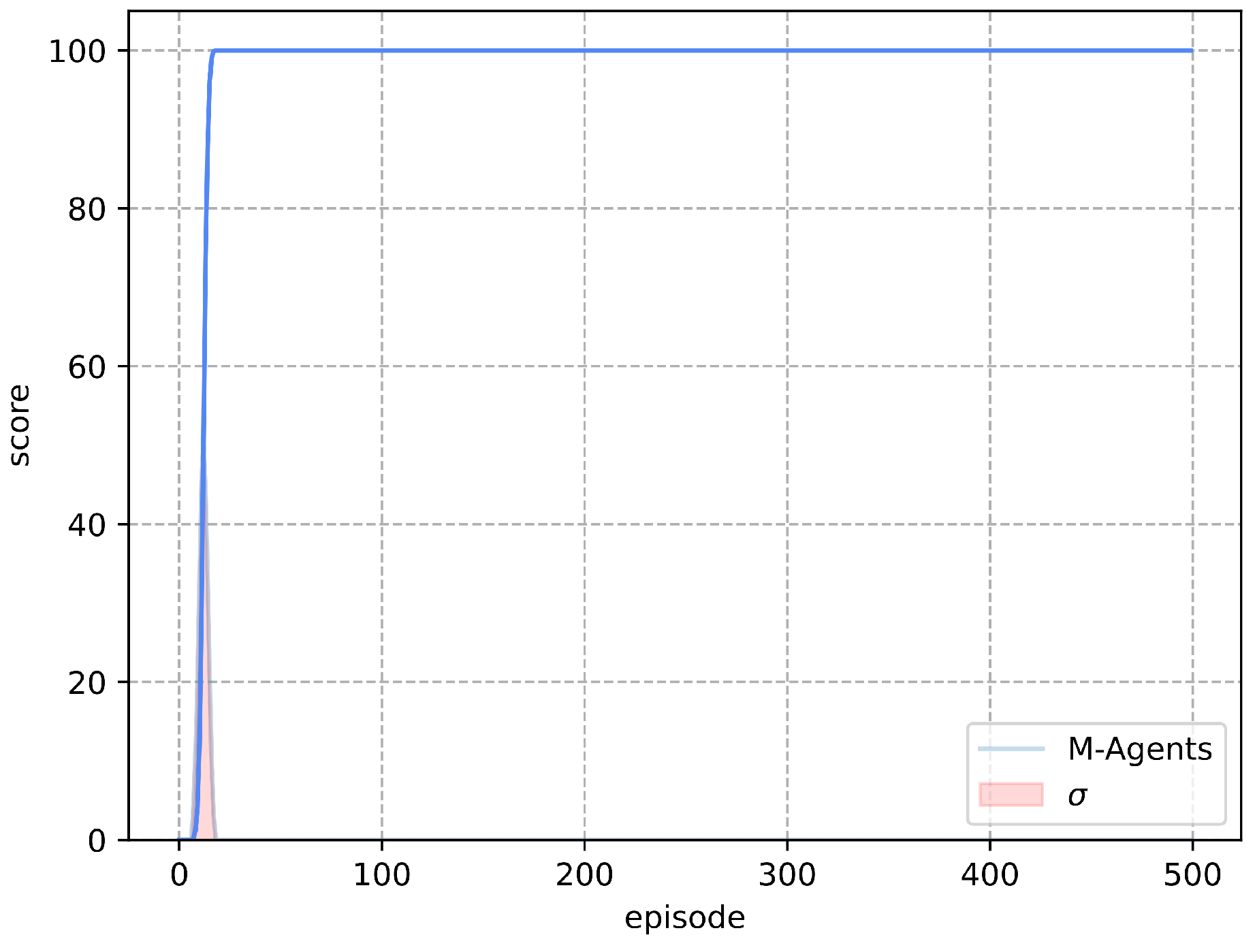

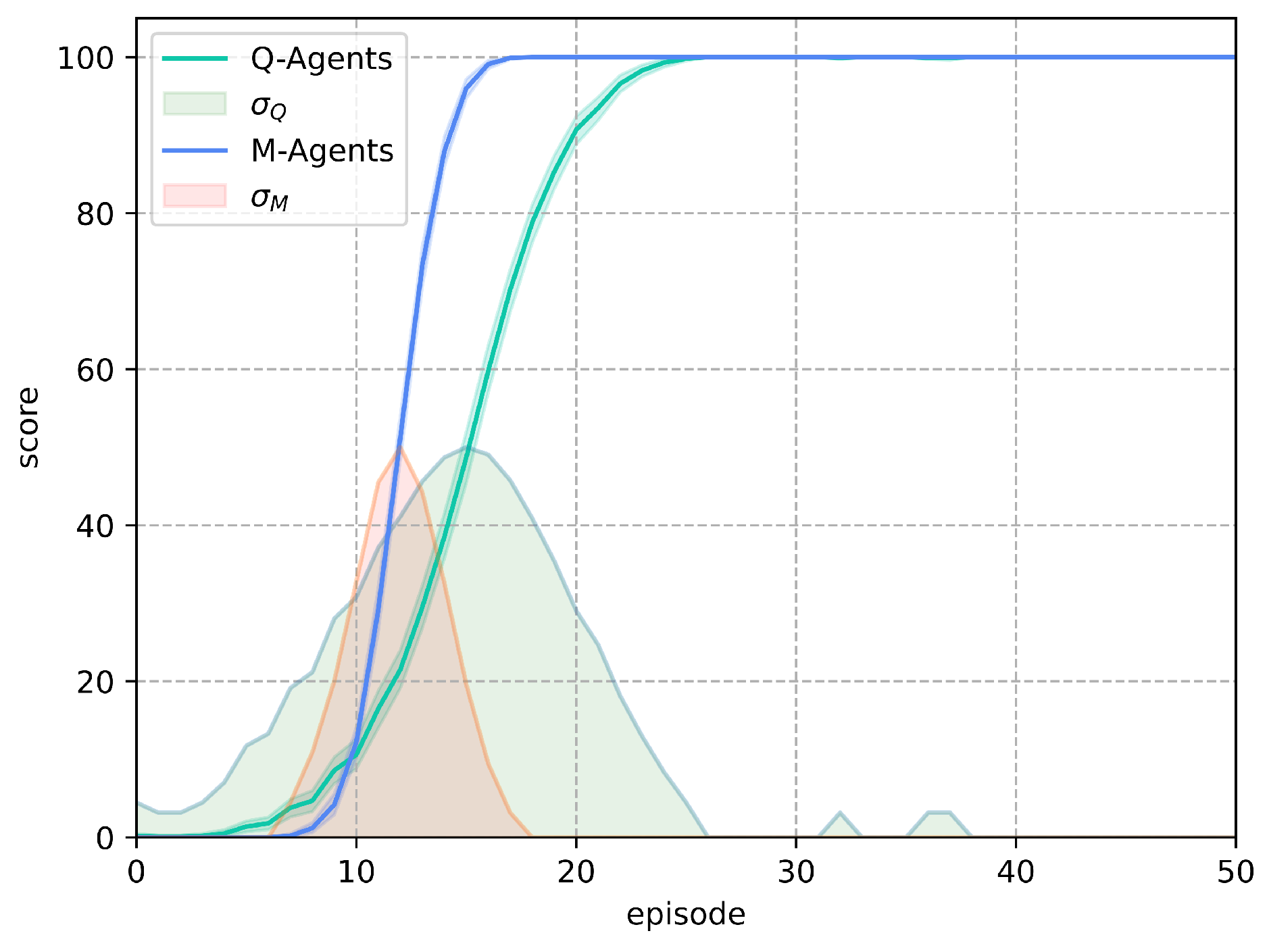

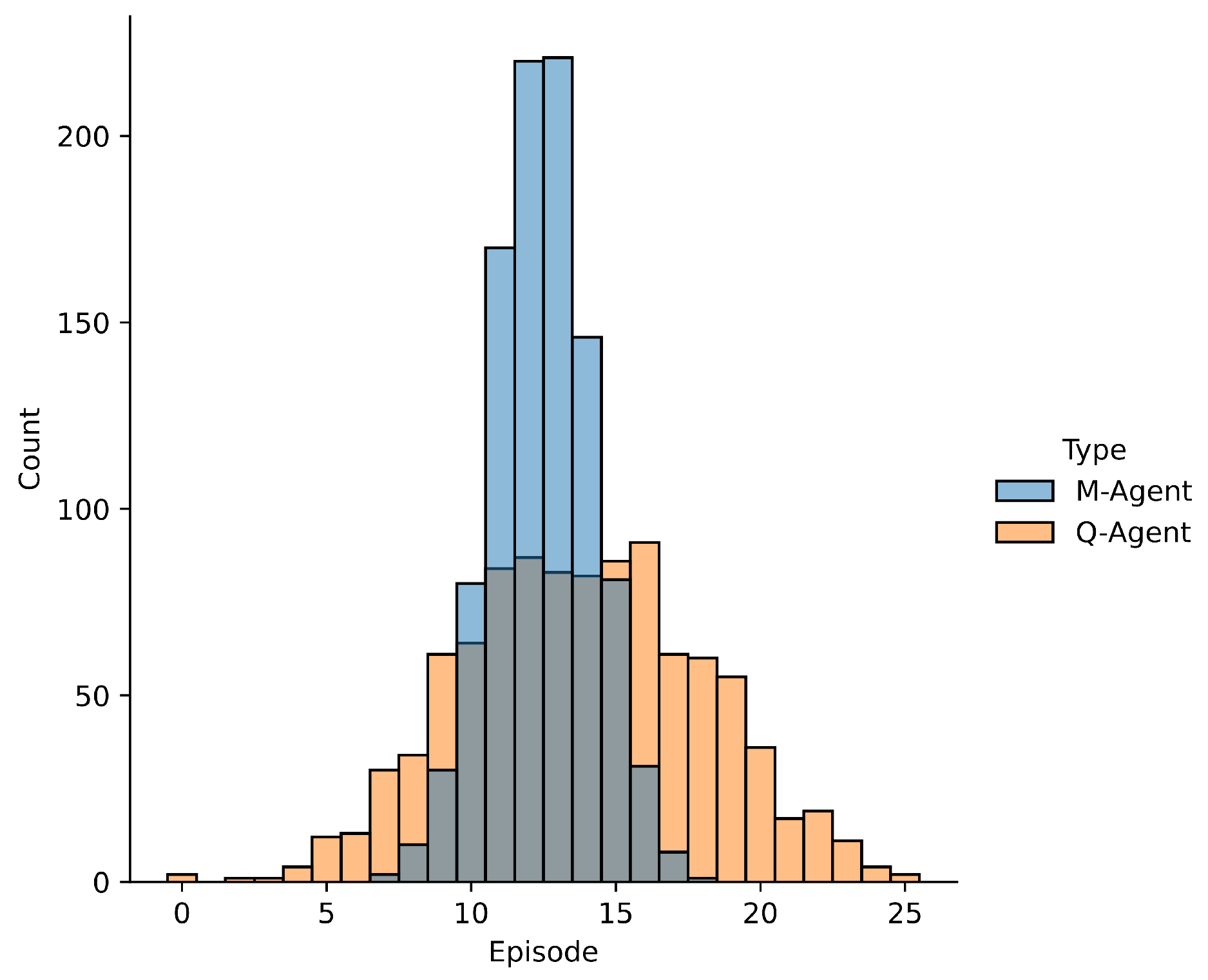

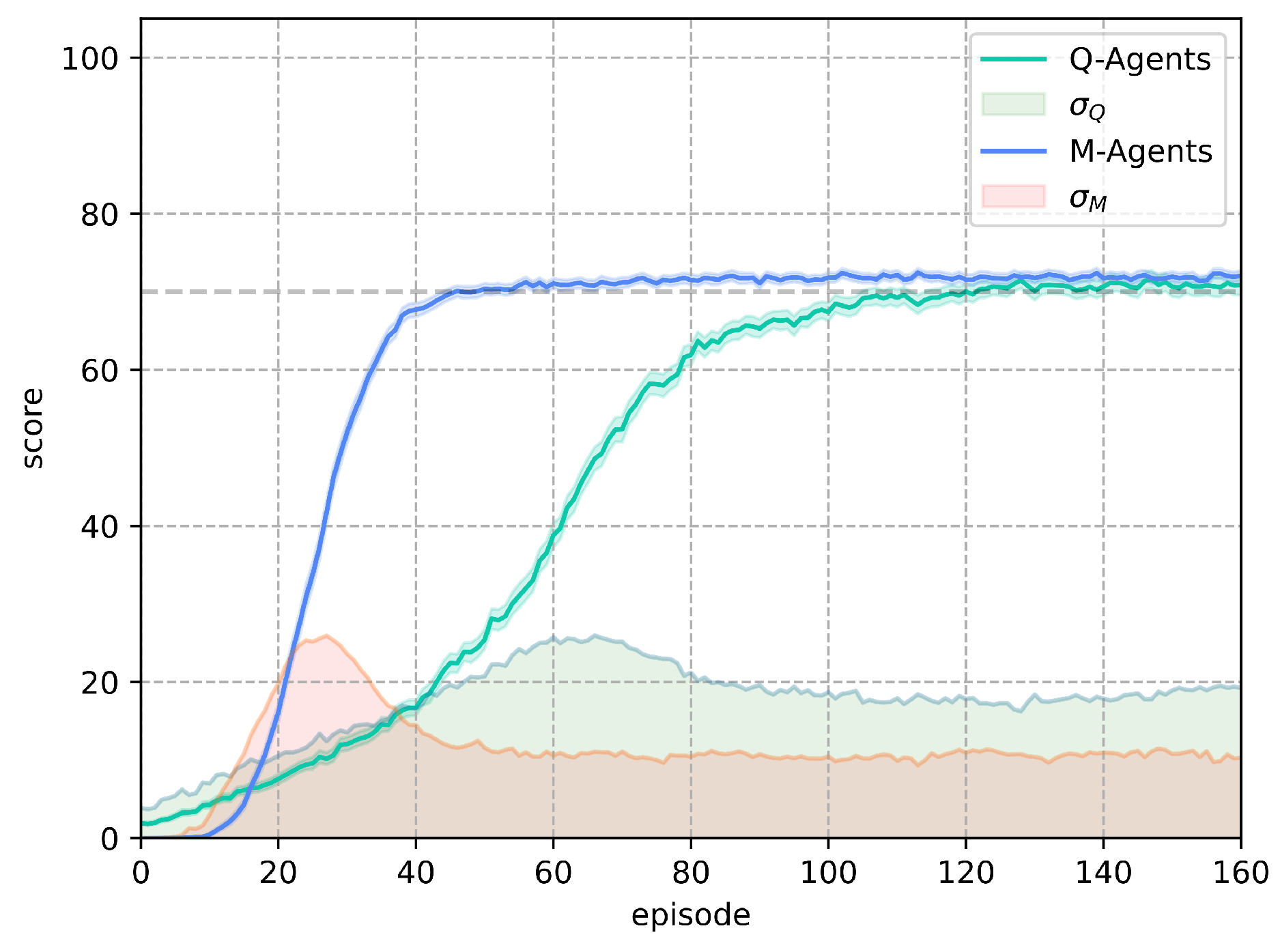

For the deterministic Frozen Lake environment, M-Learning shows faster learning, with less variability in the results obtained by the trained agents compared with Q-Learning, as presented in Figure 9. M-Learning requires 8 fewer episodes than Q-Learning for all agents to achieve a score of 100 in the deterministic configuration of the environment, which represents a 30.7% decrease in the number of episodes necessary for all agents to reach that score. Regarding how the results are distributed (Figure 10), although the average number of episodes needed to achieve the objective differs by about one episode between the two algorithms, M-Learning presents a 58.37% reduction in the standard deviation of episodes, which indicates more consistent results.
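Assuming the 30.7% is computed relative to the number of episodes Q-Learning needed, the two reported figures imply approximate absolute counts (derived here, not reported directly):

$$N_{Q} \approx \frac{8}{0.307} \approx 26, \qquad N_{M} \approx N_{Q} - 8 \approx 18$$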

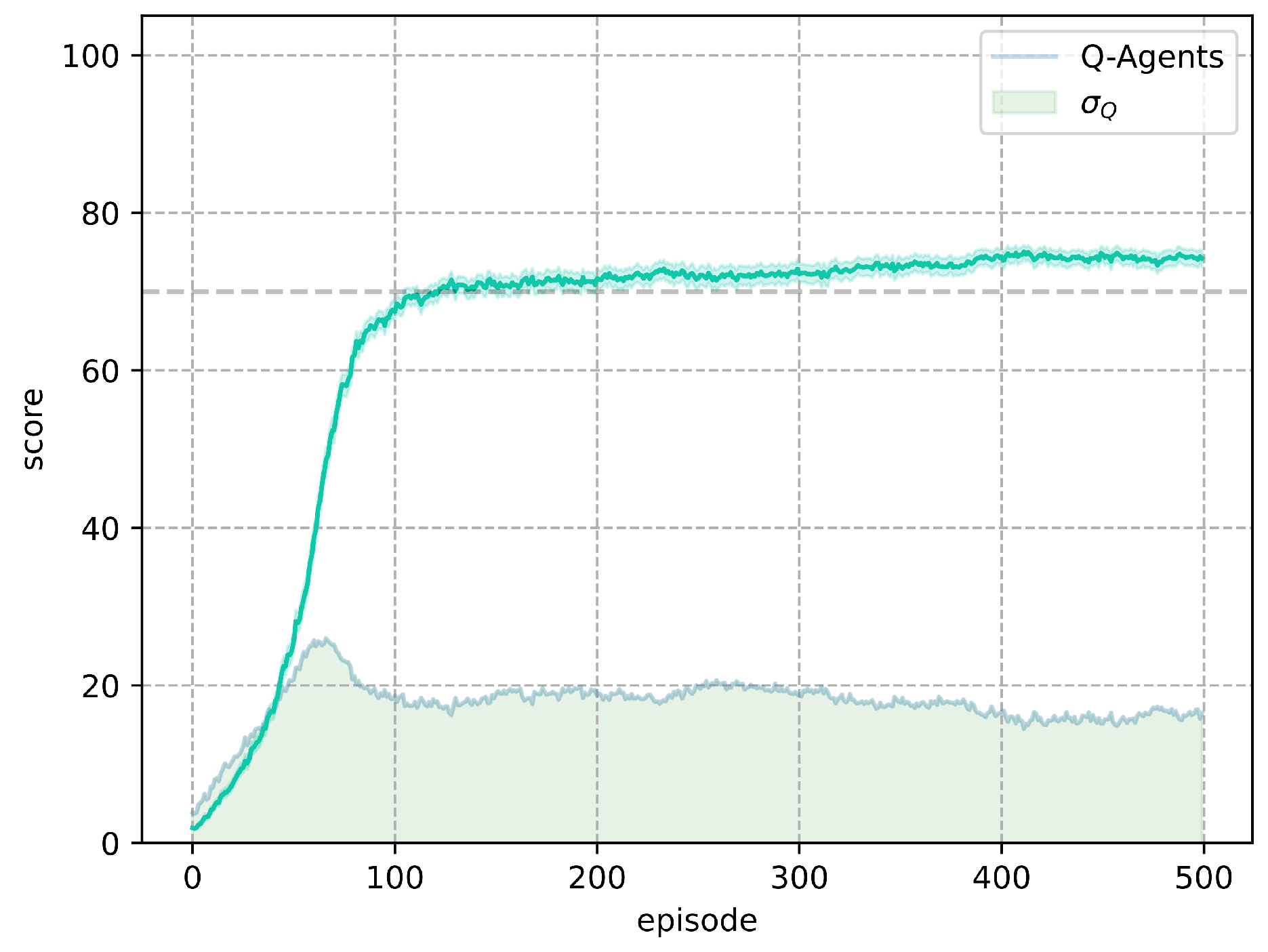

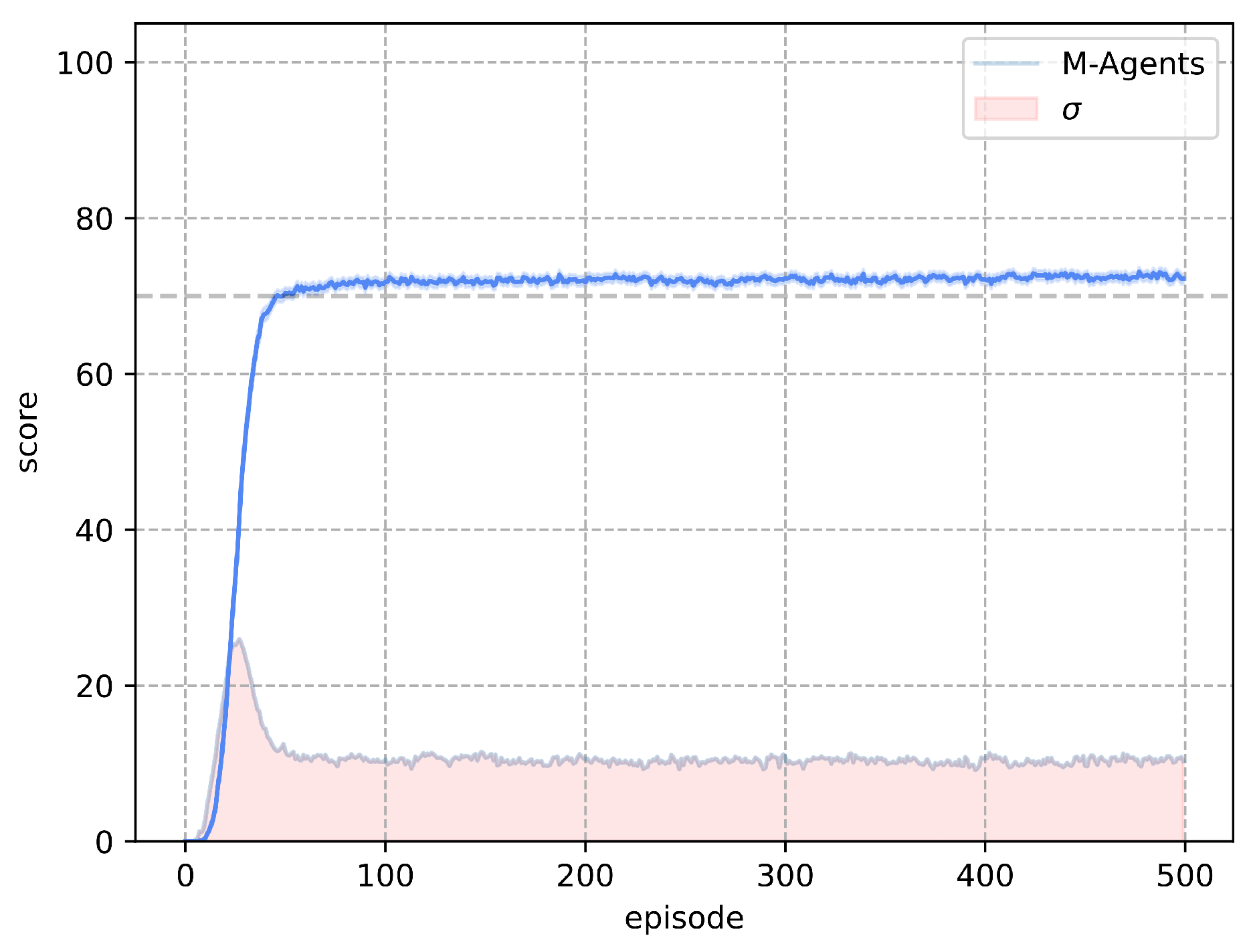

For the stochastic Frozen Lake environment, the algorithm M-Learning shows faster learning with less variability in the results obtained by the trained agents compared to Q-Learning as presented in

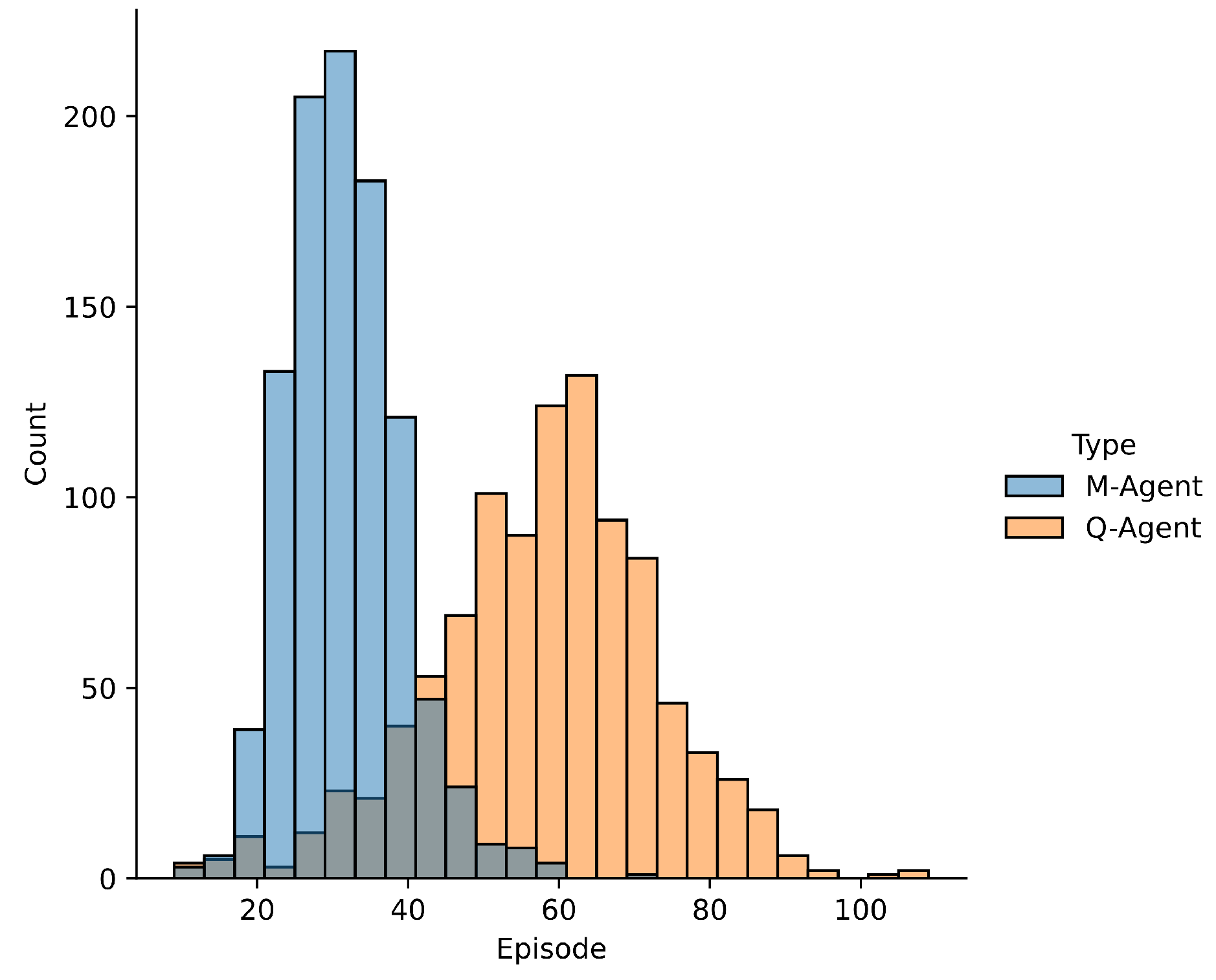

Figure 11. M-Learning takes 74 episodes less than Q-Learning to obtain a score above 70 for all agents in the stochastic configuration of the atmosphere. This represents a decrease of 61.66% in the number of episodes necessary for all agents to exceed a score of 70. Regarding how the results obtained are distributed, in

Figure 12, the average number of episodes to achieve the objective is 45.84%. lower for M-Learning, similar to the deterministic environment M-Learning presents a reduction of 49.75% in the standard deviation of episodes, which shows more consistent results.
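Under the same assumption (percentage relative to Q-Learning's episode count), the stochastic figures imply approximately:

$$N_{Q} \approx \frac{74}{0.6166} \approx 120, \qquad N_{M} \approx N_{Q} - 74 \approx 46$$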

Finally, for the Frozen Lake environment in both configurations and with delayed reward, the proposed M-Learning algorithm, with a single parameter to tune, presents better performance, requiring fewer episodes and showing less variability in the agents' scores. The results obtained in this document show that the proposed heuristic for propagating information under delayed reward is favorable for environments such as Frozen Lake.

Based on the results obtained for M-Learning, future research should continue evaluating its behavior in other environments to identify the problems where it can be useful.