1. Introduction

Cutting stock problems are combinatorial problems involving the creation of small objects from large objects according to a cutting plan made of cutting patterns. The larger the number of small objects, the greater the number of possible cutting patterns, and hence these problems are classified as NP-hard optimization problems [

1]. In this study, we focus on the single stock-size cutting problem according to Wascher’s classification [

2] for the one-dimensional case, or one-dimensional cutting stock problem (1D-CSP), as it is best known in most of the literature. The 1D-CSP arises in industries manufacturing goods from paper, metal, glass, and wood, among others. The goal is the reduction of material usage and, therefore, the minimization of the related costs. Apart from cost reduction, industries such as the building industry are concerned with reducing gas emissions due to metal and concrete usage, as pointed out in [

3]. Some other studies also address the problem by considering mathematical models that include the reuse of leftovers, as in [

4].

Most approaches to solving the 1D-CSP are based on linear programming (LP) methods, with the column generation technique being one of the most outstanding ones. Reference [

5] presents some LP formulations proposed in the early stages of addressing the 1D-CSP. Additionally, a comprehensive survey of LP methods used to solve the 1D-CSP up to 2015 can be found in [

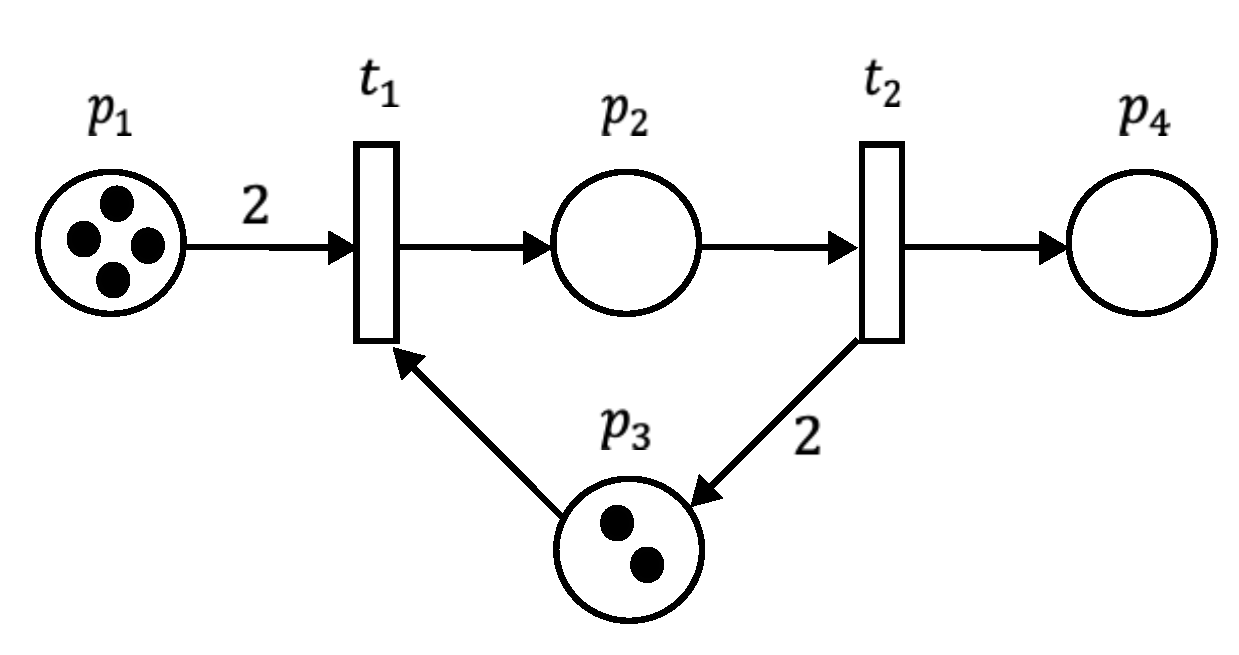

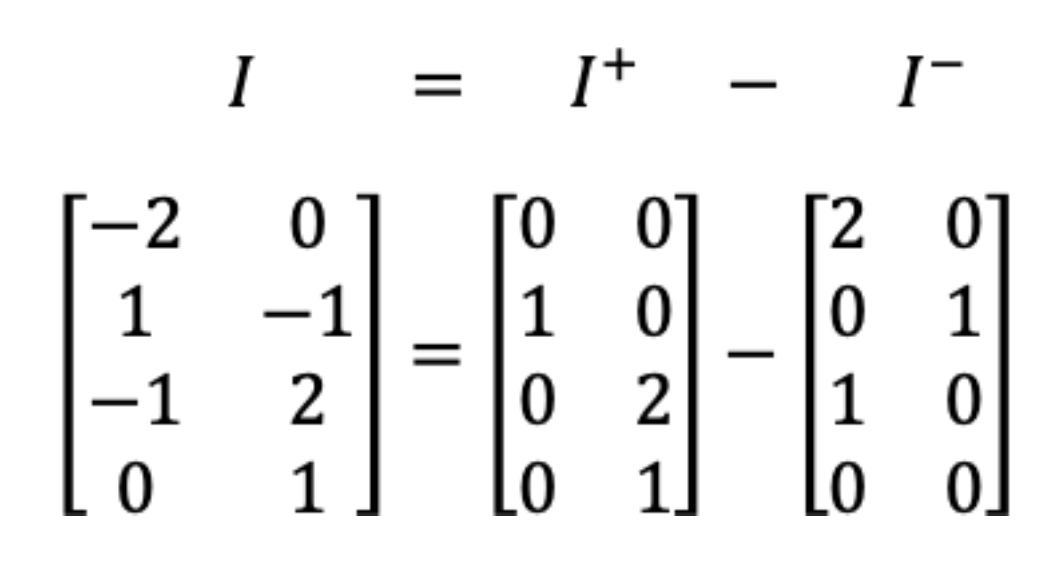

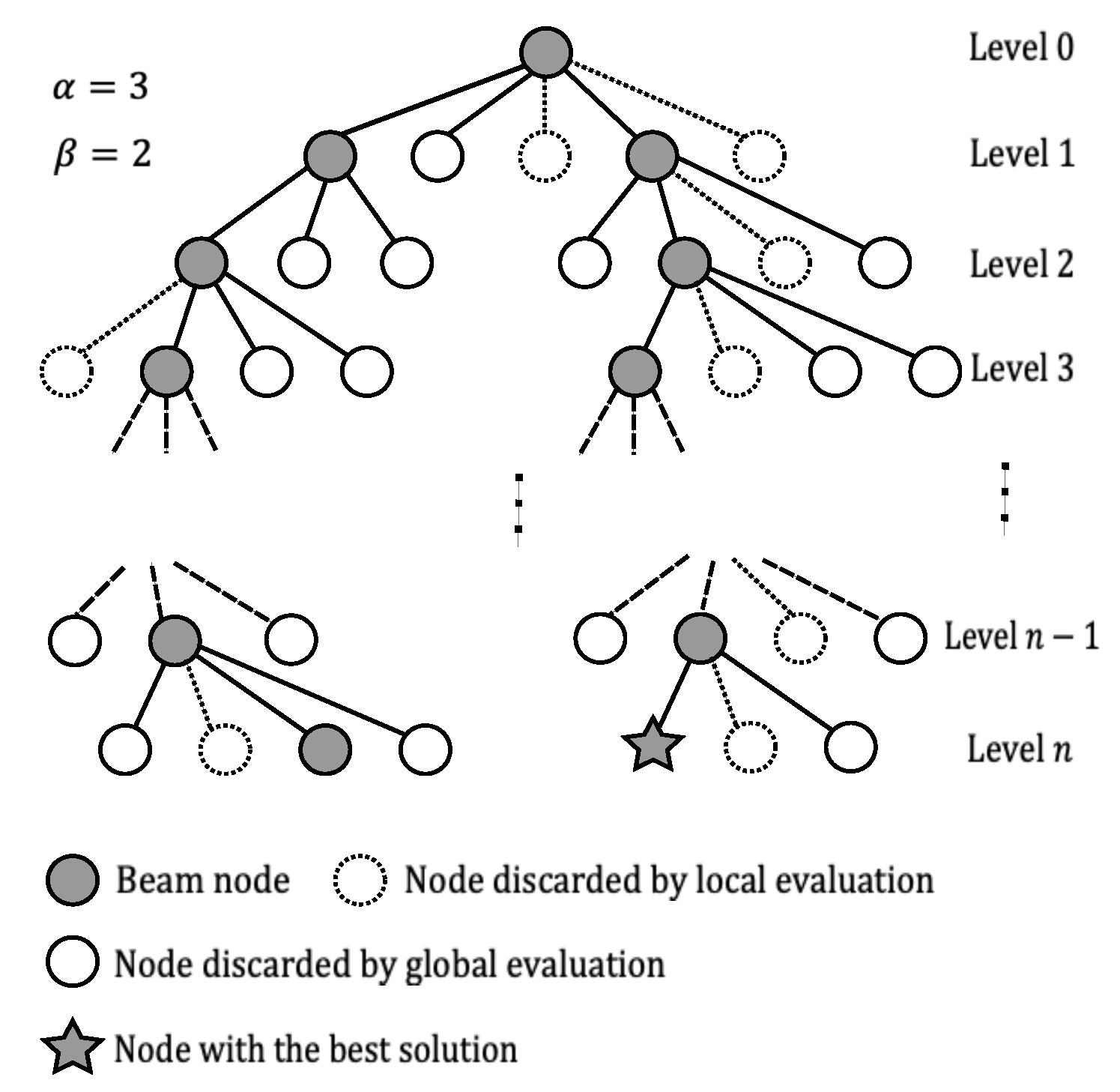

6]. Column generation and other techniques focus on generating and reducing the number of cutting patterns. In the 1D-CSP, a cutting pattern is an ordered sequence of items cut from a large object or stock. One-cut models and arc-flow models are other paradigms used to address the 1D-CSP, as solutions to the 1D-CSP can be constructed as sequences of items. It is well-known that LP methods can yield optimal solutions to the 1D-CSP, provided that the complexity of the instances is small, that is, with a small number of small objects or items. This limitation has led to the use of heuristic and metaheuristic approaches. Although these methods usually result in near-optimal solutions, the computational time is typically higher compared to LP methods. Additionally, a drawback of using metaheuristics is the complexity of solution representation and the numerous parameters that must be set and tuned. Since a solution for the 1D-CSP can be sequentially constructed, we propose using ordinary Petri nets (PN) to model the solution construction. We take advantage of the reachability tree to find the best solution through the implementation of a filtered beam search algorithm (FBS). Beam search algorithms are a type of any-time search algorithm, suitable for optimization problems where solutions can be constructed sequentially and represented as a tree structure. Unlike other any-time search methods, beam search algorithms do not perform backtracking. Only the most promising nodes at each level are retained to continue searching for the best path from the root node to a desired final node, while non-promising nodes are pruned permanently. The FBS is an improvement to the classical beam search algorithm. At each level, it first filters the nodes through a local evaluation and then performs a global evaluation among the filtered nodes to retain the best ones for further investigation.

In the literature, most mathematical models for the 1D-CSP consider single-objective functions. However, [

7] demonstrated the effectiveness of using a bi-objective function. In this study, we consider the bi-objective function of the mathematical model (1)-(3) based on the one used in [

8] for the 1D-CSP without contiguity. The objective function to be minimized accounts for both the minimization of waste and the minimization of the number of stocks with waste.

In model (

1)-(3),

is the length of the stock,

m is the number of different items,

k is the total number of stocks used.

represents the demand or total number of orders of item

i, while

is the length of item

i,

is the number of orders of item

i in stock

j.

and

are defined in Equations (

4) and (5), respectively, where

represents the wastage of stock

j.

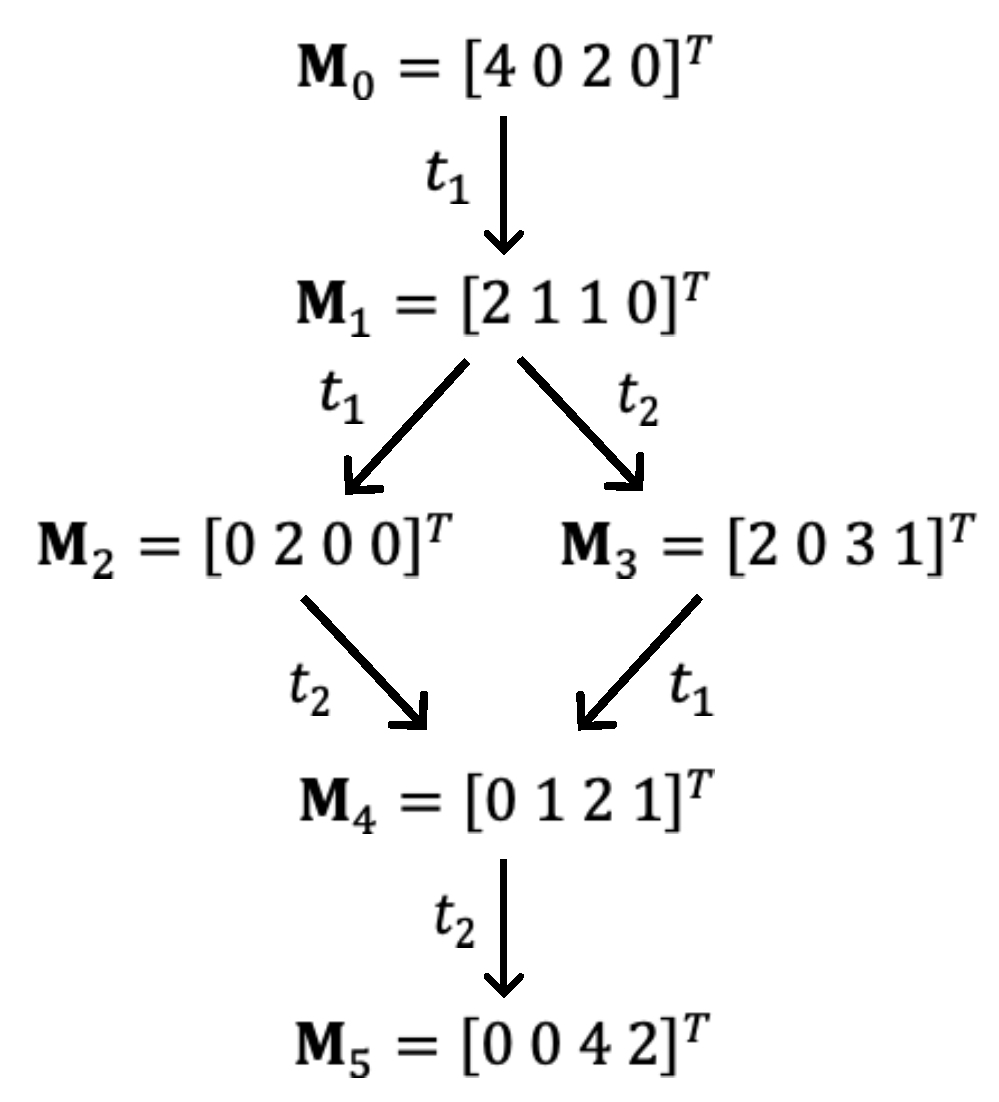

Even though the main drawback of PN is state explosion, we do not generate the complete reachability tree. Instead, we generate promising states from the initial state that lead to the desired final state with minimum waste, according to the FBS, using the bi-objective function (

1) to evaluate the nodes. The initial state or marking of the PN is where no items have been taken to form a solution, and the final state or marking is where all items have been taken to form a solution. At each level of the tree, partial solutions are evaluated locally, and the best ones are then evaluated globally. Nodes with the best partial solutions are kept for further investigation. The advantages of using PN are twofold: they are useful for validating solutions when PN is deadlock-free, and they can be combined with graph search methods to solve optimization problems. Some of the problems addressed under the PN paradigm include job and flow shop scheduling problems. In these cases, PN and graph search methods like

algorithms [

9,

10,

11,

12] and beam search algorithms [

13,

14,

15,

16] have been used to obtain the best scheduling solutions. However, to the best of our knowledge, PN has not been used to solve cutting problems. Our experimental results show that PN is suitable for modeling pattern generation and solving the 1D-CSP, with acceptable performance in both solution quality and computational time.

2. Literature Review

It is widely considered that Kantorovich first addressed the 1D-CSP in [

17] providing an LP formulation of the problem and using the multipliers method to solve it. Later, [

18,

19] used the column generation technique to solve the integer linear programming formulation by addressing an associated knapsack problem. Following the introduction of the column generation technique, several procedures were developed to improve its computational performance using branch-and-price algorithms [

20,

21,

22,

23]. Other algorithms have focused on constructing and reducing the number of patterns [

24,

25,

26,

27,

28]. Alternative approaches to modeling and solving the 1D-CSP include the arc-flow and one-cut formulations. The arc-flow formulation, a graph-based approach, was introduced in [

29]. Recently [

30] proposed and compared arc-flow formulations based on other algorithms. One-cut formulations have received little attention since their use in [

31]. Moreover, [

32] proved the equivalence among pattern-based formulations, such as that of Gilmore & Gomory [

18,

19], and the arc-flow and one-cut formulations. [

33] used dynamic programming to solve the 1D-CSP, and [

34] showed the relationship between arc-flow formulations and dynamic programming in solving optimization problems.

LP methods are restricted to instances of a small number of items, and to overcome this limitation, many researchers have proposed metaheuristic methods like genetic algorithms [

35,

36,

37]. However, the use of genetic algorithms faces the difficulty of finding the best way to encode the solutions or cutting patterns, as pointed out in [

38,

39,

40,

41]. Evolutionary programming was used in [

8,

42] to address the 1D-CSP with and without contiguity. Additionally, [

43] concluded that evolutionary programming performs better than genetic algorithms for solving the 1D-CSP. Swarm intelligence algorithms, such as particle swarm optimization algorithms [

44,

45,

46,

47] and ant colony optimization algorithms [

48,

49,

50], have also been used to solve the 1D-CSP. The main issues with these two algorithms are parameter tuning and the need for hybridizations to avoid premature convergence and control the search space. Additionally, discretization procedures are required since these algorithms are better suited for continuous problems. Other metaheuristic algorithms for solving the 1D-CSP include tabu search, simulated annealing [

51], and iterative local search [

52,

53]. More recently, [

54] introduced the least-lost algorithm to simultaneously minimize waste and stocks with waste in the 1D-CSP, showing better results than the evolutionary programming algorithm of [

8]. [

55] compares a Generate & Solve algorithm to the column generation technique. The Generate & Solve algorithm uses a reduction technique based on the one presented in [

56] to deal with large instances of the 1D-CSP. Although the algorithm is not better than the column generation technique, it can solve larger instances in acceptable computational time with quasi-optimal solutions. [

57] introduces a deep reinforcement learning algorithm to minimize the number of stocks and maximize the length of leftovers. The algorithm performs well in terms of computational time and handling large instances.

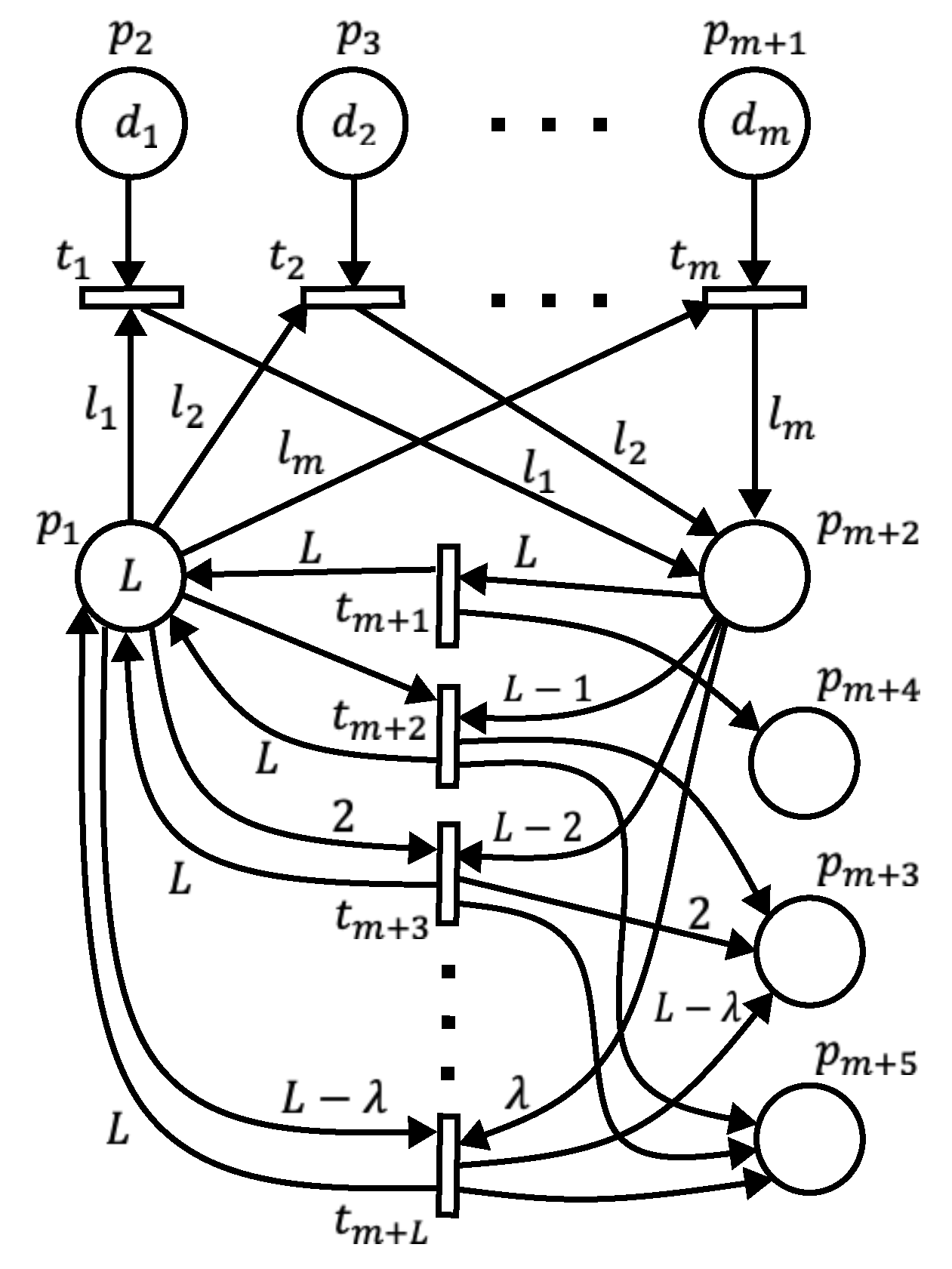

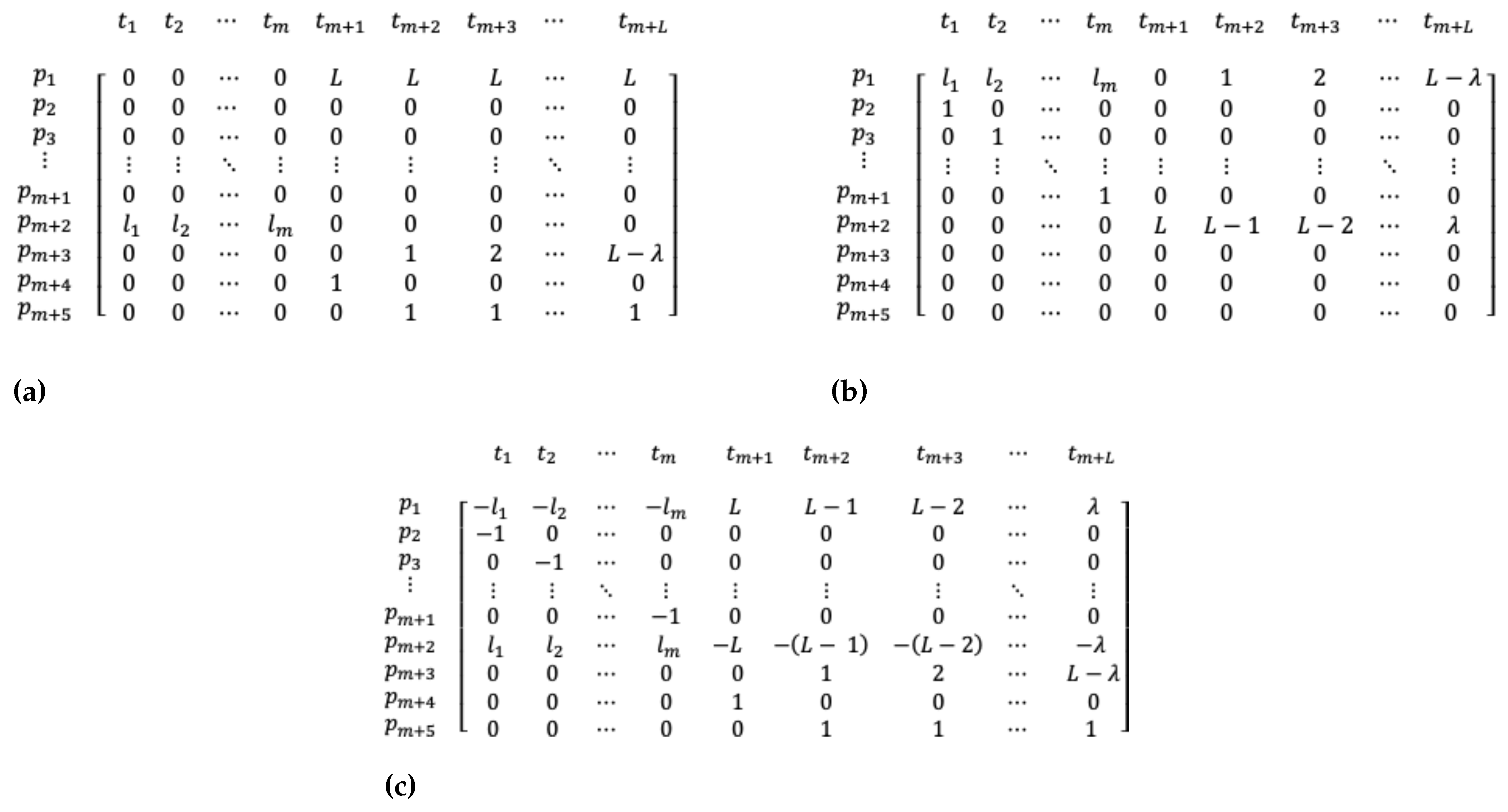

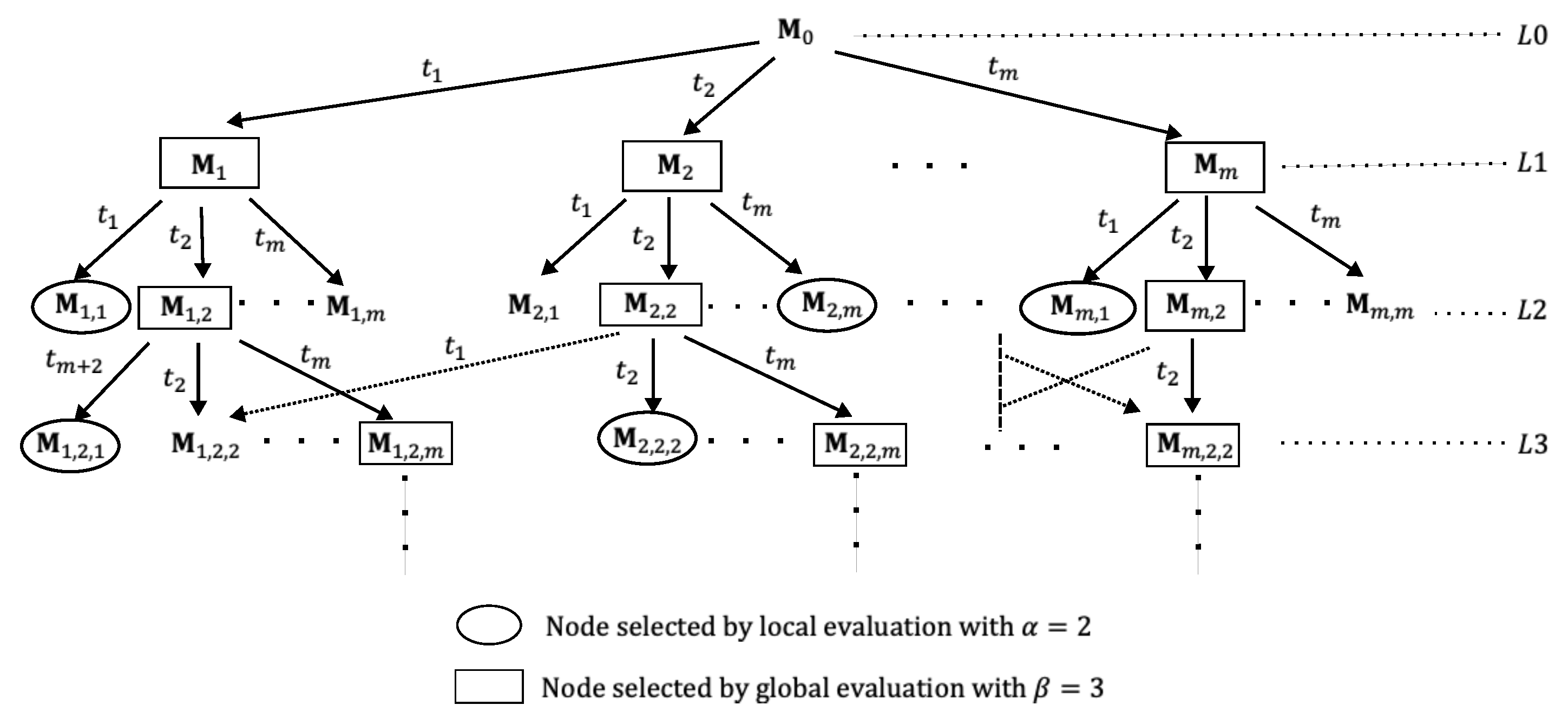

5. Filtered Beam Search Algorithm Implementation

Consider the reachability tree construction of the PN-CSP with initial marking

for a given instance of the 1D-CSP. Starting from this initial marking, all potential solutions are derived by investigating firing sequences leading to the final marking

. However, exhaustive exploration of all sequences is computationally intensive. To solve this issue, we use the FBS, which reduces explored markings as we progress in the reachability tree. FBS does not perform backtracking like traditional any-time search methods, optimizing computational efficiency. The effectiveness of FBS depends on parameters

and

, chosen to balance exploration and exploitation. Algorithm 1 outlines our FBS implementation, starting by generating all child nodes from firing transitions

at the initial marking or the root node (level 0 or

), resulting in

m markings at level 1 (

), as shown in

Figure 7. In our FBS, each marking at L1 is neither locally nor globally evaluated, treating each node as a potential beam node, contrary to [

59]. We made this decision because, by the local evaluation, the algorithm will tend to select the solutions with the largest items placed first, minimizing waste in the initial stock. Preliminary experiments showed this approach yields better solutions compared to selecting only

nodes at

. Thus, starting from L2, we apply both local and global evaluations, setting

to ensure solution diversity.

In most beam search algorithm implementations, node costs at any node are computed using a function that considers cumulative costs up to the node and an estimation of the remaining solution’s cost. The effectiveness of these algorithms heavily depends on choosing the right estimation cost function. Usually, in the FBS algorithm, local evaluation uses cumulative costs, while global evaluation considers both cumulative and estimated costs. Our FBS implementation employs the bi-objective function (

1) as a fitness function to perform both local and global evaluations. This function calculates the complete solution, capturing the number of stocks used, the total waste generated, and the number of stocks with waste that have been generated in the solution.

As we progress in constructing the reachability tree, each node or marking represents only a partial solution, thus its corresponding cost is also partial. Despite not having a complete solution, partial solutions provide insights into the number of stocks used, those with or without waste, and the amount of unused material or potential waste on the current stock. For example, at the initial marking or root node, no stock has been used, resulting in no stocks with or without waste, and an unused material length of

L. At level L1, item markings represent solutions where one order of each item has been taken, but no stock has been fully utilized with or without waste. Eventually, as the reachability tree progresses, pattern markings are reached at some level, indicating that a cutting pattern has been obtained. At the first pattern marking, a stock has been used, and the waste amount for the stock is known. Subsequent item markings’ costs must consider the used stock and its waste. It can be seen from the bi-objective function (

1) that the first term sums the partial wastage of each used stock, while the second sums the number of stocks with waste. We compute two cumulative costs accordingly: one for waste using Equation (

8) and another for the number of stocks with waste using Equation (

9). Both are employed in Equation (

10) for the global evaluation of nodes. These two cumulative costs are computed upon reaching a pattern marking, while Equation (

11) is utilized for local evaluations, considering the unused material of the current stock in each solution.

In Equations (

8) and (

11),

is obtained from the incidence matrix of PN-CSP, where

for

. This is because when a pattern transition is fired, the marking of place

is immediately updated to

L, indicating that the amount of waste in the already used stock is lost. However, this amount of waste can be determined from the incidence matrix. The cumulative costs

and

are set to zero for the item markings between the initial marking and the first pattern marking. These costs are computed and updated at each reached pattern marking. Consequently, the outcomes of

and

are retained for the item markings between two subsequent pattern markings. Once the final marking is reached, the last

outcome is set as the best solution

. To determine whether the final marking has been reached, at each level, we compare the markings obtained with the desired final marking

. However, we only consider the marking of places

. Specifically, we compare the marking

, obtained at some level, with the final marking

. The ’*` means that the marking of the corresponding places is not considered for the comparison. This is because we do not know in advance how much waste would be generated by the best solution or the number of stocks with and without waste. However, we know that at the final marking, there are no more item orders to place, and the last stock is generated when the last item order is taken for the final cutting pattern.

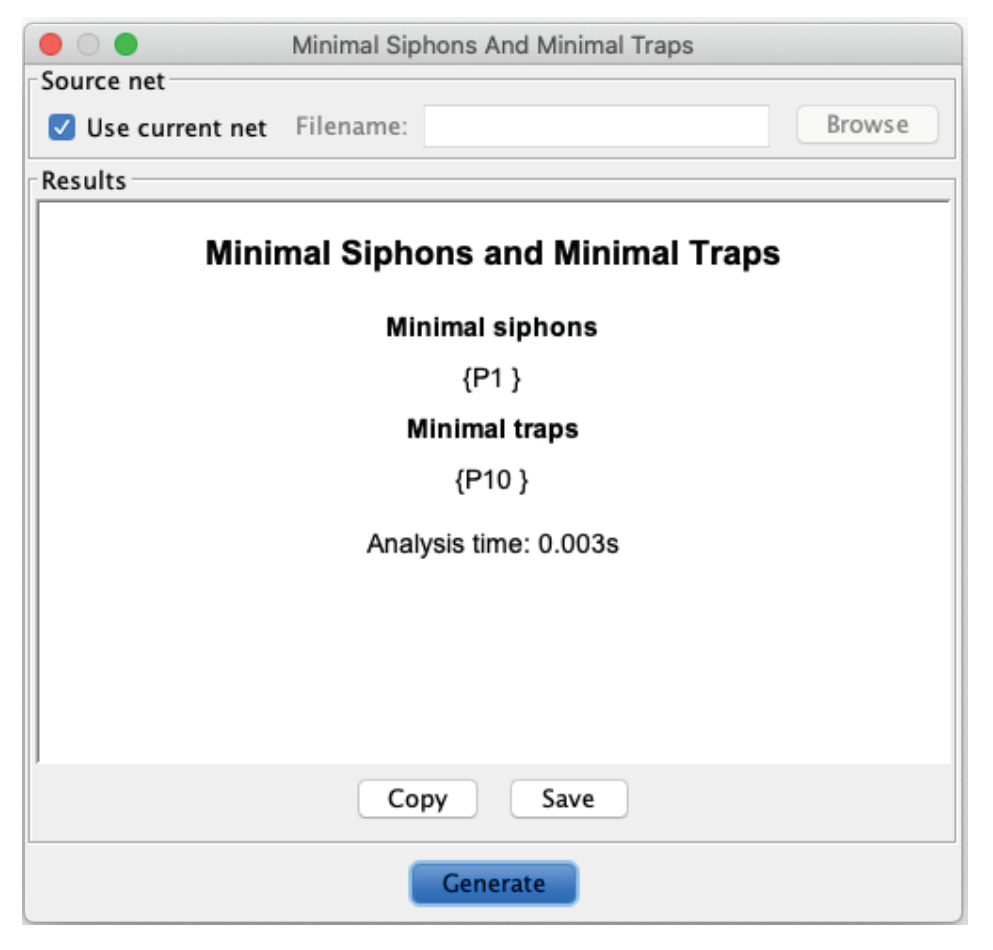

Observe from Algorithm 1, lines 17 and 29, that when performing global and local evaluations, we do not necessarily select

or

number of markings, respectively. Instead, we select the minimum value between

(or

) and the number of markings obtained globally (or locally). This is because, in a reachability tree, a marking could be generated by firing different transitions, resulting in fewer markings than the

markings needed at a level or fewer than the

markings needed from a parent marking or beam node. In some beam search algorithm implementations, if the required

nodes are not met for some level, then more parent nodes at the previous level are explored to meet the required

nodes. However, we do not do this in our FBS implementation, as it is possible to encounter dead markings. This might make it impossible to complete the required number of nodes. Dead markings are related to the liveness property of a PN and this property is closely related to the presence of siphons [

67]. It is known that a PN with siphons is likely to be non-live, as once the places of a siphon become unmarked at some marking, they remain unmarked in all subsequent markings. We performed a structural analysis for an instance with ten different items and a total of 20 orders, using an open-source PN modeling platform called PIPE2 version 4.3.0 [

68] to determine the presence of siphons. The results are shown in

Figure 8, indicating that place

(

according to the enumeration of the PIPE2 platform) is a minimal siphon. Extending this result for the general PN-CSP model with the initial marking in the general form, and considering the dynamics described in the above paragraphs, places

could be minimal siphons and could become unmarked at some marking. The PN-CSP would become deadlocked if all the places

are unmarked, although this situation indicates that the desired final marking has been reached. However, to prevent our algorithm from getting stuck due to incomplete

nodes at some levels and potentially failing to reach the desired final marking, we decided to use the minimum number of nodes as outlined in Algorithm 1.

|

Algorithm 1 Pseudocode of algorithm FBS-PN-CSP |

- Input:

Number of different item types (m), stock length (L), item lengths , and item orders . - Output:

- 1:

With the instance data, generate the incidence matrices , and obtain the incidence matrix . - 2:

Generate the initial marking as . - 3:

Set the beamwidth and the filterwidth . - 4:

- 5:

- 6:

- 7:

Generate level of the reachability tree with the marking as the root node such that . - 8:

- 9:

- 10:

while do

- 11:

for each marking in do

- 12:

Get , and . - 13:

end for

- 14:

if level > 1 then

- 15:

Kept the best markings in with the lowest cost and form the set with these markings. Eliminate the remaining markings from . - 16:

else

- 17:

Kept the markings in and form the set with these markings. - 18:

end if

- 19:

- 20:

for each marking in do

- 21:

Determine the reachable markings from with Equation ( 6) and form the set with those reachable markings, where . - 22:

if then

- 23:

Obtain , , and for each . - 24:

if level >0 then

- 25:

Check whether any marking has already been generated previously. If it has, let be the previously generated marking that is equal to , and then eliminate from the reachability tree the one with the maximum cost. - 26:

if then

- 27:

Kept the markings with the lowest cost and eliminate the remaining ones from . - 28:

end if

- 29:

else

- 30:

Keep the markings in . - 31:

end if

- 32:

Make

- 33:

end if

- 34:

end for

- 35:

Check whether the final marking has been reached. - 36:

if has been reached then

- 37:

- 38:

- 39:

else

- 40:

- 41:

- 42:

end if

- 43:

end while |

6. Experiments

Although we identified a wide variety of datasets in the literature, most studies do not use common datasets or performance measures to compare the algorithms. Additionally, implementing the published algorithms correctly can be challenging, and often, the code is not shared. Nevertheless, we identified two algorithms that share some similarities with our algorithm and are not based on populations. These algorithms were tested on five datasets that we found suitable for comparison with our algorithm. The first algorithm is the Least Lost Algorithm (LLA), introduced in [

54], which uses a dataset of ten instances with a single stock size. We refer to this dataset as

hinterding because, to the best of our knowledge, it was first used in [

35]. The LLA is executed in two phases. In the first one, the item lengths are arranged in decreasing order while in the second, the second half of the arrangement is put before the first half. In both phases, the items are taken from the arrangement to construct cutting patterns by beginning with those that have no waste. If no additional cutting patterns without waste can be found, the search continues with patterns that have one unit of waste. This process continues by increasing the allowed waste by one unit until all the item orders have been included in a cutting pattern. If the number of stocks used is above the optimal theoretical value by more than 5%, the algorithm performs the second phase. The feature of increasing the allowed waste by one unit is represented in our PN-CSP model with the pattern transitions. The LLA was compared to the EP algorithm shown in [

8] over the same dataset and the LLA yielded better results.

The other algorithm we compare to the FBS-PN-CSP is the Generate & Solve algorithm (G&S) presented in [

55]. This algorithm is also executed in two phases. The first one reduces the problem size using a tree method that constructs all possible cutting patterns. Feasible cutting patterns are then selected and sent to the second phase, where an integer linear programming (ILP) method is implemented to obtain the best solution for the reduced set of solutions. In the first phase, a new reduced set of solutions is generated by considering an aging mechanism for previously used solutions, which can be re-selected as long as they have not reached a specified age limit. These new solutions are then sent to the second phase to determine the best solution among them, replacing the older solution if the new one is better. This process continues until a predefined time limit is reached, and the last solution found is returned as the best solution for the problem. The G&S algorithm was compared to the column generation technique. Both algorithms performed similarly for small instances, but the G&S algorithm was more effective for large instances. From the datasets used in [

55], we selected the ones called

hard28,

hard10, and

wae_gau1, available at [

69]. Additionally, we included the dataset

falkenauer_U from the same repository, which consists of four groups named

binpack1,

binpack2,

binpack3, and

binpack4.

Table 1 provides details about the datasets. The third column lists the minimum and maximum number of item types among the instances of each dataset, while the fourth column shows the number of different item types, along with the standard deviation in rounded brackets. Both the

hinterding dataset and the

hard10 dataset have four different item types, but the variability in the former is greater than in the latter.

The comparisons among the three algorithms were made with respect to the number of stocks used and its optimal theoretical value, which can be obtained using Equation

12. The experiments were conducted on a Quad-Core Intel

(R) Core

(TM) i5 computer with a 3.4 GHz processor and 8 GB RAM. All three algorithms were coded in Python 3.11.1. For the second phase of the G&S algorithm, we used the Scipy package with the

linprog library. We also set the same parameter values as in [

55], that is, specifically: a time limit of 600 seconds for the G&S algorithm, a mutation rate of 10%, a maximum age of 2, and a maximum number of cutting patterns for the tree construction of 1,000,000. Additionally, we also executed the G&S algorithm 10 times for each instance. However, we did not set a time limit in the second phase of the G&S algorithm, as the solutions from the ILP solver were obtained in an acceptable time.

One of the issues with beam search algorithms is the selection of the beam width . The smaller the value, the faster the search, but this comes at the loss of optimality due to aggressive pruning. We conducted preliminary experiments to determine suitable and values, considering the condition . These experiments were carried out with the hinterding dataset. In all ten instances of this dataset, we observed that the best solutions were found with a beam width between 5 and 25; with higher values, the solution improvement was negligible. Thus, for all the experiments with the FBS-PN-CSP algorithm, we used the interval for the beam width, at steps 5, 15, 25, and 35, with for each value of . However, for the hinterding dataset, we additionally implemented a value of 45, and for the hard10 dataset, we only could implement a beam width of . With , no good solution was found in any instance.

7. Results

Table 2,

Table 3,

Table 4,

Table 5,

Table 6,

Table 7,

Table 8 and

Table 9 show the best results obtained with each algorithm for the number of stocks used and the time taken. Additionally, we provide the

value for all the instances and the percentage above this value (%

) obtained by each algorithm. The FBS-PN-CSP, G&S, and LLA algorithms were compared only with the

hinterding and

falkenauer_U datasets since the LLA algorithm ran out of memory for the other datasets. The minimum value of stocks used among the compared algorithms is highlighted in boldface. For the G&S algorithm, we provide the best solution found within the 10 executions, and likewise, for the FBS-PN-CSP algorithm, we include the best combinations of beam width and filter width where the best stock result was found.

For the hinterding dataset, the three algorithms obtained the same optimal theoretical value for the 1a-5a instances and the 7a instance. The FBS-PN-CSP and the G&S algorithms also obtained the same best result for the 6a instance. Only the G&S had the optimal theoretical value for the 8a-10a instances. The FBS-PN-CSP algorithm had better results than the LLA algorithm for the 6a, 8a, and 9a instances, while for the 10a instance, both had the same result. Although the FBS-PN-CSP algorithm was not better than the G&S algorithm for instances 8a-10a, it obtained a percentage above the optimal theoretical value of less than 1%. The FBS-PN-CSP algorithm was more efficient than the G&S algorithm in 6 out of 7 instances where they are equal, namely 2a-7a. However, the LLA algorithm had better times in all the instances where it had the same results as the other two algorithms. The beam width of the FBS-PN-CSP algorithm seems to increase as the number of items and the stock length increase. The highest beam width and filter width values were obtained in the 10a instance, which has the higher number of items and the longest stock.

The results of the FBS-PN-CSP and G&S algorithms are shown in

Table 3 for the

hard28 dataset. Both algorithms obtained the same best results except for the BPP900 and BPP781 instances, where the FBS-PN-CSP had the better results. Nevertheless, neither obtained the optimal theoretical value. The FBS-PN-CSP was more efficient in 15 out of 27 instances where both algorithms obtained the same result. As for the beam width of the FBS-PN-CSP, this was the minimum value of the tested values, that is,

for all the instances. For the

hard10 instances, the G&S algorithm was better than the FBS-PN-CSP algorithm as shown in

Table 4. However, the G&S algorithm only had the optimal theoretical value in one instance, namely HARD9. Even with the smaller value of the beam width, the time required for the FBS-PN-CSP was far higher than the time of the G&S algorithm. For this reason, we could not implement larger values for the beam width since the computational cost was prohibited, although it did not run out of memory. Despite the results for stock used by the FBS-PN-CSP algorithm, it was not far from those of the G&S and could be at least equal if higher values of the beam width can be implemented.

In 12 out of 17 instances of the

waegau1 dataset, the FBS-PN-CSP algorithm matched the best results of the G&S algorithm (

Table 5), and in 9 of these 12 instances, it had better times. In 4 out of the 17 instances, the FBS-PN-CSP had better results, and only in one instance, the G&S was better than the FBS-PN-CSP. In 7 out of the 17 instances, the FBS-PN-CSP obtained the optimal theoretical value, while the G&S did so in only four instances. Regarding beam width, four instances required the higher tested values, namely 25 and 35, but with a small filter width.

Table 6 shows the best stock results for the

binpack1 instances of the

falkenauer_U dataset, where the FBS-PN-CSP, G&S, and LLA algorithms are compared. The FBS-PN-CSP and the G&S algorithms obtained the optimal theoretical values of each instance, but the FBS-PN-CSP had the best times. The LLA algorithm achieved the optimal theoretical values in 12 out of 20 instances and had better times than the FBS-PN-CSP algorithm. Only in two instances did the FBS-PN-CSP use the higher beam width values (25 and 35), but in most instances, the value was 5, which is the smallest value tested. For the

binpack2 instances, the FBS-PN-CSP had the same results as the G&S algorithm in 19 instances (

Table 7), and these were equal to the corresponding optimal theoretical values. All the results of the G&S algorithm were equal to the optimal theoretical value. The LLA algorithm had the optimal theoretical values in half of the instances and the best times among the three algorithms. The FBS-PN-CSP algorithm had better times than the G&S in ten of the instances where they had the same stock result. The beam width was mostly 15 throughout the

binpack2 instances. The

binpack3 results (

Table 8) show that the G&S algorithm obtained the optimal theoretical values in all instances, and the FBS-PN-CSP algorithm did so in 17 instances. Likewise, the LLA algorithm achieved this in only 11 instances, but with better times than the FBS-PN-CSP and G&S algorithms. Only in the instance u500_14 did the FBS-PN-CSP obtain a better time than the G&S algorithm. The values of the beam width were 15 and 25 in most instances, and the filter value was higher than in the previous groups of instances of the

falkenauer_U dataset. Similar results were obtained for the

binpack4 instances shown in

Table 9, where the G&S algorithm outperformed the other two algorithms. In all instances, this algorithm obtained the optimal theoretical value, the FBS-PN-CSP did so in 14 instances, and the LLA algorithm in 7 instances, but only with better times than the FBS-PN-CSP. The beam width was mostly higher, namely 25 throughout the instances, as was the filter width. Although in the instances of

binpack2,

binpack3, and

binpack4 the FBS-PN-CSP was not better than the G&S in terms of the number of stocks used, the percentage above the optimal theoretical values was less than 1% with a difference of one stock in all cases.