Submitted: 01 August 2024

Posted: 02 August 2024

Abstract

Keywords:

1. Introduction

- We provide the first holistic study that comprehensively covers and connects the various families of video SSL methods.

- We present extensive evaluations on diverse downstream tasks under different protocols, offering insights into the performance and utility of learned video SSL models across task families.

- We detail the challenges associated with video SSL methods and recognize the strengths of existing works that have laid the initial foundations in this direction.

2. Problem Definition: Video Self-Supervised Learning

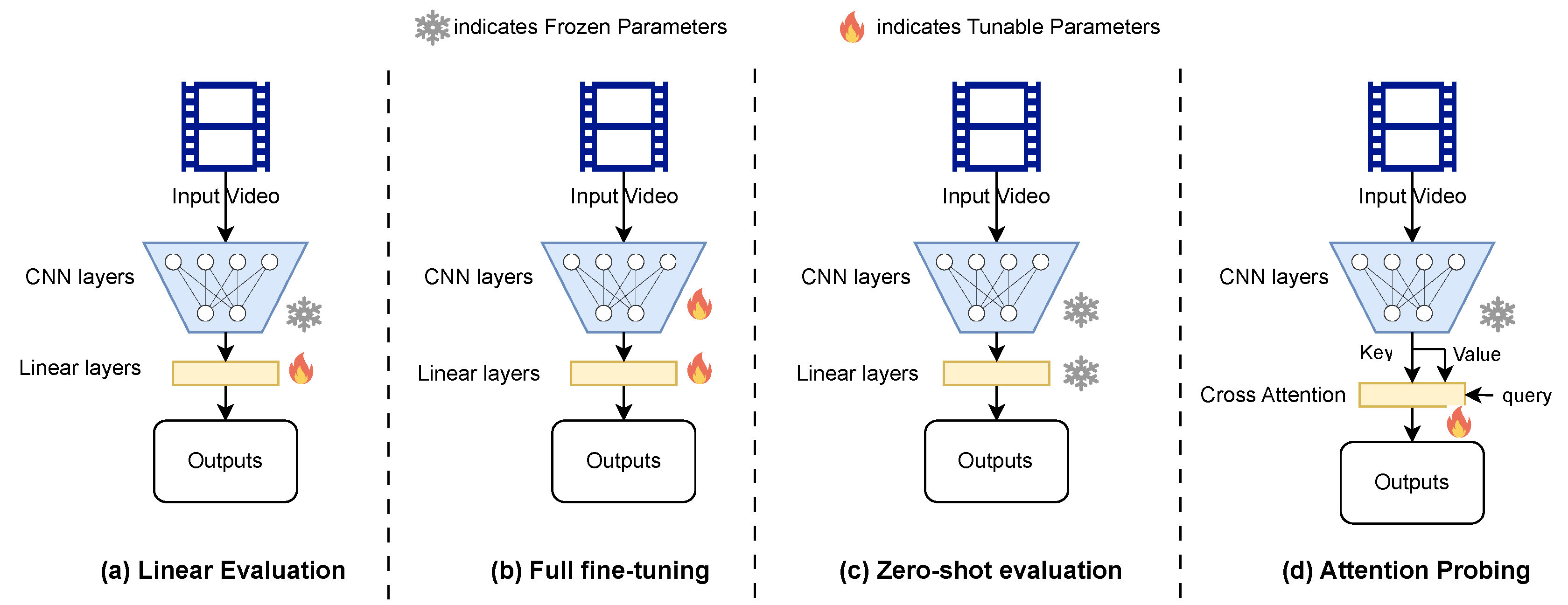

3. Representation Learning

3.1. Learning Objectives with Low-Level Cues

3.1.1. Learning the Temporal Cues

Temporal Order
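
Temporal-order pretext tasks ask a network to decide whether a set of frames appears in its true playback order. The sketch below shows one way to generate training labels for such a task. It assumes a binary order-verification variant with 3-frame tuples and a 50/50 split between positives and negatives. Both choices, and the helper name `make_order_verification_sample`, are illustrative assumptions and do not reproduce any specific paper's recipe.

```python
import random
from typing import List, Optional, Tuple


def make_order_verification_sample(
    num_frames: int, tuple_len: int = 3, rng: Optional[random.Random] = None
) -> Tuple[List[int], int]:
    """Return (frame_indices, label): label 1 = playback order, 0 = shuffled."""
    if not 2 <= tuple_len <= num_frames:
        raise ValueError("need 2 <= tuple_len <= num_frames")
    rng = rng or random.Random()
    # Draw distinct frame indices and sort them into playback order.
    indices = sorted(rng.sample(range(num_frames), tuple_len))
    if rng.random() < 0.5:
        return indices, 1  # positive: temporally ordered tuple
    # Negative: reshuffle until the tuple is no longer in playback order.
    shuffled = indices[:]
    while shuffled == indices:
        rng.shuffle(shuffled)
    return shuffled, 0


# Usage: build a small batch of pretext samples for a 16-frame clip.
rng = random.Random(0)
batch = [make_order_verification_sample(16, rng=rng) for _ in range(4)]
```

The network then sees the frames at these indices and is trained as a binary classifier on the label.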

Learning the Playback Rate of Video
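
Playback-rate objectives typically subsample a clip with a frame stride and train the network to predict the stride, which acts as the speed class. The sketch below generates such index/label pairs. The candidate rates (1, 2, 4, 8), the clip length, and the helper name `sample_playback_rate_clip` are assumptions chosen for illustration.

```python
import random
from typing import List, Optional, Sequence, Tuple


def sample_playback_rate_clip(
    num_frames: int,
    clip_len: int = 8,
    rates: Sequence[int] = (1, 2, 4, 8),
    rng: Optional[random.Random] = None,
) -> Tuple[List[int], int]:
    """Return (frame_indices, rate_label) where rate_label indexes into `rates`."""
    rng = rng or random.Random()
    # Keep only the rates whose strided clip still fits inside the video.
    valid = [i for i, r in enumerate(rates) if (clip_len - 1) * r + 1 <= num_frames]
    if not valid:
        raise ValueError("video too short for every candidate rate")
    label = rng.choice(valid)
    stride = rates[label]
    span = (clip_len - 1) * stride + 1
    start = rng.randrange(num_frames - span + 1)
    # Subsampling with stride s plays the clip back s times faster.
    return [start + i * stride for i in range(clip_len)], label
```

Filtering the rates per video avoids padding or looping short videos. The trade-off is that very short videos never contribute the fastest speed classes.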

Learning across Short-Long Temporal Contexts

Temporal Coherence

Temporal Correspondence

3.1.2. Learning the Spatial Cues

Spatial Order
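
Spatial-order objectives are commonly posed as jigsaw puzzles. A frame is cut into tiles, the tiles are permuted, and the network predicts which permutation was applied. The sketch below is a hypothetical minimal version that uses a 2x2 grid and all 24 permutations. Practical methods usually pick a smaller, well-separated permutation subset instead.

```python
import itertools
import random
from typing import List, Optional, Tuple

Frame = List[List[int]]

# All 24 orderings of a 2x2 tile grid (an illustrative choice).
PERMUTATIONS = list(itertools.permutations(range(4)))


def split_2x2(frame: Frame) -> List[Frame]:
    """Split a frame (list of rows) into 4 tiles in row-major order."""
    h, w = len(frame) // 2, len(frame[0]) // 2
    return [
        [row[c * w:(c + 1) * w] for row in frame[r * h:(r + 1) * h]]
        for r in range(2)
        for c in range(2)
    ]


def make_jigsaw_sample(
    frame: Frame, rng: Optional[random.Random] = None
) -> Tuple[List[Frame], int]:
    """Shuffle the tiles with a random permutation; the label is its index."""
    rng = rng or random.Random()
    tiles = split_2x2(frame)
    label = rng.randrange(len(PERMUTATIONS))
    return [tiles[i] for i in PERMUTATIONS[label]], label
```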

Spatial Orientation
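
For spatial orientation, a common formulation rotates the input by a multiple of 90 degrees and trains a 4-way classifier to recover the rotation. The sketch below assumes that frames are 2D lists and that a single rotation is shared by all frames of a clip. The helpers `rot90` and `make_rotation_sample` are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Frame = List[List[int]]


def rot90(frame: Frame, k: int) -> Frame:
    """Rotate a 2D frame counter-clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        # Transpose, then reverse the row order: one counter-clockwise quarter turn.
        frame = [list(row) for row in zip(*frame)][::-1]
    return frame


def make_rotation_sample(
    clip: List[Frame], rng: Optional[random.Random] = None
) -> Tuple[List[Frame], int]:
    """Apply the same rotation to every frame; label k means k * 90 degrees."""
    rng = rng or random.Random()
    k = rng.randrange(4)
    return [rot90(f, k) for f in clip], k
```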

Spatial Continuity

3.1.3. Learning the Spatiotemporal Cues

Spatiotemporal Order

Spatiotemporal Continuity

3.1.4. Learning Low-Level Motion Cues

3.1.5. Learning Geometric Cues

3.2. Learning Objectives with High-Level Cues

3.2.1. Discrimination-Based Learning Objectives
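
Discrimination-based objectives are often implemented with a contrastive InfoNCE loss. Under this loss, an anchor embedding should be more similar to its positive (for example, another view of the same video) than to a set of negatives. The sketch below computes the loss for a single anchor using only the standard library. Cosine similarity and the default temperature of 0.1 are illustrative assumptions, not values taken from this survey.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(query, positive, negatives, temperature: float = 0.1) -> float:
    """-log( exp(s_pos / t) / sum of exp(s / t) over the positive and all negatives )."""
    logits = [cosine(query, positive) / temperature]
    logits += [cosine(query, n) / temperature for n in negatives]
    # Numerically stable log-sum-exp: subtract the max logit before exponentiating.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[0]
```

In batched training, the other videos in the batch (or a memory queue) usually serve as the negatives.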

3.2.2. Similarity-Based Learning Objectives
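
Similarity-based objectives drop explicit negatives and only pull two views of the same sample together. They rely on mechanisms such as a predictor head and stop-gradient to avoid collapse. The sketch below computes a SimSiam-style symmetrized negative cosine similarity. Here `p1` and `p2` stand for the predictor outputs and `z1` and `z2` for the target projections of two views. Plain Python has no autograd, so the stop-gradient appears only as a comment.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def negative_cosine(p: Sequence[float], z: Sequence[float]) -> float:
    """D(p, z) = -cos(p, z). In an autograd framework z would be detached
    (stop-gradient); here only the loss value is computed."""
    return -cosine(p, z)


def symmetric_similarity_loss(p1, z1, p2, z2) -> float:
    """Each view's predictor output is pulled toward the other view's target."""
    return 0.5 * negative_cosine(p1, z2) + 0.5 * negative_cosine(p2, z1)
```

The loss reaches its minimum of -1 when every prediction points in the same direction as the opposite view's target.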

3.3. Learning with Precomputed Visual Priors

3.3.1. Optical Flow

3.3.2. Motion Trajectories

3.3.3. Silhouettes

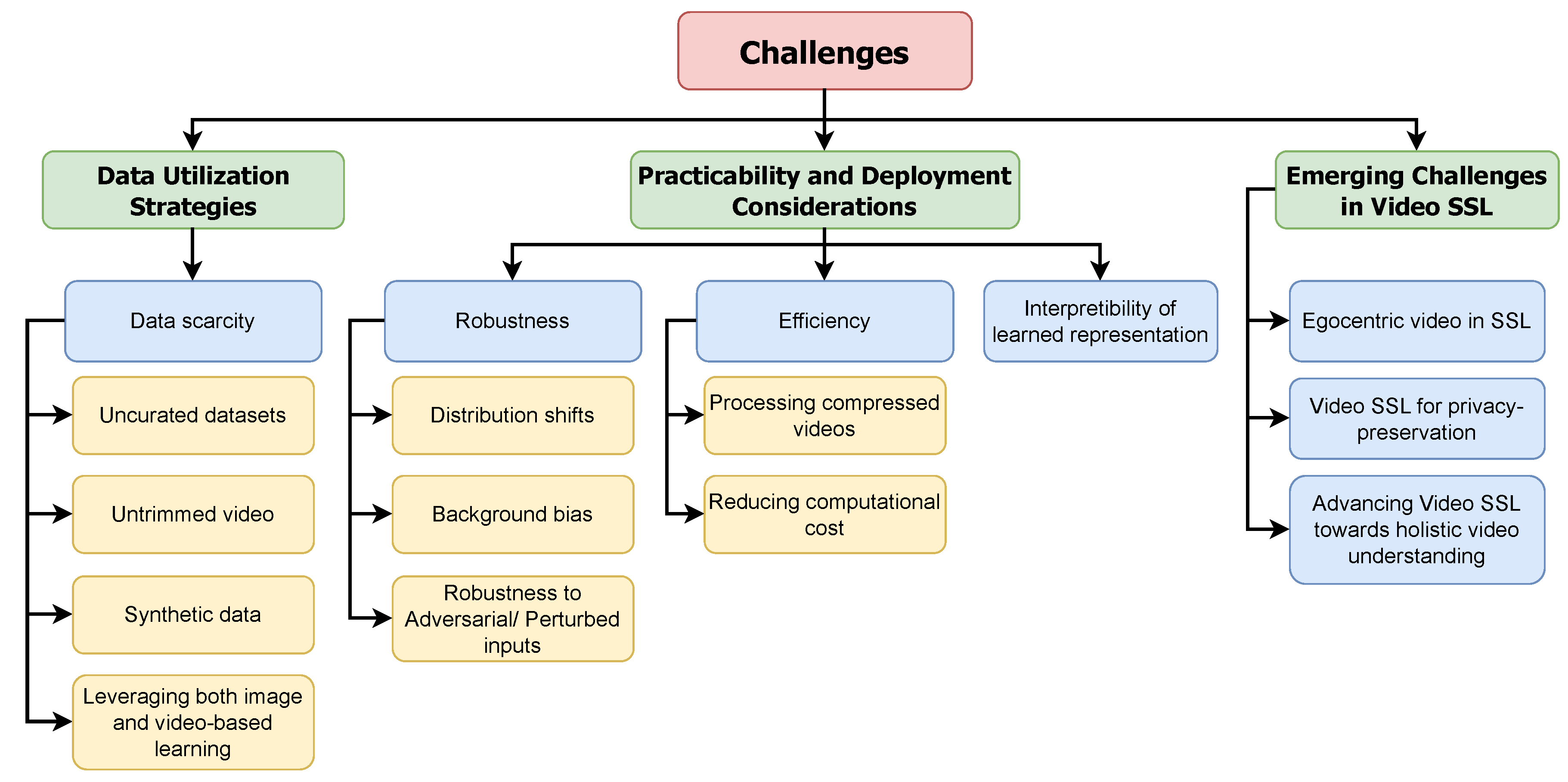

4. Challenges, Issues and Proposed Solutions in Video SSL

4.1. Data Utilization Strategies

4.1.1. Data Scarcity

Learning from Uncurated Datasets

Using Untrimmed Videos

Using Synthetic Data

Leveraging Both Image and Video-Based Learning

4.2. Practicability and Deployment Considerations

4.2.1. Robustness

Distribution Shifts

Background Bias

Robustness to Adversarial/Perturbed Inputs

4.2.2. Efficiency

Processing Compressed Video

Reducing Computational Cost

4.2.3. Interpretability of Learned Representations

4.3. Navigating Emerging Challenges in Video SSL

4.3.1. Egocentric Video in SSL

4.3.2. Video SSL for Privacy Preservation

4.3.3. Advancing Video SSL Towards Holistic Video Understanding

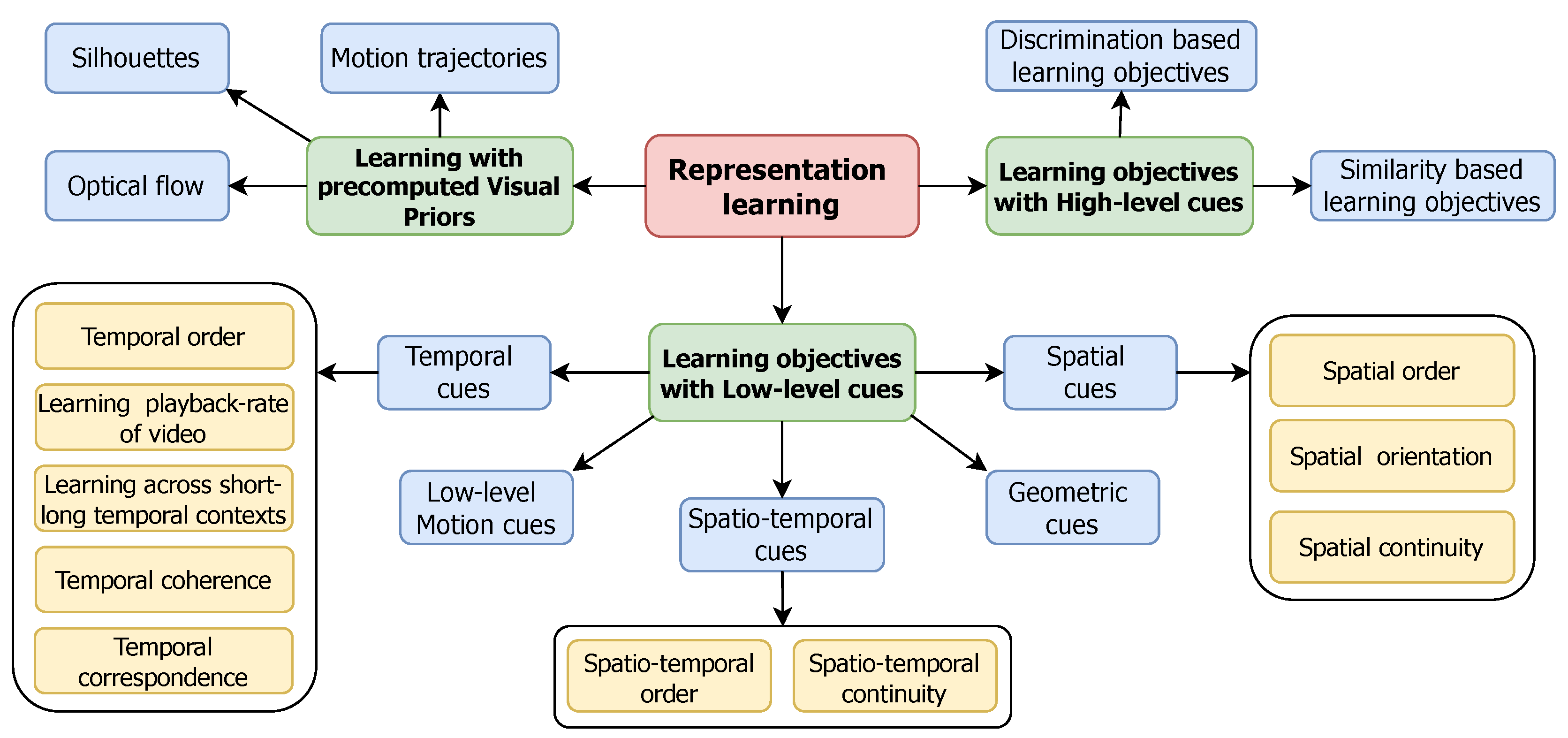

5. Experimental Comparison

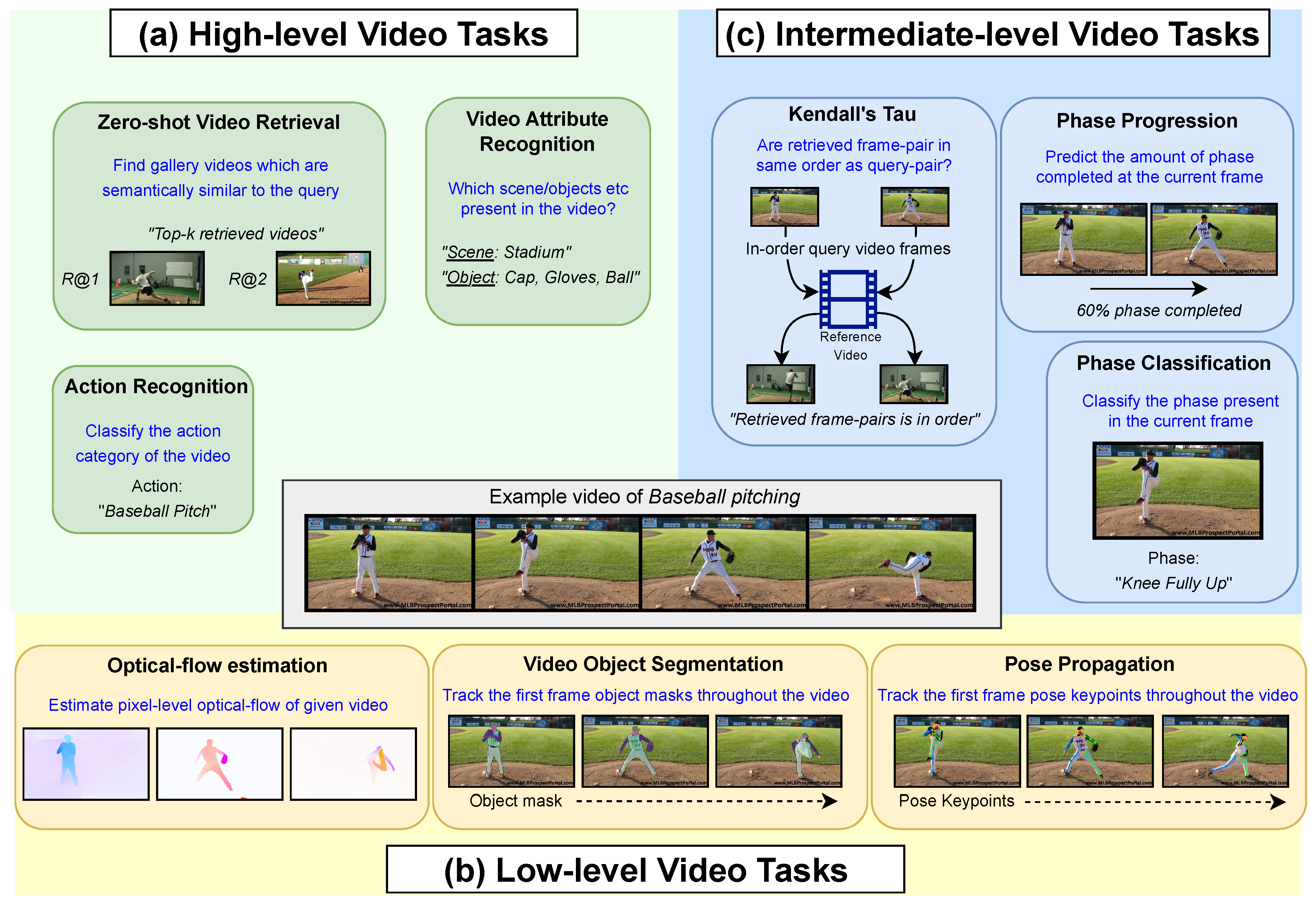

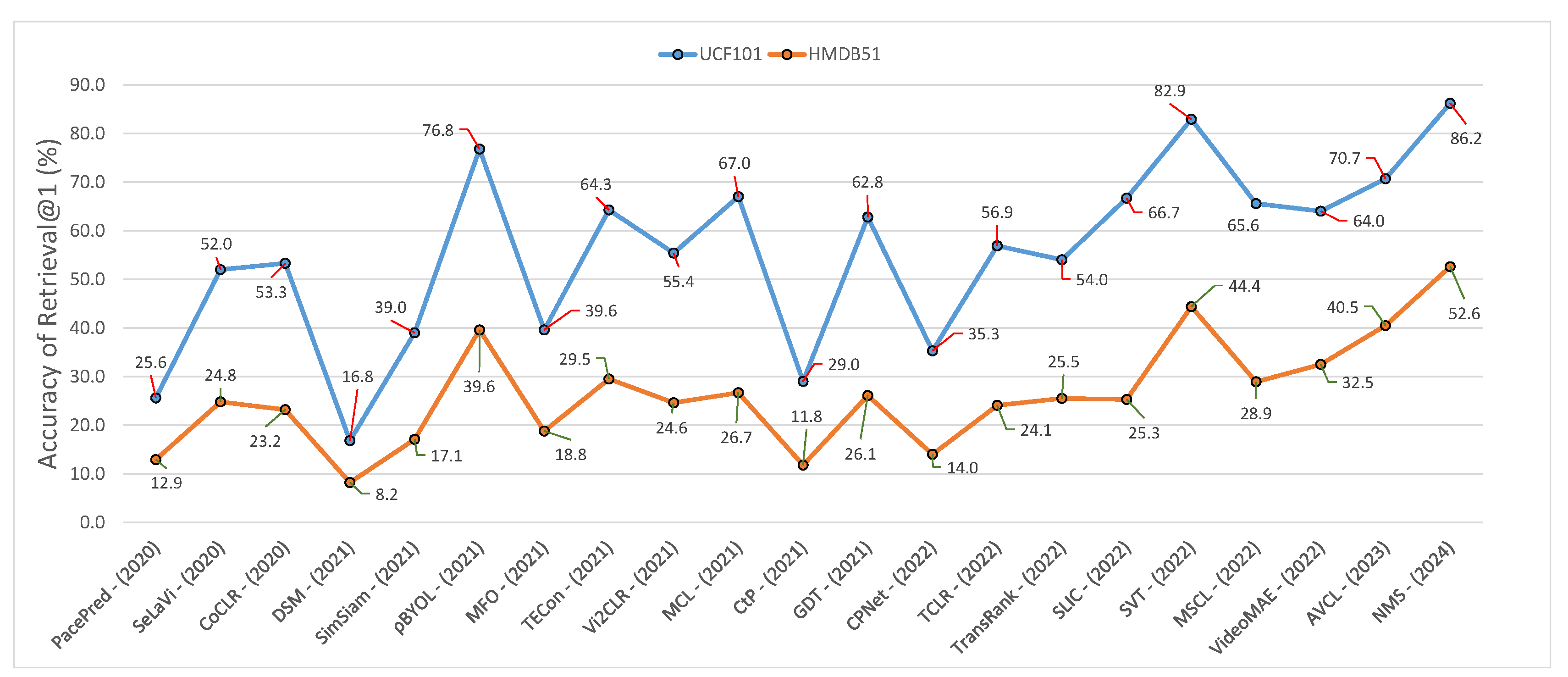

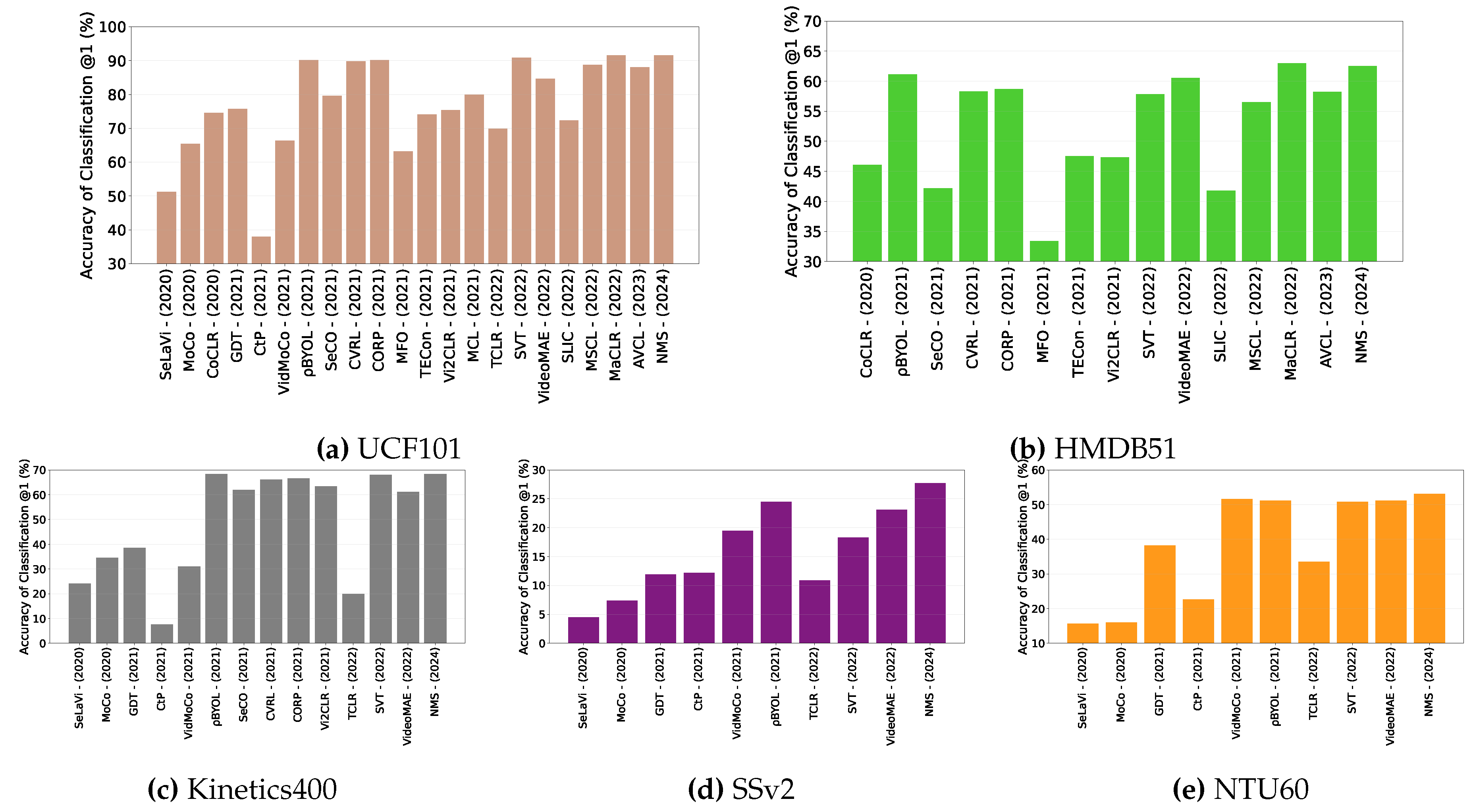

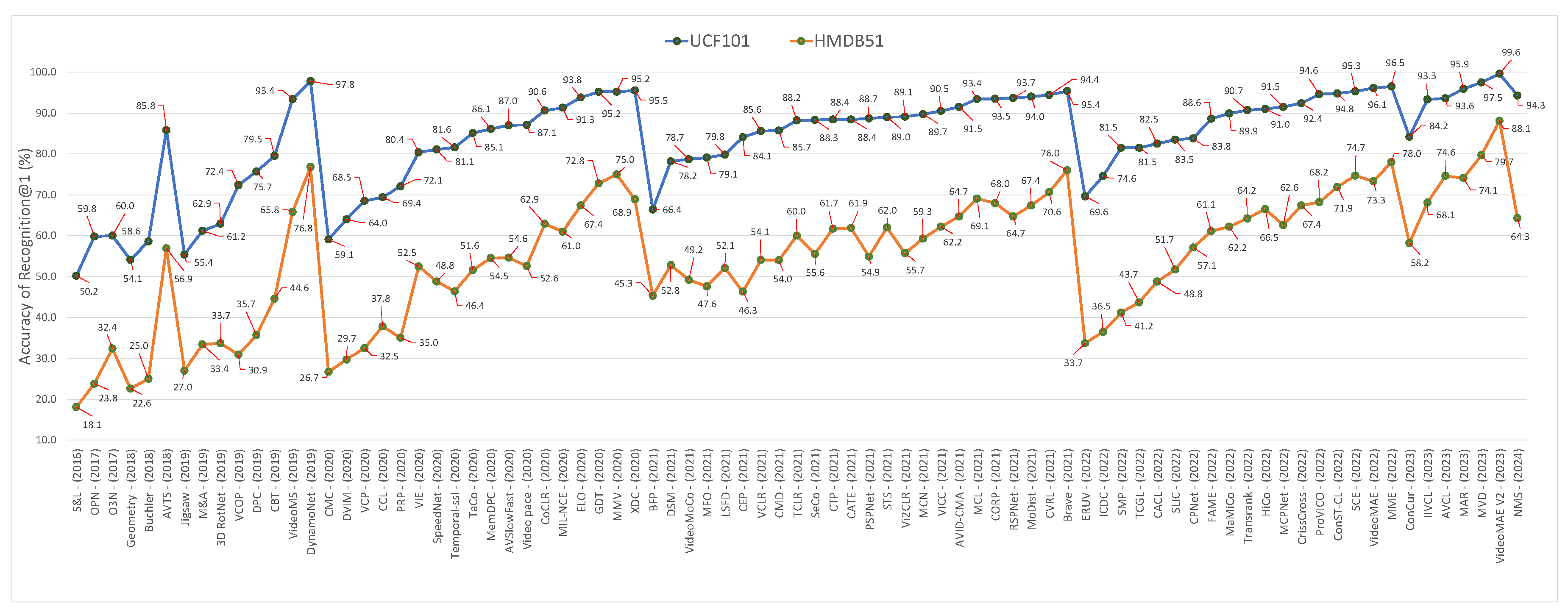

5.1. Semantic Understanding Based Tasks

5.1.1. Action Recognition

5.1.2. Video Classification

5.2. Action Phases Related Downstream Tasks

5.2.1. Phase Classification

5.2.2. Phase Progression

5.2.3. Kendall Tau
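
Kendall's Tau is commonly used to measure how well the frame embeddings of two videos of the same action stay temporally aligned. For every pair of frames in one video, their nearest neighbours in the other video should appear in the same order. With n frames, the score is tau = (concordant - discordant) / (n(n - 1)/2). The sketch below assumes Euclidean nearest neighbours and counts ties as neither concordant nor discordant. Library implementations, such as tie-corrected variants, may differ.

```python
from typing import List, Sequence


def nearest_neighbor_indices(
    emb_a: Sequence[Sequence[float]], emb_b: Sequence[Sequence[float]]
) -> List[int]:
    """For each frame embedding in video A, the index of its nearest frame in video B."""
    def sq_dist(x, y):
        return sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return [min(range(len(emb_b)), key=lambda j: sq_dist(a, emb_b[j])) for a in emb_a]


def kendall_tau_alignment(emb_a, emb_b) -> float:
    """(concordant - discordant) / (n(n-1)/2) over all frame pairs i < j of video A."""
    nn = nearest_neighbor_indices(emb_a, emb_b)
    n = len(nn)
    if n < 2:
        raise ValueError("need at least two frames")
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            if nn[i] < nn[j]:
                concordant += 1  # neighbours keep the temporal order
            elif nn[i] > nn[j]:
                discordant += 1  # neighbours are swapped
            # equal neighbours (ties) count as neither, by assumption
    return (concordant - discordant) / (n * (n - 1) / 2)
```

A value of 1 means perfectly order-preserving alignment and -1 means fully reversed. To score a dataset, the value is usually averaged over pairs of videos.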

5.2.4. Observations

5.3. Frame-to-Frame Temporal Correspondence Based Tasks

5.3.1. Video Object Segmentation (VOS)
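
Self-supervised features are commonly evaluated on VOS without fine-tuning. The first-frame mask is propagated to later frames through feature affinities. The sketch below shows a minimal top-k affinity propagation under several assumptions: per-location features are L2-normalized, source labels are one-hot, and `k` and the temperature take illustrative values. The helper `propagate_labels` is hypothetical.

```python
import math
from typing import List, Sequence


def propagate_labels(
    src_feats: Sequence[Sequence[float]],
    src_labels: Sequence[Sequence[float]],
    tgt_feats: Sequence[Sequence[float]],
    k: int = 5,
    temperature: float = 0.07,
) -> List[List[float]]:
    """Soft labels for each target location from its top-k most similar source locations.
    Features are assumed L2-normalized, so the dot product is a cosine similarity."""
    num_classes = len(src_labels[0])
    out = []
    for t in tgt_feats:
        sims = [sum(a * b for a, b in zip(t, s)) for s in src_feats]
        top = sorted(range(len(sims)), key=lambda i: sims[i], reverse=True)[:k]
        # Softmax over the top-k similarities, shifted by the max for stability.
        m = sims[top[0]]
        weights = [math.exp((sims[i] - m) / temperature) for i in top]
        z = sum(weights)
        out.append([sum(w * src_labels[i][c] for w, i in zip(weights, top)) / z
                    for c in range(num_classes)])
    return out
```

In practice, the soft labels predicted for one frame often serve as source labels for the next frame. They are typically combined with the first frame's ground-truth mask as additional context.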

5.3.2. Pose Propagation

5.3.3. Gait Recognition

5.3.4. Observations

5.4. Optical Flow Estimation Related Downstream Task

5.4.1. Observations

5.5. Robustness

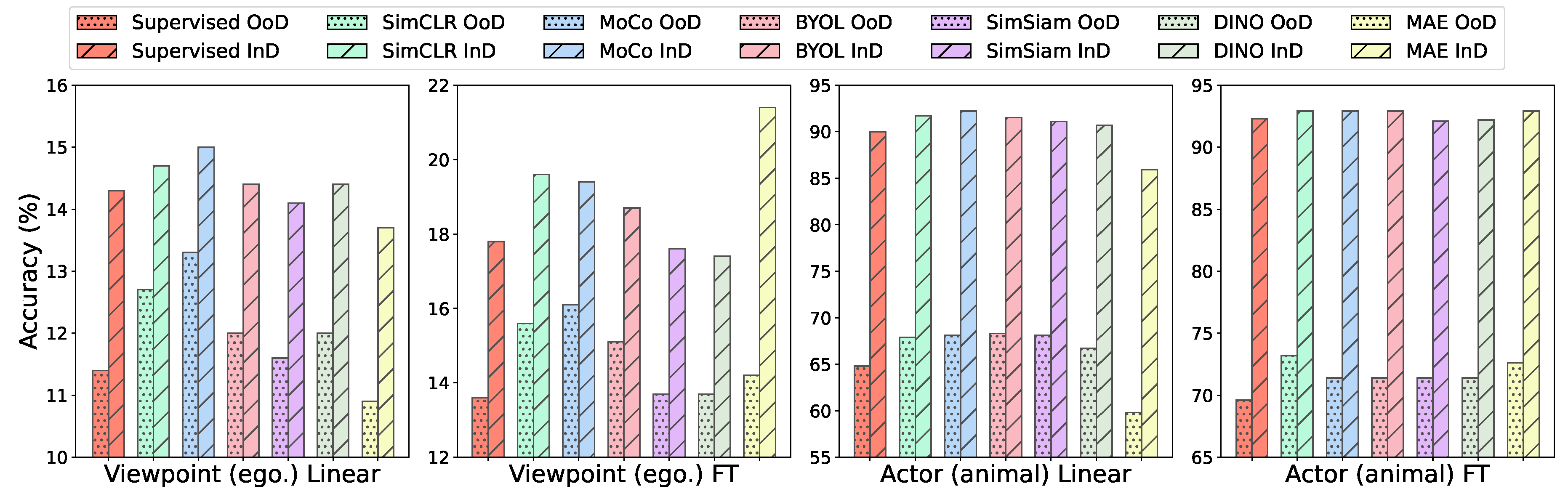

5.5.1. Distribution Shifts

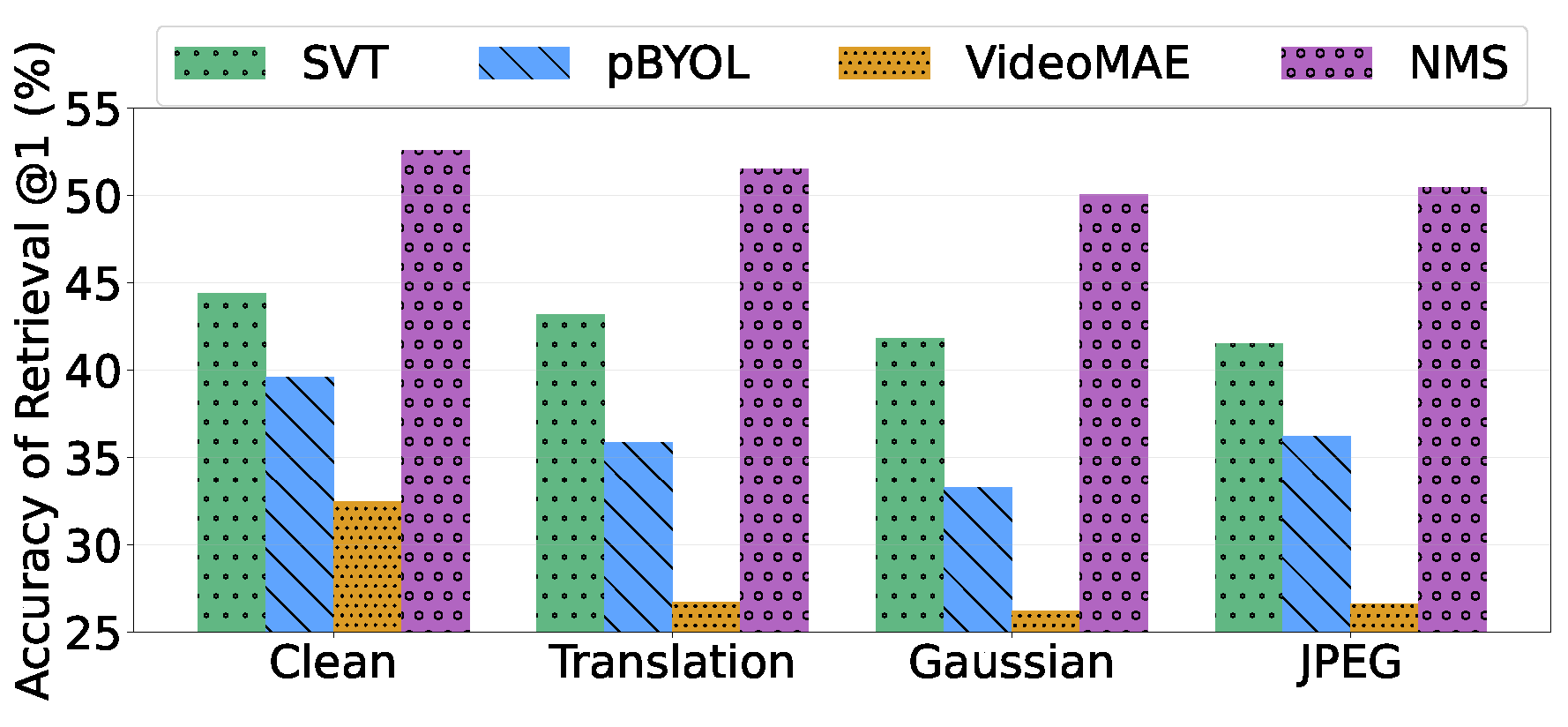

5.5.2. Input Perturbations

5.5.3. Observations

5.6. Overall Experimental Summary and Future Directions

Action Recognition

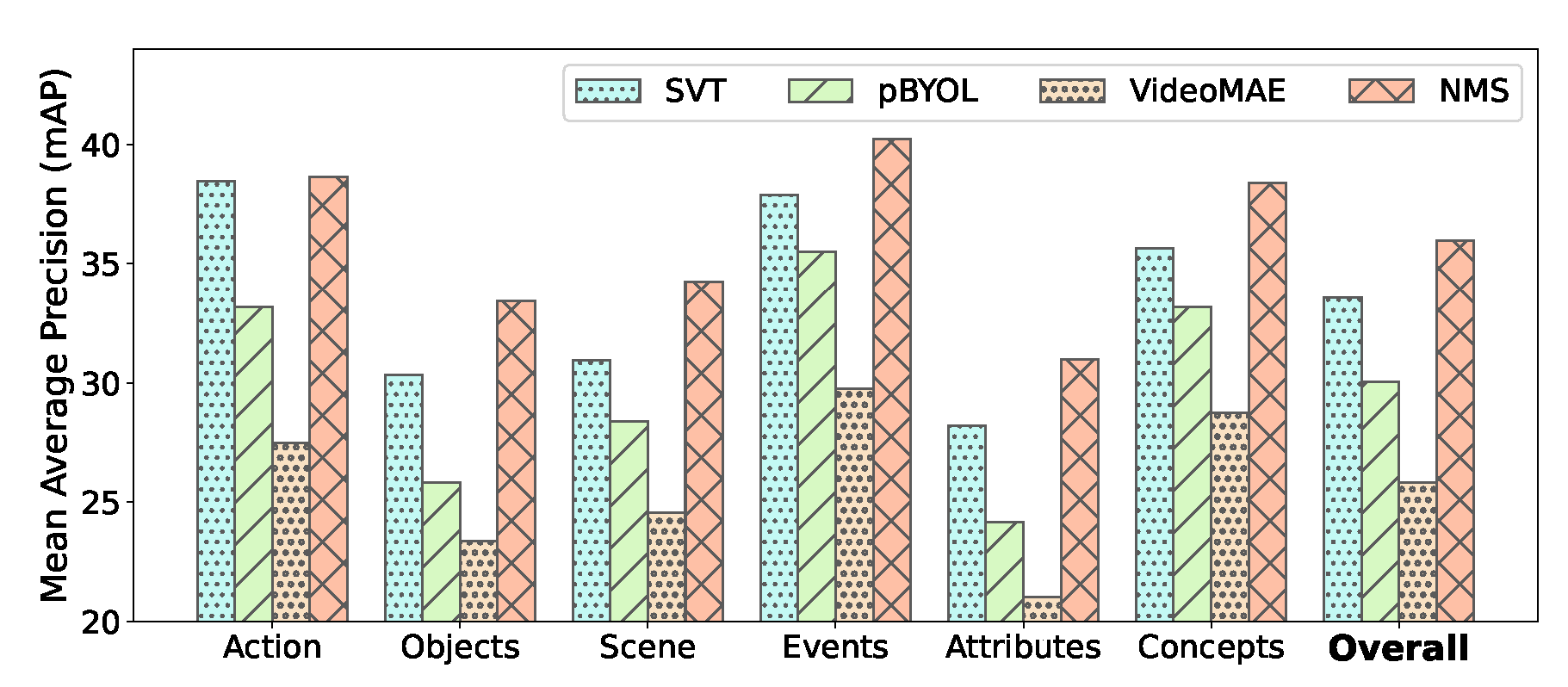

Video Attribute Recognition

Phase-Related Tasks

Temporal Correspondence Tasks

Optical Flow Estimation

6. Conclusion

Acknowledgments

References

- Hara, K.; Kataoka, H.; Satoh, Y. Learning spatio-temporal features with 3d residual networks for action recognition. Proceedings of the IEEE international conference on computer vision workshops, 2017, pp. 3154–3160.

- Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, 2018, pp. 6450–6459.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? a new model and the kinetics dataset. proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 6299–6308.

- Xie, S.; Sun, C.; Huang, J.; Tu, Z.; Murphy, K. Rethinking spatiotemporal feature learning: Speed-accuracy trade-offs in video classification. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 305–321.

- Neimark, D.; Bar, O.; Zohar, M.; Asselmann, D. Video transformer network. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 3163–3172.

- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 6836–6846.

- Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video swin transformer. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 3202–3211.

- Girdhar, R.; Carreira, J.; Doersch, C.; Zisserman, A. Video action transformer network. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 244–253.

- Zhao, H.; Torralba, A.; Torresani, L.; Yan, Z. Hacs: Human action clips and segments dataset for recognition and temporal localization. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 8668–8678.

- Diba, A.; Fayyaz, M.; Sharma, V.; Paluri, M.; Gall, J.; Stiefelhagen, R.; Van Gool, L. Large scale holistic video understanding. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V 16. Springer, 2020, pp. 593–610.

- Schiappa, M.C.; Rawat, Y.S.; Shah, M. Self-supervised learning for videos: A survey. ACM Computing Surveys 2022. [Google Scholar] [CrossRef]

- Bardes, A.; Garrido, Q.; Ponce, J.; Chen, X.; Rabbat, M.; LeCun, Y.; Assran, M.; Ballas, N. V-JEPA: Latent Video Prediction for Visual Representation Learning 2023.

- Misra, I.; Zitnick, C.L.; Hebert, M. Shuffle and learn: unsupervised learning using temporal order verification. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer, 2016, pp. 527–544.

- Fernando, B.; Bilen, H.; Gavves, E.; Gould, S. Self-supervised video representation learning with odd-one-out networks. Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 3636–3645.

- Lee, H.Y.; Huang, J.B.; Singh, M.; Yang, M.H. Unsupervised representation learning by sorting sequences. Proceedings of the IEEE international conference on computer vision, 2017, pp. 667–676.

- Wei, D.; Lim, J.J.; Zisserman, A.; Freeman, W.T. Learning and using the arrow of time. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8052–8060.

- Xu, D.; Xiao, J.; Zhao, Z.; Shao, J.; Xie, D.; Zhuang, Y. Self-supervised spatiotemporal learning via video clip order prediction. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 10334–10343.

- Xue, F.; Ji, H.; Zhang, W.; Cao, Y. Self-supervised video representation learning by maximizing mutual information. Signal processing: Image communication 2020, 88, 115967. [Google Scholar] [CrossRef]

- Luo, D.; Liu, C.; Zhou, Y.; Yang, D.; Ma, C.; Ye, Q.; Wang, W. Video cloze procedure for self-supervised spatio-temporal learning. Proceedings of the AAAI conference on artificial intelligence, 2020, Vol. 34, pp. 11701–11708.

- Hu, K.; Shao, J.; Liu, Y.; Raj, B.; Savvides, M.; Shen, Z. Contrast and order representations for video self-supervised learning. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 7939–7949.

- Yao, T.; Zhang, Y.; Qiu, Z.; Pan, Y.; Mei, T. Seco: Exploring sequence supervision for unsupervised representation learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, Vol. 35, pp. 10656–10664.

- Guo, S.; Xiong, Z.; Zhong, Y.; Wang, L.; Guo, X.; Han, B.; Huang, W. Cross-architecture self-supervised video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 19270–19279.

- Luo, D.; Zhou, Y.; Fang, B.; Zhou, Y.; Wu, D.; Wang, W. Exploring relations in untrimmed videos for self-supervised learning. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 2022, 18, 1–21. [Google Scholar] [CrossRef]

- Kumar, V. Unsupervised Learning of Spatio-Temporal Representation with Multi-Task Learning for Video Retrieval. 2022 National Conference on Communications (NCC). IEEE, 2022, pp. 118–123.

- Dorkenwald, M.; Xiao, F.; Brattoli, B.; Tighe, J.; Modolo, D. Scvrl: Shuffled contrastive video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 4132–4141.

- Bai, Y.; Fan, H.; Misra, I.; Venkatesh, G.; Lu, Y.; Zhou, Y.; Yu, Q.; Chandra, V.; Yuille, A. Can temporal information help with contrastive self-supervised learning? arXiv preprint arXiv:2011.13046, arXiv:2011.13046 2020.

- Liu, Y.; Wang, K.; Liu, L.; Lan, H.; Lin, L. Tcgl: Temporal contrastive graph for self-supervised video representation learning. IEEE Transactions on Image Processing 2022, 31, 1978–1993. [Google Scholar] [CrossRef] [PubMed]

- Jenni, S.; Jin, H. Time-equivariant contrastive video representation learning. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 9970–9980.

- Cho, H.; Kim, T.; Chang, H.J.; Hwang, W. Self-supervised visual learning by variable playback speeds prediction of a video. IEEE Access 2021, 9, 79562–79571. [Google Scholar] [CrossRef]

- Wang, J.; Jiao, J.; Liu, Y.H. Self-supervised video representation learning by pace prediction. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XVII 16. Springer, 2020, pp. 504–521.

- Benaim, S.; Ephrat, A.; Lang, O.; Mosseri, I.; Freeman, W.T.; Rubinstein, M.; Irani, M.; Dekel, T. Speednet: Learning the speediness in videos. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9922–9931.

- Chen, P.; Huang, D.; He, D.; Long, X.; Zeng, R.; Wen, S.; Tan, M.; Gan, C. Rspnet: Relative speed perception for unsupervised video representation learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, Vol. 35, pp. 1045–1053.

- Dave, I.R.; Jenni, S.; Shah, M. No More Shortcuts: Realizing the Potential of Temporal Self-Supervision. Proceedings of the AAAI Conference on Artificial Intelligence, 2024, Vol. 38, pp. 1481–1491.

- Jenni, S.; Meishvili, G.; Favaro, P. Video representation learning by recognizing temporal transformations. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXVIII 16. Springer, 2020, pp. 425–442.

- Yang, C.; Xu, Y.; Dai, B.; Zhou, B. Video representation learning with visual tempo consistency. arXiv preprint arXiv:2006.15489, arXiv:2006.15489 2020.

- Behrmann, N.; Fayyaz, M.; Gall, J.; Noroozi, M. Long short view feature decomposition via contrastive video representation learning. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 9244–9253.

- Recasens, A.; Luc, P.; Alayrac, J.B.; Wang, L.; Strub, F.; Tallec, C.; Malinowski, M.; Pătrăucean, V.; Altché, F.; Valko, M.; others. Broaden your views for self-supervised video learning. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 1255–1265.

- Fang, B.; Wu, W.; Liu, C.; Zhou, Y.; He, D.; Wang, W. Mamico: Macro-to-micro semantic correspondence for self-supervised video representation learning. Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 1348–1357.

- Liu, C.; Yao, Y.; Luo, D.; Zhou, Y.; Ye, Q. Self-supervised motion perception for spatiotemporal representation learning. IEEE Transactions on Neural Networks and Learning Systems 2022. [Google Scholar] [CrossRef] [PubMed]

- Ranasinghe, K.; Naseer, M.; Khan, S.; Khan, F.S.; Ryoo, M.S. Self-supervised video transformer. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 2874–2884.

- Qian, R.; Li, Y.; Yuan, L.; Gong, B.; Liu, T.; Brown, M.; Belongie, S.J.; Yang, M.H.; Adam, H.; Cui, Y. On Temporal Granularity in Self-Supervised Video Representation Learning. BMVC, 2022, p. 541.

- Jeong, S.Y.; Kim, H.J.; Oh, M.S.; Lee, G.H.; Lee, S.W. Temporal-Invariant Video Representation Learning with Dynamic Temporal Resolutions. 2022 18th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). IEEE, 2022, pp. 1–8.

- Yao, Y.; Liu, C.; Luo, D.; Zhou, Y.; Ye, Q. Video playback rate perception for self-supervised spatio-temporal representation learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 6548–6557.

- Knights, J.; Harwood, B.; Ward, D.; Vanderkop, A.; Mackenzie-Ross, O.; Moghadam, P. Temporally coherent embeddings for self-supervised video representation learning. 2020 25th International Conference on Pattern Recognition (ICPR). IEEE, 2021, pp. 8914–8921.

- Chen, M.; Wei, F.; Li, C.; Cai, D. Frame-wise Action Representations for Long Videos via Sequence Contrastive Learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 13801–13810.

- Zhang, H.; Liu, D.; Zheng, Q.; Su, B. Modeling video as stochastic processes for fine-grained video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 2225–2234.

- Dwibedi, D.; Aytar, Y.; Tompson, J.; Sermanet, P.; Zisserman, A. Temporal cycle-consistency learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 1801–1810.

- Hadji, I.; Derpanis, K.G.; Jepson, A.D. Representation learning via global temporal alignment and cycle-consistency. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11068–11077.

- Haresh, S.; Kumar, S.; Coskun, H.; Syed, S.N.; Konin, A.; Zia, Z.; Tran, Q.H. Learning by aligning videos in time. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 5548–5558.

- Wills, J.; Agarwal, S.; Belongie, S. What went where. Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; IEEE Computer Society: USA, 2003. [Google Scholar]

- Jabri, A.; Owens, A.; Efros, A. Space-time correspondence as a contrastive random walk. Advances in neural information processing systems 2020, 33, 19545–19560. [Google Scholar]

- Bian, Z.; Jabri, A.; Efros, A.A.; Owens, A. Learning pixel trajectories with multiscale contrastive random walks. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6508–6519.

- Xu, J.; Wang, X. Rethinking self-supervised correspondence learning: A video frame-level similarity perspective. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10075–10085.

- Li, R.; Liu, D. Spatial-then-temporal self-supervised learning for video correspondence. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 2279–2288.

- Noroozi, M.; Favaro, P. Unsupervised learning of visual representations by solving jigsaw puzzles. European conference on computer vision. Springer, 2016, pp. 69–84.

- Ahsan, U.; Madhok, R.; Essa, I. Video jigsaw: Unsupervised learning of spatiotemporal context for video action recognition. 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE, 2019, pp. 179–189.

- Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised representation learning by predicting image rotations. arXiv preprint arXiv:1803.07728, arXiv:1803.07728 2018.

- Jing, L.; Yang, X.; Liu, J.; Tian, Y. Self-supervised spatiotemporal feature learning via video rotation prediction. arXiv preprint arXiv:1811.11387, arXiv:1811.11387 2018.

- Geng, S.; Zhao, S.; Liu, H. Video representation learning by identifying spatio-temporal transformations. Applied Intelligence.

- Zhang, Y.; Po, L.M.; Xu, X.; Liu, M.; Wang, Y.; Ou, W.; Zhao, Y.; Yu, W.Y. Contrastive spatio-temporal pretext learning for self-supervised video representation. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 3380–3389.

- Wang, R.; Chen, D.; Wu, Z.; Chen, Y.; Dai, X.; Liu, M.; Yuan, L.; Jiang, Y.G. Masked video distillation: Rethinking masked feature modeling for self-supervised video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 6312–6322.

- Buchler, U.; Brattoli, B.; Ommer, B. Improving spatiotemporal self-supervision by deep reinforcement learning. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 770–786.

- Kim, D.; Cho, D.; Kweon, I.S. Self-supervised video representation learning with space-time cubic puzzles. Proceedings of the AAAI conference on artificial intelligence, 2019, Vol. 33, pp. 8545–8552.

- Zhang, Y.; Zhang, H.; Wu, G.; Li, J. Spatio-temporal self-supervision enhanced transformer networks for action recognition. 2022 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2022, pp. 1–6.

- Tong, Z.; Song, Y.; Wang, J.; Wang, L. VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training. Advances in Neural Information Processing Systems, 2022.

- Feichtenhofer, C.; Fan, H.; Li, Y.; He, K. Masked Autoencoders As Spatiotemporal Learners. Advances in Neural Information Processing Systems, 2022.

- Wang, L.; Huang, B.; Zhao, Z.; Tong, Z.; He, Y.; Wang, Y.; Wang, Y.; Qiao, Y. Videomae v2: Scaling video masked autoencoders with dual masking. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 14549–14560.

- Yang, H.; Huang, D.; Wen, B.; Wu, J.; Yao, H.; Jiang, Y.; Zhu, X.; Yuan, Z. Self-supervised Video Representation Learning with Motion-Aware Masked Autoencoders. arXiv preprint arXiv:2210.04154, arXiv:2210.04154 2022.

- Song, Y.; Yang, M.; Wu, W.; He, D.; Li, F.; Wang, J. It Takes Two: Masked Appearance-Motion Modeling for Self-supervised Video Transformer Pre-training. arXiv preprint arXiv:2210.05234, arXiv:2210.05234 2022.

- Sun, X.; Chen, P.; Chen, L.; Li, C.; Li, T.H.; Tan, M.; Gan, C. Masked Motion Encoding for Self-Supervised Video Representation Learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 2235–2245.

- Stone, A.; Maurer, D.; Ayvaci, A.; Angelova, A.; Jonschkowski, R. Smurf: Self-teaching multi-frame unsupervised raft with full-image warping. Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition, 2021, pp. 3887–3896.

- Teed, Z.; Deng, J. Raft: Recurrent all-pairs field transforms for optical flow. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16. Springer, 2020, pp. 402–419.

- Sun, Z.; Luo, Z.; Nishida, S. Decoupled spatiotemporal adaptive fusion network for self-supervised motion estimation. Neurocomputing 2023, 534, 133–146. [Google Scholar] [CrossRef]

- Gan, C.; Gong, B.; Liu, K.; Su, H.; Guibas, L.J. Geometry guided convolutional neural networks for self-supervised video representation learning. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 5589–5597.

- Sriram, A.; Gaidon, A.; Wu, J.; Niebles, J.C.; Fei-Fei, L.; Adeli, E. HomE: Homography-Equivariant Video Representation Learning. arXiv preprint arXiv:2306.01623, arXiv:2306.01623 2023.

- Das, S.; Ryoo, M.S. ViewCLR: Learning Self-supervised Video Representation for Unseen Viewpoints. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 5573–5583.

- Gutmann, M.; Hyvärinen, A. Noise-contrastive estimation: A new estimation principle for unnormalized statistical models. Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010, pp. 297–304.

- Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; others. Bootstrap your own latent-a new approach to self-supervised learning. Advances in neural information processing systems 2020, 33, 21271–21284. [Google Scholar]

- Chen, X.; He, K. Exploring simple siamese representation learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 15750–15758.

- Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748, arXiv:1807.03748 2018.

- Han, T.; Xie, W.; Zisserman, A. Video representation learning by dense predictive coding. Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2019, pp. 0–0.

- Han, T.; Xie, W.; Zisserman, A. Memory-augmented dense predictive coding for video representation learning. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16. Springer, 2020, pp. 312–329.

- Lorre, G.; Rabarisoa, J.; Orcesi, A.; Ainouz, S.; Canu, S. Temporal Contrastive Pretraining for Video Action Recognition. The IEEE Winter Conference on Applications of Computer Vision, 2020, pp. 662–670.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. International conference on machine learning. PMLR, 2020, pp. 1597–1607.

- Qian, R.; Meng, T.; Gong, B.; Yang, M.H.; Wang, H.; Belongie, S.; Cui, Y. Spatiotemporal contrastive video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 6964–6974.

- Feichtenhofer, C.; Fan, H.; Xiong, B.; Girshick, R.; He, K. A large-scale study on unsupervised spatiotemporal representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 3299–3309.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 9729–9738.

- Pan, T.; Song, Y.; Yang, T.; Jiang, W.; Liu, W. Videomoco: Contrastive video representation learning with temporally adversarial examples. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11205–11214.

- Caron, M.; Misra, I.; Mairal, J.; Goyal, P.; Bojanowski, P.; Joulin, A. Unsupervised learning of visual features by contrasting cluster assignments. Advances in neural information processing systems 2020, 33, 9912–9924. [Google Scholar]

- Hjelm, R.D.; Fedorov, A.; Lavoie-Marchildon, S.; Grewal, K.; Bachman, P.; Trischler, A.; Bengio, Y. Learning deep representations by mutual information estimation and maximization. arXiv preprint arXiv:1808.06670, arXiv:1808.06670 2018.

- Bachman, P.; Hjelm, R.D.; Buchwalter, W. Learning representations by maximizing mutual information across views. Advances in Neural Information Processing Systems, 2019, pp. 15535–15545.

- Devon Hjelm, R.; Bachman, P. Representation Learning with Video Deep InfoMax. arXiv preprint arXiv:2007.13278, arXiv:2007.13278 2020.

- Sarkar, P.; Beirami, A.; Etemad, A. Uncovering the Hidden Dynamics of Video Self-supervised Learning under Distribution Shifts. arXiv preprint arXiv:2306.02014, arXiv:2306.02014 2023.

- Dave, I.; Gupta, R.; Rizve, M.N.; Shah, M. TCLR: Temporal contrastive learning for video representation. Computer Vision and Image Understanding 2022, 219, 103406. [Google Scholar] [CrossRef]

- Wang, J.; Lin, Y.; Ma, A.J.; Yuen, P.C. Self-supervised temporal discriminative learning for video representation learning. arXiv preprint arXiv:2008.02129, arXiv:2008.02129 2020.

- Chen, Z.; Lin, K.Y.; Zheng, W.S. Consistent Intra-video Contrastive Learning with Asynchronous Long-term Memory Bank. IEEE Transactions on Circuits and Systems for Video Technology 2022. [Google Scholar] [CrossRef]

- Tao, L.; Wang, X.; Yamasaki, T. Self-supervised video representation learning using inter-intra contrastive framework. Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 2193–2201.

- Tao, L.; Wang, X.; Yamasaki, T. An improved inter-intra contrastive learning framework on self-supervised video representation. IEEE Transactions on Circuits and Systems for Video Technology 2022, 32, 5266–5280. [Google Scholar] [CrossRef]

- Zhu, Y.; Shuai, H.; Liu, G.; Liu, Q. Self-supervised video representation learning using improved instance-wise contrastive learning and deep clustering. IEEE Transactions on Circuits and Systems for Video Technology 2022, 32, 6741–6752. [Google Scholar] [CrossRef]

- Khorasgani, S.H.; Chen, Y.; Shkurti, F. Slic: Self-supervised learning with iterative clustering for human action videos. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 16091–16101.

- Miech, A.; Alayrac, J.B.; Smaira, L.; Laptev, I.; Sivic, J.; Zisserman, A. End-to-end learning of visual representations from uncurated instructional videos. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9879–9889.

- Tokmakov, P.; Hebert, M.; Schmid, C. Unsupervised learning of video representations via dense trajectory clustering. Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16. Springer, 2020, pp. 404–421.

- Zach, C.; Pock, T.; Bischof, H. A duality based approach for realtime tv-l 1 optical flow. Pattern Recognition: 29th DAGM Symposium, Heidelberg, Germany, September 12-14, 2007. Proceedings 29. Springer, 2007, pp. 214–223.

- Wang, J.; Jiao, J.; Bao, L.; He, S.; Liu, Y.; Liu, W. Self-supervised spatio-temporal representation learning for videos by predicting motion and appearance statistics. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 4006–4015.

- Wang, J.; Jiao, J.; Bao, L.; He, S.; Liu, W.; Liu, Y.h. Self-supervised video representation learning by uncovering spatio-temporal statistics. IEEE Transactions on Pattern Analysis and Machine Intelligence 2021, 44, 3791–3806. [Google Scholar]

- Xiao, F.; Tighe, J.; Modolo, D. Maclr: Motion-aware contrastive learning of representations for videos. European Conference on Computer Vision. Springer, 2022, pp. 353–370.

- Ni, J.; Zhou, N.; Qin, J.; Wu, Q.; Liu, J.; Li, B.; Huang, D. Motion Sensitive Contrastive Learning for Self-supervised Video Representation. Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXV. Springer, 2022, pp. 457–474.

- Coskun, H.; Zareian, A.; Moore, J.L.; Tombari, F.; Wang, C. GOCA: guided online cluster assignment for self-supervised video representation Learning. Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXI. Springer, 2022, pp. 1–22.

- Han, T.; Xie, W.; Zisserman, A. Self-supervised co-training for video representation learning. Advances in Neural Information Processing Systems 2020, 33, 5679–5690. [Google Scholar]

- Fan, C.; Hou, S.; Wang, J.; Huang, Y.; Yu, S. Learning gait representation from massive unlabelled walking videos: A benchmark. IEEE Transactions on Pattern Analysis and Machine Intelligence 2023. [Google Scholar] [CrossRef] [PubMed]

- Yuan, Y.; Chen, X.; Wang, J. Object-contextual representations for semantic segmentation. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VI 16. Springer, 2020, pp. 173–190.

- Alwassel, H.; Mahajan, D.; Korbar, B.; Torresani, L.; Ghanem, B.; Tran, D. Self-supervised learning by cross-modal audio-video clustering. Advances in Neural Information Processing Systems 2020, 33, 9758–9770. [Google Scholar]

- Kalayeh, M.M.; Kamath, N.; Liu, L.; Chandrashekar, A. Watching too much television is good: Self-supervised audio-visual representation learning from movies and tv shows. arXiv preprint arXiv:2106.08513 arXiv:2106.08513 2021.

- Kalayeh, M.M.; Ardeshir, S.; Liu, L.; Kamath, N.; Chandrashekar, A. On Negative Sampling for Audio-Visual Contrastive Learning from Movies. arXiv preprint arXiv:2205.00073 arXiv:2205.00073 2022.

- Qing, Z.; Zhang, S.; Huang, Z.; Xu, Y.; Wang, X.; Tang, M.; Gao, C.; Jin, R.; Sang, N. Learning from untrimmed videos: Self-supervised video representation learning with hierarchical consistency. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 13821–13831.

- Qing, Z.; Zhang, S.; Huang, Z.; Xu, Y.; Wang, X.; Gao, C.; Jin, R.; Sang, N. Self-Supervised Learning from Untrimmed Videos via Hierarchical Consistency. IEEE Transactions on Pattern Analysis and Machine Intelligence 2023. [Google Scholar] [CrossRef] [PubMed]

- Li, R.; Zhou, S.; Liu, D. Learning Fine-Grained Features for Pixel-wise Video Correspondences. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 9632–9641.

- Thoker, F.M.; Doughty, H.; Snoek, C. Tubelet-Contrastive Self-Supervision for Video-Efficient Generalization. arXiv preprint arXiv:2303.11003 arXiv:2303.11003 2023.

- Kong, Q.; Wei, W.; Deng, Z.; Yoshinaga, T.; Murakami, T. Cycle-contrast for self-supervised video representation learning. Advances in Neural Information Processing Systems 2020, 33, 8089–8100. [Google Scholar]

- Diba, A.; Sharma, V.; Safdari, R.; Lotfi, D.; Sarfraz, S.; Stiefelhagen, R.; Van Gool, L. Vi2clr: Video and image for visual contrastive learning of representation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 1502–1512.

- Lin, W.; Mirza, M.J.; Kozinski, M.; Possegger, H.; Kuehne, H.; Bischof, H. Video Test-Time Adaptation for Action Recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 22952–22961.

- Lin, J.; Zhang, R.; Ganz, F.; Han, S.; Zhu, J.Y. Enhancing Unsupervised Video Representation Learning by Decoupling the Scene and the Motion. AAAI, 2021.

- Wang, J.; Gao, Y.; Li, K.; Lin, Y.; Ma, A.J.; Cheng, H.; Peng, P.; Huang, F.; Ji, R.; Sun, X. Removing the background by adding the background: Towards background robust self-supervised video representation learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11804–11813.

- Zhang, M.; Wang, J.; Ma, A.J. Suppressing Static Visual Cues via Normalizing Flows for Self-Supervised Video Representation Learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 3300–3308.

- Tian, F.; Fan, J.; Yu, X.; Du, S.; Song, M.; Zhao, Y. TCVM: Temporal Contrasting Video Montage Framework for Self-supervised Video Representation Learning. Proceedings of the Asian Conference on Computer Vision, 2022, pp. 1539–1555.

- Ding, S.; Qian, R.; Xiong, H. Dual contrastive learning for spatio-temporal representation. Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 5649–5658.

- Akar, A.; Senturk, U.U.; Ikizler-Cinbis, N. MAC: Mask-Augmentation for Motion-Aware Video Representation Learning. 33rd British Machine Vision Conference 2022, BMVC 2022, London, UK, November 21-24, 2022. BMVA Press, 2022.

- Assefa, M.; Jiang, W.; Gedamu, K.; Yilma, G.; Kumeda, B.; Ayalew, M. Self-Supervised Scene-Debiasing for Video Representation Learning via Background Patching. IEEE Transactions on Multimedia 2022. [Google Scholar] [CrossRef]

- Kim, J.; Kim, T.; Shim, M.; Han, D.; Wee, D.; Kim, J. Spatiotemporal Augmentation on Selective Frequencies for Video Representation Learning. arXiv preprint arXiv:2204.03865 arXiv:2204.03865 2022.

- Chen, B.; Selvaraju, R.R.; Chang, S.F.; Niebles, J.C.; Naik, N. Previts: contrastive pretraining with video tracking supervision. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 1560–1570.

- Huang, L.; Liu, Y.; Wang, B.; Pan, P.; Xu, Y.; Jin, R. Self-supervised video representation learning by context and motion decoupling. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 13886–13895.

- Ding, S.; Li, M.; Yang, T.; Qian, R.; Xu, H.; Chen, Q.; Wang, J.; Xiong, H. Motion-aware contrastive video representation learning via foreground-background merging. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9716–9726.

- Liu, J.; Cheng, Y.; Zhang, Y.; Zhao, R.W.; Feng, R. Self-Supervised Video Representation Learning with Motion-Contrastive Perception. 2022 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2022, pp. 1–6.

- Nie, M.; Quan, Z.; Ding, W.; Yang, W. Enhancing motion visual cues for self-supervised video representation learning. Engineering Applications of Artificial Intelligence 2023, 123, 106203.

- Gupta, R.; Akhtar, N.; Mian, A.; Shah, M. Contrastive self-supervised learning leads to higher adversarial susceptibility. Proceedings of the AAAI Conference on Artificial Intelligence, 2023, Vol. 37, pp. 14838–14846.

- Yu, Y.; Lee, S.; Kim, G.; Song, Y. Self-supervised learning of compressed video representations. International Conference on Learning Representations, 2021.

- Hwang, S.; Yoon, J.; Lee, Y.; Hwang, S.J. Efficient Video Representation Learning via Masked Video Modeling with Motion-centric Token Selection. arXiv preprint arXiv:2211.10636, 2022.

- Li, Q.; Huang, X.; Wan, Z.; Hu, L.; Wu, S.; Zhang, J.; Shan, S.; Wang, Z. Data-Efficient Masked Video Modeling for Self-supervised Action Recognition. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 2723–2733.

- Qing, Z.; Zhang, S.; Huang, Z.; Wang, X.; Wang, Y.; Lv, Y.; Gao, C.; Sang, N. MAR: Masked autoencoders for efficient action recognition. IEEE Transactions on Multimedia 2023.

- Dave, I.R.; Rizve, M.N.; Chen, C.; Shah, M. TimeBalance: Temporally-invariant and temporally-distinctive video representations for semi-supervised action recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 2341–2352.

- Escorcia, V.; Guerrero, R.; Zhu, X.; Martinez, B. SOS! Self-supervised Learning over Sets of Handled Objects in Egocentric Action Recognition. Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XIII. Springer, 2022, pp. 604–620.

- Xue, Z.S.; Grauman, K. Learning fine-grained view-invariant representations from unpaired ego-exo videos via temporal alignment. Advances in Neural Information Processing Systems 2024, 36.

- Dave, I.R.; Chen, C.; Shah, M. SPAct: Self-supervised privacy preservation for action recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 20164–20173.

- Fioresi, J.; Dave, I.R.; Shah, M. TeD-SPAD: Temporal distinctiveness for self-supervised privacy-preservation for video anomaly detection. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 13598–13609.

- Rehman, Y.A.U.; Gao, Y.; Shen, J.; de Gusmao, P.P.B.; Lane, N. Federated self-supervised learning for video understanding. European Conference on Computer Vision. Springer, 2022, pp. 506–522.

- Bardes, A.; Ponce, J.; LeCun, Y. MC-JEPA: A joint-embedding predictive architecture for self-supervised learning of motion and content features. arXiv preprint arXiv:2307.12698, 2023.

- Lai, Q.; Zeng, A.; Wang, Y.; Cao, L.; Li, Y.; Xu, Q. Self-supervised Video Representation Learning via Capturing Semantic Changes Indicated by Saccades. IEEE Transactions on Circuits and Systems for Video Technology 2023.

- Lin, W.; Ding, X.; Huang, Y.; Zeng, H. Self-Supervised Video-Based Action Recognition With Disturbances. IEEE Transactions on Image Processing 2023.

- Qian, R.; Ding, S.; Liu, X.; Lin, D. Static and Dynamic Concepts for Self-supervised Video Representation Learning. Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXVI. Springer, 2022, pp. 145–164.

- Lin, W.; Liu, X.; Zhuang, Y.; Ding, X.; Tu, X.; Huang, Y.; Zeng, H. Unsupervised Video-based Action Recognition With Imagining Motion And Perceiving Appearance. IEEE Transactions on Circuits and Systems for Video Technology 2022.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402, 2012.

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: a large video database for human motion recognition. 2011 International conference on computer vision. IEEE, 2011, pp. 2556–2563.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; others. The kinetics human action video dataset. arXiv preprint arXiv:1705.06950, 2017.

- Goyal, R.; Ebrahimi Kahou, S.; Michalski, V.; Materzynska, J.; Westphal, S.; Kim, H.; Haenel, V.; Fruend, I.; Yianilos, P.; Mueller-Freitag, M.; others. The "something something" video database for learning and evaluating visual common sense. Proceedings of the IEEE international conference on computer vision, 2017, pp. 5842–5850.

- Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 1010–1019.

- Shao, D.; Zhao, Y.; Dai, B.; Lin, D. FineGym: A hierarchical video dataset for fine-grained action understanding. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 2616–2625.

- Li, Y.; Li, Y.; Vasconcelos, N. RESOUND: Towards action recognition without representation bias. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 513–528.

- Thoker, F.M.; Doughty, H.; Bagad, P.; Snoek, C.G. How Severe Is Benchmark-Sensitivity in Video Self-supervised Learning? Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXIV. Springer, 2022, pp. 632–652.

- Diba, A.; Fayyaz, M.; Sharma, V.; Paluri, M.; Gall, J.; Stiefelhagen, R.; Van Gool, L. Large Scale Holistic Video Understanding. European Conference on Computer Vision. Springer, 2020, pp. 593–610.

- Tang, Y.; Ding, D.; Rao, Y.; Zheng, Y.; Zhang, D.; Zhao, L.; Lu, J.; Zhou, J. COIN: A large-scale dataset for comprehensive instructional video analysis. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 1207–1216.

- Gupta, R.; Roy, A.; Christensen, C.; Kim, S.; Gerard, S.; Cincebeaux, M.; Divakaran, A.; Grindal, T.; Shah, M. Class prototypes based contrastive learning for classifying multi-label and fine-grained educational videos. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2023, pp. 19923–19933.

- Zhang, W.; Zhu, M.; Derpanis, K.G. From actemes to action: A strongly-supervised representation for detailed action understanding. Proceedings of the IEEE international conference on computer vision, 2013, pp. 2248–2255.

- Sermanet, P.; Xu, K.; Levine, S. Unsupervised Perceptual Rewards for Imitation Learning. Proceedings of Robotics: Science and Systems, 2017.

- Sermanet, P.; Lynch, C.; Hsu, J.; Levine, S. Time-contrastive networks: Self-supervised learning from multi-view observation. 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). IEEE, 2017, pp. 486–487.

- Pont-Tuset, J.; Perazzi, F.; Caelles, S.; Arbeláez, P.; Sorkine-Hornung, A.; Van Gool, L. The 2017 DAVIS challenge on video object segmentation. arXiv preprint arXiv:1704.00675, 2017.

- Li, X.; Liu, S.; De Mello, S.; Wang, X.; Kautz, J.; Yang, M.H. Joint-task self-supervised learning for temporal correspondence. Advances in Neural Information Processing Systems 2019, 32.

- Gordon, D.; Ehsani, K.; Fox, D.; Farhadi, A. Watching the world go by: Representation learning from unlabeled videos. arXiv preprint arXiv:2003.07990, 2020.

- Wang, X.; Jabri, A.; Efros, A.A. Learning correspondence from the cycle-consistency of time. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 2566–2576.

- Li, R.; Zhou, S.; Liu, D. Learning Fine-Grained Features for Pixel-wise Video Correspondences. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 9632–9641.

- Jhuang, H.; Gall, J.; Zuffi, S.; Schmid, C.; Black, M.J. Towards understanding action recognition. International Conf. on Computer Vision (ICCV), 2013, pp. 3192–3199.

- Yu, S.; Tan, D.; Tan, T. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. 18th International Conference on Pattern Recognition (ICPR’06). IEEE, 2006, Vol. 4, pp. 441–444.

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, October 7-13, 2012, Proceedings, Part VI. Springer, 2012, pp. 611–625.

- Menze, M.; Heipke, C.; Geiger, A. Joint 3D estimation of vehicles and scene flow. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2015, 2, 427–434.

- Sigurdsson, G.A.; Gupta, A.; Schmid, C.; Farhadi, A.; Alahari, K. Charades-ego: A large-scale dataset of paired third and first person videos. arXiv preprint arXiv:1804.09626, 2018.

- Zhang, Y.; Doughty, H.; Shao, L.; Snoek, C.G. Audio-adaptive activity recognition across video domains. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 13791–13800.

- Roitberg, A.; Schneider, D.; Djamal, A.; Seibold, C.; Reiß, S.; Stiefelhagen, R. Let’s play for action: Recognizing activities of daily living by learning from life simulation video games. 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp. 8563–8569.


| Method | Phase Classification (PennAction) | Phase Classification (Pouring) | Phase Progression (PennAction) | Phase Progression (Pouring) | Kendall Tau (PennAction) | Kendall Tau (Pouring) |
|---|---|---|---|---|---|---|
| SaL [13] | 68.2 | - | 39.0 | - | 47.4 | - |
| TCN [164] | 68.1 | 89.5 | 38.3 | 80.4 | 54.2 | 85.2 |
| TCLR [94] | 79.9 | - | - | - | 82.0 | - |
| CARL [45] | 93.1 | 93.7 | 91.8 | 93.5 | 98.5 | 99.2 |
| VSP [46] | 93.1 | 93.9 | 92.3 | 94.2 | 98.6 | 99.0 |
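The Kendall Tau columns in the phase-alignment table measure how well frame-level embeddings preserve temporal order across videos. Because Kendall's tau lies in [-1, 1], the values reported there appear to be scaled by 100. The sketch below is a minimal illustration, assuming that each video is a list of per-frame embeddings (plain lists of floats), that every frame of one video is matched to its nearest neighbour in another, and that the final score averages Kendall's tau-a over all ordered pairs of videos. This protocol and the helper names (`nearest_neighbor_indices`, `kendall_tau_a`, `alignment_kendall_tau`) are illustrative assumptions, not the evaluation code of the cited methods.

```python
from itertools import combinations, permutations
from typing import List, Sequence

Embedding = Sequence[float]


def nearest_neighbor_indices(query: Sequence[Embedding], ref: Sequence[Embedding]) -> List[int]:
    """For every query frame, return the index of the closest reference frame (squared Euclidean)."""
    indices = []
    for q in query:
        dists = [sum((a - b) ** 2 for a, b in zip(q, r)) for r in ref]
        indices.append(min(range(len(ref)), key=dists.__getitem__))
    return indices


def kendall_tau_a(seq: Sequence[int]) -> float:
    """Kendall's tau-a between the frame order 0..n-1 and the matched indices in seq.

    Tied pairs count as neither concordant nor discordant.
    """
    n = len(seq)
    if n < 2:
        raise ValueError("need at least two frames")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):  # i < j, so the query order always increases
        if seq[i] < seq[j]:
            concordant += 1
        elif seq[i] > seq[j]:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


def alignment_kendall_tau(videos: Sequence[Sequence[Embedding]]) -> float:
    """Average tau over all ordered pairs of distinct videos (assumed protocol)."""
    if len(videos) < 2:
        raise ValueError("need at least two videos")
    scores = [
        kendall_tau_a(nearest_neighbor_indices(videos[a], videos[b]))
        for a, b in permutations(range(len(videos)), 2)
    ]
    return sum(scores) / len(scores)
```

For two videos whose embeddings advance in lockstep, every nearest-neighbour match preserves frame order and the score is 1.0; matching a video against a time-reversed copy of itself gives -1.0. Tau-a is used here for simplicity; variants that correct for ties would differ only when several frames map to the same neighbour.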

| Method | Venue | DAVIS J&F (mean) | DAVIS J (mean) | DAVIS F (mean) | JHMDB PCK@0.1 | JHMDB PCK@0.2 |
|---|---|---|---|---|---|---|
| VFS [53] | ICCV-2021 | 68.9 | 66.5 | 71.3 | 60.9 | 80.7 |
| UVC [166] | NeurIPS-2019 | 56.3 | 54.5 | 58.1 | 56.0 | 76.6 |
| CRW [51] | NeurIPS-2020 | 67.6 | 64.8 | 70.2 | 59.3 | 80.3 |
| SimSiam [79] | CVPR-2021 | 66.3 | 64.5 | 68.2 | 58.4 | 77.5 |
| MoCo [87] | CVPR-2020 | 65.4 | 63.2 | 67.6 | 60.4 | 79.3 |
| VINCE [167] | arXiv-2020 | 65.6 | 63.4 | 67.8 | 58.2 | 76.3 |
| TimeCycle [168] | CVPR-2019 | 40.7 | 41.9 | 39.4 | 57.7 | 78.5 |
| MCRW [52] | CVPR-2022 | 57.9 | - | - | 62.6 | 80.9 |
| FGVC [169] | ICCV-2023 | 77.4 | 70.5 | 74.4 | 66.8 | - |
| NMS [33] | AAAI-2024 | 62.1 | 60.5 | 63.6 | 43.1 | 69.7 |
| ST-MAE [66] | NeurIPS-2022 | 53.5 | 52.6 | 54.4 | - | - |
| VideoMAE [65] | NeurIPS-2022 | 53.8 | 53.2 | 54.4 | 36.5 | 62.1 |
| MotionMAE [68] | arXiv-2022 | 56.8 | 55.8 | 57.8 | - | - |
| SVT [40] | CVPR-2022 | 48.5 | 46.8 | 50.1 | 35.3 | 62.66 |
| StT [54] | CVPR-2023 | 74.1 | 71.1 | 77.1 | 63.1 | 82.9 |
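In the correspondence table, DAVIS region similarity J is the intersection-over-union between predicted and ground-truth masks, F is a boundary (contour) F-measure, and J&F is the mean of the two; for example, the VFS row gives (66.5 + 71.3) / 2 = 68.9. JHMDB PCK@α is the fraction of propagated keypoints that land within a distance threshold of the ground truth. The sketch below illustrates J, the J&F average and PCK under stated assumptions: masks are sets of foreground pixel coordinates, the contour measure F is omitted, and the PCK threshold is α times the larger side of the tight box around the ground-truth keypoints (evaluation scripts may define this reference scale differently). The function names are hypothetical.

```python
from typing import Sequence, Set, Tuple

Pixel = Tuple[int, int]      # (row, col) of a foreground pixel
Point = Tuple[float, float]  # (x, y) keypoint location


def region_similarity(pred: Set[Pixel], gt: Set[Pixel]) -> float:
    """Region similarity J: intersection-over-union of predicted and ground-truth masks."""
    union = pred | gt
    if not union:
        return 1.0  # both masks empty; scoring this as a perfect match is an assumption
    return len(pred & gt) / len(union)


def jf_mean(j_mean: float, f_mean: float) -> float:
    """J&F mean: arithmetic mean of the region (J) and contour (F) scores."""
    return (j_mean + f_mean) / 2


def pck(pred: Sequence[Point], gt: Sequence[Point], alpha: float) -> float:
    """Fraction of keypoints predicted within alpha * max(box width, box height) of ground truth.

    The box is the tight bounding box of the ground-truth keypoints (an assumption).
    """
    if not gt or len(pred) != len(gt):
        raise ValueError("pred and gt must be non-empty and of equal length")
    xs = [x for x, _ in gt]
    ys = [y for _, y in gt]
    threshold = alpha * max(max(xs) - min(xs), max(ys) - min(ys))
    hits = sum(
        1
        for (px, py), (gx, gy) in zip(pred, gt)
        if ((px - gx) ** 2 + (py - gy) ** 2) ** 0.5 <= threshold
    )
    return hits / len(gt)
```

Raising α from 0.1 to 0.2 doubles the tolerance radius, which is why every method scores higher in the PCK@0.2 column than in the PCK@0.1 column.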

| Method | Venue | Pretraining Dataset | NM | BG | CL | Mean |
|---|---|---|---|---|---|---|
| GaitSSB [110] | T-PAMI (2023) | GaitLU-1M (1 million videos) | 83.30 | 75.60 | 28.70 | 62.53 |
| BYOL [86] | CVPR (2022) | Kinetics400 (160k videos) | 90.65 | 80.51 | 28.59 | 66.58 |
| VideoMAE [65] | NeurIPS (2022) | Kinetics400 (160k videos) | 65.30 | 57.21 | 21.40 | 47.97 |
| NMS [33] | AAAI (2024) | Kinetics400 (160k videos) | 98.60 | 92.57 | 28.66 | 73.28 |
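In the gait table, NM, BG and CL conventionally denote the normal-walking, bag-carrying and clothing-change conditions of CASIA-B-style gait benchmarks (our reading of the abbreviations, not stated in the table itself). The Mean column is consistent with an unweighted average of these three conditions rounded to two decimals, as the following sketch reproduces from the listed scores. The dictionary and `condition_mean` helper are illustrative.

```python
from typing import Dict, Tuple

# NM, BG, CL scores copied from the gait recognition table.
GAIT_RESULTS: Dict[str, Tuple[float, float, float]] = {
    "GaitSSB": (83.30, 75.60, 28.70),
    "BYOL": (90.65, 80.51, 28.59),
    "VideoMAE": (65.30, 57.21, 21.40),
    "NMS": (98.60, 92.57, 28.66),
}


def condition_mean(scores: Tuple[float, ...]) -> float:
    """Unweighted mean over walking conditions, rounded to two decimals like the table."""
    return round(sum(scores) / len(scores), 2)


if __name__ == "__main__":
    for method, scores in GAIT_RESULTS.items():
        print(f"{method}: {condition_mean(scores):.2f}")
```

Because the mean weights the three conditions equally, the low clothing-change (CL) scores, which stay below 30 for every method, pull all averages down substantially.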

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).