Submitted: 01 August 2024

Posted: 06 August 2024


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Literature Reviews

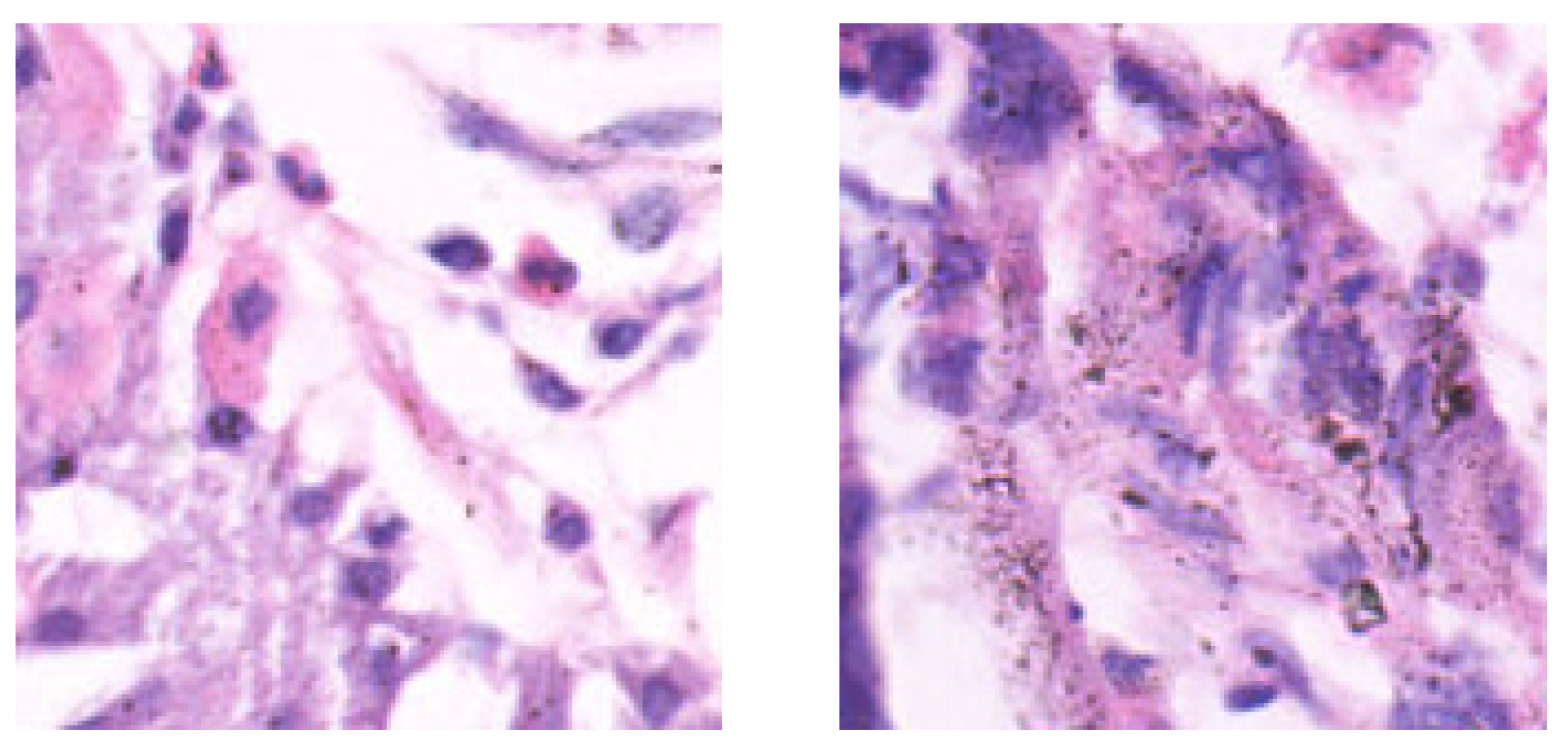

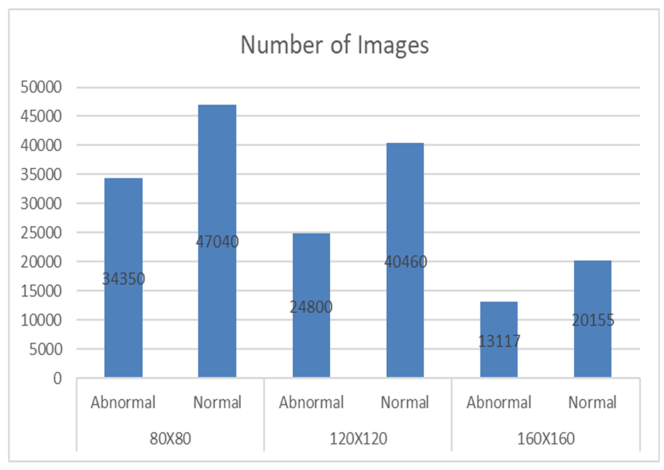

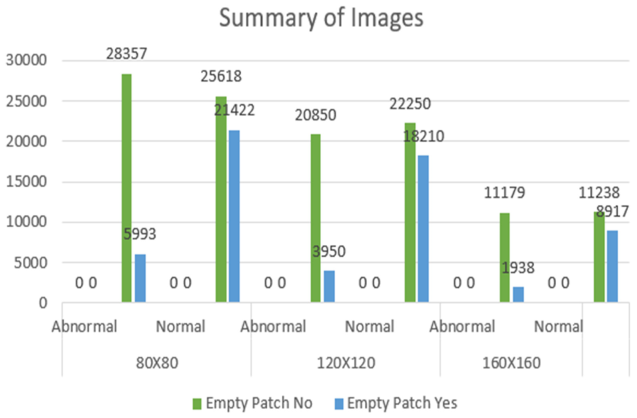

2.2. Dataset Description


2.3. Methodology Overview

2.4. Empty Patch Removal Process

2.5. Pretrained Networks as Base Models

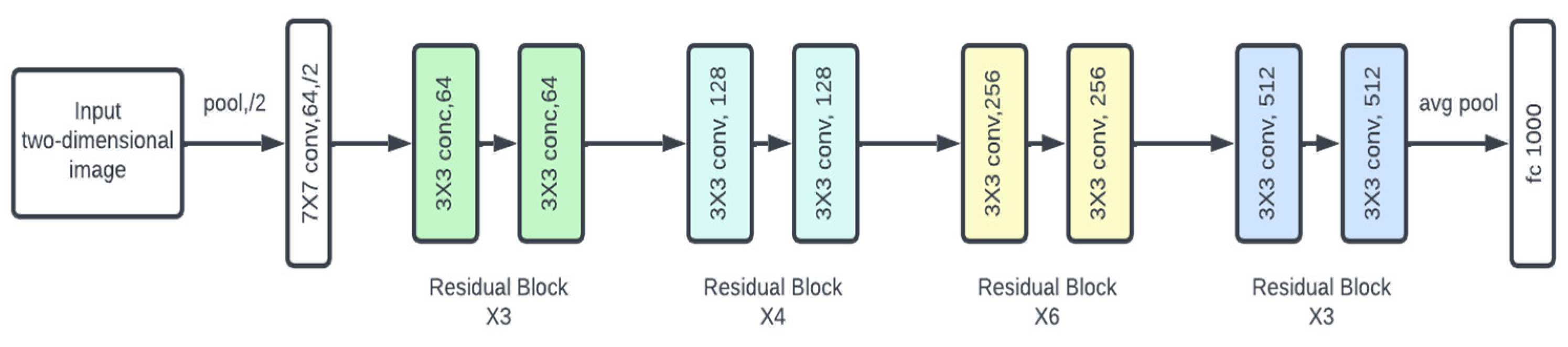

- ResNet34

- Architecture:

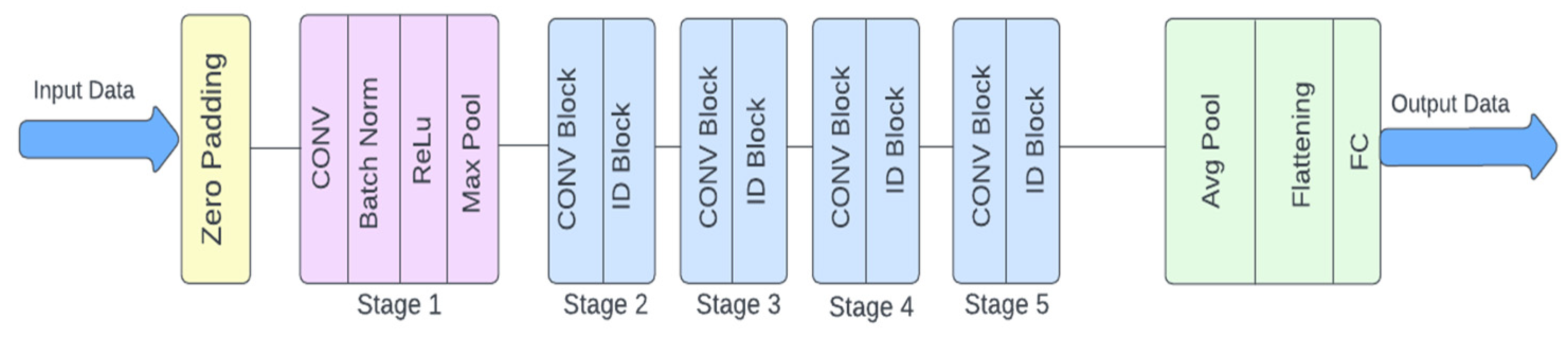

- ResNet50

- Architecture:

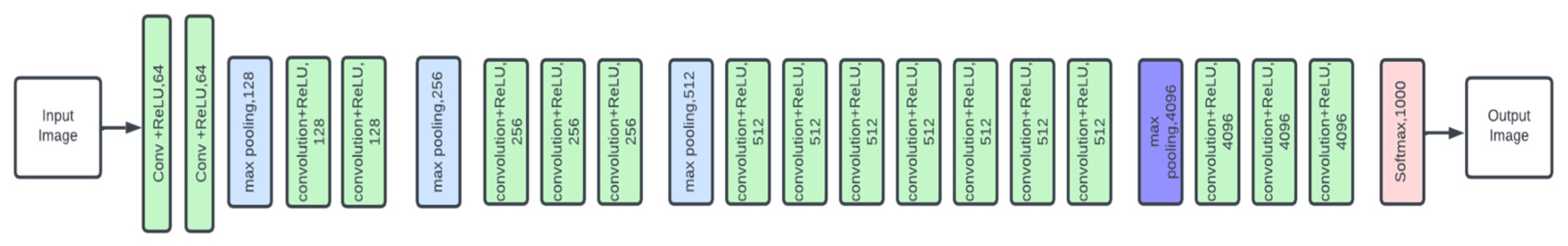

- VGGNet16

- Architecture:

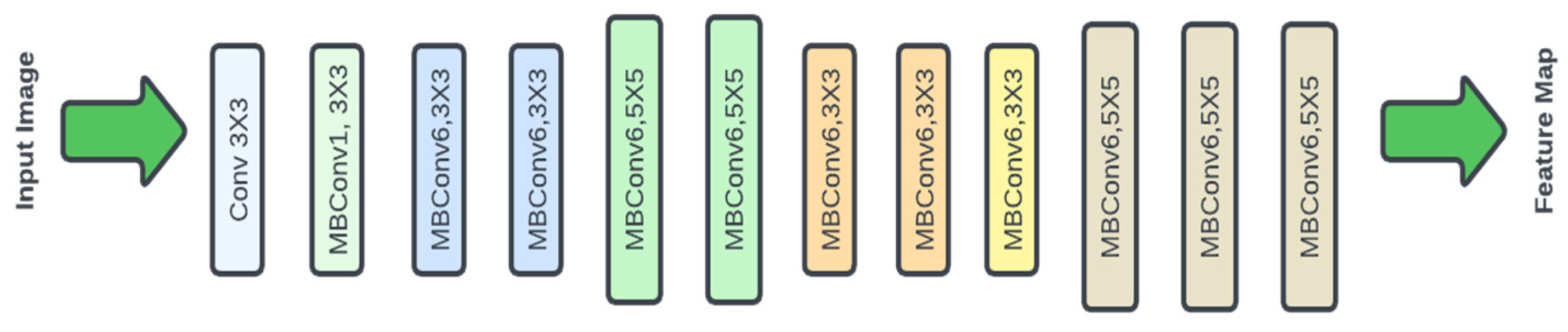

- EfficientNet Architecture:

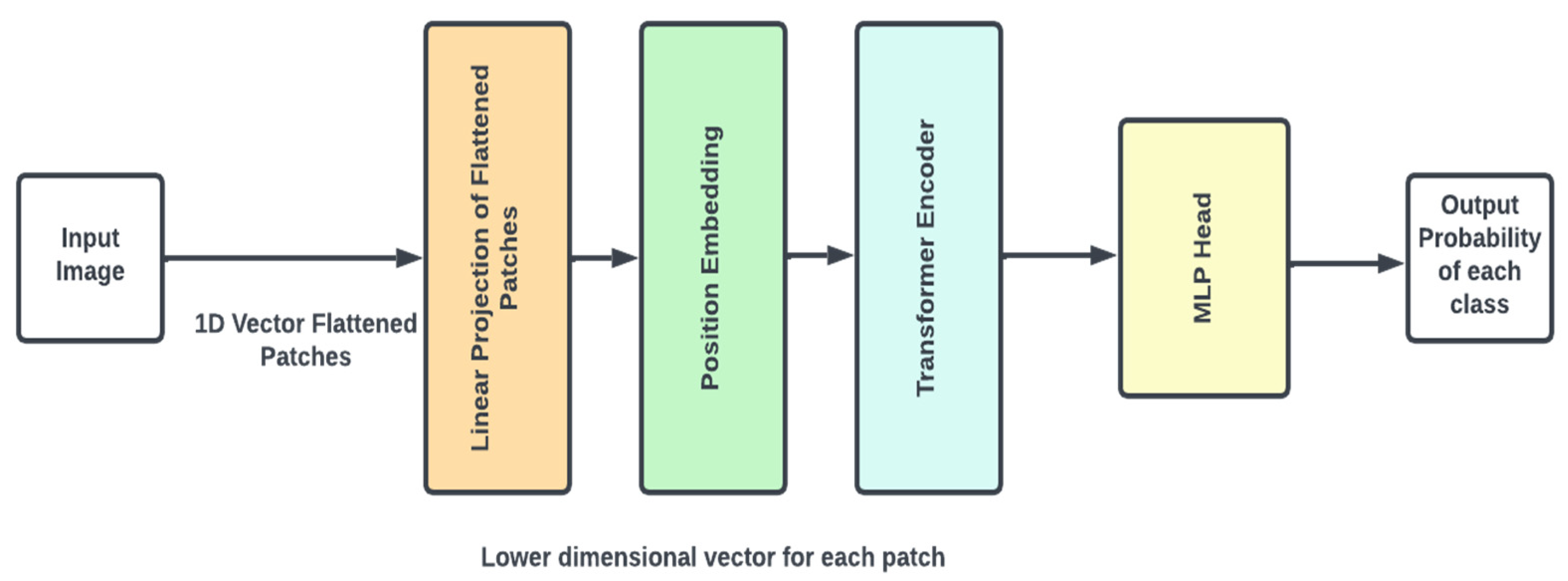

- VitNet Architecture:

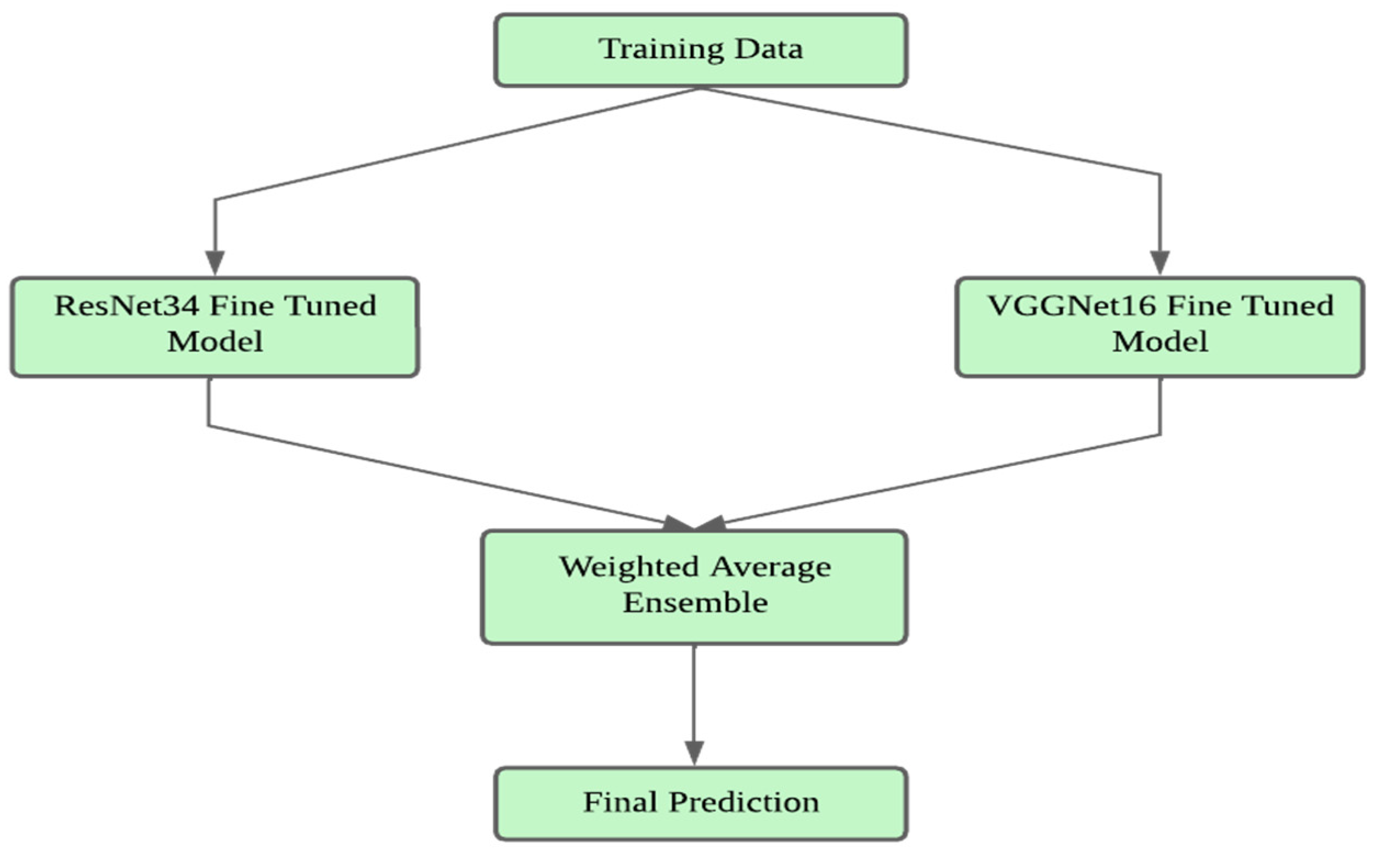

- Ensemble Architecture:

2.6. Experimental Setting

3. Results

| Model | Fold | Train Accuracy (%) | Train Loss | Val Accuracy (%) | Val Loss | Jaccard Index | AUC | Specificity | Sensitivity |
|---|---|---|---|---|---|---|---|---|---|
| ResNet34 | 1 | 98.7508 | 0.0331 | 93.6131 | 0.2175 | 0.7447 | 0.9719 | 0.9604 | 0.8494 |
| ResNet34 | 2 | 97.8192 | 0.0604 | 93.7206 | 0.2092 | 0.7472 | 0.9717 | 0.9638 | 0.8427 |
| ResNet34 | 3 | 98.8546 | 0.0329 | 93.8111 | 0.2210 | 0.7384 | 0.9760 | 0.9699 | 0.8204 |
| ResNet34 | 4 | 97.7773 | 0.0596 | 93.8226 | 0.1724 | 0.7466 | 0.9777 | 0.9625 | 0.8491 |
| ResNet34 | 5 | 98.1767 | 0.0487 | 93.7910 | 0.2399 | 0.7640 | 0.9754 | 0.9518 | 0.8900 |
| ResNet50 | 1 | 98.4818 | 0.0409 | 93.7408 | 0.1936 | 0.8763 | 0.8979 | 0.9682 | 0.8277 |
| ResNet50 | 2 | 96.2419 | 0.0958 | 94.2439 | 0.1880 | 0.8860 | 0.9069 | 0.9703 | 0.8435 |
| ResNet50 | 3 | 98.4410 | 0.0413 | 93.4971 | 0.2024 | 0.8742 | 0.9042 | 0.9577 | 0.8508 |
| ResNet50 | 4 | 97.0654 | 0.0769 | 92.7688 | 0.2016 | 0.8601 | 0.9071 | 0.9431 | 0.8711 |
| ResNet50 | 5 | 93.5411 | 0.1673 | 93.8459 | 0.1869 | 0.8772 | 0.9064 | 0.9648 | 0.8480 |
| VitNet | 1 | 83.6243 | 0.3631 | 83.7591 | 0.3555 | 0.6945 | 0.7132 | 0.9347 | 0.4916 |
| VitNet | 2 | 82.9835 | 0.3816 | 84.2836 | 0.3490 | 0.6969 | 0.7076 | 0.9493 | 0.4660 |
| VitNet | 3 | 78.4974 | 0.4755 | 78.8656 | 0.4560 | 0.5946 | 0.5054 | 0.9988 | 0.0121 |
| VitNet | 4 | 82.5612 | 0.3898 | 84.2841 | 0.3505 | 0.6986 | 0.7015 | 0.9488 | 0.4542 |
| VitNet | 5 | 78.3217 | 0.5231 | 77.4105 | 0.5353 | 0.5674 | 0.5 | 1 | 0 |
| VggNet | 1 | 98.7371 | 0.0366 | 93.7591 | 0.2579 | 0.8763 | 0.9746 | 0.9747 | 0.8053 |
| VggNet | 2 | 96.2693 | 0.0969 | 94.3341 | 0.1785 | 0.8868 | 0.9757 | 0.9738 | 0.8353 |
| VggNet | 3 | 98.5683 | 0.0417 | 93.9220 | 0.2120 | 0.8763 | 0.9751 | 0.9720 | 0.8178 |
| VggNet | 4 | 94.3224 | 0.1519 | 93.7681 | 0.1621 | 0.8756 | 0.9733 | 0.9685 | 0.8245 |
| VggNet | 5 | 98.3636 | 0.0487 | 93.7910 | 0.2329 | 0.8768 | 0.9724 | 0.9693 | 0.8302 |
| EfficientNet | 1 | 74.9474 | 0.5035 | 75.4057 | 0.5142 | 0.6094 | 0.7478 | 0.7172 | 0.7784 |
| EfficientNet | 2 | 82.4435 | 0.3866 | 83.0474 | 0.3685 | 0.6829 | 0.6726 | 0.9421 | 0.4030 |
| EfficientNet | 3 | 81.0359 | 0.4139 | 82.3709 | 0.3855 | 0.6704 | 0.6346 | 0.9463 | 0.3229 |
| EfficientNet | 4 | 82.3379 | 0.3879 | 84.2231 | 0.3531 | 0.6975 | 0.6725 | 0.9419 | 0.4030 |
| EfficientNet | 5 | 82.6571 | 0.3805 | 83.5210 | 0.3651 | 0.6944 | 0.6816 | 0.9401 | 0.4232 |
| Ensemble | 1 | 98.3587 | 0.0436 | 99.3430 | 0.0252 | 0.9867 | 0.9904 | 0.9957 | 0.9850 |
| Ensemble | 2 | 98.5187 | 0.0410 | 99.2421 | 0.0211 | 0.9839 | 0.9875 | 0.9962 | 0.9787 |
| Ensemble | 3 | 98.6001 | 0.0384 | 99.2056 | 0.0237 | 0.9836 | 0.9898 | 0.9936 | 0.9861 |
| Ensemble | 4 | 97.9234 | 0.0627 | 97.8197 | 0.0651 | 0.9562 | 0.9642 | 0.9886 | 0.9398 |
| Ensemble | 5 | 98.5642 | 0.0390 | 99.0869 | 0.0221 | 0.9823 | 0.9866 | 0.9943 | 0.9789 |
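The specificity, sensitivity and Jaccard columns in the table above all derive from a binary confusion matrix. The following is a minimal sketch of those relationships, not the authors' evaluation code: the function name `binary_metrics` and the counts are hypothetical, and because the averaging used for the reported Jaccard index is not stated, the positive-class definition used here is an assumption.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute per-fold metrics from binary confusion-matrix counts."""

    def ratio(num: int, den: int) -> float:
        # Return 0.0 for an empty denominator instead of raising.
        return num / den if den else 0.0

    sensitivity = ratio(tp, tp + fn)  # true positive rate (recall)
    specificity = ratio(tn, tn + fp)  # true negative rate
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        # Assumption: Jaccard index of the positive class only.
        "jaccard": ratio(tp, tp + fp + fn),
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


# Hypothetical counts: a classifier that labels every patch as negative.
degenerate = binary_metrics(tp=0, fp=0, tn=90, fn=10)
print(degenerate)
```

A classifier that never predicts the positive class yields sensitivity 0, specificity 1 and balanced accuracy 0.5, which is the pattern in the VitNet fold 5 row. For several rows (for example VitNet fold 1 and Ensemble fold 1) the reported AUC equals the mean of specificity and sensitivity, which is what an AUC computed from hard class labels rather than probabilities would produce; whether that is how those values were obtained is not stated in the source.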

| Model | Fold | Train Accuracy (%) | Train Loss | Val Accuracy (%) | Val Loss | Jaccard Index | AUC | Specificity | Sensitivity |
|---|---|---|---|---|---|---|---|---|---|
| ResNet34 | 1 | 98.8627 | 0.0327 | 96.8167 | 0.1097 | 0.8347 | 0.9879 | 0.9812 | 0.9075 |
| ResNet34 | 2 | 99.2847 | 0.0187 | 97.0297 | 0.1327 | 0.8444 | 0.9815 | 0.9884 | 0.8884 |
| ResNet34 | 3 | 98.9145 | 0.0336 | 96.9364 | 0.1025 | 0.8392 | 0.9907 | 0.9901 | 0.8762 |
| ResNet34 | 4 | 99.1640 | 0.0234 | 96.4475 | 0.1208 | 0.8175 | 0.9879 | 0.9836 | 0.8778 |
| ResNet34 | 5 | 99.2957 | 0.0214 | 96.6893 | 0.1074 | 0.8149 | 0.9897 | 0.9877 | 0.8642 |
| ResNet50 | 1 | 99.5552 | 0.0125 | 96.7257 | 0.1120 | 0.9316 | 0.9518 | 0.9756 | 0.9281 |
| ResNet50 | 2 | 99.2449 | 0.0213 | 96.9856 | 0.1156 | 0.9372 | 0.9254 | 0.9951 | 0.8557 |
| ResNet50 | 3 | 99.7229 | 0.0089 | 97.0930 | 0.1198 | 0.9388 | 0.9493 | 0.9833 | 0.9154 |
| ResNet50 | 4 | 99.5007 | 0.0142 | 96.8166 | 0.1253 | 0.9339 | 0.9379 | 0.9853 | 0.8905 |
| ResNet50 | 5 | 99.5042 | 0.0147 | 97.0748 | 0.0995 | 0.9379 | 0.9406 | 0.9860 | 0.8951 |
| VitNet | 1 | 86.6963 | 0.3109 | 87.4715 | 0.2875 | 0.7518 | 0.7395 | 0.9488 | 0.5301 |
| VitNet | 2 | 84.6948 | 0.3495 | 86.1826 | 0.3185 | 0.7254 | 0.7123 | 0.9470 | 0.4775 |
| VitNet | 3 | 82.2817 | 0.4486 | 81.7531 | 0.4287 | 0.6310 | 0.5 | 1 | 0 |
| VitNet | 4 | 85.7447 | 0.3298 | 87.9123 | 0.2929 | 0.7581 | 0.7607 | 0.9464 | 0.5750 |
| VitNet | 5 | 84.2084 | 0.3465 | 86.3265 | 0.3085 | 0.7231 | 0.6767 | 0.9582 | 0.3951 |
| VggNet | 1 | 76.6185 | 0.4764 | 75.6162 | 0.5053 | 0.6152 | 0.7657 | 0.7776 | 0.7538 |
| VggNet | 2 | 88.3740 | 0.2758 | 89.1768 | 0.2611 | 0.7889 | 0.7476 | 0.9592 | 0.5361 |
| VggNet | 3 | 88.1351 | 0.2822 | 88.3388 | 0.2732 | 0.7756 | 0.7372 | 0.9605 | 0.5139 |
| VggNet | 4 | 86.6576 | 0.3140 | 88.2826 | 0.2813 | 0.7673 | 0.6979 | 0.9591 | 0.4368 |
| VggNet | 5 | 88.3702 | 0.2745 | 88.4198 | 0.2781 | 0.7779 | 0.7460 | 0.9594 | 0.5325 |
| EfficientNet | 1 | 99.1949 | 0.0265 | 96.8167 | 0.1372 | 0.9340 | 0.9878 | 0.9867 | 0.8818 |
| EfficientNet | 2 | 99.8978 | 0.0054 | 96.9416 | 0.1716 | 0.9363 | 0.9886 | 0.9873 | 0.8884 |
| EfficientNet | 3 | 98.4339 | 0.0464 | 97.1824 | 0.1130 | 0.9403 | 0.9883 | 0.9901 | 0.8897 |
| EfficientNet | 4 | 96.4544 | 0.1011 | 96.0322 | 0.1098 | 0.9176 | 0.9860 | 0.9757 | 0.8905 |
| EfficientNet | 5 | 99.8366 | 0.0061 | 96.9614 | 0.1410 | 0.9338 | 0.9902 | 0.98363 | 0.9005 |
| Ensemble | 1 | 97.2581 | 0.0728 | 97.6125 | 0.0646 | 0.9501 | 0.9593 | 0.9853 | 0.9332 |
| Ensemble | 2 | 97.7973 | 0.0622 | 98.1738 | 0.0533 | 0.9627 | 0.9647 | 0.9913 | 0.9381 |
| Ensemble | 3 | 97.7894 | 0.0603 | 97.8756 | 0.0618 | 0.9531 | 0.9636 | 0.9874 | 0.9399 |
| Ensemble | 4 | 97.8457 | 0.0625 | 97.5778 | 0.0685 | 0.9486 | 0.9549 | 0.9876 | 0.9223 |
| Ensemble | 5 | 98.9735 | 0.0316 | 99.4250 | 0.0226 | 0.9872 | 0.9895 | 0.9965 | 0.9826 |

| Model | Fold | Train Accuracy (%) | Train Loss | Val Accuracy (%) | Val Loss | Jaccard Index | AUC | Specificity | Sensitivity |
|---|---|---|---|---|---|---|---|---|---|
| ResNet34 | 1 | 99.6311 | 0.0132 | 97.9807 | 0.0648 | 0.8949 | 0.9961 | 0.9887 | 0.9398 |
| ResNet34 | 2 | 99.5496 | 0.0148 | 98.1776 | 0.0656 | 0.9022 | 0.9913 | 0.9922 | 0.9341 |
| ResNet34 | 3 | 99.7337 | 0.0083 | 98.1038 | 0.0535 | 0.8928 | 0.9976 | 0.9912 | 0.9308 |
| ResNet34 | 4 | 97.1184 | 0.0896 | 97.2005 | 0.0821 | 0.8665 | 0.9931 | 0.9806 | 0.9361 |
| ResNet34 | 5 | 99.5437 | 0.0213 | 98.4187 | 0.0738 | 0.9105 | 0.9929 | 0.9862 | 0.9739 |
| ResNet50 | 1 | 99.3660 | 0.0288 | 98.4396 | 0.0579 | 0.9678 | 0.9739 | 0.9904 | 0.9573 |
| ResNet50 | 2 | 99.5842 | 0.0170 | 98.3143 | 0.0592 | 0.9628 | 0.9719 | 0.9894 | 0.9544 |
| ResNet50 | 3 | 99.8263 | 0.0066 | 98.1038 | 0.0776 | 0.9599 | 0.9684 | 0.9874 | 0.9494 |
| ResNet50 | 4 | 99.9538 | 0.0018 | 97.9348 | 0.0743 | 0.9574 | 0.9620 | 0.9903 | 0.9338 |
| ResNet50 | 5 | 99.7718 | 0.0112 | 98.3708 | 0.0625 | 0.9648 | 0.9832 | 0.9839 | 0.9826 |
| VitNet | 1 | 82.2614 | 0.4526 | 81.6888 | 0.4532 | 0.6295 | 0.5 | 1 | 0 |
| VitNet | 2 | 84.4919 | 0.3426 | 86.2870 | 0.3141 | 0.7307 | 0.6980 | 0.9555 | 0.4405 |
| VitNet | 3 | 81.9212 | 0.4638 | 83.0248 | 0.4527 | 0.6543 | 0.5 | 1 | 0 |
| VitNet | 4 | 84.3706 | 0.3502 | 84.6718 | 0.3393 | 0.6977 | 0.6841 | 0.9498 | 0.4184 |
| VitNet | 5 | 82.0483 | 0.3951 | 86.3919 | 0.3069 | 0.7274 | 0.6337 | 0.9776 | 0.2898 |
| VggNet | 1 | 77.3246 | 0.4784 | 76.5826 | 0.4820 | 0.6291 | 0.7732 | 0.7643 | 0.7822 |
| VggNet | 2 | 90.0875 | 0.2296 | 93.0702 | 0.1838 | 0.8607 | 0.7924 | 0.9604 | 0.6244 |
| VggNet | 3 | 91.0623 | 0.2155 | 92.8018 | 0.1911 | 0.8572 | 0.8139 | 0.9641 | 0.6636 |
| VggNet | 4 | 91.0069 | 0.2178 | 93.0925 | 0.1655 | 0.8589 | 0.8168 | 0.9628 | 0.6709 |
| VggNet | 5 | 91.4476 | 0.2077 | 92.0146 | 0.1945 | 0.8444 | 0.8186 | 0.9659 | 0.6712 |
| EfficientNet | 1 | 99.9769 | 0.0014 | 98.2101 | 0.1121 | 0.9623 | 0.9946 | 0.9915 | 0.9398 |
| EfficientNet | 2 | 99.6882 | 0.0098 | 97.9498 | 0.0795 | 0.9584 | 0.9944 | 0.9952 | 0.9088 |
| EfficientNet | 3 | 98.8773 | 0.0347 | 97.9683 | 0.0896 | 0.9579 | 0.9921 | 0.9934 | 0.9122 |
| EfficientNet | 4 | 99.2738 | 0.0244 | 97.2005 | 0.0940 | 0.9464 | 0.9912 | 0.9834 | 0.9243 |
| EfficientNet | 5 | 99.6692 | 0.0091 | 98.1312 | 0.0814 | 0.9586 | 0.9948 | 0.9896 | 0.9391 |
| Ensemble | 1 | 98.4785 | 0.0412 | 98.8067 | 0.0313 | 0.9751 | 0.9829 | 0.9910 | 0.9749 |
| Ensemble | 2 | 99.0877 | 0.0268 | 98.9066 | 0.0325 | 0.9753 | 0.9775 | 0.9955 | 0.9594 |
| Ensemble | 3 | 99.1203 | 0.0298 | 99.1873 | 0.0260 | 0.9830 | 0.9866 | 0.9945 | 0.9787 |
| Ensemble | 4 | 99.4813 | 0.0150 | 99.7246 | 0.0079 | 0.9942 | 0.9964 | 0.9977 | 0.9952 |
| Ensemble | 5 | 98.9165 | 0.0319 | 99.3291 | 0.0245 | 0.9836 | 0.9901 | 0.9948 | 0.9855 |
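Per-fold rows are usually easier to compare once collapsed into a mean and spread per model. As a small illustration, the sketch below aggregates the five Ensemble validation accuracies copied from the table directly above; the helper name `summarize` is hypothetical, and the choice of the sample standard deviation is an assumption rather than something the source specifies.

```python
from statistics import mean, stdev

# Ensemble validation accuracy (%) for folds 1-5, copied from the table above.
ensemble_val_acc = [98.8067, 98.9066, 99.1873, 99.7246, 99.3291]


def summarize(values: list[float]) -> tuple[float, float]:
    """Return the mean and sample standard deviation across folds."""
    return mean(values), stdev(values)


avg, spread = summarize(ensemble_val_acc)
print(f"Ensemble val accuracy: {avg:.2f} ± {spread:.2f} %")
```

The same helper can be applied to any other model or metric column to compare models by a single summary figure rather than by individual folds.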

4. Discussion

4.1. Future Directions

5. Conclusion

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).