1. Introduction

Women's football is experiencing growth in participation, as reflected by increases in federation licenses [1]. This growth also creates a need for professionalization and sports organization from the elite level down to early ages. This professionalization is evident not only in the increased competitive density [2] and physical demands in elite teams but also in lower categories [3]. Given this structural change and the increased frequency of competitions at young ages in international contexts, it is essential for the technical staff of national teams to optimize load management and recovery strategies [4] to minimize the risk of injury and optimize sports performance [5,6,7,8].

Previous research indicates that sports performance specialists consider the reduction of recovery times between competitions, which leads to congested competitive periods and accumulated fatigue, as the primary risk for non-contact injuries and overtraining [1]. To meet these objectives, external and internal training/competition load monitoring strategies are widely used [9,10,11], as well as subjective fatigue markers [12,13,14].

To adjust training loads, coaches and trainers use periodization models based on the external load performed, the internal load response, and the recovery perceived by the players [15]. In this regard, external load is quantified by analyzing players' locomotor/mechanical and physiological profiles from various metrics (total distance, distance per minute, high-intensity distance, accelerations, decelerations, etc.) derived from Global Positioning Systems (GPS) [9,10,11]. On the other hand, perceived intensity during training/competition shows associations with objective internal load markers, such as heart rate and time spent in different training zones [16]. Due to its ease of data collection, the primary method used to quantify perceived internal load is the session rating of perceived exertion (sRPE), calculated from exposure minutes and the differential rating of perceived exertion (RPE) reported by the athletes [17].

Additionally, questionnaires about players' well-being have proven to be a sensitive tool for identifying the state of adaptation to the loads imposed during competitive microcycles, both in microcycles with one competition per week [13,14] and in periods with up to three weekly competitions [2]. These questionnaires assess various items such as fatigue, sleep quality, muscle soreness, mood, and stress, and can represent how athletes respond to the intensity and volume of football matches and training sessions [12]. Despite some controversy regarding its validity [18], this tool is also widely used by technical teams to guide decisions and adjust the contents and intensities of future training sessions and the distribution of playing time during competition [19].

Despite the demonstrated implications of load control for performance optimization and the reduction of injury and illness risks [20], the literature on training load control strategies during congested periods is limited in international competitions for young female football players [3,21], where the different distribution of playing time during competition can lead to varying weekly training loads, creating mismatches in players' total weekly load [11,22]. To balance these differences in training loads created by the competition itself, coaches plan the microcycle differently for starters and non-starters [23,24]. Indeed, on the day after the match (MD+1), non-starters, generally those who play less than 60 minutes, undergo a compensation session. Meanwhile, starters perform less intense and lower-volume training to facilitate recovery and balance readiness between both groups for the rest of the microcycle. Non-starting players in the English Premier League, despite compensation sessions, experience a much lower training load than starters, especially in high-intensity running [25]. Specifically, in women's football, it has also been reported that throughout a season, starting players experience higher internal loads, including respiratory RPE, leg RPE, and cognitive RPE for total weekly load, match load, monotony, and strain [11]. Therefore, implementing daily monitoring can help balance differences in training loads between starters and non-starters, optimizing performance and reducing injury risk. It is crucial for coaches and fitness trainers to adjust their training plans according to individual needs and game loads, using monitoring tools and well-being questionnaires to guide their decisions.

However, most of the scientific literature available to date comes from professional club environments with adult subjects [2], without information on these congested periods in young female international football players. This means that decisions about training control and adjustment for young female players are often based on results from senior male [2] or female players [10], which complicates the proper adjustment of training doses in this specific context. For example, the physical characteristics and different maturation states of young players can lead to different physiological responses to training and competition demands compared to other populations, such as young male or adult female football players [26]. For all these reasons, and due to the risks associated with maturation processes at early ages [27], this research aims to: i) quantify, monitor, and describe differences in internal and external load, subjective well-being, and perceived recovery patterns among international U16 female football players, and ii) compare the total weekly load between starters and non-starters during a development tournament with three matches in seven days.

2. Materials and Methods

Design

To achieve the objectives of this research, an observational study was conducted during a U16 female football development tournament in May of the 2022/23 season. Data were collected over seven days during every training session and match using global positioning systems (GPS), as well as differential perceived exertion [17], recovery [28], and wellness [12] questionnaires to calculate internal load and perceived recovery. Among the 18 players analyzed, there were 3 central defenders, 4 full-backs, 5 midfielders, 3 wingers, and 2 forwards. The classification of players as starters or non-starters was done retrospectively based on the last match played. Specifically, players who were starters in Match 1 were classified as starters during sessions 1 and 2. Starters from Match 2 were classified as starters during sessions 3 and 4, and players who were starters in Match 3 were classified as starters for sessions 5, 6, and 7.
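The session-to-match assignment described above can be sketched in a few lines of Python. This is only an illustration: the player identifiers and line-ups are hypothetical, and the names `SESSION_TO_MATCH` and `classify_role` are not taken from the study.

```python
# Mapping stated in the text: sessions 1-2 -> Match 1,
# sessions 3-4 -> Match 2, sessions 5-7 -> Match 3.
SESSION_TO_MATCH = {1: 1, 2: 1, 3: 2, 4: 2, 5: 3, 6: 3, 7: 3}


def classify_role(player, session, starters_by_match):
    """Return 'starter' or 'non-starter' for a player in a given session."""
    match = SESSION_TO_MATCH[session]
    return "starter" if player in starters_by_match[match] else "non-starter"


# Hypothetical line-ups (assumption, for illustration only).
starters_by_match = {
    1: {"P01", "P02"},
    2: {"P02", "P03"},
    3: {"P01", "P03"},
}

# Role of player P01 in each of the seven sessions.
roles = {s: classify_role("P01", s, starters_by_match) for s in SESSION_TO_MATCH}
```

Under this scheme a player's role can change between microcycles, which is why the comparison between roles is made session by session.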

Participants

Eighteen adolescent outfield players (excluding goalkeepers) from the U16 women's national football team were monitored during each training session and match throughout the camp. The players in this study (weight = 56.85 ± 5.52 kg; height = 1.66 ± 0.06 m; BMI = 20.76 ± 1.35 kg/m²) were recruited by convenience sampling (a non-probabilistic method), as they had been selected by the head coach for the tournament. The analyzed players belonged to the team ranked 7th in the FIFA rankings at the time of the tournament (May 2023).

The criteria for including participants in the research were: i) completing all training sessions (full duration); ii) completing more than 85% of the wellness and training intensity questionnaires during the observed period; iii) not having had any serious injuries in the last 6 months or minor injuries in the last 4 weeks (according to the consensus statement on injuries). Goalkeepers were excluded due to their different physical profiles and distinct training content during the sessions. Data collection was a condition of the players' participation in the tournament and was conducted daily. Although the players are not professionals, provisional approval for the study was obtained from the National Federation involved, with individual player data being collected beforehand as a condition for their participation in the national team [29]. However, all players were informed about the aim and procedures of the study in accordance with the Declaration of Helsinki, and guardians provided informed consent. Ethical approval was obtained from Autonomous University, Madrid. Approval number: CEI-124-2528.

Distribution of Training Sessions and Matches

The training and competition volume, internal load, external load, recovery, and perceived well-being of the players were monitored daily over seven consecutive days during a UEFA U16 Development Tournament in Eerikkilä, Finland. Throughout the observation period, three training sessions (sessions 1, 3, and 6), three matches (sessions 2, 4, and 7), and one gym session (session 5) were conducted. All sessions took place at the Eerikkilä Sport & Outdoor Resort, and data were collected by members of the national team’s technical staff, who are also the authors of this research. All training and match sessions were conducted on the same playing surface. The team consistently used a 1-4-3-3 formation, comprising two center-backs, two full-backs, one central midfielder, two central midfielders, two wingers, and one striker.

The research did not alter the training session designs planned by the national team coach. Our analysis focused exclusively on the team’s primary on-field training sessions. These sessions included warm-up activities, the main phase of training, and subsequent cool-down activities. The training sessions encompassed a wide range of activities, including physical aspects (such as warm-up routines and circuit-based exercises), technical components (including rondos, ball control, passing drills, and finishing exercises), and tactical elements (including positional games, pressure tasks, specific movement patterns, and set pieces). All activities were supervised by the national coach and the rest of the coaching staff.

The periodization established by the technical staff during the tournament was structured into three microcycles, each consisting of one training session and one match. In response to the organizational constraints that this structure generates, the head coach adopted a mixed approach [24]. This approach involved dedicating part of each session to recovery strategies or load reduction to enhance the players' readiness for the next match. In the remaining portion of the session, the coaches increased the intensity by incorporating the typical MD-1 training structure to emphasize team movements and tactical preparation. Accordingly, recovery strategies, passing drills, rondos, and collective positioning were included as elements typical of an MD+1 session. Conversely, MD-1 contents encompassed reaction speed tasks, small-sided games, offensive situations, competitive finishing games, and set pieces.

External load

The training and competition demands were quantified using the WIMU PRO™ GPS device (RealtrackSystems S.L., Almería, Spain). Intra- and inter-unit reliability were deemed acceptable [30], with the intraclass correlation coefficient recorded at 0.65 for the x-coordinate and 0.85 for the y-coordinate for the systems analyzed. Data were extracted and analyzed using SPRO™ software (version 958; RealtrackSystems, Almería, Spain). The following metrics were entered into the statistical analysis: Total Distance (TD), Relative Total Distance (TD/min), High-Speed Running (HSR; the distance covered at a speed greater than 80% of each player's maximum recorded speed during competition), Relative High-Speed Running (HSR/min), Player Load (PL), and Relative Player Load (PL/min). To avoid biases between units, each player used the same unit throughout the analysis period. The unit was placed on the upper back of the players 30 minutes prior to each session (training and match) and was removed immediately after the end of the session. During training sessions, monitoring was conducted throughout the entire session; for matches, players were monitored from the start until the final whistle, including the warm-up on the field for both training sessions and matches. The mean value of each training session was expressed relative to the average external load recorded during the three matches for players who competed for more than 80 minutes: (mean training session external load × 100) ÷ mean competitive-match external load.
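As a minimal sketch of the two calculations described above (the individual HSR threshold and the training load expressed relative to match load), the following Python snippet may help. All numerical values are hypothetical, and the function names are illustrative rather than part of the SPRO™ software.

```python
from statistics import mean


def hsr_threshold(max_speed_kmh, fraction=0.80):
    """Individual HSR threshold: 80% of the player's maximum match speed."""
    return fraction * max_speed_kmh


def relative_to_match(training_values, match_records, min_minutes=80):
    """Express mean training load as a percentage of mean match load.

    match_records holds (minutes_played, load) pairs; only players who
    competed for more than `min_minutes` enter the match reference.
    """
    reference = [load for minutes, load in match_records if minutes > min_minutes]
    return mean(training_values) * 100 / mean(reference)


# Hypothetical total-distance values in metres (assumption, illustration only).
td_training = [4200.0, 4500.0, 4800.0]
td_matches = [(90, 8800.0), (85, 9200.0), (45, 4600.0), (90, 9000.0)]

# The 45-minute record is excluded from the reference.
pct_of_match = relative_to_match(td_training, td_matches)
```

The same function can be applied to any of the external load metrics (TD, HSR, PL), provided that training and match values share the same unit.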

Internal load

The internal workload of players was assessed using the differential session rating of perceived exertion (sRPE): sRPE_breath, sRPE_leg, and sRPE_cog [9,11]. These were calculated by multiplying each RPE value by the session duration (in minutes) for each training session [17] (including recovery periods between exercises) and match (including warm-up). The 10-point Borg RPE scale was administered 5-30 minutes after training sessions and matches [16]. The sRPE is a validated indicator of overall internal load in football [16]. All players were familiarized with the RPE_breath, RPE_leg, and RPE_cog scales. Players reported their RPE scores ten minutes after finishing each field session.
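The sRPE calculation described above (each differential RPE score multiplied by the session duration in minutes) can be illustrated with a short Python sketch. The scores and duration are hypothetical, and the function name `differential_srpe` is an assumption made for this example.

```python
def differential_srpe(rpe_scores, duration_min):
    """Multiply each differential RPE score by session duration (minutes).

    rpe_scores maps a dimension ('breath', 'leg', 'cog') to its RPE value.
    The result is expressed in arbitrary units (A.U.).
    """
    return {dim: score * duration_min for dim, score in rpe_scores.items()}


# Hypothetical player: 90-minute session (assumption, illustration only).
session_load = differential_srpe({"breath": 6, "leg": 7, "cog": 5}, 90)
```

Because duration enters as a multiplier, two players reporting the same RPE values will differ in sRPE whenever their exposure minutes differ, as is the case for starters and non-starters on match days.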

Recovery and wellness

Players' perceived ratings were collected each morning between 09:00 and 10:00, after breakfast, using a Google Forms questionnaire specifically designed for the research. Perceived recovery was assessed using a Likert scale ranging from 0 to 10 points, where 0 represents extremely poor recovery/extreme fatigue with expected performance decrements, 5 indicates adequate recovery with expected performance maintenance, and 10 signifies excellent recovery and high energy levels with anticipated performance improvements [28]. Perceived well-being was also evaluated using the same Google Forms questionnaire, with questions based on those developed by McLean et al. [12]. The questionnaire consisted of a Likert scale from 1 to 5 arbitrary units (A.U.), where players rated their fatigue, sleep quality, muscle soreness, stress, and mood (5 = very refreshed, very rested, very well, very relaxed, and very positive, respectively; 1 = always tired, insomnia, very sore, very stressed, and very upset/irritable/depressed, respectively). Additionally, the sum of scores across the different dimensions of the questionnaire was analyzed to yield an overall well-being score, with a maximum possible score of 25. All players were familiar with the questionnaire, as it is used from the lower age categories up to the U16 national team.
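The overall well-being score described above is a plain sum of the five 1-5 items. A minimal Python sketch follows; the item keys and the example responses are hypothetical and do not reproduce the actual Google Forms fields.

```python
# The five dimensions named in the text (key names are an assumption).
WELLNESS_ITEMS = ("fatigue", "sleep_quality", "muscle_soreness", "stress", "mood")


def wellness_total(responses):
    """Sum the five 1-5 wellness items into an overall score (5 to 25)."""
    for item in WELLNESS_ITEMS:
        if item not in responses:
            raise ValueError(f"missing item: {item}")
        if not 1 <= responses[item] <= 5:
            raise ValueError(f"{item} must be between 1 and 5")
    return sum(responses[item] for item in WELLNESS_ITEMS)


# Hypothetical morning questionnaire (assumption, illustration only).
morning = {"fatigue": 4, "sleep_quality": 3, "muscle_soreness": 4, "stress": 5, "mood": 4}
total = wellness_total(morning)
```

Since higher values indicate a better state on every item, a higher total indicates better overall perceived well-being.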

Statistics

Statistical analyses and graphs were produced using SPSS (version 22; SPSS Inc., Chicago, IL, USA) and GraphPad Prism v9.0 (Boston, MA, USA). The normality assumption was tested for each variable with the Shapiro-Wilk test. Data were analyzed through factorial linear mixed modeling with a 95% confidence interval (CI) to assess the impact of training/competition sessions along the tournament. This approach is suitable for repeated-measures data from unbalanced designs, which was the case in our study, as there were some missing data. In this study, the role of the players, based on whether they started the match as starters or non-starters, was used as a fixed effect. Pairwise comparisons were examined using Bonferroni’s post-hoc test. For weekly training load, an independent-samples t-test or Mann-Whitney U test was carried out to establish possible differences between starters and non-starters. Results are presented as mean ± standard deviation (SD), with significance set at p < 0.05.
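For readers unfamiliar with this type of model, one plausible way to write it is sketched below. This is an assumption for illustration only: the text states that player role was a fixed effect and that the effect of sessions was assessed, but it does not report the random-effects structure, so the player-level random intercept $u_i$ and the role-by-session interaction are hypothetical choices.

```latex
% Illustrative specification (assumed, not reported in the study):
% y_{ij}: outcome (e.g., sRPE or TD) of player i in session j
y_{ij} = \beta_0
       + \beta_1\,\mathrm{role}_{ij}
       + \beta_{2,j}\,\mathrm{session}_j
       + \beta_{3,j}\,(\mathrm{role}_{ij} \times \mathrm{session}_j)
       + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

In a specification of this kind, missing observations for a given player and session simply drop out of the likelihood, which is what makes the approach usable with unbalanced repeated-measures data.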

4. Discussion

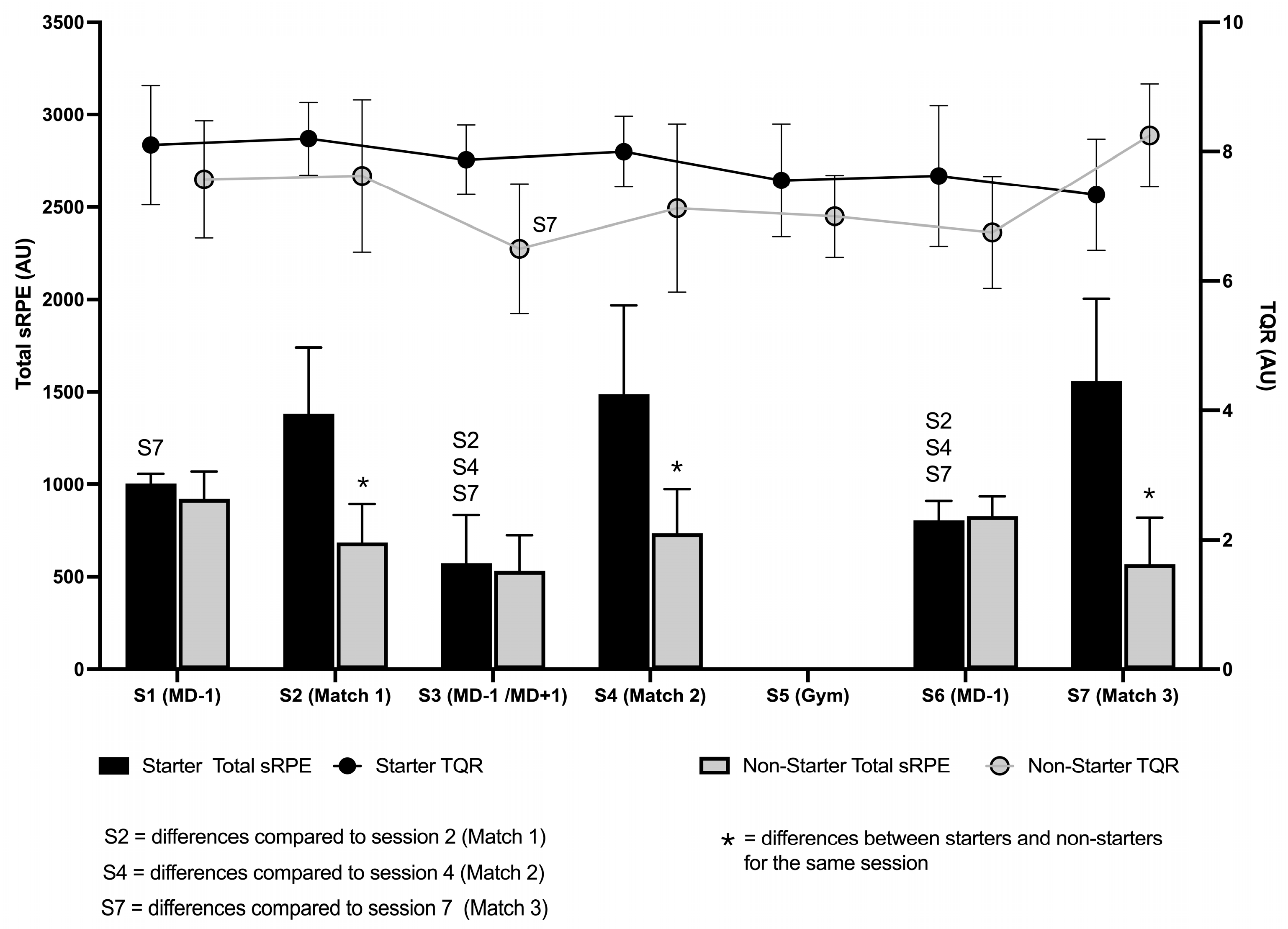

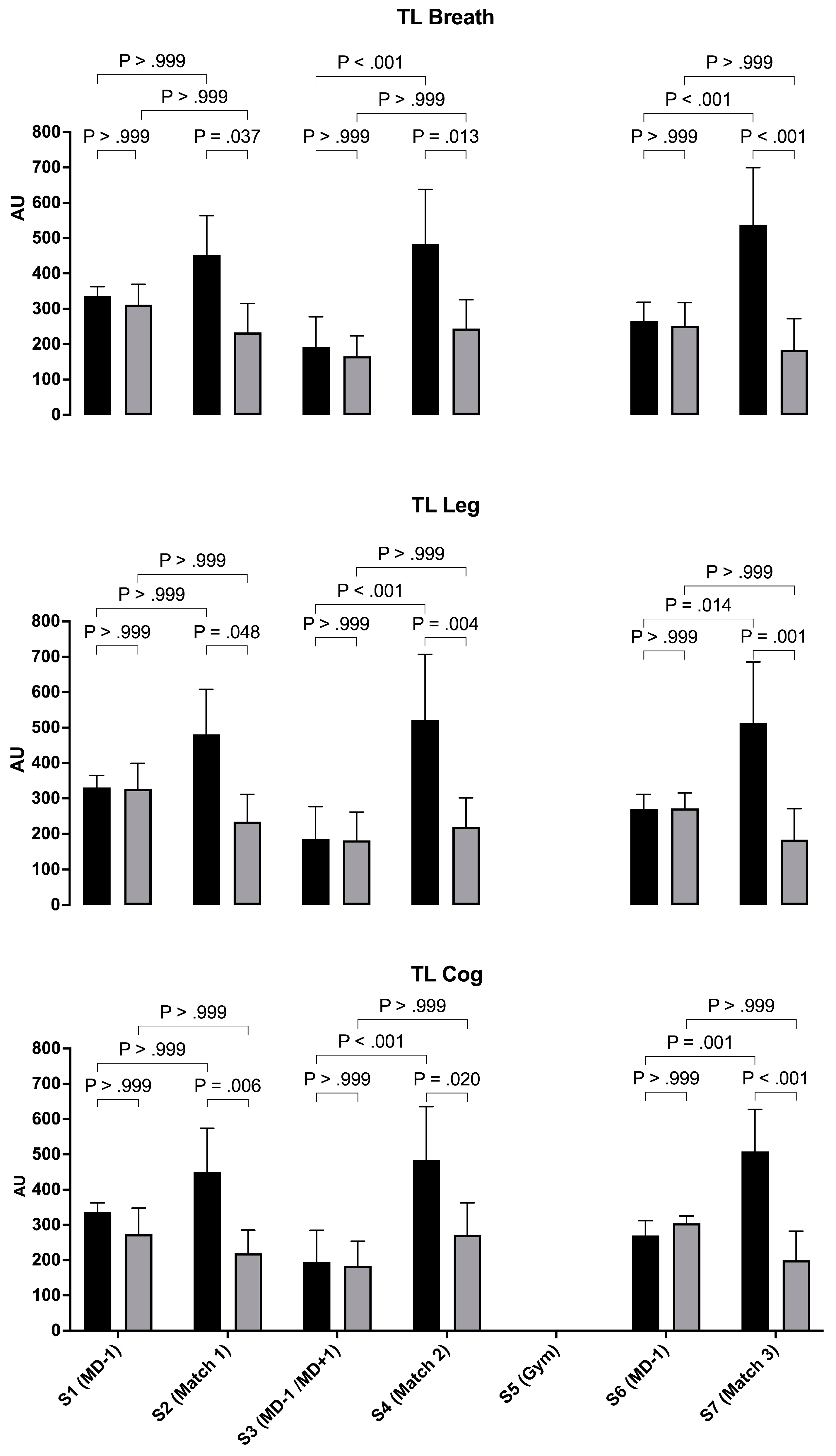

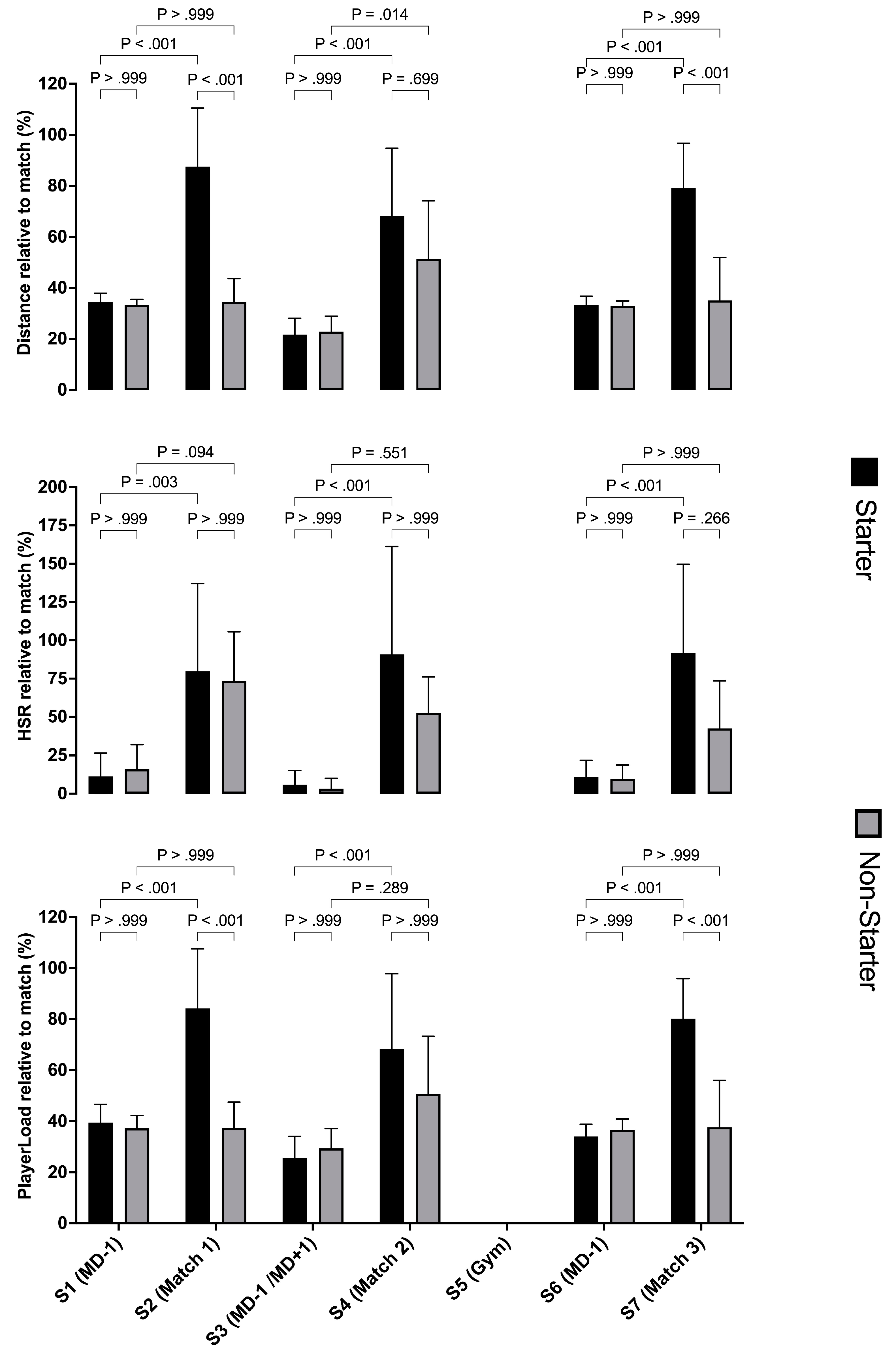

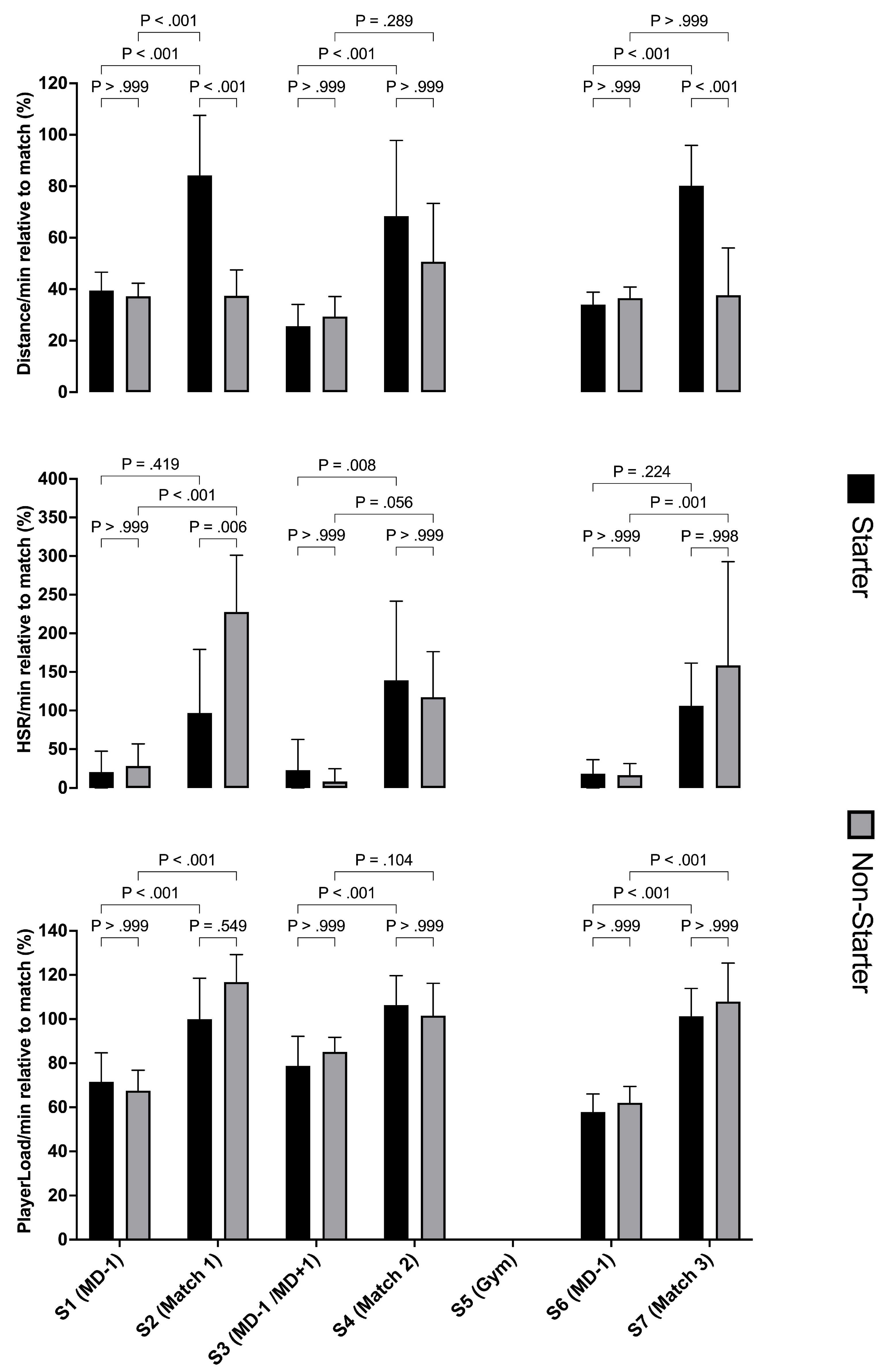

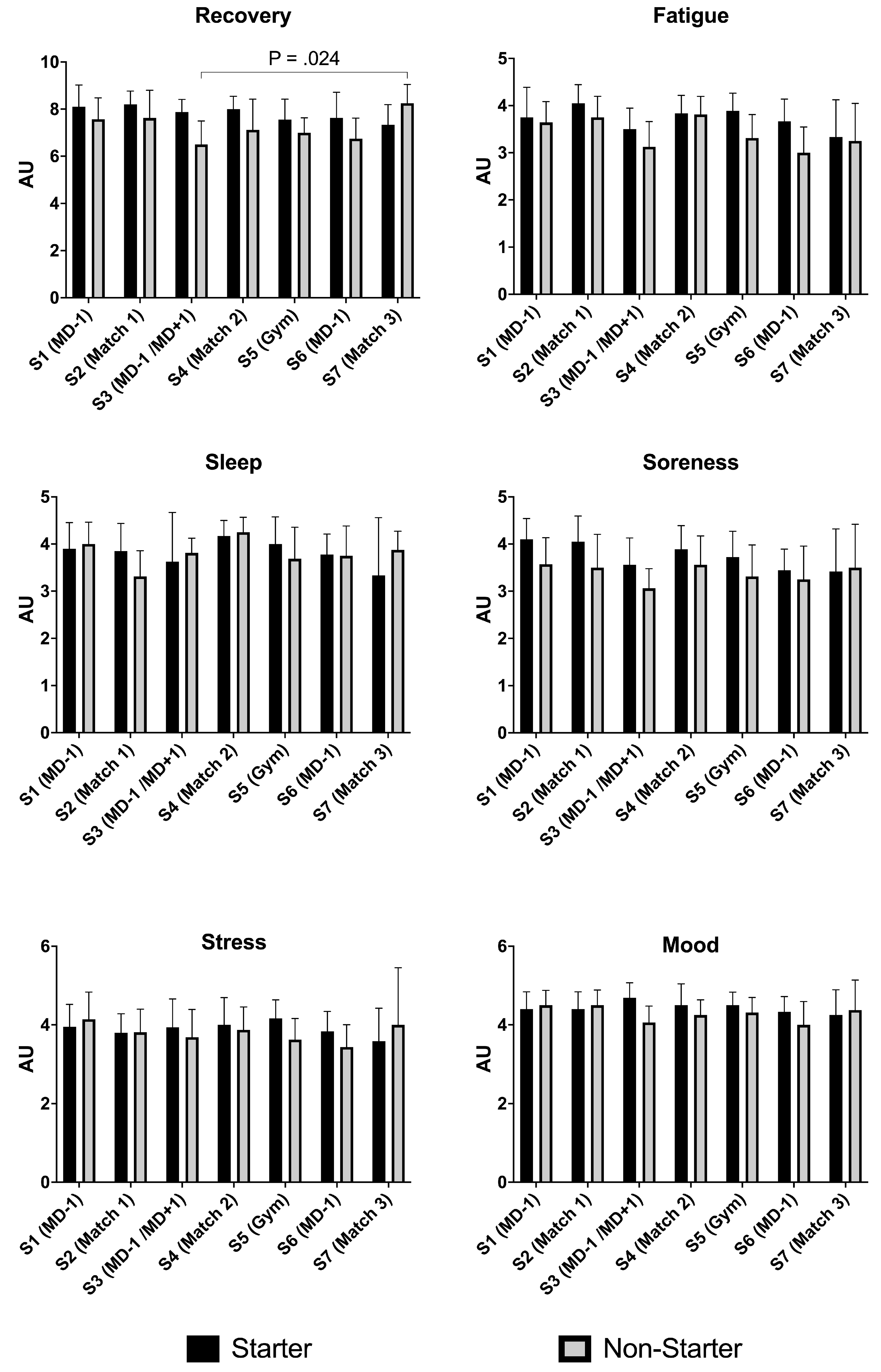

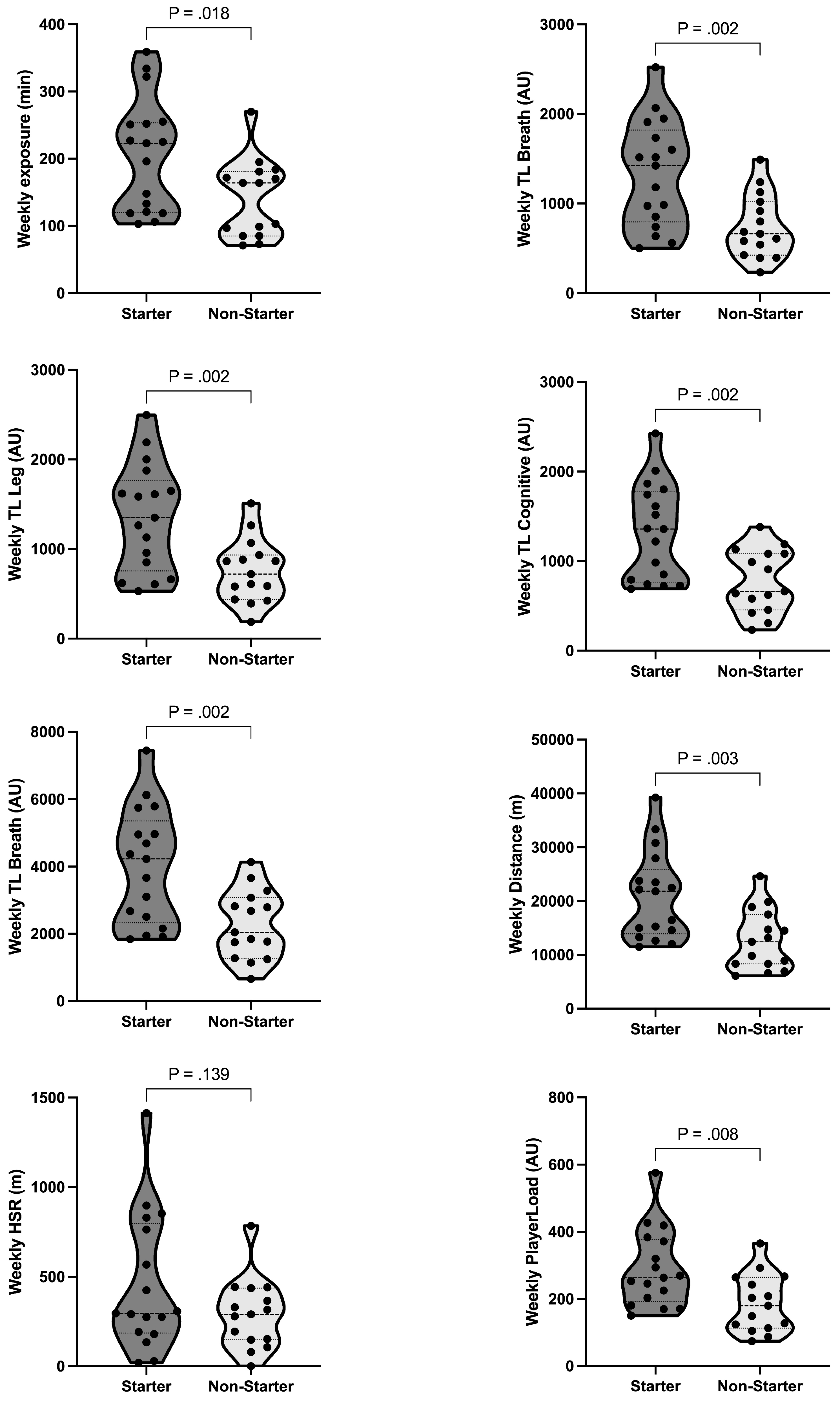

This research aimed to quantify and describe the differences in internal and external load, subjective well-being, and perceived recovery patterns among international U16 female soccer players during a friendly development tournament with three matches in seven days. Additionally, it sought to compare the total weekly load between starters and non-starters during a highly congested schedule. According to the observed results, starting players exhibited an undulating pattern in both internal and external load, with matches being the primary training stimulus. Non-starters, in contrast, displayed a linear load pattern that was consistent across all training sessions. For both roles, there was no significant decline in player well-being throughout the tournament, suggesting that microcycle periodization and rotations were effective in enabling players to cope with the tournament training loads. However, the substitution and periodization strategies were not effective in balancing the cumulative training loads between starters and non-starters during the tournament. Although the players' perceived wellness and recovery did not change (Figure X), both external and internal loads experienced by non-starters were significantly lower than those experienced by starters. This indicates that starters, who had greater exposure and higher external loads during matches, also reported higher RPE values due to the increased intensity of tournament matches compared to training. As a result, the total internal loads for starters were higher during the tournament, while non-starters experienced lower overall training loads, except for HSR (Figure X).

Although 1-week microcycles are mostly used to plan periodization programming in club teams [10,11], during national team training camps and tournaments, players compete every 48-72 hours [2]. During these developmental ages, this competitive stimulus is significantly greater than what they experience at their clubs, as players in national team settings face a drastic increase in the frequency of competitive matches compared to their club context [2]. In our study, we represented the internal and external loads of 3 microcycles consisting of 1 training day and 1 official match. In longer national team training camps, the MD-1 day is similar to the club training sessions before a match and includes physical activation, tactical refinements, and set-piece practice [23]. However, in this specific context, MD-1 provides the only opportunity to facilitate the recovery of players with higher exposure, while performance optimization is required for players with lower match exposure due to fewer playing minutes. Due to the opposing nature of these training objectives [23], it is necessary to identify which training strategies will optimize both recovery and performance based on match exposure.

According to our results, the internal load perceived by the players was similar on all training days between starters and non-starters, but there were differences between the two groups on match days (Figure 2). This is not surprising, since the starters had more playing minutes during the competition, which affects the sRPE calculations (Table 1). Similarly, the match-related external load markers analyzed (i.e., TD, HSR, and PL) during the tournament showed a similar profile, being higher for starters during the matches (Figure 3). Furthermore, these differences between conditions (starter vs. non-starter) on match days lead to different patterns in the longitudinal analysis of the tournament, which do not necessarily result in differences in perceived recovery or the players' perceived well-being, except on day 3 (Figure 1), suggesting that all players are able to cope with tournament demands using the current periodization and player rotation approach, regardless of having played as a starter or as a non-starter. These results are difficult to compare as, to our knowledge, no other research has compared internal load, external load, and wellness and recovery during a congested competition period in young female players based on playing role. However, considering the differences in the population (elite Brazilian males vs. adolescent females) and the higher absolute external load, the adjustments in external load made by the team based on the days/hours remaining until the competition are similar in both contexts. The last training session before a match (MD-1) is the day with the lowest values in TD, HSR, PL, and high metabolic running distance, regardless of how many hours have passed since the previous match [24].

Based on our findings and those from other studies [2,9,10,21,24], there appears to be a consensus on the periodization strategies on MD-1. These strategies involve a tapering period in the hours leading to the match, where the training load is deliberately minimized to enhance recovery and increase the readiness to compete [9]. Our results highlight the importance of monitoring training load during the periodization process to adequately prepare players for international games [15,31]. Providing the optimal loading stimulus to players during such a short period, as experienced in international tournaments (in our study, 3 games in 7 days), is crucial for achieving peak performance and minimizing the potential risk of injury and illness [20]. Moreover, monitoring load becomes even more essential compared to club contexts for two reasons: first, to minimize spikes in load during the transition from club to national team, and second, because the time available for recovery between competitions is shorter. Any discomfort or injury can significantly limit player availability, which has been shown to be a determining factor for sporting success in football [32].

Another fundamental aspect that physical trainers and coaches need to manage is balancing the training dose between starters and non-starters so that players who play fewer minutes in competition do not have a lower training dose [22], different monotony and strain [11], or a lower accumulated load [8]. All these factors together, if maintained over time, could decrease physical performance and increase the predisposition to injury in subsequent microcycles [8]. According to the results of this study, the load imposed during matches did not result in a difference in recovery values or perceived well-being between starters and non-starters at any point during the tournament, despite different training loads (TL) (Figure 1, Figure 2, and Figure 3). These results contradict those previously observed in professional soccer players [2,13], where reductions in sleep quality, fatigue, muscle soreness, stress, and mood were observed on MD+1 using a well-being questionnaire similar to the one in the present study [12]. Additionally, a relationship between different markers of competitive demands (RPE, duration, sRPE, HSR, sprint distance, accelerations, and decelerations) and reductions in sleep quality, fatigue, and muscle soreness was observed the day after the competition [13]. These contradictory results may be due to several factors, such as the different distribution of total minutes played during the competition or environmental conditions that could have modified the RPE values [33]. Furthermore, the analyzed team won all three matches they played, which could also have moderately to largely altered the perception of effort, as well as sleep quality, stress, and fatigue [14].

Based on the current results, it seems that the periodization adopted during the tournament for non-starters may have been overly conservative, as non-starters had a lower weekly load (both internal and external) than starters (Figure 1, Figure 2, and Figure 3), suggesting they received a lower training dose. Compensation sessions are frequently conducted on MD+1 or MD+2 to balance the loads between starters and non-starters, dividing the group to balance the weekly training load [11,22]. However, in international contexts with a congested competition calendar, there is the complication of having to prepare for the next match with only one training session between matches, making it impossible to establish subgroups of players with different training contents. A feasible alternative in this context could be conducting compensation sessions immediately after the match with those players who played fewer minutes, so that all players reach the next training session with similar fatigue and recovery conditions [34]. Future research should identify whether this approach allows balancing the weekly training load without affecting recovery, perceived well-being, and readiness of players in congested schedules with only one training session between competitions. Moreover, this compensation in training loads is even more relevant for adolescent players, as there is a higher risk of injury at these ages due to the linear relationship between growth rate and estimated injury likelihood [27]. During maturation, players could, therefore, experience more severe injuries because of the combined effects of a vulnerable musculoskeletal system and an increase in training load demands [35]. Therefore, practitioners should use injury prevention and load monitoring strategies that are appropriate for each player’s stage of development. Clubs and national team staff should aim to develop interventions targeted at reducing the injury incidence and burden observed during these high-risk phases of adolescence [27]. Furthermore, distributing playing minutes more evenly is very relevant at early ages to expose players to competitive contexts, allowing the technical staff to gather information on the technical behavior and collective tactics of all selected players during official competitions and not only during training sessions.

The present study has some limitations that need to be addressed, namely the small sample size, drawn from only one youth national team, and the short analysis period of only three microcycles in one week. Consequently, future studies should analyze larger samples and include more competition days to confirm the results of the present research. Additionally, future research could use correlation and regression analyses to identify the relationship between competitive demands and their degree of influence on players' recovery and well-being in subsequent training sessions and/or matches. Another limitation of the present study is that players were not divided by playing position, which could lead to different interpretations depending on their position on the field. This issue was not addressed due to the limited sample size of this investigation.