Submitted: 06 August 2024

Posted: 08 August 2024

Abstract

Keywords:

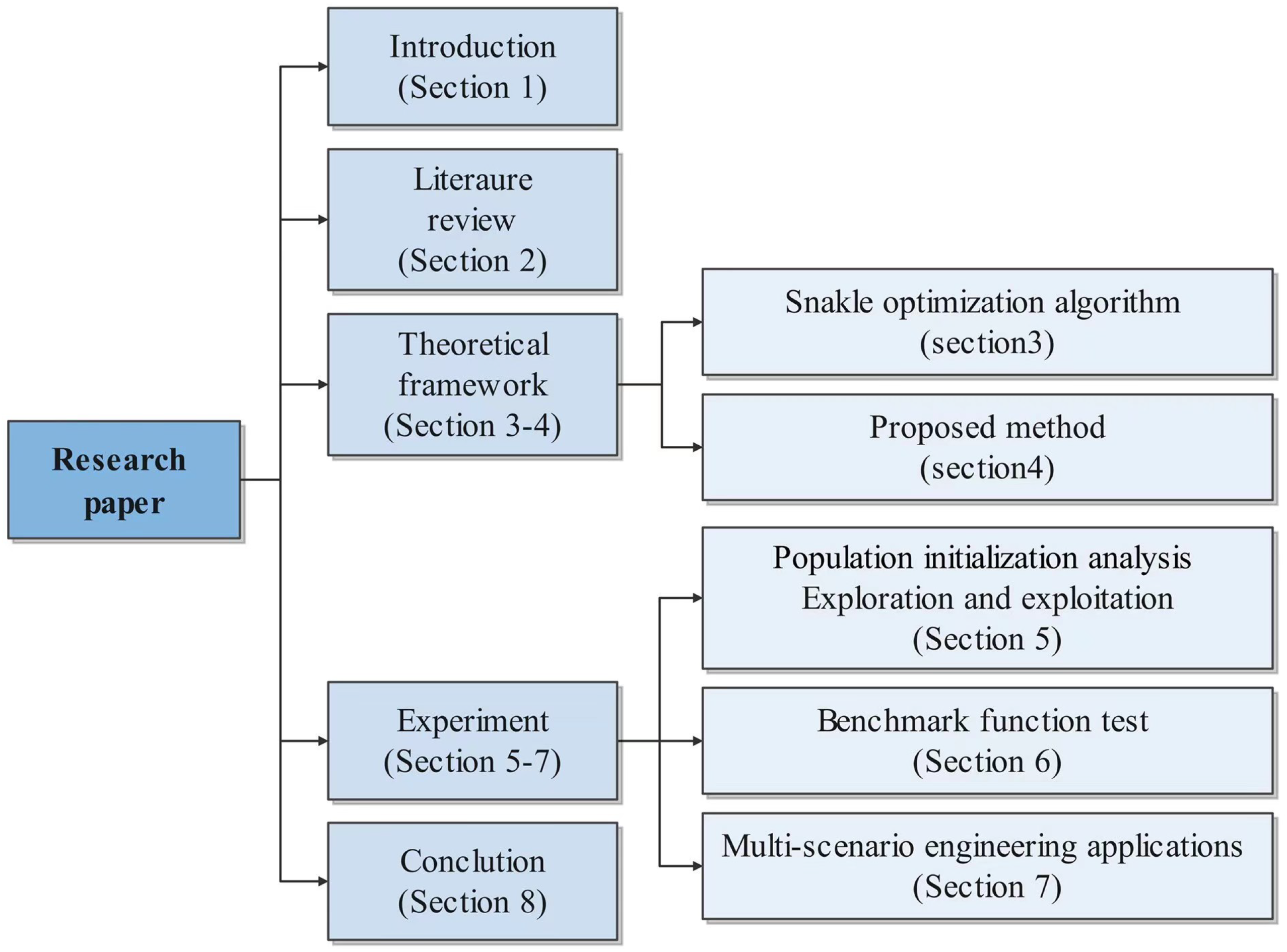

1. Introduction

2. Literature Review

3. Snake Optimization Algorithm

3.1. Initialization

3.2. Dividing the Swarm into Two Equal Groups: Males and Females

3.3. Evaluate Each Group and Define Temperature and Food Quantity

- Temperature (Temp) can be expressed as:

- Food quantity (Q) is defined as:
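For reference, the two quantities are commonly written as follows in the original Snake Optimizer formulation. This is a sketch from that formulation, not a reproduction of this paper's own equations; the symbols $t$ (current iteration), $T$ (maximum number of iterations) and $c_1$ (a constant, usually 0.5) are assumptions taken from it.

$$
\mathrm{Temp} = \exp\left(-\frac{t}{T}\right), \qquad Q = c_1 \exp\left(\frac{t - T}{T}\right)
$$

Under these definitions Temp decreases from 1 toward $e^{-1}$ over a run, while Q increases from about $c_1 e^{-1}$ toward $c_1$.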

3.4. Exploration Phase (No Food)

3.5. Exploitation Phase (Food Exists)

- If the temperature is above the threshold (0.6) (hot):

- If the temperature is below the threshold (0.6) (cold):

- Fight Mode:

- Mating Mode:
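The phase structure of Sections 3.4 and 3.5 can be sketched as a small decision function. This is a minimal illustration, not the authors' code. The temperature threshold of 0.6 comes from the text above; the food threshold of 0.25, the constant `c1 = 0.5`, the fight/mating split at 0.6 and the function name `snake_phase` are assumptions based on the original Snake Optimizer description.

```python
import math


def snake_phase(t, T, r, c1=0.5, q_threshold=0.25,
                temp_threshold=0.6, mode_threshold=0.6):
    """Return the phase an individual is in at iteration t of T.

    r is a uniform random number in [0, 1) that selects fight or mating mode.
    All default thresholds except temp_threshold are assumed values.
    """
    temp = math.exp(-t / T)            # temperature decays over the run
    q = c1 * math.exp((t - T) / T)     # food quantity grows over the run
    if q < q_threshold:
        return "exploration"           # no food: search globally
    if temp > temp_threshold:
        return "exploitation-hot"      # hot: move toward the food only
    # cold: choose between fight mode and mating mode
    return "fight" if r > mode_threshold else "mating"


if __name__ == "__main__":
    for t in (1, 40, 80):
        print(t, snake_phase(t, 100, r=0.9))
```

With these assumed constants and T = 100, the exploration phase covers roughly the first 30 iterations, the hot exploitation phase runs until about iteration 51, and the cold modes apply afterwards.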

3.6. Termination Conditions

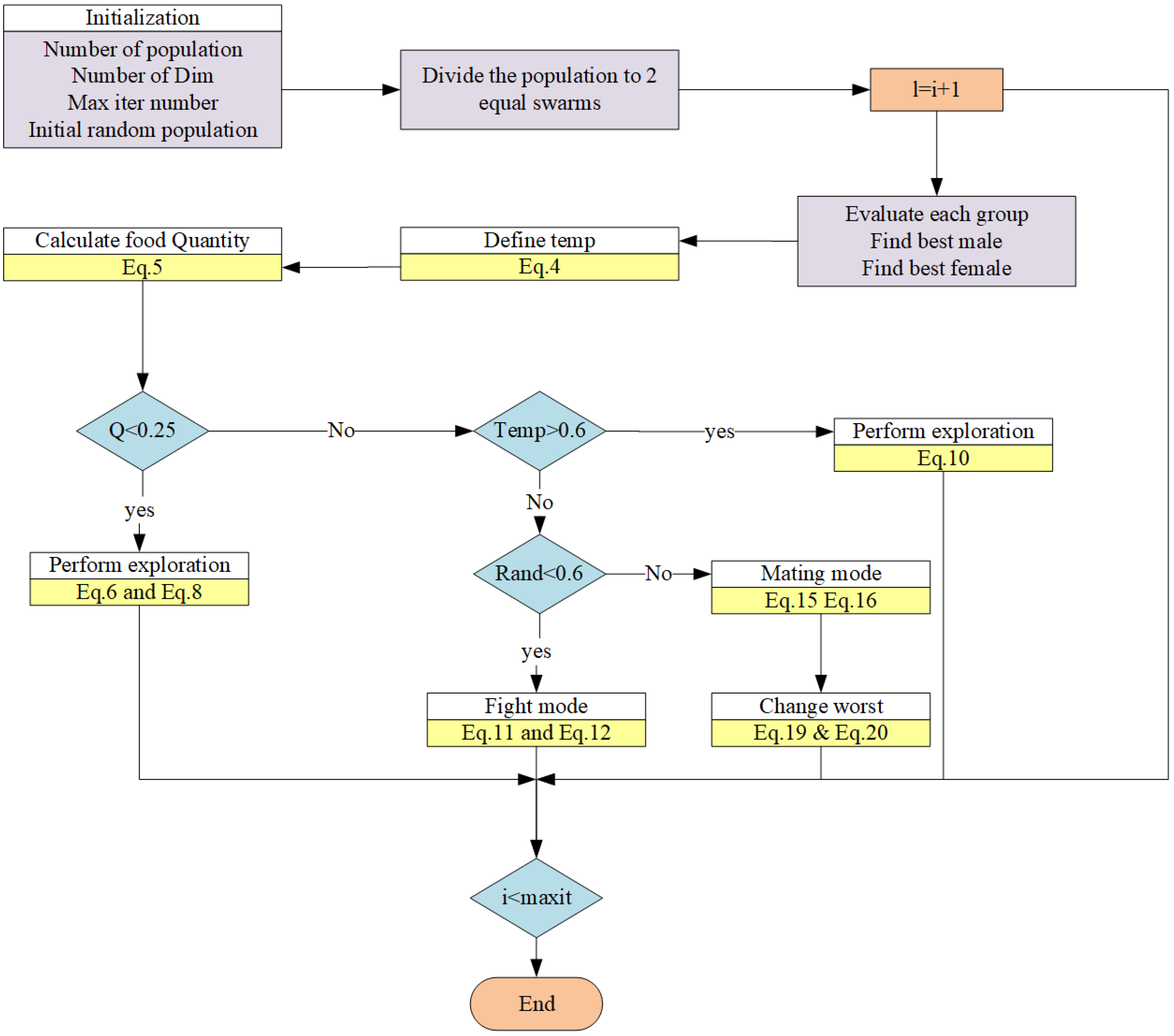

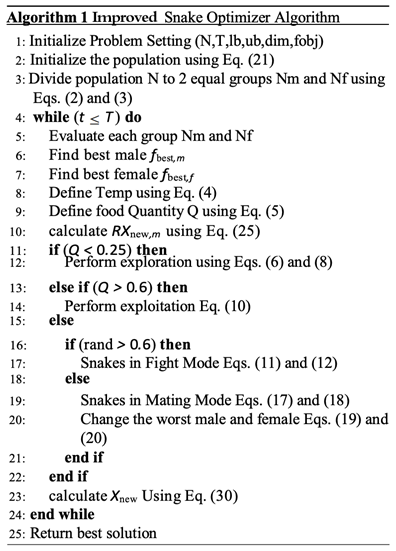

3.7. Algorithm Flowchart

4. Proposed Method

4.1. Initialization Using Sobol Sequences

- Generate a Sobol sequence of points in d dimensions, where d is the dimensionality of the problem.

- Map the generated points to the search space defined by the lower and upper bounds.

- Assign the mapped points to the initial positions of the individuals in the swarm.
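The three steps above can be sketched in plain Python. To stay dependency-free, this illustration builds only the first two Sobol dimensions by hand (unscrambled, Gray-code construction); a real d-dimensional run would more likely use a library generator such as `scipy.stats.qmc.Sobol`. The function names `sobol_2d` and `init_population` are hypothetical and not taken from the paper.

```python
def sobol_2d(n):
    """Return the first n points of the unscrambled 2-D Sobol sequence."""
    bits = 32
    # Dimension 1: direction numbers of the base-2 van der Corput sequence.
    v1 = [1 << (bits - i) for i in range(1, bits + 1)]
    # Dimension 2: primitive polynomial x + 1 with initial number m_1 = 1.
    v2 = [1 << (bits - 1)]
    for _ in range(1, bits):
        v2.append(v2[-1] ^ (v2[-1] >> 1))
    points, x1, x2 = [], 0, 0
    for i in range(n):
        points.append((x1 / 2**bits, x2 / 2**bits))
        # Gray-code update: use the index of the lowest zero bit of i.
        c = 0
        while (i >> c) & 1:
            c += 1
        x1 ^= v1[c]
        x2 ^= v2[c]
    return points


def init_population(n, lower, upper):
    """Map n Sobol points from [0, 1)^2 onto the box [lower, upper]."""
    return [[lo + u * (hi - lo) for u, lo, hi in zip(p, lower, upper)]
            for p in sobol_2d(n)]


if __name__ == "__main__":
    for individual in init_population(8, [-10.0, -10.0], [10.0, 10.0]):
        print(individual)
```

The first point of an unscrambled Sobol sequence is the origin of the unit cube, which maps to the lower corner of the search space; implementations often skip or scramble it for that reason.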

4.2. Incorporating the RIME Optimization Algorithm

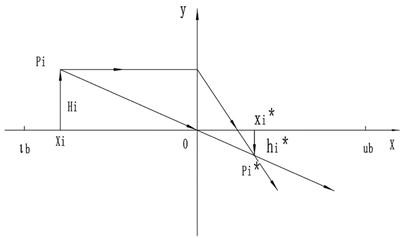

4.3. Lens Imaging Reverse Learning
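As a rough illustration of the idea named in this heading, the sketch below uses the reverse-point formula commonly found in the lens-imaging opposition-based learning literature. The variant the authors actually use is not shown here, so the formula, the scaling factor `k`, the clamping step and the name `lens_opposite` are all assumptions.

```python
def lens_opposite(x, lower, upper, k=1.0):
    """Return the lens-imaging reverse point of x within [lower, upper].

    For each coordinate: x* = (a + b) / 2 + (a + b) / (2 k) - x / k,
    where a and b are the bounds and k is the lens scaling factor.
    With k = 1 this reduces to ordinary opposition, x* = a + b - x.
    """
    reverse = []
    for xi, a, b in zip(x, lower, upper):
        value = (a + b) / 2 + (a + b) / (2 * k) - xi / k
        # Clamp to the bounds as a safeguard (an added assumption).
        reverse.append(min(max(value, a), b))
    return reverse


if __name__ == "__main__":
    print(lens_opposite([2.0, -3.0], [0.0, -5.0], [10.0, 5.0], k=2.0))
```

In a typical use, the candidate and its reverse point are both evaluated and the better one is kept, which gives a stagnating individual a chance to jump to a different region.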

5. Population Initialization and Exploration-Exploitation Analysis

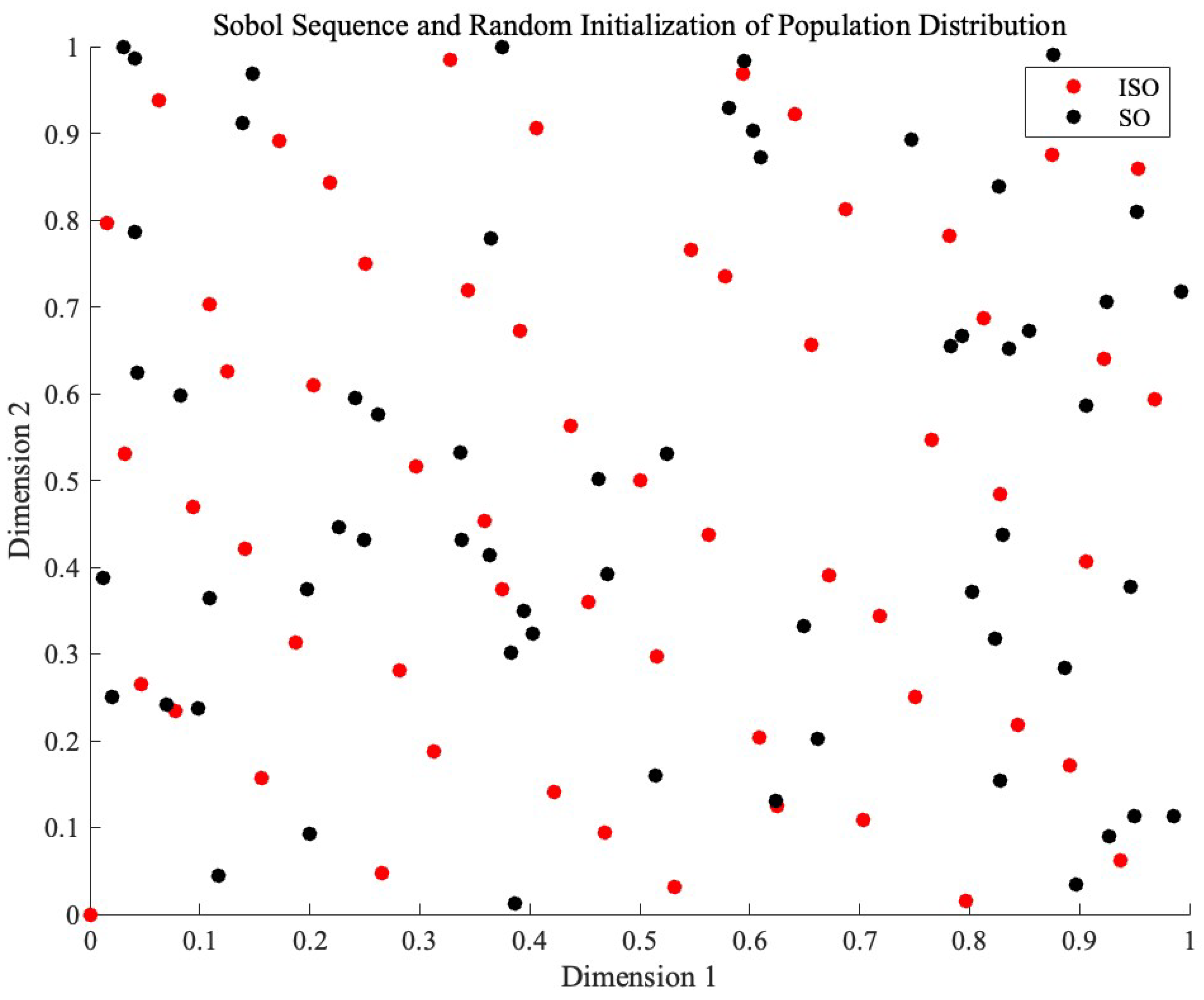

5.1. Population Initialization Analysis

| Parameter | SO (Random Initialization) | ISO (Sobol Sequence) |
|---|---|---|
| Star Discrepancy | 0.133966 | 0.047965 |
| Average Nearest Neighbor Distance | 0.069264 | 0.086739 |
| Sum of Squared Deviations (SSD) | 11.255603 | 9.990234 |

Star Discrepancy

Average Nearest Neighbor Distance

Sum of Squared Deviations (SSD)
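Of the three metrics, the average nearest neighbor distance is the simplest to compute directly. The following is a minimal sketch assuming Euclidean distance and a brute-force search over all pairs; the paper's exact normalization is not shown here, and the function name is hypothetical.

```python
import math


def average_nearest_neighbor_distance(points):
    """Mean Euclidean distance from each point to its closest other point."""
    total = 0.0
    for i, p in enumerate(points):
        # Nearest neighbor of p among all other points (O(n^2) overall).
        total += min(math.dist(p, q)
                     for j, q in enumerate(points) if j != i)
    return total / len(points)


if __name__ == "__main__":
    sample = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
    print(average_nearest_neighbor_distance(sample))
```

A larger value for the same number of points means the points sit farther from their neighbors, so they are spread more evenly and cluster less.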

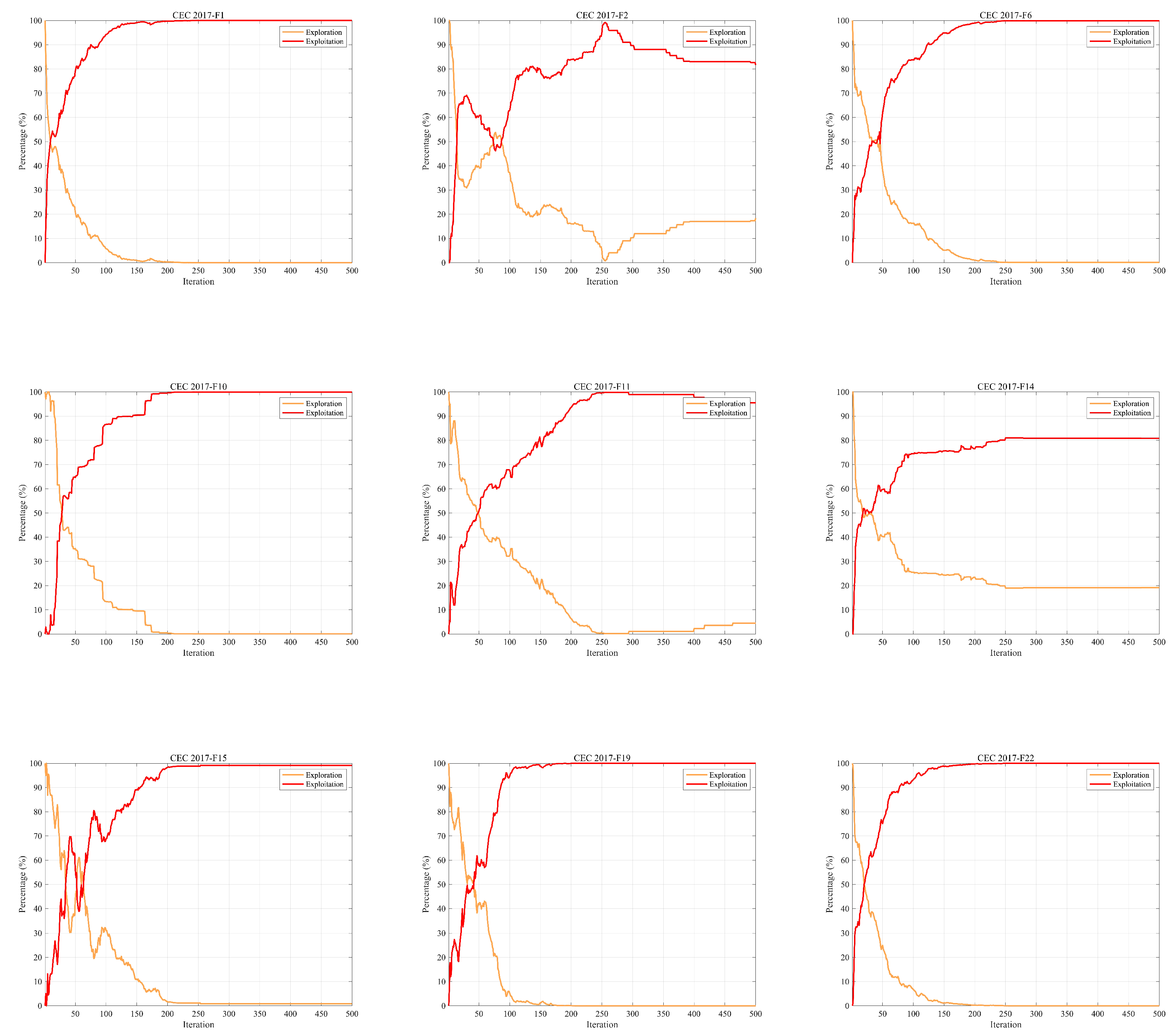

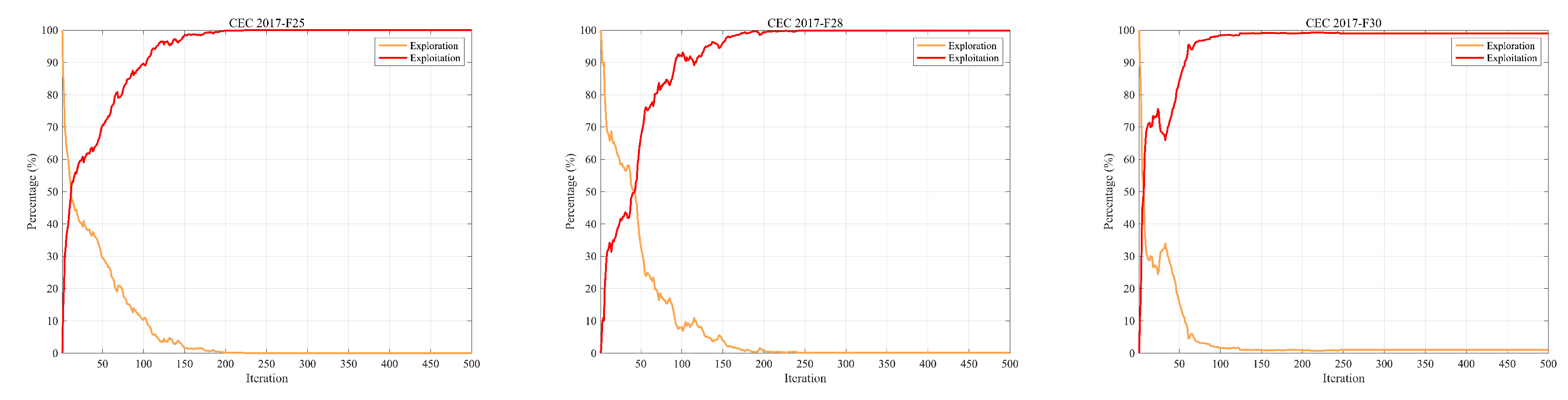

5.2. Exploration-Exploitation Analysis

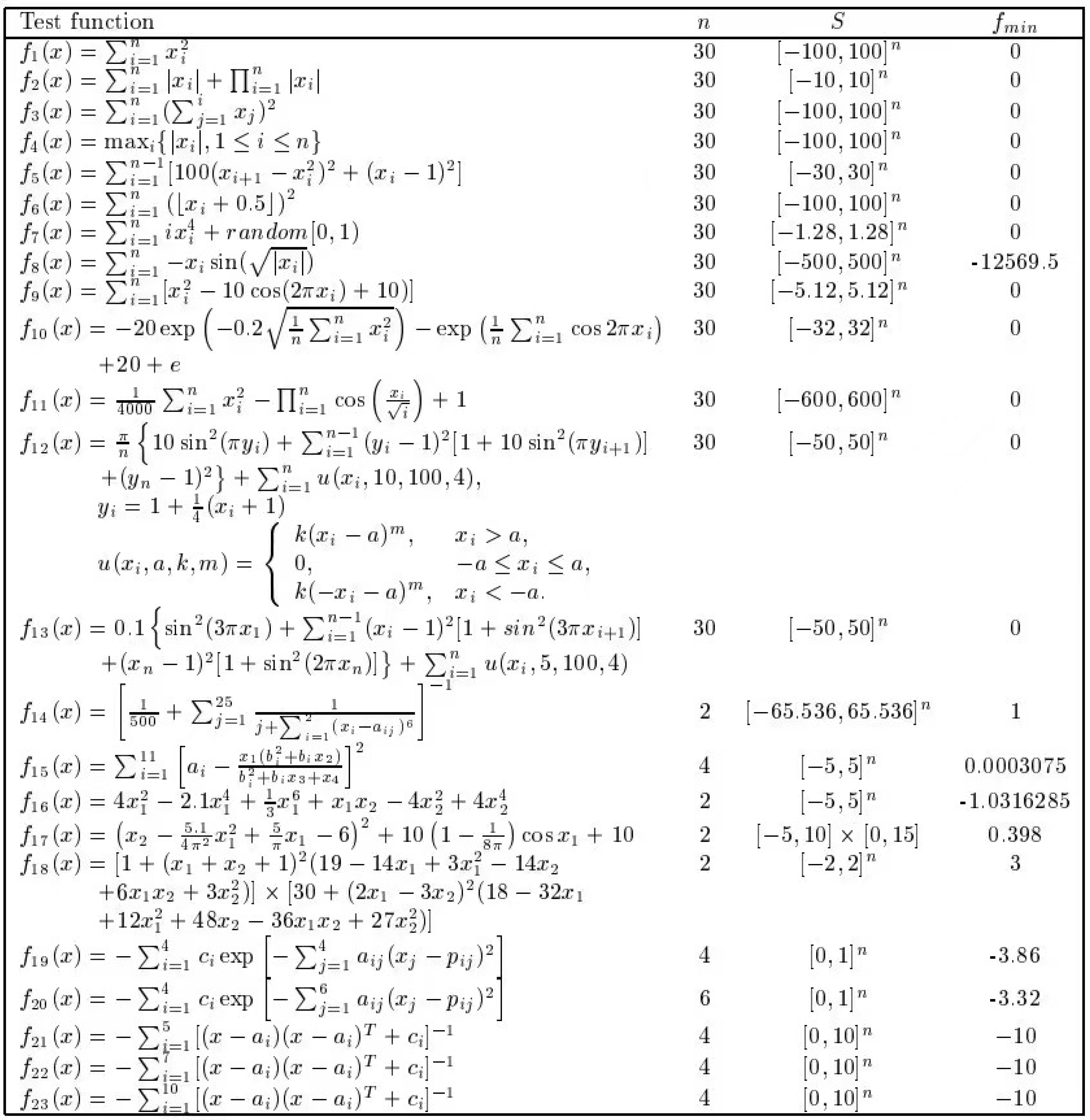

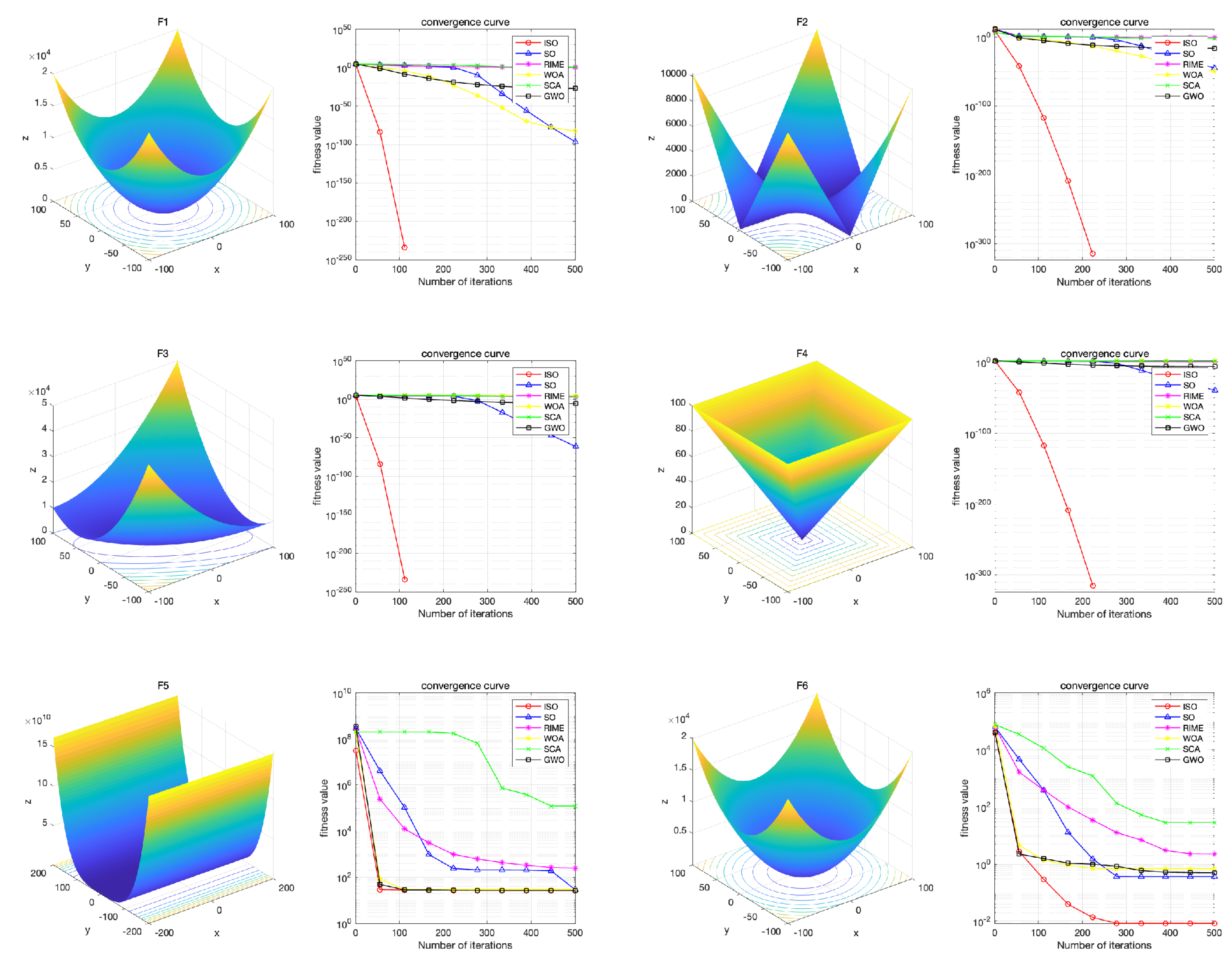

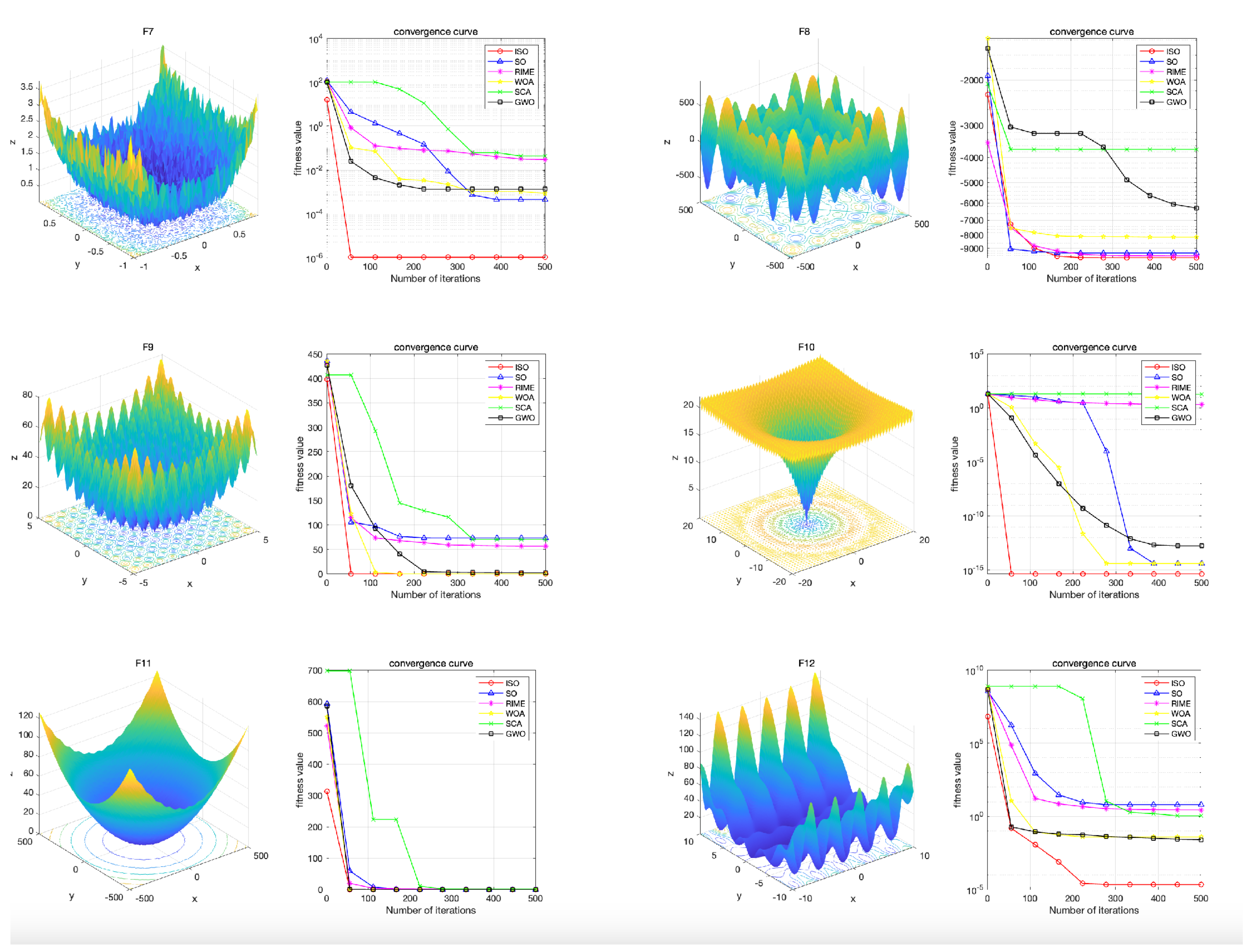

6. Benchmark Function Test

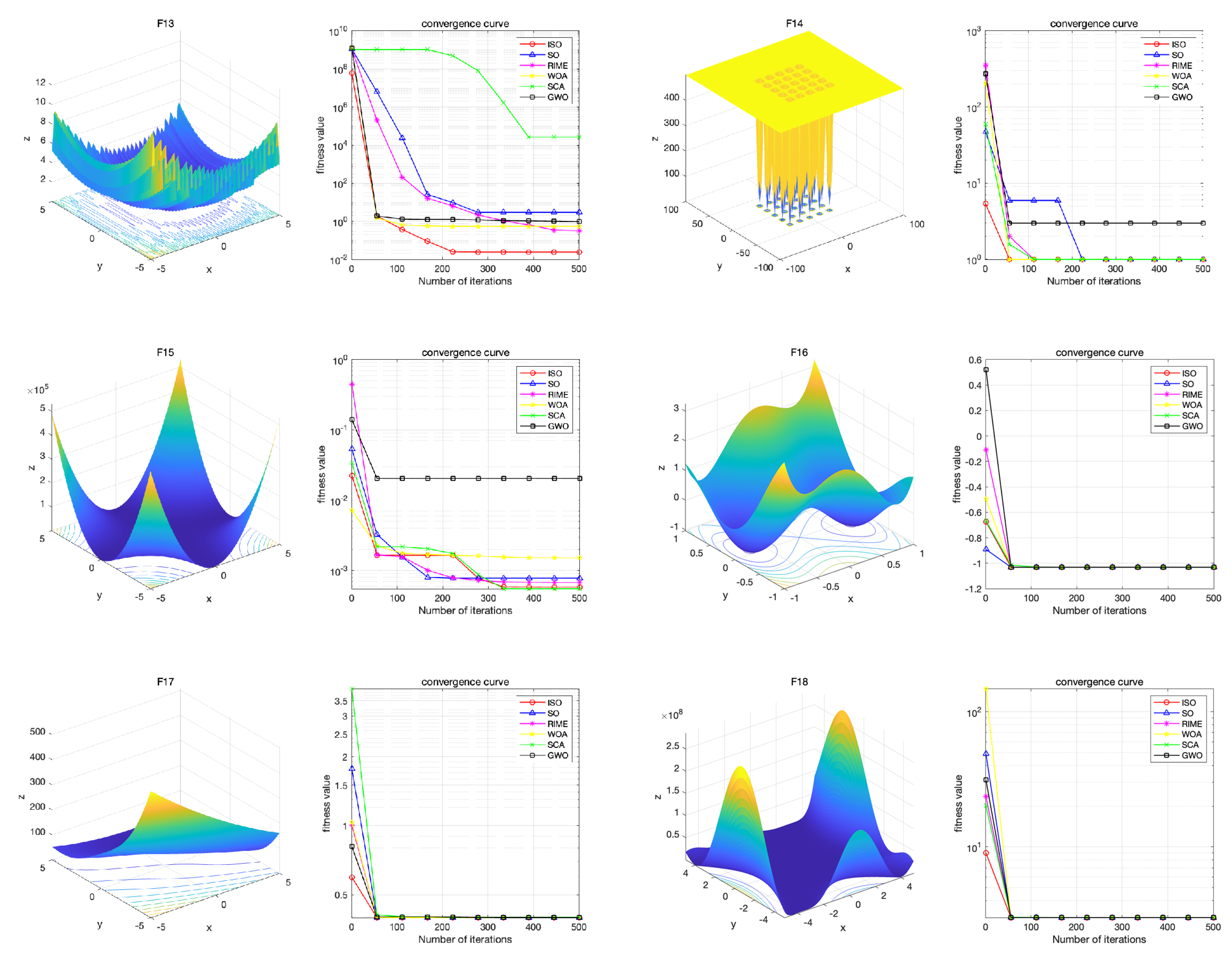

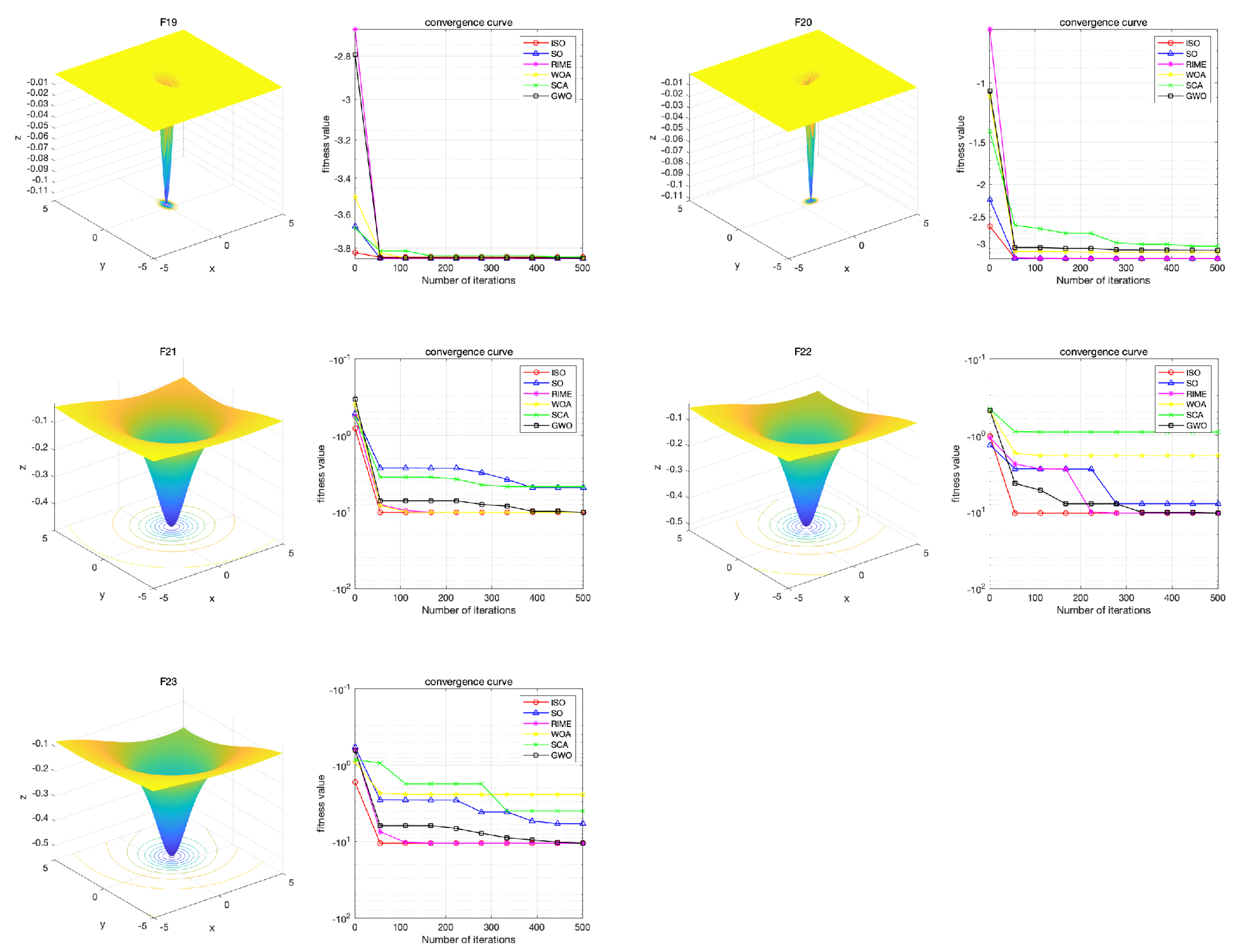

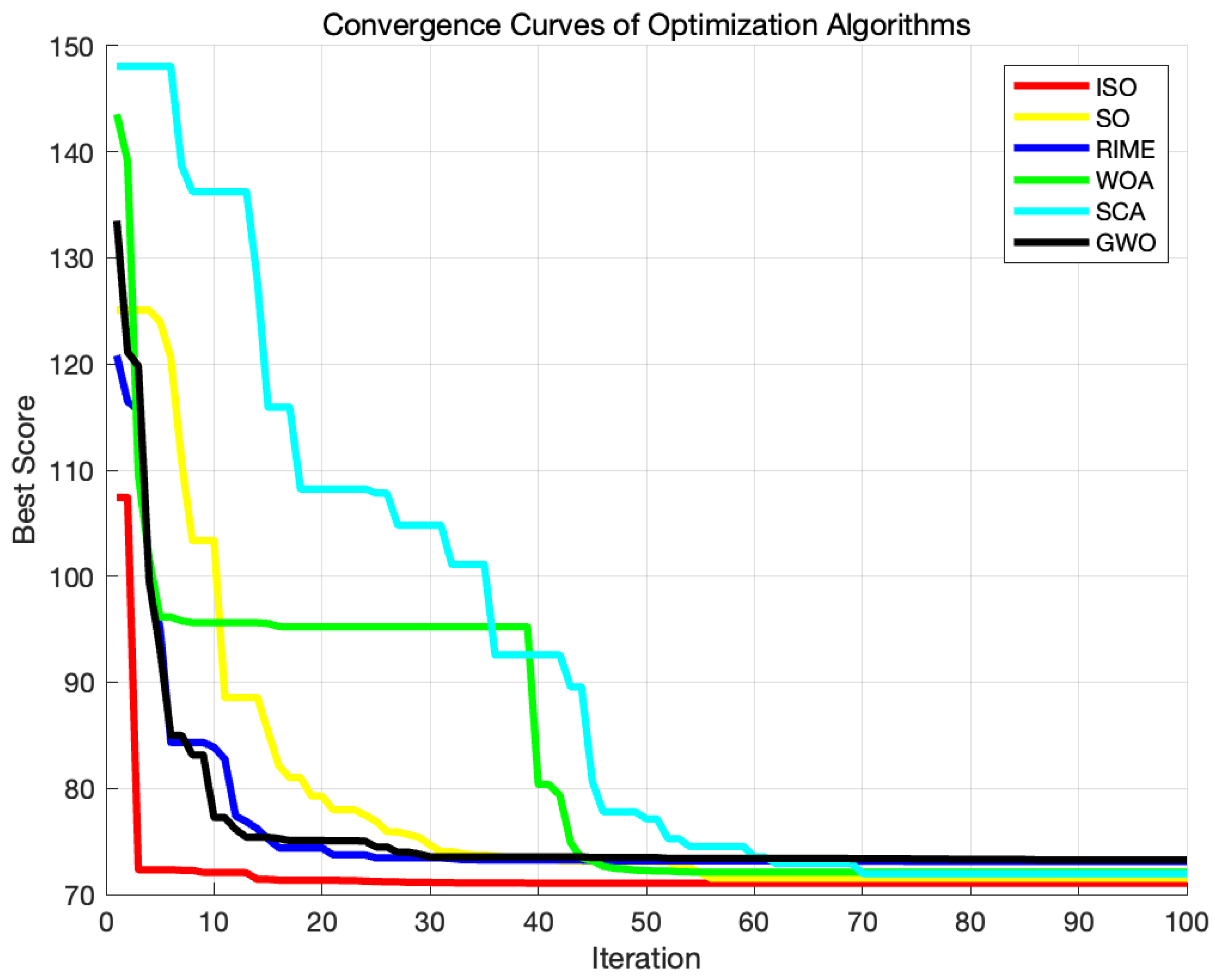

6.1. Convergence Behavior Analysis

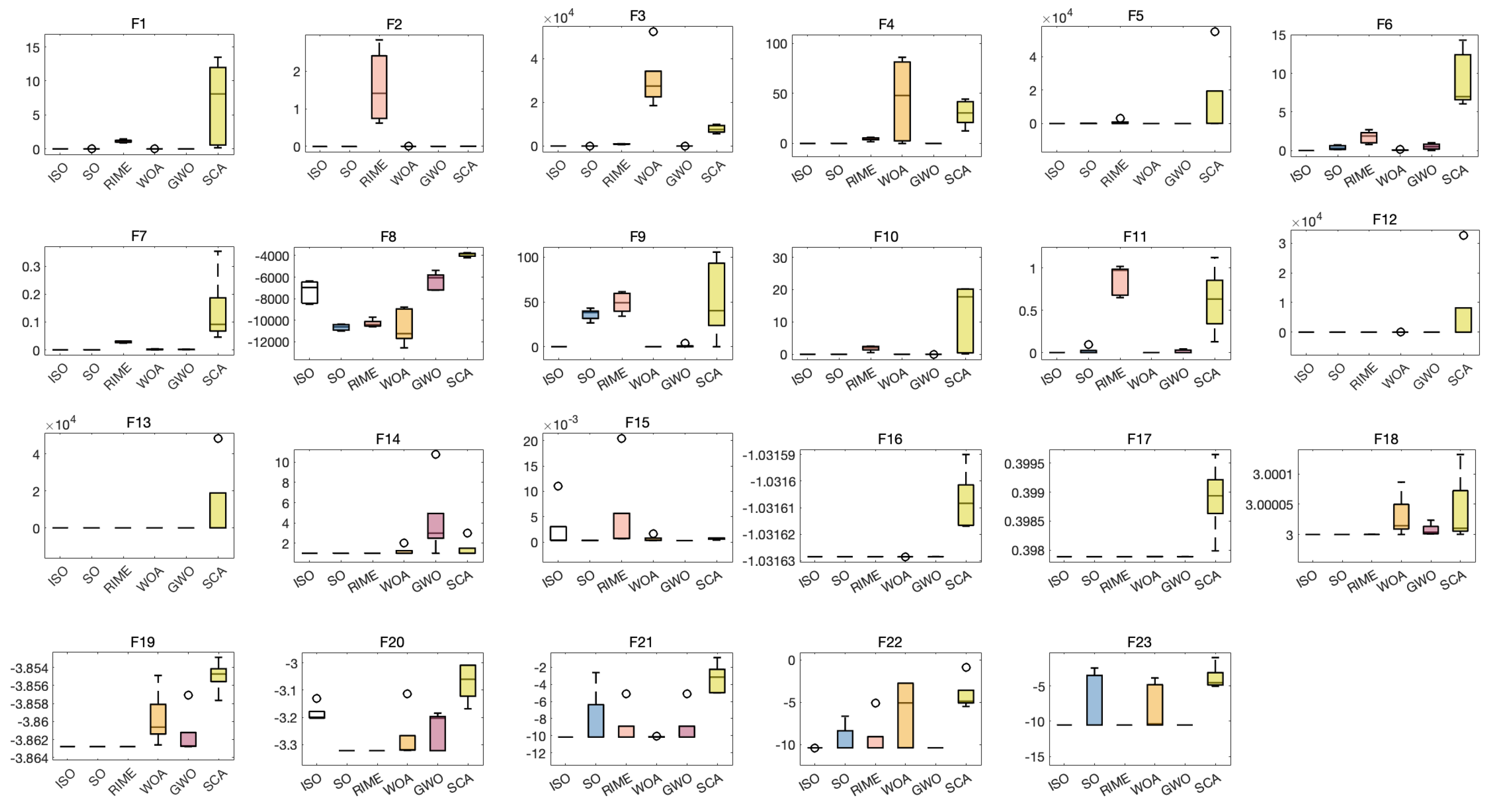

6.2. Detailed Analysis of the Benchmark Function

| Function and statistic | ISO | SO | RIME | WOA | GWO | SCA |
|---|---|---|---|---|---|---|
| F1 Minimum Value | 0 | 1.6156e-101 | 0.79632 | 6.2667e-90 | 2.9418e-32 | 0.018227 |
| F1 Standard Deviation | 0 | 3.0829e-96 | 0.82593 | 3.6085e-80 | 2.1056e-30 | 5.8823 |
| F1 Average Value | 0 | 2.1112e-96 | 1.6654 | 1.1703e-80 | 1.3072e-30 | 3.4484 |
| F1 Median Value | 0 | 5.977e-97 | 1.5769 | 6.7968e-86 | 3.3005e-31 | 0.38834 |
| F1 Maximum Value | 0 | 8.8973e-96 | 3.7043 | 1.1438e-79 | 5.9552e-30 | 15.6586 |
| F2 Minimum Value | 0 | 1.5847e-46 | 0.53371 | 2.392e-57 | 2.0754e-19 | 4.9074e-05 |
| F2 Standard Deviation | 0 | 5.2612e-44 | 0.4858 | 1.0992e-52 | 6.9339e-19 | 0.0088126 |
| F2 Average Value | 0 | 3.0919e-44 | 0.90418 | 4.0029e-53 | 1.1986e-18 | 0.0077208 |
| F2 Median Value | 0 | 6.2431e-45 | 0.75306 | 2.2097e-55 | 9.5475e-19 | 0.0039093 |
| F2 Maximum Value | 0 | 1.7346e-43 | 2.0696 | 3.5024e-52 | 2.2958e-18 | 0.027479 |
| F3 Minimum Value | 0 | 1.1664e-67 | 384.3923 | 19995.9104 | 1.1741e-09 | 1525.2676 |
| F3 Standard Deviation | 0 | 1.1251e-59 | 304.3303 | 8915.4857 | 6.7043e-07 | 4607.7335 |
| F3 Average Value | 0 | 5.4436e-60 | 854.9073 | 35898.743 | 4.7077e-07 | 10138.0775 |
| F3 Median Value | 0 | 4.0227e-63 | 827.1762 | 36953.5381 | 8.146e-08 | 11024.7254 |
| F3 Maximum Value | 0 | 3.1096e-59 | 1402.0287 | 48574.3146 | 1.5437e-06 | 16323.6006 |
| F4 Minimum Value | 0 | 5.1604e-43 | 3.4574 | 3.6318 | 9.928e-09 | 9.027 |
| F4 Standard Deviation | 0 | 2.964e-41 | 1.6497 | 30.5907 | 1.2116e-07 | 6.7815 |
| F4 Average Value | 0 | 2.3189e-41 | 6.0868 | 41.9403 | 1.5419e-07 | 21.0788 |
| F4 Median Value | 0 | 7.0935e-42 | 5.6659 | 37.7864 | 1.2797e-07 | 22.0562 |
| F4 Maximum Value | 0 | 8.5465e-41 | 9.573 | 85.1509 | 3.9006e-07 | 29.6541 |
| F5 Minimum Value | 24.7598 | 28.8231 | 52.2265 | 27.2556 | 26.179 | 82.5777 |
| F5 Standard Deviation | 0.59228 | 54.4177 | 458.7257 | 0.21881 | 0.67703 | 18885.0142 |
| F5 Average Value | 25.3956 | 54.7162 | 445.2294 | 27.67 | 27.1109 | 9078.5037 |
| F5 Median Value | 25.1402 | 28.9162 | 353.4091 | 27.7765 | 27.1375 | 2236.0169 |
| F5 Maximum Value | 26.4062 | 159.0777 | 1680.5587 | 27.866 | 27.9309 | 61772.1619 |
| F6 Minimum Value | 0.00022812 | 0.12251 | 0.43999 | 0.028669 | 0.24964 | 5.1908 |
| F6 Standard Deviation | 0.0021647 | 0.23817 | 0.80692 | 0.058841 | 0.25713 | 11.0357 |
| F6 Average Value | 0.0021236 | 0.36652 | 1.5199 | 0.11855 | 0.54914 | 12.1774 |
| F6 Median Value | 0.0013819 | 0.29371 | 1.5159 | 0.11984 | 0.50366 | 7.2204 |
| F6 Maximum Value | 0.0072755 | 0.90824 | 3.017 | 0.22648 | 0.99279 | 35.4493 |
| F7 Minimum Value | 5.9325e-06 | 9.8841e-05 | 0.0066106 | 7.2842e-05 | 0.00054522 | 0.013495 |
| F7 Standard Deviation | 1.8724e-05 | 0.00013572 | 0.013488 | 0.0037777 | 0.0013254 | 0.064203 |
| F7 Average Value | 3.4074e-05 | 0.00027541 | 0.030573 | 0.0027863 | 0.001424 | 0.066626 |
| F7 Median Value | 3.7737e-05 | 0.000272 | 0.030255 | 0.0014118 | 0.0010548 | 0.042689 |
| F7 Maximum Value | 6.2134e-05 | 0.00053759 | 0.047252 | 0.012901 | 0.0049689 | 0.21389 |
| F8 Minimum Value | -8941.9029 | -11202.8618 | -11212.2637 | -12567.4537 | -7241.0015 | -3964.0364 |
| F8 Standard Deviation | 705.6836 | 419.4716 | 352.7549 | 1680.0918 | 470.9275 | 158.2354 |
| F8 Average Value | -7979.4087 | -10433.1947 | -10551.4162 | -10362.0449 | -6462.9317 | -3734.5521 |
| F8 Median Value | -7675.3417 | -10305.079 | -10469.8854 | -9965.6122 | -6406.543 | -3705.014 |
| F8 Maximum Value | -7314.8199 | -10014.2347 | -10071.167 | -8522.1804 | -5790.5202 | -3541.7477 |
| F9 Minimum Value | 0 | 32.1315 | 35.0709 | 0 | 0 | 0.16486 |
| F9 Standard Deviation | 0 | 9.6948 | 16.224 | 0 | 1.895 | 38.8566 |
| F9 Average Value | 0 | 47.3503 | 58.4451 | 0 | 1.087 | 46.9693 |
| F9 Median Value | 0 | 47.4626 | 55.8667 | 0 | 1.7053e-13 | 52.2689 |
| F9 Maximum Value | 0 | 64.4491 | 88.9517 | 0 | 5.2149 | 99.3974 |
| F10 Minimum Value | 4.4409e-16 | 4.4409e-16 | 1.5369 | 4.4409e-16 | 5.0182e-14 | 0.047233 |
| F10 Standard Deviation | 0 | 1.1235e-15 | 0.36392 | 2.4841e-15 | 7.1937e-15 | 10.1462 |
| F10 Average Value | 4.4409e-16 | 3.6415e-15 | 2.0332 | 5.4179e-15 | 5.7643e-14 | 10.2912 |
| F10 Median Value | 4.4409e-16 | 3.9968e-15 | 2.0954 | 5.7732e-15 | 5.7288e-14 | 10.3939 |
| F10 Maximum Value | 4.4409e-16 | 3.9968e-15 | 2.6224 | 7.5495e-15 | 6.7946e-14 | 20.352 |
| F11 Minimum Value | 0 | 0 | 0.70668 | 0 | 0 | 0.32025 |
| F11 Standard Deviation | 0 | 0.19571 | 0.1102 | 0 | 0.0072452 | 0.27456 |
| F11 Average Value | 0 | 0.12263 | 0.87297 | 0 | 0.0044876 | 0.81096 |
| F11 Median Value | 0 | 0.026978 | 0.86309 | 0 | 0 | 0.87535 |
| F11 Maximum Value | 0 | 0.48677 | 1.0302 | 0 | 0.015867 | 1.2516 |
| F12 Minimum Value | 8.7918e-06 | 0.64823 | 0.64372 | 0.005086 | 0.019088 | 0.64244 |
| F12 Standard Deviation | 0.00033743 | 2.0266 | 1.6162 | 0.011681 | 0.010848 | 85.404 |
| F12 Average Value | 0.00014159 | 2.4297 | 2.2166 | 0.017477 | 0.035523 | 37.3233 |
| F12 Median Value | 3.9058e-05 | 1.8708 | 1.9305 | 0.015435 | 0.038864 | 8.3931 |
| F12 Maximum Value | 0.0010994 | 7.3143 | 5.9443 | 0.044372 | 0.048986 | 278.8304 |
| F13 Minimum Value | 0.10897 | 0.68249 | 0.10148 | 0.093222 | 0.11287 | 2.7887 |
| F13 Standard Deviation | 0.42285 | 0.72067 | 0.044075 | 0.15559 | 0.19083 | 3464.4011 |
| F13 Average Value | 0.86081 | 1.5917 | 0.15969 | 0.26413 | 0.51709 | 1600.5508 |
| F13 Median Value | 0.97321 | 1.3588 | 0.15913 | 0.20027 | 0.5127 | 24.2384 |
| F13 Maximum Value | 1.3428 | 2.6575 | 0.24852 | 0.48656 | 0.84402 | 10046.4838 |
| F14 Minimum Value | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 | 0.998 |
| F14 Standard Deviation | 1.813e-16 | 1.5457 | 6.6484e-12 | 3.0198 | 3.5827 | 0.95833 |
| F14 Average Value | 0.998 | 1.6899 | 0.998 | 2.2728 | 4.1415 | 1.5934 |
| F14 Median Value | 0.998 | 0.998 | 0.998 | 0.99815 | 2.9821 | 0.99823 |
| F14 Maximum Value | 0.998 | 5.9288 | 0.998 | 10.7632 | 10.7632 | 2.9821 |
| F15 Minimum Value | 0.00030749 | 0.00032065 | 0.00040604 | 0.0003206 | 0.00030749 | 0.00055656 |
| F15 Standard Deviation | 0.0003688 | 0.0062831 | 0.0095202 | 0.00040904 | 0.0084458 | 0.00036924 |
| F15 Average Value | 0.00063972 | 0.0024876 | 0.006571 | 0.00078746 | 0.0043389 | 0.0010789 |
| F15 Median Value | 0.00054085 | 0.00050553 | 0.00067254 | 0.00073404 | 0.00030813 | 0.0010486 |
| F15 Maximum Value | 0.0012759 | 0.020363 | 0.020363 | 0.0015046 | 0.020363 | 0.0015135 |
| F16 Minimum Value | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 |
| F16 Standard Deviation | 1.282e-16 | 1.9582e-16 | 1.2238e-07 | 9.7558e-11 | 2.1284e-08 | 3.4995e-05 |
| F16 Average Value | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 |
| F16 Median Value | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 |
| F16 Maximum Value | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0316 | -1.0315 |
| F17 Minimum Value | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39796 |
| F17 Standard Deviation | 0 | 0 | 4.5491e-07 | 2.0914e-06 | 6.7395e-05 | 0.0012934 |
| F17 Average Value | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39791 | 0.39942 |
| F17 Median Value | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.39935 |
| F17 Maximum Value | 0.39789 | 0.39789 | 0.39789 | 0.39789 | 0.3981 | 0.4025 |
| F18 Minimum Value | 3 | 3 | 3 | 3 | 3 | 3 |
| F18 Standard Deviation | 1.9244e-15 | 3.0553e-15 | 5.1877e-08 | 2.0542e-05 | 1.1567e-05 | 6.5547e-05 |
| F18 Average Value | 3 | 3 | 3 | 3 | 3 | 3.0001 |
| F18 Median Value | 3 | 3 | 3 | 3 | 3 | 3 |
| F18 Maximum Value | 3 | 3 | 3 | 3.0001 | 3 | 3.0002 |
| F19 Minimum Value | -3.8628 | -3.8628 | -3.8628 | -3.8628 | -3.8628 | -3.8623 |
| F19 Standard Deviation | 8.6315e-16 | 7.4015e-16 | 5.2188e-07 | 0.0031652 | 0.0037075 | 0.0033693 |
| F19 Average Value | -3.8628 | -3.8628 | -3.8628 | -3.8594 | -3.8604 | -3.8563 |
| F19 Median Value | -3.8628 | -3.8628 | -3.8628 | -3.8597 | -3.8626 | -3.8546 |
| F19 Maximum Value | -3.8628 | -3.8628 | -3.8628 | -3.8539 | -3.8549 | -3.852 |
| F20 Minimum Value | -3.2031 | -3.322 | -3.322 | -3.3218 | -3.322 | -3.1176 |
| F20 Standard Deviation | 0.041065 | 0.057431 | 0.057429 | 0.060089 | 0.1023 | 0.49659 |
| F20 Average Value | -3.1637 | -3.2863 | -3.2863 | -3.2927 | -3.2182 | -2.7968 |
| F20 Median Value | -3.1675 | -3.322 | -3.322 | -3.3208 | -3.1985 | -3.0029 |
| F20 Maximum Value | -3.0977 | -3.2031 | -3.2031 | -3.1688 | -3.0272 | -1.8081 |
| F21 Minimum Value | -10.1532 | -10.1532 | -10.1532 | -10.1513 | -10.1529 | -7.4983 |
| F21 Standard Deviation | 2.1479 | 2.871 | 2.9233 | 2.6293 | 0.00097496 | 2.523 |
| F21 Average Value | -9.4737 | -8.4288 | -6.8697 | -8.1101 | -10.1514 | -2.9758 |
| F21 Median Value | -10.1532 | -10.1532 | -5.1007 | -10.1429 | -10.1515 | -2.6826 |
| F21 Maximum Value | -3.3607 | -2.6305 | -2.6305 | -5.0551 | -10.1498 | -0.49728 |
| F22 Minimum Value | -10.4029 | -10.4029 | -10.4029 | -10.4025 | -10.4023 | -5.2831 |
| F22 Standard Deviation | 2.1119 | 3.0882 | 3.1212 | 2.7809 | 1.6676 | 0.86604 |
| F22 Average Value | -9.735 | -7.2831 | -8.0118 | -9.0997 | -9.8738 | -4.335 |
| F22 Median Value | -10.4029 | -7.2186 | -10.4014 | -10.3924 | -10.4012 | -4.6794 |
| F22 Maximum Value | -3.7243 | -2.7659 | -3.7243 | -2.7656 | -5.1276 | -2.7142 |
| F23 Minimum Value | -10.5364 | -10.5364 | -10.5363 | -10.5355 | -10.5361 | -6.7449 |
| F23 Standard Deviation | 2.119 | 3.7908 | 2.6046 | 3.9107 | 0.00085842 | 1.6382 |
| F23 Average Value | -9.8663 | -6.4037 | -8.9183 | -6.8186 | -10.5352 | -3.7579 |
| F23 Median Value | -10.5364 | -5.3924 | -10.5356 | -7.0568 | -10.5355 | -3.477 |
| F23 Maximum Value | -3.8354 | -2.4217 | -5.1281 | -1.8594 | -10.5337 | -0.94217 |

| Function | SO | RIME | WOA | GWO | SCA |
|---|---|---|---|---|---|
| F1 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 |
| F2 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 |
| F3 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 |
| F4 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 |
| F5 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 3.2984e-04 | 1.8267e-04 |
| F6 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 |
| F7 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 |
| F8 | 1.8267e-04 | 1.8267e-04 | 0.0010 | 1.8267e-04 | 1.8267e-04 |
| F9 | 6.3864e-05 | 6.3864e-05 | 1 | 2.1655e-04 | 6.3864e-05 |
| F10 | 9.6605e-05 | 6.3864e-05 | 1.8923e-04 | 5.7206e-05 | 6.3864e-05 |
| F11 | 0.0149 | 6.3864e-05 | 1 | 0.0779 | 6.3864e-05 |
| F12 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 | 1.8267e-04 |
| F13 | 0.0312 | 0.0140 | 0.0113 | 0.0211 | 1.8267e-04 |
| F14 | 0.0384 | 1.3093e-04 | 1.3093e-04 | 1.3093e-04 | 1.3093e-04 |
| F15 | 0.8501 | 0.1620 | 0.3847 | 0.2730 | 0.0073 |
| F16 | 0.0891 | 1.2855e-04 | 1.2855e-04 | 1.2855e-04 | 1.2855e-04 |
| F17 | 1 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 | 6.3864e-05 |
| F18 | 0.0060 | 1.7661e-04 | 1.7661e-04 | 1.7661e-04 | 1.7661e-04 |
| F19 | 0.1851 | 1.0997e-04 | 1.0997e-04 | 1.0997e-04 | 1.0997e-04 |
| F20 | 9.0134e-04 | 0.0022 | 0.0028 | 0.2404 | 3.2138e-04 |
| F21 | 0.1770 | 0.0055 | 0.0044 | 0.0167 | 7.2031e-04 |
| F22 | 0.0323 | 0.0029 | 0.0023 | 0.0029 | 0.0013 |
| F23 | 0.0124 | 0.0027 | 7.2031e-04 | 0.0027 | 5.4476e-04 |
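Assuming the values above are p-values from a two-sided Wilcoxon rank-sum test of ISO against each competitor (a common choice for this kind of comparison, though the table itself does not say so), such a value can be sketched as follows. The sketch uses the normal approximation with a continuity correction and ignores ties; the function name and the sample data are hypothetical.

```python
import math


def rank_sum_p_value(x, y):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation).

    Assumes there are no tied values across the two samples.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    # Rank sum of the first sample (ranks start at 1).
    w = sum(rank for rank, (_, group) in enumerate(pooled, start=1)
            if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    # Continuity correction; clamp at zero when w is at the mean.
    z = max(abs(w - mean) - 0.5, 0.0) / sd
    return math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    runs_a = [float(v) for v in range(1, 11)]    # hypothetical results
    runs_b = [float(v) for v in range(11, 21)]   # hypothetical results
    print(rank_sum_p_value(runs_a, runs_b))
```

For two fully separated samples of ten values each, this approximation gives a p-value of about 1.8e-04. A p-value below the usual 0.05 level would indicate a statistically significant difference between the two algorithms on that function.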

7. Engineering Application

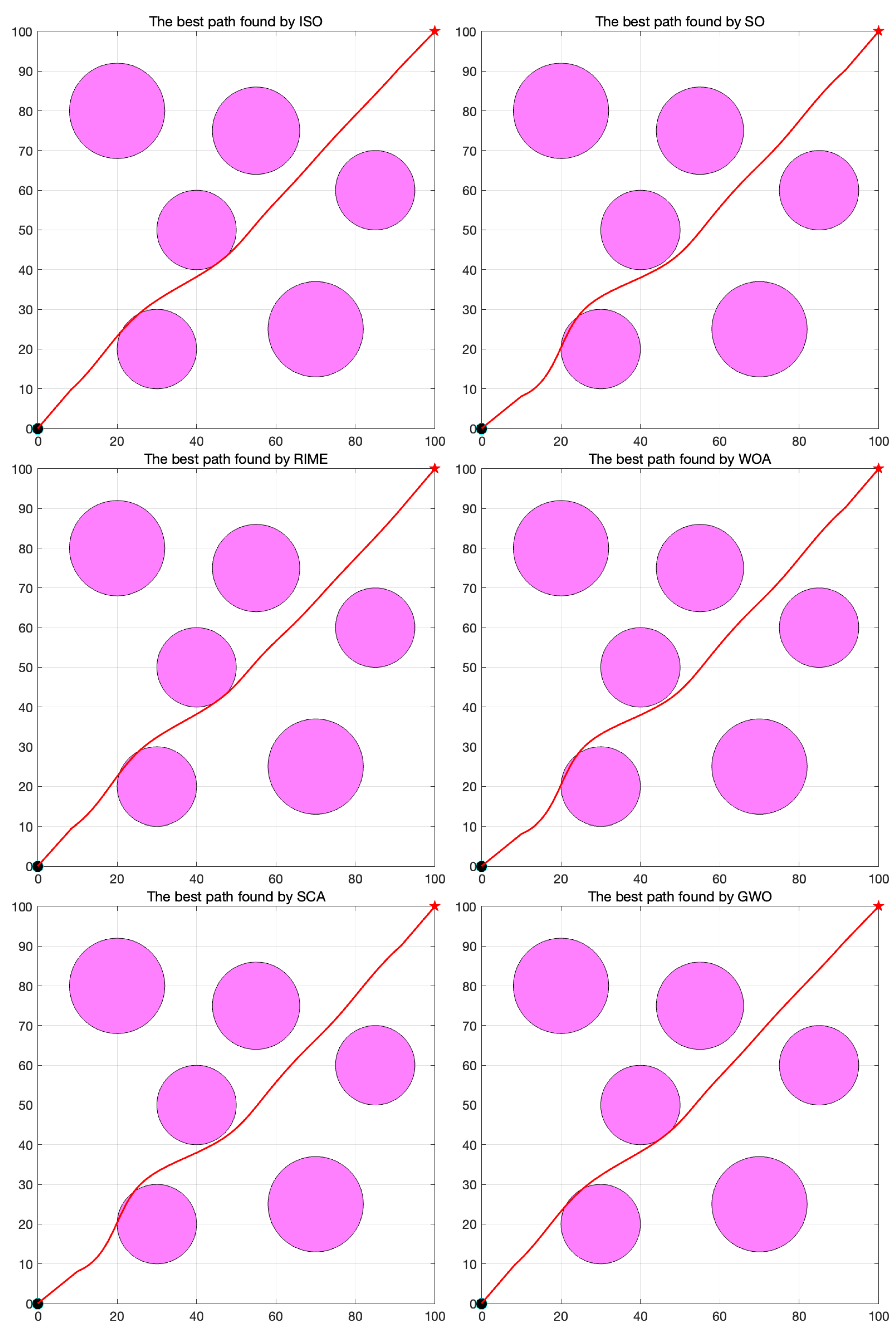

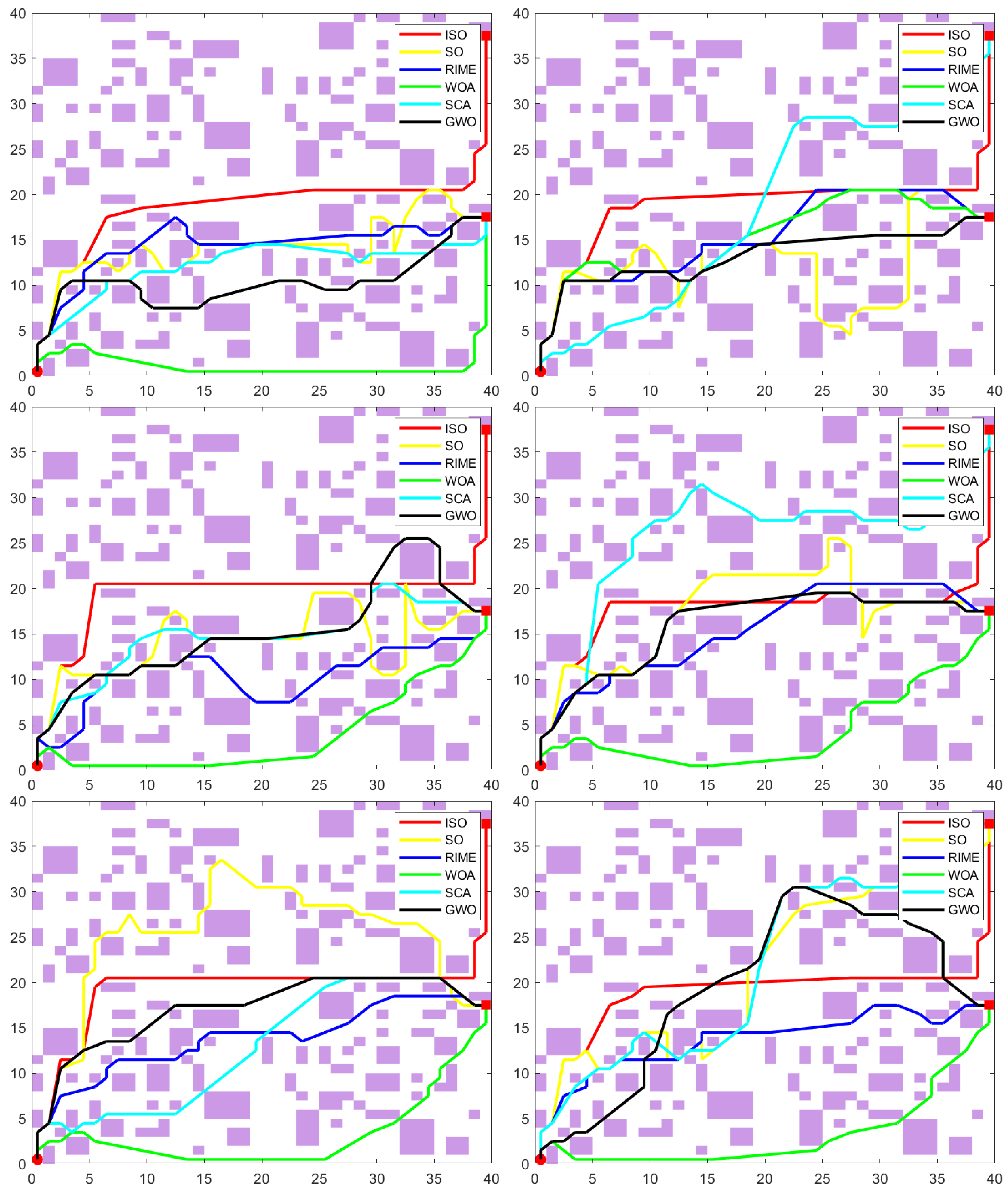

7.1. UAV Path Planning

| ISO | SO | RIME | GWO | SCA | WOA |
|---|---|---|---|---|---|
| 70.892 | 73.207 | 73.151 | 72.152 | 74.522 | 73.133 |

7.2. Robot Path Planning

| Experiment | ISO | SO | RIME | WOA | SCA | GWO |
|---|---|---|---|---|---|---|
| 1 | Success (68.4081) | Failure (71.0511) | Failure (53.4309) | Failure (57.3173) | Failure (83.4763) | Failure (53.6302) |
| 2 | Success (70.0802) | Failure (85.3329) | Failure (54.1934) | Failure (53.826) | Success (87.9804) | Failure (50.4595) |
| 3 | Success (72.376) | Failure (79.9242) | Failure (56.6274) | Failure (49.7342) | Failure (80.1427) | Failure (59.6362) |
| 4 | Success (71.117) | Failure (71.9905) | Failure (51.5289) | Failure (51.3301) | Success (80.7291) | Failure (51.0745) |
| 5 | Success (71.799) | Failure (79.826) | Failure (50.7607) | Failure (50.8605) | Failure (76.9859) | Failure (52.1692) |
| 6 | Success (69.3769) | Success (81.929) | Failure (50.8476) | Failure (50.207) | Success (91.7876) | Failure (64.3891) |

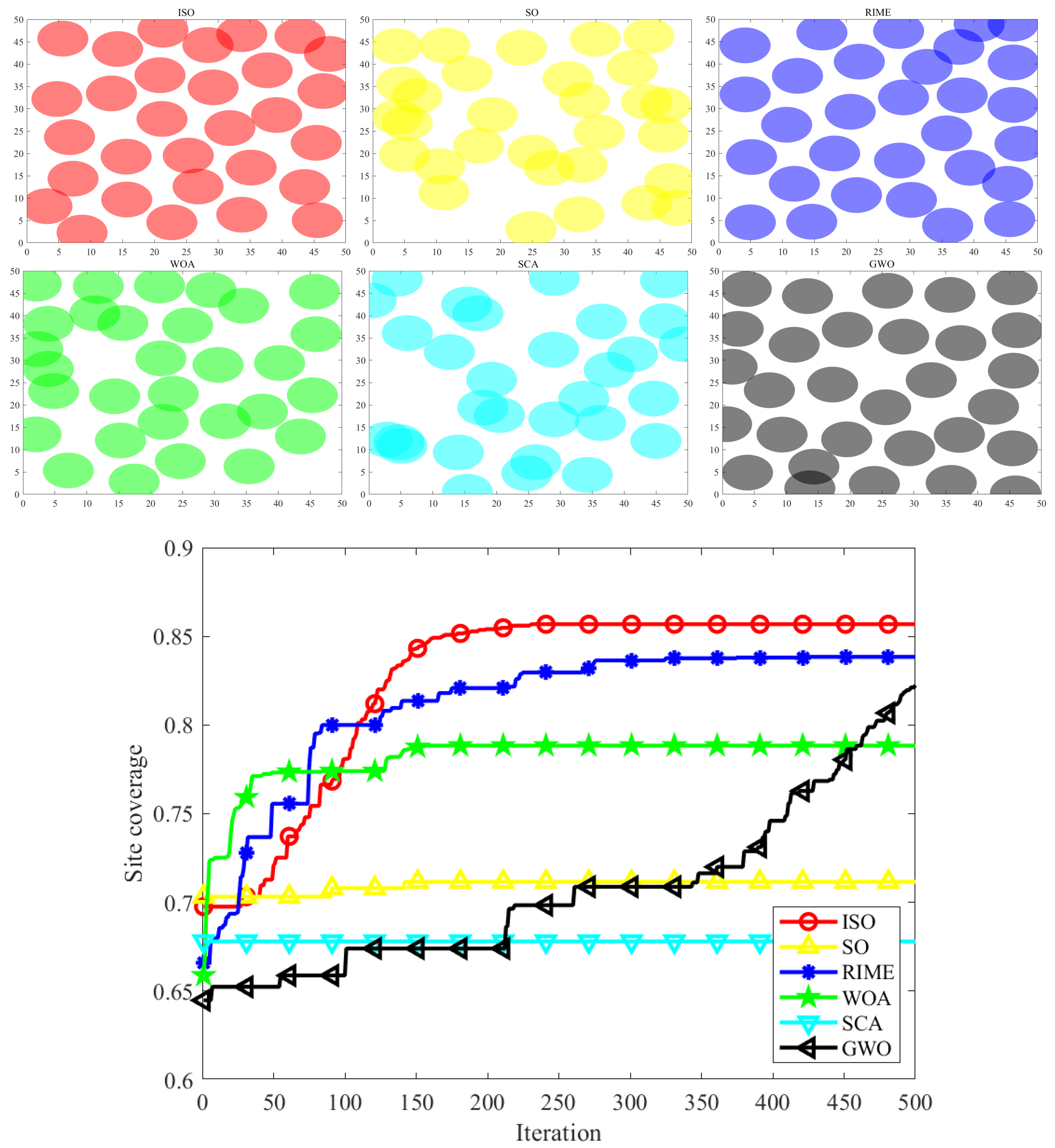

7.3. Wireless Sensor Network Node Deployment

| ISO | SO | RIME | GWO | SCA | WOA |
|---|---|---|---|---|---|
| 0.8571 | 0.71137 | 0.83802 | 0.78817 | 0.67697 | 0.80669 |

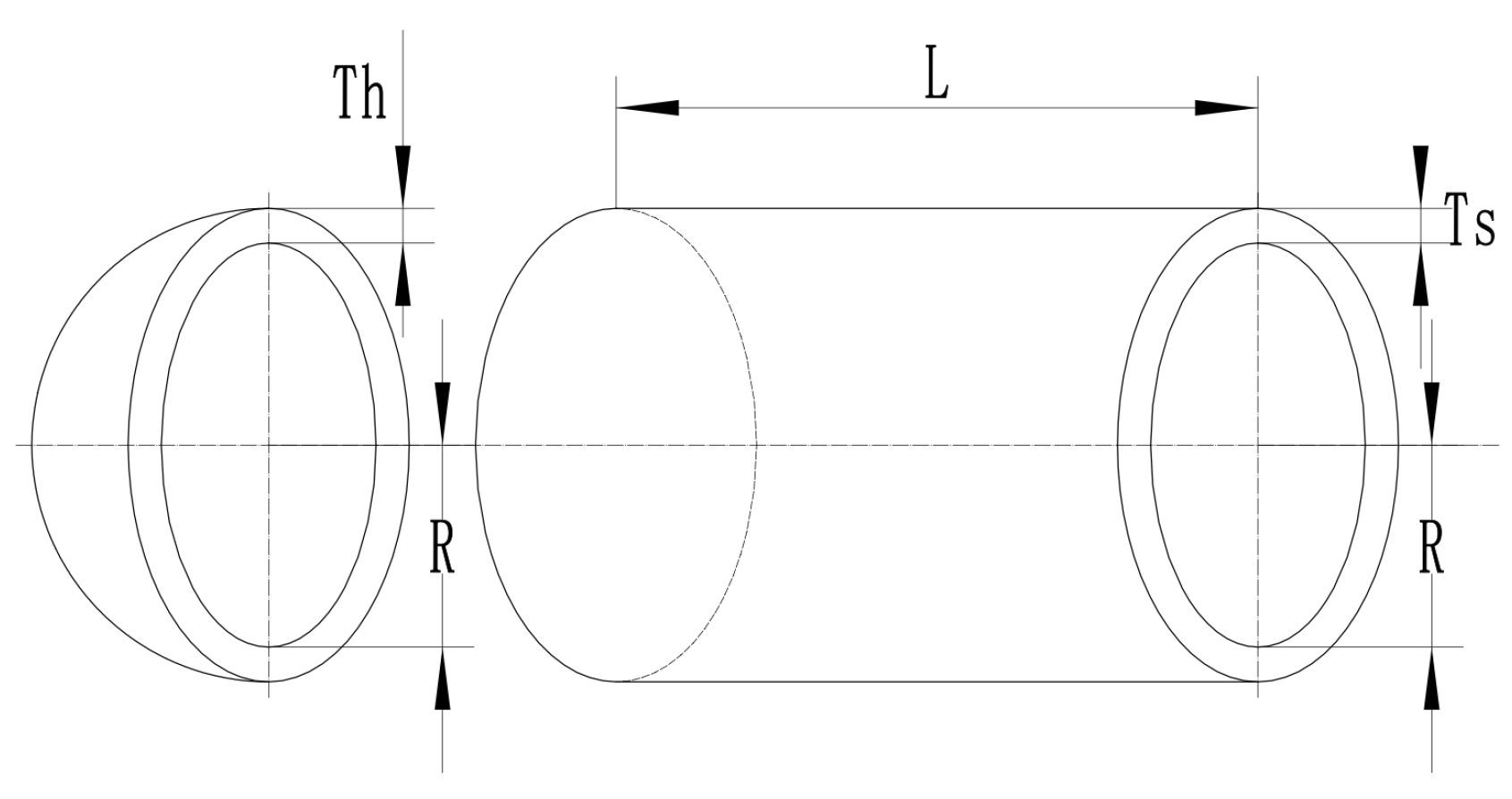

7.3.1. Pressure Vessel Design

| Algorithm | h | l | t | b | Optimal value | Ranking |
|---|---|---|---|---|---|---|
| ISO | 0.1991 | 3.3339 | 9.1857 | 0.1991 | 1.6712 | 1 |
| SO | 0.19372 | 3.437 | 9.192 | 0.19883 | 1.6757 | 2 |
| RIME | 0.4176 | 2.3298 | 4.9704 | 0.68011 | 3.1046 | 6 |
| SCA | 0.19728 | 3.8021 | 9.181 | 0.19971 | 1.7338 | 4 |
| GWO | 0.1942 | 3.4412 | 9.1891 | 0.19897 | 1.6776 | 3 |
| WOA | 0.125 | 8.3518 | 8.3518 | 0.24085 | 2.3073 | 5 |

8. Conclusion and Outlook


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).