Considering these three characteristics, a neural network model, namely the transformer model, is proposed. For example, regarding the non-linear combination, in a transformer model with leading transformer blocks followed by feedforward networks, all processes from input to output are (fully) connected.

Furthermore, regarding the time ordering of elements, the method does not take time ordering into account: the positional encoding contained in the transformer architecture, which usually provides the input data with information on the time sequence, is not used. Since the elements belonging to the information set are independently drawn from the same interval, their sequence within the set does not need to reflect the time ordering of the random draw. Thus, the positional encoding operation can be left out here.
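Why omitting positional encoding makes the attention layer indifferent to the order of elements can be checked numerically: without positional encoding, self-attention is permutation-equivariant, so shuffling the input elements only shuffles the outputs in the same way. The following is a minimal NumPy sketch under stated assumptions; the sizes and the random (untrained) weights are illustrative, not the paper's trained model.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention, no positional encoding."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Row-wise softmax, shifted by each row's max for numerical stability.
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


rng = np.random.default_rng(0)
n, d_model, d_k = 35, 35, 7            # illustrative sizes (assumed)
x = rng.normal(size=(n, d_model))      # synthetic input: one row per element
w_q, w_k, w_v = (rng.normal(scale=0.1, size=(d_model, d_k)) for _ in range(3))

perm = rng.permutation(n)              # shuffle the order of the elements
out = self_attention(x, w_q, w_k, w_v)
out_shuffled = self_attention(x[perm], w_q, w_k, w_v)

# Shuffling the inputs merely shuffles the outputs in the same way.
print(np.allclose(out[perm], out_shuffled))  # True
```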

Finally, as mentioned earlier, different bidders are assumed to submit different predicted values of the random cutoff as bids. The transformer model can produce varied predicted values by adjusting hyperparameters such as the number of transformer blocks, although other neural network models can also yield diverse results through the relevant hyperparameter tuning. Accordingly, a method to predict the random cutoff through the application of the transformer model is proposed in the next section.

3.1. Data Description

The data used in this paper originate from the records of auctions held by the Korea Electric Power Corporation (KEPCO). For each auction it held throughout 2018, KEPCO provides information such as the reserve price, the budget price, the ratio called the bottom rate for the lowest successful bid, and the bid prices of all participants, including the winner's bid price. The reserve price is not revealed to bidders until all bids have been submitted; it is made public when the winner is announced.

The normalized reserve price is derived such that the reserve price is divided by the budget price. Then, the normalized reserve price is also multiplied by the ratio

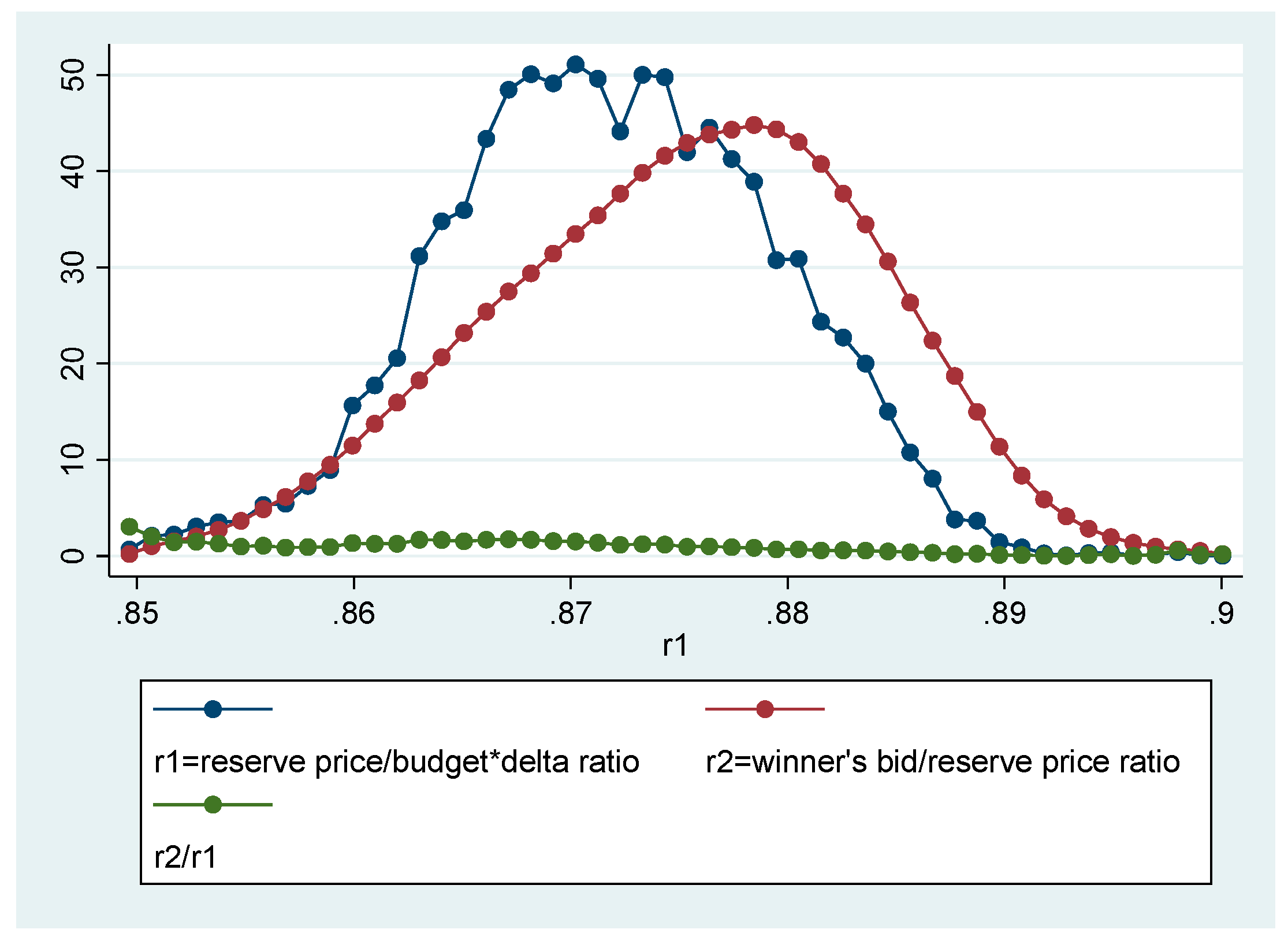

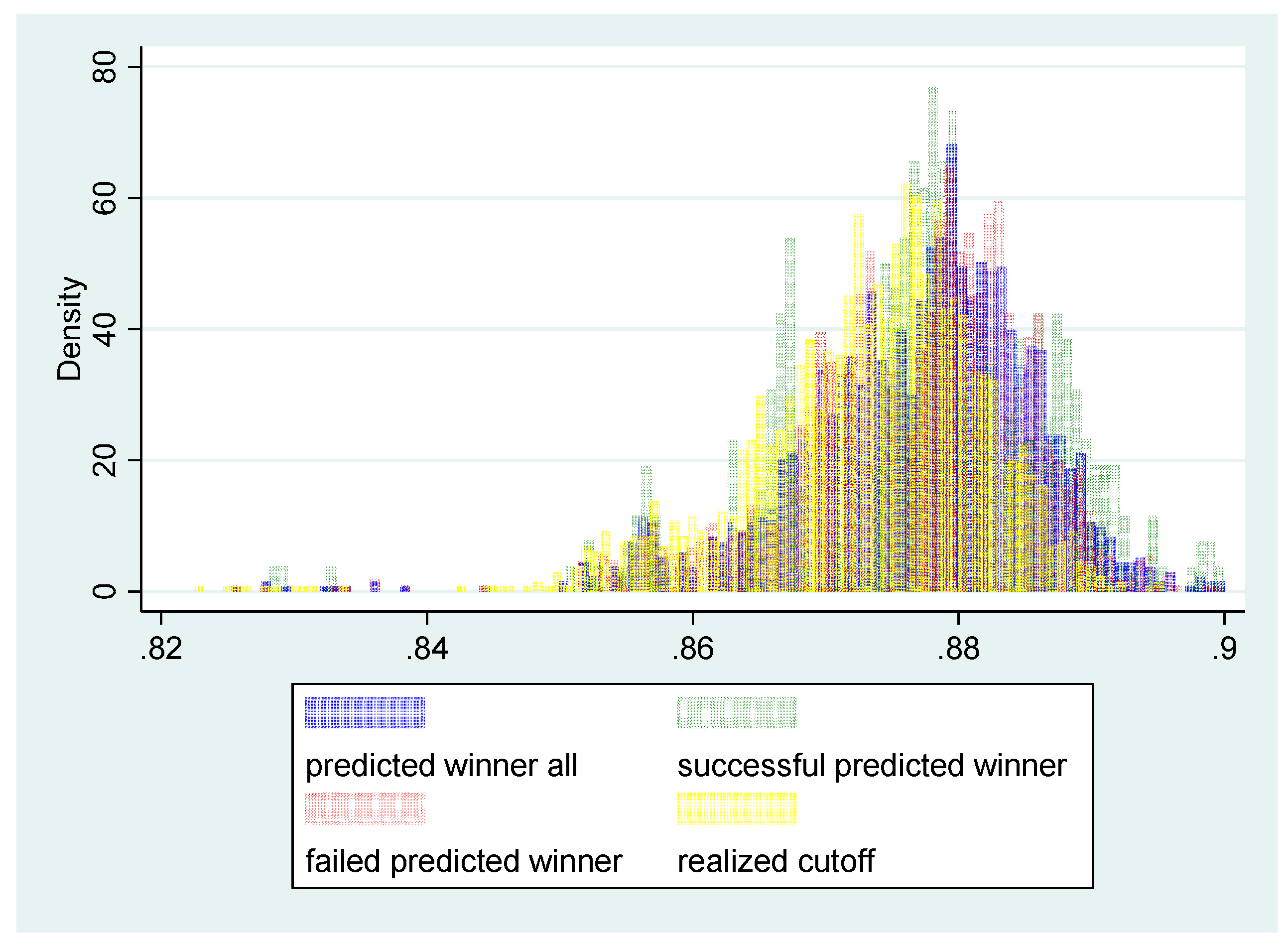

, and then, the random cutoff is eventually calculated. The random cutoff is the criterion to determine a winner since the normalized bid, the ratio of bid price over the reserve price should be above and the closest to the criterion in order for a bidder to be a winner. As shown in the

Figure 2, there are two kinds of kernel distributions. The one is for the ratio of reserve price to budget price (normalized reserve price) multiplied by the bottom rate for the winning bid. The other is for the ratio of winner’s bid to reserve price, called the normalized bid. As indicated in the

Figure 2, the two distributions corresponding to the two kinds of ratios are mostly overlapped, even though the distribution of the latter is more left skewed.
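The cutoff computation and the winner rule described above can be sketched as follows. This is an illustrative sketch, not KEPCO's procedure: the helper names are hypothetical, the prices are made-up numbers, the bids are taken as already normalized, and treating a bid exactly at the cutoff as qualifying is an assumption.

```python
def random_cutoff(reserve_price, budget_price, bottom_rate):
    """Random cutoff = (reserve price / budget price) * bottom rate."""
    normalized_reserve_price = reserve_price / budget_price
    return normalized_reserve_price * bottom_rate


def select_winner(normalized_bids, cutoff):
    """Return the index of the winning bidder, or None if no bid qualifies.

    A bid qualifies when its normalized value is at or above the cutoff
    (counting a bid exactly at the cutoff is an assumption); the winner is
    the qualifying bid closest to the cutoff.
    """
    qualifying = [(bid - cutoff, i) for i, bid in enumerate(normalized_bids) if bid >= cutoff]
    if not qualifying:
        return None
    return min(qualifying)[1]


# Illustrative numbers only (not KEPCO records).
cutoff = random_cutoff(reserve_price=101.0, budget_price=100.0, bottom_rate=0.87745)
bids = [0.850, 0.890, 0.887, 0.930]
print(select_winner(bids, cutoff))  # 2
```

Here the cutoff is 1.01 × 0.87745 ≈ 0.886, so the bid of 0.887 is the qualifying bid closest to it.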

Figure 2 also shows the ratio between the two kernel density estimates, i.e., the ratio of the values of the two kernel densities evaluated at the same points. For instance, at the point 0.86199027, the kernel density estimate in the numerator equals 20.571264 and the one in the denominator equals 15.962241, so the ratio reaches 20.571264 / 15.962241 ≈ 1.288745. As Figure 2 indicates, the ratio shows no dramatic change in trend, although it declines slowly. Additionally, Table 1 indicates that the mean of the ratio is slightly less than one.
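A ratio of two kernel density estimates evaluated on a common grid can be computed as in the sketch below. It assumes Gaussian kernels and Scott's rule for the bandwidth (the paper states neither), and it uses synthetic samples in place of the KEPCO data, so its printed ratios say nothing about the actual results; only the last line reproduces the arithmetic from the text.

```python
import numpy as np


def gaussian_kde(samples, points, bandwidth=None):
    """1-D Gaussian kernel density estimate evaluated at `points`.

    The default bandwidth follows Scott's rule, h = s * n**(-1/5), where s is the
    sample standard deviation (an assumption; the paper's bandwidth is not stated).
    """
    samples = np.asarray(samples, dtype=float)
    points = np.atleast_1d(np.asarray(points, dtype=float))
    n = samples.size
    if bandwidth is None:
        bandwidth = samples.std(ddof=1) * n ** (-1 / 5)
    u = (points[:, None] - samples[None, :]) / bandwidth
    kernels = np.exp(-0.5 * u**2) / np.sqrt(2 * np.pi)
    return kernels.sum(axis=1) / (n * bandwidth)


def density_ratio(samples_num, samples_den, points):
    """Ratio of two kernel density estimates evaluated at the same points."""
    return gaussian_kde(samples_num, points) / gaussian_kde(samples_den, points)


rng = np.random.default_rng(1)
# Synthetic stand-ins for the two normalized ratios (NOT the KEPCO data).
cutoff_sample = rng.normal(0.880, 0.010, size=500)
bid_sample = rng.normal(0.879, 0.012, size=500)
grid = np.linspace(0.85, 0.91, 7)
print(density_ratio(cutoff_sample, bid_sample, grid))

# The worked example from the text: 20.571264 / 15.962241
print(round(20.571264 / 15.962241, 6))  # 1.288745
```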

3.2. Main Model Architecture

As previously stated, the transformer model architecture is employed to predict the random cutoff. The Keras code examples provide a transformer architecture in the example "Timeseries classification with a Transformer model". This architecture is adopted and slightly modified (following Jurafsky and Martin [9] and Vaswani et al. [10]). One transformer block consists of the following layers.

Specifically, the multi-head attention layer precedes all other layers and performs self-attention; it is followed by layer normalization. Two 1D convolution layers are then applied. After all transformer blocks, a position-wise fully connected feedforward network is applied.

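Each transformer block, applied to an input $X$, computes

$$
\begin{aligned}
Z_1 &= \mathrm{MultiHeadAttention}(X) && \text{(self-attention layer)}\\
Z_2 &= \mathrm{LayerNorm}(Z_1) && \text{(layer normalization)}\\
Z_3 &= X + Z_2 && \text{(residual connection)}\\
Z_4 &= \mathrm{Conv1D}(Z_3) && \text{(1D convolution layer)}\\
Z_5 &= \mathrm{Conv1D}(Z_4)\\
Z_6 &= \mathrm{LayerNorm}(Z_5)\\
Z_7 &= Z_3 + Z_6 && \text{(residual connection; block output)}
\end{aligned}
$$

Five such blocks are stacked, and a fully connected feedforward layer produces the output:

$$
H = \mathrm{TransformerBlock}^{(5)}\big(\cdots \mathrm{TransformerBlock}^{(1)}(X)\cdots\big), \qquad \hat{y} = \mathrm{FFN}(H).
$$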
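A forward pass through this stack can be sketched in NumPy to make the data flow and shapes concrete. This is a hypothetical, untrained sketch, not the authors' Keras model: the weights are random, biases and learnable LayerNorm parameters are omitted, the residual wiring follows the layer list above, the ReLU after the first convolution is borrowed from the Keras example as an assumption, and the final averaging over positions before the dense output is also assumed.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, N_HEADS, D_K, N_FILTERS, N_BLOCKS = 35, 5, 7, 5, 5  # sizes stated in the text


def layer_norm(x, eps=1e-6):
    """Normalize each position over its features (learnable scale/shift omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def softmax(z):
    z = np.exp(z - z.max(axis=-1, keepdims=True))
    return z / z.sum(axis=-1, keepdims=True)


def init_block():
    """Hypothetical untrained weights for one block (biases omitted for brevity)."""
    p = {name: rng.normal(scale=0.1, size=(D_MODEL, D_MODEL)) for name in ("wq", "wk", "wv", "wo")}
    p["conv1"] = rng.normal(scale=0.1, size=(D_MODEL, N_FILTERS))  # kernel size 1: 35 -> 5
    p["conv2"] = rng.normal(scale=0.1, size=(N_FILTERS, D_MODEL))  # kernel size 1: 5 -> 35
    return p


def multi_head_attention(x, p):
    n = x.shape[0]
    # Project to 35 columns, then split them into 5 heads of width 7.
    q, k, v = ((x @ p[w]).reshape(n, N_HEADS, D_K).transpose(1, 0, 2) for w in ("wq", "wk", "wv"))
    heads = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(D_K)) @ v  # (heads, n, 7)
    concat = heads.transpose(1, 0, 2).reshape(n, D_MODEL)        # head_1 ⊕ ... ⊕ head_5
    return concat @ p["wo"]


def transformer_block(x, p):
    z3 = x + layer_norm(multi_head_attention(x, p))     # attention, LayerNorm, residual
    z5 = np.maximum(z3 @ p["conv1"], 0.0) @ p["conv2"]  # two kernel-size-1 Conv1D layers
    return z3 + layer_norm(z5)                          # LayerNorm, residual


x = rng.normal(size=(35, D_MODEL))  # synthetic input: 35 positions x 35 features (assumed)
h = x
for p in [init_block() for _ in range(N_BLOCKS)]:
    h = transformer_block(h, p)
w_ffn = rng.normal(scale=0.1, size=(D_MODEL, 1))
y_hat = h.mean(axis=0) @ w_ffn      # average over positions, then a dense output (assumed)
print(h.shape, y_hat.shape)         # (35, 35) (1,)
```

Every block maps a 35-feature input to a 35-feature output, which is what allows five blocks to be stacked without any reshaping in between.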

3.2.1. Data Construction

The total data are defined in the same way as the information set, replacing t with N. Note that each element stands for the ratio of the reserve price to the budget price, called the normalized reserve price. The normalized random cutoff can be derived by multiplying this ratio by the bottom rate for the lowest successful bid. Since the bottom rate is public information available to all bidders before they submit bids, the normalized reserve price is easily transformed into the normalized random cutoff. Thus, it is sufficient to analyze and predict the normalized reserve price instead of dealing directly with the normalized random cutoff.

A time window of 35 elements with a slide of 1 is applied to the data, so each window contains 35 consecutive elements.

Since the slide equals 1, the window moves to the right one element at a time: each subsequent window drops its oldest element and adds the next one. The window cannot proceed beyond the last (N-th) element, since it must always contain 35 elements.

The batch size of the data fed into the transformer architecture is denoted by b. The data delivered to the transformer algorithm therefore consist of batches of b windows, which serve as the independent variables; for example, the first batch stacks the first b windows.

The number of elements in each window equals 35, which is the number of independent variables, or features, fed into the transformer model. Each window is paired with a corresponding dependent (target) variable.

The next batch is constructed in the same manner from the following b windows and their targets. After the procedure is repeated t times, the t-th batch and its targets are obtained, with the constraint that no window extends beyond the N-th element.
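The windowing and batching steps can be sketched as follows. The helper names are hypothetical, the series is a toy stand-in, and pairing each window with the element that immediately follows it (a next-value target) is an assumption, as is letting the last batch be smaller than b.

```python
import numpy as np


def make_windows(series, window=35, stride=1):
    """Hypothetical helper: sliding windows of `window` consecutive elements.

    Each window is paired with the element that immediately follows it,
    which is an assumed (next-value) choice of target.
    """
    series = np.asarray(series, dtype=float)
    if len(series) <= window:
        raise ValueError("series must be longer than the window")
    starts = range(0, len(series) - window, stride)  # the target must still exist
    x = np.stack([series[s:s + window] for s in starts])
    y = np.array([series[s + window] for s in starts])
    return x, y


def make_batches(x, y, batch_size):
    """Consecutive batches of b windows; the last batch may be smaller (assumed)."""
    return [(x[i:i + batch_size], y[i:i + batch_size]) for i in range(0, len(x), batch_size)]


series = np.arange(1, 101) / 100.0     # toy stand-in for N = 100 normalized reserve prices
x, y = make_windows(series)
batches = make_batches(x, y, batch_size=8)
print(x.shape, y.shape, len(batches))  # (65, 35) (65,) 9
```

With a slide of 1, consecutive windows overlap in 34 of their 35 elements.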

3.2.2. Self-Attention Mechanism

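Next, considering the self-attention mechanism, the weight matrices for query, key, and value of each head $h = 1, \ldots, 5$ are introduced as follows:

$$
W_h^Q \in \mathbb{R}^{35 \times 7}, \quad W_h^K \in \mathbb{R}^{35 \times 7}, \quad W_h^V \in \mathbb{R}^{35 \times 7}.
$$

The matrices for query, key, and value are constructed by multiplying the same input data $X \in \mathbb{R}^{n \times 35}$, where $n$ is the number of positions, by these weight matrices:

$$
Q_h = X W_h^Q, \quad K_h = X W_h^K, \quad V_h = X W_h^V, \qquad Q_h, K_h, V_h \in \mathbb{R}^{n \times 7}.
$$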

As mentioned earlier, the same input data $X$ is used to form the query, key, and value matrices, which is why the algorithm is called self-attention. In other words, the self-attention algorithm measures the similarities among the elements of the input itself, as shown below.

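$$
\mathrm{head}_h = \mathrm{SelfAttention}(Q_h, K_h, V_h) = \mathrm{softmax}\!\left(\frac{Q_h K_h^{\top}}{\sqrt{d_k}}\right) V_h = A_h V_h,
$$

where $d_k = 35 / 5 = 7$ is the dimension of each query and key vector, $A_h = \mathrm{softmax}\!\left(Q_h K_h^{\top} / \sqrt{d_k}\right) \in \mathbb{R}^{n \times n}$, and the softmax is applied to each row $z = (z_1, \ldots, z_n)$ as

$$
\mathrm{softmax}(z)_j = \frac{e^{z_j}}{\sum_{l=1}^{n} e^{z_l}}.
$$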

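Meanwhile, the softmax output can be decomposed into a combination of the rows of the value matrix. Let $x_i$ denote the $i$-th row of $X$, $q_i$ the $i$-th row of $Q_h$, $v_j$ the $j$-th row of $V_h$, and $a_{ij}$ the $(i, j)$ entry of $A_h$. Then the $i$-th row of $\mathrm{head}_h$ is

$$
[\mathrm{head}_h]_i = a_{i1} v_1 + a_{i2} v_2 + \cdots + a_{in} v_n, \qquad a_{ij} = \mathrm{softmax}\!\left(\frac{q_i K_h^{\top}}{\sqrt{d_k}}\right)_j.
$$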

In particular, the softmax weight for each pair $i$ and $j$ represents the similarity between $x_i$ and $x_j$, where both $i = j$ and $i \neq j$ are possible. Combining the softmax weights with the value vectors for each $i$ therefore sums all the similarities between $x_i$ and $x_1, x_2, \ldots, x_n$. Thus, this summation serves to abstract the information on all the interactions between $x_i$ and $x_1, x_2, \ldots, x_n$ for each $i$.

For example, if the elements $x_1, x_2, \ldots, x_n$ are sampled in a skewed rather than an even manner, the similarities are expected to be high and the summation reaches a relatively high value; when the elements are evenly sampled, the summation reaches a comparatively low value.
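The row-wise decomposition of the attention output can be verified numerically: the matrix product of the softmax weights with the value matrix equals, row by row, the explicit weighted sum of the value vectors. The sizes and random matrices below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d_k = 6, 7                           # small illustrative sizes (assumed)
q, k, v = (rng.normal(size=(n, d_k)) for _ in range(3))

scores = q @ k.T / np.sqrt(d_k)
a = np.exp(scores - scores.max(axis=1, keepdims=True))
a /= a.sum(axis=1, keepdims=True)      # a[i, j]: softmax weight between elements i and j
head = a @ v                            # matrix form: softmax(Q K^T / sqrt(d_k)) V

i = 3
row_i = sum(a[i, j] * v[j] for j in range(n))  # a_i1 v_1 + a_i2 v_2 + ... + a_in v_n
print(np.allclose(head[i], row_i))      # True
print(np.allclose(a.sum(axis=1), 1.0))  # True: each row of weights sums to one
```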

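As shown earlier, each $\mathrm{head}_h$ is a matrix of dimension $n \times 7$. Since the total dimension needs to be 35, the dimension of the input data $X$, the five heads $\mathrm{head}_1$ to $\mathrm{head}_5$ are concatenated as shown below, and the total number of parameters in the multi-head attention layer equals 5,040.

$$
\mathrm{MultiHeadAttention}(X) = (\mathrm{head}_1 \oplus \mathrm{head}_2 \oplus \mathrm{head}_3 \oplus \mathrm{head}_4 \oplus \mathrm{head}_5)\, W^O,
$$

where the operator $\oplus$ denotes concatenation, $W^O \in \mathbb{R}^{35 \times 35}$, and $\mathrm{MultiHeadAttention}(X) \in \mathbb{R}^{n \times 35}$.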
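The figure of 5,040 parameters can be reproduced with the count below, assuming that every projection carries a bias (the default `use_bias=True` of the Keras `MultiHeadAttention` layer) and that each of the 5 heads has key and value dimension 7. The function name is hypothetical.

```python
def mha_param_count(d_model, num_heads, key_dim, use_bias=True):
    """Parameters of multi-head attention with query/key/value projections
    d_model -> num_heads * key_dim and an output projection back to d_model."""
    inner = num_heads * key_dim                 # width of head_1 ⊕ ... ⊕ head_h
    bias = 1 if use_bias else 0
    qkv = 3 * (d_model * inner + bias * inner)  # W^Q, W^K, W^V (+ biases)
    out = inner * d_model + bias * d_model      # W^O (+ bias)
    return qkv + out


print(mha_param_count(35, 5, 7))                  # 5040
print(mha_param_count(35, 5, 7, use_bias=False))  # 4900
```

In words: the three input projections contribute 3 × (35 × 35 + 35) = 3,780 parameters and the output projection contributes 35 × 35 + 35 = 1,260.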

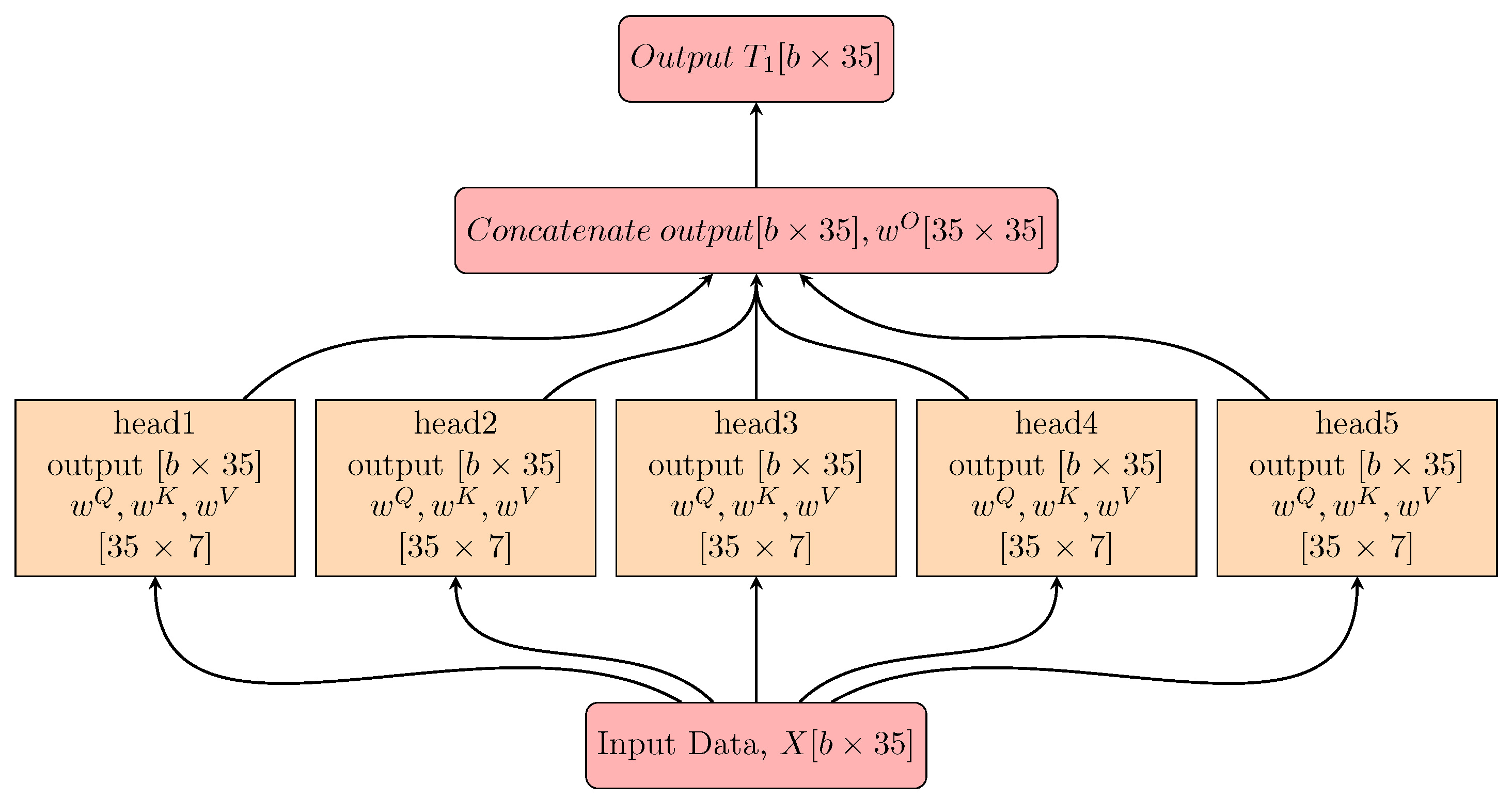

Figure 3 summarizes the multi-head self-attention layer. In Figure 3, "b" stands for the batch size. The input data $X$ is fed into the 5 heads, and each head applies its own query, key, and value weight matrices of dimension $35 \times 7$. The outputs of the heads are concatenated into a 35-dimensional representation ($5 \times 7 = 35$) and then projected to 35 dimensions, which yields an output of the same size as the input data.

3.2.3. 1D Convolution Layer

Conv1D(·) denotes a 1D convolution layer. Two convolution layers are used, as described earlier. In the 1st convolution layer, the number of input channels equals the number of elements, 35, the number of filters (output channels) equals 5, and the kernel size equals 1. In the 2nd convolution layer, the number of input channels equals the number of filters, 5, the number of filters (output channels) equals the number of elements, 35, and the kernel size equals 1.

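More specifically, the set of all parameters of the 1st convolution layer is $\bigcup_{k=1}^{5} \{ w^{(1)}_{1,k}, \ldots, w^{(1)}_{35,k}, b^{(1)}_k \}$, i.e., 35 weights and one bias for each of the 5 filters, so the number of parameters equals $5 \times (35 + 1) = 180$. For instance, if an input $x$ contains the elements $x_1, \ldots, x_{35}$, Conv1D(·) produces

$$
z_k = w^{(1)}_{1,k} x_1 + \cdots + w^{(1)}_{35,k} x_{35} + b^{(1)}_k, \qquad k = 1, \ldots, 5.
$$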
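With a kernel size of 1, a 1D convolution reduces to the same linear map applied independently at every position, which is why the parameter count is simply weights plus biases. The sketch below illustrates this with hypothetical random weights and an assumed number of positions; it omits any activation.

```python
import numpy as np


def conv1d_kernel1(x, weights, bias):
    """Conv1D with kernel size 1: the same linear map applied at every position.

    x: (positions, in_channels), weights: (in_channels, filters), bias: (filters,)
    Output channel k = w[0, k] * x_1 + ... + w[in_channels - 1, k] * x_in + b_k.
    """
    return x @ weights + bias


rng = np.random.default_rng(3)
in_channels, filters = 35, 5
w1 = rng.normal(size=(in_channels, filters))  # hypothetical untrained weights
b1 = rng.normal(size=filters)
x = rng.normal(size=(10, in_channels))        # 10 positions (illustrative), 35 channels each
z = conv1d_kernel1(x, w1, b1)
print(z.shape, w1.size + b1.size)             # (10, 5) 180

# Output channel k = 0 at position 0, written out as the explicit sum
z_00 = sum(w1[j, 0] * x[0, j] for j in range(in_channels)) + b1[0]
print(np.isclose(z[0, 0], z_00))              # True
```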

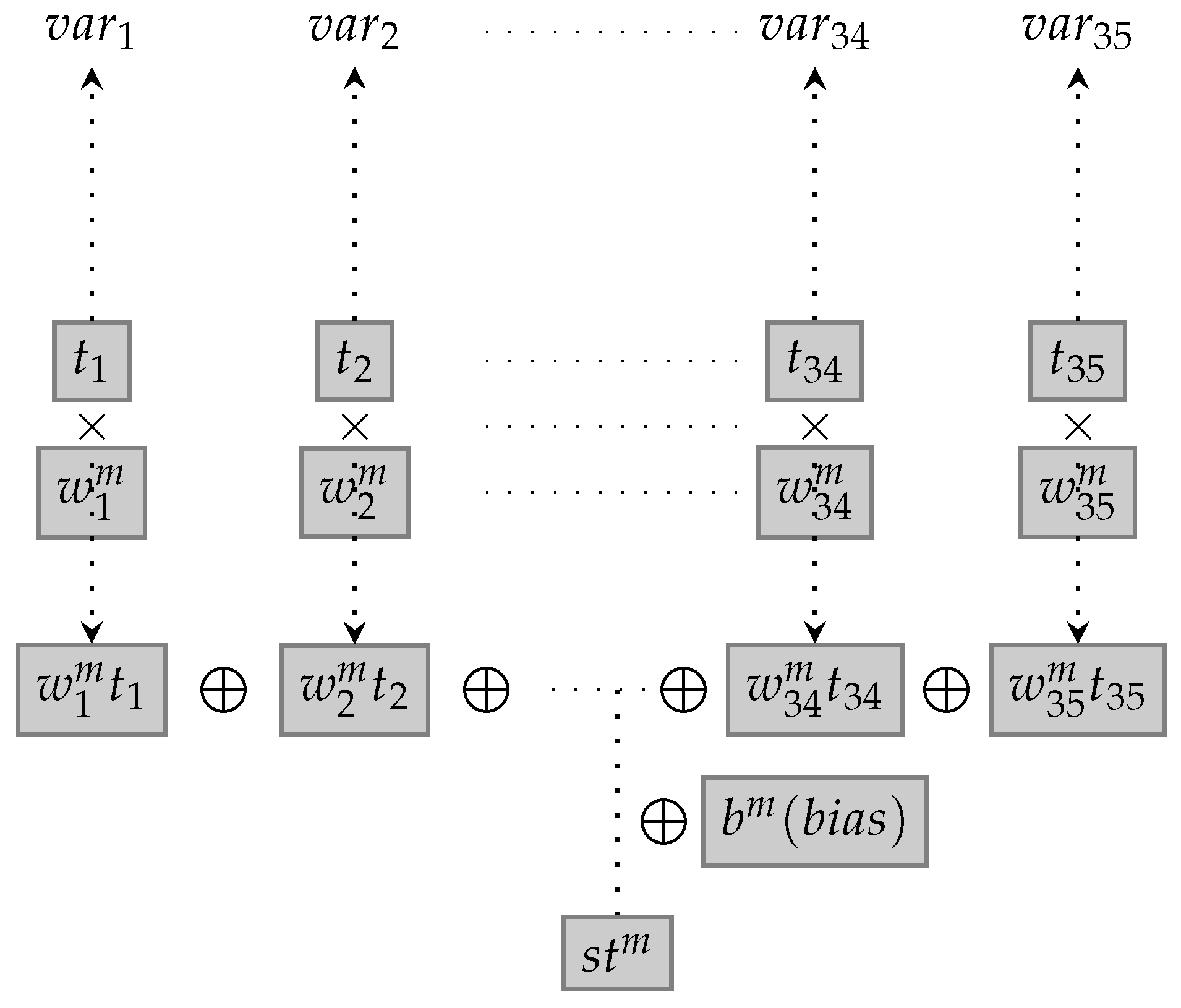

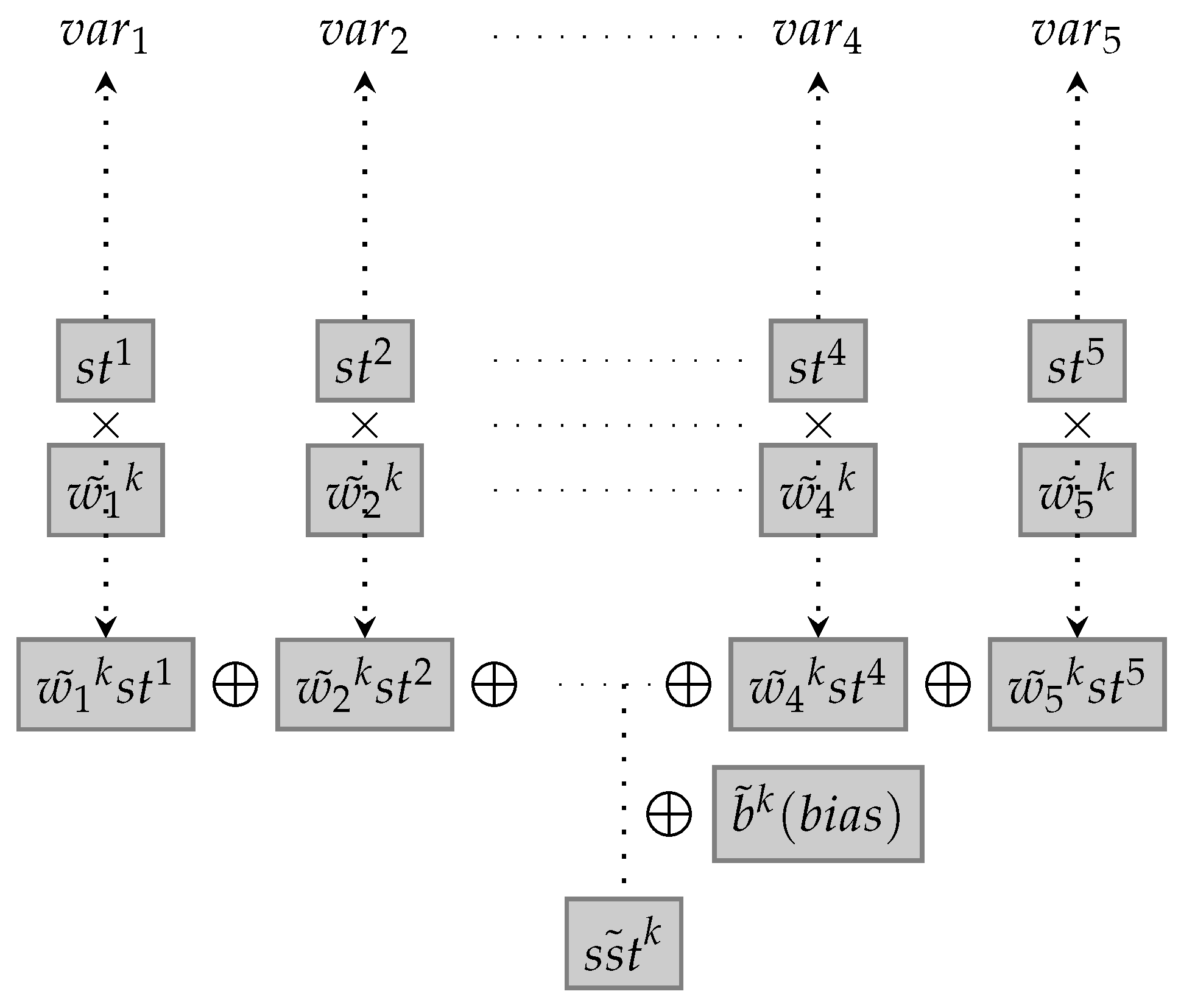

Figure 4 depicts Conv1D(·), where "var" denotes a variable: 35 independent variables, or features, are fed into Conv1D(·), which yields 5 dependent variables, or outputs.

The input data $x$ contain 35 elements, i.e., 35-dimensional information. The 1st convolution layer compresses these 35 pieces of information into 5 dimensions. This suggests that the layer extracts latent patterns from the 35 pieces of information and groups them into 5 categories. In other words, the 1st convolution layer is designed to find 5 types of latent patterns among the 35 types of information delivered by the multi-head attention layer.

Next, the set of all parameters of the 2nd convolution layer is $\bigcup_{j=1}^{35} \{ w^{(2)}_{1,j}, \ldots, w^{(2)}_{5,j}, b^{(2)}_j \}$, and the number of parameters equals $35 \times (5 + 1) = 210$.

Similarly, if an input $z$ consists of $z_1, \ldots, z_5$, Conv1D(·) produces

$$
u_j = w^{(2)}_{1,j} z_1 + \cdots + w^{(2)}_{5,j} z_5 + b^{(2)}_j, \qquad j = 1, \ldots, 35.
$$

Thus, the 2nd convolution layer plays a pivotal role in expanding the compressed 5-dimensional information back to 35 pieces of information. Figure 5 describes this Conv1D(·): 5 independent variables, or features, are fed into Conv1D(·), which produces 35 dependent variables, or outputs.
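The compress-then-expand pair of convolutions can be sketched as two per-position linear maps, 35 → 5 → 35. The weights are hypothetical and untrained, the number of positions is an assumption, and activations are omitted so that the bottleneck is easy to see.

```python
import numpy as np

rng = np.random.default_rng(4)
in_channels, filters = 35, 5
# Hypothetical untrained weights for the two kernel-size-1 Conv1D layers.
w1, b1 = rng.normal(size=(in_channels, filters)), rng.normal(size=filters)      # 35 -> 5
w2, b2 = rng.normal(size=(filters, in_channels)), rng.normal(size=in_channels)  # 5 -> 35

x = rng.normal(size=(10, in_channels))  # 10 positions (illustrative), 35 channels each
z = x @ w1 + b1                         # 1st layer: compress to 5 dimensions
u = z @ w2 + b2                         # 2nd layer: expand back to 35 dimensions

print(z.shape, u.shape)                 # (10, 5) (10, 35)
print(w2.size + b2.size)                # 210
```

Because every 35-dimensional output is produced from only 5 intermediate values, the outputs with the bias removed, `u - b2`, have rank at most 5, which is the sense in which the first layer compresses and the second expands.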