2.1. The Original Artificial Gorilla Troops Optimization Algorithm

The artificial gorilla troops optimizer (GTO) [16] is a recent intelligent optimization algorithm that mathematically models the collective social behavior of gorillas. It searches for the optimum by simulating behaviors such as group migration and courtship, and it offers strong optimization ability and fast convergence. GTO consists of an exploration phase and an exploitation phase, and the exploitation phase comprises two behaviors: following the silverback gorilla and competing for adult females.

First, GTO is initialized by setting the parameters $N$, $T$, $p$, $\beta$, and $W$. Here, $N$ is the number of gorillas in the population; $T$ is the maximum number of iterations; $p$, with a value between 0 and 1, controls the probability of migrating to an unknown location; $\beta$, set to 3, is used to calculate the intensity of the gorillas' violent behavior; and $W$, set to 0.5, selects between the two mechanisms of the exploitation phase. The gorilla population is then randomly initialized in the search space.

Second, during the exploration phase, the silverback gorilla leads the other gorillas to live in groups in the natural environment. This phase uses three mechanisms for global search: migrating to unknown locations, migrating to known locations, and migrating to other gorillas' locations. Mechanism 1 lets gorillas explore the space randomly and is executed when $rand < p$; Mechanism 2 strengthens the algorithm's exploration of the space and is executed when $rand \ge 0.5$; Mechanism 3 strengthens the algorithm's ability to escape local optima and is executed when $rand < 0.5$. The exploration phase is given by equations (1) to (6):

$$
GX(t+1)=\begin{cases}
(UB-LB)\times r_1+LB, & rand<p\\
(r_2-C)\times X_r(t)+L\times H, & rand\ge 0.5\\
X(t)-L\times\left(L\times\left(X(t)-GX_r(t)\right)+r_3\times\left(X(t)-GX_r(t)\right)\right), & rand<0.5
\end{cases}\tag{1}
$$

$$C=F\times\left(1-\frac{t}{T}\right)\tag{2}$$

$$F=\cos(2\times r_4)+1\tag{3}$$

$$L=C\times l\tag{4}$$

$$H=Z\times X(t)\tag{5}$$

$$Z\in[-C,C]\tag{6}$$

In these formulas, $X(t)$ and $GX(t+1)$ are the current position of a gorilla and its candidate position in the next iteration, respectively, while $X_r(t)$ and $GX_r(t)$ are the positions of randomly selected gorillas. $t$ denotes the current iteration number and $T$ the maximum number of iterations. $r_1$, $r_2$, $r_3$, $r_4$, and $rand$ are random numbers in $[0, 1]$, while $l$ is a random number in $[-1, 1]$. $UB$ and $LB$ are the upper and lower bounds of the variables, respectively. $Z$ is a random number in $[-C, C]$, where $C$ varies strongly in the early iterations and decreases gradually later on. $L$ models the leadership of the silverback gorilla, which may make wrong decisions owing to inexperience in finding food or managing the group. At the end of the exploration phase, the fitness values of $GX$ and $X$ are calculated and compared; if the fitness value of $GX$ is smaller, $GX$ replaces $X$.
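The exploration step in equations (1) to (6) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: positions are plain lists, the function name `gto_exploration` is hypothetical, the three mechanism conditions use independent random draws, $Z$ is drawn per dimension, and clipping to the bounds is left out.

```python
import math
import random


def gto_exploration(X, GX, i, t, T, lb, ub, p=0.03):
    """Candidate position GX(t+1) for gorilla i, following Eqs. (1)-(6).

    X: current positions, GX: candidate positions (lists of lists).
    lb, ub: per-dimension bounds. Clipping to the bounds is omitted.
    """
    dim = len(X[i])
    F = math.cos(2 * random.random()) + 1     # Eq. (3), r4 ~ U[0, 1]
    C = F * (1 - t / T)                       # Eq. (2): shrinks as t -> T
    L = C * random.uniform(-1, 1)             # Eq. (4), l ~ U[-1, 1]

    if random.random() < p:
        # Mechanism 1: migrate to an unknown (uniformly random) location
        return [lb[j] + (ub[j] - lb[j]) * random.random() for j in range(dim)]

    if random.random() >= 0.5:
        # Mechanism 2: move relative to a random gorilla X_r, with H = Z * X(t)
        Xr = random.choice(X)
        r2 = random.random()
        return [(r2 - C) * Xr[j] + L * random.uniform(-C, C) * X[i][j]  # Z in [-C, C]
                for j in range(dim)]

    # Mechanism 3: move relative to a random candidate GX_r
    GXr = random.choice(GX)
    r3 = random.random()
    return [X[i][j] - L * (L * (X[i][j] - GXr[j]) + r3 * (X[i][j] - GXr[j]))
            for j in range(dim)]
```

Setting `p=1.0` forces Mechanism 1, which is a quick way to check that random migration stays inside `[lb, ub]`.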

Finally, the exploitation phase uses two behavioral mechanisms: following the silverback gorilla and competing for adult females, and the parameter $W$ selects between them. If $C \ge W$, the gorillas follow the silverback gorilla: as the leader of the group, the silverback guides the gorillas to food sources and ensures the group's safety, and all gorillas in the group follow its decisions. If $C < W$, competition occurs: the silverback may age and die, allowing a blackback gorilla to become the leader, or other male gorillas may fight the silverback for control of the group.

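When $C \ge W$, the mechanism for following the silverback gorilla is described by the following equations:

$$GX(t+1)=L\times M\times\left(X(t)-X_{silverback}\right)+X(t)\tag{7}$$

$$M=\left(\left|\frac{1}{N}\sum_{i=1}^{N}GX_i(t)\right|^{g}\right)^{\frac{1}{g}}\tag{8}$$

$$g=2^{L}\tag{9}$$

In these equations, $X_{silverback}$ is the position of the silverback gorilla (the best position found so far), $GX_i(t)$ is the position of each candidate gorilla in iteration $t$, and $N$ is the total number of gorillas.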

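When $C < W$, the competition-for-adult-females mechanism is given by Equation (10):

$$
\begin{aligned}
GX(t+1)&=X_{silverback}-\left(X_{silverback}\times Q-X(t)\times Q\right)\times A\\
Q&=2\times r_5-1\\
A&=\beta\times E\\
E&=\begin{cases}N_1, & rand\ge 0.5\\ N_2, & rand<0.5\end{cases}
\end{aligned}\tag{10}
$$

where $Q$ represents the intensity (impact force) of gorilla competition; $A$ is the coefficient of the degree of competition; and $E$ represents the effect of violence on the dimensions of the solution. $r_5$ and $rand$ are random numbers in $[0, 1]$. When $rand \ge 0.5$, $E$ is a vector of normally distributed random values with one entry per problem dimension ($N_1$); otherwise, $E$ is a single normally distributed random value ($N_2$).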

At the end of the exploitation phase, a group formation operation is performed: the fitness values of all individuals are evaluated, and if $F(GX) < F(X)$, the $GX$ individual replaces the corresponding $X$ individual. The best solution (the one with the minimum fitness value) in the entire population is taken as the silverback gorilla.
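A minimal Python sketch of the exploitation phase (equations (7) to (10)) and the group formation step follows. The function names are hypothetical; $C$ is assumed to be passed in from equation (2), `beta=3.0` mirrors the value used for $\beta$ in Section 2.3, minimization is assumed, and clipping to the bounds is omitted.

```python
import random


def gto_exploitation(X, GX, i, silverback, C, W, beta=3.0):
    """Candidate position for gorilla i in one exploitation step, Eqs. (7)-(10).

    C comes from Eq. (2) for the current iteration; W is the switch parameter;
    beta scales the violence term. Clipping to the bounds is omitted.
    """
    dim, N = len(X[i]), len(GX)
    L = C * random.uniform(-1, 1)                       # Eq. (4)
    if C >= W:
        # Follow the silverback, Eqs. (7)-(9)
        g = 2 ** L                                      # Eq. (9), always > 0
        M = [(abs(sum(gx[j] for gx in GX) / N) ** g) ** (1 / g)
             for j in range(dim)]                       # Eq. (8), per dimension
        return [L * M[j] * (X[i][j] - silverback[j]) + X[i][j]
                for j in range(dim)]                    # Eq. (7)
    # Compete for adult females, Eq. (10)
    Q = 2 * random.random() - 1                         # Q = 2*r5 - 1
    if random.random() >= 0.5:
        E = [random.gauss(0, 1) for _ in range(dim)]    # one normal value per dimension
    else:
        E = [random.gauss(0, 1)] * dim                  # a single shared normal value
    return [silverback[j] - (silverback[j] * Q - X[i][j] * Q) * (beta * E[j])
            for j in range(dim)]                        # A = beta * E


def group_formation(X, GX, f):
    """Replace X_i by GX_i when f(GX_i) < f(X_i); return the best position (silverback)."""
    for i in range(len(X)):
        if f(GX[i]) < f(X[i]):
            X[i] = GX[i][:]
    return min(X, key=f)[:]
```

One property worth noting: a gorilla already at the silverback's position is left unchanged by both mechanisms, since every move is proportional to $X(t) - X_{silverback}$.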

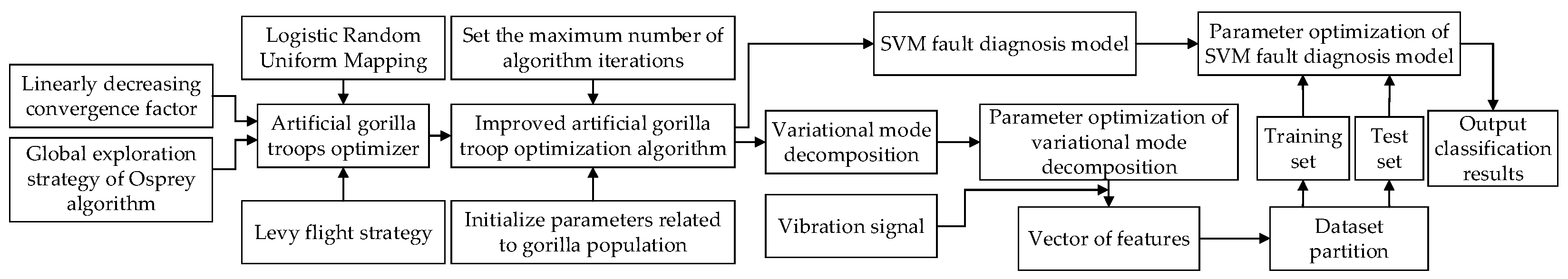

2.2. Improved Artificial Gorilla Troops Optimization Algorithm (OLGTO)

The iterative optimization process of GTO shows that the algorithm avoids local optima well and achieves high convergence accuracy and speed. However, in the early iterations, the large number of parameters in its update formulas delays information exchange among gorillas, which weakens the algorithm's ability to escape local optima and reduces its convergence speed and accuracy in later iterations. To address these issues, the algorithm is improved in four aspects:

(1) Initialization with Logistic Chaotic Mapping: Employing Logistic chaotic mapping to enhance the diversity of the gorilla population, ensuring a more uniform and random distribution within the defined search space.

(2) Improvement of the Weight Factor: Modifying the weight factor to balance the algorithm's global search capabilities and its ability to escape local optima.

(3) Osprey Optimization Algorithm: Replacing the second formula of Equation (1) in the first (exploration) stage of the original GTO with the global exploration strategy of the Osprey Optimization Algorithm. This avoids the delayed information exchange caused by the many parameters in the original formula and thereby enhances the algorithm's global search capability.

(4) Levy Flight Strategy: Applying Levy flight to the gorilla position update in the exploitation phase to help the algorithm escape local optima.

2.2.1. Initializing the Gorilla Population with Logistic Chaotic Mapping

Logistic chaotic mapping is a simple nonlinear mapping with complex dynamic behavior that is used to generate chaotic sequences. Compared with other chaotic mappings, it has a relatively simple expression and strong adaptability, and the sequences it generates stay bounded instead of increasing or decreasing without limit [17].

Using Logistic chaotic mapping to initialize the population can enhance population diversity, thereby improving the optimization performance and global search capability of the algorithm. The Logistic chaotic sequence is mapped to the search space through equations (11)-(12):

$$x_{n+1}=\mu\,x_n\left(1-x_n\right)\tag{11}$$

$$X_n=a+(b-a)\,x_n\tag{12}$$

where $\mu$ is the control parameter of the system. When $\mu \in [3.57, 4]$, the system enters a chaotic state [18]; in this study, $\mu$ is set to 4. The initial value $x_0$ is a random number in $(0, 1)$; $x_n$ is the chaotic sequence generated by equation (11); $X_n$ is the chaotic sequence mapped to the search space; and $a$ and $b$ are the lower and upper bounds of the search space, respectively.

First, a chaotic sequence of length 30 (population size 15 times search-space dimension 2) is generated by iterating equation (11). Next, the sequence is mapped into the two-dimensional search space with equation (12), using the lower and upper bounds of each dimension. Finally, the initial population is formed as in equation (13):

$$
X=\begin{bmatrix}
X_{1,1} & X_{1,2}\\
X_{2,1} & X_{2,2}\\
\vdots & \vdots\\
X_{15,1} & X_{15,2}
\end{bmatrix}\tag{13}
$$

where $X_{i,j}$ is the position of the $i$-th gorilla in the $j$-th dimension.
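The Logistic initialization can be sketched as below. The sketch assumes the $N \times D$ chaotic values are consumed row by row (the first $D$ values form gorilla 1, and so on); this ordering and the helper name `logistic_init` are illustrative assumptions. With $\mu = 4$, starting values such as 0, 0.25, 0.5, and 0.75 should be avoided because they collapse onto fixed points (0.25 maps to the fixed point 0.75, and 0.5 maps to 1 and then to 0).

```python
import random


def logistic_init(N, lb, ub, mu=4.0, x0=None):
    """Initial population of N gorillas from a Logistic chaotic sequence.

    lb, ub: per-dimension lower/upper bounds (a and b in Eq. (12)).
    x0: starting value in (0, 1); avoid 0, 0.25, 0.5, 0.75 when mu = 4.
    """
    D = len(lb)
    x = random.random() if x0 is None else x0
    seq = []
    for _ in range(N * D):                    # one chaotic value per coordinate
        x = mu * x * (1 - x)                  # Eq. (11)
        seq.append(x)
    # Eq. (12): map each value into its dimension's range, row by row -> N x D
    return [[lb[j] + (ub[j] - lb[j]) * seq[i * D + j] for j in range(D)]
            for i in range(N)]
```

For the 15 by 2 case in the text, a call such as `logistic_init(15, [0.0, -1.0], [10.0, 1.0])` uses placeholder bounds; the actual parameter ranges of the application would be substituted.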

2.2.2. Linearly Decreasing Weight Factors

Because GTO cannot effectively control the step size during iteration, once an optimal solution is found the other individuals quickly converge toward it, which leads to premature convergence to a local optimum and loses the opportunity to explore further for the global optimum. Using the linearly decreasing weight factor in equation (14) improves the balance between the exploration and exploitation behaviors of GTO [19]. This lets the algorithm explore the search space thoroughly while also performing fine-grained exploitation near high-quality solutions, improving its adaptability and robustness across different problems.

$$w(t)=w_{\max}-\left(w_{\max}-w_{\min}\right)\frac{t}{T}\tag{14}$$

where $w_{\max}$ and $w_{\min}$ are the initial and final weight factors, set to 1.5 and 0.4, respectively, and $t$ and $T$ are the current iteration number and the maximum number of iterations. The weight factor starts high to maintain strong global search in the early stages and decreases linearly as the iterations progress, strengthening the algorithm's local exploitation.
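Equation (14) is a one-line schedule. The sketch below uses the initial and final weights 1.5 and 0.4 from the text, and it assumes (based on the GTO setting $W = 0.5$ in Section 2.3 being replaced by a linearly decreasing strategy in OLGTO) that the decreasing weight takes the place of the fixed $W$ in the $C \ge W$ switch; `follows_silverback` is a hypothetical helper, and the paper's exact placement may differ.

```python
def linear_weight(t, T, w_max=1.5, w_min=0.4):
    """Eq. (14): weight decreasing linearly from w_max (t = 0) to w_min (t = T)."""
    return w_max - (w_max - w_min) * t / T


def follows_silverback(C, t, T):
    """Assumed use: the decreasing weight replaces the fixed W in the C >= W test."""
    return C >= linear_weight(t, T)


if __name__ == "__main__":
    for t in (0, 250, 500, 750, 1000):
        print(t, round(linear_weight(t, 1000), 3))
```

Under this assumption, early iterations (high weight) favor the competition mechanism unless $C$ is large, and late iterations (low weight) make following the silverback more likely.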

2.2.3. Integrated Osprey Algorithm Global Exploration Strategy

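In the original GTO, the second formula of equation (1) in the first-stage exploration strategy contains many parameters, which slows information exchange among gorillas and in turn affects the global search speed and convergence performance of the algorithm. To address this, the second formula of equation (1) in the first-stage global exploration strategy is replaced with the more efficient global exploration strategy of the Osprey Optimization Algorithm [20]. This introduces randomness into the global search process and thereby improves the convergence performance of GTO. The global exploration strategy of the Osprey Optimization Algorithm is described by equations (15)-(17):

$$FP_i=\left\{X_k \mid k\in\{1,2,\dots,N\}\wedge F_k<F_i\right\}\cup\left\{X_{best}\right\}\tag{15}$$

$$x_{i,j}^{P1}=x_{i,j}+r_{i,j}\cdot\left(SF_{i,j}-I_{i,j}\cdot x_{i,j}\right)\tag{16}$$

$$
x_{i,j}^{P1}=\begin{cases}
x_{i,j}^{P1}, & lb_j\le x_{i,j}^{P1}\le ub_j\\
lb_j, & x_{i,j}^{P1}<lb_j\\
ub_j, & x_{i,j}^{P1}>ub_j
\end{cases}\tag{17}
$$

where $FP_i$ is the set of fish positions for the $i$-th osprey (the positions of members with better fitness $F$, plus the best position $X_{best}$); $x_{i,j}^{P1}$ is the new position of the $i$-th osprey in the $j$-th dimension in the first stage; $SF_{i,j}$ is the fish selected from $FP_i$ by the $i$-th osprey, in the $j$-th dimension; $r_{i,j}$ is a random number in $[0, 1]$; $I_{i,j}$ is a random number from the set $\{1, 2\}$; and $ub_j$ and $lb_j$ are the upper and lower bounds of the search space, respectively. The updated gorilla position formula is as follows:

$$
GX(t+1)=\begin{cases}
(UB-LB)\times r_1+LB, & rand<p\\
X(t)+r\cdot\left(SF-I\cdot X(t)\right), & rand\ge 0.5\\
X(t)-L\times\left(L\times\left(X(t)-GX_r(t)\right)+r_3\times\left(X(t)-GX_r(t)\right)\right), & rand<0.5
\end{cases}\tag{18}
$$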
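The Osprey-based move that replaces the second case of the gorilla exploration update can be sketched as follows. The sketch assumes minimization, selects one fish per osprey, and draws $r_{i,j}$ and $I_{i,j}$ independently for every dimension, matching the i-th osprey / j-th dimension indexing in the text; the function name `ooa_exploration` is hypothetical, and the other two cases of the gorilla update are unchanged.

```python
import random


def ooa_exploration(X, i, fitness, best, lb, ub):
    """Osprey-style candidate for gorilla i (replaces the 2nd case of Eq. (1)).

    fitness[k] is the fitness of X[k] (minimization); best is the best position.
    """
    # Fish positions: members better than X_i, plus the best position
    fish = [X[k] for k in range(len(X)) if fitness[k] < fitness[i]]
    fish.append(best)
    SF = random.choice(fish)                        # selected fish for this osprey
    new = []
    for j in range(len(X[i])):
        r = random.random()                         # r_{i,j} in [0, 1]
        I = random.choice((1, 2))                   # I_{i,j} in {1, 2}
        x = X[i][j] + r * (SF[j] - I * X[i][j])     # position update
        new.append(min(max(x, lb[j]), ub[j]))       # clip to [lb_j, ub_j]
    return new
```

Compared with the original second case, this move has only two random quantities per dimension and always pulls toward a better solution, which is the reduced-parameter behavior the text motivates.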

2.2.4. Levy Flight Strategy

In the exploitation phase of GTO, the population leader (the silverback gorilla) guides the population toward food sources, so the accuracy of the silverback's search is particularly important at this stage. Incorporating the Levy flight strategy [21] into the gorilla position update formula of the exploitation phase (equation (7)) therefore helps the algorithm exploit known optimal solutions while exploring new candidate solutions. Levy flight draws step lengths from a heavy-tailed distribution, as described by equation (19). It produces both small and large jumps: large jumps let the algorithm explore new regions of the search space and escape local optima, while small jumps allow detailed search in known high-quality regions. This multi-scale search makes the algorithm more flexible on complex problems.

$$s=\frac{u}{|v|^{1/\lambda}}\tag{19}$$

where $\lambda$ is the power-law exponent of the Levy distribution, typically in the range $0<\lambda\le 2$, and set to 1.5 in this study; $u\sim N(0,\sigma_u^2)$ and $v\sim N(0,1)$. The standard deviation $\sigma_u$ of $u$ is calculated using equation (20):

$$\sigma_u=\left[\frac{\Gamma(1+\lambda)\,\sin\left(\frac{\pi\lambda}{2}\right)}{\Gamma\left(\frac{1+\lambda}{2}\right)\,\lambda\,2^{\frac{\lambda-1}{2}}}\right]^{1/\lambda}\tag{20}$$

where $\Gamma$ is the gamma function.
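The Levy step described above appears to follow Mantegna's method: $u$ is drawn with the standard deviation from the gamma-function formula, $v$ is standard normal, and the step is $u / |v|^{1/\lambda}$. A minimal standard-library sketch follows; the function names are hypothetical, and how the step enters the position update is defined by equation (21) and is not reproduced here.

```python
import math
import random


def levy_sigma(lam=1.5):
    """Standard deviation of u in Mantegna's method (Eq. (20))."""
    num = math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
    den = math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2)
    return (num / den) ** (1 / lam)


def levy_step(dim, lam=1.5):
    """Heavy-tailed step vector s = u / |v|^(1/lam) (Eq. (19))."""
    sigma_u = levy_sigma(lam)
    steps = []
    for _ in range(dim):
        u = random.gauss(0, sigma_u)      # u ~ N(0, sigma_u^2)
        v = random.gauss(0, 1)            # v ~ N(0, 1)
        steps.append(u / abs(v) ** (1 / lam))
    return steps
```

For $\lambda = 1.5$, `levy_sigma` gives about 0.697; most steps are small, but a small $|v|$ occasionally produces a very large jump, which is the heavy-tail behavior the text relies on.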

The improved gorilla position update formula is given by equation (21) as follows:

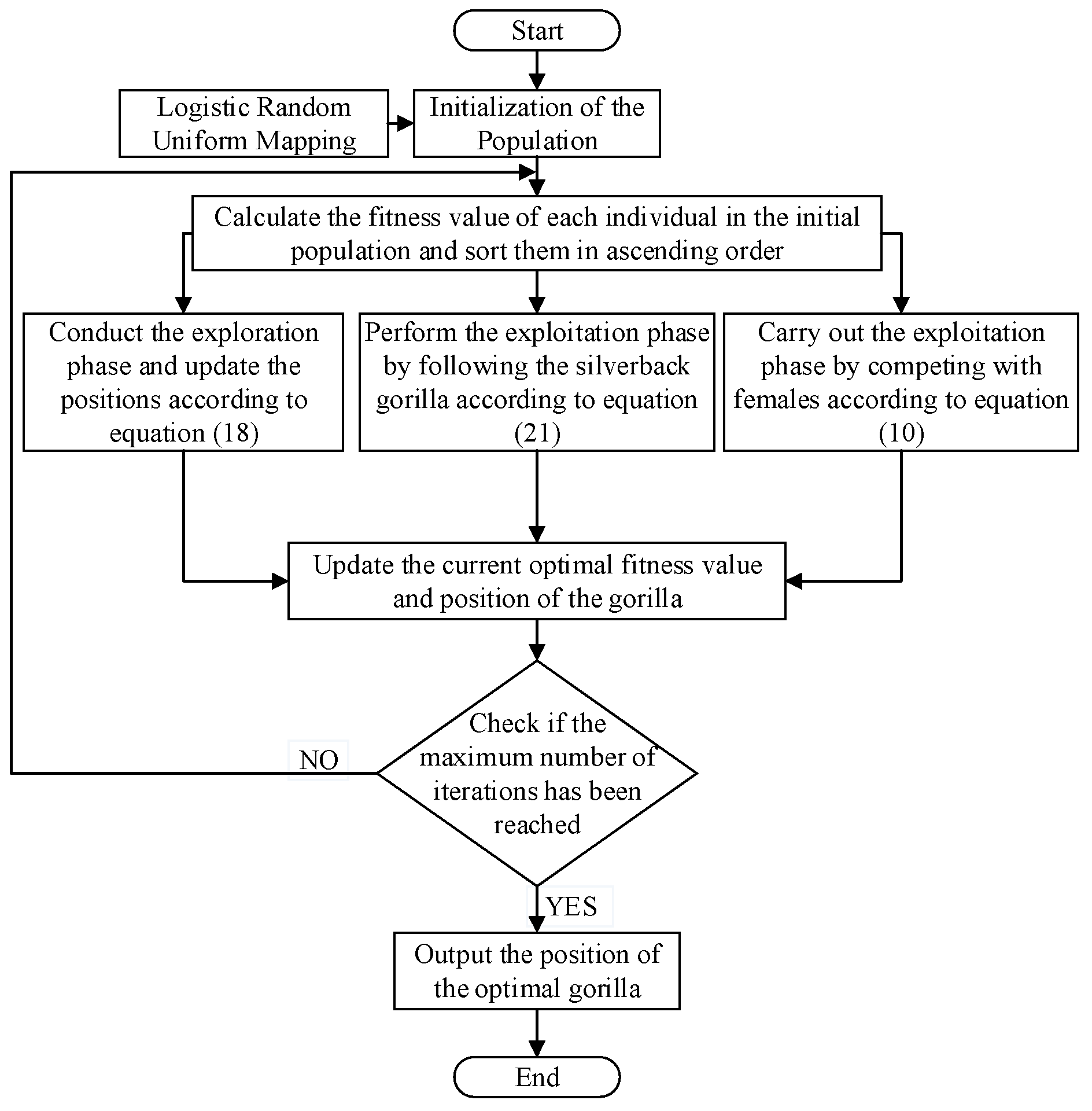

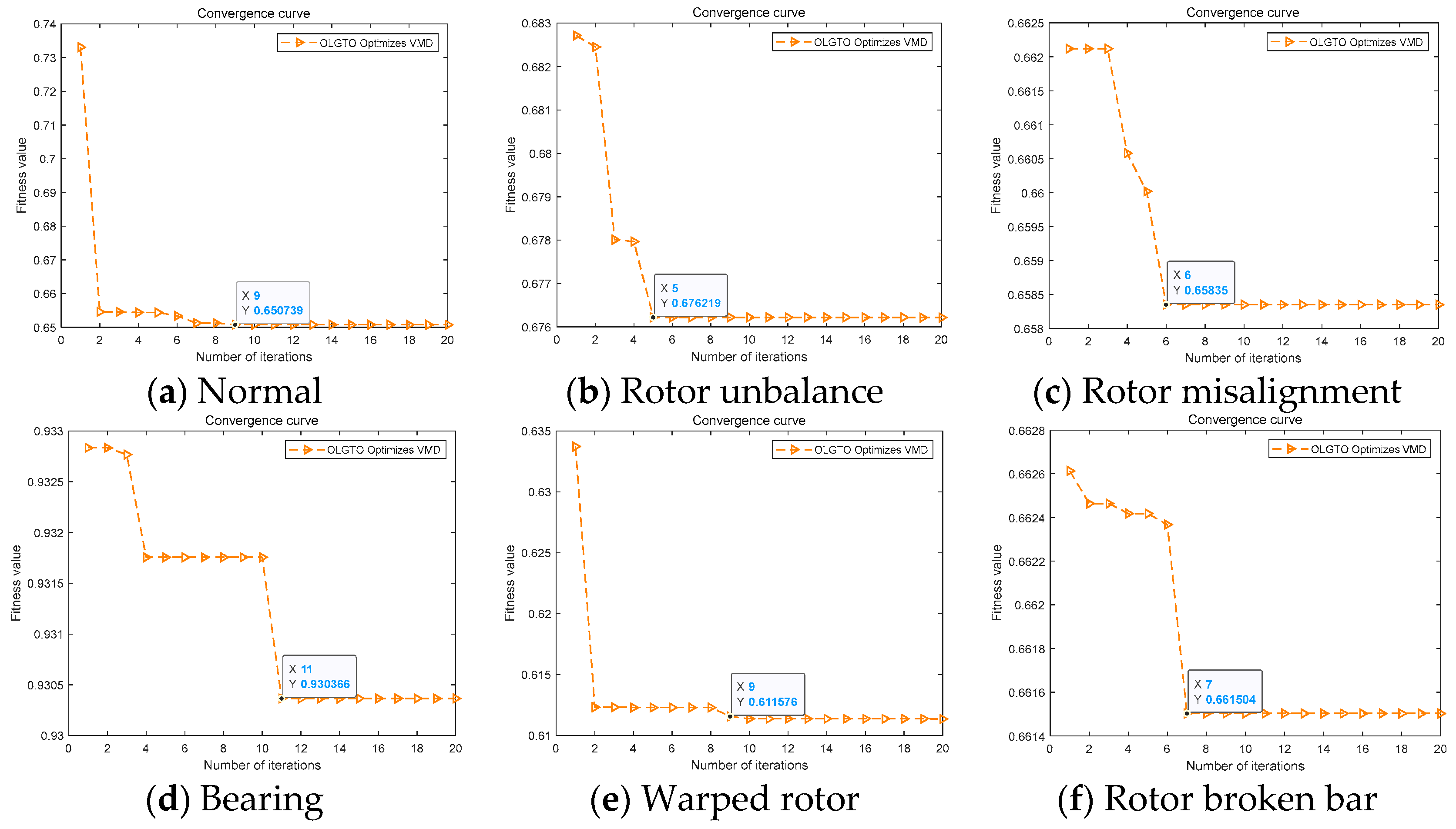

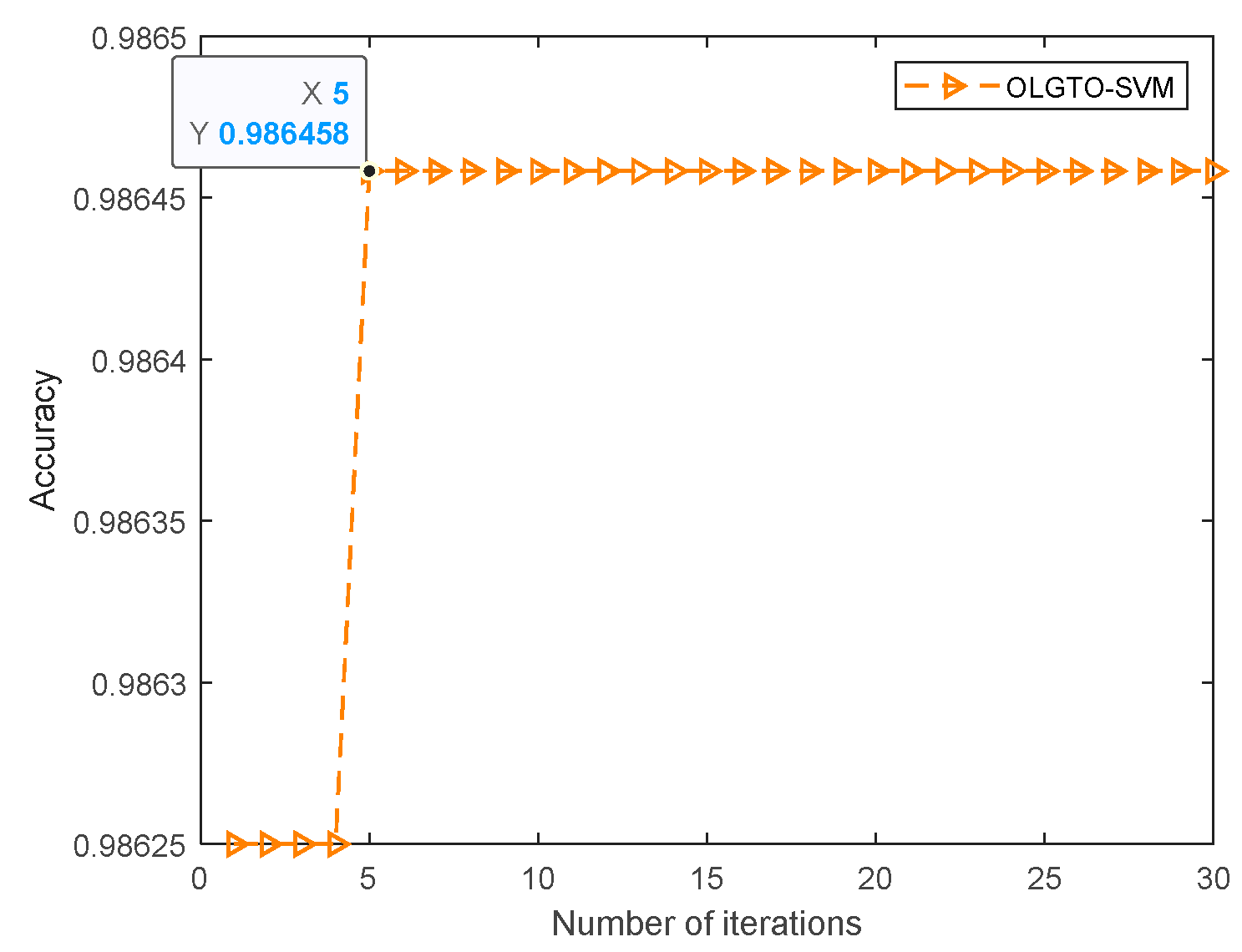

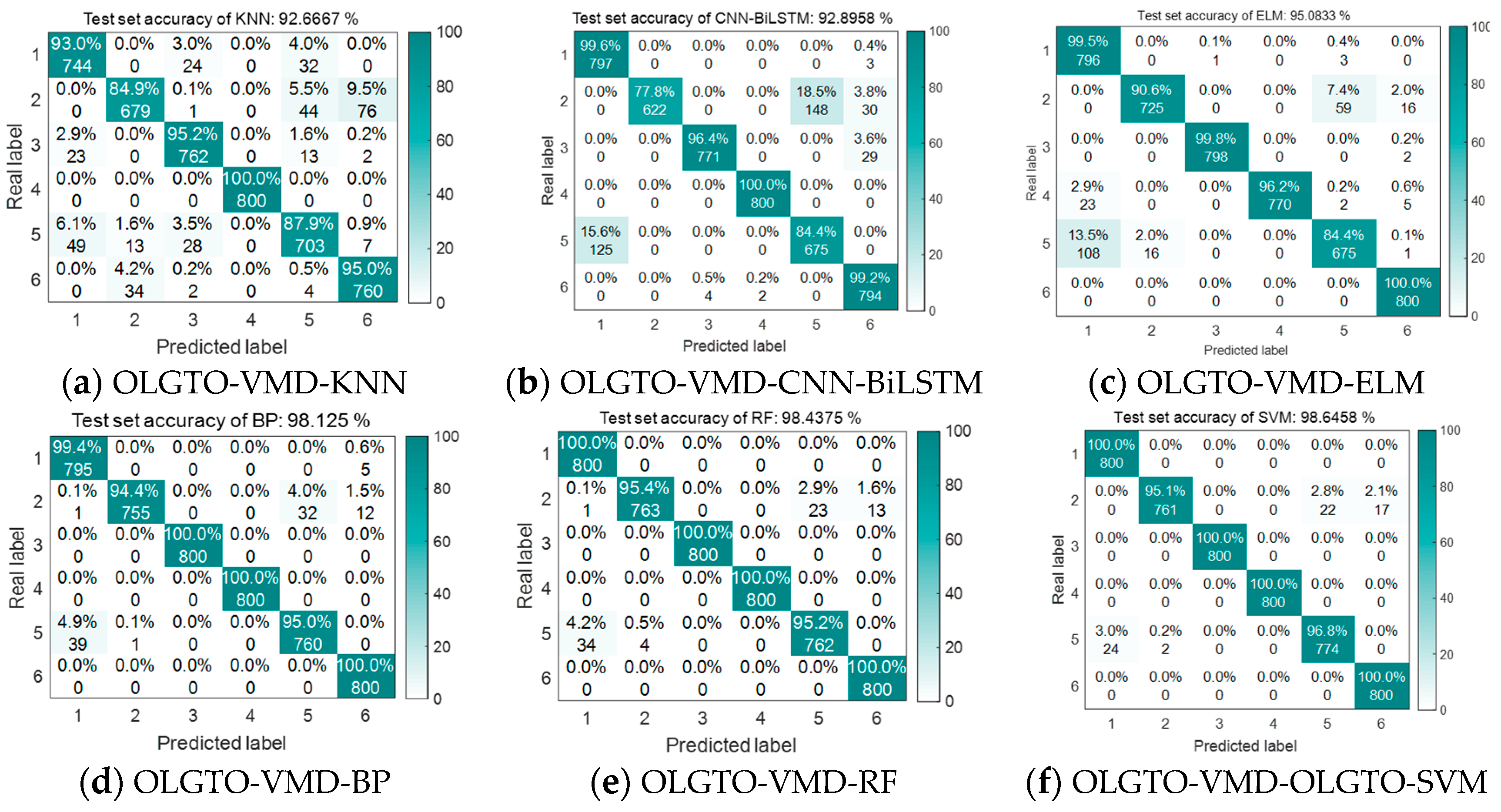

This paper improves the GTO algorithm with the above strategies, balancing its exploration and exploitation capabilities and increasing its convergence speed. The improved algorithm is used to optimize the parameters of VMD and SVM. The specific process of the improved GTO algorithm is shown in Figure 1:

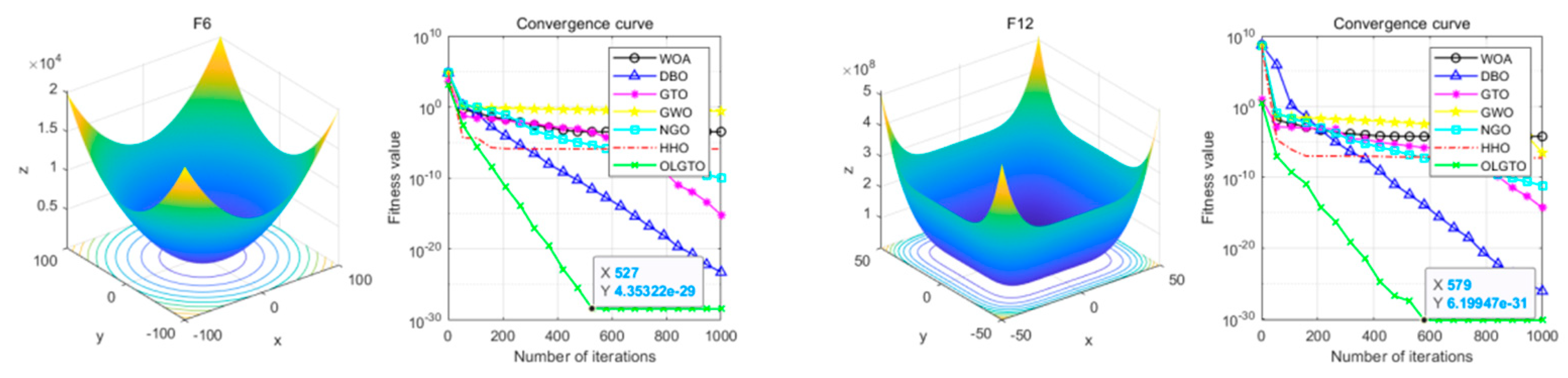

2.3. Improved Algorithm Testing

To validate the effectiveness and superiority of the improved GTO algorithm (OLGTO), it is tested on the unimodal (single-peaked) function F6 and the multimodal (multi-peaked) function F12 from the CEC2005 benchmark function set, and compared with the Whale Optimization Algorithm (WOA), the Dung Beetle Optimizer (DBO), the Grey Wolf Optimizer (GWO), Northern Goshawk Optimization (NGO), Harris Hawks Optimization (HHO), and the original GTO algorithm. Functions F6 and F12 are defined by equations (22) and (23), respectively.

$$F_6(x)=\sum_{i=1}^{n}\left(\left\lfloor x_i+0.5\right\rfloor\right)^2\tag{22}$$

$$F_{12}(x)=\frac{\pi}{n}\left\{10\sin^2(\pi y_1)+\sum_{i=1}^{n-1}(y_i-1)^2\left[1+10\sin^2(\pi y_{i+1})\right]+(y_n-1)^2\right\}+\sum_{i=1}^{n}u(x_i,10,100,4)\tag{23}$$

with $y_i=1+\frac{x_i+1}{4}$ and

$$
u(x_i,a,k,m)=\begin{cases}
k(x_i-a)^m, & x_i>a\\
0, & -a\le x_i\le a\\
k(-x_i-a)^m, & x_i<-a
\end{cases}
$$

Both functions have dimension $n = 30$ and a global minimum of 0; their search ranges are $[-100, 100]$ and $[-50, 50]$, respectively.

The population size and maximum number of iterations of all seven algorithms are set to 100 and 1000, respectively. In OLGTO and GTO, the exploration probability $p$ and the control parameter $\beta$ are set to 0.03 and 3, respectively. The weight factor $W$ of GTO is set to 0.5, whereas OLGTO uses the linearly decreasing weight factor and the Levy flight strategy, with initial and final weight factors of 1.5 and 0.4 and a Levy exponent of 1.5. The convergence results of the tested algorithms are shown in Figure 2.

Over the entire search space, a unimodal function has only one global optimum and is mainly used to evaluate an algorithm's exploitation capability, i.e., its ability to converge to the optimal solution; because such functions are relatively simple, they measure convergence speed and accuracy clearly. Multimodal functions, by contrast, contain multiple local optima and are well suited to assessing exploration capability: complex multimodal functions test an algorithm's ability to avoid local optima and find the global optimum, and thus its balance between exploration and exploitation.

The comparative results in Figure 2 show that on the unimodal function, OLGTO consistently converges faster and reaches a higher final accuracy than the other six algorithms. On the multimodal function, all algorithms except DBO and OLGTO (namely WOA, GTO, GWO, NGO, and HHO) slow down markedly after 200 iterations, become trapped in local optima, and end with lower convergence accuracy. OLGTO also slows noticeably around the 400th iteration, indicating a tendency to stall in a local optimum, but it quickly resumes converging and reaches the optimal solution by the 579th iteration, which demonstrates a good balance between exploration and exploitation and the ability to escape local optima. In summary, OLGTO adapts better than the other algorithms and effectively avoids local optima, which supports the effectiveness and superiority of the improved algorithm.