Submitted: 05 August 2024

Posted: 14 August 2024


Abstract

Keywords:

1. Introduction

2. Preliminaries

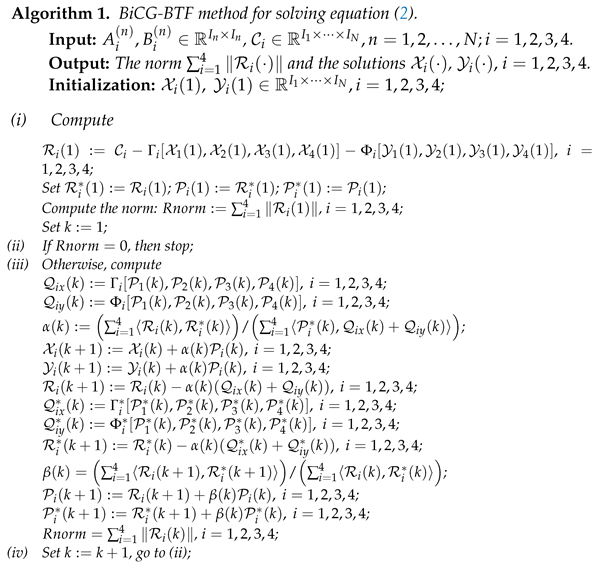

3. An Iterative Algorithm for Solving Problems 1.1 and 1.2

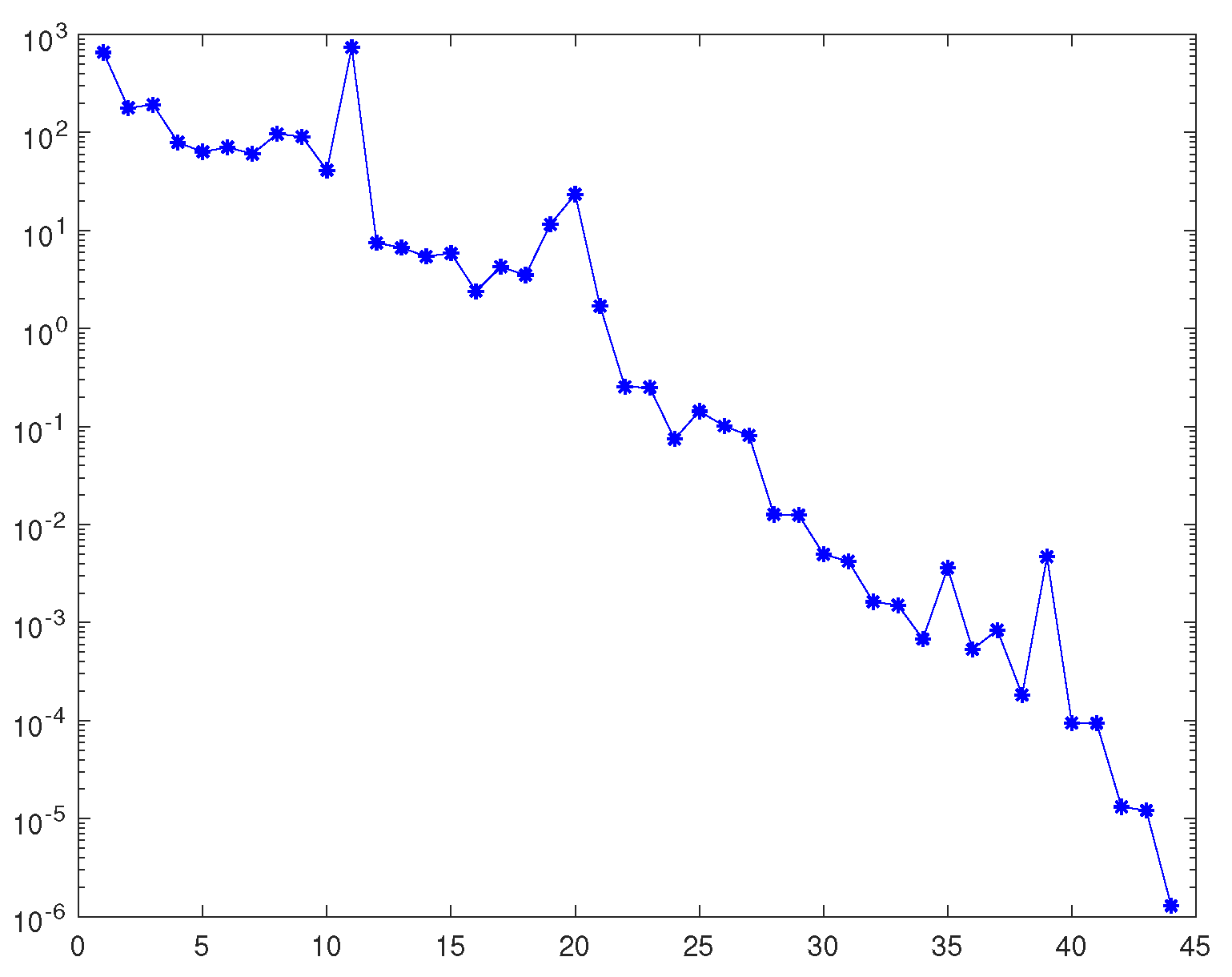

4. Numerical Examples

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ahmadi-Asl, S.; Beik, F.P.A. An efficient iterative algorithm for quaternionic least-squares problems over the generalized η-(anti-)bi-Hermitian matrices. Linear Multilinear Algebra 2017, 65, 1743–1769.

- Ahmadi-Asl, S.; Beik, F.P.A. Iterative algorithms for least-squares solutions of a quaternion matrix equation. J. Appl. Math. Comput. 2017, 53, 95–127.

- Bai, Z.-Z.; Golub, G.H.; Ng, M.K. Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 2003, 24, 603–626.

- Ballani, J.; Grasedyck, L. A projection method to solve linear systems in tensor format. Numer. Linear Algebra Appl. 2013, 20, 27–43.

- Bank, R.E.; Chan, T.-F. An analysis of the composite step biconjugate gradient method. Numer. Math. 1993, 66, 295–319.

- Bank, R.E.; Chan, T.-F. A composite step bi-conjugate gradient algorithm for nonsymmetric linear systems. Numer. Algorithms 1994, 7, 1–16.

- Beik, F.; Ahmadi-Asl, S. An iterative algorithm for η-(anti)-Hermitian least-squares solutions of quaternion matrix equations. Electron. J. Linear Algebra 2015, 30, 372–401.

- Beik, F.P.A.; Saberi-Movahed, F.; Ahmadi-Asl, S. On the Krylov subspace methods based on tensor format for positive definite Sylvester tensor equations. Numer. Linear Algebra Appl. 2016, 23, 444–466.

- Beik, F.; Jbilou, K.; Najafi-Kalyani, M.; et al. Golub–Kahan bidiagonalization for ill-conditioned tensor equations with applications. Numer. Algorithms 2020, 84, 1535–1563.

- Chen, Z.; Lu, L. A projection method and Kronecker product preconditioner for solving Sylvester tensor equations. Sci. China Math. 2012, 55, 1281–1292.

- Duan, X.F.; Zhang, Y.S.; Wang, Q.W. An efficient iterative method for solving a class of constrained tensor least squares problem. Appl. Numer. Math. 2024, 196, 104–117.

- Freund, R.W.; Golub, G.H.; Nachtigal, N.M. Iterative solution of linear systems. Acta Numer. 1992, 1, 44.

- Gao, Z.-H.; Wang, Q.-W.; Xie, L. The (anti-)η-Hermitian solution to a novel system of matrix equations over the split quaternion algebra. Math. Meth. Appl. Sci. 2024, 1–18.

- Grasedyck, L. Existence and computation of low Kronecker-rank approximations for large linear systems of tensor product structure. Computing 2004, 72, 247–265.

- Guan, Y.; Chu, D. Numerical computation for orthogonal low-rank approximation of tensors. SIAM J. Matrix Anal. Appl. 2019, 40, 1047–1065.

- Guan, Y.; Chu, M.T.; Chu, D. Convergence analysis of an SVD-based algorithm for the best rank-1 tensor approximation. Linear Algebra Appl. 2018, 555, 53–69.

- Guan, Y.; Chu, M.T.; Chu, D. SVD-based algorithms for the best rank-1 approximation of a symmetric tensor. SIAM J. Matrix Anal. Appl. 2018, 39, 1095–1115.

- Hajarian, M. Developing Bi-CG and Bi-CR methods to solve generalized Sylvester-transpose matrix equations. Int. J. Autom. Comput. 2014, 11, 25–29.

- He, Z.-H.; Wang, X.-X.; Zhao, Y.-F. Eigenvalues of quaternion tensors with applications to color video processing. J. Sci. Comput. 2023, 94, 1.

- Heyouni, M.; Saberi-Movahed, F.; Tajaddini, A. On global Hessenberg based methods for solving Sylvester matrix equations. Comput. Math. Appl. 2019, 77, 77–92.

- Hu, J.; Ke, Y.; Ma, C. Efficient iterative method for generalized Sylvester quaternion tensor equation. Comput. Appl. Math. 2023, 42, 237.

- Huang, N.; Ma, C.-F. Modified conjugate gradient method for obtaining the minimum-norm solution of the generalized coupled Sylvester-conjugate matrix equations. Appl. Math. Model. 2016, 40, 1260–1275.

- Jia, Z.; Wei, M.; Zhao, M.-X.; et al. A new real structure-preserving quaternion QR algorithm. J. Comput. Appl. Math. 2018, 343, 26–48.

- Karimi, S.; Dehghan, M. Global least squares method based on tensor form to solve linear systems in Kronecker format. Trans. Inst. Meas. Control 2018, 40, 2378–2386.

- Ke, Y. Finite iterative algorithm for the complex generalized Sylvester tensor equations. J. Appl. Anal. Comput. 2020, 10, 972–985.

- Kolda, T.G. Multilinear operators for higher-order decompositions; Sandia National Laboratories (SNL): Albuquerque, NM, and Livermore, CA, 2006.

- Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

- Kyrchei, I. Cramer's rules for Sylvester quaternion matrix equation and its special cases. Adv. Appl. Clifford Algebras 2018, 28, 1–26.

- Li, B.-W.; Tian, S.; Sun, Y.-S.; et al. Schur-decomposition for 3D matrix equations and its application in solving radiative discrete ordinates equations discretized by Chebyshev collocation spectral method. J. Comput. Phys. 2010, 229, 1198–1212.

- Li, T.; Wang, Q.-W.; Duan, X.-F. Numerical algorithms for solving discrete Lyapunov tensor equation. J. Comput. Appl. Math. 2020, 370, 112676.

- Li, T.; Wang, Q.-W.; Zhang, X.-F. Gradient based iterative methods for solving symmetric tensor equations. Numer. Linear Algebra Appl. 2022, 29.

- Li, T.; Wang, Q.-W.; Zhang, X.-F. A modified conjugate residual method and nearest Kronecker product preconditioner for the generalized coupled Sylvester tensor equations. Mathematics 2022, 10, 1730.

- Li, X.; Ng, M.K. Solving sparse non-negative tensor equations: Algorithms and applications. Front. Math. China 2015, 10, 649–680.

- Li, Y.; Wei, M.; Zhang, F.; et al. Real structure-preserving algorithms of Householder based transformations for quaternion matrices. J. Comput. Appl. Math. 2016, 305, 82–91.

- Liang, Y.; Silva, S.D.; Zhang, Y. The tensor rank problem over the quaternions. Linear Algebra Appl. 2021, 620, 37–60.

- Lv, C.; Ma, C. A modified CG algorithm for solving generalized coupled Sylvester tensor equations. Appl. Math. Comput. 2020, 365, 124699.

- Malek, A.; Momeni-Masuleh, S.H. A mixed collocation–finite difference method for 3D microscopic heat transport problems. J. Comput. Appl. Math. 2008, 217, 137–147.

- Mehany, M.S.; Wang, Q.-W.; Liu, L. A system of Sylvester-like quaternion tensor equations with an application. Front. Math. 2024, 1–20.

- Najafi-Kalyani, M.; Beik, F.P.A.; Jbilou, K. On global iterative schemes based on Hessenberg process for (ill-posed) Sylvester tensor equations. J. Comput. Appl. Math. 2020, 373, 112216.

- Peng, Y.; Hu, X.; Zhang, L. An iteration method for the symmetric solutions and the optimal approximation solution of the matrix equation AXB = C. Appl. Math. Comput. 2005, 160, 763–777.

- Qi, L. Eigenvalues of a real supersymmetric tensor. J. Symbolic Comput. 2005, 40, 1302–1324.

- Qi, L. Symmetric nonnegative tensors and copositive tensors. Linear Algebra Appl. 2013, 439, 228–238.

- Qi, L.; Chen, H.; Chen, Y. Tensor eigenvalues and their applications; Springer: Singapore, 2018.

- Qi, L.; Luo, Z. Tensor analysis: Spectral theory and special tensors; SIAM: Philadelphia, 2017.

- Saberi-Movahed, F.; Tajaddini, A.; Heyouni, M.; et al. Some iterative approaches for Sylvester tensor equations, Part I: A tensor format of truncated Loose Simpler GMRES. Appl. Numer. Math. 2022, 172, 428–445.

- Saberi-Movahed, F.; Tajaddini, A.; Heyouni, M.; et al. Some iterative approaches for Sylvester tensor equations, Part II: A tensor format of Simpler variant of GCRO-based methods. Appl. Numer. Math. 2022, 172, 413–427.

- Song, G.; Wang, Q.-W.; Yu, S. Cramer's rule for a system of quaternion matrix equations with applications. Appl. Math. Comput. 2018, 336, 490–499.

- Wang, Q.-W.; He, Z.-H.; Zhang, Y. Constrained two-sided coupled Sylvester-type quaternion matrix equations. Automatica 2019, 101, 207–213.

- Wang, Q.-W.; Xu, X.; Duan, X. Least squares solution of the quaternion Sylvester tensor equation. Linear Multilinear Algebra 2021, 69, 104–130.

- Xie, M.; Wang, Q.-W. Reducible solution to a quaternion tensor equation. Front. Math. China 2020, 15, 1047–1070.

- Xie, M.; Wang, Q.-W.; He, Z.-H.; et al. A system of Sylvester-type quaternion matrix equations with ten variables. Acta Math. Sin. (Engl. Ser.) 2022, 38, 1399–1420.

- Zhang, F.; Wei, M.; Li, Y.; et al. Special least squares solutions of the quaternion matrix equation AXB + CXD = E. Comput. Math. Appl. 2016, 72, 1426–1435.

- Zhang, X. A system of generalized Sylvester quaternion matrix equations and its applications. Appl. Math. Comput. 2016, 273, 74–81.

- Zhang, X.-F.; Li, T.; Ou, Y.-G. Iterative solutions of generalized Sylvester quaternion tensor equations. Linear Multilinear Algebra 2024, 72, 1259–1278.

- Zhang, X.-F.; Wang, Q.-W. Developing iterative algorithms to solve Sylvester tensor equations. Appl. Math. Comput. 2021, 409, 126403.

- Zhang, X.-F.; Wang, Q.-W. On RGI Algorithms for Solving Sylvester Tensor Equations. Taiwan. J. Math. 2022, 1, 1–19.

| Notation | Description |
|---|---|
| IT | The number of iteration steps |
| CPU time | The elapsed CPU time in seconds |
| Res | The residual at the kth iteration |
| | An order-three tensor with pseudo-random values drawn from a uniform distribution on the unit interval |
| | The upper triangular portion of the Hilbert matrix |
| | The upper triangular portion of the matrix with all entries equal to 1 |
| | The identity matrix |
| | The zero matrix |
| | The tridiagonal matrix with |

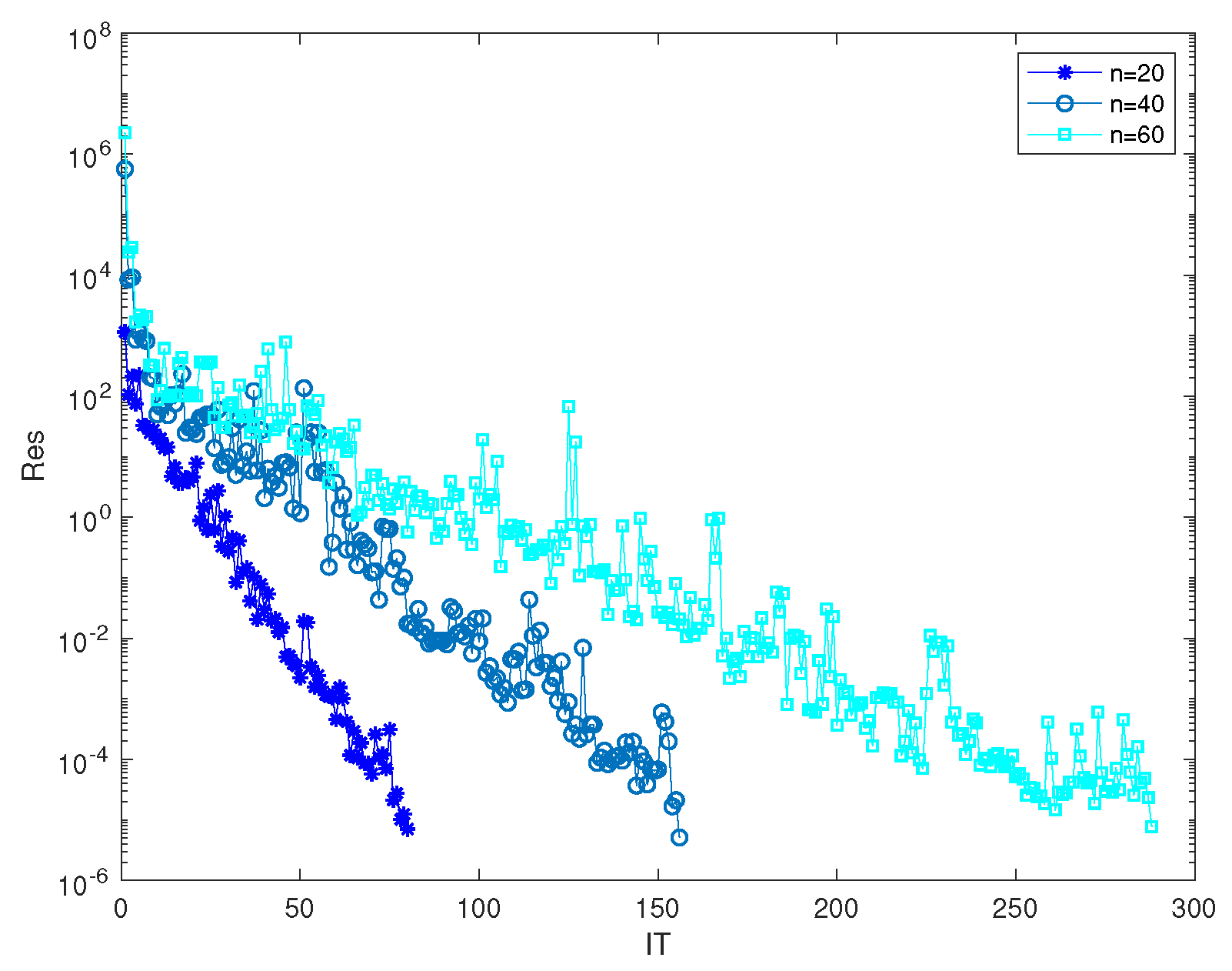
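The notation table lists the test data used in the experiments, but the symbols themselves did not survive extraction. As a rough aid for reproducing comparable inputs, the pure-Python sketch below builds the structured matrices and the random order-three tensor that the table describes. The function names are hypothetical, square n-by-n sizes are assumed, and the tridiagonal matrix is omitted because its definition is truncated in the source.

```python
import random


def hilbert_upper(n):
    """Upper triangular portion of the n-by-n Hilbert matrix.

    With 0-based indices the Hilbert entries are 1 / (i + j + 1);
    entries strictly below the diagonal are set to zero.
    """
    return [[1.0 / (i + j + 1) if j >= i else 0.0 for j in range(n)]
            for i in range(n)]


def ones_upper(n):
    """Upper triangular portion of the n-by-n matrix of all ones."""
    return [[1.0 if j >= i else 0.0 for j in range(n)] for i in range(n)]


def identity(n):
    """n-by-n identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def zeros(n):
    """n-by-n zero matrix."""
    return [[0.0] * n for _ in range(n)]


def rand_tensor3(n1, n2, n3, seed=None):
    """Order-three tensor (nested lists of shape n1 x n2 x n3) whose entries
    are drawn uniformly from [0, 1) by Python's pseudo-random generator."""
    rng = random.Random(seed)
    return [[[rng.random() for _ in range(n3)] for _ in range(n2)]
            for _ in range(n1)]
```

Passing a fixed `seed` to `rand_tensor3` makes runs repeatable; in practice a NumPy or MATLAB equivalent would be used for the problem sizes reported below.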

| n | IT | CPU time | Res |
|---|---|---|---|
| 20 | 82 | 8.3272 | 7.7182e-06 |
| 40 | 156 | 24.8187 | 5.1233e-06 |
| 60 | 288 | 91.4387 | 7.6898e-06 |

| Method | p=10 | p=25 | p=30 |
|---|---|---|---|
| Algorithm 1 | 25.6884(320) | 140.8233(1270) | 202.7709(1580) |
| CGLS [49] | 15.5685(219) | 144.3166(1266) | 230.7340(1832) |

| Method | p=10 | p=15 | p=20 |
|---|---|---|---|
| Algorithm | 45.4157(576) | 104.4370(1095) | 163.6110(1700) |
| CGLS [49] | 44.0701(579) | 118.2419(1296) | 230.7978(2298) |

| Algorithm 1 | 5.6210e-09(248) | 6.0527e-09(188) | 4.1925e-09(248) |

| CGLS [49] | 9.9300e-09(106) | 9.7035e-09(178) | 8.9552e-09(167) |
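The tables above compare Algorithm 1 with the CGLS method of [49]. For readers unfamiliar with that baseline, here is a minimal sketch of textbook CGLS (conjugate gradients applied implicitly to the normal equations AᵀAx = Aᵀb) for a real, dense matrix least-squares problem min ‖Ax - b‖₂. It is not the quaternion tensor variant used in [49] or in this paper; the helper names and the stopping rule on ‖Aᵀ(b - Ax)‖₂ are assumptions chosen for illustration, since the exact definition of "Res" is not recoverable here.

```python
import math


def matvec(A, x):
    """Return A @ x for a dense matrix stored as a list of rows."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def rmatvec(A, y):
    """Return A^T @ y without forming the transpose explicitly."""
    n = len(A[0])
    out = [0.0] * n
    for row, yi in zip(A, y):
        for j in range(n):
            out[j] += row[j] * yi
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cgls(A, b, tol=1e-8, max_iter=1000):
    """Textbook CGLS for min ||A x - b||_2 with real, dense A.

    Returns (x, iterations). Stops when the normal-equation residual
    ||A^T (b - A x)||_2 drops to tol or below (an assumed stopping rule).
    """
    n = len(A[0])
    x = [0.0] * n
    r = list(b)              # r = b - A x for the starting guess x = 0
    s = rmatvec(A, r)        # s = A^T r, the normal-equation residual
    p = list(s)              # first search direction
    gamma = dot(s, s)
    if math.sqrt(gamma) <= tol:
        return x, 0
    for k in range(1, max_iter + 1):
        q = matvec(A, p)
        alpha = gamma / dot(q, q)          # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        s = rmatvec(A, r)
        gamma_new = dot(s, s)
        if math.sqrt(gamma_new) <= tol:
            return x, k
        beta = gamma_new / gamma           # keeps directions A^T A-conjugate
        p = [si + beta * pi for si, pi in zip(s, p)]
        gamma = gamma_new
    return x, max_iter
```

For example, `cgls([[1.0, 0.0], [0.0, 2.0], [0.0, 0.0]], [1.0, 4.0, 3.0])` returns an `x` close to `[1.0, 2.0]` after two iterations, matching the least-squares solution of that overdetermined system.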

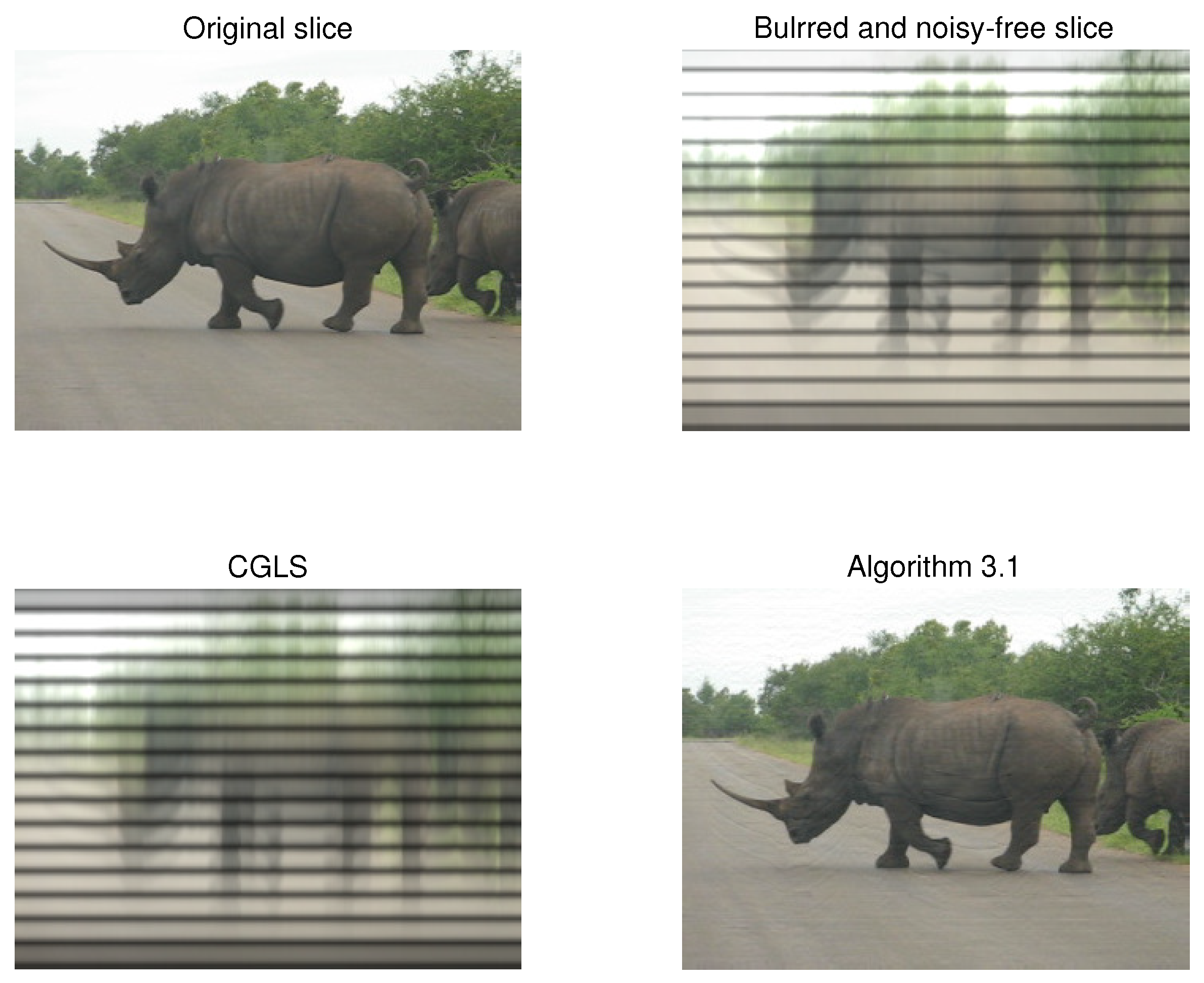

| [r, s] | Algorithm (PSNR/RE) | CGLS (PSNR/RE) |
|---|---|---|
| [3,3] | 38.6181(0.0235) | 13.8404(0.3769) |
| [6,6] | 37.3721(0.0300) | 14.0529(0.3694) |
| [8,8] | 33.8073(0.0338) | 14.7958(0.3551) |
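The PSNR/RE table above scores recovered images, but the definitions of the two metrics are not restated in this section. The sketch below uses the common ones, PSNR = 10·log10(peak² / MSE) taken over all entries and RE = ‖X_rec - X‖_F / ‖X‖_F, on data given as nested lists. The function names and the default peak value of 255 (8-bit images; use 1.0 for data scaled to [0, 1]) are assumptions, not the paper's stated settings.

```python
import math


def flatten(x):
    """Recursively flatten nested lists (matrix, tensor) into a list of floats."""
    if isinstance(x, (list, tuple)):
        out = []
        for item in x:
            out.extend(flatten(item))
        return out
    return [float(x)]


def relative_error(x_rec, x_true):
    """Frobenius-norm relative error ||x_rec - x_true|| / ||x_true||."""
    a, b = flatten(x_rec), flatten(x_true)
    if len(a) != len(b):
        raise ValueError("inputs must have the same number of entries")
    num = math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))
    den = math.sqrt(sum(v * v for v in b))
    return num / den


def psnr(x_rec, x_true, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for an exact recovery."""
    a, b = flatten(x_rec), flatten(x_true)
    if len(a) != len(b):
        raise ValueError("inputs must have the same number of entries")
    mse = sum((u - v) ** 2 for u, v in zip(a, b)) / len(b)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)
```

Higher PSNR and lower RE both indicate a closer recovery, which is how the two columns of the table are meant to be read together.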

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).