1. Introduction

Understanding the composition, health, and distribution of ecosystems is critical to effective environmental management, planning, and conservation. All of these goals rely on one cornerstone process, ecosystem mapping: the spatial identification and delineation of distinct ecological units [1]. The accuracy of ecosystem maps, however, strongly affects their usefulness in practice. Accurate maps are key to understanding the dynamics of natural environments and therefore play a major role in conservation, resource management, and policy formulation [2]. Remote sensing has traditionally been used to understand and manage ecosystems because it provides a synoptic view of large landscapes. Over the years, advances in satellite imaging have allowed researchers to capture increasingly detailed and comprehensive data. Among the newest developments are the Sentinel-1 and Sentinel-2 missions of the Sentinel constellation, which provide high-resolution radar and optical imagery, respectively [3]. The present work therefore addresses ecosystem mapping and seeks to improve its accuracy by integrating Sentinel-1 and Sentinel-2 data with advanced classification techniques.

Despite the benefits of optical imagery, this data source has several inherent limitations that reduce the accuracy of ecosystem maps produced from it alone [4]. Perhaps the most important is spectral saturation: over dense vegetation, little light penetrates the canopy, so the signal carries little or no information about the underlying land cover characteristics [3]. This makes it difficult to distinguish between vegetation types, especially in densely forested ecosystems. Atmospheric conditions pose a further challenge. Cloud cover can obscure the ground surface entirely, making retrieval of useful information impossible, and even partial cloud cover can introduce artifacts and inconsistencies that lead to misclassification of ecosystem components [5].
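To make the saturation argument concrete, the sketch below computes the Normalized Difference Vegetation Index (NDVI) from red and near-infrared (NIR) reflectance, as would be taken from Sentinel-2 bands B4 and B8, for a series of increasingly dense canopies. NDVI is not part of this study's method; it is used here only as a familiar optical index, and the reflectance values are hypothetical.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    return (nir - red) / (nir + red)


# Hypothetical (NIR, Red) surface reflectances, e.g. Sentinel-2 B8 and B4,
# for canopies of increasing density: red falls (chlorophyll absorption)
# while NIR keeps rising (stronger scattering from more leaf layers).
canopies = {
    "sparse": (0.25, 0.10),
    "moderate": (0.35, 0.05),
    "dense": (0.42, 0.03),
    "very dense": (0.50, 0.025),
}

values = [ndvi(nir, red) for nir, red in canopies.values()]
for name, value in zip(canopies, values):
    print(f"{name:>10}: NDVI = {value:.3f}")

# Increments between successive canopies shrink as NDVI approaches 1.
steps = [b - a for a, b in zip(values, values[1:])]
print("NDVI increments:", [round(s, 3) for s in steps])
```

Although NIR reflectance keeps rising across the series, the NDVI step between successive canopies shrinks sharply as the index approaches its upper bound of 1. This compression is what makes dense vegetation types hard to separate with optical data alone.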

In this regard, the literature also points to the need to fuse multi-sensor data for accurate ecosystem mapping [6]. Previous research shows that optical and radar data are complementary, with each modality providing distinct information on surface characteristics, land cover types, and ecosystem dynamics [7]. Research has also demonstrated that machine learning algorithms such as Random Forest classify complex landscapes from remote sensing data efficiently [6]. Despite these developments, gaps remain in understanding the full potential of machine-learning-based data fusion and its application across diverse ecosystems [8].

The inadequacies of single-sensor optical imagery for ecosystem mapping have led researchers to explore several avenues. The first is the development of sophisticated image processing techniques and more advanced classification algorithms [6]. These algorithms exploit the spectral information in optical data to extract subtle signatures associated with different ecosystem components. Their effectiveness, however, remains constrained by intrinsic data quality problems and the complex spectral characteristics of various ecosystems [9]. A second strategy is to integrate radar imagery and other ancillary datasets that complement optical data. Such data can also provide information on topographic features, which is very useful for distinguishing wetlands from terrestrial ecosystems [10]. Radar imagery, particularly synthetic aperture radar (SAR) imagery, is especially valuable here because it can image through cloud cover and partially penetrate vegetation canopies. On the other hand, interpreting SAR data often requires specialized expertise and can be more difficult than interpreting optical imagery [11].
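Part of the interpretation effort mentioned above lies in converting SAR backscatter coefficients (sigma nought), often distributed in linear units, to decibels and reading polarization behaviour. The sketch below assumes dual-polarization (VV and VH) Sentinel-1 backscatter; the open-water threshold is a hypothetical placeholder, not a value from this study, and would need calibration for each scene.

```python
import math

# Hypothetical open-water cut-off in dB; must be calibrated for each scene.
WATER_VV_DB_THRESHOLD = -18.0


def to_db(sigma0_linear: float) -> float:
    """Convert a linear backscatter coefficient (sigma nought) to decibels."""
    if sigma0_linear <= 0:
        raise ValueError("backscatter must be positive to take a logarithm")
    return 10.0 * math.log10(sigma0_linear)


def cross_pol_ratio_db(vv_linear: float, vh_linear: float) -> float:
    """VH/VV ratio in dB, i.e. VH_dB - VV_dB."""
    return to_db(vh_linear) - to_db(vv_linear)


def looks_like_open_water(vv_linear: float) -> bool:
    """Crude rule: smooth water reflects energy away, giving very low VV."""
    return to_db(vv_linear) < WATER_VV_DB_THRESHOLD


# Two hypothetical pixels (linear units): calm water and a vegetated surface.
for label, vv, vh in [("water", 0.005, 0.0008), ("vegetation", 0.08, 0.02)]:
    print(label, round(to_db(vv), 1), round(cross_pol_ratio_db(vv, vh), 1),
          looks_like_open_water(vv))
```

Smooth open water reflects most radar energy away from the sensor (specular reflection), so it tends to appear dark in VV, whereas vegetation canopies produce volume scattering that raises VH relative to VV. Rules of this kind are a starting point for interpretation, not a substitute for the expertise the text refers to.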

Although multi-sensor data integration holds considerable promise for improving the accuracy of ecosystem mapping, many knowledge gaps and uncertainties remain [7]. For certain ecosystem types, the optimal combination of data sources and the most efficient data fusion techniques are often poorly defined [2]. The synergies between optical and radar data, that is, how the two work together, are still being researched for various ecosystems, and standardized protocols are still under development [11]. In addition, the impact of the differing spatial and temporal resolutions of the sensors on the accuracy of ecosystem maps requires further investigation [12]. Optical imagery typically has finer spatial resolution than SAR data, which may have coarser resolution [13], and effectively integrating such disparate resolutions has long been a challenge in achieving optimal mapping accuracy. Two data sources are central to this study. The first is Sentinel-1, a European Space Agency (ESA) constellation of radar satellites that provides C-band SAR data with high temporal resolution [14]. The second is Sentinel-2, another ESA constellation, which provides high-resolution multispectral imagery over a broad range of spectral bands [15]. Given the potential of these two data sources, this study explores how far their combination improves the delineation of critical habitats such as wetlands, riverine systems, and mangrove forests. The aim is to compensate for the limitations of each sensor type with the strengths of the other.
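The resolution mismatch described above has to be resolved before SAR and optical bands can be classified together. The sketch below shows one simple way to build a collocated feature stack with NumPy, under stated assumptions that are not taken from the study's processing chain: both rasters are already co-registered in the same projection, the SAR grid is coarser by an integer factor, nearest-neighbour upsampling is acceptable, and the optical product is a Sentinel-2 Level-2A scene whose Scene Classification Layer (SCL) is used as a cloud mask. Dedicated remote sensing software normally handles reprojection and resampling; `stack_features` and `upsample_nearest` are hypothetical helpers.

```python
import numpy as np

# Sentinel-2 Level-2A Scene Classification (SCL) codes treated as unusable:
# 3 = cloud shadow, 8 = cloud medium probability, 9 = cloud high probability,
# 10 = thin cirrus.
CLOUDY_SCL = (3, 8, 9, 10)


def upsample_nearest(band: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling by an integer factor (e.g. 20 m -> 10 m)."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def stack_features(s2_bands, s1_bands, s1_factor, scl=None):
    """Build an (n_pixels, n_features) matrix of optical + SAR values.

    s2_bands: (n_s2, H, W) optical bands on the reference grid.
    s1_bands: (n_s1, H // s1_factor, W // s1_factor) SAR bands on a coarser,
              already co-registered grid (an assumption of this sketch).
    scl:      optional (H, W) Scene Classification Layer used for a cloud mask.
    Returns the feature matrix and a boolean mask of pixels with usable optics.
    """
    _, h, w = s2_bands.shape
    s1_up = np.stack([upsample_nearest(b, s1_factor) for b in s1_bands])
    if s1_up.shape[1:] != (h, w):
        raise ValueError("SAR grid does not align with the optical grid")
    cube = np.concatenate([s2_bands, s1_up], axis=0)  # (n_s2 + n_s1, H, W)
    features = cube.reshape(cube.shape[0], -1).T       # one row per pixel
    if scl is None:
        optical_ok = np.ones(h * w, dtype=bool)
    else:
        optical_ok = ~np.isin(scl.ravel(), CLOUDY_SCL)
    return features, optical_ok


# Usage with random stand-in data: 4 optical bands on a 4 x 4 grid and
# 2 SAR bands (e.g. VV, VH) on a 2 x 2 grid that is twice as coarse.
rng = np.random.default_rng(0)
s2 = rng.random((4, 4, 4))
s1 = rng.random((2, 2, 2))
scl = np.array([[4, 4, 8, 9], [4, 6, 6, 4], [3, 4, 4, 4], [10, 4, 4, 5]])
X, optical_ok = stack_features(s2, s1, s1_factor=2, scl=scl)
print(X.shape, int(optical_ok.sum()))
```

Returning the cloud mask separately, rather than deleting cloudy pixels, means a pixel with unusable optical values still carries its SAR features, which is the kind of compensation between sensors that motivates this study.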

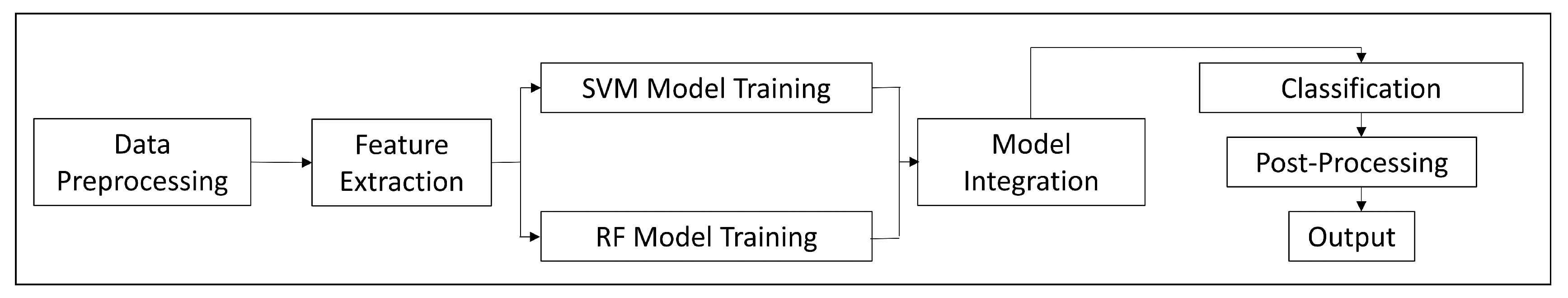

This study seeks to address the knowledge gap concerning the effectiveness of Sentinel-1 and Sentinel-2 data fusion for enhancing ecosystem mapping accuracy. To this end, it has been designed around specific objectives. First, it evaluates how effectively Sentinel-1 and Sentinel-2 data fusion enhances the delineation and characterization of ecosystems. The study targets three of the most important ecosystems in Bangladesh: wetlands, riverine systems, and mangrove forests, which are among the ecosystems most critical to maintaining global biodiversity and regulating climate. Second, it comprehensively compares the ecosystem maps produced from the integrated Sentinel-1 and Sentinel-2 dataset with those produced from Sentinel-2 imagery alone, in order to quantify the improvements in accuracy and detail attributable to data fusion. The ecosystem classes were classified with an SVM (Support Vector Machine)-based RF (Random Forest) classifier, which was chosen for several reasons.

First, SVMs handle high-dimensional datasets well and discriminate efficiently between complex, nonlinear patterns in data [17]. Embedding SVMs in the RF framework combines the strong discriminative power of the SVM classifier with the ensemble learning of RF, with the aim of improving performance and accuracy. The approach thus acts as a synergistic combination of SVM's discriminative power with RF's versatility and efficiency [18]. In addition, an SVM-based RF classifier offers flexibility in parameter tuning, so that classification parameters can be optimized for the specifics of the ecosystem mapping task. Moreover, SVM-based RF classifiers have already been shown to be effective in various remote sensing applications, including land cover classification and vegetation mapping, which makes them well suited to ecosystem classification [19]. The classifier was adopted in pursuit of precise and reliable classification outcomes, which are essential for fully understanding ecosystem dynamics and for making informed decisions on conservation and management initiatives. The study reports several measures, including overall classification accuracy, class-specific metrics such as producer's and user's accuracies, and the impact of the fusion technique on the classification outcome.

In addition, environmental factors such as cloud cover, terrain roughness, and seasonality are considered when comparing the analyses. This research may also help formulate standardized protocols for using remote sensing data in ecological assessment, thereby advancing scientific knowledge and environmental stewardship. If these objectives are met, this study can serve as a baseline for ecosystem mapping.

Such integration could not only extend our capabilities for global environmental monitoring but also inform more effective conservation and management of the world’s most vulnerable ecosystems. We therefore expect this research to demonstrate significant benefits from fusing Sentinel-1 and Sentinel-2 data, providing a robust scientific basis for its adoption in environmental science and policymaking.

4. Discussion

The methodology developed in this study, which integrates SAR and optical data, opens new possibilities for remote sensing applications in ecosystem mapping. It not only increases classification accuracy but also provides a repeatable model for similar studies elsewhere. Future research should consider incorporating additional data sources, such as LiDAR and high-resolution satellite imagery, to further increase the accuracy and detail of ecosystem classification. Improving current machine learning algorithms may also lead to new insights into the complex interactions within and between ecosystems.

Although the results of this pilot study are very promising, the classification methodology may have some intrinsic limitations. In particular, the proposed SVM-based RF model may introduce variability into the classification results, because the SVM algorithm is sensitive to the choice of kernel function and to hyperparameter tuning. Future studies should assess more advanced machine learning techniques, for example deep learning architectures, which have recently been reported to be more robust and better able to adapt to the complex, nonlinear relationships present in ecosystem data. Additional information sources, such as digital elevation models, soil maps, and climatic data, could be integrated to improve the accuracy and interpretability of the ecosystem classifications. These additional layers describe the underlying biophysical and environmental drivers of the ecosystems under investigation and would be key to gaining deeper, more cogent insights into them. Although the present methodology integrates only SAR and optical datasets, it marks a new milestone in remote sensing for ecosystem mapping and, beyond improving classification accuracy, could provide a repeatable model for similar studies in different geographical contexts.
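The sensitivity to kernel choice and hyperparameters noted above is commonly handled with a cross-validated search. The sketch below, assuming scikit-learn, tunes the kernel, `C`, and `gamma` of an SVM with `GridSearchCV` on synthetic labelled pixels. The grid values and the reflectance-like and decibel-like features are hypothetical; the study does not report its tuning procedure.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def synthetic_fused_pixels(n_per_class, rng):
    """Hypothetical labelled pixels: 4 reflectance-like optical features (0-1)
    plus 2 decibel-like SAR features (VV, VH) for three classes."""
    optical_means = np.array([
        [0.05, 0.04, 0.03, 0.02],   # water-like
        [0.04, 0.06, 0.04, 0.35],   # vegetation-like
        [0.15, 0.18, 0.20, 0.25],   # bare-soil-like
    ])
    sar_means = np.array([[-22.0, -28.0], [-8.0, -14.0], [-12.0, -20.0]])
    X_parts, y_parts = [], []
    for label, (opt, sar) in enumerate(zip(optical_means, sar_means)):
        optical = opt + rng.normal(0.0, 0.02, size=(n_per_class, 4))
        radar = sar + rng.normal(0.0, 1.5, size=(n_per_class, 2))
        X_parts.append(np.hstack([optical, radar]))
        y_parts.append(np.full(n_per_class, label))
    return np.vstack(X_parts), np.concatenate(y_parts)


# Scaling sits inside the pipeline so each CV fold is standardized on its own
# training split; make_pipeline names steps after the lowercased class names.
pipeline = make_pipeline(StandardScaler(), SVC())
param_grid = {
    "svc__kernel": ["linear", "rbf"],
    "svc__C": [0.1, 1.0, 10.0, 100.0],
    "svc__gamma": ["scale", 0.01, 0.1],  # ignored by the linear kernel
}

X, y = synthetic_fused_pixels(50, np.random.default_rng(7))
search = GridSearchCV(
    pipeline,
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Standardizing features matters for fused data: optical reflectances lie roughly between 0 and 1, while SAR backscatter in decibels spans tens of units, so without scaling an RBF kernel would be dominated by the SAR features.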

These results indicate that ecosystems can be mapped with a high level of accuracy, which has important implications for environmental conservation and management. The approach provides better ways to assess the extent of ecosystems and gives a more detailed representation in support of decision-making for habitat protection, land use planning, and biodiversity conservation. This improved mapping capacity helps policymakers and conservation practitioners monitor ecological change, track the effectiveness of conservation interventions, and prioritize areas for conservation and restoration.

In summary, integrating Sentinel-1 and Sentinel-2 data has high potential for increasing the accuracy of ecosystem mapping. The findings highlight the synergistic benefits of combining SAR and optical sensors in complex environments such as mangroves, riverine systems, and wetlands. The methodological novelty and the improved classification accuracy achieved through data fusion have important consequences for ecosystem conservation and management, providing a more solid foundation for environmental monitoring, habitat assessment, and conservation planning. These advances are likely to be extended in the future through the integration of new data sources, advanced analysis methods, and complementary environmental data, deepening current knowledge and stewardship of the natural world.

5. Conclusion

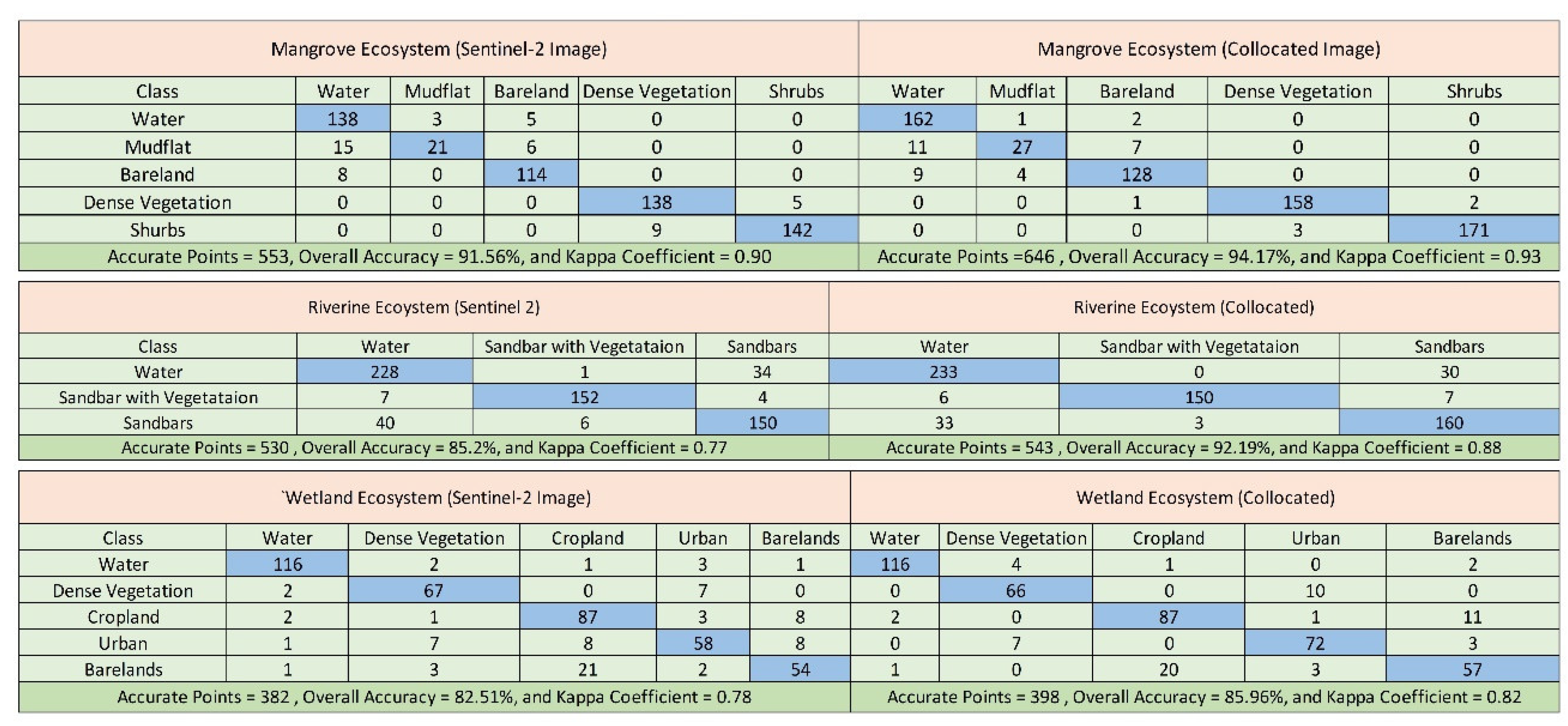

This study investigated the potential of integrating Sentinel-1 and Sentinel-2 satellite data to improve the accuracy of ecosystem mapping for three different ecosystems: wetlands, riverine systems, and mangrove forests. A Random Forest classification method was used to evaluate how effectively this strategy distinguishes these key habitat types. The results indicate that combined Sentinel-1 and Sentinel-2 data outperform single-sensor Sentinel-2 imagery for ecosystem mapping across all of the studied ecosystems.

The comparison between fused Sentinel-1 and Sentinel-2 imagery and single-sensor Sentinel-2 imagery, both classified with a Random Forest classifier, makes a significant contribution to improving precision in ecosystem mapping. The results show that the fusion approach consistently achieves higher classification accuracy than single-sensor imagery across very different ecosystems such as mangroves, riverine areas, and wetlands. The analysis indicates that the collocated imagery resulting from the fusion of Sentinel-1 and Sentinel-2 data excels at capturing features of complex ecosystems, particularly water bodies, vegetation density, and land cover types. This confirms the relevance of multi-sensor data fusion for improving classification results in densely vegetated or cloud-covered areas.

The research also underlines the need to integrate radar and optical data sources to obtain more accurate and complete ecosystem maps. It further highlights the power of machine learning algorithms, with Random Forest as one example, in exploiting fused data to classify ecosystem components accurately. This paper presents multi-sensor data fusion as a highly effective method for improving ecosystem mapping accuracy: by combining the complementary strengths of Sentinel-1 and Sentinel-2 data, it captures more detail across diverse ecosystems and mitigates the limitations of single-sensor approaches.

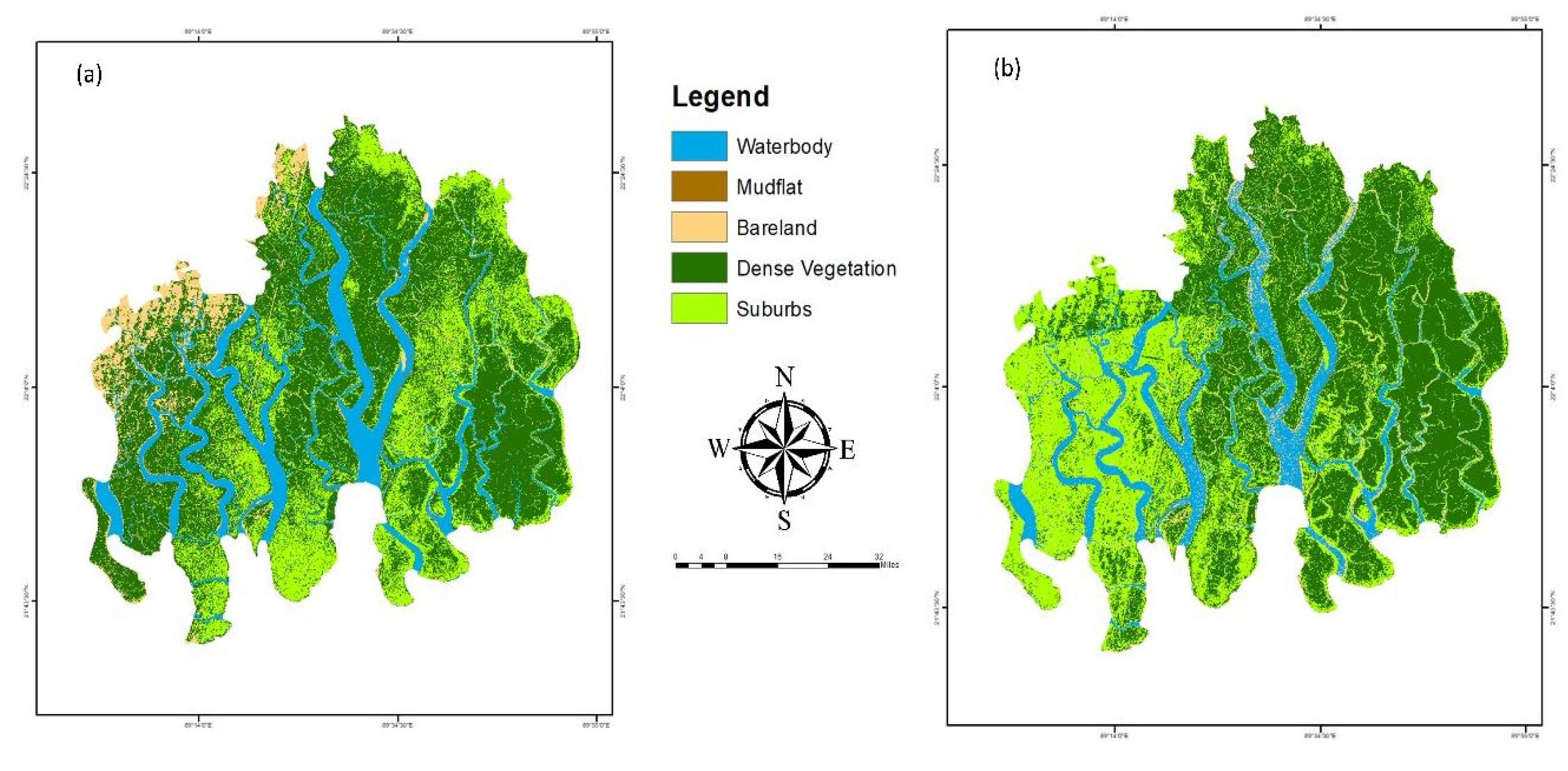

Figure 5.

Comparative Analysis of Mangrove Ecosystem Mapping. Figure (a) displays the Sentinel-2 image classification, while figure (b) shows the collocated image classification, both highlighting land cover types.

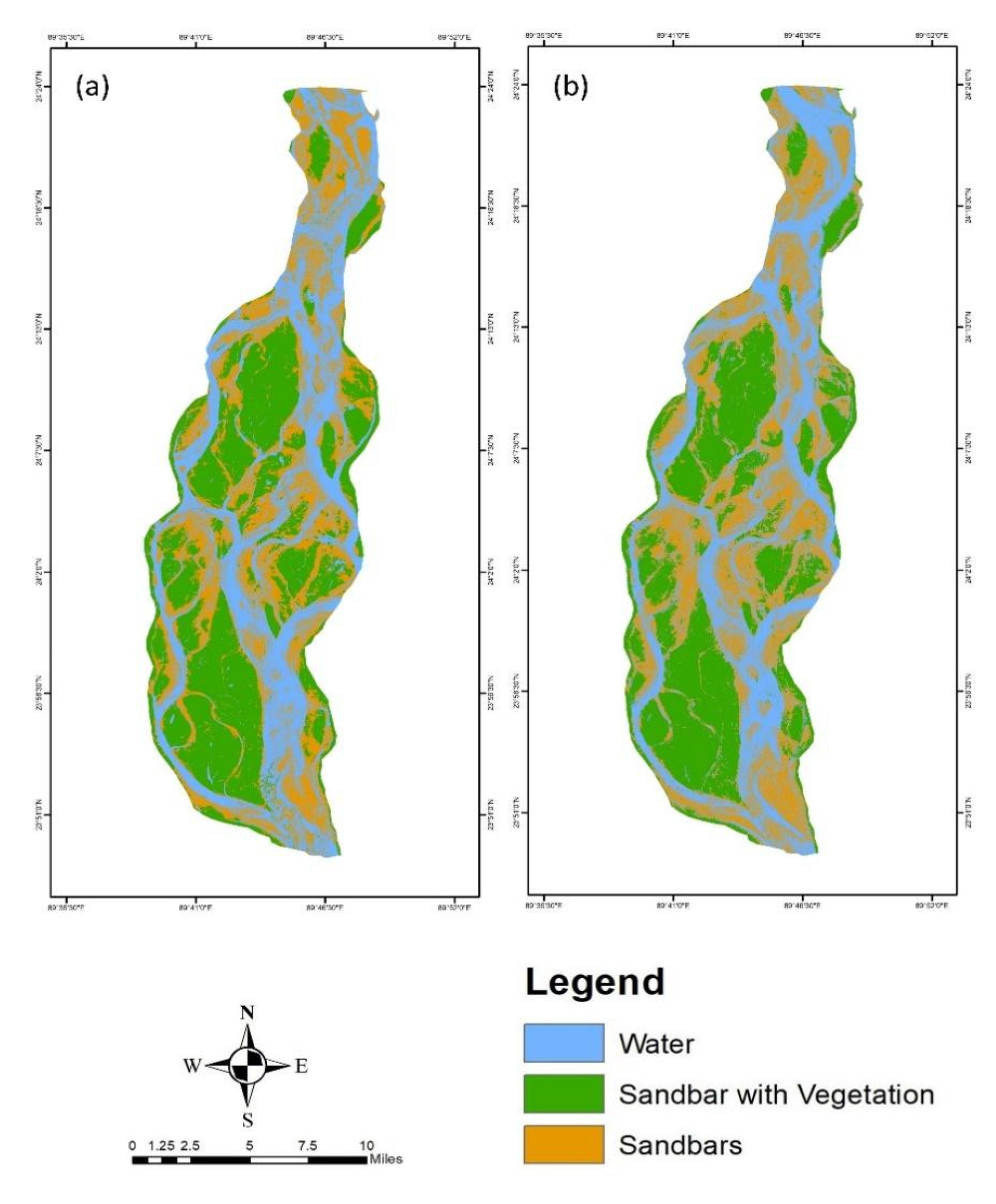

Figure 6.

Comparative Analysis of Riverine Ecosystem Mapping Using: (a) Sentinel-2 image, (b) Collocated image. Panel (a) shows the Sentinel-2 image classification, with water bodies, sandbars, and vegetation-rich areas distinctly classified; panel (b) shows the classification of the collocated image with enhanced accuracy, revealing a more nuanced distribution of these key ecological features.

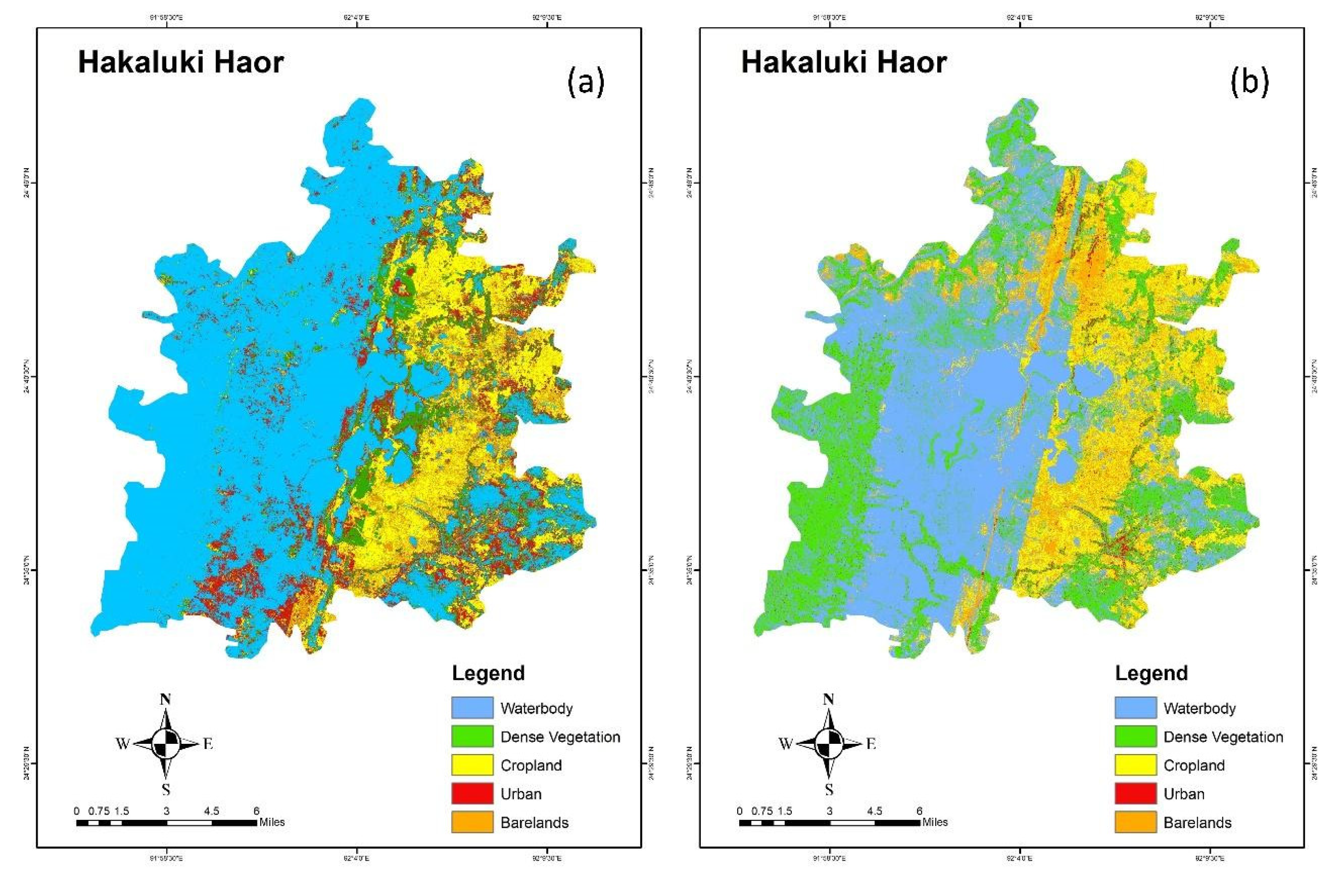

Figure 7.

Comparative Analysis of Wetland Ecosystem Mapping in Hakaluki Haor: (a) depicts the ecosystem distribution using a Sentinel-2 satellite image, while (b) shows the distribution using a collocated image.

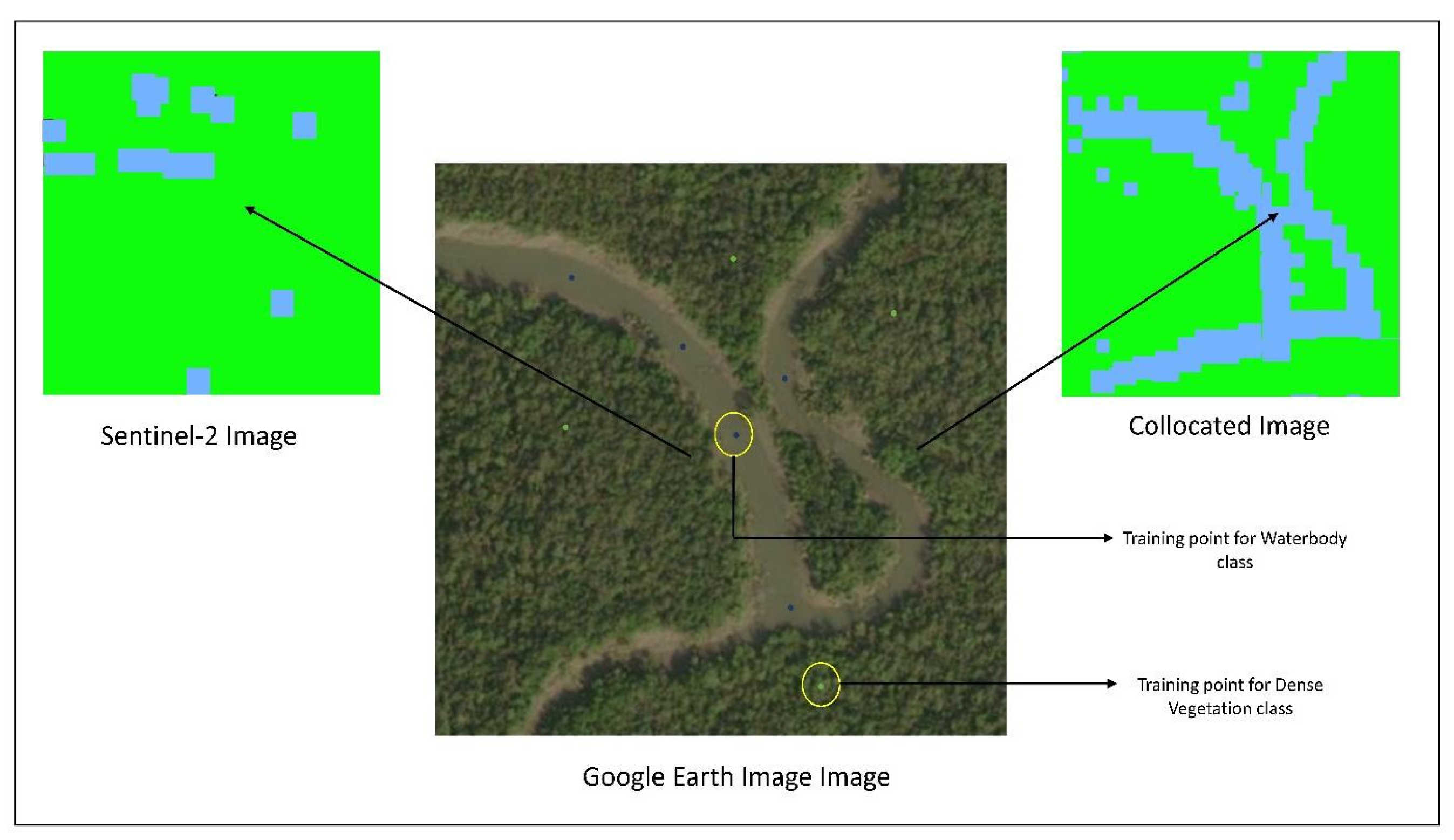

Figure 8.

Comparative study showcasing the effectiveness of Sentinel-2 satellite imagery against collocated ground-truth data for identifying mangrove vegetation and waterbody classes.

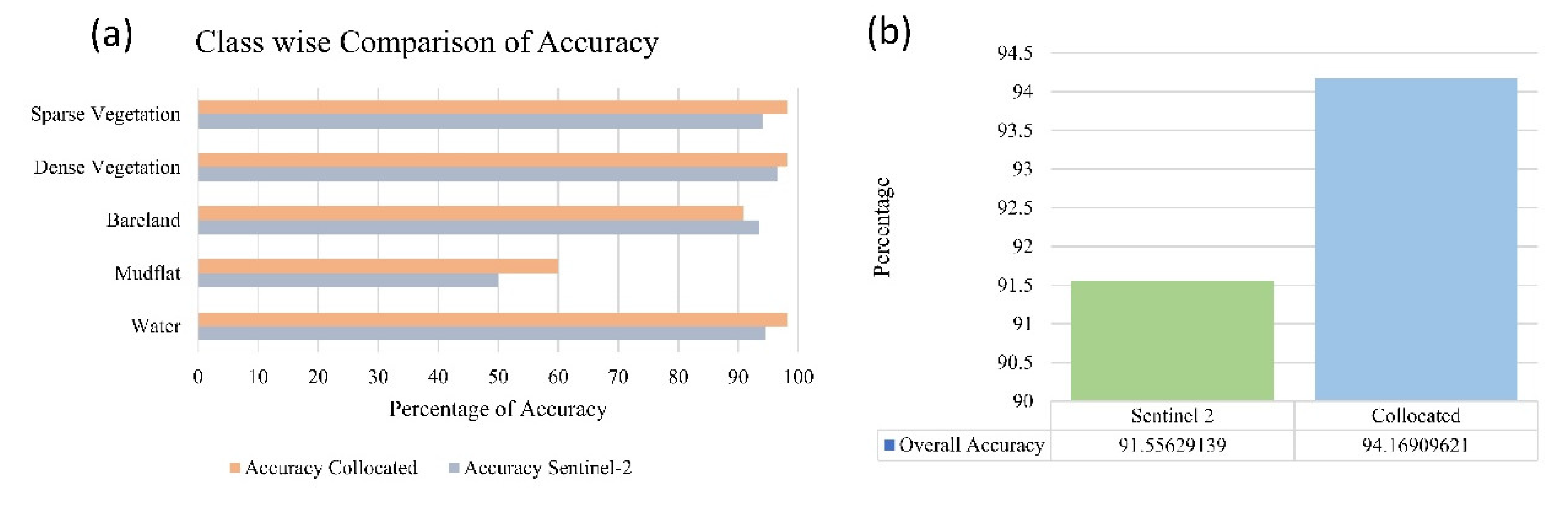

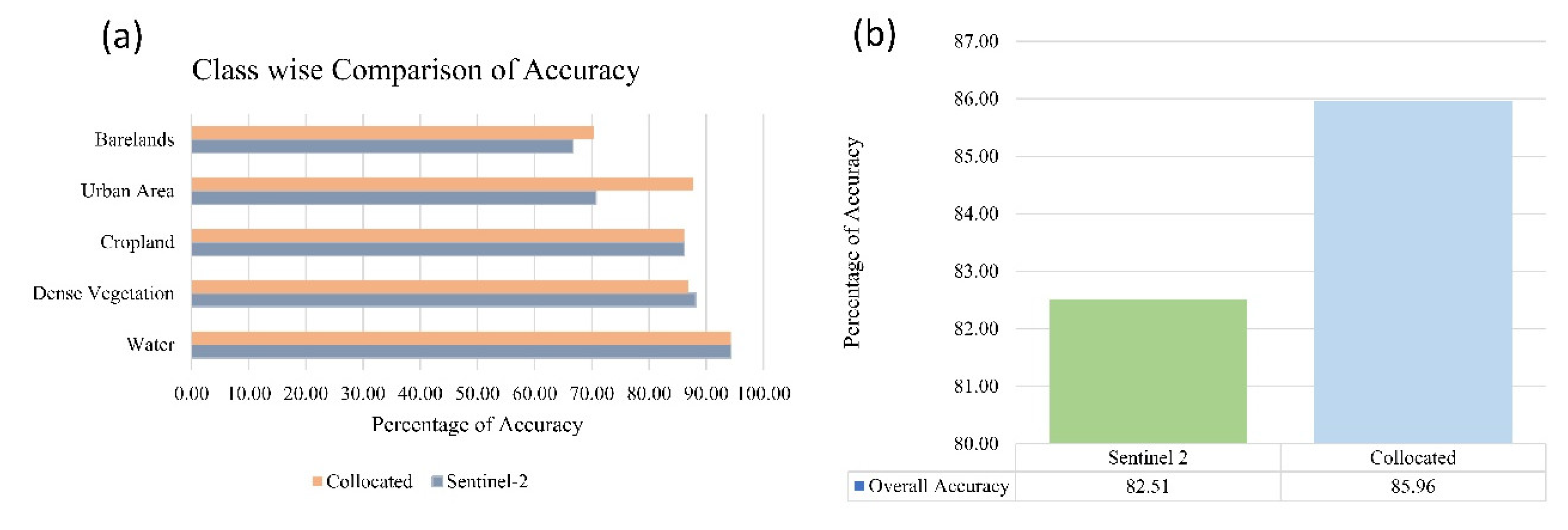

Figure 9.

Accuracy Assessment of Mangrove Ecosystem Classification (a) showing the class-wise accuracy for different land cover types, and (b) depicting the overall accuracy percentage of the Sentinel-2 and collocated data methods.

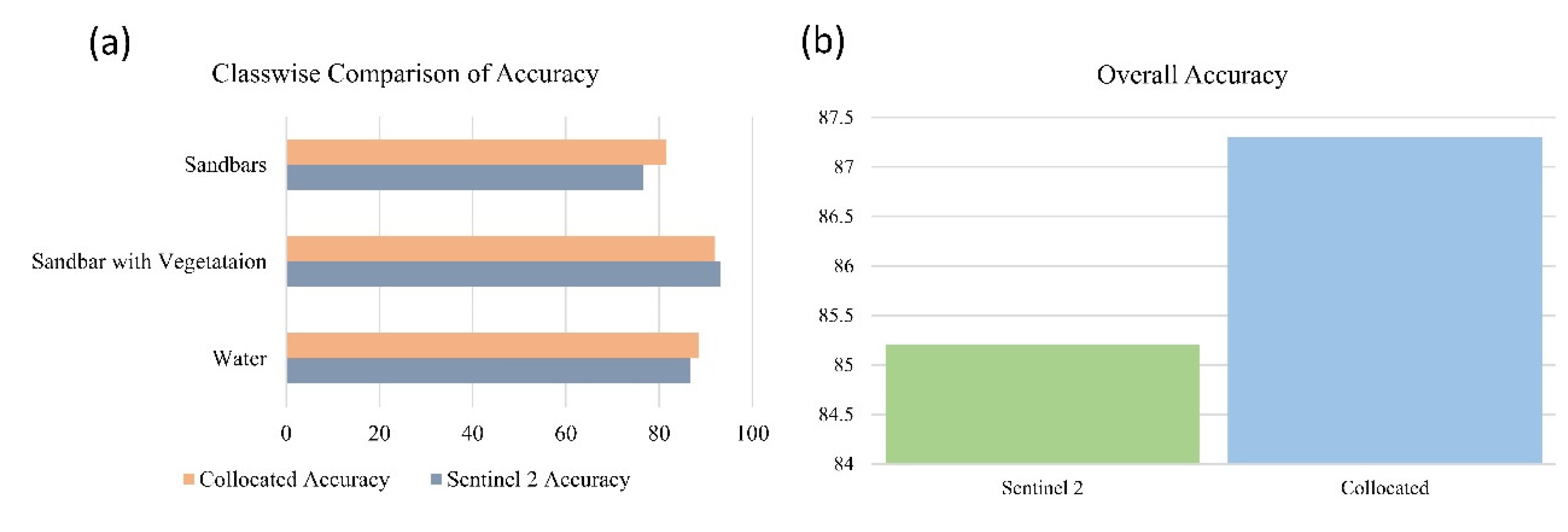

Figure 10.

Comparative Analysis of Riverine Ecosystem Classification Accuracy: (a) class-wise accuracy for the riverine ecosystem and (b) overall accuracy of the ecosystem delineation, comparing the performance of Sentinel-2 data with the collocated data source.

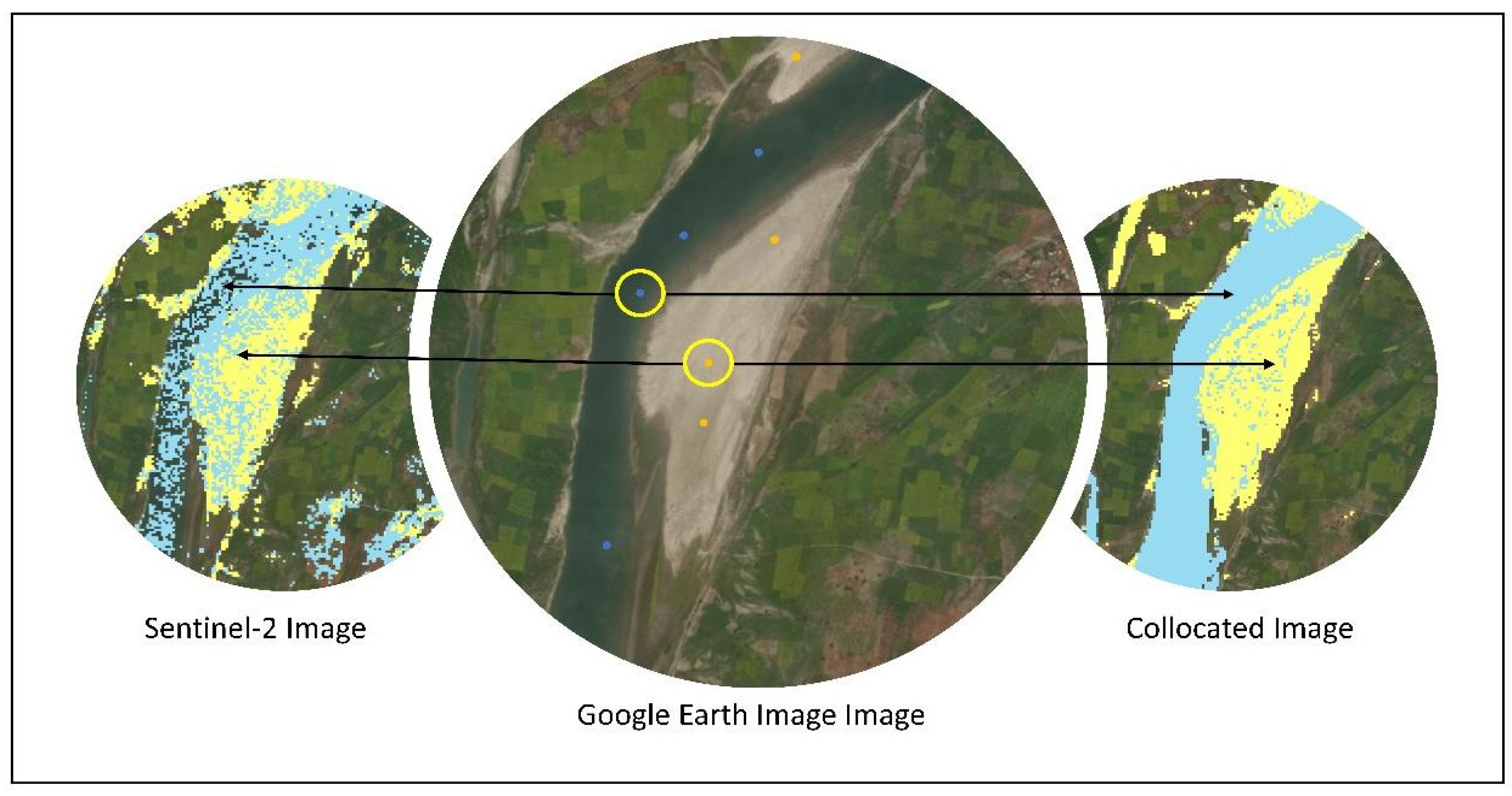

Figure 11.

Comparative analysis of the classification outcome of the two different images. The left panel displays a classified Sentinel-2 image, the center panel shows the corresponding Google Earth image for reference, and the right panel presents the classification results using a collocated image.

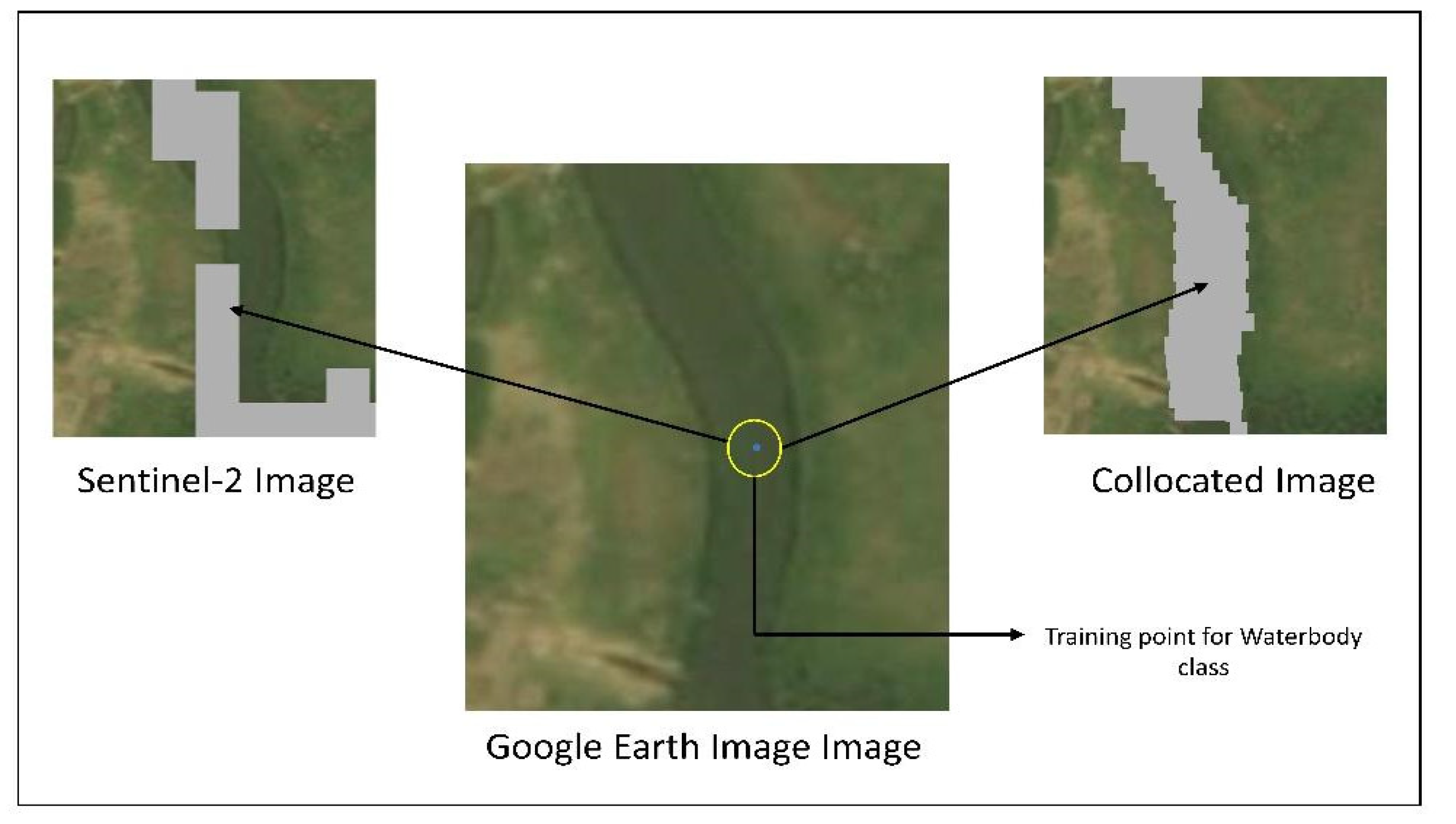

Figure 12.

Comparative analysis of the classification outcome of the two different images.

Figure 13.

(a) Class-wise accuracy comparison of wetland ecosystem classification using Sentinel-2 and Collocated imagery, (b) Overall accuracy comparison, highlighting the superior performance of collocated imagery over Sentinel-2.

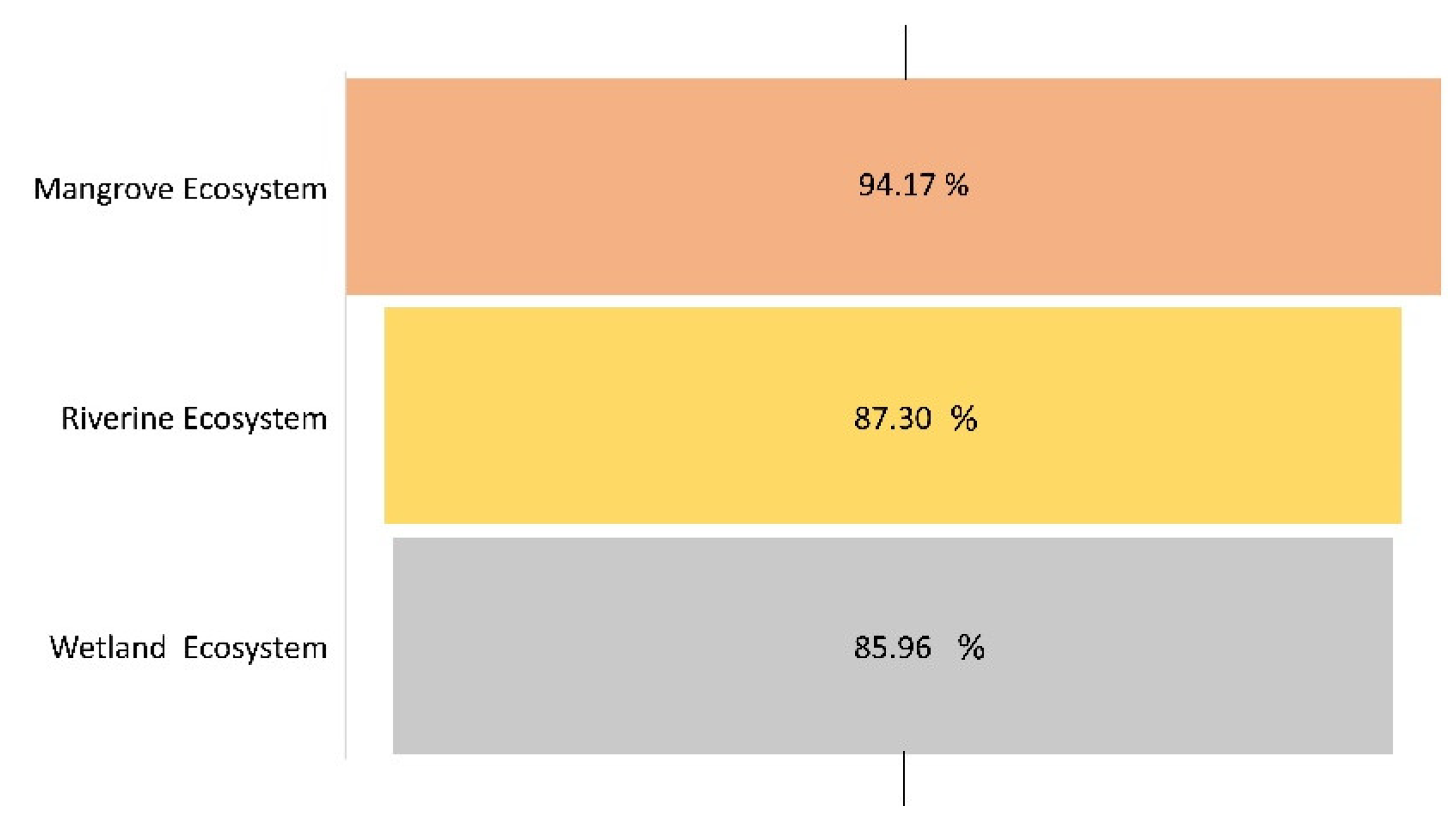

Figure 14.

Bar chart displaying the accuracy of image synthesis for Mangrove, Riverine, and Wetland ecosystems, with the highest accuracy observed in Mangrove ecosystems.

Table 1.

Description of Ecosystem Classes for Remote Sensing Analysis.

| Ecosystem type | LULC class | Description |
|---|---|---|
| Haor Basin ecosystem | Waterbody | Land covered by water in the form of rivers, ponds, and beels. |
| | Dense Vegetation | Areas covered by evergreen trees that grow naturally on land and along rivers. |
| | Crop Land | Land normally used for producing crops. |
| | Bare Land | Land with no vegetation, including abandoned cropland. |
| | Human Settlement | Land occupied by built-up settlements. |
| Floodplain ecosystem | Waterbody | Land covered by water in the form of rivers. |
| | Sandbar with vegetation | Sandbars with vegetation cover. |
| | Sandbar | Abandoned sandbars with no vegetation. |
| Mangrove ecosystem | Waterbody | Land covered by water in the form of rivers. |
| | Mudflat | A stretch of muddy land. |
| | Bare land | Land with no vegetation, including abandoned cropland. |
| | Dense Vegetation | Areas covered by evergreen trees that grow naturally on land and along rivers. |
| | Shrubs | Small mangrove trees. |

Table 2.

Comparison of the Area Captured in 2 Different Methods.

| Class | Sentinel-2 image area (km²) | Collocated image area (km²) |
|---|---|---|
| Waterbody | 7.91 | 9.64 |
| Mudflat | 0.14 | 0.05 |
| Bare land | 4.48 | 2.71 |
| Dense Vegetation | 28.05 | 24.65 |
| Sparse Vegetation | 12.28 | 15.79 |

Table 3.

Comparison of the Area Captured in 2 Different Methods.

| Class | Sentinel-2 image area (km²) | Collocated image area (km²) |
|---|---|---|
| Waterbody | 2.47 | 2.65 |
| Sandbar with Vegetation | 2.57 | 2.41 |
| Sandbar | 2.14 | 2.12 |

Table 4.

Comparison of the Area Captured in 2 Different Methods.

| Class | Sentinel-2 image area (km²) | Collocated image area (km²) |
|---|---|---|
| Waterbody | 2.73 | 2.15 |
| Dense Vegetation | 0.41 | 1.20 |
| Cropland | 0.67 | 0.64 |
| Built-up Area | 0.57 | 0.12 |
| Bare land | 0.32 | 0.59 |
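Because Tables 2 to 4 each report the same study area classified from two inputs, a quick arithmetic check is whether the total mapped area is preserved. Summing each column gives 52.86 versus 52.84 km² (Table 2), 7.18 versus 7.18 km² (Table 3), and 4.70 versus 4.70 km² (Table 4), so the differences between the two methods reflect reassignment of pixels among classes rather than a change in mapped extent. The short sketch below transcribes the table values and reports the per-class change (collocated minus Sentinel-2); `summarize` is a hypothetical helper.

```python
# Areas in km^2 transcribed from Tables 2-4: (Sentinel-2 image, collocated image).
TABLES = {
    "Table 2": {
        "Waterbody": (7.91, 9.64),
        "Mudflat": (0.14, 0.05),
        "Bare land": (4.48, 2.71),
        "Dense Vegetation": (28.05, 24.65),
        "Sparse Vegetation": (12.28, 15.79),
    },
    "Table 3": {
        "Waterbody": (2.47, 2.65),
        "Sandbar with Vegetation": (2.57, 2.41),
        "Sandbar": (2.14, 2.12),
    },
    "Table 4": {
        "Waterbody": (2.73, 2.15),
        "Dense Vegetation": (0.41, 1.20),
        "Cropland": (0.67, 0.64),
        "Built-up Area": (0.57, 0.12),
        "Bare land": (0.32, 0.59),
    },
}


def summarize(table):
    """Column totals and per-class change (collocated minus Sentinel-2)."""
    total_s2 = sum(s2 for s2, _ in table.values())
    total_col = sum(col for _, col in table.values())
    changes = {cls: col - s2 for cls, (s2, col) in table.items()}
    return total_s2, total_col, changes


for name, table in TABLES.items():
    total_s2, total_col, changes = summarize(table)
    print(f"{name}: total {total_s2:.2f} vs {total_col:.2f} km^2")
    for cls, delta in changes.items():
        print(f"  {cls:<24}{delta:+.2f} km^2")
```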